This paper proposes a data-analytics-based method, SMFC, for predicting the delivery speed of software enhancement projects. The method has three parts. The first is the most important, because it gives SMFC the character of a model belonging to the minimalist machine learning paradigm: it consists of a set of variable transformations that generate a two-dimensional model, and this model describes the problem to be solved. The second part is a simple linear regression (SLR), and the third applies a metaheuristic search to optimize the parameters of the SLR model.

4.1. Variable Transformation and Overview

The first novel characteristic of SMFC is that it always generates a two-dimensional model, in contrast to machine learning techniques such as SVM, in which the input vectors are mapped into a high-dimensional space [34]. In this sense, the basic assumption, which is also an evident advantage of this proposal, is that regardless of the number of predictors (independent variables), it is always possible to find a transformation that generates a two-dimensional model whose representation space is the Cartesian plane.

An additional original characteristic of SMFC is that both independent and dependent variables are considered for transformation during training. The novelty lies in including the dependent variable in the transformed independent variable. To the authors' knowledge, no previous study has taken advantage of this idea.

In the acronym SMFC, FC means “Feature Construction”. In this model, those features are built by means of elemental transformations from the two types of variables: independent and dependent.

Consider a problem in the software engineering field whose set of involved variables, V, includes (without loss of generality) a dependent variable

and a set

of independent variables (i.e., predictors), whose cardinality can be higher than one as follows:

The transformations are next described by means of illustrative cases.

First illustrative case: The problem regarding the delivery speed of software enhancement projects of our study involves a dependent variable , as well as a set of two independent variables , thus, the cardinality for the set of independent variables is two.

Let us now consider a finite set of n elemental transformations with , where each can be either an arithmetic operation (involving the problem variables, and even some other real parameters), a linear function, a nonlinear function (such as trigonometric, logarithmic, or exponential functions), an elemental statistical operation, or another option.

The main objective of the FC is to select a collection of elemental transformations and apply each of them to specific values of elements from the power set , such that a collection of points on the plane is obtained. This collection corresponds to pairs of specific values that involve dependent and independent variables of the problem.

Second illustrative case: The following power set is obtained from the first illustrative case:
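As a rough illustration of what such a power set contains, the following sketch enumerates every subset of a three-variable set. The labels `y`, `x1`, and `x2` are hypothetical stand-ins for the dependent variable and the two independent variables, and it is assumed here that the power set is taken over the full variable set V.

```python
from itertools import chain, combinations

def power_set(variables):
    """Return every subset of `variables` as a list of tuples, smallest first."""
    items = list(variables)
    return list(
        chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))
    )

# Hypothetical labels: one dependent variable and two independent variables.
V = ["y", "x1", "x2"]
subsets = power_set(V)

for subset in subsets:
    print(set(subset) if subset else "{}")
```

A set of three variables yields eight subsets, including the empty set and V itself.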

Third illustrative case: There exists an infinite quantity of possibilities for selecting transformation combinations

with elements from

, which can be combined from either real parameters or results of other transformations

applied to other elements from

, such that a pair of specific values is obtained by case. For instance, if

was the sum of real numbers and then that

is applied to the two independent variables, then we would have

; if it also happens that

is the power function of real numbers, we could apply

to the following two arguments: dependent variable (i.e.,

), and the result obtained from

, which would result in:

Fourth illustrative case: Now we must select those combinations that best fit the specific problem we want to solve. This selection is made from the infinite quantity of transformation combinations

with elements from

, described in the third illustrative case. SMFC applies the selected transformations, in a convenient order, to the specific values of the selected variables, such that a set of pairs of values is obtained. An SLR model is then applied to this data set of pairs. Since the training data set of the problem to be solved consists of

software enhancement projects:

then each set of specific values corresponds to one of the projects. For instance, consider the first software project having as specific values the following ones:

;

and

. The resulting values are obtained after applying the transformations described in the third illustrative case as follows:

and

This procedure is then performed over each project in the rest of the training data set.
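The composition described in the third and fourth illustrative cases (a sum of the two independent variables, followed by a power function whose arguments are the dependent variable and that sum) can be sketched as follows. The function names, the argument order of the power function (dependent variable as base), and the project values are all illustrative assumptions, not data from the study.

```python
import math

def t_sum(u, v):
    """Elemental transformation: sum of two real numbers."""
    return u + v

def t_pow(base, exponent):
    """Elemental transformation: power function of two real numbers."""
    return base ** exponent

def transform_project(y, x1, x2):
    """Apply the sum to the independent variables, then the power function
    to the dependent variable and the result of the sum."""
    s = t_sum(x1, x2)
    return t_pow(y, s)

# Hypothetical training projects as (y, x1, x2) triples.
projects = [(2.0, 1.0, 2.0), (1.5, 0.5, 1.5)]

# The same chain of transformations is applied to every project.
results = [transform_project(y, x1, x2) for (y, x1, x2) in projects]
print(results)
```

Each project is reduced to a single real value, which is the point of the exercise: the transformed value can then be paired with another quantity and plotted on the plane.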

In accordance with the concepts described in the previous four illustrative cases, the SMFC general algorithm consists of applying all the transformations

to those specific values of the selected elements

, as well as to the obtained values from some transformations in the proper order; that is, the transformation

will be applied either to an element

, or to any value

obtained from either the application of one or more transformations

or from:

This procedure is iteratively performed for all values.

So far, we have obtained the values for the whole training data set in the transformation space. The fifth illustrative case describes how these values are converted into a problem to which an SLR model can be applied.

Fifth illustrative case: Real values are obtained once all the transformations defined in the third illustrative case have been applied to the

software enhancement projects included in the training data set of the fourth illustrative case. This means that a specific result:

is obtained for each project

, where

.

As an example, a value of:

was obtained for the first software project.

The transformations and described in the third, fourth, and fifth illustrative cases were presented with an explanatory objective. The original model introduced in our study corresponds to a particular case of the SMFC general algorithm. SMFC is applied to the solution of the described problem, that is, to delivery speed prediction of software enhancement projects with and as the independent variables.

SMFC includes the following five transformations (two of them correspond to the “product of a real parameter by a variable” type):

: Product of the real parameter by a variable;

: Product of the real parameter by a variable;

: Arithmetic addition operation;

: Natural logarithm function ;

: Arithmetic product operation.

These five transformations are applied to each in the following order:

is applied to for obtaining ;

is applied to for obtaining ;

is applied to and for obtaining ;

is applied to result for obtaining ;

is applied to both and for obtaining .

Finally, the following

result is obtained for each

:
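The ordered application of the five transformations can be sketched in Python as below. The symbols are assumptions introduced for illustration: `a` and `b` stand for the two real parameters, `x1` and `x2` for the independent variables, and `y` for the dependent variable. That the final product combines the dependent variable with the logarithm is inferred from the statement that all original variables, dependent and independent, take part in the transformed variable. The guard on the logarithm argument is an addition of this sketch.

```python
import math

def smfc_transform(y, x1, x2, a, b):
    """Chain the five elemental transformations in the stated order.

    Assumed form of the result: y * ln(a * x1 + b * x2).
    """
    u1 = a * x1           # first transformation: real parameter times a variable
    u2 = b * x2           # second transformation: real parameter times a variable
    s = u1 + u2           # third transformation: arithmetic addition
    if s <= 0:
        raise ValueError("the natural logarithm needs a positive argument")
    log_s = math.log(s)   # fourth transformation: natural logarithm
    return y * log_s      # fifth transformation: arithmetic product

# Hypothetical values for one project and one pair of parameters.
z = smfc_transform(y=3.0, x1=1.0, x2=1.0, a=math.e / 2, b=math.e / 2)
print(z)
```

Only a fixed, small number of arithmetic operations is performed per project, which is what the later complexity argument relies on.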

4.4. The SMFC Model

Let us start with a training data set of

software enhancement projects:

Each training software enhancement project contains two independent variables and a dependent variable (i.e., and act as predictors for ).

Note that the graph of this problem lies in the three-dimensional space, because three variables are involved. To generate a graph, we should first graph the ordered pair on an X–Y plane and then locate the value of C on the Z axis.

If we were to consider the values with which the fourth illustrative case was exemplified, we would have that the pair would be plotted on the X–Y plane, and for that point the value would be located on the Z axis.

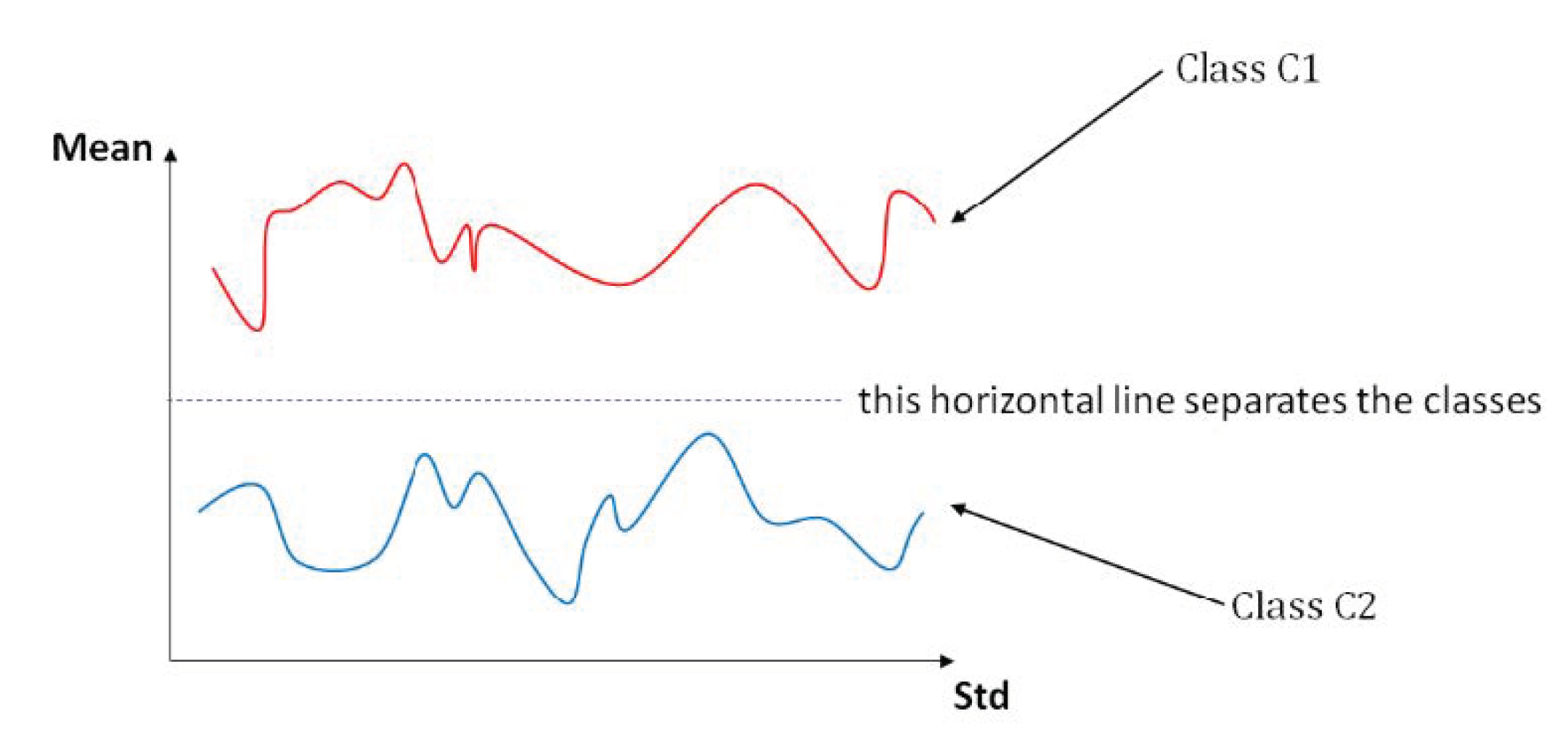

One of the great advantages of models that belong to minimalist machine learning was emphasized in Section 3: with the new paradigm it is possible to reduce any problem of pattern classification to a graphical problem on the Cartesian plane.

Because the SMFC model adapts the new paradigm to the regression task, the variable values of each project can be plotted on the Cartesian plane, with all the advantages that this brings. Applying Expression (20) allows us to work in the Cartesian plane rather than in the three-dimensional space that the original, untransformed data require.

The four algorithmic steps, which cover the three SMFC parts described in the three previous subsections, are described and exemplified next:

Step 1:

The five transformations described in Section 4.1 are applied to each software enhancement project

with

being taken from the training set, such that the following variable transformation is obtained:

where

and

are the transformation parameters, and

is the resulting transformed variable.

Note that is a real value, and all original variables intervene in the creation of the transformed variable: both independent and dependent.

In Expression (20), the transformed value for each project is obtained through a small number of elemental operations. Consequently, processing the projects of the training set has a running time complexity that is linear in the number of projects.

This means that in Step 1 corresponding to the learning phase, the part of our proposal related to minimalist machine learning has linear complexity.

Step 2:

An SLR is applied to the variables using the values to be graphically represented on the X-axis and the values of to be graphically represented on the Y-axis. This is done to fit the values into a linear function.

After completing this step, it is now possible to graph the problem on the Cartesian plane.
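For reference, the SLR fit in Step 2 amounts to an ordinary least-squares line through a set of pairs. The sketch below is generic: `xs` and `ys` are hypothetical names for the values placed on the X-axis and Y-axis, and the sample points are invented for the demonstration.

```python
import math

def fit_slr(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Invented points lying exactly on the line y = 2x + 1.
slope, intercept = fit_slr([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(slope, intercept)
```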

Step 3:

An value implicitly having the predicted value is obtained by each pair of and parameter values, as well as to each software enhancement project with taken from the training set. The term can be algebraically expressed in an explicit manner for the expression.

Since the software enhancement projects belong to the training set, to each project

with

is previously known its correct value for

; therefore, it is possible to calculate the absolute residual (AR) generated from the SLR to that

:

In this article, absolute residuals are used as the prediction criterion to evaluate performance.

Now, simulated annealing is applied for finding the

and

parameter values minimizing the mean of the absolute residuals (MAR):

After applying the previous three steps to the problem data, the SLR model has been generated having the and optimized parameters: and .
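The parameter search of Step 3 can be illustrated with a minimal simulated annealing loop over two real parameters. This is a generic sketch, not the configuration used in the study: the step size, initial temperature, cooling rate, iteration count, and the toy objective are all assumptions. In the actual method, the objective would be the MAR obtained after transforming the training set and fitting the SLR for a given parameter pair.

```python
import math
import random

def simulated_annealing(objective, start, step=0.1, t0=1.0,
                        cooling=0.95, iters=2000, seed=0):
    """Minimize objective(a, b) by simulated annealing; return (best, best_cost)."""
    rng = random.Random(seed)
    current = tuple(start)
    current_cost = objective(*current)
    best, best_cost = current, current_cost
    temperature = t0
    for _ in range(iters):
        # Propose a neighbour by perturbing each parameter slightly.
        candidate = tuple(p + rng.uniform(-step, step) for p in current)
        candidate_cost = objective(*candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with a
        # probability that shrinks as the temperature falls.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
        temperature = max(temperature * cooling, 1e-12)
    return best, best_cost

def toy_objective(a, b):
    """Stand-in for the MAR: smallest at a = 2, b = -1."""
    return abs(a - 2.0) + abs(b + 1.0)

start = (0.0, 0.0)
best, best_cost = simulated_annealing(toy_objective, start)
print(best, best_cost)
```

Because the best pair seen so far is tracked separately from the current pair, the returned cost can never be worse than the cost of the starting point.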

Step 4 is of great importance because it applies the SLR model obtained in the three previous steps to the test patterns, so that a delivery speed value can be estimated for each testing project.

Step 4:

Let be the index of a software enhancement project belonging to the testing data set.

The value is localized on the X-axis of the SLR optimized by the and parameters. Then, its corresponding is obtained.

The

predicted value can be implicitly expressed from the

expression as follows:

Note that in Expression (23) all the values are known, except for

. By means of elementary algebraic operations, this value is obtained:

In Expression (24), the predicted value for each testing project is obtained through a small number of elemental operations. Consequently, processing each testing project has a constant running time complexity, O(1).

This means that in Step 4 corresponding to the operation phase, the part of our proposal related to minimalist machine learning has constant complexity.
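As a sketch of the algebra behind Expressions (23) and (24), assume the transformed variable has the form $z = y \ln(a x_1 + b x_2)$. The symbols here are hypothetical: $j$ indexes a testing project, $a^{*}$ and $b^{*}$ are the optimized parameters, $\hat{z}_j$ is the value read from the fitted line, and $\hat{y}_j$ is the predicted delivery speed. Under that assumption the prediction is isolated by a single division:

```latex
\hat{z}_j = \hat{y}_j \,\ln\!\left(a^{*} x_{1j} + b^{*} x_{2j}\right)
\quad\Longrightarrow\quad
\hat{y}_j = \frac{\hat{z}_j}{\ln\!\left(a^{*} x_{1j} + b^{*} x_{2j}\right)},
\qquad \ln\!\left(a^{*} x_{1j} + b^{*} x_{2j}\right) \neq 0 .
```

The division is a fixed amount of work per project, which is consistent with the constant complexity stated above.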

From the value obtained in (24) and the

value that is known from the formulation of the problem, the absolute error is calculated for project

:

Finally, with all

values, the performance of the SMFC model is estimated by calculating the mean of the absolute error:
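The final evaluation can be sketched as the mean of the absolute differences between known and predicted delivery speeds over the testing set. The function name and the sample values are hypothetical.

```python
import math

def mean_absolute_error(actual, predicted):
    """Average of |actual_j - predicted_j| over all testing projects."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    absolute_errors = [abs(y - y_hat) for y, y_hat in zip(actual, predicted)]
    return sum(absolute_errors) / len(absolute_errors)

# Invented delivery speeds for three testing projects.
mae = mean_absolute_error([1.0, 2.0, 4.0], [1.5, 2.0, 3.0])
print(mae)
```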