Abstract

This paper investigates the distributed fusion estimation of a signal for a class of multi-sensor systems with random uncertainties both in the sensor outputs and during the transmission connections. The measured outputs are assumed to be affected by multiplicative noises, which degrade the signal, and delays may occur during transmission. These uncertainties are commonly described by means of independent Bernoulli random variables. In the present paper, the model is generalised in two directions: at each sensor, the degradation in the measurements is modelled by sequences of random variables with arbitrary distribution over the interval [0, 1]; transmission delays are described using three-state homogeneous Markov chains (Markovian delays), thus modelling dependence at different sampling times. Assuming that the measurement noises are correlated and cross-correlated at both simultaneous and consecutive sampling times, and that the evolution of the signal process is unknown, we address the problem of signal estimation in terms of covariances, using the following distributed fusion method. First, the local filtering and fixed-point smoothing algorithms are obtained by an innovation approach. Then, the corresponding distributed fusion estimators are obtained as a matrix-weighted linear combination of the local ones, using the mean squared error as the criterion of optimality. Finally, the efficiency of the algorithms obtained, measured by estimation error covariance matrices, is shown by a numerical simulation example.

1. Introduction

Sensor network systems are of great research interest because of their potential application in a wide range of fields, including target tracking, integrated navigation, military surveillance, mobile robotics and traffic control. In a multi-sensor environment, the information provided by each sensor is transmitted to a processing centre where it is combined or fused by different methods according to how the question of fusion estimation is addressed. The information provided by multiple sensors is normally processed by one of the following methods: either it is centralised, with the sensor outputs being sent to a central processor to be fused, or it is distributed, by a process in which the local estimators are derived and then sent to the processing centre. Centralised fusion estimation provides optimal estimators when all the sensors are faultless, but has the disadvantage that its application imposes high computational costs and a heavy communication burden, especially when a large number of sensors must be considered. Distributed fusion estimation, on the other hand, does not generally provide optimal estimators, but reduces the computational load and is usually more suitable for large-scale sensor networks with random transmission failures, because of its parallel structure. Due to these advantages, the use of distributed fusion estimation in multiple-sensor systems has attracted considerable interest in recent years, with various approaches being taken; for detailed information, see [1,2,3,4,5,6,7] and the references therein.

In a multi-sensor environment, failures may occur both in signal measurements and during the transmission of measured outputs. In the first of these respects, problems such as aging, temporary failure or high levels of background noise may provoke, for example, missing measurements or stochastic sensor gain degradation. The missing measurement phenomenon has received considerable attention, and many studies have been conducted to determine specific distributed estimation algorithms, using different approaches (see [8,9,10]). A common way to model missing measurements is to consider variables with Bernoulli distribution whose values zero and one represent that the signal is completely absent or completely present in the measurement. However, such an assumption is restrictive in some practical situations since the communications in networked systems are not always perfect and there may be measurement fades/degrades; in such cases, the received measurement may contain only partial information about the signal. The signal estimation problem in the case of sensor gain degradation in a multi-sensor environment has not been so extensively investigated, even though the phenomenon of sensor gain degradation (sensor fading measurement) occurs frequently in engineering practice, for example, in thermal sensors for vehicles or in platform-mounted sonar arrays receiving acoustic signals from the ocean. As in the case of missing measurements, conventional estimation algorithms are not applicable to the phenomenon of sensor gain degradation, and studies have been undertaken to obtain estimation algorithms in this situation. For example, Liu et al. [11] studied the optimal filtering problem for networked time-varying systems with stochastic gain degradation, using a recursive matrix equation approach, Liu et al. [12] obtained a minimum variance filtering algorithm for a class of time-varying systems and Liu et al. [13] designed filters distributed over a wireless sensor network located within a given sporadic communication topology.

Furthermore, the measured outputs may be transmitted to the processing centre via communication networks through imperfect channels or be affected by network congestion, and either of these problems can produce random uncertainties in the processed measurements, such as random delays. The possible influence of random delays on the performance of the estimators makes it necessary to develop new estimation algorithms that take account of this problem. For random delays modelled by independent Bernoulli random variables, which is a common assumption, various distributed fusion estimation algorithms have been derived (see, for example, [14,15,16,17,18]). Nevertheless, in real-world communication systems, current delays are usually correlated with previous ones. In this context, assuming that the random delays are modelled by Bernoulli random variables correlated at consecutive sampling times, distributed estimation algorithms were developed by [19,20]. A more general approach, in which the correlation is considered at different times, is to model the delays by means of Markov chains. Under this hypothesis, the estimation problem has been addressed considering a single sensor (see [21,22,23,24]), but to the best of our knowledge, it has not been extensively investigated in a multi-sensor environment. Relevant papers in this context include Ge et al. [25], who investigated the distributed estimation problem for continuous-time linear systems over sensor networks with heterogeneous Markovian coupling intercommunication delays, and García-Ligero et al. [26], who proposed fusion filtering and smoothing algorithms to estimate a signal from one-step delayed measurements with random delays modelled by Markov chains. 
Also, when the network connectivity is given by a topology, relevant works on the consensus problem of linear continuous-time multi-agent systems, such as [27,28], have considered continuous-time homogeneous Markov processes with finite state space to describe the communication topology among agents, with each communication graph corresponding to a state of the Markov process.

In some conventional algorithms in network systems, the additive noises are assumed white and uncorrelated between the different sensors. However, in many practical applications of multisensor systems, a more realistic scenario is to consider that the noises at different sensors are cross-correlated. For example, in wireless communication, speech enhancement systems or global navigation satellite systems, the noises are usually correlated and cross-correlated. For this reason, research into sensor network systems under the assumption of correlated and/or cross-correlated noises is a promising field of activity, and numerous papers incorporating this assumption are now appearing (see, for example, [29,30,31,32,33]).

In this paper, our aim is to investigate the distributed fusion estimation problem in networked systems subject to stochastic sensor gain degradation and to random transmission delays of one or two steps. At each sensor, the deterioration of the measured output is modelled by sequences of arbitrary random variables with values in the range [0, 1], corresponding to a possible partial or total loss of the signal. In addition, the measurement noises are assumed to be correlated and cross-correlated at the same and at consecutive sampling times. The delays that can occur during the transmission of the measurements from each sensor to the local processing centre are described by different homogeneous Markov chains. In this context, we derive least-squares distributed linear filters and fixed-point smoothers without requiring full knowledge of the signal evolution model; only the first and second-order moments of the processes involved in the multi-sensor system are needed. In this process, we first use an innovation approach to derive algorithms for the local estimators, including the filter and the fixed-point smoothers. This method simplifies the derivation because the innovation is a white process. The distributed fusion filter and the fixed-point smoothers are then obtained as the matrix-weighted linear combinations of the corresponding local estimators using the mean squared error as the optimality criterion.

The rest of this paper is structured as follows. In Section 2, we present the model considered and detail the assumptions according to which the distributed fusion estimation problem is addressed. In Section 3, distributed filtering and fixed-point smoothing estimators, together with their estimation error covariance matrices, are derived. Using an innovation approach, local least-squares linear filtering and smoothing algorithms are obtained for each sensor, and the cross-correlation matrices between any two local estimators are then calculated in order to derive the distributed fusion estimators. Section 4 provides a simulation example to illustrate the applicability of the proposed algorithms and analyses the performance of the estimators. Finally, we summarise the main conclusions drawn.

Notation.

The usual notation is used in this paper. Thus, denotes the set of all matrices. denotes a matrix partitioned into submatrices , stands for a block-diagonal matrix whose blocks are the sub-matrices , with i varying in the set of indices I, and is identity matrix. If the dimensions of vectors or matrices are not explicitly stated, they are assumed to be compatible with algebraic operations. The Kronecker product is denoted by the symbol ⊗, the minimum and maximum values of two real numbers are represented, respectively, by and and the Kronecker delta function is denoted as . For simplicity, is used for any function depending on the time instants k and s; analogously, if is a function depending on sensors i and we is written as

2. Problem Statement and Model Description

In this study, our aim is to investigate the least-squares (LS) linear estimation of a discrete-time random signal in a multi-sensor environment, using the distributed fusion method. At each sensor, problems such as aging, temporary failure or excessive background noise may deteriorate the signal measurement, causing a partial loss of information at the measured outputs. In addition, the measurement noises are assumed to be correlated and one-step cross-correlated both in a single sensor and between different sensors.

In order to perform the signal estimation, each sensor transmits its outputs to a local processor via communication channels; during this transmission, faults may occur due to limited communication bandwidth, congestion or defects in the channels, which can produce random delays in the processed measurements.

In this context, the LS distributed linear filter and fixed-point smoother of the signal are derived by a covariance-based approach; that is, we assume that the signal evolution model is unknown and that only the first and second order moments are known. Specifically:

Assumption 1.

The -dimensional signal process, , has a zero mean and its autocovariance function is expressed in a separable form as follows,

where are known matrices.

Consider m sensors and assume that, at each one, the sensor gain is randomly degraded and, therefore, partially deteriorated measured outputs may be obtained. Under this assumption, the measured outputs, , are described by

where, for the random variables quantify the sensor gain degradation, are known matrices and are measurement noises. The following assumptions are made concerning the measurement model (1):

Assumption 2.

The multiplicative noises are independent sequences of independent random variables taking values in with known means and variances, and

Note that modelling the sensor gain degradation by arbitrary random variables taking values in the interval describes not only partially degraded signals but also conventional missing signals (by considering only the values 0 and 1 for ), see, for example, Caballero-Águila et al. [20]. Therefore, the current model generalises the missing measurement model.
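As an illustration of this remark, the following minimal sketch simulates degraded measurements under two choices of the multiplicative noise. It assumes that model (1) has the scalar form z_k = θ_k·H·x_k + v_k, which is an assumption made here because the display equation is not reproduced above; the function name, the placeholder signal and all numerical values are hypothetical.

```python
import numpy as np

def degraded_measurements(x, theta, H=1.0, noise_std=0.5, rng=None):
    """Assumed scalar form of model (1): z_k = theta_k * H * x_k + v_k."""
    rng = np.random.default_rng() if rng is None else rng
    v = rng.normal(0.0, noise_std, size=x.shape)  # additive measurement noise
    return theta * H * x + v

rng = np.random.default_rng(0)
n = 10_000
x = rng.normal(size=n)  # placeholder zero-mean signal (hypothetical)

# Partial degradation: theta takes any value in [0, 1].
theta_uniform = rng.uniform(0.0, 1.0, size=n)
# Missing measurements: special case where theta takes only the values 0 and 1.
theta_bernoulli = rng.binomial(1, 0.7, size=n).astype(float)

z_uniform = degraded_measurements(x, theta_uniform, rng=rng)
z_bernoulli = degraded_measurements(x, theta_bernoulli, rng=rng)

# Only the first two moments of theta are required by Assumption 2.
print("uniform:   mean, var =", theta_uniform.mean(), theta_uniform.var())
print("bernoulli: mean, var =", theta_bernoulli.mean(), theta_bernoulli.var())
```

Both cases enter a covariance-based estimator only through the mean and variance of the multiplicative noise, which is why the Bernoulli model can be treated as a particular instance of the general one.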

Assumption 3.

The measurement noises , have zero-mean and second-order moments given by

Note that this assumption is weaker and more realistic than the usual hypothesis of independent white measurement noises and reflects conditions that occur in many real-life situations; for example, in wireless communication, speech enhancement systems or global navigation satellite systems, the measurement noises are usually correlated and cross-correlated.

As commented above, during the transmission of measurements from each sensor to its processing centre, random delays frequently occur. In this paper, the absence or presence of transmission delays, and their magnitudes, at each sensor are modelled by random variables , that take values in , describing whether the measurements arrive on time or are delayed by one or two sampling times. Specifically, if this means that the k-th measurement of the i-th sensor is delayed by a sampling periods; otherwise, if there is no delay in the arrival.

Then, the measurements received, which are denoted by are described as in García-Ligero et al. [24]:

Assumption 4.

are independent homogeneous Markov chains with the same state space, , known initial distributions and transition probability matrices where
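The delay mechanism of Assumption 4 can be sketched as follows. This is an illustrative simulation only: the transition probability matrix is hypothetical, and the received measurement is assumed to be y_k = z_{k−γ_k} (with the index clipped at the first sample), which is one plausible reading of model (2) since its display equation is not reproduced above.

```python
import numpy as np

def simulate_delays(P, p0, n_steps, rng):
    """Sample a homogeneous Markov chain with states {0, 1, 2} (delay in steps)."""
    P = np.asarray(P, dtype=float)
    states = np.empty(n_steps, dtype=int)
    states[0] = rng.choice(3, p=p0)
    for k in range(1, n_steps):
        # The next delay depends only on the current one (Markov property).
        states[k] = rng.choice(3, p=P[states[k - 1]])
    return states

def received(z, gamma):
    """Assumed form of model (2): y_k = z_{k - gamma_k}, clipped at index 0."""
    k = np.arange(len(z))
    return z[np.maximum(k - gamma, 0)]

rng = np.random.default_rng(1)
P = np.array([[0.8, 0.1, 0.1],   # hypothetical transition probabilities
              [0.6, 0.3, 0.1],
              [0.5, 0.3, 0.2]])
p0 = np.array([1.0, 0.0, 0.0])   # the first observation is not delayed

gamma = simulate_delays(P, p0, 200, rng)
z = np.arange(200, dtype=float)  # stand-in for the sensor outputs
y = received(z, gamma)
print("fraction of on-time arrivals:", np.mean(gamma == 0))
```

Because each row of P conditions on the previous delay, consecutive delays are dependent, in contrast with the independent Bernoulli description.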

Finally, the following hypothesis about the signal and processes involved in the measurement model is assumed in the derivation of the LS linear estimators.

Assumption 5.

For each , , and are mutually independent.

3. Distributed Fusion Estimation Problem

In this section, we address the LS linear estimation of a signal in a multi-sensor environment, using the distributed fusion method. This method involves first determining, in each local processor, LS linear estimators based on the delayed measurements received from the sensor itself. Then, all the local estimators are transmitted, over perfect connections, to a fusion centre from which the distributed estimator is obtained.

3.1. Local Filtering and Fixed-Point Smoothing Algorithms

The fusion method is applied as follows: for each , we determine the local filters and fixed-point smoothers for the model described by (1) and (2) using an innovation approach. As is well known, the whiteness of the innovation process simplifies the derivation of the estimation algorithms, as well as the algorithms themselves, thus providing computational advantages. The innovation treatment is based on the equivalence between the observation process and the innovations , which are defined as where is the LS linear estimator of from the previous observations, . Since both processes provide the same information, the LS linear estimator of a random vector based on the observations , denoted by , is expressed as a linear combination of the innovations ; specifically:

where denotes the innovation covariance matrices.
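The equivalence between observations and innovations can be checked numerically. The sketch below is not the paper's algorithm: it takes a hypothetical joint covariance of a scalar zero-mean variable x and L scalar observations, builds the innovations by a Cholesky-based whitening (an implementation choice made here), and verifies that the estimator written as a sum over innovations coincides with the usual LS linear estimator based on the observations.

```python
import numpy as np

rng = np.random.default_rng(2)
L = 5

# Hypothetical joint covariance of (x, y_1, ..., y_L), all zero-mean scalars.
A = rng.normal(size=(L + 1, L + 1))
S = A @ A.T + 0.1 * np.eye(L + 1)
S_xy = S[0, 1:]    # E[x y_h], h = 1..L
S_yy = S[1:, 1:]   # E[y y^T]

# Cholesky factor S_yy = C C^T. With D = diag(C), mu = D C^{-1} y gives
# mu_h = y_h - (LS linear estimate of y_h from y_1..y_{h-1}), i.e. the innovations.
C = np.linalg.cholesky(S_yy)
D = np.diag(np.diag(C))
T = D @ np.linalg.inv(C)   # unit lower-triangular map from y to mu
Pi = np.diag(C) ** 2       # innovation variances (diagonal covariance)
S_xmu = T @ S_xy           # E[x mu_h], h = 1..L

y = rng.normal(size=L)     # an arbitrary observed vector
mu = T @ y

# Estimator from the observations: E[x y^T] (E[y y^T])^{-1} y.
x_hat_obs = S_xy @ np.linalg.solve(S_yy, y)
# Estimator as a linear combination of innovations: sum_h E[x mu_h] Pi_h^{-1} mu_h.
x_hat_innov = np.sum(S_xmu / Pi * mu)

print(x_hat_obs, x_hat_innov)
```

Since the innovation covariance is diagonal, each coefficient in the sum is obtained independently of the others, which is the source of the computational simplification mentioned above.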

The observation model in each individual sensor is, for vectorial observations, the same as that considered in García-Ligero et al. [24]. Therefore, the derivation of the local estimation algorithms is analogous to that performed in the cited paper, and the proofs of the local LS linear filtering and fixed-point smoothing algorithms are omitted.

In order to simplify the expressions of the algorithms, the following notations are used:

3.1.1. Local LS Linear Filtering Algorithm

Under the model assumptions, for the local filters, and their error covariance matrices, are obtained as

The vectors are recursively calculated as

where the matrices satisfy

and

The matrices are obtained by

The innovations and their covariance matrices are given by

where

and

□

Next, for a recursive fixed-point smoothing algorithm of the signal based on is provided.

3.1.2. Local LS Linear Fixed-Point Smoothing Algorithm

Under the model assumptions, for the local fixed-point smoothers, , and their error covariance matrices, are calculated as follows:

with initial conditions and given by (3) and (4), respectively.

The coefficients are obtained as

where the matrices satisfy the following recursive expression

□

3.2. Distributed LS Linear Algorithms

In this subsection, distributed filter and fixed-point smoothers, are derived as the matrix-weighted linear combinations of the corresponding local estimators, minimising the mean squared error; that is, where and minimises

The solution to this problem, as shown by García-Ligero et al. [26], is given by

and, then, the optimal distributed estimator of is

Theorem 1.

Let be the local estimators given by (3) and (7); then the optimal distributed fusion estimators , filters and fixed-point smoothers, are given by

where

The estimation error covariance matrices, are given by

Proof.

Note that expressions (9) and (10) require the knowledge of the cross-correlation matrices between any two local estimators. Algorithms to obtain these matrices are provided in Theorems 2 and 3 for the filters and smoothers, respectively.
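To make the role of these cross-correlation matrices concrete, the following sketch computes matrix weights for a toy static problem. It uses the standard LS solution F = E[x X^T](E[X X^T])^{-1}, where X stacks the local estimators; the paper's own expressions (9) and (10) are not reproduced here. The two-sensor model y_i = x + n_i with cross-correlated noises, and all numerical values, are hypothetical.

```python
import numpy as np

def fusion_weights(S_xX, S_XX):
    """LS weights F = E[x X^T] (E[X X^T])^{-1}, with X the stacked local estimators."""
    # Solve F S_XX = S_xX without forming the inverse explicitly.
    return np.linalg.solve(S_XX.T, S_xX.T).T

sigma_x = 1.0                          # signal variance (hypothetical)
R = np.array([[0.5, 0.2],              # noise covariance with cross-correlation
              [0.2, 1.0]])

c = sigma_x / (sigma_x + np.diag(R))   # local LS gains: xhat_i = c_i * y_i
S_yy = sigma_x + R                     # E[y y^T] for y_i = x + n_i
S_XX = np.outer(c, c) * S_yy           # E[X X^T]: needs cross terms E[xhat_1 xhat_2]
S_xX = (c * sigma_x)[None, :]          # E[x X^T], shape (1, 2)

F = fusion_weights(S_xX, S_XX)
local_err = sigma_x - c * sigma_x               # local error variances
fused_err = sigma_x - (F @ S_xX.T).item()       # fused error variance

print("weights:", F.ravel())
print("local error variances:", local_err)
print("fused error variance:", fused_err)
```

The off-diagonal entry of S_XX is exactly the kind of cross-correlation between local estimators that Theorems 2 and 3 provide recursively in the dynamic setting; without it the weights cannot be computed.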

Theorem 2.

For any the cross-correlation matrices between any two local filters, are obtained by

The covariance matrices verify the following recursive relation:

where are given by

The matrices in the sum of (13) are calculated by

The matrices in expression (13) are obtained as follows

The matrices in the sum of (15) are given by

where , , are calculated as

The innovation cross-covariance matrices in expression (14) satisfy

where the coefficients are given by

Proof.

See Appendix A. □

Theorem 3.

For any the cross-correlation matrices between any two local smoothers, are obtained by

with the initial condition given in Theorem 2.

The matrices and, for , are obtained as follows:

The matrices and are recursively obtained by

Proof.

See Appendix B. □

4. Simulation Study

In this section, a simulation example illustrates the applicability of the proposed algorithms, showing that estimator accuracy is influenced by the specific characteristics of the model (1)–(2), and in particular by sensor gain degradation and transmission delays.

Let us consider the same signal process as in [24]; specifically, a zero-mean scalar process, with covariance function hence, Assumption 1 is satisfied taking, for example,

Assume that this signal is measured by two sensors which provide the measured outputs described by model (1) with . The multiplicative noises which quantify the sensor’s gain degradation, are independent white sequences with different time-invariant probability distributions. Specifically:

- is uniformly distributed over

The measurement additive noises are defined as where and is a zero-mean Gaussian white process with constant variance equal to 0.5; hence, and

Assume furthermore that during the transmission of the measurements, random delays occur and that the information received can be expressed by model (2) where are homogeneous Markov chains with the same initial distribution, and (the first observation is not delayed), and with the following transition probability matrices:

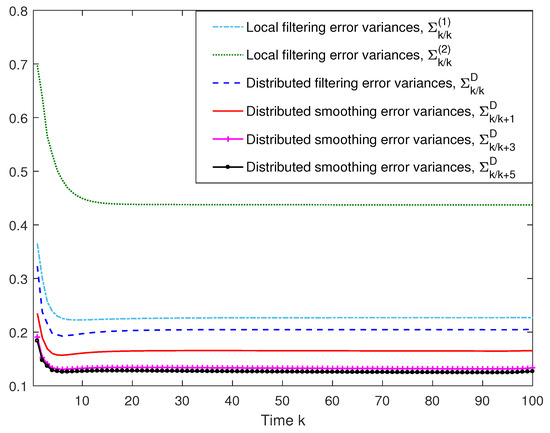

The effectiveness of the proposed distributed filtering and fixed-point smoothing estimators was compared by performing 100 iterations of the respective algorithms. The accuracy obtained in each case was determined by calculating the estimation error variances. Figure 1 presents the local filtering error variances, and the distributed filtering and fixed-point smoothing error variances, and shows, on the one hand, that the distributed filtering error variances are lower than those of every local filter and, on the other, that the error variances corresponding to the distributed smoothers are smaller than those of the distributed filter. We conclude, therefore, that the smoothers are more accurate than the filter. Moreover, the distributed smoothing error variances at each fixed-point k decrease as the number of available measurements, , increases, although the difference is almost insignificant for .

Figure 1.

Local filtering, distributed filtering and fixed-point smoothing error variances.

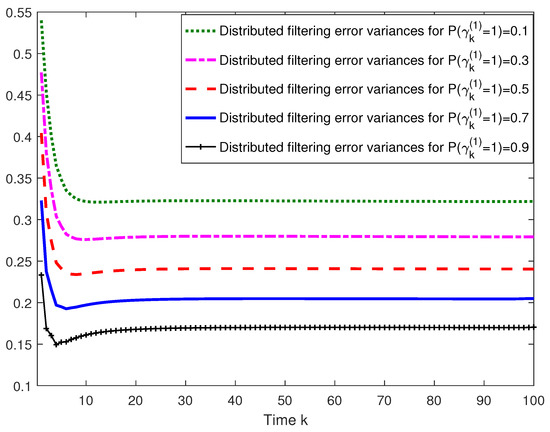

To determine the influence of sensor gain degradation on estimator performance, we calculated the distributed filtering error variances for different probability distributions of the random variables modelling the degradation. To do this, we set and varied the probabilities of the values 0.5 and 1. Figure 2 shows that the filtering error variances decrease as increases, thus confirming, as expected, that the distributed filtering performance improves when there is less signal degradation. An analogous study was conducted of the distributed smoothing error variances, from which similar conclusions were drawn.

Figure 2.

Distributed filtering error variances for different values of .

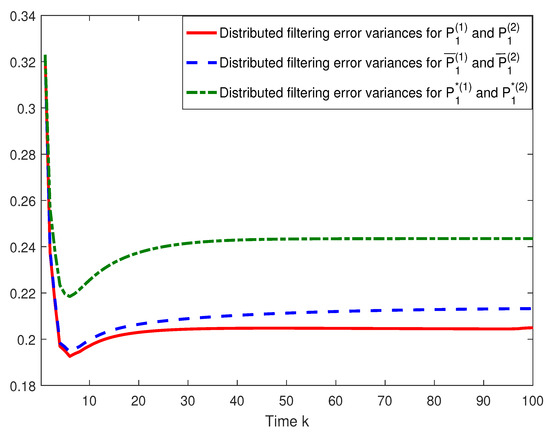

Next, we considered the use of various homogeneous Markov chains to model random delays in order to determine their influence on the accuracy of the proposed estimators. Concretely, we calculated the distributed filtering error variances, assuming that random delays can be appropriately modelled by homogeneous Markov chains with the same initial distribution and the following transition probability matrices:

The properties of the Markov chains lead us to conclude that the probabilities of no delay converge to the following constant values: 0.89, 0.77, 0.68, 0.60, 0.55 and 0.38 for , , and , respectively. Figure 3 shows the distributed filtering error variances for the different Markov chains considered. As expected, the performance of the distributed filtering estimators improved as the probabilities of no delay converged to higher values. Similar results were obtained for the smoothing error variances and, therefore, the same conclusions can be drawn for the distributed smoothers.
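The limiting probability of no delay for a given chain can be obtained from its stationary distribution. The sketch below shows one way to compute it, assuming an irreducible and aperiodic chain; the transition matrix is a hypothetical example and is not one of the matrices used in the study.

```python
import numpy as np

def stationary_distribution(P):
    """Solve pi P = pi with sum(pi) = 1 (irreducible, aperiodic chain assumed)."""
    P = np.asarray(P, dtype=float)
    n = P.shape[0]
    # Stack the balance equations with the normalisation constraint.
    A = np.vstack([P.T - np.eye(n), np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi

P = np.array([[0.8, 0.1, 0.1],   # hypothetical transition probabilities
              [0.6, 0.3, 0.1],
              [0.5, 0.3, 0.2]])
pi = stationary_distribution(P)

# Cross-check: distribution after 100 steps, starting from "no delay".
p0 = np.array([1.0, 0.0, 0.0])
p100 = p0 @ np.linalg.matrix_power(P, 100)

print("limiting probability of no delay:", pi[0])
```

The first component of the stationary distribution is the value to which the probability of no delay converges, whatever the initial distribution.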

Figure 3.

Distributed filtering error variances for different transition probability matrices.

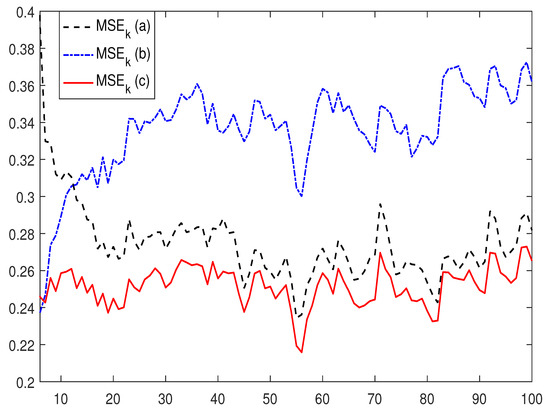

Finally, in order to show the performance of the proposed distributed filter, we conduct a comparative analysis between the proposed filter and two alternatives: the distributed filter obtained for the model without gain degradation and with independent white measurement noises, and the distributed filter obtained when the delays during transmission are considered independent. To compare the different distributed filters, the mean squared error (MSE) of each filter at each time instant k is calculated from 1000 independent simulations. Figure 4 shows that the MSE of the proposed filter is lower than that of the other filters, because the latter do not take into account all the hypotheses inherent to the model under study.

Figure 4.

MSE for distributed filter (a) for systems without gain degradation and with independent white measurement noises, (b) for systems with independent delays and (c) for the system at hand.
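The empirical MSE at each time instant is an average of squared errors over independent runs. The sketch below illustrates this computation on a toy problem that stands in for the filters compared above: a white unit-variance signal observed in noise of variance 0.5, estimated once with the LS linear gain and once with a mismatched estimator that ignores the noise. The model and both estimators are hypothetical and are not the paper's filters.

```python
import numpy as np

def mse_per_instant(x_true, x_est):
    """Average squared error over runs; inputs have shape (n_runs, n_steps)."""
    return np.mean((x_true - x_est) ** 2, axis=0)

rng = np.random.default_rng(3)
n_runs, n_steps = 1000, 50

x = rng.normal(size=(n_runs, n_steps))                  # signal, variance 1
y = x + rng.normal(scale=np.sqrt(0.5), size=x.shape)    # noisy observations

est_ls = y / 1.5    # LS linear estimate: Cov(x, y) / Var(y) = 1 / 1.5
est_naive = y       # mismatched estimate that ignores the noise

mse_ls = mse_per_instant(x, est_ls)
mse_naive = mse_per_instant(x, est_naive)

print("average MSE, LS estimate:   ", mse_ls.mean())
print("average MSE, naive estimate:", mse_naive.mean())
```

As in the comparison above, the estimator designed for the actual model attains the lower MSE, while the one built under mismatched hypotheses does not.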

5. Conclusions

This paper describes how LS distributed fusion linear filters and fixed-point smoothers were derived for a class of discrete-time multi-sensor systems affected by stochastic sensor gain degradation, correlated measurement noises and one- or two-step random transmission delays. The gain degradation in the different sensors is represented by independent white sequences of random variables with values in [0, 1], thus including the conventional missing signal phenomenon. The measurement noises are assumed to be correlated and cross-correlated at the same and at consecutive sampling times. The absence or presence of delays in the transmissions, due to the unreliability of the network, is described by different homogeneous discrete-time Markov chains. We address the distributed fusion estimation problem for networked systems with these characteristics, assuming that only the first and second-order moments of the processes involved in the observation model are available. Distributed filtering and fixed-point smoothing estimators are obtained as the LS matrix-weighted linear combination of the local ones. The filtering and fixed-point smoothing error variances, which are calculated offline, are used to measure the accuracy of the proposed distributed estimators.

As indicated, in this paper we consider transmission delays described by different homogeneous discrete-time Markov chains. In Shang [34], two types of time delays are considered simultaneously, namely, signal transmission delays and signal processing delays. Hence, a challenging topic for further research is to address the distributed fusion estimation problem considering these different types of delays.

Author Contributions

All authors contributed equally in deriving and implementing the proposed estimation algorithms and in writing this manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by Ministerio de Economía, Industria y Competitividad, Agencia Estatal de Investigación and Fondo Europeo de Desarrollo Regional FEDER (grant no. MTM2017-84199-P).

Conflicts of Interest

The authors declare they have no conflict of interest.

Appendix A. Proof of Theorem 2

The proof is performed in the following four steps.

- •

- •

- •

- Now, from (2) for and the model hypotheses, we have

  Taking into account that (this expression for the signal predictor is obtained by a reasoning analogous to that carried out in García-Ligero et al. [24]), from the OPL we obtain . Then, as is uncorrelated with , denoting we have

  and thus expression (15) is obtained.

  From (5) for and denoting , it is clear that

  Next, we compute the two expectations in (A1):

- •

- From expression (6) for the innovation and denoting , it is clear that

  Then, taking into account that and , we obtain (18).

  Now, using (2) for , the model assumptions and the fact that , it is clear that

  Then, taking into account that (19) is obtained.

□

Appendix B. Proof of Theorem 3

For from the OPL, it is clear that . Next, we derive expression (21) for .

In order to calculate for , we use (7) for and from we obtain

- ∗

- To determine the first expectation in (A4), we use (2) for and taking into account that by the OPL , we obtain

  Now, expressing in the first sum and using (7) for in the second one, we have

  and, again, from (7) for

- ∗

- then, expression (5) for together with and , leads us to

  Again using expression (7) of the local smoother, after some manipulation, we obtain

References

- Ding, D.; Wang, Z.; Shen, B. Recent advances on distributed filtering for stochastic systems over sensor networks. Int. J. Gen. Syst. 2014, 43, 372–386.

- Dong, H.; Wang, Z.; Ding, S.X.; Gao, H. A survey on distributed filtering and fault detection for sensor networks. Math. Probl. Eng. 2014, 2014, 858624.

- Li, W.; Wang, Z.; Wei, G.; Ma, L.; Hu, J.; Ding, D. A survey on multisensor fusion and consensus filtering for sensor networks. Discret. Dyn. Nat. Soc. 2015, 2015, 683701.

- Sun, S.L.; Lin, H.L.; Ma, J.; Li, X.Y. Multi-sensor distributed fusion estimation with applications in networked systems: A review paper. Inf. Fusion 2017, 38, 122–134.

- Yang, C.; Yang, Z.; Deng, Z. Robust weighted state fusion Kalman estimators for networked systems with mixed uncertainties. Inf. Fusion 2019, 45, 246–265.

- Xia, J.; Gao, S.; Qi, X.; Zhang, J.; Li, G. Distributed cubature H-infinity information filtering for target tracking against uncertain noise statistics. Signal Process. 2020, 177, 107725.

- Hu, Z.; Hu, J.; Yang, G. A survey on distributed filtering, estimation and fusion for nonlinear systems with communication constraints: New advances and prospects. Syst. Sci. Control Eng. 2020, 8, 189–205.

- Caballero-Águila, R.; García-Garrido, I.; Linares-Pérez, J. Information fusion algorithms for state estimation in multi-sensor systems with correlated missing measurements. Appl. Math. Comput. 2014, 226, 548–563.

- Pang, C.; Sun, S. Fusion predictors for multi-sensor stochastic uncertain systems with missing measurements and unknown measurement disturbances. IEEE Sens. J. 2015, 15, 4346–4354.

- García-Ligero, M.J.; Hermoso-Carazo, A.; Linares-Pérez, J. Distributed fusion estimation in networked systems with uncertain observations and Markovian random delays. Signal Process. 2015, 106, 114–122.

- Liu, Y.; Wang, Z.; Zhou, D. Optimal filtering for networked systems with stochastic sensor gain degradation. Automatica 2014, 50, 1521–1525.

- Liu, Y.; Wang, Z.; He, X.; Zhou, D.H. Minimum-variance recursive filtering over sensor networks with stochastic sensor gain degradation: Algorithms and performance analysis. IEEE Trans. Control Netw. Syst. 2016, 3, 265–274.

- Liu, Y.; Wang, Z.; He, X.; Ghinea, G.; Alsaadi, F.E. A Resilient Approach to Distributed Filter Design for Time-Varying Systems Under Stochastic Nonlinearities and Sensor Degradation. IEEE Trans. Signal Process. 2017, 65, 1300–1309.

- Feng, J.; Zeng, M. Descriptor recursive estimation for multiple sensors with different delay rates. Int. J. Control 2011, 84, 584–596.

- Li, N.; Sun, S.; Ma, J. Multi-sensor distributed fusion filtering for networked systems with different delay and loss rates. Digit. Signal Process. 2014, 34, 29–38.

- Chen, B.; Zhang, W.; Yu, L. Distributed fusion estimation with missing measurements, random transmission delays and packet dropouts. IEEE Trans. Automat. Control 2014, 59, 1961–1967.

- Sun, S.; Xiao, W. Distributed fusion filter for networked stochastic uncertain systems with transmission delays and packet dropouts. Signal Process. 2017, 130, 268–278.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Networked distributed fusion estimation under uncertain outputs with random transmission delays, packet losses and multi-packet processing. Signal Process. 2019, 156, 71–83.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Networked Fusion Filtering from Outputs with Stochastic Uncertainties and Correlated Random Transmission Delays. Sensors 2016, 16, 847.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Fusion estimation from multisensor observations with multiplicative noises and correlated random delays in transmission. Mathematics 2017, 5, 45.

- Han, C.; Zhang, H. Linear optimal filtering for discrete-time systems with random jump delays. Signal Process. 2009, 89, 3097–3104.

- Song, H.; Yu, L.; Zhang, W.A. H∞ filtering of network-based systems with random delay. Signal Process. 2009, 89, 615–622.

- Han, C.; Zhang, H.; Fu, M. Optimal filtering for networked systems with Markovian communication delays. Automatica 2013, 49, 3097–3104.

- García-Ligero, M.J.; Hermoso-Carazo, A.; Linares-Pérez, J. Least-squares estimators for systems with stochastic sensor gain degradation, correlated measurement noises and delays in transmission modelled by Markov chains. Int. J. Syst. Sci. 2020, 51, 731–745.

- Ge, X.; Han, Q.L.; Jiang, X. Distributed H∞ filtering over sensor networks with heterogeneous Markovian coupling intercommunication delays. IET Contr. Theory Appl. 2014, 9, 82–90.

- García-Ligero, M.J.; Hermoso-Carazo, A.; Linares-Pérez, J. Distributed and centralized fusion estimation from multiple sensors with Markovian delays. Appl. Math. Comput. 2012, 219, 2932–2948.

- Shang, Y. Couple-group consensus of continuous-time multi-agent systems under Markovian switching topologies. J. Frankl. Inst. 2015, 352, 4826–4844.

- Shang, Y. Consensus seeking over Markovian switching networks with time-varying delays and uncertain topologies. Appl. Math. Comput. 2016, 273, 1234–1245.

- Feng, J.; Wang, Z.; Zeng, M. Distributed weighted robust Kalman filter fusion for uncertain systems with autocorrelated and cross-correlated noises. Inf. Fusion 2013, 14, 76–86.

- Li, W.; Jia, Y.; Du, J. Distributed filtering for discrete-time linear systems with fading measurements and time-correlated noise. Digit. Signal Process. 2017, 60, 211–219.

- Tian, T.; Sun, S.; Lin, H. Distributed fusion filter for multi-sensor systems with finite-step correlated noises. Inf. Fusion 2019, 46, 128–140.

- Caballero-Águila, R.; Hermoso-Carazo, A.; Linares-Pérez, J. Least-Squares filtering algorithm in sensor networks with noise correlation and multiple random failures in transmission. Math. Probl. Eng. 2017, 2017, 1570719.

- García-Ligero, M.J.; Hermoso-Carazo, A.; Linares-Pérez, J. Estimation from a multisensor environment for systems with multiple packet dropouts and correlated measurement noises. Appl. Math. Model. 2017, 45, 324–332.

- Shang, Y. On the Delayed Scaled Consensus Problems. Appl. Sci. 2017, 7, 713.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).