An Efficient Iterative Method Based on Two-Stage Splitting Methods to Solve Weakly Nonlinear Systems

Abstract

1. Introduction

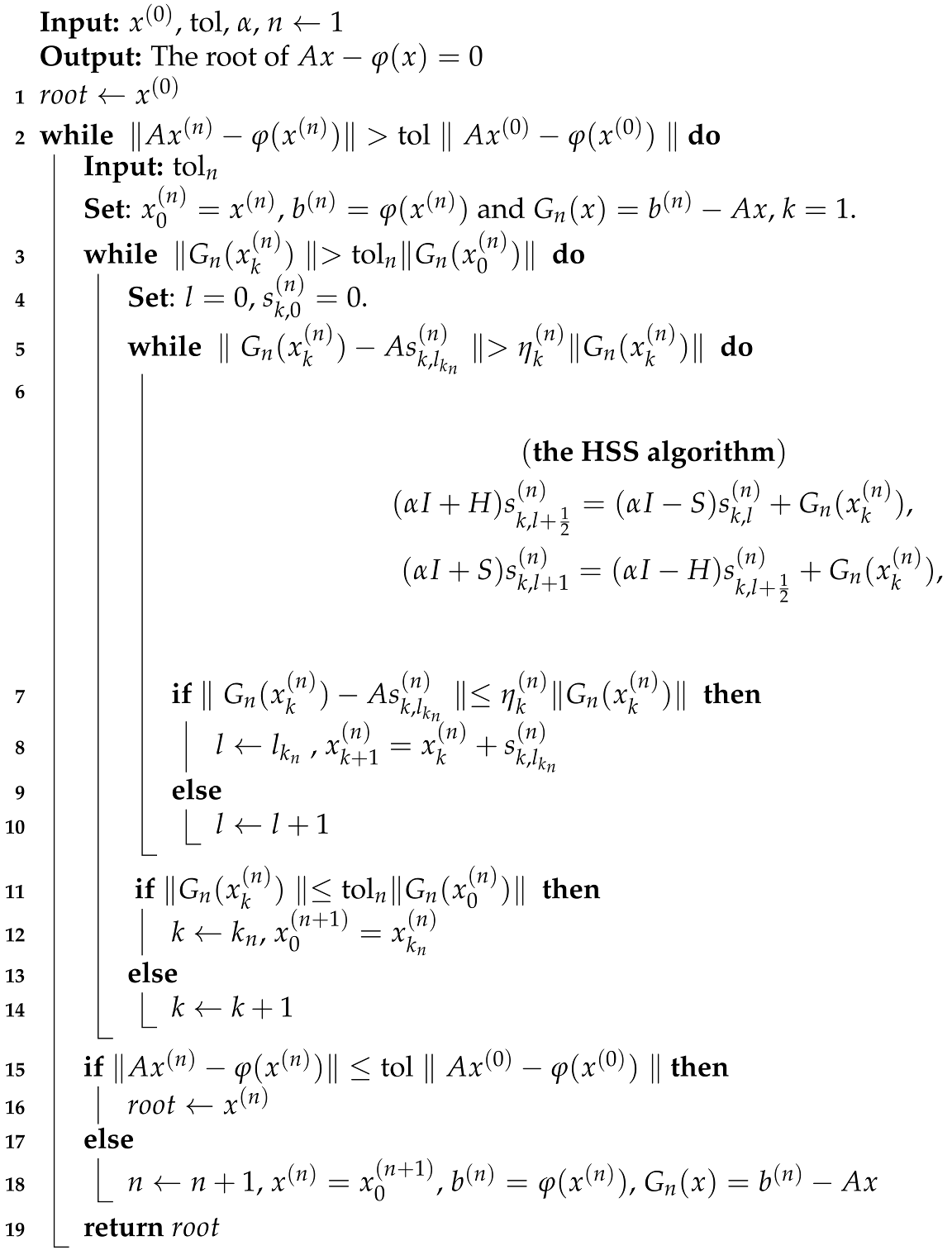

2. The New Algorithm

- (1) Consider the initial guess , , and the sequence of positive integers.
- (2) For until do:
  - (2.1) Set .
  - (2.2) For , apply Algorithm HSS as and obtain such that
  - (2.3) Set .
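The algorithm above relies on HSS sweeps as its inner solver. As a reminder of what one sweep does for a linear system $Ax = b$, here is a minimal sketch assuming the standard splitting $A = H + S$, with $H = (A + A^{T})/2$, $S = (A - A^{T})/2$ and a shift $\alpha > 0$. The function name `hss_solve`, the toy matrix, and the value of `alpha` are illustrative assumptions and do not come from this paper.

```python
import numpy as np

def hss_solve(A, b, alpha, tol=1e-10, max_iter=500):
    """Illustrative HSS iteration for A x = b.

    Assumes a real matrix A whose symmetric part is positive definite.
    Returns the approximate solution and the number of sweeps used.
    """
    n = A.shape[0]
    H = 0.5 * (A + A.T)   # symmetric (Hermitian) part
    S = 0.5 * (A - A.T)   # skew-symmetric (skew-Hermitian) part
    I = np.eye(n)
    x = np.zeros(n)
    b_norm = np.linalg.norm(b)
    for it in range(1, max_iter + 1):
        # Half step: (alpha I + H) x_half = (alpha I - S) x + b
        x_half = np.linalg.solve(alpha * I + H, (alpha * I - S) @ x + b)
        # Full step: (alpha I + S) x_new = (alpha I - H) x_half + b
        x = np.linalg.solve(alpha * I + S, (alpha * I - H) @ x_half + b)
        if np.linalg.norm(b - A @ x) <= tol * b_norm:
            return x, it
    return x, max_iter

# Hypothetical toy data, chosen only so that H is positive definite.
A = np.array([[4.0, 1.0, 0.0],
              [-1.0, 5.0, 2.0],
              [0.0, -1.0, 6.0]])
b = np.array([1.0, 2.0, 3.0])
x, sweeps = hss_solve(A, b, alpha=5.0)
print(sweeps, np.linalg.norm(b - A @ x))
```

Each sweep costs one solve with a shifted symmetric positive definite matrix and one with a shifted skew-symmetric matrix. Dense solves are used here only for brevity.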

2.1. Picard-HSS Iteration Method

- (1) Set ;
- (2) For , obtain from solving the following:
- (3) Set .
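The three steps above can be sketched as follows, assuming the usual Picard-HSS form for a weakly nonlinear system $Ax = \varphi(x)$: the nonlinear term is frozen at the current outer iterate, and a fixed number of HSS sweeps is applied to $Ax = \varphi(x_k)$. The update formulas are not visible in this section, so the formulas in the code, the name `picard_hss`, the fixed inner count `inner_its`, and the toy problem are all assumptions made for illustration.

```python
import numpy as np

def picard_hss(A, phi, x0, alpha, inner_its=5, tol=1e-10, max_outer=200):
    """Illustrative Picard-HSS loop for A x = phi(x) (assumed form)."""
    n = A.shape[0]
    H = 0.5 * (A + A.T)   # symmetric part
    S = 0.5 * (A - A.T)   # skew-symmetric part
    I = np.eye(n)
    x = np.asarray(x0, dtype=float).copy()
    r0 = np.linalg.norm(A @ x - phi(x))
    for k in range(max_outer):
        if np.linalg.norm(A @ x - phi(x)) <= tol * r0:
            return x, k
        rhs = phi(x)       # nonlinear term frozen at the outer iterate
        y = x.copy()       # step (1): start the inner iteration at x_k
        for _ in range(inner_its):   # step (2): HSS sweeps on A y = rhs
            y_half = np.linalg.solve(alpha * I + H, (alpha * I - S) @ y + rhs)
            y = np.linalg.solve(alpha * I + S, (alpha * I - H) @ y_half + rhs)
        x = y              # step (3): accept the last inner iterate
    return x, max_outer

# Hypothetical weakly nonlinear toy problem.
A = np.array([[4.0, 1.0, 0.0],
              [-1.0, 5.0, 2.0],
              [0.0, -1.0, 6.0]])
c = np.array([1.0, 2.0, 3.0])
phi = lambda v: 0.1 * np.sin(v) + c
x, outer = picard_hss(A, phi, np.zeros(3), alpha=5.0)
print(outer, np.linalg.norm(A @ x - phi(x)))
```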

2.2. Nonlinear HSS-Like Iteration Method

2.3. Our Proposed Iterative Scheme

To solve (11), one may use any inner solver; here, we use an HSS scheme. Next, for initial value and until apply the HSS scheme as:

- (1) Set .
- (2) For , apply algorithm HSS (l is the counter of the number of HSS iterations): and obtain such that
- (3) Set ( is the number of HSS inner iterations required to satisfy (14)).
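The outer and inner loop described above can be illustrated with a rough sketch. Equations (11) and (14) are not reproduced in this section, so the code rests on two loudly stated assumptions: that (11) is a linear correction system of the form $A s = -F(x_k)$ with $F(x) = Ax - \varphi(x)$, and that (14) is a relative residual test $\|F(x_k) + A s\| \le \eta \|F(x_k)\|$. The name `correction_hss`, the constant forcing term `eta`, and the toy problem are hypothetical, so this should not be read as the authors' exact algorithm.

```python
import numpy as np

def correction_hss(A, phi, x0, alpha, eta=0.1, tol=1e-10,
                   max_outer=200, max_inner=200):
    """Sketch: outer correction steps, inner HSS sweeps with a residual test.

    Assumed (not taken from the text): the inner system is A s = -F(x_k)
    and the inner stopping rule is ||F(x_k) + A s|| <= eta * ||F(x_k)||.
    """
    n = A.shape[0]
    H = 0.5 * (A + A.T)
    S = 0.5 * (A - A.T)
    I = np.eye(n)
    F = lambda v: A @ v - phi(v)
    x = np.asarray(x0, dtype=float).copy()
    r0 = np.linalg.norm(F(x))
    inner_counts = []
    for _ in range(max_outer):
        Fx = F(x)
        nF = np.linalg.norm(Fx)
        if nF <= tol * r0:
            break
        s = np.zeros(n)    # step (1): zero initial correction
        l = 0              # counter of HSS inner iterations
        # step (2): sweep until the assumed residual test holds
        while l < max_inner and np.linalg.norm(Fx + A @ s) > eta * nF:
            s_half = np.linalg.solve(alpha * I + H, (alpha * I - S) @ s - Fx)
            s = np.linalg.solve(alpha * I + S, (alpha * I - H) @ s_half - Fx)
            l += 1
        inner_counts.append(l)
        x = x + s          # step (3): update the outer iterate
    return x, inner_counts

# Hypothetical weakly nonlinear toy problem.
A = np.array([[4.0, 1.0, 0.0],
              [-1.0, 5.0, 2.0],
              [0.0, -1.0, 6.0]])
c = np.array([1.0, 2.0, 3.0])
phi = lambda v: 0.1 * np.sin(v) + c
x, inner_counts = correction_hss(A, phi, np.zeros(3), alpha=5.0)
print(len(inner_counts), inner_counts)
```

Because the inner loop stops as soon as the residual test is met, the number of sweeps adapts to each outer step instead of being fixed in advance. Any other convergent two-stage splitting could replace the two `np.linalg.solve` lines.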

Algorithm 1: JFHSS Algorithm

3. Convergence of the New Method

4. Application

- Case 1: , .
- Case 2: , .

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | | N | 30 | 40 | 60 | 70 | 80 | 100 |
|---|---|---|---|---|---|---|---|---|
| , | JFHSS | CPU | 0.65 | 1.81 | 7.46 | 13.21 | 24.45 | 59.32 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 9 | 11.08 | 10.75 | 10.75 | 10.41 | 10.91 |
| | | | $1.86\times10^{-11}$ | $3.35\times10^{-11}$ | $1.70\times10^{-11}$ | $3.41\times10^{-11}$ | $3.54\times10^{-11}$ | $2.43\times10^{-11}$ |
| | JFGPSS | CPU | 0.63 | 1.46 | 5.79 | 9.84 | 17.28 | 44.50 |
| | | | 12 | 12 | 11 | 11 | 11 | 11 |
| | | | 14 | 12 | 11 | 11 | 11 | 11 |
| | | | 8.78 | 8 | 7.64 | 7.45 | 7.90 | 8.73 |
| | | | $5.45\times10^{-11}$ | $1.89\times10^{-11}$ | $7.69\times10^{-11}$ | $1.02\times10^{-10}$ | $9.63\times10^{-11}$ | $5.09\times10^{-11}$ |
| | Nonlinear HSS-like | CPU | 0.82 | 2.03 | 8.26 | 14.60 | 24.65 | 61.35 |
| | | | 129 | 127 | 123 | 124 | 128 | 126 |
| | | | $1.45\times10^{-10}$ | $1.53\times10^{-10}$ | $1.25\times10^{-10}$ | $1.10\times10^{-10}$ | $8.60\times10^{-11}$ | $8.91\times10^{-11}$ |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 1.04 | 2.71 | 11.32 | 19.87 | 31.48 | 76.13 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 16.08 | 14.67 | 14.25 | 14.17 | 14 | 14.08 |
| | | | $1.47\times10^{-10}$ | $9.30\times10^{-11}$ | $7.92\times10^{-11}$ | $8.80\times10^{-11}$ | $9.56\times10^{-11}$ | $6.56\times10^{-11}$ |
| | JFGPSS | CPU | 0.85 | 2.20 | 8.57 | 14.26 | 23.50 | 54.90 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14.42 | 12.42 | 10.58 | 10 | 9.84 | 9.91 |
| | | | $1.57\times10^{-10}$ | $8.49\times10^{-11}$ | $3.33\times10^{-11}$ | $2.80\times10^{-11}$ | $2.38\times10^{-11}$ | $4.23\times10^{-11}$ |
| | Nonlinear HSS-like | CPU | 1.32 | 2.94 | 12.11 | 20.51 | 33.88 | 80.97 |
| | | | 188 | 172 | 167 | 166 | 165 | 165 |
| | | | $3.24\times10^{-10}$ | $2.50\times10^{-10}$ | $2.07\times10^{-10}$ | $2.32\times10^{-10}$ | $2.037\times10^{-10}$ | $1.81\times10^{-10}$ |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 0.80 | 2.24 | 9.34 | 14.56 | 23.77 | 60.21 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 11.08 | 11 | 10.67 | 10.75 | 10.50 | 11.25 |
| | | | $1.94\times10^{-10}$ | $1.70\times10^{-10}$ | $9.33\times10^{-11}$ | $9.94\times10^{-11}$ | $1.15\times10^{-10}$ | $8.77\times10^{-11}$ |
| | JFGPSS | CPU | 0.56 | 1.51 | 6.55 | 12.47 | 21.03 | 55.50 |
| | | | 12 | 12 | 11 | 12 | 11 | 11 |
| | | | 12 | 12 | 11 | 12 | 11 | 11 |
| | | | 8.92 | 8.34 | 8.72 | 8.75 | 9.55 | 10.63 |
| | | | $9.76\times10^{-11}$ | $7.68\times10^{-11}$ | $4.60\times10^{-10}$ | $6.35\times10^{-11}$ | $3.73\times10^{-10}$ | $3.78\times10^{-10}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 0.99 | 2.51 | 11.20 | 19.45 | 32.23 | 77.58 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 16.08 | 14.67 | 14.25 | 14.17 | 14 | 14.08 |
| | | | $5.88\times10^{-10}$ | $3.71\times10^{-10}$ | $3.20\times10^{-10}$ | $3.57\times10^{-10}$ | $3.75\times10^{-10}$ | $2.69\times10^{-10}$ |
| | JFGPSS | CPU | 0.85 | 2.20 | 8.58 | 14.02 | 23.22 | 54.94 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14.42 | 12.41 | 10.58 | 9.92 | 9.84 | 9.84 |
| | | | $6.26\times10^{-10}$ | $3.44\times10^{-10}$ | $1.31\times10^{-10}$ | $1.63\times10^{-10}$ | $1.08\times10^{-10}$ | $2.08\times10^{-10}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 0.81 | 2.23 | 10.41 | 18.89 | 31.31 | 81.74 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 14 | 14 | 14 |
| | | | 10.85 | 12.83 | 11.28 | 11.71 | 11.64 | 12.93 |
| | | | $1.47\times10^{-8}$ | $1.05\times10^{-8}$ | $7.50\times10^{-9}$ | $4.55\times10^{-9}$ | $3.08\times10^{-9}$ | $3.29\times10^{-9}$ |
| | JFGPSS | CPU | 0.66 | 1.70 | 7.95 | 14.30 | 25.44 | 63.80 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 14 | 14 | 14 |
| | | | 8.78 | 8 | 7.86 | 8.64 | 9.07 | 9.92 |
| | | | $8.02\times10^{-9}$ | $3.11\times10^{-8}$ | $3.40\times10^{-9}$ | $2.32\times10^{-9}$ | $1.61\times10^{-9}$ | $6.16\times10^{-10}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 1.06 | 2.90 | 13.55 | 21.72 | 38.94 | 87.18 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 13 | 14 | 13 |
| | | | 14.93 | 14.36 | 14.86 | 14.62 | 14.57 | 15 |
| | | | $2.06\times10^{-8}$ | $1.48\times10^{-8}$ | $9.76\times10^{-9}$ | $6.96\times10^{-9}$ | $6.58\times10^{-9}$ | $5.72\times10^{-9}$ |
| | JFGPSS | CPU | 0.95 | 2.45 | 10.03 | 17.81 | 29.06 | 69.57 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 14 | 14 | 13 |
| | | | 13.71 | 11.64 | 10.71 | 10.85 | 10.64 | 11.31 |
| | | | $1.91\times10^{-8}$ | $1.30\times10^{-8}$ | $6.08\times10^{-9}$ | $3.26\times10^{-9}$ | $6.35\times10^{-9}$ | $3.23\times10^{-9}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |

| | | N | 30 | 40 | 60 | 70 | 80 | 100 |
|---|---|---|---|---|---|---|---|---|
| , | JFHSS | CPU | 0.73 | 2.03 | 9.54 | 16.99 | 27.27 | 65.47 |
| | | | 11 | 11 | 11 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 13 | 13 | 13 |
| | | | 11.25 | 11.41 | 11.92 | 11.23 | 10.92 | 12 |
| | | | $1.64\times10^{-10}$ | $1.18\times10^{-10}$ | $1.43\times10^{-11}$ | $1.32\times10^{-11}$ | $1.95\times10^{-11}$ | $1.56\times10^{-11}$ |
| | JFGPSS | CPU | 0.57 | 1.53 | 7.19 | 12.59 | 19.42 | 53.59 |
| | | | 11 | 11 | 11 | 11 | 11 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 13 |
| | | | 9 | 8.25 | 8.75 | 8.92 | 8.25 | 9 |
| | | | $5.45\times10^{-11}$ | $8.19\times10^{-11}$ | $8.56\times10^{-11}$ | $7.6\times10^{-11}$ | $5.27\times10^{-11}$ | $4.81\times10^{-12}$ |
| | Nonlinear HSS-like | CPU | 0.82 | 2.30 | 9.86 | 14.38 | 29.31 | 59.91 |
| | | | 128 | 128 | 123 | 124 | 121 | 126 |
| | | | $1.81\times10^{-10}$ | $1.43\times10^{-10}$ | $1.25\times10^{-10}$ | $1.10\times10^{-10}$ | $1.15\times10^{-10}$ | $1.06\times10^{-10}$ |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 0.98 | 2.63 | 11.73 | 20.51 | 36.07 | 77.20 |
| | | | 11 | 11 | 11 | 11 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 13 | 13 |
| | | | 16 | 15 | 14.50 | 14.67 | 14.30 | 14.62 |
| | | | $2.49\times10^{-10}$ | $2.26\times10^{-10}$ | $2.03\times10^{-10}$ | $2.30\times10^{-10}$ | $2.61\times10^{-11}$ | $1.74\times10^{-11}$ |
| | JFGPSS | CPU | 0.88 | 2.26 | 8.61 | 15.08 | 25.83 | 60.7 |
| | | | 11 | 11 | 11 | 11 | 12 | 11 |
| | | | 12 | 12 | 12 | 12 | 13 | 12 |
| | | | 14.33 | 12.08 | 10.58 | 10.66 | 9.69 | 11 |
| | | | $2.04\times10^{-10}$ | $2.91\times10^{-10}$ | $1.91\times10^{-10}$ | $1.09\times10^{-10}$ | $1.64\times10^{-11}$ | $1.72\times10^{-10}$ |
| | Nonlinear HSS-like | CPU | 1.15 | 3.85 | 12.52 | 19.61 | 37.70 | 79.26 |
| | | | 187 | 171 | 166 | 166 | 164 | 164 |
| | | | $3.68\times10^{-10}$ | $3.07\times10^{-10}$ | $2.48\times10^{-10}$ | $2.17\times10^{-10}$ | $2.42\times10^{-10}$ | $2.13\times10^{-10}$ |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 0.72 | 2.28 | 9.39 | 16.62 | 28.53 | 67.23 |
| | | | 11 | 11 | 11 | 12 | 11 | 11 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 11.41 | 11.33 | 11.41 | 11.75 | 12.08 | 12.34 |
| | | | $1.62\times10^{-10}$ | $2.01\times10^{-10}$ | $1.64\times10^{-10}$ | $1.92\times10^{-10}$ | $2.89\times10^{-10}$ | $2.47\times10^{-10}$ |
| | JFGPSS | CPU | 0.69 | 1.97 | 8.85 | 16.53 | 26.53 | 70.80 |
| | | | 11 | 11 | 11 | 11 | 12 | 11 |
| | | | 12 | 12 | 12 | 12 | 13 | 12 |
| | | | 10.91 | 11.16 | 11 | 11.42 | 11.42 | 12.34 |
| | | | $2.18\times10^{-10}$ | $1.22\times10^{-10}$ | $1.21\times10^{-10}$ | $8.35\times10^{-11}$ | $1.15\times10^{-10}$ | $1.17\times10^{-10}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 0.97 | 2.59 | 11.06 | 20.24 | 33.75 | 79.80 |
| | | | 11 | 11 | 11 | 11 | 11 | 11 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 15.92 | 15.08 | 14.50 | 14.58 | 14.66 | 14.92 |
| | | | $9.65\times10^{-10}$ | $4.15\times10^{-10}$ | $4.31\times10^{-10}$ | $4.40\times10^{-10}$ | $3.82\times10^{-10}$ | $3.39\times10^{-10}$ |
| | JFGPSS | CPU | 0.88 | 2.18 | 8.75 | 14.60 | 25.05 | 64.79 |
| | | | 11 | 11 | 11 | 11 | 11 | 11 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14.50 | 12.42 | 10.66 | 10.42 | 10.58 | 11.08 |
| | | | $5.06\times10^{-10}$ | $3.61\times10^{-10}$ | $2.53\times10^{-10}$ | $3.32\times10^{-10}$ | $2.95\times10^{-10}$ | $1.97\times10^{-10}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 0.77 | 2.33 | 10.82 | 19.56 | 32.13 | 85.32 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 14 | 14 | 14 |
| | | | 10.85 | 12.83 | 11.28 | 11.71 | 11.64 | 12.92 |
| | | | $1.44\times10^{-8}$ | $1.36\times10^{-8}$ | $7.47\times10^{-9}$ | $4.56\times10^{-9}$ | $3.8\times10^{-9}$ | $3.54\times10^{-9}$ |
| | JFGPSS | CPU | 0.65 | 1.74 | 8.00 | 14.54 | 25.02 | 64.19 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 14 | 14 | 14 |
| | | | 8.78 | 8 | 8.28 | 8.64 | 9.07 | 9.86 |
| | | | $8.03\times10^{-9}$ | $1.44\times10^{-8}$ | $3.35\times10^{-9}$ | $4.76\times10^{-9}$ | $1.69\times10^{-9}$ | $1.085\times10^{-9}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |
| , | JFHSS | CPU | 1.08 | 2.97 | 11.27 | 22.45 | 39.98 | 89.15 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 14 | 14 | 14 |
| | | | 14.93 | 14.35 | 14.43 | 14.62 | 14.57 | 15 |
| | | | $2.01\times10^{-8}$ | $1.49\times10^{-8}$ | $8.73\times10^{-9}$ | $6.97\times10^{-9}$ | $6.57\times10^{-9}$ | $5.72\times10^{-9}$ |
| | JFGPSS | CPU | 0.99 | 2.41 | 10.15 | 17.98 | 29.33 | 67.45 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 14 | 14 | 14 | 14 | 14 | 13 |
| | | | 13.78 | 11.64 | 10.71 | 10.86 | 10.64 | 11.31 |
| | | | $1.70\times10^{-8}$ | $1.30\times10^{-8}$ | $6.02\times10^{-9}$ | $3.23\times10^{-9}$ | $6.34\times10^{-9}$ | $3.21\times10^{-9}$ |
| | Nonlinear HSS-like | - | - | - | - | - | - | - |
| | Picard-HSS | - | - | - | - | - | - | - |

| | | N | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 |
|---|---|---|---|---|---|---|---|---|---|---|
| | HSS | | 18 | 15 | 10.5 | 9 | 8 | 6 | 5.75 | 5.75 |
| | | | 0.7226 | 0.6930 | 0.6743 | 0.6613 | 0.6513 | 0.6485 | 0.6459 | 0.6467 |
| | | | 0.4047 | 0.3062 | 0.2462 | 0.2059 | 0.1769 | 0.1551 | 0.1381 | 0.1244 |
| | | | 0.8971 | 0.9211 | 0.9360 | 0.9461 | 0.9535 | 0.9590 | 0.9634 | 0.9669 |
| | | | 16.1290 | 12.1951 | 9.8039 | 8.1967 | 7.0423 | 6.1728 | 5.4945 | 4.9505 |
| | | | 0.7236 | 0.6974 | 0.6783 | 0.6674 | 0.6608 | 0.6574 | 0.6562 | 0.6569 |
| | GPSS | | 11.25 | 9.5 | 8.5 | 7.5 | 7 | 6.5 | 6 | 5.5 |
| | | | 0.5428 | 0.5140 | 0.5076 | 0.4983 | 0.4959 | 0.4902 | 0.4982 | 0.4983 |
| | HSS | | 26 | 22 | 16 | 13.5 | 12 | 10 | 8.75 | 8 |
| | | | 0.7911 | 0.7663 | 0.6499 | 0.7399 | 0.7373 | 0.7302 | 0.7302 | 0.7242 |
| | | | 0.1638 | 0.0938 | 0.0606 | 0.0424 | 0.0313 | 0.0241 | 0.0191 | 0.0155 |
| | | | 0.9579 | 0.9757 | 0.9842 | 0.9889 | 0.9918 | 0.9937 | 0.9950 | 0.9959 |
| | | | 32.2581 | 24.39 | 19.61 | 16.3934 | 14.0845 | 12.35 | 10.99 | 9.9010 |
| | | | 0.7953 | 0.77 | 0.7512 | 0.7439 | 0.7343 | 0.728 | 0.7282 | 0.7270 |
| | GPSS | | 15 | 13 | 11 | 10 | 9 | 8 | 7.5 | 7 |
| | | | 0.6424 | 0.6212 | 0.6144 | 0.6063 | 0.6036 | 0.6028 | 0.6090 | 0.6033 |

| | | q | 50 | 100 | 200 | 400 | 1200 | 2000 |
|---|---|---|---|---|---|---|---|---|
| | | | 1.4 | 1.6 | 2.5 | 8 | 21.5 | 34 |
| | JFHSS | CPU | 1.23 | 1.42 | 1.29 | 1.53 | 1.71 | 1.86 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 11.34 | 11.67 | 12 | 12.75 | 16.25 | 20.34 |
| | | | $1.54\times10^{-14}$ | $2.2\times10^{-14}$ | $1.47\times10^{-14}$ | $6.87\times10^{-15}$ | $8.22\times10^{-15}$ | $1.3\times10^{-14}$ |
| | Nonlinear HSS-like | CPU | 2.03 | 2.39 | 2.25 | 2.31 | 2.42 | 2.45 |
| | | | 129 | 137 | 140 | 146 | 160 | 167 |
| | | | $1.1\times10^{-14}$ | $2.25\times10^{-14}$ | $2.31\times10^{-14}$ | $2.23\times10^{-14}$ | $2.4\times10^{-14}$ | $2.3\times10^{-14}$ |
| | Picard-HSS | CPU | 7.96 | 8.31 | 7.76 | 8 | 8.60 | 8.86 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 121.1 | 131.91 | 126.75 | 145.34 | 146.34 | 147 |
| | | | $1.1\times10^{-14}$ | $1.24\times10^{-14}$ | $1.57\times10^{-14}$ | $1.96\times10^{-14}$ | $1.84\times10^{-14}$ | $1.6\times10^{-14}$ |
| | | | 0.8 | 1.4 | 2.6 | 4.8 | 13 | 20.5 |
| | JFHSS | CPU | 5.25 | 5.31 | 5.5 | 5.93 | 6.21 | 6.28 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 13.66 | 14.58 | 15.083 | 16.08 | 17.34 | 17.58 |
| | | | $2.42\times10^{-14}$ | $6.04\times10^{-15}$ | $6.36\times10^{-15}$ | $1.96\times10^{-14}$ | $6.15\times10^{-15}$ | $8.60\times10^{-15}$ |
| | Nonlinear HSS-like | CPU | 8.87 | 11.828 | 10.02 | 10.31 | 11.28 | 11.85 |
| | | | 161 | 209 | 178 | 186 | 201 | 207 |
| | | | $1.5\times10^{-14}$ | $1.59\times10^{-14}$ | $1.46\times10^{-14}$ | $1.57\times10^{-14}$ | $1.615\times10^{-14}$ | $1.46\times10^{-14}$ |
| | Picard-HSS | CPU | 50.81 | 50.01 | 51.85 | 53.34 | 56.32 | 59.95 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 177.16 | 179.1 | 183.50 | 189.34 | 202.75 | 213.25 |
| | | | $7.7\times10^{-15}$ | $9.67\times10^{-15}$ | $1.11\times10^{-14}$ | $1.23\times10^{-14}$ | $1.22\times10^{-14}$ | $1.26\times10^{-14}$ |
| | | | 0.7 | 1 | 1.8 | 3.3 | 8.9 | 14.2 |
| | JFHSS | CPU | 21.68 | 18.23 | 18.65 | 19.156 | 20.53 | 21.39 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 21 | 17.39 | 18.17 | 18.75 | 19.91 | 20.84 |
| | | | $1.61\times10^{-14}$ | $6.73\times10^{-15}$ | $9.15\times10^{-15}$ | $8.39\times10^{-15}$ | $7.7\times10^{-15}$ | $4.71\times10^{-15}$ |
| | Nonlinear HSS-like | CPU | 38.57 | 31.78 | 33.50 | 34.65 | 36.56 | 37.70 |
| | | | 246 | 206 | 213 | 221 | 235 | 242 |
| | | | $1.17\times10^{-14}$ | $1.26\times10^{-14}$ | $1.26\times10^{-14}$ | $1.16\times10^{-14}$ | $1.19\times10^{-14}$ | $1.22\times10^{-14}$ |
| | Picard-HSS | CPU | 219.54 | 217.45 | 266.83 | 225.37 | 228.60 | 248.35 |
| | | | 12 | 12 | 12 | 12 | 12 | 12 |
| | | | 219.54 | 248.58 | 230.75 | 252 | 258.75 | 264.50 |
| | | | $6.12\times10^{-15}$ | $7.7\times10^{-15}$ | $8.9\times10^{-15}$ | $1.0\times10^{-14}$ | $1.1\times10^{-14}$ | $1.1\times10^{-14}$ |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Amiri, A.; Darvishi, M.T.; Cordero, A.; Torregrosa, J.R. An Efficient Iterative Method Based on Two-Stage Splitting Methods to Solve Weakly Nonlinear Systems. Mathematics 2019, 7, 815. https://doi.org/10.3390/math7090815
