1. Introduction

Consider the following experimental situation. A listener is exposed to a sequence of auditory stimuli, generated by a stochastic chain, while electroencephalographic (EEG) signals are recorded from his scalp. Starting from Von Helmholtz [

1], a classical conjecture in neurobiology claims that the listener’s brain automatically identifies statistical regularities in the sequence of stimuli (see, for instance, [

2,

3]). If this is the case, then a signature of the stochastic chain generating the stimuli should somehow be encoded in the brain activity. The question is whether this signature can be identified in the EEG data recorded during the experiment. The goal of this paper is to discuss a new probabilistic framework in which this conjecture can be formally addressed.

To model the relationship between the random chain of auditory stimuli and the corresponding EEG data, we introduce a new class of stochastic processes. A process in this class has two components. The first one is a stochastic chain taking values in the set of auditory units. The second one is a sequence of functions corresponding to the sequence of EEG chunks recorded during the exposure of the successive auditory stimuli.

We use a stochastic chain with memory of variable length to model the dependence from the past characterizing the sequence of auditory stimuli. Stochastic chains with memory of variable length were introduced by Rissanen [

4], as a universal system for data compression. In his seminal paper, Rissanen observed that in many real life stochastic chains the dependence from the past has not a fixed length. Instead, it changes at each step as a function of the past itself. He called a

context the smallest final string of past symbols containing all the information required to predict the next symbol. The set of all contexts defines a partition of the past and can be represented by a rooted and labeled oriented tree. For this reason, many authors call stochastic chains with memory of variable length

context tree models. We adopt this terminology here. A non-exhaustive list of articles on context tree models, with applications in biology and linguistics, includes [

5,

6,

7,

8,

9,

10,

11,

12,

13].

An interesting point about stochastic chains with memory of variable length with finite context trees is that they are dense in the

-topology in the class of chains of infinite order with continuous and non-null transition probabilities and summable continuity rates. This result follows easily from Fernández and Galves [

14] and Duarte et al. [

15]. We refer the reader to these articles for definitions and more details.

Besides modeling the chain of auditory units, we must also model the relationship between the chain of stimuli and the sequence of EEG chunks. To that end, we assume that at each time step a new EEG chunk is chosen according to a probability measure (defined on suitable class of functions) which depends only on the context assigned to the sequence of auditory units generated up to that time. In particular, this implies that to describe the new class of stochastic chains introduced in this paper, we also need to consider a family of probability measures on the set of functions corresponding to the EEG chunks, indexed by the contexts of the context tree characterizing the chain of auditory stimuli.

In this probabilistic framework, the neurobiological question can now be rigorously addressed as follows. Is it possible to retrieve the context tree characterizing the chain of stimuli from the corresponding EEG data? This is a problem of statistical model selection in the class of stochastic processes we have just informally described.

This article is organized as follows. In

Section 2, we provide an informal overview of our approach. In

Section 3, we introduce the notation, recall what is a

context tree model and introduce the new class of

sequences of random objects driven by context tree models. A statistical procedure to select, given the data, a member on the class of sequences of random objects driven by context tree models is presented in

Section 4. The theoretical result supporting the proposed method, namely Theorem 1, is given in the same section. In

Section 5, we conduct a brief simulation study to illustrate the statistical selection procedure presented in

Section 4. The proof of Theorem 1 is given in

Section 6.

2. Informal Presentation of Our Approach

Volunteers are exposed to sequences of auditory stimuli generated by a context tree models while EEG signals are recorded. The auditory units used as stimuli are strong beats, weak beats or silent units, represented by symbols and 0, respectively.

The way the sequence of auditory units was generated can be informally described as follows. Start with the deterministic sequence

Then, replace each weak beat (symbol 1) by a silent unit (symbol 0) with probability in an independent way.

An example of a sequence produced by this procedure acting on the basic sequence would be

In the sequel, this stochastic chain is denoted by the symbols .

The stochastic chain just described can be generated step by step by an algorithm using only information from the past. We impose to the algorithm the condition that it uses, at each step, the shortest string of past symbols necessary to generate the next symbol.

This algorithm can be described as follows. To generate , given the past , we first look to the last symbol .

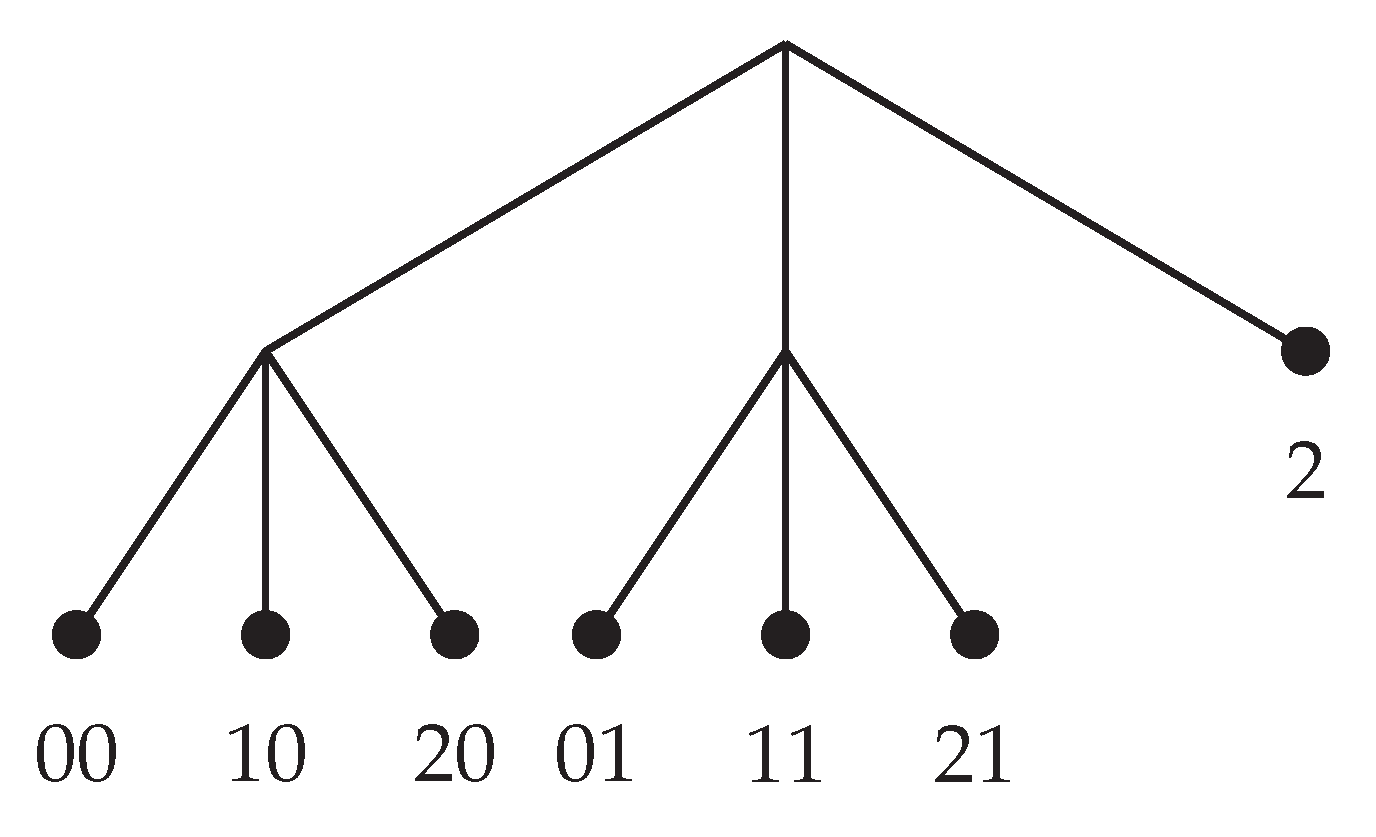

The algorithm described above is characterized by two elements. The first one is a partition of the set of all possible sequences of past units. This partition is represented by the set

In partition

, the string 00 represents the set of all strings ending by the ordered pair

; 10 represents the set of all strings ending by the ordered pair

, …; and finally the symbol 2 represents the set of all strings ending by 2. Following Rissanen [

4], let us call

context any element of this partition.

For instance, if

the context associated to this past sequence is 01.

The partition

of the past as described above can be represented by a rooted and labeled

tree (see

Figure 1) where each element of the partition is described as a leaf of the tree.

In the construction described above, for each sequence of past symbols, the algorithm first identifies the corresponding context w in the partition . Once the context w is identified, the algorithm chooses a next symbol using the transition probability . In other terms, each context w in defines a probability measure on . The family of transition probabilities indexed by elements of the partition is the second element characterizing the algorithm.

The families of transition probabilities associated to

are shown in

Table 1.

Using the notion of context tree, the neurobiological conjecture can now be rephrased as follows. Is the brain able to identify the context tree generating the sample of auditory stimuli? From an experimental point of view, the question is whether it is possible to retrieve the tree presented in

Figure 1 from the corresponding EEG data. To deal with this question we introduce a new statistical model selection procedure described below.

Let be the chunk of EEG data recorded while the volunteer is exposed to the auditory stimulus . Observe that is a continuous function taking values in , where is the number of electrodes. Its domain is the time interval of length, say T, during which the acoustic stimulus is presented.

The statistical procedure introduced in the paper can be informally described as follows. Given a sample of auditory stimuli and associated EEG chunks and for a suitable initial integer , do the following.

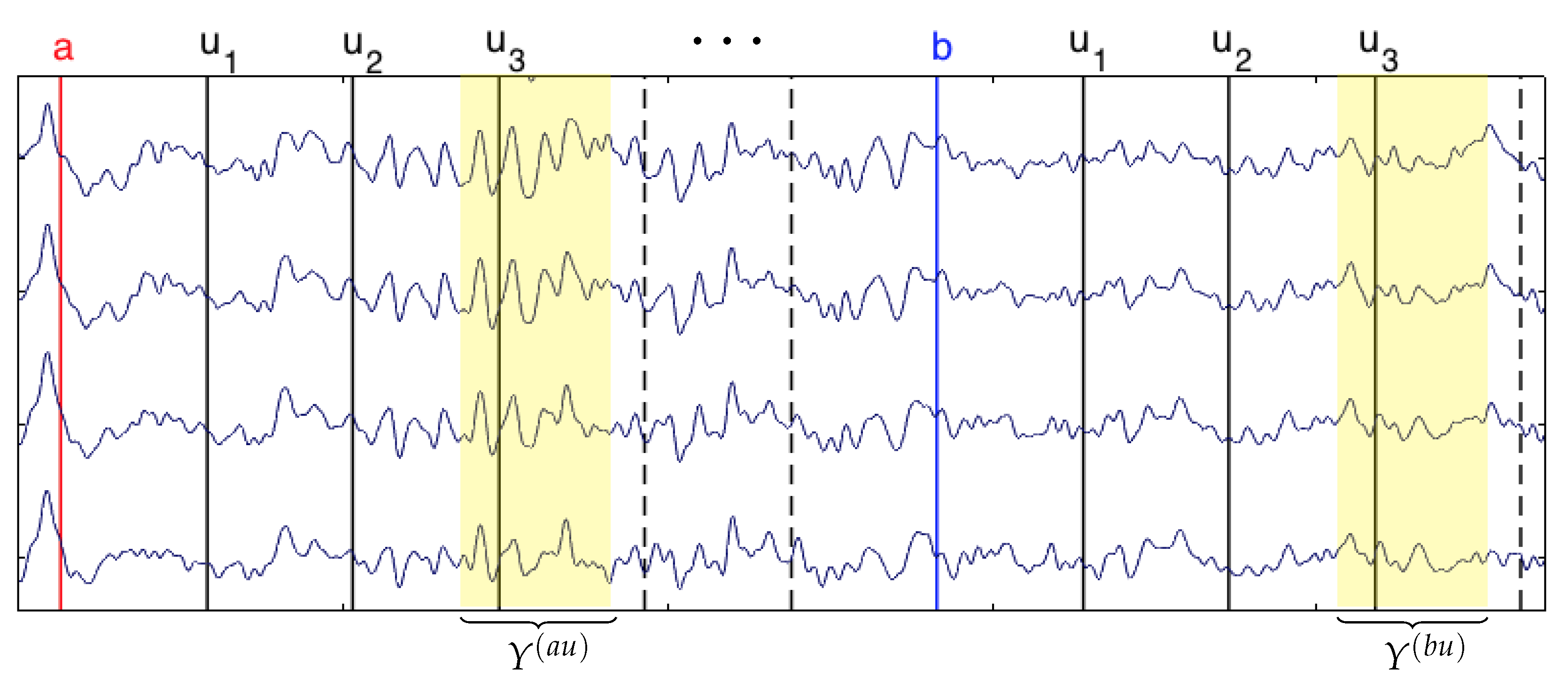

For each string of symbols in , identify all occurrences in the sequence of the string , obtained by concatenating the symbol and the string .

For each

, define the subsample of all EEG chunks

such that

(see

Figure 2).

For any pair , test the null hypothesis that the law of the EEG chunks and collected at Step 2 are equal.

- (a)

If the null hypothesis is not rejected for any pair of final symbols a and b, we conclude that the occurrence of a or b before the string do not affect the law of EEG chunks. Then, we start again the procedure with the one step shorter sequence .

- (b)

If the null hypothesis is rejected for at least one pair of final symbols a and b, we conclude that the law of EEG chunks depend on the entire string and we stop the pruning procedure.

We keep pruning the sequence until the null-hypothesis is reject for the first time.

Call the tree constituted by the strings which remained after the pruning procedure.

The question is whether coincides with the context tree generating the sequence of auditory stimuli.

An important technical issue must be clarified at this point, namely, how to test the equality of the laws of two subsamples of EEG chunks. This is done using the projective method informally explained below.

Suppose we have two samples of random functions, each sample composed by independent realizations of some fixed law. To test whether the two samples are generated by the same law, we choose at random a “direction” and project each function in the samples in this direction. This produces two new samples of real numbers. Now, we test whether the samples of the projected real numbers have the same law. Under suitable conditions, a theorem by Cuesta-Albertos et al. [

16] ensures that for almost all directions if the test does not reject the null hypothesis that the projected samples have the same law, then the original samples also have the same law.

The arguments informally sketched in this section are formally developed in the subsequent sections.

3. Notation and Definitions

Let A be a finite alphabet. Given two integers with , the string of symbols in A is often denoted by ; its length is . The empty string is denoted by ∅ and its length is . Fixing two strings u and v of elements of A, we denote by the string in obtained by the concatenation of u and v. By definition for any string . The string u is said to be a suffix of v if there exists a string s satisfying . This relation is denoted by . When , we say that u is a proper suffix of v and write . Hereafter, the set of all finite strings of symbols in A is denoted by . For any finite string with , we write to denote the one-step shorter string .

Definition 1. A finite subset τ of is a context tree if it satisfies the following conditions:

- 1.

Suffix Property. For no we have with .

- 2.

Irreducibility. No string belonging to τ can be replaced by a proper suffix without violating the suffix property.

The set can be identified with the set of leaves of a rooted tree with a finite set of labeled branches. The elements of are always denoted by .

The height of the context tree is defined as In the present paper, we only consider context trees with finite height.

Definition 2. Let τ and be two context trees. We say that τ is smaller than and write , if for every there exists such that .

Given a context tree , let be a family of probability measures on A indexed by the elements of . The pair is called a probabilistic context tree on A. Each element of is called a context. For any string with , we write to denote the only context in which is a suffix of .

Definition 3. A probabilistic context tree with height is irreducible if for any and there exist a positive integer and symbols such that Definition 4. Let be a probabilistic context tree on A. A stochastic chain taking values in A is called a context tree model compatible with if

- 1.

for any and any finite string such that , it holds thatwhere is the only context in τ which is a suffix of . - 2.

For any , there exists such that

With this notation, we can now introduce the class of random objects driven by a context tree model.

Definition 5. Let A be a finite alphabet, a probabilistic context tree on A, a measurable space and a family of probability measures on . The bivariate stochastic chain taking values in is a sequence of random objects driven by a context tree model compatible with and if the following conditions are satisfied:

- 1.

is a context tree model compatible with .

- 2.

The random elements are -measurable. Moreover, for any integers , any string and any sequence of -measurable sets,where is the context in τ assigned to the string of symbols .

Definition 6. A sequence of random objects driven by a context tree model compatible with and is identifiable if for any context there exists a context such that suf = suf and

The process is called the stimulus chain and is called the response chain.

The experimental situation described in

Section 2 can now be formally presented as follows.

The stimulus chain is a context tree model taking values in an alphabet having as elements symbols indicating the different types of auditory units appearing in the sequence of stimuli. We call its probabilistic context tree.

Each element of the response chain represents the EEG chunk recorded while the volunteer is exposed to the auditory stimulus . Thus, is a function taking values in , where T is the time distance between the onsets of two consecutive auditory stimuli and d the number of electrodes used in the analysis. The sample space F is the Hilbert space of -valued functions on having square integrable components. The Hilbert space F is endowed with its usual Borel -algebra .

Finally, is a family of probability measures on describing the laws of the EEG chunks.

From now on, the pair always denotes the Hilbert space endowed with its usual Borel -algebra.

4. Statistical Selection for Sequences of Random Objects Driven by Context Tree Models

Let , with and for , be a sample produced by a sequence of random objects driven by a context tree model compatible with and . Before introducing the statistical selection procedure, we need two more definitions.

Definition 7. Let τ be a context tree and fix a finite string . We define the branch in τ induced by s as the set . The set is called a terminal branch if for all it holds that for some .

Given a sample

of symbols in

A and a finite string

, the number of occurrences of

u in the sample

is defined as

Definition 8. Given integers , an admissible context tree of maximal height L for the sample of symbols in A, is any context tree τ satisfying

- 1.

if and only if and .

- 2.

Any string with is a suffix of some or has a suffix .

For any pair of integers

and any string

with

, call

the set of indexes belonging to

in which the string

u appears in sample

, that is

Observe that by definition . If , we set for each . Thus, is the subsample of induced by the string u.

Given

such that

and

, we define the empirical distribution associated to the projection of the sample

onto the direction

h as

where for any pair of functions

,

For a given pair

, with

and

, the Kolmogorov–Smirnov distance between the empirical distributions

and

is defined by

Finally, we define for any pair

such that

and

Our selection procedure can now be described as follows. Fix an integer and let be the largest admissible context tree of maximal height L for the sample . The largest means that if is any other admissible context tree of maximal height L for the sample , then

For any string

such that

is a terminal branch, we test the null hypothesis

using the test statistic

where

is a realization of a d-dimensional Brownian motion in

.

We reject the null hypothesis

when

, where

is a suitable threshold. When the null hypothesis

is not rejected, we prune the branch

in

and set as a new candidate context tree

On the other hand, if the null hypothesis is rejected, we keep in and stop testing for strings such that .

In each pruning step, take a string that induces a terminal branch in and has not been tested yet. This pruning procedure is repeated until no more pruning is performed. We denote by the final context tree obtained by this procedure. The formal description of the above pruning procedure is provided in Algorithm 1 as pseudocode.

| Algorithm 1 Pseudocode describing the pruning procedure used to select the tree . |

- Input:

A sample with and for , a positive threshold c and a positive integer L. - Output:

A tree

- 1:

- 2:

Flag “not visited” for all string s such that - 3:

for k in L to 1 do - 4:

while : , Flag “not visited” and is a terminal branch do - 5:

Choose a s such that , Flag “not visited” and is a terminal branch - 6:

Compute the test statistic to test - 7:

if then - 8:

Flag “visited” - 9:

else - 10:

- 11:

end if - 12:

end while - 13:

end for - 14:

Return

|

To state the consistency theorem, we need the following definitions.

Definition 9. A probability measure P defined on satisfies Carleman condition if all the absolute moments , are finite and Definition 10. Let P be a probability measure on . We say that P is continuous if is continuous for any , where is defined by Let V be a finite set of indexes and be a family of probability measures on . We say that is continuous if for all , the probability measure is continuous.

In what follows, let

, where

We say that

slowly enough as

if

Theorem 1. Let be a sample produced by a identifiable sequence of random objects driven by a context tree model compatible with and , and let be the context tree selected from the sample by Algorithm 1 with and threshold , where . If is irreducible and is continuous and satisfies Carleman condition, then for slowly enough as , The proof of Theorem 1 is presented in

Section 6.

5. Simulation Study

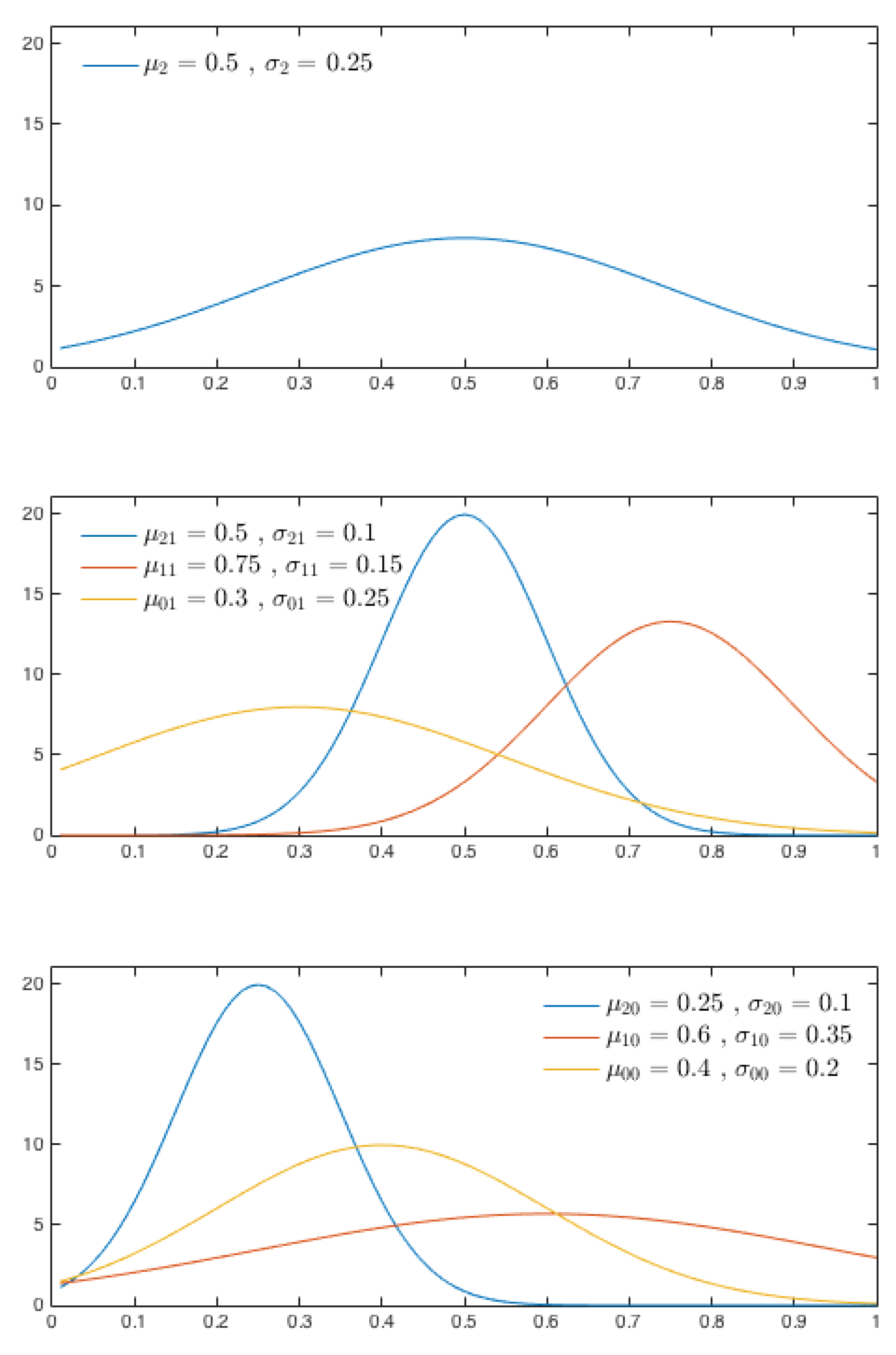

In this section, we illustrate the performance of Algorithm 1 by applying it in a toy example. We consider the context tree model compatible with

described in

Section 2 with

. For each

, we assume

is the law of a diffusion process with drift coefficient

and constant diffusion coefficient. For simplicity, all diffusion coefficients are assumed to be 1. For each context

, we assume

, where

K is a positive constant and

is the density of a Gaussian random variable with mean

and standard deviation

, restricted to the interval

. In the simulation, we take

. The shapes of the functions

and corresponding values of

and

are shown in

Figure 3. One can check that the assumptions of Theorem 1 are satisfied by this toy example.

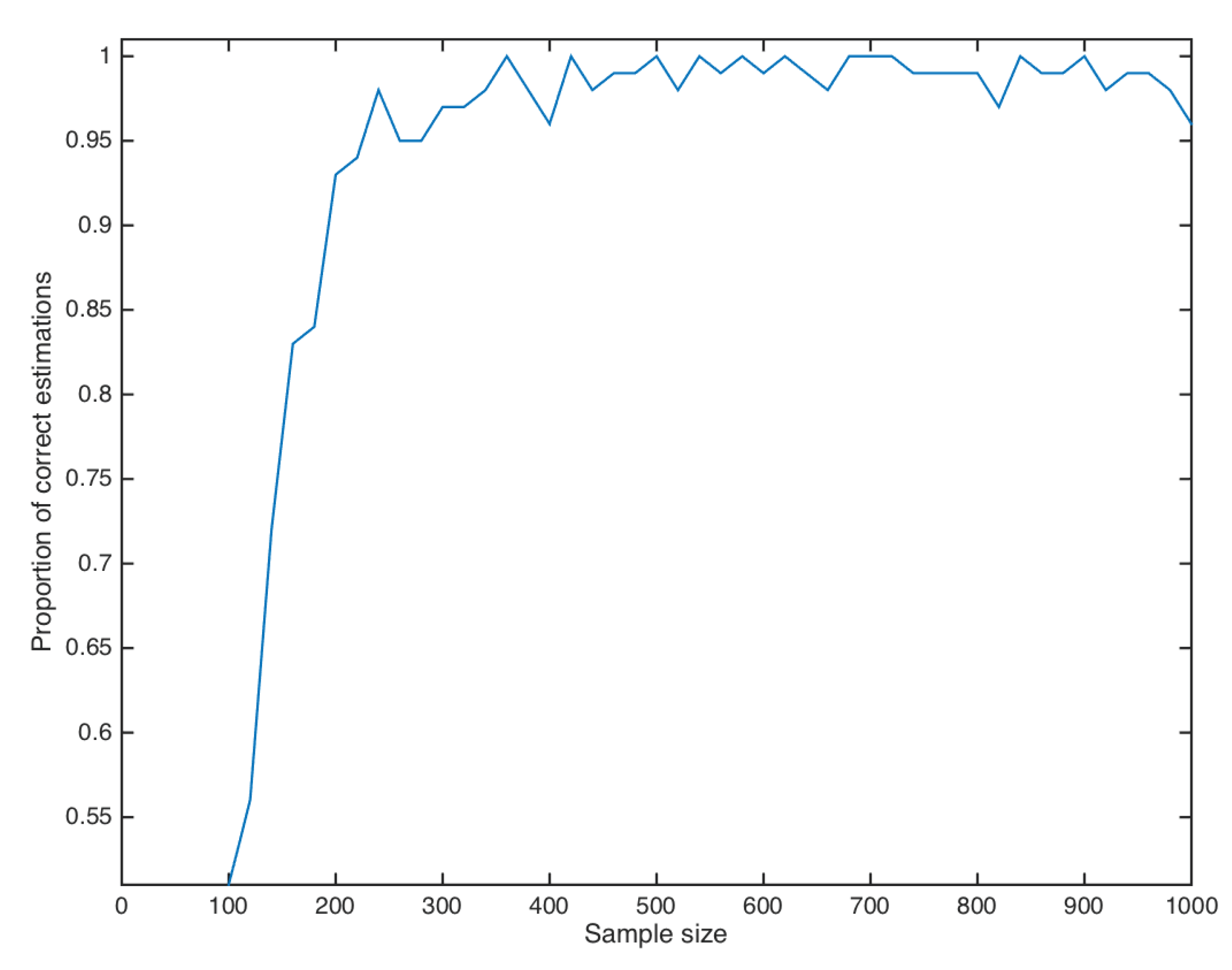

To numerically implement Algorithm 1, we assume that all trajectories of the diffusion processes are observed on equally spaces point

, where

for each

. For each sample size

we estimate the fraction of times Algorithm 1, with

and

, correctly identifies the context tree

based on 100 random samples of the model with size

The results are reported in

Figure 4.

6. Proof of Theorem 1

The proof of Theorem 1 is a direct consequence of Propositions 1 and 2 presented below.

Proposition 1. Let be a sample produced by a sequence of random objects driven by a context tree model compatible with and satisfying the assumptions of Theorem 1. Let and set . For any integer , context , direction , and strings such that and , it holds that In particular, for any as , we have Proof. The irreducibility of

implies that

P-a.s. both

and

tend to

as

n diverges. Thus, Theorem 3.1(a) of [

16] implies that the law of

is independent of the strings

u and

v, and also of the direction

. It also implies that

converges in distribution to

as

, where

is a Brownian Bridge. Since

, the first part of the result follows.

By the first part of the proof, for any fixed

, we have that for all

n large enough,

Thus, given

, take

such that

to deduce that for all

n large enough,

Since as , we have that for all n sufficiently large so that the result follows from the previous inequality. □

Proposition 2 reads as follows.

Proposition 2. Let be a sample produced by a identifiable sequence of random objects driven by a context tree model compatible with and , and let satisfying the assumptions of Theorem 1. Let and define . For any string such that is a terminal branch there exists a pair such that for almost all realization of a Brownian motion on ,whenever slowly enough as Proof. Since the sequence of random objects

is identifiable and

is a terminal branch, there exists a pair

whose associated distributions

and

on

F are different, and both

and

satisfy the Carleman condition. For each

, define

if

. Otherwise, we set

. The irreducibility of

implies that

as

P-a.s., where

C is a positive constant depending on

w and

.

Now, Theorem 3.1(b) of [

16] implies that, for almost all realization of a Brownian motion

W on

F,

Since and slowly enough, the result follows. □

Proof of Theorem 1. Let

be the set of contexts belonging to a terminal branch of

. Define also the following events

It follows from the definition of Algorithm 1 that

Thus, it is enough to prove that for any there exists such that and for all .

By the union bound, we see that

The sequence of random objects

is identifiable. Thus by observing that for each

,

is a terminal branch, we have that there exists

such that the associated distributions

and

on

F are different, and both

and

satisfies Carleman condition. Since

and

is finite, Proposition 2 implies that

as

, if

slowly enough. As a consequence, for any

there exists

such that

for all

.

Using again the union bound, we have

By observing that

is finite, the alphabet

A is finite and

we deduce from Proposition 1 and the inequality in Equation (

6) that, for any

, we have

for all

n large enough. This concludes the proof of the theorem. □