Abstract

A generalized high-order class for approximating the solution of nonlinear systems of equations is introduced. First, starting from a fourth-order iterative family for solving nonlinear equations, we propose an extension to nonlinear systems that keeps the same order of convergence but replaces the Jacobian by a divided difference in the weight functions for systems. The proposed GH family of methods is then designed from this fourth-order family using both the composition and the weight function techniques. The resulting family has order of convergence 9. The performance of a particular iterative method from each family is analyzed on several test systems and on Fisher's equation, showing the good performance of the new methods.

1. Introduction

During the last few decades, there has been considerable interest in designing iterative schemes for approximating the solutions of both nonlinear equations and nonlinear systems of equations. Since the problem of finding a zero of a system of nonlinear equations $F(x)=0$, where $F:\Omega\subseteq\mathbb{R}^n\to\mathbb{R}^n$, arises throughout science and engineering, iterative methods are a natural choice for approximating its solutions.

There is extensive literature on iterative methods for solving nonlinear equations; good overviews can be found in [1,2]. However, the extension of schemes from equations to systems of equations is not always trivial. Based on the Kung–Traub conjecture for nonlinear equations [3], several optimal three-step methods can be found in the recent works [4,5]. Other interesting methods in the literature reach a higher order of convergence [6].

In this paper, we present a new family of iterative methods for solving nonlinear systems of equations with convergence order nine. This class, named the GH family, has two weight functions and four steps in its iterative expression. It needs one evaluation of the Jacobian matrix and four functional evaluations of the nonlinear function per iteration. Beforehand, a fourth-order family with a single weight function is proposed. This family is the basis for designing the iterative schemes of the GH family with a composition-type technique. Section 2 is devoted to the development of both families. In order to check the feasibility of the proposed schemes for solving nonlinear systems of equations, Section 3 shows the numerical results obtained when the fourth-order scheme is used for solving Fisher's partial differential equation and when the ninth-order family is applied to several test functions. Finally, Section 4 collects the main conclusions of the work.

Some basic definitions must be recalled for analyzing the order of convergence of the methods. Further details, together with the notation used in this work, can be found in [4,7].

2. The GH Family for Solving Systems of Nonlinear Equations

In [8], a new family of iterative methods for solving nonlinear equations is introduced. Its iterative expression is

where is a weight function with . Its order of convergence is analyzed in the following result. A complete proof can be found in [8]. Our purpose in this paper is to extend this result to multidimensional problems.

Theorem 1.

Let be a real sufficiently differentiable function in an open interval Ω and let be a simple root of . If is close enough to and satisfies conditions and , then all the iterative methods of family (1) converge to with order of convergence four, their error equation being

where and , .

In order to extend family (1) for solving nonlinear systems, an alternative definition for variable is required. For this purpose, we develop the expression as follows:

So, the extension of family (1) for solving systems of nonlinear equations turns into

where

and is a matrix weight function. Furthermore, if denotes the space of all real matrices, then we can define (see [9]) such that its Fréchet derivatives satisfy:

- (a)

- , being , , and denotes the space of linear mappings from X to itself,

- (b)

- , where and .

- (c)

- , for and .

Moreover, the definition of in (4) uses the divided difference operator of F on , , defined in [10] as

$$[x, y; F](x - y) = F(x) - F(y), \quad \text{for any } x, y \in \Omega.$$
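As an illustration only, the following Python sketch builds a first-order divided difference matrix with the usual componentwise formula and checks the secant-type relation [x, y; F](x − y) = F(x) − F(y). The function name `divided_difference` and the test system `F` are hypothetical, and the componentwise formula is an assumption about how the operator is evaluated in practice, not something stated in this paper.

```python
import numpy as np

def divided_difference(F, x, y):
    """Componentwise first-order divided difference matrix [x, y; F].

    Column j uses two points that differ only in coordinate j, so the sum of
    the columns weighted by (x_j - y_j) telescopes to F(x) - F(y).
    Requires x_j != y_j for every j.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    M = np.empty((n, n))
    for j in range(n):
        upper = np.concatenate((x[: j + 1], y[j + 1:]))  # x_1..x_j, y_{j+1}..y_n
        lower = np.concatenate((x[:j], y[j:]))           # x_1..x_{j-1}, y_j..y_n
        M[:, j] = (np.asarray(F(upper)) - np.asarray(F(lower))) / (x[j] - y[j])
    return M

# Hypothetical 2x2 test system, used only to exercise the function.
def F(v):
    return np.array([v[0] ** 2 + v[1] - 3.0, v[0] - v[1] ** 2])

x = np.array([1.2, 0.7])
y = np.array([1.0, 1.0])
M = divided_difference(F, x, y)
print(np.allclose(M @ (x - y), F(x) - F(y)))
```

Each column costs two evaluations of F at mixed points, which is why divided differences are counted separately from Jacobian evaluations when comparing methods.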

The next result shows the order of convergence of family (3).

Theorem 2.

Let the nonlinear function be sufficiently Fréchet differentiable in an open convex set Ω, and let be a solution of the system . Suppose also that is continuous and nonsingular in . Let us suppose that the initial guess is close enough to and that satisfies , , and , where I denotes the identity matrix of size . Then, family (3) converges to with order of convergence 4, its error equation being

being the error in the kth iteration and , .

Proof.

Let us denote by for all k the error in iteration k. By using Taylor series expansions around , and can be expressed as

where , . In the same way, it holds that

As , we have and

Now, from the above developments,

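The divided difference operator is defined by the Genocchi–Hermite formula [10]

$$[x+h, x; F] = \int_0^1 F'(x + th)\,dt.$$

Expanding $F'(x+th)$ in Taylor series around x and integrating, we have

$$[x+h, x; F] = F'(x) + \frac{1}{2}F''(x)h + \frac{1}{6}F'''(x)h^2 + O(h^3).$$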

Now, the value of is given by:

where

Using the Taylor expansion of around 0, we get

Then,

Finally, the error equation of family (3) is

By applying conditions , and , the error equation turns into

so the iterative family (3) is fourth-order convergent. □

In order to design a higher-order class with the same structure, we now consider the four-step iterative family:

where and are two matrix weight functions with variables defined by

Let us recall that the iterative scheme (13), from now on called the GH family, has been obtained by composing the iterative family (3) with itself. The convergence of the GH family is analyzed in the next result.

Theorem 3.

Let the nonlinear function be sufficiently Fréchet differentiable in an open convex set Ω, and let be a solution of the system . Suppose also that is continuous and nonsingular in . Let us suppose that the initial estimation is close enough to and that the weight functions and satisfy:

- (i)

- , , and .

- (ii)

- , , and .

Then, all the iterative methods of family (13) converge to with order of convergence 9.

Proof.

By using the developments in the proof of Theorem 2 (with more terms in the error expressions) and also the same way to proceed, we obtain for the second step of family (13)

From these expressions, we have

and then

Thus, it is obtained that

Then, the coefficients in (14) are

Then, being ,

Therefore, the Taylor development of variable of the weight function H is given by:

where

When the conditions in the theorem for the weight function H are applied, we have

the coefficients being

Finally,

being

Then, the error equation of GH family is:

where

As it can be proven that for , we have that GH family has order of convergence 9.

3. Numerical Experience

In this section, we apply the iterative families (3) and (13) to several nonlinear systems of equations. In particular, the performance of family (3) is checked by solving Fisher's partial differential equation.

3.1. Application of Family (3) to Fisher’s Equation

Fisher's equation [11]

$$u_t = D\,u_{xx} + r\,u\left(1 - \frac{u}{c}\right) \qquad (15)$$

represents a model of diffusion in population dynamics, where D is the diffusion constant, r is the growth rate of the species, and c is the carrying capacity. In this section, a specific case of Fisher's equation is solved using iterative methods. In this case, , so (15) becomes

The domain of x is the interval . The boundary conditions are and , for , while the initial condition is

By discretizing (16) with divided differences, the problem can be solved as a family of nonlinear systems. For this purpose, we first select a grid of points in the domain, , where represents the node in the spatial variable, set as , and j is the index of the time variable, set as . Here, h and k are the spatial and time steps, respectively, and and are the number of subintervals for variables x and t, respectively. Then, an approximation of the solution at each point of the mesh is obtained, that is, .

Applying backward differences to the time derivative and central differences to the spatial one, that is

the scheme in finite differences for the approximated problem is

for , . After some algebraic manipulations, (18) results in

where . Depending on the number of subintervals used in the discretization of the variable x, a nonlinear system of size can be found by solving (19). The nonlinear system defined for a fixed j is the following:

where matrix A is

Each system gives an approximate solution of the problem at a time step from the one obtained at the instant , so we begin to solve the systems using the solution at provided by (17).
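The time-stepping idea described above can be sketched in Python as follows. This is a hedged illustration under several assumptions: the equation is taken in the generic form u_t = D u_xx + r u (1 − u/c) with D = r = c = 1, the spatial domain is [0, 1] with homogeneous Dirichlet boundary values, and the initial profile sin(πx) is hypothetical. In addition, each nonlinear system is solved here with the classical Newton method as a stand-in, not with family (3) or any other scheme from the paper.

```python
import numpy as np

def residual(u, u_prev, h, k, D=1.0, r=1.0, c=1.0, left=0.0, right=0.0):
    """Backward-in-time, central-in-space residual at the interior nodes."""
    padded = np.concatenate(([left], u, [right]))  # attach boundary values
    u_xx = (padded[2:] - 2.0 * padded[1:-1] + padded[:-2]) / h ** 2
    return (u - u_prev) / k - D * u_xx - r * u * (1.0 - u / c)

def jacobian(u, h, k, D=1.0, r=1.0, c=1.0):
    """Exact Jacobian of the residual with respect to the interior unknowns."""
    n = u.size
    second_diff = (np.diag(np.ones(n - 1), 1) - 2.0 * np.eye(n)
                   + np.diag(np.ones(n - 1), -1)) / h ** 2
    return np.eye(n) / k - D * second_diff - r * np.diag(1.0 - 2.0 * u / c)

def implicit_step(u_prev, h, k, tol=1e-10, max_iter=50):
    """Advance one time level by solving the nonlinear system with Newton."""
    u = u_prev.copy()  # previous time level as initial guess
    for _ in range(max_iter):
        G = residual(u, u_prev, h, k)
        if np.linalg.norm(G) < tol:
            break
        u = u - np.linalg.solve(jacobian(u, h, k), G)
    return u

# Hypothetical setup: 10 spatial subintervals on [0, 1], time step 0.01.
nx = 10
h = 1.0 / nx
k = 0.01
x_nodes = np.linspace(0.0, 1.0, nx + 1)[1:-1]  # interior nodes only
u0 = np.sin(np.pi * x_nodes)                   # assumed initial condition
u1 = implicit_step(u0, h, k)
print(np.linalg.norm(residual(u1, u0, h, k)))
```

Using the previous time level as the initial guess mirrors the description in the text: each system is solved from the approximation obtained at the preceding instant.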

Using iterative methods for solving nonlinear systems, such as family (3), system (20) can be solved. In order to compare the numerical results, another iterative scheme with the same order of convergence as family (3) has been chosen. In this case, the Sharma et al. [12] method, denoted in this work by , is fourth-order convergent and has the iterative expression

where .

On the other hand, to check the numerical behavior of family (3), it is necessary to select a weight function satisfying the conditions of Theorem 2, which yields a particular method of this family. Several functions satisfy the conditions of the theorem, some of them being:

An efficiency comparison between the proposed schemes is given in terms of the computational cost and the number of functional evaluations. This comparison helps to choose the most efficient method of family (3). For this purpose, we can use the efficiency index defined by Ostrowski [13] as $I = p^{1/d}$, where p is the order of the method and d is the number of new functional evaluations per iteration required by the method. The computational cost of solving a linear system of equations depends on its size. As the proposed methods can be used for solving large systems of equations, this cost must be taken into account. Thus, we compare the performance of the methods with the computational efficiency index introduced in [7] as $CI = p^{1/(d+op)}$, where $op$ is the number of operations (products and quotients) per iteration.
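Both indices are simple to evaluate. The short Python sketch below assumes the standard forms I = p^(1/d) and CI = p^(1/(d + op)); the function names are hypothetical. The example uses the classical Newton method, not a method from Table 1, with the usual assumed counts of n + n² scalar functional evaluations and (n³ − n)/3 + n² products and quotients for one LU-based linear solve.

```python
def efficiency_index(p, d):
    """Ostrowski-type efficiency index I = p**(1/d)."""
    return p ** (1.0 / d)

def computational_efficiency_index(p, d, op):
    """Computational efficiency index CI = p**(1/(d + op))."""
    return p ** (1.0 / (d + op))

def newton_indices(n):
    """Indices for Newton's method on an n x n system (assumed counts)."""
    d = n + n * n                      # n for F, n**2 for the Jacobian
    op = (n ** 3 - n) / 3.0 + n ** 2   # LU factorization + two triangular solves
    return efficiency_index(2, d), computational_efficiency_index(2, d, op)

for n in (2, 5, 10, 50):
    i_val, ci_val = newton_indices(n)
    print(n, i_val, ci_val)
```

Because op grows like n³ while d grows like n², the computational index decreases faster than the classical one as the system size increases.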

Table 1 summarizes the results for the computational efficiency index and number of functional evaluations for each method, where , , and denote the resulting iterative schemes when family (3) is applied using the weight functions (22), respectively.

Table 1.

Functional evaluations and computational efficiency index of methods , , , and .

For each method, Table 1 shows the number of different evaluations of the function (F), the Jacobian matrix (), and the number of different divided differences used by the method in each iteration (nDD). All the methods need n and evaluations to compute F and , respectively, and for each divided difference operator.

Regarding the operational cost, the value of Mv is the number of matrix–vector products, with operations for each product. To compute an inverse linear operator, one may solve a linear system of equations, where an LU decomposition is performed and two triangular systems must be solved, with a total cost of operations. However, for solving r linear systems with the same matrix of coefficients, the LU decomposition is computed only once, so the computational cost is only . The values of s1 and s2 are the number of linear systems that each scheme solves per iteration with matrix of coefficients or another matrix, respectively.

As the results of Table 1 show, among the methods belonging to family (3), the most efficient is method . Therefore, the numerical tests of the family are carried out with this method. In addition, method requires more functional evaluations than the others, as it computes two Jacobian matrices in each iteration.

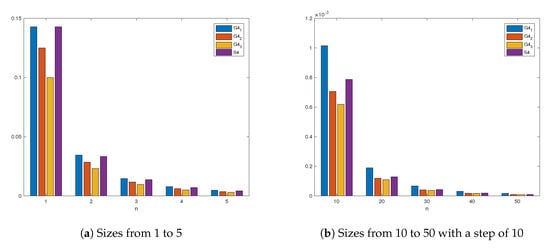

The results obtained in Table 1 can be observed in Figure 1, where the value of for the four methods is represented as the size of the system (n) varies. As we can see, for small values of n, the indices of and show similar performance, whereas when the value of n increases, the computational efficiency index of all methods decreases, but the index of method is greater than the rest.

Figure 1.

from Table 1 for methods , , , and and different sizes of the system.

We have solved system (20) using methods and for . For the numerical tests, we use Matlab R2017b with variable precision arithmetic of 1000 digits of mantissa. The results of applying the methods to the nonlinear system are collected in Table 2 for varying values of and . In every run, the iteration procedure stops when or the number of iterations reaches 50. The value of iter represents the mean number of iterations needed when all the columns have been calculated, and the terms represent the value . Moreover, the elapsed time in seconds to obtain the solution of the problem after 10 (consecutive) executions is shown.

Table 2.

Numerical results for and different values of and .

The results in Table 2 show the good performance of method for solving Fisher's problem. Method only needs two iterations to calculate a solution of the system, its mean number of iterations always being lower than that of method . For a fixed value of , when increases, so does the elapsed time, but the approximation to the solution is better since is smaller. In addition, the e-time is lower for method , so it reaches the solution with more computational efficiency and arithmetical precision than the other scheme.

3.2. Application of Family GH to Nonlinear Test Systems

According to the results obtained in Table 1 for methods , the numerical experiments for family (13) are developed by using the following weight functions:

which satisfy conditions of Theorem 3.

To compare the features of our method with other schemes of the literature, the numerical tests are also performed on two iterative schemes of order 8 that can be found in [5,14]. The method is named , for our iterative family using functions (23), for [5], and , for [14]. The computational efficiency index and the number of functional evaluations of these methods are collected in Table 3.

Table 3.

Functional evaluations and computational efficiency index of methods , and .

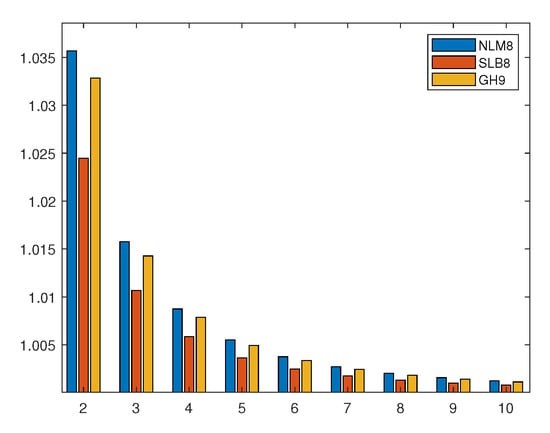

Method requires few functional evaluations, has a low operational cost, and has a competitive computational efficiency index (see Figure 2). We will now see that the numerical experiments confirm these results. For this purpose, methods , , and are applied to solve the following nonlinear systems:

Figure 2.

from Table 3 for methods , , and for different sizes of the system.

- (a)

- , where

- (b)

- , such that

- (c)

- , where

The results obtained from applying the methods to the nonlinear systems are collected in Table 4, Table 5 and Table 6. The stopping criterion is now a difference between two consecutive iterates lower than or the condition , with a maximum number of iterations of 50. The results have been calculated using Matlab R2017b with variable precision arithmetic of 2000 digits of mantissa. In this way, the numerical noise is small enough not to affect the final result. The approximated computational order of convergence [15] represents an approximation of the order of convergence of each method.

Table 4.

Numerical results for .

Table 5.

Numerical results for .

Table 6.

Numerical results for .

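The ACOC [15] is the approximated computational order of convergence, defined as

$$p \approx ACOC = \frac{\ln\left(\|x^{(k+1)} - x^{(k)}\| / \|x^{(k)} - x^{(k-1)}\|\right)}{\ln\left(\|x^{(k)} - x^{(k-1)}\| / \|x^{(k-1)} - x^{(k-2)}\|\right)}.$$

It is a computational approximation of the theoretical order.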
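A minimal Python sketch of this estimate is given below. It uses the standard ACOC quotient of logarithms of the norms of differences between consecutive iterates; the function name `acoc` is hypothetical. The example applies it to the classical Newton iteration for the scalar equation x² − 2 = 0, chosen only because its theoretical order (two) is well known; it is not one of the test problems of this paper.

```python
import numpy as np

def acoc(iterates):
    """Approximated computational order of convergence from the last four iterates."""
    if len(iterates) < 4:
        raise ValueError("at least four iterates are needed")
    x = [np.atleast_1d(np.asarray(v, dtype=float)) for v in iterates[-4:]]
    d1 = np.linalg.norm(x[1] - x[0])
    d2 = np.linalg.norm(x[2] - x[1])
    d3 = np.linalg.norm(x[3] - x[2])
    return np.log(d3 / d2) / np.log(d2 / d1)

# Newton's method on f(x) = x**2 - 2, starting from x0 = 1.5.
xs = [1.5]
for _ in range(3):
    xk = xs[-1]
    xs.append(xk - (xk ** 2 - 2.0) / (2.0 * xk))

print(acoc(xs))  # expected to be close to 2, the order of Newton's method
```

In double precision only a few iterates are usable before rounding dominates the differences, which is one reason high-order methods are usually tested with variable precision arithmetic.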

For every nonlinear system, the highest value of the ACOC corresponds to the GH family, as expected. In general, our proposed scheme converges in fewer iterations than the other tested methods, with very competitive error estimates.

4. Conclusions

Two families of iterative methods for solving nonlinear systems of equations have been introduced, the first one being a multidimensional extension of a previous scalar class of iterative methods. Both schemes are designed via the matrix weight function procedure, with only one evaluation of the Jacobian and its estimation using divided differences for systems. Two more steps are added to the fourth-order class, keeping the Jacobian matrix evaluated at the same point, which allows the GH family to reach order of convergence 9. The numerical tests confirm the quality of the iterative schemes for solving systems of equations that are not small, improving on the results of several methods in the literature.

Author Contributions

The individual contributions of the authors are as follows: conceptualization, J.R.T. and N.G.; software, F.I.C.; validation, F.I.C., A.C. and J.R.T.; formal analysis, N.G.; investigation, F.I.C.; writing (original draft preparation), N.G.; writing (review and editing), J.R.T. and A.C.

Funding

This research was partially supported by both Ministerio de Ciencia, Innovación y Universidades and Generalitat Valenciana, under grants PGC2018-095896-B-C22 (MCIU/AEI/FEDER/UE) and PROMETEO/2016/089, respectively.

Acknowledgments

The authors would like to thank the anonymous reviewers for their kind and constructive suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint Methods for Solving Nonlinear Equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

- Cordero, A.; Torregrosa, J.R. On the Design of Optimal Iterative Methods for Solving Nonlinear Equations. In Advances in Iterative Methods for Nonlinear Equations; Amat, S., Busquier, S., Eds.; Springer: Cham, Switzerland, 2016; pp. 79–111.

- Kung, H.T.; Traub, J.F. Optimal order of one-point and multipoint iteration. J. Assoc. Comput. Mach. 1974, 21, 643–651.

- Cordero, A.; Gómez, E.; Torregrosa, J.R. Efficient high-order iterative methods for solving nonlinear systems and their application on heat conduction problems. Complexity 2017, 2017, 6457532.

- Sharma, J.; Arora, H. Improved Newton-like methods for solving systems of nonlinear equations. SeMA J. 2017, 74, 147–163.

- Amiri, A.; Cordero, A.; Darvishi, M.; Torregrosa, J. Stability analysis of a parametric family of seventh-order iterative methods for solving nonlinear systems. Appl. Math. Comput. 2018, 323, 43–57.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. A modified Newton-Jarratt’s composition. Numer. Algor. 2010, 55, 87–99.

- Chicharro, F.I.; Cordero, A.; Garrido, N.; Torregrosa, J.R. Wide stability in a new family of optimal fourth-order iterative methods. Comput. Math. Methods 2019, 1, e1023.

- Artidiello, S. Diseño, Implementación y Convergencia de Métodos Iterativos Para Resolver Ecuaciones y Sistemas No Lineales Utilizando Funciones Peso. Ph.D. Thesis, Servicio de publicaciones Universidad Politécnica de Valencia, Valencia, Spain, 2014.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: Cambridge, MA, USA, 1970.

- Fisher, R.A. The wave of advance of advantageous genes. Ann. Eugen. 1937, 7, 355–369.

- Sharma, J.; Guha, R.; Sharma, R. An efficient fourth order weighted-Newton method for systems of nonlinear equations. Numer. Algor. 2013, 62, 307–323.

- Ostrowski, A. Solution of Equations and Systems of Equations; Prentice-Hall: Upper Saddle River, NJ, USA, 1964.

- Soleymani, F.; Lotfi, T.; Bakhtiari, P. A multi-step class of iterative methods for nonlinear systems. Optim. Lett. 2014, 8, 1001–1015.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).