1. Introduction

Colorectal cancer (CRC) occurs worldwide and is one of the most common cancers, and one of the leading causes of death, among both men and women [1,2]. Recent reports indicate that the number of people younger than 50 years with CRC is increasing, which makes cancer screening more essential than ever [3,4]. Cancer is characterized by the unlimited division of abnormal cells, which can arise in various organs; CRC develops when such abnormal cells appear and grow in the colon [4]. Approximately 70% of CRC cases originate from adenomatous polyps, which develop in the colon lining and grow slowly over approximately 10 to 20 years [4,5,6]. Accurate CRC diagnosis is critical [4] because timely detection increases the survival rate. The main diagnostic tools include medical imaging [1], which can realistically display patients' internal organs and thus enables health care experts to screen and diagnose more rapidly when planning subsequent treatment procedures [7].

Topograms are 2D overview images obtained from a tomographic scanner. Topogram images are generated for screening and planning before advancing to the next procedural step, such as computed tomography (CT) scanning [7]. These images easily and conveniently capture anterior, posterior, and lateral views of the patient's body [7,8]. In colorectal polyp identification, colonography similarly uses a scanner to acquire an overview image of the colorectal area in order to identify abnormal polyps so that they can be removed before they develop or spread into severe cancer [9]. However, the diagnostic process has several limitations: manual interpretation of medical images can be tedious and time-consuming, and it is subject to bias and human error [1]. Because medical images are digital, they can be analyzed by computer. Therefore, image analysis based on computer-aided diagnosis (CAD) systems for medical image classification is essential in disease detection, screening, and diagnosis [10]. Applying computer-aided screening to colorectal polyp classification, together with multimedia summarization techniques [11,12], can increase the capability of diagnosing colorectal polyps [13].

Figure 1 illustrates the concept of the preliminary screening system for colonoscopy diagnosis, which is intended to support physicians' inspections. This screening system can help with the preliminary classification of colorectal topogram images, and the result can then be used to plan the next diagnostic step.

Deep learning dates back at least to 1998 [14], when LeNet, an early deep learning method based on a convolutional neural network (CNN), was created for recognizing digitized handwriting. In 2006 [15], deep learning became more powerful through fine-tuning and produced a better model for classifying digitized handwritten images than discriminative learning techniques. Dimensionality reduction using deep learning is described in [16]; the proposed method improved on the traditional method of principal component analysis (PCA). More recently, the development of CNN architectures has been tied to large-scale image classification on ImageNet [17]. Since then, deep learning has been developed and applied in various fields, including medicine. Deep learning can assist health care experts by shortening the screening process and improving the efficiency of diagnosis [10]. Deep learning techniques, especially CNNs, have been widely applied in a variety of medical tasks, such as medical image reconstruction [18], clinical report classification [19], diagnosis [20], disease identification [21], cancer detection [22], disease screening [23], and medical image classification [24]. CNNs have been increasingly successful in medical image analysis [25], especially in colorectal polyp diagnostic procedures [26,27]. Several studies have applied CNNs to problems involving medical images of CRC and colorectal polyps. Some used CNNs for segmentation of magnetic resonance imaging (MRI) data; for example, in [28], combining a 3D CNN with a 3D level set for automated segmentation of colorectal cancer yielded a segmentation accuracy of 93.78%. In addition, [29] proposed a CNN with a hybrid loss for automatic colorectal cancer segmentation, which outperformed other methods with an average surface distance of 3.83 mm and a mean Dice similarity coefficient (DSC) of 0.721.
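The Dice similarity coefficient cited above measures the overlap between a predicted segmentation mask A and a ground-truth mask B as DSC = 2|A ∩ B| / (|A| + |B|), ranging from 0 (no overlap) to 1 (identical masks). A minimal sketch, assuming binary masks flattened into equal-length 0/1 sequences; the function name and the toy masks are hypothetical and are not taken from [29]:

```python
def dice_coefficient(pred, truth):
    """Dice similarity coefficient between two binary masks.

    Both masks are equal-length sequences of 0/1 values (for example, a
    flattened segmentation map). DSC = 2|A n B| / (|A| + |B|).
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        # Both masks empty: treated as perfect agreement (a common convention).
        return 1.0
    return 2.0 * intersection / total


# Toy 1-D "masks" (hypothetical values, not data from any cited study)
pred = [0, 1, 1, 1, 0, 0]
truth = [0, 0, 1, 1, 1, 0]
print(dice_coefficient(pred, truth))  # 2 * 2 / (3 + 3), about 0.667
```

A mean DSC such as the 0.721 reported in [29] would be obtained by averaging this per-image value over a test set.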

For CT imaging [30], applying a CNN with transfer learning to electronic cleansing may improve accuracy from 89% to 94% in the visualization of colorectal polyp images. Furthermore, the CNN developed in [31] improved colorectal polyp classification performance on CT image datasets, with an area under the curve (AUC) of 86% and an accuracy of 83%.

Several studies focused on endoscopic image datasets. For example, [32] developed a CNN for detecting polyps in real time and validated it on a new colonoscopy image collection with detected polyps, obtaining an AUC of 0.984, a sensitivity of 94.38%, and a specificity of 95.92%. In [33], a CNN created for polyp detection achieved a precision of 88.6% and a recall of 71.6%. In [34], a fully convolutional network was developed to segment colorectal polyps of different sizes and shapes, evaluated against ground truth images, and achieved a segmentation accuracy of 97.77%. A CNN for real-time evaluation of endoscopic videos was proposed in [35] to identify colorectal polyps, achieving an accuracy of 94%. A modified region-based CNN trained on wireless capsule endoscopy images in [36] achieved a detection precision of 98.46%, a recall of 95.52%, an F1 score of 96.67%, and an F2 score of 96.10%.

Using tissue image datasets, [2] developed an experimental CNN with transfer learning and fine-tuning for histology-based CRC diagnosis, which achieved a testing classification accuracy of up to 90%. In [37], large input images were used with a CNN and evaluated for colorectal cancer grading, achieving accuracies of 99.28% for two classes and 95.70% for three classes. In [38], a CNN trained with transfer learning achieved an accuracy of 94.3% on an external testing dataset with nine classes. Together, these studies show that CNNs can classify colorectal polyps with high accuracy in a screening context across different kinds of medical image datasets, including MR, CT, tissue, and endoscopic images. However, CNNs have not yet been applied to colorectal topogram images, which could help physicians with preliminary screening and rapid diagnosis.

There have been many improvements of CNN architecture since 2012. The classic architecture, called AlexNet [

39], demonstrated essential improvements over the previous architecture for image classification. More recently, several CNN architectures have been developed to enhance image classification performance, such as in VGGNet in 2014 [

40], GoogleNet, also known as Inception [

41], and ResNet [

42], established in 2015. These CNN architectures were developed under six main improvements: convolutional layer, pooling layer, activation function, loss function, regularization, and optimization [

43]. In 2017, Extreme Inception, also known as Xception, was developed, a version in the Inception family from the Xception architecture developed by Chollet [

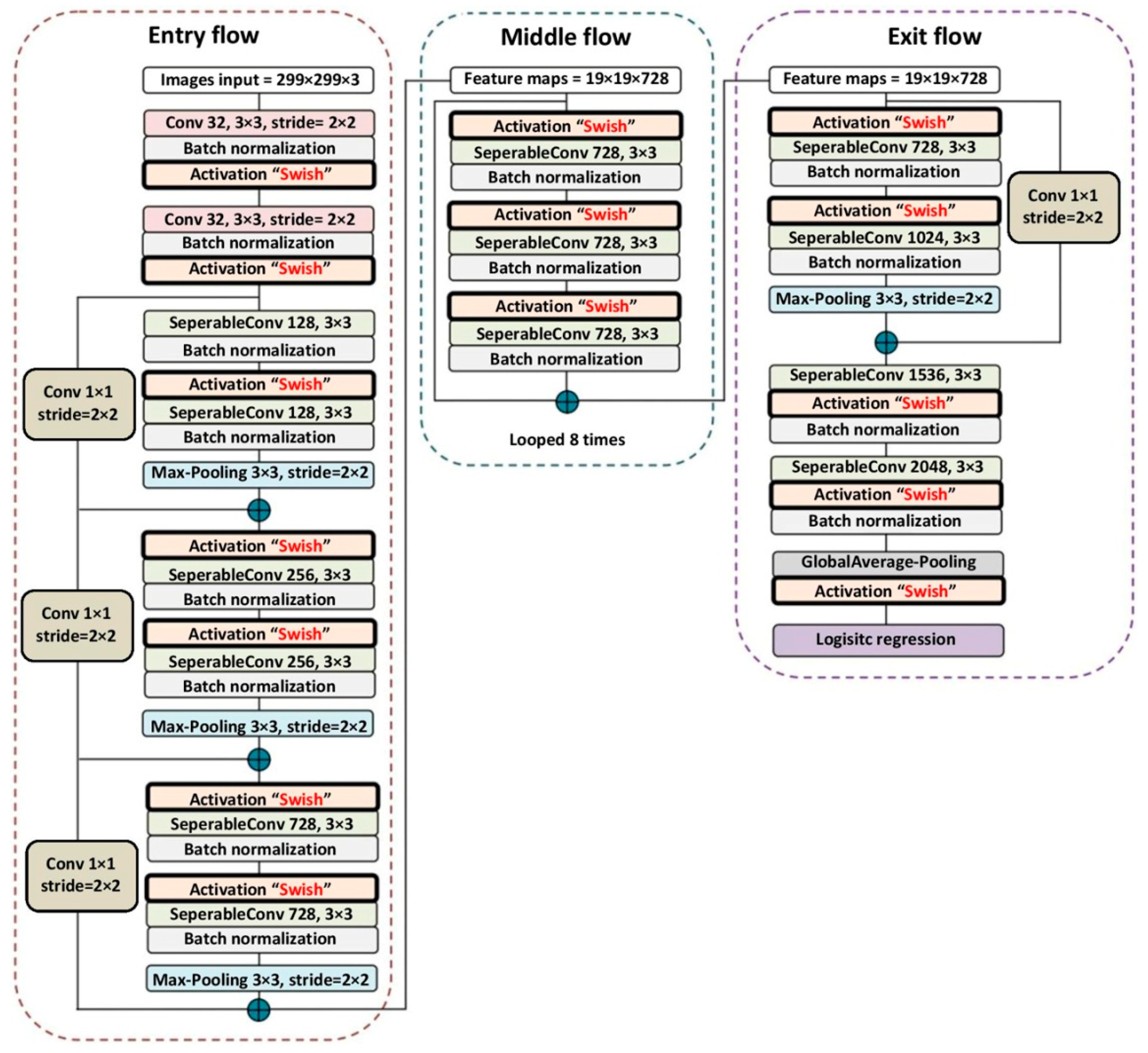

44] at Google. The Xception architecture concept is based on the Inception module [

41], with modifications and a combination of convolutional layers, inception modules, depth-wise separable convolutions, and residual connections to improve CNN performance. The results of Xception indicate that classification performance was improved compared to VGGNet, ResNet, and Inception v3 [

45]. The original Xception architecture used rectified linear unit (ReLU) [

46] for the activation function. The recent activation function, named Swish [

47], can enhance the image classification accuracy of NASNet-Mobile (established in 2018) [

48] and InceptionResNet v2 (released in 2016) [

49]. There has not been a study on the application of Swish with Xception. Replacing the ReLU with Swish [

47] inside Xception may enhance the classification performance compared to the original Xception and other CNN architectures.
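Much of Xception's efficiency comes from the depthwise separable convolutions mentioned above: a k × k depthwise filter is applied to each input channel separately, and a 1 × 1 pointwise convolution then mixes the channels. The sketch below compares weight counts for a standard and a depthwise separable layer; it ignores bias terms, and the layer size (3 × 3, 728 input and output channels) is an illustrative assumption rather than a configuration taken from this study.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a k x k standard convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights of a depthwise separable convolution (bias terms ignored):
    one k x k depthwise filter per input channel, followed by a 1 x 1
    pointwise convolution that mixes the channels."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Illustrative layer: 3 x 3 kernel, 728 input and 728 output channels
k, c_in, c_out = 3, 728, 728
std = standard_conv_params(k, c_in, c_out)
sep = separable_conv_params(k, c_in, c_out)
print(std, sep, f"{std / sep:.1f}x fewer weights")
```

For a layer of this size the separable version needs roughly one ninth of the weights, which is why Xception can stack many such layers at a modest parameter cost.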

The purpose of this study is to propose a novel modification of Xception that applies the Swish activation function, and to explore the feasibility of a preliminary screening system for colorectal polyps by training the proposed Xception with Swish model on a colonography topogram image dataset. The proposed system screens colorectal polyps into two classes: found and not found. In addition, we classify polyps into three categories: small size, large size, and not found. Moreover, we compare the results with those of the original Xception architecture and other established modern CNN architectures that are also modified with Swish, and the Xception with Swish model performs better than the other CNN methods.

The remainder of the paper is structured as follows. Section 2 describes the Xception architecture, the Swish activation function, and the modification of Xception with Swish for preliminary screening of colorectal polyps. Section 3 provides the materials and methods, including the topogram image dataset, image augmentation method, hardware and software specifications, programming language, and colorectal polyp classification method, and compares the experimental results. Section 4 presents further details of the experimental results and discusses the image classification models in the context of a preliminary colorectal polyp screening system. Section 5 presents the conclusions of this study.

5. Conclusions

CRC is a leading cause of death and is increasing among younger generations, which makes CRC screening more essential than ever. A 2D topogram image can be generated for screening and planning before advancing to the next procedure. Deep learning with CNNs produces effective image classification models, especially in medical tasks such as colorectal polyp diagnosis. However, CNNs have not yet been applied to colorectal topogram images, which could help physicians with preliminary screening and rapid diagnosis.

The Swish activation function can improve the classification accuracy of CNNs, but no previous study has applied Swish to the Xception architecture. Replacing ReLU inside Xception with Swish may enhance image classification performance compared with the original Xception and other CNN architectures. The purpose of this paper was to propose a new modification of Xception with the Swish activation function and to explore the feasibility of a novel preliminary screening system for colorectal polyps by training the proposed model on a benchmark colonography topogram image dataset, using an image augmentation method to enlarge the dataset. In the context of colorectal screening, the proposed method was used to classify polyps into two classes (found and not found) and into three size classes (small, large, and polyp not found). Furthermore, the experimental results were compared with those of the original Xception and other CNN architectures modified with Swish.
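The difference between the two activation functions is easy to see numerically. Swish is defined as swish(x) = x · sigmoid(βx); with β = 1 it is also known as SiLU. Unlike ReLU, which outputs exactly zero for every negative input, Swish is smooth and lets small negative values through. The sketch below is a plain-Python illustration of the functions themselves, assuming β = 1 by default; it is not the authors' training code.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x: float, beta: float = 1.0) -> float:
    """Swish activation: x * sigmoid(beta * x). With beta = 1 this is SiLU."""
    return x * sigmoid(beta * x)


for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  swish={swish(x):.4f}")
```

For large positive inputs Swish approaches the identity, like ReLU, so swapping one for the other leaves a network's overall shape unchanged. Frameworks such as TensorFlow/Keras provide a built-in `swish` activation, so in practice the swap is usually a change of activation argument rather than new code.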

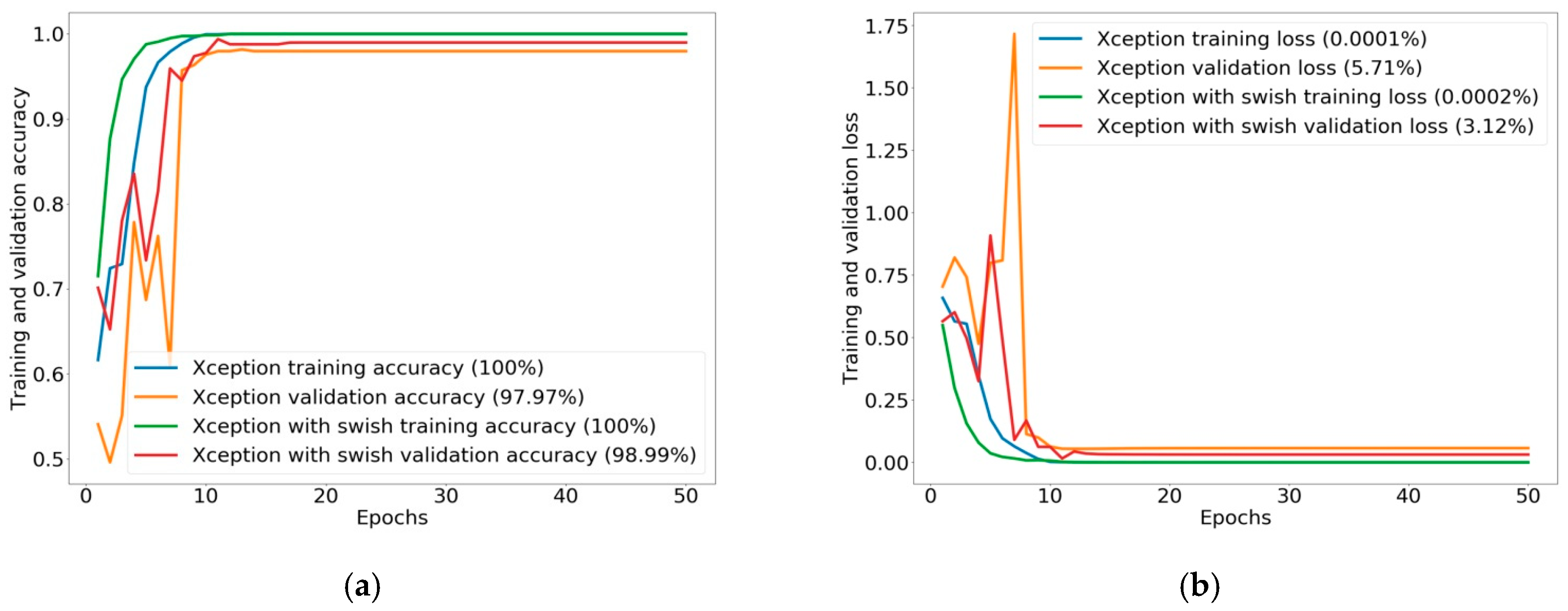

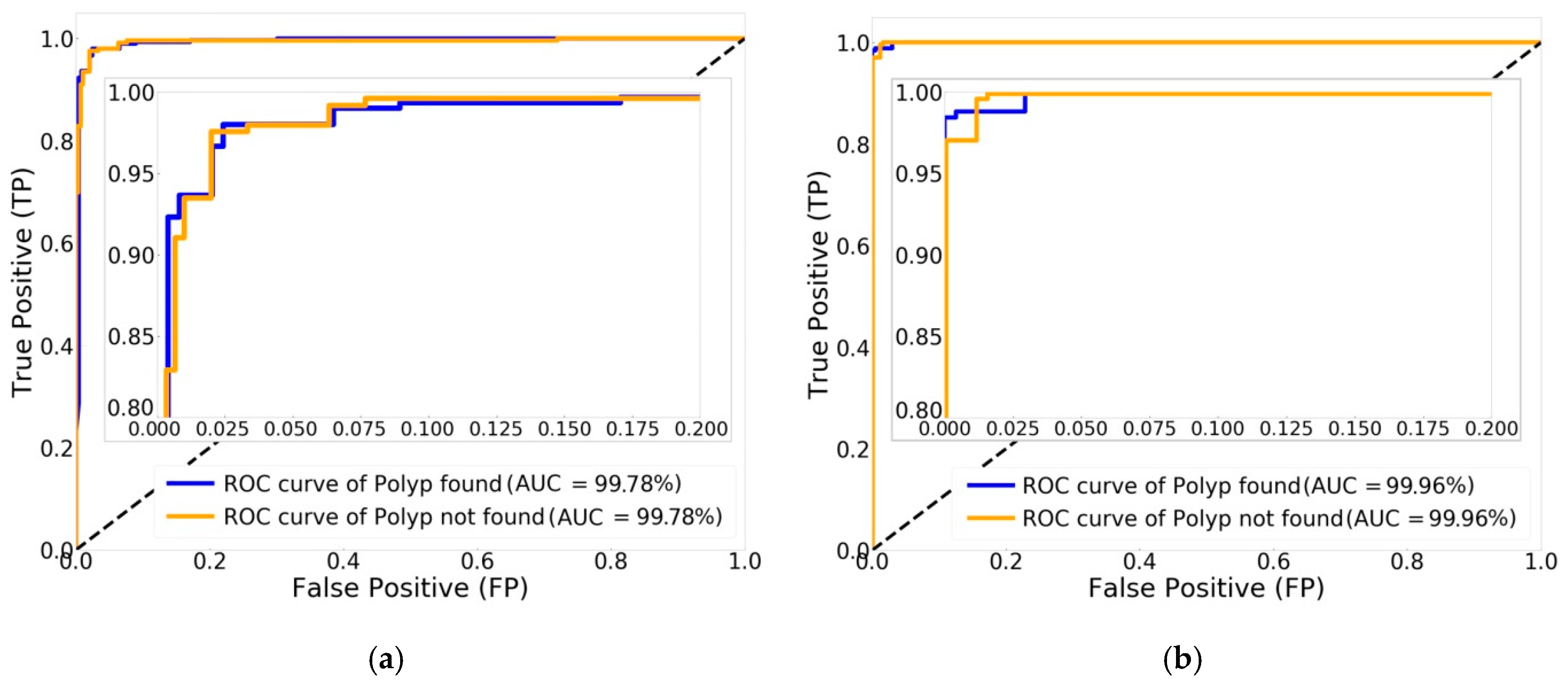

In classifying the two classes, polyp found and polyp not found, Xception performed best among the original CNN architectures, with an evaluation performance of 97.97%. Xception with Swish improved on this, reaching a classification performance of 98.99%, although the Swish activation function required more training time. The two-class classification performance was also assessed with ROC curves and AUC: Xception with Swish produced ROC curves close to the ideal, with an AUC of 99.96%, higher than the 99.78% of the original Xception. The validation data comprised 492 images, of which 255 belonged to the polyp found class and 237 to the polyp not found class. On the validation data, Xception with Swish classified better than the original Xception: in the polyp found class, 251 images were correctly classified and four were misclassified, and in the polyp not found class, 236 images were correctly classified and one was misclassified. In total, Xception with Swish correctly classified 487 of the 492 validation images, with only five misclassified. For two-class testing on 273 external images (100%), Xception with Swish made 272 correct predictions (99.63%) and one false prediction (0.37%), compared with 269 correct predictions (98.53%) and four false predictions (1.47%) for Xception.
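The counts in the preceding paragraph can be turned into percentages with simple arithmetic. The sketch below recomputes the two-class validation totals and the external testing percentages from the image counts reported above; the helper name `accuracy_pct` is hypothetical.

```python
def accuracy_pct(correct: int, total: int) -> float:
    """Share of correctly classified images, in percent."""
    return 100.0 * correct / total


# Two-class validation counts reported above for Xception with Swish:
# class -> (correctly classified, images in class)
validation = {"polyp found": (251, 255), "polyp not found": (236, 237)}
val_correct = sum(c for c, _ in validation.values())
val_total = sum(n for _, n in validation.values())
print(f"validation: {val_correct} correct, {val_total - val_correct} misclassified")

# External testing set of 273 images
TEST_IMAGES = 273
for name, correct in (("Xception with Swish", 272), ("Xception", 269)):
    true_pct = accuracy_pct(correct, TEST_IMAGES)
    false_pct = accuracy_pct(TEST_IMAGES - correct, TEST_IMAGES)
    print(f"{name}: {true_pct:.2f}% true, {false_pct:.2f}% false")
```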

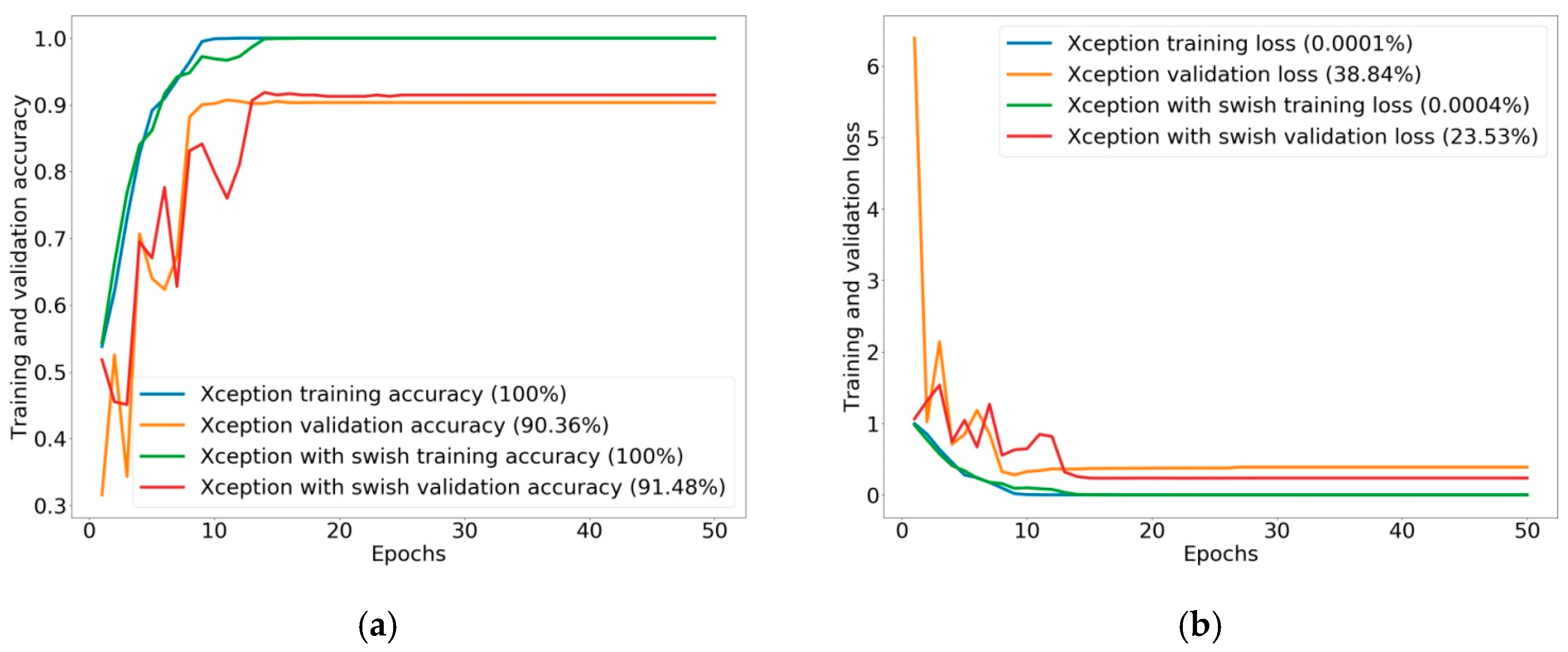

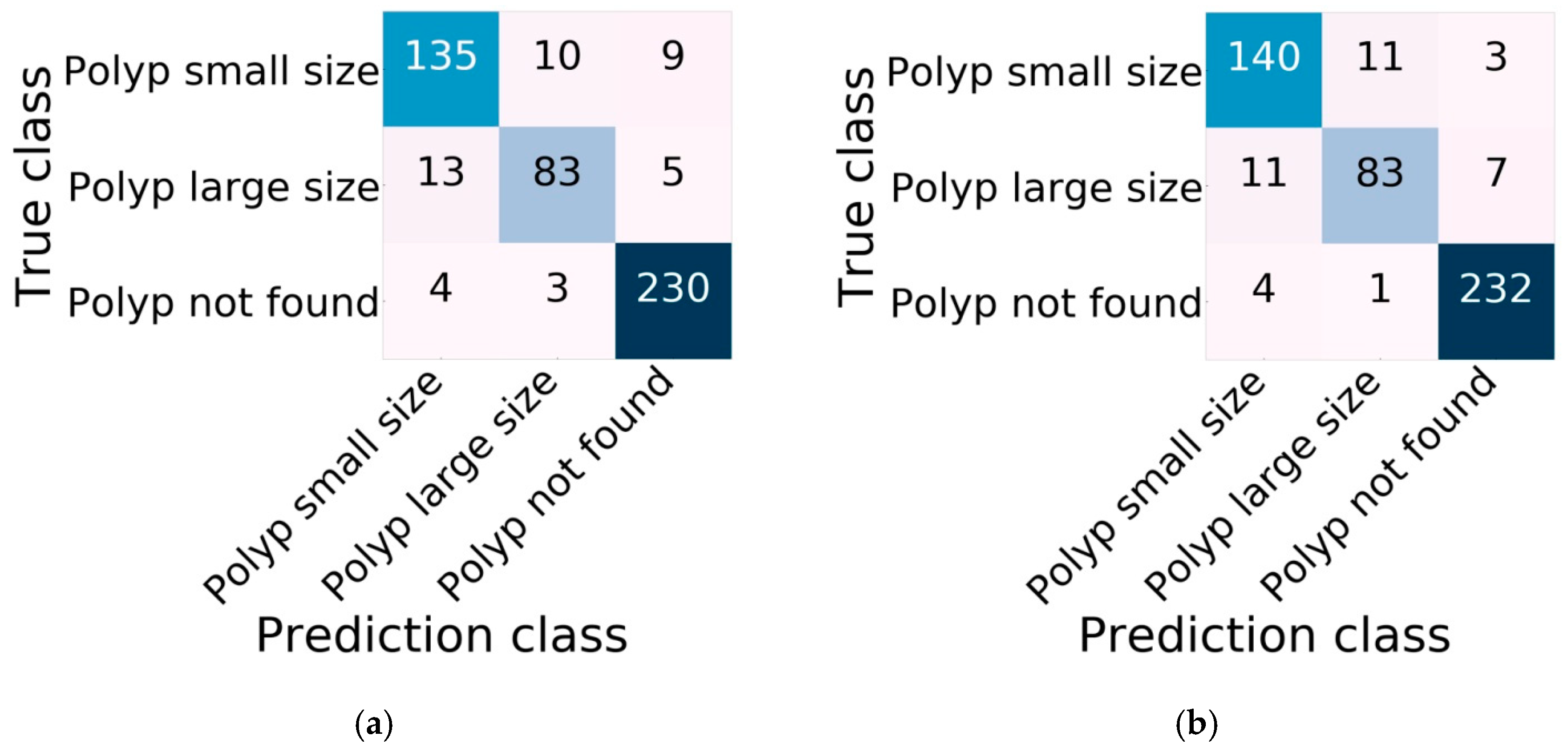

The three classes were defined as small size polyps, large size polyps, and polyp not found. In the three-class evaluation, Xception with Swish again improved on the original model, increasing the accuracy to 91.48%, although more training time was needed to classify the additional class. Its other evaluation scores also increased to 90%, whereas the original Xception achieved an accuracy of 90.36% and other evaluation scores of 88%. For the three-class ROC curves and AUC, Xception showed AUCs of 98.04% for small size polyps, 97.47% for large size polyps, and 99.80% for polyp not found. Xception with Swish improved on each class, with AUCs of 98.22% for small size polyps, 97.85% for large size polyps, and 99.89% for polyp not found.
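Per-class AUC values for a three-class problem are commonly computed one-vs-rest: each class in turn is treated as positive and the other two as negative, and the AUC equals the probability that a randomly chosen positive image scores higher than a randomly chosen negative one. The source does not state which averaging scheme was used, so the sketch below assumes one-vs-rest. The function names, the six softmax outputs, and their labels are hypothetical illustrations, not data from this study.

```python
def binary_auc(scores, labels):
    """AUC as P(score of a random positive > score of a random negative),
    counting ties as one half. O(P * N), adequate for a small sketch."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(probabilities, true_classes, class_names):
    """Per-class AUC: class k is 'positive', all other classes 'negative'."""
    result = {}
    for k, name in enumerate(class_names):
        scores = [row[k] for row in probabilities]
        labels = [1 if c == k else 0 for c in true_classes]
        result[name] = binary_auc(scores, labels)
    return result


classes = ["small", "large", "not found"]
# Hypothetical softmax outputs for six images (not data from this study)
probs = [
    [0.7, 0.2, 0.1],
    [0.4, 0.5, 0.1],
    [0.5, 0.4, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.1, 0.8],
    [0.2, 0.1, 0.7],
]
truth = [0, 0, 1, 1, 2, 2]
print(one_vs_rest_auc(probs, truth, classes))
```

In this toy example, one small-polyp image and one large-polyp image are confused with each other, so those two classes score below 1.0 while the well-separated "not found" class reaches 1.0.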

The confusion matrix of validation performance covers three classes: 154 images of small size polyps, 101 images of large size polyps, and 237 images of polyp not found. The proposed Xception with Swish model correctly classified 140 small size polyp images (14 misclassified), 83 large size polyp images (18 misclassified), and 232 polyp not found images (five misclassified). In total, Xception with Swish correctly classified 455 images and misclassified 37, whereas the original Xception correctly classified 448 images and misclassified 44. These totals indicate that Xception with Swish achieved better three-class classification than the original Xception. For three-class testing, Xception with Swish again performed better, with 221 true predictions (80.95%) and 52 false predictions (19.04%), compared with 219 true predictions (80.21%) and 54 false predictions (19.78%) for Xception.
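The per-class counts above also give the recall (sensitivity) of each class, i.e., the share of images of a class that were assigned to that class. The sketch below assumes, as the class totals suggest, that each misclassification count refers to images of the stated true class, and recomputes the per-class recall and the overall totals for Xception with Swish from the reported counts.

```python
# Validation counts reported above for Xception with Swish:
# class -> (correctly classified, images in class)
per_class = {
    "small size polyp": (140, 154),
    "large size polyp": (83, 101),
    "polyp not found": (232, 237),
}

for name, (correct, total) in per_class.items():
    # Per-class recall: correctly classified images / images of that class
    print(f"{name}: {correct}/{total} = {100 * correct / total:.2f}% recall")

correct_all = sum(c for c, _ in per_class.values())
total_all = sum(n for _, n in per_class.values())
print(f"overall: {correct_all} of {total_all} correct, "
      f"{total_all - correct_all} misclassified")
```

The large size polyp class has the lowest recall (about 82%), which matches its having the most misclassified images of the three classes.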

Overall, the experimental results of this study show that the proposed Xception with Swish model achieved better image classification performance than several original CNN architectures. This provides a reasonable basis for further developing a novel preliminary screening system for colorectal polyps to assist physicians in preliminary screening and rapid diagnosis.