1. Introduction

This paper addresses the minimum distance problem between a point and a parametric curve in n-dimensional Euclidean space: finding the closest point (footpoint) on the curve together with its corresponding parameter. This is termed the point projection or inversion problem for a parametric curve in n-dimensional Euclidean space. It is an important problem in fields such as geometric modeling, computer graphics, computer-aided geometric design (CAGD) and computer vision [1,2]. Both projection and inversion are fundamental to a range of techniques, for instance the interactive selection of curves and surfaces [1,3], curve fitting [1,3], curve reconstruction [2,4,5] and the projection of a space curve onto a surface [6]. They are also used in the iterative closest point (ICP) method for shape registration [7].

The Newton-Raphson algorithm is regarded as the most classic method for orthogonal projection onto parametric curves and surfaces. Root finding for polynomials by the Newton-Raphson algorithm is discussed in [8,9,10,11,12]. To handle adaptive smoothing for standard finite unconstrained minimax problems, Polak et al. [13] present an extended Newton’s algorithm that uses a new feedback precision-adjustment rule. When the Newton-Raphson method converges, it offers two advantages: it converges very fast, and it does so with high precision. However, the result relies heavily on a good initial guess in the neighborhood of the solution.
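As a minimal sketch of how Newton-Raphson iteration applies to point projection, the following assumes the footpoint is sought as a root of f(t) = ⟨c'(t), c(t) − p⟩. The function name `newton_project`, the helper `dot` and the example curve are illustrative assumptions, not taken from the cited works.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Curve = Callable[[float], List[float]]


def dot(u: Vector, v: Vector) -> float:
    """Euclidean scalar product in R^n."""
    return sum(a * b for a, b in zip(u, v))


def newton_project(c: Curve, dc: Curve, ddc: Curve, p: Vector,
                   t0: float, tol: float = 1e-12, max_iter: int = 50) -> float:
    """Newton-Raphson iteration on f(t) = <c'(t), c(t) - p>."""
    t = t0
    for _ in range(max_iter):
        diff = [ci - pi for ci, pi in zip(c(t), p)]
        d1, d2 = dc(t), ddc(t)
        f = dot(d1, diff)
        df = dot(d2, diff) + dot(d1, d1)  # f'(t)
        if df == 0.0:
            raise ZeroDivisionError("f'(t) vanished; Newton step undefined")
        step = f / df
        t -= step
        if abs(step) < tol:
            return t
    raise RuntimeError("no convergence; a better initial guess may be needed")


# Hypothetical example curve in R^3: c(t) = (t, t^2, t^3).
def c(t: float) -> List[float]:
    return [t, t * t, t ** 3]


def dc(t: float) -> List[float]:
    return [1.0, 2.0 * t, 3.0 * t * t]


def ddc(t: float) -> List[float]:
    return [0.0, 2.0, 6.0 * t]


p = [1.0, 0.0, 0.0]
t_star = newton_project(c, dc, ddc, p, t0=0.5)
# Orthogonality residual <c'(t*), c(t*) - p>; it should be close to zero.
residual = dot(dc(t_star), [ci - pi for ci, pi in zip(c(t_star), p)])
print(t_star, residual)
```

The sketch needs the second derivative of the curve and a starting value `t0`; for curves with several stationary points, a poor `t0` can lead to a different root or to divergence, which is the dependence on the initial guess noted above.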

The classic subdivision method, in turn, consists of several steps. First, the NURBS curve or surface is subdivided into a set of Bézier sub-curves or patches, and the redundant sub-curves or patches are eliminated. Then, approximate candidate points are computed. Finally, the closest point is obtained by comparing the distances between the test point and the candidate points. This technique is described in [1]. By using new exclusion criteria within the subdivision strategy, the method in [14] projects points onto NURBS curves and surfaces more robustly than that in [1], but its criterion is sometimes too strict. Zou et al. [15] use subdivision minimization techniques, which rely on the convex hull property of the Bernstein basis, to compute the minimum distance between two point sets. They transform the problem into solving n-dimensional nonlinear equations, where the n variables can be represented in the tensor product Bernstein basis. Cohen et al. [16] develop a framework for implementing general successive subdivision schemes for nonuniform B-splines, which generates the new vertices and the new knot vectors of the derived polygon. Piegl et al. [17] repeatedly subdivide a NURBS surface into four quadrilateral patches and project the test point onto the closest quadrilateral until the parameter can be found from that quadrilateral. Using multivariate rational functions, Elber et al. [11] construct a solver for a set of geometric constraints represented by inequalities. When the dimension of the solver is greater than zero, they subdivide the multivariate function(s) so as to bound the function values within a specified domain. Derived from [11] but more efficient, the hybrid parallel method in [18] exploits both CPU and GPU multi-core architectures to solve systems under multivariate constraints. Such GPU-based subdivision methods essentially exploit the parallelism inherent in the subdivision of multivariate polynomials, and this geometry-based algorithm outperforms existing CPU-based subdivision solvers. Two blending schemes in [19] efficiently remove no-root domains and hence greatly reduce the number of subdivisions. For a given system of nonlinear equations, they seek a simple linear combination of its functions whose Bernstein-Bézier control points all have the same sign, which shows that the domain contains no root. Such functions are constructed repeatedly during the subdivision process to discard no-root domains. Van Sosin et al. [20] efficiently handle various complex piecewise polynomial systems with zero or inequality constraints whose solution spaces are zero-dimensional or one-dimensional. Based on the earlier works [11,20], Bartoň et al. [21] propose a new solver for underconstrained (piecewise) polynomial systems. Two termination criteria are applied in this subdivision-based solver: the no-loop test and the single-component test. Once both criteria are satisfied, the solver obtains domains that each contain a single monotone univariate solution. The advantage of these methods is that they can find all solutions; their disadvantage is that they are computationally expensive and may need many subdivision steps.
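The subdivide, cull and compare steps described above can be sketched for a single Bézier curve as follows. This is a simplified illustration under stated assumptions, not the algorithm of any cited work: it uses the axis-aligned bounding box of the control points (which contains the convex hull) as a lower bound on the distance, and the names `de_casteljau_split`, `box_distance` and `subdivide_project` are hypothetical.

```python
import math


def de_casteljau_split(ctrl, u=0.5):
    """Split a Bezier curve at local parameter u into two control polygons."""
    left, right = [ctrl[0]], [ctrl[-1]]
    pts = ctrl
    while len(pts) > 1:
        pts = [tuple((1 - u) * a + u * b for a, b in zip(q0, q1))
               for q0, q1 in zip(pts, pts[1:])]
        left.append(pts[0])
        right.append(pts[-1])
    return left, right[::-1]


def box_distance(p, ctrl):
    """Distance from p to the bounding box of the control points.

    The curve lies in the convex hull of its control points, hence in this
    box, so the value is a lower bound on the distance from p to the curve.
    """
    sq = 0.0
    for k, pk in enumerate(p):
        lo = min(q[k] for q in ctrl)
        hi = max(q[k] for q in ctrl)
        d = max(lo - pk, 0.0, pk - hi)
        sq += d * d
    return math.sqrt(sq)


def subdivide_project(ctrl, p, tol=1e-6):
    """Return (t, distance) of an approximate closest point on the curve."""
    # Curve endpoints give the first upper bound on the minimum distance.
    best_d, best_t = min((math.dist(p, ctrl[0]), 0.0),
                         (math.dist(p, ctrl[-1]), 1.0))
    stack = [(ctrl, 0.0, 1.0)]
    while stack:
        pts, a, b = stack.pop()
        if box_distance(p, pts) >= best_d or b - a < tol:
            continue  # cull: this piece cannot improve the current best
        mid = 0.5 * (a + b)
        left, right = de_casteljau_split(pts)
        d_mid = math.dist(p, left[-1])  # left[-1] is the curve point at mid
        if d_mid < best_d:
            best_d, best_t = d_mid, mid
        stack.append((left, a, mid))
        stack.append((right, mid, b))
    return best_t, best_d


# Hypothetical planar cubic Bezier curve and test point.
ctrl = [(0.0, 0.0), (1.0, 2.0), (3.0, 2.0), (4.0, 0.0)]
p = (1.0, 3.0)
t_min, d_min = subdivide_project(ctrl, p)
print(t_min, d_min)
```

No initial guess is required, but many pieces near the footpoint survive the culling test before the interval width drops below `tol`, which reflects the computational cost mentioned above.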

The third class of classic methods for orthogonal projection onto parametric curves and surfaces consists of geometric methods. They fall mainly into eight types: the tangent method [22,23], the torus patch approximation method [24], the circular or spherical clipping method [25,26], the culling technique [27], root finding with Bézier clipping [28,29], the curvature information method [6,30], the repeated knot insertion method [31] and the hybrid geometric method [32]. Johnson et al. [22] use tangent cones to search for regions that satisfy the distance extrema conditions and then solve for the minimum distance between a point and a curve, but tangent cones are not always easy to construct. In [24], a torus patch is used to approximate the surface for point projection. As a purely geometric method, the torus patch approach has difficulty achieving high precision in the final iterative parametric value. A circular clipping method removes the parts of the curve that lie outside a circle centered at the test point, and the radius of the elimination circle shrinks until the termination criterion is satisfied [26]. Similar to the algorithm in [26], a spherical clipping technique for computing the minimum distance to a clamped B-spline surface is provided in [25]. A culling technique that removes superfluous curves and surfaces containing no projection of the given point is proposed in [27], in line with the idea in [1]. Because these methods use Newton’s method for the last step [1,25,26,27], non-convergence may occur in special cases.

In view of the convex-hull property of Bernstein-Bézier representations, the problem can be formulated as a univariate root-finding problem. Given a parametric curve $\mathbf{c}(t)$ and a point $\mathbf{p}$, the projection constraint can be formulated as the univariate root-finding problem $\langle \mathbf{c}'(t), \mathbf{c}(t) - \mathbf{p} \rangle = 0$, with a metric induced by the Euclidean scalar product in $\mathbb{R}^n$. If the curve is parametrized by a (piecewise) polynomial, fast root-finding schemes such as Bézier clipping [28,29] can be used. The only issue is the discontinuities, which can be checked in a post-process. One advantage of these methods is that they do not need an initial guess of the parameter value. Their key technique is degree reduction via clipping, which yields a strip bounded by two quadratic polynomials. Curvature information is used to compute the minimum distance between a point and a parametric curve or surface in [6,30]. However, this requires the second order derivative, and the method in [30] is not suitable for n-dimensional Euclidean space. Hu et al. [6] did not prove the convergence of their two algorithms. Li et al. [33] give a rigorous convergence analysis of the algorithm in [6] for orthogonal projection onto planar parametric curves. Based on repeated knot insertion, Mørken et al. [31] exploit the relationship between a spline and its control polygon and present a simple and efficient method for computing zeros of spline functions. Li et al. [32] present a hybrid second order algorithm for orthogonal projection onto parametric surfaces; it uses a composite technique and converges with order 2.

Geometric methods can not only solve the problem of orthogonally projecting a point onto a parametric curve or surface but also compute the minimum distance between parametric curves and parametric surfaces. Li et al. [23] use the tangent method to compute the intersection of two spatial curves. Building on the methods in [34,35], this work has been extended to compute the Hausdorff distance between two B-spline curves. Kim et al. [36] present an algorithm for computing the Hausdorff distance between two freeform surfaces by matching a surface patch of one model to the corresponding nearby surface patch of the other model; it adopts a bounding volume hierarchy of Coons patches and bilinear surfaces that approximate the NURBS surfaces. A common limitation of geometric methods is that the accuracy of the final solution is not very high. To sum up, the existing algorithms exploit diverse techniques such as Newton’s iterative method, polynomial root finding, subdivision and geometric methods. A review of previous algorithms for the point projection and inversion problem is given in [37].
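The univariate root-finding formulation mentioned above follows from the first order optimality condition of the squared distance. As a short worked derivation, writing the curve as $\mathbf{c}(t)$ and the test point as $\mathbf{p}$, and introducing the auxiliary function $D(t)$ purely for illustration (this notation is an assumption, not the paper's):

```latex
D(t)   = \tfrac{1}{2}\,\lVert \mathbf{c}(t) - \mathbf{p} \rVert^{2}, \\
D'(t)  = \langle \mathbf{c}'(t),\, \mathbf{c}(t) - \mathbf{p} \rangle, \\
D''(t) = \langle \mathbf{c}''(t),\, \mathbf{c}(t) - \mathbf{p} \rangle
         + \lVert \mathbf{c}'(t) \rVert^{2}.
```

An interior footpoint is therefore a root of $D'(t) = 0$, and a root with $D''(t) > 0$ is a local minimum of the distance. On a closed parameter interval, the endpoints have to be compared as well.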

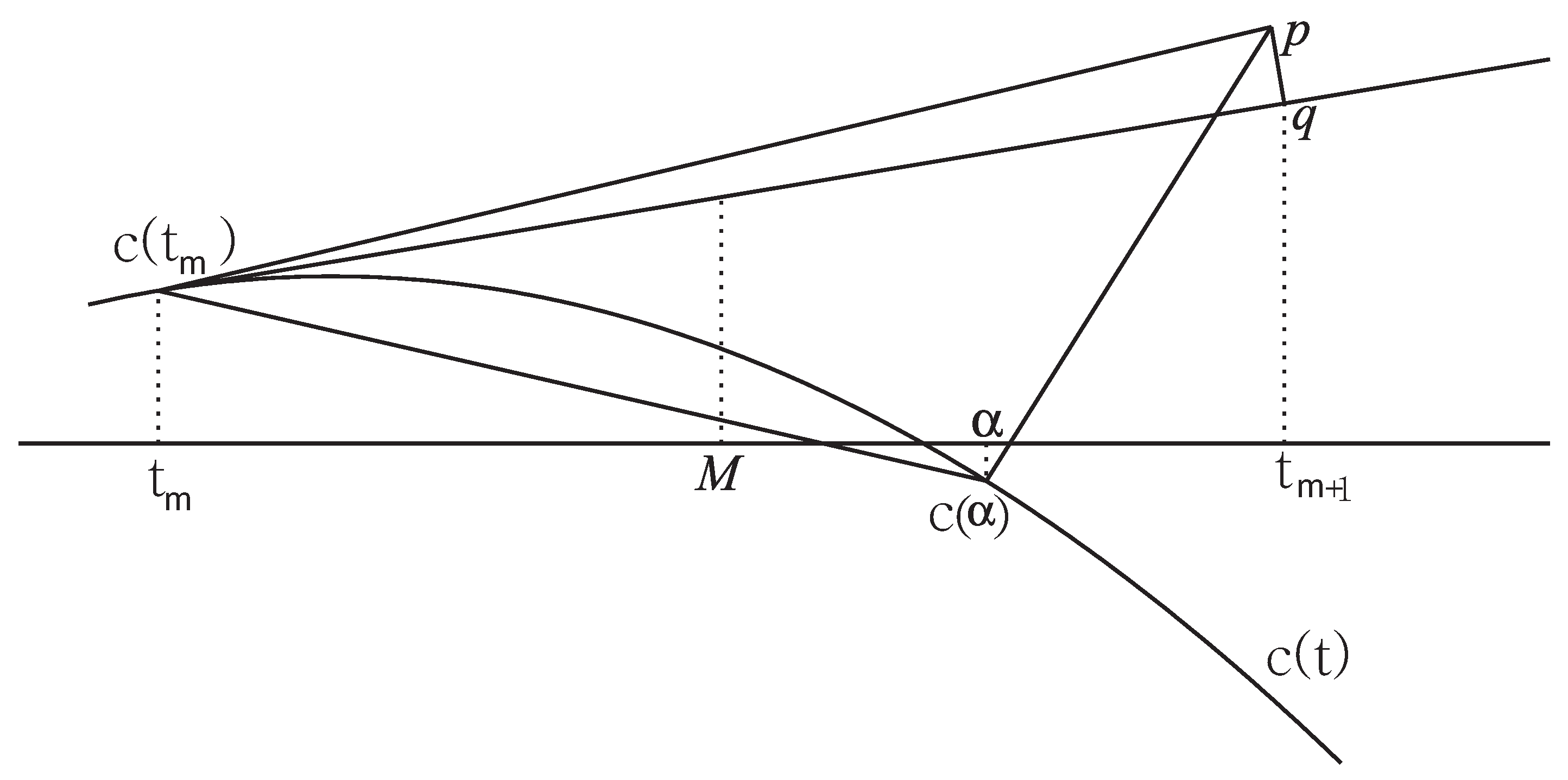

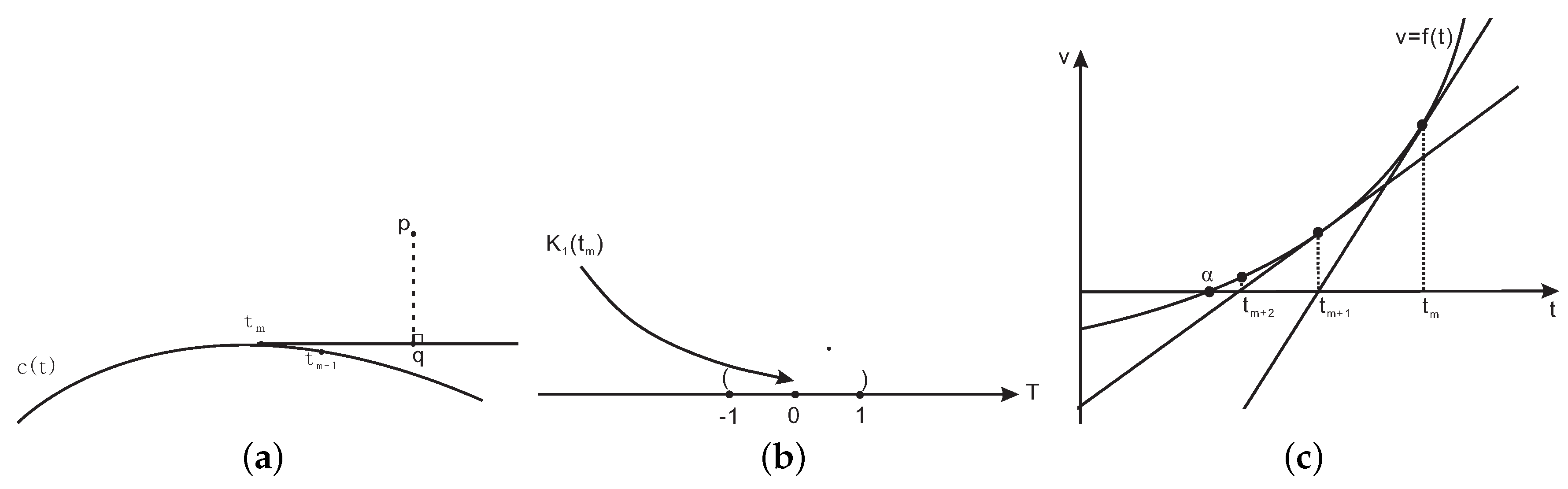

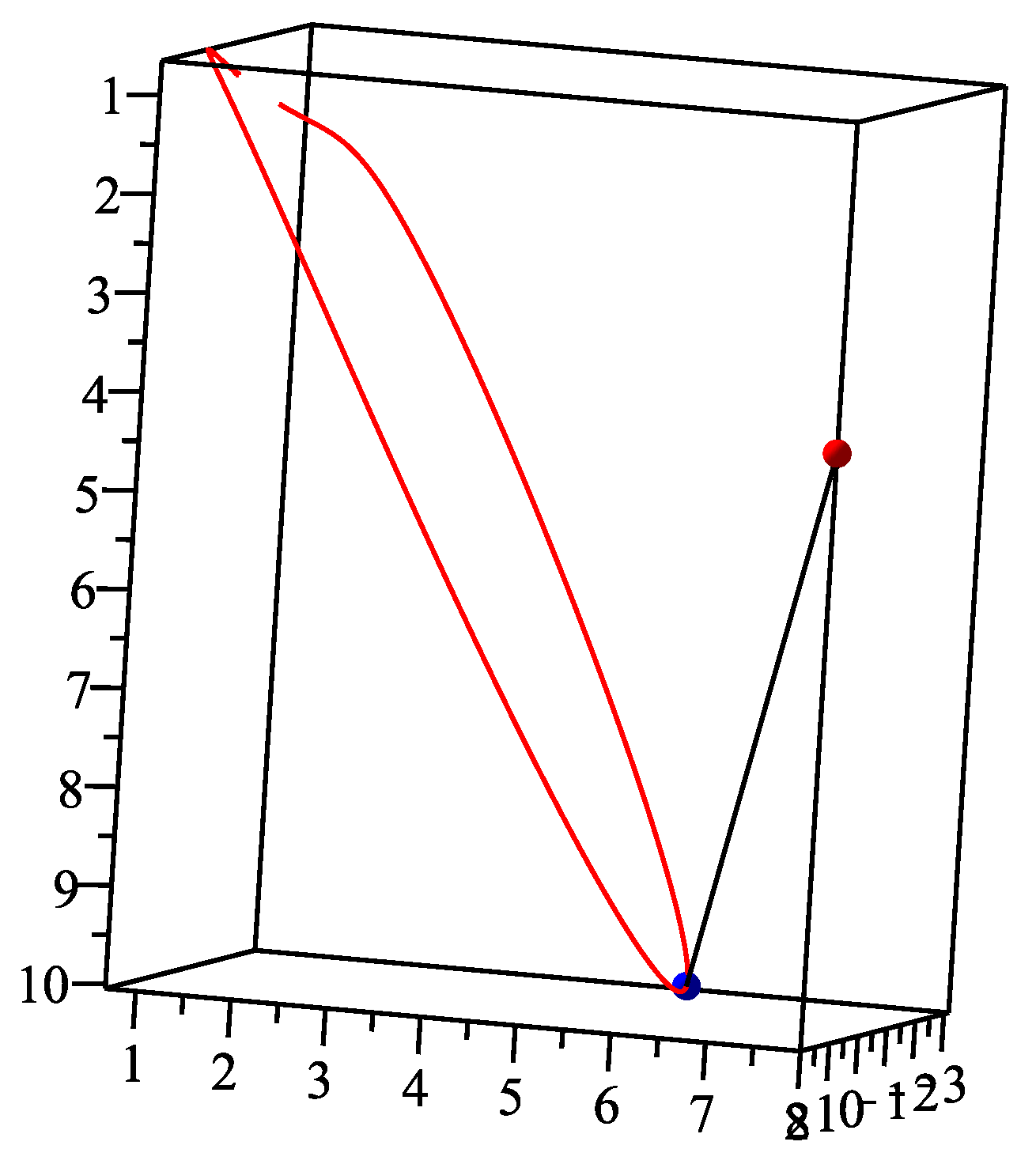

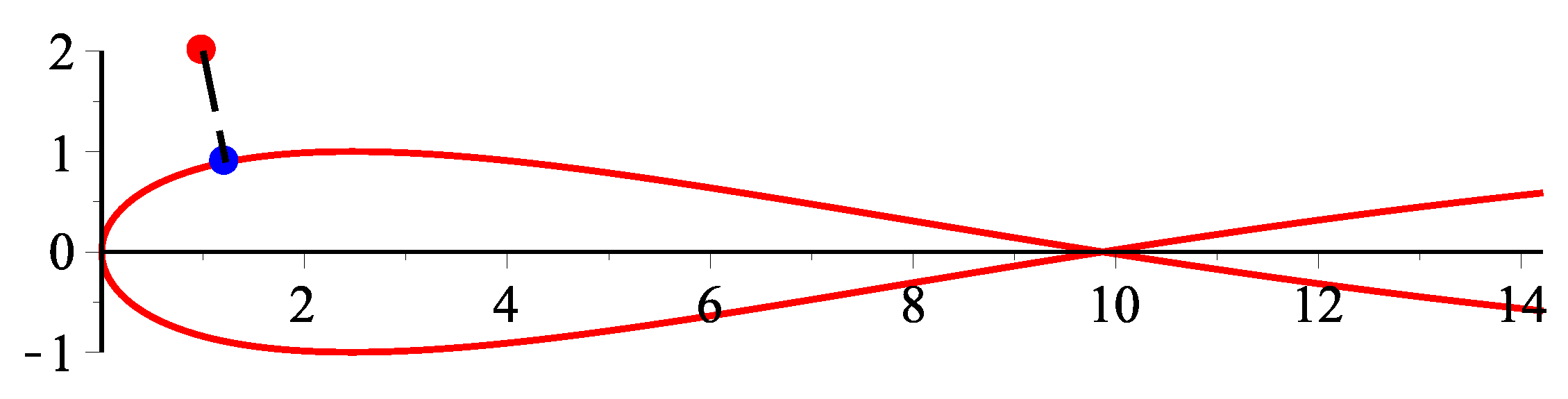

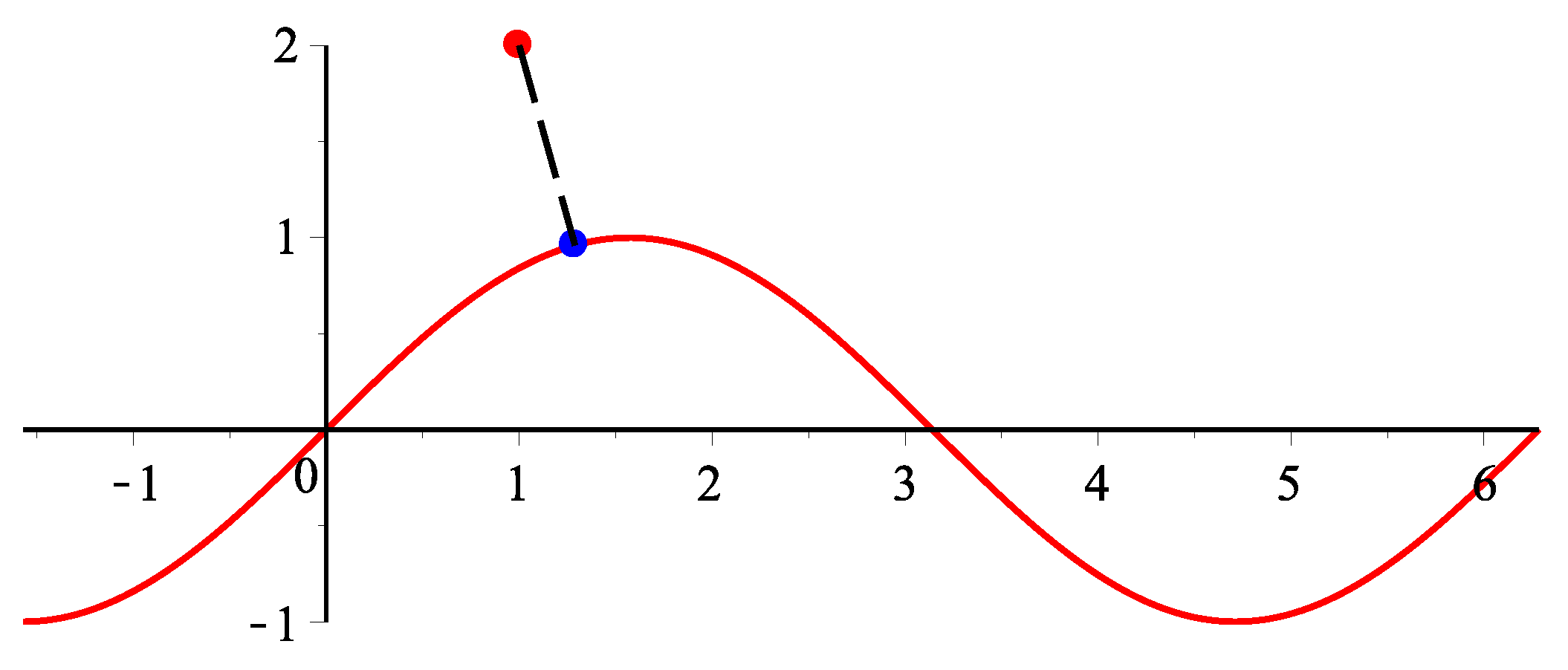

More specifically, using the tangent line or tangent plane with first order geometric information, a classical simple and efficient first order algorithm which orthogonally project onto parametric curve and surface is proposed in [

38,

39,

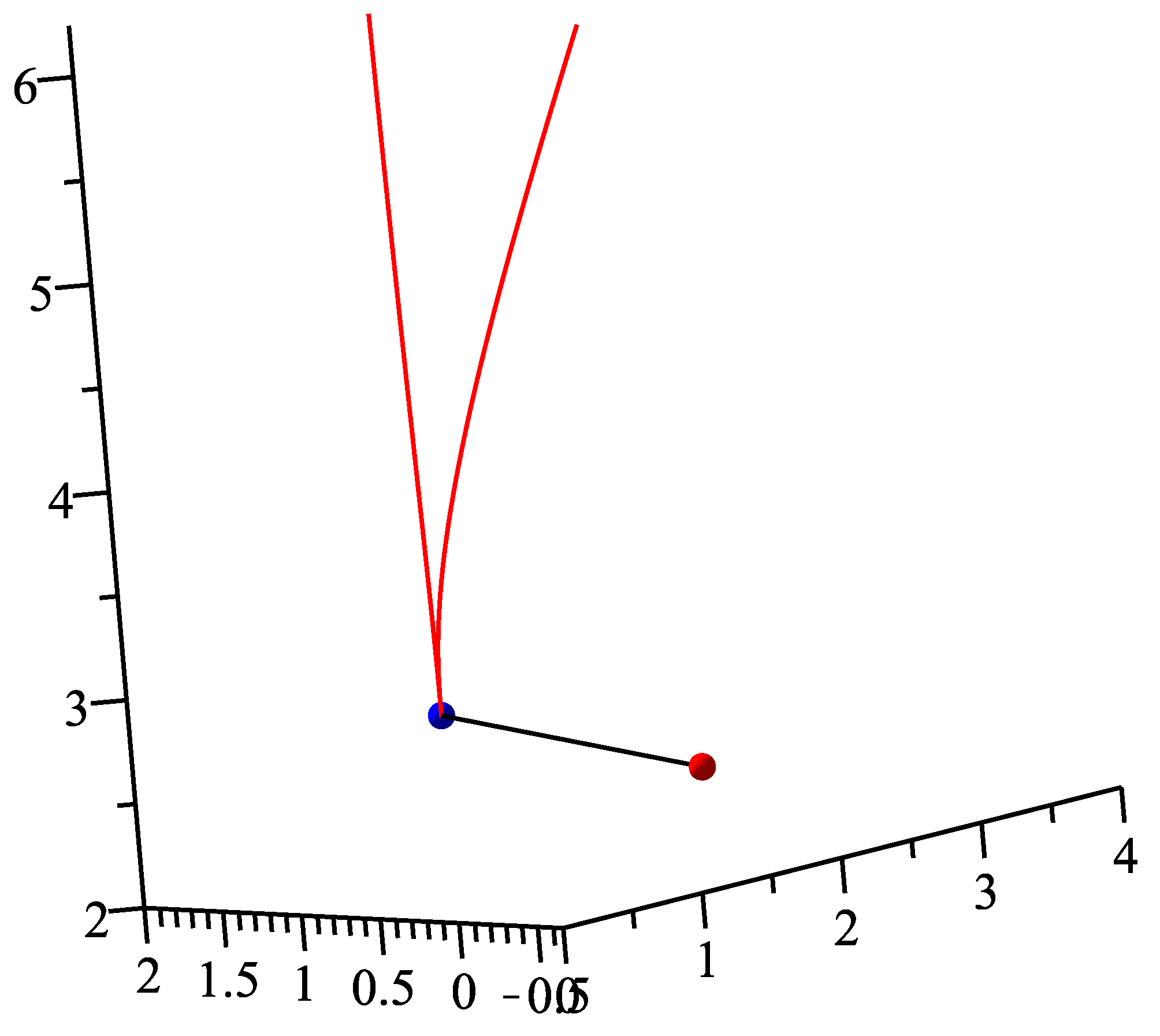

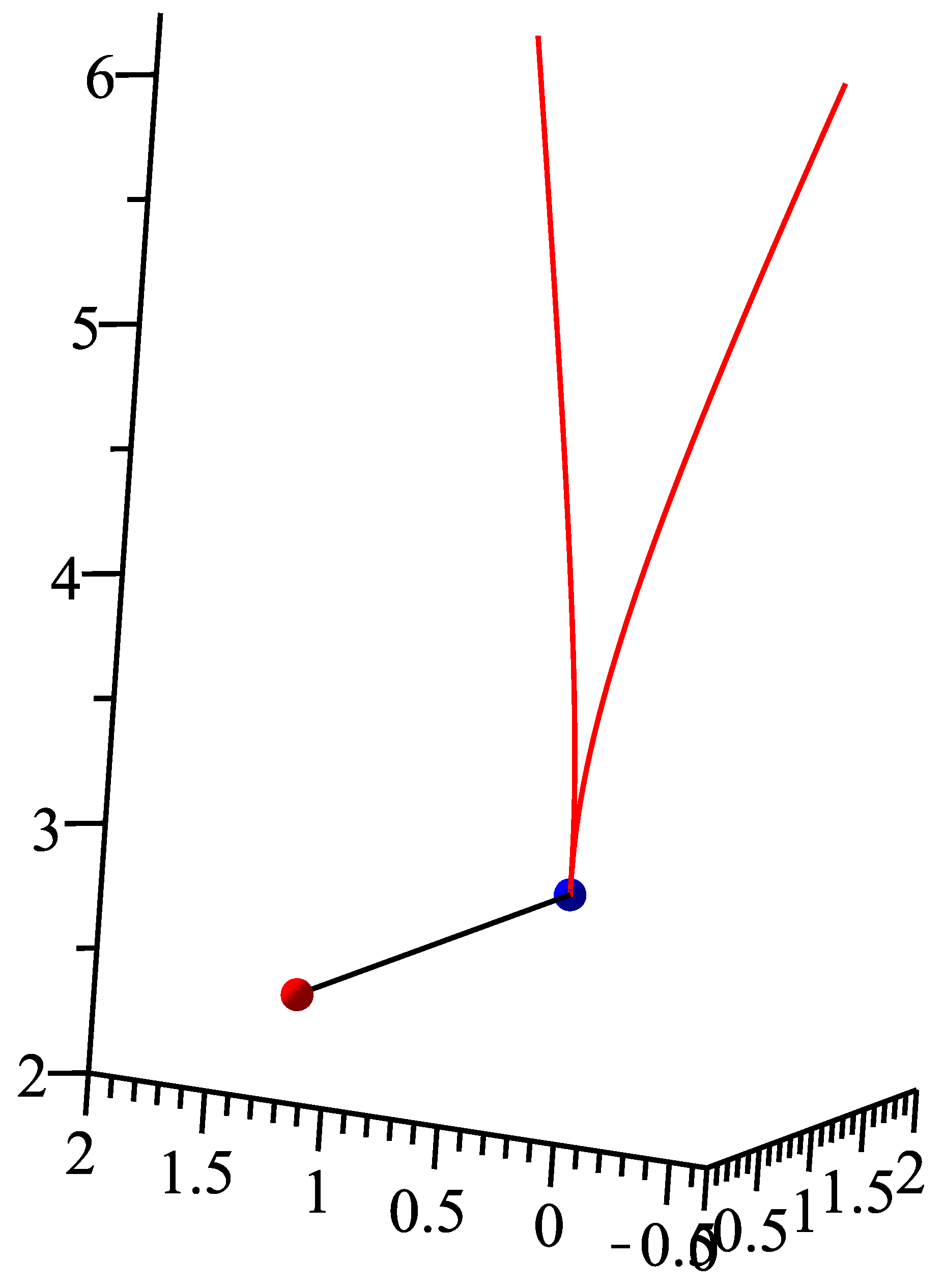

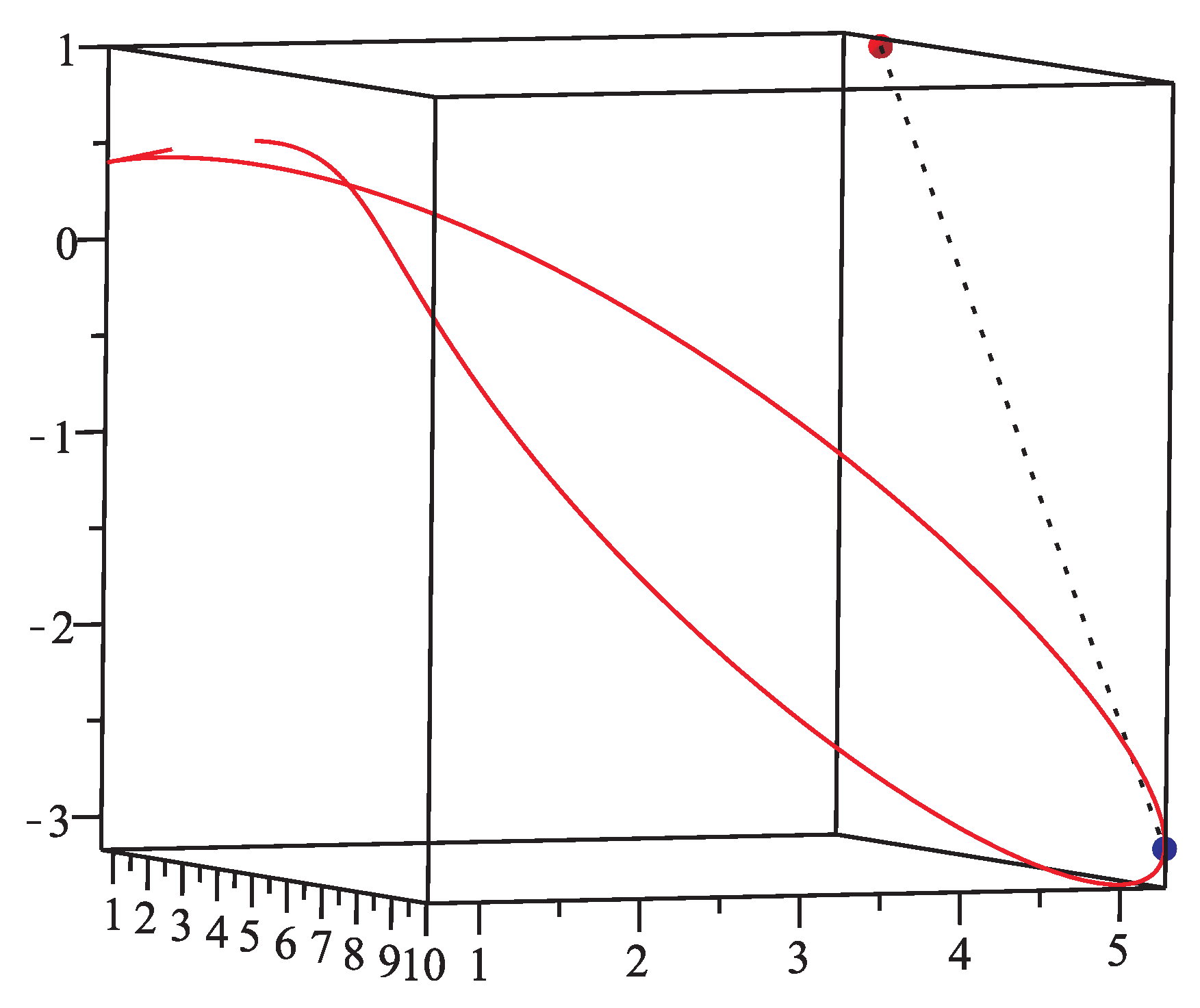

40] (H-H-H method). However, the proof of the convergence for the H-H-H method can not be found in this literature. In this research, we try to give two contributions. Firstly, we give proof that the algorithm is first order convergent and it does not depend on the initial value. We then provide some numerical examples to show its high convergence rate. Secondly, for several special cases where the H-H-H method is not convergent, there are two methods (Newton’s method and the H-H-H method) to combine our method. If the H-H-H method’s iterative parametric value is satisfied with the convergence condition of the Newton’s method, we then go to Newton’s method to increase the convergence process. Otherwise, we go on the H-H-H method until its iterative parametric value is satisfied with the convergence condition of the Newton’s method, and we then turn to it as above. This algorithm not only ensures the robustness of convergence, but also improves the convergence rate. Our hybrid method can go faster than the existing methods and ensures the independence to the initial value. Some numerical examples verify our conclusion.
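The switching idea described above can be sketched as follows. Two points are assumptions made for illustration only: the first order step is taken in its commonly used tangent-line form Δt = ⟨c'(t), p − c(t)⟩ / ⟨c'(t), c'(t)⟩, and the switch to Newton’s method is triggered by a hypothetical threshold `switch_tol` on the step size, standing in for the convergence condition of Newton’s method, which is not stated in this section.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Curve = Callable[[float], List[float]]


def dot(u: Vector, v: Vector) -> float:
    """Euclidean scalar product in R^n."""
    return sum(a * b for a, b in zip(u, v))


def hybrid_project(c: Curve, dc: Curve, ddc: Curve, p: Vector, t0: float,
                   switch_tol: float = 1e-2, tol: float = 1e-12,
                   max_iter: int = 200) -> float:
    """First order tangent steps, then Newton steps once steps are small."""
    t = t0
    use_newton = False
    for _ in range(max_iter):
        d1 = dc(t)
        diff = [pi - ci for pi, ci in zip(p, c(t))]  # p - c(t)
        if use_newton:
            # Newton step on f(t) = <c'(t), c(t) - p>.
            denom = dot(d1, d1) - dot(ddc(t), diff)
            step = dot(d1, diff) / denom
        else:
            # Project p onto the tangent line at c(t), read off the
            # parameter increment (assumed form of the first order step).
            step = dot(d1, diff) / dot(d1, d1)
            # Hypothetical switching rule, not the paper's condition.
            use_newton = abs(step) < switch_tol
        t += step
        if abs(step) < tol:
            return t
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical example curve in R^3: c(t) = (t, t^2, t^3).
def c(t: float) -> List[float]:
    return [t, t * t, t ** 3]


def dc(t: float) -> List[float]:
    return [1.0, 2.0 * t, 3.0 * t * t]


def ddc(t: float) -> List[float]:
    return [0.0, 2.0, 6.0 * t]


p = [1.0, 0.0, 0.0]
t_star = hybrid_project(c, dc, ddc, p, t0=-2.0)  # deliberately distant start
residual = dot(dc(t_star), [ci - pi for ci, pi in zip(c(t_star), p)])
print(t_star, residual)
```

The first order phase uses only the first derivative and serves to bring the parameter near the footpoint; the Newton phase then supplies the fast final convergence. The sketch does not guard against a vanishing denominator in either step.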

The rest of this paper is arranged as follows. In

Section 2, convergence analysis of the H-H-H method is presented. In

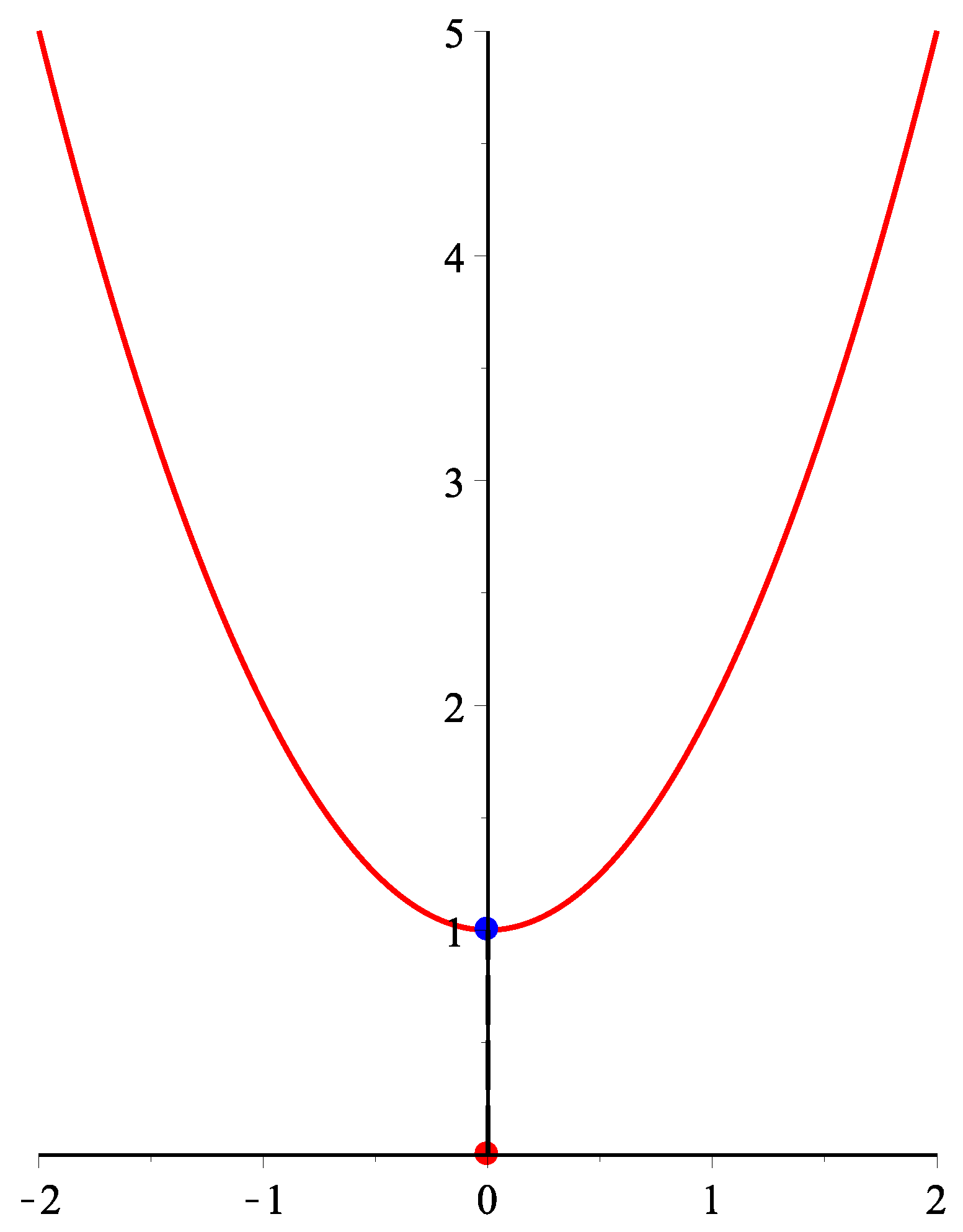

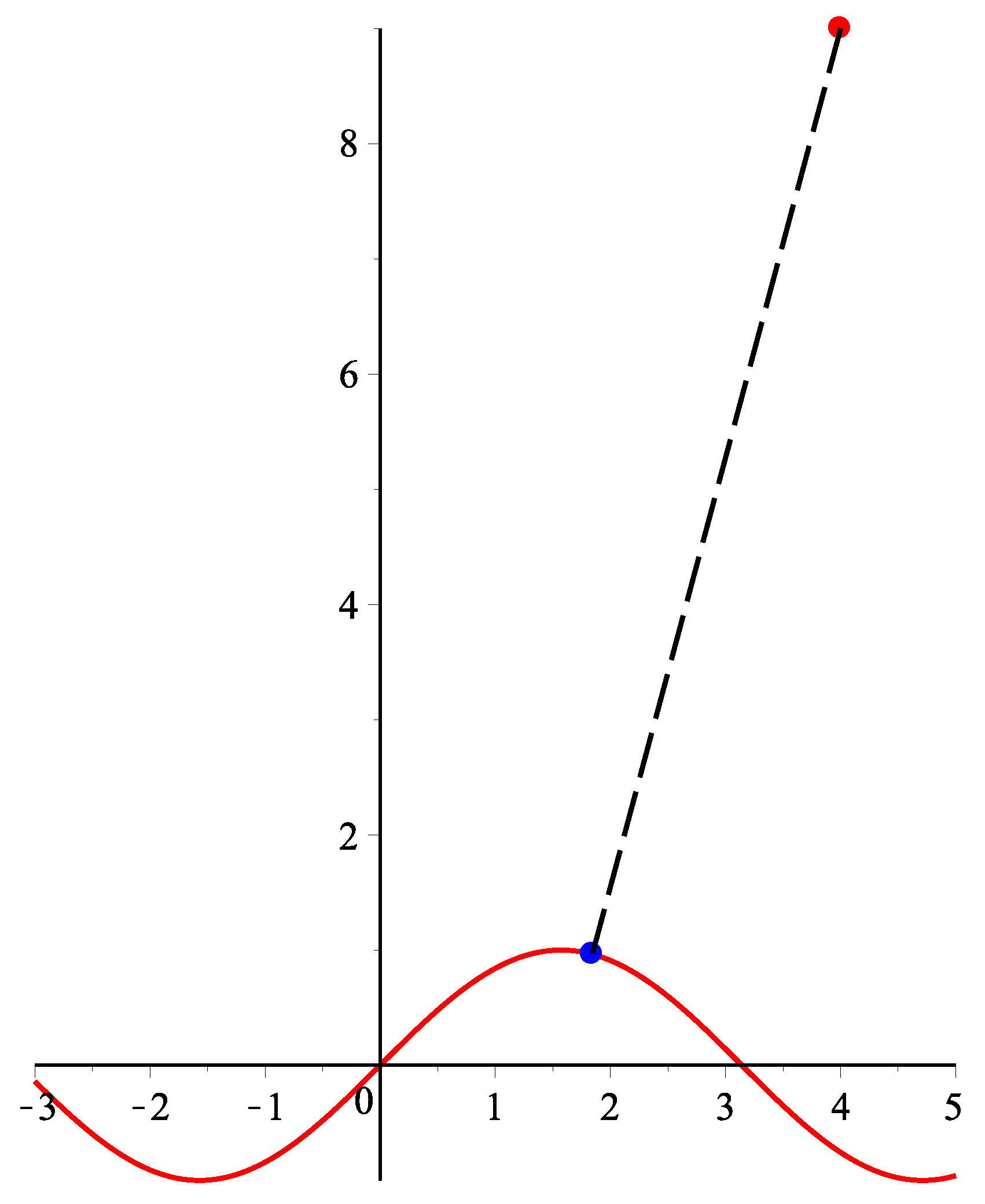

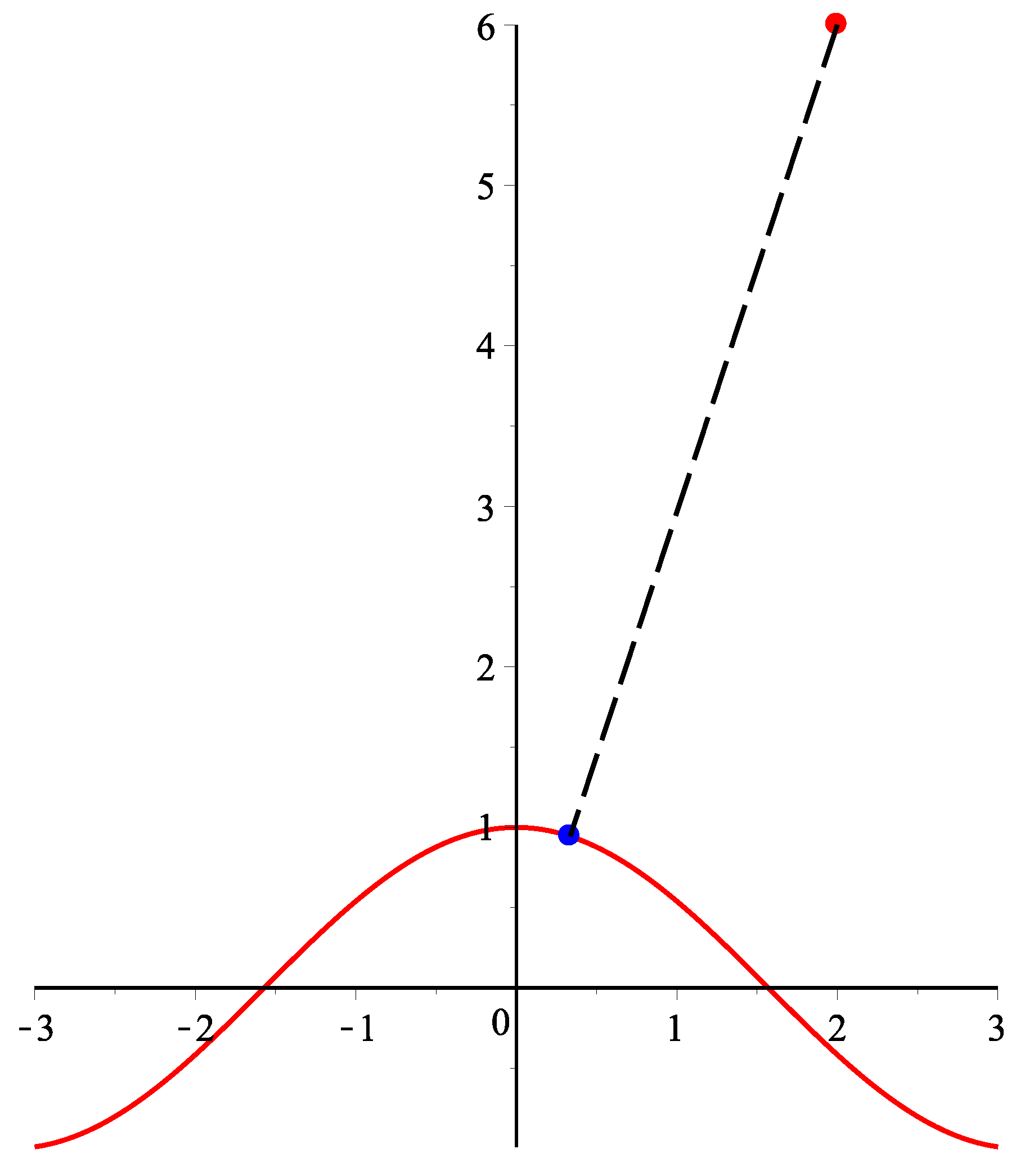

Section 3, for several special cases where the H-H-H method is not convergent, an improved our method is provided. Convergence analysis for our method is also provided in this section. In

Section 4, some numerical examples for our method are verified. In

Section 5, conclusions are provided.