Abstract

Orthogonal projection a point onto a parametric curve, three classic first order algorithms have been presented by Hartmann (1999), Hoschek, et al. (1993) and Hu, et al. (2000) (hereafter, H-H-H method). In this research, we give a proof of the approach’s first order convergence and its non-dependence on the initial value. For some special cases of divergence for the H-H-H method, we combine it with Newton’s second order method (hereafter, Newton’s method) to create the hybrid second order method for orthogonal projection onto parametric curve in an n-dimensional Euclidean space (hereafter, our method). Our method essentially utilizes hybrid iteration, so it converges faster than current methods with a second order convergence and remains independent from the initial value. We provide some numerical examples to confirm robustness and high efficiency of the method.

1. Introduction

In this research, we will discuss the minimum distance problem between a point and a parametric curve in an n-dimensional Euclidean space, and how to gain the closest point (footpoint) on the curve as well as its corresponding parameter, which is termed as the point projection or inversion problem of a parametric curve in an n-dimensional Euclidean space. It is an important issue in the themes such as geometric modeling, computer graphics, computer-aided geometry design (CAGD) and computer vision [1,2]. Both projection and inversion are fundamental for a series of techniques, for instance, the interactive selection of curves and surfaces [1,3], the curve fitting [1,3], reconstructing curves [2,4,5] and projecting a space curve onto a surface [6]. This vital technique is also used in the ICP (iterative closest point) method for shape registration [7].

The Newton-Raphson algorithm is deemed as the most classic one for orthogonal projection onto parametric curve and surface. Searching the root of a polynomial by a Newton-Raphson algorithm can be found in [8,9,10,11,12]. In order to solve the adaptive smoothing for the standard finite unconstrained minimax problems, Polak et al. [13] have presented a extended Newton’s algorithm where a new feedback precision-adjustment rule is used in their extended Newton’s algorithm. Once the Newton-Raphson method reaches its convergence, two advantages emerge and it converges very fast with high precision. However, the result relies heavily on a good guess of initial value in the neighborhood of the solution.

Meanwhile, the classic subdivision method consists of several procedures: Firstly, subdivide NURBS curve or surface into a set of Bézier sub-curves or patches and eliminate redundancy or unnecessary Bézier sub-curves or Bézier patches. Then, get the approximation candidate points. Finally, get the closest point through comparing the distances between the test point and candidate points. This technique is reflected in [1]. Using new exclusion criteria within the subdivision strategy, the robustness for the projection of points on NURBS curves and surfaces in [14] has been improved than that in [1], but this criterion is sometimes too critical. Zou et al. [15] use subdivision minimization techniques which rely on the convex hull characteristic of the Bernstein basis to impute the minimum distance between two point sets. They transform the problem into solving of n-dimensional nonlinear equations, where n variables could be represented as the tensor product Bernstein basis. Cohen et al. [16] develop a framework for implementing general successive subdivision schemes for nonuniform B-splines to generate the new vertices and the new knot vectors which are satisfied with derived polygon. Piegl et al. [17] repeatedly subdivide a NURBS surface into four quadrilateral patches and then project the test point onto the closest quadrilateral until it can find the parameter from the closest quadrilateral. Using multivariate rational functions, Elber et al. [11] construct a solver for a set of geometric constraints represented by inequalities. When the dimension of the solver is greater than zero, they subdivide the multivariate function(s) so as to bind the function values within a specified domain. Derived from [11] but with more efficiency, a hybrid parallel method in [18] exploits both the CPU and the GPU multi-core architectures to solve systems under multivariate constraints. Those GPU-based subdivision methods essentially exploit the parallelism inherent in the subdivision of multivariate polynomial. This geometric-based algorithm improves in performance compared to the existing subdivision-based CPU. Two blending schemes in [19] efficiently remove no-root domains, and hence greatly reduce the number of subdivisions. Through a simple linear combination of functions for a given system of nonlinear equations, no-root domain and searching out all control points for its Bernstein-Bézier basic with the same sign must be satisfied with the seek function. During the subdivision process, it can continuously create these kinds of functions to get rid of the no-root domain. As a result, van Sosin et al. [20] efficiently form various complex piecewise polynomial systems with zero or inequality constraints in zero-dimensional or one-dimensional solution spaces. Based on their own works [11,20], Bartoň et al. [21] propose a new solver to solve a non-constrained (piecewise) polynomial system. Two termination criteria are applied in the subdivision-based solver: the no-loop test and the single-component test. Once two termination criteria are satisfied, it then can get the domains which have a single monotone univariate solution. The advantage of these methods is that they can find all solutions, while their disadvantage is that they are computationally expensive and may need many subdivision steps.

The third classic methods for orthogonal projection onto parametric curve and surface are geometry methods. They are mainly classified into eight different types of geometry methods: tangent method [22,23], torus patch approximating method [24], circular or spherical clipping method [25,26], culling technique [27], root-finding problem with Bézier clipping [28,29], curvature information method [6,30], repeated knot insertion method [31] and hybrid geometry method [32]. Johnson et al. [22] use tangent cones to search for regions with satisfaction of distance extrema conditions and then to solve the minimum distance between a point and a curve, but it is not easy to construct tangent cones at any time. A torus patch approximatively approaches for point projection on surfaces in [24]. For the pure geometry method of a torus patch, it is difficult to achieve high precision of the final iterative parametric value. A circular clipping method can remove the curve parts outside a circle with the test point being the circle’s center, and the radius of the elimination circle will shrink until it satisfies the criteria to terminate [26]. Similar to the algorithm [26], a spherical clipping technique for computing the minimum distance with clamped B-spline surface is provided by [25]. A culling technique to remove superfluous curves and surfaces containing no projection from the given point is proposed in [27], which is in line with the idea in [1]. Using Newton’s method for the last step [1,25,26,27], the special case of non-convergence may happen. In view of the convex-hull property of Bernstein-Bézier representations, the problem to be solved can be formulated as a univariate root-finding problem. Given a parametric curve and a point p, the projection constraint problem can be formulated as a univariate root-finding problem with a metric induced by the Euclidean scalar product in . If the curve is parametrized by a (piece-wise) polynomial, then the fast root-finding schemes as a Bézier clipping [28,29] can be used. The only issue is the discontinuities that can be checked in a post-process. One advantage of these methods is that they do not need any initial guess on the parameter value. They adopt the key technology of degree reduction via clipping to yield a strip bounded of two quadratic polynomials. Curvature information is found for computing the minimum distance between a point and a parameter curve or surface in [6,30]. However, it needs to consider the second order derivative and the method [30] is not fit for n-dimensional Euclidean space. Hu et al. [6] have not proved the convergence of their two algorithms. Li et al. [33] have strictly proved convergence analysis for orthogonal projection onto planar parametric curve in [6]. Based on repeated knot insertion, Mørken et al. [31] exploit the relationship between a spline and its control polygon and then present a simple and efficient method to compute zeros of spline functions. Li et al. [32] present the hybrid second order algorithm which orthogonally projects onto parametric surface; it actually utilizes the composite technology and hence converges nicely with convergence order being 2. The geometric method can not only solve the problem of point orthogonal projecting onto parametric curve and surface but also compute the minimum distance between parametric curves and parametric surfaces. Li et al. [23] have used the tangent method to compute the intersection between two spatial curves. Based on the methods in [34,35], they have extended to compute the Hausdorff distance between two B-spline curves. Based on matching a surface patch from one model to the other model which is the corresponding nearby surface patch, an algorithm for solving the Hausdorff distance between two freeform surfaces is presented in Kim et al. [36], where a hierarchy of Coons patches and bilinear surfaces that approximate the NURBS surfaces with bounding volume is adopted. Of course, the common feature of geometric methods is that the ultimate solution accuracy is not very high. To sum up, these algorithms have been proposed to exploit diverse techniques such as Newton’s iterative method, solving polynomial equation roots methods, subdividing methods, geometry methods. A review of previous algorithms on point projection and inversion problem is obtained in [37].

More specifically, using the tangent line or tangent plane with first order geometric information, a classical simple and efficient first order algorithm which orthogonally project onto parametric curve and surface is proposed in [38,39,40] (H-H-H method). However, the proof of the convergence for the H-H-H method can not be found in this literature. In this research, we try to give two contributions. Firstly, we give proof that the algorithm is first order convergent and it does not depend on the initial value. We then provide some numerical examples to show its high convergence rate. Secondly, for several special cases where the H-H-H method is not convergent, there are two methods (Newton’s method and the H-H-H method) to combine our method. If the H-H-H method’s iterative parametric value is satisfied with the convergence condition of the Newton’s method, we then go to Newton’s method to increase the convergence process. Otherwise, we go on the H-H-H method until its iterative parametric value is satisfied with the convergence condition of the Newton’s method, and we then turn to it as above. This algorithm not only ensures the robustness of convergence, but also improves the convergence rate. Our hybrid method can go faster than the existing methods and ensures the independence to the initial value. Some numerical examples verify our conclusion.

The rest of this paper is arranged as follows. In Section 2, convergence analysis of the H-H-H method is presented. In Section 3, for several special cases where the H-H-H method is not convergent, an improved our method is provided. Convergence analysis for our method is also provided in this section. In Section 4, some numerical examples for our method are verified. In Section 5, conclusions are provided.

2. Convergence Analysis of the H-H-H Method

In this part, we will prove that the algorithm defined by Equations (2) or (3) is of first order convergence and its convergence does not rely on the initial value. Suppose a curve in an n-dimensional Euclidean space and a test point . The first order geometric method to compute the footpoint q of test point p can be implemented as below. Projecting test point p onto the tangent line of the parametric curve in an n-dimensional Euclidean space at gets a point q determined by and its derivative . The footpoint can be approximated as

Let , and repeatedly iterate the above process until is less than an error tolerance . This method is addressed as H-H-H method [38,39,40]. Furthermore, convergence of this method will not depend on the choice of the initial value. According to many of our test experiments, when the iterative parametric value approaches the target parametric value , the iteration step size becomes smaller and smaller, while the corresponding number of iterations becomes bigger and bigger.

Theorem 1.

The convergence order of the method defined by Equations (2) or (3) is one, and its convergence does not depend on the initial value.

Proof.

We adopt the numerical analysis method which is equivalent to those in the literature [41,42]. Firstly, we deduce the expression of footpoint q. Suppose that parameter curve is a curve in an n-dimensional Euclidean space , where the corresponding projecting point with parameter is orthogonal projecting of the test point onto the parametric curve . It is easy to indicate a relational expression

where and tangent vector . In order to solve the intersection (footpoint q) between the tangent line, which goes through the parametric curve at , and the perpendicular line, which is determined by the test point p, we try to express the equation of the tangent line as:

where and s is a parameter. In addition, the vector of line segment both going through the test point p and the point will be

where . Because the vector (6) and the tangent vector of Equation (5) are orthogonal to each other, the current parameter value s of Equation (5) is

Substituting (7) into (5), we have

Thus, the footpoint is determined by Equation (8).

Secondly, we deduce that the convergence order of the method defined by (2) or (3) is first order convergent. Our proof method absorbs the idea of [41,42]. Substituting (8) into (2), and simplifying, we get the relationship,

Using Taylor’s expansion, we get

where , and From (10) and (11) and combining with (4), the numerator of Equation (9) can be transformed into the following one:

where . By (11), the denominator of Equation (9) can be changed as follows:

where . Substituting Equations (12) and (13) into the right-hand side of Equation (9), we get

Using Taylor’s expansion by Maple 18, and through simplification, we get

where the symbol is the coefficient of the first order error of Equation (15). The result implies the iterative Equations (2) or (3) is of first order convergence.

Now, we try to interpret that Equations (2) or (3) do not depend on the initial value.

Our proof method absorbs the idea of references [43,44]. Without loss of generality, we only prove that convergence of Equations (2) or (3) does not depend on the initial value in two-dimensional case. As to convergence of Equations (2) or (3) not being dependent on the initial value in general n-dimensional Euclidean space case, it is completely equivalent to the two-dimensional case.

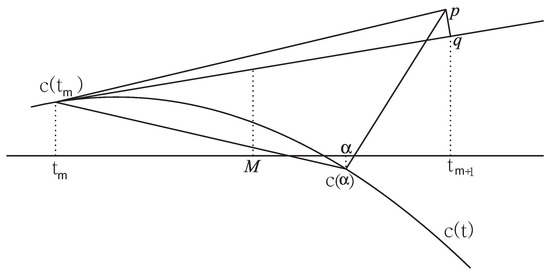

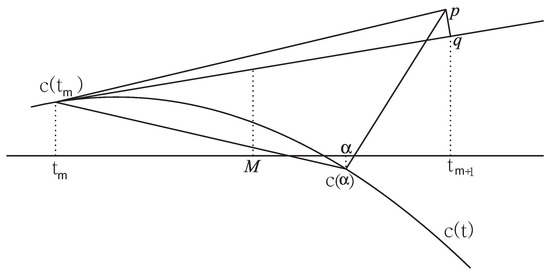

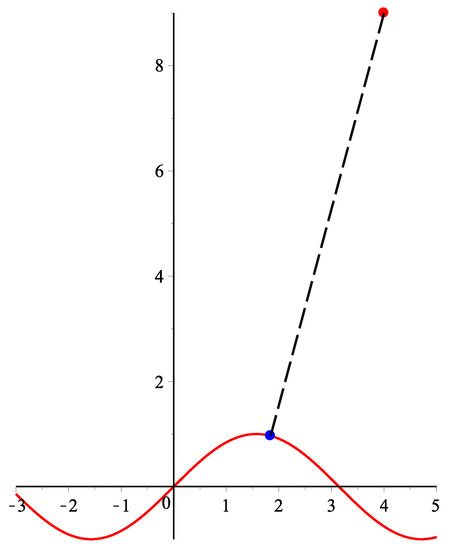

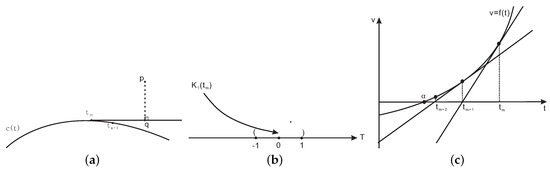

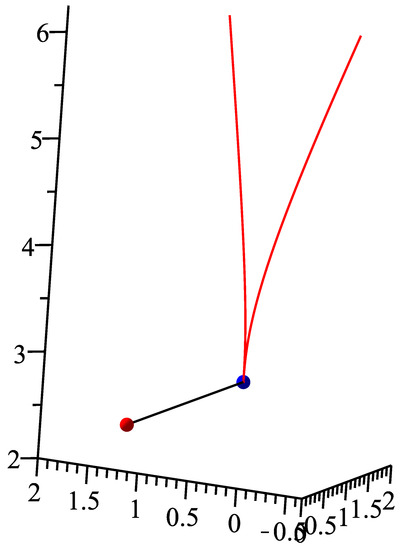

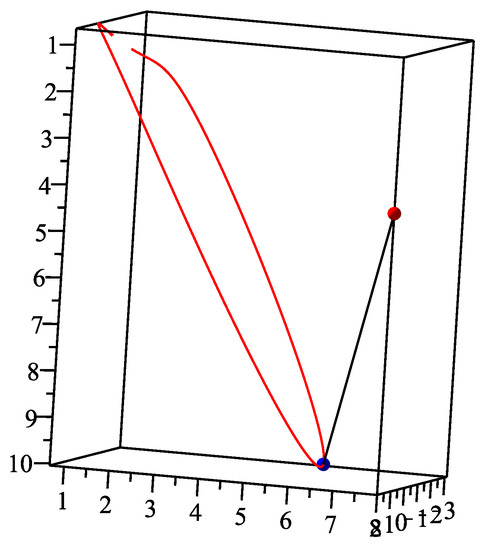

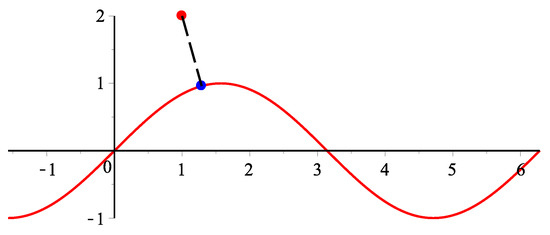

Firstly, we interpret Figure 1. For a horizontal axis t, there are two points are on the planar parametric curve . For the first point on the horizontal axis, the test point p orthogonal projects it onto the planar parametric curve and yields the second point and its corresponding parameter value on the horizontal axis. Then, by the iterative methods (2) or (3), the line segment connected by the point p and the point is perpendicular to the tangent line of the planar parametric curve at . The footpoint q is determined by the tangent line of the planar parametric curve through the point . Evidently, the parametric value of footpoint q can be used as the next iterative value. M is the corresponding parametric value of the middle point of the point and the footpoint q.

Figure 1.

Geometric illustration for convergence analysis.

Secondly, we prove the argument whose convergence of Equations (2) or (3) does not depend on the initial value. It is easy to know that t denotes the corresponding parameter for the first dimensional of the planar parametric curve on the two-dimensional plane. When the iterative Equations (2) or (3) start to run, we suppose that the iterative parameter value is satisfied with the inequality relationship and the corresponding parameter of the footpoint q is , as shown in Figure 1. The middle point of two points and is , i.e., , and, because of , then there exists an inequality . Equivalently, , which can be expressed as , where . If , we can get the same result through the same method. Thus, an iterative error expression in a two-dimensional plane is demonstrated. Thus, it is known that convergence of the iterative Equations (2) or (3) does not depend on the initial value in two-dimensional planes (see Figure 1). Furthermore, we could get the argument that convergence of the iterative Equations (2) or (3) does not depend on the initial value in an n-dimensional Euclidean space. The proof is completed. □

3. The Improved Algorithm

3.1. Counterexamples

In Section 2, convergence of the H-H-H method does not depend on the initial value. For special cases with non-convergence by the H-H-H method, we then enumerate nine counterexamples.

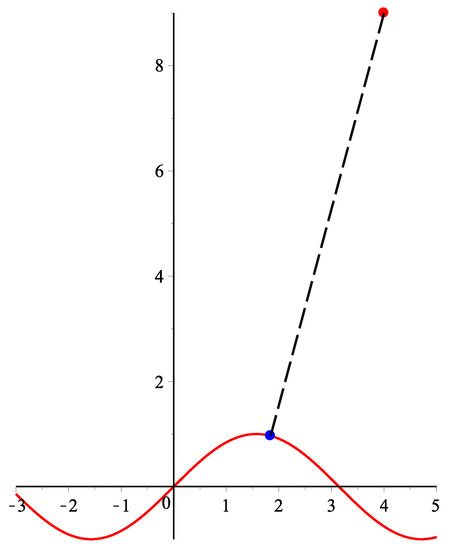

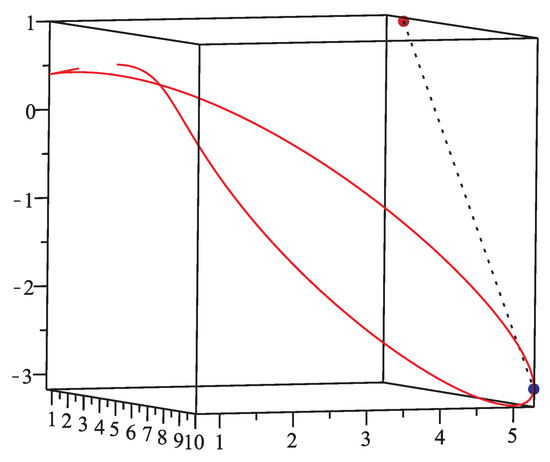

Counterexample 1.

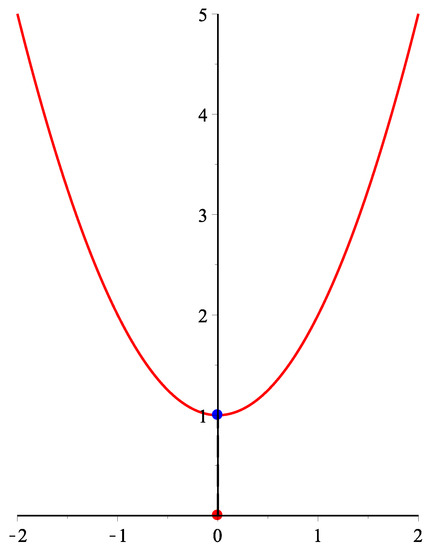

There are a parametric curve and a test point . The projection point and parametric value of the test point p are and , respectively. As to many initial values, the H-H-H method fails to converge to α. When the initial values are t = respectively, there repeatedly appear alternating oscillatory iteration values of 0.412415429665, −0.412415429665. Furthermore, for a parametric curve , , about and many initial values, the H-H-H method fails to converge to α (see Figure 2).

Figure 2.

Geometric illustration for counterexample 1.

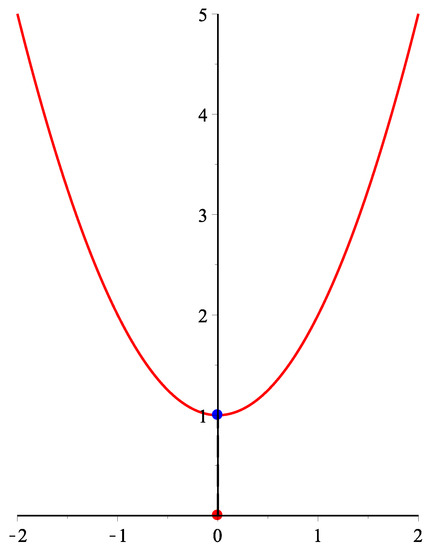

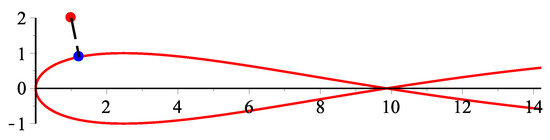

Counterexample 2.

There are a parametric curve and a test point . The projection point and parametric value of the test point p are and α = 0, respectively. For any initial value, the H-H-H method fails to converge to α. When the initial values are respectively, there repeatedly appear alternating oscillatory iteration values of 0.304949569175, −0.304949569175. Furthermore, for a parametric curve , , , , , , about point and any initial value, the H-H-H method fails to converge to α.

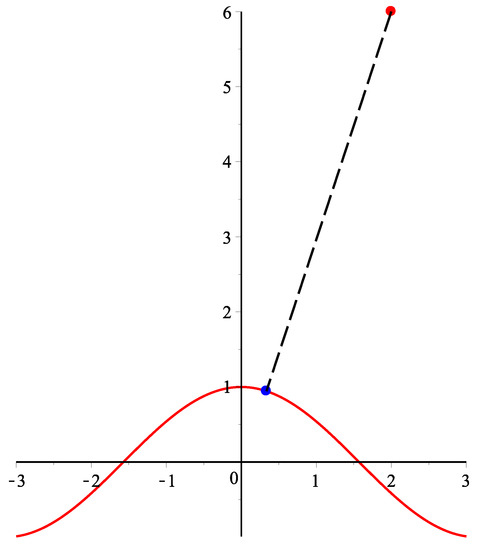

Counterexample 3.

There are a parametric curve and a test point . The projection point and parametric value of the test point p are and , respectively. For point p and any initial value, the H-H-H method fails to converge to α. When the initial values are respectively, there repeatedly appear alternating oscillatory iteration values of 2.165320, 0.0778704, 6.505971, 9.609789. In addition, for a parametric curve , , for any test point p and any initial value, the H-H-H method fails to converge to α (see Figure 3).

Figure 3.

Geometric illustration of counterexample 3.

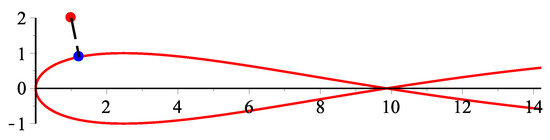

Counterexample 4.

There are a parametric curve and a test point . The projection point and parametric value of the test point p are , and , respectively. For test point p and any initial value, the H-H-H method fails to converge to α. When the initial value is , alternating oscillatory iteration values of 5.18741299662, 3.59425803253, −0.507188248308, 1.6901041247, 3.82746208506 repeatedly appear. When the initial value is t = 2, very irregular oscillatory iteration values of 0.652526561595, −0.720371663877, −2.39555359952, 0.365881194752, 2.06880954777, 3.18725085474, 1.71447110647, etc. appear In addition, for a parametric curve , , for any test point p and any initial value, the H-H-H method fails to converge to α (see Figure 4).

Figure 4.

Geometric illustration of counterexample 4.

Counterexample 5.

There are a parametric curve and a test point . The projection point and parametric value of the test point p are (7.310786, 7.310786, 7.310786, 7.310786, 0.8560612) and , respectively. For point p and any initial value, the H-H-H method fails to converge to α. When the initial values are respectively, there repeatedly appear alternating oscillatory iteration values of 7.24999006346, 6.37363460615. In addition, for a parametric curve , with a test point , for any initial value, the H-H-H method fails to converge to α.

Counterexample 6.

There are a parametric curve and a test point . The projection point and parametric value of the test point p are (5.883406, 5.883406, 5.883406, 5.883406, 0.9211469) and , respectively. For point p and any initial value, the H-H-H method fails to converge to α. When the initial values are respectively, there repeatedly appear alternating oscillatory iteration values of 4.17182145828, 7.80116702003. In addition, about a parametric curve , with a point , for any initial value, the H-H-H method fails to converge. The non-convergence explanation of the three counterexamples below are similar to the preceding six ones and omitted to save space.

Counterexample 7.

There are a parametric curve , , , , in five-dimensional Euclidean space and a test point . The projection point and parametric value of the test point p are and , respectively. For any initial value , the H-H-H method fails to converge. We also test many other examples, such as when parametric curve is completely symmetrical and the point is on the symmetrical axis of parametric curve. For any initial value , the same results remain.

Counterexample 8.

There are a parametric curve ,sin,sinsin, in five-dimensional Euclidean space and a test point . The corresponding orthogonal projection parametric value α are −3.493548, −2.280571, 1.875969, 4.791677, respectively. For any initial value , the H-H-H method fails to converge.

Counterexample 9.

There is a parametric curve (sin),cos sin,cos, in five-dimensional Euclidean space and a test point . The corresponding orthogonal projection parametric value α are −4.833375, −3.058735, 0.9730030, 3.738442, respectively. For any initial value , the H-H-H method fails to converge.

3.2. The Improved Algorithm

Due to the H-H-H method’s non-convergence for some special cases, the improved algorithm is presented to ensure the converge for any parametric curve, test point and initial value. The most classic Newton’s method can be expressed as

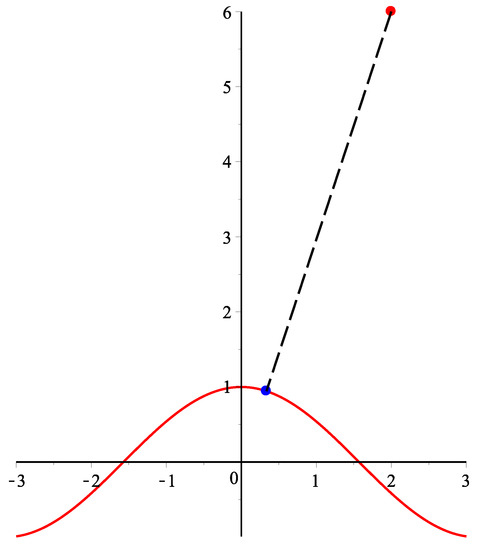

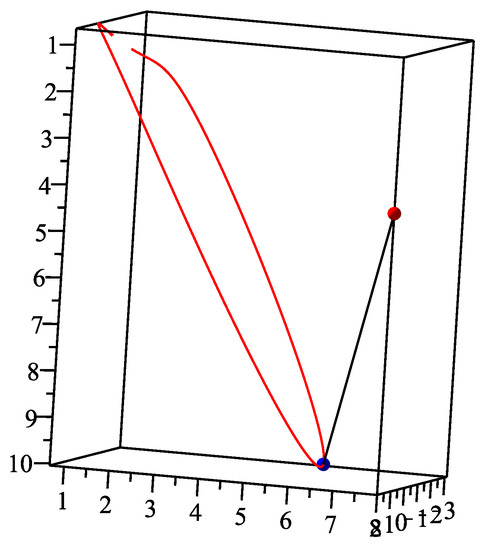

where , . It converges faster than the H-H-H method. However, the convergence of this depends on the chosen initial value. Only when the local convergence condition for the Newton’s method is satisfied, the method can acquire high effectiveness. In order to improve the robustness and rate of convergence, based on the the H-H-H method, our method is proposed. Combining the respective advantage of their two methods, if the iterative parametric value of the H-H-H method is satisfied with the convergence condition of the Newton’s method, we then go to the method to increase the convergence process. Otherwise, we continue the H-H-H method until it can generate iterative parametric value while satisfying the convergence condition by the Newton’s method, and we then go to the iterative process mentioned above. Thus, we run to the end of the whole process. The procedure not only ensures the robustness of convergence, but also improves the convergence rate. Using a hybrid strategy, our method is faster than current methods and independent from the initial value. Some numerical examples verify our conclusion. Our method can be realized as follows (see Figure 5).

Figure 5.

Geometric illustration for our method. (a) Running the H-H-H method; (b) Judging the H-H-H method whether being satisfied the convergence condition of fixed point theorem for the Newton’s iterative method; (c) Running the Newton’s iterative method.

Hybrid second order method

Input: Initial iterative value , test point p and parametric curve in an n-dimensional Euclidean space.

Output: The corresponding parameter determined by orthogonal projection point.

- Step 1.

- Initial iterative parametric value is input.

- Step 2.

- Using the iterative Equation (3), calculate the parametric value , and update to , namely, .

- Step 3.

- Determine whether absolute value of difference between the current and the new is near 0. If so, this algorithm is ended.

- Step 4.

- Substitute the new into , determine if .If ( {Using Newton’s iterative Equation (16), compute until absolute value of difference between the current and the new is near 0; then, this algorithm ends.}Else {turn to Step 2.}

Remark 1.

Firstly, a geometric illustration of our method in Figure 5 would be presented. Figure 5a illustrates the second step of our method where the next iterative parameter value is determined by the iterative Equation (3). During the iterative process, the step will become smaller and smaller. Thus, the next iterative parameter value comes close to parameter value but far from the footpoint q. If the third step of our method is not over, then our method goes into the fourth step. Figure 5b is judging condition of a fixed point theorem of the fourth step of our method. If , then it turns to the Newton’s method in Figure 5c until it runs to the end of the whole process of Newton’s second order iteration; otherwise, it goes to the second step in Figure 5a.

Secondly, we give an interpretation for the singularity case of the iterative Equation (16). As to some special cases where the H-H-H method is not convergent in Section 3.1, our method still converges. We test many examples for arbitrary initial value, arbitrary test point and arbitrary parametric curve and find that our method remains more robust to converge than the H-H-H method. If the first order derivative of the iterative Equation (16) develops into 0, i.e., about some non-negative integer m, we use a perturbed method to solve the special problem, which adopts the idea in [23,45]. Namely, the function could be increased by a very small positive number ε, i.e., , and then the iteration by Equation (16) is continued in order to calculate the parameter value. On the other hand, if the curve can be parametrized by a (piece-wise) polynomial, then the fast root-finding schemes such as Bézier clipping [28,29] are efficient ones. The only issue is the discontinuities that can be checked in a post-process. One then does not need any initial guess on the parameter value.

Thirdly, if the curve is only continuous, and the closest point can be exactly such a point, then the derivative is not well defined and our method may fail to find such a point. Namely, there are singular points on the parametric curve. We adopt the following technique to solve the problem of singularity. We use the methods [46,47,48] to find all singular points on the parametric curve and the corresponding parametric value of each singular point as many as possible. Then, the hybrid second order method comes into work. If the current iterative parametric value is the corresponding parametric value of a singular point, we make a very small perturbation ε to the current iterative parametric value , i.e., . The purpose of this behavior is to enable the hybrid second order method to run normally. Then, from all candidate points (singular points and orthogonal projection points), a corresponding point is selected so that the distance between the corresponding point and the test point is the minimum one. When the entire program terminates, the minimum distance and its corresponding parameter value are found.

3.3. Convergence Analysis of the Improved Algorithm

In this subsection, we prove the convergence analysis of our method.

Theorem 2.

In Reference [49] (Fixed Point Theorem)

If , for all ; furthermore, if exists on and a positive constant exists with for all , then there exists exactly one fixed point in .

In addition, if , the corresponding fixed point theorem of Newton’s method is as follows:

Theorem 3.

Let be a differentiable function, if for all , there is

Then, there is a fixed point in Newton’s iteration expression (16) such that . Meanwhile, the iteration sequence been from expression (16) can converge to the fixed point when .

Theorem 4.

Our method is second order convergent.

Proof:

Let be a simple zero for a nonlinear function , where . Using Taylor’s expansion, we have

where , and . Combining with (15), we then have

This means that the convergence order of our method is 2. The proof is completed. □

Theorem 5.

Convergence of our method does not depend on the initial value.

Proof.

According to the description of our method, if the iterative parametric value of the H-H-H method is satisfied with the convergence condition of the Newton’s method, we then go to the Newton’s method. Otherwise, we steadily adopt the H-H-H method until its iterative parametric value is satisfied with the convergence condition of the Newton’s method, and we go to Newton’s method. Then, we run to the end of the whole process. Theorem 1 ensures that it does not depend on the initial value. If our method goes to the fourth step and if it is appropriate to the condition of the fixed point theorem (Theorem 3), Newton’s method is realized by our method. Then, the fourth step of our method being also independent of the initial value can be confirmed by Theorem 3. In brief, convergence of our method does not depend on the initial value via the whole algorithm execution process. The proof is completed. □

4. Numerical Experiments

In order to illustrate the superiority of our method to other algorithms, we provide five numerical examples to confirm its robustness and high efficiency. From Table 1, Table 2, Table 3, Table 4, Table 5, Table 6, Table 7, Table 8, Table 9, Table 10, Table 11, Table 12, Table 13 and Table 14, the iterative termination criteria is satisfied such that . All numerical results were computed through g++ in a Fedora Linux 8 environment. The approximate zero reached up to the 17th decimal place is reflected. These results of our five examples are obtained from computer hardware configuration with T2080 1.73 GHz CPU and 2.5 GB memory.

Table 1.

Running time for different initial values of Example 1 by our method with test point p = (2.0, 4.0, 2.0).

Table 2.

Running time for different initial values of Example 1 by our method with test point p = (2.0, 2.0, 2.0).

Table 3.

Running time for different initial values of Example 2 by our method with test point p = (1.0, 3.0, 5.0).

Table 4.

Running time for different initial values of Example 2 by our method with test point p = (2.0, 4.0, 8.0).

Table 5.

Running time for different initial values of Example 2 by the algorithm [26].

Table 6.

Running time for different initial values of Example 3 by our method with test point p = (3, 4, 5, 6, 7).

Table 7.

Running time for different initial values of Example 3 by our method with test point p = (30, 40, 50, 60, 70).

Table 8.

Running time for different initial values of Example 4 by our method.

Table 9.

Running time for different initial values of Example 4 by the H-H-H method.

Table 10.

Running time for different initial values of Example 4 by the Algorithm [6].

Table 11.

Running time for different initial values of Example 5 by our method.

Table 12.

Running time for different initial values of Example 5 by the Algorithm [6].

Table 13.

Comparison of iterations by different methods in Example 5.

Table 14.

Time comparison of various algorithms.

Example 1.

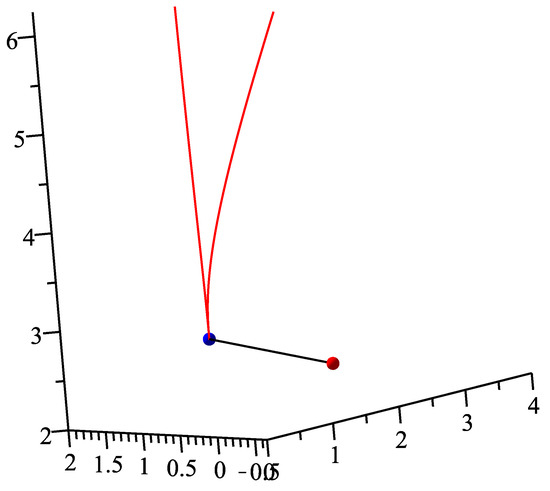

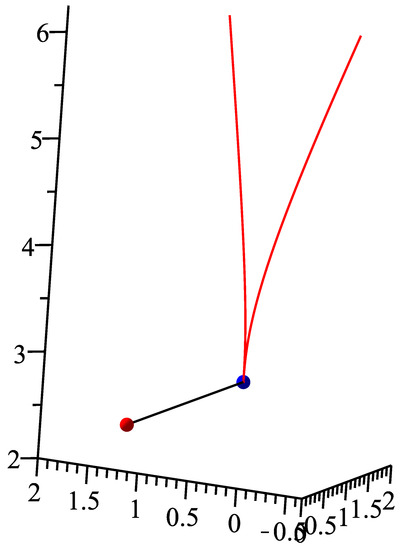

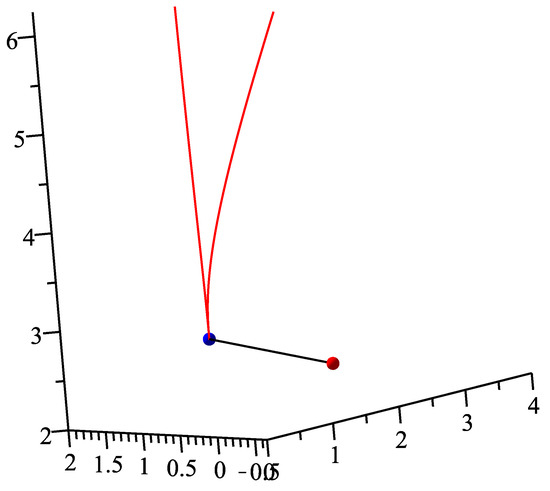

There is a parametric curve in three-dimensional Euclidean space and a test point . Using our method, the corresponding orthogonal projection parametric value is , the initial values are 0,2,4,5,6,8,9,10, respectively. For each initial value, the iteration process runs 10 times and then 10 different iteration times in nanoseconds, respectively. In Table 1, the average run time of our method for eight different initial values are 536,142, 77,622, 101,481, 119,165, 126,502, 142,393, 150,801, 156,413 nanoseconds, respectively. Finally, the overall average running time is 176,315 nanoseconds (see Figure 6). If test point p is (2.0, 2.0, 2.0), the corresponding orthogonal projection parametric value is , we replicate the procedure using our method and report the results in Table 2. In Table 2, the average running time of our method for 8 different initial values are 627,996, 89,992, 119,241, 139,036, 148,269, 167,364, 167,364, 178,554 nanoseconds, respectively. Finally, the overall average running time is 205,228 nanoseconds (see Figure 7). However, for the above two cases, the H-H-H method does not converge for any initial iterative value.

Figure 6.

Geometric illustration for the test point p = (2.0, 4.0, 2.0) of Example 1.

Figure 7.

Geometric illustration for the test point p = (2.0, 2.0, 2.0) of Example 1.

Because of a singular point on the parametric curve, we have also added some pre-processing steps before our method. (1) Find the singular point (0,0,3) and the corresponding parametric value 0 by using the methods [21,46,47,48]. (2) Using our method, the orthogonal projection points of test points (2,4,2) and (2,2,2) and their corresponding parameter values 0 and 0 are calculated, respectively. (3) From all candidate points(singular point and orthogonal projection point), corresponding point is selected so that the distance between the corresponding point and the test point is the minimum one. In Figure 6, the blue point denotes singular point (0,0,3), which is also the orthogonal projecting point of the test point (2,4,2). This is the same for the blue point in Figure 7.

Example 2.

There is a spatial quartic quasi-rational Bézier curve , where , , , and a test point . The corresponding orthogonal projection parametric value α are , , , , respectively. Using our method, the initial values are ,,,,,,,, respectively. For each initial value, the iteration process runs 10 times and then 10 different iteration times in nanoseconds, respectively. From Table 3, the average running time of our method for eight different initial values are 85,344, 93,936, 79,424, 62,643, 54,482, 22,982, 25,654, 26,868 nanoseconds, respectively. Finally, the overall average running time is 56,417 nanoseconds (see Figure 8). If test point p is , the corresponding orthogonal projection parametric value α are , ,, , respectively. We firstly replicate the procedure using our method and report the results in Table 4. From Table 4, the average running time of our method for eight different initial iterative values are 101,436, 109,001, 95,061, 77,563, 62,366, 27,054, 29,587, 32,501 nanoseconds, respectively. Finally, the overall average running time is 66,821 nanoseconds (see Figure 9). We then replicate the procedure using the algorithm [26] and report the results in Table 5. From Table 5, the average running time of the algorithm [26] for eight different initial values are 619,772, 654,281, 584,653, 467,856, 384,393, 163,225, 183,257, 195,013 nanoseconds, respectively. Finally, the overall average running time is 406,556 nanoseconds. However, for the above two cases, the H-H-H method does not converge for any initial value.

Figure 8.

Geometric illustration for the first case of Example 2.

Figure 9.

Geometric illustration for the second case of Example 2.

Example 3.

There is a parametric curve in five-dimensional Euclidean space and a test point . Using our method, the corresponding orthogonal projection parametric value is , the initial values are respectively. For each initial value, the iteration process runs 10 times and then 10 different iteration times in nanoseconds, respectively. In Table 6, the average running time of our method for eight different initial values are 391,013, 424,444, 391,092, 249,376, 115,617, 170,212, 179,465, 196,912 nanoseconds, respectively. Finally, the overall average running time is 264,766 nanoseconds. If test point p is , the corresponding orthogonal projection parametric value α is . We then replicate the procedure using our method and report the results in Table 7. In Table 7, the average running time of our method for eight different initial values are , , , , , , , nanoseconds, respectively. Finally, the overall average running time is 323,464 nanoseconds. However, for the above parametric curve and many test points, the H-H-H method does not converge for any initial value.

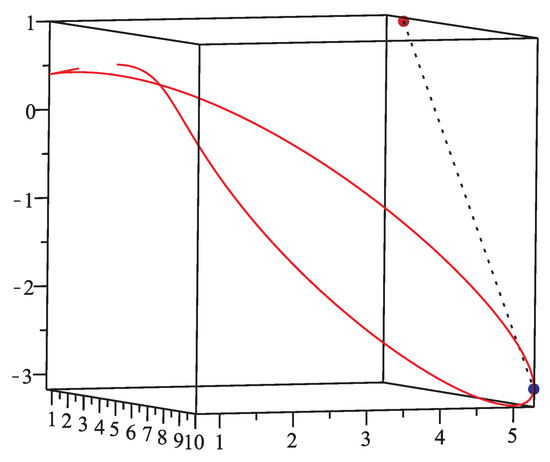

Example 4.

(Reference to [6])There is a parametric curve in two-dimensional Euclidean space and a test point . The corresponding orthogonal projection parametric value is . Using our method, the initial values are respectively. For each initial value, the iteration process runs 10 times and then 10 different iteration times in nanoseconds, respectively. In Table 8, the average running time of our method for eight different initial iterative values are 62,816, 35,042, 27,648, 43,122, 21,625, 38,654, 21,518, 72,917 nanoseconds, respectively. Finally, the overall average running time is 40,418 nanoseconds (see Figure 10). Implementing the same procedure, the overall average running time given by the H-H-H method is 231,613 nanoseconds in Table 9, while the overall average running time given by the second order method [6] is 847,853 nanoseconds in Table 10. Thus, our method is faster than the H-H-H method [38,39,40] and the second order method [6].

Figure 10.

Geometric illustration for Example 4.

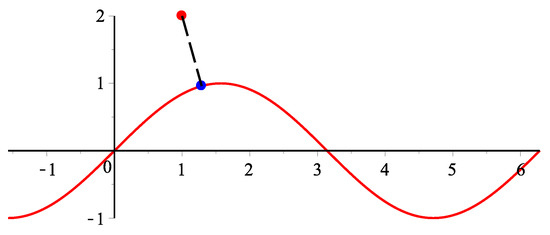

Example 5.

(Reference to [6])There is a parametric curve in two-dimensional Euclidean space and a test point , the corresponding orthogonal projection parametric value is . Using our method, the initial values are respectively. For each initial value, the iteration process runs 10 times and then 10 different iteration time in nanoseconds, respectively. In Table 11, the average running time of our method for eight different initial values are , , , ,, , , nanoseconds, respectively. Finally, the overall average running time is 32,721 nanoseconds (see Figure 11). We then replicate the procedure using the second order method [6] and report the results in Table 12. In Table 12, the average running time of the second order method [6] for 8 different initial values are , , , , , , , nanoseconds, respectively. Finally, the overall average running time is 210,049 nanoseconds. In addition, we compare the iterations by different methods where the NC denotes non-convergence in Table 13.

Figure 11.

Geometric illustration for Example 5.

Remark 2.

From the results of five examples, the overall average running time of our method is 145.5 μs. From the results of Table 9, the overall average running time of the H-H-H method is 231.6 μs. From results of six examples in [26], the overall average running time of the algorithm [1] is 680.8 μs. From results of six examples in [26], the overall average running time of the algorithm [14] is 1270.8 μs. From results of Table 5, the overall average running time of the algorithm [26] is 406.6 μs. From results of Table 10 and Table 12, the overall average running time of the algorithm [6] is 528.9 μs. Table 14 displays time comparison for these algorithms. In short, the robustness and efficiency of our method are more superior to those of the existing algorithms [1,6,14,26,38,39,40].

Remark 3.

For general parametric curve containing the elementary functions, such as sin, cos, , , , , etc., it is very difficult to transform general parametric curve into Bézier-type curve. In contrast, our method can deal with the general parametric curve containing the elementary functions. Furthermore, the convergence of our method does not depend on the initial value. From Table 13, only the H-H-H method or the Newton’s method can not ensure convergence, while our method can ensure convergence. For multiple solutions of orthogonal projection, our approach works as follows:

- (1)

- The parameter interval of parametric curve is divided into M identical subintervals.

- (2)

- An initial value is selected randomly in each interval.

- (3)

- Using our method and using each initial parametric value, do iterations, respectively. Suppose that the iterative parametric values are , , …, , respectively.

- (4)

- Calculate the local minimum distances , where .

- (5)

- Seek the global minimum distance from , .

If we are to solve all solutions as far as possible, we urge the positive integer M to be as large as possible.

We use Example 2 to illustrate how the procedure works, where, for , three parameter values are , , , respectively. It is easy to find that the projection point with the parameter value will be the one with minimum distance, whereas other projection points without these parameter values can not be the one with minimum distance. Thus, only the orthogonal projection point with minimum distance remains after the procedure to select multiple orthogonal projection points.

Remark 4.

We have done many test examples including five test examples. In the light of these test results, our method has good convergent properties for different initial values, namely, if initial value is , then the corresponding orthogonal projection parametric value α for the orthogonal projection point of the test point p is suitable for one inequality relationship

This indicates that the inequality relationship satisfies requirements of Equation (4). This shows that convergence of our method does not depend on the initial value. Furthermore, our method is robust and efficient, which is satisfied with the previous two of ten challenges proposed by [50].

5. Conclusions

This paper discusses the problem related to a point orthogonal projection onto a parametric curve in an n-dimensional Euclidean space on the basis of the H-H-H method, combining with a fixed point theorem of Newton’s method. Firstly, we run the H-H-H method. If the current iterative parametric value from the H-H-H method is satisfied with the convergence condition of the Newton’s method, we then go to the method to increase the convergence rate. Otherwise, we continue the H-H-H method to generate the iterative parametric value with satisfaction of the local convergence condition by the Newton’s method, and we then go to the previous step. Then, we run to the end of the whole process. The presented procedures ensure the convergence of our method and it does not depend on the initial value. Analysis of convergence demonstrates that our method is second order convergent. Some numerical examples confirm that our method is more efficient and performs better than other methods, such as the algorithms [1,6,14,26,38,39,40].

In this paper, our discussion focuses the algorithms in the parametric curve . For the parametric curve being ,, piecewise curve or having singular points, we only present a preliminary idea. However, we have not completely implemented an algorithm for this kind of spline with low continuity. In the future, we will try to construct several brand new algorithms to handle the kind of spline with low continuity such that they can ensure very good robustness and efficiency. In addition, we also try to extend this idea to handle point orthogonal projecting onto implicit curves and implicit surfaces that include singularity points. Of course, the realization of these ideas is of great challenge. However, it is of great value and significance in practical engineering applications.

Author Contributions

The contribution of all the authors is the same. All of the authors team up to develop the current draft. J.L. is responsible for investigating, providing methodology, writing, reviewing and editing this work. X.L. is responsible for formal analysis, visualization, writing, reviewing and editing of this work. F.P. is responsible for software, algorithm and program implementation to this work. T.C. is responsible for validation of this work. L.W. is responsible for supervision of this work. L.H. is responsible for providing resources, writing, and the original draft of this work.

Funding

This research was funded by the National Natural Science Foundation of China Grant No. 61263034, the Feature Key Laboratory for Regular Institutions of Higher Education of Guizhou Province Grant No. 2016003, the Training Center for Network Security and Big Data Application of Guizhou Minzu University Grant No. 20161113006, the Key Laboratory of Advanced Manufacturing Technology, Ministry of Education, Guizhou University Grant No. 2018479, the National Bureau of Statistics Foundation Grant No. 2014LY011, the Key Laboratory of Pattern Recognition and Intelligent System of Construction Project of Guizhou Province Grant No. 20094002, the Information Processing and Pattern Recognition for Graduate Education Innovation Base of Guizhou Province, the Shandong Provincial Natural Science Foundation of China Grant No.ZR2016GM24, the Scientific and Technology Key Foundation of Taiyuan Institute of Technology Grant No. 2016LZ02, the Fund of National Social Science Grant No. 14XMZ001 and the Fund of the Chinese Ministry of Education Grant No. 15JZD034.

Acknowledgments

We take the opportunity to thank the anonymous reviewers for their thoughtful and meaningful comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ma, Y.L.; Hewitt, W.T. Point inversion and projection for NURBS curve and surface: Control polygon approach. Comput. Aided Geom. Des. 2003, 20, 79–99. [Google Scholar] [CrossRef]

- Piegl, L.; Tiller, W. Parametrization for surface fitting in reverse engineering. Comput.-Aided Des. 2001, 33, 593–603. [Google Scholar] [CrossRef]

- Yang, H.P.; Wang, W.P.; Sun, J.G. Control point adjustment for B-spline curve approximation. Comput.-Aided Des. 2004, 36, 639–652. [Google Scholar] [CrossRef]

- Johnson, D.E.; Cohen, E. A Framework for efficient minimum distance computations. In Proceedings of the IEEE Intemational Conference on Robotics & Automation, Leuven, Belgium, 20 May 1998. [Google Scholar]

- Pegna, J.; Wolter, F.E. Surface curve design by orthogonal projection of space curves onto free-form surfaces. J. Mech. Des. ASME Trans. 1996, 118, 45–52. [Google Scholar] [CrossRef]

- Hu, S.M.; Wallner, J. A second order algorithm for orthogonal projection onto curves and surfaces. Comput. Aided Geom. Des. 2005, 22, 251–260. [Google Scholar] [CrossRef]

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256. [Google Scholar] [CrossRef]

- Mortenson, M.E. Geometric Modeling; Wiley: New York, NY, USA, 1985. [Google Scholar]

- Limaien, A.; Trochu, F. Geometric algorithms for the intersection of curves and surfaces. Comput. Graph. 1995, 19, 391–403. [Google Scholar] [CrossRef]

- Press, W.H.; Teukolsky, S.A.; Vetterling, W.T.; Flannery, B.P. Numerical recipes. In C: The Art of Scientific Computing, 2nd ed.; Cambridge University Press: New York, NY, USA, 1992. [Google Scholar]

- Elber, G.; Kim, M.S. Geometric Constraint solver using multivariate rational spline functions. In Proceedings of the 6th ACM Symposiumon Solid Modeling and Applications, Ann Arbor, MI, USA, 4–8 June 2001; pp. 1–10. [Google Scholar]

- Patrikalakis, N.; Maekawa, T. Shape Interrogation for Computer Aided Design and Manufacturing; Springer: Berlin, Germany, 2001. [Google Scholar]

- Polak, E.; Royset, J.O. Algorithms with adaptive smoothing for finite minimax problems. J. Optim. Theory Appl. 2003, 119, 459–484. [Google Scholar] [CrossRef]

- Selimovic, I. Improved algorithms for the projection of points on NURBS curves and surfaces. Comput. Aided Geom. Des. 2006, 439–445. [Google Scholar] [CrossRef]

- Zhou, J.M.; Sherbrooke, E.C.; Patrikalakis, N. Computation of stationary points of distance functions. Eng. Comput. 1993, 9, 231–246. [Google Scholar] [CrossRef]

- Cohen, E.; Lyche, T.; Riesebfeld, R. Discrete B-splines and subdivision techniques in computer-aided geometric design and computer graphics. Comput. Graph. Image Process. 1980, 14, 87–111. [Google Scholar] [CrossRef]

- Piegl, L.; Tiller, W. The NURBS Book; Springer: New York, NY, USA, 1995. [Google Scholar]

- Park, C.-H.; Elber, G.; Kim, K.-J.; Kim, G.-Y.; Seong, J.-K. A hybrid parallel solver for systems of multivariate polynomials using CPUs and GPUs. Comput.-Aided Des. 2011, 43, 1360–1369. [Google Scholar] [CrossRef]

- Bartoň, M. Solving polynomial systems using no-root elimination blending schemes. Comput.-Aided Des. 2011, 43, 1870–1878. [Google Scholar]

- van Sosin, B.; Elber, G. Solving piecewise polynomial constraint systems with decomposition and a subdivision-based solver. Comput.-Aided Des. 2017, 90, 37–47. [Google Scholar] [CrossRef]

- Bartoň, M.; Elber, G.; Hanniel, I. Topologically guaranteed univariate solutions of underconstrained polynomial systems via no-loop and single-component tests. Comput.-Aided Des. 2011, 43, 1035–1044. [Google Scholar]

- Johnson, D.E.; Cohen, E. Distance extrema for spline models using tangent cones. In Proceedings of the 2005 Conference on Graphics Interface, Victoria, Canada, 9–11 May 2005. [Google Scholar]

- Li, X.W.; Xin, Q.; Wu, Z.N.; Zhang, M.S.; Zhang, Q. A geometric strategy for computing intersections of two spatial parametric curves. Vis. Comput. 2013, 29, 1151–1158. [Google Scholar] [CrossRef]

- Liu, X.-M.; Yang, L.; Yong, J.-H.; Gu, H.-J.; Sun, J.-G. A torus patch approximation approach for point projection on surfaces. Comput. Aided Geom. Des. 2009, 26, 593–598. [Google Scholar] [CrossRef]

- Chen, X.-D.; Xu, G.; Yong, J.-H.; Wang, G.Z.; Paul, J.-C. Computing the minimum distance between a point and a clamped B-spline surface. Graph. Models 2009, 71, 107–112. [Google Scholar] [CrossRef]

- Chen, X.-D.; Yong, J.-H.; Wang, G.Z.; Paul, J.-C.; Xu, G. Computing the minimum distance between a point and a NURBS curve. Comput.-Aided Des. 2008, 40, 1051–1054. [Google Scholar] [CrossRef]

- Oh, Y.-T.; Kim, Y.-J.; Lee, J.; Kim, Y.-S. Gershon Elber, Efficient point-projection to freeform curves and surfaces. Comput. Aided Geom. Des. 2012, 29, 242–254. [Google Scholar] [CrossRef]

- Sederberg, T.W.; Nishita, T. Curve intersection using Bézier clipping. Comput.-Aided Des. 1990, 22, 538–549. [Google Scholar] [CrossRef]

- Bartoň, M.; Jüttler, B. Computing roots of polynomials by quadratic clipping. Comput. Aided Geom. Des. 2007, 24, 125–141. [Google Scholar]

- Li, X.W.; Wu, Z.N.; Hou, L.K.; Wang, L.; Yue, C.G.; Xin, Q. A geometric orthogonal pojection strategy for computing the minimum distance between a point and a spatial parametric curve. Algorithms 2016, 9, 15. [Google Scholar] [CrossRef]

- Mørken, K.; Reimers, M. An unconditionally convergent method for computing zeros of splines and polynomials. Math. Comput. 2007, 76, 845–865. [Google Scholar] [CrossRef]

- Li, X.W.; Wang, L.; Wu, Z.N.; Hou, L.K.; Liang, J.; Li, Q.Y. Hybrid second-order iterative algorithm for orthogonal projection onto a parametric surface. Symmetry 2017, 9, 146. [Google Scholar] [CrossRef]

- Li, X.W.; Wang, L.; Wu, Z.N.; Hou, L.K.; Liang, J.; Li, Q.Y. Convergence analysis on a second order algorithm for orthogonal projection onto curves. Symmetry 2017, 9, 210. [Google Scholar] [CrossRef]

- Chen, X.-D.; Ma, W.Y.; Xu, G.; Paul, J.-C. Computing the Hausdorff distance between two B-spline curves. Comput.-Aided Des. 2010, 42, 1197–1206. [Google Scholar] [CrossRef]

- Chen, X.-D.; Chen, L.Q.; Wang, Y.G.; Xu, G.; Yong, J.-H.; Paul, J.-C. Computing the minimum distance between two Bézier curves. J. Comput. Appl. Math. 2009, 229, 294–301. [Google Scholar] [CrossRef]

- Kim, Y.J.; Oh, Y.T.; Yoon, S.H.; Kim, M.S.; Elber, G. Efficient Hausdorff distance computation for freeform geometric models in close proximity. Comput.-Aided Des. 2013, 45, 270–276. [Google Scholar] [CrossRef]

- Sundar, B.R.; Chunduru, A.; Tiwari, R.; Gupta, A.; Muthuganapathy, R. Footpoint distance as a measure of distance computation between curves and surfaces. Comput. Graph. 2014, 38, 300–309. [Google Scholar] [CrossRef]

- Hoschek, J.; Lasser, D. Fundamentals of Computer Aided Geometric Design; A. K. Peters: Natick, MA, USA, 1993. [Google Scholar]

- Hu, S.M.; Sun, J.G.; Jin, T.G.; Wang, G.Z. Computing the parameter of points on NURBS curves and surfaces via moving affine frame method. J. Softw. 2000, 11, 49–53. [Google Scholar]

- Hartmann, E. On the curvature of curves and surfaces defined by normal forms. Comput. Aided Geom. Des. 1999, 16, 355–376. [Google Scholar] [CrossRef]

- Li, X.W.; Mu, C.L.; Ma, J.W.; Wang, C. Sixteenth-order method for nonlinear Equations. Appl. Math. Comput. 2010, 215, 3754–3758. [Google Scholar] [CrossRef]

- Liang, J.; Li, X.W.; Wu, Z.N.; Zhang, M.S.; Wang, L.; Pan, F. Fifth-order iterative method for solving multiple roots of the highest multiplicity of nonlinear equation. Algorithms 2015, 8, 656–668. [Google Scholar] [CrossRef]

- Melmant, A. Geometry and Convergence of Euler’s and Halley’s Methods. SIAM Rev. 1997, 39, 728–735. [Google Scholar] [CrossRef]

- Traub, J.F. A Class of Globally Convergent Iteration Functions for the Solution of Polynomial Equations. Math. Comput. 1966, 20, 113–138. [Google Scholar] [CrossRef]

- Śmietański, M.J. A perturbed version of an inexact generalized Newton method for solving nonsmooth equations. Numer. Algorithms 2013, 63, 89–106. [Google Scholar] [CrossRef]

- Chen, F.; Wang, W.-P.; Liu, Y. Computing singular points of plane rational curves. J. Symb. Comput. 2008, 43, 92–117. [Google Scholar] [CrossRef]

- Jia, X.-H.; Goldman, R. Using Smith normal forms and μ-bases to compute all the singularities of rational planar curves. Comput. Aided Geom. Des. 2012, 29, 296–314. [Google Scholar] [CrossRef]

- Shi, X.-R.; Jia, X.-H.; Goldman, R. Using a bihomogeneous resultant to find the singularities of rational space curves. J. Symb. Comput. 2013, 53, 1–25. [Google Scholar] [CrossRef]

- Burden, R.L.; Faires, J.D. Numerical Analysis, 9th ed.; Brooks/Cole Cengage Learning: Boston, MA, USA, 2011. [Google Scholar]

- Piegl, L.A. Ten challenges in computer-aided design. Comput.-Aided Des. 2005, 37, 461–470. [Google Scholar] [CrossRef]

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).