Abstract

We use Newton’s method to solve previously unsolved problems, expanding the applicability of the method. To achieve this, we use the idea of restricted domains, which allows for tighter Lipschitz constants than those previously used and, in turn, leads to a tighter convergence analysis. The new developments are obtained using special cases of functions that were used in earlier works. Numerical examples illustrate the superiority of the new results.

1. Introduction

Let $F:\Omega\subseteq X\to Y$ be a differentiable operator in the sense of Fréchet, where $X$ and $Y$ are Banach spaces and $\Omega$ is a nonempty and open set. A plethora of problems from many diverse disciplines are formulated using modeling which looks like

$$F(x)=0. \tag{1}$$

Hence, the problem of locating a solution $x^{*}$ of Equation (1) is very important. Since a closed-form solution cannot easily be obtained in general, researchers usually develop iterative algorithms that approximate $x^{*}$ under some conditions. The most widely used iterative method is Newton’s method, defined for an initial point $x_0\in\Omega$ by

$$x_{n+1}=x_n-[F'(x_n)]^{-1}F(x_n),\quad n=0,1,2,\ldots \tag{2}$$

Numerous convergence results appear in the literature, based on which $\lim_{n\to\infty}x_n=x^{*}$.
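As a minimal illustration of the iteration in Equation (2), the following sketch applies Newton’s method to a scalar equation. The function name `newton`, the stopping tolerance, and the test function $f(x)=x^{2}-2$ are assumptions chosen for illustration and do not come from the article.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Scalar Newton iteration x_{n+1} = x_n - f(x_n) / f'(x_n).

    Returns the approximate root and the number of iterations performed.
    """
    x = x0
    for n in range(1, max_iter + 1):
        step = f(x) / df(x)   # Newton correction at the current iterate
        x -= step
        if abs(step) < tol:   # stop once the correction is negligible
            return x, n
    raise RuntimeError("Newton's method did not converge")


# Illustrative use: approximate the positive root of x**2 - 2 = 0.
root, iterations = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, 1.5)
print(root, iterations)
```

In a Banach space setting the division is replaced by solving a linear equation with the Fréchet derivative, but the structure of the loop is the same.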

In this article, we introduce new semilocal convergence results based on our idea of a restricted convergence region, through which we locate a more precise set containing the Newton iterates. This way, the majorizing constants and scalar functions are tighter, leading to a finer convergence analysis.

To provide the semilocal convergence analysis, Kantorovich used the condition [1]

Let function be non-decreasing and continuous. A weaker condition is [2,3,4,5,6]

We shall find a tighter domain than , where Equation (4) is satisfied. This way the new convergence analysis shall be at least as precise.

2. Semilocal Convergence

Theorem 1

(Kantorovich’s theorem [1]). Let and be Banach spaces. Let also be a twice continuously differentiable operator in the sense of Fréchet where Ω is a non-empty open and convex region. Assume:

- and there exists with ,

- ,

- , ,

- ,

- , where.

Then, Newton’s sequence defined in Equation (2) converges to a solution of the equation . Moreover, , , for all Furthermore, the solution is unique in , where , if , and in , if , for some . Furthermore, the following error bounds hold

and

where

and

The Kantorovich theorem can be improved as follows:

Theorem 2.

Let and be Banach spaces. Let be a twice continuously differentiable operator in the sense of Fréchet. Assume:

- and there exists with ,

- ,

- , ,

- , ,

- , where ,

- , where .

Then, sequence generated by Method (2) converges to . Moreover, , , . Furthermore, the solution is unique in , where

and in

for some . Furthermore, the following error bounds hold

and

where

and

Proof.

The iterates stay in by the proof of the Kantorovich theorem, which is a more precise location for the solution than , since . □

Remark 1.

If , Theorem 2 reduces to the Kantorovich theorem (Theorem 1), where k is the Lipschitz constant for used in [1]. We get and so holds in general.

Notice that

so the Newton–Kantorovich sufficient convergence condition has been improved with no additional computational effort, because the computation of k requires the computation of or as special cases.

Moreover, notice that if provided that and of Theorem 2 holds on with replacing , then Theorem 2 can be extended even further with , , replacing and , respectively, since , so .

Concerning majorizing sequences, define

where

According to the proofs, and are majorizing sequences tighter than and , respectively, and as such, they converge under the same convergence criteria. Notice also that and .
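To make the role of a majorizing sequence concrete, the sketch below uses the classical Kantorovich majorant $p(t)=\tfrac{k}{2}t^{2}-t/\beta+\eta/\beta$, whose Newton iterates started at $t_0=0$ bound the steps of Newton’s method. The symbols $k$, $\beta$, $\eta$ follow the usual textbook convention ($\|F''\|\le k$, $\|F'(x_0)^{-1}\|\le\beta$, $\|F'(x_0)^{-1}F(x_0)\|\le\eta$) and are assumed here, as are the test function $f(x)=x^{3}-2$, the domain $[1,2]$, and the starting point $x_0=1.3$. The tighter sequences of this article are not reproduced.

```python
def majorizing_demo(n_steps=4):
    """Compare Newton steps for f(x) = x**3 - 2 with the steps of the
    classical Kantorovich majorizing sequence (illustrative data only)."""
    f = lambda x: x**3 - 2.0
    df = lambda x: 3.0 * x**2
    x = 1.3                      # assumed starting point x_0
    k = 12.0                     # bound on |f''(x)| = 6x over the assumed domain [1, 2]
    beta = 1.0 / abs(df(x))      # |f'(x_0)^{-1}|
    eta = abs(f(x) / df(x))      # |f'(x_0)^{-1} f(x_0)|
    h = k * beta * eta           # Kantorovich quantity; the classical criterion is h <= 1/2

    # Classical majorant polynomial and its derivative.
    p = lambda t: 0.5 * k * t**2 - t / beta + eta / beta
    dp = lambda t: k * t - 1.0 / beta

    t = 0.0
    rows = []
    for _ in range(n_steps):
        x_new = x - f(x) / df(x)     # Newton step for f
        t_new = t - p(t) / dp(t)     # Newton step for the majorant
        rows.append((abs(x_new - x), t_new - t))
        x, t = x_new, t_new
    return h, rows


h, rows = majorizing_demo()
print(f"h = {h:.4f}")
for n, (dx, dt) in enumerate(rows):
    print(f"n={n}: |x_(n+1) - x_n| = {dx:.3e}, t_(n+1) - t_n = {dt:.3e}")
```

Each step of the iterates is bounded by the corresponding step of the scalar sequence, which is what makes such sequences useful for a priori error estimates. A tighter majorizing sequence gives tighter bounds under the same hypotheses.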

Example 1.

Let , , , and .

Case 1.. Then, we have that

and

so

We see that Kantorovich’s result [1] (see Theorem 1) cannot be applied, since

Case 2.. Then, we get

where

so by Theorem 2, Newton’s method converges for

since

Case 3. provided that . In this case, we obtain from

so

Therefore, we must have that

and

which are true for since , so . Hence, we have extended the convergence interval of the previous cases.

The sufficient convergence criterion for the modified Newton’s method

is the same as the Kantorovich condition . In [7], however, we proved that this condition can be replaced by , which is weaker if . In the example at hand, this condition is satisfied on the same interval as in the previous case. Therefore, by restricting the convergence domain, sufficient convergence criteria can be obtained for Newton’s method that are identical to the ones required for the convergence of the modified Newton’s method. The same advantages are obtained if the preceding Lipschitz constants are replaced by the functions that follow.
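The difference between the two iterations can be sketched as follows: the modified Newton’s method keeps the derivative fixed at the initial point, $x_{n+1}=x_n-F'(x_0)^{-1}F(x_n)$, whereas Newton’s method re-evaluates it at every step. The scalar test function $f(x)=x^{3}-2$, the starting point $1.3$, and the helper name `iterate` are illustrative assumptions, not data from the article.

```python
def iterate(f, df, x0, frozen, tol=1e-12, max_iter=200):
    """Run Newton's method (frozen=False) or the modified Newton's method
    with the derivative frozen at x0 (frozen=True)."""
    x = x0
    d0 = df(x0)                          # derivative at the initial point
    for n in range(1, max_iter + 1):
        slope = d0 if frozen else df(x)  # frozen or updated derivative
        step = f(x) / slope
        x -= step
        if abs(step) < tol:
            return x, n
    raise RuntimeError("no convergence within max_iter iterations")


f = lambda x: x**3 - 2.0
df = lambda x: 3.0 * x**2
root_newton, n_newton = iterate(f, df, 1.3, frozen=False)
root_modified, n_modified = iterate(f, df, 1.3, frozen=True)
print(n_newton, n_modified)
```

In this sketch the modified method needs more iterations because it converges only linearly, but each iteration is cheaper since the derivative is evaluated once.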

It is worth noting that the center-Lipschitz condition (not introduced in earlier studies) makes it possible to restrict the domain from to (or ), where the iterates actually lie and where

can be used instead of the less tight estimate

used in Theorem 1 and in other related earlier studies using only condition in Theorem 1.

Next, the condition

is replaced by

Next, we show how to improve these results by relaxing Equation (5) using even weaker conditions

where is a non-decreasing continuous function satisfying . Suppose that equation has at least one positive solution. Denote by the smallest such solution.

If function v is strictly increasing, then we can choose .

Notice that Equation (5) implies Equations (6) and (7) or Equations (6) and (8) but not necessarily vice versa. Moreover, we have that

and

Next, we show that or can replace in the results obtained in Reference [4]. Then, in view of Equations (9)–(11), the new results are finer and are provided without additional cost, since computing requires computing the functions v, and as special cases. Notice that function v is needed to determine (i.e., and ) and that and .

3. Bratu’s Equation

Bratu’s equation is defined by the following nonlinear integral equation

where , and the kernel T is the Green’s function

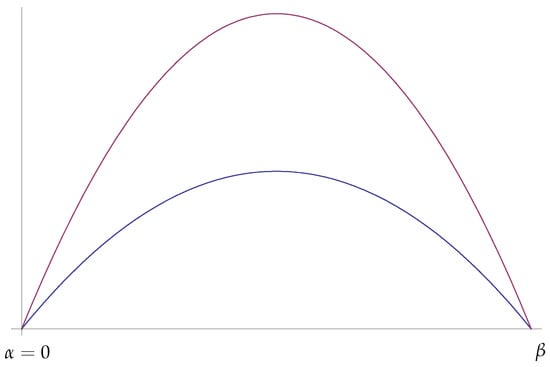

Let and . It follows from [8] that Equation (12) has two solutions such that , provided that for each , where . Figure 1 shows a sketch of both solutions.

Figure 1.

The two real solutions of Bratu’s Equation (12).

Bratu’s equation appears in connection to many problems: combustion, heat transfer, chemical reactions, and nanotechnology [9].

Using Newton’s method, we approximate the solutions of Bratu’s equation. Let be defined by

Therefore, it is clear that is not bounded in a general domain . However, it is hard to find a region containing a solution of and such that is bounded there.

Our aim is to solve using Newton’s method

Then, we solve

Using m nodes in the Gauss-Legendre quadrature formula

where the nodes and the weights are known. We can write

or

where

We shall relate sequence with its majorizing sequence

Clearly, Theorems 1 and 2 hold if operator F is defined by Equation (16) and Newton’s method in the form of Equation (14) is used.
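The discretization described above can be sketched in a few lines. The sketch assumes that Equation (12) has the form $x(s)=\lambda\int_0^1 T(s,t)\,e^{x(t)}\,dt$ with the standard Green’s function $T(s,t)=(1-s)t$ for $t\le s$ and $T(s,t)=s(1-t)$ for $s\le t$. The values $\lambda=1$ and $m=8$, the zero starting vector, and all function names are illustrative choices rather than the article’s. Only the Python standard library is used.

```python
import math


def gauss_legendre_01(m):
    """Nodes and weights of the m-point Gauss-Legendre rule mapped to [0, 1]."""
    nodes, weights = [], []
    for i in range(1, m + 1):
        z = math.cos(math.pi * (i - 0.25) / (m + 0.5))  # initial guess for a root of P_m
        for _ in range(100):
            p0, p1 = 1.0, z                              # P_0(z), P_1(z)
            for k in range(2, m + 1):                    # three-term recurrence up to P_m
                p0, p1 = p1, ((2 * k - 1) * z * p1 - (k - 1) * p0) / k
            dp = m * (z * p1 - p0) / (z * z - 1.0)       # P_m'(z)
            dz = p1 / dp
            z -= dz
            if abs(dz) < 1e-15:
                break
        nodes.append(0.5 * (z + 1.0))
        weights.append(1.0 / ((1.0 - z * z) * dp * dp))
    return nodes, weights


def green(s, t):
    """Assumed Green's function of -x'' on [0, 1] with zero boundary values."""
    return (1.0 - s) * t if t <= s else s * (1.0 - t)


def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            factor = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= factor * aug[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def bratu_newton(lam=1.0, m=8, tol=1e-12, max_iter=20):
    """Newton's method for the system x_i = lam * sum_j w_j T(t_i, t_j) exp(x_j)."""
    t, w = gauss_legendre_01(m)
    a = [[w[j] * green(t[i], t[j]) for j in range(m)] for i in range(m)]
    x = [0.0] * m                                        # assumed starting vector
    for it in range(max_iter):
        e = [math.exp(v) for v in x]
        f = [x[i] - lam * sum(a[i][j] * e[j] for j in range(m)) for i in range(m)]
        if max(abs(v) for v in f) < tol:
            return t, x, it
        jac = [[(1.0 if i == j else 0.0) - lam * a[i][j] * e[j] for j in range(m)]
               for i in range(m)]
        step = solve_linear(jac, [-v for v in f])
        x = [x[i] + step[i] for i in range(m)]
    raise RuntimeError("Newton's method did not converge")


nodes, solution, iterations = bratu_newton()
print(iterations, max(solution))
```

Starting from the zero vector, the iteration is expected to approach the smaller of the two solutions. Because the kernel has a kink on the diagonal, the quadrature is only moderately accurate for small m.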

We shall verify the hypotheses of these theorems, so we can solve our problem. To achieve this, we set

and

where and . Moreover, we have

and , where we used the infinity norm. Notice that is not bounded, since is increasing as a function of . Hence, Theorem 1 or Theorem 2 cannot be used.

Remark 2.

Notice that Kantorovich’s Theorem 1 cannot be applied, although is Lipschitz continuous.

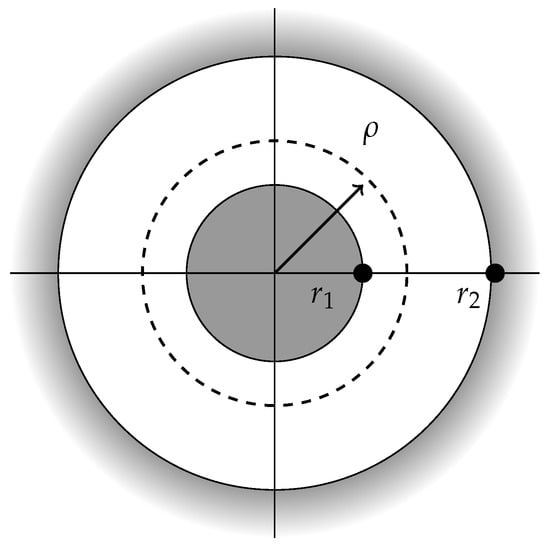

We look for a bound for in such a domain [6]. If solves Equation (16), we have , where and () are roots of the scalar equation (see Figure 2). We choose such that with .

Figure 2.


Example 2.

Let us consider Bratu’s Equation (12) with , and to obtain and By choosing , , , we see that with

so ,

The conditions of Theorem 2 hold.

Consequently, we obtain the solution after three iterations (see Table 1).

Table 1.

The solution of Equation (12) for , and .

Concerning Theorem 1, we define

as an auxiliary function to construct the majorizing sequence . We also use the sequence

Note then that , , and . We also obtain the a priori error estimates shown in Table 2, which shows that the error bounds are improved under our new approach.

Table 2.

Absolute error and a priori error estimates.

In this section, we consider the following condition as an alternative to Equation (4):

since is non-decreasing. Then, we look for a function

The solution of Equation (21) is given by

Define also

We suppose in what follows that

Otherwise, i.e., if , then the following results hold with replacing .

Notice that is the function obtained by Kantorovich if and , .

For Bratu’s equation, we have and function (22) is reduced to

with and defined in Equations (17) and (18), respectively. Next, we need the auxiliary results for function .

Lemma 1.

Let be the function defined in Equation (23) and

Then:

- is the unique minimum of in .

- is non-increasing in .

- If , the equation has at least one root in . If is the smallest positive root of , we have .

Next, we define the scalar sequence

Lemma 2.

We need an auxiliary result relating sequence to .

Lemma 3.

Proof.

Observe that

We prove the following four items for all :

- There exists such that ,

- ,

- ,

- .

Firstly, from

exists and

Thirdly,

Fourthly,

Then, if – hold for all , we show in an analogous way that these items hold for too. □

The following conditions shall be used:

- and there exists such that ,

- ,

- for ,

- , where is the smallest root of the equation in .

Notice that is increasing and in , since , so that is strictly increasing in . Hence, with .

Theorem 3.

Proof.

Sequence converges, since is its majorizing sequence. Then, if , , for all . Moreover, the sequence is bounded. By the continuity of F, we have , since and .

To show the uniqueness of , let be another solution of Equation (16) in . Notice that . From

it follows that , provided that the operator is invertible. To prove that Q is invertible, we prove equivalently that there exists the operator , where . Indeed, as

so exists. □

Remark 3.

We have by Equation (22) that , where

Remark 4.

- (a) If , the results in this study coincide with the ones in [4]. Moreover, if the inequality in Equations (9)–(11) is strict, then the new results have the following advantages: weaker sufficient convergence conditions, tighter error estimates on , and at least as precise information on the location of the solution .

- (b) These results can be improved even further if we simply use the condition and majorizing function (as in with , ); see also the numerical section.

Remark 5.

- (a) It is worth noting that there are alternative approaches to root-finding other than Newton’s method [10,11], where the latter one has cubic order of convergence, whereas Newton’s is only quadratic.

- (b) If the solution is sufficiently smooth, then one can use generalized Gauss quadrature rules for splines. This way, instead of projecting f into a space of higher-degree polynomials as is done in our article, one can project it to a spline space (see [12,13,14]). These quadratures in general do not affect the convergence order, but they do make the computation more efficient, since fewer quadrature points are required to reach a certain error tolerance.

4. Specialized Bratu’s Equation

Consider the equation

We transform Equation (27) into a finite dimensional problem, as we have done above, with , so that Equation (27) is equivalent to Equation (16) with , , . For this case, we have

where , and

where and .

In Section 2, we have seen that , so that is not bounded. Then, any solution of the particular system given by Equation (16) should satisfy . We can take the region , with and and , where is bounded and contains the solution (see Figure 2). The convergence of Newton’s method to follows from Kantorovich’s Theorem 1.

In Theorem 3, set and (according to Remark 3), we have

so, we can choose and

Then, function is defined by

Then, we define

so

Next, we find the solutions and of the equations and to be, respectively:

and

We see that but . Then, the results in [4] cannot assure convergence to , but our results guarantee convergence.

Moreover, we have that

5. Conclusions

In this article, we first introduce new Kantorovich-type results for the semilocal convergence of Newton’s method for Banach space-valued operators using our idea of restricted convergence regions. Hence, we expand the applicability of Newton’s method. Then, we apply our results to Bratu’s equation.

Author Contributions

These authors contributed equally to this work.

Funding

This research received no external funding.

Acknowledgments

We would like to express our gratitude to the anonymous reviewers for their help with the publication of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kantorovich, L.V.; Akilov, G.P. Functional Analysis; Pergamon Press: Oxford, UK, 1982.

- Argyros, I.K.; Magreñán, Á.A. Iterative Methods and Their Dynamics with Applications; CRC Press: New York, NY, USA, 2017.

- Argyros, I.K.; Magreñán, Á.A. A Contemporary Study of Iterative Methods; Elsevier: Amsterdam, The Netherlands, 2018.

- Ezquerro, J.A.; González, D.; Hernández, M.A. A variant of the Newton-Kantorovich theorem for nonlinear integral equations of mixed Hammerstein type. Appl. Math. Comput. 2012, 218, 9536–9546.

- Ezquerro, J.A.; Hernández, M.A. On an application of Newton’s method to nonlinear operators with ω-conditioned second derivative. BIT Numer. Math. 2002, 42, 519–530.

- Ezquerro, J.A.; Hernández, M.A. Halley’s method for operators with unbounded second derivative. Appl. Numer. Math. 2007, 57, 354–360.

- Argyros, I.K.; Hilout, S. Weaker conditions for the convergence of Newton’s method. J. Complex. 2012, 28, 364–387.

- Davis, H.T. Introduction to Nonlinear Differential and Integral Equations; Dover Publications: New York, NY, USA, 1962.

- Caglar, H.; Caglar, N.; Özer, M.; Valaristos, A.; Miliou, A.N.; Anagnostopoulos, A.N. Dynamics of the solution of Bratu’s equation. Nonlinear Anal. 2007, 71, 672–678.

- Barton, M.; Jüttler, T. Computing roots of polynomials by quadratic clipping. Comput. Aided Geom. Des. 2007, 24, 125–141.

- Sederberg, T.W.; Nishita, T. Curve intersection using Bézier clipping. Comput.-Aided Des. 1990, 22, 538–549.

- Barton, M.; Ait-Haddou, R.; Calo, V.M. Gaussian quadrature rules for C1 quintic splines with uniform knot vectors. J. Comput. Appl. Math. 2017, 322, 57–70.

- Hiemstra, R.R.; Calabrò, F.; Schillinger, D.; Hughes, T.J.R. Optimal and reduced quadrature rules for tensor product and hierarchically refined splines in isogeometric analysis. Comput. Methods Appl. Mech. Eng. 2017, 316, 966–1004.

- Johannessen, K.A. Optimal quadrature for univariate and tensor product splines. Comput. Methods Appl. Mech. Eng. 2017, 316, 84–99.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).