1. Introduction

Sensory experience (seeing, hearing, touching, smelling and tasting) is intricately connected to emotion. Sensory organs such as the eye receive data (e.g., electromagnetic energy) and turn them into information (e.g., color). One critical aspect of this transformation is that the information the sensory organs produce is mainly emotional: it guides the organism toward avoidance or approach actions [1]. When a menacing predator is nearby, the system sends a negative signal; when prey is detected, it sends a positive signal. In this manner, our sensory experience is intertwined with emotion.

Sensory engagement is the very foundation of our experience. Among the many different sensory experiences, haptic perception is immediate, direct and private [2,3]. Skin and muscle mechanoreceptors and thermoreceptors are distributed throughout the body, enabling the perception of texture, force, motion, vibration and temperature [4]. Owing to this unique nature of haptic perception, touch-based interfaces have flourished over the last 20 years as a means of enhancing user engagement in museums, exhibitions and heritage sites [5,6,7].

Despite the growing popularity of tactile interfaces, their utility is not well understood [8]. Adding other sensory experiences, such as smell and touch, to visual experience does not always produce the intended effect [9]. To what extent does multisensory experience (tactile + visual) elevate or undermine emotion? Which aspects of emotion (e.g., valence, arousal or specific emotions) are influenced most by multisensory integration?

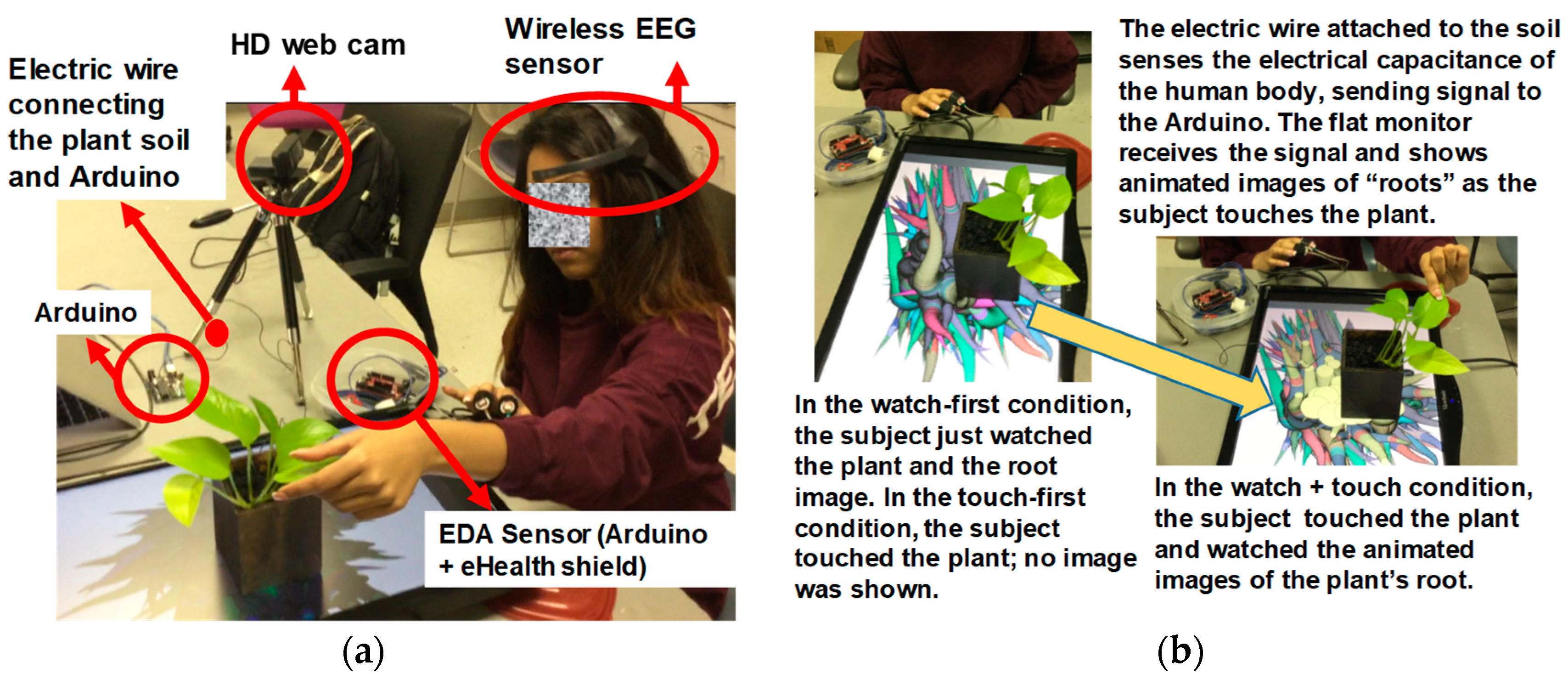

To address these questions, we developed a multisensory apparatus (the Interactive Plant) that converts tactile information into visual information (Figure 1). As a person touches the plant, our system senses changes in the electrical capacitance of her body and converts her tactile sense into animated images, allowing her to “see” her touch in real time.

Background and Related Work

The recent surge in touch-based interface design stems from the development of capacitive sensing technology, that is, sensor technology that measures changes in capacitance. Capacitance refers to the ability to store electric charge in an electric field. The capacitance between two or more conductors changes with touch, proximity or deformation of the conductive materials. Touchscreens and touchpads on mobile phones, laptops and tablets make use of capacitive sensing technology [10].
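To make the principle concrete: one common way for a microcontroller to read capacitance (the exact circuit used in this study is not described here) is to time how long a pin takes to charge through a large resistor. For an RC circuit charging from 0 V toward V_cc, the time to reach a threshold V_th is t = -RC ln(1 - V_th/V_cc), so the extra capacitance contributed by a touching hand lengthens the charge time. The resistor value, the capacitances and the threshold ratio below are illustrative assumptions, not measurements from this study.

```python
import math

def charge_time(resistance_ohm: float, capacitance_f: float, threshold_ratio: float) -> float:
    """Seconds for an RC circuit charging from 0 V to reach threshold_ratio * Vcc.

    Solves V(t) = Vcc * (1 - exp(-t / RC)) for t.
    """
    if not 0.0 < threshold_ratio < 1.0:
        raise ValueError("threshold_ratio must be strictly between 0 and 1")
    return -resistance_ohm * capacitance_f * math.log(1.0 - threshold_ratio)

# Illustrative (assumed) values: a 1 MOhm resistor, a 10 pF electrode on its own,
# and roughly 100 pF added when a hand touches the electrode.
R = 1e6
C_BASELINE = 10e-12
C_TOUCH = C_BASELINE + 100e-12

t_idle = charge_time(R, C_BASELINE, 0.5)
t_touch = charge_time(R, C_TOUCH, 0.5)
print(f"idle:  {t_idle * 1e6:.2f} us")
print(f"touch: {t_touch * 1e6:.2f} us")
```

Because the charge time scales linearly with capacitance, a touch that multiplies the capacitance by about 11 also multiplies the measured time by about 11, which is what makes a simple timing loop a usable touch sensor.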

Many objects, such as aluminum foil, copper wire and the human body, conduct electrical current. These conductive objects can therefore be given sensing capability. Physiological sensors such as electromyography, electrocardiogram and galvanic skin response sensors all track changes in the electrical properties of muscles and skin [11]. Because a suitable conductive coating can make nonconductive objects conductive, sensing capability can be added to everyday objects, including couches [12], bathtubs [13], beds [14], chairs [15], tables [16] and rooms for interactive art installations [17].

Recently, capacitive sensing technology has been extended to human-plant interactions [17]. A number of interactive plants have been developed for urban festivals, parks and museum installations [18,19]. For example, Fasnacht and colleagues introduced a large-scale installation of an interactive plant system in which colored light emanates from the bottom of the plants as pedestrians touch them. Interactive plant systems have also been developed to support people’s emotional well-being as well as the well-being of plants. For example, EmotiPlant displays the plant’s health state (e.g., the plant’s stress level due to overwatering) when touched [20]; the system helps older adults interact with plants. Park and colleagues [21] applied an interactive plant system to computer game design, using interactive plants as an input interface for game control. Their system detects different kinds of touch, such as tapping, patting and gentle pinching, and sends the corresponding signals to computer games. The researchers demonstrated that their interactive plant system is effective for controlling anxiety.

Despite this growing popularity of touch-based interfaces in general and capacitive sensing technology in particular, few systematic studies have examined the emotional impact of touch-based multimodal interfaces.

The pioneering work by Obrist and colleagues [22,23] has shown that, with ultrasonic tactile stimulation technology, people are remarkably consistent in interpreting and generating emotions. The researchers demonstrated that particular haptic patterns (e.g., circular tactile motions on the palm created by ultrasonic “mid-air” non-touch stimulation) are effective in enhancing arousal for museum visitors and curators experiencing art [6]. In another study, Sakr et al. [24] showed that multimodal digital augmentation (using an iPad) enhances students’ emotional engagement in history learning (World War II experiences). For example, in one digital stimulus titled “Guns on the Common,” Sakr et al. showed students an image of WWII soldiers training to use guns. While viewing the picture, students also engaged in bodily interactions (pointing and gesturing), which resulted in considerable excitement, suggesting that multimodal mobile augmentation using an iPad is effective in enhancing students’ emotional engagement in history learning.

What remains unclear is the emotional impact of touch-based interfaces in a multisensory and multimodal environment, especially when interacting with everyday objects such as plants. Unimodal touch-based interfaces are known to be effective, and multimodal interaction using olfaction [25] and gestures [24] enhances emotional engagement. But what about touch-infused multisensory experience? Remarkably, this question has rarely been investigated. It is implicitly assumed that multimodal experience is a simple sum of unimodal experiences. As Obrist and colleagues cogently argue, this assumption is misguided: adding touch to a regular audio-visual presentation at times causes confusion and exhaustion (e.g., Iron Man 3) [9]. This example underscores the significance of studying multisensory and multimodal experience, and interactive plant systems in particular.

With this observation in mind, we focused on the emotional experience of the Interactive Plant and investigated, using various behavioral and physiological measures, to what extent multisensory experience (tactile + visual) would enhance or undermine emotion. In particular, we investigated how people’s emotional experiences change when their tactile sense (touching the plant) is augmented with visual sense (“seeing” their touch).

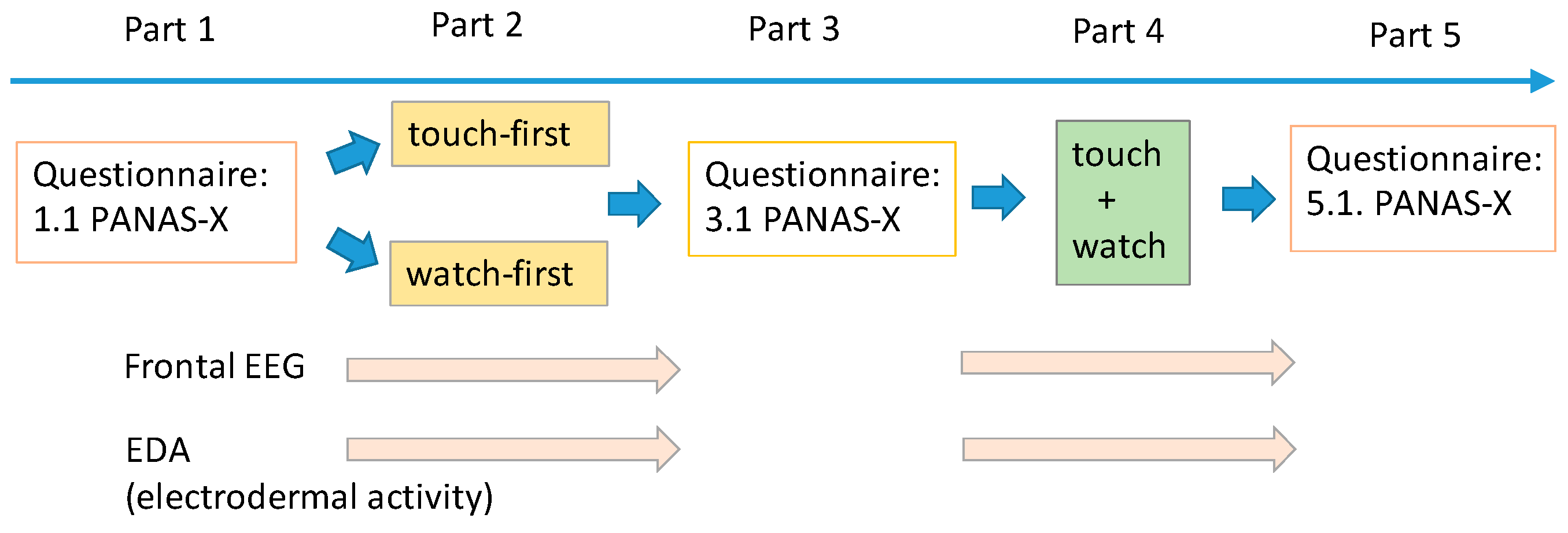

The experiment consisted of five parts (Figure 2). In Parts 1, 3 and 5, participants indicated their emotional states using a questionnaire (the Positive and Negative Affect Schedule, Expanded Form; PANAS-X) [26]. In Part 2, we divided participants into two groups (touch-first and watch-first) and let them freely touch or watch the plant for 1 min (unimodal condition). Later, in Part 4, participants interacted with the same plant for 1 min in a multimodal fashion (multimodal condition): as participants caressed or petted the plant, an Arduino circuit sensed the qualities of touch and sent the information to our custom-made visualization program, which drew animated roots on the screen in real time, creating a virtually visualized haptic sense. To scrutinize participants’ emotional reactions, we measured their physiological responses (EDA, electrodermal activity; EEG, electroencephalogram) in Parts 2 and 4.
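The sensing and visualization code itself is not described here. Purely as a hypothetical sketch, the step from raw capacitive readings to an animation parameter could track a slowly adapting baseline, treat readings well above that baseline as touch, and scale the excess into a growth rate for the animated roots. The class name, the smoothing factor, the threshold and the scaling constant below are all our own assumptions.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class TouchMapper:
    """Hypothetical mapper from raw capacitive readings to a root-growth rate in [0, 1]."""
    alpha: float = 0.05        # baseline smoothing factor (assumed)
    threshold: float = 50.0    # excess over baseline that counts as touch (assumed)
    max_excess: float = 500.0  # excess that maps to the full growth rate (assumed)
    baseline: float = 0.0
    initialized: bool = False

    def update(self, reading: float) -> float:
        """Process one sensor reading and return the growth rate for this frame."""
        if not self.initialized:
            # Use the first reading as the untouched baseline.
            self.baseline = reading
            self.initialized = True
        excess = reading - self.baseline
        if excess < self.threshold:
            # No touch: let the baseline follow slow drift with an exponential moving average.
            self.baseline += self.alpha * (reading - self.baseline)
            return 0.0
        # Touch: keep the baseline fixed and scale the excess, capped at 1.
        return min(excess / self.max_excess, 1.0)

def growth_rates(readings: List[float], mapper: TouchMapper) -> List[float]:
    """Map a sequence of readings to per-frame growth rates."""
    return [mapper.update(r) for r in readings]

# Hypothetical raw readings: idle, a light touch, a firm touch, then release.
readings = [100, 102, 101, 350, 620, 640, 103, 100]
print(growth_rates(readings, TouchMapper()))
```

Freezing the baseline during touch prevents a long caress from being slowly absorbed into the baseline and fading out of the animation; whether the actual system handled drift this way is not stated.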

3. Results

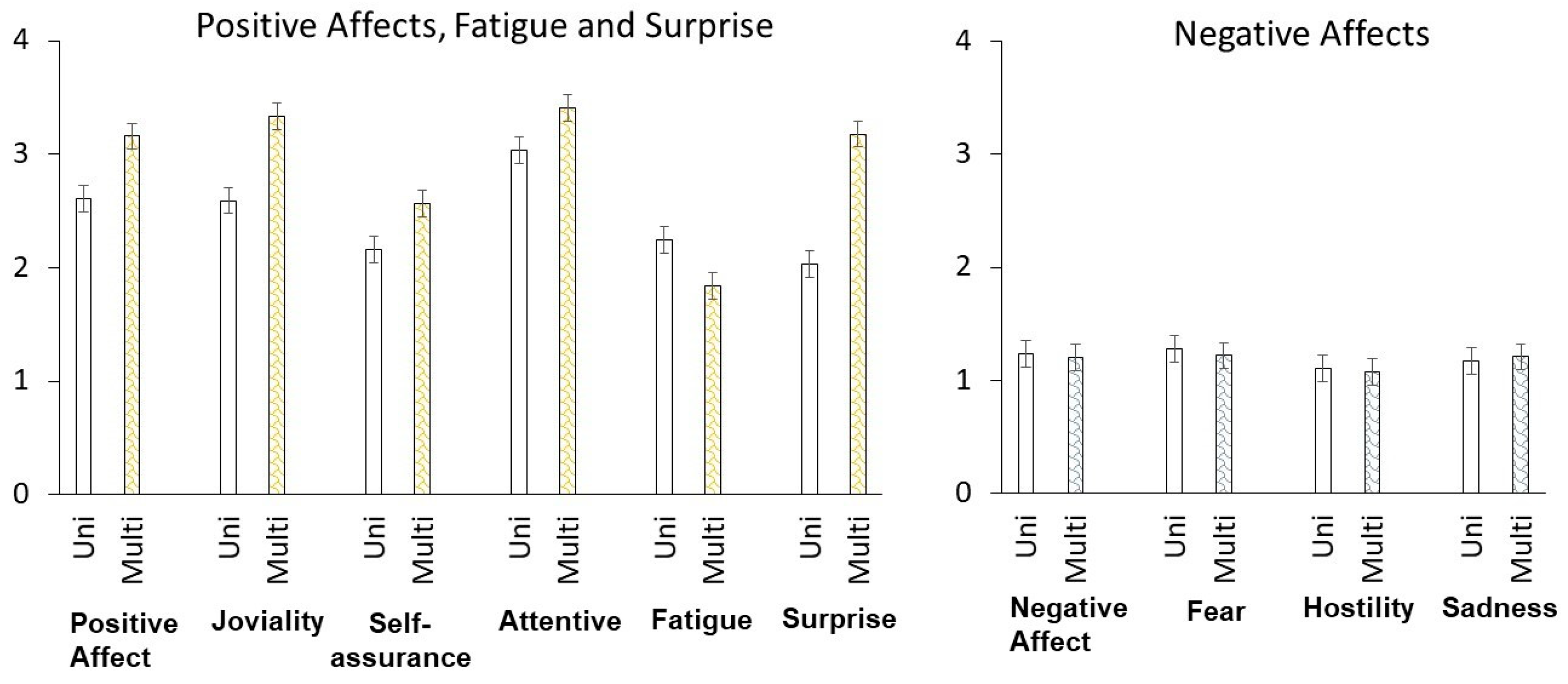

We first report the impact of the multisensory experience on emotion as measured by participants’ self-reports (PANAS-X), followed by EDA and EEG. Overall, we found that multimodal interaction (visual + touch) significantly enhanced participants’ positive affect but had little influence on negative affect.

Questionnaire (PANAS-X). Compared with the unimodal interaction, the multimodal interaction (touch + vision) clearly boosted participants’ positive affect, joviality, self-assurance and attentiveness: positive affect, F(1, 37) = 15.42, MSE = 0.38, p < 0.001, ηp² = 0.29; joviality, F(1, 37) = 20.41, MSE = 0.19, p < 0.001, ηp² = 0.36; self-assurance, F(1, 37) = 23.43, MSE = 0.15, p < 0.001, ηp² = 0.39; attentiveness, F(1, 37) = 4.92, MSE = 0.33, p < 0.05, ηp² = 0.12. The multimodal interaction did not influence negative affect, fear, hostility or sadness, F’s < 1.0. It did, however, result in a significant increase in surprise, F(1, 37) = 32.48, MSE = 0.59, p < 0.001, ηp² = 0.47, and a reduction in fatigue, F(1, 37) = 6.64, MSE = 0.30, p < 0.05, ηp² = 0.15. For all measures, we found no evidence of an interaction between modality (unimodal vs. multimodal) and task (touch-first vs. watch-first); similarly, we found no interaction between modality and gender; for all measures, F’s < 2.47, p’s > 0.12 (Figure 3).
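Readers who want to check the reported effect sizes can recover partial eta squared from an F statistic and its degrees of freedom: ηp² = (F · df_effect) / (F · df_effect + df_error). The helper below is our own illustration, not part of the authors’ analysis pipeline.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)

# Main effects of modality on the PANAS-X scales reported above, each F(1, 37).
panas = {
    "positive affect": 15.42,
    "joviality": 20.41,
    "self-assurance": 23.43,
    "attentiveness": 4.92,
    "surprise": 32.48,
    "fatigue": 6.64,
}
for scale, f in panas.items():
    print(f"{scale:16s} ηp² = {partial_eta_squared(f, 1, 37):.2f}")
```

Rounded to two decimals, these reproduce the reported values (0.29, 0.36, 0.39, 0.12, 0.47 and 0.15).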

These results suggest that the multimodal interaction enhanced positive affect but not negative affect. The emotional impact of modality was substantial in both male and female participants and was present regardless of the initial task (touch-first or watch-first).

EDA (electrodermal activity). As suggested in References [32,33], we report the amplitude and frequency of skin conductance responses (SCRs). To identify individual SCRs, we applied the decomposition algorithm developed by Benedek and Kaernbach [33], which is implemented in a Matlab toolbox (Ledalab; http://www.ledalab.de/). To preprocess the EDA data, we first applied Ledalab’s adaptive smoothing algorithm and then discrete deconvolution, which identified the time-stamped occurrences and amplitudes of SCRs for each participant. For each participant, we then calculated the SCR frequency and the total and mean SCR amplitudes: SCR frequency was the number of identified SCRs, total SCR amplitude was the sum of their amplitudes, and mean SCR amplitude was the total SCR amplitude divided by the SCR frequency. The data from 4 participants were lost due to a data acquisition error (their EDA data were not recorded); thus, the data from 37 participants were analyzed.
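Once Ledalab has returned the amplitudes of the detected SCRs, the three per-participant measures described above reduce to simple arithmetic. The sketch below assumes the amplitudes have already been exported as a list of values per participant and condition; the function name and the choice to treat the mean as undefined (NaN) when no SCR is detected are our assumptions, not features of Ledalab.

```python
import math
from typing import Dict, Sequence

def scr_metrics(amplitudes: Sequence[float]) -> Dict[str, float]:
    """Frequency, total and mean SCR amplitude for one participant in one condition.

    amplitudes: amplitudes of the individually identified SCRs (e.g., in microsiemens).
    """
    frequency = len(amplitudes)        # number of identified SCRs
    total = float(sum(amplitudes))     # summed SCR amplitudes
    # Mean amplitude = total / frequency; undefined (NaN) if no SCR was identified.
    mean = total / frequency if frequency else math.nan
    return {"frequency": frequency, "total": total, "mean": mean}

# Hypothetical SCR amplitudes for one participant in one condition.
print(scr_metrics([0.12, 0.40, 0.08, 0.25]))
```

Computing the mean as total divided by frequency, rather than averaging a separate list, keeps the three measures consistent with one another by construction.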

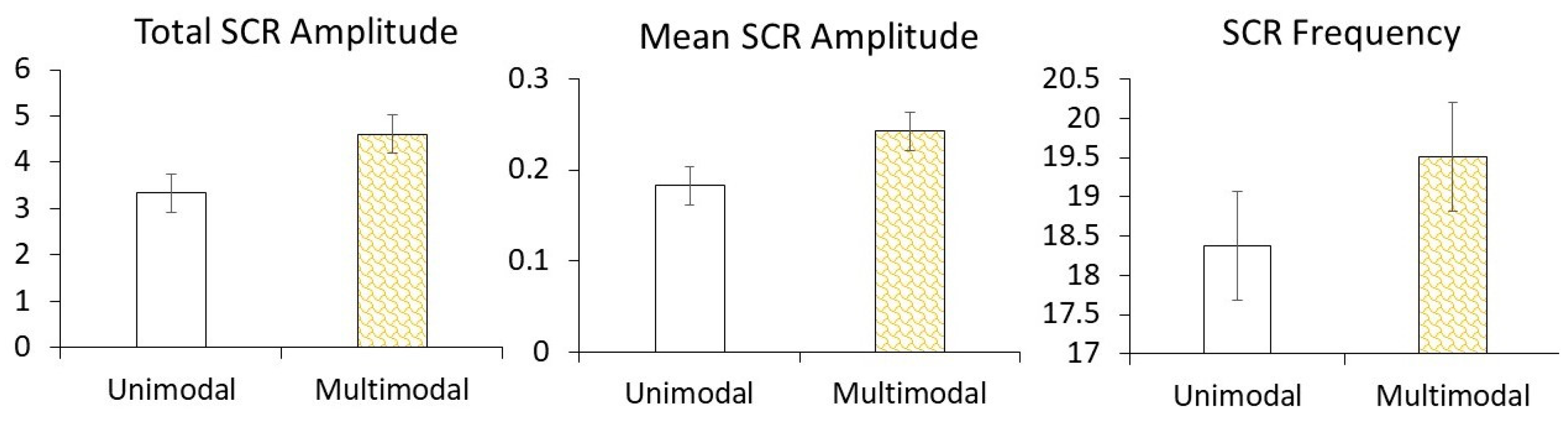

Consistent with the questionnaire data (PANAS-X), we found that total and mean SCR amplitudes were significantly larger in the multimodal condition than in the unimodal condition: total SCR amplitude, F(1, 33) = 5.78, MSE = 5.15, p < 0.05, ηp² = 0.15; mean SCR amplitude, F(1, 33) = 4.64, MSE = 1.46, p < 0.05, ηp² = 0.15. For SCR frequency, the difference between the unimodal and multimodal conditions was marginally significant, F(1, 33) = 3.76, MSE = 8.23, p = 0.06, ηp² = 0.15. Modality interacted neither with gender nor with task, F’s < 1.72, p’s > 0.19 (Figure 4).

These results suggest that, consistent with the questionnaire data, the multimodal interaction elicited higher emotional arousal.

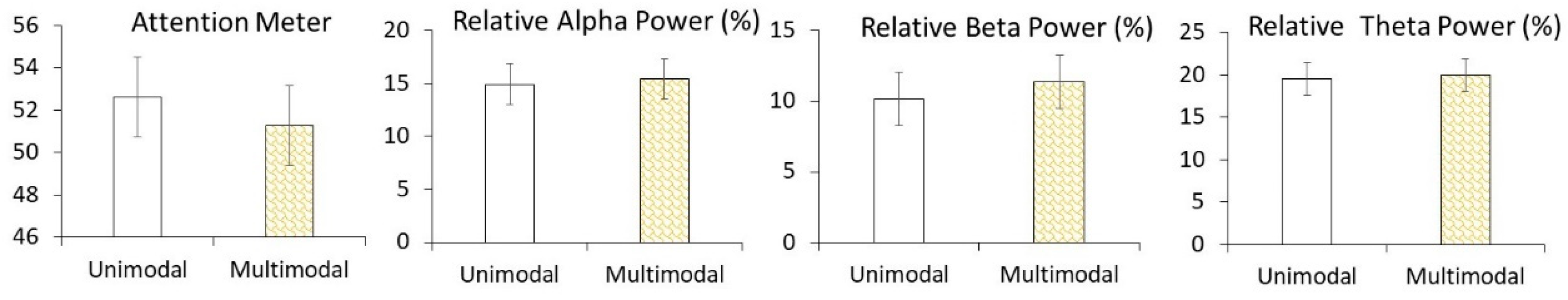

EEG (electroencephalography). In our EEG analysis, we examined the powers of eight spectral bands and an attention meter, which were collected every second (1 Hz) in Parts 2 and 4. For all band signals, we calculated relative band powers following the procedure adopted by Johnstone et al. [27]: we summed the powers of all eight bands (delta, 0.5–2.75 Hz; theta, 3.5–6.75 Hz; low-alpha, 7.5–9.25 Hz; high-alpha, 10–11.75 Hz; low-beta, 13–16.75 Hz; high-beta, 18–29.75 Hz; low-gamma, 31–39 Hz; mid-gamma, 41–49.75 Hz) and then divided the power of each band by this total, expressing the result as a percentage. Here, we focused on the attention meter and the relative alpha, beta and theta band powers, which are known to be related to mental workload [31]. None of these comparisons reached statistical significance; for all main effects of modality, F’s < 1.0, suggesting that the enhanced positive emotion in the multisensory interaction was unlikely to be due to boosted attention (Figure 5).
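The relative band power computation described above can be sketched as follows. The band names follow the text; the input format (one dictionary of absolute band powers per 1 Hz sample) and the averaging of per-second percentages across a recording are our assumptions.

```python
from statistics import mean
from typing import Dict, List

BANDS = ["delta", "theta", "low_alpha", "high_alpha",
         "low_beta", "high_beta", "low_gamma", "mid_gamma"]

def relative_powers(sample: Dict[str, float]) -> Dict[str, float]:
    """Express each band's power as a percentage of the summed power of all eight bands."""
    total = sum(sample[band] for band in BANDS)
    if total <= 0:
        raise ValueError("total band power must be positive")
    return {band: 100.0 * sample[band] / total for band in BANDS}

def mean_relative_powers(samples: List[Dict[str, float]]) -> Dict[str, float]:
    """Average the per-second relative powers over a recording (e.g., one 1-min part)."""
    per_second = [relative_powers(s) for s in samples]
    return {band: mean(r[band] for r in per_second) for band in BANDS}

# Two hypothetical 1 Hz samples of absolute band power (arbitrary units).
samples = [
    dict(zip(BANDS, [400, 200, 100, 100, 80, 70, 30, 20])),
    dict(zip(BANDS, [300, 250, 150, 100, 90, 60, 30, 20])),
]
rel = mean_relative_powers(samples)
print({band: round(value, 1) for band, value in rel.items()})
```

Relative alpha or beta power could then be obtained by adding the low and high sub-bands; that is one plausible reading of “alpha” and “beta” in the text, which does not state how the sub-bands were combined.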

4. Discussion

Summary. Visualizing touch clearly enhanced participants’ emotions. When visual and tactile senses were integrated (participants could “see” animated images of their touch), participants reported greater joviality, self-assurance and attentiveness. They also felt less fatigued and more surprised. Participants’ emotional arousal, as measured by their skin conductance responses, was also significantly higher in the multimodal condition than in the unimodal condition. At the same time, the multisensory combination had virtually no adverse effects: negative emotions such as fear, sadness and hostility were unaffected. Taken together, our results suggest that our Interactive Plant system was effective in enhancing participants’ emotional experience.

One may argue that the within-subjects design we employed might have caused an order effect. That is, participants may have found the multimodal interaction (visual + touch) more engaging and fun than the unimodal interaction simply because the multimodal interaction came later in the experiment. This scenario is very unlikely because, as a vast majority of psychological studies demonstrate, people’s engagement tends to decline, rather than improve, later in an experiment [34]. People’s task engagement is closely related to the cognitive resources (attention and working memory) available to them. These resources have a limited capacity and decline with continued use (habituation, mental fatigue), so engagement is generally higher earlier in an experiment than later. Our design, in which the multimodal condition was always presented after the unimodal condition, should therefore weaken rather than boost engagement in the multimodal condition. In other words, our within-subjects design works against our findings rather than in their favor. Despite this adverse condition, participants were more engaged in the multimodal plant-based interaction, revealing the robustness of our findings.

Implications. Using capacitive sensing technology, Poupyrev et al. [18] developed one of the first interactive plant systems. Since then, several other systems have been developed to study people’s engagement in large-scale installations [19], gaming [21] and eco-feedback [35]. Our interactive plant system is a direct extension of these predecessors, but it differs from previous work in several respects. To our knowledge, this study is one of the first systematic studies to assess the impact of interactive plant systems on emotion using both self-report and physiological (EDA) measures. Although past research examined the impact of interactive plants on emotional engagement (e.g., Park et al. [21]), those studies relied only on self-report. We show that the interactive plant system is effective at both the behavioral and the physiological (EDA) level, providing a strong endorsement of touch-based sensing technology, as applied to plants in particular.

Why is the touch-based multimodal interface effective in boosting emotional engagement? The modern psychological constructionist model of emotion posits that emotion emerges from the continuous conceptualization (meaning making) of core affect, that is, bodily sensations of feeling good or bad and of high or low arousal, in a given situation. It is a continuous and dynamic process of situational meaning making [36,37]. In this framework, the brain is more like a Bayesian meaning-making machine, in which incoming sensory inputs (the likelihood) constrain prior predictions to generate a posterior inference. Sensory inputs come not only from the external environment (exteroceptive sensations) but also from one’s own body (heart rate, inflammation, muscle contraction). The variety of emotions we experience is “constructed” by a domain-general mechanism of conceptualization (meaning making) [36,37,38,39,40]. In this regard, sensory engagement is the very foundation of our experience and meaning making [2]. Haptic sense is special in this process because it spans the entire body surface. We attribute the advantage of touch-based interfaces to this special nature of haptic perception and suggest that interactive plant systems are particularly suited to evoking the constructive nature of emotion.
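For readers unfamiliar with the Bayesian framing, the inference described above is an instance of Bayes’ rule. Writing c for a candidate conceptualization of the situation and s for the current sensory input (our notation, not that of the cited authors), the posterior belief in c is proportional to the likelihood of the input under c times the prior probability of c:

```latex
P(c \mid s) = \frac{P(s \mid c)\, P(c)}{P(s)}, \qquad
P(s) = \sum_{c'} P(s \mid c')\, P(c')
```

This is a generic statement of Bayes’ rule, offered only to illustrate the framework; it is not a model that was fitted in this study.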

Limitations and future directions. We are not certain how far our findings generalize to other multimodal interfaces. The enhanced emotions in our plant system could simply be due to the fact that plants are natural and tangible; other objects, such as tables or desks, may not elicit the same effect. It is also possible that our finding is simply a side effect of “surprise”: a surprising depiction of “touch” might have created arousal, which in turn boosted positive emotions. It is also not clear how long the emotional impact would last. With repeated use of the multimodal interface, novelty may fade and the emotional advantage of the plant-based multisensory interaction may disappear. In the current experiment, we found that negative emotions were less affected by the multimodal-multisensory interaction. It is unknown whether this lack of influence extends beyond our particular experimental conditions. Future research should shed light on these issues.

We chose this plant-based interface because pothos plants are natural and easily available. The results we obtained depend heavily on this choice of interface. If we had used, for example, a teddy bear or a tennis ball as the interface, we might have obtained different results. Whether the current finding extends to other interfaces should be addressed in a future study.

The interactive plant system developed here was designed to enhance people’s emotional experience. Enhancing emotional experience is important for people with emotional disorders such as depression, anxiety and stress. Our system is accessible and relatively inexpensive; it could be employed for older adults living in nursing homes, as well as for young children with autism spectrum disorder or Asperger’s syndrome. Although our interactive plant system is not intended to enhance learning per se, the system could be extended to early mathematics education, such as learning basic concepts of numbers, as our multimodal system is likely to help generate vivid, tangible and direct emotional experiences. In future research, we would like to explore these areas.