Abstract

A numerical method is proposed for estimating piecewise-constant solutions of Fredholm integral equations of the first kind. Two functionals, namely the weighted total variation (WTV) functional and the simplified Modica-Mortola (MM) functional, are introduced. The solution procedure consists of two stages. In the first stage, the WTV functional is minimized to obtain an approximate solution. In the second stage, the simplified MM functional is minimized by the damped Newton (DN) method, with the first-stage approximation as the initial guess, to obtain the final result. The numerical implementation is described in detail, and numerical results for two examples are presented to illustrate the efficiency of the proposed approach.

1. Introduction

In many physical problems, the relation between the quantity observed and the quantity to be measured can be formulated as a Fredholm integral equation of the first kind:

$$\int_a^b k(x,t)\,f(t)\,dt = g(x), \qquad x \in [a,b], \tag{1}$$

where the kernel function k and the right-hand side g are known, while f is the unknown to be determined. The Fredholm integral equation of the first kind is ill-posed; see for instance [1], Chapter 2.

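In practical applications, the right-hand side is contaminated by noise; therefore, Equation (1) should be replaced by:

$$\int_a^b k(x,t)\,f(t)\,dt = g(x) + \eta(x), \qquad x \in [a,b], \tag{2}$$

where $\eta(x)$ represents the error. The above equation can be written in operator form:

$$\mathcal{K} f = g^{\delta},$$

where $(\mathcal{K} f)(x) = \int_a^b k(x,t)\,f(t)\,dt$ and $g^{\delta} = g + \eta$.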

Numerical methods for obtaining reasonable approximate solutions of Fredholm integral equations of the first kind have attracted considerable attention; see, for instance, ([2], Chapter 12) and [3,4,5,6,7]. Because the problem is ill-posed, numerical solutions are extremely sensitive to perturbations caused by observation and rounding errors, so regularization is required to obtain a reasonable approximate solution. Many regularization methods have been proposed; see, for instance, [8], Chapters 2 and 8. The Tikhonov regularization method [9], the truncated singular value decomposition (TSVD) method [10], the modified TSVD (MTSVD) method [11], the Chebyshev interpolation method [12], the collocation method [13,14], the projected Tikhonov regularization method [15], and others have been applied to obtain continuous approximate solutions of Equation (2). The total variation (TV) regularization method [16,17,18,19,20,21,22], adaptive TV methods [23,24,25,26,27], the piecewise-polynomial TSVD (PP-TSVD) method [28], and others have been applied to obtain piecewise-continuous approximate solutions.

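In this paper, we focus on the case where the solution of Equation (1) is piecewise-constant and its possible function values are known, that is:

$$f(x) \in \{c_1, c_2, \ldots, c_m\} \quad \text{for all } x,$$

where $c_1, c_2, \ldots, c_m$ are given constants. Problems of this kind arise in many applications, for instance, in barcode reading, where the solution takes only two values ($m = 2$), and in image restoration, where the solution takes values in a finite set of known gray levels.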

This paper is organized as follows. In Section 2, two objective functionals are introduced; one is based on a weighted TV functional to allow the TV regularization to be spatially varying, and the other one is based on a simplified Modica-Mortola (MM) functional to make use of the a priori knowledge of the solution. In Section 3, the implementation of numerical methods for solving Equation (2) is presented in detail. In Section 4, numerical examples are presented to illustrate the effectiveness of the proposed approach. Finally, concluding remarks are given in Section 5.

2. The Objective Functionals

The TV regularization method has been shown to be an effective way to estimate piecewise-constant solutions. It seeks a numerical solution with small total variation, a penalty that favors neither continuous nor discontinuous solutions, so jumps in the solution can be preserved. The weighted TV regularization scheme [23] is more effective because it allows the TV regularization to vary spatially: a small weight is used where an edge is likely, and a large weight is used where there is no edge.

A commonly used weighted TV functional is defined by:

where is a parameter and is a weighting function. An example of is:

where is a parameter; see [23]. We can see that , and decreases as increases. That is, if is large, the corresponding weight is small. The above weighting function is not smooth, so we modify it as:

so that is smooth and .

One can use the weighted TV functional for Equation (2) to obtain a piecewise-constant solution. In this paper, we consider solving the following minimization problem:

where:

and is a small regularization parameter.

To impose the piecewise-constant constraint on the numerical solutions, we consider the following simplified MM functional:

where is a regularization parameter and:

Note that the MM functional, which is given by:

(see, for instance, [29]), is a useful tool for problems in which the solution is constrained to take constant values; for example, Bogosel and Oudet applied this functional to analyze a spectral problem with a perimeter constraint [30].

It must be pointed out that the MM functional is not convex and has many local minimizers. In general, we can only obtain a local minimizer of the simplified MM objective function; in other words, the numerical solution obtained by minimizing it depends on the initial guess. This observation motivates a two-stage method for estimating piecewise-constant solutions of (2): first compute the minimizer of the WTV objective function, and then use it as the initial guess for minimizing the simplified MM objective function to obtain the final result.
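A scalar toy problem shows why the initial guess matters. The sketch below is purely illustrative and is not the simplified MM functional of this paper. It assumes a hypothetical objective $J(t) = (t-d)^2 + \gamma\, t^2 (t-1)^2$. The first term is a quadratic data-fit term, and the second is a double-well penalty that vanishes at the admissible values 0 and 1. The sketch applies plain Newton iterations from two different starting points.

```python
import math

# Hypothetical scalar toy problem (not the paper's functional):
#   J(t) = (t - d)^2 + gamma * t^2 * (t - 1)^2
# The first term mimics data fitting; the second is a double-well penalty
# that vanishes at the admissible values 0 and 1.

def dJ(t, d, gamma):
    """First derivative of the toy objective."""
    return 2.0 * (t - d) + 2.0 * gamma * t * (t - 1.0) * (2.0 * t - 1.0)

def d2J(t, d, gamma):
    """Second derivative of the toy objective."""
    return 2.0 + 2.0 * gamma * (6.0 * t * t - 6.0 * t + 1.0)

def newton_scalar(t, d=0.5, gamma=10.0, tol=1e-12, max_iter=100):
    """Plain Newton iteration; it settles in the well on the side of the initial guess."""
    for _ in range(max_iter):
        curv = d2J(t, d, gamma)
        if curv <= 0.0:  # nonpositive curvature: a Newton step would not head to a minimizer
            raise ValueError("initial guess lies where the objective has nonpositive curvature")
        step = dJ(t, d, gamma) / curv
        t -= step
        if abs(step) < tol:
            break
    return t

# For d = 0.5 and gamma = 10 the two local minimizers are 0.5 - 1/sqrt(5) and 0.5 + 1/sqrt(5).
print(newton_scalar(0.8))  # about 0.947 (the well near 1)
print(newton_scalar(0.2))  # about 0.053 (the well near 0)
```

The two runs return different local minimizers, each in the well on the same side as the starting point. The two-stage method relies on this behavior: it starts the second stage from the first-stage solution.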

2.1. Discretization

To solve the minimization problems (5) and (6) numerically, we discretize the relevant functionals. We use the midpoint quadrature rule and central divided differences to discretize integrals and first-order derivatives, respectively. Let the interval be partitioned uniformly into n subintervals , , so that the quadrature points are , where . Let , and . Then, the discretized form of (2) is given by:

The discretizations of the functionals , , and are as follows.

(1) The discretization of is given by:

where denotes the vector two-norm.

(2) Let be the $i$-th column of the identity matrix, and let:

and:

(3) As for , we simply approximate it by:

Here, we omit the factor for the sake of simplicity.

Therefore, the discretization of is:

and the discretization of is:

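The discretization steps above can be sketched in a few lines of Python. This is our own illustration, not the authors' MATLAB code. The function names are hypothetical. The exact scaling of the discrete functionals is also an assumption rather than a transcription of (8) and (9): this covers the factor h in front of the TV sum, the placement of α, and how the weights enter.

```python
import math

def midpoint_grid(a, b, n):
    """Step size h and midpoints x_i = a + (i - 1/2) h of n uniform subintervals of [a, b]."""
    h = (b - a) / n
    return h, [a + (i + 0.5) * h for i in range(n)]

def kernel_matrix(kernel, a, b, n):
    """Midpoint-rule matrix K[i][j] = h * k(x_i, x_j); (K f)_i approximates the integral at x_i."""
    h, x = midpoint_grid(a, b, n)
    return [[h * kernel(xi, xj) for xj in x] for xi in x]

def divided_differences(f, h):
    """(f_{i+1} - f_i) / h: central differences at the midpoints between neighboring nodes."""
    return [(f[i + 1] - f[i]) / h for i in range(len(f) - 1)]

def smoothed_tv(f, h, beta, weights=None):
    """h * sum_i w_i * sqrt(d_i^2 + beta); all weights default to one (plain TV)."""
    d = divided_differences(f, h)
    w = weights if weights is not None else [1.0] * len(d)
    return h * sum(wi * math.sqrt(di * di + beta) for wi, di in zip(w, d))

def objective(K, f, g, alpha, h, beta, weights=None):
    """Hypothetical first-stage function ||K f - g||^2 + alpha * smoothed (weighted) TV."""
    residual = [sum(kij * fj for kij, fj in zip(row, f)) - gi for row, gi in zip(K, g)]
    return sum(r * r for r in residual) + alpha * smoothed_tv(f, h, beta, weights)

# Usage: a unit step on [0, 1] with n = 4 subintervals.
h, x = midpoint_grid(0.0, 1.0, 4)
f = [0.0, 0.0, 1.0, 1.0]
print(smoothed_tv(f, h, 1e-12))  # close to 1.0, the size of the jump
```

With a tiny β, the smoothed TV of a step function is essentially the jump height. This is why the TV term does not penalize sharp edges more than gradual ones.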
3. Numerical Implementation

In this section, the implementation of the damped Newton (DN) method for minimization of the functions and (cf. (8) and (9)) is given. We first derive the gradients and the Hessians of , and .

Lemma 1.

Let β and θ be positive constants, and define:

(1) The gradient and the Hessian of are given by:

and:

respectively.

(2) Let , and be defined by (10). Let be denoted by . Then, the gradient and the Hessian of are given by:

and:

respectively, where:

and:

Here, we use the symbols and in the same way as they have been used in [8].

(3) Let , , be given by (7), and and be defined by (10). Let be denoted by . Then, the gradient and the Hessian of are given by:

and:

respectively, where:

and:

with .

(4) The gradient of is given by:

where the partial derivatives , , are given by:

The Hessian of is a diagonal matrix given by:

where:

Proof.

Results (1) and (2) of the lemma are well known; see [8], Section 8.2.

(3) Let , then we have:

where and are defined by (10). Let ; it can be easily checked that for any ,

It follows that:

where is given by (18).

To obtain the Hessian of , we consider . From the expression of , we have that for any :

where and are given by (18) and (19) respectively. Consequently:

(4) It is easy to see that the partial derivatives , , are given by:

which can be rewritten in the form of (21). For the second order partial derivatives, we have:

and

Therefore, the Hessian of is a diagonal matrix given by:

where , , are given by (23). ☐
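Gradient and Hessian formulas of this kind can be checked numerically. The sketch below is our illustration, not a transcription of (11)–(23). It treats only the unweighted case (all weights equal to one) of a smoothed TV term, assumed to be $T(f) = h\sum_i \sqrt{(Df)_i^2 + \beta}$ with forward differences $D$. For this term the gradient is $h D^T [d_i/\sqrt{d_i^2+\beta}]$ and the Hessian is $h D^T \operatorname{diag}(\beta/(d_i^2+\beta)^{3/2}) D$, where $d = Df$. Comparing such formulas with central finite differences is a cheap test for any implementation of Lemma 1.

```python
import math

def differences(f, h):
    """d = D f with (D f)_i = (f_{i+1} - f_i) / h."""
    return [(f[i + 1] - f[i]) / h for i in range(len(f) - 1)]

def apply_DT(v, h):
    """Apply the transpose D^T of the (n-1) x n difference matrix D to v."""
    out = [0.0] * (len(v) + 1)
    for i, vi in enumerate(v):
        out[i] -= vi / h
        out[i + 1] += vi / h
    return out

def tv(f, h, beta):
    """Smoothed TV term T(f) = h * sum_i sqrt(d_i^2 + beta), d = D f."""
    return h * sum(math.sqrt(d * d + beta) for d in differences(f, h))

def tv_gradient(f, h, beta):
    """grad T(f) = h * D^T [d_i / sqrt(d_i^2 + beta)]."""
    d = differences(f, h)
    return [h * y for y in apply_DT([di / math.sqrt(di * di + beta) for di in d], h)]

def tv_hessian(f, h, beta):
    """Hessian h * D^T diag(beta / (d_i^2 + beta)^{3/2}) D, a symmetric tridiagonal matrix."""
    d = differences(f, h)
    n = len(f)
    H = [[0.0] * n for _ in range(n)]
    for i, di in enumerate(d):
        c = h * beta / (di * di + beta) ** 1.5 / h ** 2  # h * s_i times the 1/h^2 from D^T D
        H[i][i] += c
        H[i + 1][i + 1] += c
        H[i][i + 1] -= c
        H[i + 1][i] -= c
    return H
```

For example, with f = [0, 0.3, 1.1, 0.9, 1], h = 0.25, and β = 0.01, perturbing each component by ±1e-6 and differencing `tv` (or `tv_gradient`) should agree closely with `tv_gradient` (or `tv_hessian`).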

From Formulas (11)–(23), we can get the gradients and the Hessians of and . We introduce the following DN method for minimization of or .

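Algorithm 1: Damped Newton (DN) method for the minimization of an objective function $J$.

1. Input an initial guess $f_0$;
2. Set $k = 0$;
3. Begin iterations
4. Compute the gradient $g_k$ and the Hessian $H_k$ of $J$ at $f_k$;
5. Solve $H_k p_k = -g_k$ to obtain the search direction $p_k$;
6. Obtain the minimum point $\tau_k$ of the one-dimensional nonnegative function $\varphi(\tau) = J(f_k + \tau p_k)$ by line search;
7. Update the approximate solution: $f_{k+1} = f_k + \tau_k p_k$;
8. Check the termination condition: if it is satisfied, break;
9. Set $k = k + 1$;
10. End iterations
11. Output $f_{k+1}$.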
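A compact Python sketch of Algorithm 1 follows. It is an illustration under stated assumptions, not the authors' implementation:

- The exact line search of Step 6 is replaced by golden-section search on the hypothetical interval [0, 2].
- The stopping rule of Step 8 is assumed to be a small relative change in the iterate.
- Dense Gaussian elimination is used for Step 5, which is only sensible for small n.
- The test function in the usage lines is a hypothetical convex function with minimizer (1, −2).

```python
import math

def solve_dense(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            m = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= m * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def golden_section(phi, lo=0.0, hi=2.0, tol=1e-10):
    """Minimize a unimodal 1-D function on [lo, hi]; stands in for the exact line search."""
    r = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - r * (b - a), a + r * (b - a)
    while b - a > tol:
        if phi(c) < phi(d):  # minimum lies in [a, d]
            b, d = d, c
            c = b - r * (b - a)
        else:                # minimum lies in [c, b]
            a, c = c, d
            d = a + r * (b - a)
    return 0.5 * (a + b)

def damped_newton(J, grad, hess, f0, tol=1e-10, max_iter=100):
    """Damped Newton: direction from H p = -g (Step 5), step size from a line search (Step 6)."""
    f = list(f0)
    for k in range(1, max_iter + 1):
        g, H = grad(f), hess(f)
        p = solve_dense(H, [-gi for gi in g])
        tau = golden_section(lambda t: J([fi + t * pi for fi, pi in zip(f, p)]))
        f_new = [fi + tau * pi for fi, pi in zip(f, p)]
        change = math.sqrt(sum((u - v) ** 2 for u, v in zip(f_new, f)))
        size = math.sqrt(sum(u * u for u in f_new))
        f = f_new
        if change <= tol * (1.0 + size):  # assumed relative-change stopping rule (Step 8)
            return f, k
    return f, max_iter

# Hypothetical convex test function with minimizer (1, -2).
def J_test(f):
    return (f[0] - 1) ** 4 + (f[0] - 1) ** 2 + (f[1] + 2) ** 2 + 0.5 * (f[0] - f[1] - 3) ** 2

def grad_test(f):
    e = f[0] - f[1] - 3
    return [4 * (f[0] - 1) ** 3 + 2 * (f[0] - 1) + e, 2 * (f[1] + 2) - e]

def hess_test(f):
    return [[12 * (f[0] - 1) ** 2 + 3.0, -1.0], [-1.0, 3.0]]

f_min, iters = damped_newton(J_test, grad_test, hess_test, [4.0, 3.0])
print(f_min, iters)  # f_min is close to [1.0, -2.0]
```

Golden-section search requires the line function to be unimodal on the search interval. This holds for the convex test function, but a safeguarded line search would be needed for nonconvex objectives such as the simplified MM function.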

Remark 1.

(1) Since we carry out an exact line search (Step 6) in the iterative process, the DN method converges to a local minimizer provided that the Hessian of the objective function is positive definite at the iterates, so that each Newton direction is a descent direction. If the Hessian is singular or indefinite, a modification is required; in our numerical tests in Section 4, no modification was needed. For large-scale systems, the most expensive part of the algorithm is solving the Newton system in Step 5. If the matrix H has a Toeplitz structure, the conjugate gradient method, with matrix-vector products computed by the fast Fourier transform (FFT), can be applied to solve the system efficiently; see [31] for details.

(2) For the minimization of the WTV objective function (cf. (8)), if we approximate its Hessian by the positive definite matrix (assuming that , where is the vector with all elements equal to one) and set the step size to 1, we obtain the Gauss–Newton method. If the step size is instead obtained by a line search, we obtain a modified Gauss–Newton (MGN) method. In this paper, we also use the MGN method to minimize the WTV objective function. Since the approximating matrix is positive definite, every MGN search direction is a descent direction, and with the line search the MGN method converges to a local minimizer.
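The role of the assumption in Remark 1(2) can be checked on a small example. The sketch assumes that the approximate Hessian has the form $K^T K + \alpha L(f)$ with $L(f) = h D^T \operatorname{diag}(1/\sqrt{(Df)_i^2+\beta}) D$ for the unweighted TV term. This form follows the lagged-diffusivity idea discussed in [8]; it is our assumption, not the paper's exact matrix. Because $L(f)$ annihilates the all-ones vector, it is only positive semidefinite. Adding $K^T K$ restores positive definiteness when $K$ applied to the all-ones vector is nonzero. Both test matrices `K_zero` and `K_mean` are hypothetical.

```python
import math

def lagged_diffusivity(f, h, beta):
    """L(f) = h * D^T diag(1 / sqrt(d_i^2 + beta)) D with d = D f, (D f)_i = (f_{i+1} - f_i) / h."""
    n = len(f)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n - 1):
        d = (f[i + 1] - f[i]) / h
        c = h / math.sqrt(d * d + beta) / h ** 2
        L[i][i] += c
        L[i + 1][i + 1] += c
        L[i][i + 1] -= c
        L[i + 1][i] -= c
    return L

def approx_hessian(K, f, h, alpha, beta):
    """Gauss-Newton-type approximation K^T K + alpha * L(f) (assumed form)."""
    n = len(f)
    KtK = [[sum(row[i] * row[j] for row in K) for j in range(n)] for i in range(n)]
    L = lagged_diffusivity(f, h, beta)
    return [[KtK[i][j] + alpha * L[i][j] for j in range(n)] for i in range(n)]

def is_positive_definite(A, rel_tol=1e-12):
    """Cholesky factorization; a pivot that is not clearly positive means A is not (numerically) SPD."""
    n = len(A)
    R = [[0.0] * n for _ in range(n)]
    for j in range(n):
        pivot = A[j][j] - sum(R[j][k] ** 2 for k in range(j))
        if pivot <= rel_tol * max(abs(A[j][j]), 1.0):
            return False
        R[j][j] = math.sqrt(pivot)
        for i in range(j + 1, n):
            R[i][j] = (A[i][j] - sum(R[i][k] * R[j][k] for k in range(j))) / R[j][j]
    return True

f, h, alpha, beta = [0.0, 0.2, 1.0, 1.0], 0.25, 0.1, 1e-4
K_zero = [[0.0] * 4 for _ in range(4)]   # K 1 = 0: only alpha * L(f) remains
K_mean = [[0.25] * 4 for _ in range(4)]  # midpoint rule for k(x, t) = 1, so K 1 != 0
print(is_positive_definite(approx_hessian(K_zero, f, h, alpha, beta)))  # False: L(f) 1 = 0
print(is_positive_definite(approx_hessian(K_mean, f, h, alpha, beta)))  # True
```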

To conclude this section, we summarize the procedure for solving Equation (2). We first obtain the minimizer of the WTV objective function, and then we obtain a minimizer of the simplified MM objective function by using the DN method with the first-stage minimizer as the initial guess. The approach is summarized in Algorithm 2.

Algorithm 2: Weighted total variation Modica-Mortola (WTVMM) method for estimating a piecewise-constant solution of Equation (2).

1. Obtain the minimizer of the WTV objective function (8) by using the MGN method or the DN method;
2. Obtain a minimizer of the simplified MM objective function (9) by using the DN method with the result of Step 1 as the initial guess;
3. Output the result of Step 2.

4. Numerical Examples

In this section, we present numerical results for two examples to illustrate that our approach is indeed capable of estimating piecewise-constant solutions to Equation (2).

In addition to the numerical solutions obtained by our approach, we present approximate solutions obtained by the TV regularization method and by the weighted TV regularization method with the weighting factors (cf. (7)). In the following tables and figures, WTVMM (weighted total variation Modica-Mortola), TV, and WTV denote these three methods, respectively.

We set the number of subintervals to . All tests were carried out in MATLAB, and the termination parameter is set to ; see Step 8 of Algorithm 1. In the tables, the column “” gives the relevant parameters, and the column “” gives the number of iterations (for the WTVMM method, indicates that the WTV method and the MM method require and iterations, respectively). The column “error” gives the relative error defined by:

where and are the vectors of the exact solution and the approximate solution at the quadrature points, respectively. Here, one iteration refers to Steps 4–8 of Algorithm 1.
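For reference, the relative error can be computed as below. The sketch assumes the Euclidean (two-)norm, which is the norm used in the discretization, and a nonzero exact solution.

```python
import math

def relative_error(f_exact, f_approx):
    """||f_exact - f_approx||_2 / ||f_exact||_2 over the values at the quadrature points."""
    diff = math.sqrt(sum((u - v) ** 2 for u, v in zip(f_exact, f_approx)))
    return diff / math.sqrt(sum(u * u for u in f_exact))

print(relative_error([3.0, 4.0], [3.0, 3.0]))  # 0.2
```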

There are several methods for choosing regularization parameters, e.g., the discrepancy principle, the generalized discrepancy principle, generalized cross-validation, the L-curve, and the normalized cumulative periodogram; see, for instance, [32,33], ([1], Chapters 5–6), ([8], Chapter 7), and ([34], Chapters 5, 6 and 9). However, we choose the parameters experimentally, since the regularization term contains two additional parameters, β and θ. We use the following rules for choosing the parameters:

(1) Since we use $\sqrt{t^2+\beta}$ as a smooth approximation of $|t|$, where $t$ stands for a (discrete) derivative value of the solution, we set β to a very small positive number. We note that if β is significantly larger than $t^2$, then $\sqrt{t^2+\beta} = \sqrt{\beta}\,\sqrt{1 + t^2/\beta} \approx \sqrt{\beta} + \frac{t^2}{2\sqrt{\beta}}$. As a result, the regularization term becomes essentially quadratic in the derivative of the solution, i.e., it acts like a smoothing (Tikhonov-type) regularization rather than a TV regularization.

(2) If θ is much larger than , then the weighting function is close to one, and the weighted TV regularization is nearly the same as the traditional TV regularization. Therefore, θ should not be chosen too large.

(3) We choose α with the help of the discrepancy principle and check whether the numerical solution is nearly piecewise-constant. After choosing α, we adjust β and θ: if the numerical solution is over-smoothed, we choose a smaller value for β or θ; if the numerical solution oscillates, we choose a larger value for β or θ.
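Rules (1) and (2) rest on the behavior of the smoothed absolute value. The short check below compares $\sqrt{t^2+\beta}$ with $|t|$ and with the quadratic Taylor model $\sqrt{\beta} + t^2/(2\sqrt{\beta})$. The values of β and t are illustrative and chosen by us.

```python
import math

def smoothed_abs(t, beta):
    """sqrt(t^2 + beta): the smooth surrogate for |t| in the TV term."""
    return math.sqrt(t * t + beta)

def quadratic_model(t, beta):
    """First-order Taylor model sqrt(beta) + t^2 / (2 sqrt(beta)), accurate when t^2 << beta."""
    return math.sqrt(beta) + t * t / (2.0 * math.sqrt(beta))

for beta in (1e-8, 1e2):
    for t in (0.1, 1.0):
        print(f"beta={beta:g}, t={t}: |t|={abs(t)}, "
              f"sqrt(t^2+beta)={smoothed_abs(t, beta):.6f}, "
              f"quadratic model={quadratic_model(t, beta):.6f}")
```

Since $0 \le \sqrt{t^2+\beta} - |t| \le \sqrt{\beta}$, a small β keeps the TV behavior. For β much larger than $t^2$, the surrogate differs from the quadratic model by at most $t^4/(8\beta^{3/2})$, so the TV term degenerates into a quadratic smoothing penalty.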

In our numerical tests, we tried both the DN method and the MGN method for minimizing the functions corresponding to the TV functional (all weights equal to one) and the WTV functional (weights given by (7)). The DN method performed better than the MGN method for the TV functional, whereas the MGN method performed better than the DN method for the WTV functional. In the following, we therefore report the results obtained by the DN method for the TV functional and by the MGN method for the WTV functional.

Example 1.

The kernel of Equation (2) is given by:

where . In this case, the condition number of the matrix K is . The right-hand side is obtained by setting the exact solution to:

where . Two noisy right-hand sides, which contain 1% and 10% of white noise, respectively, are tested.

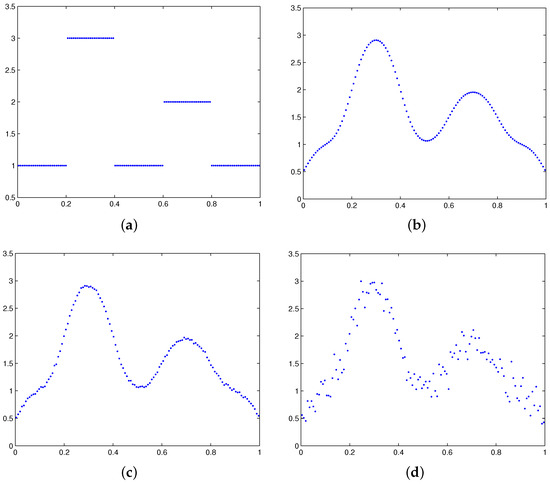

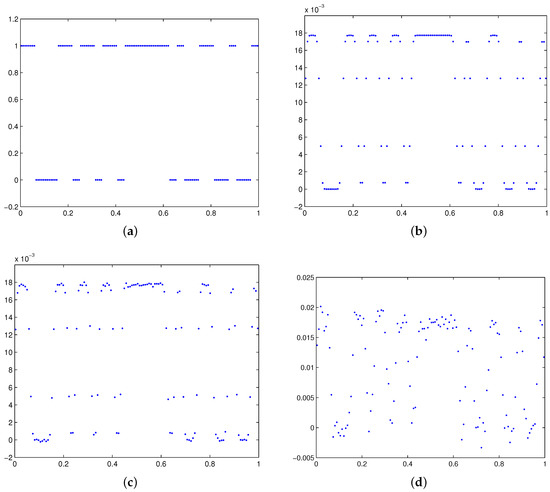

Since the average of the three admissible values is two, we choose the constant function equal to two as the initial guess for minimizing the functions corresponding to the TV and WTV functionals; this initial guess is not too far from the exact solution. The exact solution and the right-hand side are shown in Figure 1a,b, and the two noisy right-hand sides are shown in Figure 1c,d. The numerical solutions obtained by the different methods, together with their point-wise errors, are shown in Figure 2. The parameters, numbers of iterations, and relative errors are given in Tables 1 and 2.

Figure 1.

The exact solution, the right-hand side and noisy right-hand sides for Example 1. (a) The exact solution; (b) the right-hand side; (c) a noisy right-hand side containing 1% white noise; and (d) a noisy right-hand side containing 10% white noise.

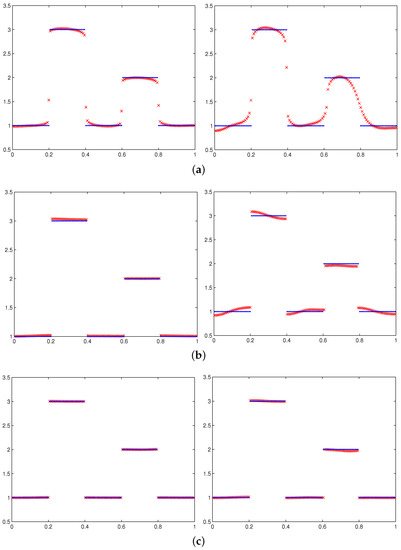

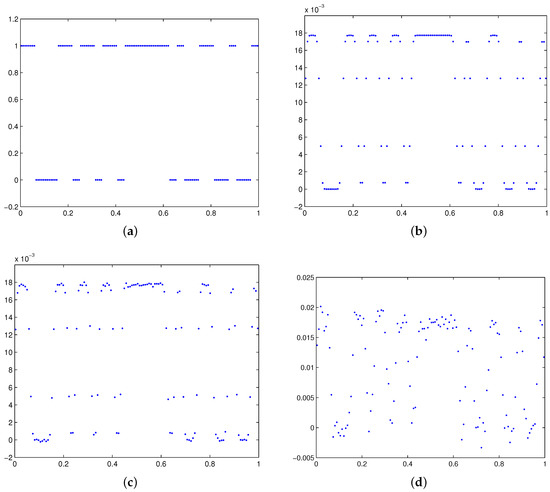

Figure 2.

The exact solution (dot), numerical solutions (x) and point-wise errors for the three methods for Example 1 with noisy right-hand sides containing 1% (left) and 10% (right) white noise. (a) Numerical solutions of TV; (b) numerical solutions of WTV; (c) numerical solutions of WTVMM; and (d) point-wise errors of the numerical solutions.

Table 1.

Parameters used, number of iterations and relative errors for different methods for Example 1 where the right-hand side contains 1% of white noise. TV: total variation; WTV: weighted total variation; WTVMM: weighted total variation Modica-Mortola.

Table 2.

Parameters used, number of iterations and relative errors for different methods for Example 1 where the noisy right-hand side contains 10% of white noise.

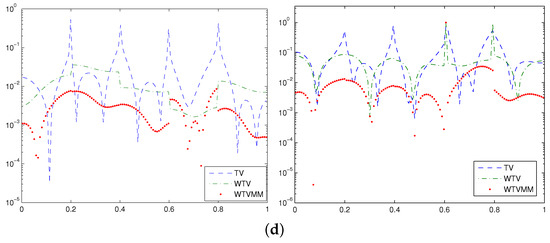

We also tried a one-stage method for solving Example 1. The best numerical solution we obtained by minimizing the function is shown in Figure 3. Comparing Figures 2 and 3, we observe that the WTVMM method gives the best numerical solution.

Figure 3.

The exact solution (circle) and the numerical solution obtained by minimizing (dot) for Example 1 where the noisy right-hand side contains of white noise.

We make the following remarks based on the above numerical results.

(1) For the WTVMM method, the main cost lies in the first stage, i.e., in minimizing the WTV objective function defined by (8).

(2) The WTV method gives better numerical solutions than the TV method. The WTVMM method can give quite accurate numerical solutions, as can be clearly seen from the relative errors in Tables 1 and 2, the numerical solutions in Figure 2c, and the point-wise errors in Figure 2d.

(3) If the first stage identifies the positions of the break points in the solution, the approximate solution obtained by the WTVMM method can be very accurate. On the other hand, since the local minimizer of the simplified MM objective function obtained by the DN method is sensitive to the initial guess, we may not obtain a good numerical solution if the first-stage approximation does not identify the break points well.

To demonstrate the influence of the parameter values on the numerical solutions, Tables 3 and 4 give the relative errors of the three methods for a range of substantially different parameter combinations. The tables show that satisfactory numerical solutions are obtained as long as the parameter values are not too far from the optimal ones. Both β and θ affect the quality of the numerical solutions, and a correct choice of β appears to be more important (see the column corresponding to in Table 3 and the one for in Table 4). In addition, satisfactory numerical solutions can be obtained with several different parameter combinations.

Table 3.

Relative errors for TV (1st element), WTV (2nd element) and WTVMM (3rd element) with different β and θ for Example 1 with the right-hand side containing 1% of white noise.

Table 4.

Relative errors for TV (1st element), WTV (2nd element) and WTVMM (3rd element) with different β and θ for Example 1 with the right-hand side containing 10% of white noise.

Example 2.

(Barcode reading) The Fredholm integral equation can serve as a good approximation of the blurring inside a barcode reader ([1], p. 135). The kernel of the equation is given by:

In our numerical tests, we choose . The intensity of the printed barcode and the exact right-hand side are shown in Figure 4a,b, and two noisy right-hand sides are shown in Figure 4c,d, respectively.

Figure 4.

The exact solution, the right-hand side, and noisy right-hand sides for Example 2. (a) The exact solution; (b) the right-hand side; (c) a noisy right-hand side containing 1% white noise; and (d) a noisy right-hand side containing 10% white noise.

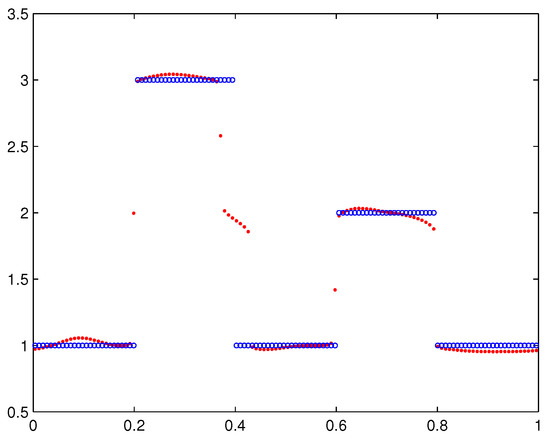

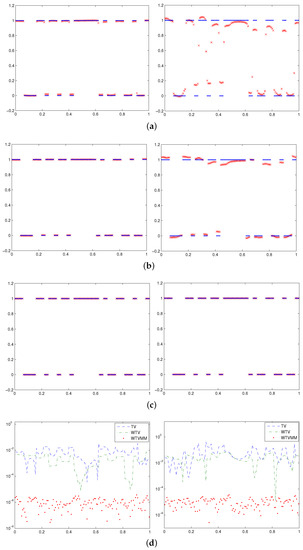

Since the barcode intensity takes only two values, we choose the constant function equal to their average as the initial guess for minimizing the functions corresponding to the TV and WTV functionals. The numerical solutions obtained by the different methods, together with their point-wise errors, are shown in Figure 5. The parameters, numbers of iterations, and relative errors are given in Tables 5 and 6.

Figure 5.

The exact solution (dot), numerical solutions (x) and point-wise errors for the three methods for Example 2 with noisy right-hand sides containing 1% (left) and 10% (right) white noise. (a) Numerical solutions of TV; (b) numerical solutions of WTV; (c) numerical solutions of WTVMM; and (d) point-wise errors of the numerical solutions.

Table 5.

Parameters used, number of iterations and relative errors for different methods for Example 2 where the right-hand side contains 1% of white noise.

Table 6.

Parameters used, number of iterations and relative errors for different methods for Example 2 where the right-hand side contains 10% of white noise.

5. Concluding Remarks

We presented a two-stage numerical method for estimating piecewise-constant solutions of Fredholm integral equations of the first kind. The main computational cost of the method lies in the first stage, i.e., in minimizing the WTV objective function, which may require many iterations. Numerical results showed that, if the relevant parameters are chosen suitably, the proposed method yields satisfactory numerical solutions. In future work, we will study methods for choosing the regularization parameters, including iterative schemes for parameter determination, as well as efficient algorithms for the minimization problems arising in weighted total variation (WTV) methods.

Acknowledgments

We thank the anonymous referees for carefully reading the manuscript and providing valuable comments and suggestions, which helped us to improve the contents and the presentation of the paper. The research was supported by the NSF of China No. 11271238.

Author Contributions

F.R. Lin put forward basic ideas and deduced theoretical results; S.W. Yang designed and carried out numerical experiments; F.R. Lin and S.W. Yang analyzed numerical data; S.W. Yang wrote a draft and F.R. Lin wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hansen, P.C. Discrete Inverse Problems: Insight and Algorithms; SIAM: Philadelphia, PA, USA, 2010.

- Delves, L.M.; Mohamed, J.L. Computational Methods for Integral Equations; Cambridge University Press: Cambridge, UK, 1985.

- Dehghan, M.; Saadatmandi, A. Chebyshev finite difference method for Fredholm integro-differential equation. Int. J. Comput. Math. 2008, 85, 123–130.

- Ghasemi, M.; Babolian, E.; Kajani, M.T. Numerical solution of linear Fredholm integral equations using sine-cosine wavelets. Int. J. Comput. Math. 2007, 84, 979–987.

- Koshev, N.; Beilina, L. An adaptive finite element method for Fredholm integral equations of the first kind and its verification on experimental data. CEJM 2013, 11, 1489–1509.

- Koshev, N.; Beilina, L. A posteriori error estimates for Fredholm integral equations of the first kind. In Applied Inverse Problems, Springer Proceedings in Mathematics and Statistics; Springer: New York, NY, USA, 2013; Volume 48, pp. 75–93.

- Zhang, Y.; Lukyanenko, D.V.; Yagola, A.G. Using Lagrange principle for solving linear ill-posed problems with a priori information. Numer. Methods Progr. 2013, 14, 468–482. (In Russian)

- Vogel, C.R. Computational Methods for Inverse Problems; SIAM: Philadelphia, PA, USA, 2002.

- Tikhonov, A.N. Regularization of incorrectly posed problems. Sov. Math. Dokl. 1964, 4, 1624–1627.

- Hansen, P.C. The truncated SVD as a method for regularization. BIT Numer. Math. 1987, 27, 534–553.

- Hansen, P.C.; Sekii, T.; Shibahashi, H. The modified truncated SVD method for regularization in general form. SIAM J. Sci. Stat. Comput. 1992, 13, 1142–1150.

- Rashed, M.T. Numerical solutions of the integral equations of the first kind. Appl. Math. Comput. 2003, 145, 413–420.

- Lin, S.; Cao, F.; Xu, Z. A convergence rate for approximate solutions of Fredholm integral equations of the first kind. Positivity 2012, 16, 641–652.

- Wang, K.; Wang, Q. Taylor collocation method and convergence analysis for the Volterra-Fredholm integral equations. J. Comput. Appl. Math. 2014, 260, 294–300.

- Neggal, B.; Boussetila, N.; Rebbani, F. Projected Tikhonov regularization method for Fredholm integral equations of the first kind. J. Inequal. Appl. 2016.

- Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Physica D 1992, 60, 259–268.

- Acar, R.; Vogel, C.R. Analysis of bounded variation penalty methods for ill-posed problems. Inverse Probl. 1994, 10, 1217–1229.

- Wang, Y.; Yang, J.; Yin, W.; Zhang, Y. A new alternating minimization algorithm for total variation image reconstruction. SIAM J. Imaging Sci. 2008, 1, 248–272.

- Defrise, M.; Vanhove, C.; Liu, X. An algorithm for total variation regularization in high-dimensional linear problems. Inverse Probl. 2011, 27, 065002.

- Fadili, J.M.; Peyré, G. Total variation projection with first order schemes. IEEE Trans. Image Process. 2011, 20, 657–669.

- Micchelli, C.A.; Shen, L.; Xu, Y. Proximity algorithms for image models: Denoising. Inverse Probl. 2011, 27, 045009.

- Chan, R.H.; Liang, H.X.; Ma, J. Positively constrained total variation penalized image restoration. Adv. Adapt. Data Anal. 2011, 3, 1–15.

- Strong, D.M.; Blomgren, P.; Chan, T.F. Spatially adaptive local feature-driven total variation minimizing image restoration. Proc. SPIE 1997, 222–233.

- Chantas, G.; Galatsanos, N.P.; Molina, R.; Katsaggelos, A.K. Variational Bayesian image restoration with a product of spatially weighted total variation image priors. IEEE Trans. Image Process. 2010, 19, 351–362.

- Chen, Q.; Montesinos, P.; Sun, Q.S.; Heng, P.A.; Xia, D.S. Adaptive total variation denoising based on difference curvature. Image Vision Comput. 2010, 28, 298–306.

- Chopra, A.; Lian, H. Total variation, adaptive total variation and nonconvex smoothly clipped absolute deviation penalty for denoising blocky images. Pattern Recognit. 2010, 43, 2609–2619.

- El Hamidi, A.; Ménard, M.; Lugiez, M.; Ghannam, C. Weighted and extended total variation for image restoration and decomposition. Pattern Recognit. 2010, 43, 1564–1576.

- Hansen, P.C.; Mosegaard, K. Piecewise polynomial solutions to linear inverse problems. Lect. Notes Earth Sci. 1996, 63, 284–294.

- Jin, B.T.; Zou, J. Numerical estimation of piecewise constant Robin coefficient. SIAM J. Control Optim. 2009, 48, 1977–2002.

- Bogosel, B.; Oudet, É. Qualitative and numerical analysis of a spectral problem with perimeter constraint. SIAM J. Control Optim. 2016, 54, 317–340.

- Chan, R.H.; Ng, M.K. Conjugate gradient method for Toeplitz systems. SIAM Rev. 1996, 38, 427–482.

- Groetsch, C.W. Integral equations of the first kind, inverse problems and regularization: A crash course. J. Phys. Conf. Ser. 2007, 73, 012001.

- Goncharskii, A.V.; Leonov, A.S.; Yagola, A.G. A generalized discrepancy principle. USSR Comput. Math. Math. Phys. 1973, 13, 25–37.

- Bakushinsky, A.; Kokurin, M.Y.; Smirnova, A. Iterative Methods for Ill-posed Problems: An Introduction. Inverse and Ill-Posed Problems Series 54. De Gruyter: Berlin, Germany; New York, NY, USA, 2011.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).