Abstract

Few-shot image classification aims to classify unlabeled samples when only a few labeled samples are available for each class. Recently, local feature-based few-shot learning methods have made significant progress. However, existing methods often treat all local descriptors equally, without considering how important each descriptor is in a given task. As a result, the few-shot learning model is easily disturbed by class-irrelevant features, which lowers its accuracy. To address this issue, we propose a task-oriented local feature rectification network (TLFRNet) with two feature rectification modules: a support rectification module and a query rectification module. The former uses the relationship between each local descriptor and the class prototypes of the support set to rectify the support features. The latter uses a CNN to rectify the similarity tensors between the query and support local features and then models the importance of each query local feature. Through these two modules, our model effectively reduces the intra-class variation of class-relevant features and thus obtains more accurate image-to-class similarities for classification. Extensive experiments on five datasets show that TLFRNet achieves better classification performance than related methods.

MSC:

68T07

1. Introduction

Deep learning is a crucial approach to implementing artificial intelligence, and it has reached, and on some benchmarks surpassed, human-level performance in computer vision applications such as object detection [1], semantic segmentation [2], and image classification [3]. However, traditional deep learning relies heavily on large amounts of training data, and collecting and labeling such datasets consumes significant time, money, and manpower. Moreover, in many data-scarce scenarios, such as rare disease diagnosis, recognition of endangered species, and industrial defect detection, obtaining a substantial amount of labeled data is difficult or even impossible. In such cases, training a conventional deep network on the few available samples leads to severe overfitting and a significant decline in performance. In contrast, humans can quickly recognize unfamiliar categories from just a few examples. Inspired by this ability to learn under extremely limited data, many few-shot learning methods [4] have been proposed, with the objective of mimicking the strong human capability to generalize from very limited data.

Among existing methods, metric learning-based few-shot learning [5] has received extensive research attention in recent years due to its simplicity and effectiveness. It consists of two main modules: a feature extraction module and a similarity measurement module. The general idea is to extract features with a feature extraction network, compare similarities based on these features, and assign each sample to the class with the highest similarity. A common approach is to compute a global feature for each image as its image-level representation and then average the features of each class to form a prototype [6]. During prediction, the similarity between the query image and the prototype of each class determines the classification result. However, images generally contain rich local information, and modeling an image with a single global feature may discard valuable details. Therefore, many state-of-the-art methods [7,8,9,10] construct few-shot classifiers from rich local features. Yet these methods often treat all local descriptors equally, without considering the importance of each descriptor in different tasks. In fact, many parts of an image are irrelevant to the task and do not reflect the essential characteristics of the class. Such regions contribute little to classification and can even be harmful. Treating all local features equally in the similarity computation may therefore yield inaccurate similarities that interfere with classification.

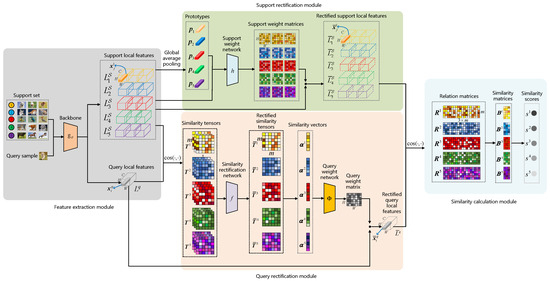

To address these issues, we propose a task-oriented local feature rectification network, shown in Figure 1, that adaptively rectifies local features based on the domain knowledge contained in the specific task. The intuition behind our method is to rectify each local feature by weighting it according to its relevance to the current task. For example, in fine-grained classification tasks, the large intra-class variations of images and their local features often prevent metric learning-based classifiers from obtaining accurate image-to-class distances. By modeling task-dependent feature weights that reduce the intra-class variation of class-relevant features, our model addresses this issue and obtains more accurate image-to-class similarities for classification. The proposed few-shot learning network comprises four modules: a feature extraction module, a support rectification module (SRM), a query rectification module (QRM), and a similarity calculation module. First, the feature extraction module obtains local features for all images. The SRM uses the similarity vectors between support features and class prototypes to characterize the importance of each support feature for the specific task, which enables task-dependent rectification of these features. The QRM models the importance of each local feature of the query image for the current task using the similarity tensors between the query image and all classes. To obtain more accurate similarity tensors, we rectify the initial tensors with a similarity rectification network (SRN). Finally, the similarity calculation module computes the similarity between the query image and each class for classification.

Figure 1.

Pipeline of the TLFRNet for the 5-way 3-shot image classification task.

Our main contributions are summarized as follows:

- We separately design the SRM and the QRM to rectify local features in a task-oriented manner, so that the model focuses on features closely related to the corresponding category while suppressing the influence of irrelevant features.

- We use a similarity rectification network to rectify the similarity tensors, yielding more accurate feature similarities for computing the query local feature weights.

- We conduct extensive experiments on multiple datasets and demonstrate that our method effectively rectifies local features, yielding a significant improvement over related methods.

2. Related Work

To address the few-shot learning problem, many methods have been proposed from different perspectives. They can be mainly categorized into three types: data augmentation, meta-learning, and metric learning.

2.1. Data Augmentation-Based Methods

Data augmentation expands the training data by transforming existing samples or synthesizing new ones, bringing a few-shot learning task closer to a conventional classification task. This can be achieved by applying transformations such as rotation, cropping, and flipping [11] to increase the diversity of the training samples. In addition, synthetic samples can be generated to enlarge the training set and improve the model’s generalization. Representative methods include the delta-encoder [12] and generative adversarial nets [13].
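The basic transformations named above can be sketched in a few lines. This is a minimal, hypothetical illustration that is not tied to any of the cited methods: it treats an image as a nested list of pixel rows and applies a random crop, an optional horizontal flip, and a random number of 90° rotations. Real pipelines would normally use an image library instead, and all function names here are our own.

```python
import random

def hflip(img):
    """Mirror an image (list of pixel rows) left to right."""
    return [row[::-1] for row in img]

def rot90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def random_crop(img, size, rng):
    """Cut a random size x size window out of the image."""
    h, w = len(img), len(img[0])
    top = rng.randrange(h - size + 1)
    left = rng.randrange(w - size + 1)
    return [row[left:left + size] for row in img[top:top + size]]

def augment(img, size, rng):
    """Return one randomly transformed copy of img: crop, maybe flip, rotate."""
    out = random_crop(img, size, rng)
    if rng.random() < 0.5:
        out = hflip(out)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        out = rot90(out)
    return out
```

Calling `augment` repeatedly with the same `random.Random` instance yields different square views of one image, which is how a handful of labeled samples can be stretched into a more diverse training set.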

2.2. Meta-Learning-Based Methods

Meta-learning, also known as learning to learn, acquires knowledge or experience, such as network initialization parameters or network structures, from a large number of previous tasks. The key idea is to exploit this knowledge on new tasks to achieve rapid learning rather than starting from scratch each time. Meta-Learner LSTM [14] uses an LSTM-based meta-learner to learn an optimization algorithm that can be applied to train other machine learning models. MAML [15] is another popular meta-learning method that seeks a model initialization from which the model can achieve good performance on a new task after only a few gradient update steps. Meta-SGD [16] learns not only the initialization parameters but also the update direction and learning rate, enabling more efficient adaptation to new tasks. MetaDiff [17] models the gradient descent process as a diffusion model, which effectively reduces the memory burden and the risk of gradient vanishing in inner-loop optimization.
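To make MAML's two-level optimization concrete, here is a deliberately tiny sketch under our own assumptions. It uses a one-parameter model y = w·x, tasks that differ only in their true slope a_t, a single inner gradient step, and noise-free data, so a task's support and query losses coincide. Because the task loss is quadratic, the exact second-order meta-gradient can be written by hand. The function name and all hyperparameter values are hypothetical.

```python
def maml_scalar(slopes, xs, alpha=0.1, beta=0.05, steps=2000, w0=0.0):
    """Exact (second-order) MAML on the toy model y = w * x.

    Task t has true slope a_t. Its loss is mean((w*x - a_t*x)**2),
    which equals c * (w - a_t)**2 with c = mean(x**2).
    """
    c = sum(x * x for x in xs) / len(xs)
    w = w0  # the shared initialization being meta-learned
    for _ in range(steps):
        meta_grad = 0.0
        for a in slopes:
            # Inner loop: one gradient step on task t, starting from w.
            w_adapted = w - alpha * 2 * c * (w - a)
            # Outer loop: derivative of c * (w_adapted - a)**2 w.r.t. w,
            # using the chain rule through the inner step:
            # d(w_adapted)/dw = 1 - 2 * alpha * c.
            meta_grad += 2 * c * (w_adapted - a) * (1 - 2 * alpha * c)
        w -= beta * meta_grad / len(slopes)
    return w
```

Because all tasks share the same curvature in this toy setting, the learned initialization settles at the mean slope: for slopes 1, 2, and 3 it converges to 2.0.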

2.3. Metric Learning-Based Methods

Metric learning-based methods have attracted much attention due to their simplicity and effectiveness and have become a popular approach to few-shot learning. Their core idea is “learning to compare”: ensuring that samples of the same class are more similar to each other than samples of different classes [18]. Existing methods can be further divided into image-level feature-based methods [6,19] and local feature-based methods [7,10,20,21]. Methods in the first category obtain global features of the query image and of all support images through the global average pooling layer of the feature extraction network, i.e., they map each image to a compact feature vector. A similarity calculation module then computes the similarity between the query image and each class for classification. For example, Matching Net [22] introduces the episodic training mechanism into few-shot learning and enhances the network’s learning capability by combining attention and memory. Prototypical networks [6] assume that each class has a prototype around which its feature vectors cluster. Therefore, the mean of the feature vectors of each class in the support set is taken as the class prototype, and the Euclidean distance between the query image and each prototype is computed for classification. Relation networks [23] use a neural network to measure similarity, avoiding the need to manually select a metric.
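The prototypical-network rule described above (class mean as prototype, nearest prototype by Euclidean distance) can be sketched as follows. This is a minimal illustration on plain Python lists that assumes the embeddings were already produced by some feature extractor; the function names are hypothetical. Following the original prototypical networks, the sketch turns negative squared Euclidean distances into class probabilities with a softmax. Squaring does not change which prototype is nearest.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of equally long vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def protonet_probs(support, query):
    """support: dict mapping class label -> list of K embedding vectors.

    Returns a dict of class probabilities for the query embedding."""
    prototypes = {label: mean_vector(vecs) for label, vecs in support.items()}
    logits = {label: -squared_distance(query, p) for label, p in prototypes.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(v - top) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

def protonet_predict(support, query):
    """Label of the most probable (i.e., nearest) prototype."""
    probs = protonet_probs(support, query)
    return max(probs, key=probs.get)
```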

Due to the scarcity of samples in few-shot tasks, using only image-level global features may lose a considerable amount of discriminative information and fail to model the distribution of each category effectively. In contrast, rich local features have greater discriminative and representational power, and recent research on local features has made significant progress. For instance, DN4 [7] extracts local features from the query and support images and then, for each class, aggregates the similarities between every local descriptor of the query image and its nearest descriptors in that class to obtain the image-to-class similarity. DeepEMD [8] solves a linear programming problem to obtain the optimal structural distance between the local features of different images. In LMPNet [9], the authors argued that a single prototype may not sufficiently reflect the feature distribution of a class and used channel squeezing and spatial attention mechanisms to adaptively learn multiple prototypes for each support class. By integrating semantic information into the implicit feature transformation process, SIFT [24] helps the model better understand the intrinsic meaning of samples and reduces excessive deviation in feature representations.

3. Task-Oriented Local Feature Rectification Network

3.1. Problem Description

In the standard definition of a few-shot learning task, a support set $\mathcal{S}=\{(X_i, y_i)\}_{i=1}^{N\times K}$ contains N classes, each with K labeled images. Here, $X_i$ represents the i-th image of $\mathcal{S}$, and $y_i$ is its label. Given a query set $\mathcal{Q}=\{X_j^q\}$, where $X_j^q$ represents the j-th image in $\mathcal{Q}$, our objective is to classify each image in the query set with the assistance of $\mathcal{S}$. This constitutes an N-way K-shot task in few-shot learning. Because the support set contains so few samples, traditional deep learning methods that treat the support set as the training set and the query set as the test set are prone to overfitting. Therefore, few-shot learning commonly adopts an episode-based training mechanism that simulates the few-shot scenario. To this end, we use an auxiliary dataset $\mathcal{A}$ to learn transferable knowledge. $\mathcal{A}$ contains a large number of classes, each with numerous labeled images, and its label space does not overlap that of $\mathcal{S}$. We construct numerous N-way K-shot auxiliary tasks from $\mathcal{A}$ to help the model acquire cross-task generalization ability. Each such task is also referred to as an episode [22].
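The episodic protocol can be sketched as follows. Each episode draws N classes from the auxiliary dataset, then K support samples and some query samples per class. The chosen classes are relabeled 0..N-1 so that labels carry no meaning across episodes. The dictionary-based dataset layout, the per-class query count `n_query`, and the function name are assumptions made for this illustration.

```python
import random

def sample_episode(dataset, n_way, k_shot, n_query, rng):
    """Draw one N-way K-shot episode from an auxiliary dataset.

    dataset: dict mapping class name -> list of samples.
    Returns (support, query), two lists of (sample, episode_label) pairs,
    where episode labels are re-indexed to 0..n_way-1.
    """
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for label, cls in enumerate(classes):
        # Draw support and query samples without replacement so they never overlap.
        picked = rng.sample(dataset[cls], k_shot + n_query)
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return support, query
```

Training then loops over many such episodes, so the model repeatedly practices the same N-way K-shot problem it will face at test time.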

3.2. The Proposed TLFRNet Framework

The overall framework of TLFRNet is illustrated in Figure 1. It mainly consists of four components: the feature extraction module, the support rectification module, the query rectification module, and the similarity calculation module. Following convention, the feature extraction module is composed of a common CNN, which extracts rich local features from both support and query images. Subsequently, the two rectification modules characterize the importance of the learned local features and adaptively rectify them to obtain the final local features. Finally, the similarity calculation module computes the similarity between the query image and each class.

3.3. Feature Extraction Module

In the feature extraction module, we use a convolutional neural network to extract local features from images. Notably, this network contains only convolutional layers and no fully connected layers. Given an image X, the feature extraction network $f_\theta$, where $\theta$ denotes the network parameters, maps it to a tensor $f_\theta(X)\in\mathbb{R}^{H\times W\times C}$, where H and W denote the height and width and C denotes the number of channels. Letting $m = HW$, $f_\theta(X)$ can equivalently be regarded as a collection of m C-dimensional vectors. As in [7,25], these m vectors are regarded as local descriptors. Each local descriptor $\mathbf{x}\in\mathbb{R}^{C}$ encapsulates the feature information of a small region of the image. For an N-way K-shot task, the feature extraction network maps the K images of the n-th class in the support set, denoted as $\{X_1^n,\dots,X_K^n\}$, to a collection of local descriptors $\mathcal{D}_n$:

$$\mathcal{D}_n = \left\{\mathbf{x}_1^n, \mathbf{x}_2^n, \dots, \mathbf{x}_{mK}^n\right\},$$

where $\mathbf{x}_j^n$ represents the j-th local descriptor of class n. In the same way, a query image $X^q$ is mapped by $f_\theta$ to a set of m local descriptors $\mathcal{D}_q$:

$$\mathcal{D}_q = \left\{\mathbf{q}_1, \mathbf{q}_2, \dots, \mathbf{q}_m\right\}.$$
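Reading a feature map as a set of local descriptors is just a reshape. The sketch below uses nested lists laid out as H × W × C (an assumption made for readability). It flattens each map into m = H·W descriptors in row-major order and pools the K support images of one class into a single set of mK descriptors, as described above. Function names are hypothetical.

```python
def to_local_descriptors(feature_map):
    """feature_map: nested list of shape H x W x C.

    Returns the m = H*W local descriptors (each a C-dim list), row-major."""
    return [list(pixel) for row in feature_map for pixel in row]

def class_descriptor_set(feature_maps):
    """Pool the descriptors of the K support images of one class (K*m vectors)."""
    return [d for fm in feature_maps for d in to_local_descriptors(fm)]
```

In PyTorch, where feature maps are usually laid out as (batch, C, H, W), the same reshape can be written as `feat.flatten(2).transpose(1, 2)`, which yields a (batch, H*W, C) tensor.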

3.4. Support Rectification Module

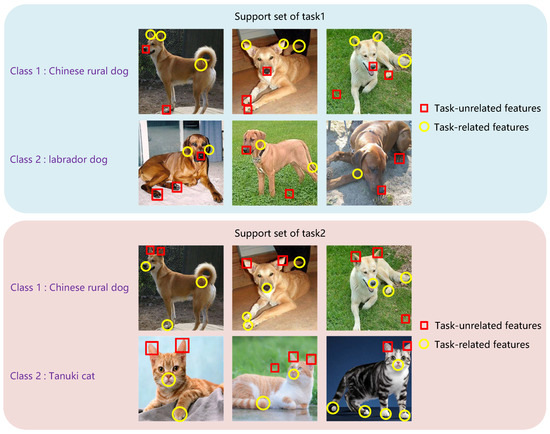

In many previous works [7,8], all local descriptors were treated as equally important. In fact, not all local descriptors in the support set are equally valuable. As shown in Figure 2, distinctive, task-related local descriptors, such as a dog’s ears in task 1 and a dog’s nose in task 2, are crucial because they contribute significantly to classification. In contrast, local descriptors unrelated to the category, such as the background, or the dog’s nose in task 1 and the dog’s ears in task 2, are less important and often interfere with classification. To address this problem, our SRM uses a neural network to adaptively generate support weight matrices within the support set, emphasizing discriminative local descriptors closely related to the category and suppressing the influence of class-unrelated ones. In our view, the discriminative quality of a support descriptor depends on a comprehensive evaluation of its connection with all classes, which can be quantified by feature-to-class similarities. We use the mean of the local descriptors of each class as its prototype; for the n-th class, with local descriptors $\mathbf{x}_1^n,\dots,\mathbf{x}_{mK}^n$, the prototype $\mathbf{c}_n$ is computed as follows:

$$\mathbf{c}_n = \frac{1}{mK}\sum_{j=1}^{mK}\mathbf{x}_j^n.$$

Figure 2.

Considering a 2-way 3-shot scenario.

Therefore, we obtain the set of N prototypes: . Each prototype encapsulates unique information about that class. We model the class relevance of a local descriptor from the perspective of similarity with each class prototype. For a local descriptor from the support set of the n-th class, we calculate its cosine similarity with each prototype, resulting in a similarity vector :

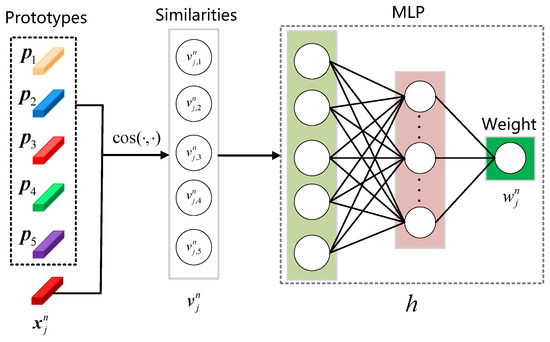

where represents the cosine similarity function. Subsequently, the similarity vector is input into a fully connected neural network (support weight network) to obtain the weight values for the local descriptor, as illustrated in Figure 3. Therefore, we can obtain the support weight matrices for each class in the support set . Then, for the n-th class, we multiply each of its local descriptors by its corresponding weight values to obtain the rectified support local descriptors :

where represents the j-th rectified local descriptor for the n-th class. In this way, we can enhance important features that are highly relevant to specific classes, while diminishing interfering features that are unrelated to those classes.

Figure 3.

Illustration of the weight generation for a support local descriptor.
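The SRM steps (class prototypes, per-descriptor similarity vectors, a learned weight, and re-weighting) can be sketched as below. This is a hedged illustration, not the authors' code. The support weight network is replaced by a hypothetical single linear layer followed by a sigmoid, its parameters are passed in by hand rather than learned, and each descriptor is assumed to receive one scalar weight.

```python
import math

def cosine(a, b, eps=1e-8):
    """Cosine similarity of two vectors (eps guards against zero norms)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)

def class_prototypes(class_sets):
    """class_sets[n] holds the K*m descriptors of class n; return their means."""
    return [[sum(col) / len(descs) for col in zip(*descs)] for descs in class_sets]

def support_weight(sim_vec, params, bias):
    """Hypothetical stand-in for the support weight network: N inputs -> 1 weight."""
    z = sum(p * s for p, s in zip(params, sim_vec)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid keeps the weight in (0, 1)

def rectify_support(class_sets, params, bias):
    """Scale every support descriptor by a weight computed from its
    cosine similarities to all N class prototypes."""
    protos = class_prototypes(class_sets)
    rectified = []
    for descs in class_sets:
        out = []
        for d in descs:
            sim_vec = [cosine(d, c) for c in protos]  # length-N similarity vector
            w = support_weight(sim_vec, params, bias)
            out.append([w * x for x in d])
        rectified.append(out)
    return rectified
```

With all-zero parameters every weight equals sigmoid(0) = 0.5, so every descriptor is simply halved. Non-trivial weights arise only once the parameters are trained.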

3.5. Query Rectification Module

The SRM rectifies each support local descriptor by modeling its class relevance through similarities. However, relying solely on the SRM is insufficient, because query images also contain many class-unrelated regions that may interfere with classification. To address this issue, we design a QRM to rectify the query image features, as shown in Figure 1. Specifically, we calculate the cosine similarities between the query local descriptors and the support local descriptors. For the K images of the n-th class in the support set, we construct a similarity tensor $T^n\in\mathbb{R}^{K\times m\times m}$, whose element at the k-th channel, i-th row, and j-th column is computed as follows:

$$T^n_{k,i,j} = \cos\left(\mathbf{q}_i, \mathbf{x}_{k,j}^n\right).$$

This is the cosine similarity between the i-th local descriptor $\mathbf{q}_i$ of the query image and the j-th local descriptor of the k-th support image of the n-th class, where $k\in\{1,\dots,K\}$, $i,j\in\{1,\dots,m\}$, and $\mathbf{x}_{k,j}^n$ denotes the j-th local descriptor of the k-th image of the n-th class in the support set. Clearly, $T^n$ encapsulates the relationships between the query local descriptors and the support local descriptors of the n-th class.
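Building the K × m × m similarity tensor for one class amounts to one cosine similarity per (support image, query descriptor, support descriptor) triple. A minimal list-based sketch, with hypothetical names, is shown below. The first index is the support image (channel), the second the query descriptor (row), and the third the support descriptor (column), as in the text.

```python
import math

def cosine(a, b, eps=1e-8):
    """Cosine similarity of two vectors (eps guards against zero norms)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)

def similarity_tensor(query_descs, support_images):
    """query_descs: the m query descriptors.
    support_images: K lists, each holding the m descriptors of one support
    image of class n. Returns T with T[k][i][j] = cos(q_i, x_{k,j})."""
    return [[[cosine(q, x) for x in image] for q in query_descs]
            for image in support_images]
```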

The initial similarity tensor is generally inaccurate. Because the local features of an image are highly correlated in adjacent regions, the similarity matrix of local features exhibits the same property. We can therefore leverage the ability of convolutional neural networks to integrate local neighborhood information and rectify the similarity matrix. First, each of the N similarity tensors undergoes cross-channel pooling, which reduces its number of channels from K to 1. Then, a convolutional kernel is applied to correct the pooled results, yielding N rectified similarity tensors. The rectified similarity tensor between the query image and the n-th class, $\hat{T}^n\in\mathbb{R}^{m\times m}$, is obtained from the initial tensor $T^n$ as

$$\hat{T}^n = \mathrm{Conv}\left(\mathrm{Pool}\left(T^n\right)\right),$$

where $\mathrm{Pool}(\cdot)$ denotes cross-channel pooling and $\mathrm{Conv}(\cdot)$ denotes the convolution.

Next, the top-k values in each row of $\hat{T}^n$ are selected to form a matrix $\tilde{T}^n\in\mathbb{R}^{m\times k}$. Summing each row of $\tilde{T}^n$ gives a similarity vector $\mathbf{v}^n\in\mathbb{R}^{m}$, whose i-th entry represents the similarity between the i-th local descriptor of the query image and the n-th class:

$$v^n_i = \sum_{l=1}^{k}\tilde{T}^n_{i,l}.$$

Repeating this process for all N similarity tensors yields N similarity vectors $\mathbf{v}^1,\dots,\mathbf{v}^N$.
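The pooling, convolutional rectification, and top-k row summation can be sketched as follows. The text above does not pin down the pooling type or the kernel size. This sketch therefore assumes mean pooling over the K channels and a single odd-sized square kernel applied with zero padding, so that the m × m size is preserved. In the actual model the kernel would be learned. All function names are hypothetical.

```python
def cross_channel_mean(tensor):
    """Average a K x m x m tensor over its K channels -> m x m matrix."""
    K = len(tensor)
    rows, cols = len(tensor[0]), len(tensor[0][0])
    return [[sum(ch[i][j] for ch in tensor) / K for j in range(cols)]
            for i in range(rows)]

def conv2d_same(mat, kernel):
    """Zero-padded 2D cross-correlation (what deep-learning 'convolution'
    layers compute) with an odd-sized square kernel; output size = input size."""
    r = len(kernel) // 2
    rows, cols = len(mat), len(mat[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < rows and 0 <= jj < cols:  # zero padding
                        acc += kernel[di + r][dj + r] * mat[ii][jj]
            out[i][j] = acc
    return out

def row_topk_sum(mat, k):
    """Sum of the k largest entries of each row -> vector of length m."""
    return [sum(sorted(row, reverse=True)[:k]) for row in mat]

def query_to_class_vector(tensor, kernel, k):
    """Similarity of every query descriptor to one class."""
    return row_topk_sum(conv2d_same(cross_channel_mean(tensor), kernel), k)
```

With an identity kernel (a single 1 in the center) the convolution leaves the pooled matrix unchanged, which makes the effect of a learned kernel easy to compare against.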

In the QRM, the weight of the i-th query local descriptor $\mathbf{q}_i$ is determined by jointly considering its similarities with all N classes. These N similarities $v^1_i,\dots,v^N_i$ (the i-th entries of the N similarity vectors) are concatenated into a vector of length N, which is then input into a fully connected neural network to obtain the weight $u_i$ of $\mathbf{q}_i$. The weights of all query local descriptors form the query weight matrix.

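Finally, each local descriptor of the query image is multiplied by its corresponding weight, yielding the rectified query local descriptors $\hat{\mathcal{D}}_q$:

$$\hat{\mathcal{D}}_q = \left\{\hat{\mathbf{q}}_1,\dots,\hat{\mathbf{q}}_m\right\}, \qquad \hat{\mathbf{q}}_i = u_i\,\mathbf{q}_i,$$

where $\hat{\mathbf{q}}_i$ represents the i-th rectified local descriptor and $u_i$ is the weight of the i-th query local descriptor $\mathbf{q}_i$.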
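The query weighting can be sketched in the same spirit: for each query descriptor, gather its N class similarities, map them to a scalar, and rescale the descriptor. As on the support side, the fully connected network is replaced here by a hypothetical single linear layer with a sigmoid whose parameters are supplied by hand, and one scalar weight per descriptor is assumed.

```python
import math

def query_weights(class_vectors, params, bias):
    """class_vectors[n][i]: similarity of query descriptor i to class n.

    Returns one weight per query descriptor (the query weight matrix, flattened)."""
    weights = []
    for sims in zip(*class_vectors):  # sims = (v^1_i, ..., v^N_i)
        z = sum(p * s for p, s in zip(params, sims)) + bias
        weights.append(1.0 / (1.0 + math.exp(-z)))  # sigmoid
    return weights

def rectify_query(query_descs, weights):
    """Scale each query descriptor by its weight."""
    return [[u * x for x in q] for q, u in zip(query_descs, weights)]
```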

3.6. Similarity Calculation Module

After the joint action of the SRM and QRM, all local image features have been adaptively rectified by the neural networks. The next stage computes similarities and performs classification based on these features. The rectified support local descriptors and the rectified query local descriptors are both input into the similarity calculation module, which uses the widely adopted k-nearest neighbors (KNN) strategy to compute the similarity between the query image and each class. In simple terms, for each local descriptor of the query image, we find its k nearest local descriptors in each class; the sum of the similarities between all query local descriptors and their respective k nearest neighbors then gives the similarity to that class. Specifically, for the n-th class, we construct a relation matrix $R^n\in\mathbb{R}^{m\times mK}$, whose element $R^n_{i,j}$ at the i-th row and j-th column is the similarity between the i-th rectified local descriptor of the query image and the j-th rectified local descriptor of the n-th class. Subsequently, the top-k values in each row of $R^n$ form a matrix $\tilde{R}^n\in\mathbb{R}^{m\times k}$, and the sum of all elements of $\tilde{R}^n$ yields the final similarity score between the query image $X^q$ and the n-th class:

$$\mathrm{Sim}(X^q, n) = \sum_{i=1}^{m}\sum_{l=1}^{k}\tilde{R}^n_{i,l}.$$

During classification, the query image is assigned to the class with the highest similarity score. For training, we use the softmax function to calculate the probability that the query image belongs to class c:

$$P(y = c \mid X^q) = \frac{\exp\left(\mathrm{Sim}(X^q, c)\right)}{\sum_{n=1}^{N}\exp\left(\mathrm{Sim}(X^q, n)\right)}.$$

The cross-entropy loss for this query image is

$$\mathcal{L} = -\log P\left(y = y^q \mid X^q\right),$$

where $y^q$ is the label of the query image $X^q$.
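The image-to-class score, softmax, and cross-entropy loss can be sketched as below. The text does not state which descriptor-level similarity fills the relation matrix, so this sketch assumes a plain inner product. Under cosine similarity, a positive scalar weight on either descriptor would cancel out and the rectification weights would not affect the score. Function names are hypothetical, and the default k = 3 mirrors the value used in the experiments.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def image_to_class_score(query_descs, class_descs, k):
    """Sum, over query descriptors, of their k largest similarities to the class."""
    score = 0.0
    for q in query_descs:
        sims = sorted((dot(q, x) for x in class_descs), reverse=True)
        score += sum(sims[:k])  # the k nearest neighbours of this descriptor
    return score

def class_probabilities(query_descs, support_sets, k=3):
    """Softmax over the N image-to-class scores."""
    scores = [image_to_class_score(query_descs, descs, k) for descs in support_sets]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, label):
    """Negative log-probability of the true class."""
    return -math.log(probs[label])
```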

4. Performance Evaluation

4.1. Experimental Settings

Datasets: We selected five widely used few-shot learning datasets to evaluate our method: miniImageNet [22], tieredImageNet, CUB-200 [26], Stanford Dogs [27], and Stanford Cars [28]. The dataset splits follow DN4 [7] and SANet [25].

Network architecture: For a fair comparison, we adopted two network architectures, Conv-64F and ResNet12 [29], that are commonly used in the few-shot learning literature [7,9,17,30,31].

Implementation details: All our experiments were conducted using the PyTorch 2.0.1 framework on an NVIDIA RTX 4090 GPU. We performed the standard 5-way 1-shot and 5-way 5-shot tasks on the five datasets above. Other implementation details follow DN4 [7]. We set the KNN hyperparameter k to 3 in our experiments.

Competing methods: We compared our TLFRNet with a range of metric learning-based methods: Matching Net [22], Prototypical Net [6], Relation Net [23], GNN [32], CovaMNet [33], and LMPNet [9]. Given the close relevance of our method to feature alignment, we also compared it with recent works related to feature alignment: SAML [34], ATL-Net [35], MATANet [36], DLDA [37], DeepEMD [8], SAPENet [38], MADN4 [21], DMN4 [10], and SANet [25]. Additionally, we compared our method with two classic meta-learning-based approaches: Meta-Learner LSTM [14] and MAML [15].

4.2. Experimental Results on miniImageNet and tieredImageNet

On the miniImageNet and tieredImageNet datasets, we used Conv-64F as the feature extraction network for 5-way 1-shot and 5-way 5-shot experiments, and Table 1 compares the results with those of other methods. The methods that use local descriptors clearly outperform those relying on global features. The feature alignment-related methods SAML [34], ATL-Net [35], MATANet [36], DLDA [37], SANet [25], and DMN4 [10] perform better than the methods without feature alignment, namely CovaMNet [33], DN4 [7], and LMPNet [9]. On miniImageNet, our method improves accuracy over DN4 [7] by 3.93% and 3.58% in the 5-way 1-shot and 5-way 5-shot settings, respectively. Compared with recent related methods, our approach outperforms SAML [34], ATL-Net [35], MATANet [36], DLDA [37], and SANet [25] by 2.95%/8.11%, 0.87%/1.38%, 1.54%/1.93%, 1.97%/2.84%, and 2.58%/3.14% in the 1-shot/5-shot settings, respectively. The performance of our method is very close to that of TALDS-Net [39].

Table 1.

Classification accuracy (%) on the miniImageNet and tieredImageNet datasets. * denotes results reported in the original works, and † denotes results reported in DMN4 [10].

4.3. Experiments on Three Fine-Grained Datasets

Fine-grained few-shot classification is highly challenging: each class has only a few samples, and the data exhibit large intra-class variations together with small inter-class differences. To validate our model on fine-grained few-shot classification, we conducted 5-way 1-shot and 5-way 5-shot experiments with Conv-64F as the feature extraction network on three fine-grained datasets. The results are presented in Table 2. Our approach outperforms all other methods on CUB-200 and achieves results comparable to MADN4 [21] on Stanford Cars. This suggests that, in fine-grained few-shot classification, our method generates accurate weights for local descriptors, emphasizing discriminative features closely related to the categories while suppressing the influence of irrelevant features.

Table 2.

Classification accuracy (%) on three fine-grained datasets. * denotes results reported in the original works, and † denotes results reported in [7,41,42].

4.4. Comparison with Other Feature Extraction Networks

To further validate our model with a different feature extraction network, we also used ResNet12, which is widely used in few-shot learning, for 5-way 1-shot and 5-way 5-shot experiments on miniImageNet. The results are shown in Table 3. Using the deeper ResNet12 as the feature extraction network yields higher accuracy than using Conv-64F. Compared with SANet [25], our method improves accuracy by 6.00% and 2.03% in the 5-way 1-shot and 5-way 5-shot settings, respectively, and its accuracy is comparable to that of MATANet [36].

Table 3.

Classification accuracy (%) on miniImageNet using ResNet12 as the feature extraction network. * denotes the results from the original work.

4.5. Ablation Experiments

To verify the effectiveness of the designed SRM, QRM, and SRN, we conducted ablation experiments on the miniImageNet, Stanford Dogs, Stanford Cars, and CUB-200 datasets. Specifically, we sequentially removed the QRM, the SRM, and the SRN within the QRM from TLFRNet. Table 4 reports the accuracies of the resulting models. Removing any module degrades accuracy to varying degrees, indicating that the SRM, QRM, and SRN all contribute to the performance of TLFRNet.

Table 4.

The results of the ablation experiments on four datasets.

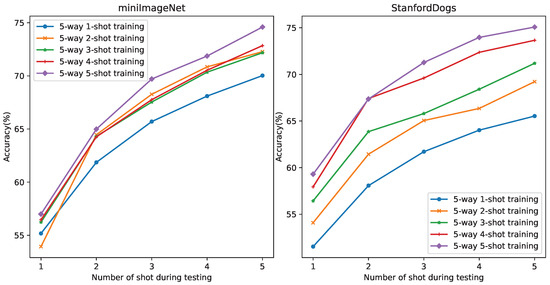

For convenience, few-shot learning experiments usually assume that the number of shots used in training equals the number used in testing. In many real-world scenarios, however, this consistency is difficult to guarantee. To investigate how our model performs when the numbers of training and testing shots differ, we conducted a series of experiments on the miniImageNet and Stanford Dogs datasets. The results, shown in Figure 4, indicate that regardless of the number of shots used in training, the model’s accuracy increases as the number of testing shots increases.

Figure 4.

Performance when the number of shots is inconsistent between training and testing.

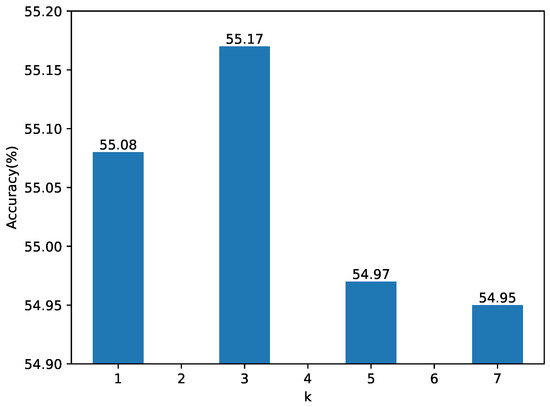

To investigate the sensitivity of our model to the KNN hyperparameter k, we conducted 5-way 1-shot experiments on miniImageNet with different values of k. The results are shown in Figure 5. The model performs best when k = 3, indicating that k does affect performance; in practical applications, an appropriate value should therefore be selected for the specific task.

Figure 5.

Performance as the KNN hyperparameter k varies.

4.6. Computational Complexity

Compared with existing local feature-based methods, the main computational cost of TLFRNet comes from computing the support weight matrices in the SRM, the query weight matrices in the QRM, and the relation matrices in the similarity calculation module. In the SRM, the similarities between the NKm support descriptors and the N prototypes are computed to construct the support weights, with a complexity of $O(N^2KmC)$, where C is the descriptor dimension. In the QRM, the similarities between the m query descriptors and the NKm support descriptors are computed to construct the query weights, with a complexity of $O(NKm^2C)$. The similarity calculation module of TLFRNet works like the classification process of DN4 [7], with a complexity of $O(NKm^2C)$. Compared with DN4, our model therefore requires an additional computational overhead of $O(N^2KmC + NKm^2C)$. This cost is lower than that of some other local feature-based methods. For example, DeepEMD [8] needs to solve a linear programming problem whose time complexity is cubic in the number of local features, and LMPNet [9] uses a multi-prototype strategy that also significantly increases the computational cost.
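The dominant pairwise-similarity counts behind these complexities can be tallied directly. The sketch below counts descriptor-level similarity evaluations, each costing O(C), for one episode with a single query image. It ignores the small fully connected networks and the convolution. The example size m = 441 (a 21 × 21 feature map) and the function name are illustrative assumptions.

```python
def similarity_counts(n_way, k_shot, m):
    """Count descriptor-pair similarity evaluations for one query image.

    srm: every support descriptor against every class prototype
         (computed once per episode and shared by all queries).
    qrm: every query descriptor against every support descriptor.
    knn: the relation matrices of the similarity calculation module.
    """
    support_descriptors = n_way * k_shot * m
    return {
        "srm": support_descriptors * n_way,
        "qrm": m * support_descriptors,
        "knn": m * support_descriptors,
    }
```

For a 5-way 1-shot episode with m = 441, this gives 11,025 evaluations for the SRM and 972,405 each for the QRM and the relation matrices, so in such settings the QRM term dominates the extra cost.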

5. Conclusions

This paper proposes a task-oriented local feature rectification network with two feature rectification modules (SRM and QRM) for few-shot image classification. By using neural networks to model the relationships between local feature similarities in a specific task, it generates adaptive weights for each local descriptor of the support and query images. In addition, a convolutional neural network is introduced to rectify the similarity tensors. Extensive experiments on five datasets demonstrate that the proposed method effectively rectifies local features and significantly outperforms the baseline methods. However, our method focuses solely on local features and ignores global features. In future work, we will explore fusing local and global features and incorporating the uncertainty [46] in the data and model caused by data scarcity to further improve the accuracy of few-shot learning.

Author Contributions

Methodology, P.L.; software, X.Z.; validation, P.L.; formal analysis, P.L.; investigation, X.Z.; resources, P.L.; data curation, X.Z.; writing—original draft preparation, X.Z.; writing—review and editing, P.L.; visualization, X.Z.; supervision, P.L.; project administration, P.L.; funding acquisition, P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the National Natural Science Foundation of China under grant number 62006126 and the Natural Science Foundation of Jiangsu Province under grant number BK20200740.

Data Availability Statement

The datasets and code used in this study are publicly available. The source code for the proposed model can be accessed at https://github.com/zxCode6/TLFRNet (accessed on 4 May 2025). The datasets used for evaluation can be downloaded at https://github.com/zxCode6/TLFRNet. If any additional information is required, the authors can be contacted for further details.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lin, Z.; Wang, Y.; Zhang, J.; Chu, X. DynamicDet: A unified dynamic architecture for object detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 6282–6291.

- Nirkin, Y.; Wolf, L.; Hassner, T. HyperSeg: Patch-wise hypernetwork for real-time semantic segmentation. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4061–4070.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Luo, X.; Wu, H.; Zhang, J.; Gao, L.; Xu, J.; Song, J. A closer look at few-shot classification again. In Proceedings of the International Conference on Machine Learning (ICML), Honolulu, HI, USA, 23–29 July 2023; pp. 23103–23123. [Google Scholar]

- Zhou, F.; Wang, P.; Zhang, L.; Wei, W.; Zhang, Y. Revisiting prototypical network for cross domain few-shot learning. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 20061–20070. [Google Scholar]

- Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-shot Learning. In Proceedings of the Thirty-First Annual Conference on Neural Information Processing Systems, NeurIPS, Long Beach, CA, USA, 4–9 December 2017; pp. 4077–4087. [Google Scholar]

- Li, W.; Wang, L.; Xu, J.; Huo, J.; Gao, Y.; Luo, J. Revisiting local descriptor based image-to-class measure for few-shot learning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7260–7268. [Google Scholar]

- Zhang, C.; Cai, Y.; Lin, G.; Shen, C. Deepemd: Few-shot image classification with differentiable earth mover’s distance and structured classifiers. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12203–12213. [Google Scholar]

- Huang, H.; Wu, Z.; Li, W.; Huo, J.; Gao, Y. Local descriptor-based multi-prototype network for few-shot learning. Pattern Recognit. 2021, 116, 107935. [Google Scholar] [CrossRef]

- Liu, Y.; Zheng, T.; Song, J.; Cai, D.; He, X. Dmn4: Few-shot learning via discriminative mutual nearest neighbor neural network. In Proceedings of the Thirty-Sixth AAAI Conference on Artificial Intelligence (AAAI), Virtual, 22 February–1 March 2022; Volume 36, pp. 1828–1836. [Google Scholar]

- Qi, H.; Brown, M.; Lowe, D.G. Low-shot learning with imprinted weights. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5822–5830. [Google Scholar]

- Schwartz, E.; Karlinsky, L.; Shtok, J.; Harary, S.; Marder, M.; Kumar, A.; Feris, R.; Giryes, R.; Bronstein, A. Delta-encoder: An effective sample synthesis method for few-shot object recognition. In Proceedings of the Conference on Neural Information Processing Systems (NeurIPS), Montréal, QC, Canada, 3–8 December 2018; pp. 2850–2860. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Ravi, S.; Larochelle, H. Optimization as a model for few-shot learning. In Proceedings of the 5th International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

- Finn, C.; Abbeel, P.; Levine, S. Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the International Conference on Machine Learning (ICML), Sydney, Australia, 6–11 August 2017; pp. 1126–1135.

- Li, Z.; Zhou, F.; Chen, F.; Li, H. Meta-SGD: Learning to learn quickly for few-shot learning. arXiv 2017, arXiv:1707.09835.

- Zhang, B.; Luo, C.; Yu, D.; Li, X.; Lin, H.; Ye, Y.; Zhang, B. MetaDiff: Meta-learning with conditional diffusion for few-shot learning. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; pp. 16687–16695.

- Zhu, H.; Koniusz, P. Transductive few-shot learning with prototype-based label propagation by iterative graph refinement. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 23996–24006.

- Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese neural networks for one-shot image recognition. In Proceedings of the ICML Deep Learning Workshop, Lille, France, 6–11 July 2015.

- Lee, S.; Moon, W.; Seong, H.S.; Heo, J.P. Task-Oriented Channel Attention for Fine-Grained Few-Shot Classification. arXiv 2023, arXiv:2308.00093.

- Li, H.; Yang, L.; Gao, F. More attentional local descriptors for few-shot learning. In Proceedings of the 29th International Conference on Artificial Neural Networks (ICANN), Bratislava, Slovakia, 15–18 September 2020; pp. 419–430.

- Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; Wierstra, D. Matching Networks for One Shot Learning. In Proceedings of the Annual Conference on Neural Information Processing Systems (NeurIPS), Barcelona, Spain, 5–10 December 2016; pp. 3630–3638.

- Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1199–1208.

- Pan, M.H.; Xin, H.Y.; Shen, H.B. Semantic-based implicit feature transform for few-shot classification. Int. J. Comput. Vis. 2024, 132, 5014–5029.

- Li, P.; Song, Q.; Chen, L.; Zhang, L. Local feature semantic alignment network for few-shot image classification. Multimed. Tools Appl. 2024, 83, 69489–69509.

- Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S. The Caltech-UCSD Birds-200-2011 Dataset; Technical Report CNS-TR-2011-001; California Institute of Technology: Pasadena, CA, USA, 2011.

- Khosla, A.; Jayadevaprakash, N.; Yao, B.; Li, F.F. Novel dataset for fine-grained image categorization: Stanford dogs. In Proceedings of the CVPR Workshop on Fine-Grained Visual Categorization (FGVC), Colorado Springs, CO, USA, 20–25 June 2011.

- Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3D object representations for fine-grained categorization. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, Sydney, NSW, Australia, 2–8 December 2013; pp. 554–561.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Zheng, Z.; Feng, X.; Yu, H.; Li, X.; Gao, M. BDLA: Bi-directional local alignment for few-shot learning. Appl. Intell. 2023, 53, 769–785.

- Trosten, D.J.; Chakraborty, R.; Løkse, S.; Wickstrøm, K.K.; Jenssen, R.; Kampffmeyer, M.C. Hubs and hyperspheres: Reducing hubness and improving transductive few-shot learning with hyperspherical embeddings. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7527–7536.

- Satorras, V.G.; Estrach, J.B. Few-shot learning with graph neural networks. In Proceedings of the 6th International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–13.

- Li, W.; Xu, J.; Huo, J.; Wang, L.; Gao, Y.; Luo, J. Distribution consistency based covariance metric networks for few-shot learning. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI), Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8642–8649.

- Hao, F.; He, F.; Cheng, J.; Wang, L.; Cao, J.; Tao, D. Collect and select: Semantic alignment metric learning for few-shot learning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8460–8469.

- Dong, C.; Li, W.; Huo, J.; Gu, Z.; Gao, Y. Learning task-aware local representations for few-shot learning. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence (IJCAI), Montreal, QC, Canada, 19–27 August 2021; pp. 716–722.

- Chen, H.; Li, H.; Li, Y.; Chen, C. Multi-scale adaptive task attention network for few-shot learning. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 4765–4771.

- Song, Q.; Zhou, S.; Xu, L. Learning More Discriminative Local Descriptors for Few-shot Learning. arXiv 2023, arXiv:2305.08721.

- Huang, X.; Choi, S.H. SAPENet: Self-attention based prototype enhancement network for few-shot learning. Pattern Recognit. 2023, 135, 109170.

- Qiao, Q.; Xie, Y.; Zeng, Z.; Li, F. TALDS-Net: Task-aware adaptive local descriptors selection for few-shot image classification. In Proceedings of the 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 3750–3754.

- Wang, M.; Yan, B. LDCA: Local Descriptors with Contextual Augmentation for Few-Shot Learning. arXiv 2024, arXiv:2401.13499.

- Huang, H.; Zhang, J.; Zhang, J.; Xu, J.; Wu, Q. Low-Rank Pairwise Alignment Bilinear Network For Few-Shot Fine-Grained Image Classification. IEEE Trans. Multimed. 2021, 23, 1666–1680.

- Wu, J.; Chang, D.; Sain, A.; Li, X.; Ma, Z.; Cao, J.; Guo, J.; Song, Y.Z. Bi-directional feature reconstruction network for fine-grained few-shot image classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2821–2829.

- Yan, B. Feature Aligning Few shot Learning Method Using Local Descriptors Weighted Rules. arXiv 2024, arXiv:2408.14192.

- Mishra, N.; Rohaninejad, M.; Chen, X.; Abbeel, P. A simple neural attentive meta-learner. arXiv 2017, arXiv:1707.03141.

- Gidaris, S.; Komodakis, N. Dynamic few-shot visual learning without forgetting. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4367–4375.

- He, J.; Zhang, X.; Lei, S.; Alhamadani, A.; Chen, F.; Xiao, B.; Lu, C.T. CLUR: Uncertainty estimation for few-shot text classification with contrastive learning. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD), Long Beach, CA, USA, 6–10 August 2023; pp. 698–710.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).