Abstract

This paper presents a partially symmetric regularized two-step inertial alternating direction method of multipliers for solving non-convex split feasibility problems (SFP). The method adds a two-step inertial effect to each subproblem and includes an intermediate multiplier update during the iteration process. Under suitable assumptions, global convergence is established. Additionally, with the help of the Kurdyka–Łojasiewicz (KL) property, which quantifies the behavior of a function near its critical points, the strong convergence of the proposed algorithm is guaranteed. Numerical experiments are performed to demonstrate its efficacy.

Keywords:

non-convex split feasibility problems; alternating direction method of multipliers; Kurdyka–Łojasiewicz (KL) property; convergence analysis; two-step inertial effect

MSC:

90-10

1. Introduction

The split feasibility problem (SFP) can be expressed in the following manner:

$$\text{find } x \in C \ \text{ such that } \ Ax \in Q, \tag{1}$$
where $C \subseteq \mathbb{R}^n$ is a closed convex set, $Q \subseteq \mathbb{R}^m$ is a non-convex closed set, and $A: \mathbb{R}^n \to \mathbb{R}^m$ is a linear mapping. The SFP has been applied to a wide range of real-world problems, including image denoising [1], CT image reconstruction [2], intensity-modulated radiation therapy (IMRT) [3,4,5], and Pareto front navigation in multi-criteria optimization [6], and numerous iterative methods have been proposed for solving it [7,8,9,10]. Most existing methods, however, require the sets to be convex, a requirement that is often hard to satisfy in practice. This paper therefore focuses on the SFP in which the sets involved are not necessarily convex.

The alternating direction method of multipliers (ADMM) [11] is an important method for solving separable linearly constrained problems of the form

$$\min_{x,\,y}\ f(x) + g(y) \quad \text{s.t.} \quad Ax + By = b, \tag{2}$$
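where $f$ is a proper lower semi-continuous function, $g$ is smooth, and the matrices $A$, $B$ and the vector $b$ have compatible dimensions. The augmented Lagrangian function for problem (2) is

$$L_\beta(x, y, \lambda) = f(x) + g(y) - \langle \lambda, Ax + By - b\rangle + \frac{\beta}{2}\|Ax + By - b\|^2,$$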
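where $\lambda$ is the Lagrange multiplier and $\beta > 0$ is a penalty parameter. The classical ADMM iteration for solving problem (2) is

$$\begin{cases} x^{k+1} \in \arg\min_{x} L_\beta(x, y^k, \lambda^k),\\ y^{k+1} \in \arg\min_{y} L_\beta(x^{k+1}, y, \lambda^k),\\ \lambda^{k+1} = \lambda^k - \beta\,(Ax^{k+1} + By^{k+1} - b). \end{cases}$$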
In recent years, the theory and algorithms of ADMM have been studied extensively [12,13,14,15,16], and ADMM has been widely applied to convex optimization problems. When the objective function is non-convex, however, ADMM may fail to converge. To apply ADMM-type methods to problem (1), we first transform it into a separable problem with linear constraints, which is easier to solve. Two functions play a key role here: the indicator function and the distance function. Given a non-empty closed set $D$ in the $d$-dimensional Euclidean space $\mathbb{R}^d$, the indicator function of $D$ is defined as

$$\delta_D(x) = \begin{cases} 0, & x \in D,\\ +\infty, & x \notin D. \end{cases}$$
The distance function of the set $C$, $d_C: \mathbb{R}^n \to \mathbb{R}$, is given by

$$d_C(x) = \min_{u \in C}\|x - u\|.$$
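Here $\delta_Q$ is proper and lower semi-continuous because $Q$ is closed, and $\frac{1}{2}d_C^2$ is smooth because $C$ is closed and convex. When $C$ is a closed convex set and $Q$ is a non-convex closed set, the non-convex split feasibility problem can be reformulated as

$$\min_{x,\,y}\ f(x) + g(y) \quad \text{s.t.} \quad Ax - y = 0, \qquad f(x) = \tfrac{1}{2}d_C^2(x), \quad g(y) = \delta_Q(y). \tag{3}$$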
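The objective of (3) is a sum of two non-negative functions, and on the constraint set it vanishes exactly when $x$ solves problem (1) and $y = Ax$. Since $C$ is a closed convex set, $f$ is continuously differentiable with $\nabla f(x) = x - P_C(x)$, where $P_C$ is the projection onto $C$, and $\nabla f$ is Lipschitz continuous with constant 1. The augmented Lagrangian function for problem (3) is

$$L_\beta(x, y, \lambda) = f(x) + g(y) - \langle \lambda, Ax - y\rangle + \frac{\beta}{2}\|Ax - y\|^2.$$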
To endow ADMM with better theoretical properties, several researchers have studied variants based on problem (3). Zhao et al. [17] considered a symmetric version of ADMM with different relaxation factors $\gamma$ and $s$, adding an intermediate update of the Lagrange multiplier in each iteration:

In addition, combining ADMM with inertial techniques can significantly improve its performance on non-convex optimization problems. Dang et al. [18] incorporated an inertial step into each subproblem of ADMM and employed a dual-relaxed term to ensure convergence.
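To see what a two-step inertial term does, the sketch below applies it to plain gradient descent on a toy quadratic. This is an assumed, simplified setting, not the scheme of [18] or the proposed algorithm. Each step starts from the extrapolated point $w^k = x^k + \theta(x^k - x^{k-1}) + \mu(x^{k-1} - x^{k-2})$, which reuses the two most recent displacements; the names `theta`, `mu` and `step` and their values are hypothetical.

```python
import numpy as np


def two_step_inertial_gd(grad, x0, step, theta, mu, iters):
    """Gradient descent started from a two-step inertial point at every iteration.

    w = x_k + theta*(x_k - x_{k-1}) + mu*(x_{k-1} - x_{k-2});  x_{k+1} = w - step*grad(w).
    """
    x_km2 = x0.copy()  # x_{k-2}
    x_km1 = x0.copy()  # x_{k-1}
    x_k = x0.copy()    # x_k
    for _ in range(iters):
        w = x_k + theta * (x_k - x_km1) + mu * (x_km1 - x_km2)
        x_next = w - step * grad(w)
        x_km2, x_km1, x_k = x_km1, x_k, x_next
    return x_k


# Toy strongly convex quadratic f(x) = 0.5 * x^T Q x, minimized at the origin.
Q = np.diag([1.0, 10.0])
x_final = two_step_inertial_gd(lambda x: Q @ x, np.array([1.0, 1.0]),
                               step=0.1, theta=0.3, mu=0.1, iters=300)
print(np.linalg.norm(x_final))
```

For these values every mode of the linear recursion has all characteristic roots inside the unit circle, so the iterates approach the minimizer; larger inertial weights can destabilize the iteration, which is why inertial ADMM analyses restrict $\theta$ and $\mu$.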

Building on this work, we propose a partially symmetric regularized two-step inertial alternating direction method of multipliers for the non-convex split feasibility problem, a setting that has not been extensively studied. The contributions of this paper are as follows. First, we transform this class of non-convex split feasibility problems into a two-block separable non-convex problem with linear constraints, which is easier to solve. Second, we add an intermediate multiplier update in each iteration and apply the two-step inertial technique to each subproblem of the alternating direction method of multipliers (ADMM). Finally, we use the Kurdyka–Łojasiewicz (KL) property to guarantee the strong convergence of the proposed algorithm for non-convex split feasibility problems.

The rest of the paper is organized as follows. Section 2 recalls basic concepts, definitions and related results. Section 3 presents the algorithm and proves its convergence. Section 4 demonstrates the effectiveness of the algorithm through experiments, and Section 5 presents the main conclusions.

2. Preliminaries

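In this article, $\mathbb{R}^n$ denotes the $n$-dimensional Euclidean space and $\|\cdot\|$ the Euclidean norm. For any $x \in \mathbb{R}^n$, $\|x\|_G^2 = x^\top G x$, where $G$ is a symmetric positive semidefinite matrix. $\lambda_{\min}(G)$ and $\lambda_{\max}(G)$ denote the minimum and maximum eigenvalues of the symmetric matrix $G$, respectively. Then $\lambda_{\min}(G)\|x\|^2 \le \|x\|_G^2 \le \lambda_{\max}(G)\|x\|^2$. When the set $Q \subseteq \mathbb{R}^m$ is non-empty, the distance from a point $y \in \mathbb{R}^m$ to $Q$ is defined as $d(y, Q) = \inf_{u \in Q}\|y - u\|$; in particular, if $Q = \emptyset$, then $d(y, Q) = +\infty$. The domain of a function $g$ is denoted by $\operatorname{dom} g = \{y : g(y) < +\infty\}$. Let $C$ be a non-empty closed subset of a Euclidean space. The projection onto $C$ is the (possibly set-valued) operator $P_C(x) = \arg\min_{u \in C}\|x - u\|$.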
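For concreteness, these objects can be computed for a simple pair of sets. The sketch below assumes, purely for illustration, a box $C = [lo, hi]^n$ (closed and convex) and the sparsity set $Q = \{y : \|y\|_0 \le s\}$ (closed but non-convex); neither choice comes from the paper, and the helper names are hypothetical. It evaluates $P_C$, one element of the set-valued projection onto $Q$, and the smooth term $f(x) = \frac{1}{2}d_C^2(x)$ with gradient $x - P_C(x)$, then checks numerically that this gradient is 1-Lipschitz.

```python
import numpy as np


def project_box(x, lo, hi):
    """Projection P_C onto the closed convex box C = [lo, hi]^n."""
    return np.clip(x, lo, hi)


def project_sparse(y, s):
    """One element of the projection onto the non-convex set Q = {y : ||y||_0 <= s}.

    Keeps the s entries of largest magnitude. When magnitudes tie, the
    projection is set-valued and argsort simply picks one minimizer.
    """
    out = np.zeros_like(y)
    idx = np.argsort(np.abs(y))[len(y) - s:]
    out[idx] = y[idx]
    return out


def f_and_grad(x, lo, hi):
    """f(x) = 0.5 * d_C(x)^2 and its gradient x - P_C(x) for the box C."""
    r = x - project_box(x, lo, hi)
    return 0.5 * float(r @ r), r


print(project_sparse(np.array([3.0, -5.0, 1.0, 0.5]), 2).tolist())  # [3.0, -5.0, 0.0, 0.0]

# Empirical check that grad f is 1-Lipschitz (true for any closed convex C).
rng = np.random.default_rng(0)
worst = 0.0
for _ in range(1000):
    u, v = rng.normal(scale=3.0, size=(2, 5))
    gu = f_and_grad(u, -1.0, 1.0)[1]
    gv = f_and_grad(v, -1.0, 1.0)[1]
    worst = max(worst, np.linalg.norm(gu - gv) / np.linalg.norm(u - v))
print(worst <= 1.0 + 1e-12)  # True
```

The projection onto the non-convex $Q$ is still cheap (a partial sort), which is what makes an exact $y$-subproblem practical in ADMM-type methods for (3).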

Definition 1

([19]). A function $g: \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ is said to be lower semi-continuous at $y$ if $\liminf_{x \to y} g(x) \ge g(y)$. If $g$ is lower semi-continuous at every point, then $g$ is called a lower semi-continuous function. Since $Q$ is a closed set, $g(y) = \delta_Q(y)$ is a proper lower semi-continuous function.

Definition 2

([19]). Let $g: \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ be a proper lower semi-continuous function.

- (I)

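- The Fréchet subdifferential of $g$ at $y \in \operatorname{dom} g$ is defined as $\hat{\partial} g(y) = \left\{ v : \liminf_{u \to y,\, u \ne y} \frac{g(u) - g(y) - \langle v, u - y\rangle}{\|u - y\|} \ge 0 \right\}$.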

- (II)

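- The limiting subdifferential of $g$ at $y \in \operatorname{dom} g$ is defined as $\partial g(y) = \{ v : \exists\, y^k \to y,\ g(y^k) \to g(y),\ v^k \in \hat{\partial} g(y^k),\ v^k \to v \}$.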

Note: Some properties of these subdifferentials (see [19]) are listed below.

- (I)

- $\hat{\partial} g(y)$ is a closed convex set, and $\partial g(y)$ is a closed set.

- (II)

- If $v^k \in \partial g(y^k)$, $y^k \to y$, $g(y^k) \to g(y)$ and $v^k \to v$, then $v \in \partial g(y)$.

- (III)

- If $y$ is a minimum point of $g$, then $0 \in \partial g(y)$; if $0 \in \partial g(y)$, then $y$ is called a stationary point of $g$. The set of stationary points of $g$ is denoted by $\operatorname{crit} g$.

- (IV)

- For any $y \in \operatorname{dom} g$, $\partial(g + h)(y) = \partial g(y) + \nabla h(y)$ if $g$ is proper and lower semi-continuous and $h$ is continuously differentiable.

Definition 3.

$(x^*, y^*, \lambda^*)$ is a stationary point of the augmented Lagrangian function $L_\beta(x, y, \lambda)$ for problem (3), i.e., $0 \in \partial L_\beta(x^*, y^*, \lambda^*)$, if and only if

Definition 4

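([20]). (Kurdyka–Łojasiewicz property) Let $g: \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ be a proper lower semi-continuous function and let $\bar{y} \in \operatorname{dom} \partial g = \{y : \partial g(y) \ne \emptyset\}$. The function $g$ is said to have the KL property at $\bar{y}$ if there exist $\eta \in (0, +\infty]$, a neighborhood $U$ of $\bar{y}$ and a continuous concave function $\varphi: [0, \eta) \to \mathbb{R}_+$ such that

- (I)

- $\varphi(0) = 0$.

- (II)

- $\varphi$ is continuously differentiable on $(0, \eta)$ and continuous at 0.

- (III)

- $\varphi'(s) > 0$ for all $s \in (0, \eta)$.

- (IV)

- For all $y \in U \cap \{y : g(\bar{y}) < g(y) < g(\bar{y}) + \eta\}$, the KL inequality holds:

$$\varphi'\big(g(y) - g(\bar{y})\big)\,\operatorname{dist}\big(0, \partial g(y)\big) \ge 1.$$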

Lemma 1

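([21]). (Uniformized KL property) Assume that $\Omega$ is a compact set and $g: \mathbb{R}^n \to \mathbb{R} \cup \{+\infty\}$ is a proper lower semi-continuous function. If $g$ is constant on $\Omega$ and satisfies the KL property at every point of $\Omega$, then there exist $\varepsilon > 0$, $\eta > 0$ and a function $\varphi$ satisfying (I)–(III) of Definition 4 such that, for any $\bar{y} \in \Omega$ and any $y$ in the intersection

$$\{y : \operatorname{dist}(y, \Omega) < \varepsilon\} \cap \{y : g(\bar{y}) < g(y) < g(\bar{y}) + \eta\},$$

we have

$$\varphi'\big(g(y) - g(\bar{y})\big)\,\operatorname{dist}\big(0, \partial g(y)\big) \ge 1.$$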

Many functions arising in applications satisfy the KL property, such as semi-algebraic functions, real analytic functions, subanalytic functions and strongly convex functions (see [22]).
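As a one-line sanity check of the KL property (Definition 4), consider the illustrative example $g(y) = y^2$ on $\mathbb{R}$ at $\bar{y} = 0$ with $\varphi(s) = \sqrt{s}$ and any $\eta \in (0, +\infty]$. The function $\varphi$ is continuous, concave and vanishes at 0, and the KL inequality holds with equality:

```latex
% Illustrative example: g(y) = y^2, \bar{y} = 0, \varphi(s) = \sqrt{s}.
\varphi(0) = 0, \qquad \varphi'(s) = \frac{1}{2\sqrt{s}} > 0 \quad (s > 0),
\qquad
\varphi'\big(g(y) - g(0)\big)\,\operatorname{dist}\big(0, \partial g(y)\big)
  = \frac{1}{2|y|}\cdot|2y| = 1 \ge 1 \quad (y \ne 0).
```

Here $\varphi(s) = \sqrt{s}$ corresponds to a KL exponent of 1/2, the case that typically yields linear convergence rates in KL-based analyses.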

Lemma 2

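([23]). If $h: \mathbb{R}^n \to \mathbb{R}$ is continuously differentiable and $\nabla h$ is Lipschitz continuous, then there exists a Lipschitz constant $l_h > 0$ such that, for any $x, y \in \mathbb{R}^n$,

$$|h(y) - h(x) - \langle \nabla h(x), y - x\rangle| \le \frac{l_h}{2}\|y - x\|^2.$$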
Definition 5.

(Semi-algebraic sets and functions)

- (I)

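- A set $S \subseteq \mathbb{R}^n$ is called semi-algebraic if there exist finitely many real polynomial functions $g_{ij}, h_{ij}: \mathbb{R}^n \to \mathbb{R}$ such that $S = \bigcup_{j=1}^{p}\bigcap_{i=1}^{q}\{u \in \mathbb{R}^n : g_{ij}(u) = 0,\ h_{ij}(u) < 0\}$.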

- (II)

- A function $f: \mathbb{R}^n \to (-\infty, +\infty]$ is called semi-algebraic if its graph $\{(x, t) \in \mathbb{R}^{n+1} : f(x) = t\}$ is a semi-algebraic set.

Definition 6.

(Cauchy–Schwarz inequality) For any $x, y \in \mathbb{R}^n$, $|\langle x, y\rangle| \le \|x\|\,\|y\|$, with equality if and only if $x$ and $y$ are linearly dependent.
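To illustrate Definition 5 with a set of the kind allowed for $Q$ in problem (1), consider the sparsity set $Q_s = \{y \in \mathbb{R}^m : \|y\|_0 \le s\}$ with $0 \le s < m$, a closed non-convex set chosen here only as an example. A vector has at most $s$ non-zero entries exactly when some $m - s$ of its coordinates vanish, so $Q_s$ is a finite union of coordinate subspaces and fits Definition 5 (I) with constant $h \equiv -1$:

```latex
Q_s = \bigcup_{\substack{J \subseteq \{1,\dots,m\}\\ |J| = m - s}}\ \bigcap_{i \in J}
      \{\, y \in \mathbb{R}^m : g_{iJ}(y) = 0,\ h_{iJ}(y) < 0 \,\},
\qquad g_{iJ}(y) = y_i, \quad h_{iJ}(y) \equiv -1 .
```

Since there are finitely many index sets $J$, $Q_s$ is semi-algebraic, and by Definition 5 (II) so is its indicator function, whose graph is $Q_s \times \{0\}$.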

3. Split Feasibility Problem

3.1. Assumptions

Some assumptions and conditions about problem (3) are listed below.

- (1)

- and .

The solution set of this system of inequalities is denoted by , where

and

Note: It can be seen that (γ, s) has a wide range of choices. Specifically, when occurs, the parameters γ and s of the proposed algorithm can take the same value in this interval.

- (2)

- (3)

- Note , where,

- (4)

- Note and . and are fixed constants.

- (5)

- $C$ and $Q$ are both semi-algebraic sets.

- (6)

- $f$ is differentiable with an $l_g$-Lipschitz continuous gradient, i.e.,

- (7)

- g is proper lower semi-continuous.

- (8)

- The set is bounded.

3.2. Algorithm

The optimality conditions of the subproblems of Algorithm 1 (PSRTADMM) are as follows:

Algorithm 1. Partially Symmetric Regularized Two-step Inertial Alternating Direction Method of Multipliers for Non-convex Split Feasibility Problems (PSRTADMM)
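The following is a minimal Python sketch of a partially symmetric two-step inertial ADMM for problem (3), written to make the structure described in the Introduction concrete. It is not a reproduction of Algorithm 1, and every specific choice is an assumption: the augmented Lagrangian $L_\beta(x, y, \lambda) = f(x) + \delta_Q(y) - \langle \lambda, Ax - y\rangle + \frac{\beta}{2}\|Ax - y\|^2$ with $f = \frac{1}{2}d_C^2$; the update order $x \to \lambda^{k+1/2} \to y \to \lambda^{k+1}$ with relaxation factors $\gamma$ and $s$; the inertial form $\hat{x}^k = x^k + \theta(x^k - x^{k-1}) + \mu(x^{k-1} - x^{k-2})$ (and likewise for $y$); a linearized $x$-step with proximal weight $r$; the toy sets $C = [-1, 1]^n$ and $Q = \{y : \|y\|_0 \le 5\}$; and all parameter values. The function names are hypothetical.

```python
import numpy as np


def project_box(x, lo, hi):
    """P_C for the assumed box C = [lo, hi]^n."""
    return np.clip(x, lo, hi)


def project_sparse(v, sparsity):
    """One element of P_Q for the assumed non-convex set Q = {y : ||y||_0 <= sparsity}."""
    out = np.zeros_like(v)
    idx = np.argsort(np.abs(v))[len(v) - sparsity:]
    out[idx] = v[idx]
    return out


def inertial_ps_admm_sketch(A, lo, hi, sparsity, beta=10.0, r=2.0, gamma=0.5,
                            s=0.5, theta=0.2, mu=0.05, iters=300, seed=0):
    """Hypothetical partially symmetric two-step inertial ADMM for
    min 0.5*d_C(x)^2 + delta_Q(y)  s.t.  Ax - y = 0   (not Algorithm 1 itself).
    """
    m, n = A.shape
    rng = np.random.default_rng(seed)
    x = 3.0 * rng.standard_normal(n)        # start away from C on purpose
    y = project_sparse(A @ x, sparsity)
    lam = np.zeros(m)
    x_prev, x_prev2 = x.copy(), x.copy()    # x^{k-1}, x^{k-2}
    y_prev, y_prev2 = y.copy(), y.copy()    # y^{k-1}, y^{k-2}
    M = beta * A.T @ A + r * np.eye(n)      # fixed matrix of the linearized x-step
    history = []
    for _ in range(iters):
        # Two-step inertial points (assumed form).
        xh = x + theta * (x - x_prev) + mu * (x_prev - x_prev2)
        yh = y + theta * (y - y_prev) + mu * (y_prev - y_prev2)
        # x-step: f linearized at xh, plus the proximal term (r/2)||x - xh||^2.
        grad_f = xh - project_box(xh, lo, hi)
        x_new = np.linalg.solve(M, r * xh - grad_f + A.T @ lam + beta * (A.T @ y))
        # Intermediate multiplier update with relaxation factor gamma.
        lam_half = lam - gamma * beta * (A @ x_new - y)
        # y-step: exact minimization over Q is a projection of a weighted average.
        v = (beta * (A @ x_new) + r * yh - lam_half) / (beta + r)
        y_new = project_sparse(v, sparsity)
        # Final multiplier update with relaxation factor s.
        lam = lam_half - s * beta * (A @ x_new - y_new)
        x_prev2, x_prev, x = x_prev, x, x_new
        y_prev2, y_prev, y = y_prev, y, y_new
        history.append((np.linalg.norm(A @ x - y),
                        np.linalg.norm(x - project_box(x, lo, hi))))
    return x, y, history


rng = np.random.default_rng(1)
A = rng.standard_normal((20, 30)) / np.sqrt(20)
x, y, hist = inertial_ps_admm_sketch(A, lo=-1.0, hi=1.0, sparsity=5)
print("||Ax - y|| = %.2e, d_C(x) = %.2e" % hist[-1])
```

The $y$-step is exact because minimizing $\langle \lambda, y\rangle + \frac{\beta}{2}\|y - Ax\|^2 + \frac{r}{2}\|y - \hat{y}\|^2$ over $Q$ amounts to projecting $(\beta Ax + r\hat{y} - \lambda)/(\beta + r)$ onto $Q$, while linearizing $f$ reduces the $x$-step to one linear solve with a fixed matrix. The script only reports the final feasibility residuals; no convergence guarantee is claimed for these illustrative parameter values.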

3.3. Convergence Analysis

Next, we analyze the convergence of the proposed algorithm. Lemma 3 below shows that the sequence {} is monotonically decreasing. For ease of analysis, we write z = (x, y).

Lemma 3.

If the assumptions in Section 3.1 hold, then

Proof.

First, from the optimality conditions (5) and (7), we obtain

And

On the other hand, by the definition of the augmented Lagrangian function, the optimality condition (6), the Lipschitz continuity of $\nabla f$ and Lemma 2 applied to f, we have

Since is an optimal solution of (4), it follows that

Therefore, adding Equations (8)–(11), we have

In addition, it can be obtained from Step 2 and Step 4 of Algorithm 1 that

On the other hand, it can be concluded from Step 4 of Algorithm 1 and (6) that:

Combining the Lipschitz continuity of f, we obtain

Furthermore, by applying the Cauchy inequality to (16), we have

So, combining Equations (13), (14) and (17), one has

Substituting Equation (18) into Equation (12), we have

That is

Therefore,

□

Lemma 4.

- (1)

- The sequence is bounded.

- (2)

- is bounded from below and convergent, additionally,

- (3)

- The sequence and have the same limit

Proof.

(1) Because of the decreasing property of {}, we obtain

(2) As {} is bounded, {} is also bounded and has at least one cluster point. Let be a cluster point of {}, and . Because g is lower semi-continuous and f is continuously differentiable, is lower semi-continuous. So , that is, is bounded from below. According to Lemma 3, is monotonically decreasing and hence convergent. Therefore, by Lemma 3, for k = 0, 1, …, n. Letting , we have:

As , it follows that .

According to Equation (23), there are , so .

(3) From (2), we have and , so and . Combining this with the definition of in (21) yields . This completes the proof. □

We now use the results of Lemma 4 to establish the global convergence of the PSRTADMM algorithm.

Theorem 1.

(Global convergence) Denote the sets of cluster points of the sequences and by and , respectively. Then:

- (1)

- and are non-empty compact sets and .

- (2)

- if and only if

- (3)

- , is convergent and

- (4)

- .

Proof.

(1) This follows directly from the definitions of and Ω.

(2) The conclusion follows directly from Lemma 4 and the definitions of {} and {}.

(3) Let . Therefore, there exists , such that . According to (17) and the continuity of f, we derive

Taking the monotonicity of {} into account, we obtain that {} is convergent. Thus,

(4) Let , and let crit L denote the set of critical points of L. Then there exists a subsequence {} of {} that converges to . According to Lemma 4, . Letting k = → +∞ in the equations of Steps 2 and 4 and taking the limit, we obtain

Combining , it can be seen that .

Hence, and are feasible points of problem (1). From the y-subproblem of the PSRTADMM algorithm, we have

Combining , with . Also, by the lower semi-continuity of g, we have . Since g is lower semi-continuous, . It follows that .

Furthermore, combining the closedness of ∂g, the continuity of $\nabla f$ and the necessary optimality conditions, and letting k = → +∞, we obtain

Therefore, according to Definition 3, . Therefore, Ω ⊆ . □

Lemma 5.

If the assumptions hold, then there exists a constant C > 0, such that

Proof.

By Equation (16), there exists a constant > 0 such that

By the definition of the augmented Lagrangian function (·), we have

Combining the necessary optimality conditions (4)–(7) with (23), we have

So, . Therefore, for all k ≥ 1, there exists > 0 such that

Combining Equations (22) and (24), we conclude that there exists a constant > 0 such that, for all k ≥ 2,

□

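Lemma 6.

If $C$ and $Q$ are semi-algebraic sets, then the augmented Lagrangian function $L_\beta$ satisfies the KL property.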

Proof.

Because C is a semi-algebraic set, its distance function $d_C$ is semi-algebraic, and thus $f = \frac{1}{2}d_C^2$ is a semi-algebraic function.

As Q is a semi-algebraic set, its indicator function is a semi-algebraic function.

In addition, the terms are polynomial functions, hence semi-algebraic.

Therefore, the augmented Lagrangian function is semi-algebraic. Since semi-algebraic functions satisfy the KL property [22], the augmented Lagrangian function satisfies the KL property. □

The strong convergence of the PSRTADMM algorithm is now established using Lemmas 3, 5 and 6 together with the relevant conclusions of Theorem 1.

Theorem 2.

(Strong convergence) Suppose that the assumptions in Section 3.1 hold and that satisfies the KL property at each point of . Then

- (1)

- .

- (2)

- {} converges to a stationary point of L(·).

Proof.

(1) By Theorem 1, we have . We distinguish two cases.

Case 1

There exists an integer such that . By Lemma 3, for all k ≥ , we have

Case 2

Assume that, for all k ≥ 1, . Since , for any given ε > 0 there exists > 0 such that < ε for all k > . For any given > 0, there exists > 0 such that () < () + for all k > . Therefore, for given ε, > 0, when k > k = max{,},

By Theorem 1, since {} is bounded, Ω is a non-empty compact set and (·) is constant on Ω. Therefore, by Lemma 1, for all k > , we have

Since , it follows that

By the concavity of the function $\varphi$, we have

So,

Combining Equations (25) and (26) with Lemma 5, we obtain

Note .

For simplicity, let ∧k = , combining Lemma 3 and Equation (21), , there is

According to Equation (28), we have

Furthermore, we have

Summing Equation (29) over k = + 1, …, m gives

Noting the value of , rearranging the terms of the inequality above, applying the Cauchy inequality and letting m → +∞, we have

So, , . Combined with Equation (26), we have

Moreover, note that

So,

(2) By (1), {} is a Cauchy sequence and therefore converges. Then, by Theorem 1, the whole sequence {} converges to a stationary point of L(·). □

Lemma 7.

- (1)

- g is coercive, i.e., .

- (2)

- relaxation factor .

- (3)

- function has a lower bound and is coercive, i.e.,

Then, the sequence {} generated by PSRTADMM is bounded.

Proof.

Since is monotonically decreasing, combining this with (15) gives

Furthermore, it is readily seen that is a proper lower semi-continuous coercive function on a closed set. Consequently,

Therefore, is bounded, so is bounded, and combining this with (10) shows that is bounded as well. The boundedness of has thus been established. □

4. Numerical Experiments

This section presents a numerical example that validates the efficacy of Algorithm 1 on the split feasibility problem. The variables and constraints in the experiment match those defined in the theoretical part, so the experiment directly tests the validity and practicality of the theory. We compare Algorithm 1 with the traditional proximal algorithm to assess its performance on the split feasibility problem. All code was implemented in MATLAB R2021a and run on a desktop computer with 32 GB of RAM.

In CT image reconstruction, the central inverse problem is to restore an image from noisy CT data. This process can be modeled as the following inverse problem:

where is the observed data, is the true (original) underlying image, represents the measurement error (Gaussian noise at level ), and is the Radon transform in X-ray computed tomography (CT).

Since Equation (30) is often ill-posed and difficult to solve directly, we consider the following regularized model to ensure the stability of the solutions:

where F(x) is a regularization term based on prior knowledge of the image, G(x) is the fidelity term, and α > 0 is a regularization parameter that balances the two. In this experiment, we consider and , which is a widely used fidelity term in CT reconstruction. Here $D_i x$ denotes the discrete gradient of x at pixel $i$, and the sum over all pixels plays the role of total variation (TV) regularization for x; at each pixel, $D_i$ consists of the horizontal and vertical gradient operators. Thus, model (31) can be rewritten as the SFP (1) by setting , and y = Hx. The test image is the 96 × 96 Shepp–Logan phantom, corrupted by Gaussian noise with standard deviation σ = 0.05 (equivalent to ε ≈ 4.8 in the feasibility set Q). The parameters used in the trials were , and α = 0.001. These parameters were chosen mainly by empirical tuning and the theoretical conditions, to ensure the stability and convergence of the algorithm. The settings of and influence the step size and convergence speed of the algorithm, while and control the strength of the inertial effects, which helps accelerate convergence. As a regularization parameter, α balances the fidelity term and the regularization term and strongly affects the quality of the reconstructed image.
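The TV term described above can be sketched as follows. This minimal illustration assumes the isotropic form $\sum_i \|D_i x\|_2$, forward differences, and a zero difference at the last row and column; the text does not specify the norm or the boundary handling, so these choices and the function names are assumptions.

```python
import numpy as np


def discrete_gradient(x):
    """Forward differences with a zero difference at the last column/row.

    Returns (dh, dv): the horizontal and vertical components of D_i x at every pixel i.
    """
    dh = np.zeros_like(x, dtype=float)
    dv = np.zeros_like(x, dtype=float)
    dh[:, :-1] = x[:, 1:] - x[:, :-1]
    dv[:-1, :] = x[1:, :] - x[:-1, :]
    return dh, dv


def isotropic_tv(x):
    """Sum over pixels of the Euclidean norm of D_i x."""
    dh, dv = discrete_gradient(x)
    return float(np.sum(np.sqrt(dh**2 + dv**2)))


print(isotropic_tv(np.array([[0.0, 1.0], [0.0, 1.0]])))  # 2.0
```

A constant image has zero TV, while the 2 × 2 image above, with a single vertical edge, has TV equal to 2 (one unit jump in each row); this is why TV favors piecewise-constant images such as the Shepp–Logan phantom.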

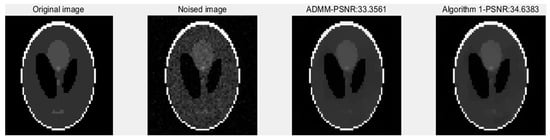

Figure 1 shows four images: the original sinogram image, the noisy sinogram image, the image reconstructed by ADMM, and the image reconstructed by Algorithm 1. A visual comparison of the reconstructed images in Figure 1 shows that Algorithm 1 yields higher-quality images than ADMM. To evaluate image quality more objectively, we use the peak signal-to-noise ratio (PSNR) as a quantitative indicator; a higher PSNR indicates less noise and a smaller difference between the processed image and the original one. The PSNR of the image reconstructed by Algorithm 1 is 34.6383 dB, about 1.28 dB higher than the 33.3561 dB obtained by the alternating direction method of multipliers (ADMM).
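PSNR is $10\log_{10}(\text{peak}^2/\text{MSE})$ in decibels. The helper below is a minimal sketch that assumes intensities scaled to [0, 1], so the peak value defaults to 1; the peak value used in the experiments is not stated, and the function name is illustrative.

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak**2 / mse))


ref = np.zeros((96, 96))
est = ref + 0.1  # uniform error of 0.1, so MSE = 0.01
print(round(psnr(ref, est), 6))  # 20.0
```

Because PSNR is logarithmic in the MSE, the reported gap of about 1.28 dB corresponds to a mean squared error roughly 1.34 times smaller for Algorithm 1.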

Figure 1.

The sinogram images recovered by the two algorithms.

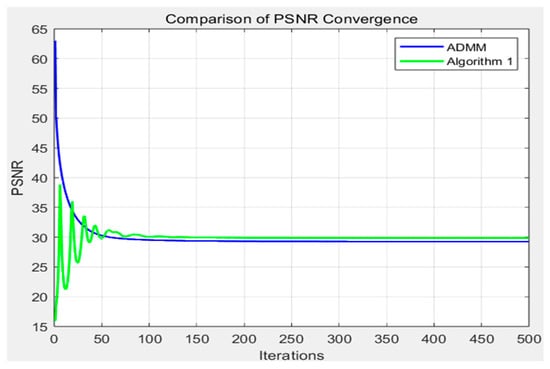

Figure 2 plots the peak signal-to-noise ratio (PSNR) against the number of iterations for the two algorithms. The PSNR of the images produced by Algorithm 1 increases and then stabilizes as the iterations proceed, indicating that the reconstructions approach the original image and that the iteration converges. This supports the effectiveness of the proposed algorithm.

Figure 2.

PSNR values of the images reconstructed by the two algorithms.

Note: the presented experiment focuses on a specific CT image reconstruction scenario. Although the results highlight the algorithm’s efficacy in this case, further studies with diverse datasets and problem configurations are necessary to comprehensively evaluate the robustness and generality of PSRTADMM. This constitutes an important direction for future research.

5. Conclusions

In this paper, we propose a partially symmetric regularized two-step inertial alternating direction method of multipliers for non-convex split feasibility problems. The proposed algorithm incorporates an intermediate multiplier update and two-step inertial effects in its subproblems. Under appropriate assumptions, its global convergence is proven. In addition, when the augmented Lagrangian function satisfies the Kurdyka–Łojasiewicz (KL) property, the algorithm converges strongly, that is, the whole generated sequence converges to a stationary point of the augmented Lagrangian function. Finally, numerical experiments were conducted in the field of CT image reconstruction. The results show that the proposed algorithm outperforms traditional methods in terms of reconstruction quality and convergence speed, further confirming its effectiveness.

Author Contributions

Conceptualization, C.Y. and Y.D.; Methodology, C.Y. and Y.D.; Software, C.Y.; Validation, C.Y.; Writing—original draft, C.Y.; Visualization, C.Y.; Supervision, Y.D.; Project administration, Y.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Program for Professor of Special Appointment (Eastern Scholar) at Shanghai Institutions of Higher Learning, grant number TP2022126.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, Y.; Yang, J.; Yin, W.; Zhang, Y. A New Alternating Minimization Algorithm for Total Variation Image Reconstruction. SIAM J. Imaging Sci. 2008, 1, 248–272.

- Dong, B.; Li, J.; Shen, Z. X-Ray CT Image Reconstruction via Wavelet Frame Based Regularization and Radon Domain Inpainting. J. Sci. Comput. 2013, 54, 333–349.

- Block, K.T.; Uecker, M.; Frahm, J. Undersampled radial MRI with multiple coils. Iterative image reconstruction using a total variation constraint. Magn. Reson. Med. 2007, 57, 1086–1098.

- Liu, S.; Cao, J.; Liu, H.; Zhou, X.; Zhang, K.; Li, Z. MRI reconstruction via enhanced group sparsity and nonconvex regularization. Neurocomputing 2018, 272, 108–121.

- Lustig, M.; Donoho, D.; Pauly, J.M. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magn. Reson. Med. 2007, 58, 1182–1195.

- Gibali, A.; Kuefer, K.H.; Suess, P. Successive Linear Programing Approach for Solving the Nonlinear Split Feasibility Problem. J. Nonlinear Convex Anal. 2014, 15, 345–353.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Censor, Y.; Motova, A.; Segal, A. Perturbed projections and subgradient projections for the multiple-sets split feasibility problem. J. Math. Anal. Appl. 2007, 327, 1244–1256.

- Dang, Y.; Gao, Y. The strong convergence of a KM-CQ-like algorithm for a split feasibility problem. Inverse Probl. 2011, 27, 015007.

- Qu, B.; Wang, C.; Xiu, N. Analysis on Newton projection method for the split feasibility problem. Comput. Optim. Appl. 2017, 67, 175–199.

- Fukushima, M. Application of the alternating direction method of multipliers to separable convex programming problems. Comput. Optim. Appl. 1992, 1, 93–111.

- Deng, W.; Yin, W. On the Global and Linear Convergence of the Generalized Alternating Direction Method of Multipliers. J. Sci. Comput. 2016, 66, 889–916.

- Wang, Y.; Yin, W.; Zeng, J. Global Convergence of ADMM in Nonconvex Nonsmooth Optimization. J. Sci. Comput. 2019, 78, 29–63.

- Yang, Y.; Jia, Q.S.; Xu, Z.; Guan, X.; Spanos, C.J. Proximal ADMM for nonconvex and nonsmooth optimization. Automatica 2022, 146, 110551.

- Ouyang, Y.; Chen, Y.; Lan, G.; Pasiliao, E., Jr. An Accelerated Linearized Alternating Direction Method of Multipliers. SIAM J. Imaging Sci. 2015, 8, 644–681.

- Hong, M.; Luo, Z.-Q. On the linear convergence of the alternating direction method of multipliers. Math. Program. 2017, 162, 165–199.

- Zhao, Y.; Li, M.; Pen, X.; Tan, J. Partial symmetric regularized alternating direction method of multipliers for non-convex split feasibility problems. AIMS Math. 2025, 10, 3041–3061.

- Dang, Y.; Chen, L.; Gao, Y. Multi-block relaxed-dual linear inertial ADMM algorithm for nonconvex and nonsmooth problems with nonseparable structures. Numer. Algorithms 2025, 98, 251–285.

- Rockafellar, R.T.; Wets, R.J.-B. Variational Analysis; Springer Science and Business Media: Berlin/Heidelberg, Germany, 2009.

- Bolte, J.; Daniilidis, A.; Lewis, A. The Lojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 2007, 17, 1205–1223.

- Attouch, H.; Bolte, J.; Svaiter, B.F. Convergence of descent methods for semi-algebraic and tame problems: Proximal algorithms, forward–backward splitting, and regularized Gauss–Seidel methods. Math. Program. 2013, 137, 91–129.

- Wang, F.; Cao, W.; Xu, Z. Convergence of multi-block Bregman ADMM for nonconvex composite problems. Sci. China-Inf. Sci. 2018, 61, 122101.

- Nesterov, Y. Introductory Lectures on Convex Optimization: A Basic Course; Springer: New York, NY, USA, 2004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).