1. Introduction

Fractional differential equations (FDEs) have gained interest in science, engineering, and technology because they describe systems with complex and anomalous processes involving long-range correlations. They have applications across many fields of applied sciences, and their investigation has therefore attracted much interest. Some applications of FDEs can be found in [1,2,3]. Because most of these equations cannot be solved analytically, it is important to resort to numerical methods. Many numerical approaches have been employed to treat the different types of FDEs, among them the Adomian decomposition method [4,5], the variational iteration transform method [6], the Laplace transform method [7], the wavelet neural method [8], the Haar wavelets method [9], the generalized Taylor wavelets method [10], the Adams–Bashforth–Moulton methods [11,12], the homotopy perturbation method [13], the shifted Gegenbauer–Gauss collocation method [14], the shifted Jacobi collocation method [15], and the localized hybrid kernel meshless method [16].

The classical Burgers’ equation is one of the most essential nonlinear partial differential equations, and it has been significantly extended to derive the fractional Burgers’ equation, which employs fractional derivatives to describe a wide range of physical phenomena. The fractional form becomes especially important when modeling processes with anomalous diffusion or memory effects, which traditional integer-order differential equations cannot account for. Understanding the physical meaning of the fractional Burgers’ equation is therefore crucial. In [17,18], the authors highlighted the role of this equation in modeling subdiffusion convection processes relevant to various physical problems and in describing the propagation of weakly nonlinear acoustic waves through gas-filled pipes, respectively. Many numerical algorithms have been employed to solve the fractional Burgers’ equation. For example, in [19], the time-fractional Burgers’ equation was solved based on the Haar–Sinc spectral approach. Recently, the authors of [20] followed a compact difference scheme to solve the tempered fractional Burgers’ equation. A discontinuous Galerkin method was used to treat the time-fractional Burgers’ equation. The homotopy method was applied to this equation in [21]. The authors of [22] solved the one- and two-dimensional time-fractional Burgers’ equations using Lucas polynomials. Some other contributions can be found in [23,24,25,26,27].

Modern engineering and physics applications require a deeper understanding of applied mathematics than was previously necessary. In particular, a solid grasp of the fundamental properties of special functions is essential. These functions are used in many fields, including communication systems, electromagnetic theory, quantum mechanics, approximation theory, probability theory, electrical circuit theory, and heat conduction. For some applications of special functions, one can refer to [28,29].

Fibonacci and Lucas polynomials and their generalizations have been extensively studied in the mathematical literature due to their rich properties and diverse applications in fields such as combinatorics, graph theory, and numerical analysis. In numerical analysis, these polynomials are widely used to solve various differential equations (DEs); we mention some contributions in this direction. In [30], the author used Fibonacci polynomials to treat FDEs. The authors of [31] numerically treated a modified epidemiological model of computer viruses using Fibonacci wavelets. Pell polynomials were used in [32] to solve stochastic FDEs, and another application of the Pell polynomials was given in [33]. Shifted Lucas polynomials were used in [34] to solve the time-fractional FitzHugh–Nagumo DEs. Other shifted Horadam polynomials were used to solve the nonlinear fifth-order KdV equations in [35]. In [36], the authors treated the fractional Bagley–Torvik equation using Fibonacci wavelets. The authors of [37] handled Lane–Emden-type equations using combined Pell–Lucas polynomials. Vieta–Lucas polynomials were used in [38] to solve specific systems of FDEs using an operational approach.

Among the extensions of Fibonacci sequences are the convolved generalized Fibonacci polynomials, which were introduced and examined in [39]. These polynomials extend several classical sequences, including the Fibonacci and Pell polynomials and their generalizations. In [40], the authors examined some features of the convolved generalized Fibonacci and Lucas polynomials. Other formulas concerning the convolved polynomials were developed in [41]. In [42], the authors introduced certain generalizations of the convolved generalized Fibonacci and Lucas polynomials. In [43], the authors derived novel formulas for convolved Pell polynomials, and in [44], the authors developed some new formulas for convolved Fibonacci polynomials. This paper introduces and uses a particular class of the generalized polynomials introduced in [39], namely, the convolved Fermat polynomials, which generalize the standard Fermat polynomials.
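To make the idea of convolution concrete, the following Python sketch generates polynomials from the generalized Fibonacci recurrence φ_{n+2}(x) = a(x) φ_{n+1}(x) + b(x) φ_n(x), with φ_0 = 0 and φ_1 = 1, and then convolves the sequence with itself. It assumes the standard Fermat choice a(x) = 3x, b(x) = -2 (Fibonacci: a = x, b = 1; Pell: a = 2x, b = 1), and it assumes that the "convolved polynomials of order k" are the coefficients of t^n in the k-th power of the generating function 1/(1 - a(x)t - b(x)t²). The normalization and indexing used in [39] and in this paper may differ; the function names are hypothetical.

```python
from numpy.polynomial import Polynomial

X = Polynomial([0.0, 1.0])  # the monomial "x"


def generalized_fibonacci(a, b, n_terms):
    """phi_1, ..., phi_{n_terms} from phi_0 = 0, phi_1 = 1, phi_{n+2} = a(x) phi_{n+1} + b(x) phi_n.

    Entry n of the result is the t^n coefficient of 1 / (1 - a(x) t - b(x) t^2).
    Assumed special cases: Fermat a = 3x, b = -2; Fibonacci a = x, b = 1; Pell a = 2x, b = 1.
    """
    prev, curr = Polynomial([0.0]), Polynomial([1.0])  # phi_0, phi_1
    seq = []
    for _ in range(n_terms):
        seq.append(curr)
        prev, curr = curr, a * curr + b * prev
    return seq


def convolved(seq, k):
    """k-fold Cauchy self-convolution of seq: the t^n coefficients of (generating function)^k.

    Hypothetical convention; k = 1 returns seq unchanged.
    """
    result = seq
    for _ in range(k - 1):
        result = [sum((result[j] * seq[n - j] for j in range(n + 1)), Polynomial([0.0]))
                  for n in range(len(seq))]
    return result


fermat = generalized_fibonacci(3 * X, -2, 5)
conv2 = convolved(fermat, 2)
print([round(p(1.0)) for p in fermat])  # values at x = 1
print([round(p(1.0)) for p in conv2])
```

At x = 1 the Fermat generating function factors as 1/((1 - t)(1 - 2t)), so the first list is 2^(n+1) - 1, that is, 1, 3, 7, 15, 31, and the second list holds the coefficients of its square, 1, 6, 23, 72, 201.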

Spectral methods are a class of numerical methods that approximate the solution of a differential equation by expanding it in terms of global basis functions, which are typically special functions. In contrast to finite difference and finite element methods, which rely on local approximations, spectral methods use global ones. A key advantage of spectral methods is their exponential convergence for smooth solutions; that is, the error decays exponentially as the number of basis functions increases. This makes spectral methods well suited to problems that require high accuracy. There are three major categories of spectral methods: the Galerkin, tau, and collocation methods. In the spectral Galerkin method, the solution is written as a series of basis functions, the residual is required to be orthogonal to the test functions, and the trial and test functions are chosen to coincide. In [45], the authors handled some partial DEs through the Galerkin method. In [46], the authors applied spectral Galerkin methods to some FDEs. The authors of [47] used the Galerkin method and generalized Chebyshev polynomials to treat some fractional delay pantograph DEs. Unlike the Galerkin method, the tau method allows the trial and test functions to be chosen independently. The authors of [48] utilized the tau method to treat the time-fractional cable problem. In [49], the authors treated systems of fractional-order integro-DEs using the tau method along with monic Laguerre polynomials. Other integro-DEs were treated in [50] using a tau–Gegenbauer spectral method. In the collocation method, the residual is required to vanish at a set of discrete collocation points. This approach is advantageous because it applies to a wide range of DEs, including nonlinear ones, and it is therefore used extensively. For example, the authors of [51] followed a collocation approach for certain stochastic FDEs. Another collocation method based on Chebyshev polynomials was used in [52] to solve some elliptic partial DEs. In [53], the Laguerre spectral collocation method was used to solve the space-fractional diffusion equation. The authors of [54] followed a collocation method to treat FitzHugh–Nagumo DEs. Another collocation method was employed in [55] to treat certain variable-order FDEs.
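As a minimal illustration of the collocation idea and of spectral accuracy, the Python sketch below solves the model boundary value problem u''(x) = -π² sin(πx) on [0, 1] with u(0) = u(1) = 0 (exact solution sin(πx)). It is not the algorithm of this paper: it assumes shifted Chebyshev polynomials T_j(2x - 1) as the basis instead of convolved Fermat polynomials, and it forces the residual to vanish at interior Chebyshev–Gauss–Lobatto points while imposing the two boundary conditions as extra equations. The model problem and the function name are hypothetical choices made for illustration.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def collocation_error(n):
    """Chebyshev collocation for u'' = -pi^2 sin(pi x), u(0) = u(1) = 0 (model problem).

    Trial solution: u(x) = sum_{j=0}^{n} a_j T_j(2x - 1) on [0, 1].
    Equations: the residual vanishes at the n - 1 interior Chebyshev-Gauss-Lobatto
    points, plus the two boundary conditions. Returns the max error against sin(pi x).
    """
    s = np.cos(np.pi * np.arange(1, n) / n)  # interior collocation nodes in (-1, 1)
    A = np.zeros((n + 1, n + 1))
    rhs = np.zeros(n + 1)
    for j in range(n + 1):
        e = np.zeros(j + 1)
        e[j] = 1.0  # coefficient vector of T_j
        A[: n - 1, j] = 4.0 * C.chebval(s, C.chebder(e, 2))  # d^2/dx^2 = 4 d^2/ds^2
        A[n - 1, j] = C.chebval(-1.0, e)  # u(0): x = 0 maps to s = -1
        A[n, j] = C.chebval(1.0, e)  # u(1): x = 1 maps to s = 1
    x_nodes = (s + 1.0) / 2.0
    rhs[: n - 1] = -np.pi ** 2 * np.sin(np.pi * x_nodes)
    coeffs = np.linalg.solve(A, rhs)
    x = np.linspace(0.0, 1.0, 201)
    return float(np.max(np.abs(C.chebval(2.0 * x - 1.0, coeffs) - np.sin(np.pi * x))))


for n in (4, 8, 12, 16):
    print(n, collocation_error(n))
```

Running the loop should show the maximum error dropping by several orders of magnitude each time n grows by four, which is the exponential convergence for smooth solutions described above.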

This paper concentrates on the numerical solution of the time-fractional Burgers’ equation [56]:

with the following conditions:

where

represents the source term and

represents the kinematic viscosity.

We comment here on the remark of Wang and He in [57], who demonstrated that, to maintain consistency, both time and space should be modeled fractionally, a principle termed the fractional spatio-temporal relation. This generalization will be a target of a forthcoming paper.

The following are the primary objectives of this paper:

Introducing certain polynomials that generalize the well-known standard Fermat polynomials, called the convolved Fermat polynomials.

Establishing the analytic and inversion formulas of these polynomials.

Constructing the operational derivative matrices of the convolved Fermat polynomials for both integer and fractional derivatives.

Developing a collocation technique to handle the time-fractional Burgers’ equation.

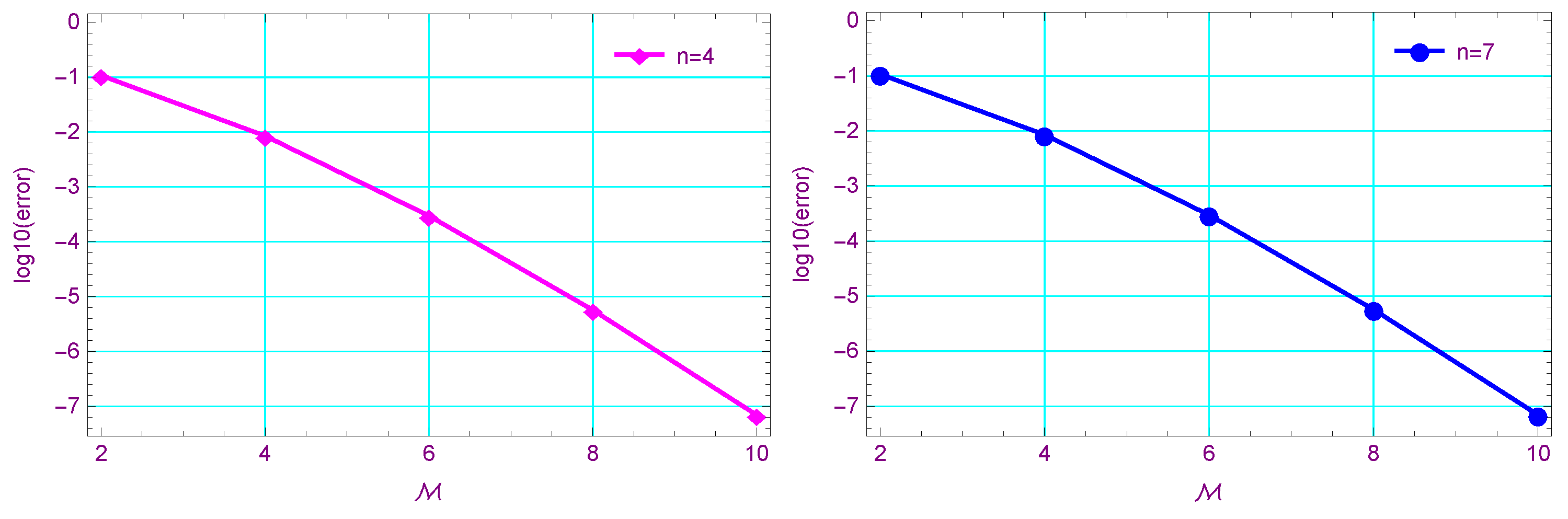

Analyzing the convergence of the convolved Fermat expansion and estimating its error.

Testing the accuracy of our numerical algorithm against other established techniques to demonstrate its effectiveness.
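The fractional operational matrix mentioned in the objectives above rests on how a fractional derivative acts on powers of t. The Python sketch below assumes the Caputo derivative of order 0 < α < 1, for which D^α t^n = Γ(n+1)/Γ(n+1-α) t^(n-α) when n ≥ 1 and the derivative of a constant is zero, and applies it term by term to a polynomial in power form. As an independent check it uses the classical L1 finite-difference scheme, a standard technique swapped in here for verification only and not part of the proposed method. Function names are hypothetical.

```python
from math import gamma


def caputo_poly(coeffs, alpha, t):
    """Caputo derivative of order alpha (0 < alpha < 1) of p(t) = sum_n coeffs[n] * t**n at t > 0.

    Term by term: D^alpha t^n = Gamma(n + 1) / Gamma(n + 1 - alpha) * t**(n - alpha) for n >= 1,
    and the Caputo derivative of a constant is zero (so the n = 0 term is skipped).
    """
    return sum(c * gamma(n + 1) / gamma(n + 1 - alpha) * t ** (n - alpha)
               for n, c in enumerate(coeffs) if n >= 1)


def caputo_l1(u, alpha, t, steps):
    """Classical L1 approximation of the Caputo derivative of u at t on a uniform grid over [0, t]."""
    tau = t / steps
    total = 0.0
    for k in range(steps):
        weight = (k + 1) ** (1 - alpha) - k ** (1 - alpha)
        total += weight * (u(t - k * tau) - u(t - (k + 1) * tau))
    return total / (gamma(2 - alpha) * tau ** alpha)


exact = caputo_poly([0.0, 0.0, 1.0], 0.5, 1.0)  # D^{1/2} of t^2 at t = 1
approx = caputo_l1(lambda s: s * s, 0.5, 1.0, 2000)
print(exact, approx)
```

For a linear function the L1 scheme is exact, while for t² it converges at a rate of order τ^(2-α); the closed-form power rule, in contrast, is exact for every polynomial, which is why polynomial bases combine naturally with fractional operational matrices.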

Furthermore, the main advantages and original contributions of this work can be listed as follows:

The theoretical results concerning the convolved Fermat polynomials are new. We think that the theoretical background of these polynomials developed in this paper may be utilized in other contributions on the numerical solution of differential equations.

To our knowledge, employing such polynomials in numerical analysis is new, which motivates this study.

Highly accurate solutions can be obtained by employing the convolved Fermat polynomials as basis functions.

The rest of the paper is organized as follows. The next section overviews the convolved generalized polynomials and some of their particular polynomials. Some new formulas of the convolved Fermat polynomials, such as their power form representation and its inversion formula, are developed in

Section 3.

Section 4 analyzes the numerical algorithm designed to solve the fractional Burgers’ equation based on the application of the collocation method using the convolved Fermat polynomials as basis functions. The convergence of the convolved Fermat expansion is presented in

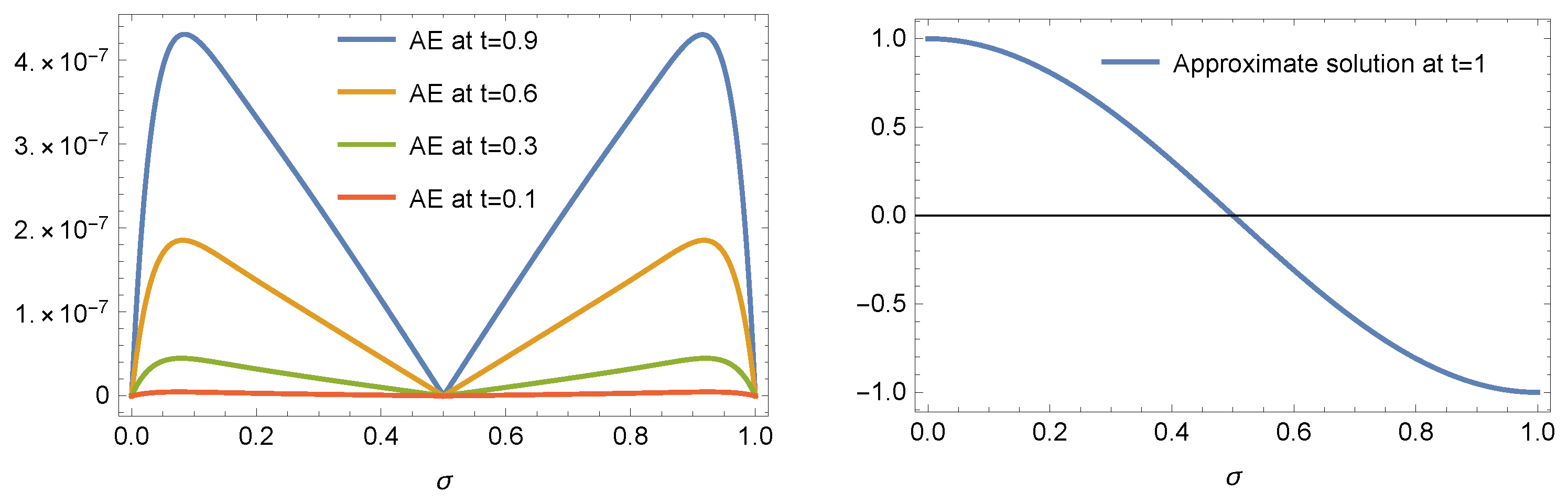

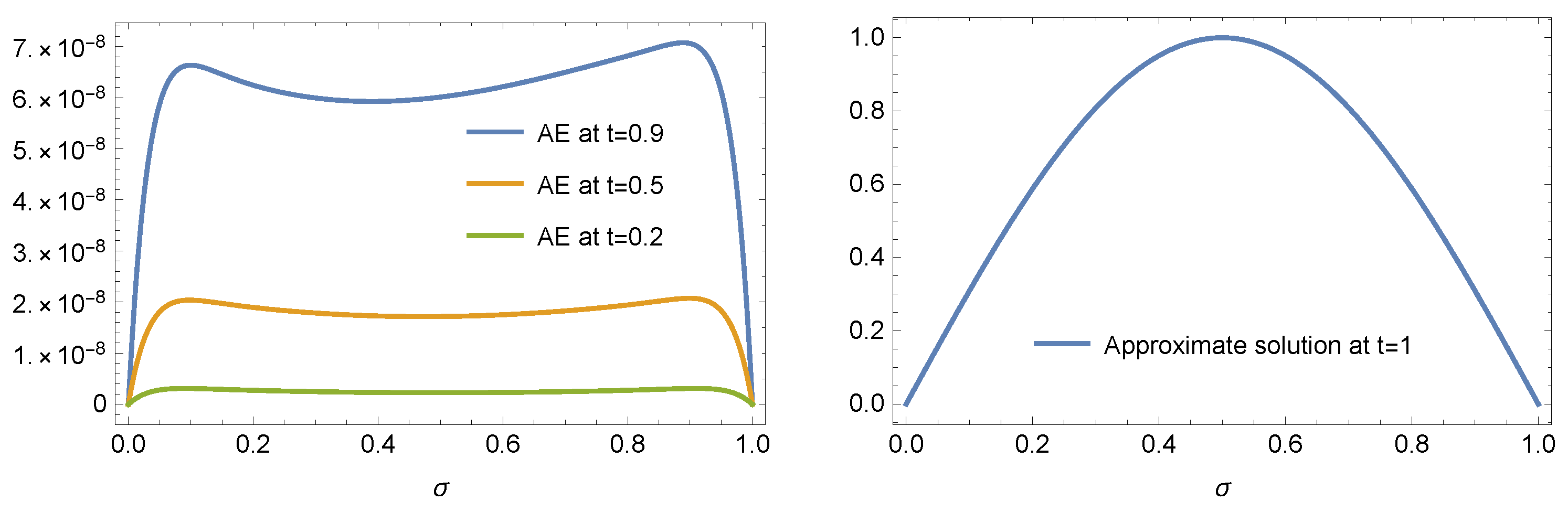

Section 5 by stating and proving some lemmas and theorems. Some illustrative examples are presented in

Section 6 accompanied by comparisons with some other algorithms to test our numerical algorithm. Finally,

Section 7 presents the concluding findings.