Abstract

Interior design, which integrates art and science, is vulnerable to infringements such as copying and tampering. Because interior designs are unique and often intricate, they are easily replicated and misused without authorization, which makes it difficult for designers to protect their intellectual property. To address this problem, we propose a deep learning-based zero-watermark copyright protection method. The method binds unique copyright information to an interior design image through image fusion, without modifying the image itself. Specifically, the method fuses the interior design and a watermark image through deep learning to generate a highly robust zero-watermark image. This study also proposes a U-Net-based zero-watermark verification network that verifies the watermark and efficiently extracts the copyright information. The network accurately restores the watermark information from protected interior design images, thereby establishing the copyright ownership of the interior design. Experiments on two interior design datasets show that the proposed method is robust against various image attacks. Because the original image is never modified, the method also avoids the image quality loss that traditional watermarking techniques may cause. Therefore, this method offers an effective means of copyright protection in the field of interior design.

MSC:

68T07; 68T01; 54H30

1. Introduction

Interior design is a creative output of human society. As a discipline that integrates art and science, it produces a variety of media forms that carry designers’ unique creative concepts. The digital age has opened up more possibilities for design innovation and application. Many researchers have used human designers’ design data to batch-generate designs with specific decorative styles and spatial functions, and have retrained diffusion models to create new datasets of interior decorative styles, further expanding the creative boundaries of design [1]. At the same time, the expanding channels for disseminating design works, while promoting design sharing and communication, also bring new challenges. In digital environments, design works are more susceptible to theft, tampering, and illegal copying and are exposed to the risk of copyright infringement [2,3,4]. Such actions not only infringe upon the intellectual property rights of the original creators but may also cause substantial economic losses. Therefore, protecting original designs and establishing a sound copyright protection mechanism backed by reliable technology are of great significance for safeguarding designers’ rights and interests and promoting the healthy development of the industry.

As an essential method of embedding copyright information into digital media, watermarking serves both copyright protection and content verification. However, traditional watermarking has certain limitations in protecting interior design. First, visible watermarks reduce the visual quality and original expression of design works and weaken the aesthetic relationships conveyed in artistic communication. Second, to ensure robustness, traditional techniques often require a high embedding strength, and embedding a watermark in the high-contrast areas of a design image can destroy its color hierarchy and texture details. Zero-watermarking techniques offer a new perspective on this problem. Unlike traditional watermarking techniques, which directly modify the carrier image, zero-watermarking techniques provide copyright protection by extracting the inherent features of an image [5]. However, existing zero-watermarking methods still have problems. First, most zero-watermarking techniques based on image transforms are not robust to geometric attacks, because their handcrafted features depend on the relative positions of pixels in the image. Second, interior designs have rich color levels and texture details, but most zero-watermarking methods extract features only from grayscale images. Such features capture only shallow information and cannot fully reflect the complex structure of an image. As a result, existing methods lack robustness when applied to interior designs with complex colors and textures, which limits their use in real scenarios.

To solve the above problems, we propose an interior design protection scheme based on deep learning image fusion. The scheme first uses deep learning to extract high-order color and texture features from the host image and salient-region and edge features from the watermark image. To construct a richer and more robust feature space, these two types of features are then fused to generate a zero-watermark image for copyright protection. In the copyright determination stage, the host image is superimposed with the zero-watermark image and fed into the zero-watermark authentication network, which extracts the copyright information and enables accurate copyright determination. This scheme addresses the insufficient robustness of traditional methods in complex image scenarios and helps improve the copyright protection system for interior design, promoting the development of the industry.

The contributions of this study are as follows:

- A novel zero-watermark method for interior design preservation based on image fusion through deep learning is proposed.

- A zero-watermark authentication network for extracting copyright protection information for accurate copyright identification for interior design is proposed.

- Our proposed method has good robustness against various types of attacks.

The remainder of this article is structured as follows: Section 2 reviews related work on zero-watermark methods. Section 3 presents the proposed method. Section 4 describes the experimental validation of the method. Section 5 discusses the method, and Section 6 concludes the paper.

2. Related Work

2.1. Watermark Protection Methods

Watermarking, as an essential method of embedding copyright information into digital media, has evolved from simple visible watermarks to complex invisible watermarks [6]. Traditional watermarking achieves copyright protection and content verification by embedding information such as text, images, or digital data into the spatial or frequency domain [7,8]. Research has shown that an effective watermarking system must simultaneously satisfy three basic requirements: imperceptibility, robustness, and security [9]. A copyright watermark should not affect the normal display of the disseminated creative content and should resist external theft and attacks [10]. In recent years, with the rapid development of digital media technology, practical applications have gradually revealed that traditional watermarking suffers from insufficient attack resistance and limited embedding capacity [11]. Because current watermarking for interior design images mainly operates in the visual domain [12], traditional watermarking faces two types of limitations when used to protect interior design. First, directly embedding a traditional watermark can cause an irreversible loss of image quality, destroying the image’s color hierarchy and texture details [13]. Visible watermarks in particular can degrade the visual quality and original expression of a design work and even weaken the aesthetic relationships in artistic communication. For example, embedding traditional watermarks in marble design images can distort the gloss and texture details of the material. Second, traditional watermarking often lacks robustness under complex image processing, and it is usually difficult to determine the optimal embedding region [14].
In addition, although watermarking is widely used as an effective protection method, it also carries security risks. In particular, if the watermarking algorithm is not sufficiently secure, an attacker may remove or tamper with the watermark information.

Therefore, as interior design ideas circulate in the marketplace, watermarking technology should be compatible with different image formats and processing pipelines, so that watermarking algorithms can strike a better balance between protecting information and maintaining image quality.

2.2. Zero-Watermark Protection Methods

In contrast to traditional watermarking methods, zero-watermarking techniques protect the intellectual property of design works without altering the original design, by extracting image features for formal copyright authentication [5]. Based on the concept of zero-watermarking, Liu et al. designed a zero-watermarking scheme that combines the dual-tree complex wavelet transform and the discrete cosine transform (DCT), and experimentally verified that the scheme performs better under multiple image attacks [15]. However, in current practice, the extracted image features are still not robust enough, which weakens the performance of zero-watermarking methods. Therefore, a growing number of teams are using deep neural networks to learn and build automatic image watermarking algorithms [16]. Such deep learning-based zero-watermarking techniques have attracted the interest of scholars working on digital image copyright protection because they extract image features automatically and efficiently.

Several studies have shown that a fusion mechanism based on deep feature extraction not only enhances the robustness of zero-watermarking methods but also provides a safeguard for the intellectual property of artistic creators [17]. Xiang et al. used image style features to construct zero-watermarks [18]. In addition, Shi et al. proposed a zero-watermarking algorithm based on multiple features and chaotic encryption to improve the distinguishability of different zero-watermark images [19], and Li et al. pointed out that deep learning-based zero-watermarking is driving change in the field of copyright protection at an unprecedented speed [20]. Other studies have also applied deep feature extraction to zero-watermarking. Cao’s team [21] designed a multi-scale feature fusion mechanism that accurately extracts watermark information during image propagation even under malicious attacks such as geometric transformation, compression, or cropping. Such deep learning-based zero-watermarking methods perform well against various attacks while maintaining the image’s visual quality, providing new ideas for the copyright protection of interior design works [22].

3. Method

3.1. Overview

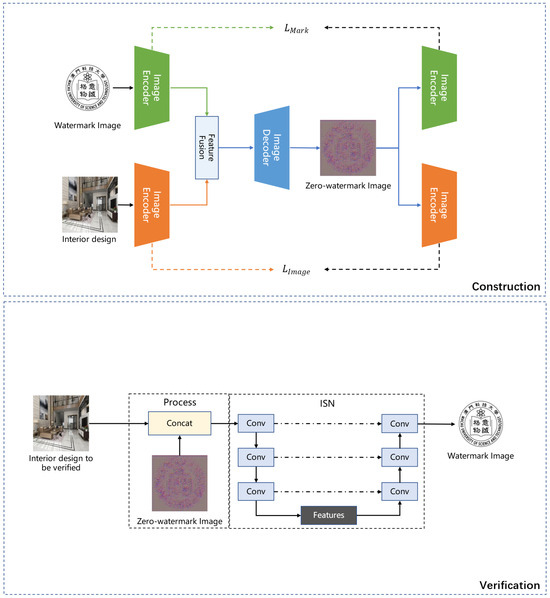

The flowchart of the methodology proposed in this study is shown in Figure 1. It consists of two primary parts: zero-watermark construction based on an image fusion network and zero-watermark authentication utilizing an inspection network (ISN).

Figure 1.

The overall structure of the proposed zero-watermark method.

In the zero-watermark construction phase, the protected interior design and watermark images are processed through an encoder to extract their respective features. Subsequently, these extracted features are fused, and the fused feature representation is passed through the decoder to generate a robust zero-watermark image, which is then stored. The construction approach aims to leverage deep learning techniques to integrate the interior design with the watermark image, ensuring that the zero-watermark image contains the salient features of both the interior design and the watermark image. Our construction approach can significantly enhance the robustness of the watermark against various forms of image attacks.

An inspection network (ISN) is employed to verify the zero-watermark image. The ISN is trained to decompose the zero-watermark image and reconstruct the watermark image embedded in it. During authentication, the interior design to be verified and the stored zero-watermark image are used as inputs to the network, which extracts the originally embedded watermark image for copyright authentication.

3.2. Zero-Watermark Construction

Here, the protected interior design serves as the host image, and the watermark image carries the copyright information. Both images are processed pixel-wise, with each pixel value indexed by its spatial position.

First, zero-watermark generation takes the interior design and the watermark image as inputs and extracts their features with the encoder.

Second, the features extracted from the two images are fused.

Third, the decoder converts the fused features into a zero-watermark image.

Finally, the zero-watermark image is fed back into the feature extraction encoder, and the training loss is calculated.

3.2.1. Feature Extraction

Input: The interior design and the watermark image.

Output: The extracted features of the interior design and the watermark image, each of size H × W × C, where H and W represent the height and width of the feature map, respectively, and C represents the number of channels.

During zero-watermark construction, the encoder transforms the interior design and the watermark image into high-dimensional feature representations, which provide a reliable basis for zero-watermark generation. Considering the efficiency and performance requirements of zero-watermark construction, we adopt the lightweight MobileNet v2 [23] to extract features from the image content efficiently. MobileNet v2 uses depthwise-separable convolutions and an inverted residual structure to reduce the amount of computation and the number of parameters while still extracting features effectively. In this study, MobileNet v2 uses four inverted residual blocks, with a stride of 1 in the first and third blocks and a stride of 2 in the second and fourth blocks. The structure of the feature extraction network is summarized in Table 1.
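To make the parameter saving of depthwise-separable convolution concrete, the following minimal sketch counts the weights (biases ignored) of a standard k × k convolution and of a depthwise k × k convolution followed by a 1 × 1 pointwise convolution. The layer sizes are hypothetical illustrations, not the values in Table 1.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a depthwise k x k convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution mixes the channels
    return depthwise + pointwise


# Hypothetical layer: 32 input channels, 64 output channels, 3 x 3 kernel.
std = standard_conv_params(32, 64, 3)
sep = depthwise_separable_params(32, 64, 3)
print(std, sep, round(std / sep, 2))  # 18432 2336 7.89
```

The ratio of the two counts equals 1/c_out + 1/k², so for 3 × 3 kernels the saving approaches a factor of nine as the number of output channels grows.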

Table 1.

The structure of the feature extraction network.

First, the input interior design and watermark image each pass through a convolution layer, batch normalization, and an activation function to obtain shallow features.

Here, the interior design and the watermark are processed by separate feature extraction networks, whose first stage outputs the shallow features. Batch normalization normalizes its input X using the mean and variance of X and then applies a learnable scaling factor and offset.
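The shallow-feature stage (convolution, batch normalization, activation) can be illustrated with the following NumPy sketch of the batch normalization step. It assumes feature maps laid out as (N, C, H, W) and uses ReLU6, the activation of the original MobileNetV2, as a stand-in, since the text does not name the activation; the random input stands in for a convolution output.

```python
import numpy as np


def batch_norm(x: np.ndarray, gamma: np.ndarray, beta: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each channel of an (N, C, H, W) batch with its mean and variance,
    then apply the learnable scaling factor gamma and offset beta."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)


def relu6(x: np.ndarray) -> np.ndarray:
    """ReLU6 activation (an assumption; MobileNetV2's original choice)."""
    return np.clip(x, 0.0, 6.0)


rng = np.random.default_rng(0)
conv_out = rng.normal(loc=3.0, scale=2.0, size=(4, 8, 16, 16))  # stand-in convolution output
bn_out = batch_norm(conv_out, gamma=np.ones(8), beta=np.zeros(8))
shallow = relu6(bn_out)
print(bn_out.mean(axis=(0, 2, 3)).round(4), bn_out.std(axis=(0, 2, 3)).round(4))
```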

Second, four inverted residual blocks are applied to reduce the number of model parameters while maintaining model performance. Each block contains an expansion layer, a depthwise-separable convolution, and a residual connection (applied when the input and output shapes match). The expansion layer increases the number of channels through convolution. After the four inverted residual blocks, a depthwise-separable convolution layer produces the features extracted from the images. The extracted features of the interior design and the watermark image are then used in the subsequent feature fusion.
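The following NumPy sketch shows the data flow of one inverted residual block (1 × 1 expansion, 3 × 3 depthwise convolution, linear 1 × 1 projection, and an identity shortcut when shapes allow) and stacks four blocks with the strides 1, 2, 1, 2 given above. The expansion factor of 6 follows the MobileNetV2 default; the channel count, random weights, and the omission of batch normalization are simplifying assumptions for illustration only.

```python
import numpy as np


def pointwise(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1 x 1 convolution: x has shape (C_in, H, W), w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def depthwise3x3(x: np.ndarray, k: np.ndarray, stride: int) -> np.ndarray:
    """3 x 3 depthwise convolution with zero padding 1; k has shape (C, 3, 3)."""
    c, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out_h, out_w = (h - 1) // stride + 1, (w - 1) // stride + 1
    out = np.zeros((c, out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = xp[:, i * stride:i * stride + 3, j * stride:j * stride + 3]
            out[:, i, j] = (patch * k).sum(axis=(1, 2))  # each channel uses its own filter
    return out


def relu6(x: np.ndarray) -> np.ndarray:
    return np.clip(x, 0.0, 6.0)


def inverted_residual(x, c_out, stride, expand=6, rng=None):
    """Expansion (1 x 1) -> depthwise 3 x 3 -> linear projection (1 x 1).

    Random weights and no batch normalization: this only illustrates shapes and data flow.
    """
    if rng is None:
        rng = np.random.default_rng(0)
    c_in = x.shape[0]
    hidden = c_in * expand
    h = relu6(pointwise(x, rng.normal(0.0, 0.1, (hidden, c_in))))            # expansion layer
    h = relu6(depthwise3x3(h, rng.normal(0.0, 0.1, (hidden, 3, 3)), stride))
    y = pointwise(h, rng.normal(0.0, 0.1, (c_out, hidden)))                  # linear bottleneck
    if stride == 1 and c_in == c_out:
        y = y + x                                                            # residual connection
    return y


x = np.random.default_rng(1).normal(size=(16, 32, 32))  # hypothetical 16-channel input
for stride in (1, 2, 1, 2):  # strides of the four blocks described in the text
    x = inverted_residual(x, c_out=16, stride=stride)
print(x.shape)  # (16, 8, 8)
```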

3.2.2. Feature Fusion

Input: The extracted features of the interior design and the watermark image, each described by its height, width, and number of channels.

Output: The fused feature, likewise described by its height, width, and number of channels.

Generally, zero-watermark images constructed from a single feature are prone to loss or corruption under common attacks, such as noise, compression, and cropping. To address this, we fuse the features of the protected image and the watermark image to generate a more complex and robust feature representation, which effectively improves the performance of the zero-watermark features.

After feature extraction, we take the two extracted features as inputs and fuse them into the fused feature using Equation (13).

In Equation (13), a control coefficient regulates the fusion ratio between the interior design feature and the watermark feature.
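Because the exact form of Equation (13) is not reproduced here, the following sketch assumes one common choice for coefficient-controlled fusion: a convex combination of the two feature maps. The function name and the [0, 1] range for the coefficient are hypothetical.

```python
import numpy as np


def fuse_features(f_design: np.ndarray, f_mark: np.ndarray, alpha: float) -> np.ndarray:
    """Hypothetical fusion rule: alpha * design feature + (1 - alpha) * watermark feature.

    alpha = 1 keeps only the interior design feature; alpha = 0 keeps only the
    watermark feature. This is an assumed form, not a transcription of Equation (13).
    """
    if f_design.shape != f_mark.shape:
        raise ValueError("both features must have the same (C, H, W) shape")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * f_design + (1.0 - alpha) * f_mark


f_design = np.full((8, 4, 4), 2.0)  # stand-in interior design feature
f_mark = np.zeros((8, 4, 4))        # stand-in watermark feature
fused = fuse_features(f_design, f_mark, alpha=0.7)
print(fused.shape, float(fused[0, 0, 0]))
```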

3.2.3. Zero-Watermark Image Generation

Input: The fused feature.

Output: A zero-watermark image Z.

The zero-watermark image is generated by the decoder, which decodes the fused features into a robust zero-watermark image based on semantic features. This ensures that the generated zero-watermark image contains the fused features of both the interior design and the watermark image and is highly resistant to attacks, which improves the overall robustness and applicability of the zero-watermarking method.

The decoder upsamples the fused feature maps and reduces their channels through transposed convolution and batch normalization, gradually generating the zero-watermark image. Meanwhile, the ReLU activation function enhances the nonlinear representation capability. The detailed structure is shown in Table 2.

Table 2.

The structure of the decoder.

Here, Z denotes the generated zero-watermark image. The decoder consists of three upsampling blocks built on transposed convolution, a convolution that adjusts the number of channels, and two activation functions.
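To illustrate how three transposed-convolution blocks enlarge the feature map, the following sketch applies the standard output-size formula for 2D transposed convolution (the convention documented for PyTorch's `ConvTranspose2d`). The starting size and the kernel, stride, and padding values are hypothetical choices that double the size at each block; the actual settings are those in Table 2.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int = 0,
                       output_padding: int = 0, dilation: int = 1) -> int:
    """Output length of a transposed convolution along one spatial axis."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


size = 16  # hypothetical spatial size of the fused feature map
for block in range(1, 4):  # three upsampling blocks
    size = conv_transpose_out(size, kernel=4, stride=2, padding=1)
    print(f"after block {block}: {size} x {size}")
# after block 1: 32 x 32, after block 2: 64 x 64, after block 3: 128 x 128
```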

To ensure that the extracted features of the interior design and the watermark image remain intact and correlated with the generated zero-watermark image, we designed a two-way reconstruction mechanism. Specifically, the generated zero-watermark image is fed back into the interior design encoder and the watermark encoder, yielding one generated feature of Z for each branch. This helps ensure the quality and feature correlation of the generated zero-watermark image.

The overall loss combines two terms, a watermark loss and an interior design loss, balanced by a control parameter.

First, the watermark loss measures the difference between the watermark image and the zero-watermark image Z. It compares, at each pixel position, the feature that the watermark branch generates from Z with the pixel value of the watermark image.

Second, the interior design loss measures the difference between the interior design and the zero-watermark image Z. It compares, at each pixel position, the feature that the interior design branch generates from Z with the pixel value of the interior design.
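A minimal sketch of the training objective follows, assuming that both terms are pixel-wise mean squared errors and that the control parameter weights the interior design term. These choices and the name `lam` are assumptions for illustration; the paper's exact formulas may differ.

```python
import numpy as np


def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean of squared pixel-wise differences."""
    return float(np.mean((a - b) ** 2))


def construction_loss(z_mark_feat, watermark, z_design_feat, design, lam=0.5):
    """Assumed overall loss: L = L_w + lam * L_d.

    z_mark_feat:   watermark-branch feature generated from Z (same shape as watermark)
    z_design_feat: design-branch feature generated from Z (same shape as design)
    """
    loss_w = mse(z_mark_feat, watermark)  # watermark loss
    loss_d = mse(z_design_feat, design)   # interior design loss
    return loss_w + lam * loss_d, loss_w, loss_d


total, loss_w, loss_d = construction_loss(
    np.ones((8, 8)), np.zeros((8, 8)),        # watermark term: every pixel off by 1
    np.full((8, 8), 3.0), np.ones((8, 8)),    # design term: every pixel off by 2
)
print(total, loss_w, loss_d)  # 3.0 1.0 4.0
```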

By training the network to minimize the overall loss, we obtain a zero-watermark image that contains information on both the interior design and the watermark. Because the fused features are complex, the zero-watermark image offers a degree of security and is difficult to crack illegally. Moreover, the zero-watermark image is robust because it is generated from stable image features obtained through feature extraction and fusion.

3.3. Zero-Watermark Verification

The zero-watermark verification process consists of two steps. First, the interior design to be tested and the zero-watermark image are fed into the inspection network (ISN). Second, the ISN transforms this input into the reconstructed copyright image.

ISN

The ISN is based on U-Net [24], as shown in Figure 1. The network consists of three parts: an encoder, a bottleneck layer, and a decoder. The encoder consists of four convolutional blocks, each followed by a max pooling layer, which gradually reduces the size of the feature map while increasing the number of feature channels. The bottleneck layer contains a convolutional module that processes the encoder output before passing it to the decoder. The decoder restores the feature map size through stepwise upsampling with transposed convolutional layers and concatenates each upsampled map with the encoder output of the corresponding level through skip connections. The decoder also contains four convolutional blocks, which process these concatenated feature maps. Finally, the output layer uses a convolution to map the number of channels to the target number of output channels.
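The following sketch traces feature-map shapes through a four-level U-Net like the ISN: channels double at each encoder level while 2 × 2 max pooling halves the spatial size, and each decoder level concatenates the upsampled map with its skip connection. The base width of 64 channels follows the original U-Net and, like the single output channel, is a hypothetical choice here; input sizes must be divisible by 16.

```python
def unet_shapes(height, width, base=64, depth=4, out_ch=1):
    """Trace feature-map shapes through a U-Net-style ISN (hypothetical widths).

    Returns a list of (stage, channels, height, width) tuples.
    """
    trace, skips = [], []
    h, w = height, width
    for level in range(depth):                  # encoder: conv block, then 2x2 max pool
        c = base * 2 ** level
        trace.append((f"enc{level + 1}", c, h, w))
        skips.append((c, h, w))
        h, w = h // 2, w // 2
    c = base * 2 ** depth                       # bottleneck conv block
    trace.append(("bottleneck", c, h, w))
    for level in reversed(range(depth)):        # decoder: upsample, concat skip, conv block
        skip_c, h, w = skips[level]
        concat_c = c // 2 + skip_c              # upsampled channels + skip channels
        c = skip_c
        trace.append((f"dec{level + 1} (concat {concat_c})", c, h, w))
    trace.append(("output", out_ch, h, w))      # final convolution maps to output channels
    return trace


for row in unet_shapes(256, 256):
    print(row)
```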

First, we simulate various image attacks on the interior design image and obtain the set of attacked images, as expressed in Equation (24), in which an attack operation function is applied with different parameter combinations that represent different attack strategies or intensities.
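As an illustration of building the attacked-image set, the following NumPy sketch implements the three noise attacks used in Section 4.2.1 and applies a list of (attack, parameters) pairs to one image. The noise models follow common definitions (speckle noise is multiplicative, as in scikit-image); the intensity values are hypothetical and not those of Table 3.

```python
import numpy as np

rng = np.random.default_rng(42)


def gaussian_noise(img, var=0.01):
    """Additive zero-mean Gaussian noise with variance var."""
    return np.clip(img + rng.normal(0.0, np.sqrt(var), img.shape), 0.0, 1.0)


def salt_and_pepper(img, amount=0.05):
    """Set a fraction `amount` of pixels to 0 (pepper) or 1 (salt)."""
    out = img.copy()
    u = rng.random(img.shape[:2])
    out[u < amount / 2] = 0.0
    out[u > 1 - amount / 2] = 1.0
    return out


def speckle_noise(img, var=0.01):
    """Multiplicative noise: img + img * n, with n ~ N(0, var)."""
    return np.clip(img + img * rng.normal(0.0, np.sqrt(var), img.shape), 0.0, 1.0)


def attack_set(img, attacks):
    """Apply each (attack function, parameters) pair to img."""
    return [fn(img, **params) for fn, params in attacks]


host = rng.random((64, 64, 3))  # stand-in interior design image with values in [0, 1]
attacks = [  # hypothetical intensities (not Table 3)
    (gaussian_noise, {"var": 0.01}), (gaussian_noise, {"var": 0.05}),
    (salt_and_pepper, {"amount": 0.01}), (salt_and_pepper, {"amount": 0.05}),
    (speckle_noise, {"var": 0.01}), (speckle_noise, {"var": 0.05}),
]
attacked = attack_set(host, attacks)
print(len(attacked), attacked[0].shape)
```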

Next, we take the generated zero-watermark image Z and the set of attacked images as inputs and use the watermark image as the target output for ISN training. The training loss for verification is defined in Equation (25).

In Equation (25), the reconstructed copyright image is compared with the watermark image, and the error is normalized by the dimension of the copyright image.

After the ISN is trained, we feed the generated zero-watermark image Z and the image under test into the ISN to obtain the reconstructed copyright image for copyright verification.
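The following sketch shows one plausible way to assemble ISN training pairs and the verification loss. It assumes that Z and the image under test are stacked along the channel axis and that the loss is a squared error divided by the size of the copyright image; both are assumptions, since Equation (25) and the exact input format are not reproduced here.

```python
import numpy as np


def isn_input(zero_watermark, image):
    """Stack Z and an image along the channel axis (assumed ISN input format)."""
    if zero_watermark.shape[:2] != image.shape[:2]:
        raise ValueError("Z and the image must have the same height and width")
    return np.concatenate([zero_watermark, image], axis=-1)


def verification_loss(reconstructed, watermark):
    """Squared error summed over the copyright image, divided by its size."""
    return float(np.sum((reconstructed - watermark) ** 2) / watermark.size)


def training_pairs(zero_watermark, attacked_images, watermark):
    """Every attacked copy of the host image shares the same target: the watermark."""
    return [(isn_input(zero_watermark, a), watermark) for a in attacked_images]


rng = np.random.default_rng(0)
z = rng.random((64, 64, 3))                               # stand-in zero-watermark image
attacked = [rng.random((64, 64, 3)) for _ in range(3)]    # stand-in attacked images
mark = (rng.random((64, 64, 1)) > 0.5).astype(float)      # stand-in binary watermark
pairs = training_pairs(z, attacked, mark)
print(len(pairs), pairs[0][0].shape)  # 3 (64, 64, 6)
```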

Compared with traditional verification methods, this method can still effectively recover the original watermark features from a damaged image when facing high-intensity image attacks, enhancing its overall robustness.

4. Experiments

4.1. Dataset and Evaluation Indexes

We selected two interior design datasets for testing: interior_design https://www.kaggle.com/datasets/aishahsofea/interior-design (accessed on 24 February 2025) and a synthetic dataset for home interiors https://www.kaggle.com/datasets/luznoc/synthetic-dataset-for-home-interior/ (accessed on 24 February 2025). Both datasets are hosted on Kaggle. The interior_design (I_D) dataset includes 4147 interior design photos of different locations and styles. The synthetic dataset for home interiors (SHI) consists of diverse annotated synthetic data for computer vision projects and includes 85 high-quality synthetic RGB interior design images showcasing rich interior scenes.

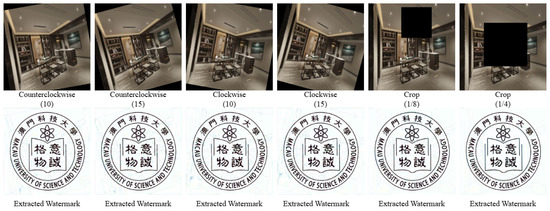

In our experiment, five images were selected from the I_D dataset and four from the SHI dataset for performance evaluation. All test images had the same size. The copyright watermark image is shown in Figure 2. The model was optimized with Adam.

Figure 2.

The interior designs after conventional attacks and the extracted copyright images.

We adopted the peak signal-to-noise ratio (PSNR) and the normalized correlation (NC) coefficient to evaluate the performance of the proposed method.

First, the PSNR is commonly used to measure image quality [25]; here, it measures the quality of the reconstructed copyright image relative to the watermark image. A higher PSNR indicates better image quality. The calculation is given in Equation (26):

$$\mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\mathrm{MSE}} \tag{26}$$

where MAX represents the maximum possible pixel value of the image (e.g., 255 for 8-bit images), and MSE denotes the mean squared error between the two images.
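A minimal pure-Python sketch of PSNR as described in this section, operating on flattened pixel sequences; `max_value` defaults to 255, which assumes 8-bit images.

```python
import math


def psnr(reference, test, max_value=255.0):
    """PSNR in dB between two equal-length sequences of pixel values.

    max_value = 255 assumes 8-bit images; use 1.0 for images scaled to [0, 1].
    """
    if not reference or len(reference) != len(test):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


ref = [10, 20, 30, 40]
rec = [11, 19, 31, 39]           # every pixel off by 1, so MSE = 1
print(round(psnr(ref, rec), 2))  # 48.13
```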

Second, the NC verifies the robustness of the watermarking method by calculating the similarity between the reconstructed copyright image and the original embedded watermark image [26]. NC ranges from −1 to 1, and the closer the NC value is to 1, the more robust the algorithm. The calculation is given in Equation (27).

In Equation (27), the pixel values of the watermark image and the reconstructed copyright image are compared at each position.
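Equation (27) is not reproduced here, so the following NumPy sketch implements a common form of NC: the inner product of the two images divided by the product of their Euclidean norms. With non-negative pixel values this form lies in [0, 1]; negative values arise only when pixels are mapped to signed values, such as ±1 for binary watermarks.

```python
import numpy as np


def normalized_correlation(w: np.ndarray, w_rec: np.ndarray) -> float:
    """Common NC form: sum(w * w_rec) / (||w|| * ||w_rec||), over all pixels."""
    a = np.asarray(w, dtype=float).ravel()
    b = np.asarray(w_rec, dtype=float).ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0:
        raise ValueError("NC is undefined when either image is all zeros")
    return float(a @ b / denom)


rng = np.random.default_rng(0)
mark = np.where(rng.random((32, 32)) > 0.5, 1.0, -1.0)  # binary watermark mapped to +/-1
noisy = mark + rng.normal(0.0, 0.3, mark.shape)          # stand-in reconstruction
print(round(normalized_correlation(mark, noisy), 3))
```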

4.2. Robustness Evaluation

4.2.1. Conventional Attacks

To verify the robustness of the proposed method against conventional attacks, we applied different conventional attacks to interior design images and compared the watermark image with the copyright image extracted from each attacked image. The conventional attacks comprised three types of noise attacks (Gaussian, salt-and-pepper, and speckle noise) and three types of filtering attacks (Gaussian filter, mean filter, and median filter). Table 3 lists the specific attack types and intensities. Table 4 and Table 5 show the PSNR and NC results of the proposed method on the I_D dataset, respectively, while Table 6 and Table 7 show the PSNR and NC results on the SHI dataset, respectively. Figure 2 shows the attacked images and the extracted copyright images.
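For reference, the three filtering attacks can be sketched in NumPy as sliding-window operations on a single-channel image (apply per channel for color images). The window size, σ, and edge padding mode are hypothetical defaults, not the intensities listed in Table 3.

```python
import numpy as np


def _windows(img, size):
    """Stack the size*size shifted copies of an edge-padded 2D image."""
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    h, w = img.shape
    return np.stack([padded[i:i + h, j:j + w] for i in range(size) for j in range(size)])


def mean_filter(img, size=3):
    return _windows(img, size).mean(axis=0)


def median_filter(img, size=3):
    return np.median(_windows(img, size), axis=0)


def gaussian_filter(img, size=3, sigma=1.0):
    r = size // 2
    ax = np.arange(-r, r + 1)
    kernel = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()  # weights sum to 1, so flat regions are preserved
    return np.tensordot(kernel.ravel(), _windows(img, size), axes=1)


impulse = np.zeros((7, 7))
impulse[3, 3] = 1.0  # a single bright pixel
print(mean_filter(impulse)[3, 3], median_filter(impulse).max())
```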

Table 3.

The types and intensities of conventional attacks.

Table 4.

The PSNR results of conventional attacks for the I_D dataset.

Table 5.

The NC results of conventional attacks for the I_D dataset.

Table 6.

The PSNR results of conventional attacks for the SHI dataset.

Table 7.

The NC results of conventional attacks for the SHI dataset.

The experimental results show that the proposed method is robust against conventional noise and filtering attacks. For the three noise types (Gaussian, salt-and-pepper, and speckle noise), the NC and PSNR values decrease slightly as the noise intensity increases. However, the overall change is small: the average PSNR and NC values were 25.30 and 0.987, respectively, which shows that the method is robust. For the three filters (Gaussian, mean, and median filtering), different filtering strengths have an even smaller effect on the NC and PSNR values; the average PSNR and NC values were 25.46 and 0.988, respectively, indicating that the method effectively resists filtering interference. Traditional zero-watermark schemes generally degrade under intense noise or filtering attacks, whereas our method maintains high robustness under these attacks. This stability can be attributed to the ability of deep learning to extract stable features. In addition, although different types of noise and filtering introduce defects into the original image, the overall structural integrity of the extracted copyright information remains largely unchanged. Overall, the method demonstrates strong robustness against conventional noise and filtering attacks.

4.2.2. Geometric Attacks

To verify the robustness of the proposed method under geometric attacks, we applied the geometric attacks listed in Table 8 to the test images. The PSNR and NC results for the I_D dataset are shown in Table 9 and Table 10, and those for the SHI dataset are shown in Table 11 and Table 12. In addition, Figure 3 shows the images after geometric attacks and the extracted copyright images.
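The following NumPy sketch implements two typical geometric attacks: rotation about the image center with nearest-neighbor sampling (uncovered corners set to zero) and a cropping attack that blanks a top-left block. Both conventions and their parameters are illustrative assumptions; the attack settings actually used are those in Table 8.

```python
import numpy as np


def rotate_nearest(img: np.ndarray, degrees: float) -> np.ndarray:
    """Rotate about the image centre with nearest-neighbour sampling.

    Output pixels whose source falls outside the image are set to 0 (black corners).
    """
    h, w = img.shape[:2]
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for each output pixel, find the source pixel it comes from.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def crop_attack(img: np.ndarray, fraction: float = 0.25) -> np.ndarray:
    """Hypothetical cropping attack: zero out a top-left block covering `fraction` of the area."""
    h, w = img.shape[:2]
    side = np.sqrt(fraction)
    out = img.copy()
    out[: int(round(h * side)), : int(round(w * side))] = 0
    return out


img = np.random.default_rng(0).random((64, 64, 3))  # stand-in interior design image
rotated = rotate_nearest(img, 20)  # e.g., the 20-degree rotation discussed in Section 4.2.3
cropped = crop_attack(img, 0.25)
print(rotated.shape, cropped.shape)
```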

Table 8.

The types and intensities of geometric attacks.

Table 9.

The PSNR results of geometric attacks for the I_D dataset.

Table 10.

The NC results of geometric attacks for the I_D dataset.

Table 11.

The PSNR results of geometric attacks for the SHI dataset.

Table 12.

The NC results of geometric attacks for the SHI dataset.

Figure 3.

The interior designs after geometric attacks and the extracted copyright images.

The results in Table 9, Table 10, Table 11 and Table 12 show that, under geometric attacks, the average PSNR and NC values of the extracted watermarks exceed 24.874 and 0.986, respectively, indicating that the proposed method is robust against geometric attacks. Specifically, under rotation attacks, the average PSNR and NC values are greater than 24.716 and 0.985, respectively. Under cropping attacks, the average PSNR and NC values are greater than 25.032 and 0.987, respectively, which shows strong resistance to geometric attacks. Geometric attacks are inherently more challenging than noise or filtering attacks because they alter the spatial positions of pixels in the original image. Nevertheless, the experimental results confirm that the proposed method outperforms traditional methods in handling these distortions, making it well suited to real-world scenarios in which images undergo geometric transformations.

4.2.3. Comparisons with Existing Methods

To further verify the robustness of our method, we compared it with several zero-watermark methods under both conventional and geometric attacks. Table 13 shows the results of the comparison. As shown in Table 13, the proposed method is more robust than the other methods under the different attacks. It shows particularly clear advantages against geometric attacks such as rotation. When the image is rotated by 20 degrees, many of the other methods show a significant drop in performance, whereas our method still maintains a high NC value of 0.9879. Therefore, the proposed zero-watermark algorithm has an advantage in overall robustness.

Table 13.

Comparative results for the NC under different attacks.

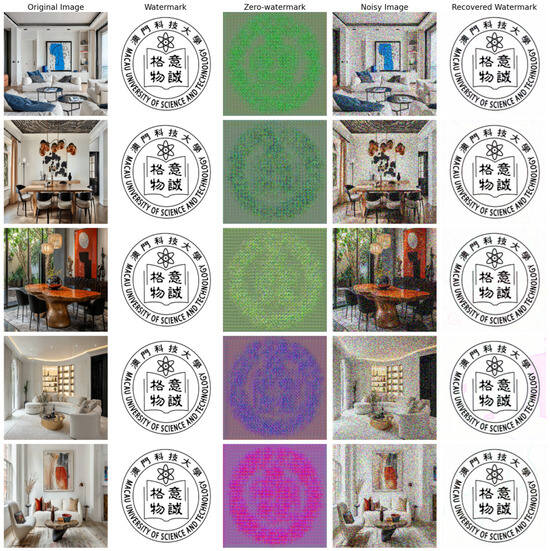

4.3. Uniqueness Evaluation

Since zero-watermark information is generated from image features, the generated watermark information must be unique to a specific image; that is, zero-watermark images generated from different images should differ [31].

We conducted an experiment to confirm the uniqueness of the generated zero-watermark images. Specifically, we generated zero-watermark images for five test images and calculated the pairwise NC values among them. Figure 4 shows the generated zero-watermark images, and the NC results are summarized in Table 14. All pairwise NC values are below 0.2, showing that the similarity between the zero-watermark images generated from the five images is low. This indicates significant differences between zero-watermark images generated from different images and verifies the uniqueness of the zero-watermark information produced by the proposed method. Because the method generates zero-watermark information by fusing the features of the watermark and the image, the generated zero-watermark content is highly unique and can effectively distinguish different images, providing strong technical support for copyright protection.
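The uniqueness check amounts to filling a symmetric matrix of pairwise NC values. The sketch below reuses the common NC form and, as stand-ins for the zero-watermark images of Figure 4, uses random ±1 patterns; this is an assumption chosen so that unrelated images give NC values near zero.

```python
import numpy as np


def nc(a: np.ndarray, b: np.ndarray) -> float:
    """Common NC form: inner product divided by the product of Euclidean norms."""
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def pairwise_nc(images):
    """Symmetric matrix of NC values between every pair of images (diagonal = 1)."""
    n = len(images)
    table = np.eye(n)
    for i in range(n):
        for j in range(i + 1, n):
            table[i, j] = table[j, i] = nc(images[i], images[j])
    return table


rng = np.random.default_rng(0)
zero_watermarks = [np.where(rng.random((64, 64)) > 0.5, 1.0, -1.0) for _ in range(5)]  # stand-ins
table = pairwise_nc(zero_watermarks)
off_diagonal = table[~np.eye(5, dtype=bool)]
print(table.round(3))
print("max |NC| between different images:", np.abs(off_diagonal).max().round(3))
```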

Figure 4.

The generated zero-watermark results.

Table 14.

Verification of uniqueness using the NC values for zero-watermark images.

4.4. Efficiency Analysis

Efficiency analysis provides a deeper understanding of the model’s feasibility in practical applications. Following the experimental setup of Li et al. [32], we conducted tests using images of the same size. The proposed method was run 10 times, and the average time was calculated. We also compared the number of model parameters with those of three other methods. Table 15 shows the efficiency results for the different models. According to the results, the proposed method requires less time for zero-watermark generation than the other methods. Although all compared methods are based on convolutional neural networks, Liu’s method must additionally learn style information from the host image, which requires longer training and inference times and, owing to the model’s complexity, more memory. In addition, Nawaz’s model is based on the relatively deep ResNet101 network and therefore requires more parameters and a longer inference time. In contrast, the proposed method uses the lightweight MobileNet as its image encoder. Compared with these heavier CNN backbones, the lightweight model reduces the required parameters and inference time while retaining good feature extraction ability, improving overall efficiency.
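A minimal timing harness in the spirit of the protocol above (10 runs, mean wall-clock time) and a simple parameter counter are sketched below. `average_runtime` and `count_parameters` are hypothetical helpers; with PyTorch, parameters are commonly counted as `sum(p.numel() for p in model.parameters())`, and GPU timings require synchronizing the device before reading the clock.

```python
import time
from statistics import mean


def average_runtime(fn, *args, repeats=10, **kwargs):
    """Mean wall-clock time (seconds) of fn(*args, **kwargs) over `repeats` runs."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args, **kwargs)
        timings.append(time.perf_counter() - start)
    return mean(timings)


def count_parameters(weight_shapes):
    """Total number of parameters given the shapes of all weight tensors."""
    total = 0
    for shape in weight_shapes:
        size = 1
        for dim in shape:
            size *= dim
        total += size
    return total


# Hypothetical workload and layer shapes, for illustration only.
print(f"{average_runtime(sorted, list(range(100_000, 0, -1))):.6f} s")
print(count_parameters([(64, 3, 3, 3), (64,)]))  # 1792
```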

Table 15.

Comparison of processing time and model parameters with other models.
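The timing protocol described above (10 runs, averaged) can be reproduced with a small harness. This is a sketch under stated assumptions: the untimed warm-up call is our addition and is not described in the paper, and `fake_generate` is a hypothetical stand-in for the zero-watermark generation step.

```python
import statistics
import time


def mean_runtime(fn, *args, runs=10, warmup=1):
    """Mean wall-clock seconds of fn(*args) over `runs` timed calls.

    Warm-up calls run first and are not timed, so one-off costs such as lazy
    initialization do not inflate the average.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn(*args)
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(*args)
        times.append(time.perf_counter() - start)
    return statistics.mean(times), times


if __name__ == "__main__":
    # Hypothetical stand-in for the zero-watermark generation step.
    def fake_generate(n):
        return sum(i * i for i in range(n))

    mean_s, per_run = mean_runtime(fake_generate, 100_000)
    print(f"mean {mean_s * 1000:.2f} ms over {len(per_run)} runs")
```

If the model runs on a GPU, kernel launches are asynchronous. The wrapped function should therefore synchronize before returning (in PyTorch, via `torch.cuda.synchronize()`), or the measured times will understate the real cost.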

5. Discussion

Original interior design drafts are highly valuable and face significant risks of piracy and tampering [34]. Protecting these works is crucial to preserving designers’ intellectual property rights and fostering innovation within creative industries [35]. However, existing intellectual property protection methods often lack robustness or practicality in real-world scenarios because they are insufficiently resilient to complex attacks [36]. To address these challenges, we propose a zero-watermark method intended to enhance the security and usability of copyright protection for interior design.

Deep learning provides a robust foundation for adapting zero-watermarking techniques to increasingly complex image types and diverse application scenarios [29]. By integrating image fusion and deep learning, this study fused the features of interior design and watermark images in a high-dimensional space. The fused features contain both the visual features of the interior design and the features of the watermark image, and they enhance the robustness of the zero-watermark information, which in turn improves the security and usability of copyright protection for interior design drafts.

5.1. Ambiguity Attack Analysis

Watermarking methods often face the challenge of ambiguity attacks, and our method has certain advantages in resisting them. First, our zero-watermark method is based on deep learning image fusion, which integrates complex information from the host and watermark images. The uniqueness experiments showed that the zero-watermark images generated for different host images differ significantly. This makes it difficult for an attacker to pass off a similar zero-watermark image as the original, which increases the difficulty of such attacks. Second, our method allows different hyperparameters to be set when generating zero-watermark information, and these hyperparameters must also be verified during copyright verification, so an attacker would need to reproduce them as well. This further strengthens the method against ambiguity attacks. In summary, our zero-watermarking method offers a degree of resistance to ambiguity attacks.

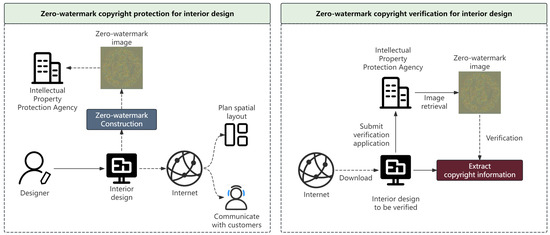

5.2. Model Application Analysis

With its demonstrated robustness against common attacks and the ability to maintain uniqueness, the proposed method is well suited for real-world applications in protecting the intellectual property of interior design. Figure 5 shows a copyright protection and verification scenario based on the proposed zero-watermark method.

Figure 5.

Copyright protection and verification scenarios based on the proposed zero-watermark method.

First, after completing an interior design, the designer fuses the copyright information (the watermark image) with the interior design using the proposed method to generate a zero-watermark image. Second, the generated zero-watermark image is submitted to an intellectual property protection agency for safekeeping. The interior design itself is not modified and can be shared with clients online or used directly for spatial layout planning. If the designer later finds an interior design online whose copyright is in dispute, they can submit a verification application to the agency, which then provides the stored zero-watermark image. The designer can then extract the copyright information through the proposed zero-watermark verification network and verify their copyright ownership.
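The paper does not specify how the agency stores registrations. The sketch below shows one possible design, offered only as an assumption. The agency records a SHA-256 fingerprint that binds the zero-watermark image to the hyperparameters used to generate it, together with a timestamp. A later claim then matches only if both the image and its settings are reproduced exactly, which echoes the ambiguity-attack argument in Section 5.1. The names `Registry`, `fingerprint`, and the settings keys are hypothetical. The extraction step performed by the verification network is not modeled here.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Registration:
    owner: str
    digest: str         # SHA-256 over the zero-watermark bytes and generation settings
    registered_at: str  # ISO-8601 UTC timestamp assigned by the agency


def fingerprint(zero_watermark: bytes, settings: dict) -> str:
    """Bind the zero-watermark image to the settings used to generate it."""
    h = hashlib.sha256()
    # Length prefix keeps the boundary between image bytes and settings unambiguous.
    h.update(len(zero_watermark).to_bytes(8, "big"))
    h.update(zero_watermark)
    # Canonical JSON (sorted keys, fixed separators) so equal settings hash equally.
    h.update(json.dumps(settings, sort_keys=True, separators=(",", ":")).encode("utf-8"))
    return h.hexdigest()


class Registry:
    """Hypothetical agency-side store; not part of the paper's method."""

    def __init__(self):
        self._records = {}

    def register(self, work_id: str, owner: str, zero_watermark: bytes, settings: dict) -> Registration:
        if work_id in self._records:
            raise KeyError(f"{work_id!r} is already registered")
        record = Registration(owner, fingerprint(zero_watermark, settings),
                              datetime.now(timezone.utc).isoformat())
        self._records[work_id] = record
        return record

    def matches(self, work_id: str, zero_watermark: bytes, settings: dict) -> bool:
        """True only if both the image and its settings equal the registered ones."""
        record = self._records.get(work_id)
        return record is not None and record.digest == fingerprint(zero_watermark, settings)
```

Because the fingerprint covers the generation settings, presenting the correct zero-watermark image with altered hyperparameters fails verification in this sketch.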

To further verify the applicability of the model in real scenarios, we selected five modern interior designs from an online gallery (https://www.decorilla.com/online-decorating/modern-interior-design-ideas/, accessed on 24 February 2025) and verified them with the proposed zero-watermark method. The results are shown in Figure 6. On these samples, the model demonstrates good robustness: even under strong attacks, relatively intact copyright information can still be extracted. This highlights the effectiveness of our method in preserving essential copyright ownership details under challenging conditions. The proposed method generates robust and distinctive zero-watermark information, which can serve as reliable evidence for establishing copyright ownership and verifying the integrity of interior designs. Furthermore, in cases of unauthorized use or tampering, the zero-watermark information can be efficiently extracted and verified, which facilitates the rapid detection of infringement and provides evidence to support copyright enforcement.

Figure 6.

The practical effects of applying the copyright protection model to real-life scenarios.

Beyond interior design, the proposed method may be broadly applicable to intellectual property protection in other design-related fields. In architectural design, it could protect floor plans and 3D renderings, ensuring the traceability of original designs and deterring unauthorized use. In fashion design, it could protect digital sketches and textile patterns, helping designers maintain ownership of their works. In product and industrial design, where prototypes and conceptual models are often shared digitally, the method could make design ownership verifiable and help detect tampering. The method may therefore give creators a broadly applicable means of reducing the risk of unauthorized copying or misuse.

Despite these promising results, the method still faces some challenges. First, attacks on interior design images in practice are often more complex and varied than those tested here, which may reduce the reliability of watermark extraction with our method. Second, the time and computational costs remain a challenge. In future work, we will explore zero-watermark approaches that can handle more challenging and advanced attack scenarios, and we will consider employing knowledge distillation to reduce time and computational costs.
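Knowledge distillation is usually described for classifiers, where a student matches the teacher’s softened class probabilities. For a network whose output is an image, such as the U-Net verification network, one simple output-level variant trains a smaller student against both the ground-truth watermark and the teacher’s prediction. The sketch below is only an illustration of that variant and is not the authors’ plan. The weight `alpha` is a hypothetical hyperparameter, and flat Python lists stand in for framework tensors.

```python
def mse(a, b):
    """Mean squared error between two equally sized flattened images."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(student_out, teacher_out, target, alpha=0.5):
    """Output-level distillation loss for an image-to-image network.

    alpha weights fidelity to the ground-truth watermark; (1 - alpha) weights
    agreement with the larger teacher network's prediction.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * mse(student_out, target) + (1.0 - alpha) * mse(student_out, teacher_out)
```

In a real training loop, this loss would be computed on tensors with the framework’s own MSE function, with the teacher’s outputs treated as constants so that only the student is updated.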

6. Conclusions

This study presents a novel zero-watermark method designed to protect interior design. By leveraging deep learning, this method achieves robust feature extraction and the effective integration of watermarks and interior design through image fusion. The experimental results demonstrate that the proposed method is robust against conventional and geometric attacks. In addition, this work highlights the potential of deep learning and image fusion in advancing zero-watermark technology and provides a solid foundation for addressing complex copyright protection scenarios.

Author Contributions

Conceptualization, Y.P. and Q.H.; data curation, J.X.; formal analysis, Y.P.; investigation, Y.P.; methodology, Y.P.; project administration, J.C.; resources, Q.H.; software, Y.P.; supervision, K.U.; validation, K.U.; visualization, Y.P.; writing—original draft, Y.P. and J.X.; writing—review and editing, K.U. and J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are available in publicly accessible repositories: https://www.kaggle.com/datasets/aishahsofea/interior-design (accessed on 24 February 2025); https://www.kaggle.com/datasets/luznoc/synthetic-dataset-for-home-interior (accessed on 24 February 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Chen, J.; Shao, Z.; Hu, B. Generating Interior Design from Text: A New Diffusion Model-Based Method for Efficient Creative Design. Buildings 2023, 13, 1861.

2. Kirillova, E.A.; Koval’, V.; Zenin, S.; Parshin, N.M.; Shlyapnikova, O.V. Digital Right Protection Principles under Digitalization. Webology 2021, 18, 910–930.

3. Pasa, B. Industrial Design and Artistic Expression: The Challenge of Legal Protection. Brill Res. Perspect. Art Law 2020, 3, 1–137.

4. Chakraborty, D. Copyright Challenges in the Digital Age: Balancing Intellectual Property Rights and Data Privacy in India’s Online Ecosystem. 2023. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4647960 (accessed on 24 February 2025).

5. Wen, Q.; Sun, T.F.; Wang, S.X. Concept and application of zero-watermark. Acta Electron. Sin. 2003, 31, 214–216.

6. Panchal, U.H.; Srivastava, R. A comprehensive survey on digital image watermarking techniques. In Proceedings of the 2015 Fifth International Conference on Communication Systems and Network Technologies, Gwalior, India, 4–6 April 2015; pp. 591–595.

7. Luo, Y.; Tan, X.; Cai, Z. Robust Deep Image Watermarking: A Survey. Comput. Mater. Contin. 2024, 81, 133.

8. Abraham, J.; Paul, V. An imperceptible spatial domain color image watermarking scheme. J. King Saud-Univ.-Comput. Inf. Sci. 2019, 31, 125–133.

9. Hu, R.; Xiang, S. Reversible data hiding by using CNN prediction and adaptive embedding. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 10196–10208.

10. Ray, A.; Roy, S. Recent trends in image watermarking techniques for copyright protection: A survey. Int. J. Multimed. Inf. Retr. 2020, 9, 249–270.

11. Kadian, P.; Arora, S.M.; Arora, N. Robust digital watermarking techniques for copyright protection of digital data: A survey. Wirel. Pers. Commun. 2021, 118, 3225–3249.

12. Zheng, L.; Zhang, Y.; Thing, V.L. A survey on image tampering and its detection in real-world photos. J. Vis. Commun. Image Represent. 2019, 58, 380–399.

13. Liu, H.; Chen, Y.; Shen, G.; Guo, C.; Cui, Y. Robust Image Watermarking Based on Hybrid Transform and Position-Adaptive Selection. In Circuits, Systems, and Signal Processing; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–28.

14. Zhang, X.; Jiang, R.; Sun, W.; Song, A.; Wei, X.; Meng, R. LKAW: A robust watermarking method based on large kernel convolution and adaptive weight assignment. Comput. Mater. Contin. 2023, 75, 1–17.

15. Liu, J.; Li, J.; Cheng, J.; Ma, J.; Sadiq, N.; Han, B.; Geng, Q.; Ai, Y. A novel robust watermarking algorithm for encrypted medical image based on DTCWT-DCT and chaotic map. Comput. Mater. Contin. 2019, 61, 889–910.

16. Zhong, X.; Huang, P.C.; Mastorakis, S.; Shih, F.Y. An automated and robust image watermarking scheme based on deep neural networks. IEEE Trans. Multimed. 2020, 23, 1951–1961.

17. Downs, R.; Illangovan, D.; Alférez, G.H. Open Zero-Watermarking Approach to Prevent the Unauthorized Use of Images in Deep Learning. In Proceedings of the Intelligent Systems Conference, Guilin, China, 26–27 October 2024; Springer: Cham, Switzerland, 2024; pp. 395–405.

18. Xiang, R.; Liu, G.; Li, K.; Liu, J.; Zhang, Z.; Dang, M. Zero-watermark scheme for medical image protection based on style feature and ResNet. Biomed. Signal Process. Control 2023, 86, 105127.

19. Shi, H.; Zhou, S.; Chen, M.; Li, M. A novel zero-watermarking algorithm based on multi-feature and DNA encryption for medical images. Multimed. Tools Appl. 2023, 82, 36507–36552.

20. Li, D.; Deng, L.; Gupta, B.B.; Wang, H.; Choi, C. A novel CNN based security guaranteed image watermarking generation scenario for smart city applications. Inf. Sci. 2019, 479, 432–447.

21. Cao, F.; Yao, S.; Zhou, Y.; Yao, H.; Qin, C. Perceptual authentication hashing for digital images based on multi-domain feature fusion. Signal Process. 2024, 223, 109576.

22. Taj, R.; Tao, F.; Kanwal, S.; Almogren, A.; Altameem, A.; Ur Rehman, A. A reversible-zero watermarking scheme for medical images. Sci. Rep. 2024, 14, 17320.

23. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

24. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

25. Thanh, T.M.; Tanaka, K. An image zero-watermarking algorithm based on the encryption of visual map feature with watermark information. Multimed. Tools Appl. 2017, 76, 13455–13471.

26. Dong, F.; Li, J.; Bhatti, U.A.; Liu, J.; Chen, Y.W.; Li, D. Robust zero watermarking algorithm for medical images based on improved NasNet-mobile and DCT. Electronics 2023, 12, 3444.

27. Shen, Z.; Kintak, U. A novel image zero-watermarking scheme based on non-uniform rectangular. In Proceedings of the 2017 International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR), Ningbo, China, 9–12 June 2017; pp. 78–82.

28. Gong, C.; Liu, J.; Gong, M.; Li, J.; Bhatti, U.A.; Ma, J. Robust medical zero-watermarking algorithm based on Residual-DenseNet. IET Biom. 2022, 11, 547–556.

29. Nawaz, S.A.; Li, J.; Shoukat, M.U.; Bhatti, U.A.; Raza, M.A. Hybrid medical image zero watermarking via discrete wavelet transform-ResNet101 and discrete cosine transform. Comput. Electr. Eng. 2023, 112, 108985.

30. Li, F.; Wang, Z.X. A Zero-Watermarking Algorithm Based on Scale-Invariant Feature Reconstruction Transform. Appl. Sci. 2024, 14, 4756.

31. Ren, N.; Guo, S.; Zhu, C.; Hu, Y. A zero-watermarking scheme based on spatial topological relations for vector dataset. Expert Syst. Appl. 2023, 226, 120217.

32. Li, C.; Sun, H.; Wang, C.; Chen, S.; Liu, X.; Zhang, Y.; Ren, N.; Tong, D. ZWNET: A deep-learning-powered zero-watermarking scheme with high robustness and discriminability for images. Appl. Sci. 2024, 14, 435.

33. Liu, G.; Xiang, R.; Liu, J.; Pan, R.; Zhang, Z. An invisible and robust watermarking scheme using convolutional neural networks. Expert Syst. Appl. 2022, 210, 118529.

34. Sadnyini, I.A.; Putra, I.G.P.A.W.; Gorda, A.N.S.R.; Gorda, A.N.T.R. Legal Protection of Interior Design in Industrial Design Intellectual Property Rights. NOTARIIL J. Kenotariatan 2021, 6, 27–37.

35. Wang, B.; Jiawei, S.; Wang, W.; Zhao, P. Image copyright protection based on blockchain and zero-watermark. IEEE Trans. Netw. Sci. Eng. 2022, 9, 2188–2199.

36. Anand, A.; Bedi, J.; Aggarwal, A.; Khan, M.A.; Rida, I. Authenticating and securing healthcare records: A deep learning-based zero watermarking approach. Image Vis. Comput. 2024, 145, 104975.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).