Abstract

Surrogate-assisted evolutionary algorithms (SAEAs), which combine the search capabilities of evolutionary algorithms (EAs) with the predictive capabilities of surrogate models, are effective methods for solving expensive optimization problems (EOPs). However, because most SAEAs rely heavily on the accuracy of the surrogate model, their optimization performance decreases drastically as the dimensionality increases. To tackle this challenge, this paper proposes a surrogate-assisted gray prediction evolution (SAGPE) algorithm based on gray prediction evolution (GPE). In SAGPE, global and local surrogate models are constructed alternately to assist the GPE search. The proposed algorithm improves optimization efficiency by combining the macro-predictive ability of the even gray model in GPE for population update trends with the predictive ability of surrogate models, so that the two synergistically guide the population search in promising directions. In addition, an inferior offspring learning strategy is proposed to improve the utilization of population information. The performance of SAGPE is tested on eight common benchmark functions and a speed reducer design problem. Comparisons with existing algorithms show that SAGPE has significant performance advantages in terms of convergence speed and solution accuracy.

Keywords: expensive optimization problems; gray prediction evolution; surrogate-assisted evolutionary algorithms

MSC: 68W50

1. Introduction

Evolutionary algorithms (EAs) [1] have received much attention for their ability to provide effective solutions to complex optimization problems. However, EAs require a large number of function evaluations to find the optimal value, which makes them expensive, or even impractical, for optimization problems that rely on time-consuming computer simulations. Such computationally expensive optimization problems are commonly referred to as EOPs [2]. To reduce the computational cost of EAs for solving EOPs, researchers have investigated surrogate-assisted evolutionary algorithms (SAEAs) [3]. The basic idea of SAEAs is to reduce computational cost by using surrogate models in place of the true fitness function and by defining prescreening strategies that select which individuals in the search population need to be evaluated with the true function [4]. Polynomial regression (PR) [5], radial basis function (RBF) [6], Kriging [7], and support vector regression (SVR) [8] are commonly used surrogate models.

Almost all traditional EAs have the potential to solve EOPs with the assistance of surrogate models [9]. In SAEAs, the most commonly used EAs are particle swarm optimization (PSO) [10] and differential evolution (DE) [11], in addition to competitive swarm optimizer (CSO) [12], gray wolf optimization (GWO) [13], and others. Depending on the surrogate model, the existing SAEAs can be broadly categorized into three types, i.e., global-model-assisted EAs [14], local-model-assisted EAs [15], and hybrid-model-assisted EAs [16]. Global surrogate models, which focus on exploring the entire search space, are often used to approximate the entire landscape of a problem. Di Nuovo et al. [17] presented a study on the use of inexpensive fuzzy function approximation models in selecting promising candidates for true function evaluation in multiobjective optimization problems. Li et al. [18] proposed a fast surrogate-assisted particle swarm algorithm (FSAPSO) for medium-dimensional problems, which designs a prescreening criterion that considers both the predicted fitness value and the distance. Local surrogate models aim to make accurate predictions within a small search area, focusing on the exploitation of promising search areas. Ong et al. [19] constructed a local RBF model based on the principle of transductive inference and then used a trust-region approach to interleave the true function and the RBF model for the objective and constraint functions. Li et al. [20] defined a number of subproblems in each search generation and constructed a local surrogate to optimize each of them. Due to the limited number of training samples available, a single global or local model cannot effectively fit a variety of problem landscapes, which often makes it difficult for single-model SAEAs to address different EOPs effectively [11]. To balance exploration and exploitation, there is a growing interest in hybrid surrogate models. 
Hybrid surrogate models typically consist of a global surrogate model and a local surrogate model and have been shown to outperform single surrogate models on most problems. Wang et al. [21] proposed an evolutionary sampling-assisted optimization (ESAO) for high-dimensional EOPs, which performs global and local searches alternately based on whether the current optimal value is improved or not. A surrogate-assisted competitive swarm optimization (SACSO), which uses global search, local search, and dyadic-based search as three different criteria to select suitable particles for true evaluation, was proposed in Pan et al. [22]. A generalized surrogate-assisted DE (GSDE) [23] was proposed to solve the optimal design problem of turbomachinery vane grids. In a GSDE, an RBF is introduced into the population to update the process to achieve a good balance between global and local searches. Recently, Zhang et al. [24] developed a hierarchical surrogate framework for hybrid evolutionary algorithms. In the global search phase, RBF surrogates are used to guide the teaching–learning-based optimization to locate promising subregions. In the local search phase, a new dynamic surrogate set is proposed to assist differential evolution to speed up the convergence process.

All of the above methods have good performance in solving EOPs. The effectiveness of using surrogate models to help EAs reduce the computational costs is demonstrated in them. However, due to the over-reliance on surrogate models, most existing SAEAs still require extensive true function evaluation before finding a high-quality solution. The powerful search capability of traditional EAs lies in the fact that they do not need the gradient information of the objective function and search for the optimal value by means of information interaction through mutation and crossover operations between current or historical individuals [25]. It is well known that surrogate models are the main source of population fitness information in the SAEAs framework. The “curse of dimensionality” makes it difficult to establish accurate surrogate models with limited samples as the dimension of the problem increases. Inaccurate surrogates may provide unreliable fitness information to the search population, mislead the population search, and waste computational resources [13]. Therefore, in the design of SAEAs, how to reduce the risk of inaccurate surrogate models misleading the direction of the population search and efficiently find a good solution is an urgent problem that needs to be solved.

EAs that utilize population prediction information for searches provide a potential opportunity to address the above problem. They use a prediction model as a reproduction operator to predict the next generation of the population based on the iterative information of the historical population. During the optimization process, all individuals search in the promising direction predicted by the prediction model based on the historical iteration information rather than by moving closer to or farther away from specific individuals. This feature can effectively reduce the risk that the search direction of the population is misdirected by inaccurate surrogate models. In addition, the ability of the reproduction operator in EAs designed based on predictive models to predict population renewal trends has the potential to synergize with surrogate models to guide the population search in promising directions, thereby improving the efficiency of finding optimal values. Therefore, EAs that utilize population prediction information for searching have great potential for development in the design of SAEAs.

Gray prediction evolution (GPE) [26] has attracted considerable attention for its excellent performance among EAs that use population prediction information to search. Based on GPE, several improved algorithms with excellent performance have been successfully developed and applied in various fields [27]. The advantage that the even gray model (EGM(1,1)) operator in GPE can make accurate predictions on small sample data makes it very suitable for EOPs with scarce computational resources. Based on the considerations above, a surrogate-assisted method on the basis of the GPE is proposed in this paper. To the best of our knowledge, this study is the first attempt to combine the predictive information of search populations with surrogate models to solve EOPs. The proposed method is called the surrogate-assisted gray prediction evolution (SAGPE) algorithm. In order to balance exploration and exploitation, SAGPE constructs a global RBF model and a local RBF model alternately to assist GPE in the search. During the optimization process, surrogate models are used to predict the fitness values of offspring generated by the GPE reproduction operator, and the individual with the best surrogate model value is selected for true function evaluation. Individuals in the offspring whose model values are better than those of the parental individuals are retained by greedy selection. If the global search does not find a better solution than the current global optimal value, it switches to a local search and vice versa. In addition, greedy selection only retains the individuals with good fitness values in each search of EAs and directly discards the information of individuals with poor fitness values, which results in the loss of information. To improve the use of population information, this paper designed an inferior offspring learning strategy in the global search phase to improve the quality of candidate individuals. 
Experiments for SAGPE compared with five other SAEAs were conducted on eight commonly used benchmark functions and a real world optimization problem. The experimental results show that SAGPE outperforms other algorithms in terms of optimization effectiveness and convergence speed on most problems.

The main works of this paper are as follows:

- A gray prediction technique is introduced to solve expensive optimization problems (EOPs). In this work, an even gray model (EGM(1,1)) operator is adopted in concert with a surrogate model to guide the population to search in a promising direction.

- We verify that predictive model operators can be combined with surrogate models to search for optimal solutions more effectively than conventional mutation and crossover operators.

- An inferior offspring learning strategy is proposed to improve the quality of candidate individuals used for true function evaluation.

- A surrogate-assisted gray prediction evolution (SAGPE) algorithm is proposed for solving EOPs.

The remainder of this paper is organized as follows. Section 2 describes several relevant technologies. Section 3 explains the details of the proposed SAGPE. Section 4 demonstrates the performance of SAGPE in solving EOPs through numerical experiments. Section 5 is a discussion of future work and includes concluding remarks.

2. Related Techniques

2.1. GPE Algorithm

In this paper, GPE is used as the underlying search algorithm. As a novel population-intelligent optimization algorithm with strong global search capability, GPE takes the results of EGM(1,1) prediction of population updating trends as offspring. Specifically, the prediction of the EGM(1,1) consists of the following three steps: (1) transforming irregular discrete data into a dataset with an exponential growth pattern by data accumulation, (2) constructing an exponential function from the accumulated dataset and then predicting the next element of the transformed dataset, and (3) obtaining the predicted elements of the raw discrete data by backward derivation. The computational flow of EGM(1,1) is described as follows.

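Let the raw data sequence be $X^{(0)} = (x^{(0)}(1), x^{(0)}(2), \ldots, x^{(0)}(n))$. The first-order accumulation operation of $X^{(0)}$ generates the transformed data sequence as follows:

$$X^{(1)} = (x^{(1)}(1), x^{(1)}(2), \ldots, x^{(1)}(n)), \tag{1}$$

where $x^{(1)}(k) = \sum_{i=1}^{k} x^{(0)}(i)$, $k = 1, 2, \ldots, n$.

The immediate neighboring mean sequence of $X^{(1)}$ is generated as follows:

$$Z^{(1)} = (z^{(1)}(2), z^{(1)}(3), \ldots, z^{(1)}(n)), \tag{2}$$

where $z^{(1)}(k) = \frac{1}{2}\left(x^{(1)}(k) + x^{(1)}(k-1)\right)$, $k = 2, 3, \ldots, n$.

We establish the following first-order linear ordinary differential equation for $x^{(1)}$:

$$\frac{dx^{(1)}}{dt} + a x^{(1)} = b, \tag{3}$$

where $a$ and $b$ are the parameters to be estimated. We then discretize Equation (3) to obtain $x^{(0)}(k) + a z^{(1)}(k) = b$, i.e., EGM(1,1). We write this equation in matrix form as $Y = B\hat{a}$. Therein,

$$Y = \begin{bmatrix} x^{(0)}(2) \\ x^{(0)}(3) \\ \vdots \\ x^{(0)}(n) \end{bmatrix}, \quad B = \begin{bmatrix} -z^{(1)}(2) & 1 \\ -z^{(1)}(3) & 1 \\ \vdots & \vdots \\ -z^{(1)}(n) & 1 \end{bmatrix}, \quad \hat{a} = \begin{bmatrix} a \\ b \end{bmatrix}.$$

The least squares method is utilized to solve for the parameter $\hat{a}$. The following equation is obtained:

$$\hat{a} = (B^{T}B)^{-1}B^{T}Y. \tag{4}$$

We obtain the values of $a$ and $b$. Solving Equation (3) with $a$ and $b$ gives $x^{(1)}(t) = \left(x^{(1)}(1) - \frac{b}{a}\right)e^{-a(t-1)} + \frac{b}{a}$. It is then discretized to obtain the following prediction equation:

$$\hat{x}^{(1)}(k+1) = \left(x^{(0)}(1) - \frac{b}{a}\right)e^{-ak} + \frac{b}{a}, \quad k = 1, 2, \ldots \tag{5}$$

Therein, $\hat{X}^{(1)}$ denotes the prediction sequence of the transformed data sequence. Then, the predicted value of the raw sequence is obtained by backward derivation. The specific formula is as follows:

$$\hat{x}^{(0)}(k+1) = \hat{x}^{(1)}(k+1) - \hat{x}^{(1)}(k). \tag{6}$$

Finally, substituting $\hat{x}^{(1)}(k+1)$ and $\hat{x}^{(1)}(k)$ from Equation (5) into (6) gives the following equation:

$$\hat{x}^{(0)}(k+1) = (1 - e^{a})\left(x^{(0)}(1) - \frac{b}{a}\right)e^{-ak}. \tag{7}$$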

Note that GPE requires information from three generations of populations to predict the next generation. Therefore, it is necessary to randomly generate three populations in the initialization phase and to perform selection operations on the offspring by using the third-generation population as the parent in all subsequent searches. In addition, an exponential function cannot be constructed for the EGM(1,1) prediction when the data sequence contains two identical values. Therefore, to facilitate the population search, GPE generates offspring during the search according to the following rules:

- (i) If the maximum Euclidean distance between the three individuals in the data sequence is less than a given threshold, GPE generates offspring by random perturbation.

- (ii) If the distance between any two values of the sequence is less than the given threshold, it uses linear fitting to generate offspring.

- (iii) Otherwise, it uses EGM(1,1) to generate offspring.

Ref. [26] is provided for readers interested in detailed information on GPE.
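The three prediction steps and the three offspring rules above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the reference GPE implementation: the function names (`egm11_next`, `linear_next`, `gpe_offspring`), the per-dimension application of rule (ii), and the uniform form of the random perturbation in rule (i) are hypothetical choices made for the example, and degenerate inputs (for example, a fitted development coefficient of zero) are not handled.

```python
import numpy as np

def egm11_next(seq):
    """Predict the next element of a short sequence with EGM(1,1)."""
    x0 = np.asarray(seq, dtype=float)
    n = x0.size
    x1 = np.cumsum(x0)                          # first-order accumulation
    z1 = 0.5 * (x1[1:] + x1[:-1])               # neighboring mean sequence
    B = np.column_stack((-z1, np.ones(n - 1)))  # x0(k) + a*z1(k) = b
    (a, b), *_ = np.linalg.lstsq(B, x0[1:], rcond=None)
    # Closed-form prediction of the raw sequence at position n + 1.
    return (1.0 - np.exp(a)) * (x0[0] - b / a) * np.exp(-a * n)

def linear_next(seq):
    """Fallback: least-squares line through the sequence, extrapolated one step."""
    x = np.asarray(seq, dtype=float)
    t = np.arange(1, x.size + 1)
    slope, intercept = np.polyfit(t, x, 1)
    return slope * (x.size + 1) + intercept

def gpe_offspring(p1, p2, p3, threshold, rng):
    """Generate one offspring from three individuals of successive generations."""
    pts = np.array([p1, p2, p3], dtype=float)
    pairs = ((0, 1), (0, 2), (1, 2))
    if max(np.linalg.norm(pts[i] - pts[j]) for i, j in pairs) < threshold:
        # Rule (i): random perturbation (the uniform form is an assumption).
        return pts[2] + rng.uniform(-threshold, threshold, size=pts.shape[1])
    child = np.empty(pts.shape[1])
    for d in range(pts.shape[1]):
        s = pts[:, d]
        if min(abs(s[i] - s[j]) for i, j in pairs) < threshold:
            child[d] = linear_next(s)           # rule (ii): linear fitting
        else:
            child[d] = egm11_next(s)            # rule (iii): EGM(1,1)
    return child
```

For instance, `gpe_offspring([1, 5], [2, 5], [4, 5], 1e-5, np.random.default_rng(0))` uses EGM(1,1) for the first coordinate, which grows from generation to generation, and linear fitting for the second, whose three values coincide.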

2.2. RBF Model

The RBF [28] is a commonly used surrogate model, and studies have shown that the RBF performs well on both low-dimensional and high-dimensional problems [29]; additionally, the time of modeling the RBF does not rise significantly with the increase in dimension [9]. In this study, the RBF is used to build a surrogate model to assist in the GPE search.

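Let $\{x_i\}_{i=1}^{n}$ and $\{y_i\}_{i=1}^{n}$ denote $n$ input points in $D$-dimensional space and their corresponding output values in output space, respectively. The definition formula of the RBF is

$$\hat{f}(x) = \sum_{i=1}^{n} \omega_i \phi(\|x - x_i\|) + p(x), \tag{8}$$

in which $\hat{f}(x)$ denotes the function value obtained by RBF interpolation fitting, $\omega_i$ denotes the weight coefficient, and $\phi(\cdot)$ denotes the kernel function. In this paper, the cubic function represented by $\phi(r) = r^{3}$ serves as the kernel function. $\|x - x_i\|$ denotes the Euclidean distance between $x$ and $x_i$, that is, the input point and the center. $p(x)$ is a linear function. The unknown parameters $\omega$ and $\lambda$ can be obtained by solving the equation below:

$$\begin{pmatrix} \Phi & P \\ P^{T} & 0 \end{pmatrix} \begin{pmatrix} \omega \\ \lambda \end{pmatrix} = \begin{pmatrix} y \\ 0 \end{pmatrix}, \tag{9}$$

where $\Phi$ is the $n \times n$ matrix having elements $\Phi_{ij} = \phi(\|x_i - x_j\|)$. $P$ is the matrix of the linear function $p(x)$. $\lambda$ is the vector of coefficients for $p(x)$, and $y = (y_1, y_2, \ldots, y_n)^{T}$. If rank($P$) = $D$ + 1, then the coefficient matrix of Equation (9) is non-singular, and the system has a unique solution. In other words, the RBF model can be uniquely determined.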
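As an illustration of the interpolation system above, the following NumPy sketch fits a cubic RBF with a linear tail by solving the block linear system directly. The class name `CubicRBF` and its interface are assumptions made for this example; the construction of the linear-term matrix as the sample coordinates followed by a column of ones is the standard choice and is assumed here.

```python
import numpy as np

class CubicRBF:
    """Cubic RBF interpolant with a linear polynomial tail (illustrative sketch)."""

    def __init__(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        n, d = X.shape
        self.X = X
        # Kernel matrix: cubed pairwise Euclidean distances between samples.
        Phi = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2) ** 3
        # Linear tail matrix: one row [x_i, 1] per sample (assumed layout).
        P = np.hstack((X, np.ones((n, 1))))
        A = np.block([[Phi, P], [P.T, np.zeros((d + 1, d + 1))]])
        rhs = np.concatenate((y, np.zeros(d + 1)))
        sol = np.linalg.solve(A, rhs)   # unique when rank(P) = d + 1
        self.w, self.lam = sol[:n], sol[n:]

    def predict(self, Xq):
        Xq = np.atleast_2d(np.asarray(Xq, dtype=float))
        r = np.linalg.norm(Xq[:, None, :] - self.X[None, :, :], axis=2)
        tail = np.hstack((Xq, np.ones((len(Xq), 1)))) @ self.lam
        return (r ** 3) @ self.w + tail
```

Because the model interpolates, `CubicRBF(X, y).predict(X)` reproduces the training outputs `y` up to round-off, and a purely linear target is recovered exactly by the tail term.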

3. Surrogate-Assisted Gray Prediction Evolution Algorithm

The use of predictive information from populations as offspring makes SAGPE different from other SAEAs. The framework and main components of SAGPE are described in detail as follows.

3.1. Overall Framework

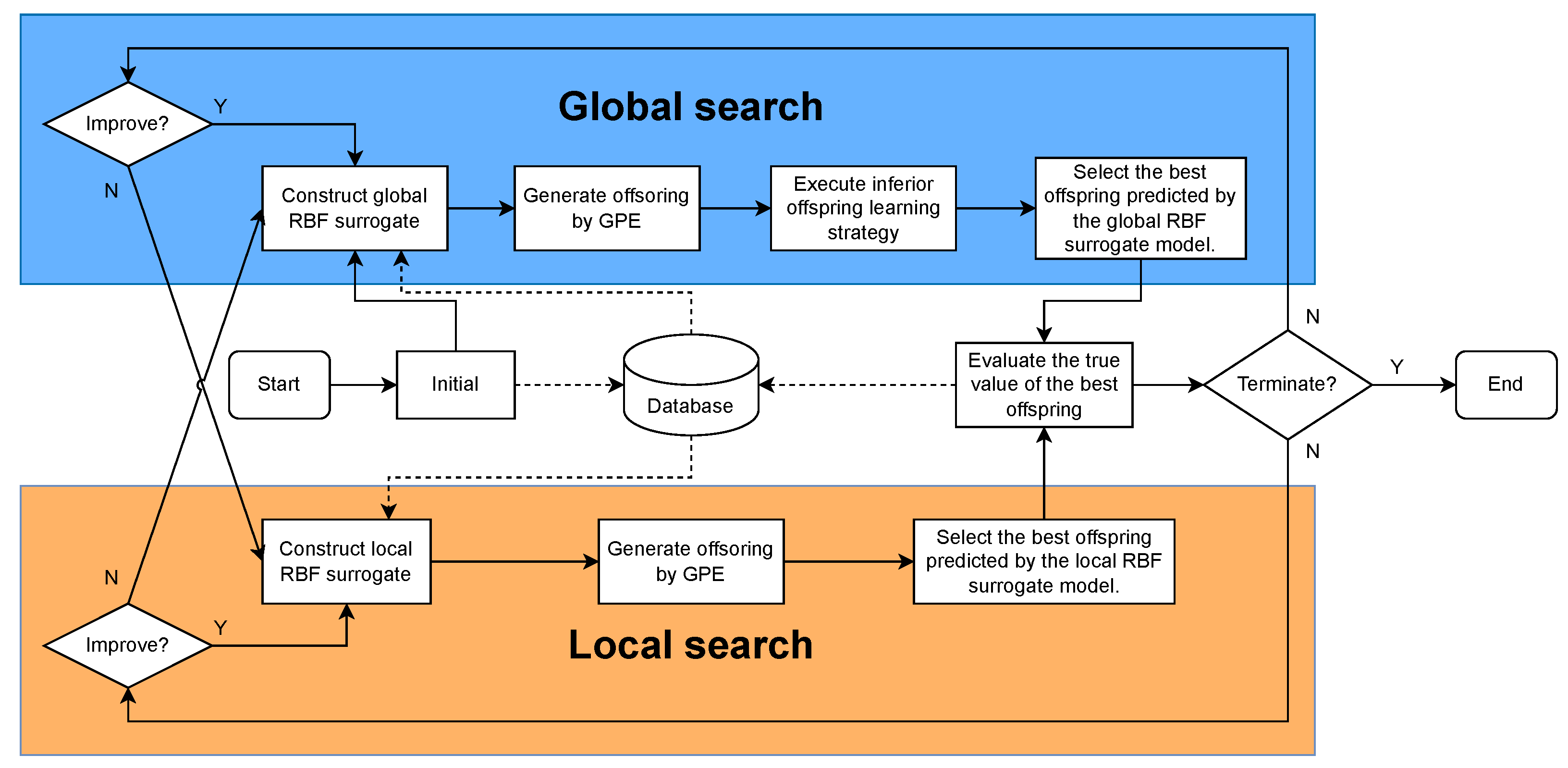

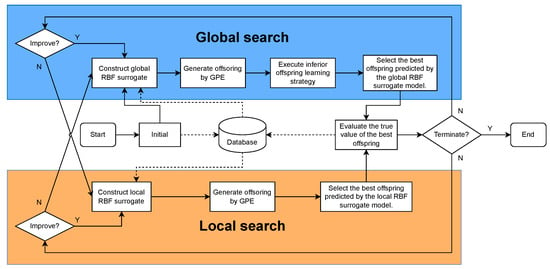

By combining the predictive search capability of GPE with the ability of RBF models to predict the true fitness values of the population, SAGPE can find good solutions efficiently. SAGPE searches for optimal solutions by alternately performing global and local searches. Both search components include GPE and an RBF surrogate model. The surrogate model in the global search is constructed based on all existing samples in the database. The surrogate model in the local search is constructed from the samples with the best fitness values in the current database. The main framework of SAGPE is shown in Figure 1, in which the solid and dashed lines represent the operation flow and data flow of the algorithm, respectively. Initial samples are generated by means of Latin Hypercube Sampling (LHS) [30]. All samples obtained by LHS are evaluated with the true function and added to the database as the initial dataset for training surrogate models. Since three generations of populations are required for the reproduction operation of GPE, three populations are obtained by random sampling three times during the population initialization stage, and the third population is used as the parent population. After initialization, SAGPE starts the optimization process from the global search phase, and the search mode is changed to local search if the global search fails to find a solution better than the global optimal value. Similarly, the search mode is changed from local to global search if the local search fails to obtain a solution that improves the global optimal value. The search process ends and the optimization results are output when the maximum number of true function evaluations is exhausted.
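The initialization and mode-switching logic described above can be sketched as follows. This is a simplified skeleton under stated assumptions rather than the SAGPE implementation: `latin_hypercube` is a basic hand-written LHS, and `alternate_search` takes hypothetical callables `propose_global` and `propose_local` that each return one candidate for true evaluation, so that only the switching rule and the evaluation budget are shown.

```python
import numpy as np

def latin_hypercube(n, dim, lower, upper, rng):
    """Basic LHS: exactly one sample per stratum in every dimension."""
    strata = np.array([rng.permutation(n) for _ in range(dim)]).T  # shape (n, dim)
    unit = (strata + rng.random((n, dim))) / n                     # points in [0, 1)
    return lower + unit * (upper - lower)

def alternate_search(evaluate, propose_global, propose_local,
                     best_x, best_f, budget):
    """Alternate between global and local search until the budget is spent."""
    mode = "global"                      # the search starts in the global phase
    history = []
    for _ in range(budget):
        propose = propose_global if mode == "global" else propose_local
        x = propose(best_x)
        f = evaluate(x)                  # one true function evaluation
        history.append(mode)
        if f < best_f:
            best_x, best_f = x, f        # improvement: stay in the current mode
        else:
            mode = "local" if mode == "global" else "global"
    return best_x, best_f, history
```

In this sketch a failed step in either mode triggers the switch, which mirrors the rule that the search changes mode whenever the global optimal value is not improved.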

Figure 1. Framework of the proposed SAGPE.

3.2. Global Search

The pseudo-code for performing a global search is presented in Algorithm 1. The global RBF model is trained using all the samples in the database. SAGPE generates offspring using GPE and predicts their fitness values using the global RBF model. The offspring with the best model-predicted fitness value is selected for true evaluation. The global search continues if the current optimal function value is improved. If no individual is found that is better than the current best sample, the search is switched to a local search. To ensure convergence of the search population, only individuals with better model predictions than their parents are retained in the offspring population. Because each search retains only the individuals whose predicted fitness values are better than those of their parents, information about the individuals whose predicted fitness values are worse than those of their parents is lost. To improve the utilization of population information, this paper designs an inferior offspring learning strategy for offspring with worse predicted fitness values than their parents. Specifically, during the global search phase, those individuals in the offspring population whose predicted fitness values have not improved relative to their parents learn from the current optimal sample. Algorithm 2 gives the pseudo-code for the inferior offspring learning strategy. It should be emphasized that the population should always search in the direction predicted by EGM(1,1), thus fully combining the predictive search capability of EGM(1,1) with the predictive capability of the surrogate model. Therefore, any offspring that has undergone the inferior offspring learning strategy is not retained in the population, even if its predicted fitness value is better than that of its parent.
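One global search step, as described in the paragraph above, might look like the following sketch. It assumes minimization and a surrogate that maps an array of individuals to an array of predicted fitness values. The learning rule used here (moving each inferior offspring a random fraction of the way toward the current best sample) is a hypothetical placeholder chosen only for illustration; the actual update is the one defined by the paper's Algorithm 2, which is not reproduced in this text.

```python
import numpy as np

def global_step(parents, offspring, surrogate, best_x, rng):
    """Prescreen offspring with the surrogate; return next parents and one candidate."""
    f_par = surrogate(parents)
    f_off = surrogate(offspring)
    improved = f_off < f_par                   # greedy selection mask

    # Inferior offspring learn from the current best sample.
    # ASSUMPTION: the random-step form below is hypothetical.
    candidates = offspring.copy()
    step = rng.random((len(offspring), 1))
    candidates[~improved] += step[~improved] * (best_x - candidates[~improved])

    # The candidate with the best predicted fitness goes to true evaluation.
    pick = candidates[np.argmin(surrogate(candidates))]

    # Learned offspring are used only as candidates; the population keeps
    # EGM(1,1) offspring that beat their parents, otherwise the parents.
    next_parents = np.where(improved[:, None], offspring, parents)
    return next_parents, pick
```

Keeping the learned individuals out of `next_parents` reflects the statement that the population itself should continue to follow the direction predicted by EGM(1,1).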

Algorithm 1. Pseudo-code of the global search.

3.3. Local Search

Unlike a global surrogate model that focuses on exploration, a local surrogate model is constructed for increasing the searching speed in regions of promise. In SAGPE, the best solutions evaluated by true function value are used to construct a local surrogate model for local searching. The offspring is generated by GPE, and then the local RBF model is used to evaluate the offspring. Only individuals in the offspring population with better model predictions than the previous generation are retained. The offspring with the best model-predicted adaptation values are selected for true evaluation. If the function value of a sample added to the database is not better than the current optimal function value, it switches to a global search. The pseudo-code of the local search is noted in Algorithm 3.
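The local search differs from the global search mainly in the training set of the surrogate and in the absence of the learning step. A minimal sketch, assuming minimization and hypothetical helper names (`select_local_samples`, `local_prescreen`), is given below; `local_model` stands for any callable that maps an array of individuals to predicted fitness values, such as a local RBF model.

```python
import numpy as np

def select_local_samples(X, f, k):
    """Return the k truly evaluated samples with the best (lowest) fitness."""
    order = np.argsort(f)[:k]
    return X[order], f[order]

def local_prescreen(parents, offspring, local_model):
    """Greedy selection on predicted fitness plus the candidate for true evaluation."""
    f_par = local_model(parents)
    f_off = local_model(offspring)
    keep = f_off < f_par                          # offspring predicted better than parent
    next_parents = np.where(keep[:, None], offspring, parents)
    candidate = offspring[np.argmin(f_off)]       # best predicted offspring
    return next_parents, candidate
```

With the settings reported later in the experimental setup, `k` would be 2*D, so the local model is fitted on a small set of elite samples.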

Algorithm 2. Pseudo-code of the inferior offspring learning strategy.

Algorithm 3. Pseudo-code of the local search.

4. Experimental Studies

In this paper, the performance of SAGPE for solving high-dimensional EOPs is tested on eight commonly used benchmark functions for 30D, 50D, and 100D, and the optimization results are compared with five SAEAs. Table 1 shows the details of the benchmark functions. For ease of reading, simplified names are used for benchmark functions. For example, SRR stands for Shifted Rotated Rastrigin Problem, and other simplified names are given in Table 1. The five SAEAs compared are AutoSAEA [11], LSADE [31], IDRCEA [32], ESAO [21], and SHPSO [33]. The use of multiple surrogate models to assist in the search for EAs is a common feature of all the SAEAs compared. Among them, AutoSAEA, LSADE, and IDRCEA were recently proposed. AutoSAEA employs DE’s mutation and crossover operators to generate new solutions and uses a two-level reward approach to collaboratively select alternative models and filling criteria online. The LSADE uses a combination of Lipschitz-based surrogate models, RBF surrogate models, and a local optimization procedure to assist in the search for the DE. The IDRCEA based on DE design uses individual distribution search strategy (IDS) and a regression categorization-based prescreening (RCP) mechanism to enhance the capability of solving high-dimensional EOPs. ESAO adaptively selects the best individual among the offspring generated by DE using alternating global and local search. SHPSO uses an RBF-assisted social learning PSO to explore the entire decision space and an RBF-assisted PSO to develop promising regions.

Table 1. Characteristics of eight benchmark functions.

4.1. Experimental Setup

In SAGPE, the initial sample size and population size were set to 2*D. The optimal sample size used to construct the local RBF model was set to 2*D. The threshold in the GPE was set to 1 × . The parameter settings for AutoSAEA, LSADE, and IDRCEA are consistent with the original recommended values. Since the source codes of ESAO and SHPSO were not obtained, their experimental data were extracted from the original literature. The maximum true function evaluation number was set to 1000 for each benchmark function. In this paper, all table data and convergence curves were averaged by results of 30 independent runs. The performance of SAGPE was compared with other algorithms by Wilcoxon rank sum test, and symbols “+”, “−” and “=” indicate whether the proposed SAGPE is significantly better than the compared algorithms, significantly worse than the compared algorithms, or comparable to the compared algorithms respectively. All algorithms were run in MATLAB R2023b with 12th Gen Intel(R) Core(TM) i7-12700H 2.30 GHz.
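The "+", "−", and "=" labels can be derived from a rank sum test on the final fitness values of the independent runs. The pure-Python sketch below uses the normal approximation of the rank sum statistic without tie correction; the significance level of 0.05 and the function names are assumptions for this example, and results may differ slightly from MATLAB's `ranksum`, which can use an exact method for small samples. The code returns an ASCII "-" in place of "−".

```python
import math

def average_ranks(values):
    """Rank values from 1, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def rank_sum_symbol(proposed, other, alpha=0.05):
    """Return '+', '-', or '=' for the proposed algorithm (minimization)."""
    n1, n2 = len(proposed), len(other)
    ranks = average_ranks(list(proposed) + list(other))
    w = sum(ranks[:n1])                           # rank sum of the proposed runs
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))        # two-sided p-value
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"                  # lower ranks mean better fitness
```

Each call compares the 30 final values of the proposed algorithm with the 30 final values of one competitor on one benchmark problem.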

4.2. Parameter Sensitivity Analysis

The main parameters in this study are the threshold of GPE and the population size NP. The effects of them on unimodal, multimodal, and complex multimodal problems of different dimensions are discussed in terms of Ellipsoid, Ackley, and SRR functions in this subsection.

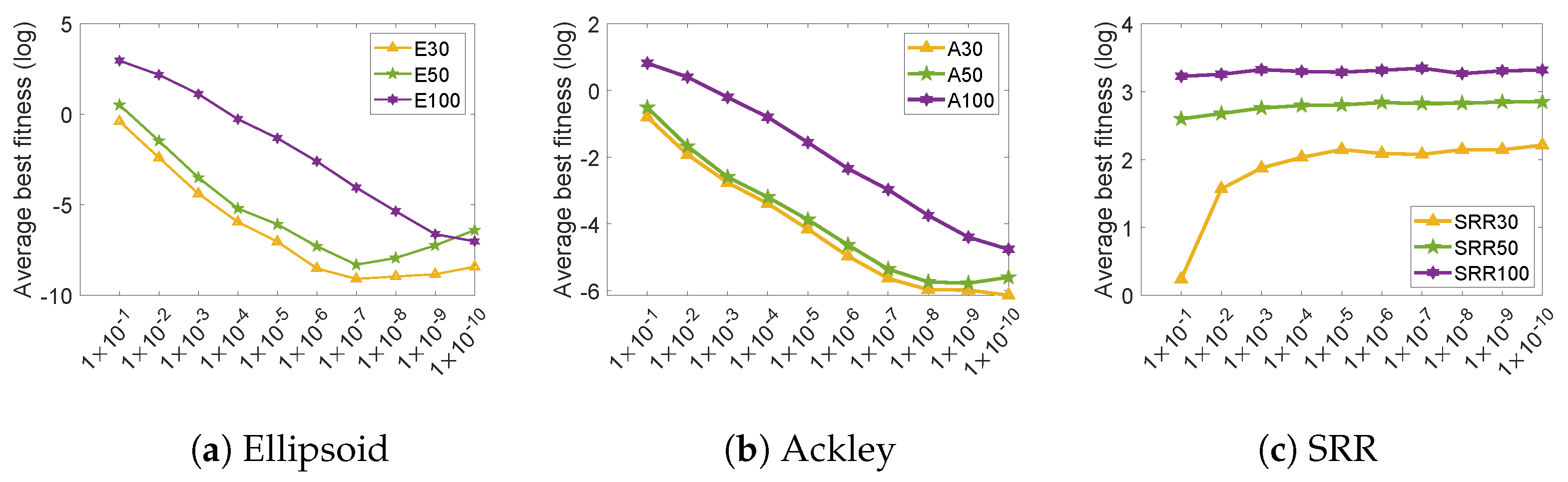

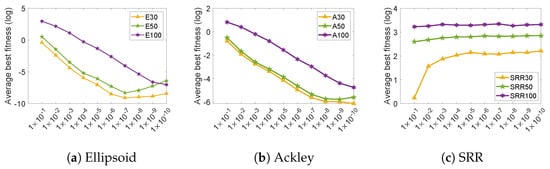

Firstly, the population size was fixed at NP = 100 for all test problems of different dimensions, and the threshold was allowed to vary from 1 × to 1 × . As described in Section 2.1, during the GPE search, if the distance between any two of the three individuals used for the prediction search was greater than the threshold, the EGM(1,1) operator was used for reproduction; otherwise, the linear prediction operator or random perturbation operator was used. The experimental results are shown in Figure 2. For SRR, there was a significant difference in the optimization results between the threshold settings 1 × and 1 × only in 30D, while the results were insensitive to the threshold in the 50D and 100D cases. In comparison, the threshold setting affected the optimization results for Ellipsoid and Ackley to a large extent. It is worth noting that the optimization results for Ellipsoid and Ackley first improved and then deteriorated as the threshold decreased. The reason may be that as the threshold decreases, the exploitation ability of the algorithm increases and the exploration ability decreases. When the threshold was too small, the population converged prematurely and fell into a local optimum. To balance exploration and exploitation, the threshold was set to 1 × in all experiments in this paper.

Figure 2. Results for (a) Ellipsoid, (b) Ackley, and (c) SRR functions for different values of the GPE threshold.

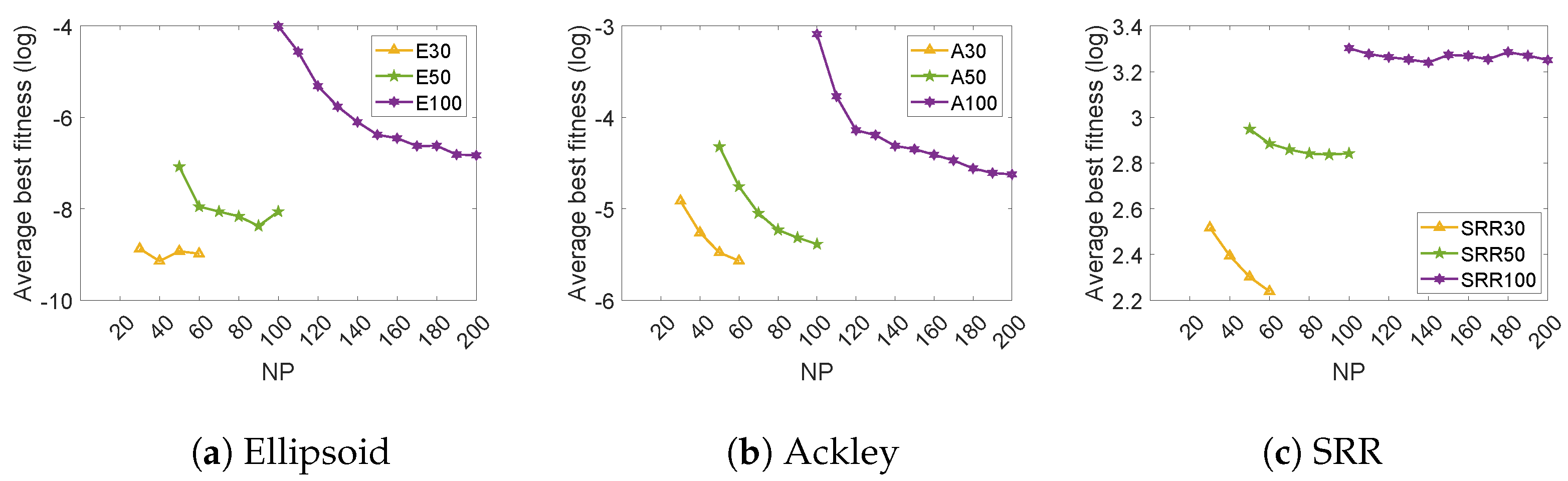

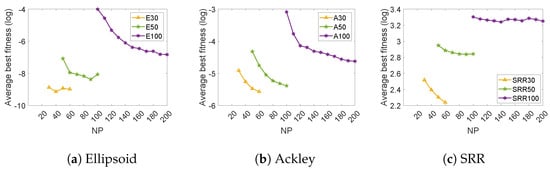

Secondly, the effect of NP on problems with different dimensions was analyzed with the threshold set to 1 × . Considering that the population size affects not only the optimization results but also the time required for optimization, this paper restricted NP to the interval [D, 2*D] in each dimension test in order to balance the optimization results and the optimization time. The results are shown in Figure 3. It can be seen that the optimization results of SAGPE for each type of function generally improved as NP increased. The larger the NP, the greater the diversity and the better the optimization performance. Thus, NP = 2*D was set for a good performance of SAGPE.

Figure 3. Results for different population sizes for (a) Ellipsoid, (b) Ackley, and (c) SRR functions.

4.3. Experimental Results on 30D, 50D, and 100D Benchmark Functions

To examine the performance of SAGPE, it was tested on commonly used benchmark functions and compared to the five SAEAs mentioned above at 30D, 50D, and 100D, respectively. For all the algorithms, the average values of fitness and corresponding standard deviations are shown in Table 2. For ease of observation, the best value for each test function is recorded using black bold font in the table. As can be seen from Table 2, the overall optimization performance of SAGPE is better than the other five compared algorithms. SAGPE has better average values than the other five algorithms in 14 of the 24 problems tested. Table 2 shows that SAGPE has the best optimization results on 30D for Ellipsoid, Ackley, and Rastrigin; on 50D for Ellipsoid, Ackley, Griewank, Rastrigin, and RHC2; and on 100D for Ellipsoid, Rosenbrock, Ackley, Griewank, Rastrigin, and RHC2. As the dimensionality increased, the number of benchmark functions on which SAGPE outperformed the other five algorithms increased.

Table 2. Comparison of results of five SAEAs on 30D, 50D, and 100D functions.

SAGPE outperformed IDRCEA in 15 of 24 problems. IDRCEA generates offspring by IDS and uses RCP to select promising individuals for true function evaluation. By using classification models to compensate for the tendency of regression models to cause population search stagnation in complex landscapes, IDRCEA achieves superior performance on complicated multimodal problems such as SRR and RHC1. Comparatively speaking, the advantages of SAGPE are reflected in solving unimodal and multimodal problems. For complicated multimodal problems, EGM(1,1) may not be able to accurately capture the population update trend during the search process. It is noteworthy that SAGPE achieved the best average value on both the 50D and 100D versions of the complicated multimodal function RHC2. The reason may be that RHC2 is a complicated multimodal function with a narrow basin, and EGM(1,1) can accurately capture the update trend of the population in the narrow basin, thus guiding the population to find a good solution efficiently. Compared to ESAO, SAGPE yielded better averages on 13 out of 18 problems. A global RBF model and a local RBF model are used alternately in both SAGPE and ESAO. As can be seen in Table 2, ESAO has superior performance in solving medium-dimensional problems. For example, ESAO achieved better optimization results than SAGPE on both the 30D and 50D Rosenbrock functions. This may be due to the fact that the local search of ESAO selects the optimum of the local model for true evaluation, which allows ESAO to find good solutions in a narrow domain. However, it is difficult to construct accurate local models in promising regions as dimensionality increases, which ultimately leads to poor optimization performance of ESAO in high-dimensional problems. The EGM(1,1) operator in GPE has the ability to predict the trend of population updates, which can synergize with the surrogate model to assist the population search. 
This feature allows SAGPE to guide the population search to promising areas by predicting the population update trend through EGM(1,1), even when an accurate surrogate model cannot be constructed. Compared to AutoSAEA, SAGPE has better averages on 15 out of 24 problems. AutoSAEA improves the quality of candidate individuals by automatically configuring surrogate models and prescreening strategies during optimization, and it has the best average on 30D Griewank. However, the reliance on surrogates makes AutoSAEA poor at solving high-dimensional problems, although it solves low-dimensional problems well. In comparison, the predictive search mechanism of GPE in SAGPE reduces the dependence of the population search on surrogate models, so its optimization performance does not degrade drastically in high-dimensional problems, where accurate surrogate models are difficult to construct. As a result, AutoSAEA optimized Rosenbrock, Griewank, and RHC2 better than SAGPE at 30D, but not as well as SAGPE at 50D and 100D. The predictive search mechanism of GPE in SAGPE has a more powerful global search capability than traditional EAs. Although SHPSO uses a multipopulation strategy with PSO and SL-PSO working in tandem to enhance the global search capability, SAGPE outperformed SHPSO on average in 14 out of 21 problems. In addition, the inferior offspring learning strategy in SAGPE can significantly improve the search capability of the population. The average values of LSADE are mostly inferior to those of SAGPE due to its weak exploration and exploitation capabilities. Overall, the proposed SAGPE has better optimization results compared to all the above algorithms.

To evaluate the overall performance of all algorithms, Friedman’s test was used to rank the optimization performance of each algorithm on each dimension (the lower, the better). Table 3 records the results of Friedman’s statistical analysis. Table 3 shows that SAGPE has the best overall performance among the eight commonly used benchmark functions, with an average ranking of 2.39. SAGPE ranks first in the 50D and 100D cases, demonstrating its superior performance in solving high-dimensional functions. In the 30D case, SAGPE ranks third and is still competitive.
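The average rankings used in the Friedman analysis can be computed by ranking the algorithms on each problem and averaging the ranks per algorithm. The sketch below shows only this ranking step (not the test statistic); the function name is an assumption, and the numbers in the usage example are made-up placeholders, not values from Table 2 or Table 3.

```python
def friedman_average_ranks(scores):
    """Average rank of each algorithm over all problems (lower is better).

    scores[i][j] is the mean fitness of algorithm j on problem i (minimization).
    Tied algorithms on a problem share the average of the ranks they span.
    """
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        for j, v in enumerate(row):
            less = sum(1 for u in row if u < v)
            equal = sum(1 for u in row if u == v)   # includes v itself
            totals[j] += less + (equal + 1) / 2.0
    return [t / len(scores) for t in totals]

# Placeholder data: 3 problems (rows) x 3 algorithms (columns).
example = [[1.0, 2.0, 3.0],
           [2.0, 1.0, 3.0],
           [1.0, 3.0, 2.0]]
print(friedman_average_ranks(example))
```

In the placeholder data the first algorithm is ranked 1, 2, and 1 on the three problems, so it receives the lowest average rank.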

Table 3. Friedman test results for all functions.

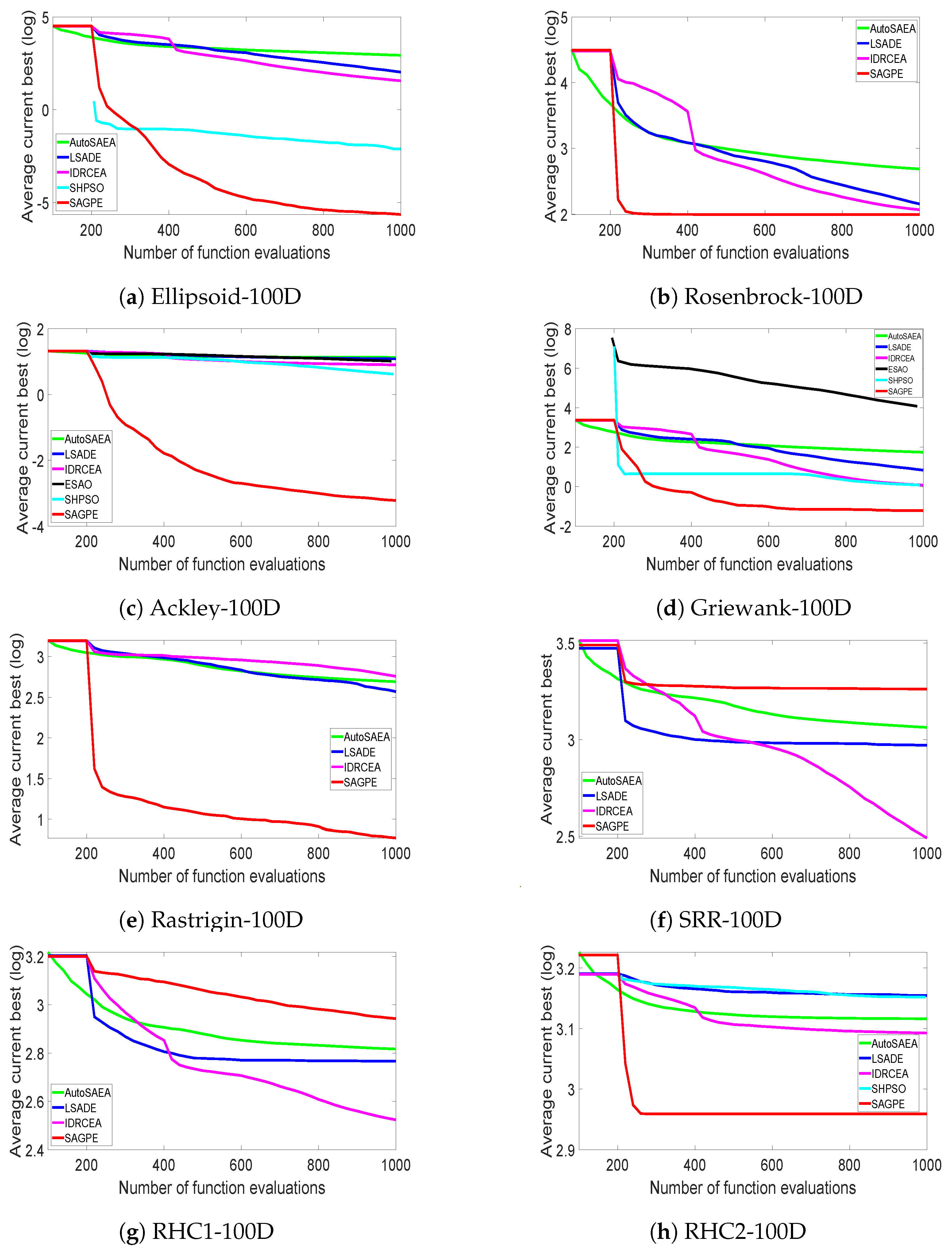

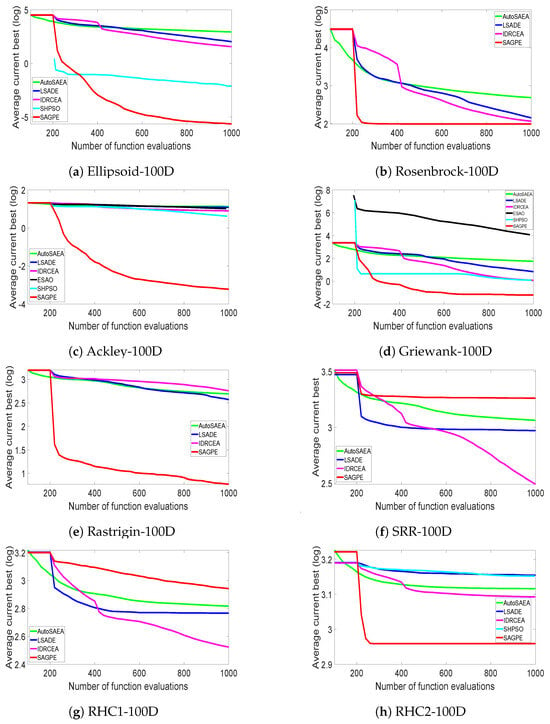

In addition to the optimization results, the convergence speed is also one of the metrics used to judge the performance of an algorithm. The convergence curves in Figure 4 show that, except for SRR and RHC1, the proposed SAGPE converged faster than the other five algorithms in all dimensions of the remaining benchmark functions. This suggests that SAGPE is more suitable than the other algorithms for optimization with fewer available resources. It is worth noting from the SRR convergence curve in Figure 4 that SAGPE quickly fell into local optima in the early stages of optimization. The main reason may be the existence of a large number of local minima in SRR and the large discrepancy between the function values of the locally optimal solutions and those of the surrounding regions. In GPE, each individual in the population searches in its own promising direction as predicted by EGM(1,1). Although giving each individual its own search direction enhances the global search capability of the algorithm, it also tends to scatter the individuals across local optimal regions when solving functions with a large number of local minima, such as SRR. At the same time, because the function value of a local optimal solution of SRR differs greatly from the function values of the surrounding area, it is difficult for GPE to jump out of the local search area using the random perturbation operator within the limited computational resources. Therefore, although SAGPE has superior performance on unimodal and multimodal problems, its optimization performance on complex multimodal problems needs to be strengthened.

Figure 4.

Convergence curves of AutoSAEA, LSADE, IDRCEA, EASO, SHPSO, and SAGPE on 100D functions.

4.4. Effects of Inferior Offspring Learning Strategy

To analyze the impact of the proposed inferior offspring learning strategy on the performance of the optimization algorithm, the proposed SAGPE was compared with SAGPE-W, a variant without the inferior offspring learning strategy, on eight commonly used benchmark functions. The optimization results of the two methods over 30 independent runs are shown in Table 4. Table 4 shows that SAGPE yielded better optimization results than SAGPE-W on most of the benchmark problems, which indicates that the inferior offspring learning strategy can effectively enhance the performance of SAGPE.

Table 4.

Experimental results of SAGPE and SAGPE-W for 30D, 50D, and 100D functions.

In addition, the contributions of the global and local searches of the two algorithms to finding good solutions were investigated. Table 4 records the average number of true function evaluations (NFEs) and true improvements (NTIs) over all benchmark functions for the two search phases of SAGPE and SAGPE-W. The NFE of the global search was always higher than that of the local search for both SAGPE and SAGPE-W, but the difference is not large. To some extent, this reflects that both SAGPE and SAGPE-W strike a good balance between the exploration capability of the global model and the exploitation capability of the local model. For SAGPE-W, except for F1-50D, the NTI in the global search was always greater than the NTI in the local search. This is reasonable because both the global and local searches select the offspring with the best predicted fitness value for true evaluation, while the local model focuses on accurate prediction in a small area without covering the entire search space. If an offspring lies outside the region in which the local model predicts accurately, the local model cannot provide an accurate prediction for it, which reduces search efficiency. It is worth noting that both the NFE and NTI of the global search were higher in SAGPE than in SAGPE-W. This may be because the inferior offspring learning strategy in the global search of SAGPE improves the population’s ability to find good solutions, so that the NFE increases as the NTI increases. This provides further evidence for the effectiveness of the inferior offspring learning strategy.

4.5. Comparison of Different EAs

To test the superiority of GPE in the framework of SAEAs, this paper compared GPE with traditional EAs that search by mutation and crossover. The most commonly used EAs in SAEAs are DE and PSO [9]. Therefore, DE and PSO were selected as the comparison EAs. In the following, the reproduction operations of the DE and the PSO used for the comparison are described.

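Suppose there is a population $P = \{x_1, x_2, \ldots, x_n\}$, where each $x_i$ represents a D-dimensional vector. The DE generates the mutation vector $v_i$ by the following mutation operation:

$$v_i = x_{r_1} + F \cdot (x_{r_2} - x_{r_3})$$

Therein, subscripts $r_1$, $r_2$, and $r_3$ are randomly generated integers in [1, n], which differ from each other, and none is equal to i. F is a scaling factor in the range [0, 1]. Then, the reproduction operation is completed by generating a new $u_i$ by combining $x_i$ and $v_i$ with the following crossover operator:

$$u_{i,j} = \begin{cases} v_{i,j}, & \text{if } rand_j \le CR \text{ or } j = j_{rand} \\ x_{i,j}, & \text{otherwise} \end{cases}$$

Therein, $j_{rand}$ is a random integer in [1, D] and $rand_j$ is a uniform random number in [0, 1]. $CR$ is the crossover probability in the range [0, 1].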
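A minimal sketch of the DE reproduction step described above, assuming the classic DE/rand/1 mutation with binomial crossover. The function name `de_rand_1_bin` and the toy population are hypothetical, and bound handling is omitted.

```python
import random
from typing import List

Vector = List[float]


def de_rand_1_bin(pop: List[Vector], i: int, F: float, CR: float,
                  rng: random.Random) -> Vector:
    """Build one trial vector for individual i (mutation followed by crossover)."""
    n, D = len(pop), len(pop[i])
    # Three mutually distinct indices, none equal to i (requires n >= 4).
    r1, r2, r3 = rng.sample([k for k in range(n) if k != i], 3)
    # Mutation: base vector plus a scaled difference of two other individuals.
    v = [pop[r1][j] + F * (pop[r2][j] - pop[r3][j]) for j in range(D)]
    # j_rand guarantees that at least one component is taken from v.
    j_rand = rng.randrange(D)
    return [v[j] if (rng.random() <= CR or j == j_rand) else pop[i][j]
            for j in range(D)]


# Toy usage with the parameter values quoted for the comparison (F = 0.6, CR = 0.8).
rng = random.Random(0)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(10)] for _ in range(8)]
trial = de_rand_1_bin(pop, i=0, F=0.6, CR=0.8, rng=rng)
```

In a surrogate-assisted setting, trial vectors produced this way would be scored by the surrogate first, and only the most promising one would be passed to the true function.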

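For PSO, the reproduction operation is performed using the following formulas:

$$v_i^{t+1} = v_i^{t} + c_1 r_1 (pbest_i - x_i^{t}) + c_2 r_2 (gbest - x_i^{t})$$

$$x_i^{t+1} = x_i^{t} + v_i^{t+1}$$

In the above two formulas, $v_i$ is the individual’s velocity, $x_i$ is the individual’s current position, $c_1$ and $c_2$ are learning factors, and $r_1$ and $r_2$ are uniform random numbers in [0, 1]. $pbest_i$ is the historical optimal position of the ith individual, and $gbest$ is the global optimal position.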
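A minimal sketch of the velocity and position update described above, assuming the basic PSO form without an inertia weight and with fresh uniform random weights drawn per dimension. Both choices are assumptions, as are the function name `pso_step` and the toy values; velocity clamping and bound handling are omitted.

```python
import random
from typing import List, Tuple

Vector = List[float]


def pso_step(x: Vector, v: Vector, pbest: Vector, gbest: Vector,
             c1: float, c2: float, rng: random.Random) -> Tuple[Vector, Vector]:
    """Return (new_position, new_velocity) for one particle."""
    new_v = []
    for j in range(len(x)):
        r1, r2 = rng.random(), rng.random()  # random weights in [0, 1) (assumption: per dimension)
        new_v.append(v[j]
                     + c1 * r1 * (pbest[j] - x[j])   # pull toward the particle's own best
                     + c2 * r2 * (gbest[j] - x[j]))  # pull toward the global best
    new_x = [x[j] + new_v[j] for j in range(len(x))]
    return new_x, new_v


# Toy usage with both learning factors set to 2, as in the comparison.
rng = random.Random(0)
position, velocity = [1.0, 2.0], [0.5, -0.5]
new_position, new_velocity = pso_step(position, velocity,
                                      pbest=[0.0, 0.0], gbest=[0.0, 0.0],
                                      c1=2.0, c2=2.0, rng=rng)
```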

To ensure a fair comparison, this paper simply replaced the GPE in the above SAGPE-W with DE and PSO, respectively, while all other conditions remained unchanged. GPE, DE, and PSO were each tested as the basic algorithm in the same SAEAs framework on the 50D CEC2014 [34] function set. In DE, F was set to 0.6, and the crossover probability $CR$ was set to 0.8. In PSO, the learning factors $c_1$ and $c_2$ were both set to 2. Table 5 shows the average results of 30 independent runs. The results of the Wilcoxon rank sum test show that GPE achieved better optimization results than DE on 18 out of 30 test functions and similar results on 4 test functions. GPE outperformed PSO on 21 of the test functions and showed similar results on 4 of them. These results show that GPE, which searches through population prediction information, performs better in the SAEAs framework than DE and PSO, which search through the interaction of information from current or historical individuals.
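The better/similar/worse counts above come from pairwise rank sum tests over the 30 runs of each algorithm. The sketch below shows one way such a comparison can be made, using the normal approximation to the two-sided Wilcoxon rank sum test without tie or continuity corrections. The significance level of 0.05, the use of sample means to decide the direction, and the function names are assumptions for illustration and are not taken from this section.

```python
import math
from typing import List


def rank_sum_p_value(a: List[float], b: List[float]) -> float:
    """Two-sided p-value of the rank sum test (normal approximation)."""
    pooled = sorted([(val, 0) for val in a] + [(val, 1) for val in b])
    n1, n2 = len(a), len(b)
    w = 0.0  # sum of the ranks that belong to sample a
    pos = 0
    while pos < len(pooled):
        end = pos
        # Group tied values so that they share the mean rank.
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[pos][0]:
            end += 1
        mean_rank = (pos + end) / 2.0 + 1.0
        w += mean_rank * sum(1 for k in range(pos, end + 1) if pooled[k][1] == 0)
        pos = end + 1
    mu = n1 * (n1 + n2 + 1) / 2.0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (w - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2.0))


def compare(a: List[float], b: List[float], alpha: float = 0.05) -> str:
    """'+' if a is significantly better (smaller), '-' if worse, '=' if similar."""
    if rank_sum_p_value(a, b) >= alpha:
        return "="
    return "+" if sum(a) / len(a) < sum(b) / len(b) else "-"


# Hypothetical final errors of two algorithms over 30 runs each.
runs_a = [float(k) for k in range(30)]
runs_b = [100.0 + k for k in range(30)]
verdict = compare(runs_a, runs_b)
```

Counting the `+`, `=`, and `-` verdicts over all test functions yields a summary of the same kind as the one reported above.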

Table 5.

Experimental results of different surrogate-assisted EAs in the 50D CEC2014 test set.

4.6. Application on the Speed Reducer Design

In this subsection, the proposed SAGPE was applied to solve the speed reducer design problem to test its performance in dealing with real engineering problems. The problem consists of seven variables, where $x_1$ is the width of the tooth surface, $x_2$ is the module of the tooth, $x_3$ is the number of teeth on the pinion, $x_4$ is the length of the first shaft between the bearings, $x_5$ is the length of the second shaft between the bearings, $x_6$ is the diameter of the first shaft, and $x_7$ is the diameter of the second shaft. The design goal is to minimize the weight of the speed reducer. The mathematical model of the problem is described as follows:

where $2.6 \le x_1 \le 3.6$, $0.7 \le x_2 \le 0.8$, $17 \le x_3 \le 28$, $7.3 \le x_4 \le 8.3$, $7.3 \le x_5 \le 8.3$, $2.9 \le x_6 \le 3.9$, and $5.0 \le x_7 \le 5.5$.
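For readers who want to experiment with this benchmark, the sketch below encodes the formulation of the speed reducer problem that is widely used in the literature, with a weight objective and eleven inequality constraints written as $g_k(x) \le 0$. It is assumed, not confirmed by this section, that the paper uses this standard form; the bounds are those listed above, and the names `weight`, `constraints`, and `x_ref` are hypothetical.

```python
import math
from typing import List, Sequence

# Variable bounds as listed in the text (x1..x7).
LOWER = [2.6, 0.7, 17.0, 7.3, 7.3, 2.9, 5.0]
UPPER = [3.6, 0.8, 28.0, 8.3, 8.3, 3.9, 5.5]


def weight(x: Sequence[float]) -> float:
    """Objective: weight of the speed reducer (standard benchmark form, assumed)."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return (0.7854 * x1 * x2**2 * (3.3333 * x3**2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6**2 + x7**2)
            + 7.4777 * (x6**3 + x7**3)
            + 0.7854 * (x4 * x6**2 + x5 * x7**2))


def constraints(x: Sequence[float]) -> List[float]:
    """Constraint values g_k(x); a design is feasible when every value is <= 0."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return [
        27.0 / (x1 * x2**2 * x3) - 1.0,
        397.5 / (x1 * x2**2 * x3**2) - 1.0,
        1.93 * x4**3 / (x2 * x3 * x6**4) - 1.0,
        1.93 * x5**3 / (x2 * x3 * x7**4) - 1.0,
        math.sqrt((745.0 * x4 / (x2 * x3))**2 + 16.9e6) / (110.0 * x6**3) - 1.0,
        math.sqrt((745.0 * x5 / (x2 * x3))**2 + 157.5e6) / (85.0 * x7**3) - 1.0,
        x2 * x3 / 40.0 - 1.0,
        5.0 * x2 / x1 - 1.0,
        x1 / (12.0 * x2) - 1.0,
        (1.5 * x6 + 1.9) / x4 - 1.0,
        (1.1 * x7 + 1.9) / x5 - 1.0,
    ]


# A rounded design close to the best solutions commonly reported for this benchmark.
x_ref = [3.5, 0.7, 17.0, 7.3, 7.7153, 3.3502, 5.2867]
ref_weight = weight(x_ref)
ref_violation = max(constraints(x_ref))  # near zero: several constraints are active
```

Because `x_ref` is rounded, the active constraints should be checked with a small tolerance rather than against exactly zero. How constraint violations enter the fitness used by SAGPE is not specified in this section, so no penalty scheme is shown.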

Table 6 records the experimental results obtained by SAGPE over 30 independent runs, together with the results previously reported by Pan et al. [22]. The statistical results in Table 6 show that the mean and worst values obtained by SAGPE are superior to those of the other five methods. The best results and standard deviations obtained by SAGPE are competitive with those of the other algorithms. These results indicate that SAGPE is effective on the speed reducer design problem and demonstrate its practical value.

Table 6.

Comparison of the experimental results of the speed reducer design.

5. Conclusions and Future Work

A surrogate-assisted gray prediction evolution algorithm (SAGPE) which can be used to solve EOPs was proposed in this article. The algorithm exploits the predictive power of the even gray model (EGM(1,1)) operator in the gray prediction evolution algorithm (GPE) to effectively balance the speed and effectiveness of optimization. In addition, an inferior offspring learning strategy is included in the global search phase to enhance the exploitation of population information. The performance of SAGPE was validated by comparing it with five state-of-the-art SAEAs on 30D, 50D, and 100D using eight benchmark functions. The superiority of SAGPE in solving EOPs was confirmed by the experimental results. The optimization performance of GPE was compared with that of classical DE and PSO with the same surrogate model and prescreening strategy through extended experiments.

The preliminary experimental results indicate that searching with the predictive information of the population is advantageous in the optimization framework of SAEAs. The core of the superior performance of the proposed algorithm is the predictive power of the EGM(1,1). Therefore, the performance of the algorithm could be further improved by strengthening the predictive ability of the EGM(1,1), for example by increasing the number of data sequences. On this basis, adaptive selection of the number of data sequences is also a promising direction for improvement. In addition, it would be worthwhile to combine GPE with other EAs that have strong exploitation capabilities to solve complicated multimodal problems.

Author Contributions

Conceptualization, Q.Z.; Methodology, X.H.; Software, X.H.; Validation, X.H.; Formal analysis, H.L. and Q.S.; Data curation, X.H.; Writing—original draft, X.H.; Writing—review & editing, X.H.; Visualization, X.H.; Supervision, H.L., Q.Z. and Q.S.; Project administration, H.L. and Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Xiang, X.; Su, Q.; Huang, G.; Hu, Z. A simplified non-equidistant grey prediction evolution algorithm for global optimization. Appl. Soft Comput. 2022, 125, 109081.

2. Zhu, H.; Shi, L.; Hu, Z.; Su, Q. A multi-surrogate multi-tasking genetic algorithm with an adaptive training sample selection strategy for expensive optimization problems. Eng. Appl. Artif. Intell. 2024, 130, 107684.

3. Jin, Y.; Wang, H.; Chugh, T.; Guo, D.; Miettinen, K. Data-driven evolutionary optimization: An overview and case studies. IEEE Trans. Evol. Comput. 2018, 23, 442–458.

4. Zhen, H.; Gong, W.; Wang, L.; Ming, F.; Liao, Z. Two-Stage Data-Driven Evolutionary Optimization for High-Dimensional Expensive Problems. IEEE Trans. Cybern. 2023, 53, 2368–2379.

5. Pan, J.S.; Liu, N.; Chu, S.C.; Lai, T. An efficient surrogate-assisted hybrid optimization algorithm for expensive optimization problems. Inf. Sci. 2021, 561, 304–325.

6. Hardy, R.L. Multiquadric equations of topography and other irregular surfaces. J. Geophys. Res. 1971, 76, 1905–1915.

7. Liu, B.; Zhang, Q.; Gielen, G.G. A Gaussian process surrogate model assisted evolutionary algorithm for medium scale expensive optimization problems. IEEE Trans. Evol. Comput. 2013, 18, 180–192.

8. Herrera, M.; Guglielmetti, A.; Xiao, M.; Filomeno Coelho, R. Metamodel-assisted optimization based on multiple kernel regression for mixed variables. Struct. Multidiscip. Optim. 2014, 49, 979–991.

9. Huang, K.; Zhen, H.; Gong, W.; Wang, R.; Bian, W. Surrogate-assisted evolutionary sampling particle swarm optimization for high-dimensional expensive optimization. Neural Comput. Appl. 2023, 1–17.

10. Tian, J.; Tan, Y.; Zeng, J.; Sun, C.; Jin, Y. Multiobjective infill criterion driven Gaussian process-assisted particle swarm optimization of high-dimensional expensive problems. IEEE Trans. Evol. Comput. 2018, 23, 459–472.

11. Xie, L.; Li, G.; Wang, Z.; Cui, L.; Gong, M. Surrogate-Assisted Evolutionary Algorithm with Model and Infill Criterion Auto-Configuration. IEEE Trans. Evol. Comput. 2023, 28, 1114–1126.

12. Nguyen, B.H.; Xue, B.; Zhang, M. A constrained competitive swarm optimizer with an SVM-based surrogate model for feature selection. IEEE Trans. Evol. Comput. 2022, 28, 2–16.

13. Dong, H.; Dong, Z. Surrogate-assisted grey wolf optimization for high-dimensional, computationally expensive black-box problems. Swarm Evol. Comput. 2020, 57, 100713.

14. Li, G.; Zhang, Q.; Lin, Q.; Gao, W. A three-level radial basis function method for expensive optimization. IEEE Trans. Cybern. 2021, 52, 5720–5731.

15. Li, G.; Wang, Z.; Gong, M. Expensive optimization via surrogate-assisted and model-free evolutionary optimization. IEEE Trans. Syst. Man. Cybern. Syst. 2022, 53, 2758–2769.

16. Wang, H.; Jin, Y.; Doherty, J. Committee-based active learning for surrogate-assisted particle swarm optimization of expensive problems. IEEE Trans. Cybern. 2017, 47, 2664–2677.

17. Di Nuovo, A.G.; Ascia, G.; Catania, V. A study on evolutionary multi-objective optimization with fuzzy approximation for computational expensive problems. In Proceedings of the Parallel Problem Solving from Nature-PPSN XII: 12th International Conference, Taormina, Italy, 1–5 September 2012; Springer: Berlin/Heidelberg, Germany, 2012. Part II. pp. 102–111.

18. Li, F.; Shen, W.; Cai, X.; Gao, L.; Wang, G.G. A fast surrogate-assisted particle swarm optimization algorithm for computationally expensive problems. Appl. Soft Comput. 2020, 92, 106303.

19. Ong, Y.S.; Nair, P.B.; Keane, A.J. Evolutionary optimization of computationally expensive problems via surrogate modeling. AIAA J. 2003, 41, 687–696.

20. Li, G.; Zhang, Q. Multiple penalties and multiple local surrogates for expensive constrained optimization. IEEE Trans. Evol. Comput. 2021, 25, 769–778.

21. Wang, X.; Wang, G.G.; Song, B.; Wang, P.; Wang, Y. A novel evolutionary sampling assisted optimization method for high-dimensional expensive problems. IEEE Trans. Evol. Comput. 2019, 23, 815–827.

22. Pan, J.S.; Liang, Q.; Chu, S.C.; Tseng, K.K.; Watada, J. A multi-strategy surrogate-assisted competitive swarm optimizer for expensive optimization problems. Appl. Soft Comput. 2023, 147, 110733.

23. Guo, Z.; Zhang, Z.; Chen, Y.; Ma, G.; Song, L.; Li, J.; Feng, Z. An efficient surrogate-assisted differential evolution algorithm for turbomachinery cascades optimization with more than 100 variables. Aerosp. Sci. Technol. 2023, 142, 108675.

24. Zhang, J.; Li, M.; Yue, X.; Wang, X.; Shi, M. A hierarchical surrogate assisted optimization algorithm using teaching-learning-based optimization and differential evolution for high-dimensional expensive problems. Appl. Soft Comput. 2024, 152, 111212.

25. Meng, Z.; Yang, C. Hip-DE: Historical population based mutation strategy in differential evolution with parameter adaptive mechanism. Inf. Sci. 2021, 562, 44–77.

26. Hu, Z.; Xu, X.; Su, Q.; Zhu, H.; Guo, J. Grey prediction evolution algorithm for global optimization. Appl. Math. Model. 2020, 79, 145–160.

27. Li, W.; Su, Q.; Hu, Z. A grey prediction evolutionary algorithm with a surrogate model based on quadratic interpolation. Expert Syst. Appl. 2024, 236, 121261.

28. Jin, R.; Chen, W.; Simpson, T.W. Comparative studies of metamodelling techniques under multiple modelling criteria. Struct. Multidiscip. Optim. 2001, 23, 1–13.

29. Díaz-Manríquez, A.; Toscano, G.; Coello Coello, C.A. Comparison of metamodeling techniques in evolutionary algorithms. Soft Comput. 2017, 21, 5647–5663.

30. Stein, M. Large sample properties of simulations using Latin hypercube sampling. Technometrics 1987, 29, 143–151.

31. Kudela, J.; Matousek, R. Combining Lipschitz and RBF surrogate models for high-dimensional computationally expensive problems. Inf. Sci. 2023, 619, 457–477.

32. Li, G.; Xie, L.; Wang, Z.; Wang, H.; Gong, M. Evolutionary algorithm with individual-distribution search strategy and regression-classification surrogates for expensive optimization. Inf. Sci. 2023, 634, 423–442.

33. Yu, H.; Tan, Y.; Zeng, J.; Sun, C.; Jin, Y. Surrogate-assisted hierarchical particle swarm optimization. Inf. Sci. 2018, 454, 59–72.

34. Liang, J.J.; Qu, B.Y.; Suganthan, P.N. Problem definitions and evaluation criteria for the CEC 2014 special session and competition on single objective real-parameter numerical optimization. Comput. Intell. Lab. Zhengzhou Univ. Zhengzhou China Tech. Rep. Nanyang Technol. Univ. Singap. 2013, 635, 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).