Detection of Apple Leaf Gray Spot Disease Based on Improved YOLOv8 Network

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

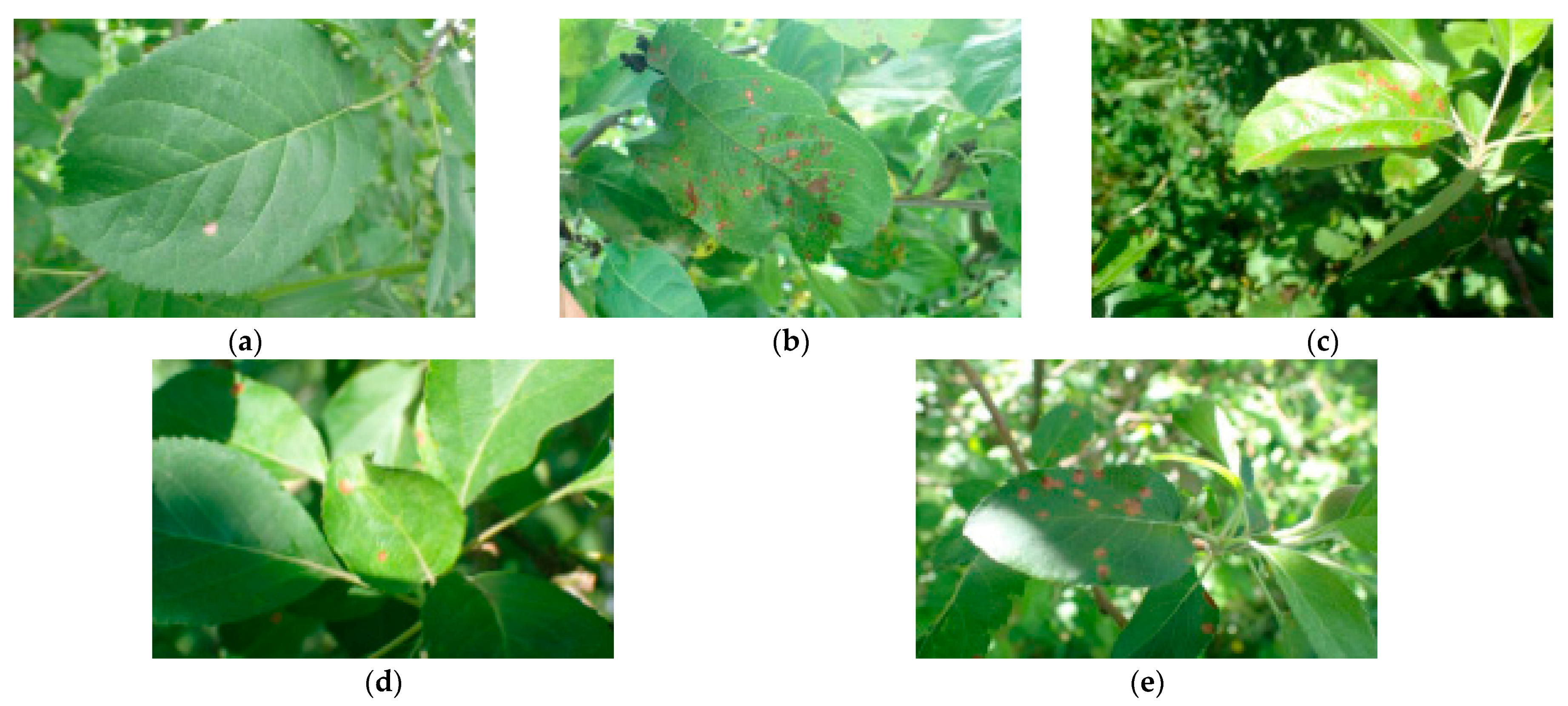

2.1.1. Data Acquisition and Image Features

- A leaf with a single lesion: a single leaf carrying one spot, as shown in Figure 1a;

- An overexposed leaf with lesions: excessive light washes out the spots and can easily lead to missed detections, as shown in Figure 1c;

- A leaf with blurry lesions: the spots are far from the camera and therefore appear blurred, as shown in Figure 1e.

2.1.2. Image and Data Augmentation

2.1.3. Image Annotation and Dataset Generation

2.2. Related Work

YOLOv8

2.3. The Proposed Algorithm

2.3.1. Dilated Reparam Block

2.3.2. SBAY

2.3.3. Small Detection Head

3. Results and Discussion

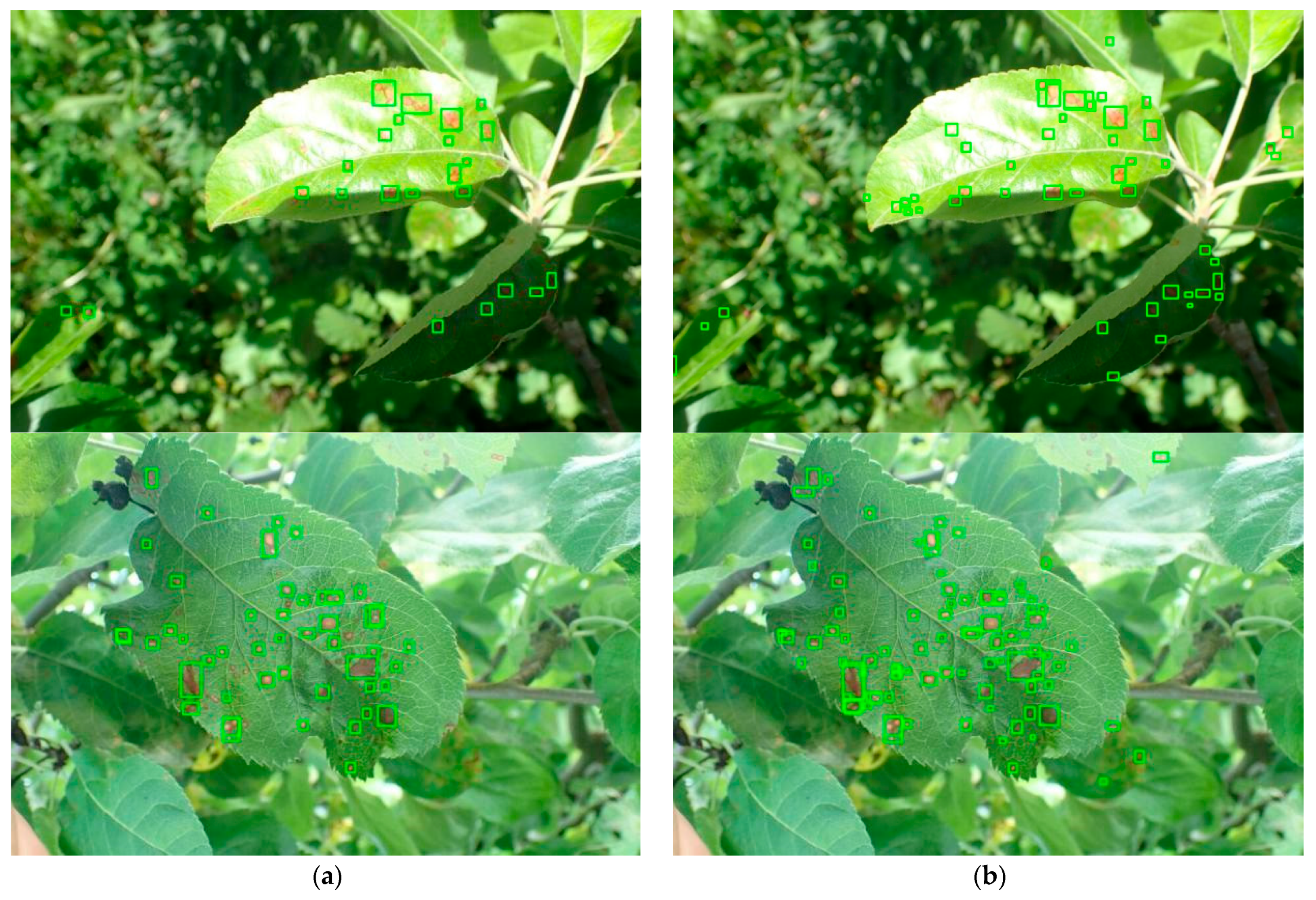

3.1. Training Result Analysis

3.2. Algorithm Performance Evaluation

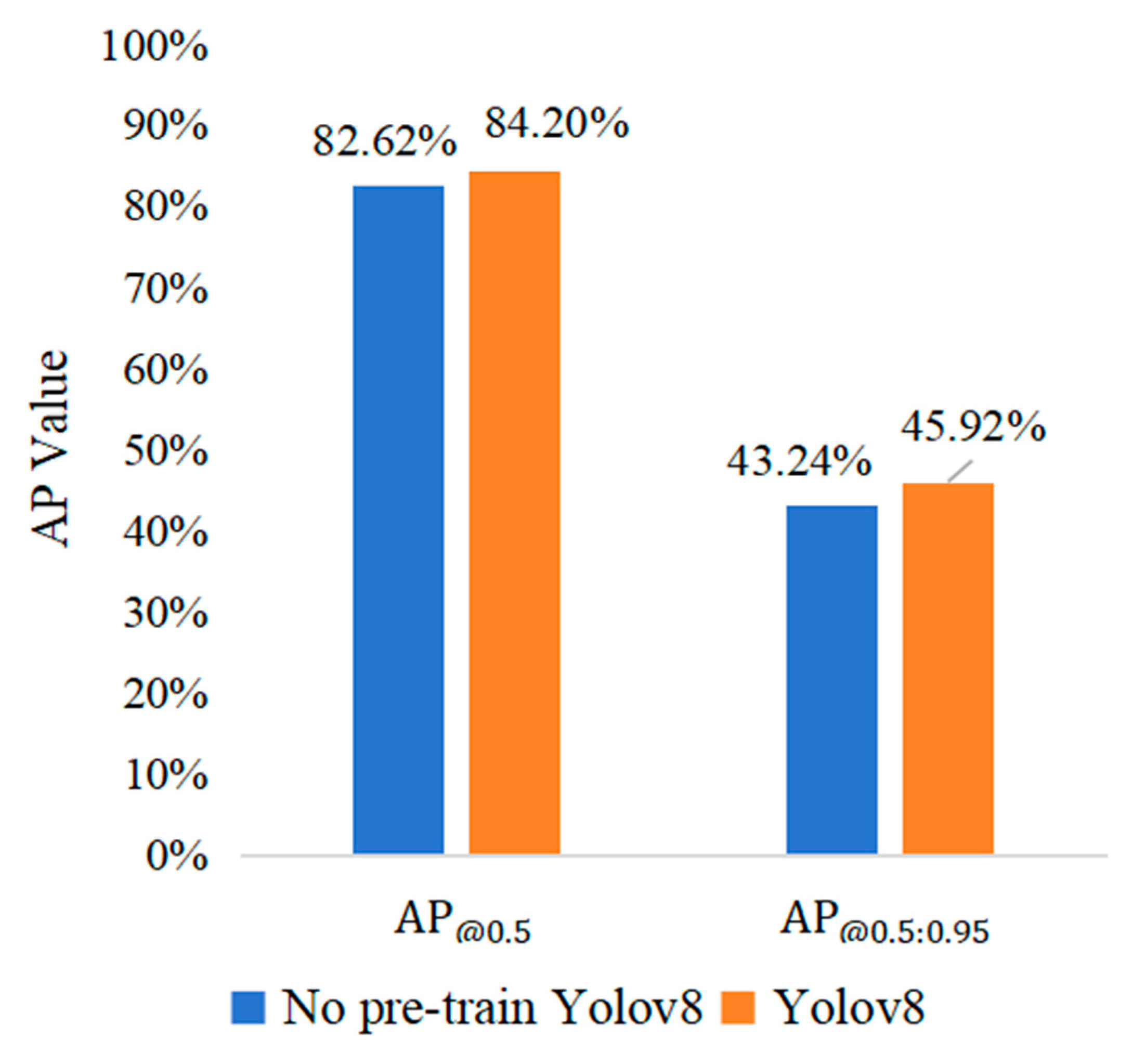

3.2.1. Pre-Training

3.2.2. Comparison of Improved Convolutional Layer

3.2.3. Comparison of Feature Fusion Strategy

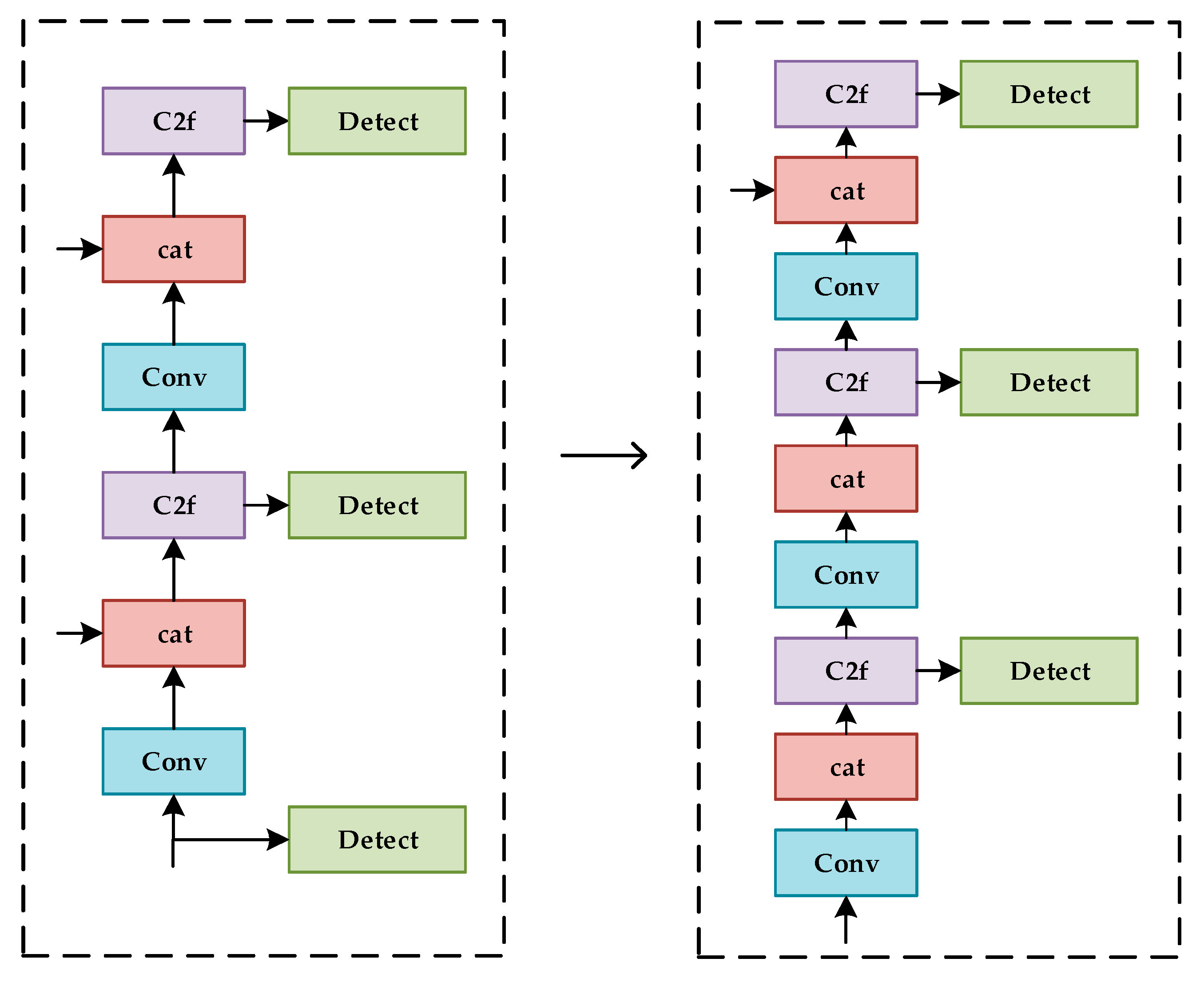

3.2.4. Comparison of Improved Detection Head

3.3. Overall Algorithm Performance Comparison

4. Conclusions and Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Configuration | Parameter |
|---|---|
| CPU | Intel Core i7-10750H |
| GPU | NVIDIA RTX 4090 |
| Operating system | Windows 11 |
| Accelerated environment | CUDA 11.8 |
| Development environment | PyCharm 2022 |

| Model (Conf-Thresh = 0.25, IoU = 0.5) | Precision | Recall | F1 score | TP | FP | FN |
|---|---|---|---|---|---|---|
| YOLOv8 | 0.826 | 0.79 | 0.81 | 22,315 | 1740 | 5875 |
| Improved YOLOv8 | 0.905 | 0.92 | 0.93 | 25,852 | 794 | 2338 |
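The TP, FP and FN columns depend on how predictions are matched to ground-truth boxes at the stated thresholds. The sketch below assumes a common greedy scheme that this section does not itself describe: predictions below the confidence threshold of 0.25 are discarded, the remaining ones are visited in descending confidence, and each is matched to the still-unmatched ground-truth box with the highest IoU, counting as a TP only if that IoU is at least 0.5. Precision, recall and F1 then follow as TP/(TP+FP), TP/(TP+FN) and their harmonic mean. The names `iou`, `match_counts` and `precision_recall_f1` are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed pixel corners


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_counts(preds: List[Tuple[Box, float]], gts: List[Box],
                 conf_thresh: float = 0.25, iou_thresh: float = 0.5):
    """Greedy TP/FP/FN counting for one image and one class (assumed scheme)."""
    # Drop low-confidence predictions, then visit the rest from most to least confident.
    kept = sorted((p for p in preds if p[1] >= conf_thresh),
                  key=lambda p: p[1], reverse=True)
    matched = [False] * len(gts)
    tp = fp = 0
    for box, _conf in kept:
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts):
            if matched[j]:
                continue  # each ground-truth box may be matched only once
            v = iou(box, gt)
            if v > best_iou:
                best_iou, best_j = v, j
        if best_j >= 0 and best_iou >= iou_thresh:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1  # no sufficiently overlapping, unmatched ground truth
    fn = matched.count(False)  # lesions that no prediction recovered
    return tp, fp, fn


def precision_recall_f1(tp: int, fp: int, fn: int):
    """Standard precision, recall and F1 from aggregated counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1
```

Applied to the table's counts, `precision_recall_f1(22315, 1740, 5875)` gives a recall of about 0.79 and `precision_recall_f1(25852, 794, 2338)` about 0.92, matching the Recall column. The same definition gives precisions of about 0.928 and 0.970, so the Precision column cannot be reproduced from these counts with the standard formula.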

| Model | YOLOv8 | DRB | SBAY | Head | (%) | (%) |
|---|---|---|---|---|---|---|
| 1 | √ | 82.62% | 43.24% | |||
| 2 | √ | √ | 85.21% | 43.78% | ||
| 3 | √ | √ | 86.25% | 46.00% | ||
| 4 | √ | √ | 84.15% | 43.44% | ||
| 5 | √ | √ | √ | 88.12% | 47.42% | |
| 6 (Ours) | √ | √ | √ | √ | 90.54% | 48.32% |

| Model | (%) | (%) |
|---|---|---|
| Faster R-CNN | 78.57% | 42.34% |
| SSD | 71.32% | 38.52% |
| YOLOv7 | 79.64% | 40.71% |
| YOLOv8 | 82.62% | 43.24% |
| YOLOv11 | 85.16% | 44.02% |
| Ours | 90.54% | 48.32% |
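The margins of the proposed model over each baseline in the comparison table are plain differences in percentage points. The short sketch below transcribes the table's two metric columns (labelled only "(%)" in the table, so their order is kept as given) and computes those differences; the dictionary `results` and the helper `gains_over` are hypothetical names.

```python
# Values (%) transcribed from the comparison table, in the table's column order.
results = {
    "Faster R-CNN": (78.57, 42.34),
    "SSD": (71.32, 38.52),
    "YOLOv7": (79.64, 40.71),
    "YOLOv8": (82.62, 43.24),
    "YOLOv11": (85.16, 44.02),
    "Ours": (90.54, 48.32),
}


def gains_over(results, ours="Ours"):
    """Return {baseline: (gain in column 1, gain in column 2)} in percentage points."""
    ref = results[ours]
    return {
        name: tuple(round(r - v, 2) for r, v in zip(ref, vals))
        for name, vals in results.items()
        if name != ours
    }


for name, (g1, g2) in gains_over(results).items():
    print(f"{name:>12}: +{g1:.2f} / +{g2:.2f} points")
```

For example, the gains over YOLOv8 come out to 7.92 and 5.08 points, and over YOLOv11 to 5.38 and 4.30 points.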


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, S.; Yin, W.; He, Y.; Kan, X.; Li, X. Detection of Apple Leaf Gray Spot Disease Based on Improved YOLOv8 Network. Mathematics 2025, 13, 840. https://doi.org/10.3390/math13050840
