Abstract

The alternating direction method is an attractive approach for solving convex optimization problems with linear constraints and separable objective functions. Experience with applications has shown that the number of iterations depends significantly on the penalty parameter for the linear constraint. In the classical alternating direction method, the penalty parameter is a constant. In this paper, an improved alternating direction method is proposed, which not only adaptively adjusts the penalty parameter at each iteration based on information from the iterates but also adds relaxation factors to the Lagrange multiplier update steps. Preliminary numerical experiments show that adaptively adjusting the penalty parameter at each iteration and attaching relaxation factors to the Lagrange multiplier update steps are effective in practical applications.

Keywords: convex optimization; alternating direction method of multipliers; symmetric alternating direction method of multipliers; global convergence

MSC: 65F10; 65F45

1. Introduction

Many problems in the fields of signal and image processing, machine learning, medical image reconstruction, computer vision, and network communications [1,2,3,4,5,6,7,8] can be reduced to solving the following convex optimization problem with linear constraints:

where and are the given matrices; is the given vector; and are continuous closed convex functions; and and are nonempty closed convex subsets in and , respectively. The augmented Lagrangian function of the convex optimization problem (Equation (1)) is the following Equation (2).

where is the penalty parameter and is the Lagrange multiplier vector. Based on the objective function with separable structural properties, the alternating direction method of multipliers (ADMM) has been proposed in the literature [9,10] to solve the problem (Equation (1)) as follows:
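As a point of reference, the standard forms of the problem, its augmented Lagrangian, and the ADMM iteration can be sketched as follows. The symbols are assumptions made for illustration ($\theta_1,\theta_2$ for the two objective terms, $\mathcal{X},\mathcal{Y}$ for the constraint sets, $\lambda$ for the multiplier, $\beta>0$ for the penalty parameter), and sign conventions for the multiplier differ between authors.

```latex
\begin{align}
&\min\ \theta_1(x)+\theta_2(y)\quad \text{s.t.}\quad Ax+By=b,\ x\in\mathcal{X},\ y\in\mathcal{Y}, \tag{1}\\
&\mathcal{L}_\beta(x,y,\lambda)=\theta_1(x)+\theta_2(y)-\lambda^{T}(Ax+By-b)+\frac{\beta}{2}\|Ax+By-b\|^{2}, \tag{2}\\
&\begin{cases}
x^{k+1}=\arg\min_{x\in\mathcal{X}}\ \mathcal{L}_\beta(x,y^{k},\lambda^{k}),\\
y^{k+1}=\arg\min_{y\in\mathcal{Y}}\ \mathcal{L}_\beta(x^{k+1},y,\lambda^{k}),\\
\lambda^{k+1}=\lambda^{k}-\beta\,(Ax^{k+1}+By^{k+1}-b).
\end{cases} \tag{3}
\end{align}
```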

The ADMM algorithm can be viewed as the Gauss–Seidel form of the augmented Lagrangian multiplier method, and it can be interpreted as the Douglas–Rachford splitting method (DRSM) from a dual perspective [11]. In addition, the DRSM can be further interpreted as a proximal point algorithm (PPA) [12]. The ADMM algorithm is an important method for solving separable convex optimization problems. Due to its fast processing speed and good convergence performance, it is widely used in statistical learning, machine learning, and other fields. However, the ADMM algorithm converges slowly when the problem is large-scale and high precision is required. Therefore, many researchers have worked on improving it. For example, Fortin and Glowinski [13] suggested attaching a relaxation factor to the Lagrange multiplier updating step in Equation (3), which results in the following scheme:

where the parameter γ can be chosen in the interval $\left(0, \frac{1+\sqrt{5}}{2}\right)$, and thus it becomes possible to enlarge the step size for updating the Lagrange multiplier. An advantage of the larger step size in Equation (4) is that it often leads to faster convergence in practice. This scheme (Equation (4)) differs from the original ADMM scheme (Equation (3)) only in that the step size for updating the Lagrange multiplier can be larger than 1. Technically, however, they belong to two distinct families of ADMM algorithms: one is derived from the operator splitting framework, and the other from Lagrangian splitting. Thus, despite the similarity in notation, the ADMM scheme (Equation (4)) with Fortin and Glowinski's larger step size and the original ADMM scheme (Equation (3)) are different in nature. On the other hand, Glowinski et al. [14] applied the Peaceman–Rachford splitting method (PRSM) [11] to the dual of Equation (1) and obtained the following scheme:

This scheme (Equation (5)) can be regarded as a symmetric version of the ADMM scheme (Equation (3)) in the sense that the variables x and y are treated equally, each update being followed immediately by an update of the Lagrange multiplier. However, as shown in [15], the sequence generated by the symmetric ADMM (Equation (5)) is not necessarily strictly contractive with respect to the solution set of Equation (1), whereas this property is guaranteed for the sequence generated by the ADMM (Equation (3)). To overcome this deficiency, He et al. [15] proposed the following scheme:

where the parameter α ∈ (0, 1) shrinks the step sizes in Equation (5). The sequence generated by Equation (6) is strictly contractive with respect to the solution set of Equation (1); thus, it is called the strictly contractive symmetric version of the ADMM. Restricting α to (0, 1) in Equation (6) makes the Lagrange multiplier updates more conservative, with smaller steps, but small steps are undesirable in practical applications. Instead, one seeks larger steps wherever possible to speed up numerical performance. For this purpose, He et al. [16] proposed the following scheme:

where r and s are restricted to the following region

For further improvements of symmetric variants of the ADMM algorithm, we refer the reader to [17,18,19,20,21,22,23,24,25,26].

In addition, the convergence speed of the ADMM algorithm is directly related to the choice of the penalty parameter. Therefore, variants of the ADMM algorithm that vary the penalty parameter instead of fixing it have been proposed. For example, He, Yang, and Wang [27] proposed an adaptive penalty parameter scheme for solving linearly constrained monotone variational inequalities. In this paper, based on the ideas of [16,27], we propose an alternating direction method of multipliers with an adaptive penalty parameter, attached relaxation factors, and two Lagrange multiplier updates per iteration. The iteration scheme is given in Algorithm 1.

| Algorithm 1: Improved alternating direction method of multipliers for solving the convex optimization problem (1) | |

| Given error tolerance , control parameter , and relaxation factors defined in Equation (8). Choose a parameter sequence that satisfies and . Given an initial penalty parameter and an initial approximation . Set . | |

| Step 1. Compute | |

| , | (9) |

| , | (10) |

| (11) | |

| . | (12) |

| Step 2. If , stop. Otherwise, go to Step 3. | |

| Step 3. Update the penalty parameter | |

| (13) | |

| Step 4. Set , and go to Step 1. | |
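The overall shape of such an iteration (x-update, relaxed multiplier update, y-update, second relaxed multiplier update, penalty adjustment) can be illustrated with a minimal Python sketch on a scalar toy problem. Everything specific here is an assumption for illustration rather than the scheme stated in Algorithm 1: the toy objective, the sign convention of the augmented Lagrangian, the function name `symmetric_admm_toy`, the residual-balancing penalty rule with summable factors `tau_k = 1/(k+1)^2`, and the default values of `r`, `s`, and `mu`.

```python
def symmetric_admm_toy(a=1.0, c=2.0, b=5.0, r=0.8, s=1.17,
                       beta=1.0, mu=10.0, tol=1e-10, max_iter=500):
    """Toy run: min 0.5*(x-a)^2 + 0.5*(y-c)^2  s.t.  x + y = b (scalars).

    Assumed convention: L = f(x) + g(y) - lam*(x+y-b) + beta/2*(x+y-b)^2.
    The penalty rule below is a hypothetical residual-balancing heuristic.
    """
    x = y = lam = 0.0
    for k in range(max_iter):
        # x-subproblem: closed-form minimizer of L over x for fixed y, lam.
        x = (a + lam - beta * (y - b)) / (1.0 + beta)
        # First multiplier update, scaled by the relaxation factor r.
        lam_half = lam - r * beta * (x + y - b)
        # y-subproblem: closed-form minimizer of L over y for fixed x, lam_half.
        y_new = (c + lam_half - beta * (x - b)) / (1.0 + beta)
        # Second multiplier update, scaled by the relaxation factor s.
        primal = x + y_new - b
        lam = lam_half - s * beta * primal
        # Change in y, scaled by beta, used as a dual-type residual.
        dual = beta * (y_new - y)
        y = y_new
        if max(abs(primal), abs(dual)) < tol:
            return x, y, lam, beta, k + 1
        # Adaptive penalty: grow or shrink beta by (1 + tau_k), tau_k summable.
        tau = 1.0 / (k + 1) ** 2
        if abs(primal) > mu * abs(dual):
            beta *= 1.0 + tau
        elif abs(dual) > mu * abs(primal):
            beta /= 1.0 + tau
    return x, y, lam, beta, max_iter


x, y, lam, beta, iters = symmetric_admm_toy()
print(x, y, lam, iters)
```

For this toy problem the exact solution is x = 2, y = 3 with multiplier 1, so the returned values can be checked directly. Because the factors `1 + tau_k` have a finite product, the penalty parameter stays in a bounded positive range, which mirrors the boundedness assumption used in the convergence analysis.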

We choose the algorithm described in [28] with the necessary modifications to solve the x-subproblem and y-subproblem in Algorithm 1. The iteration method to solve the x-subproblem can be described as follows in Algorithm 2.

| Algorithm 2: Algorithm to solve x-subproblem (9) or y-subproblem (11) |

| Given constants , , error tolerance , and initial approximation . Set and . |

| Step 1. Compute , where . |

| Step 2. If , compute where , , . If , stop (in this case, is an approximate solution of the x-subproblem in Algorithm 1). Otherwise, define and set . Go to Step 1. If none of the above conditions holds, go to Step 3. |

| Step 3. Define and set . Go to Step 2. |

2. Convergence of Algorithm 1

To conveniently prove the global convergence of Algorithm 1, we first give the following Lemmas 1–3.

Lemma 1.

([29]). Let be a nonempty closed convex subset on

, and is a continuous closed convex function on

; then, a necessary and sufficient condition for

to be a solution of the optimization problem

is that is a solution of the variational inequality

Lemma 2.

([26,27]). A necessary and sufficient condition for the vector to be a solution of the variational inequality (Equation (14)) is that is also a solution of the projection equation

where

is the projection of onto ; that is,

.
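The projection characterization in Lemma 2 can be made concrete with a small Python sketch. It assumes two simple sets of the kind used later in the experiments (a box and a Euclidean ball centered at the origin) and measures how far a point is from satisfying the projection equation. The function names and the example objective are illustrative assumptions, not part of the original algorithms.

```python
import math


def project_box(v, lo, hi):
    """Projection onto the box [lo, hi]^n: clip each component."""
    return [min(max(vi, lo), hi) for vi in v]


def project_ball(v, radius):
    """Projection onto the Euclidean ball of the given radius centered at 0."""
    norm = math.sqrt(sum(vi * vi for vi in v))
    if norm <= radius:
        return list(v)
    scale = radius / norm
    return [scale * vi for vi in v]


def projection_residual(x, grad, project, beta=1.0):
    """Residual x - P(x - beta * grad(x)); it vanishes exactly at a solution."""
    shifted = [xi - beta * gi for xi, gi in zip(x, grad(x))]
    projected = project(shifted)
    return [xi - pi for xi, pi in zip(x, projected)]


# Example: minimize 0.5 * ||x - t||^2 over the box [0, 5]^2 with t = (7, -1).
target = [7.0, -1.0]
grad = lambda x: [xi - ti for xi, ti in zip(x, target)]
box = lambda v: project_box(v, 0.0, 5.0)

print(projection_residual([5.0, 0.0], grad, box))  # solution: zero residual
print(projection_residual([1.0, 1.0], grad, box))  # non-solution: nonzero
```

The norm of this residual is a natural stopping measure for projection-type subproblem solvers, since it is zero if and only if the point solves the variational inequality.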

Lemma 3.

([30]). Let be a nonempty closed convex subset on , then we have

Let

then the problem (Equation (1)) is equivalent to finding such that

It is known from Lemma 2 that is a solution of the variational inequality (Equation (15)) if and only if is the zero point of

Lemma 4.

Assume that the sequence

is generated by Algorithm 1; then, a necessary and sufficient condition for to be a solution of the variational inequality (Equation (15)) is

Proof.

It follows from Lemma 1 that solving Equations (9) and (11) is equivalent to finding and such that

Thus, it follows from Lemma 2 that

Noting that Equations (10) and (12) hold, we have

Therefore, by Lemma 3, we have

From the above inequality, and noting that r and s are fixed constants and is a bounded sequence of positive numbers, we know that is a solution of the variational inequality (Equation (15)) if and only if Equation (16) holds. □

Lemma 5.

Assume that the sequence

is generated by Algorithm 1 and

is a solution of the variational inequality (Equation (15)); then, we have

Proof.

Since is a solution of the variational inequality (Equation (15)), we have and

On the other hand, we have by Equations (17) and (18) that

Noting that the gradient of convex function is a monotone function, we have from Equations (20)–(22) that

Noting that the gradient of convex function is a monotone function, we have from Equations (21)–(23) that

Noting that , we know from Equation (11), Equations (24) and (25) that Equation (19) holds. □

Lemma 6.

For , we have

Proof.

From Equation (18) we have

and on the other hand, by setting and in Equation (18), we have

Noting that Equation (6) holds, we have

Combining Equation (3) and Equation (29), we obtain

From Equations (27), (28), and (30) and the monotonicity of the gradient of the convex function, we know that Equation (26) holds. □

Let

then is a symmetric semi-definite matrix, and defining

we have the following Lemma 7.

Lemma 7.

Assume that the sequence is generated by Algorithm 1 and

is a solution of the variational inequality (Equation (15)); then, we have

where

and

Proof.

By Equations (3) and (4) and the definition of and , we have

By the definition of , Lemmas 5 and 6, and noting that , we have

Therefore, we have

Hence, the inequality Equation (31) holds. □

To prove the global convergence of Algorithm 1, we divide the domain defined in Equation (8) into the following five parts:

Obviously,

Lemma 8.

Assume that the sequence

is generated by Algorithm 1; then, for any

, there exist constants

and , such that

defined in Equation (32) satisfies

where

Proof.

In the case , we have by the Cauchy–Schwarz inequality for the last term of in Lemma 7 that

Thus, we have

Let in Equation (33) as

then the inequality Equation (33) holds.

In the case , that is, , we have by Lemma 7 that

Let , and we have

In the case , we have by the Cauchy–Schwarz inequality for the last term of in Lemma 7 that

where . Thus, we have

And let in Equation (33) as

then the inequality Equation (33) holds.

In the case , we have by the Cauchy–Schwarz inequality for the last term of in Lemma 7 that

where . Thus, we have

And let in Equation (33) as

then the inequality Equation (33) holds.

In the case , we have by the Cauchy–Schwarz inequality for the last term of in Lemma 7 that

where . Thus, we have

And let in Equation (33) as

then the inequality Equation (33) holds. □

Lemma 9.

Assume that the sequence is generated by Algorithm 1; then, we have that there exist constants such that

where .

Proof.

By the definition of and , we have

Since , we know that matrix

is a positive semi-definite matrix. And thus . Analogously, we have .□

Lemma 10.

Assume that the sequence

is generated by Algorithm 1; then, there exists a constant

such that holds for all positive integers k.

Proof.

If , we have by Equation (26), Lemmas 7–9, and the definition of and that

If , we have by Equation (26), Lemmas 7–9, and the definition of and that

Let

with

then

Noting that and , we know that there exists a constant such that holds for all positive integers k. □

Theorem 1.

Assume that the sequence is generated by Algorithm 1; then, we have

And so

is a solution of the variational inequality (Equation (15)). Hence

, is a solution of the optimization problem (Equation (1)).

Proof.

If , we have by Lemmas 7, 8, and 10 that

Hence, we have

Noting that , the above equation is equivalent to

So, we have .

If , we have by Lemmas 7, 8, and 10 that

Hence, we have

Noting that , the above equation is equivalent to

So, we have .

If , we have by Lemmas 7 and 8 that

Hence, we have

with its equivalence to

So, we have . By the above discussion and Lemma 4, we know that is a solution of the variational inequality (Equation (15)). Hence, , is a solution of the optimization problem (Equation (1)). □

3. Numerical Experiments

In this section, we report numerical experiments that illustrate the efficiency of Algorithm 1. The experiments are divided into two parts. In the first part, we analyze the relationship between the parameters r and s and the convergence of Algorithm 1 and give the best range for these parameters. Then, we compare Algorithm 1 with the algorithms proposed in [15], [16], and [27] on a convex quadratic programming problem. In the second part, we give a numerical comparison between Algorithm 1 and the algorithm proposed in [16] for image restoration. All codes were written in MATLAB R2019a, and all experiments were performed on a personal computer with an Intel(R) Core i7-6567U processor (3.3 GHz) and 8 GB memory.

3.1. Convex Quadratic Programming Problem

To specify the problem (Equation (1)), we choose a separable convex quadratic programming problem proposed in [31], where

Here the matrices , and , and are randomly generated square matrices whose entries are chosen in the interval [−5, 5]. The vectors are generated from a uniform distribution on the interval [−500, 500]. For the linear constraint, and (i.e., all entries of A and B are taken as 1), and . The convex set is defined as a ball centered at the origin with radius , and the convex set is defined as the box in whose components lie in the interval [0, 5].

The convergence of the original ADMM algorithm is well established, the algorithm proposed in [11] may not converge in some cases, the symmetric ADMM algorithm proposed in [16] allows a larger step size than the algorithm in [15], and adaptive penalty parameters have been less studied. Therefore, we compare Algorithm 1 numerically only with the algorithms presented in [14], [16], and [27], denoted by Algorithm 14, Algorithm 16, and Algorithm 27, respectively.

To ensure the fairness of the experiment, the parameter of Algorithm 14 and Algorithm 27 is chosen as the optimal value ; the parameters r and s of the algorithm proposed in [16] are chosen as the optimal values r = 0.8 and s = 1.17; and the initial penalty parameter of all algorithms mentioned above is chosen as 1. The initial iterates for all tested methods are zero vectors, and the stopping criterion is chosen as

For Algorithm 1, we take

The parameters of Algorithm 2, which solves the x-subproblem and the y-subproblem of Algorithm 1, are chosen as , , and . Table 1 reports the iteration numbers (Iter) and computation time (Time) of Algorithm 1 with different parameters r and s; the best choice found is r = 0.95 and s = 1.12. Therefore, in the following numerical comparison, the parameters r and s of Algorithm 1 are chosen as r = 0.95 and s = 1.12.

Table 1. The numerical comparison of Algorithm 1 with different parameters r and s.

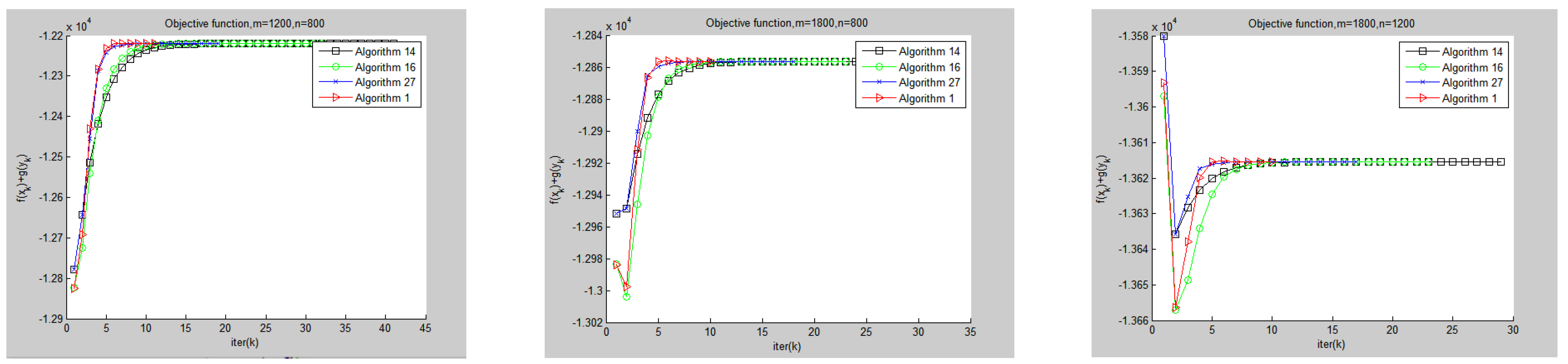

Table 2 shows the iteration numbers (Iter) and the computation time (Time) of Algorithm 1, Algorithm 14, Algorithm 16, and Algorithm 27 for solving the separable convex quadratic programming problem proposed in [31]. To investigate the stability and accuracy of the algorithms, we tested 8 different pairs of m and n values, running each pair 10 times and averaging the results. Figure 1 plots the objective function values (Obj) against computing time for Algorithm 1, Algorithm 14, Algorithm 16, and Algorithm 27 on the same problem.

Table 2. The numerical comparison of Algorithm 1 with the other three algorithms.

Figure 1. Objective function curve of Algorithm 1 and the other three algorithms.

3.2. Image Restoration Problem

In this subsection, we consider the total variation image deblurring model proposed in [16], whose discretized version can be written as

where represents a digital clean image, is a corrupted input image, is the discrete gradient operator, and and are the discrete derivatives in the horizontal and vertical directions, respectively. is the matrix representation of a spatially invariant blurring operator, λ > 0 is a constant balancing the data fidelity and total variational regularization terms, and defined on is given by

This is a basic model for various more advanced image processing tasks, and it has been studied extensively in the literature. Introducing the auxiliary variable x, we can reformulate Equation (34) as

which is a special case of the generic model (Equation (1)) under discussion, and thus Algorithm 1 is applicable.
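To make the correspondence with the generic two-block model explicit, the reformulation can be sketched as below. The symbols are assumptions for illustration ($u$ for the image, $u^{0}$ for the observed image, $K$ for the blur matrix, $\nabla$ for the discrete gradient, and a generic norm $\|\cdot\|$ standing in for the one defined in the text); only the splitting pattern is the point.

```latex
\begin{align*}
&\min_{u}\ \|\nabla u\| + \frac{\lambda}{2}\,\|Ku-u^{0}\|^{2}
\quad\Longleftrightarrow\quad
\min_{x,\,u}\ \|x\| + \frac{\lambda}{2}\,\|Ku-u^{0}\|^{2}
\quad \text{s.t.}\quad x-\nabla u = 0,
\end{align*}
```

In this form the first block carries the nonsmooth regularizer and the second block carries the quadratic data-fidelity term, with the linear constraint given by the identity acting on $x$, the negative gradient operator acting on $u$, and a zero right-hand side.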

We test two images, lena.tif (512 × 512) and man.pgm (512 × 512). These images are first corrupted by the blur operator with a kernel size of 21 × 21, and then the blurred images are further corrupted by zero-mean white Gaussian noise with standard deviation 0.002. Figure 2 shows the original and degraded images. The quality of the restored images is measured by the signal-to-noise ratio (SNR), given by

where is the original image and is the recovered image. A larger SNR value means a higher quality of the restored image. Table 3 and Figure 3 report the comparison experiment between Algorithm 1 and the algorithm proposed in [16] for the image restoration problem.
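A minimal Python sketch of such a quality measure is given below. It assumes the common definition SNR = 20 log10(‖original‖ / ‖recovered − original‖) in decibels, with images flattened to lists of pixel values; both the definition and the function name `snr_db` are assumptions for illustration and may differ from the exact formula used in the experiments.

```python
import math


def snr_db(original, recovered):
    """SNR in decibels under the assumed definition
    20 * log10(||original|| / ||recovered - original||)."""
    signal = math.sqrt(sum(o * o for o in original))
    error = math.sqrt(sum((r - o) ** 2 for o, r in zip(original, recovered)))
    if error == 0.0:
        return math.inf  # perfect recovery
    return 20.0 * math.log10(signal / error)


# Tiny illustration with a two-pixel "image".
print(snr_db([3.0, 4.0], [3.0, 4.5]))
```

Under this definition, reducing the reconstruction error by a factor of 10 raises the SNR by 20 dB.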

Figure 2. First column: original images; second column: corrupted images.

Table 3. The numerical comparison of Algorithm 1 and the algorithm in [16].

Figure 3. First column: blurred images; second column: images recovered by Algorithm 1; third column: images recovered by the algorithm in [16].

4. Conclusions

The alternating direction method is one of the most attractive approaches for solving convex optimization problems with linear constraints and separable objective functions. Experience with applications has shown that the iteration numbers and computing time depend significantly on the penalty parameter for the linear constraint. In the classical alternating direction method, the penalty parameter is a constant. In this paper, based on the ideas of [16,27], we propose an alternating direction method of multipliers with an adaptive penalty parameter, attached relaxation factors, and two Lagrange multiplier updates per iteration (Algorithm 1). The method adaptively adjusts the penalty parameter at each iteration based on information from the iterates and adds relaxation factors to the Lagrange multiplier update steps. The global convergence of Algorithm 1 is proven (Theorem 1). Preliminary numerical experiments show that adaptively adjusting the penalty parameter at each iteration and attaching relaxation factors to the Lagrange multiplier update steps are effective in practical applications (see Table 2 and Figure 1).

Author Contributions

Writing—original draft preparation, J.P. and Z.W.; writing-review and editing, S.Y. and Z.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by National Natural Science Foundation of China (grant number 11961012) and the Special Research Project for Guangxi Young Innovative Talents (grant number AD20297063).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors thank the anonymous reviewer for valuable suggestions that helped them to improve this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Yang, J.F.; Zhang, Y. Alternating direction algorithms for l1-problems in compressive sensing. SIAM J. Sci. Comput. 2011, 33, 250–278.

2. Li, J.C. A parameterized proximal point algorithm for separable convex optimization. Optim. Lett. 2018, 12, 1589–1608.

3. Tao, M.; Yuan, X.M. Recovering low-rank and sparse components of matrices from incomplete and noisy observations. SIAM J. Optim. 2011, 21, 57–81.

4. Hager, W.W.; Zhang, H.C. Convergence rates for an inexact ADMM applied to separable convex optimization. Comput. Optim. Appl. 2020, 77, 729–754.

5. Liu, Z.S.; Li, J.C.; Liu, X.N. A new model for sparse and low-rank matrix decomposition. J. Appl. Anal. Comput. 2017, 7, 600–616.

6. Jiang, F.; Wu, Z.M. An inexact symmetric ADMM algorithm with indefinite proximal term for sparse signal recovery and image restoration problems. J. Comput. Appl. Math. 2023, 417, 114628.

7. Bai, J.C.; Hager, W.W.; Zhang, H.C. An inexact accelerated stochastic ADMM for separable convex optimization. Comput. Optim. Appl. 2022, 81, 479–518.

8. Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

9. Chan, T.F.; Glowinski, R. Finite Element Approximation and Iterative Solution of a Class of Mildly Nonlinear Elliptic Equations; Technical Report STAN-CS-78-674; Computer Science Department, Stanford University: Stanford, CA, USA, 1978.

10. Hestenes, M.R. Multiplier and gradient methods. J. Optim. Theory Appl. 1969, 4, 303–320.

11. Lions, P.L.; Mercier, B. Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 1979, 16, 964–979.

12. Cai, X.J.; Gu, G.Y.; He, B.S.; Yuan, X.M. A proximal point algorithm revisit on the alternating direction method of multipliers. Sci. China Math. 2013, 56, 2179–2186.

13. Fortin, M.; Glowinski, R. Augmented Lagrangian Methods: Applications to the Numerical Solution of Boundary-Value Problems; North-Holland: Amsterdam, The Netherlands, 1983.

14. Glowinski, R.; Karkkainen, T.; Majava, K. On the convergence of operator-splitting methods. In Numerical Methods for Scientific Computing, Variational Problems and Applications; Heikkola, E., Kuznetsov, P.Y., Neittaanmäki, P., Pironneau, O., Eds.; CIMNE: Barcelona, Spain, 2003; pp. 67–79.

15. He, B.S.; Liu, H.; Wang, Z.R.; Yuan, X.M. A strictly contractive Peaceman-Rachford splitting method for convex programming. SIAM J. Optim. 2014, 24, 1011–1040.

16. He, B.S.; Ma, F.; Yuan, X.M. Convergence study on the symmetric version of ADMM with larger step sizes. SIAM J. Imaging Sci. 2016, 9, 1467–1501.

17. Luo, G.; Yang, Q.Z. A fast symmetric alternating direction method of multipliers. Numer. Math. Theory Meth. Appl. 2020, 13, 200–219.

18. Li, X.X.; Yuan, X.M. A proximal strictly contractive Peaceman-Rachford splitting method for convex programming with applications to imaging. SIAM J. Imaging Sci. 2015, 8, 1332–1365.

19. Bai, J.C.; Li, J.C.; Xu, F.M.; Zhang, H.C. Generalized symmetric ADMM for separable convex optimization. Comput. Optim. Appl. 2018, 70, 129–170.

20. Wu, Z.M.; Li, M. An LQP-based symmetric alternating direction method of multipliers with larger step sizes. J. Oper. Res. Soc. China 2019, 7, 365–383.

21. Chang, X.K.; Bai, J.C.; Song, D.J.; Liu, S.Y. Linearized symmetric multi-block ADMM with indefinite proximal regularization and optimal proximal parameter. Calcolo 2020, 57, 38.

22. Han, D.R.; Sun, D.F.; Zhang, L.W. Linear rate convergence of the alternating direction method of multipliers for convex composite programming. Math. Oper. Res. 2018, 43, 622–637.

23. Gao, B.; Ma, F. Symmetric alternating direction method with indefinite proximal regularization for linearly constrained convex optimization. J. Optim. Theory Appl. 2018, 176, 178–204.

24. Shen, Y.; Zuo, Y.N.; Yu, A.L. A partially proximal S-ADMM for separable convex optimization with linear constraints. Appl. Numer. Math. 2021, 160, 65–83.

25. Adona, V.A.; Goncalves, M.L.N. An inexact version of the symmetric proximal ADMM for solving separable convex optimization. Numer. Algor. 2023, 94, 1–28.

26. He, B.S.; Yang, H. Some convergence properties of a method of multipliers for linearly constrained monotone variational inequalities. Oper. Res. Lett. 1998, 23, 151–161.

27. He, B.S.; Yang, H.; Wang, S.L. Alternating direction method with self-adaptive penalty parameters for monotone variational inequalities. J. Optim. Theory Appl. 2000, 106, 337–356.

28. He, B.S.; Liao, L.Z. Improvements of some projection methods for monotone nonlinear variational inequalities. J. Optim. Theory Appl. 2002, 112, 111–128.

29. Palomar, D.P.; Scutari, G. Variational inequality theory: a mathematical framework for multiuser communication systems and signal processing. In Proceedings of the International Workshop on Mathematical Issues in Information Sciences-MIIS, Xi’an, China, 7–13 July 2012.

30. He, B.S. A new method for a class of linear variational inequalities. Math. Programm. 1994, 66, 137–144.

31. Han, D.R.; He, H.J.; Yang, H.; Yuan, X.M. A customized Douglas–Rachford splitting algorithm for separable convex minimization with linear constraints. Numer. Math. 2014, 127, 167–200.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).