Abstract

In recent years, deep learning has been extensively deployed on unmanned aerial vehicles (UAVs), particularly for object detection. As the cornerstone of UAV-based object detection, deep neural networks are susceptible to adversarial attacks, with adversarial patches being a relatively straightforward method to implement. However, current research on adversarial patches, especially those targeting UAV object detection, is limited. This scarcity is notable given the complex and dynamically changing environment inherent in UAV image acquisition, which necessitates the development of more robust adversarial patches to achieve effective attacks. To address the challenge of adversarial attacks in UAV high-altitude reconnaissance, this paper presents a robust adversarial patch generation framework. Firstly, the dataset is reconstructed by considering various environmental factors that UAVs may encounter during image collection, and the influences of reflections and shadows during photography are integrated into patch training. Additionally, a nested optimization method is employed to enhance the continuity of attacks across different altitudes. Experimental results demonstrate that the adversarial patches generated by the proposed method exhibit greater robustness in complex environments and have better transferability among similar models.

MSC:

68T07

1. Introduction

In recent years, unmanned aerial vehicle (UAV) technology has developed rapidly. Its applications have expanded across civil, military, and commercial domains, covering reconnaissance and surveillance, medical rescue, and logistics distribution [1], and demonstrating great potential and practical value. As control technology matures and costs fall, UAVs are becoming smaller and lighter. They can also capture high-resolution, real-time dynamic imagery, which makes them an indispensable data source for low-altitude remote sensing research. Owing to the accessibility and cost-effectiveness of UAV remote sensing imagery, its importance continues to grow.

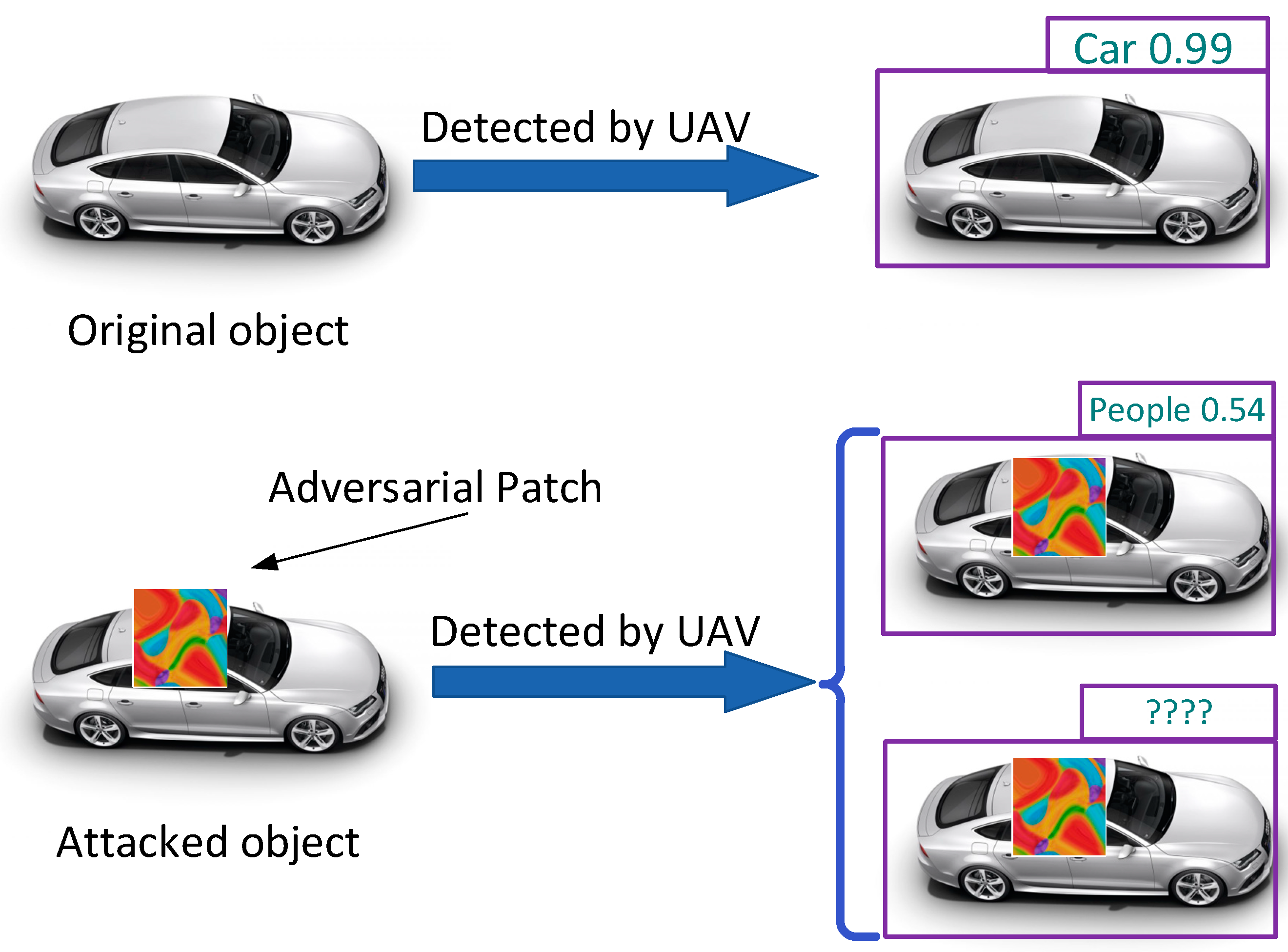

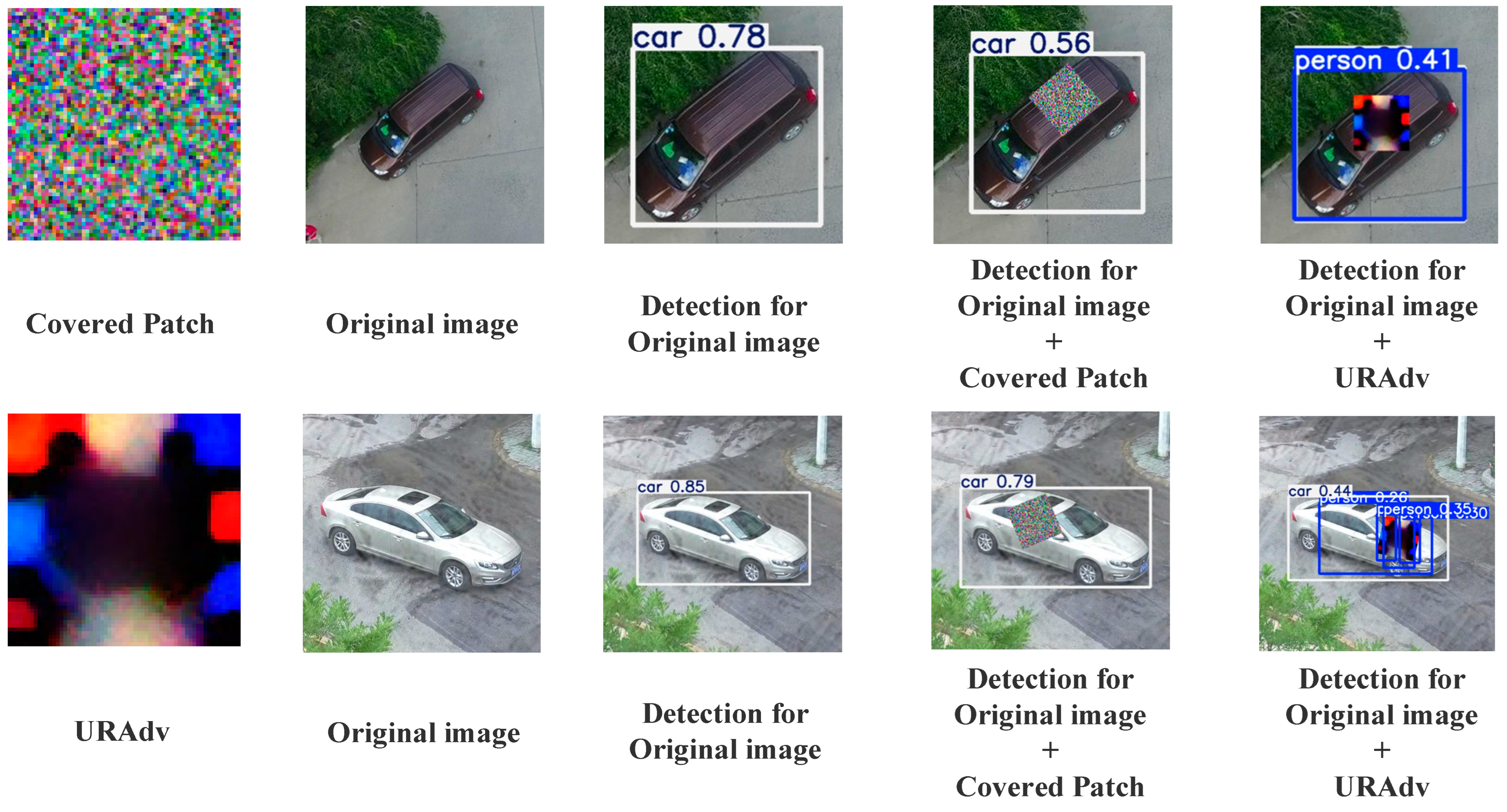

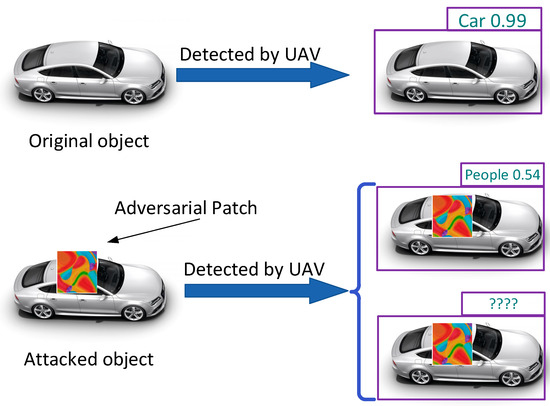

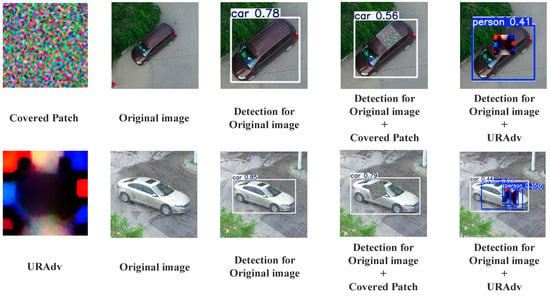

Object detection is one of the key technologies for enhancing the perception ability of UAVs, and it is essential for autonomous UAVs to complete tasks such as path planning [2], obstacle avoidance [3], fault detection [4], and geolocation [5]. Deep learning methods based on neural networks have become the mainstream algorithms for object detection because of their powerful feature learning and representation capabilities. However, the reliability and security of these algorithms are being challenged by a new type of threat: adversarial attacks. Attackers add small, often imperceptible perturbations to the input images, causing the model to make wrong predictions. In the physical world, adversarial attacks usually take one of two forms. In the first, attackers can directly access the target objects and typically superimpose “patches” or “stickers” on them; to cope with the variability of the physical environment, affine transformations are generally applied during training to enhance robustness. In the second, attackers cannot directly access the target objects and instead alter the environment, for example by using lasers [6], creating shadow interference [7], or attaching colored films to the image acquisition devices [8]. Existing research on adversarial patches has already demonstrated the vulnerability of detectors based on deep neural networks (DNNs), with many studies in face recognition, traffic sign recognition, and autonomous driving [9,10]. Figure 1 shows the effect of adversarial patch attacks on UAV detectors.

Figure 1.

The effect of adversarial patch attacks on UAV detectors.

Currently, research on adversarial attacks in remote sensing imaging focuses mostly on satellite images. In contrast, research on the reliability of UAV object detection remains limited [11] and has not kept pace with the rapid development of UAVs. On the one hand, UAV image acquisition is affected by numerous factors, such as weather conditions, shooting angles, and light intensity. However, most publicly available datasets do not comprehensively account for these factors, so adversarial patches trained on them may be effective only in ideal environments [10]. On the other hand, current research on adversarial patches for UAV object detection is mostly conducted in white-box settings, so the resulting patches often perform well only on a particular model or dataset and transfer poorly. Generating adversarial patches that remain effective under such environmental variation and transfer well across models therefore remains an open problem.

This paper proposes URAdv, an adversarial patch generation algorithm for UAV aerial images. The method accounts for various complex factors that UAVs may encounter during flight and therefore adapts better to the environment. Our evaluation proceeds in two stages. First, we verify that the generated patches achieve a relatively high attack success rate on the test set and on other datasets of the same type. Second, we transfer the generated patches to other models to simulate gray-box attacks; experiments show that the patches retain substantial attack effectiveness in this setting.

In summary, the main contributions of this work are threefold:

- (a) We constructed the VisDrone-13 dataset based on VisDrone2019 to more closely reflect the complexities of the physical environment. The dataset combines the clean images with 12 types of simulated real-world perturbations that UAVs may encounter during data acquisition (13 image types in total). Training on it yields more transferable adversarial patches and enhances their robustness in complex UAV scenarios.

- (b) We integrated a local corruption model into the training of adversarial patches, primarily to account for reflections under strong lighting and shadows under occlusion. This approach aligns the decision boundary of the surrogate model more closely with the classification boundary encountered in real-world scenarios, thereby enhancing the transferability of adversarial attacks.

- (c) We implemented a nested optimization approach to address the discontinuity in adversarial attacks caused by altitude variations during UAV flight. By strategically overlaying patches, this method enables continuous attacks at various distances within a predefined range.

Experimental results demonstrate that URAdv achieved an average attack success rate (ASR) of 82.0% in white-box settings and maintained high transferability (78.5% ASR) across different model architectures.

2. Background and Related Work

2.1. Application of UAV Object Detection

Currently, UAVs and deep learning are progressively achieving a high degree of integration, particularly in the field of computer vision. Object detection serves as the foundation for UAVs to autonomously execute a variety of tasks, providing support for a range of functions such as autonomous navigation, path planning, and target tracking [12,13]. Although significant progress has been made in UAV object detection under ideal conditions, the technology still faces shortcomings when applied to actual image capture due to the unique flight environment encountered during UAV image acquisition. Therefore, overcoming complex and variable environmental conditions to develop more robust and efficient object detection techniques has become a research hotspot in recent years. Much of this research focuses on the intrinsic characteristics of UAVs, such as variations in image scale caused by altitude changes and differences in image perspective effects due to changes in shooting angles [14,15,16,17], while neglecting the impact of adverse weather conditions and potential influences from the imaging equipment itself in outdoor flight environments. These two adverse factors can lead to color distortion and blurred contours in captured images, which in turn result in feature loss during the neural network recognition process.

2.2. Physical Adversarial Attacks

The most prominent feature of physical environment adversarial attacks (hereinafter referred to as physical attacks) is that attackers can implement carefully crafted adversarial examples in the real world through various means. Generally, physical attacks can be divided into contact attacks and non-contact attacks according to whether the attack involves direct contact with the attack target or not.

Contact attacks are those in which the generated adversarial examples directly contact the target. In most cases, the examples are printed and attached to the surface of the target as patches or stickers to evade detectors or cause misclassification. Under two-dimensional conditions, the basic attack conditions are met as long as the patch can be captured by the detector. In recent years, there have been numerous studies on adversarial patches for image classification and object detection. Thys et al. [18] targeted the YOLOv2 detection algorithm, printed the digital perturbations, and attached them to a target person, so that the detector failed to recognize the person wearing the adversarial patch in video. They also introduced the non-printability loss and the total variation loss to enhance the robustness of the generated patches. Wang et al. [19] verified that patches generated on YOLOv2 transferred poorly and constructed an adversarial patch with better transferability targeting YOLOv3. For infrared imaging, Wei et al. [20] proposed a physically feasible adversarial patch generation method: by attaching thermal insulation materials to the target object to alter its appearance under infrared imaging, they deceived the detector. In [21], the authors extended adversarial patches to three dimensions and proposed a 3D adversarial patch generation method that adapts to different human body postures. Du et al. [10] designed two adversarial patches for vehicle detection in aerial images; when deployed on top of or around a vehicle, the patches rendered the detector ineffective. Zhang et al. [22] proposed an adversarial attack on object detection in remote sensing data that optimizes the patches to attack as many targets as possible and introduces a scale factor to adapt to multi-scale targets. However, this method exhibited poor robustness in complex environments, particularly under marked changes in light and shadow. In [23], the authors proposed an adversarial attack method named Patch-Noobj for aircraft detection in remote sensing images, which adaptively adjusts the size of the adversarial patches to the scale of the aircraft.

Non-contact attacks are those in which attackers do not directly touch the targets and instead rely on more covert means. Such attacks mainly mimic sunlight, natural shadows, and other media that are relatively difficult to notice. Duan et al. [6] proposed an attack that constructs a laser beam and adjusts its physical parameters, achieving effective attacks in both digital and physical environments. Guesmi et al. [24] proposed AdvRain, which simulates raindrops: adversarial examples shaped like raindrops are printed on translucent paper and placed in front of the camera lens. Attacks that imitate natural phenomena in this way are hardly noticeable. Wang et al. [8] introduced RFLA (reflected light adversarial attack), which places a colored transparent plastic film and a precisely cut paper template near a reflective surface so that distinctive colored geometric patterns form on the target object. This method shows the potential of illumination for adversarial attacks; however, it focuses on static environments and does not account for the dynamic lighting changes during UAV flight. Zhong et al. [7] attacked detectors by constructing shadow areas of different shapes, a more natural approach that achieved good concealment.

Despite the significant achievements of the aforementioned methods in specific scenarios, several limitations remain. First, their adaptability to environmental conditions is insufficient: most existing methods are designed for static or ideal environments and do not adequately account for the dynamic conditions encountered during UAV flight, such as varying lighting, shadows, and weather. Second, the transferability of most adversarial attack methods is limited. Third, some methods are computationally inefficient. Table 1 compares URAdv with several classical methods.

Table 1.

Comparison of URAdv and classical methods.

3. Threat Model

The adversarial patches developed in this study target UAV aerial photography tasks. Their objective is to lower the detection confidence of onboard detectors below the recognition threshold so that targets are not identified in the imagery. We assume the attacker has complete knowledge of the target model’s structure and parameters; adversarial patches are generated with optimization strategies and then transferred to the physical environment by printing. Given the aerial viewpoint of UAV photography, the printed patches must be sized to fit on the top surface of the target, so that they disrupt detection without fully obscuring the target.

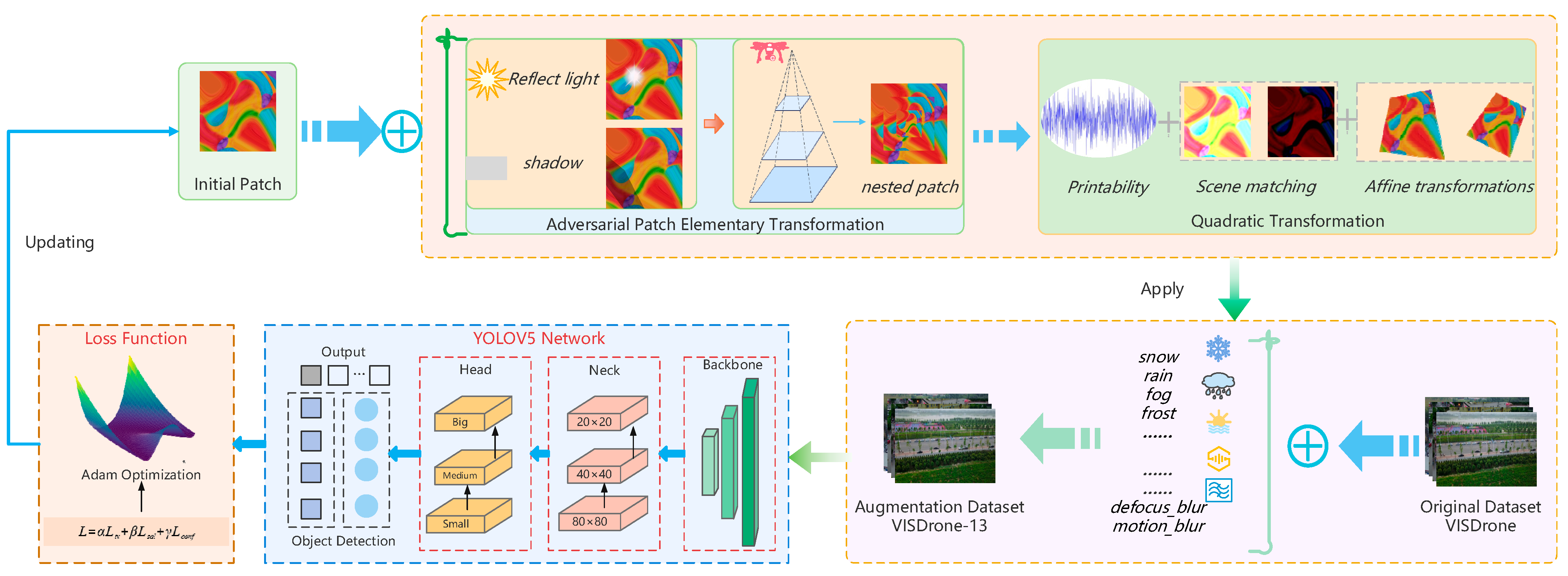

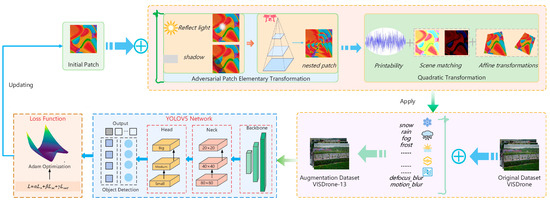

Our approach to generating adversarial patches with enhanced transferability rests on two key components, dataset reconstruction and patch transformation, complemented by a tailored optimization objective. First, we construct a more realistic and comprehensive dataset. Next, we simulate physical conditions through patch transformations to improve robustness. Finally, we design a loss function targeting the detection network. The overall framework is depicted in Figure 2.

Figure 2.

Proposed adversarial patch generation framework.

4. Constructed VisDrone-13 Dataset

Diversified data play a crucial role in the training of adversarial examples. To enhance the transferability of the generated adversarial patches, we constructed the VisDrone-13 dataset to train the adversarial patches. This dataset can simulate various interference factors that UAVs may encounter during flight in a more realistic manner.

4.1. Benchmark of Dataset

In constructing the VisDrone-13 dataset, we drew upon the well-established VisDrone2019 benchmark [25]. Comprising 288 video clips with a total of 261,908 video frames and 10,209 static images, this dataset encompasses 12 distinct categories. The data, sourced from various UAV cameras, offer a broad spectrum of scenarios, including multiple cities, diverse environments, a variety of objects, and differing object densities. Furthermore, the ground truth data have been meticulously annotated, primarily for applications in object detection, tracking, and counting tasks.

4.2. Complicating Factors

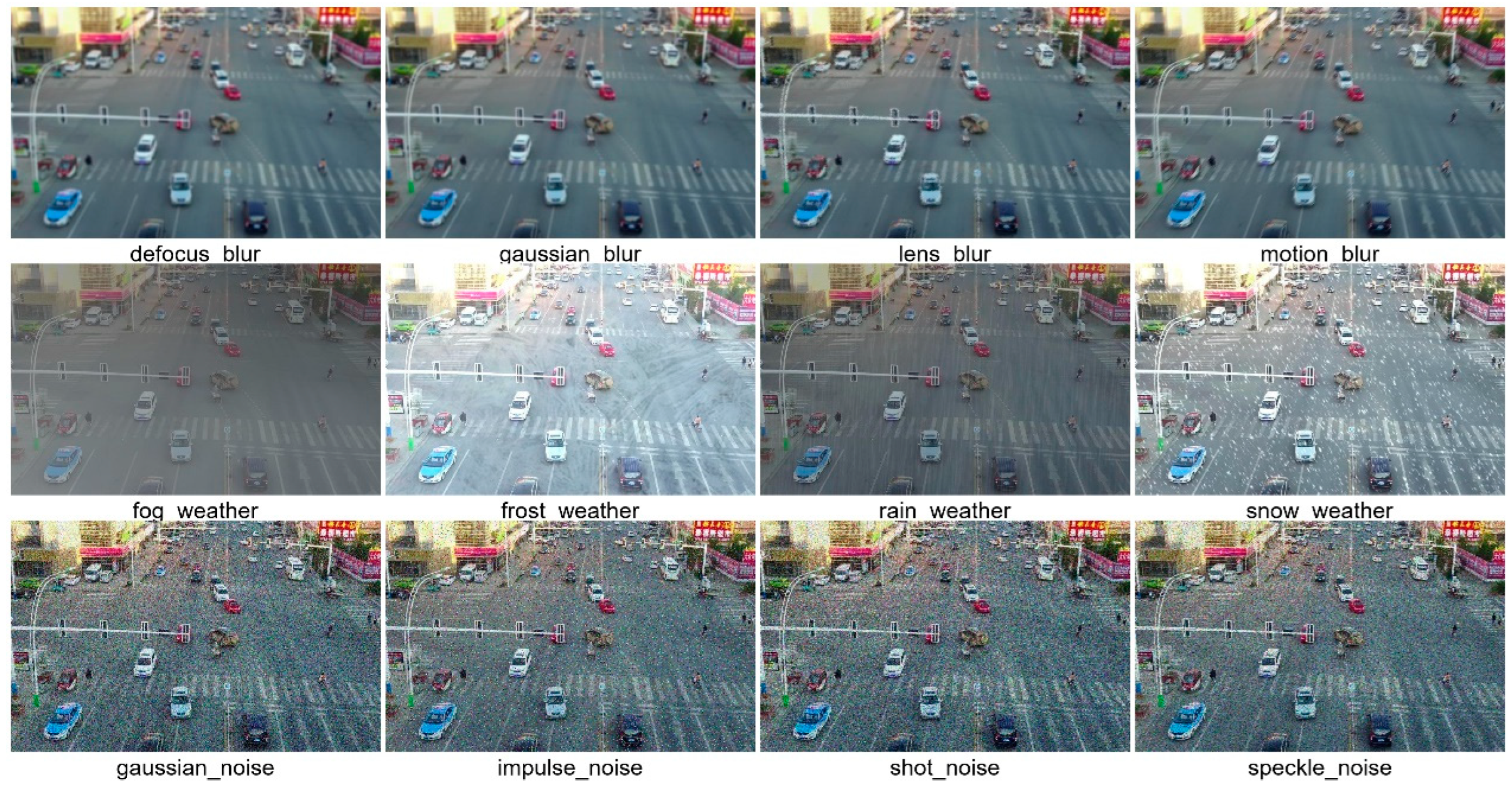

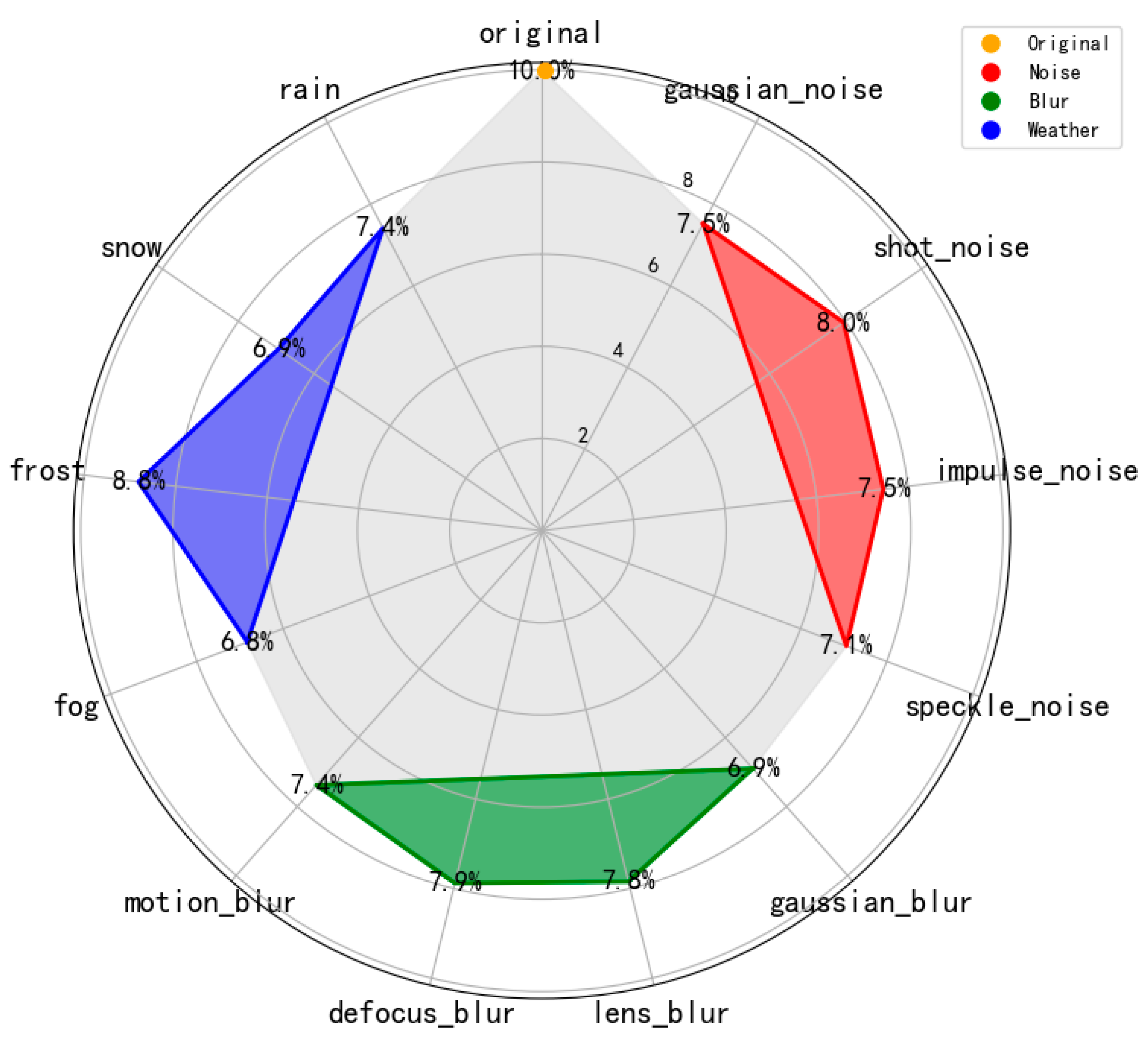

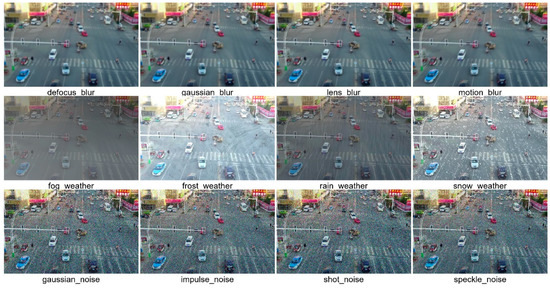

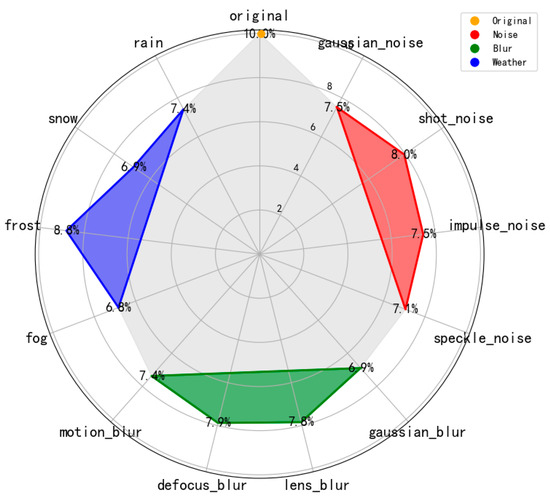

Inspired by Hendrycks [26], we redesigned three categories of interference to align more closely with the real-world conditions encountered during UAV image acquisition, divided into 12 sub-categories as depicted in Figure 3.

Figure 3.

Schematic diagram of 12 perturbation factors.

Weather Changes: Acknowledging that UAVs operate outdoors, we implemented algorithms to simulate four prevalent weather conditions—rain, snow, fog, and frost—that UAVs are likely to encounter in flight. These simulated conditions were then integrated with the original images to emulate the degradation effects attributable to weather factors.

Camera Blur: Given the high-speed motion of UAVs during image capture, we established four types of blur—lens blur, Gaussian blur, defocus blur, and motion blur—to replicate the image degradation potentially caused by a UAV’s camera capabilities.

Environmental Noise: Accounting for the environmental noise encountered by UAVs during image acquisition, we incorporated impulse noise, shot noise, Gaussian noise, and speckle noise to simulate the degradation induced by sensor interference from noise.

4.3. Construction of the VisDrone-13 Dataset

To make the interference dataset comprehensive without altering the size of the original dataset, we expanded the three categories of interference factors into 12 specific scenarios, each with 3 severity levels. Combined with the original clean images, these form the VisDrone-13 dataset, which contains 13 distinct image types in total. To mitigate class imbalance in the new dataset, we carefully set the proportion of each interference factor: the original, interference-free images constitute 10% of the dataset, and each of the three interference categories accounts for 30%, with its four sub-types allocated randomly within that share. Further details are presented in Table 2.

Table 2.

The proportions of the VisDrone-13 dataset.
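The split described above can be sketched as a per-image assignment plan. This is a minimal illustration, not the authors' code, assuming that clean images make up 10% of the dataset and each of the three interference categories 30%, with the sub-type and the severity level (1 to 3) drawn uniformly at random; the function name `assign_corruptions`, the sub-type labels, and the rule that rounding leftovers stay clean are hypothetical.

```python
import random

# Three interference categories, four sub-types each (12 scenarios in total).
CATEGORIES = {
    "weather": ["rain", "snow", "fog", "frost"],
    "blur": ["lens", "gaussian", "defocus", "motion"],
    "noise": ["impulse", "shot", "gaussian", "speckle"],
}

def assign_corruptions(image_ids, clean_ratio=0.10, category_ratio=0.30, seed=0):
    """Map each image id to (category, sub_type, severity); clean images get severity 0."""
    rng = random.Random(seed)
    ids = list(image_ids)
    rng.shuffle(ids)
    n = len(ids)
    n_clean = round(clean_ratio * n)
    n_cat = round(category_ratio * n)
    plan = {img: ("clean", None, 0) for img in ids[:n_clean]}
    start = n_clean
    for cat, sub_types in CATEGORIES.items():
        for img in ids[start:start + n_cat]:
            plan[img] = (cat, rng.choice(sub_types), rng.randint(1, 3))
        start += n_cat
    # Any images left over by rounding are kept clean (hypothetical rule).
    for img in ids[start:]:
        plan[img] = ("clean", None, 0)
    return plan
```

Shuffling once and slicing guarantees exact category counts, while the sub-type and severity within each category are left random, matching the "allocated randomly within that share" description.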

In the following discussion, we detail the specific scenarios for each type of interference and the algorithms utilized to simulate them.

Weather Changes: Because UAVs gather data outdoors, weather conditions significantly affect the quality of the collected imagery. We simulated four fundamental weather conditions (rain, snow, fog, and frost) that reduce air visibility and, consequently, image clarity. The detection capabilities of UAV cameras are particularly compromised when lenses or target surfaces are partially covered by snow or frost. To simulate fog, we overlaid unevenly distributed translucent grayscale images of the same dimensions as the originals onto the original images at varying opacities. For frost, we extracted the surface texture from real frosted glass and merged it with the original image. For rain and snow, we superimposed particle masks of corresponding sizes onto the original images and applied motion blur to account for the falling motion of the precipitation.
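The fog overlay can be illustrated with a short NumPy sketch. It assumes images are H×W×3 float arrays in [0, 1]; the uneven grayscale layer is produced here by bilinearly upsampling a coarse random grid, and the gray range (0.6 to 1.0), the grid size, and the name `add_fog` are illustrative assumptions rather than the settings used for VisDrone-13.

```python
import numpy as np

def add_fog(image, opacity=0.5, grid=8, rng=None):
    """Blend an uneven, translucent grayscale layer into an HxWx3 image in [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    # Coarse random gray levels; bilinear upsampling makes the fog vary smoothly.
    coarse = rng.uniform(0.6, 1.0, size=(grid, grid))
    knots = np.arange(grid)
    xs = np.linspace(0, grid - 1, w)
    ys = np.linspace(0, grid - 1, h)
    rows = np.stack([np.interp(xs, knots, r) for r in coarse])                    # (grid, w)
    fog = np.stack([np.interp(ys, knots, rows[:, j]) for j in range(w)], axis=1)  # (h, w)
    # Alpha-blend the same fog layer over every color channel.
    return (1.0 - opacity) * image + opacity * fog[..., None]
```

Varying `opacity` across images is one simple way to realize the "varying opacities" mentioned above, for example by tying it to the severity level.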

Camera Blur: This category of interference stems primarily from the rapid motion inherent in UAV operations. First, during flight, the image sensor of the aerial camera (e.g., a charge-coupled device, CCD) may move relative to the projected scene because of payload vibration and camera instability. This relative motion displaces the image on the sensor during exposure, producing motion blur. Second, relative motion between the UAV and the subject blurs the image because the entire movement is recorded on the CMOS (complementary metal-oxide semiconductor) sensor during exposure. To simulate these effects, we employed convolution operations: the radius of the convolution kernel sets the extent of the blurred area, the standard deviation of the kernel sets the severity of the blur, and the kernel’s random orientation emulates the direction of movement.
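The three kernel parameters named above (radius, standard deviation, orientation) can be combined as in the following sketch. It assumes a line-shaped kernel through the center whose taps are Gaussian-weighted along the line; this particular construction and the function names are our assumptions for illustration, not necessarily the kernel used to build the dataset. In practice the angle would be drawn at random, e.g. `rng.uniform(0, 180)`.

```python
import numpy as np

def motion_blur_kernel(radius, sigma, angle_deg):
    """Line kernel through the center: radius = extent, sigma = severity, angle = direction."""
    size = 2 * radius + 1
    k = np.zeros((size, size))
    t = np.arange(-radius, radius + 1)
    theta = np.deg2rad(angle_deg)
    xs = np.rint(radius + t * np.cos(theta)).astype(int)
    ys = np.rint(radius - t * np.sin(theta)).astype(int)
    # Gaussian weights along the line; add.at accumulates taps that round to the same cell.
    np.add.at(k, (ys, xs), np.exp(-t**2 / (2.0 * sigma**2)))
    return k / k.sum()

def convolve2d(image, kernel):
    """Filter an HxW or HxWxC image with reflect padding.

    The motion kernel is point-symmetric, so this correlation equals a true convolution.
    """
    r = kernel.shape[0] // 2
    pad = [(r, r), (r, r)] + [(0, 0)] * (image.ndim - 2)
    padded = np.pad(image, pad, mode="reflect")
    h, w = image.shape[:2]
    out = np.zeros(image.shape, dtype=np.float64)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            if kernel[dy, dx] != 0.0:
                out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def motion_blur(image, radius, sigma, angle_deg):
    return convolve2d(image, motion_blur_kernel(radius, sigma, angle_deg))
```

Because the kernel is normalized, a flat image is left unchanged and blur only redistributes intensity along the motion direction.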

Environmental Noise: The outdoor environment in which UAVs operate is replete with various types of noise. Considering the mission-specific attributes of image capture, we simulated four representative types of noise. Impulse noise is often associated with the aerodynamic noise of UAV rotors; in multi-rotor UAV systems, this aerodynamic noise is a primary noise source, while in fixed-wing UAV systems, the power system and air friction are the main contributors to noise. Shot noise and speckle noise are primarily related to light source intensity [26], whereas Gaussian noise manifests as random pixel value fluctuations, typically associated with sensor readout noise. To simulate speckle noise, we used normally distributed random noise, multiplying it with image pixels and then superimposing it onto the original image. Shot noise was simulated by using random numbers following the Poisson distribution.
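The four noise types can be sketched as follows for H×W×3 images in [0, 1]. The speckle and shot variants follow the descriptions above (multiplicative normal noise, Poisson-distributed counts); the Gaussian and impulse (salt-and-pepper) forms and all parameter values are standard choices assumed here for illustration.

```python
import numpy as np

def speckle_noise(img, sigma, rng):
    # Multiplicative: zero-mean normal noise scaled by the pixel value, added back.
    return np.clip(img + img * rng.normal(0.0, sigma, img.shape), 0.0, 1.0)

def shot_noise(img, photons, rng):
    # Poisson photon counts; a larger `photons` gives weaker relative noise.
    return np.clip(rng.poisson(img * photons) / photons, 0.0, 1.0)

def gaussian_noise(img, sigma, rng):
    # Additive zero-mean normal noise, a common model of sensor readout noise.
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)

def impulse_noise(img, amount, rng):
    # Salt-and-pepper: a fraction `amount` of pixels is forced to black or white.
    out = img.copy()
    u = rng.random(img.shape[:2])
    out[u < amount / 2] = 0.0
    out[(u >= amount / 2) & (u < amount)] = 1.0
    return out
```

Severity levels can be mapped to increasing `sigma` or `amount`, or to decreasing `photons`.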

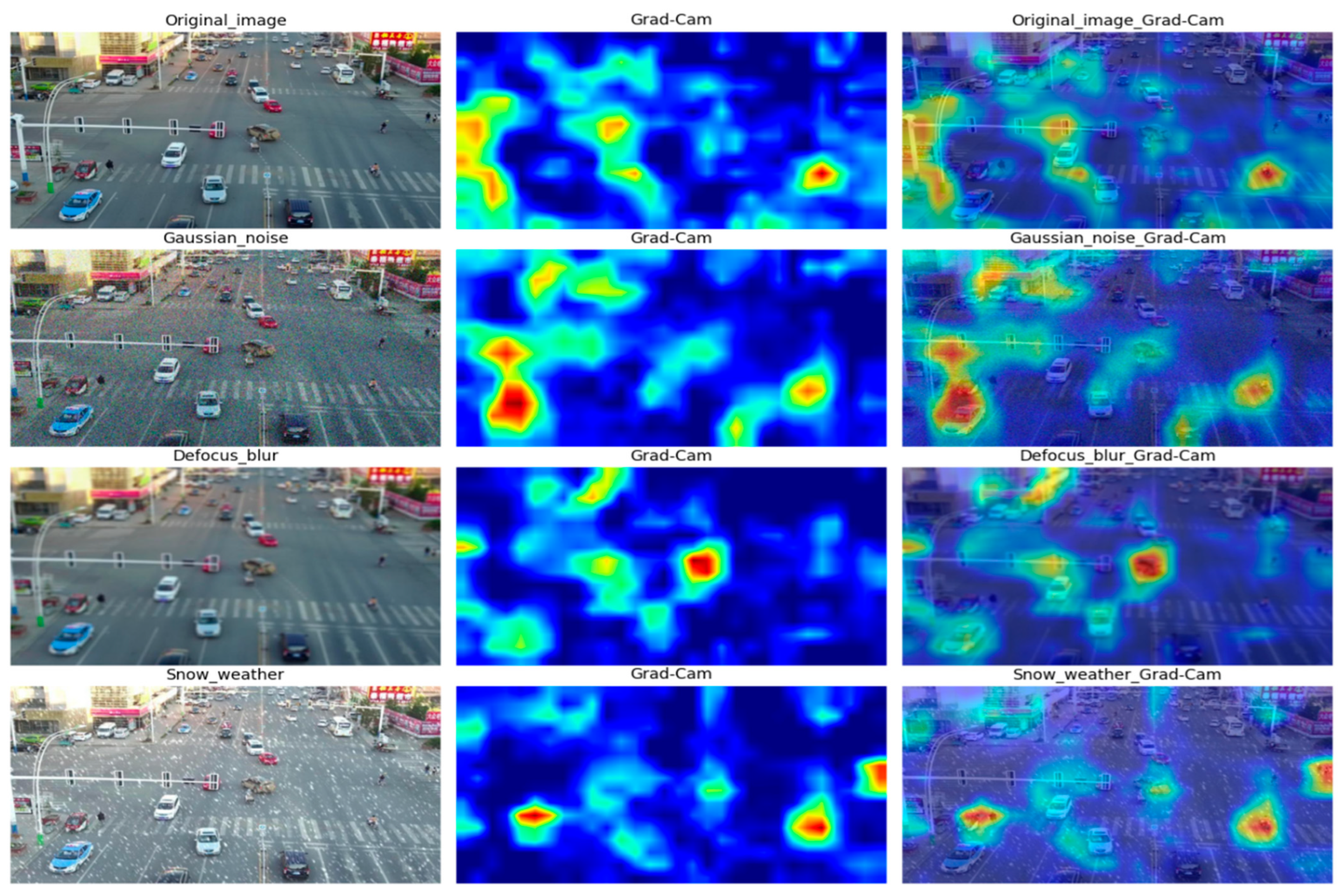

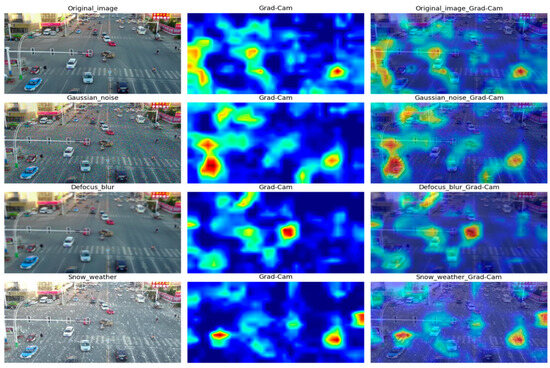

To show the differences in feature distributions between VisDrone-13 and the original dataset more clearly, we employed Grad-CAM [27] for visualization. As depicted in Figure 4, the newly constructed dataset effectively perturbs the feature distributions of the original dataset while remaining visually similar to it for a human observer.

Figure 4.

Comparison of feature distributions between VisDrone-13 data and the original data.

5. Methods

5.1. Adversarial Patch Transformation

5.1.1. Perturbation of Light and Shadow

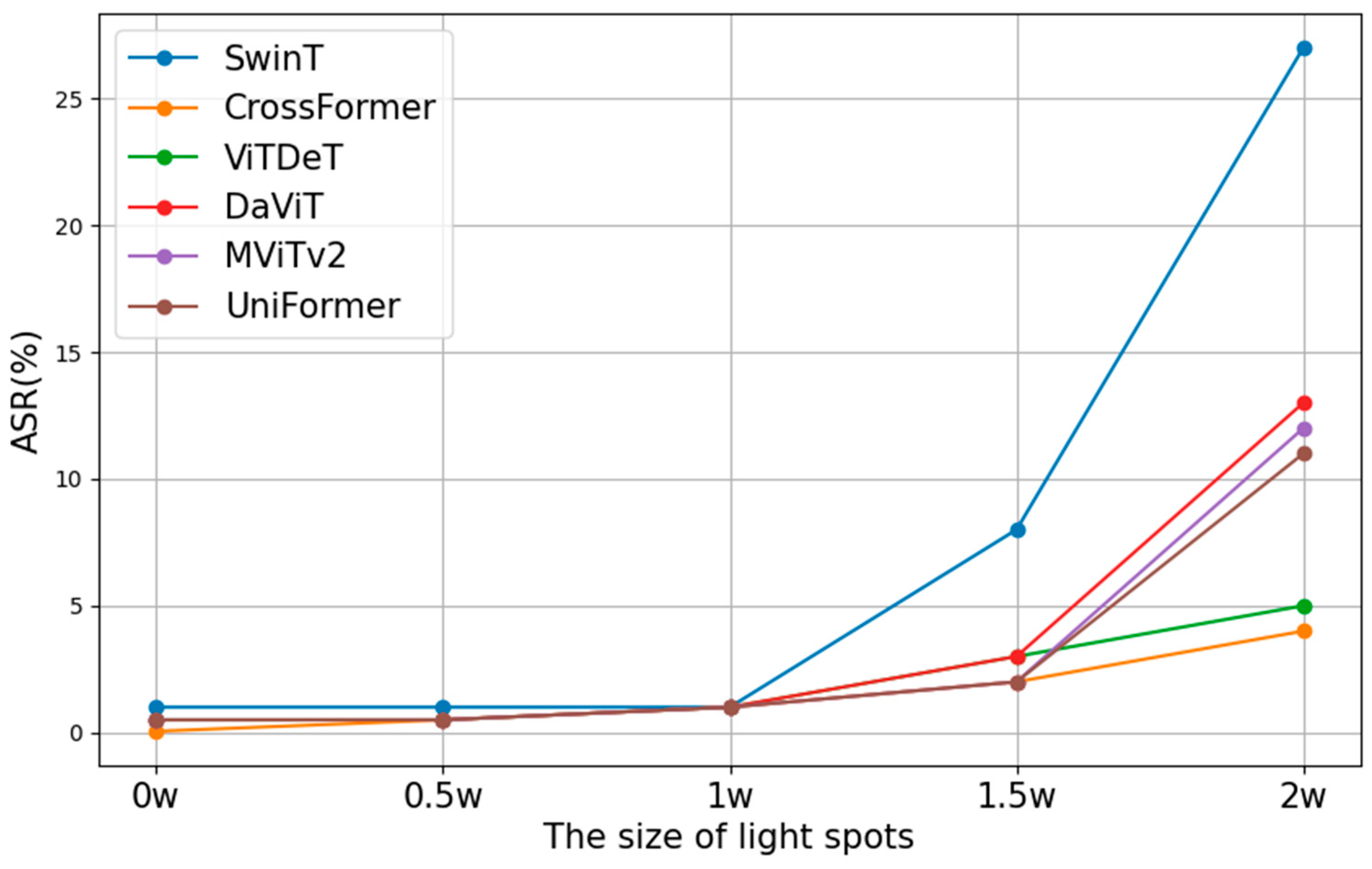

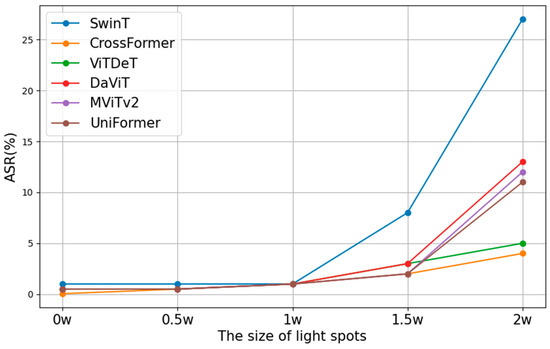

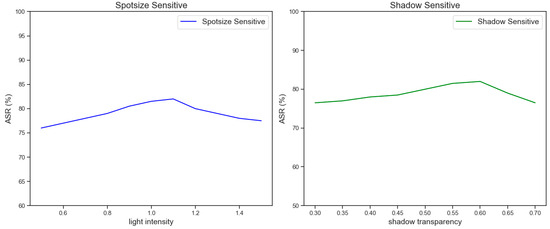

Zhong et al. [7] and Li et al. [28] have demonstrated that introducing light and shadows with specific intensities in image classification tasks can lead classifiers to output incorrect results. Zhang et al. [29] conducted experimental analyses on toy examples and observed the impact of directly altering the size of reflections on the attack success rates (ASRs) of various computer vision algorithms, as illustrated in Figure 5. This experiment confirmed that small-sized reflections have a negligible impact on detectors. However, when the size of the reflection surpasses a certain threshold, the ASRs increase significantly. Yet, at this point, the light intensity becomes discernible to the human eye, which contradicts the principle that attacks should be as inconspicuous as possible.

Figure 5.

Effect of light spot size on ASRs [30].

Drawing inspiration from [31], we posit that light spots or shadows of appropriate dimensions can render training data with patches closer to the classifier’s decision boundary while maintaining a low level of visibility. Consequently, we integrated the effects of light and shadow into the patch optimization process from the outset to develop adversarial patches with enhanced robustness. The primary manifestations are light spots resulting from reflections and shadows cast by obstructions. While digital adversarial attacks typically induce global perturbations to target objects, our approach utilizes light spots from simulated reflections and shadows from obstructions to alter the local features of targets, subsequently iterating to approach the detector’s classification boundary.

Reflections: light spots. Light spots are added to simulate the surface reflections commonly seen in real life. This is particularly pertinent when UAVs capture imagery from aerial perspectives, where they may encounter reflections from the patch’s printing material or from the smooth surfaces of targets. Following [30], we let the size of the light spots vary randomly between 0.5w and 1.5w, where w denotes the width of the target bounding box identified by the detector. Algorithm 1 simulates natural light spots more realistically: each spot has a relatively small radius, is colored pure white, and is softened with a Gaussian blur so that its intensity diminishes toward the periphery. The center of the light spot is positioned randomly within the detection box.

Algorithm 1. Generation of Light Spots on Patch

Input: max_radius, the maximum radius of the light spots; P, the patch. Output: combined_image, the image with the light spot.
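The steps of Algorithm 1 can be sketched in NumPy as follows. This is an illustrative reconstruction, not the authors' implementation: it assumes the patch is an H×W×3 float array in [0, 1], draws the radius uniformly from [1, max_radius], places the center anywhere in the array passed in (the patch, or a crop of the detection box), and uses a blur width of radius/2; these choices are assumptions.

```python
import numpy as np

def gaussian_kernel_1d(sigma):
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def gaussian_blur_2d(mask, sigma):
    """Separable Gaussian blur of a 2D array (rows, then columns)."""
    k = gaussian_kernel_1d(sigma)
    if len(k) > min(mask.shape):
        raise ValueError("kernel larger than the image; reduce sigma")
    out = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, mask)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, out)

def add_light_spot(patch, max_radius, rng=None):
    """Overlay one soft white light spot on an HxWx3 patch with values in [0, 1]."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = patch.shape[:2]
    radius = rng.uniform(1.0, max_radius)
    cy, cx = rng.uniform(0, h), rng.uniform(0, w)   # random center inside the array
    yy, xx = np.mgrid[0:h, 0:w]
    disk = (((yy - cy) ** 2 + (xx - cx) ** 2) <= radius**2).astype(np.float64)
    # Blurring the hard disk gives an intensity that fades toward the edge.
    alpha = np.clip(gaussian_blur_2d(disk, sigma=radius / 2), 0.0, 1.0)[..., None]
    combined_image = patch * (1.0 - alpha) + 1.0 * alpha   # blend toward pure white
    return combined_image
```

Because the blend target is pure white in all three channels, the spot brightens the patch without shifting its hue.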

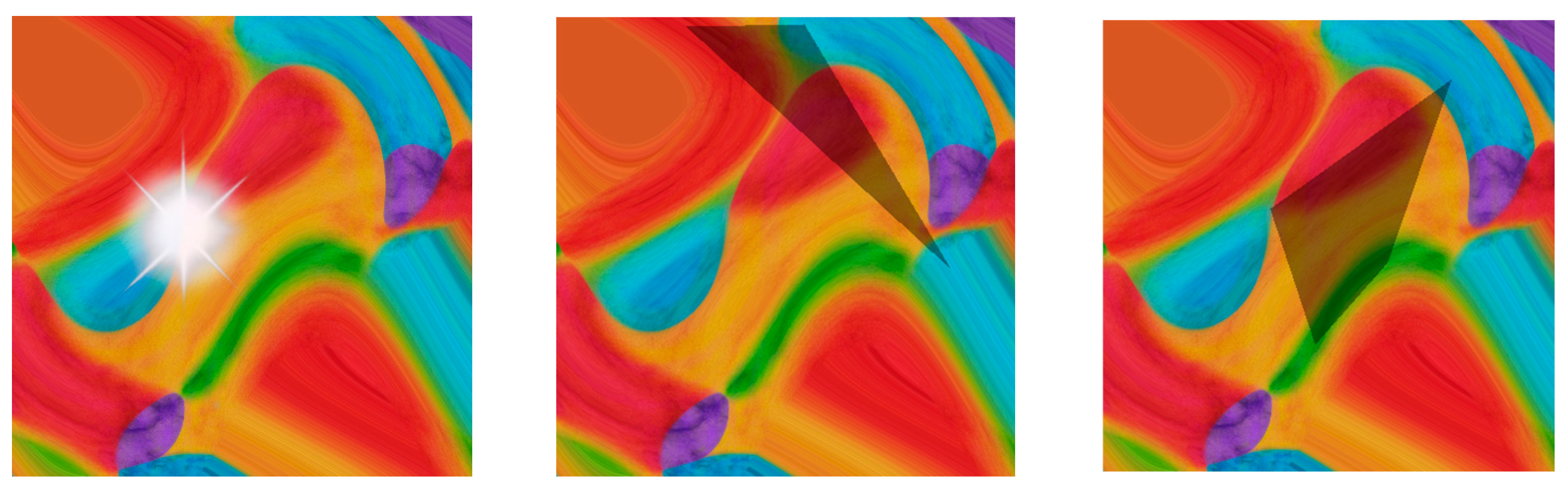

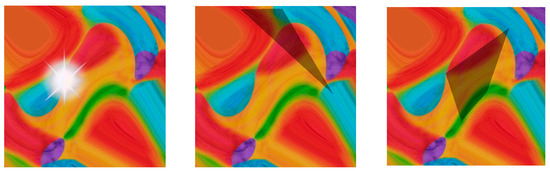

Shelter shadows. Shadows primarily arise from obstruction by external objects. For instance, when UAVs capture images of targets, they often include the shadows of surrounding trees or buildings. Unlike light spots, which tend to be more uniform, shadows frequently manifest in irregular shapes. To simulate this, we employ randomly selected triangles or quadrilaterals, each with a 50% probability of being chosen, to represent shadows. The vertices of these polygons, which define the shadow area, are randomly positioned within the object detection box. To ensure the simulated shadows are neither excessively large nor too small, the side lengths of the polygons are set to range between 25% and 75% of the patch side lengths. These polygons are filled with semi-transparent black to emulate the darkness characteristic of shadows. Figure 6 shows the effect of the patches after adding the light spots and shadows.

Figure 6.

The effect of superimposing light spots and shadows on the patch.
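The occlusion shadows described above can be sketched as a random triangle or quadrilateral (each with probability 0.5) filled with semi-transparent black. As a simplification, this hypothetical sketch bounds the polygon's extent rather than each side length: vertices are placed at sorted random angles around a random center, at distances of 25% to 75% of half the patch side. The rasterizer uses the even-odd rule; the function names and the opacity value are assumptions.

```python
import numpy as np

def polygon_mask(h, w, vertices):
    """Boolean mask of pixel centers inside a polygon (even-odd rule)."""
    yy, xx = np.mgrid[0:h, 0:w]
    px, py = xx + 0.5, yy + 0.5
    inside = np.zeros((h, w), dtype=bool)
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        crosses = (y1 > py) != (y2 > py)   # edge spans the horizontal ray at height py
        with np.errstate(divide="ignore", invalid="ignore"):
            x_int = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (px < x_int)
    return inside

def add_shadow(patch, opacity=0.5, rng=None):
    """Darken a random triangle or quadrilateral of an HxWx3 patch."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = patch.shape[:2]
    n_vertices = 3 if rng.random() < 0.5 else 4
    side = min(h, w)
    cy, cx = rng.uniform(0, h), rng.uniform(0, w)
    angles = np.sort(rng.uniform(0, 2 * np.pi, n_vertices))   # sorted angles give a simple polygon
    dists = rng.uniform(0.25, 0.75, n_vertices) * side / 2
    verts = [(cx + d * np.cos(a), cy + d * np.sin(a)) for a, d in zip(angles, dists)]
    mask = polygon_mask(h, w, verts)[..., None]
    # Semi-transparent black: blend toward 0 with the given opacity.
    return np.where(mask, patch * (1.0 - opacity), patch)
```

The same functions apply unchanged to a crop of the detection box if the shadow should be placed relative to the target rather than the patch.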

5.1.2. Nested Optimization

The distance between the UAV and the target, both horizontal and vertical, changes constantly during flight. Consequently, the relative position and size of the patch in the captured images also change continually, and keeping the same adversarial patch effective across varying shooting distances is a central challenge. Zhang et al. [22] introduced a scaling factor that adjusts the patch size according to the UAV’s flight altitude, enabling adversarial attacks at different heights. However, this approach requires transforming the patch continuously as the distance changes, which is complex to implement in practice. Liu et al. [30] proposed a nested generation algorithm that overlays uniformly distributed small patches on the original patch, improving its robustness to changes in height. Building on [22,30], we propose a nested optimization strategy for adversarial patches based on three basic principles.

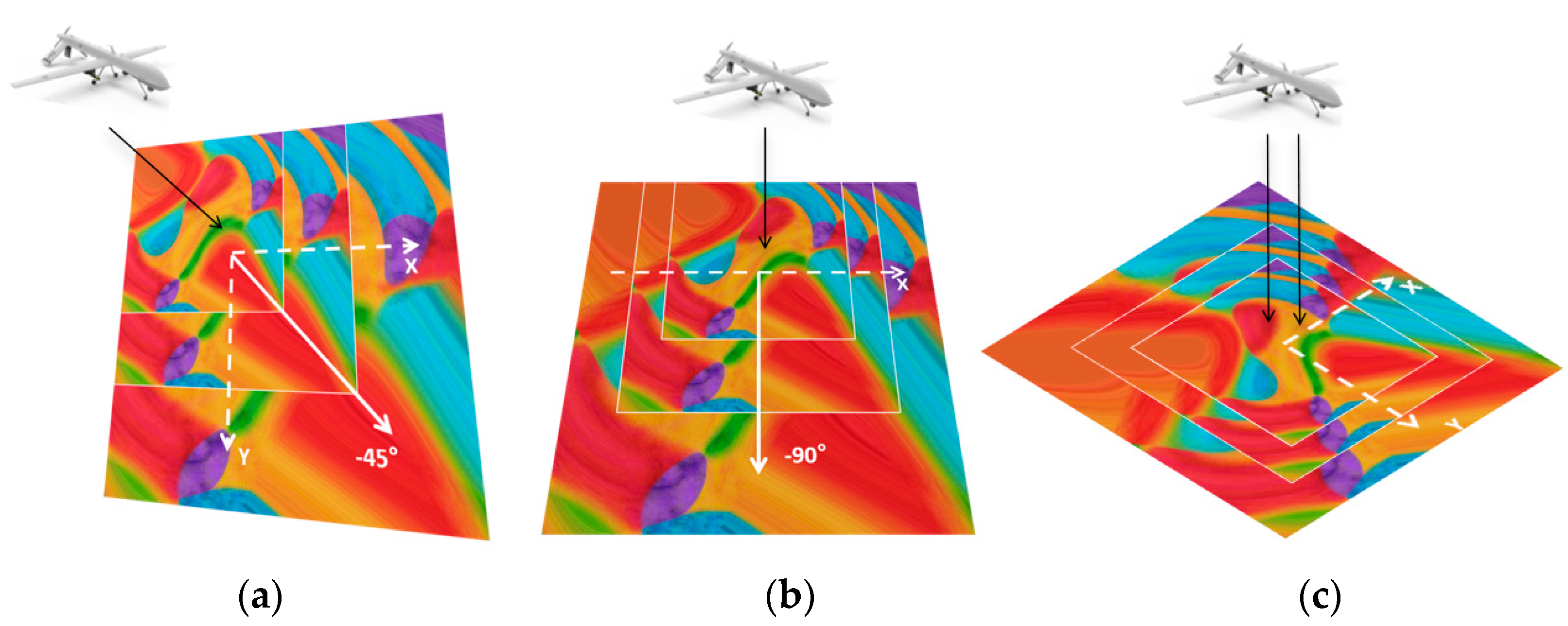

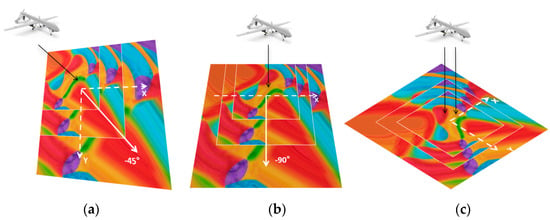

(a) Nested patches are new patches formed by overlaying patches of different sizes in a specific arrangement, and their layout must account for perspective: the line connecting the centers of the nested patches should be parallel to the UAV’s flight direction, as illustrated in Figure 7.

Figure 7.

The relationship between the direction of patch superposition and that of UAV flight. (a) Nesting direction when the UAV flies in from the northwest; (b) nesting direction when the UAV flies in from the north; (c) nesting direction when the UAV is directly overhead.

(b) When height is the only factor considered, the patches should be nested concentrically, as illustrated in Figure 7c. The dimensions of the sub-patches can be calculated from the range of UAV flight heights. The scaling factor $s$ adjusts the size of each sub-patch relative to the flight height and is given by

$$ s = \frac{h}{h_0} $$

where $h_0$ is the reference flight height at which the original patch is effective, and $h$ is the current flight height. Because the apparent size of an object in the image is inversely proportional to the camera’s distance from it, a sub-patch scaled by $s$ and viewed from height $h$ occupies roughly the same image area as the original patch viewed from $h_0$.

(c) To enable the most accurate interpolation of the graphics when the patches are nested and reduce the influence of the patch edges on the underlying image, bicubic interpolation should be adopted during nesting.
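Principles (b) and (c) can be illustrated together with a small NumPy sketch: sub-patch sides follow $s = h/h_0$ for heights $h \le h_0$, and each sub-patch is a bicubic-downscaled copy of the square patch pasted concentrically, largest first. Using downscaled copies of the current patch, and the Keys cubic kernel with a = -0.5 as the bicubic filter, are our assumptions; in the method described above, each nested patch is further optimized after nesting.

```python
import numpy as np

def cubic_weight(t, a=-0.5):
    """Keys cubic convolution kernel, the usual bicubic interpolation filter."""
    t = np.abs(t)
    near = (a + 2) * t**3 - (a + 3) * t**2 + 1
    far = a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return np.where(t <= 1, near, np.where(t < 2, far, 0.0))

def resize_axis(img, new_len, axis):
    old_len = img.shape[axis]
    # Map output pixel centers back to input coordinates.
    x = (np.arange(new_len) + 0.5) * old_len / new_len - 0.5
    base = np.floor(x).astype(int)
    shape = [1] * img.ndim
    shape[axis] = new_len
    out = np.zeros(img.shape[:axis] + (new_len,) + img.shape[axis + 1:])
    for k in range(-1, 3):
        idx = np.clip(base + k, 0, old_len - 1)   # replicate edge pixels
        w = cubic_weight(x - (base + k)).reshape(shape)
        out += np.take(img, idx, axis=axis) * w
    return out

def bicubic_resize(img, new_h, new_w):
    return resize_axis(resize_axis(img, new_h, axis=0), new_w, axis=1)

def subpatch_sides(patch_side, h_ref, heights):
    """Side length of the sub-patch for each flight height h <= h_ref, using s = h / h_ref."""
    sides = []
    for h in heights:
        if not 0 < h <= h_ref:
            raise ValueError("heights must lie in (0, h_ref]")
        sides.append(max(1, round(patch_side * h / h_ref)))
    return sides

def nest_centered(patch, heights, h_ref):
    """Overlay bicubic-downscaled copies of a square patch concentrically, largest first."""
    out = patch.copy()
    size = patch.shape[0]
    for side in sorted(subpatch_sides(size, h_ref, heights), reverse=True):
        sub = np.clip(bicubic_resize(patch, side, side), 0.0, 1.0)   # bicubic can overshoot
        top = (size - side) // 2
        out[top:top + side, top:top + side] = sub
    return out
```

For directional nesting (Figure 7a, b), the offsets would instead shift each sub-patch center along the flight direction; that variant is not sketched here.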

Each patch of a specific size is designed to function within a particular height range. Our proposed method does not merely involve overlaying the original patches; instead, it entails a continuous optimization of these patches throughout the training process. Following each nesting iteration, the patches are subjected to optimization within the network to ensure that each resultant patch retains the capacity for adversarial perturbation.

5.1.3. Printability and Scene Matching

Unlike digital attacks, adversarial patches must be printed on physical media to take effect. Consequently, mitigating post-printing degradation is a critical consideration. Differences between materials, printers, and inks can produce different printed outputs even from the same digital image. Furthermore, printing devices typically operate in the CMYK (cyan, magenta, yellow, black) color mode, whereas digital images are predominantly in RGB (red, green, blue). These inherent differences are difficult to eliminate, so algorithmic adjustments are needed to minimize post-printing losses. Given the variability of such losses, we add low-amplitude random Gaussian matrices to our digital images to simulate the digital-to-physical transformation, thereby enhancing the robustness of the printed adversarial patches:

$$ \tilde{P} = \alpha P + \mathbf{n}, \qquad \mathbf{n} \sim \mathcal{N}(0, \sigma^2) $$

where $\alpha$ randomly perturbs the brightness and contrast of the image, while $\mathbf{n}$ randomly changes its color values.
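A minimal sketch of the print simulation $\tilde{P} = \alpha P + \mathbf{n}$, assuming a patch in [0, 1], a single global factor $\alpha \sim \mathcal{N}(1, 0.05^2)$, and per-pixel, per-channel noise with standard deviation 0.02. These distributions and values are illustrative assumptions, not the paper's settings.

```python
import numpy as np

def simulate_print(patch, alpha_std=0.05, noise_std=0.02, rng=None):
    """Apply P~ = alpha * P + n with random alpha and low-amplitude Gaussian n, then clip."""
    rng = np.random.default_rng() if rng is None else rng
    alpha = rng.normal(1.0, alpha_std)                     # global brightness/contrast scale
    noise = rng.normal(0.0, noise_std, size=patch.shape)   # per-pixel, per-channel color jitter
    return np.clip(alpha * patch + noise, 0.0, 1.0)
```

Drawing fresh $\alpha$ and $\mathbf{n}$ at every training iteration exposes the optimizer to a distribution of print outcomes rather than one fixed distortion.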

Because images captured by UAVs are strongly influenced by ambient light, the patches must be adjusted accordingly. These adjustments simulate variations in shooting angle, optical distortion, and ambient brightness; the specific transformations are listed in Table 3.

Table 3.

The methods of specific transformation.

5.1.4. Patch Transformations

UAVs capture imagery from a top-down perspective, necessitating the placement of adversarial patches on the target, typically on its top surface. To avoid obscuring the target, the patches are designed to be smaller than the target bounding box. Consequently, we utilized the expectation over transformation (EOT) framework to fine-tune the constructed patches through scaling and rotation. Scaling ensures that the target bounding box fully encompasses the patch, while rotation simulates the angular variations inherent in UAV photography. Algorithm 2 shows the complete process of patch transformations.

Algorithm 2. Patch Transformations
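The scaling and rotation steps of the patch transformation can be sketched as a single EOT sample. This hypothetical sketch assumes boxes given as (x1, y1, x2, y2) pixel coordinates, a patch side of 20% to 40% of the shorter box side (so the box fully encompasses the patch), a rotation within ±20°, nearest-neighbor resampling, and placement at the box center; all of these ranges and names are assumptions.

```python
import numpy as np

def resize_nearest(img, new_h, new_w):
    rows = np.arange(new_h) * img.shape[0] // new_h
    cols = np.arange(new_w) * img.shape[1] // new_w
    return img[rows][:, cols]

def rotate_nearest(img, angle_deg):
    """Rotate about the center by inverse mapping; returns the image and a validity mask."""
    h, w = img.shape[:2]
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    c, s = np.cos(theta), np.sin(theta)
    # For each output pixel, find the source pixel it comes from.
    src_x = c * (xx - cx) + s * (yy - cy) + cx
    src_y = -s * (xx - cx) + c * (yy - cy) + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(img)
    out[valid] = img[sy[valid], sx[valid]]
    return out, valid

def apply_patch(image, patch, box, rng, scale_range=(0.2, 0.4), max_angle=20.0):
    """Paste a randomly scaled and rotated patch at the center of a bounding box."""
    x1, y1, x2, y2 = box
    bw, bh = x2 - x1, y2 - y1
    side = max(1, int(rng.uniform(*scale_range) * min(bw, bh)))
    resized = resize_nearest(patch, side, side)
    rotated, valid = rotate_nearest(resized, rng.uniform(-max_angle, max_angle))
    top, left = y1 + (bh - side) // 2, x1 + (bw - side) // 2
    out = image.copy()
    region = out[top:top + side, left:left + side]   # a view into `out`
    region[valid] = rotated[valid]                   # corners left empty by rotation keep the image
    return out
```

In a differentiable training pipeline these operations would be written with the framework's tensor ops so gradients reach the patch; the NumPy version only shows the geometry.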

5.2. Loss Function

The purpose of generating adversarial patches is to raise the success rate of adversarial attacks while ensuring that the patches remain effective and robust when transferred from digital to physical media. To this end, we formulate three loss terms and combine them into a total loss function.

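(a) Printing Loss: Printers use the CMYK color mode, in which each primary color’s level ranges from 0 to 100. In contrast, digital images use the RGB mode, in which each primary color’s level ranges from 0 to 255. The total numbers of level combinations the two modes can represent are, respectively:

$$ N_{\mathrm{RGB}} = 256^3 = 16{,}777{,}216, \qquad N_{\mathrm{CMYK}} = 101^4 = 104{,}060{,}401 $$

Although CMYK admits more nominal combinations, many of them are redundant (the black channel can substitute for mixtures of cyan, magenta, and yellow), and the gamut a printer can physically reproduce is generally narrower than the RGB gamut of a display. Consequently, digital images generated by algorithms cannot retain all their color characteristics after printing. To address this, we define the first loss function to quantify the discrepancy between the printed patch and the original digital image:

$$ L_{\text{print}} = \sum_{p_{\text{patch}} \in P} \min_{c_{\text{print}} \in C} \left\lVert p_{\text{patch}} - c_{\text{print}} \right\rVert $$

where $c_{\text{print}}$ is an element of the set $C$ of printable colors, and $p_{\text{patch}}$ is a pixel of the original digital patch $P$.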
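The printing loss can be computed directly from its definition. This sketch assumes the patch is an H×W×3 array and the printable colors are given as a K×3 array in the same color space (for example, colors measured from a printed palette), with the Euclidean norm as the distance; the palette and the function name are assumptions.

```python
import numpy as np

def printability_loss(patch, printable_colors):
    """Sum over pixels of the distance to the nearest printable color."""
    patch = np.asarray(patch, dtype=np.float64)               # (H, W, 3)
    colors = np.asarray(printable_colors, dtype=np.float64)   # (K, 3)
    diff = patch[:, :, None, :] - colors[None, None, :, :]    # (H, W, K, 3)
    dist = np.linalg.norm(diff, axis=-1)                      # (H, W, K)
    return float(dist.min(axis=-1).sum())
```

A pixel that already matches a printable color contributes zero, so the loss only penalizes colors the printer cannot reproduce.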

(b) Variation Loss: As patches are ultimately captured by cameras to produce collected images, and considering that cameras often struggle to capture subtle changes between adjacent pixels, we incorporated the variation loss concept from [30,32] to smooth the noise within the patches. This approach further minimizes distortion when the patches are digitized.

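$$L_{\text{tv}} = \sum_{i,j} \sqrt{\left(p_{i,j} - p_{i+1,j}\right)^{2} + \left(p_{i,j} - p_{i,j+1}\right)^{2}}$$

where $p_{i,j}$ is the pixel value at coordinate $(i, j)$ of the adversarial patch.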
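A minimal pure-Python sketch of a total variation penalty on a single-channel patch, in the form used by Thys et al. [18]: the sum over pixels of the root of the squared vertical and horizontal neighbour differences. The function name and the list-of-rows representation are assumptions; for an RGB patch the value would be summed over channels.

```python
import math


def total_variation(patch):
    """Total variation of a single-channel patch given as a list of rows.

    For every pixel that has both a lower and a right neighbour, add the root of the
    squared vertical and horizontal differences.
    """
    n_rows, n_cols = len(patch), len(patch[0])
    tv = 0.0
    for i in range(n_rows - 1):
        for j in range(n_cols - 1):
            dv = patch[i][j] - patch[i + 1][j]  # vertical neighbour difference
            dh = patch[i][j] - patch[i][j + 1]  # horizontal neighbour difference
            tv += math.sqrt(dv * dv + dh * dh)
    return tv
```

In an autograd framework, a small constant is usually added inside the square root so the gradient stays finite where neighbouring pixels are equal.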

(c) Detection Loss: Adversarial patches achieve their attack objective by lowering the confidence score of the target bounding box below the confidence threshold applied in the model's non-maximum suppression (NMS) step, so that the box is discarded. The confidence score of the bounding box is therefore a key component of the loss function.

The detection loss is built from two scores that the detector outputs for the adversarial image: the classification confidence score and the objectness score associated with the correct classification result.
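How the two scores are combined is not fixed above. A common choice in patch attacks on YOLO-style detectors (e.g., Thys et al. [18]) is to minimize the largest product of objectness and class confidence over all candidate boxes; the sketch below assumes that form and a hypothetical input format of `(objectness, class_probabilities)` pairs taken before NMS.

```python
def detection_loss(predictions, target_class):
    """Highest objectness x class-confidence score for `target_class` over all boxes.

    `predictions` is a hypothetical list of (objectness, class_probabilities) pairs,
    one per candidate box. Minimising the maximum pushes every candidate box for the
    target class below the confidence threshold.
    """
    return max(obj * probs[target_class] for obj, probs in predictions)
```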

(d) Total loss function: Finally, we used the combined loss function below to optimize the generation process of adversarial patches:

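$$L_{\text{total}} = \lambda_{1} L_{\text{print}} + \lambda_{2} L_{\text{tv}} + \lambda_{3} L_{\text{det}}$$

where $L_{\text{print}}$, $L_{\text{tv}}$, and $L_{\text{det}}$ are the printing, variation, and detection losses defined above, and $\lambda_{1}$, $\lambda_{2}$, and $\lambda_{3}$ are their respective weights.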

5.3. Generation of Adversarial Patch

We initialized the patch as a random 64 × 64 tensor, matching the patch size used in our experiments. The aim of training is to produce a patch that, when placed on the surface of an object, enables the object to evade detection by the UAV. A single trained patch applies to the entire dataset. During training, the current patch is placed on top of each corresponding object to form a fused image, which is passed through the detection network; the loss is backpropagated to the patch, which is updated with the Adam optimizer until it meets the requirements. The update of the patch can be expressed as follows:

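$$P_{t+1} = \mathrm{Clip}_{[a,\,b]}\left(P_{t} - \eta \, \nabla_{P_{t}} L\right)$$

where $P_{t}$ is the patch tensor at iteration $t$, that is, the current adversarial patch; $\eta$ is the learning rate, which governs the step size of each update; $\nabla_{P_{t}} L$ is the gradient of the loss function with respect to the patch tensor, indicating the sensitivity of the loss to the patch; and $\mathrm{Clip}_{[a,b]}(\cdot)$ confines each element to the interval $[a, b]$, where $a$ and $b$ are the lower and upper limits of the patch pixel values. Typically, pixel values are normalized to $[0, 1]$, so $a = 0$ and $b = 1$. With Adam, the plain gradient step is replaced by Adam's adaptive update, while the clipping is unchanged.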
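The clipped update can be sketched element-wise as follows: a plain gradient step followed by projection onto [0, 1]. Representing the patch and its gradient as lists of rows is an assumption made to keep the example dependency-free.

```python
def clip(value, low=0.0, high=1.0):
    """Confine a pixel value to [low, high]."""
    return max(low, min(high, value))


def update_patch(patch, grad, lr, low=0.0, high=1.0):
    """One projected gradient step, element-wise: P <- Clip_[low, high](P - lr * grad)."""
    return [
        [clip(p - lr * g, low, high) for p, g in zip(p_row, g_row)]
        for p_row, g_row in zip(patch, grad)
    ]
```

In PyTorch, the same projection is commonly applied after each `optimizer.step()` by calling `patch.clamp_(0, 1)` inside a `torch.no_grad()` block.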

6. Experiments

In this section, we verify the attack effect of the generated patches through comprehensive experiments.

6.1. Setup of the Experiments

Dataset: Following the proportions of the different interference types listed in Table 2, we randomly allocated images to build VisDrone-13, the dataset used in the final experiments. It contains over 10,000 images, split into training, validation, and test sets at a ratio of 4:1:1. Figure 8 shows the composition of the training set by data type.
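A 4:1:1 random split can be sketched as below. The fixed seed and the 12,000-item example in the usage line are hypothetical; the text states only that the dataset contains over 10,000 images.

```python
import random


def split_dataset(items, ratios=(4, 1, 1), seed=0):
    """Shuffle `items` with a fixed seed and split them into train/val/test by `ratios`."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    n_train = len(shuffled) * ratios[0] // total
    n_val = len(shuffled) * ratios[1] // total
    # Any remainder from integer division goes to the test set.
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


train, val, test = split_dataset(range(12000))  # hypothetical dataset size
print(len(train), len(val), len(test))  # 8000 2000 2000
```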

Figure 8.

Composition scale map of data in VisDrone-13.

Training Network: We trained the adversarial patches on VisDrone-13, constructed as described in Section 3, using PyTorch v2.1.0. To improve how well the transformed patches fit the detected targets, we set the size of the generated patches to 64 × 64 pixels and uniformly resized all input images to 640 × 640 pixels; images with any side shorter than 640 pixels were padded with gray. The goal was to hide the detected targets from the detector by applying adversarial patches.
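The resizing step resembles a letterbox transform: scale the image to fit 640 × 640 while preserving its aspect ratio, then pad the remainder with gray. The sketch below computes only the scale and padding. The gray value (114, 114, 114) is borrowed from YOLOv5's letterbox convention and is an assumption here, as is upscaling images smaller than 640 pixels.

```python
GRAY = (114, 114, 114)  # padding colour; assumed, following YOLOv5's letterbox default


def letterbox_params(width, height, target=640):
    """Return the scale, the resized (w, h), and (left, top, right, bottom) padding
    that fit an image into a target x target square without changing its aspect ratio."""
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w, pad_h = target - new_w, target - new_h
    left, top = pad_w // 2, pad_h // 2
    return scale, (new_w, new_h), (left, top, pad_w - left, pad_h - top)


print(letterbox_params(1920, 1080)[1:])  # ((640, 360), (0, 140, 0, 140))
```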

We employed YOLOv5-Small and YOLOv5-Large to simulate the detection capabilities of small and large UAVs, respectively. YOLOv5 was selected as the baseline detector because of its widespread adoption in UAV applications [26,33], its balance between accuracy and efficiency, and its modular architecture, which facilitates adversarial robustness analysis. Compared with two-stage detectors such as Faster R-CNN, YOLOv5’s single-stage design reduces computational overhead, making it suitable for real-time UAV tasks. In addition, its Small and Large variants allow us to evaluate transferability across models of different capacities. Recent studies (e.g., Deng et al. [17]) have also benchmarked YOLOv5’s advantages in UAV-based detection.

Both models were initialized from weights pretrained on the COCO benchmark [33,34] and trained with the stochastic gradient descent (SGD) optimizer. Mean average precision (MAP) was the primary evaluation metric. Additional training parameters are listed in Table 4. The learning rate was increased linearly from 0.001 to 0.1 over the first three training epochs and then decreased linearly after each epoch. For patch optimization, we set the initial learning rate to 0.03 and used AMSGrad, a variant of the Adam optimizer with improved convergence properties, with the default betas of (0.9, 0.999) and a learning rate scheduler. Training was halted if the loss had not improved by epoch 50.
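The warm-up and stopping rules can be sketched as two small functions. The warm-up values (0.001 to 0.1 over three epochs) come from the text; the decay target `final_lr`, the horizon `total_epochs`, and reading the stopping rule as a 50-epoch patience window are assumptions.

```python
def learning_rate(epoch, warmup_epochs=3, start_lr=0.001, peak_lr=0.1,
                  total_epochs=100, final_lr=0.001):
    """Linear warm-up from start_lr to peak_lr, then linear decay towards final_lr.

    total_epochs and final_lr are hypothetical; only the warm-up is given in the text.
    """
    if epoch < warmup_epochs:
        return start_lr + (peak_lr - start_lr) * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return peak_lr + (final_lr - peak_lr) * min(1.0, progress)


def should_stop(loss_history, patience=50):
    """True once the best (lowest) loss so far is at least `patience` epochs old."""
    best_epoch = loss_history.index(min(loss_history))
    return len(loss_history) - 1 - best_epoch >= patience
```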

Table 4.

Description of training-related parameters.

Patch Transformation: As detailed in Section 5, we implemented a series of transformations to bolster the robustness of adversarial patches in complex environments. These transformations included the addition of light and shadow effects and the application of nesting techniques during the optimization process. We also adjusted parameters related to color noise and scene brightness, establishing empirical value ranges based on extensive experimental validation. Subsequently, affine transformations were applied to further refine the patches. A selection of the parameters utilized in these experiments is presented in Table 5.
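A minimal sketch of the light and shadow effects, assuming a single-channel patch in [0, 1] and a hypothetical boolean shadow mask. The sampling ranges [0.5, 1.5] for light intensity and [0.3, 0.7] for shadow transparency are the ones reported in the sensitivity analysis (Section 6.2.3). "Transparency" is modelled here as the shadow's blending weight, so a larger value darkens covered pixels more, which matches the reported loss of patch visibility at high values; this interpretation, the multiplicative light model, and the omission of color noise and affine steps are all assumptions.

```python
import random


def apply_light(patch, intensity):
    """Scale brightness by `intensity` (0.5 = darker, 1.5 = brighter), clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p * intensity)) for p in row] for row in patch]


def apply_shadow(patch, mask, alpha):
    """Darken pixels where `mask` is True; `alpha` is the (assumed) shadow blending weight."""
    return [
        [p * (1.0 - alpha) if in_shadow else p for p, in_shadow in zip(row, mask_row)]
        for row, mask_row in zip(patch, mask)
    ]


def random_environment(patch, mask, rng=random):
    """Apply a random light level from [0.5, 1.5], then a shadow weight from [0.3, 0.7]."""
    intensity = rng.uniform(0.5, 1.5)
    alpha = rng.uniform(0.3, 0.7)
    return apply_shadow(apply_light(patch, intensity), mask, alpha)
```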

Table 5.

Description of patch transformation-related parameters.

Control Setting: To more effectively evaluate the impact of the proposed adversarial patches on the performance of the object detector, we set up two control experiments with the mean average precision (MAP) of the model under the influence of adversarial patches as the core indicator, aiming to evaluate two hypotheses:

- (a) The decline in recognition accuracy is caused by the adversarial patches (URAdv) rather than the general interference resulting from ordinary object occlusion.

- (b) The patches generated using YOLOv5-Small can be effectively transferred to YOLOv5-Large to achieve a similar adversarial effect. That is, URAdv has good transferability to gray-box attacks.

Because the detection targets vary widely in color, we simulated occlusion by ordinary objects while minimizing color interference: we used the ‘torch.rand’ function in PyTorch to generate random tensors of the same size as the adversarial patches and converted them into images, which we call covered patches. These patches simulate random, non-adversarial occlusions and provide a baseline for comparison.

Furthermore, based on the experimental settings in Section 5.1, we applied the adversarial patches generated by the YOLOv5-Small to the YOLOv5-Large to test the transferability of the generated patches among similar network structures. This step is crucial for verifying the universality and network structure adaptability of the adversarial patches and helps to understand the vulnerability of deep learning models when facing adversarial attacks and their transfer characteristics.

6.2. Results and Evaluation

6.2.1. Evaluation of Effectiveness

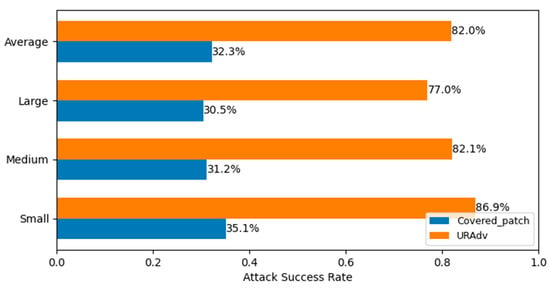

Two distinct patches, the random covered patch and the trained URAdv, were applied to the same image, and object detection was performed, as illustrated in Figure 9. The random covered patches reduced recognition accuracy only to a limited degree, whereas with URAdv the detection results deviated markedly from the ground truth. Figure 10 quantifies the difference.

Figure 9.

Comparison of the detection effects of two different patches applied to the image.

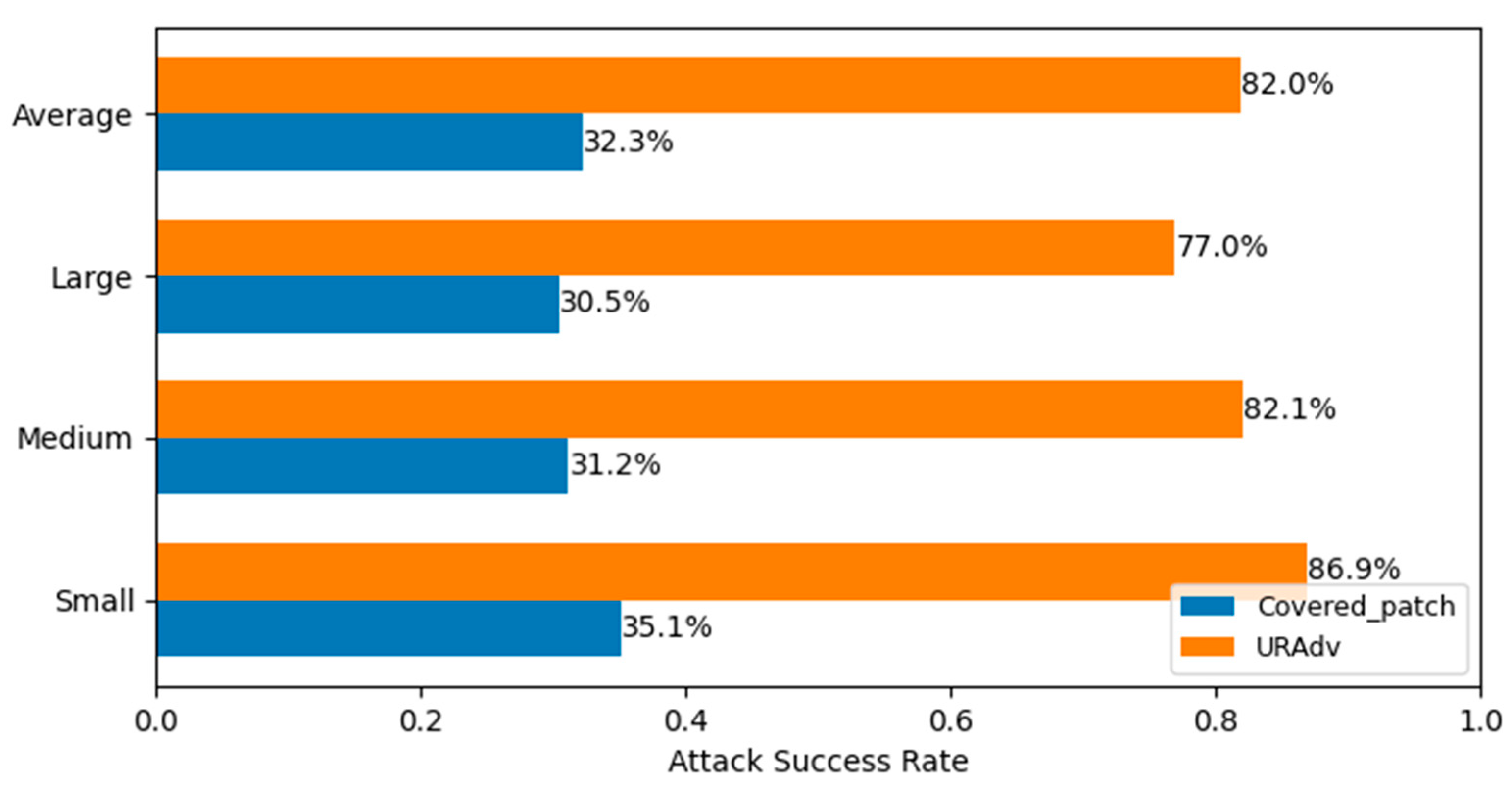

Figure 10.

Attack success rate on targets of different sizes.

We classified targets into three categories by detection box area: Small (≤ 32 × 32 pixels), Medium (between 32 × 32 and 96 × 96 pixels), and Large (≥ 96 × 96 pixels). Figure 10 shows the attack success rates when the two patches, Covered_Patch and URAdv, were applied to the images; both misclassification and missed detection of a target count as attack successes. As Figure 10 shows, the average attack success rate of Covered_Patch was only 32.3%, far below the 82% achieved by URAdv. For hypothesis (a), we therefore conclude that the reduction in object detection accuracy is caused not by random occlusion but by the adversarial effect of the URAdv patches.
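The size binning and success criterion can be sketched as follows. Boxes whose area falls exactly on a boundary are assigned to Small (≤ 32 × 32) or Large (≥ 96 × 96), an assumption needed because the stated ranges share their endpoints; the outcome labels `"missed"`, `"misclassified"`, and `"correct"` are hypothetical names for the detector's result on each target.

```python
def size_category(box_w, box_h):
    """Bin a detection box by area into Small / Medium / Large."""
    area = box_w * box_h
    if area <= 32 * 32:
        return "Small"
    if area < 96 * 96:
        return "Medium"
    return "Large"


def attack_success_rate(outcomes):
    """Fraction of targets that were missed or misclassified."""
    successes = sum(1 for o in outcomes if o in ("missed", "misclassified"))
    return successes / len(outcomes)


def asr_by_size(records):
    """records: iterable of (box_w, box_h, outcome) -> {category: ASR}."""
    groups = {}
    for w, h, outcome in records:
        groups.setdefault(size_category(w, h), []).append(outcome)
    return {cat: attack_success_rate(outs) for cat, outs in groups.items()}
```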

To verify the robustness of our results, we carried out five independent runs with different random seeds. This reduces the influence of random fluctuations and checks that the results are consistent across initial conditions. Standard deviations were added to all reported metrics; for example, the attack success rate (ASR) is reported as 82.0% ± 2.3%, the mean and standard deviation over the five runs, which makes the variability of our results transparent. For readability, Figures 10 and 11 show only the mean of the five runs.
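The mean ± standard deviation notation can be produced as below. The five per-run rates in the usage line are illustrative placeholders, not the measurements behind Figure 10, and the sample (n − 1) standard deviation is an assumption, since the text does not state which estimator was used.

```python
import statistics


def format_metric(rates):
    """Format per-run rates (fractions in [0, 1]) as 'mean% ± std%'.

    Uses the sample (n - 1) standard deviation; the estimator actually used is not stated.
    """
    mean = statistics.mean(rates)
    std = statistics.stdev(rates)
    return f"{mean * 100:.1f}% ± {std * 100:.1f}%"


# Illustrative placeholder values for five seeds, NOT measured results.
print(format_metric([0.80, 0.84, 0.82, 0.79, 0.85]))  # 82.0% ± 2.5%
```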

We analyzed the computational efficiency of URAdv in terms of training time, inference speed, and memory usage. Training URAdv for YOLOv5-Small on a single NVIDIA RTX 3090 GPU took approximately 200 h, reflecting the computational cost of optimizing for robustness. Inference speed decreased from 45 FPS to 42 FPS (a 6.7% drop), while FLOPs increased from 7.2 G to 7.3 G (+1.4%), a minimal increase in computational complexity. These results indicate that URAdv achieves robustness with little impact on inference cost, making it practical for real-world applications.
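The relative overheads quoted above follow from simple percentage-change arithmetic, sketched here with the FPS and FLOPs figures from the text.

```python
def relative_change(before, after):
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


fps_change = relative_change(45, 42)      # inference speed, FPS
flops_change = relative_change(7.2, 7.3)  # computational cost, GFLOPs
print(f"FPS: {fps_change:+.1f}%, FLOPs: {flops_change:+.1f}%")  # FPS: -6.7%, FLOPs: +1.4%
```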

Based on our analysis, we expect that training time can be reduced further, for instance, by freezing network layers whose weights need not be updated to shorten backpropagation, pruning and quantizing the detection model to reduce inference computation, adopting more efficient learning rate schedules such as CosineAnnealingLR, and using multiple GPUs for distributed training to speed up each experiment. We leave these experiments to future work.

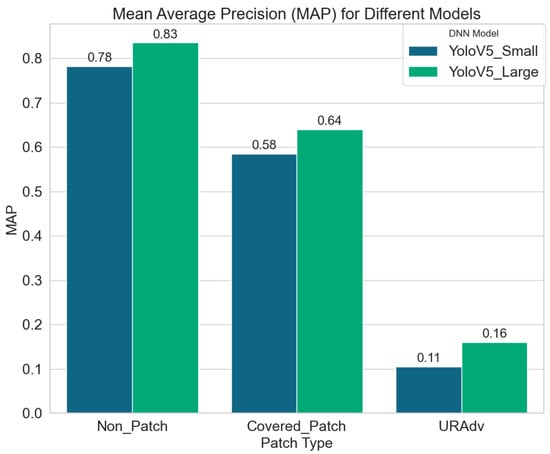

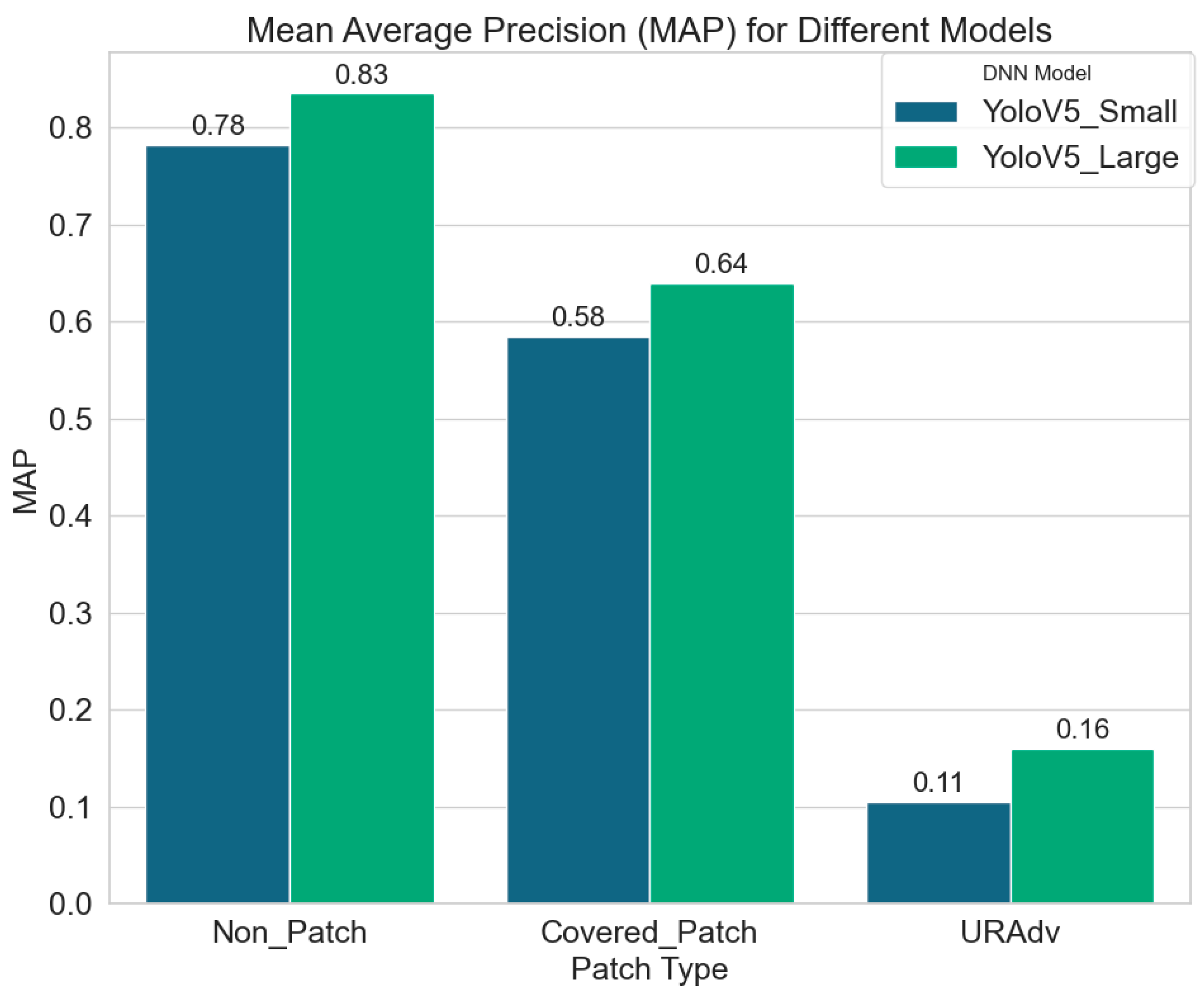

6.2.2. Evaluation of Transferability

Figure 11 shows the results obtained to verify hypothesis (b), indicating the change in MAP when the patches generated by YOLOv5-Small were transferred to YOLOv5-Large.

Non_Patch: Without patches, both models achieved relatively high recognition accuracy on the experimental targets, and YOLOv5-Large consistently outperformed YOLOv5-Small. This suggests that the model with more parameters has stronger detection capability, so UAV platforms able to run larger, efficient models may have a clear advantage in aerial photography tasks.

Covered_Patch: When randomly generated patches were applied to obscure the detection targets, detection accuracy declined for both models. This indicates that occlusion alone impairs the models' ability to recognize targets, even when the occluding patch is not adversarial.

URAdv: With the adversarial patches generated in this study, recognition accuracy decreased significantly for both models compared with the randomly generated covered patches. This indicates that URAdv patches are effective against UAV object detection platforms in complex environments. Moreover, patches generated with YOLOv5-Small retained their attack efficacy when transferred to YOLOv5-Large, suggesting that the proposed generation method transfers well in gray-box attack scenarios.

Figure 11.

Mean average precision (MAP) for different models.


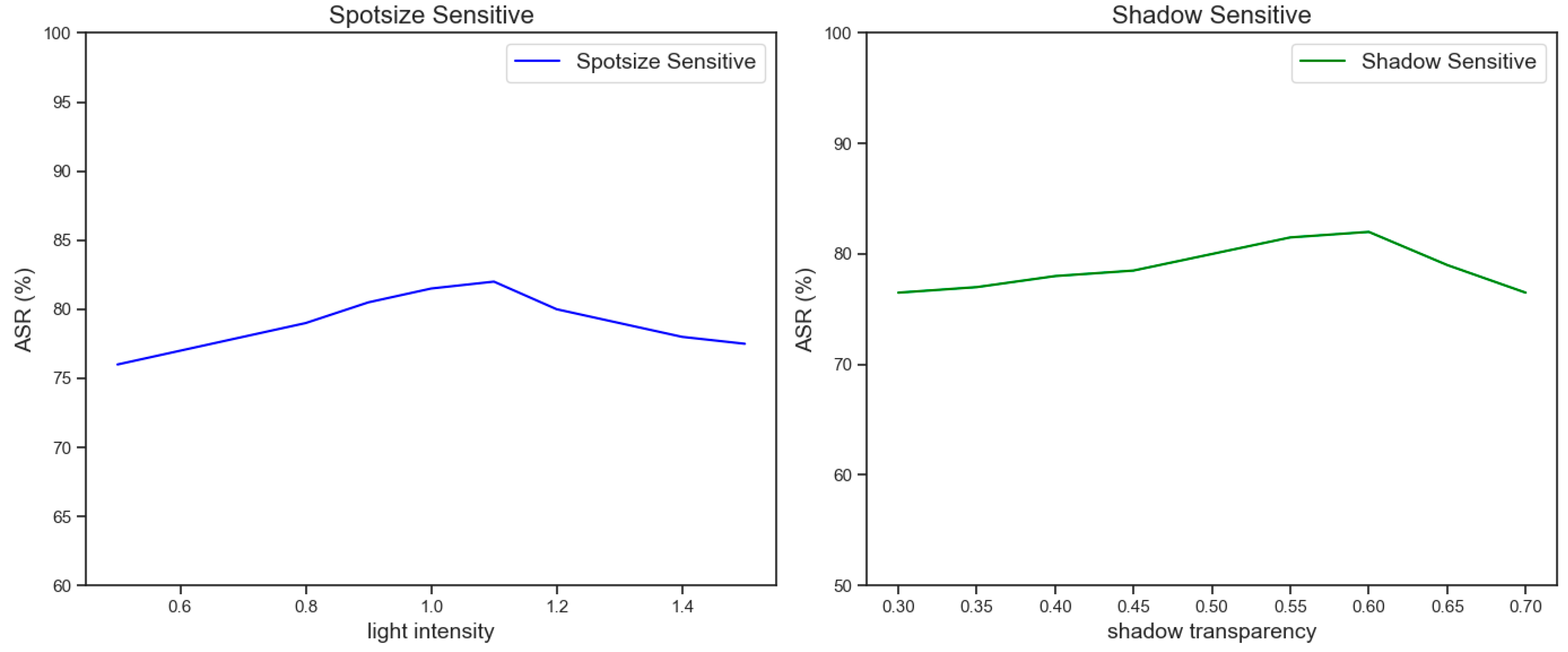

6.2.3. Parameter Sensitivity Analysis

In this section, we conduct a sensitivity analysis of key dynamic parameters in the URAdv framework to quantify their impact on the attack success rate (ASR), focusing on the range of light intensity and the transparency of shadows. These parameters were chosen to reflect the diversity of light and shadow in real UAV imaging environments, so that the generated adversarial patches remain effective under different environmental conditions. The results are shown in Figure 12.

Figure 12.

Illustrations of the parameter sensitivity of light spots and shadows.

To simulate the effect of adversarial patches under different light conditions, we set the light intensity range to [0.5, 1.5] and gradually adjusted the light intensity value through the grid search method to observe its influence on the ASR. The experimental results show that the change in light intensity significantly affected the visibility and attack effect of the adversarial patches:

Low light intensity (0.5–0.8): In a low-light environment, the visibility of the adversarial patch decreased, but its interference effect on the target detection model was still significant. The ASR remained above 75% within this range, indicating that URAdv has high robustness under low light conditions.

Medium light intensity (0.8–1.2): This was the range where URAdv performed optimally and the ASR reached the peak (82.0 ± 2.3%). Within this range, the light intensity was sufficient to ensure the visibility of the patch, and at the same time, it did not cause distortion of the patch features due to excessive light.

High light intensity (1.2–1.5): At high light intensity, some patch features can be overexposed, causing a slight decrease in the ASR (about 78.5 ± 1.8%). Nevertheless, URAdv still maintained a high attack success rate, indicating a degree of adaptability to strong light.

To simulate the effect of the adversarial patch under different shadow conditions, we set the shadow transparency range to [0.3, 0.7] and verified its influence on the ASR through experiments. The experimental results are as follows:

Low transparency (0.3–0.5): When the shadow transparency was low, the visibility of the patch was high, but its interference effect on the target detection model slightly decreased. The ASR within this range was 78.0 ± 1.5%, indicating that the low transparency shadow had a small impact on the attack effect of the patch.

Medium transparency (0.5–0.6): This was the range where URAdv performed best and the ASR reached the peak (82.0 ± 2.3%). Within this range, the transparency of the shadow could effectively simulate the shadow effect in the real environment and did not overly cover the key features of the patch.

High transparency (0.6–0.7): At high shadow transparency, the visibility of the patch decreased significantly, lowering the ASR to 75.5 ± 1.7%. Nevertheless, URAdv still maintained a high attack success rate, indicating a degree of robustness to such shadows.
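The grid search behind these ranges can be sketched as a small sweep. `evaluate_asr` is a hypothetical callable that would apply the patch under a given light intensity or shadow transparency and return the measured ASR; the bin edges follow the ranges listed above, and a value on a shared edge is assigned to the first matching bin (an assumption).

```python
def grid(low, high, steps):
    """`steps` evenly spaced values from low to high, inclusive."""
    return [low + (high - low) * k / (steps - 1) for k in range(steps)]


def sweep(evaluate_asr, low, high, steps, bins):
    """Evaluate ASR at each grid value and average the results within each named bin.

    `bins` is a list of (name, lo, hi); a value on a shared boundary goes to the first bin.
    """
    per_bin = {name: [] for name, _, _ in bins}
    for value in grid(low, high, steps):
        for name, lo, hi in bins:
            if lo <= value <= hi:
                per_bin[name].append(evaluate_asr(value))
                break
    return {name: sum(v) / len(v) for name, v in per_bin.items() if v}


LIGHT_BINS = [("low", 0.5, 0.8), ("medium", 0.8, 1.2), ("high", 1.2, 1.5)]
SHADOW_BINS = [("low", 0.3, 0.5), ("medium", 0.5, 0.6), ("high", 0.6, 0.7)]
```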

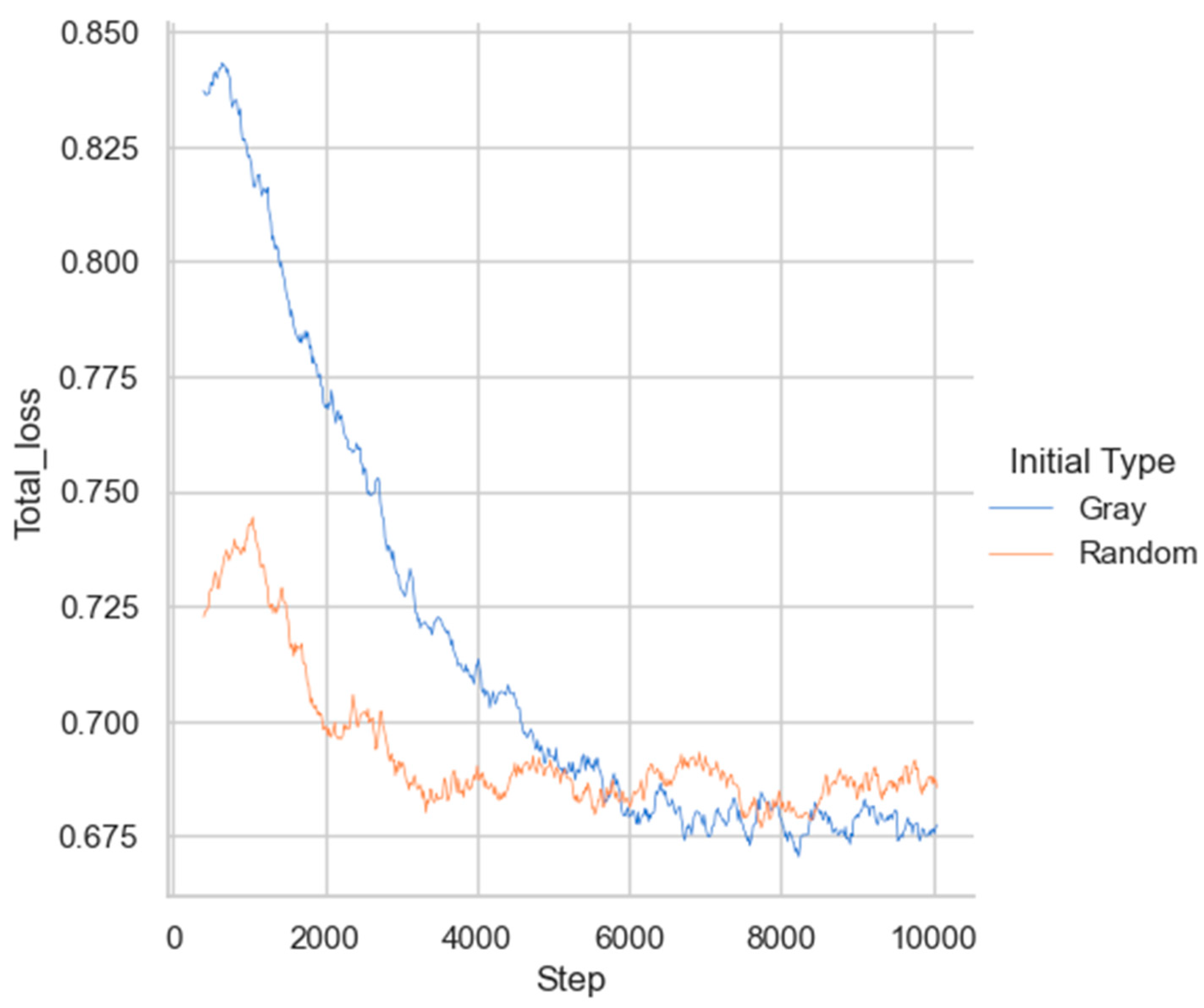

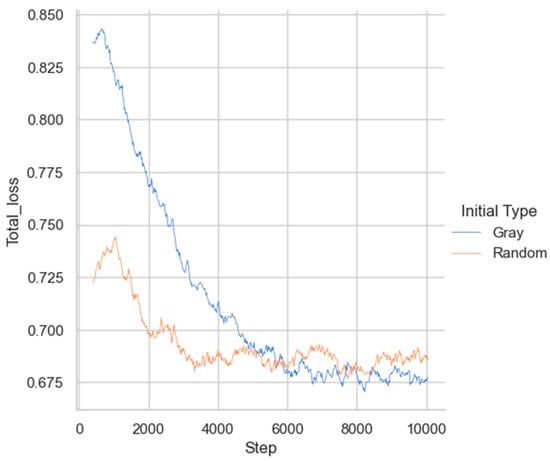

6.2.4. Comparison of Initialization Methods

The experiment also compared the influence of two different patch initialization methods on the training convergence rate, with the results shown in Figure 13. As observed, patches initialized randomly exhibited a lower value of loss function at the beginning of the training and could converge earlier to a stable state. In contrast, patches initialized with gray values started with a higher loss function and required longer convergence times. This indicates that different patch initialization methods significantly affect the training convergence rate. For targeted attacks, we hypothesize that the following initialization methods may accelerate the convergence of the training network, and further exploration by researchers is encouraged:

Figure 13.

Comparison of convergence speed under two different patch initialization methods.

- (a) Initialization Based on Image Features: Utilize intermediate layer features from the target detection model to initialize the patch. For instance, extract activation features of the model for a specific category or use the average of image examples from the target category to initialize the patch, thereby aligning it more closely with the target category.

- (b) Initialization Based on Adversarial Examples: Employ existing adversarial examples as the initial patch. These examples, having undergone optimization, can serve as an effective starting point.
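The two initializations compared in Figure 13, plus the image-average variant of strategy (a), can be sketched as follows for a single-channel square patch. The gray value of 0.5 and the assumption that the example crops are already resized to the patch size are illustrative choices, not details given in the text.

```python
import random


def init_random(size, rng=random):
    """Uniform random patch in [0, 1), analogous to torch.rand."""
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def init_gray(size, value=0.5):
    """Constant gray patch; 0.5 is an assumed mid-gray value."""
    return [[value] * size for _ in range(size)]


def init_from_examples(examples):
    """Pixel-wise mean of same-sized example crops of the target category (strategy (a))."""
    n = len(examples)
    size = len(examples[0])
    return [[sum(ex[i][j] for ex in examples) / n for j in range(size)] for i in range(size)]
```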

7. Conclusions

Unmanned aerial vehicle remote sensing (UAVRS), an emerging remote sensing modality, has been widely applied across many domains, which makes the deployment of secure and robust intelligent algorithms paramount. This paper introduces URAdv, a practical framework for constructing adversarial patches. Through rigorous testing on VisDrone-13, we demonstrated that the proposed method can mount adversarial attacks on multiple targets in complex environments. URAdv also transfers between models, increasing the success rate of gray-box attacks on similar models. URAdv thus both increases the threat posed by adversarial examples in practical applications and provides a reference for realizing highly robust adversarial attacks. Our findings reveal the vulnerability of UAV-based intelligent visual perception to adversarial attacks, which is crucial for advancing the security of UAV object detection models. Although URAdv performed well on the evaluated models, its robustness against adversarial defenses requires deeper investigation. Future work will incorporate the latest adversarial defense techniques to explore the adaptability of URAdv in a wider range of attack scenarios. We also plan to extend our experiments to other detector families, such as Faster R-CNN and DETR, to verify the generalizability of the method.

Author Contributions

Conceptualization, H.X. and L.R.; methodology, H.X.; validation, S.H. and W.W.; formal analysis, H.X.; investigation, B.L.; resources, H.X.; data curation, H.X.; writing—original draft preparation, H.X.; writing—review and editing, H.X. and X.L.; visualization, H.X.; supervision, X.L.; project administration, L.R.; funding acquisition, J.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Young Talent Fund of the Association for Science and Technology in Shaanxi: 20240105; and the Natural Science Basic Research Program of Shaanxi Province: 2024JC-YBQN-0620.

Data Availability Statement

Due to privacy issues, data in this paper are unavailable for the time being.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lee, S.J.; Rho, M. Multimodal Deep Learning Applied to Classify Healthy and Disease States of Human Microbiome. Sci. Rep. 2022, 12, 824.

- Kuang, Q.; Wu, J.; Pan, J.; Zhou, B. Real-Time UAV Path Planning for Autonomous Urban Scene Reconstruction. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1156–1162.

- Chen, H.; Lu, P. Computationally Efficient Obstacle Avoidance Trajectory Planner for UAVs Based on Heuristic Angular Search Method. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5693–5699.

- Katta, S.S.; Viegas, E.K. Towards a Reliable and Lightweight Onboard Fault Detection in Autonomous Unmanned Aerial Vehicles. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 1284–1290.

- Jun, M.; Lilian, Z.; Xiaofeng, H.; Hao, Q.; Xiaoping, H. A 2D Georeferenced Map Aided Visual-Inertial System for Precise UAV Localization. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 4455–4462.

- Duan, R.; Mao, X.; Qin, A.K.; Chen, Y.; Ye, S.; He, Y.; Yang, Y. Adversarial Laser Beam: Effective Physical-World Attack to DNNs in a Blink. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 16062–16071.

- Zhong, Y.; Liu, X.; Zhai, D.; Jiang, J.; Ji, X. Shadows Can Be Dangerous: Stealthy and Effective Physical-World Adversarial Attack by Natural Phenomenon. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 15345–15354.

- Wang, D.; Yao, W.; Jiang, T.; Li, C.; Chen, X. RFLA: A Stealthy Reflected Light Adversarial Attack in the Physical World. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 4455–4465.

- Wei, X.; Guo, Y.; Yu, J. Adversarial Sticker: A Stealthy Attack Method in the Physical World. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 2711–2725.

- Du, A.; Chen, B.; Chin, T.-J.; Law, Y.W.; Sasdelli, M.; Rajasegaran, R.; Campbell, D. Physical Adversarial Attacks on an Aerial Imagery Object Detector. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1796–1806.

- Lian, J.; Mei, S.; Zhang, S.; Ma, M. Benchmarking Adversarial Patch against Aerial Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Shen, H.; Zong, Q.; Tian, B. Voxel-Based Localization and Mapping for Multirobot System in GPS-Denied En. IEEE Trans. Ind. Electron. 2022, 69, 10333–10342.

- Zhou, H.; Yao, Z.; Zhang, Z. An Online Multi-Robot SLAM System Based on LiDAR/UWB Fusion. IEEE Sens. J. 2021, 22, 2530–2542.

- Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded Sparse Query for Accelerating High-Resolution Small Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13668–13677.

- Du, B.; Huang, Y.; Chen, J. Adaptive Sparse Convolutional Networks with Global Context Enhancement for Faster Object Detection on Drone Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 13435–13444.

- Qi, G.; Zhang, Y.; Wang, K.; NealMazur, Y.L.; Malaviya, D. Small Object Detection Method Based on Adaptive Spatial Parallel Convolution and Fast Multi-Scale Fusion. Remote Sens. 2022, 14, 420.

- Deng, S.; Li, S.; Xie, K.; Song, W.; Liao, X.; Hao, A.; Qin, H. A Global-Local Self-Adaptive Network for Drone-View Object Detection. IEEE Trans. Image Process. 2021, 30, 3.

- Thys, S.; Van Ranst, W.; Goedemé, T. Fooling Automated Surveillance Cameras: Adversarial Patches to Attack Person Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 15–20 June 2019.

- Wang, Y.; Lv, H.; Kuang, X. Towards a Physical-World Adversarial Patch for Blinding Object Detection Models. Inf. Sci. 2021, 556, 459–471.

- Wei, X.; Yu, J.; Huang, Y. Physically Adversarial Infrared Patches with Learnable Shapes and Locations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12334–12342.

- Maesumi, A.; Zhu, M.; Wang, Y.; Chen, T.; Wang, Z.; Bajaj, C. Learning Transferable 3D Adversarial Cloaks for Deep Trained Detectors. arXiv 2021, arXiv:2104.11101.

- Zhang, Y.; Zhang, Y.; Qi, J. Adversarial Patch Attack on Multi-Scale Object Detection for UAV Remote Sensing Images. Remote Sens. 2022, 14, 5298.

- Lu, M.; Li, Q.; Chen, L. Scale-Adaptive Adversarial Patch Attack for Remote Sensing Image Aircraft Detection. Remote Sens. 2021, 13, 4078.

- Guesmi, A.; Hanif, M.A.; Shafique, M. AdvRain: Adversarial Raindrops to Attack Camera-Based Smart Vision Systems. Information 2023, 14, 634.

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7380–7399.

- Hendrycks, D.; Dietterich, T. Benchmarking Neural Network Robustness to Common Corruptions and Perturbations. arXiv 2019, arXiv:1903.12261.

- Selvaraju, R.R. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Yufeng, L.; Fengyu, Y.; Qi, L.; Jiangtao, L.; Chenhong, C. Light Can Be Dangerous: Stealthy and Effective Physical-World Adversarial Attack by Spot Light. Comput. Secur. 2023, 132, 103345.

- Zhang, Y.; Gong, Z.; Wen, H.; Hu, X.; Xia, X.; Jiang, H.; Zhong, P. Pattern Corruption-Assisted Physical Attacks against Object Detection in UAV Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 12931–12944.

- Liu, T.; Yang, C.; Liu, X. RPAU: Fooling the Eyes of UAVs via Physical Adversarial Patches. IEEE Trans. Intell. Transp. Syst. 2023, 25, 2586–2598.

- Zeng, B.; Gao, L.; Zhang, Q.; Li, C.; Song, J.; Jing, S. Boosting Adversarial Attacks by Leveraging Decision Boundary Information. arXiv 2023, arXiv:2303.05719.

- Shrestha, S.; Pathak, S.; Viegas, E.K. Towards a Robust Adversarial Patch Attack against Unmanned Aerial Vehicles Object Detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 3256–3263.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part V 13; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

- Sharif, M.; Bhagavatula, S.; Bauer, L. Accessorize to a Crime: Real and Stealthy Attacks on State-of-the-Art Face Recognition. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 1528–1540.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).