Abstract

Ensemble learning and data fusion techniques play a crucial role in modern machine learning, enhancing predictive performance, robustness, and generalization. This paper provides a comprehensive survey of ensemble methods, covering foundational techniques such as bagging, boosting, and random forests, as well as advanced topics including multiclass classification, multiview learning, multiple kernel learning, and the Dempster–Shafer theory of evidence. We present a comparative analysis of ensemble learning and deep learning, highlighting their respective strengths, limitations, and synergies. Additionally, we examine the theoretical foundations of ensemble methods, including bias–variance trade-offs, margin theory, and optimization-based frameworks, while analyzing computational trade-offs related to training complexity, inference efficiency, and storage requirements. To enhance accessibility, we provide a structured comparative summary of key ensemble techniques. Furthermore, we discuss emerging research directions, such as adaptive ensemble methods, hybrid deep learning approaches, and multimodal data fusion, as well as challenges related to interpretability, model selection, and handling noisy data in high-stakes applications. By integrating theoretical insights with practical considerations, this survey serves as a valuable resource for researchers and practitioners seeking to understand the evolving landscape of ensemble learning and its future prospects.

Keywords:

ensemble learning; bagging; boosting; random forests; deep learning integration; multimodal data fusion

MSC:

68Q32; 68T99

1. Introduction

The no-free-lunch theorem asserts that no single method universally outperforms others across all problem domains. In practice, various techniques are employed to tackle specific challenges, each with its own strengths and weaknesses. One effective approach to solving complex problems is brainstorming, where diverse perspectives are shared, and decisions are made through consensus or voting.

Learning algorithms differ in accuracy, and, as the no-free-lunch theorem implies, none excels in every domain. Combining algorithms can therefore improve performance; in the limit, an ensemble of infinitely many unbiased classifiers approximates the optimal Bayes classifier [1]. Data fusion integrates multiple records corresponding to the same real-world entity into a unified, consistent, and accurate representation. In machine learning, data fusion plays a crucial role in improving prediction accuracy and reliability, which remains a significant challenge in the field.

Fusion strategies can be applied at various levels, including the signal enhancement and sensor (data) level, feature level, classifier level, decision level, and semantic level. Evidence theory [2] is a framework within the domain of imprecise probabilities.

In classification tasks, diverse classifiers can be created through different initializations, training on varied datasets, or utilizing distinct feature sets. Ensemble methods [3,4], often referred to as committee machines or mixtures of experts, generate multiple base learners and combine them into a single composite predictor for classifying new data. This approach relies on the concept of diversity [5]: two classifiers are considered diverse if they make different errors on unseen data. Diversity is a crucial factor in ensemble learning; by leveraging it, an ensemble can achieve higher classification accuracy than any of its individual members trained on the same data.

In ensemble learning, the classifiers within the ensemble can be built independently, as in bagging [3], or sequentially, as in boosting [4]. The effectiveness of ensemble techniques largely stems from the diversity among base learners: because their errors differ, aggregating their predictions through strategies such as majority voting or weighted averaging lets individual errors partly cancel, which improves the ensemble's generalization ability.

Fusing multiple probability density functions (pdfs) is a specialized form of data or information fusion [6]. In the axiomatic framework, fusion rules are defined by adhering to specific properties (axioms), while the supra-Bayesian method treats the pdfs to be fused as random observations interpreted by the fusion center.

Various strategies for generating base learners operate at different levels. At the data level, methods include sampling techniques like bagging [3] and dagging [7], or weighting strategies like boosting [8], wagging [9], and multiboosting [10]. At the feature level, methods such as random subspace [11] are employed. Techniques combining both data and feature manipulations include random forests [12] and rotation forests [13]. Additional approaches include negative correlation learning [14] and neural network-based methods utilizing random initialization [15].

Despite their effectiveness, ensemble learning and data fusion present challenges, such as increased computational complexity, scalability concerns, and the need for efficient integration with deep learning models. Given the increasing prevalence of large-scale, high-dimensional datasets, understanding the trade-offs between different ensemble strategies is crucial for real-world applications.

The objective of this paper is to explore how ensemble learning and data fusion can address critical challenges in machine learning. By leveraging diversity among models and integrating information at various levels, this study highlights how these approaches improve classification accuracy, robustness, and generalization.

Specifically, this paper provides a comprehensive examination of both fundamental and advanced topics, including multiclass classification, multiview learning, multiple kernel learning, and the Dempster–Shafer theory of evidence. Furthermore, it analyzes the interplay between ensemble learning and deep learning, offering insights into their respective advantages. By doing so, this article aims to serve as a resource for researchers and practitioners seeking to understand and apply these methodologies effectively.

For readers new to ensemble learning, we recommend starting with Section 2, which categorizes ensemble learning methods and provides an overview of foundational concepts before progressing to advanced topics such as multiclass classification (Section 10) and multiview learning (Section 12). Additionally, Table 1 serves as a quick reference guide to help readers identify the most suitable techniques based on their needs and familiarity with the topic.

Table 1.

Summary of popular ensemble learning methods.

This paper presents a comprehensive overview of ensemble learning and data fusion. Section 2 categorizes ensemble learning methods, providing a foundation for the subsequent discussions. Section 3, Section 4, Section 5, Section 6, Section 7 and Section 8 examine key topics, including aggregation techniques, majority voting, theoretical analyses, bagging, boosting, and random forests. A comparative analysis of popular ensemble methods is provided in Section 9. Section 10 addresses multiclass classification, while Section 11 discusses the Dempster–Shafer theory of evidence. Section 12 explores multiview learning, followed by an in-depth examination of multiple kernel learning (MKL) in Section 13. Network ensembles are covered in Section 14, with their theoretical foundations reviewed in Section 15. Section 16 introduces incremental ensemble learning, and Section 17 compares ensemble learning with deep learning. Empirical validations from the literature are presented in Section 18. Finally, Section 19 outlines future research directions, and Section 20 concludes this paper by summarizing key insights and open challenges.

2. Ensemble Learning Methods

Popular ensemble techniques such as random forests [12], bagging (bootstrap aggregating) [3], and random initialization [16] promote diversity by either randomly initializing models or resampling the training data for each model. Boosting [17] and snapshot ensembles [18] also foster diversity by training multiple models while ensuring a degree of difference between the newly trained models and their predecessors.

Bagging [3] and boosting [4] are two widely used ensemble methods that train models on different distributions of the data. Bagging generates multiple training sets, or “bags”, by sampling with replacement, whereas boosting adaptively reweights the training distribution to focus on the examples that previous classifiers found difficult. As a result, bagging primarily reduces variance, while boosting iteratively trains weak models to reduce the overall model bias.

In both bagging and random forests, the ensemble consists of trees, each of which votes for the predicted class. Random forests and boosting share similar mechanisms [19], and both typically achieve lower generalization error than bagging [12,19]. However, unlike random forests, boosting evolves the committee of weak learners over time, with each learner casting a weighted vote.

Subspace or multiview learning generates multiple classifiers from distinct feature spaces, allowing each classifier to define its decision boundaries from a different perspective. By leveraging the agreement between these learners, multiview learning aims to improve classification performance. Notable methods in this area include the random subspace method [11], random forests [20], and rotation forests [13].

The mixture of experts [21] is a divide-and-conquer approach in which a gating network softly partitions the input space and expert networks model each partition. The experts and the gating network are trained jointly on the dataset. This technique can be viewed as a radial basis function (RBF) network whose second-layer weights, known as experts, are themselves outputs of linear models applied to the input. The VC dimension bounds for mixtures of experts are discussed in [22].

The Bayesian committee machine [23] partitions the dataset into M equal subsets, with M models trained on these subsets. Predictions are combined using weights based on the inverse covariance of each model’s prediction within the Bayesian framework for Gaussian process regression. Though applicable to various estimators, the method primarily targets Gaussian process regression, regularization networks, and smoothing splines. Performance improves when multiple test points are queried simultaneously, reaching optimality when the number of test points matches or exceeds the estimator’s degrees of freedom. Bayesian model averaging [24] offers a related ensemble technique from a Bayesian perspective.
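To make the inverse-covariance weighting concrete, the following is a minimal sketch of the combination rule at a single test point. It assumes scalar Gaussian predictions, a zero-mean prior, and that each of the M models was fitted on its own data subset under the same prior; the function name `bcm_combine` is hypothetical. The per-model precisions are added, and the prior precision is subtracted M - 1 times because it would otherwise be counted M times.

```python
import numpy as np

def bcm_combine(means, variances, prior_variance):
    """Combine M Gaussian predictions for one test point (scalar case).

    Assumes a zero-mean prior with variance `prior_variance`, shared by all
    M models. Returns the combined predictive mean and variance.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    m = len(means)
    # Inverse-variance (precision) weighting; each model's posterior already
    # contains the prior once, so the surplus M - 1 copies are removed.
    precision = np.sum(1.0 / variances) - (m - 1) / prior_variance
    if precision <= 0:
        raise ValueError("combined precision must be positive")
    var = 1.0 / precision
    mean = var * np.sum(means / variances)
    return mean, var
```

As a sanity check, if every model simply returns the prior (mean 0, variance equal to the prior variance), the combined prediction is the prior itself.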

Stacked generalization [25] extends voting by combining base learners through a combiner that is trained on a separate dataset, which can correct for the biases of individual learners. Stacking [26] trains a stronger model on the outputs of multiple weak models, using cross-validation to estimate combination weights from predictive performance rather than from posterior probabilities. Bayesian model averaging performs comparably to stacking when the true data-generating model is among the candidates [27], but it is more sensitive to model approximation errors. Stacking, in contrast, performs well when the candidate models are substantially biased or have limited representational capacity, which makes it more robust in most practical scenarios [27].
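A minimal sketch of stacking for regression, assuming one-dimensional inputs and polynomial base learners of different degrees (an illustrative choice, not taken from [25,26]). The level-1 training data are out-of-fold predictions, so the combiner never sees a base learner's prediction on a point that learner was trained on. For simplicity the combiner here is unconstrained least squares; variants that restrict the weights to be non-negative are also common. The names `poly_learner` and `stack` are hypothetical.

```python
import numpy as np

def poly_learner(degree):
    """Return a fit function; fit(x, y) returns a prediction function."""
    def fit(x, y):
        coefs = np.polyfit(x, y, degree)
        return lambda x_new: np.polyval(coefs, x_new)
    return fit

def stack(x, y, learners, n_folds=5, seed=0):
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(x)), n_folds)
    # Level-1 features: out-of-fold predictions of every base learner.
    oof = np.zeros((len(x), len(learners)))
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(x)), test_idx)
        for j, fit in enumerate(learners):
            oof[test_idx, j] = fit(x[train_idx], y[train_idx])(x[test_idx])
    # Combiner: least-squares weights learned from out-of-fold performance.
    weights, *_ = np.linalg.lstsq(oof, y, rcond=None)
    # Refit the base learners on all data for use at prediction time.
    final = [fit(x, y) for fit in learners]

    def predict(x_new):
        level1 = np.column_stack([f(x_new) for f in final])
        return level1 @ weights

    return predict, weights
```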

Cascading is a multi-stage method in which learner $j$ is applied only if all preceding learners $k$ (for $k < j$) are not confident. Each learner has an associated confidence weight $w_j$, which indicates how confident it is in its output. Learner $j$ is used if $w_j$ exceeds a threshold $\theta_j$, where $1/K < \theta_j \le \theta_{j+1} < 1$ in the case of $K$ classes. For classification tasks, the confidence is defined as the highest posterior probability, $w_j = \max_i d_{ji}$, with $d_{ji}$ representing the confidence of learner $j$ for class $i$.
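The cascading rule can be sketched as a loop over learners ordered from cheap to expensive. In this minimal sketch, each learner is assumed to be a callable returning a vector of class posteriors, and the last learner is always accepted so that every input receives a prediction; `cascade_predict` and the toy learners in the usage example are hypothetical.

```python
import numpy as np

def cascade_predict(x, learners, thresholds):
    """Return (predicted class, index of the learner that decided).

    learners: callables mapping x to class posteriors d_j (length K).
    thresholds: theta_j for every learner except the last, nondecreasing.
    """
    for j, learner in enumerate(learners):
        posteriors = np.asarray(learner(x), dtype=float)
        confidence = posteriors.max()  # w_j = max_i d_ji
        is_last = j == len(learners) - 1
        if is_last or confidence > thresholds[j]:
            return int(posteriors.argmax()), j
```

For example, with a threshold of 0.8, a first learner that outputs posteriors (0.55, 0.45) defers to the next learner, whereas one that outputs (0.9, 0.1) decides immediately.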

Recent advancements in ensemble learning extend traditional approaches to improve model performance, scalability, and interpretability. Deep ensembles [28] enhance robustness and uncertainty estimation by aggregating multiple independently trained deep neural networks. These methods are particularly valuable in applications requiring well-calibrated uncertainty estimates, such as medical diagnosis and autonomous systems.

Hybrid ensemble strategies integrate multiple ensemble paradigms to maximize their respective strengths. For instance, AdaBoost can be combined with deep neural networks to enhance robustness, while bagging and boosting can be hybridized to balance variance reduction and bias correction [29]. Additionally, evolutionary algorithms [30,31] have been applied to dynamically optimize classifier selection and weighting, improving adaptability across diverse learning tasks.

Advanced aggregation techniques, such as meta-learning-based ensembles [32], dynamically adjust the ensemble composition based on dataset characteristics. Unlike static combinations of weak learners, these methods utilize reinforcement learning or optimization techniques to tailor the ensemble structure to specific problem domains.

Attention-based ensemble methods have emerged as an effective approach to enhancing model performance by selectively focusing on different parts of the input data. By leveraging multiple attention masks [33], each learner can emphasize distinct regions of the input space. This strategy promotes diversity among learners and has demonstrated significant performance improvements in deep metric learning tasks.

Ensemble pruning seeks to enhance efficiency by selecting a subset of classifiers that maintain predictive performance while reducing computational complexity. In [34], ensemble pruning is approached as a submodular function maximization problem. This structured method effectively balances classifier quality and diversity, enabling efficient optimization while preserving the ensemble’s predictive power.

Neural networks [35,36] and support vector machines (SVMs) [37] can be viewed as ensemble methods. Bayesian approaches to nonparametric regression can also be interpreted as ensemble methods, where a collection of candidate models is averaged according to the posterior distribution of their parameter values. The error-correcting output code (ECOC) method for multiclass classification [38] is another example of a learning ensemble. Similarly, dictionary methods like regression splines can be characterized as ensemble methods, with the basis functions acting as weak learners. Neural trees [39] merge neural networks and decision trees, which follow distinct paradigms (connectionist and symbolic, respectively) and have complementary strengths and limitations: neural networks learn hierarchical representations, whereas decision trees learn hierarchical clusters.

3. Aggregation

Aggregation involves combining data from multiple sources or the predictions from various candidates [40]. It serves as a fundamental step in ensemble methods. In fuzzy logic, two common aggregation operators are t-norm and t-conorm [41]. The ordered weighted averaging (OWA) operator [42] is a widely used aggregation method in multicriteria decision-making, offering a parameterized family of operators that includes the maximum, minimum, and average as special cases.
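The key feature of the OWA operator is that its weights attach to rank positions rather than to particular sources. A minimal sketch follows; the function name `owa` is hypothetical. With weights (1, 0, ..., 0) it returns the maximum, with (0, ..., 0, 1) the minimum, and with uniform weights the arithmetic mean.

```python
import numpy as np

def owa(values, weights):
    """Ordered weighted averaging: the j-th weight multiplies the j-th largest value."""
    ordered = np.sort(np.asarray(values, dtype=float))[::-1]  # descending order
    weights = np.asarray(weights, dtype=float)
    if weights.shape != ordered.shape or np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights must be non-negative, sum to 1, and match values")
    return float(ordered @ weights)
```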

The base algorithms in an ensemble can differ by algorithm type, parameter settings, input features, or training data subsets. Base learners may also use cascaded training or address subtasks of a primary task, as with ECOCs. Learner combination can occur in parallel or through a multistage process.

Voting is a straightforward method for combining multiple classifiers by taking a weighted sum of their outputs. For $L$ learners, the final output is calculated as

$$y_i = \sum_{j=1}^{L} w_j d_{ji},$$

where the weights satisfy the condition

$$w_j \ge 0, \qquad \sum_{j=1}^{L} w_j = 1,$$

and $d_{ji}$ represents the vote of learner $j$ for class $i$, and $w_j$ denotes the weight associated with each vote.

When $w_j = 1/L$, this corresponds to simple voting. In classification tasks, this is known as plurality voting, where the class receiving the most votes is chosen as the winner. In the case of two classes, it is referred to as majority voting, where the class that secures more than half of the votes is selected. Voting mechanisms can be interpreted within a Bayesian framework, where the weights represent prior model probabilities, and the model decisions correspond to model-conditional likelihoods.
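The weighted voting rule described above reduces to a single matrix-vector product followed by an argmax. In this minimal sketch, each row of `votes` holds one learner's votes $d_{ji}$ (one-hot for hard votes); the function name `weighted_vote` is hypothetical.

```python
import numpy as np

def weighted_vote(votes, weights):
    """votes: (L, K) array with votes[j, i] = d_ji; weights: (L,), w_j >= 0, sum 1.

    Returns the combined scores y_i and the index of the winning class.
    """
    votes = np.asarray(votes, dtype=float)
    weights = np.asarray(weights, dtype=float)
    y = weights @ votes  # y_i = sum_j w_j * d_ji
    return y, int(np.argmax(y))
```

With three learners voting for classes 0, 1, and 1, equal weights select class 1, whereas weights (0.6, 0.2, 0.2) let the first learner prevail.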

Aggregation can achieve a lower risk than the best individual rule in the family [43]. In comparison, cross-validation, which selects only one candidate, cannot outperform the best rule within the family.

The aggregated hold-out (Agghoo) method [44] is an ensemble method that, like bagging, combines resampling and aggregation. Unlike Agghoo, however, bagging is applied to a single estimator and does not address estimator selection; if the estimator has a free hyperparameter, bagging must be paired with an estimator selection method such as cross-validation. Bagging applied to hold-out was first proposed in [3] as a means of combining pruning and bagging in classification and regression trees (CART).

Agghoo averages the learning rules chosen through hold-out, which is a form of cross-validation with a single split: the data are split multiple times, one predictor is selected by hold-out for each split, and the selected predictors are aggregated. When the risk is convex, Agghoo performs no worse than the hold-out method; a similar guarantee holds, up to a constant factor, for classification with the 0–1 risk. Agghoo is also referred to as cross-validation + averaging in [45,46].

In classification, the equivalent of Agghoo is to use a majority vote, known as Majhoo. Agghoo and Majhoo outperform hold-out and sometimes surpass cross-validation, provided their parameters are properly selected [44]. For k-nearest neighbors (k-NN), Majhoo performs notably better than cross-validation in selecting the optimal number of neighbors [44].
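Majhoo for k-NN can be sketched as follows: for each random split, hold-out selects the number of neighbors on the validation part, the selected rule is kept together with its training part, and the final prediction is a majority vote over these rules. This is a minimal sketch under our own assumptions (Euclidean distance, integer labels 0, ..., C-1, a 70/30 split, 10 splits, and the candidate values of k); the names `knn_predict` and `majhoo_knn` are hypothetical.

```python
import numpy as np

def knn_predict(x_train, y_train, x_test, k):
    # Pairwise Euclidean distances, shape (n_test, n_train).
    d = np.linalg.norm(x_test[:, None, :] - x_train[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    labels = y_train[nearest]  # (n_test, k) neighbor labels
    return np.array([np.bincount(row).argmax() for row in labels])

def majhoo_knn(x, y, ks, n_splits=10, train_frac=0.7, seed=0):
    rng = np.random.default_rng(seed)
    n_train = int(train_frac * len(x))
    chosen = []
    for _ in range(n_splits):
        perm = rng.permutation(len(x))
        tr, va = perm[:n_train], perm[n_train:]
        # Hold-out selection of k on this split.
        errors = [np.mean(knn_predict(x[tr], y[tr], x[va], k) != y[va]) for k in ks]
        chosen.append((tr, ks[int(np.argmin(errors))]))

    def predict(x_new):
        # Majority vote over the hold-out-selected rules (Majhoo).
        votes = np.stack([knn_predict(x[tr], y[tr], x_new, k) for tr, k in chosen])
        return np.array([np.bincount(col).argmax() for col in votes.T])

    return predict, [k for _, k in chosen]
```

Replacing the final majority vote with an average of real-valued predictions gives the Agghoo counterpart for regression.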

In contrast to Agghoo, which averages selected prediction rules, one can also average the chosen regularization parameter in high-dimensional regression using the methods of K-fold averaging cross-validation [47] and bagged cross-validation [48].

Vote aggregation methods for combining the predictions of component classifiers can be broadly divided into two categories: weighting methods and metalearning methods [49,50]. Weighting methods assign a weight to each classifier and aggregate their votes accordingly (e.g., majority voting, performance weighting, and Bayesian combination). These methods are effective when the classifiers perform similar tasks and have comparable accuracy. On the other hand, metalearning methods involve learning from both the classifiers and their predictions on training data (e.g., stacking, arbiter trees, and grading). These methods are particularly useful when certain classifiers tend to consistently misclassify or correctly classify specific instances [49].

4. Majority Voting

Majority voting is effective when there is limited or no information about the labeling quality of individual labelers, class distributions, or instance difficulty, making it particularly attractive for crowdsourcing applications. It performs well when most labelers have a labeling accuracy greater than 50% in binary tasks, and their errors are distributed approximately uniformly across all classes.
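The 50% condition can be made concrete under a strong simplifying assumption that real crowds rarely satisfy exactly: labelers vote independently and each is correct with the same probability p. The probability that the majority of an odd number of labelers is correct is then a binomial tail (the setting of Condorcet's jury theorem). The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb

def majority_vote_accuracy(n_voters, p):
    """P(majority correct) for an odd number of independent voters, each correct w.p. p."""
    if n_voters % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    need = n_voters // 2 + 1
    return sum(
        comb(n_voters, k) * p**k * (1 - p) ** (n_voters - k)
        for k in range(need, n_voters + 1)
    )
```

For p = 0.6, three voters give 0.648, and adding voters increases the accuracy further; for p below 0.5, adding voters makes the majority vote worse than a single labeler.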

In weighted majority voting, each classifier or voter is assigned a specific weight. The PAC-Bayesian theory [51] provides PAC guarantees for Bayesian-like learning algorithms. This approach bounds the risk of a weighted majority vote indirectly by analyzing the associated Gibbs classifier. The PAC-Bayesian theorem gives a risk bound for the “true” risk of the Gibbs classifier by considering its empirical risk and the Kullback–Leibler divergence between the posterior and prior distributions. The risk of the majority vote classifier is known to be at most twice that of the Gibbs classifier [52,53].

The C-bound [54] is a tighter risk indicator for majority voting. Minimizing the C-bound, which can be formulated as a quadratic optimization problem, reduces an upper bound on the true risk of the weighted majority vote. Based on PAC-Bayesian theory, the MinCq algorithm [55] optimizes the weights of a set of voters by minimizing the C-bound, which involves the first two statistical moments of the margin on the training data. MinCq outputs a posterior distribution $Q$ over the set of voters $\mathcal{H}$, assigning a weight to each voter.
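The quantities in this section can all be computed from a matrix of binary votes. For labels and voters in {-1, +1}, the Q-weighted margin is $M_Q(\mathbf{x}, y) = y \sum_h Q(h) h(\mathbf{x})$, the Gibbs risk is $\mathbb{E}[(1 - M_Q)/2]$, and the C-bound is $1 - (\mathbb{E}[M_Q])^2 / \mathbb{E}[M_Q^2]$ when $\mathbb{E}[M_Q] > 0$. This minimal sketch evaluates them on a sample (so it reports empirical quantities, not the PAC-Bayesian bounds themselves); the function name `vote_statistics` is hypothetical.

```python
import numpy as np

def vote_statistics(votes, y, q):
    """votes: (n, H) voter outputs in {-1, +1}; y: labels in {-1, +1};
    q: posterior weights over the H voters (non-negative, summing to 1).
    Returns (Gibbs risk, majority-vote risk, C-bound) on this sample."""
    votes = np.asarray(votes, dtype=float)
    y = np.asarray(y, dtype=float)
    q = np.asarray(q, dtype=float)
    margin = y * (votes @ q)                    # M_Q(x, y) in [-1, 1]
    gibbs_risk = np.mean((1.0 - margin) / 2.0)  # error of a voter drawn from Q
    mv_risk = np.mean(margin <= 0)              # ties counted as errors
    mu1, mu2 = margin.mean(), np.mean(margin**2)
    c_bound = 1.0 - mu1**2 / mu2 if mu1 > 0 else 1.0
    return gibbs_risk, mv_risk, c_bound
```

On any sample, the majority-vote risk returned here is at most twice the Gibbs risk and at most the C-bound, and it can be far below the Gibbs risk when the voters err on different examples.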

The behavior of majority votes in binary classification is analyzed in [56], showing that the C-bound can be much tighter than bounds based on the Gibbs risk: the risk of the majority vote can approach zero even when the Gibbs risk is near 1/2. In the reported experiments, MinCq outperforms AdaBoost and SVMs. P-MinCq [57] extends MinCq by incorporating prior knowledge as a constraint on the weight distribution, with convergence proofs for data-dependent voters in the sample compression setting. Applied to k-NN voting, P-MinCq demonstrates strong resistance to overfitting, particularly in high-dimensional settings.

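Majority votes are central to the Bayesian framework [58], where the Bayes classifier is itself a majority vote weighted by the posterior. Classifiers from kernel methods, such as SVMs, can also be seen as majority votes. Specifically, the SVM classifier computes $f(\mathbf{x}) = \operatorname{sgn}\left(\sum_{i=1}^{m} \alpha_i y_i k(\mathbf{x}_i, \mathbf{x})\right)$, where $k(\cdot, \cdot)$ is a kernel function, and the pairs $(\mathbf{x}_i, y_i)$ are training examples in the set $S$. Each $y_i k(\mathbf{x}_i, \cdot)$ acts as a voter with confidence $|k(\mathbf{x}_i, \mathbf{x})|$, and $\alpha_i$ is the weight of the voter. Similarly, the neurons in a neural network's final layer can be interpreted as performing majority voting.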

While majority voting is one of the simplest and most widely used ensemble methods, recent research has explored various enhancements to improve its effectiveness. One such extension is weighted majority voting, where classifiers are assigned different voting weights based on their predictive confidence or past performance [59]. This approach ensures that more reliable classifiers have a stronger influence on the final decision.

Another advancement is the use of probabilistic voting schemes, where classifiers output probability distributions rather than discrete labels, and the ensemble aggregates these probabilities to make a more informed decision [60]. This method can mitigate the impact of weak classifiers and is particularly useful in imbalanced learning scenarios.
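The difference between aggregating labels and aggregating probabilities shows up when a confident minority disagrees with a barely convinced majority. In this minimal sketch, each row is one classifier's class-probability vector; the function names `hard_vote` and `soft_vote` are hypothetical.

```python
import numpy as np

def hard_vote(probs):
    """Plurality vote over each classifier's most probable class."""
    probs = np.asarray(probs, dtype=float)
    labels = np.argmax(probs, axis=1)
    return int(np.bincount(labels, minlength=probs.shape[1]).argmax())

def soft_vote(probs, weights=None):
    """Average the (optionally weighted) class distributions, then take the argmax."""
    probs = np.asarray(probs, dtype=float)
    if weights is None:
        weights = np.full(len(probs), 1.0 / len(probs))
    return int(np.argmax(np.asarray(weights, dtype=float) @ probs))
```

For the three distributions (0.45, 0.55), (0.45, 0.55), and (0.95, 0.05), hard voting picks class 1, but soft voting picks class 0 because the third classifier is far more confident than the other two.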

From a theoretical standpoint, recent studies have analyzed error bounds for majority voting, showing that its effectiveness depends on classifier diversity and individual accuracies [61]. This aligns with the concept that ensemble diversity is crucial for improving generalization performance. Our analysis suggests that while majority voting is robust in many cases, its performance can be further enhanced by incorporating confidence-aware voting schemes and optimizing ensemble diversity.

5. Theoretical Analysis of Ensemble Methods

Ensemble learning methods operate by aggregating multiple models to improve predictive performance, generalization, and robustness. The theoretical foundation of these methods primarily revolves around the bias–variance decomposition, margin theory, and optimization strategies.

5.1. Bias–Variance Decomposition

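A fundamental result in statistical learning theory is the bias–variance decomposition, which states that, under squared loss, the expected error of a model can be expressed as follows:

$$\mathbb{E}\left[\left(y - \hat{f}(\mathbf{x})\right)^2\right] = \mathrm{Bias}\left[\hat{f}(\mathbf{x})\right]^2 + \mathrm{Var}\left[\hat{f}(\mathbf{x})\right] + \sigma^2,$$

where $\hat{f}(\mathbf{x})$ is the predicted output of the model given input $\mathbf{x}$, $\mathrm{Bias}[\hat{f}(\mathbf{x})] = \mathbb{E}[\hat{f}(\mathbf{x})] - f(\mathbf{x})$ represents the systematic error due to incorrect assumptions in the model (with $f$ the true regression function), $\mathrm{Var}[\hat{f}(\mathbf{x})]$ quantifies the sensitivity to fluctuations in the training data, and $\sigma^2$ denotes the irreducible noise. Bagging reduces variance by averaging multiple models trained on different bootstrap samples, whereas boosting aims to reduce bias by iteratively refining weak learners.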
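The decomposition can be checked numerically with a small Monte Carlo experiment. In this sketch, the true function sin(2πx), the noise level, the training-set size, and the polynomial learner are illustrative assumptions; each trial draws a fresh training set and a fresh noisy target at a fixed input x0, and the function name `bias_variance_at` is hypothetical.

```python
import numpy as np

def true_f(x):
    return np.sin(2 * np.pi * x)

def bias_variance_at(x0, degree, n_trials=500, n_train=30, noise_sd=0.3, seed=0):
    """Monte Carlo estimates of (bias^2, variance, noise, expected squared error) at x0."""
    rng = np.random.default_rng(seed)
    # Fresh noisy targets y at x0, independent of the training sets.
    test_targets = true_f(x0) + rng.normal(0.0, noise_sd, n_trials)
    preds = np.empty(n_trials)
    for t in range(n_trials):
        x = rng.uniform(0.0, 1.0, n_train)  # a new training set each trial
        y = true_f(x) + rng.normal(0.0, noise_sd, n_train)
        preds[t] = np.polyval(np.polyfit(x, y, degree), x0)
    bias_sq = (preds.mean() - true_f(x0)) ** 2
    variance = preds.var()
    mse = np.mean((test_targets - preds) ** 2)
    return bias_sq, variance, noise_sd**2, mse
```

With these settings, the estimated squared error should closely match bias² + variance + σ², and a linear fit should show a much larger squared bias at x0 = 0.25 than a degree-5 fit.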

5.2. Margin Theory in Boosting

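Boosting methods are theoretically justified through margin theory. Boosting algorithms such as AdaBoost have been shown to tend to increase the margins of the training examples, and larger margins correlate with improved generalization performance [62]. More formally, the generalization error of a boosted classifier can be bounded in terms of the empirical margin distribution: with probability at least $1 - \delta$ over a training sample $S$ of size $m$, for all $\theta > 0$,

$$P_{\mathcal{D}}\left[y f_T(\mathbf{x}) \le 0\right] \le P_{S}\left[y f_T(\mathbf{x}) \le \theta\right] + O\left(\sqrt{\frac{d \log^2(m/d)}{m \theta^2} + \frac{\log(1/\delta)}{m}}\right),$$

where $f_T$ is the ensemble classifier (a convex combination of base classifiers, so that $f_T(\mathbf{x}) \in [-1, 1]$), $y f_T(\mathbf{x})$ denotes the margin (i.e., the confidence of the classifier in the correct label), $\theta$ is a margin threshold, $T$ is the number of boosting iterations, and $d$ is the VC dimension of the base classifier class. Because the bound does not grow with $T$, it offers an explanation of why boosting can continue to improve generalization performance even after the training error reaches zero.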
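The empirical term $P_S[y f_T(\mathbf{x}) \le \theta]$ is easy to compute once the base classifiers' outputs and weights are known. In this minimal sketch, the boosting weights are normalized to sum to 1 so that the ensemble output is a convex combination in [-1, 1]; the function name `margin_distribution` is hypothetical.

```python
import numpy as np

def margin_distribution(base_preds, alphas, y, thetas):
    """base_preds: (n, T) outputs h_t(x_i) in {-1, +1}; alphas: (T,) non-negative
    weights; y: labels in {-1, +1}. Returns the normalized margins y_i f_T(x_i)
    and the empirical fraction of margins <= theta for each theta."""
    alphas = np.asarray(alphas, dtype=float)
    f = np.asarray(base_preds, dtype=float) @ (alphas / alphas.sum())  # f_T(x) in [-1, 1]
    margins = np.asarray(y, dtype=float) * f
    fractions = np.array([np.mean(margins <= th) for th in thetas])
    return margins, fractions
```

At $\theta = 0$ the fraction equals the training error (with ties counted as errors); larger thresholds reveal how many training points are classified correctly but with little confidence.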

5.3. Ensemble Pruning

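Recent studies have explored optimization-driven ensemble learning techniques. Ensemble pruning seeks to optimize the trade-off between accuracy and computational complexity by selecting a subset of base classifiers [63]. For $n$ training examples with binary labels $y_i \in \{-1, +1\}$ and a budget of at most $k$ classifiers, the pruning problem can be formulated as follows:

$$\mathcal{S}^{*} = \arg\max_{\mathcal{S} \subseteq \mathcal{H},\ |\mathcal{S}| \le k} \sum_{i=1}^{n} \mathbb{I}\left[\operatorname{sgn}\left(\sum_{h \in \mathcal{S}} w_h h(\mathbf{x}_i)\right) = y_i\right],$$

where $\mathcal{H}$ is the set of all base classifiers, $\mathcal{S}$ is the selected subset of classifiers, $w_h$ is the weight assigned to classifier $h$, and $\mathbb{I}[\cdot]$ is the indicator function, which equals 1 if the pruned ensemble predicts correctly and 0 otherwise.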

Approaches such as greedy optimization and convex relaxation methods have been developed to solve this problem efficiently.
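A greedy forward-selection heuristic for the pruning problem above adds, at each step, the classifier that most increases the training accuracy of the weighted vote. This is a minimal sketch under our own assumptions (binary labels in {-1, +1}, fixed weights $w_h$, ties in the vote counted as errors, and a cardinality budget k); it does not reproduce any specific algorithm from [63], and the function name `greedy_prune` is hypothetical.

```python
import numpy as np

def greedy_prune(preds, y, weights, k):
    """Forward selection of at most k classifiers.

    preds: (n, H) outputs in {-1, +1}; y: labels in {-1, +1}; weights: (H,).
    Returns the indices of the selected subset S in the order they were added.
    """
    preds = np.asarray(preds, dtype=float)
    y = np.asarray(y)
    weights = np.asarray(weights, dtype=float)
    selected = []
    for _ in range(min(k, preds.shape[1])):
        best_j, best_acc = None, -1.0
        for j in range(preds.shape[1]):
            if j in selected:
                continue
            cand = selected + [j]
            vote = np.sign(preds[:, cand] @ weights[cand])
            acc = np.mean(vote == y)  # a tied vote (sign 0) counts as an error
            if acc > best_acc:
                best_j, best_acc = j, acc
        selected.append(best_j)
    return selected
```

Each step costs one pass over the remaining classifiers, so the heuristic scales to large pools where exhaustive search over subsets is infeasible, at the price of no optimality guarantee in general.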

These theoretical results provide a rigorous foundation for understanding the mechanisms behind ensemble methods, offering insights into their bias–variance tradeoff, margin improvements, and optimization-based refinements.

6. Bagging

Bagging [3] is an ensemble method designed to reduce variance and improve model stability by training multiple base learners on different subsets of the training data. Each subset is generated through bootstrap sampling, where instances are randomly selected with replacement. The final prediction is obtained via majority voting for classification tasks or averaging for regression tasks. This approach is particularly effective for high-variance, low-bias models like decision trees.

A key advantage of bagging is its ability to mitigate overfitting by reducing model variance. Since the individual models are trained on different bootstrap samples, their fluctuations partly average out, leading to improved generalization. This effect is particularly beneficial for unstable models such as decision trees, and bagging forms the basis of random forests [12].

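Decision trees are well-suited for bagging because they can capture complex interactions in the data and, if grown deep enough, have relatively low bias. Because the bagged trees are trained independently on bootstrap samples drawn in the same way, they are identically distributed; the expectation of their average therefore equals that of any single tree, so bagging leaves the bias unchanged and improves performance only through variance reduction. This differs from boosting, where trees are trained sequentially to reduce bias [64] and are not identically distributed.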

Recent advancements in bagging include stratified bagging, which ensures a more balanced distribution of class labels across subsets, improving performance in imbalanced datasets [65]. Deep bagging ensembles have emerged, where deep neural networks are combined using bagging to improve robustness in high-dimensional tasks, particularly in medical image analysis and financial forecasting [66]. Researchers have also explored hybrid bagging approaches that integrate kernel methods to enhance feature representations before training ensemble models [67].

The performance boost from bagging comes from repeatedly training a model on various bootstrap samples and averaging the resulting predictions. It is particularly effective for unstable models, where small changes in the training data can lead to significant differences in predictions [3]. Unlike bagging, boosting assigns different weights to predictions during averaging.
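The bagging procedure can be sketched in a few lines. In this minimal sketch, the base learner is a high-degree polynomial regressor, chosen only as an example of an unstable model; the prediction is an average (for classification one would take a majority vote instead). The interface in which `fit(x, y)` returns a prediction function, and the names `bagging_fit` and `poly_fit`, are hypothetical.

```python
import numpy as np

def bagging_fit(x, y, fit, n_models=25, seed=0):
    """Train n_models copies of `fit` on bootstrap samples and average their predictions."""
    rng = np.random.default_rng(seed)
    n = len(x)
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, n, size=n)  # n indices drawn with replacement
        models.append(fit(x[idx], y[idx]))

    def predict(x_new):
        # Regression: average the models; classification would use a majority vote.
        return np.mean([m(x_new) for m in models], axis=0)

    return predict

def poly_fit(degree):
    def fit(x, y):
        coefs = np.polyfit(x, y, degree)
        return lambda x_new: np.polyval(coefs, x_new)
    return fit
```

All models receive equal weight in the average, in contrast to boosting, where each model's contribution is weighted.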

While both bagging and boosting are non-Bayesian, they share similarities with Markov chain Monte Carlo (MCMC) methods in Bayesian models. In a Bayesian setting, parameters are perturbed according to a posterior distribution, whereas bagging perturbs the training data independently and re-estimates the model for each perturbation; in both cases, the final prediction is an average over the resulting models. A limitation of bagging is that, because the models are trained independently without interaction, no model can correct the errors of the others.

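Mathematically, bagging, like Bayesian model averaging and boosting, can be expressed as follows:

$$\hat{f}(\mathbf{x}) = \sum_{m=1}^{M} w_m \, \mathbb{E}\left(y \mid \mathbf{x}, \hat{\boldsymbol{\theta}}_m\right),$$

where $\{\hat{\boldsymbol{\theta}}_m\}_{m=1}^{M}$ represents a collection of model parameters. In the case of a Bayesian model, $w_m = 1/M$, and the average serves to estimate the posterior mean by sampling $\hat{\boldsymbol{\theta}}_m$ from the posterior distribution. For bagging, $w_m = 1/M$ as well, with $\hat{\boldsymbol{\theta}}_m$ corresponding to parameters refitted to bootstrap resamples of the data. In boosting, $w_m = 1$, while $\hat{\boldsymbol{\theta}}_m$ is chosen sequentially to iteratively refine the model's fit.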

Online bagging [68] applies the bagging procedure sequentially, approximating the outcomes of traditional batch bagging. A variation of this method replaces the standard bootstrap with the Bayesian bootstrap; the resulting online Bayesian version of bagging [69] is mathematically equivalent to its batch Bayesian counterpart. This Bayesian approach therefore yields a lossless online bagging technique, whose online results match those of batch processing, and it can improve accuracy and reduce prediction variance, particularly for small datasets.
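A common way to implement online bagging is to present each incoming example to each base model k times, with k drawn from a Poisson(1) distribution, because the number of copies of an example in a bootstrap sample approaches Poisson(1) as the sample size grows. We assume this scheme here rather than claiming it is exactly the procedure of [68]. The Bayesian variant below uses continuous Exp(1) weights instead, mirroring the Bayesian bootstrap, whose Dirichlet(1, ..., 1) weights are normalized Exp(1) draws. The base learner `OnlineMeanModel` (a weighted running mean of the targets) and the function `online_bagging` are hypothetical placeholders for any learner that accepts weighted incremental updates.

```python
import numpy as np

class OnlineMeanModel:
    """Hypothetical incremental base learner: weighted running mean of y."""

    def __init__(self):
        self.total_w = 0.0
        self.mean = 0.0

    def update(self, y, w):
        if w > 0:
            self.total_w += w
            self.mean += (w / self.total_w) * (y - self.mean)

    def predict(self):
        return self.mean

def online_bagging(stream, n_models=10, bayesian=False, seed=0):
    rng = np.random.default_rng(seed)
    models = [OnlineMeanModel() for _ in range(n_models)]
    for y in stream:  # each example is seen once, in arrival order
        for m in models:
            # Poisson(1) multiplicity mimics bootstrap resampling; Exp(1) weights
            # mimic the Bayesian bootstrap.
            w = rng.exponential(1.0) if bayesian else rng.poisson(1.0)
            m.update(y, w)
    return float(np.mean([m.predict() for m in models]))
```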

7. Boosting

Boosting, or ARCing (adaptive resampling and combining), was introduced in [4] to enhance weak learning algorithms, which generate classifiers only slightly better than random guessing. Schapire demonstrated that strong and weak PAC learnability are equivalent [4], forming the theoretical foundation for boosting. Boosting algorithms, a type of voting method, combine weak classifiers linearly.

Boosting [4] is an iterative ensemble method that trains weak learners sequentially, with each learner focusing more on misclassified instances from the previous iteration. Unlike bagging, which builds independent models, boosting assigns adaptive weights to training samples, ensuring that difficult instances receive greater attention in subsequent training rounds.

A key advantage of boosting is its ability to minimize bias by converting weak learners into strong ones. Each iteration reweights the data to prioritize misclassified examples, and the final predictions are aggregated using a weighted majority vote. The most well-known boosting variant, AdaBoost [8], assigns weights to classifiers based on their individual performance.

Recent research has advanced boosting in multiple directions. Gradient boosting machines (GBMs) [70] generalize AdaBoost by using gradient descent optimization over a loss function, yielding state-of-the-art performance in structured data tasks. Extreme gradient boosting (XGBoost) [71] further enhances computational efficiency through parallelization and regularization techniques, making it widely used in large-scale applications such as fraud detection and recommendation systems.

Deep boosting methods, such as boosted convolutional neural networks [17], integrate boosting with deep learning architectures to improve representation learning. Additionally, self-adaptive boosting algorithms [72] dynamically adjust hyperparameters during training, enhancing robustness against noisy data. These advancements highlight the continuous evolution of boosting beyond traditional ensemble learning paradigms.

Boosting operates by iteratively applying a weak learning algorithm to different distributions of training examples and combining their outputs. From a gradient descent perspective, boosting is a stagewise procedure for fitting additive models [64,70]. In each iteration, gradient boosting performs two steps: first, it fits a weak learner to the negative gradient of the loss (the steepest descent direction) evaluated at the training points; then, it applies a line search to determine the step size.
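The two-step iteration can be sketched for squared loss with regression stumps on one-dimensional inputs, both illustrative assumptions. For the loss $\tfrac{1}{2}(y - F)^2$, the negative gradient is simply the residual $y - F$. When a least-squares stump is fitted to these residuals, the optimal line-search multiplier is exactly 1, so only a shrinkage factor (learning rate) is applied. The names `fit_stump` and `gradient_boost` are hypothetical.

```python
import numpy as np

def fit_stump(x, r):
    """Least-squares regression stump on 1-D inputs (best single split)."""
    order = np.argsort(x)
    xs, rs = x[order], r[order]
    best_sse, thr, lv, rv = np.inf, None, rs.mean(), rs.mean()
    for i in range(1, len(xs)):
        if xs[i] == xs[i - 1]:
            continue
        left, right = rs[:i], rs[i:]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if sse < best_sse:
            best_sse, thr = sse, (xs[i - 1] + xs[i]) / 2
            lv, rv = left.mean(), right.mean()
    if thr is None:  # all inputs equal: fall back to a constant
        return lambda z: np.full(len(z), lv)
    return lambda z: np.where(z <= thr, lv, rv)

def gradient_boost(x, y, n_rounds=100, learning_rate=0.1):
    f0 = y.mean()
    pred = np.full(len(y), f0)
    stages = []
    for _ in range(n_rounds):
        residuals = y - pred         # negative gradient of 0.5 * (y - F)^2
        h = fit_stump(x, residuals)  # step 1: weak learner along the descent direction
        # Step 2: the line search gives a multiplier of 1 for this loss and learner,
        # so the update is only shrunk by the learning rate.
        pred = pred + learning_rate * h(x)
        stages.append(h)

    def predict(z):
        return f0 + learning_rate * sum(h(z) for h in stages)

    return predict
```

For other losses the residuals are replaced by the loss's negative gradient, and the line search no longer reduces to a constant.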

The original boosting method, called boosting by filtering [4], relies on PAC learning theory and requires large amounts of training data. AdaBoost [8,73] was developed to address this limitation. Boosting can be done using subsampling, where a fixed number of training examples is drawn and resampled based on a specified probability distribution, or using reweighting, where each example is assigned a weight and all examples are used to train the weak learner, which must handle weighted examples.

In binary classification, the output of a strong classifier is produced by aggregating the weak hypotheses $h_t$ using weighted contributions:

$$H(\mathbf{x}) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(\mathbf{x})\right),$$

where T denotes the total number of iterations, and $H(\mathbf{x})$ represents the strong classifier. To reduce the learning error, the goal is to optimize $h_t$ at each boosting iteration, which involves determining the appropriate confidence level $\alpha_t$.

Upper bounds on the risk for boosted classifiers are derived by noting that boosting tends to maximize the margin for training samples [62]. Under certain assumptions about the underlying distribution, boosting can converge to the Bayes risk as the number of iterations increases indefinitely [74]. When used with a weak learner defined by a kernel, boosting becomes equivalent to estimation using a special boosting kernel [75].

Boosting differs from bagging in that it adds models sequentially, focusing on misclassified instances, and it uses non-i.i.d. samples for training. Unlike bagging, where the base learners remain fixed, in boosting, the committee of weak learners evolves over time, with each learner casting a weighted vote. In many scenarios, boosting outperforms bagging and is often preferred as the method of choice. An example of a boosting variant is Learn++.NC [76]. However, boosting tends to perform poorly compared to bagging in noisy environments [77], as it focuses not only on difficult regions but also on outliers and noise [9].

Boosting tends to perform well and resist overfitting [73,78], even when the ensemble consists of many deep trees [12,19]. As weak classifiers are added, boosting can continue to reduce the generalization error even after the training error reaches zero. However, the risk of overfitting increases when the number of classifiers becomes large [64], and some research has suggested that boosting can overfit, particularly on noisy data [79,80]. Although boosting can eventually overfit when run for an excessively large number of steps, this process is slow.

When decision trees serve as base learners, boosting can automatically capture complex non-linear relationships, discontinuities, and higher-order interactions, making it robust against outliers [81]. Tree-based models, including popular algorithms like XGBoost [71], LightGBM [82], CatBoost [83], and random forests [20], often outperform deep neural networks on tabular data [84,85].

AdaBoost

The adaptive boosting (AdaBoost) algorithm [8,73] is a widely used method in ensemble learning. AdaBoost is theoretically capable of reducing the error of any weak learner. From a statistical perspective, AdaBoost can be seen as stagewise optimization of an exponential loss function [64]. From a computer science standpoint, generalization error bounds are derived from VC theory and the concept of margins in PAC learning [86]. AdaBoost has been shown to outperform other classifiers, such as CART, neural networks, and logistic regression, and it is significantly superior to ensemble methods created via bootstrap sampling [3].

The AdaBoost algorithm trains each classifier in sequence, using the entire dataset, and adjusts the distribution of the training examples according to how difficult they are to classify. At each iteration, the weights of misclassified samples are increased and those of correctly classified samples are reduced, so that subsequent classifiers focus on the harder examples. Classifiers are also weighted according to their performance, and these weights are used at test time. When a new instance is introduced, each classifier casts a weighted vote, and the final prediction is given by the weighted majority vote.

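Consider a training set $S = \{(\mathbf{x}_n, y_n)\}_{n=1}^{N}$, where $y_n \in \{-1, +1\}$ are labels, drawn as i.i.d. samples of the random pair $(\mathbf{x}, y)$ following an unknown distribution P. AdaBoost aims to construct a strong classifier $f(\mathbf{x}) = \sum_{t=1}^{T} \alpha_t h_t(\mathbf{x})$ that minimizes a convex loss function. This loss is the average, over the training set S, of the exponential of the negative margin $y_n f(\mathbf{x}_n)$:

$$L(f) = \frac{1}{N} \sum_{n=1}^{N} \exp\left(-y_n f(\mathbf{x}_n)\right).$$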

The AdaBoost algorithm can continue adding weak learners iteratively until a sufficiently low training error is reached.

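The AdaBoost algorithm is presented in Algorithm 1, where $D_t$ denotes the data distribution (the weights over training examples) at iteration t. The goal is to produce a boosted classifier $H(\mathbf{x}) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(\mathbf{x})\right)$.

In the update rule for $D_{t+1}$, the weight of each example $(\mathbf{x}_n, y_n)$ is multiplied by $\exp(-\alpha_t y_n h_t(\mathbf{x}_n))$ and then renormalized. Since $\alpha_t > 0$, this factor is less than 1 when $h_t(\mathbf{x}_n) = y_n$ and greater than 1 when $h_t(\mathbf{x}_n) \neq y_n$. Consequently, after the best classifier $h_t$ for the distribution $D_t$ has been selected, the correctly classified examples receive lower weights and the incorrectly classified ones receive higher weights. In the subsequent round, the updated distribution $D_{t+1}$ emphasizes the examples that the previous classifier failed to classify, prompting the selection of a new classifier that focuses more on these difficult instances.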
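A compact sketch of discrete AdaBoost following the description above is given below. It assumes decision stumps as weak classifiers, labels in {-1, +1}, and the common classifier weight $\alpha_t = \frac{1}{2}\ln\frac{1-\epsilon_t}{\epsilon_t}$, where $\epsilon_t$ is the weighted error. The function names are hypothetical, and the code is not a transcription of Algorithm 1.

```python
import numpy as np


def best_stump(X, y, D):
    """Decision stump with the lowest weighted error under distribution D."""
    best = (np.inf, 0, 0.0, 1.0)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for polarity in (1.0, -1.0):
                pred = np.where(X[:, j] <= thr, polarity, -polarity)
                err = D[pred != y].sum()
                if err < best[0]:
                    best = (err, j, thr, polarity)
    return best


def stump_predict(X, j, thr, polarity):
    return np.where(X[:, j] <= thr, polarity, -polarity)


def adaboost(X, y, T=20):
    """Discrete AdaBoost for labels y in {-1, +1}; returns [(alpha_t, stump_t), ...]."""
    D = np.full(len(y), 1.0 / len(y))        # D_1: uniform over training examples
    ensemble = []
    for _ in range(T):
        err, j, thr, pol = best_stump(X, y, D)
        if err >= 0.5:                        # no better than random guessing: stop
            break
        err = max(err, 1e-12)                 # guard against log(1/0) for a perfect stump
        alpha = 0.5 * np.log((1.0 - err) / err)
        h = stump_predict(X, j, thr, pol)
        D = D * np.exp(-alpha * y * h)        # factor < 1 if correct, > 1 if misclassified
        D = D / D.sum()                       # renormalize to obtain D_{t+1}
        ensemble.append((alpha, (j, thr, pol)))
    return ensemble


def adaboost_predict(ensemble, X):
    F = np.zeros(len(X))
    for alpha, (j, thr, pol) in ensemble:
        F += alpha * stump_predict(X, j, thr, pol)   # weighted vote of weak classifiers
    return np.sign(F)


rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-1.0, 1.0, (100, 2)), rng.normal(1.0, 1.0, (100, 2))])
y = np.r_[-np.ones(100), np.ones(100)]
ensemble = adaboost(X, y, T=20)
print("training accuracy:", np.mean(adaboost_predict(ensemble, X) == y))
```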

AdaBoost is an iterative coordinate descent algorithm that minimizes the exponential margin loss over the training data [62,64,87]. It converges asymptotically to the optimal exponential loss [88] and performs well even when the weak classifiers are only slightly better than random guessing [8].

AdaBoost is consistent if stopped after $N^{1-\epsilon}$ iterations, for sample size N and $\epsilon \in (0, 1)$, so that its error probability approaches the Bayes risk [89]. Its rate of convergence to the minimum exponential loss is analyzed in [90]: $C/\epsilon$ iterations, with C a dataset-dependent constant, suffice to reach an exponential loss within $\epsilon$ of the optimum, and at least $\Omega(1/\epsilon)$ rounds are necessary for such near-optimal performance.

However, AdaBoost can overfit noisy datasets because the exponential loss heavily penalizes misclassified samples with large negative margins. This makes it sensitive to outliers.

AdaBoost finds a linear separator (in the space of weak hypotheses) with a large margin [62], but it does not always converge to the maximal margin solution [91]. Weak learnability implies that the data are linearly separable by a combination of weak hypotheses [73]. With shrinkage, AdaBoost asymptotically converges to an $\ell_1$-margin-maximizing solution [91]. For linearly separable data, AdaBoost* [91], like the margin-maximizing algorithms developed in [92], provably converges to the maximal margin solution within a bounded number of iterations.

Algorithm 1: The AdaBoost algorithm for boosting weak classifiers.

FloatBoost [93] reduces error by backtracking after each AdaBoost iteration, targeting error minimization rather than exponential margin loss. It requires fewer classifiers and achieves lower error rates, at the cost of longer training times. MultBoost [94] allows AdaBoost to be parallelized in both space and time. LogitBoost [64] optimizes the logistic regression loss for classification tasks [95], and LogLoss Boost [88] minimizes the logistic loss for inseparable data. SmoothBoost [96] bounds the weight any single sample can receive in order to avoid overfitting, with noise tolerance comparable to that of the online perceptron algorithm.
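The exponential loss makes AdaBoost sensitive to outliers, while logistic-loss variants such as LogitBoost and LogLoss Boost are more forgiving. This difference shows up when both losses are compared as functions of the margin $m = y f(\mathbf{x})$. This is an illustrative sketch only. Scaling conventions, such as the factor of 2 inside the logistic loss in some formulations, are ignored.

```python
import numpy as np

margins = np.array([-3.0, -1.0, 0.0, 1.0, 3.0])    # m = y f(x); negative = misclassified

exp_loss = np.exp(-margins)                         # exponential loss (AdaBoost)
logistic_loss = np.log1p(np.exp(-margins))          # logistic loss (natural log)

for m, e, l in zip(margins, exp_loss, logistic_loss):
    print(f"margin {m:+.0f}: exponential {e:7.3f}   logistic {l:6.3f}")
```

For a badly misclassified point ($m = -3$), the exponential loss is several times larger than the logistic loss. This is why a few mislabeled points can dominate the reweighting in AdaBoost.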

AdaBoost.M1 and AdaBoost.M2 [8] extend AdaBoost to multiclass classification. AdaBoost.M1 stops if the weak classifier error exceeds 50% [62], while AdaBoost.M2 uses pseudoloss to continue if the classifier is slightly better than random guessing. AdaBoost.M2 also introduces a mislabel distribution to focus on difficult or misclassified examples.

For multilabel classification, AdaBoost.MH [87] and AdaBoost.LC [97] extend AdaBoost, with AdaBoost.MH optimizing Hamming loss and AdaBoost.LC minimizing covering loss [97]. SAMME [98] is another multiclass extension, optimizing exponential loss for weighted classification. SM-Boost [99] improves robustness to noise using stochastic decision rules and gradient descent in the function space of base learners.
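The stopping rule of AdaBoost.M1 and the relaxation offered by SAMME can be compared through their classifier weights. The sketch assumes the AdaBoost.M1 weight $\ln\frac{1-\epsilon}{\epsilon}$ and the SAMME weight $\ln\frac{1-\epsilon}{\epsilon} + \ln(K-1)$, where $\epsilon$ is the weighted error and K is the number of classes. The SAMME weight stays positive as long as $\epsilon < 1 - 1/K$, that is, whenever the weak classifier beats random guessing, instead of requiring $\epsilon < 1/2$.

```python
import numpy as np


def m1_weight(err):
    """AdaBoost.M1-style classifier weight; negative (unusable) once err > 1/2."""
    return np.log((1 - err) / err)


def samme_weight(err, K):
    """SAMME classifier weight; positive whenever err < 1 - 1/K."""
    return np.log((1 - err) / err) + np.log(K - 1)


K = 4
for err in (0.3, 0.5, 0.6, 0.8):
    print(f"err={err:.1f}  M1={m1_weight(err):+.3f}  SAMME={samme_weight(err, K):+.3f}")
```

With K = 4 classes, a weak classifier with 60% error would halt AdaBoost.M1 but still receives a positive weight in SAMME. For K = 2, the two weights coincide.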

AdaBoostReg [79] and BrownBoost [100] prevent overfitting by limiting the complexity of the function class. BrownBoost dampens the influence of hard-to-learn samples by using a margin-based loss function derived from the error function, while eta-boost [101] strengthens robustness to mislabeled samples and outliers. AdaBoost’s selection of weak learners based on training error may struggle with small datasets. The error-degree-weighted training error [102] addresses this by taking into account the distances of samples from the separating hyperplane.

AdaBoost.R [8] adapts AdaBoost.M2 for regression tasks, while AdaBoost.RT [103] filters out examples with high estimation errors before applying AdaBoost. Cascaded detectors [104] use AdaBoost to balance detection rate and false positives in a sequence of stages with progressively stronger classifiers. FCBoost [105] extends AdaBoost by minimizing a Lagrangian risk that trades off classification accuracy and speed, optimizing the cascade configuration automatically. It is compatible with cost-sensitive boosting [106], which helps maintain high detection rates.

$L_2$-Boosting [78] minimizes the loss in a stepwise manner, ensuring consistency [89] and resistance to overfitting [78], which makes it popular for regression [107]. However, its convergence is slow because of the way its step size is determined. For sparse target functions, its numerical convergence rate decays only slowly in the number of iterations k [108]. $\varepsilon$-Boosting [109], RSBoosting [110], and RTBoosting [111] refine step-size selection and thereby improve convergence over traditional gradient boosting. RBoosting [112] further accelerates convergence, achieving a near-optimal convergence rate and better generalization than standard boosting.
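A minimal sketch of $L_2$-Boosting with shrinkage is shown below. It assumes componentwise linear least squares as the weak learner, so each step regresses the current residuals on the single best predictor. The step size `nu`, the number of steps, and the function name `l2_boost` are illustrative choices and are not taken from the cited works.

```python
import numpy as np


def l2_boost(X, y, n_steps=200, nu=0.1):
    """Componentwise linear L2-Boosting: repeatedly fit residuals with one predictor."""
    n, p = X.shape
    offset = y.mean()
    coef = np.zeros(p)
    resid = y - offset
    col_sq = (X ** 2).sum(axis=0)
    for _ in range(n_steps):
        betas = X.T @ resid / col_sq                        # per-feature LS coefficients
        sse = ((resid[:, None] - X * betas) ** 2).sum(axis=0)
        j = int(np.argmin(sse))                             # best single predictor
        coef[j] += nu * betas[j]                            # shrunken update
        resid = resid - nu * betas[j] * X[:, j]
    return offset, coef


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 10))
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + 0.1 * rng.normal(size=200)
offset, coef = l2_boost(X, y)
print(np.round(coef, 2))
```

The small step `nu` is what makes the procedure slow but stable. Each step removes only a fraction of the signal explained by the chosen predictor, which is the slow convergence that step-size refinements such as those above aim to improve.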

8. Random Forests and Decision Forests

Decision trees are a fundamental approach for classification and regression tasks, valued for their simplicity and interpretability. They perform well and offer clear decision rules in the form of if–then statements along paths from the root to the leaf. A notable implementation is C4.5 [113]. However, defining a global objective for optimally learning decision trees is challenging. Trees can be constructed using criteria like Gini impurity [114] and information gain [113].
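Both impurity criteria can be computed in a few lines. The sketch below, with hypothetical helper names, scores one candidate binary split in two ways: by the decrease in Gini impurity (as in CART) and by the entropy-based information gain (used by C4.5, which additionally normalizes it into a gain ratio).

```python
import numpy as np


def gini(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def entropy(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return -np.sum(p * np.log2(p))


def split_scores(labels, left_mask):
    """Impurity decrease of a binary split under Gini and under entropy (information gain)."""
    left, right = labels[left_mask], labels[~left_mask]
    wl, wr = len(left) / len(labels), len(right) / len(labels)
    gini_decrease = gini(labels) - (wl * gini(left) + wr * gini(right))
    info_gain = entropy(labels) - (wl * entropy(left) + wr * entropy(right))
    return gini_decrease, info_gain


y = np.array([0, 0, 0, 1, 1, 1, 1, 1])
mask = np.array([True, True, True, True, False, False, False, False])
g, ig = split_scores(y, mask)
print(f"Gini decrease {g:.4f}, information gain {ig:.4f} bits")
```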

The C4.5 algorithm takes a set of cases represented by an n-dimensional feature vector, where each case is preclassified into one of the target classes. It generates classifiers as decision trees, where each node represents a feature, branches connect feature values, and leaves denote the class. To classify an instance, one traces the path from the root node to the leaf. C4.5 uses a divide-and-conquer strategy to build an initial tree based on a given set of instances.

The CART method [114] uses binary recursive partitioning to classify a dataset. CART operates greedily, choosing at each node the split that most reduces impurity. It then prunes the fully grown tree to produce a nested sequence of progressively simpler subtrees. The final tree size is chosen by evaluating the predictive performance of these pruned trees, for example by cross-validation.
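The greedy split search at the heart of CART can be sketched as an exhaustive scan over features and candidate thresholds. The candidates are the midpoints between consecutive distinct values, and the search keeps the split with the lowest size-weighted Gini impurity of the two children. Recursion and cost-complexity pruning are omitted, and `best_split` is a hypothetical helper.

```python
import numpy as np


def gini(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def best_split(X, y):
    """Return (weighted child Gini, feature index, threshold) of the best binary split."""
    n = len(y)
    best = (np.inf, None, None)
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # candidate thresholds: midpoints between consecutive distinct values
        for thr in (values[:-1] + values[1:]) / 2:
            left = X[:, j] <= thr
            score = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / n
            if score < best[0]:
                best = (score, j, thr)
    return best


X = np.array([[2.0, 1.0], [3.0, 5.0], [10.0, 2.0], [11.0, 6.0]])
y = np.array([0, 0, 1, 1])
score, feature, threshold = best_split(X, y)
print(f"split on feature {feature} at {threshold} (child Gini {score:.3f})")
```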

A forest is a graph whose connected components are all trees. Random forest, an ensemble technique, builds on the concept of bagging by generating multiple decision trees. These trees are trained using randomly selected subsets of features [20] or instances [12].

Decision forests, including random forests and gradient-boosted decision trees (GBDTs) [70], are typically composed of ensembles of axis-aligned decision trees that partition the feature space along individual dimensions.

8.1. Random Forests

A random forest [12] is an ensemble of trees, each grown with a recursive partitioning rule on a bootstrap sample of the dataset. Compared with bagging, the method aims to achieve greater variance reduction by decreasing the correlation between trees without substantially increasing bias. This is done by randomly selecting the candidate input variables during tree building. As in bagging, the bias of a random forest equals the bias of an individual tree, so any improvement in prediction over a single tree comes purely from variance reduction. Predictions are made by majority vote among all trees. Beyond a certain number of trees, adding more does not enhance performance [12].

Numerous experiments show that increasing the number of trees stabilizes predictions, while other tuning parameters affect model accuracy [115,116,117]. The accuracy of tree ensembles is bounded by a function of the trees’ strength and diversity [12]. Random forests increase tree diversity in two ways: by drawing a random bootstrap sample for each tree, and by restricting the choice of the splitting dimension j at each node to a random subset of the p total dimensions. As the number of trees increases, the generalization error converges, and an upper bound on it has been established [12]. Each bootstrap sample is used to grow an unpruned tree, and predictions are made by majority vote for classification or by averaging for regression.
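The procedure just described can be sketched from scratch. The sketch assumes scikit-learn's `DecisionTreeClassifier` as the base tree; its `max_features="sqrt"` option draws a random feature subset at every split. Each tree also records which examples were left out of its bootstrap sample, which gives the out-of-bag (OOB) error estimate. The helper names `fit_forest`, `forest_predict`, and `oob_error` are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=100, seed=0):
    rng = np.random.default_rng(seed)
    n = len(y)
    trees, oob_masks = [], []
    for _ in range(n_trees):
        idx = rng.integers(0, n, size=n)                 # bootstrap sample
        oob = np.ones(n, dtype=bool)
        oob[idx] = False                                 # examples never drawn are out-of-bag
        tree = DecisionTreeClassifier(
            max_features="sqrt",                         # random feature subset at each split
            random_state=int(rng.integers(1 << 31)),
        )                                                # grown fully, without pruning
        tree.fit(X[idx], y[idx])
        trees.append(tree)
        oob_masks.append(oob)
    return trees, oob_masks


def forest_predict(trees, X, n_classes):
    votes = np.zeros((len(X), n_classes))
    for tree in trees:
        votes[np.arange(len(X)), tree.predict(X)] += 1   # one vote per tree
    return votes.argmax(axis=1)


def oob_error(trees, oob_masks, X, y, n_classes):
    """Each example is voted on only by the trees that did not see it during training."""
    votes = np.zeros((len(X), n_classes))
    for tree, oob in zip(trees, oob_masks):
        if oob.any():
            votes[np.where(oob)[0], tree.predict(X[oob])] += 1
    has_vote = votes.sum(axis=1) > 0
    return np.mean(votes[has_vote].argmax(axis=1) != y[has_vote])


X, y = make_classification(n_samples=500, n_features=20, n_informative=5, random_state=0)
trees, oob_masks = fit_forest(X, y)
err = oob_error(trees, oob_masks, X, y, n_classes=2)
print(f"OOB error estimate: {err:.3f}")
```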

Large-scale empirical comparisons show that random forests rank among the most accurate classifiers across diverse datasets [118]. Moreover, random forests are highly resilient to both outliers and noise in datasets, making them a reliable choice for various applications [12,19].

Random forests operate in batch mode, and the procedure is detailed in Algorithm 2.

The convergence properties and rates of random forests have been extensively studied [119,120,121], along with methods for estimating prediction standard errors [122,123]. Consistency is established under the assumption that the true regression function is additive [124], though theoretical studies on their asymptotic behavior often rely on strong assumptions and yield suboptimal rates [124,125,126]. Purely random forests, based on data-independent hierarchical partitions, offer a simpler framework for theoretical analysis [119]. Mondrian forests achieve minimax-optimal rates by tuning their complexity parameter and utilizing recursive axis-aligned partitioning, efficient Markov construction, and self-consistency, making them suitable for online learning [127,128]. Minimax optimality has also been extended to random forests with general split directions [129].

Algorithm 2: Outline of the random forest algorithm.

Random forests are competitive with SVMs in classification tasks, offering an unbiased internal estimate of generalization error while handling missing data effectively. They also estimate the relative importance of input features and can be formulated as kernel methods, enhancing interpretability and enabling analytical evaluation [130]. As base learners for regression tasks, they implicitly construct kernel-like weighting functions for training data. An extension proposed in [131] generalizes random forests to multivariate responses by leveraging their joint conditional distribution, independent of the target data model.

A unified framework for estimating prediction error in random forests introduces an estimator for the conditional prediction error distribution function, with pointwise uniform consistency demonstrated for an enhanced version [132]. Misclassification error in random forests is less sensitive to variance than mean squared error (MSE), and overfitting tends to occur slowly or not at all, similar to boosting. The method functions as a robust adaptive weighted version of the k-NN classifier, leveraging forest-induced weights rather than averaging individual tree estimates [133]. Variance estimates for bagged predictors and random forests can be computed solely from the bootstrap samples already used for prediction, at moderate computational cost. For bagging, direct applications of the jackknife and infinitesimal jackknife variance estimators require $B = \Theta(N^{1.5})$ bootstrap replicates to converge, where N is the training set size, while bias-corrected versions require only $B = \Theta(N)$ [123].
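The weighted-neighbor view can be checked numerically. Define $w_i(\mathbf{x}) = \frac{1}{B}\sum_{b=1}^{B} \frac{\mathbb{1}[\mathbf{x}_i \in L_b(\mathbf{x})]}{|L_b(\mathbf{x})|}$, where $L_b(\mathbf{x})$ is the set of training points in the leaf of tree b that contains $\mathbf{x}$. The forest's regression prediction is then $\sum_i w_i(\mathbf{x}) y_i$. The sketch assumes scikit-learn's `RandomForestRegressor` and its `apply` method, which returns the leaf index reached in every tree. It sets `bootstrap=False` so that each leaf value is the plain mean of the training responses in that leaf, which makes the identity exact. With bootstrapping, in-bag counts would replace the indicators. The simulated data are an assumption.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
X_train = rng.uniform(-2, 2, size=(300, 3))
y_train = np.sin(X_train[:, 0]) + 0.1 * rng.normal(size=300)
X_test = rng.uniform(-2, 2, size=(5, 3))

forest = RandomForestRegressor(n_estimators=50, max_features=0.5, min_samples_leaf=5,
                               bootstrap=False, random_state=0).fit(X_train, y_train)

train_leaves = forest.apply(X_train)   # (n_train, n_trees): leaf index in each tree
test_leaves = forest.apply(X_test)     # (n_test, n_trees)


def forest_weights(test_leaf_row):
    """w_i(x): average over trees of 1{x_i in x's leaf} / (number of training points there)."""
    same_leaf = train_leaves == test_leaf_row      # (n_train, n_trees) booleans
    leaf_sizes = same_leaf.sum(axis=0)             # size of x's leaf in each tree
    return (same_leaf / leaf_sizes).mean(axis=1)   # (n_train,), sums to 1


W = np.array([forest_weights(row) for row in test_leaves])
weighted_pred = W @ y_train
print(np.max(np.abs(weighted_pred - forest.predict(X_test))))
```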

The computational efficiency of random forests heavily depends on the complexity of the splitting step. To improve runtime, approximations of the splitting criterion are used, including density estimation that aggregates CART criteria for various response transformations [134] and the aggregation of standard univariate CART splitting criteria [135,136].

Random forests inherently exhibit bias, with theoretical bounds established under assumptions about the tree-growing process and data distribution [125]. Building on this, Ghosal and Hooker [137] explore a bias correction method first suggested by [138], conceptually akin to gradient boosting [70], and further analyzed in [139]. Another approach to mitigating bias [140] adapts traditional bootstrap bias estimation for greater computational efficiency.

Extensions of random forests to quantile regression include quantile regression forests [141], which enable consistent conditional prediction intervals. Generalized random forests [142] further support quantile regression, emphasizing heterogeneity in the target metric. Prediction intervals can also be computed using empirical quantiles of out-of-bag prediction errors [143]. More generally, conformal inference provides a flexible framework for estimating prediction intervals, applicable to nearly any regression estimator, including random forests [144,145].
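As one concrete instance of conformal inference, the sketch below builds split-conformal prediction intervals around a random forest regressor. Absolute residuals on a held-out calibration set serve as nonconformity scores, and their $\lceil (n+1)(1-\alpha) \rceil$-th smallest value sets the interval half-width. The simulated data, the 90% target level, and the choice of split conformal (rather than the specific procedures in [144,145]) are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(2000, 1))
y = np.sin(X[:, 0]) + 0.3 * rng.normal(size=2000)

# Disjoint proper-training, calibration, and test sets
X_tr, y_tr = X[:1000], y[:1000]
X_cal, y_cal = X[1000:1500], y[1000:1500]
X_te, y_te = X[1500:], y[1500:]

forest = RandomForestRegressor(n_estimators=200, min_samples_leaf=5, random_state=0)
forest.fit(X_tr, y_tr)

alpha = 0.1                                        # target miscoverage (90% intervals)
scores = np.abs(y_cal - forest.predict(X_cal))     # nonconformity scores
n = len(scores)
k = int(np.ceil((n + 1) * (1 - alpha)))            # finite-sample corrected rank (k <= n)
q = np.sort(scores)[k - 1]

pred = forest.predict(X_te)
lower, upper = pred - q, pred + q
coverage = np.mean((y_te >= lower) & (y_te <= upper))
print(f"interval half-width {q:.3f}, empirical coverage {coverage:.3f}")
```

These intervals have constant width. Quantile regression forests, by contrast, can adapt the interval width to the local noise level.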

Conventional random forests assign equal weights to all trees. Alternative weighting strategies include tree weighting based on out-of-bag accuracy [146] or tree-level prediction errors [147]. For regression, optimal weighting algorithms [148] asymptotically match the squared loss and risk of the best-weighted random forests under certain regularity conditions.
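Tree weighting changes only the aggregation step. The hypothetical helper below combines per-tree class predictions with arbitrary non-negative weights. Passing each tree's out-of-bag accuracy as its weight gives one simple form of accuracy-based weighting, while uniform weights recover the conventional majority vote. The predictions and accuracies in the example are made up.

```python
import numpy as np


def weighted_vote(tree_preds, tree_weights, n_classes):
    """Aggregate class labels from T trees (array of shape (T, n)) with per-tree weights."""
    n_trees, n = tree_preds.shape
    scores = np.zeros((n, n_classes))
    for t in range(n_trees):
        scores[np.arange(n), tree_preds[t]] += tree_weights[t]
    return scores.argmax(axis=1)


# Hypothetical predictions of 5 trees for 2 test points, and hypothetical OOB accuracies
preds = np.array([[0, 1],
                  [0, 1],
                  [0, 1],
                  [1, 1],
                  [1, 0]])
oob_acc = np.array([0.55, 0.55, 0.55, 0.95, 0.95])

print(weighted_vote(preds, np.ones(5), 2))   # plain majority vote: [0 1]
print(weighted_vote(preds, oob_acc, 2))      # OOB-accuracy-weighted vote: [1 1]
```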

8.2. Recent Advances in Random Forests

WildWood [149] enhances random forests by aggregating predictions from all subtrees using exponential weights via the context tree weighting algorithm. This method, combined with histogram-based feature binning similar to XGBoost [71], LightGBM [82], and CatBoost [83], significantly improves computational efficiency, making it competitive with extreme gradient boosting algorithms. Inspired by subtree aggregation with exponential weights [150], WildWood leverages out-of-bag samples for weight computation, naturally mitigating overfitting without relying on heuristic pruning methods [113]. For categorical features, it applies exponential weight aggregation instead of CatBoost’s target encoding to prevent overfitting. Additionally, by adopting bootstrap-based sample splitting from honest forests [125], WildWood ensures robust predictions. With few hyperparameters to tune, it integrates histogram-based acceleration and adapts subtree aggregation for batch learning, achieving strong performance across various tasks.

Fréchet trees and Fréchet random forests [151] extend random forests to heterogeneous data types, including curves, images, and shapes, where input and output variables lie in general metric spaces. These methods introduce a novel node-splitting technique, generalize prediction procedures, and adapt out-of-bag error estimation and variable importance scoring. A consistency theorem for the Fréchet regressogram predictor using data-driven partitions is also established and applied to purely random trees.

Markowitz random forest [152] introduces a tree-weighting method that considers both performance and diversity through a tree covariance matrix for risk regularization. Inspired by financial optimization, this approach applies to binary and multi-class classification as well as regression tasks. Experiments on 15 benchmark datasets demonstrate that the Markowitz random forest significantly outperforms previous tree-weighting methods and other learning approaches.

8.3. Decision Forests

For decision forests, the splits of axis-aligned decision trees parallel to the coordinate axes limit the ability to capture feature dependencies, often necessitating deeper trees with complex, step-like decision boundaries. This can increase variance and the risk of overfitting, particularly when data classes are not separable along single dimensions.

To address these limitations, methods such as forest-RC (randomized combination), which employs splits based on linear combinations of features [12], and oblique-splitting random forests [153,154,155], have been introduced to improve empirical performance. Additionally, sparse projections have been developed to reduce the computational cost associated with oblique splits [156].

The random subspace method [11] selects random feature subsets for training, as seen in random forests and bootstrap-based techniques [157]. It can also be viewed as an axis-aligned random projection approach [158]. Extensions include boosting [159] and sparse classification ensembles, where weak learners are trained in optimally chosen subspaces [160].
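In scikit-learn, the random subspace method can be approximated with `BaggingClassifier`. Sample bootstrapping is disabled, and each base tree receives a random fraction of the features drawn without replacement. This is a sketch under those assumptions; the subset size (half of the features) is an arbitrary choice.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=400, n_features=10, n_informative=4, random_state=0)

# Every tree sees all training examples (bootstrap=False)
# but only a random half of the features (drawn without replacement).
subspace = BaggingClassifier(
    DecisionTreeClassifier(),
    n_estimators=50,
    max_samples=1.0,
    bootstrap=False,
    max_features=0.5,
    bootstrap_features=False,
    random_state=0,
).fit(X, y)

# Feature subsets used by the first three trees
print([sorted(f.tolist()) for f in subspace.estimators_features_[:3]])
```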

Several extensions to decision forests introduce axis-oblique splits, such as random rotation random forests [154] and canonical correlation forests [155]. These oblique approaches, often referred to as oblique decision forests, allow for splits based on combinations of feature dimensions, enhancing flexibility. However, they may sacrifice some of the advantageous properties of axis-aligned forests, such as interpretability and computational simplicity. Trees that use oblique splits are also known as binary space partitioning trees.

The random rotation random forest method [154] applies uniform random rotations to the feature space before training each tree. This adjustment enables oblique decision boundaries, increasing the ensemble’s flexibility. Following rotation, standard axis-aligned procedures are used to train the trees. A regularized variant of the random rotation method [161] encourages simpler base models. Canonical correlation forests [155] use canonical correlation analysis (CCA) at each split to determine directions that maximize correlation with class labels. Random projection methods [158,162,163] train base classifiers in low-dimensional subspaces derived from random projections. For example, random projection forests [164] use random projections to compress image filter responses, while forest-RC [12] splits on random linear combinations of input features and often outperforms random forests [165]. Rotation forests [13] use K-axis rotations to generate new features for training multiple classifiers, applying principal component analysis (PCA) to obtain better splits.
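The random rotation idea can be sketched as follows. A uniformly distributed rotation is drawn by QR-decomposing a Gaussian matrix, with a sign correction so the result is Haar-distributed, and one column is flipped if needed to obtain determinant +1. Each tree is then an ordinary axis-aligned tree trained on rotated bootstrap data. Feature scaling before rotation and other details of [154] are omitted, and the helper names are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def random_rotation(d, rng):
    """Draw a uniformly distributed rotation matrix (Haar measure on SO(d)) via QR."""
    A = rng.normal(size=(d, d))
    Q, R = np.linalg.qr(A)
    Q = Q * np.sign(np.diag(R))        # sign fix: Q is now Haar-distributed on O(d)
    if np.linalg.det(Q) < 0:           # flip one axis to obtain a proper rotation
        Q[:, 0] = -Q[:, 0]
    return Q


def fit_rotation_forest(X, y, n_trees=50, seed=0):
    rng = np.random.default_rng(seed)
    n = len(y)
    model = []
    for _ in range(n_trees):
        R = random_rotation(X.shape[1], rng)
        idx = rng.integers(0, n, size=n)                            # bootstrap sample
        tree = DecisionTreeClassifier(max_features="sqrt",
                                      random_state=int(rng.integers(1 << 31)))
        tree.fit(X[idx] @ R, y[idx])                                # axis-aligned in rotated space
        model.append((R, tree))
    return model


def predict_rotation_forest(model, X):
    votes = np.stack([tree.predict(X @ R) for R, tree in model])   # (n_trees, n)
    return (votes.mean(axis=0) > 0.5).astype(int)                   # majority vote, labels 0/1


rng = np.random.default_rng(0)
X = rng.normal(size=(300, 4))
y = (X[:, 0] + X[:, 1] > 0).astype(int)        # an oblique (diagonal) class boundary
model = fit_rotation_forest(X, y)
acc = np.mean(predict_rotation_forest(model, X) == y)
print(f"training accuracy {acc:.3f}")
```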

Sparse projection oblique randomer forests (SPORF) [156] improve on both axis-aligned and oblique decision forests through very sparse random projections, offering better performance and scalability while preserving interpretability. The sparse projection framework can also be extended to GBDTs for similar gains.

8.4. Gradient-Boosted Decision Trees

Gradient boosting [64,70] commonly employs decision trees as base learners, forming a robust model by sequentially aggregating them. Accelerated gradient boosting [166], inspired by Nesterov’s accelerated descent [70], enhances performance by reducing sensitivity to the shrinkage parameter and producing sparser models with fewer trees.

GBDTs [70] build additive models by iteratively combining small, shallow decision trees, known as weak learners. Each iteration trains a new tree to approximate the residuals between the true labels and the current predictions, thereby correcting errors from previous iterations. More generally, the targets are the negative gradient of the loss, or pseudo-residuals. This stage-wise process minimizes a cost function by gradient descent in function space [70,167].
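A minimal least-squares GBDT can be written directly on top of scikit-learn's `DecisionTreeRegressor`. For the squared loss $\frac{1}{2}(y - F)^2$, the negative gradient is exactly the residual $y - F$, so each shallow tree is fit to the current residuals and added with a shrinkage factor. The depth, learning rate, number of trees, and simulated data are illustrative choices, not the defaults of any particular library.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_gbdt(X, y, n_trees=100, learning_rate=0.1, max_depth=3):
    f0 = y.mean()                                  # initial constant model
    F = np.full(len(y), f0)
    trees = []
    for _ in range(n_trees):
        residuals = y - F                          # negative gradient of 0.5 * (y - F)^2
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residuals)
        F += learning_rate * tree.predict(X)       # shrunken stage-wise update
        trees.append(tree)
    return f0, learning_rate, trees


def predict_gbdt(model, X):
    f0, learning_rate, trees = model
    return f0 + learning_rate * sum(tree.predict(X) for tree in trees)


rng = np.random.default_rng(0)
X = rng.uniform(-2, 2, size=(500, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] ** 2 + 0.1 * rng.normal(size=500)
model = fit_gbdt(X, y)
mse = np.mean((predict_gbdt(model, X) - y) ** 2)
print(f"training MSE {mse:.4f} vs. variance of y {y.var():.4f}")
```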

GBDTs are a widely used ensemble learning method for regression and classification. The popularity of GBDTs stems from their strong predictive performance, computational efficiency, and interpretability. Their adoption has been further accelerated by fast, scalable, open-source tools like XGBoost [71], LightGBM [82], and CatBoost [83]. XGBoost improves accuracy by utilizing second-order gradient information to refine the boosting process. LightGBM significantly enhances training speed through histogram-based gradient aggregation. CatBoost introduces a specialized technique for handling categorical features efficiently.
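The second-order information used by XGBoost can be made concrete. The XGBoost paper describes a regularized objective in which G and H are the sums of loss gradients and Hessians over the examples in a leaf. Under that objective, the optimal leaf value is $w^* = -G/(H + \lambda)$, and a split is scored by $\frac{1}{2}\left[\frac{G_L^2}{H_L + \lambda} + \frac{G_R^2}{H_R + \lambda} - \frac{(G_L + G_R)^2}{H_L + H_R + \lambda}\right] - \gamma$. The sketch evaluates these quantities for the logistic loss on a toy example. The function names are hypothetical, and `lam` and `gamma` stand for the L2 leaf penalty and the per-leaf penalty.

```python
import numpy as np


def leaf_weight(g, h, lam=1.0):
    """Optimal leaf value of the second-order objective: w* = -G / (H + lambda)."""
    return -g.sum() / (h.sum() + lam)


def split_gain(g, h, left_mask, lam=1.0, gamma=0.0):
    """Reduction of the regularized second-order objective from splitting one leaf."""
    def score(G, H):
        return G * G / (H + lam)
    GL, HL = g[left_mask].sum(), h[left_mask].sum()
    GR, HR = g[~left_mask].sum(), h[~left_mask].sum()
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma


# Logistic loss with labels y in {0, 1} and current raw scores F:
# gradient g = p - y and Hessian h = p (1 - p), where p = sigmoid(F).
y = np.array([0, 0, 1, 1, 1])
F = np.zeros(5)
p = 1.0 / (1.0 + np.exp(-F))
g, h = p - y, p * (1 - p)
left = np.array([True, True, False, False, False])
print("leaf values:", leaf_weight(g[left], h[left]), leaf_weight(g[~left], h[~left]))
print("split gain:", split_gain(g, h, left))
```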

For both training and inference, GBDT implementations such as XGBoost and LightGBM have a time complexity that is linear in the number of bins, input features, output dimensions, and boosting iterations.
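The linear dependence on the number of bins comes from histogram-based split finding, sketched below for a single feature. Values are first discretized into at most `max_bins` quantile bins. Gradient and Hessian sums are then accumulated per bin in one pass over the data, and only the bin boundaries are scanned as candidate thresholds. This illustrates the idea rather than reproducing the internals of XGBoost or LightGBM; the helpers and the second-order gain with L2 penalty `lam` are assumptions.

```python
import numpy as np


def build_bins(x, max_bins=16):
    """Map a feature to at most max_bins integer bins using quantile cut points."""
    edges = np.unique(np.quantile(x, np.linspace(0, 1, max_bins + 1)[1:-1]))
    return np.searchsorted(edges, x, side="right"), edges


def best_histogram_split(bins, g, h, n_bins, lam=1.0):
    """One O(n) pass builds the histograms; the scan over boundaries is O(n_bins)."""
    G = np.bincount(bins, weights=g, minlength=n_bins)
    H = np.bincount(bins, weights=h, minlength=n_bins)
    GL, HL = np.cumsum(G)[:-1], np.cumsum(H)[:-1]   # left child = bins 0..b
    GT, HT = G.sum(), H.sum()
    GR, HR = GT - GL, HT - HL
    gain = 0.5 * (GL**2 / (HL + lam) + GR**2 / (HR + lam) - GT**2 / (HT + lam))
    b = int(np.argmax(gain))
    return b, gain[b]


rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=1000)
y = (x > 0.5).astype(float)
F = np.zeros_like(y)
g, h = F - y, np.ones_like(y)      # squared loss 0.5 (y - F)^2: gradient F - y, Hessian 1
bins, edges = build_bins(x, max_bins=16)
b, gain = best_histogram_split(bins, g, h, n_bins=len(edges) + 1)
print(f"split: x < {edges[b]:.3f} goes left (gain {gain:.2f})")
```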

GBDTs are highly effective on tabular data, often surpassing deep learning models, including neural oblivious decision ensembles, as well as other traditional algorithms [83,168]. Representer point methods [169] make model interpretation more efficient by expressing a prediction as the sum of contributions from all training points, grounded in representer theorems.

TracIn [170] focuses on estimating the influence of training examples throughout the learning process rather than just analyzing the final model’s properties. Influence estimation has been extended from deep learning models with continuous parameters to GBDTs [171]. TREX [171], based on representer-point methods [169], and BoostIn [171], an efficient influence-estimation method for GBDTs inspired by TracIn [170], offer significant performance improvements. BoostIn is notably four orders of magnitude faster while maintaining or exceeding the accuracy of existing methods [171].

GBDT models have been extended for multi-output learning [172,173,174], with tree leaves predicting all or subsets of outputs to optimize aggregate objective gains [174]. Privileged information has also been integrated to iteratively refine predictions, guiding updates via auxiliary functions that adjust residuals [175].

8.5. AdaBoost, GBDTs Versus Random Forests

AdaBoost’s behavior puzzled statisticians because of two notable properties: it interpolates the training data within relatively few iterations, yet its generalization error continues to improve beyond this point. From a statistical perspective, AdaBoost performs stagewise optimization of an exponential loss, which suggests that tree size must be regularized and the number of iterations controlled. Conventional wisdom therefore recommends early stopping or restricting AdaBoost to low-complexity learners.

Random forests, by contrast, grow trees independently and optimize local criteria, such as the Gini index, without a global loss function. As an ensemble of interpolating classifiers, random forests perform well with large trees and extensive iterations. In many cases, random forests perform similarly to boosting but are easier to train and tune, making them a widely used method in various applications.

GBDTs fundamentally differ from AdaBoost, addressing an infinite-dimensional optimization problem that drives their strong performance. Unlike random forests, which grow trees independently and combine them in parallel, GBDTs construct trees sequentially, progressively enhancing prediction accuracy. Despite these methodological differences, both GBDTs and random forests achieve success through weighted ensembles of interpolating classifiers that adapt to local decision boundaries, often yielding comparable performance [19].

Breiman speculated that AdaBoost, in its later stages, mimics a random forest [12]. AdaBoost has been characterized as a weighted ensemble of classifiers that interpolates the training data [19]. In its later iterations, the algorithm exhibits an averaging effect, leading to behavior that closely resembles that of a random forest [19]. It has been argued that boosting methods, like random forests, are most effective when employing large decision trees and avoiding both regularization and early stopping [19]. In this interpretation, AdaBoost can be seen as a “randomized” forest of forests, where the individual trees in random forests and the ensembles in AdaBoost both achieve error-free interpolation of the training data [19].

This interpolation introduces robustness to noise: when classifiers fit the data very locally, noise in one region has minimal influence on predictions in neighboring areas. Training deep trees and averaging their outputs has been argued to limit overfitting because it preserves this local interpolation property [19]. Both random forests and AdaBoost leverage this mechanism, which reduces overfitting as the number of iterations increases while preserving predictive accuracy.

WildWood [149] bridges the gap between traditional random forests and modern boosting techniques, offering a blend of efficiency, robustness, and interpretability. This method might pave the way for new innovations in ensemble learning and machine learning algorithms.

9. Comparison of Ensemble Methods: Computational Trade-Offs

Random forest [12] is an ensemble learning method that constructs multiple decision trees using bootstrapped samples of training data and randomly selected subsets of features. The final prediction is obtained through majority voting (classification) or averaging (regression). The advantage of random forest lies in its robustness to overfitting and its ability to handle high-dimensional data. Recent advancements include adaptations such as extremely randomized trees (extra-trees) [176] and weighted feature selection techniques [120].

Bagging [3] is a method that trains multiple base learners independently on different bootstrapped subsets of the dataset and aggregates their predictions. It effectively reduces variance and improves stability, particularly for high-variance models like decision trees. Bagging-based techniques such as random forests have been widely used due to their improved generalization capabilities. Computationally, bagging is parallelizable, making it efficient for large-scale learning [60].

Boosting [4,8] is an iterative ensemble approach that sequentially trains weak learners, with each learner focusing on misclassified instances from the previous iteration. Prominent algorithms include AdaBoost [8], gradient boosting [70], and their modern extensions like XGBoost [71] and LightGBM [82]. Boosting achieves high accuracy but is computationally more expensive than bagging due to its sequential nature.

Table 1 provides an overview of key ensemble techniques, their strengths, weaknesses, and common applications.

Understanding the computational and storage complexities of ensemble methods is crucial for selecting an appropriate approach based on resource availability. Table 2 summarizes the trade-offs among key ensemble techniques.

Table 2.

Computational and storage complexity of popular ensemble learning methods.

Bagging methods such as random forests train their base models independently, so training parallelizes naturally and scales well to large datasets [12]. They are effective at reducing variance and are robust to overfitting. However, all base learners must be stored, which can require substantial memory, and growing many deep trees can take considerable training time [12].

In contrast, boosting methods such as AdaBoost and XGBoost train their base learners sequentially, which generally makes training more computationally expensive. In return, they often achieve higher accuracy, sometimes with fewer base learners [8,71]. Boosting methods excel at reducing bias but are sensitive to noisy data and require careful tuning [4].

For large-scale applications, bagging is often preferred because it parallelizes, while the training cost of boosting can be prohibitive in real-time environments [60]. Random forests scale well to large datasets, whereas the cost of boosting grows with the number of weak learners [70].

Stacking adds another layer of complexity by requiring an additional meta-learner, making both training and inference slower than simpler ensembles [25]. Stacking is useful when diverse models contribute complementary strengths, but it is computationally expensive and prone to overfitting without sufficient regularization [7].

10. Solving Multiclass Classification

A typical approach to handling multiclass classification tasks involves constructing multiple binary classifiers and integrating their outputs. ECOCs provide a versatile framework for combining these binary classifiers to solve multiclass problems effectively [38].

10.1. One-Against-All Strategy

The one-against-all approach is one of the simplest and most commonly used strategies for multiclass classification with K classes ($K > 2$). In this method, each class is trained against the combined set of all other classes. For the ith binary classifier, the original K-class training data are relabeled as either belonging to or not belonging to class i. As a result, K binary classifiers are created, each trained on the entire dataset. However, this method can leave regions of the input space in which no class, or more than one class, is selected. In addition, because each class is trained against all the others, the binary training sets are often imbalanced.

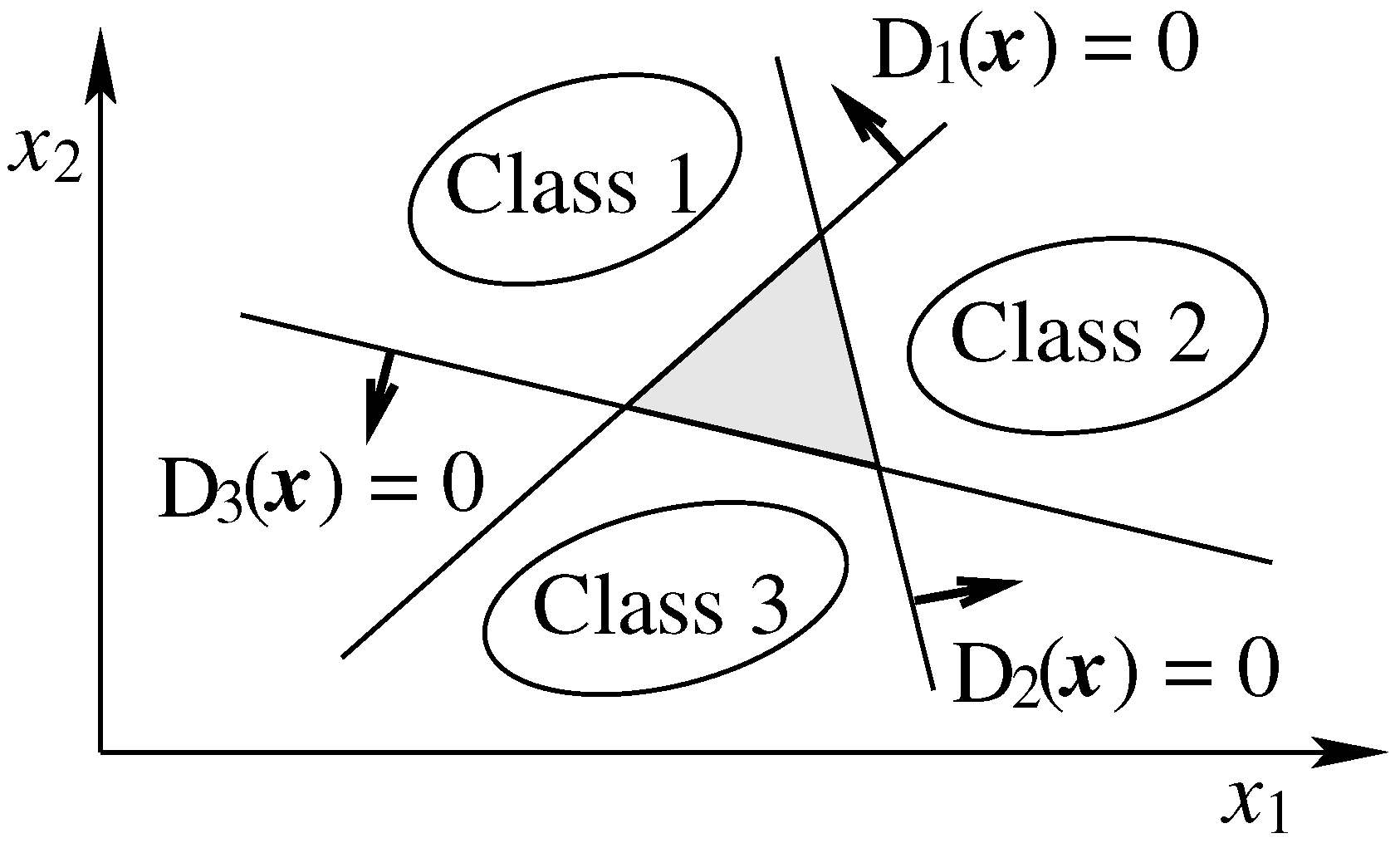

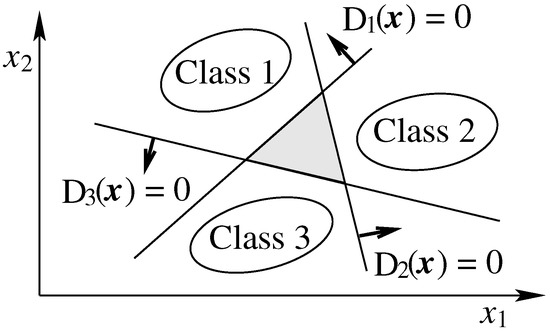

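In this framework, we define K direct decision functions, each of which separates a single class from the rest. Let $D_i(\mathbf{x})$ be the decision function for class $i$, which maximizes the margin separating class $i$ from the other classes. On the decision boundary, $D_i(\mathbf{x}) = 0$. If $\mathbf{x}$ were assigned to class $i$ only when $D_i(\mathbf{x}) > 0$ holds for exactly one $i$, samples with several positive outputs, or none, would remain unclassifiable, as depicted in Figure 1. To avoid such regions, a data sample $\mathbf{x}$ is assigned to the class with the maximum value of $D_i(\mathbf{x})$, i.e., $\arg\max_{i = 1, \ldots, K} D_i(\mathbf{x})$.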

Figure 1.

Regions that cannot be classified by the one-against-all approach.
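As a minimal sketch of the two decision rules just described, the Python code below takes hypothetical decision values $D_1(\mathbf{x}), \ldots, D_K(\mathbf{x})$ for one sample (trained classifiers are assumed and not shown; classes are indexed from 0) and contrasts the sign-based rule, which can leave a sample unclassified, with the arg max rule. The function names are illustrative only.

```python
from typing import Optional, Sequence


def ova_sign_rule(d: Sequence[float]) -> Optional[int]:
    """Conventional rule: accept class i only if D_i(x) > 0 for exactly one i."""
    positive = [i for i, value in enumerate(d) if value > 0]
    # Zero or several positive outputs: x lies in an unclassifiable region.
    return positive[0] if len(positive) == 1 else None


def ova_argmax_rule(d: Sequence[float]) -> int:
    """Continuous rule: assign x to arg max_i D_i(x), which is always defined."""
    return max(range(len(d)), key=lambda i: d[i])


# Hypothetical decision values for two samples with K = 3 classes.
both_positive = [0.3, 0.8, -1.0]
all_negative = [-0.2, -0.7, -0.4]

print(ova_sign_rule(both_positive), ova_argmax_rule(both_positive))  # None 1
print(ova_sign_rule(all_negative), ova_argmax_rule(all_negative))    # None 0
```

Both example samples fall in unclassifiable regions under the sign rule, yet the arg max rule assigns each of them to a class.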

10.2. One-Against-One Strategy

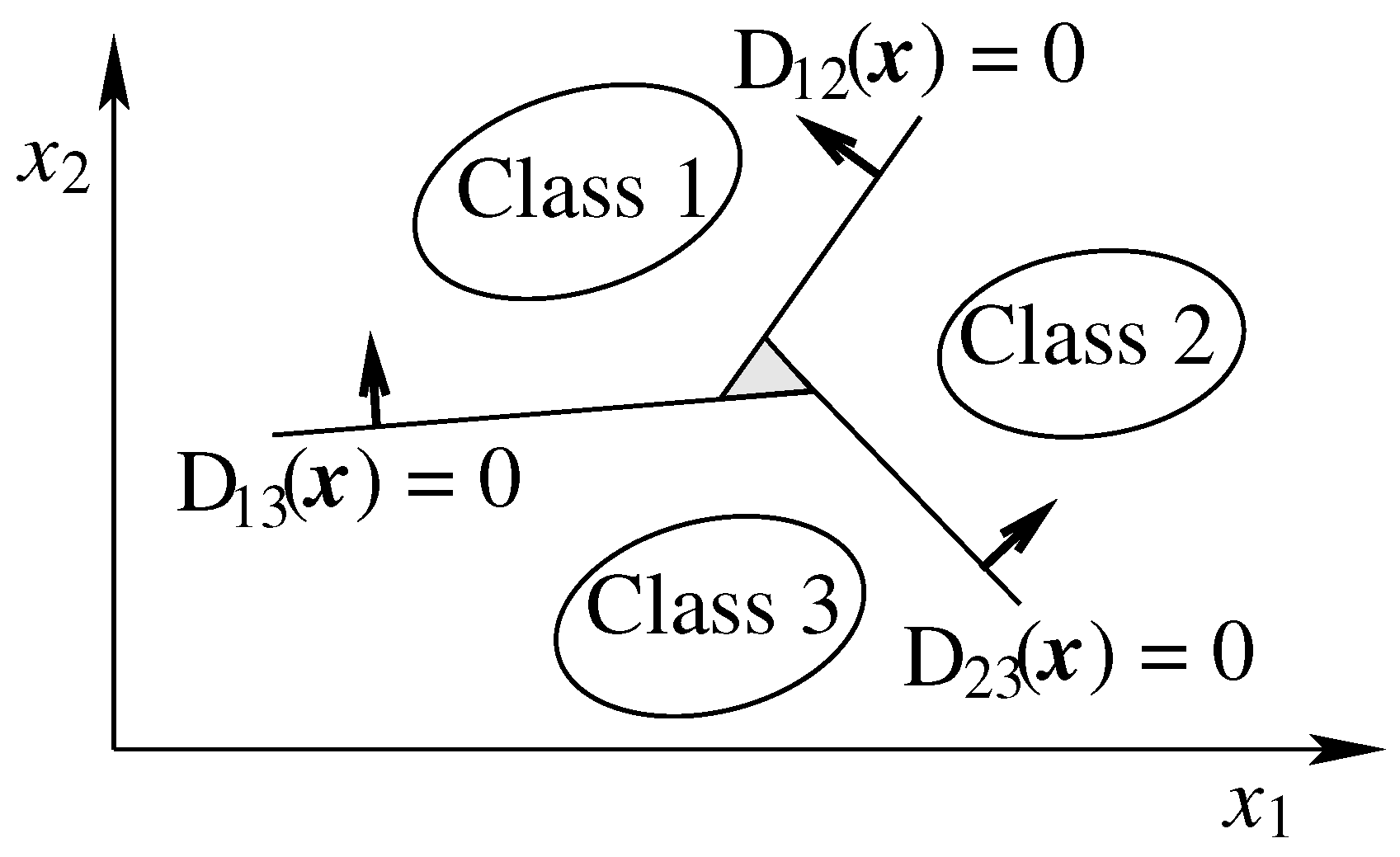

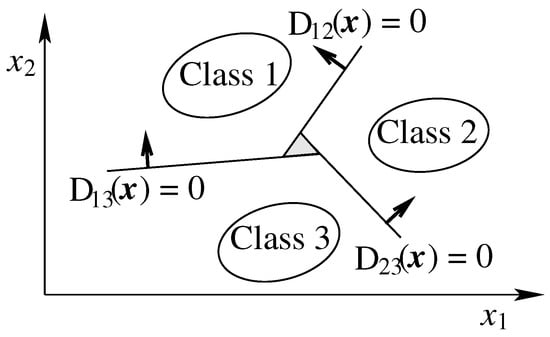

The one-against-one (pairwise voting) approach reduces the unclassifiable regions that arise in the one-against-all method, although it does not eliminate them entirely. In this strategy, a decision function is determined for every pair of classes. Each pairwise classifier is trained only on the data from its two classes, so each training run uses less data than in the one-against-all method, which trains every classifier on the entire dataset. However, the one-against-one strategy requires $K(K-1)/2$ binary classifiers, whereas the one-against-all strategy requires only $K$. At test time, the outputs of all $K(K-1)/2$ classifiers are combined by majority voting, so the computational cost grows quadratically with $K$ and becomes significant when $K$ is large.

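Let $D_{ij}(\mathbf{x})$ be the decision function for class $i$ against class $j$, with the maximum margin. It holds that $D_{ij}(\mathbf{x}) = -D_{ji}(\mathbf{x})$. The regions

$$R_i = \{\mathbf{x} \mid D_{ij}(\mathbf{x}) > 0,\ j = 1, \ldots, K,\ j \neq i\}, \quad i = 1, \ldots, K,$$

do not overlap. If $\mathbf{x} \in R_i$, it is classified as belonging to class $i$. However, $\mathbf{x}$ may not belong to any of the regions $R_i$. In such cases, we classify $\mathbf{x}$ by voting, calculating

$$D_i(\mathbf{x}) = \sum_{j = 1,\, j \neq i}^{K} \operatorname{sign}\bigl(D_{ij}(\mathbf{x})\bigr)$$

and assigning $\mathbf{x}$ to the class $\arg\max_{i = 1, \ldots, K} D_i(\mathbf{x})$.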

If $\mathbf{x} \in R_i$, then $D_i(\mathbf{x}) = K - 1$ and $D_k(\mathbf{x}) < K - 1$ for all $k \neq i$, so $\mathbf{x}$ is classified into class $i$. However, if $D_i(\mathbf{x}) < K - 1$ for every $i$, the maximum vote may be shared by several classes, making $\mathbf{x}$ unclassifiable. In Figure 2, the shaded area represents the unclassifiable region, which is much smaller than in the one-against-all approach.

Figure 2.

Regions where classification is ambiguous in the one-against-one approach.
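The pairwise voting rule can be sketched as follows, under the assumptions that the pairwise decision values $D_{ij}(\mathbf{x})$ for $i < j$ are already available (here they are made-up numbers), that classes are indexed from 0, and that $\operatorname{sign}(0)$ is treated as $-1$. The second example is a cyclic preference (class 0 beats 1, 1 beats 2, 2 beats 0), which produces exactly the kind of tie that makes a sample unclassifiable. The function name is hypothetical.

```python
from typing import Dict, Optional, Tuple


def ovo_vote(k: int, pairwise: Dict[Tuple[int, int], float]) -> Optional[int]:
    """Pairwise voting; pairwise[(i, j)] holds D_ij(x) for i < j."""
    def d(i: int, j: int) -> float:
        # Antisymmetry D_ji(x) = -D_ij(x) supplies the entries with i > j.
        return pairwise[(i, j)] if i < j else -pairwise[(j, i)]

    # D_i(x) = sum_{j != i} sign(D_ij(x)); sign(0) is taken as -1 (an assumption).
    votes = [sum(1 if d(i, j) > 0 else -1 for j in range(k) if j != i) for i in range(k)]
    best = max(votes)
    winners = [i for i, v in enumerate(votes) if v == best]
    # A tie for the top vote means x is unclassifiable by plain voting.
    return winners[0] if len(winners) == 1 else None


inside_r0 = {(0, 1): 0.5, (0, 2): 0.7, (1, 2): 0.2}  # class 0 wins both of its pairs
cyclic = {(0, 1): 0.5, (1, 2): 0.5, (0, 2): -0.5}    # 0 > 1, 1 > 2, 2 > 0

print(ovo_vote(3, inside_r0))  # 0
print(ovo_vote(3, cyclic))     # None
```

In the first case class 0 collects $K - 1 = 2$ votes, matching the condition $\mathbf{x} \in R_0$; in the cyclic case every class ends with the same vote count.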

To resolve unclassifiable regions while keeping the same classification results as the conventional one-against-one method in the classifiable regions, a membership function can be introduced, as in the one-against-all approach. The all-and-one method [177] combines the one-against-all and one-against-one strategies, partially mitigating their respective weaknesses.

A variation of the one-against-one strategy is the directed acyclic graph SVM (DAGSVM) [178]. Its training process is identical to that of the one-against-one method, but the testing phase uses a rooted binary directed acyclic graph with $K(K-1)/2$ internal nodes and $K$ leaves, so classifying a sample requires evaluating only $K - 1$ binary classifiers.
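A sketch of DAGSVM-style evaluation, assuming the pairwise decision values are given in advance (the values and the function name are hypothetical): the list of remaining candidate classes plays the role of the current node of the DAG, and each test of class $i$ against class $j$ removes one candidate, so exactly $K - 1$ classifiers are evaluated.

```python
from typing import Dict, List, Tuple


def dag_predict(k: int, pairwise: Dict[Tuple[int, int], float]) -> Tuple[int, int]:
    """Return (predicted class, number of binary classifiers evaluated)."""
    candidates: List[int] = list(range(k))  # classes still in the running
    evaluations = 0
    while len(candidates) > 1:
        i, j = candidates[0], candidates[-1]  # current DAG node: class i vs class j
        evaluations += 1
        if pairwise[(i, j)] > 0:  # D_ij(x) > 0: rule out class j
            candidates.pop()
        else:                     # otherwise: rule out class i
            candidates.pop(0)
    return candidates[0], evaluations


# Hypothetical pairwise outputs for K = 4 in which class 2 wins every pair it is in.
true_class = 2
pairwise = {
    (i, j): 1.0 if i == true_class else (-1.0 if j == true_class else 0.5)
    for i in range(4)
    for j in range(i + 1, 4)
}
print(dag_predict(4, pairwise))  # (2, 3)
```

Because only the two ends of the sorted candidate list are compared, the key $(i, j)$ always satisfies $i < j$, so only the $K(K-1)/2$ classifiers trained by the one-against-one method are ever needed.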

The multiclass smooth SVM [179] implements a one-versus-one-versus-rest scheme, decomposing the problem into ternary classification subproblems based on ternary voting games. In the experiments reported in [179], this approach outperformed both the one-against-one and one-against-all methods on all datasets tested.

10.3. Error-Correcting Output Codes

In the ECOC framework, the classes are first partitioned to create a set of binary problems, each of which is learned by a base classifier. These partitions are encoded as the columns of a coding matrix $M$, in which each row is a codeword that encodes a specific class. During decoding, a new test sample is passed through all binary classifiers, whose outputs form a codeword $\mathbf{y}$ for that sample. The class whose codeword is closest to $\mathbf{y}$ under a distance metric such as the Hamming or Euclidean distance is then selected. If the minimum Hamming distance between any pair of codewords is $t$, then up to $\lfloor (t-1)/2 \rfloor$ single-bit errors in $\mathbf{y}$ can be corrected, where $\lfloor a \rfloor$ denotes the largest integer less than or equal to $a$.
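To make the decoding step and the $\lfloor (t-1)/2 \rfloor$ bound concrete, the following sketch uses the so-called exhaustive code for $K = 4$ classes (seven columns, entries in $\{-1, +1\}$), whose codewords are pairwise at Hamming distance 4, so one flipped classifier output can be corrected. The code matrix and helper names are illustrative choices, not taken from the cited works.

```python
from itertools import combinations
from typing import List, Sequence

# Exhaustive code for K = 4 classes: each row is a class codeword, each column a dichotomizer.
CODE: List[List[int]] = [
    [+1, +1, +1, +1, +1, +1, +1],
    [-1, -1, -1, -1, +1, +1, +1],
    [-1, -1, +1, +1, -1, -1, +1],
    [-1, +1, -1, +1, -1, +1, -1],
]


def hamming(u: Sequence[int], v: Sequence[int]) -> int:
    """Number of positions in which two codewords differ."""
    return sum(a != b for a, b in zip(u, v))


def min_distance(code: Sequence[Sequence[int]]) -> int:
    """Minimum Hamming distance t over all pairs of codewords."""
    return min(hamming(u, v) for u, v in combinations(code, 2))


def hamming_decode(code: Sequence[Sequence[int]], outputs: Sequence[int]) -> int:
    """Return the index of the codeword closest to the classifier outputs."""
    return min(range(len(code)), key=lambda i: hamming(code[i], outputs))


t = min_distance(CODE)
print(t, (t - 1) // 2)  # 4 1

# Outputs for a sample of class 2 whose first dichotomizer made an error.
outputs = list(CODE[2])
outputs[0] = -outputs[0]
print(hamming_decode(CODE, outputs))  # 2
```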

In contrast to simple voting procedures, the ECOC method shares information among classes to make a more accurate classification decision, which helps mitigate errors stemming from variance and bias in the individual learners [180].

In a binary ECOC setup, each entry of the coding matrix takes a value from $\{-1, +1\}$, so each dichotomizer assigns every class to one of two partitions. Common binary coding schemes include the one-against-all strategy and the dense random strategy [38], which require $K$ and $10 \log_2 K$ dichotomizers, respectively [181].

In the ECOC framework, the classification task is divided into subtasks handled by binary classifiers. The classifier outputs are combined using a coding matrix $M$ of size $K \times L$, where $K$ is the number of classes and $L$ is the number of base learners, with outputs $y_l$, $l = 1, \ldots, L$. The final class is the one with the highest vote,

$$\hat{c} = \arg\max_{i = 1, \ldots, K} V_i,$$

where $V_i$ is the accumulated vote for class $i$.
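The text does not spell out how the accumulated vote is formed. One common choice, assumed here purely for illustration, is the linear vote $V_i = \sum_{l=1}^{L} M_{il}\, y_l$ with $M_{il}, y_l \in \{-1, +1\}$. Because each product equals $+1$ when the two entries agree and $-1$ otherwise, this vote is an affine function of the Hamming distance $d_H$ between the codeword $\mathbf{m}_i$ (the $i$th row of $M$) and the output vector $\mathbf{y} = (y_1, \ldots, y_L)$:

$$V_i = \sum_{l=1}^{L} M_{il}\, y_l = \sum_{l=1}^{L} \bigl(1 - 2\,\mathbb{1}[M_{il} \neq y_l]\bigr) = L - 2\, d_H(\mathbf{m}_i, \mathbf{y}),$$

so that $\arg\max_i V_i = \arg\min_i d_H(\mathbf{m}_i, \mathbf{y})$. Under this assumption, the highest-vote rule coincides with Hamming decoding.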

In ternary ECOCs [181], the coding matrix uses the symbols $+1$, $-1$, and $0$, where $0$ indicates that a class is excluded from the training of a particular classifier. This ternary framework admits a broader set of binary classification tasks, which has prompted the development of problem-specific designs and decoding strategies [182,183,184]. Common ternary ECOC designs include the one-against-one [185], one-versus-all, and sparse random strategies [181]. The one-against-one strategy involves all class pairs, resulting in $K(K-1)/2$ binary classifiers. The sparse random design also draws the $0$ symbol (with probability 1/2, versus 1/4 each for $+1$ and $-1$), yielding a code of length $15 \log_2 K$ [181]. Additionally, the discriminant ECOC approach [184] uses $K - 1$ classifiers, encoding the nodes of a binary tree as dichotomizers in a ternary, problem-dependent ECOC scheme.
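The sketch below builds the one-against-one ternary coding matrix ($K(K-1)/2$ columns) and a sparse random matrix of length $\lceil 15 \log_2 K \rceil$, and decodes with a Hamming distance that skips the 0 ("don't care") positions. It is a simplified illustration under stated assumptions: the sparse random generator draws a single matrix and omits the usual validity checks (for instance, that every column contains both $+1$ and $-1$), and skipping zeros is only one possible decoding choice. Note that it lets rows with more zeros accumulate fewer disagreements, one reason ternary decoding needs the care discussed next. All function names are hypothetical.

```python
import math
import random
from itertools import combinations
from typing import List, Sequence


def one_vs_one_code(k: int) -> List[List[int]]:
    """K x K(K-1)/2 ternary matrix: column (i, j) is +1 for class i, -1 for class j, else 0."""
    pairs = list(combinations(range(k), 2))
    return [[+1 if c == i else (-1 if c == j else 0) for (i, j) in pairs] for c in range(k)]


def sparse_random_code(k: int, rng: random.Random) -> List[List[int]]:
    """Sparse random ternary code: P(0) = 1/2 and P(+1) = P(-1) = 1/4 per entry."""
    length = math.ceil(15 * math.log2(k))
    return [[rng.choice((0, 0, +1, -1)) for _ in range(length)] for _ in range(k)]


def ternary_hamming_decode(code: Sequence[Sequence[int]], outputs: Sequence[int]) -> int:
    """Nearest codeword, counting disagreements only where the code entry is nonzero."""
    def distance(row: Sequence[int]) -> int:
        return sum(1 for m, y in zip(row, outputs) if m != 0 and m != y)
    return min(range(len(code)), key=lambda i: distance(code[i]))


ovo = one_vs_one_code(3)
print(ovo)  # [[1, 1, 0], [-1, 0, 1], [0, -1, -1]]
# Columns are the pairs (0, 1), (0, 2), (1, 2); class 0 wins both of its pairs.
print(ternary_hamming_decode(ovo, [+1, +1, +1]))  # 0
print(len(sparse_random_code(4, random.Random(0))[0]))  # 30
```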

In the ECOC framework, the one-against-one coding design typically outperforms other coding schemes on real-world multiclass problems [182]. A notable feature of this design is the high sparsity of its coding matrix, in which many entries are zero. This sparsity introduces two types of bias that call for adjustments to the decoding procedure [182]. To address these biases, [182] proposes a new decoding measure and two strategies that ensure all codewords operate within the same dynamic range, leading to significant improvements in ECOC performance. The ECOC framework has also been extended to online learning [186], where the final classifier adapts to newly introduced classes without requiring changes to the base classifier. Online ECOC methods converge to the results of batch approaches while providing a robust and scalable way to incorporate new classes.

In the ECOC approach, error-correcting codewords are utilized to enhance classification accuracy. While these codewords exhibit strong error-correcting properties, some of the generated subproblems can be challenging to solve [181]. Simpler methods like one-against-all and one-against-one often yield comparable or even better results in various applications than the more complex ECOC approach. Additionally, the ECOC method requires at least K times the number of tests.

Typically, the ECOC strategy combines the outputs of multiple binary classifiers using a simple nearest-neighbor rule, selecting the class whose codeword is closest to the vector of binary classifier outputs. For nearest-neighbor ECOCs, the error rate of the multiclass classifier can be bounded in terms of the average binary distance [187]. This explains why methods such as elimination and Hamming decoding often achieve similar accuracy. Beyond improving generalization, ECOCs can also be used to resolve unclassifiable regions.

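In the ECOC framework, let $g_{ij}$ denote the target value of the $j$th decision function $D_j(\mathbf{x})$ for class $i$:

$$g_{ij} = \begin{cases} 1 & \text{if class } i \text{ lies on the positive side of } D_j(\mathbf{x}), \\ -1 & \text{otherwise}. \end{cases}$$

The target vector for the $j$th decision function is the column vector $\mathbf{g}_j = (g_{1j}, \ldots, g_{Kj})^\top$. If all elements of a column vector are 1, or all are $-1$, the decision function does not contribute to classification. Additionally, if two column vectors satisfy $\mathbf{g}_j = -\mathbf{g}_{j'}$, they represent the same decision function. Therefore, of the $2^K$ possible column vectors, removing the two constant ones and identifying each vector with its negative leaves at most $2^{K-1} - 1$ distinct decision functions.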
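The counting argument can be checked by brute force. The sketch below enumerates all $2^K$ possible target vectors, discards the two constant ones, and keeps one representative of each pair $\{\mathbf{g}, -\mathbf{g}\}$; the function name is hypothetical.

```python
from itertools import product
from typing import List, Tuple


def distinct_decision_functions(k: int) -> List[Tuple[int, ...]]:
    """Target vectors in {-1, +1}^K, excluding constants and identifying g with -g."""
    kept: List[Tuple[int, ...]] = []
    seen = set()
    for col in product((-1, +1), repeat=k):
        if len(set(col)) == 1:
            continue  # all +1 or all -1: contributes nothing to classification
        if tuple(-g for g in col) in seen:
            continue  # same decision function as a column already kept
        seen.add(col)
        kept.append(col)
    return kept


for k in range(2, 7):
    print(k, len(distinct_decision_functions(k)), 2 ** (k - 1) - 1)
```

For $K = 3$ the three surviving columns are, up to sign, exactly the one-against-all columns, which is why a three-class problem admits no additional error-correcting columns.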

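The $i$th row vector $(g_{i1}, \ldots, g_{ik})$ represents the codeword for class $i$, where $k$ denotes the number of decision functions. For a three-class problem, there can be at most three decision functions, represented by

$$\begin{pmatrix} 1 & -1 & -1 \\ -1 & 1 & -1 \\ -1 & -1 & 1 \end{pmatrix},$$

which corresponds to the one-against-all formulation and lacks error-correcting capability. By introducing a don't-care output symbol $0$, the one-against-all, one-against-one, and ECOC schemes are unified [181]. With the columns corresponding to the class pairs (1, 2), (2, 3), and (1, 3), the one-against-one classification for three classes can be represented as follows:

$$\begin{pmatrix} 1 & 0 & 1 \\ -1 & 1 & 0 \\ 0 & -1 & -1 \end{pmatrix}.$$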

11. Dempster–Shafer Theory of Evidence

The Dempster–Shafer theory of evidence, introduced by Dempster [188] and expanded by Shafer [2], builds upon the concepts of upper and lower probabilities. It generalizes the Bayesian approach to probability by facilitating the combination of multiple pieces of evidence in order to make probability judgments. This framework is commonly used for fusing information from diverse sources to assess the truth of a hypothesis. Within Dempster–Shafer theory, two key concepts are employed: belief, which quantifies the support for a hypothesis based on the evidence, and plausibility, which measures the extent to which the evidence does not contradict the hypothesis. These concepts are analogous to necessity and possibility in fuzzy logic. The Dempster–Shafer approach utilizes intervals to represent uncertain probabilities, similar to interval-valued fuzzy sets. As a method for reasoning under uncertainty, Dempster–Shafer theory has found extensive application in fields such as expert systems, information fusion, and decision analysis.

Definition 1

(Frame of Discernment). A frame of discernment, denoted by Θ, is a finite set consisting of N mutually exclusive singleton hypotheses, representing the scope of knowledge for a given source. This can be expressed as follows:

$$\Theta = \{\theta_1, \theta_2, \ldots, \theta_N\}.$$

Each hypothesis $\theta_i$, known as a singleton, represents the simplest and most granular level of distinguishable information.

Definition 2

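(Basic Probability Assignment Function). The basic probability assignment (BPA) function, denoted as $m: 2^{\Theta} \to [0, 1]$, assigns a probability mass to each event (or proposition) within the frame of discernment Θ. The mapping must satisfy the following conditions:

$$m(\emptyset) = 0 \quad \text{and} \quad \sum_{A \subseteq \Theta} m(A) = 1.$$

The set $2^{\Theta}$ represents the power set of $\Theta$, which includes all possible subsets of $\Theta$. For example, if $\Theta = \{\theta_1, \theta_2\}$, then $2^{\Theta}$ consists of the following subsets: $\emptyset$, $\{\theta_1\}$, $\{\theta_2\}$, and $\{\theta_1, \theta_2\}$.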

In the context of a frame of discernment $\Theta$, an event $A \subseteq \Theta$ is considered a focal element of the BPA $m$ if $m(A) > 0$. When $m$ has only one focal element $A$ (so that $m(A) = 1$), the BPA is referred to as categorical and is written as $m_A$. If every focal element of $m$ is a singleton event, then $m$ is considered Bayesian.

The values assigned by a BPA function are referred to as belief masses. A BPA function is considered normal if $m(\emptyset) = 0$.

Definition 3

(Belief Function). For any hypothesis $A \subseteq \Theta$, its belief function, denoted as $\mathrm{Bel}(A)$, is a mapping from $2^{\Theta}$ to $[0, 1]$ defined as the sum of the basic probabilities of all subsets of $A$:

$$\mathrm{Bel}(A) = \sum_{B \subseteq A} m(B).$$

The belief function, also referred to as the lower limit function, quantifies the minimum support for a hypothesis $A$. It can be interpreted as a global indicator of the degree of belief that $A$ is true. By definition, $\mathrm{Bel}(\emptyset) = 0$ and $\mathrm{Bel}(\Theta) = 1$. Note that there is a one-to-one correspondence between BPAs and belief functions.

Definition 4

(Plausibility Function). The plausibility function of a hypothesis $A$, denoted $\mathrm{Pl}(A)$, measures the belief that is not assigned to the negation $\bar{A} = \Theta \setminus A$. It is defined as follows:

$$\mathrm{Pl}(A) = 1 - \mathrm{Bel}(\bar{A}).$$

The plausibility function, also known as the upper limit function, represents the maximum possible belief that can be assigned to $A$. While the plausibility function serves as a measure of possibility, the belief function is its dual counterpart, representing necessity. It can be shown that [2]

$$\mathrm{Pl}(A) = \sum_{B \cap A \neq \emptyset} m(B) \geq \mathrm{Bel}(A).$$

In the case where m is Bayesian, both the belief and plausibility functions coincide, effectively acting as a probability measure.
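A minimal sketch of Definitions 3 and 4, assuming a BPA is stored as a Python dict from frozensets (focal elements) to masses; this representation and the example masses are illustrative choices. It also checks numerically that $\mathrm{Pl}(A) = 1 - \mathrm{Bel}(\bar{A})$ and that belief and plausibility coincide for a Bayesian BPA.

```python
import math
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def belief(m: Mass, a: FrozenSet[str]) -> float:
    """Bel(A): total mass of the focal elements contained in A."""
    return sum(v for b, v in m.items() if b <= a)


def plausibility(m: Mass, a: FrozenSet[str]) -> float:
    """Pl(A): total mass of the focal elements that intersect A."""
    return sum(v for b, v in m.items() if b & a)


theta = frozenset("abc")
m = {frozenset("a"): 0.5, frozenset("ab"): 0.3, theta: 0.2}
a = frozenset("ab")
print(belief(m, a), plausibility(m, a))  # approximately 0.8 and 1.0
assert math.isclose(plausibility(m, a), 1.0 - belief(m, theta - a))

# For a Bayesian BPA (singleton focal elements only), Bel and Pl coincide.
bayes = {frozenset("a"): 0.2, frozenset("b"): 0.5, frozenset("c"): 0.3}
assert all(
    math.isclose(belief(bayes, s), plausibility(bayes, s))
    for s in (a, theta, frozenset("c"))
)
```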

Definition 5

(Uncertainty Function). The uncertainty associated with $A$ is given by the difference between the plausibility and belief functions:

$$U(A) = \mathrm{Pl}(A) - \mathrm{Bel}(A).$$

Definition 6

(Commonality Function). The commonality function quantifies the total BPA allocated to $A$ and to all sets that contain $A$ as a subset:

$$Q(A) = \sum_{B \supseteq A} m(B).$$

Belief functions are extensively used to represent and handle uncertainty. In Dempster–Shafer theory, Dempster's combination rule is employed to merge different belief sources. Under certain probabilistic assumptions, the outcomes of this rule are both probabilistically sound and interpretable. Known as the orthogonal sum, Dempster's rule synthesizes the BPAs from multiple sources into a new BPA. If $m_1$ and $m_2$ are the BPAs from two distinct sources within the same frame of discernment, their combination is given by [2]

$$m(A) = (m_1 \oplus m_2)(A) = \frac{1}{1 - \kappa} \sum_{B \cap C = A} m_1(B)\, m_2(C), \quad A \neq \emptyset, \qquad m(\emptyset) = 0,$$

where

$$\kappa = \sum_{B \cap C = \emptyset} m_1(B)\, m_2(C)$$

represents the conflict between the evidence sources. If $\kappa = 1$, the two pieces of evidence are deemed logically inconsistent and cannot be combined. To apply Dempster's rule in cases of high conflict, the conflicting belief mass can be assigned to the empty set, in line with the open-world assumption. This assumption posits that some potential event may have been overlooked and thus is not represented in the frame of discernment [189].
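A direct transcription of the two-source rule, using an illustrative dict-of-frozensets representation for BPAs (an assumption, as are the example masses and the function name): products whose focal elements have an empty intersection are accumulated into the conflict $\kappa$, and the remaining products are renormalized by $1 - \kappa$.

```python
import math
from typing import Dict, FrozenSet, Tuple

Mass = Dict[FrozenSet[str], float]


def dempster_combine(m1: Mass, m2: Mass) -> Tuple[Mass, float]:
    """Combine two BPAs with Dempster's rule; return the result and the conflict kappa."""
    joint: Mass = {}
    kappa = 0.0
    for b, v1 in m1.items():
        for c, v2 in m2.items():
            inter = b & c
            if inter:
                joint[inter] = joint.get(inter, 0.0) + v1 * v2
            else:
                kappa += v1 * v2  # product mass falling on the empty set
    if math.isclose(kappa, 1.0):
        raise ValueError("kappa = 1: the sources are totally conflicting")
    return {a: v / (1.0 - kappa) for a, v in joint.items()}, kappa


theta = frozenset("ab")
m1 = {frozenset("a"): 0.6, theta: 0.4}
m2 = {frozenset("b"): 0.5, theta: 0.5}
combined, kappa = dempster_combine(m1, m2)
print(round(kappa, 3), {"".join(sorted(s)): round(v, 3) for s, v in combined.items()})
# 0.3 {'a': 0.429, 'b': 0.286, 'ab': 0.286}
```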

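In general, the combination of $n$ BPAs $m_1, m_2, \ldots, m_n$ within the same frame of discernment $\Theta$ is computed as follows:

$$m(A) = \frac{1}{1 - \kappa} \sum_{A_1 \cap A_2 \cap \cdots \cap A_n = A} \prod_{i=1}^{n} m_i(A_i), \quad A \neq \emptyset,$$

where

$$\kappa = \sum_{A_1 \cap A_2 \cap \cdots \cap A_n = \emptyset} \prod_{i=1}^{n} m_i(A_i).$$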

In Dempster–Shafer theory, exact fusion with Dempster's rule of combination is computationally hard (it has been shown to be #P-complete), which limits the number of belief functions and focal sets that can be combined exactly. To overcome this challenge, Monte Carlo algorithms [190] use importance sampling and low-discrepancy sequences to approximate the results of Dempster's rule.
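The sketch below contrasts the exact $n$-source rule, which enumerates every combination of focal elements (their number is the product of the numbers of focal elements of the sources, hence exponential in $n$), with a plain Monte Carlo estimate that draws one focal element per source, discards samples whose intersection is empty, and uses the relative frequencies of the surviving intersections. This simple rejection sampler is a swapped-in illustration, not the importance-sampling or low-discrepancy algorithms of [190]; the dict-of-frozensets representation, function names, and example masses are assumptions.

```python
import math
import operator
import random
from functools import reduce
from itertools import product
from typing import Dict, FrozenSet, List

Mass = Dict[FrozenSet[str], float]


def dempster_exact(masses: List[Mass]) -> Mass:
    """Exact n-source Dempster rule by enumerating all tuples (A_1, ..., A_n)."""
    joint: Mass = {}
    kappa = 0.0
    for combo in product(*(list(m.items()) for m in masses)):
        inter = reduce(operator.and_, (a for a, _ in combo))
        weight = math.prod(v for _, v in combo)
        if inter:
            joint[inter] = joint.get(inter, 0.0) + weight
        else:
            kappa += weight
    return {a: v / (1.0 - kappa) for a, v in joint.items()}


def dempster_monte_carlo(masses: List[Mass], n_samples: int, rng: random.Random) -> Mass:
    """Rejection-sampling estimate of the combined BPA."""
    counts: Dict[FrozenSet[str], int] = {}
    accepted = 0
    for _ in range(n_samples):
        # Draw one focal element from each source according to its masses.
        drawn = [rng.choices(list(m), weights=list(m.values()))[0] for m in masses]
        inter = reduce(operator.and_, drawn)
        if inter:  # samples landing on the empty set are rejected
            counts[inter] = counts.get(inter, 0) + 1
            accepted += 1
    if accepted == 0:
        raise ValueError("every sample was conflicting; increase n_samples")
    return {a: c / accepted for a, c in counts.items()}


theta = frozenset("abc")
sources = [
    {frozenset("a"): 0.6, theta: 0.4},
    {frozenset("ab"): 0.7, theta: 0.3},
    {frozenset("b"): 0.3, theta: 0.7},
]
exact = dempster_exact(sources)
approx = dempster_monte_carlo(sources, 20000, random.Random(42))
for focal in exact:
    print("".join(sorted(focal)), round(exact[focal], 3), round(approx.get(focal, 0.0), 3))
```

Conditioning the sampled intersection on being non-empty reproduces the normalization by $1 - \kappa$, which is why the accepted-sample frequencies estimate the combined BPA.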

One key advantage of the Dempster–Shafer method over the Bayesian approach is that it does not require prior probability distributions; it combines the available evidence directly. However, the Dempster–Shafer method is not directly applicable to fuzzy systems, because it assumes that the hypotheses are mutually exclusive.

Dempster's rule presumes that the classifiers being combined are independent. For combining dependent classifiers, alternatives such as the cautious rule and t-norm-based rules can be used; the latter provide a range of behaviors between Dempster's rule and the cautious rule [191]. An optimal combination rule can then be derived from a parameterized family of t-norms.

Another limitation of Dempster’s rule is that it treats all sources of evidence as equally reliable. In certain cases, particularly when there is substantial conflict among evidence sources, counterintuitive results may arise [192]. A measure of falsity, derived from the conflict coefficient using Dempster’s rule, has been proposed to address this issue [193]. Based on this measure, discounting factors can be introduced to adjust the evidence combination process.
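The text does not specify how the discounting factors are computed from the falsity measure of [193]. Assuming a reliability factor $\alpha \in [0, 1]$ has already been obtained for a source, the classical discounting operation of Dempster–Shafer theory (shown here as a generic illustration, not the specific scheme of [193]) scales every mass on a focal element other than $\Theta$ by $\alpha$ and transfers the remaining $1 - \alpha$ to $\Theta$, so that a less reliable source moves closer to total ignorance. The function name and example values are hypothetical.

```python
import math
from typing import Dict, FrozenSet

Mass = Dict[FrozenSet[str], float]


def discount(m: Mass, theta: FrozenSet[str], alpha: float) -> Mass:
    """Classical discounting of a BPA with reliability alpha in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # Masses on focal elements other than Theta are scaled by the reliability.
    out: Mass = {a: alpha * v for a, v in m.items() if a != theta}
    # The withdrawn mass 1 - alpha is moved to Theta (total ignorance).
    out[theta] = 1.0 - alpha + alpha * m.get(theta, 0.0)
    return out


theta = frozenset("ab")
m = {frozenset("a"): 0.9, frozenset("b"): 0.1}
half_reliable = discount(m, theta, 0.5)
print({"".join(sorted(s)): round(v, 3) for s, v in half_reliable.items()})
# {'a': 0.45, 'b': 0.05, 'ab': 0.5}
```

With $\alpha = 1$ the BPA is unchanged, and with $\alpha = 0$ all mass moves to $\Theta$, giving the vacuous BPA, which leaves any subsequent Dempster combination unaffected.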