Generalization of Liu–Zhou Method for Multiple Roots of Applied Science Problems

Abstract

1. Introduction

2. Basic Definitions

2.1. Multiple Root

2.2. Order of Convergence

2.3. Error Equation

2.4. Computational Order of Convergence

2.5. Kung–Traub Hypothesis

3. Formulation of Scheme

Special Members of Scheme (3)

4. Numerical Simulation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Azarmanesh, M.; Dejam, M.; Azizian, P.; Yesiloz, G.; Mohamad, A.A.; Sanati-Nezhad, A. Passive microinjection within high-throughput microfluidics for controlled actuation of droplets and cells. Sci. Rep. 2019, 9, 6723.

2. Dejam, M. Advective-diffusive-reactive solute transport due to non-Newtonian fluid flows in a fracture surrounded by a tight porous medium. Int. J. Heat Mass Transf. 2019, 128, 1307–1321.

3. Nikpoor, M.H.; Dejam, M.; Chen, Z.; Clarke, M. Chemical-Gravity-Thermal Diffusion Equilibrium in Two-Phase Non-isothermal Petroleum Reservoirs. Energy Fuels 2016, 30, 2021–2034.

4. Ostrowski, A.M. Solution of Equations and Systems of Equations; Academic Press: New York, NY, USA, 1960.

5. Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

6. Petkovic, M.S.; Neta, B.; Petkovic, L.D.; Dzunic, J. Multipoint Methods for Solving Nonlinear Equations; Academic Press: New York, NY, USA, 2013.

7. Kung, H.T.; Traub, J.F. Optimal order of one-point and multipoint iteration. J. Assoc. Comput. Mach. 1974, 21, 643–651.

8. Behl, R.; Cordero, A.; Motsa, S.S.; Torregrosa, J.R. On developing fourth-order optimal families of methods for multiple roots and their dynamics. Appl. Math. Comput. 2015, 265, 520–532.

9. Behl, R.; Cordero, A.; Motsa, S.S.; Torregrosa, J.R.; Kanwar, V. An optimal fourth-order family of methods for multiple roots and its dynamics. Numer. Algor. 2016, 71, 775–796.

10. Behl, R.; Zafar, F.; Alshormani, A.S.; Junjua, M.U.D.; Yasmin, N. An optimal eighth-order scheme for multiple zeros of univariate functions. Int. J. Comput. Meth. 2018, 16, 1843002.

11. Dong, C. A family of multipoint iterative functions for finding multiple roots of equations. Int. J. Comput. Math. 1987, 21, 363–367.

12. Geum, Y.H.; Kim, Y.I.; Neta, B. A class of two-point sixth-order multiple-zero finders of modified double-Newton type and their dynamics. Appl. Math. Comput. 2015, 270, 387–400.

13. Hansen, E.; Patrick, M. A family of root finding methods. Numer. Math. 1977, 27, 257–269.

14. Hueso, J.L.; Martínez, E.; Teruel, C. Determination of multiple roots of nonlinear equations and applications. J. Math. Chem. 2015, 53, 880–892.

15. Kansal, M.; Kanwar, V.; Bhatia, S. On some optimal multiple root-finding methods and their dynamics. Appl. Appl. Math. 2015, 10, 349–367.

16. Li, S.; Liao, X.; Cheng, L. A new fourth-order iterative method for finding multiple roots of nonlinear equations. Appl. Math. Comput. 2009, 215, 1288–1292.

17. Li, S.G.; Cheng, L.Z.; Neta, B. Some fourth-order nonlinear solvers with closed formulae for multiple roots. Comput. Math. Appl. 2010, 59, 126–135.

18. Liu, B.; Zhou, X. A new family of fourth-order methods for multiple roots of nonlinear equations. Nonlinear Anal. Model. Control 2013, 21, 143–152.

19. Neta, B. New third order nonlinear solvers for multiple roots. Appl. Math. Comput. 2008, 202, 162–170.

20. Neta, B. Extension of Murakami’s high-order non-linear solver to multiple roots. Int. J. Comput. Math. 2010, 87, 1023–1031.

21. Osada, N. An optimal multiple root-finding method of order three. J. Comput. Appl. Math. 1994, 51, 131–133.

22. Sharma, J.R.; Kumar, S. An excellent numerical technique for multiple roots. Math. Comput. Simul. 2021, 182, 316–324.

23. Sharma, J.R.; Kumar, S. A class of computationally efficient numerical algorithms for locating multiple zeros. Afr. Mat. 2021, 32, 853–864.

24. Sharma, J.R.; Sharma, R. Modified Jarratt method for computing multiple roots. Appl. Math. Comput. 2010, 217, 878–881.

25. Sharifi, M.; Babajee, D.K.R.; Soleymani, F. Finding the solution of nonlinear equations by a class of optimal methods. Comput. Math. Appl. 2012, 63, 764–774.

26. Soleymani, F.; Babajee, D.K.R.; Lotfi, T. On a numerical technique for finding multiple zeros and its dynamics. J. Egypt. Math. Soc. 2013, 21, 346–353.

27. Soleymani, F.; Babajee, D.K.R. Computing multiple zeros using a class of quartically convergent methods. Alex. Eng. J. 2013, 52, 531–541.

28. Thukral, R. A new family of fourth-order iterative methods for solving nonlinear equations with multiple roots. J. Numer. Math. Stoch. 2014, 6, 37–44.

29. Victory, H.D.; Neta, B. A higher order method for multiple zeros of nonlinear functions. Int. J. Comput. Math. 1983, 12, 329–335.

30. Zhou, X.; Chen, X.; Song, Y. Constructing higher-order methods for obtaining the multiple roots of nonlinear equations. J. Comput. Appl. Math. 2011, 235, 4199–4206.

31. Zhou, X.; Chen, X.; Song, Y. Families of third and fourth order methods for multiple roots of nonlinear equations. Appl. Math. Comput. 2013, 219, 6030–6038.

32. Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

33. Douglas, J.M. Process Dynamics and Control; Prentice Hall: Englewood Cliffs, NJ, USA, 1972.

34. Hoffman, J.D. Numerical Methods for Engineers and Scientists; McGraw-Hill Book Company: New York, NY, USA, 1992.

35. Kumar, S.; Kumar, D.; Sharma, J.R.; Cesarano, C.; Agarwal, P.; Chu, Y.M. An Optimal Fourth Order Derivative-Free Numerical Algorithm for Multiple Roots. Symmetry 2020, 12, 1038.

| Functions | Root | Multiplicity | Initial guess |
|---|---|---|---|
| Continuous stirred tank reactor problem [33] | −2.85 | 2 | −3 |
| Standard test problem | 0 | 3 | 0.6 |
| Isentropic supersonic flow problem [34] | 1.8411294068… | 3 | 1.5 |
| Eigenvalue problem [10] | 3 | 4 | 2.3 |
| Complex root problem [35] | i | 6 | 1.1 i |

| Methods | i | COC |
|---|---|---|
| LLC | 4 | 4.000 |
| LCN | 4 | 4.000 |
| SM | 4 | 4.000 |
| ZCS | 4 | 4.000 |
| SBL | 4 | 4.000 |
| KKB | 4 | 4.000 |
| LZ-1 | 4 | 4.000 |
| LZ-2 | 4 | 4.000 |
| M-1 | 4 | 4.000 |
| M-2 | 4 | 4.000 |
| M-3 | 4 | 4.000 |
| M-4 | 4 | 4.000 |

| Methods | i | COC |
|---|---|---|
| LLC | 5 | 4.000 |
| LCN | 5 | 4.000 |
| SM | 5 | 4.000 |
| ZCS | 5 | 4.000 |
| SBL | 5 | 4.000 |
| KKB | 5 | 4.000 |
| LZ-1 | 5 | 4.000 |
| LZ-2 | 5 | 4.000 |
| M-1 | 5 | 4.000 |
| M-2 | 5 | 4.000 |
| M-3 | 5 | 4.000 |
| M-4 | 5 | 4.000 |

| Methods | i | COC |
|---|---|---|
| LLC | 5 | 4.000 |
| LCN | 5 | 4.000 |
| SM | 5 | 4.000 |
| ZCS | 5 | 4.000 |
| SBL | 5 | 4.000 |
| KKB | 5 | 4.000 |
| LZ-1 | 5 | 4.000 |
| LZ-2 | 5 | 4.000 |
| M-1 | 5 | 4.000 |
| M-2 | 5 | 4.000 |
| M-3 | 5 | 4.000 |
| M-4 | 5 | 4.000 |

| Methods | i | COC |
|---|---|---|
| LLC | 5 | 4.000 |
| LCN | 5 | 4.000 |
| SM | 5 | 4.000 |
| ZCS | 5 | 4.000 |
| SBL | 5 | 4.000 |
| KKB | 5 | 4.000 |
| LZ-1 | 5 | 4.000 |
| LZ-2 | 5 | 4.000 |
| M-1 | 5 | 4.000 |
| M-2 | 5 | 4.000 |
| M-3 | 5 | 4.000 |
| M-4 | 5 | 4.000 |

| Methods | i | COC |
|---|---|---|
| LLC | 5 | 4.000 |
| LCN | 5 | 4.000 |
| SM | 5 | 4.000 |
| ZCS | 5 | 4.000 |
| SBL | 5 | 4.000 |
| KKB | 5 | 4.000 |
| LZ-1 | 5 | 4.000 |
| LZ-2 | 5 | 4.000 |
| M-1 | 5 | 4.000 |
| M-2 | 5 | 4.000 |
| M-3 | 5 | 4.000 |
| M-4 | 5 | 4.000 |
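As a rough illustration of how a COC column like the ones above might be produced, the sketch below estimates the computational order of convergence from three consecutive differences of iterates, using the commonly quoted formula COC ≈ ln|(x_{k+2} − x_{k+1})/(x_{k+1} − x_k)| / ln|(x_{k+1} − x_k)/(x_k − x_{k−1})|. It is assumed, not confirmed here, that this matches the definition used in Section 2.4. The iteration is the classical modified Newton method for a root of known multiplicity m, used only as a second-order stand-in; it is not any of the fourth-order methods listed in the tables. The test function, starting point, and helper names (`modified_newton`, `coc`) are hypothetical choices for this example.

```python
from fractions import Fraction
import math


def modified_newton(f, df, m, x0, steps):
    """Stand-in scheme: x_{k+1} = x_k - m * f(x_k) / f'(x_k).

    Exact rational arithmetic avoids the rounding noise that
    multiple roots cause in floating point.
    """
    xs = [Fraction(x0)]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x - m * f(x) / df(x))
    return xs


def coc(xs):
    """Estimate the order from the last four iterates."""
    if len(xs) < 4:
        raise ValueError("need at least four iterates")
    d0 = abs(xs[-3] - xs[-4])
    d1 = abs(xs[-2] - xs[-3])
    d2 = abs(xs[-1] - xs[-2])
    return math.log(float(d2 / d1)) / math.log(float(d1 / d0))


# Hypothetical test function with a root of multiplicity 3 at x = 1:
# f(x) = (x - 1)^3 (x + 1), so f'(x) = (x - 1)^2 (4x + 2).
def f(x):
    return (x - 1) ** 3 * (x + 1)


def df(x):
    return (x - 1) ** 2 * (4 * x + 2)


iterates = modified_newton(f, df, m=3, x0=Fraction(3, 2), steps=5)
# For this second-order stand-in the estimate should be close to 2.
print(f"COC estimate: {coc(iterates):.3f}")
```

Swapping `modified_newton` for an implementation of a fourth-order scheme would, under the same assumptions, be expected to give estimates near 4, in line with the tabulated values.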

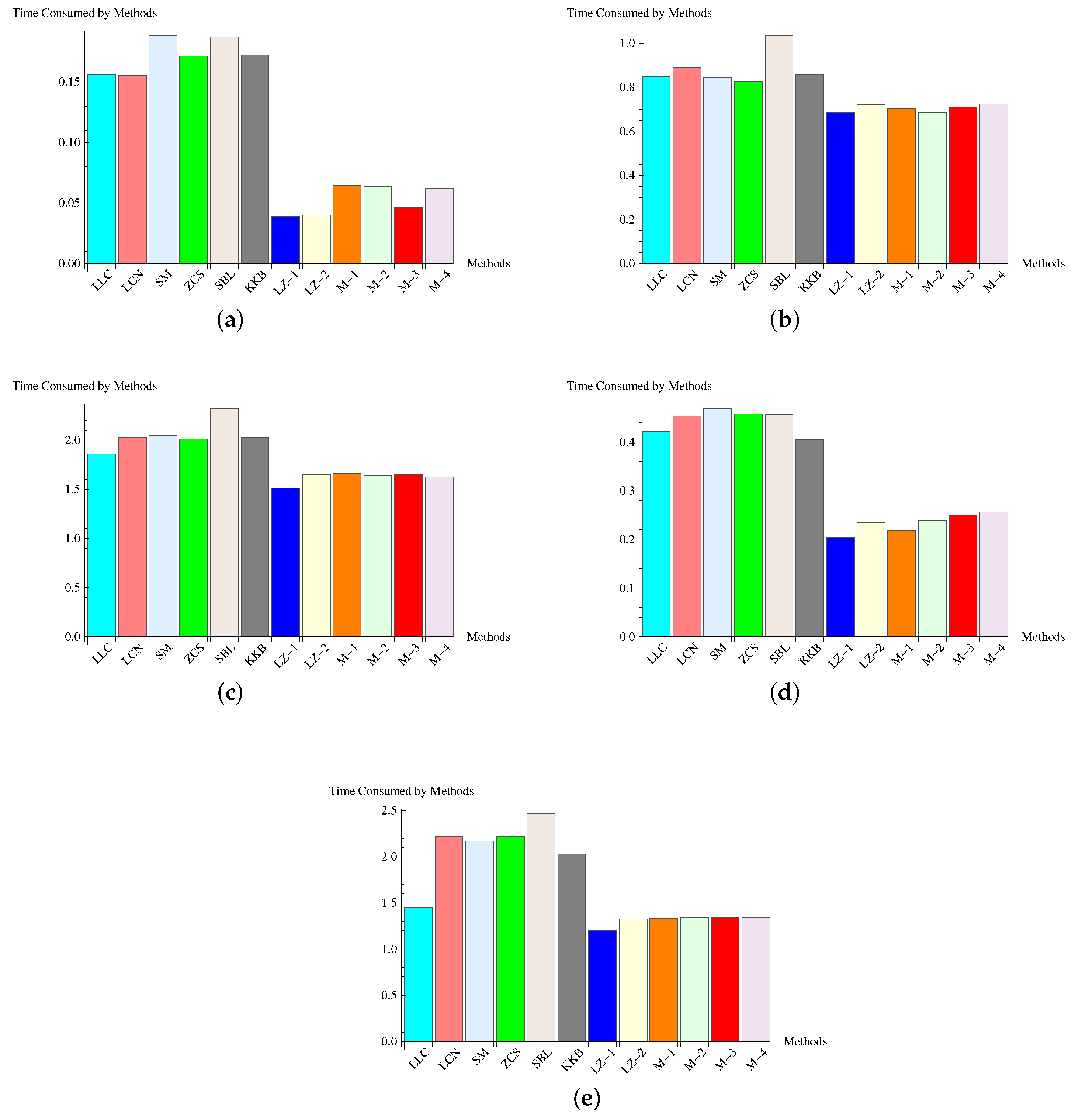

| Methods | | | | | |
|---|---|---|---|---|---|
| LLC | 0.1562 | 0.8491 | 1.8570 | 0.4217 | 1.4506 |
| LCN | 0.1557 | 0.8897 | 2.0283 | 0.4534 | 2.2164 |
| SM | 0.1884 | 0.8433 | 2.0442 | 0.4681 | 2.1681 |
| ZCS | 0.1714 | 0.8265 | 2.0129 | 0.4575 | 2.2165 |
| SBL | 0.1873 | 1.0342 | 2.3187 | 0.4567 | 2.4653 |
| KKB | 0.1723 | 0.8587 | 2.0284 | 0.4052 | 2.0287 |
| LZ-1 | 0.0392 | 0.6867 | 1.5138 | 0.2029 | 1.2015 |
| LZ-2 | 0.0399 | 0.7221 | 1.6533 | 0.2347 | 1.3261 |
| M-1 | 0.0648 | 0.7024 | 1.6592 | 0.2184 | 1.3326 |
| M-2 | 0.0637 | 0.6870 | 1.6387 | 0.2392 | 1.3427 |
| M-3 | 0.0461 | 0.7107 | 1.6534 | 0.2501 | 1.3419 |
| M-4 | 0.0624 | 0.7231 | 1.6239 | 0.2562 | 1.3426 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kumar, S.; Khatri, M.; Vyas, M.; Kumar, A.; Dhankhar, P.; Jäntschi, L. Generalization of Liu–Zhou Method for Multiple Roots of Applied Science Problems. Mathematics 2025, 13, 523. https://doi.org/10.3390/math13030523
