Complex-Valued Multivariate Neural Network (MNN) Approximation by Parameterized Half-Hyperbolic Tangent Function

Abstract

1. Introduction

2. Preliminaries

- for

- Reciprocal anti-symmetry: is satisfied for .

- Since its derivative is positive for every x ∈ ℝ, the activation function is strictly increasing over ℝ.
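The properties listed above can be checked numerically once a concrete formula is fixed. The formula is not reproduced in this section, so this minimal sketch assumes the q-deformed, λ-parametrized half-hyperbolic tangent g_{q,λ}(x) = (1 − q e^{−λx})/(1 + q e^{−λx}) with q, λ > 0. That form and the names `g`, `g_prime`, `q` and `lam` are assumptions for illustration. Under this assumption, g_{q,λ}(−x) = −g_{1/q,λ}(x), and g'_{q,λ}(x) = 2λq e^{−λx}/(1 + q e^{−λx})² > 0. The code tests both facts on a finite grid.

```python
import math
from typing import Iterable


def g(x: float, q: float, lam: float) -> float:
    """Assumed activation g_{q,lam}(x) = (1 - q e^{-lam x}) / (1 + q e^{-lam x}), q, lam > 0."""
    if lam * x >= 0:
        u = q * math.exp(-lam * x)  # exp argument <= 0, so no overflow
        return (1.0 - u) / (1.0 + u)
    v = math.exp(lam * x)  # v < 1; same value rewritten as (v - q) / (v + q)
    return (v - q) / (v + q)


def g_prime(x: float, q: float, lam: float) -> float:
    """Closed-form derivative 2*lam*q*e^{-lam x} / (1 + q e^{-lam x})^2, which is > 0."""
    if lam * x >= 0:
        u = q * math.exp(-lam * x)
        return 2.0 * lam * u / (1.0 + u) ** 2
    v = math.exp(lam * x)
    return 2.0 * lam * q * v / (v + q) ** 2


def has_reciprocal_antisymmetry(q: float, lam: float, xs: Iterable[float], tol: float = 1e-12) -> bool:
    """Check g_{q,lam}(-x) == -g_{1/q,lam}(x) at every sample point."""
    return all(abs(g(-x, q, lam) + g(x, 1.0 / q, lam)) <= tol for x in xs)


def is_strictly_increasing(q: float, lam: float, xs: Iterable[float]) -> bool:
    """Check g' > 0 at each point and that values strictly increase along the sorted sample."""
    pts = sorted(xs)
    vals = [g(x, q, lam) for x in pts]
    positive_slope = all(g_prime(x, q, lam) > 0 for x in pts)
    increasing = all(a < b for a, b in zip(vals, vals[1:]))
    return positive_slope and increasing


if __name__ == "__main__":
    grid = [i / 4 for i in range(-40, 41)]  # sample points in [-10, 10]
    q, lam = 0.7, 1.5  # illustrative parameter values
    print(has_reciprocal_antisymmetry(q, lam, grid))  # True
    print(is_strictly_increasing(q, lam, grid))  # True
    # With q = 1 the assumed function reduces to tanh(lam * x / 2).
    print(abs(g(3.0, 1.0, 2.0) - math.tanh(3.0)) < 1e-12)  # True
```

The two branches evaluate the same expression without overflow for large |x|. A grid check like this only illustrates the properties and does not replace the analytic argument.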

3. ℂ-Valued Normalized MNN Operators

4. Approximation Results

5. Conclusions and Discussion

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Symbol | Description |
|---|---|
| ℝ; ℂ | The set of real numbers; Banach space of complex numbers |
| ℕ | The set of natural numbers |
| | Arbitrarily chosen natural numbers |
| | Mathematical formulation of an “ANN architecture” based upon multiple hidden layers |
| | An -dimensional element from the set of |
| | Closed subinterval of |
| | An element of |
| | A general activation function of an ANN architecture |
| | Connection weights of an ANN architecture |
| | Thresholds of an ANN architecture |
| | Parameterized half-hyperbolic tangent activation function |
| | Parameters of the parameterized half-hyperbolic tangent activation function |
| | Density function |
| | Density function for the multivariate case |
| | Supremum norm in the multivariate case |
| | Complex-valued linear normalized MNN operator |
| | Complex-valued linear normalized MNN associate operator |
| | A component of the operator |
| | The first modulus of continuity |
| | Remainder of the multivariate hyperbolic Taylor formula |

References

- Ansari, K.J.; Özger, F. Pointwise and weighted estimates for Bernstein-Kantorovich type operators including beta function. Indian J. Pure Appl. Math. 2024. [Google Scholar] [CrossRef]

- Savaş, E.; Mursaleen, M. Bézier Type Kantorovich q-Baskakov Operators via Wavelets and Some Approximation Properties. Bull. Iran. Math. Soc. 2023, 49, 68. [Google Scholar] [CrossRef]

- Cai, Q.; Aslan, R.; Özger, F.; Srivastava, H.M. Approximation by a new Stancu variant of generalized (λ,μ)-Bernstein operators. Alex. Eng. J. 2024, 107, 205–214. [Google Scholar] [CrossRef]

- Ayman-Mursaleen, M.; Zaman, M.N.; Sharma, S.K.; Cai, Q.-B. Invariant means and lacunary sequence spaces of order (α,β). Demonstr. Math. 2024, 57, 20240003. [Google Scholar] [CrossRef]

- Rao, N.; Ayman-Mursaleen, M.; Aslan, R. A note on a general sequence of λ-Szász Kantorovich type operators. Comput. Appl. Math. 2024, 43, 428. [Google Scholar] [CrossRef]

- Alamer, A.; Nasiruzzaman, M. Approximation by Stancu variant of λ-Bernstein shifted knots operators associated by Bézier basis function. J. King Saud Univ. Sci. 2024, 36, 103333. [Google Scholar] [CrossRef]

- Ayman-Mursaleen, M.; Zaman, M.N.; Rao, N.; Dilshad, M. Approximation by the modified λ-Bernstein-polynomial in terms of basis function. AIMS Math. 2024, 9, 4409–4426. [Google Scholar] [CrossRef]

- Mudarra, A.; Valdivia, D.; Ducange, P.; Germán, M.; Rivera, A.J.; Pérez-Godoy, M.D. Nets4Learning: A Web Platform for Designing and Testing ANN/DNN Models. Electronics 2024, 13, 4378. [Google Scholar] [CrossRef]

- Rashedi, K.A.; Ismail, M.T.; Al Wadi, S.; Serroukh, A.; Alshammari, T.S.; Jaber, J.J. Multi-Layer Perceptron-Based Classification with Application to Outlier Detection in Saudi Arabia Stock Returns. J. Risk Financ. Manag. 2024, 17, 69. [Google Scholar] [CrossRef]

- Minsky, M.; Papert, S. Perceptrons; MIT Press: Cambridge, MA, USA, 1969. [Google Scholar]

- Hornik, K.; Stinchcombe, M.; White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 1989, 2, 359–366. [Google Scholar] [CrossRef]

- Kolmogorov, A.N. On the representation of continuous functions of many variables by superposition of continuous functions of one variable and addition. Transl. Am. Math. Soc. 1963, 2, 55–59. [Google Scholar]

- Hecht-Nielsen, R. Kolmogorov’s mapping neural network existence theorem. In Proceedings of the International Conference on Neural Networks, San Diego, CA, USA, 21–24 June 1987; IEEE Press: New York, NY, USA, 1987; pp. 11–14. [Google Scholar]

- Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control. Signals Syst. 1989, 2, 303–314. [Google Scholar] [CrossRef]

- Funahashi, K.I. On the approximate realization of continuous mappings by neural networks. Neural Netw. 1989, 2, 183–192. [Google Scholar] [CrossRef]

- Chen, T.; Chen, H. Universal approximation to nonlinear operators by neural networks with arbitrary activation functions and its application to dynamical systems. IEEE Trans. Neural Netw. 1995, 6, 911–917. [Google Scholar] [CrossRef]

- Chui, C.K.; Li, X. Approximation by ridge functions and neural networks with one hidden layer. J. Approx. Theory 1992, 70, 131–141. [Google Scholar] [CrossRef]

- Hahm, N.; Hong, B.I. An approximation by neural networks with a fixed weight. Comput. Math. Appl. 2004, 47, 1897–1903. [Google Scholar] [CrossRef]

- Costarelli, D.; Spigler, R. Approximation results for neural network operators activated by sigmoidal functions. Neural Netw. 2013, 44, 101–106. [Google Scholar] [CrossRef]

- Costarelli, D.; Spigler, R. Multivariate neural network operators with sigmoidal activation functions. Neural Netw. 2013, 48, 72–77. [Google Scholar] [CrossRef]

- Costarelli, D. Neural network operators: Constructive interpolation of multivariate functions. Neural Netw. 2015, 67, 28–36. [Google Scholar] [CrossRef]

- Anastassiou, G.A. Banach Space Valued Ordinary and Fractional Neural Network Approximation Based on q-Deformed and β-Parametrized Half Hyperbolic Tangent. In Parametrized, Deformed and General Neural Networks; Studies in Computational Intelligence; Springer: Cham, Switzerland, 2023; Volume 1116. [Google Scholar]

- Pinkus, A. Approximation theory of the MLP model in neural networks. Acta Numer. 1999, 8, 143–195. [Google Scholar] [CrossRef]

- Ismailov, V.E. Ridge Functions and Applications in Neural Networks, Mathematical Surveys and Monographs, Vol. 263; American Mathematical Society: Providence, RI, USA, 2021; p. 186. [Google Scholar]

- Costarelli, D.; Piconi, M. Implementation of neural network operators with applications to remote sensing data. arXiv 2024, arXiv:2412.00375. [Google Scholar]

- Angeloni, L.; Bloisi, D.D.; Burghignoli, P.; Comite, D.; Costarelli, D.; Piconi, M.; Sambucini, A.R.; Troiani, A.; Veneri, A. Microwave Remote Sensing of Soil Moisture, Above Ground Biomass and Freeze-Thaw Dynamic: Modeling and Empirical Approaches. arXiv 2024, arXiv:2412.03523. [Google Scholar]

- Baxhaku, F.; Berisha, A.; Agrawal, P.N.; Baxhaku, B. Multivariate neural network operators activated by smooth ramp functions. Expert Syst. Appl. 2025, 269, 126119. [Google Scholar] [CrossRef]

- Kadak, U. Multivariate fuzzy neural network interpolation operators and applications to image processing. Expert Syst. Appl. 2022, 206, 117771. [Google Scholar] [CrossRef]

- Costarelli, D.; Sambucini, A.R. A comparison among a fuzzy algorithm for image rescaling with other methods of digital image processing. Constr. Math. Anal. 2024, 7, 45–68. [Google Scholar] [CrossRef]

- Karateke, S. Some Mathematical Properties of Flexible Hyperbolic Tangent Activation Function with Application to Deep Neural Networks. 2025; accepted. [Google Scholar]

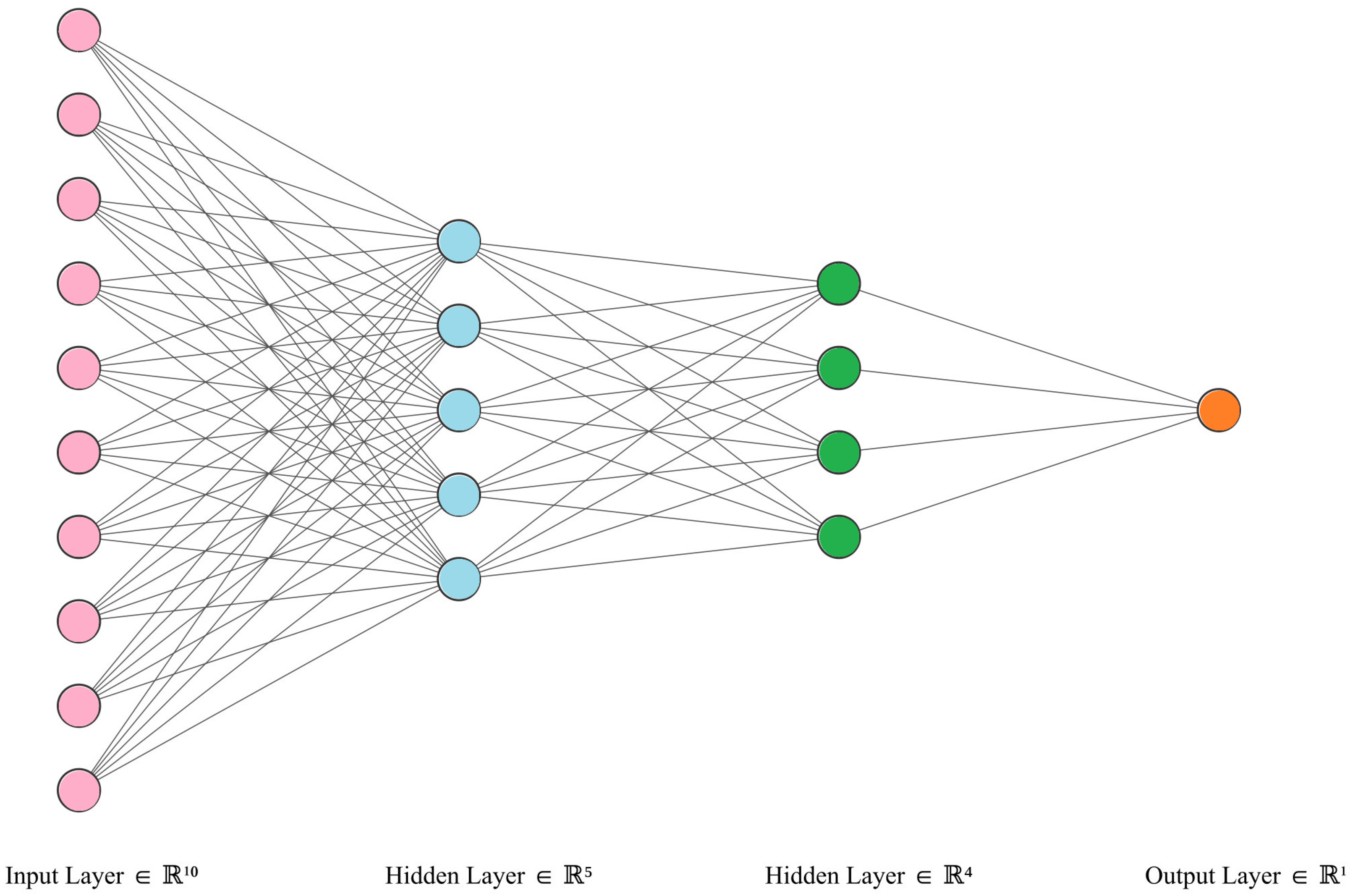

- Available online: https://alexlenail.me/NN-SVG/ (accessed on 26 January 2025).

- Anastassiou, G.A. Multivariate hyperbolic tangent neural network approximation. Comput. Math. 2011, 61, 809–821. [Google Scholar]

- Anastassiou, G.A. Rate of convergence of some neural network operators to the unit-univariate case. J. Math. Anal. Appl. 1997, 212, 237–262. [Google Scholar] [CrossRef]

- Anastassiou, G.A. Multivariate sigmoidal neural network approximation. Neural Netw. 2011, 24, 378–386. [Google Scholar] [CrossRef]

- Anastassiou, G.A. Approximation by neural networks iterates. In Advances in Applied Mathematics and Approximation Theory, Springer Proceedings in Mathematics & Statistics; Anastassiou, G., Duman, O., Eds.; Springer: New York, NY, USA, 2013; pp. 1–20. [Google Scholar]

- Anastassiou, G. Intelligent Systems II: Complete Approximation by Neural Network Operators; Springer: Heidelberg, NY, USA, 2016. [Google Scholar]

- Anastassiou, G.A. Intelligent Computations: Abstract Fractional Calculus, Inequalities, Approximations; Springer: Heidelberg, NY, USA, 2018. [Google Scholar]

- Karateke, S. On an (ι,x0)-Generalized Logistic-Type Function. Fundam. J. Math. Appl. 2024, 7, 35–52. [Google Scholar] [CrossRef]

- Haykin, S. Neural Networks: A Comprehensive Foundation, 2nd ed.; Prentice Hall: New York, NY, USA, 1998. [Google Scholar]

- McCulloch, W.; Pitts, W. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 1943, 7, 115–133. [Google Scholar] [CrossRef]

- Mitchell, T.M. Machine Learning; WCB-McGraw-Hill: New York, NY, USA, 1997. [Google Scholar]

- Anastassiou, G.A.; Karateke, S. Parametrized Half-Hyperbolic Tangent Function-Activated Complex-Valued Neural Network Approximation. Symmetry 2024, 16, 1568. [Google Scholar] [CrossRef]

- Arai, A. Exactly solvable supersymmetric quantum mechanics. J. Math. Anal. Appl. 1991, 158, 63–79. [Google Scholar] [CrossRef]

- Anastassiou, G.A. Perturbed Hyperbolic Tangent Function-Activated Complex-Valued Trigonometric and Hyperbolic Neural Network High Order Approximation. In Trigonometric and Hyperbolic Generated Approximation Theory; World Scientific: Singapore, 2025. [Google Scholar]

- Anastassiou, G.A. Opial and Ostrowski Type Inequalities Based on Trigonometric and Hyperbolic Type Taylor Formulae. Malaya J. Mat. 2023, 11, 1–26. [Google Scholar] [CrossRef]

- Ali, H.A.; Pales, Z. Taylor-type expansions in terms of exponential polynomials. Math. Inequalities Appl. 2022, 25, 1123–1141. [Google Scholar] [CrossRef]


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karateke, S. Complex-Valued Multivariate Neural Network (MNN) Approximation by Parameterized Half-Hyperbolic Tangent Function. Mathematics 2025, 13, 453. https://doi.org/10.3390/math13030453
