EdgeFormer-YOLO: A Lightweight Multi-Attention Framework for Real-Time Red-Fruit Detection in Complex Orchard Environments

Abstract

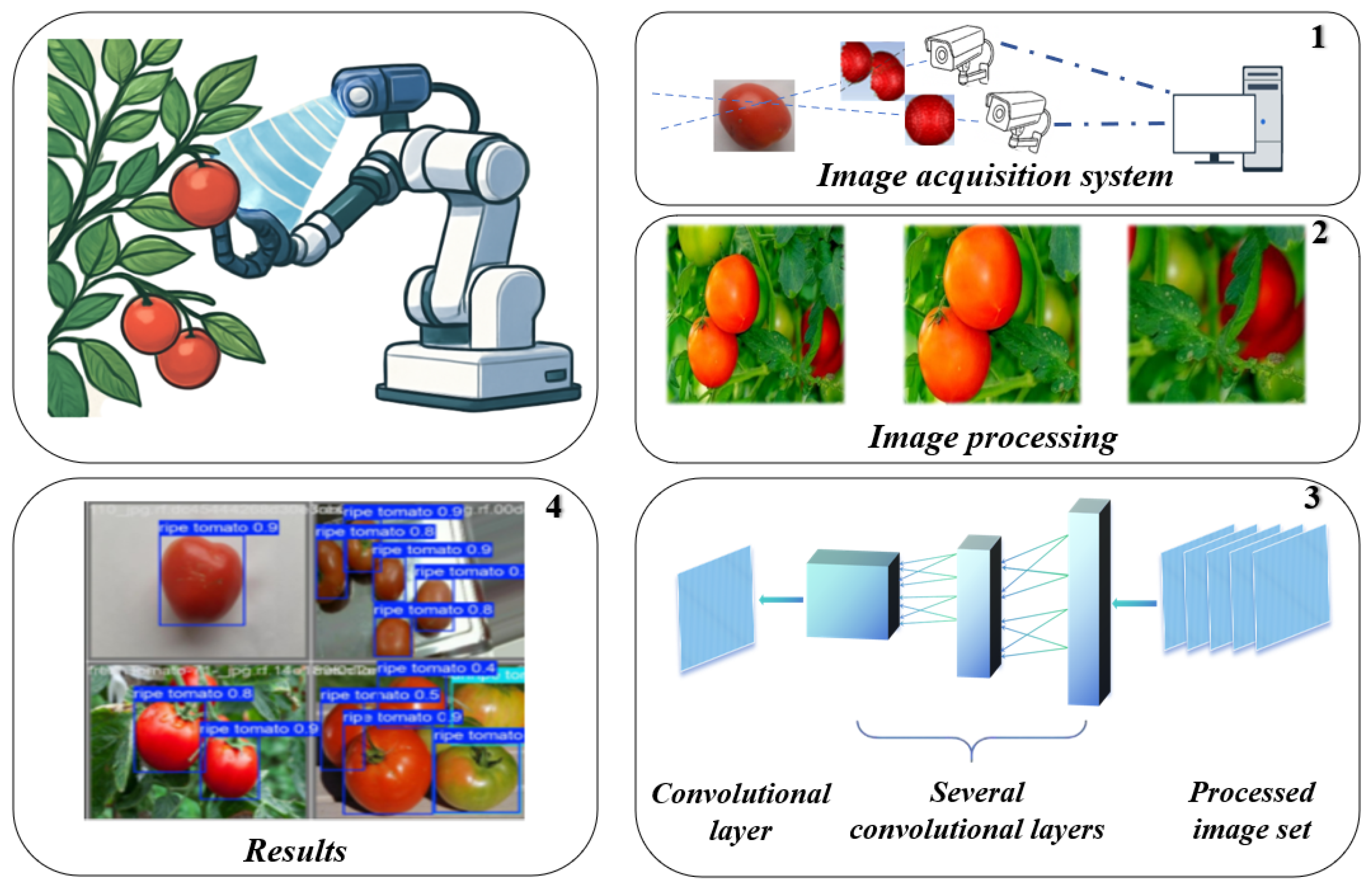

1. Introduction

- (1)

- We embed a multi-head self-attention (MHSA) mechanism into the YOLO backbone network, overcoming the limitations of convolutional local receptive fields and effectively modeling long-range contextual relationships between fruits, significantly mitigating the missed detection problem caused by occlusion.

- (2)

- By designing a hierarchical feature fusion strategy, the model integrates high-order semantics and fine-grained texture information across four scales, effectively improving the joint detection capability for small and large objects and enhancing robustness in scenes with strong light reflection and drastic scale changes.

- (3)

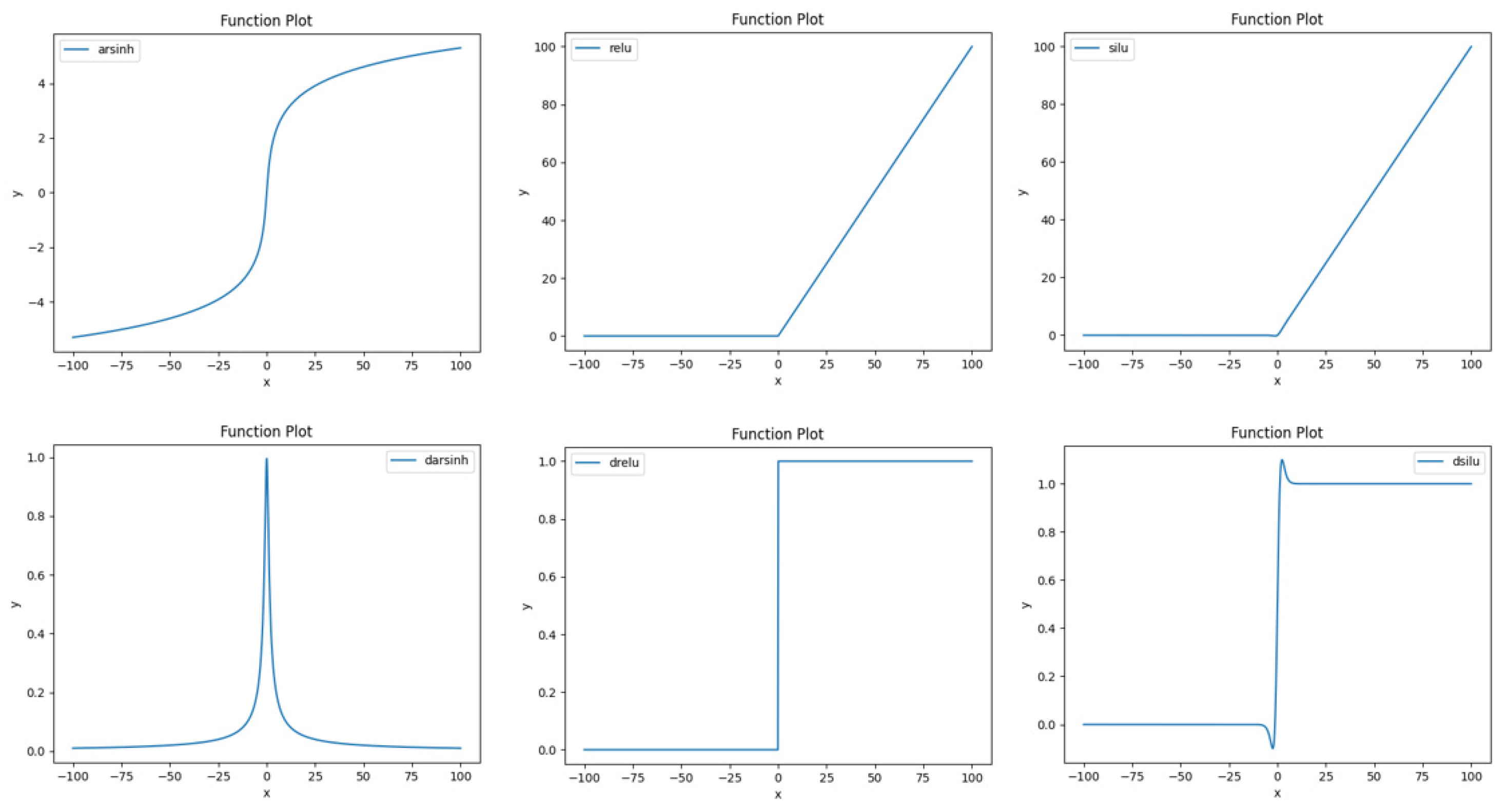

- We introduce the arsinh activation function to replace the traditional SiLU function. Its non-zero derivative across the entire real domain effectively alleviates the gradient vanishing problem during edge quantization, improving the model’s numerical stability and convergence efficiency in low-precision deployment environments.

2. Related Work

3. Materials and Methods

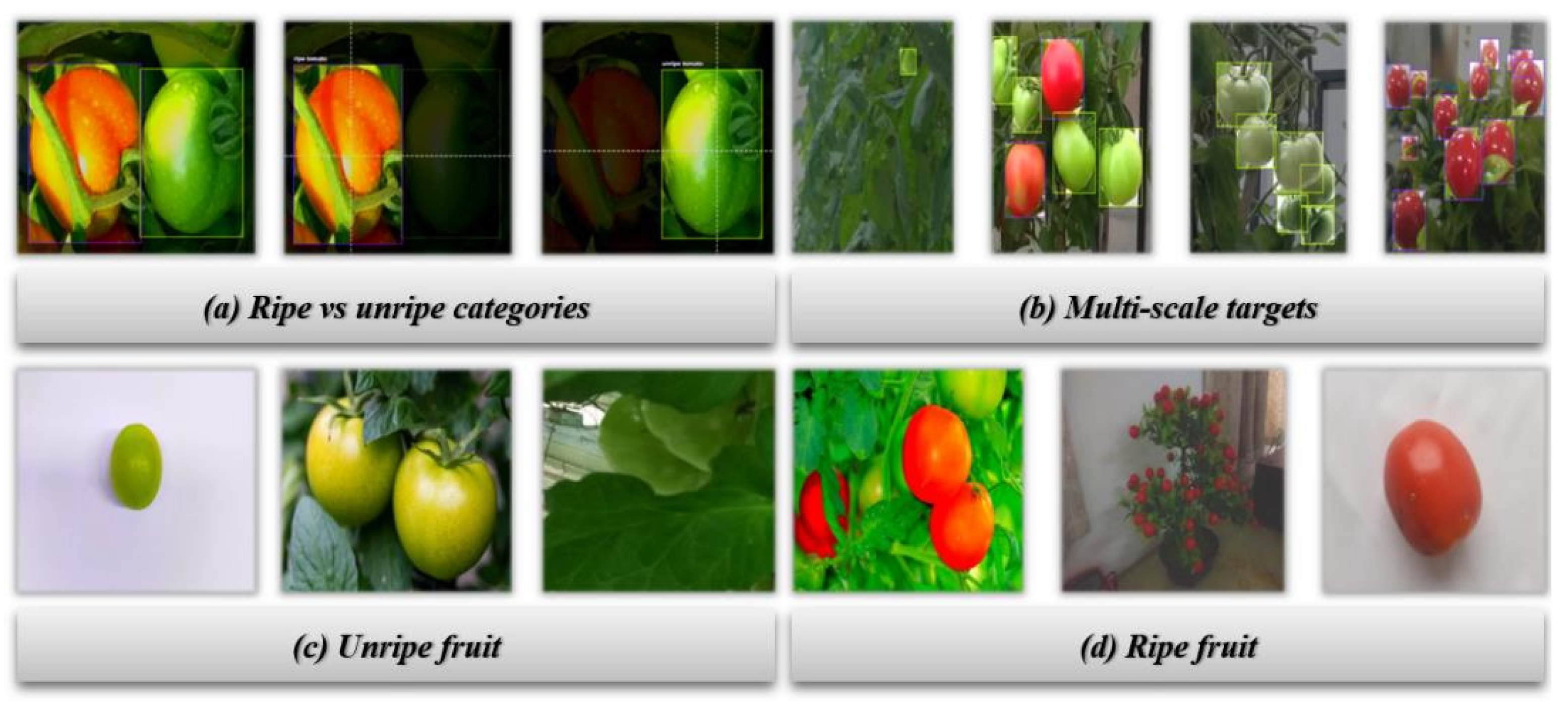

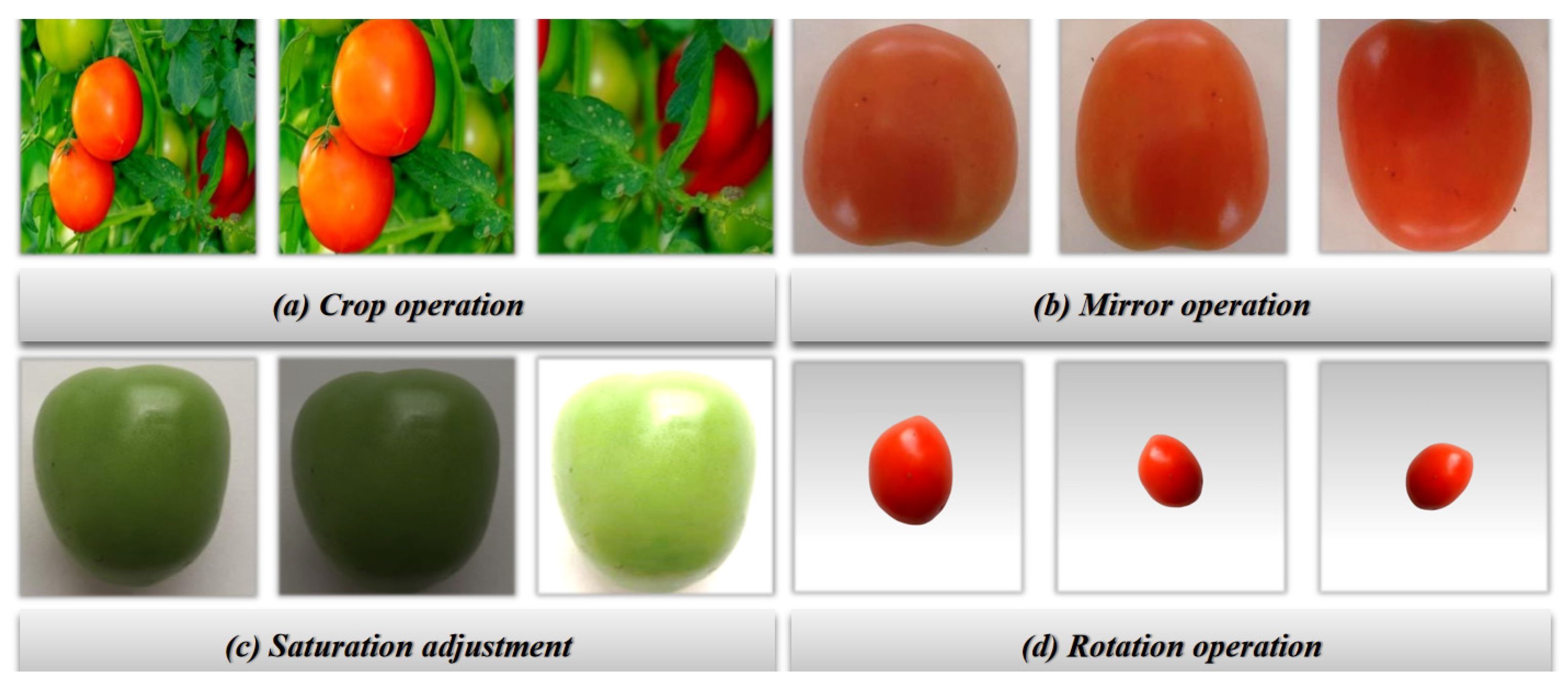

3.1. Dataset

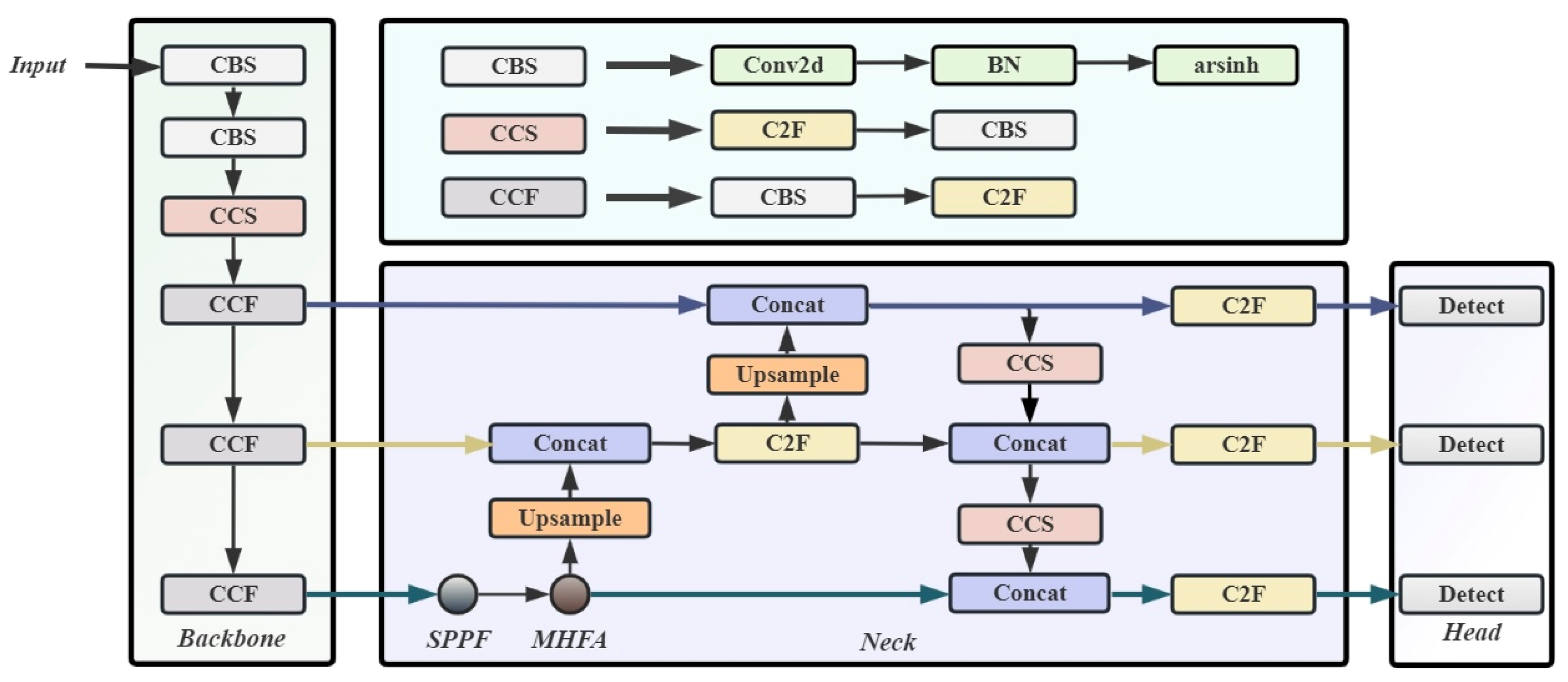

3.2. Model

3.2.1. Feature Pyramid Network

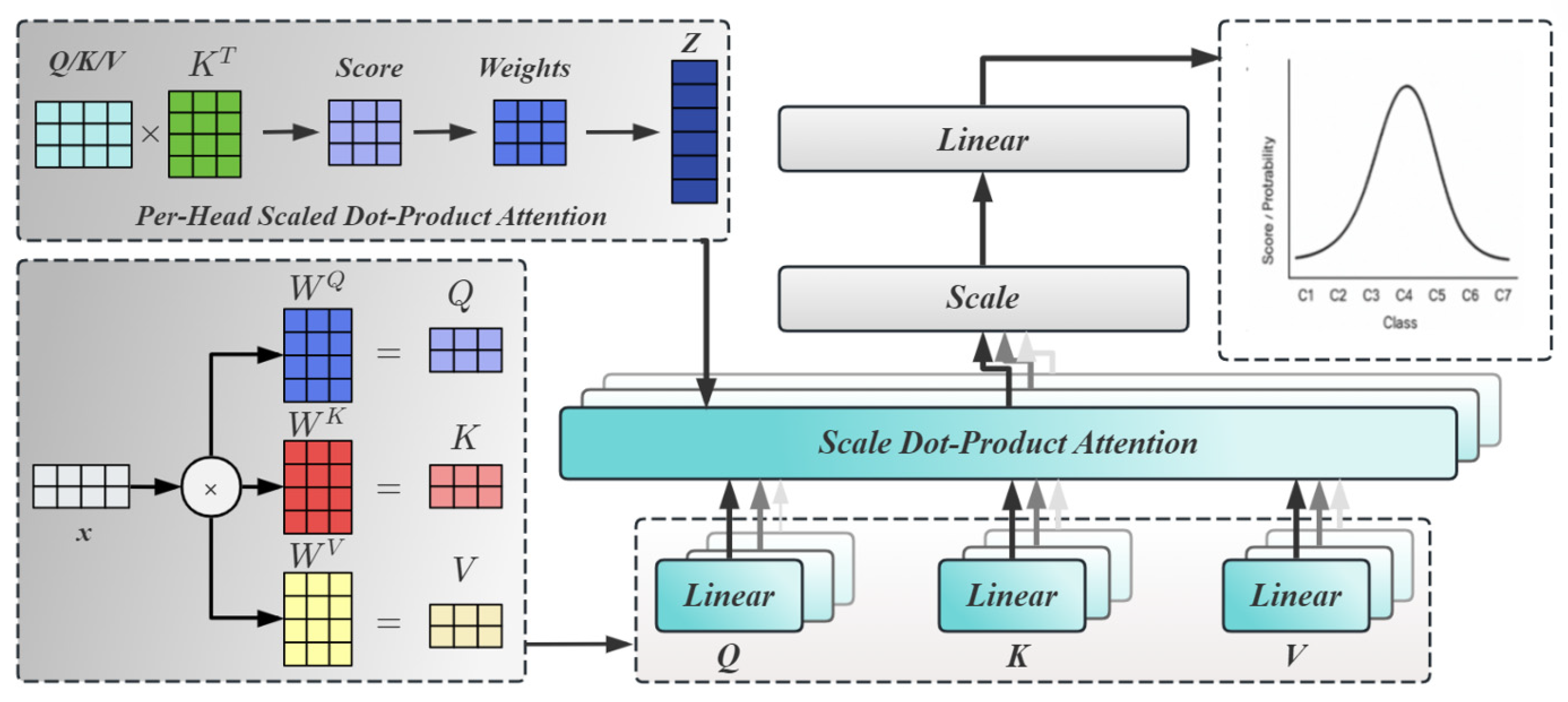

3.2.2. Multi-Head Self-Attention
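The contributions describe embedding MHSA in the YOLO backbone so that every spatial position can attend to every other one, rather than only to a local convolution window. As a hedged illustration, the dependency-free Python sketch below applies standard scaled dot-product multi-head self-attention to a flattened C × H × W feature map. It is not the authors' module: the projection weights are random placeholders, positional encodings and normalization layers are omitted, and all function names (`mhsa`, `feature_map_to_tokens`, and the helpers) are hypothetical. In a PyTorch model this role would typically be filled by a layer such as `torch.nn.MultiheadAttention`.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as nested lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    """Placeholder for learned projection weights (hypothetical, not trained)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def feature_map_to_tokens(fmap):
    """Flatten a C x H x W feature map into H*W tokens, each of width C."""
    channels, height, width = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [[fmap[c][y][x] for c in range(channels)]
            for y in range(height) for x in range(width)]


def mhsa(tokens, num_heads, rng):
    """Multi-head self-attention over N tokens of width d_model.

    Returns the N x d_model output and one N x N attention map per head.
    """
    d_model = len(tokens[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    w_q, w_k, w_v, w_o = (random_matrix(d_model, d_model, rng) for _ in range(4))
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)

    head_outputs, attn_maps = [], []
    for h in range(num_heads):
        cols = range(h * d_head, (h + 1) * d_head)
        q_h = [[row[c] for c in cols] for row in q]
        k_h = [[row[c] for c in cols] for row in k]
        v_h = [[row[c] for c in cols] for row in v]
        # Every token is scored against every other token, so the effective
        # receptive field covers the whole feature map, not a local window.
        scores = [[sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_head) for kj in k_h]
                  for qi in q_h]
        attn = [softmax(r) for r in scores]
        attn_maps.append(attn)
        head_outputs.append(matmul(attn, v_h))

    # Concatenate the heads along the channel axis, then apply the output projection.
    concat = [sum((head_outputs[h][i] for h in range(num_heads)), [])
              for i in range(len(tokens))]
    return matmul(concat, w_o), attn_maps


# Usage: an 8-channel, 3 x 4 feature map becomes 12 tokens of width 8.
rng = random.Random(0)
fmap = [[[rng.random() for _ in range(4)] for _ in range(3)] for _ in range(8)]
tokens = feature_map_to_tokens(fmap)
out, maps = mhsa(tokens, num_heads=2, rng=rng)
print(len(out), len(out[0]))  # 12 8
```

Because the attention map is N × N in the number of tokens, its cost grows quadratically with feature-map area, which is one reason attention is usually placed on low-resolution stages of a lightweight detector.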

3.2.3. Hierarchical Feature Fusion
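The contributions describe fusing high-level semantics with fine-grained texture across four scales, but the exact wiring is not given here. A common pattern for this kind of fusion in FPN/PAN-style YOLO necks is a top-down pass that upsamples coarse, semantically strong maps into finer ones, followed by a bottom-up pass that carries fine detail back toward the coarse levels. The sketch below shows that generic two-pass scheme on single-channel maps at four assumed strides (4, 8, 16, 32). Element-wise addition stands in for the learned convolutions and concatenations of a real neck, and all names are hypothetical.

```python
def const_map(height, width, value):
    """Single-channel H x W map filled with one value (toy stand-in for a feature map)."""
    return [[float(value)] * width for _ in range(height)]


def add(a, b):
    """Element-wise sum of two equally sized maps."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def downsample2x(fmap):
    """2 x 2 max pooling with stride 2."""
    return [[max(fmap[2 * y][2 * x], fmap[2 * y][2 * x + 1],
                 fmap[2 * y + 1][2 * x], fmap[2 * y + 1][2 * x + 1])
             for x in range(len(fmap[0]) // 2)]
            for y in range(len(fmap) // 2)]


def top_down(features):
    """features are ordered fine -> coarse; each level is half the size of the previous."""
    fused = [features[-1]]
    for fmap in reversed(features[:-1]):
        # Inject coarse, semantically strong context into the finer level.
        fused.append(add(fmap, upsample2x(fused[-1])))
    return fused[::-1]


def bottom_up(pyramid):
    """Propagate fine-grained detail from high resolution back to low resolution."""
    out = [pyramid[0]]
    for fmap in pyramid[1:]:
        out.append(add(fmap, downsample2x(out[-1])))
    return out


# Usage: four scales for a 64 x 64 input at assumed strides 4, 8, 16, 32.
backbone = [const_map(16, 16, 1), const_map(8, 8, 2), const_map(4, 4, 3), const_map(2, 2, 4)]
neck = bottom_up(top_down(backbone))
print([len(level) for level in neck])   # [16, 8, 4, 2]
print([level[0][0] for level in neck])  # [10.0, 19.0, 26.0, 30.0]
```

The constant inputs make the flow easy to trace: after both passes every output level contains contributions from all four input scales, which is the property the fusion strategy relies on for detecting both small and large fruits.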

3.2.4. Activation Function
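The introduction motivates replacing SiLU with arsinh by the fact that the derivative of arsinh, 1/√(1 + x²), is non-zero for every real x. The short Python check below compares the two derivatives using the standard closed forms: SiLU(x) = x·σ(x), whose derivative is σ(x)(1 + x(1 − σ(x))). It locates the point near x ≈ −1.278 where the SiLU gradient crosses zero and confirms that the arsinh gradient stays positive on the sampled range, although it decays like 1/|x| for large inputs. The check only concerns the real-valued derivatives and does not model quantization; the function names are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def silu_grad(x):
    """d/dx [x * sigmoid(x)] = sigmoid(x) * (1 + x * (1 - sigmoid(x)))."""
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


def arsinh_grad(x):
    """d/dx asinh(x) = 1 / sqrt(1 + x^2); strictly positive for every real x."""
    return 1.0 / math.sqrt(1.0 + x * x)


def bisect_root(f, lo, hi, iters=60):
    """Locate a sign change of f on [lo, hi] by bisection (f(lo) and f(hi) must differ in sign)."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Sample x in [-20, 20], a range chosen only for illustration.
xs = [i / 10 for i in range(-200, 201)]
silu_zero = bisect_root(silu_grad, -2.0, -1.0)
min_arsinh_grad = min(arsinh_grad(x) for x in xs)

print(f"SiLU gradient crosses zero near x = {silu_zero:.4f}")
print(f"smallest arsinh gradient on [-20, 20]: {min_arsinh_grad:.4f}")
print(f"asinh(1e6) = {math.asinh(1e6):.2f}")  # grows only logarithmically
```

The logarithmic growth shown in the last line (asinh(x) ≈ ln(2x) for large x) also compresses large activations, which narrows the value range a low-precision format has to cover.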

3.3. Evaluation Metrics

3.3.1. Average Precision
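AP for a single class is the area under its precision–recall curve. Interpolation conventions differ between benchmarks and the authors' variant is not stated here, so the sketch below assumes the all-point interpolation used by PASCAL VOC from 2010 onward; COCO-style evaluation instead averages the interpolated precision at 101 fixed recall points. The toy recall/precision values are illustrative, not results from the paper, and the function name is hypothetical.

```python
def average_precision(recall, precision):
    """All-point interpolated AP for one class at one IoU threshold.

    recall/precision are the points of a precision-recall curve, with recall
    non-decreasing (as produced by lowering the confidence threshold step by step).
    """
    # Sentinels: the curve starts at recall 0 and is closed at recall 1 with precision 0.
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [1.0] + list(precision) + [0.0]
    # Precision envelope: replace each value by the maximum precision to its right,
    # which makes the curve monotonically non-increasing.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the step curve: sum the rectangles where recall increases.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


recall = [0.2, 0.4, 0.4, 0.6]
precision = [1.0, 1.0, 2 / 3, 0.75]
print(round(average_precision(recall, precision), 4))  # 0.55
```

In this example the envelope lifts the dip to 2/3 up to 0.75, so the area is 0.2·1 + 0.2·1 + 0.2·0.75 = 0.55; recall beyond 0.6 contributes nothing because those objects were never detected.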

3.3.2. mAP
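The result tables report mAP@0.5 and mAP@0.5–0.95. Under the usual COCO-style convention, which this sketch assumes, a detection counts as a true positive at threshold t when its IoU with an unmatched ground-truth box is at least t. AP is computed separately at each of the ten thresholds from 0.50 to 0.95 in steps of 0.05, averaged over thresholds, and then averaged over classes; mAP@0.5 uses only the first threshold. The AP values in the usage lines are placeholders, and the function and class names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid floating-point drift).
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def map_at_50(ap_per_class):
    """ap_per_class maps class name -> list of 10 AP values, one per IoU threshold."""
    return sum(aps[0] for aps in ap_per_class.values()) / len(ap_per_class)


def map_50_95(ap_per_class):
    """Average AP over the 10 thresholds for each class, then over classes."""
    per_class = [sum(aps) / len(aps) for aps in ap_per_class.values()]
    return sum(per_class) / len(per_class)


# A prediction shifted by one pixel from a 10 x 10 ground-truth box.
overlap = iou((0, 0, 10, 10), (1, 1, 11, 11))  # 81 / 119
passed = [t for t in IOU_THRESHOLDS if overlap >= t]
print(f"IoU = {overlap:.3f}; true positive at {len(passed)} of 10 thresholds")

# Placeholder AP values for a single hypothetical class.
toy_aps = {"red_fruit": [0.95, 0.94, 0.93, 0.91, 0.88, 0.84, 0.78, 0.68, 0.52, 0.25]}
print(map_at_50(toy_aps), round(map_50_95(toy_aps), 3))
```

The one-pixel example shows why mAP@0.5–0.95 is always lower than mAP@0.5: a box that is clearly correct at IoU 0.5 already fails six of the ten stricter thresholds, so the averaged metric rewards precise localization.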

3.3.3. Precision–Recall Curve
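A precision–recall curve is traced by ranking all detections of a class by confidence and lowering the threshold one detection at a time. After each step, precision is TP/(TP + FP) and recall is TP divided by the number of ground-truth objects. The sketch assumes each detection has already been labelled TP or FP by IoU matching at a fixed threshold; the function name and toy inputs are hypothetical.

```python
def pr_curve(scores, is_tp, num_gt):
    """Return (recall, precision) lists obtained by sweeping the confidence threshold.

    scores: confidence of each detection for one class.
    is_tp:  whether each detection matched a not-yet-matched ground-truth box.
    num_gt: number of ground-truth objects of that class.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recall, precision = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))  # fraction of kept detections that are correct
        recall.append(tp / num_gt)        # fraction of ground-truth objects found
    return recall, precision


# Four detections, five ground-truth fruits; the third-ranked detection is a false positive.
recall, precision = pr_curve([0.6, 0.9, 0.7, 0.8], [True, True, False, True], num_gt=5)
print(recall)  # [0.2, 0.4, 0.4, 0.6]
print(precision)
```

Recall can only stay flat or rise as the threshold drops, while precision may go down and back up; this zig-zag is why AP computations first take a monotone precision envelope before integrating.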

4. Results

4.1. Experimental Setup

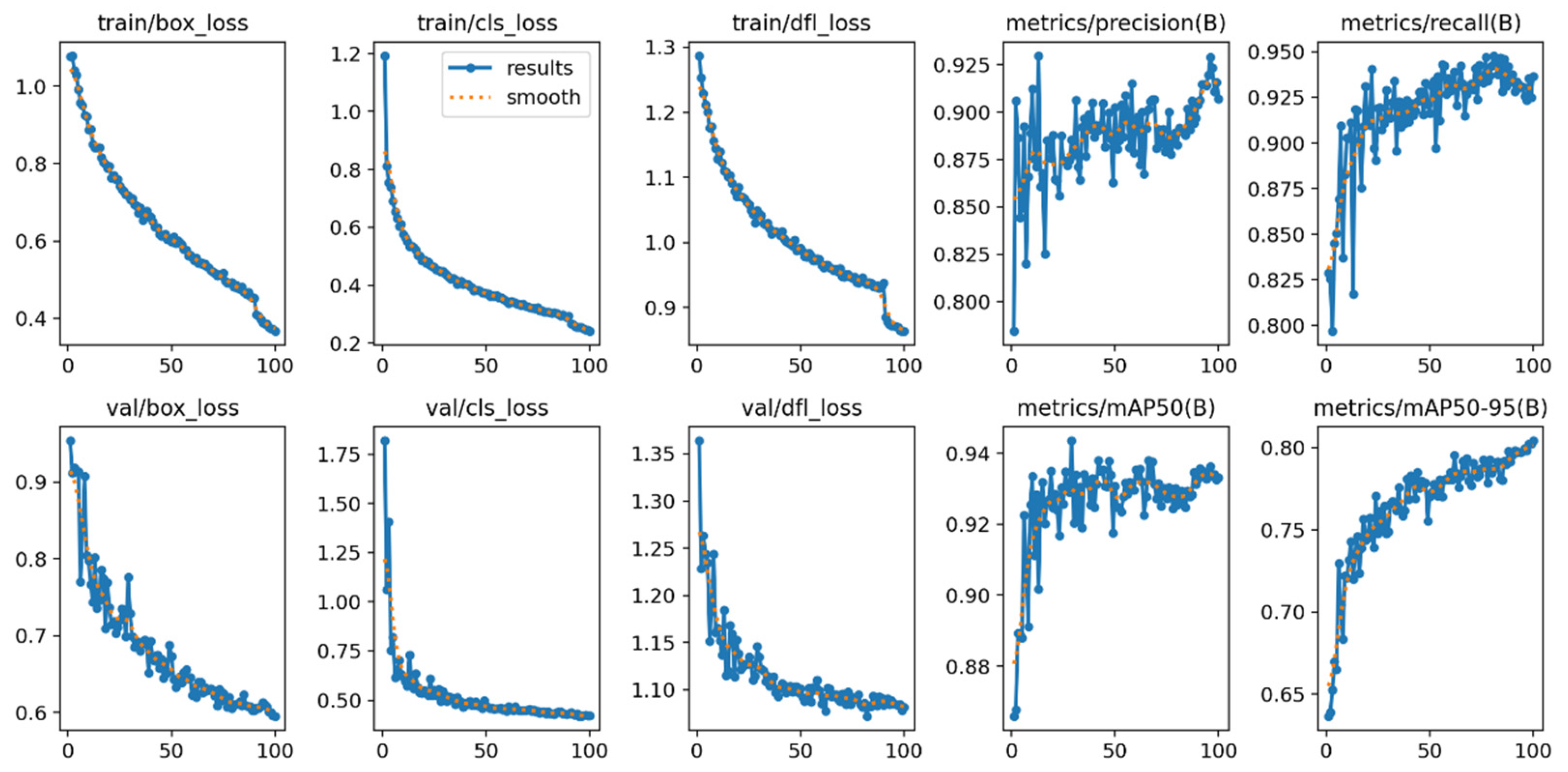

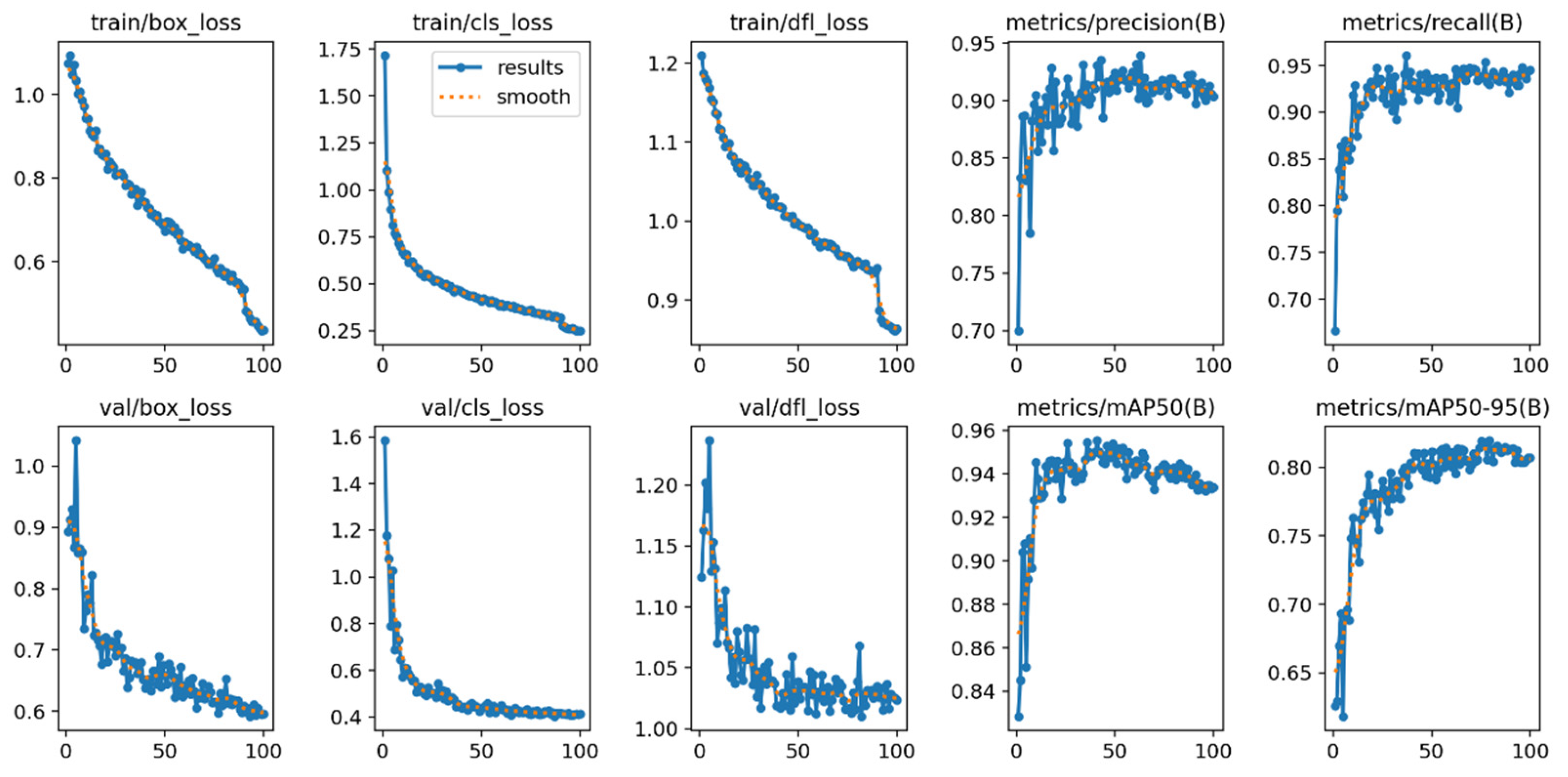

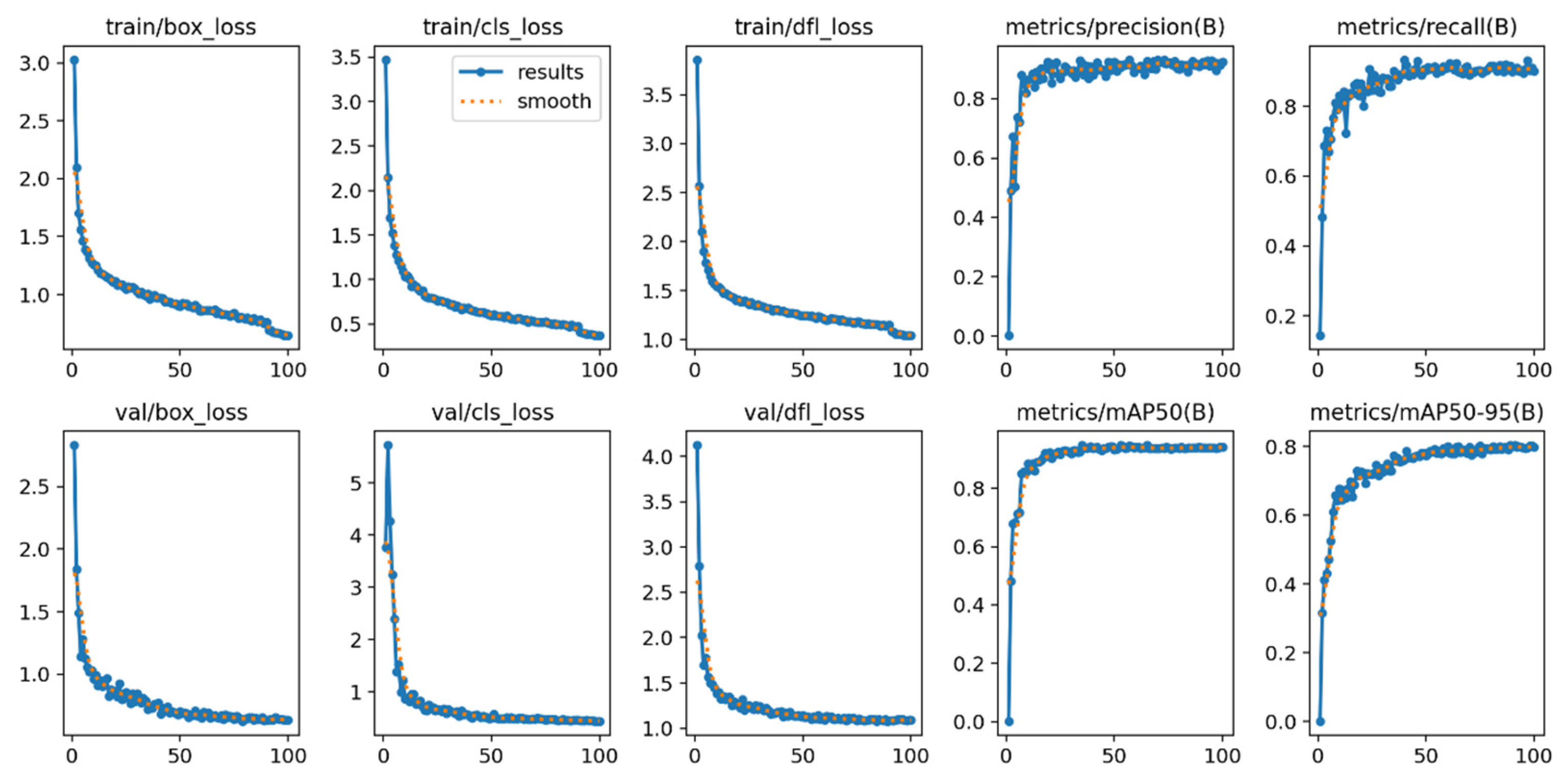

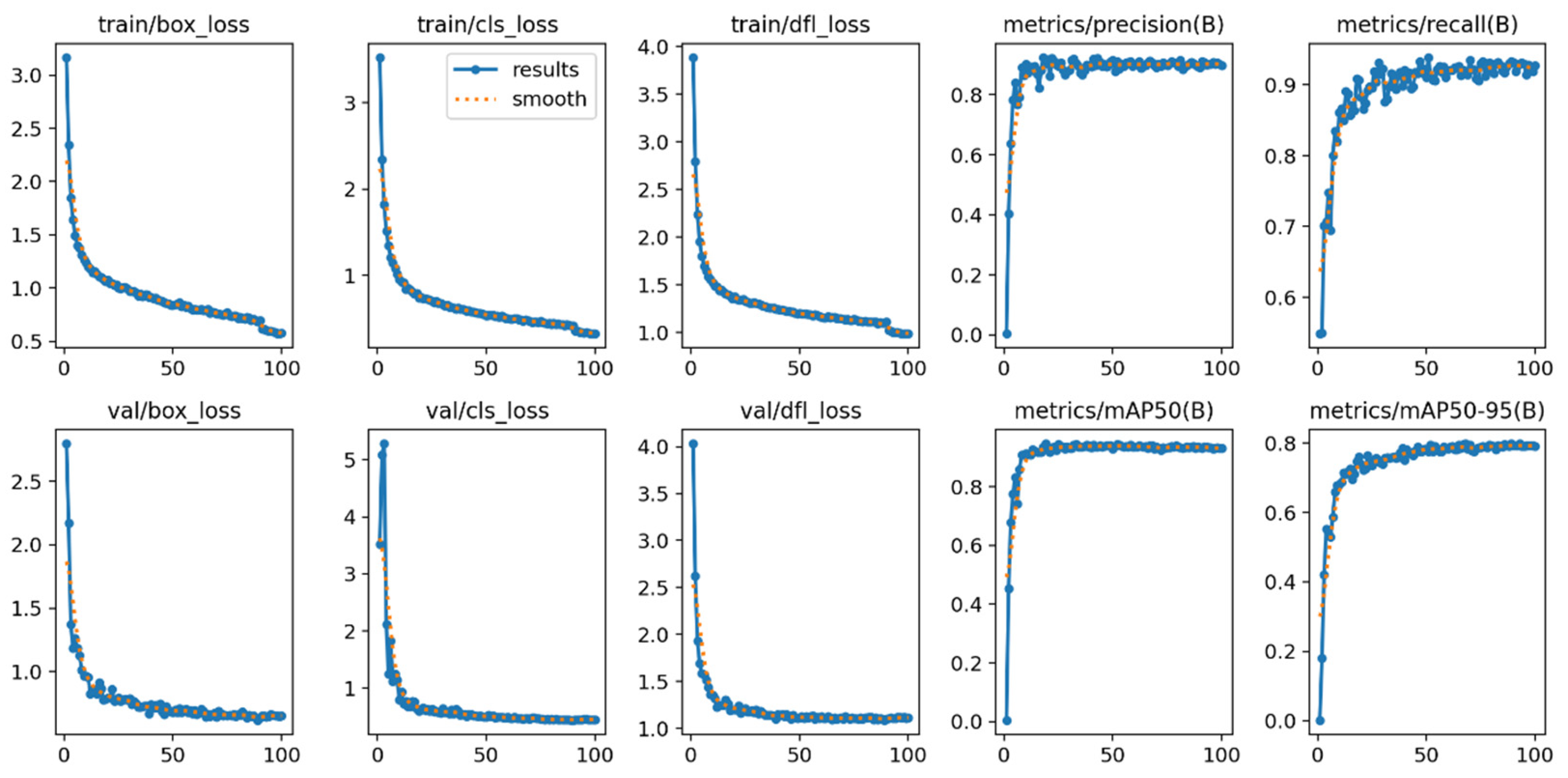

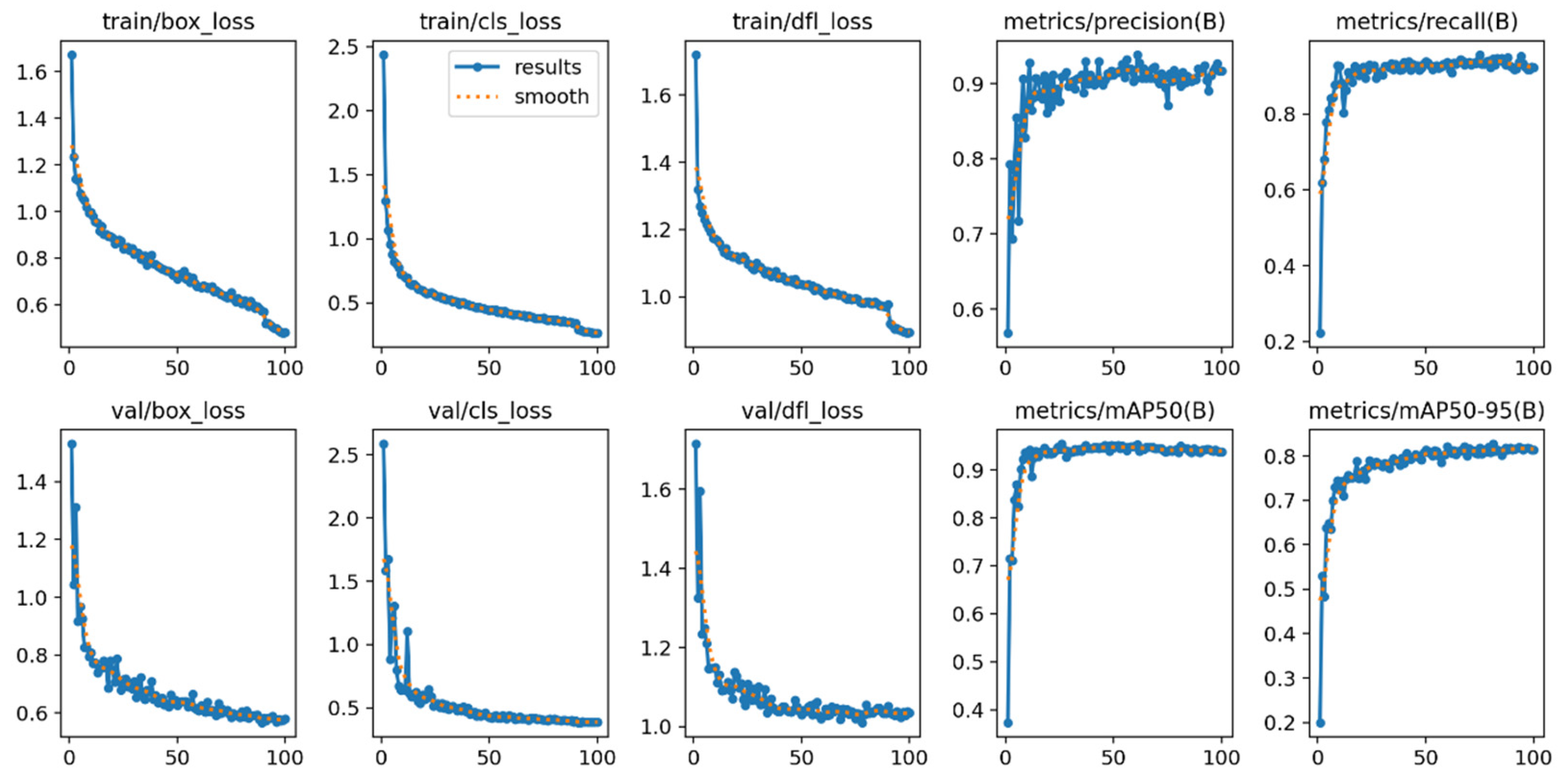

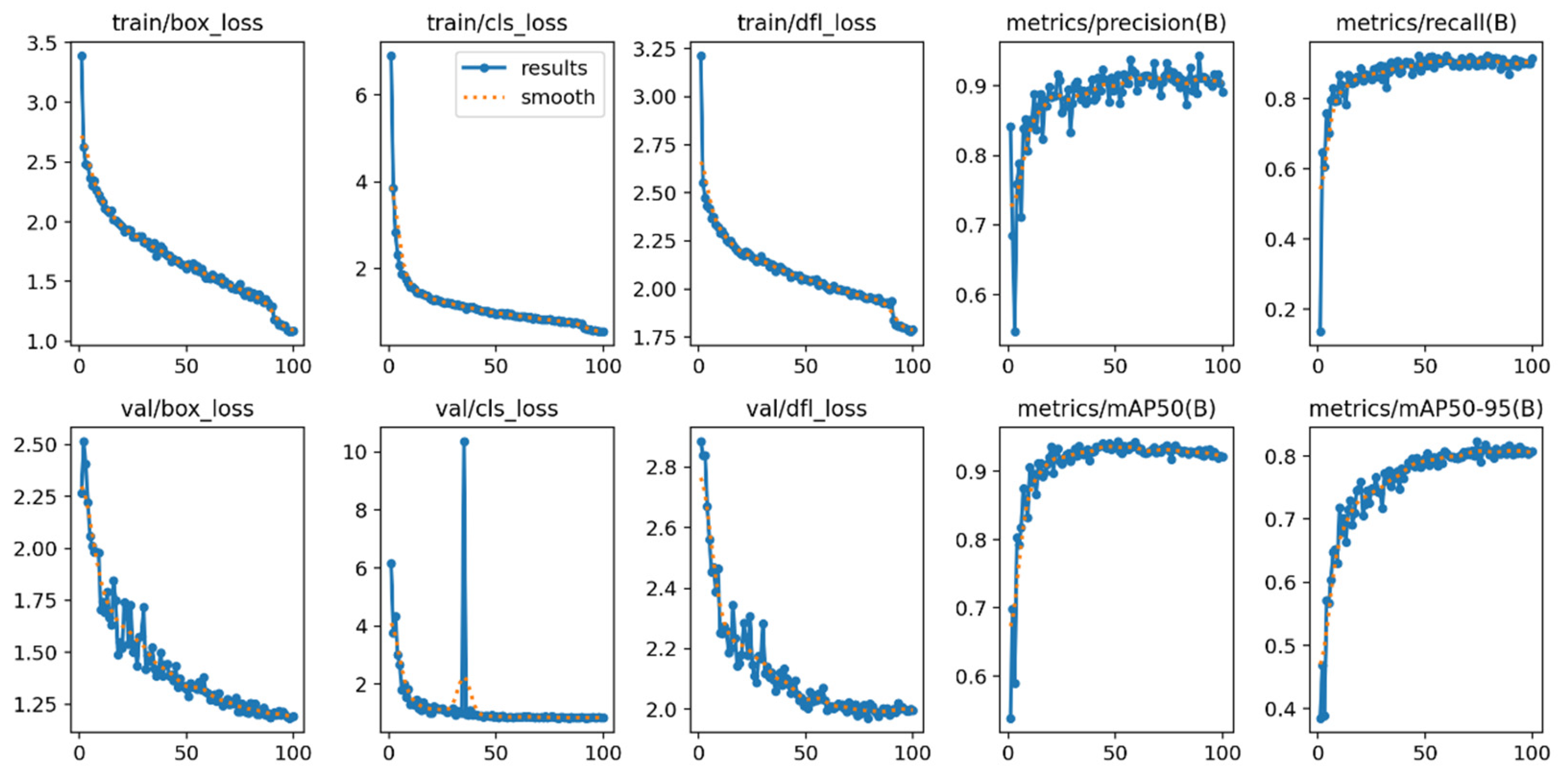

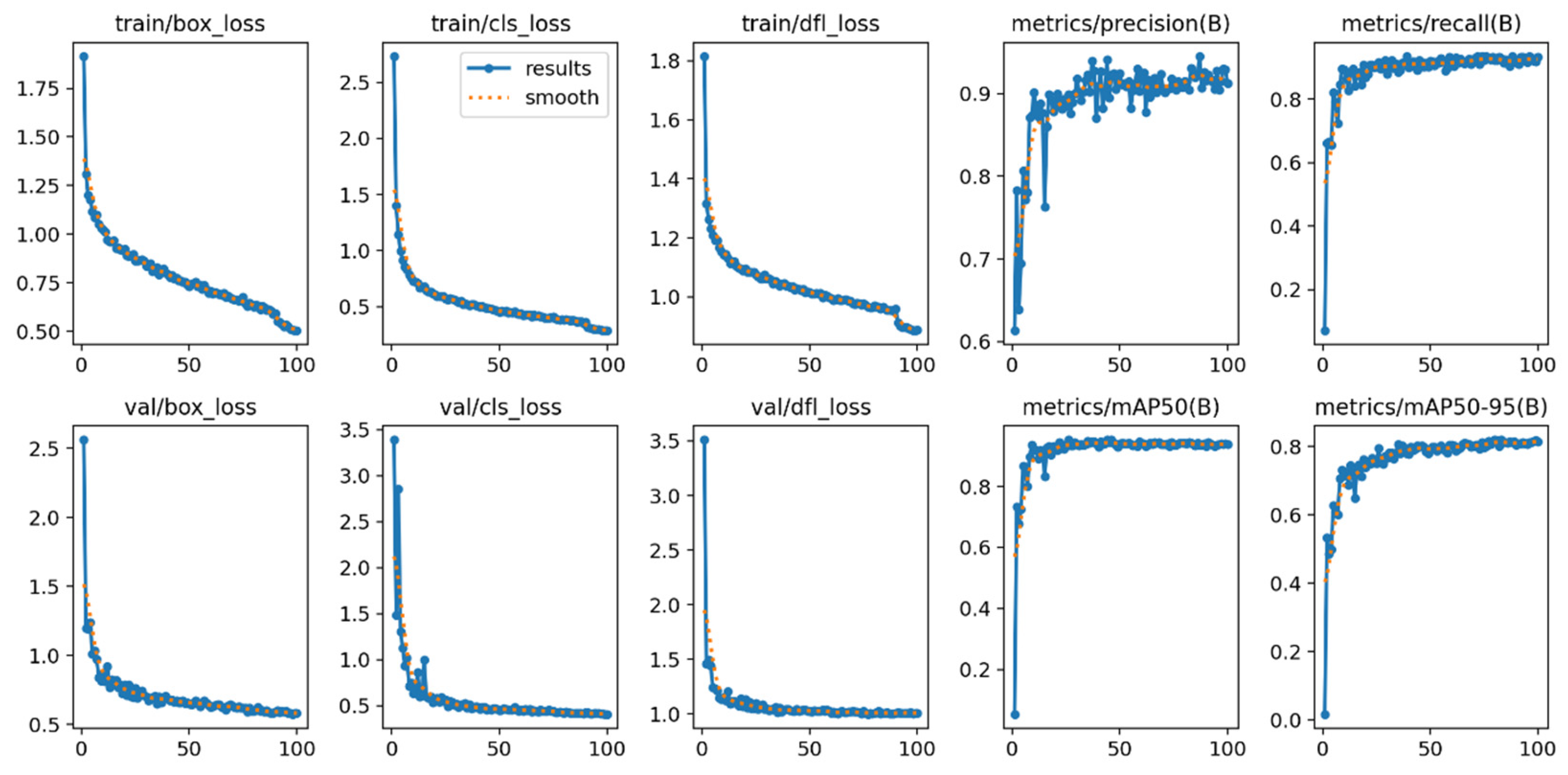

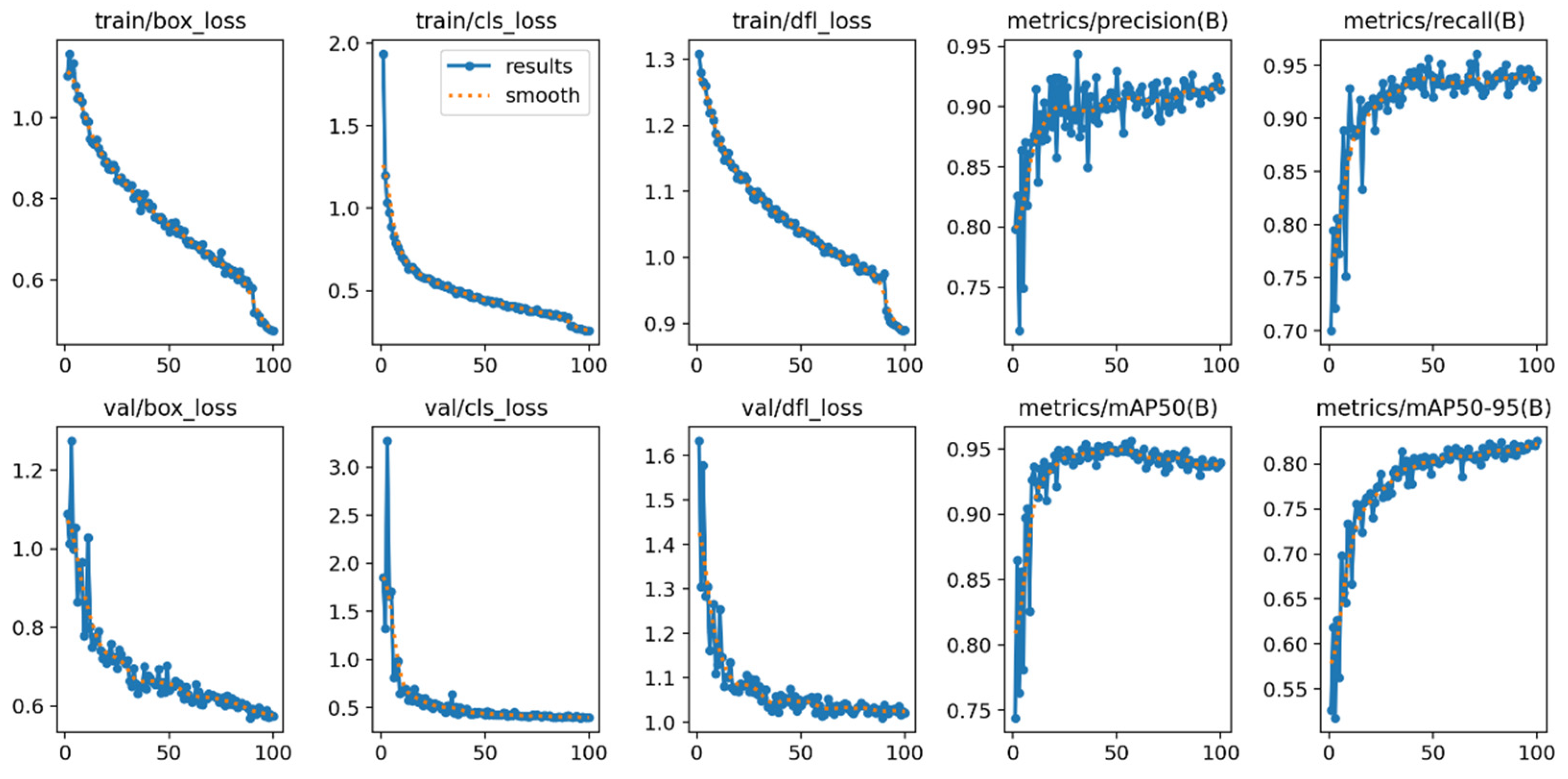

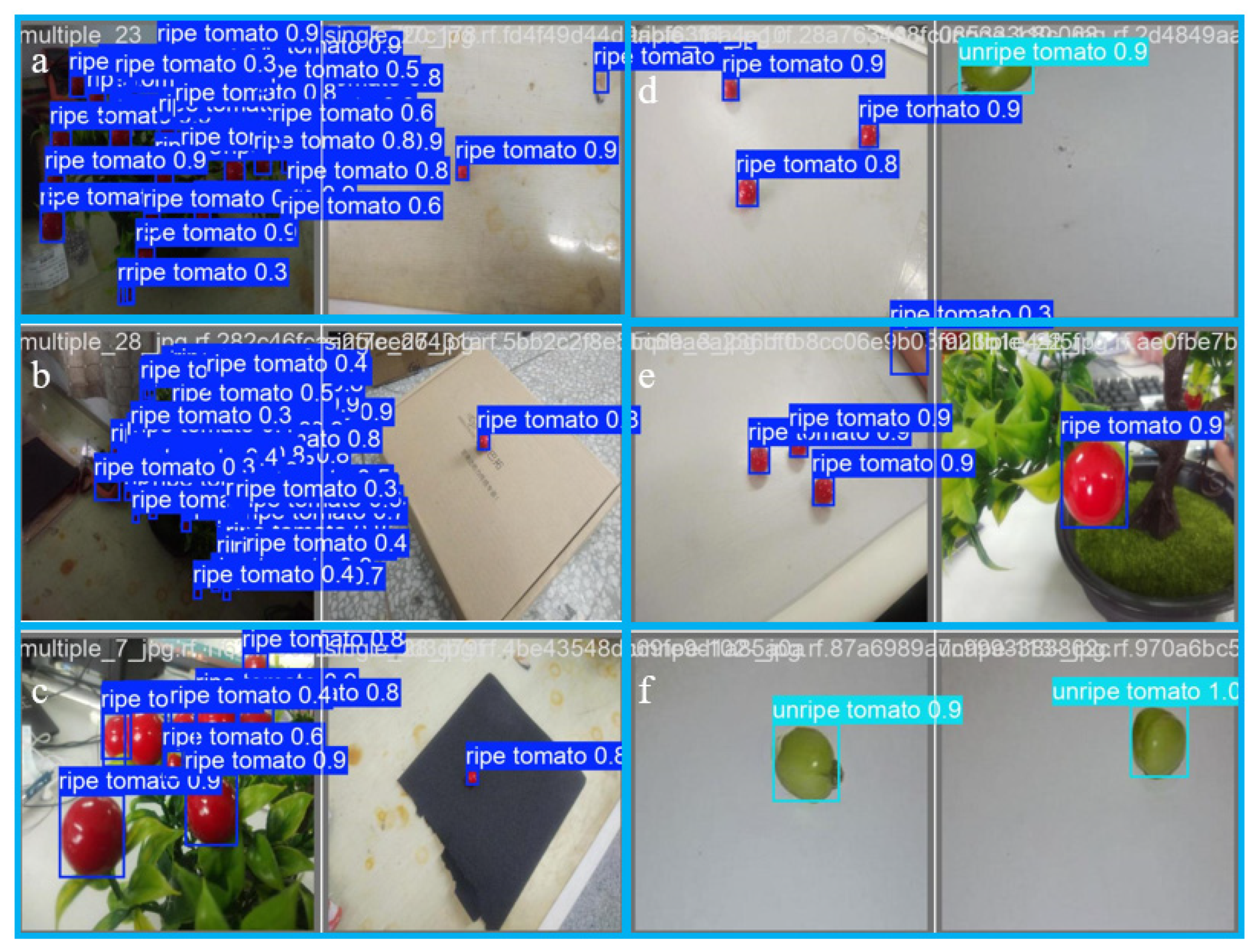

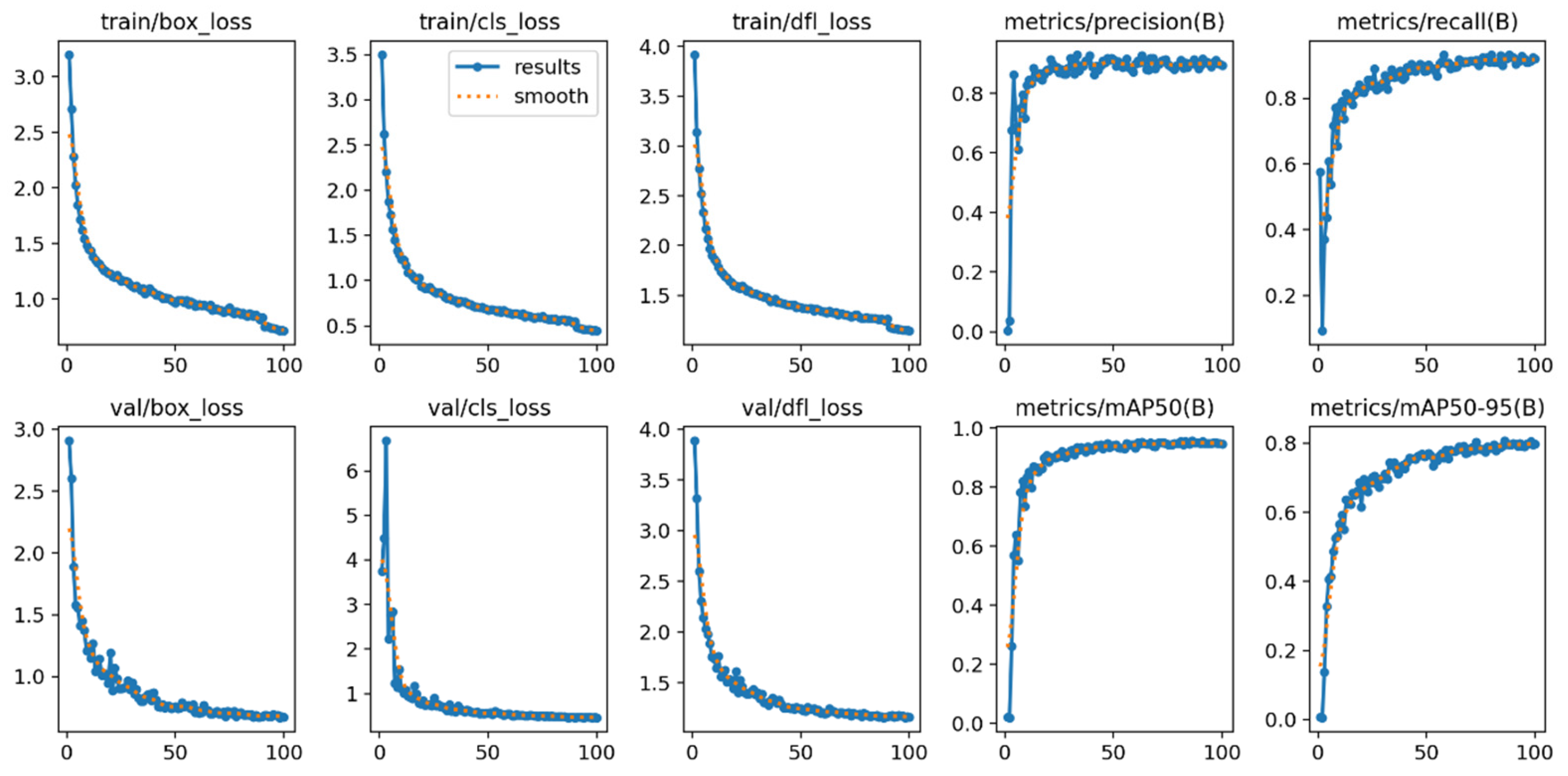

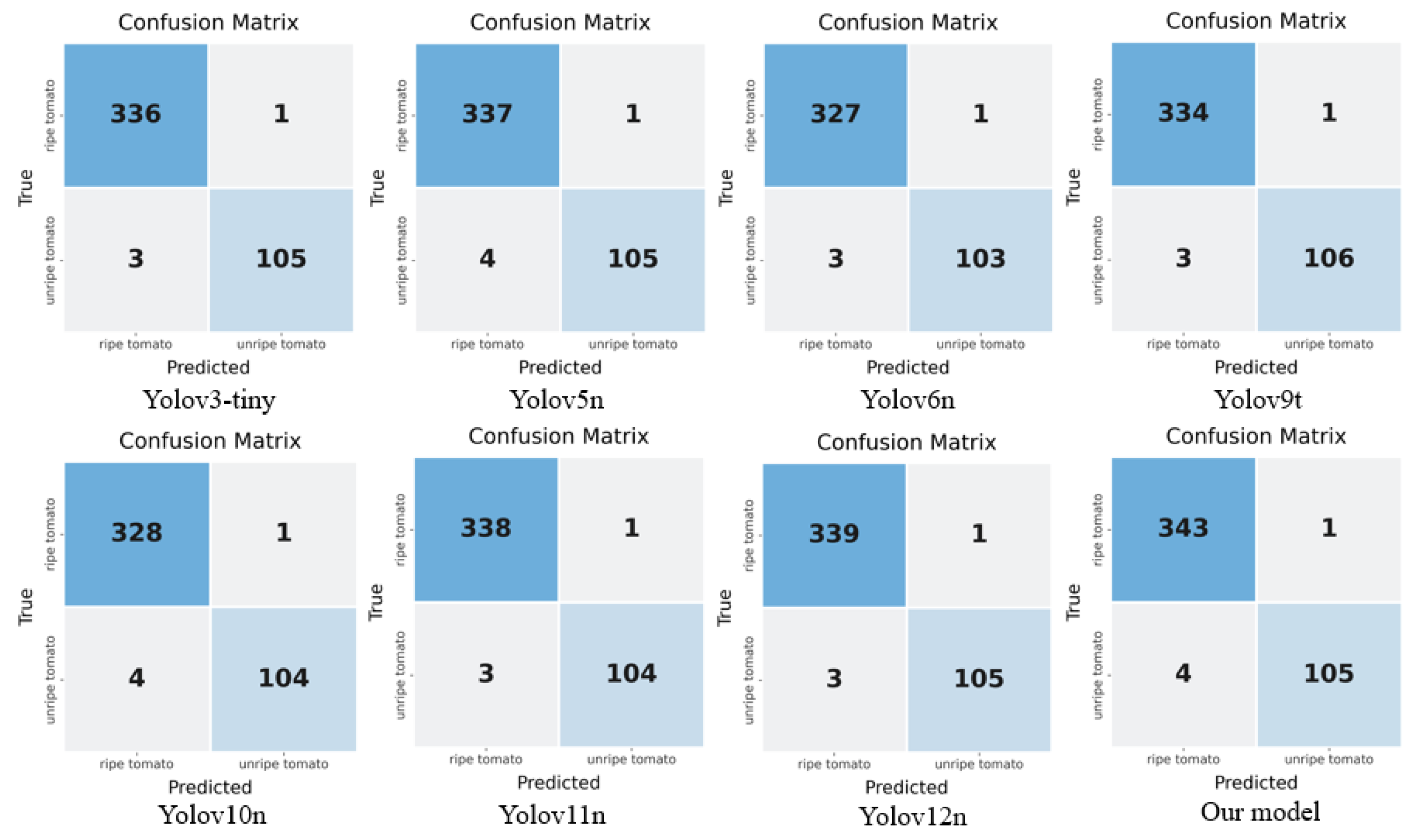

4.2. Experimental Results

4.3. Ablation Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MHSA | Multi-Head Self-Attention |
| CNN | Convolutional Neural Network |
| NMS | Non-Maximum Suppression |
| YOLO | You Only Look Once |
| SVM | Support Vector Machine |
| SOTA | State of the Art |
| AP | Average Precision |
| mAP | Mean Average Precision |
| PR | Precision–Recall |

Appendix A

References

1. Lin, G.; Zhu, L.; Li, J.; Zou, X.; Tang, Y. Collision-Free Path Planning for a Guava-Harvesting Robot Based on Recurrent Deep Reinforcement Learning. Comput. Electron. Agric. 2021, 188, 106350.

2. Rajendran, V.; Debnath, B.; Mghames, S.; Mandil, W.; Parsa, S.; Parsons, S.; Ghalamzan-E, A. Towards Autonomous Selective Harvesting: A Review of Robot Perception, Robot Design, Motion Planning and Control. J. Field Robot. 2024, 41, 2247–2279.

3. Montoya-Cavero, L.-E.; Díaz De León Torres, R.; Gómez-Espinosa, A.; Escobedo Cabello, J.A. Vision Systems for Harvesting Robots: Produce Detection and Localization. Comput. Electron. Agric. 2022, 192, 106562.

4. Yin, H.; Sun, Q.; Ren, X.; Guo, J.; Yang, Y.; Wei, Y.; Huang, B.; Chai, X.; Zhong, M. Development, Integration, and Field Evaluation of an Autonomous Citrus-Harvesting Robot. J. Field Robot. 2023, 40, 1363–1387.

5. Wang, W.; Li, C.; Xi, Y.; Gu, J.; Zhang, X.; Zhou, M.; Peng, Y. Research Progress and Development Trend of Visual Detection Methods for Selective Fruit Harvesting Robots. Agronomy 2025, 15, 1926.

6. Riehle, D.; Reiser, D.; Griepentrog, H.W. Robust Index-Based Semantic Plant/Background Segmentation for RGB-Images. Comput. Electron. Agric. 2020, 169, 105201.

7. Huang, Y.; Xu, S.; Chen, H.; Li, G.; Dong, H.; Yu, J.; Zhang, X.; Chen, R. A Review of Visual Perception Technology for Intelligent Fruit Harvesting Robots. Front. Plant Sci. 2025, 16, 1646871.

8. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Computer Vision–ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12346, pp. 213–229. ISBN 978-3-030-58451-1.

9. Li, Y.; Miao, N.; Ma, L.; Shuang, F.; Huang, X. Transformer for Object Detection: Review and Benchmark. Eng. Appl. Artif. Intell. 2023, 126, 107021.

10. Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

11. Vijayakumar, A.; Vairavasundaram, S. YOLO-Based Object Detection Models: A Review and Its Applications. Multimed. Tools Appl. 2024, 83, 83535–83574.

12. Zhu, F.; Zhang, W.; Wang, S.; Jiang, B.; Feng, X.; Zhao, Q. Apple-Harvesting Robot Based on the YOLOv5-RACF Model. Biomimetics 2024, 9, 495.

13. Liu, Z.; Rasika, D.; Abeyrathna, R.M.; Mulya Sampurno, R.; Massaki Nakaguchi, V.; Ahamed, T. Faster-YOLO-AP: A Lightweight Apple Detection Algorithm Based on Improved YOLOv8 with a New Efficient PDWConv in Orchard. Comput. Electron. Agric. 2024, 223, 109118.

14. Li, J.; Wu, K.; Zhang, M.; Chen, H.; Lin, H.; Mai, Y.; Shi, L. YOLOv8s-Longan: A Lightweight Detection Method for the Longan Fruit-Picking UAV. Front. Plant Sci. 2025, 15, 1518294.

15. De-la-Torre, M.; Zatarain, O.; Avila-George, H.; Muñoz, M.; Oblitas, J.; Lozada, R.; Mejía, J.; Castro, W. Multivariate Analysis and Machine Learning for Ripeness Classification of Cape Gooseberry Fruits. Processes 2019, 7, 928.

16. Taner, A.; Mengstu, M.T.; Selvi, K.Ç.; Duran, H.; Kabaş, Ö.; Gür, İ.; Karaköse, T.; Gheorghiță, N.-E. Multiclass Apple Varieties Classification Using Machine Learning with Histogram of Oriented Gradient and Color Moments. Appl. Sci. 2023, 13, 7682.

17. Kumari, A.; Singh, J. Designing of Guava Quality Classification Model Based on ANOVA and Machine Learning. Sci. Rep. 2025, 15, 33920.

18. Santos Pereira, L.F.; Barbon, S.; Valous, N.A.; Barbin, D.F. Predicting the Ripening of Papaya Fruit with Digital Imaging and Random Forests. Comput. Electron. Agric. 2018, 145, 76–82.

19. Lai, Y.; Cao, A.; Gao, Y.; Shang, J.; Li, Z. Advancing Efficient Brain Tumor Multi-Class Classification: New Insights from the Vision Mamba Model in Transfer Learning. Int. J. Imaging Syst. Technol. 2025, 35, e70177.

20. Qin, H.; Xu, T.; Tang, Y.; Xu, F.; Li, J. OSFormer: One-Step Transformer for Infrared Video Small Object Detection. IEEE Trans. Image Process. 2025, 34, 5725–5736.

21. Shang, J.; Lai, Y. An Optimal U-Net++ Segmentation Method for Dataset BUSI. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 29–31 March 2024; pp. 1015–1019.

22. Amjoud, A.B.; Amrouch, M. Object Detection Using Deep Learning, CNNs and Vision Transformers: A Review. IEEE Access 2023, 11, 35479–35516.

23. Stasenko, N.; Shadrin, D.; Katrutsa, A.; Somov, A. Dynamic Mode Decomposition and Deep Learning for Postharvest Decay Prediction in Apples. IEEE Trans. Instrum. Meas. 2023, 72, 2518411.

24. Halstead, M.; McCool, C.; Denman, S.; Perez, T.; Fookes, C. Fruit Quantity and Ripeness Estimation Using a Robotic Vision System. IEEE Robot. Autom. Lett. 2018, 3, 2995–3002.

25. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

26. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

27. Lu, S.; Chen, W.; Zhang, X.; Karkee, M. Canopy-Attention-YOLOv4-Based Immature/Mature Apple Fruit Detection on Dense-Foliage Tree Architectures for Early Crop Load Estimation. Comput. Electron. Agric. 2022, 193, 106696.

28. Yang, C.; Liu, J.; He, J. A Lightweight Waxberry Fruit Detection Model Based on YOLOv5. IET Image Process. 2024, 18, 1796–1808.

29. Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

30. Wu, T.; Miao, Z.; Huang, W.; Han, W.; Guo, Z.; Li, T. SGW-YOLOv8n: An Improved YOLOv8n-Based Model for Apple Detection and Segmentation in Complex Orchard Environments. Agriculture 2024, 14, 1958.

31. Wang, C.; Wang, H.; Han, Q.; Zhang, Z.; Kong, D.; Zou, X. Strawberry Detection and Ripeness Classification Using YOLOv8+ Model and Image Processing Method. Agriculture 2024, 14, 751.

32. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

33. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

34. Wang, J.; Shang, Y.; Zheng, X.; Zhou, P.; Li, S.; Wang, H. GreenFruitDetector: Lightweight Green Fruit Detector in Orchard Environment. PLoS ONE 2024, 19, e0312164.

35. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

36. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 386–397.

37. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

38. Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; Kwon, Y.; Michael, K.; Fang, J.; Zeng, Y.; Wong, C.; Montes, D.; et al. Ultralytics/Yolov5: V7.0 - YOLOv5 SOTA Realtime Instance Segmentation; Zenodo: Geneva, Switzerland, 2022.

39. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

40. Wang, C.-Y.; Yeh, I.-H.; Mark Liao, H.-Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In Computer Vision–ECCV 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2025; Volume 15089, pp. 1–21. ISBN 978-3-031-72750-4.

41. Viso.AI; Boesch, G. Yolov11: A New Iteration of “You Only Look Once”. 2024. Available online: https://viso.ai/computer-vision/yolov11/ (accessed on 21 October 2024).

| Configuration | Details |
|---|---|
| CPU | Intel(R) Core(TM) i7-12650HF CPU @ 2.3 GHz |
| CPU Memory | 32 GB |
| GPU | NVIDIA GeForce RTX 4090 |
| GPU Memory | 24 GB |
| Operating System | Windows 10 (x64) |
| Neural Network Framework | PyTorch 2.6.0 (CUDA 12.4 build, cu124) |
| Development Language | Python 3.9 |

| Model | Precision | Recall | mAP@0.5 | mAP@0.5–0.95 |
|---|---|---|---|---|
| YOLOv3-Tiny(u) [37] | 0.907 | 0.937 | 0.933 | 0.805 |
| YOLOv5nu [38] | 0.913 | 0.936 | 0.944 | 0.819 |
| YOLOv6n [39] | 0.924 | 0.905 | 0.940 | 0.803 |
| YOLOv8n [29] | 0.894 | 0.933 | 0.935 | 0.798 |
| YOLOv9t [40] | 0.897 | 0.947 | 0.950 | 0.827 |
| YOLOv10n [32] | 0.932 | 0.895 | 0.938 | 0.823 |
| YOLOv11n [41] | 0.930 | 0.929 | 0.943 | 0.819 |
| YOLOv12n [33] | 0.914 | 0.936 | 0.939 | 0.826 |
| EdgeFormer-YOLO (ours) | 0.900 | 0.925 | 0.957 | 0.807 |

| Model | FLOPs (G) | Parameters (M) | FPS | Model Size (MB) |
|---|---|---|---|---|
| YOLOv3-Tiny(u) [37] | 9.56 | 12.17 | 550.71 | 23.31 |
| YOLOv5nu [38] | 3.92 | 2.65 | 214.79 | 5.31 |
| YOLOv8n [29] | 4.43 | 3.16 | 232.56 | 6.25 |
| YOLOv9t [40] | 4.24 | 2.13 | 70.14 | 4.74 |
| YOLOv10n [32] | 4.37 | 2.78 | 142.95 | 5.59 |
| YOLOv11n [41] | 3.31 | 2.62 | 175.05 | 5.35 |
| YOLOv12n [33] | 3.33 | 2.60 | 114.30 | 5.34 |
| EdgeFormer-YOLO | 4.18 | 3.21 | 148.78 | 6.35 |
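The FPS column is the reciprocal of per-image latency, so 148.78 FPS for EdgeFormer-YOLO corresponds to about 6.72 ms per image. The timing protocol (batch size, input resolution, and whether pre- and post-processing such as NMS are included) is not described here, so the sketch below is only a generic measurement loop under those unknowns: it warms up first, then times a fixed number of calls with `time.perf_counter`. For GPU inference in PyTorch, kernels run asynchronously, so a real benchmark would call `torch.cuda.synchronize()` before reading the clock. The `dummy_infer` callable is a hypothetical stand-in for a model forward pass.

```python
import time


def measure_fps(infer, frames, warmup=10):
    """Average throughput of `infer` over `frames`, after a short warm-up."""
    for frame in frames[:warmup]:
        infer(frame)  # warm-up calls are excluded from timing (caches, lazy initialization)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


def fps_to_latency_ms(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


def dummy_infer(frame):
    """Hypothetical stand-in for a model forward pass."""
    return sum(v * v for v in frame)


frames = [list(range(1000)) for _ in range(200)]
print(f"measured: {measure_fps(dummy_infer, frames):.1f} FPS")
for name, fps in [("YOLOv8n", 232.56), ("EdgeFormer-YOLO", 148.78)]:
    print(f"{name}: {fps_to_latency_ms(fps):.2f} ms per image")
```

Expressed as latency, the gap between YOLOv8n (about 4.30 ms) and EdgeFormer-YOLO (about 6.72 ms) is roughly 2.4 ms per image, which is easier to compare against a camera frame budget than the FPS ratio.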

| Model | Precision | Recall | mAP@0.5 | mAP@0.5–0.95 |
|---|---|---|---|---|
| w/o arsinh and MHSA | 0.894 | 0.933 | 0.935 | 0.798 |
| w/o MHSA | 0.914 | 0.913 | 0.944 | 0.803 |
| w/o arsinh | 0.919 | 0.922 | 0.942 | 0.808 |
| EdgeFormer-YOLO (ours) | 0.900 | 0.925 | 0.957 | 0.807 |
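Reading the ablation against the baseline row (the model without both arsinh and MHSA, whose scores match YOLOv8n in the comparison table) isolates each component: the "w/o MHSA" row keeps only arsinh, and the "w/o arsinh" row keeps only MHSA. The small script below simply subtracts the baseline from each row using the numbers copied from the table; it adds no new measurements, and the dictionary and function names are illustrative.

```python
# (mAP@0.5, mAP@0.5-0.95) copied from the ablation table.
ABLATION = {
    "w/o arsinh and MHSA": (0.935, 0.798),  # baseline: neither arsinh nor MHSA
    "w/o MHSA": (0.944, 0.803),             # arsinh only
    "w/o arsinh": (0.942, 0.808),           # MHSA only
    "EdgeFormer-YOLO": (0.957, 0.807),      # full model ("Ours"): arsinh + MHSA
}


def gains_over_baseline(results, baseline="w/o arsinh and MHSA"):
    """Absolute change of each metric relative to the baseline row."""
    b50, b5095 = results[baseline]
    return {
        name: (round(m50 - b50, 3), round(m5095 - b5095, 3))
        for name, (m50, m5095) in results.items()
        if name != baseline
    }


for name, (d50, d5095) in gains_over_baseline(ABLATION).items():
    print(f"{name:16s} mAP@0.5 {d50:+.3f}  mAP@0.5-0.95 {d5095:+.3f}")
```

On mAP@0.5 the combined gain (+0.022) exceeds the sum of the single-component gains (+0.009 and +0.007), while on mAP@0.5–0.95 the MHSA-only variant (+0.010) is marginally ahead of the full model (+0.009).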

| Model | FLOPs (G) | Parameters (M) | FPS | Model Size (MB) |
|---|---|---|---|---|
| w/o arsinh | 4.18 | 3.21 | 215.73 | 6.35 |
| w/o MHSA | 4.43 | 3.16 | 155.67 | 6.25 |
| EdgeFormer-YOLO | 4.18 | 3.21 | 148.78 | 6.35 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, Z.; Luo, T.; Lai, Y.; Liu, Y.; Kang, W. EdgeFormer-YOLO: A Lightweight Multi-Attention Framework for Real-Time Red-Fruit Detection in Complex Orchard Environments. Mathematics 2025, 13, 3790. https://doi.org/10.3390/math13233790

Xu Z, Luo T, Lai Y, Liu Y, Kang W. EdgeFormer-YOLO: A Lightweight Multi-Attention Framework for Real-Time Red-Fruit Detection in Complex Orchard Environments. Mathematics. 2025; 13(23):3790. https://doi.org/10.3390/math13233790

Chicago/Turabian Style: Xu, Zhiyuan, Tianjun Luo, Yinyi Lai, Yuheng Liu, and Wenbin Kang. 2025. "EdgeFormer-YOLO: A Lightweight Multi-Attention Framework for Real-Time Red-Fruit Detection in Complex Orchard Environments" Mathematics 13, no. 23: 3790. https://doi.org/10.3390/math13233790

APA Style: Xu, Z., Luo, T., Lai, Y., Liu, Y., & Kang, W. (2025). EdgeFormer-YOLO: A Lightweight Multi-Attention Framework for Real-Time Red-Fruit Detection in Complex Orchard Environments. Mathematics, 13(23), 3790. https://doi.org/10.3390/math13233790