1. Introduction

Fractional differential equations (FDEs) have become an essential framework for modeling complex dynamical systems across engineering, physics, and biomedical sciences. In contrast to classical integer-order formulations, fractional derivatives naturally capture the memory and hereditary effects that characterize many real-world processes [1,2]. Their intrinsic ability to represent nonlocal interactions makes fractional models particularly suitable for describing phenomena such as anomalous diffusion, viscoelasticity, and regulatory mechanisms in biomedical systems, including glucose–insulin dynamics [3]. Owing to these capabilities, the analysis and numerical solution of fractional initial value problems have attracted growing attention in recent years.

Analytical solutions of fractional differential equations [4], often expressed through special functions such as the Mittag–Leffler function [5], provide exact representations of system dynamics and serve as valuable benchmarks for validating numerical methods. Fractional-order initial value problems (FOIVPs) are a specific class of fractional differential equations that involve derivatives of non-integer order [6]. They can be formulated as

where the Caputo fractional derivative of order

is defined by

FOIVPs offer a more general and realistic framework for modeling dynamical systems exhibiting memory and hereditary properties, extending beyond the limitations of classical integer-order formulations. Despite their theoretical significance, obtaining closed-form solutions is often infeasible for real-world nonlinear problems, high-dimensional systems, or models involving complex boundary conditions [6]. This limitation motivates the development of reliable and efficient numerical methods.

Classical analytical approaches such as direct computation [7], Adomian decomposition [8], and variational iteration methods [9] can produce closed-form, series, or integral-transform solutions, but they suffer from several inherent limitations. These techniques are often computationally demanding for large-scale or high-order systems and are generally unsuitable for nonlinear FDEs. In addition, they can become numerically unstable when applied to stiff problems, and the convergence of truncated series is frequently slow, limiting their practicality for real-time applications. Consequently, there remains a need for numerical schemes that combine high accuracy, computational efficiency, and robustness for nonlinear fractional systems. To address these challenges, implicit numerical methods, particularly multi-stage formulations, have attracted increasing attention within the fractional calculus community. Compared with higher-order explicit Runge–Kutta-type schemes [10,11,12], implicit multi-stage approaches [13] incorporate future information at each step, improving both stability and convergence and allowing larger time increments [14,15].

In parallel, artificial neural networks (ANNs) have emerged as powerful tools for approximating nonlinear mappings and accelerating computations across scientific and engineering disciplines [16,17]. Once trained, ANNs can deliver rapid and accurate predictions, providing an effective mechanism to accelerate implicit solvers. Recently, increasing attention has been devoted to integrating data-driven learning models with numerical iterative solvers for fractional differential equations. Physics-Informed Neural Networks (PINNs) address fractional dynamics by embedding physical laws into the network’s training loss [18,19,20]. Other studies have introduced neural Runge–Kutta formulations [21] and data-driven implicit solvers [22] that employ neural networks to improve the temporal integration accuracy of fractional problems or to approximate unknown operators. Despite these advances, most existing hybrid numerical approaches [23] still employ neural networks primarily as surrogate or black-box models that approximate unknown functions or solutions without explicitly enforcing the underlying physical or mathematical principles. This limitation can restrict generalization and accuracy, particularly in complex or fractional systems [24], where learning relies mainly on data rather than the governing equations. In the context of fractional differential equations, neural networks can also approximate implicit stage terms and provide intelligent initial guesses, thereby reducing the number of iterations required by conventional iterative methods such as Newton–Raphson [25].

Building upon these developments, hybrid strategies that combine ANNs with classical implicit numerical methods have recently emerged as a promising direction. These approaches exploit the fast approximation capability of ANNs along with the high accuracy of iterative refinement, leading to substantial improvements in computational efficiency and solution accuracy [26]. Recent studies have investigated hybrid AI–numerical approaches for solving initial value problems (IVPs) that integrate neural networks with traditional integration schemes. To enhance solution accuracy for IVPs, Wen et al. [27] employ a Lie-group-guided neural network, while Finzi et al. [28] propose a scalable neural IVP solver with improved stability. Yadav et al. [29] combine harmony search with ANNs to minimize residual errors, whereas Dong and Ni [30] demonstrate that randomized neural networks can learn time-integration techniques, achieving higher long-term accuracy. These studies collectively highlight the potential of hybrid frameworks that embed AI components within numerical solvers to improve convergence and efficiency. However, their performance depends on the availability of an initial approximation sufficiently close to the exact root, since the underlying iterative solvers remain locally convergent. This hybrid paradigm is particularly effective for fractional systems, characterized by strong nonlinearities and long memory effects, where conventional methods often require substantial computational resources [31,32].

Motivated by these challenges, the present study introduces a hybrid ANN-assisted implicit two-stage scheme for solving nonlinear fractional initial value problems. The main goals of the proposed framework are to (i) reduce the computational cost associated with solving implicit stage equations, (ii) preserve high accuracy comparable to analytical or benchmark solutions, and (iii) enhance the convergence and stability properties of the classical two-stage method. The scheme is designed for fractional orders , with a representative case study at illustrating its effectiveness.

The main contributions of this study can be summarized as follows:

A new two-stage implicit Caputo-type scheme for fractional initial value problems.

A feedforward artificial neural network (ANN) integrated with the two-stage fractional solver to efficiently handle nonlinear systems in parallel form.

A trained ANN that provides accurate approximations of the implicit stage values, thereby reducing the number of iterative corrections.

Comprehensive numerical experiments demonstrating improved convergence, fewer iterations, and higher computational efficiency than classical two-stage methods.

Detailed error and stability analyses validating the accuracy of the proposed approach against exact solutions.

The novelty of this work lies in the integration of neural network predictions as intelligent initial guesses for the implicit stage of a fractional multi-stage method. This hybrid strategy combines the global approximation capability of ANNs with the rigorous convergence properties of implicit numerical schemes, achieving a substantial reduction in computational iterations while preserving high accuracy. The proposed scheme is systematically validated through several fractional initial value problems of Caputo type, demonstrating superior performance and robustness compared with existing fractional numerical methods. Overall, the approach represents a promising and effective direction in fractional numerical analysis.

The remainder of this paper is organized as follows:

Section 2 outlines the fundamental definitions and mathematical preliminaries related to fractional calculus.

Section 3 presents the formulation of the fractional two-stage method together with its stability and consistency analyses.

Section 4 describes the proposed hybrid ANN-assisted framework, including the network architecture, training process, optimization strategy, and implementation details.

Section 5 reports the numerical experiments and results, encompassing accuracy comparisons, error analysis, and parallel iteration performance.

Finally, Section 6 concludes the paper and discusses potential directions for future research.

2. Preliminaries

This section presents the fundamental concepts and theoretical background underlying the two-stage fractional numerical scheme and its hybrid ANN implementation. The discussion introduces the main definitions of fractional derivatives, key properties of the Gamma function, the generalized Taylor expansion, and the analytical framework used to assess local truncation error, convergence, and stability.

Definition 1 (Caputo Fractional Derivative). Let be a sufficiently smooth function defined on the interval . The Caputo fractional derivative of order is defined as [33,34]

where denotes the Gamma function, given for by

The operator acts on continuously differentiable functions and reduces to the classical first derivative when . Throughout this work, all fractional operators and parameters are considered within the domain , .
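For orientation, the standard textbook form of this definition for orders strictly between zero and one can be written as follows. The symbols $\sigma$ for the fractional order, $x_0$ for the lower terminal, and $y$ for the function are notational assumptions chosen to match the rest of the text, and they may differ from the authors' own display.

```latex
\[
  {}^{C}\!D^{\sigma}_{x_0}\, y(x)
  = \frac{1}{\Gamma(1-\sigma)} \int_{x_0}^{x} (x-t)^{-\sigma}\, y'(t)\, dt,
  \qquad 0 < \sigma < 1,
\]
\[
  \Gamma(z) = \int_{0}^{\infty} t^{\,z-1} e^{-t}\, dt, \qquad z > 0 .
\]
```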

Properties of Caputo Derivative:

Linearity: .

Derivative of a power function: .

Caputo derivative of a constant: .
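The power-function and constant properties can be checked numerically. The sketch below assumes the standard closed form $D^{\sigma} x^{p} = \frac{\Gamma(p+1)}{\Gamma(p+1-\sigma)} x^{p-\sigma}$ with lower terminal 0 and $0 < \sigma < 1$; the function names `caputo_power_exact` and `caputo_numeric` are hypothetical helpers, not part of the paper.

```python
import math

def caputo_power_exact(p, sigma, x):
    """Closed-form Caputo derivative of x**p (p > 0, lower terminal 0)."""
    return math.gamma(p + 1) / math.gamma(p + 1 - sigma) * x ** (p - sigma)

def caputo_numeric(dy, sigma, x, n=4000):
    """Caputo derivative of order 0 < sigma < 1 at x > 0 by quadrature.

    dy is the ordinary first derivative y'. With s = x - t and the
    substitution s = u**q, q = 1/(1 - sigma), the weakly singular kernel
    (x - t)**(-sigma) disappears, so a composite midpoint rule suffices.
    """
    q = 1.0 / (1.0 - sigma)
    upper = x ** (1.0 - sigma)
    h = upper / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * h          # midpoint of the i-th subinterval
        total += dy(x - u ** q)
    return q * h * total / math.gamma(1.0 - sigma)

sigma = 0.5
exact = caputo_power_exact(2, sigma, 1.0)                  # y = x**2
approx = caputo_numeric(lambda t: 2.0 * t, sigma, 1.0)     # y' = 2t
const = caputo_numeric(lambda t: 0.0, sigma, 1.0)          # y = constant
```

Here `approx` should agree with `exact` to within the quadrature error, and `const` is zero because the integrand vanishes identically.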

Although this study focuses on the Caputo fractional operator, the proposed framework can be readily adapted to other definitions, such as the Riesz or Riemann–Liouville derivatives, for which several high-order discretization schemes have recently been developed [35,36].

Definition 2 (Generalized Taylor Series for Fractional Derivatives). Let and assume that y is sufficiently smooth so that the fractional derivatives (for ) exist and are continuous on . Then, the fractional Taylor expansion about is given by [37]

for some point . The final term represents the remainder in the mean-value form of the fractional Taylor theorem and indicates that the truncation error after n terms is

provided that is bounded on . This series forms the basis of multi-step and two-stage fractional schemes.

Definition 3 (Convergence, Consistency, and Stability). We define the convergence, consistency, and stability [38] of Caputo fractional schemes as follows:

Order of Convergence: Let denote the local truncation error, defined as the difference between the exact solution and the numerical approximation after a single time step, assuming that all previous steps are exact. A numerical method is said to be of order p if the local truncation error satisfies

Consistency: A numerical scheme is consistent if

ensuring that the discrete scheme approximates the continuous fractional initial value problem.

Stability: Stability of fractional schemes can be analyzed using zero-stability and absolute stability criteria [39].

Absolute Stability: For the test equation , a scheme is absolutely stable if as whenever .
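In practice, the order of a scheme is often estimated empirically from errors measured at two step sizes. The following is a minimal sketch of that standard check, assuming the error behaves like $C h^{p}$ for small $h$; the function name `observed_order` and the sample error values are illustrative only and are not results from this study.

```python
import math

def observed_order(err_coarse, err_fine, h_coarse, h_fine):
    """Estimate p from two errors, assuming err is approximately C * h**p."""
    return math.log(err_coarse / err_fine) / math.log(h_coarse / h_fine)

# Illustrative numbers only: halving h reduces the error by a factor of four,
# which is the signature of a second-order method.
p_est = observed_order(1e-2, 2.5e-3, 0.1, 0.05)
```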

Stability Polynomial. Consider the linear test equation where λ is a complex constant characterizing the stiffness or oscillatory behavior of the system, and denotes the numerical approximation at time level . For such problems, the stability function of the numerical method is defined as and the method is said to be stable if for the relevant values of z in the complex plane.

Definition 4 (Lax Equivalence Theorem for Fractional IVPs [40]).

Theorem 1. For a linear, consistent fractional difference scheme applied to a well-posed linear FOIVP, stability is a necessary and sufficient condition for convergence. That is, if the scheme is consistent and stable, then the numerical solution converges to the exact solution as the step size tends to zero.

Definition 5 (Boundedness of a Fractional Scheme). Consider a fractional-order numerical scheme generating approximations for the FOIVP

where is Lipschitz continuous in y with Lipschitz constant L. Then, for any two solutions and of the scheme (possibly with perturbations), the scheme satisfies

where depends on the specific scheme. This inequality guarantees the stability of the method.

The preceding definitions and theorem establish the theoretical foundation required to develop and analyze the proposed two-stage fractional method. They provide the tools for establishing the scheme’s consistency, stability, and convergence, and form the mathematical basis for the hybrid ANN-assisted acceleration introduced in Section 4.

3. Construction and Analysis of Two-Stage Fractional Scheme

Fractional-order differential equations with Caputo derivatives are commonly used to model systems exhibiting memory and hereditary effects in science and engineering. Numerical schemes for these equations generalize classical methods to fractional orders, where the nonlocal character of the derivative introduces additional challenges for accuracy, stability, and convergence. Such methods can be formulated in terms of increment functions, analogous to those in classical Runge–Kutta schemes [41], providing a compact framework for solving fractional-order initial value problems.

The fractional backward implicit Euler method (IEBF), proposed by Batiha et al. [42], is defined as

This method possesses a convergence order of

. It is consistent since

ensuring that the discrete scheme approximates the continuous FOIVPs as

.
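To make the structure of such a one-stage implicit step concrete, the sketch below assumes the generalized-Taylor form $y_{n+1} = y_n + \frac{h^{\sigma}}{\Gamma(\sigma+1)} f(x_{n+1}, y_{n+1})$, which is commonly used for fractional Euler-type methods. This form is an assumption here, since the displayed formula of [42] is not reproduced in the text. The implicit equation is solved by plain fixed-point iteration, which converges only when the step weight times the Lipschitz constant is below one; the function name is hypothetical.

```python
import math

def fractional_backward_euler(f, x0, y0, h, sigma, steps,
                              tol=1e-12, max_iter=200):
    """Implicit Euler-type steps with the ASSUMED weight h**sigma / Gamma(sigma + 1)."""
    w = h ** sigma / math.gamma(sigma + 1.0)   # assumed fractional step weight
    xs, ys = [x0], [y0]
    for n in range(steps):
        x_next = x0 + (n + 1) * h
        y_next = ys[-1]                         # previous value as initial guess
        for _ in range(max_iter):
            y_new = ys[-1] + w * f(x_next, y_next)   # fixed-point update
            converged = abs(y_new - y_next) < tol
            y_next = y_new
            if converged:
                break
        xs.append(x_next)
        ys.append(y_next)
    return xs, ys

# Linear test problem f(x, y) = -y; for sigma = 1 the weight is h and the
# step reduces to the classical backward Euler update y_{n+1} = y_n / (1 + h).
xs, ys = fractional_backward_euler(lambda x, y: -y, 0.0, 1.0, 0.1, 1.0, 10)
```

For the linear test problem each step has the closed form $y_{n+1} = y_n / (1 + w)$, which gives a simple way to verify the iteration.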

To enhance accuracy, Batiha et al. [42] also introduced the fractional trapezoidal implicit two-stage scheme (ITBF), which incorporates a midpoint-type correction. It is expressed as

where

The fractional trapezoidal rule achieves a convergence order of under sufficient smoothness of f. Its local truncation error satisfies , confirming consistency as .

Overall, these two schemes highlight the trade-off between simplicity and accuracy: the fractional method achieves an accuracy of order , whereas attains an accuracy of order , both measured with respect to the fractional step size . In the classical case , these orders reduce to first- and second-order accuracy, respectively.

We propose the following two-stage modified trapezoidal-type scheme (

) for the Caputo fractional IVP:

where

and

represents the increment function of the scheme.

Assumption 1. Let satisfy the following regularity conditions:

is continuous in x and satisfies a Lipschitz condition in y, i.e., there exists a constant such that has continuous fractional derivatives up to the required order σ, ensuring that the Caputo derivative exists and remains bounded.

The exact solution is sufficiently smooth so that is continuous on the interval of interest.

Under these assumptions, the convergence of the proposed scheme can be established as follows:

Theorem 2. Let the numerical scheme be defined as

where

Then, the proposed fractional implicit scheme is consistent, with a local truncation error of order and a convergence order of .

Proof. Expanding the exact solution

in a fractional Taylor series gives

Next, expanding

in a similar manner, we have

Assume the fractional series form

Substituting (19) into (18) and matching terms in powers of

, we obtain

where the coefficients

are explicitly derived in Appendix A. By comparing (19) and (20), the coefficients are found as

Substituting (21) into (19), we obtain

Substituting

and

in (16) gives

Subtracting (23) from (17) gives the local truncation error (LTE):

Imposing

leaves one equation with two parameters. Choosing

gives

. Substituting these values yields the two-stage fractional scheme:

where

and

Hence, the proposed fractional scheme has a local truncation error of order , and therefore exhibits a consistency order of . □

Remark 1. The derived convergence order generalizes the classical result for integer-order implicit Runge–Kutta methods, where yields the standard second-order accuracy. The proposed implicit framework achieves higher accuracy and precision with reduced computational cost, owing to its compact two-stage structure and ANN-assisted initialization. Moreover, the implicit two-stage fractional formulation inherently incorporates the memory effect: each time-step update involves fractional weights of the form , originating from the memory kernel of the Caputo operator (4), thereby coupling the current solution with all past evaluations of .

3.1. Boundedness, Stability, and Convergence

Let

denote the global error. From the recursive form (16) and using the Lipschitz condition

, one can show

which ensures boundedness if

. Applying discrete Grönwall’s inequality yields

proving the global stability and convergence of order

. Thus, the scheme is both consistent and zero-stable, guaranteeing overall convergence in the sense of Dahlquist’s criterion [43].

Consider the two-stage fractional scheme

for FOIVPs as

where

and

is assumed to satisfy a Lipschitz condition in

y with constant

, i.e.,

Now, we check the boundedness of the second stage

. From the implicit definition of

in (26), consider two sequences

and

(perturbed initial values). Using the Lipschitz condition, we obtain

From (26), the difference between successive steps satisfies

where C depends on the Lipschitz constant L and

. This inequality shows that the growth of perturbations is bounded at each step.

Applying (29) recursively for n steps gives

For sufficiently small step size h, the factor

remains bounded, ensuring that the numerical solution does not grow unboundedly. Therefore, the scheme (26) is bounded.

3.2. Stability Analysis

To analyze the stability of the proposed scheme

with

we employ the standard linear test equation

where

denotes the Caputo fractional derivative of order

and

. This equation serves as a canonical model for analyzing the stability and convergence properties of fractional numerical schemes. The corresponding right-hand side can therefore be expressed as

which is used in the subsequent derivation of the stability function.

Substituting into the scheme, we obtain

Hence, the stability function of the scheme is

The stability region is defined as [44]:

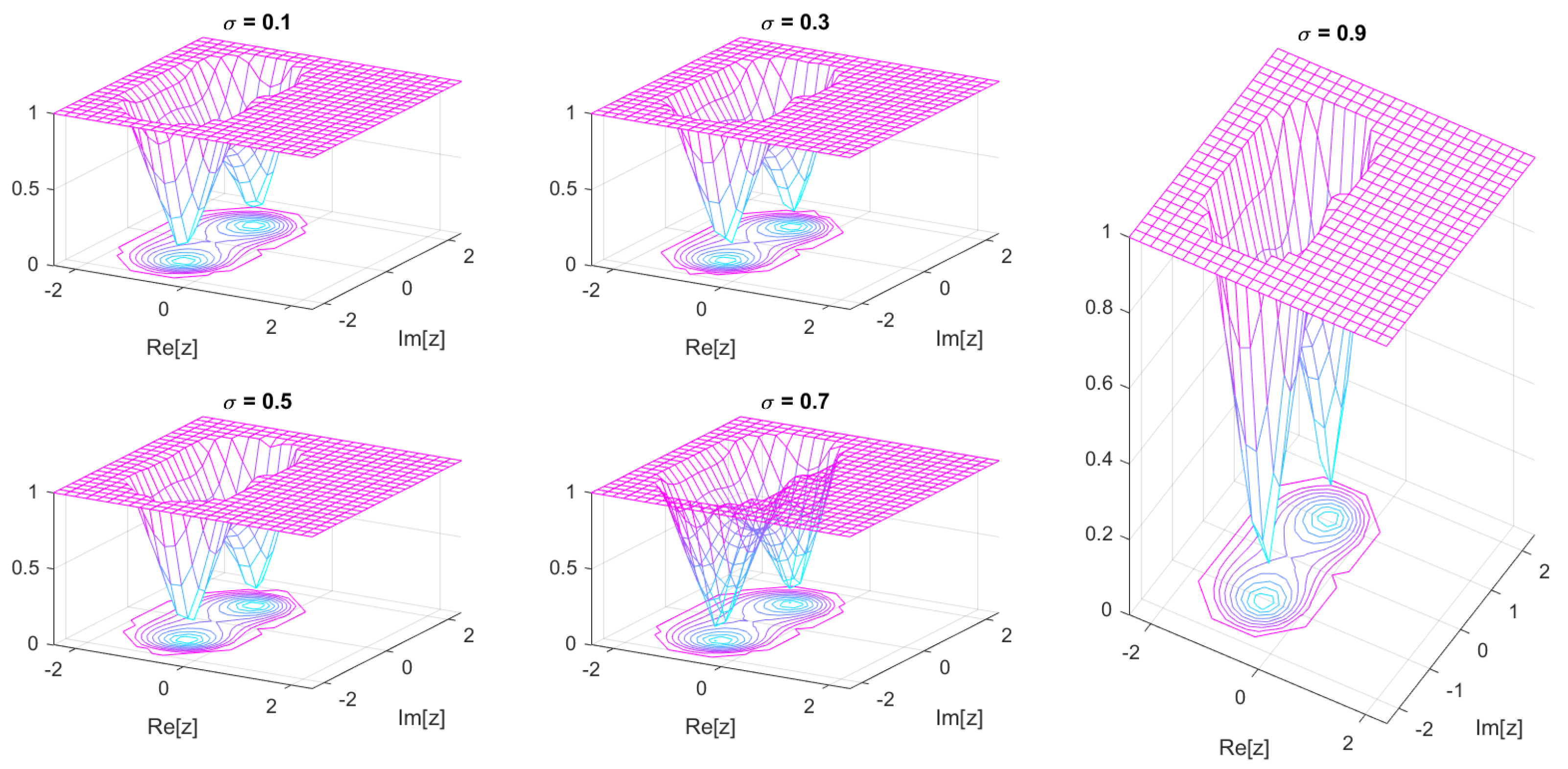

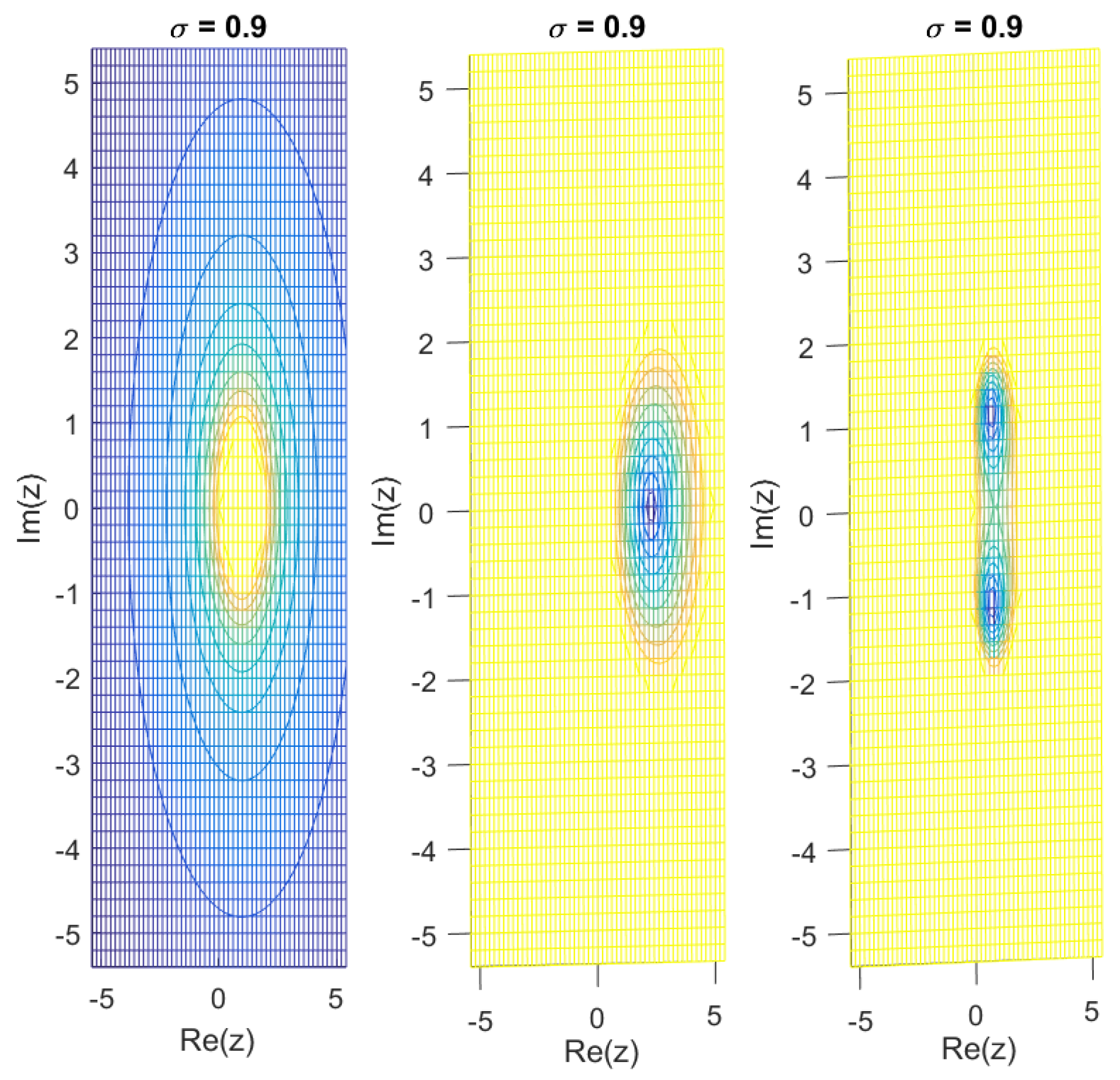

For fractional orders

, the stability region can be visualized in the complex z-plane. The scheme remains stable for all z within

, and the region gradually shrinks as

decreases, confirming the expected stability trend of the proposed fractional scheme

. The stability function of the

scheme is given by

whereas that of the

scheme is

Numerical Comparison on the Real Axis

We tabulate, for each chosen

, the factor

and the numerically determined left endpoint

on the real axis for the new method

, i.e., the most negative real

z for which

in Table 1, Figure 1, and Figure 2.
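A left endpoint of this kind can be located numerically by bisection once a stability function is available. The sketch below is generic: because the stability functions of the schemes discussed here are not reproduced in the text, it uses the classical explicit second-order Runge–Kutta function $R(z) = 1 + z + z^{2}/2$ purely as a stand-in, for which the real-axis stability interval is known to be $(-2, 0)$. The name `left_endpoint` and the bracket values are assumptions, and the routine presumes a single crossing of $|R(z)| = 1$ inside the bracket.

```python
def left_endpoint(R, z_stable=-1.0, z_unstable=-50.0, tol=1e-12):
    """Bisect for the point where |R(z)| crosses 1 on the negative real axis."""
    if not (abs(R(z_stable)) < 1.0 <= abs(R(z_unstable))):
        raise ValueError("bracket needs one stable and one unstable end")
    lo, hi = z_unstable, z_stable       # lo is unstable, hi is stable
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if abs(R(mid)) < 1.0:
            hi = mid                    # still stable: move the stable end left
        else:
            lo = mid
    return hi

# Stand-in stability function (classical explicit RK2), NOT the paper's scheme.
z_left = left_endpoint(lambda z: 1.0 + z + 0.5 * z * z)
```

Replacing the stand-in lambda with the stability function of a given fractional scheme and order would reproduce the kind of real-axis comparison tabulated above.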

4. Fractional Implicit Hybrid Scheme, Methodology, and Implementation

This section presents the development and implementation of the proposed fractional implicit hybrid scheme, which integrates the two-stage Caputo-type formulation with an artificial neural network (ANN)-assisted strategy. The resulting method, referred to as ISBF-, combines the high-order accuracy of the implicit fractional scheme with the adaptive learning and approximation capabilities of ANNs to improve convergence and stability. The detailed computational framework, algorithmic steps, and implementation procedure are described in the following subsections.

4.1. Methodology

This subsection outlines the detailed methodology of the hybrid ANN-assisted two-stage fractional approach. It first reviews the classical two-stage fractional scheme, then describes the design and training of the artificial neural network (ANN), and finally presents the hybrid framework that integrates ANN predictions with the parallel refinement process.

Consider the nonlinear fractional initial value problem

where

denotes the Caputo fractional derivative of order

, and

is a known function. The goal is to approximate

numerically while achieving high accuracy and computational efficiency.

4.1.1. Two-Stage Implicit Fractional Method

The classical two-stage implicit method discretizes the problem over a uniform time grid

,

, with step size

. The method is defined as

Note that

is defined implicitly and requires solving a nonlinear equation, typically using Newton–Raphson iterations. Although the method is stable and accurate, solving

at each step can be computationally expensive.
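The cost of this inner solve depends strongly on the starting value, which is the motivation for the ANN-based initialization. The following sketch counts Newton–Raphson iterations for a hypothetical scalar stage equation $k = f(y_n + w k)$ with $f(y) = -y^{3}$; the right-hand side, the weight `w`, and the two starting values are illustrative assumptions rather than the paper's test problem.

```python
def newton(g, dg, k0, tol=1e-12, max_iter=50):
    """Newton-Raphson for g(k) = 0; returns the root and the iteration count."""
    k = k0
    for it in range(1, max_iter + 1):
        step = g(k) / dg(k)
        k -= step
        if abs(step) < tol:
            return k, it
    raise RuntimeError("Newton iteration did not converge")

# Hypothetical implicit stage equation k = f(y_n + w*k) with f(y) = -y**3,
# rewritten as g(k) = k + (y_n + w*k)**3 = 0.
y_n, w = 1.0, 0.1
g = lambda k: k + (y_n + w * k) ** 3
dg = lambda k: 1.0 + 3.0 * w * (y_n + w * k) ** 2

k_far, it_far = newton(g, dg, k0=10.0)      # poor initial guess
k_near, it_near = newton(g, dg, k0=-0.78)   # guess close to the root
```

Both runs reach the same root, but the run started near the root needs fewer iterations, which is the effect a good predictor is meant to exploit.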

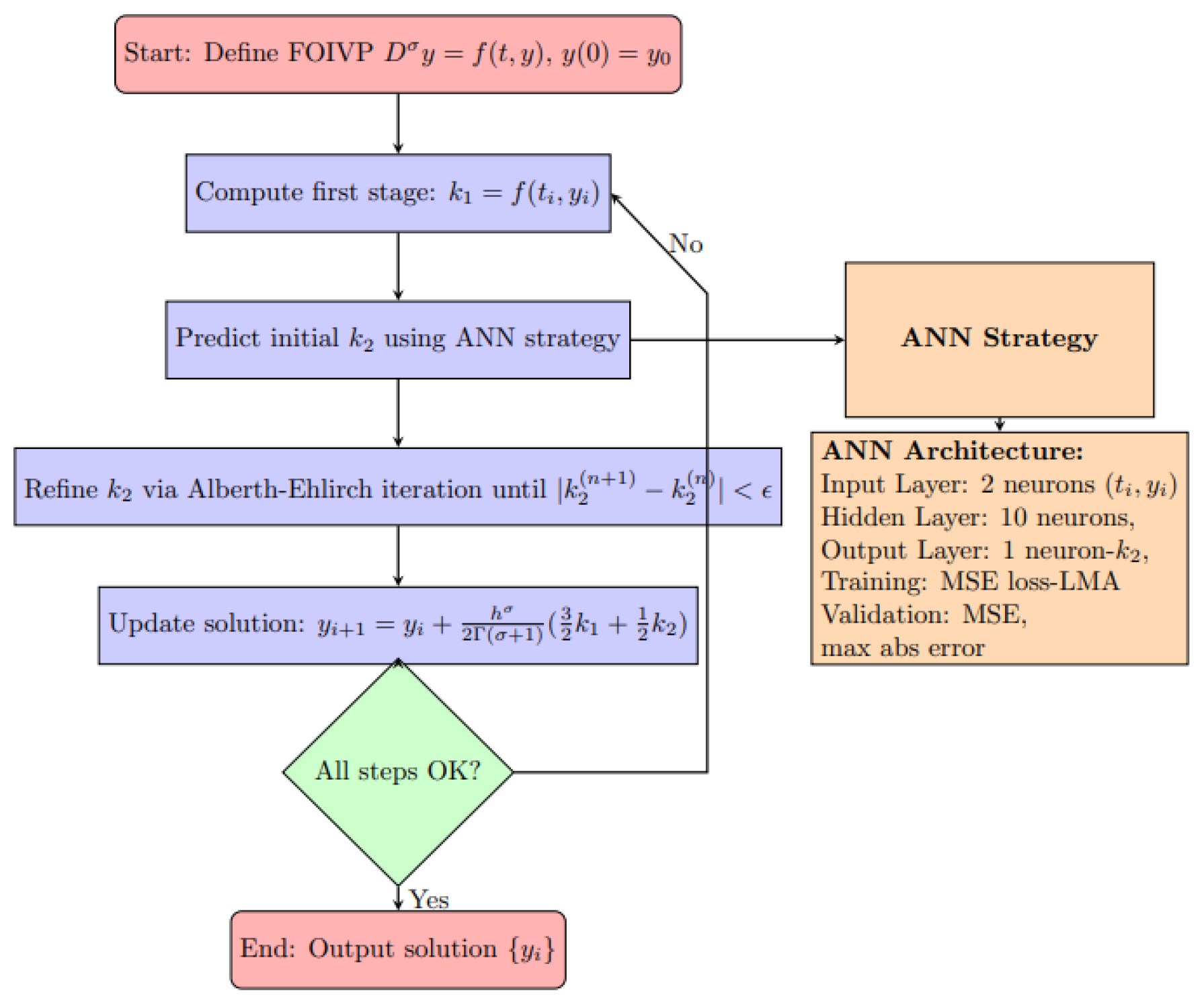

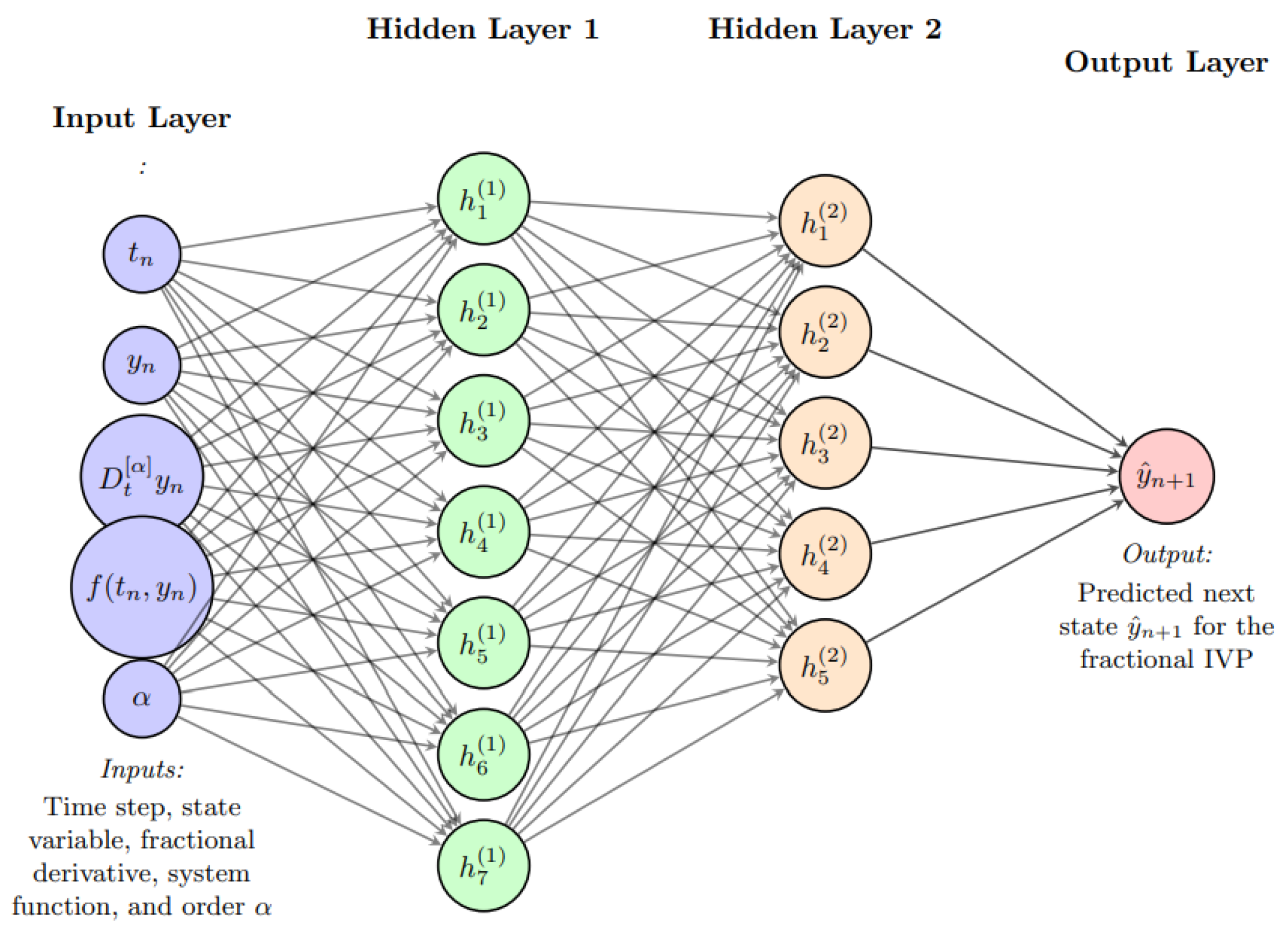

4.1.2. Artificial Neural Network (ANN) Framework and Implementation

To accelerate the computation, we employ an ANN to approximate the implicit stage value

. The ANN is designed as a feedforward network (Figure 3 and Figure 4) with one hidden layer, which maps the inputs

to an estimate of

:

where

and

are the weight and bias parameters of the network. The hidden layer uses a sigmoid-type activation function (tanh, as specified in Algorithm 1), while the output layer is linear to predict continuous

values.
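A forward pass through a network of this shape can be sketched in a few lines. The layer sizes (2 inputs, 10 tanh hidden units, 1 linear output) follow Algorithm 1; the random weights, the helper names `init_params` and `forward`, and the choice of two sample input values are assumptions for illustration, and no training is performed here.

```python
import math
import random

def init_params(n_in=2, n_hidden=10, seed=0):
    """Random (untrained) weights for a 2-10-1 feedforward network."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = rng.uniform(-1, 1)
    return W1, b1, W2, b2

def forward(params, inputs):
    """tanh hidden layer followed by a linear output neuron."""
    W1, b1, W2, b2 = params
    hidden = [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
              for row, b in zip(W1, b1)]
    return sum(w * h for w, h in zip(W2, hidden)) + b2

params = init_params()
prediction = forward(params, [0.1, 1.0])   # two hypothetical input values
```

Because tanh is bounded by one in magnitude, the output can never differ from the output bias by more than the sum of the absolute output weights, which is a convenient sanity check on an implementation.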

Training Data Generation

The training dataset is generated using the exact solution:

Here,

form the ANN input, and

forms the target output.

Loss Function and Optimization

The ANN parameters are optimized [45] by minimizing the mean squared error (MSE) between the predicted

and the true

:

Optimization is carried out using the Levenberg–Marquardt algorithm [46], which combines the advantages of gradient descent and Gauss–Newton methods [47,48] to achieve fast convergence. This approach enables the network to approximate

with high accuracy, thereby reducing the number of iterations required during time stepping.
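For reference, the Levenberg–Marquardt update in its standard damped least-squares form is shown below. The symbols are generic and assumed here: $\theta$ collects the network weights and biases, $r(\theta)$ is the vector of residuals between predictions and targets, $J$ is the Jacobian of $r$ with respect to $\theta$, and $\mu > 0$ is the damping parameter.

```latex
\[
  \theta_{k+1} = \theta_k
  - \left( J^{\top} J + \mu I \right)^{-1} J^{\top} r(\theta_k)
\]
```

For small $\mu$ the step approaches the Gauss–Newton step, while for large $\mu$ it approaches a short gradient-descent step of length proportional to $1/\mu$, which is the sense in which the algorithm blends the two methods.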

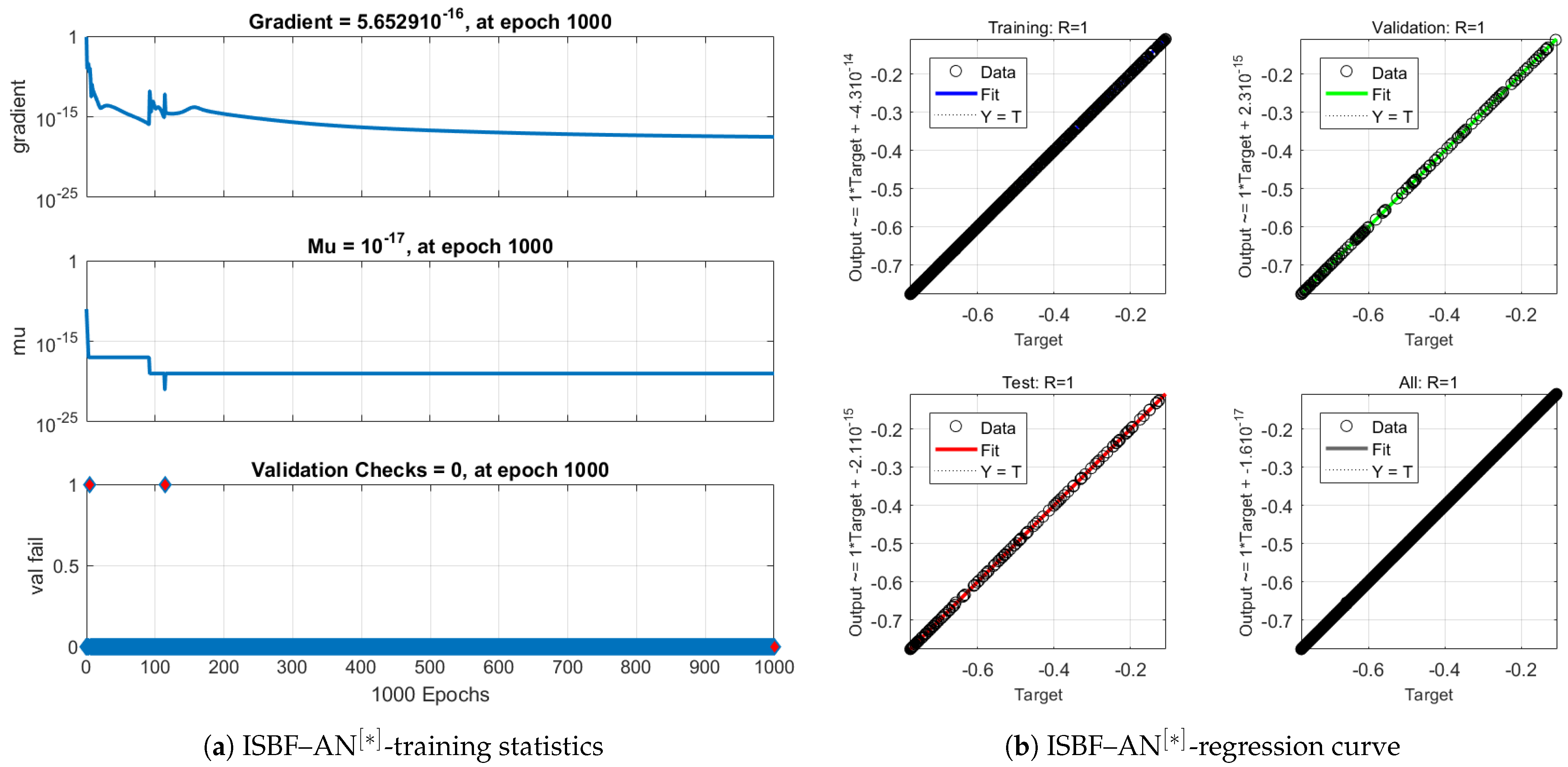

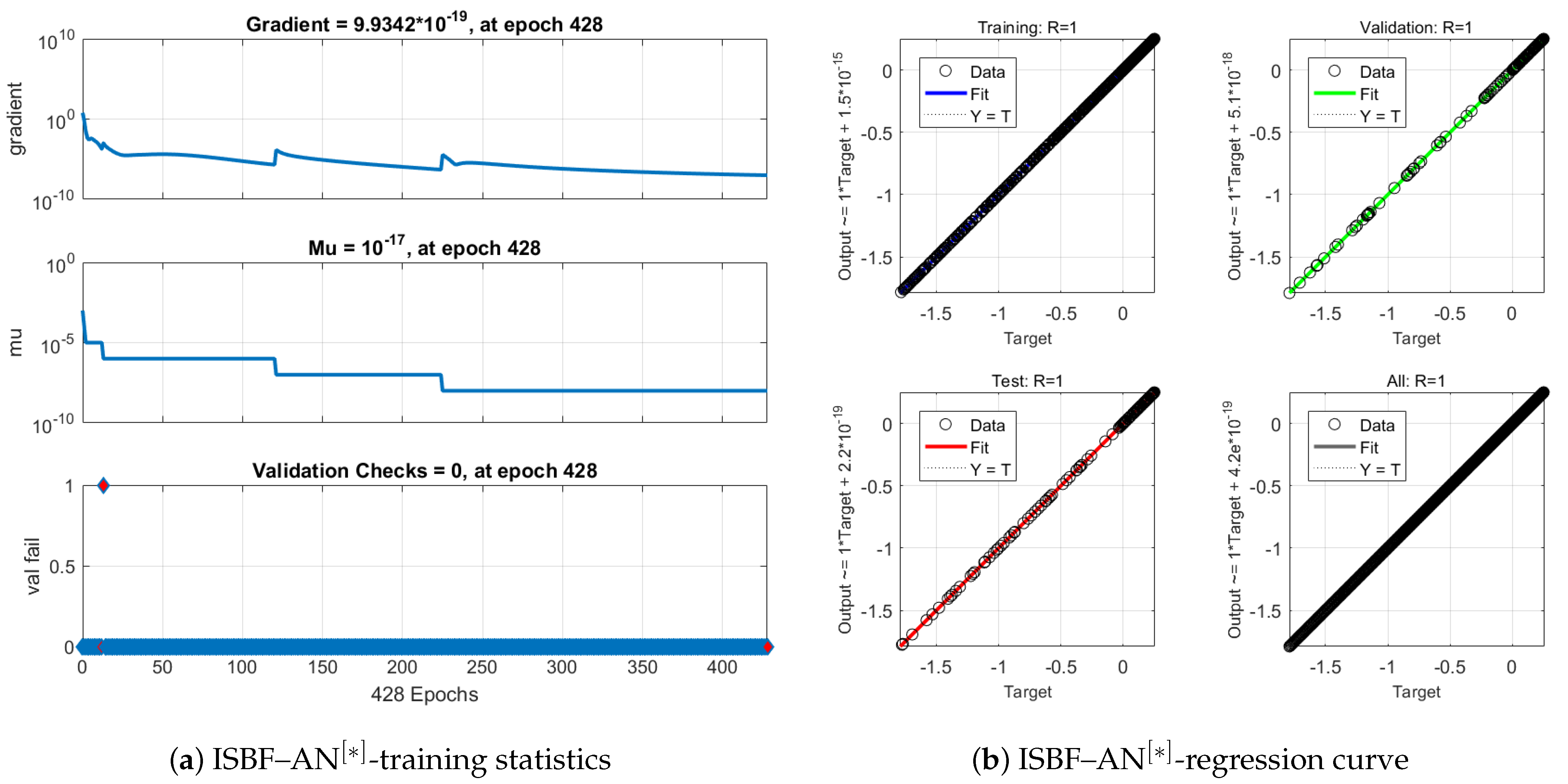

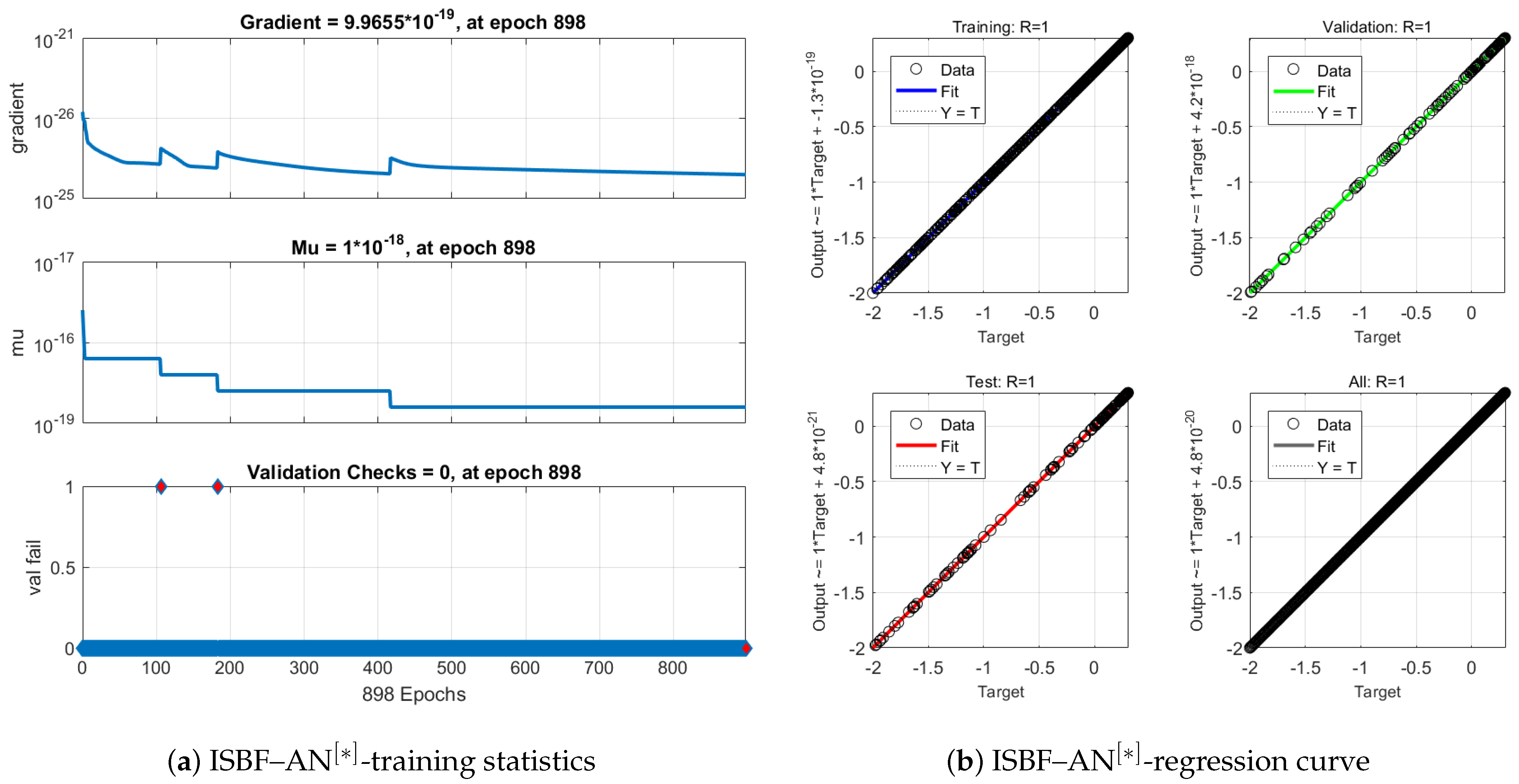

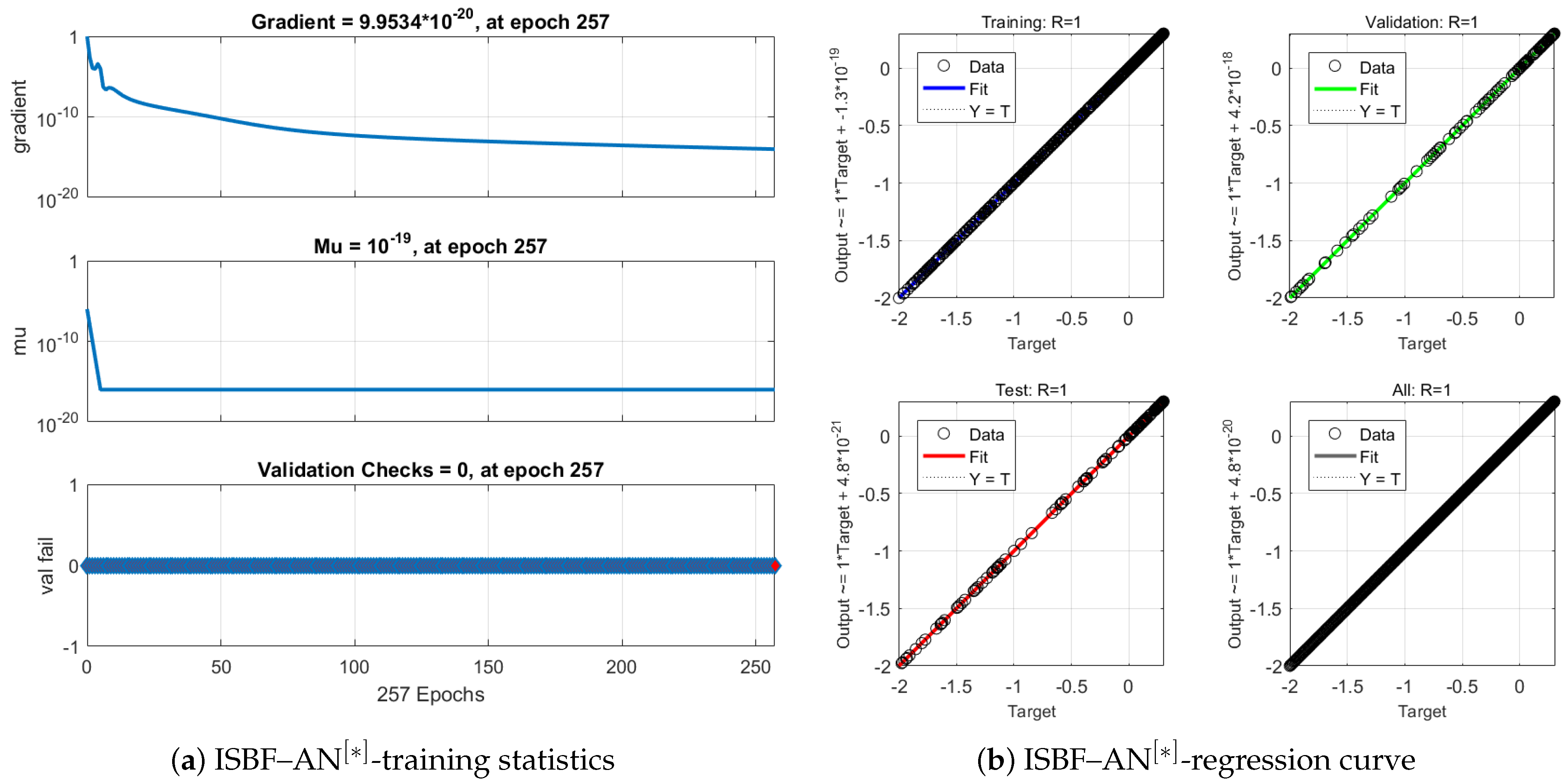

Validation and Testing

The trained ANN is validated using a separate test set to ensure generalization. The relative error

is computed to verify that predictions meet the desired tolerance. Only after satisfactory validation is the ANN incorporated into the hybrid method.

4.2. Hybrid ANN with Parallel Tuning ISBF-

In the hybrid strategy, the ANN output serves as the initial guess for the Aberth–Ehrlich iteration [49] of

:

The iteration continues until , where tol is a small tolerance (e.g., ). By initializing with from the ANN, the method significantly reduces the number of iterations per time step, thereby improving efficiency without sacrificing accuracy.
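The refinement loop and its stopping rule can be illustrated with a generic Aberth–Ehrlich iteration for the simultaneous roots of a polynomial. This is a sketch under stated assumptions, not the authors' implementation: the polynomial $z^{3} - 1$, the starting values, and the function names are hypothetical, and the supplied starting values merely stand in for ANN predictions.

```python
import cmath

def poly_eval(coeffs, z):
    """Horner evaluation of p(z) and p'(z); coeffs are highest degree first."""
    p, dp = 0j, 0j
    for c in coeffs:
        dp = dp * z + p
        p = p * z + c
    return p, dp

def aberth_ehrlich(coeffs, guesses, tol=1e-12, max_iter=100):
    """Refine all roots simultaneously; stop when the largest update < tol."""
    z = list(guesses)
    for it in range(1, max_iter + 1):
        steps = []
        for i, zi in enumerate(z):
            p, dp = poly_eval(coeffs, zi)
            newton = p / dp
            # Repulsion term keeps the approximations from collapsing together.
            repulsion = sum(1.0 / (zi - zj) for j, zj in enumerate(z) if j != i)
            steps.append(newton / (1.0 - newton * repulsion))
        z = [zi - wi for zi, wi in zip(z, steps)]
        if max(abs(wi) for wi in steps) < tol:
            return z, it
    raise RuntimeError("Aberth-Ehrlich iteration did not converge")

coeffs = [1, 0, 0, -1]                                   # p(z) = z**3 - 1
far = [1.5 * cmath.exp(1j * (0.4 + 2 * cmath.pi * k / 3)) for k in range(3)]
near = [1.01 * cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]

roots_far, it_far = aberth_ehrlich(coeffs, far)
roots_near, it_near = aberth_ehrlich(coeffs, near)
```

Starting values close to the roots play the role of the network prediction, and the iteration count returned in each case shows how much refinement work remains.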

The hybrid ANN-based implicit iterative solver has the same per-iteration computational complexity as classical implicit methods, since the ANN prediction is used only to initialize each implicit stage. The network is trained offline, typically in fewer than 1000 epochs, and its inference cost is minimal. The approach therefore keeps preprocessing overhead low while decreasing the number of iterations and the overall CPU time.

In Table 3, N denotes the number of time steps, n the number of unknowns per step, m the average iteration count, p the number of ANN parameters, and

the linear algebra cost per iteration. The proposed hybrid formulation achieves a substantial reduction in overall cost compared with the classical implicit approach,

, since in practice

.

4.3. Convergence Enhancement and Efficiency

The hybrid approach enhances convergence through two complementary mechanisms:

Intelligent Initialization: The ANN provides a high-quality initial estimate for , thereby reducing the number of iterations required by the scheme.

Iterative Refinement: The parallel iterative procedure ensures that the final value of satisfies the implicit equation with high precision, preserving the second-order accuracy of the two-stage .

Performance Evaluation: To illustrate the efficiency of the proposed ANN–implicit solver across different fractional orders

, a summary table reports the percentage improvements in both computational time and accuracy relative to baseline methods (IEBF and ITBF). The percentage improvements are computed as follows:

where

and

denote the CPU time and error of the reference method, respectively, while

and

correspond to the proposed scheme. These metrics enable a clear comparison of the relative performance advantages of the proposed fractional implicit solver for various fractional orders

when applied to FOIVPs.
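A percentage improvement of this kind is usually computed as the relative reduction with respect to the reference value. The sketch below assumes that form; the function name `percent_improvement` and the sample numbers are illustrative, not measured results.

```python
def percent_improvement(reference, proposed):
    """Relative reduction of `proposed` with respect to `reference`, in percent."""
    return 100.0 * (reference - proposed) / reference

# Illustrative values only (not results from the paper).
time_gain = percent_improvement(2.0, 1.5)       # CPU time in seconds
error_gain = percent_improvement(1e-4, 1e-6)    # absolute errors
```

A positive value means the proposed scheme is faster or more accurate than the reference, and a negative value means it is worse.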

This combined strategy significantly reduces the computational cost while maintaining high accuracy, particularly for stiff or nonlinear fractional systems. All experiments were performed in Matlab (R2023b) (The MathWorks, Natick, MA, USA) on a Windows 11 platform equipped with an Intel® Core™ i7-12700H (Intel Corporation, Santa Clara, CA, USA) CPU @ 2.70 GHz and 16 GB of RAM. The complete procedure is presented in pseudocode form in Algorithm 1.

Algorithm 1 Hybrid ANN-Based Two-Stage Method ISBF- for Fractional IVPs

Require: Fractional IVP: , time step h, tolerance , total steps N, trained ANN

Ensure: Approximate solution

1: Step 1: Define FOIVPs

2: Set initial condition and fractional order

3: Define function

4: Step 2: Two-Stage Time-Stepping Method

5: For each time step :

    1. Compute first stage:

6: Step 3: ANN-Based Initial Guess for

    1. ANN Architecture: Input layer: 2 neurons. Hidden layer: 10 neurons, tanh activation. Output layer: 1 neuron (predicted ), linear activation.

    2. Training: Generate training data: . Optimize weights using the Levenberg–Marquardt method.

    3. Prediction:

    4. Validate ANN on test dataset using MSE and maximum absolute error.

7: Step 4: Refine via Aberth–Ehrlich Method

    1. Set iteration

    2. Repeat until :

8:

9: Step 6: Repeat for all time steps

10: Step 7: Output

11: Return approximate solution vector

Key Advantages of the Hybrid ANN-Based Implicit Scheme

The hybrid approach demonstrates the following:

Accurate prediction of the implicit stage value ;

Reduction in the number of refinement iterations and overall CPU time;

Strong generalization capability of the ANN across time steps;

Potential applicability to other fractional-order systems and multi-dimensional problems.