1. Introduction

Chatbot technology has advanced rapidly in the past decade, driven by improvements in artificial intelligence (AI) techniques such as natural language processing (NLP) and machine learning (ML) [1]. Modern conversational agents benefit from text-to-speech and speech-to-text capabilities, making interactions more natural [2] and increasing user acceptance worldwide [3]. Intelligent personal assistants (Apple Siri, Microsoft Cortana, Amazon Alexa, Google Assistant) now demonstrate a better understanding of user input than earlier chatbots [4], thanks to these AI advances. They can even mimic human voices and produce coherent, well-structured sentences.

Chatbots are employed across diverse domains, including entertainment, healthcare, customer service, education, finance, and travel [5], and even as companions [6]. These successes result from years of research aimed at improving conversational AI functionality, performance, and language understanding accuracy [7], along with new strategies for efficient implementation [8] and dialogue quality evaluation [9]. Nevertheless, current chatbots often still fall short of user expectations [1], leading to frustration and dissatisfaction [10]. This shortfall has led researchers to emphasize the importance of endowing such systems with affective recognition capabilities, on the premise that acknowledging and responding to user emotions improves user experience [11].

Because of these shortcomings, users may perceive chatbot interactions as unnatural or impersonal. To address this, Wolk [12] argued that conversational agents should provide personalized experiences, foster lasting relationships, and receive positive user feedback. He suggested this could be achieved if such systems were capable of processing users’ intents (in addition to understanding content).

In summary, many researchers advocate for conversational agents and other AI applications to be able to recognize both the user’s intentions and emotions during interactions. This dual capability could make dialogues more natural and satisfying.

Recently, large language models (LLMs) have had a profound impact on the NLP community with their remarkable zero-shot performance on a wide range of language tasks [13]. As LLMs are increasingly integrated into daily life applications, it is vital to analyze how well these models can recognize and classify user intentions and emotions. Developments like supervised fine-tuning have further improved LLMs’ understanding of user instructions and intentions [14].

The debut of ChatGPT in late 2022 [15] revolutionized AI conversations with its ability to engage in human-like dialog. More recently, in early 2025, a new open-source LLM called DeepSeek-r1 (DS-r1) [16] was introduced as a promising model with purportedly advanced dialogue capabilities. DS-r1 achieves performance comparable to OpenAI-o1-1217 on reasoning tasks and outstanding results on benchmarks, with scores of 90.8% on MMLU, 84.0% on MMLU-Pro, and 71.5% on GPQA Diamond, showing its competitiveness [17]. DS-r1 also excels in a wide range of tasks, including creative writing, general question answering, editing, summarization, and more. It achieves an impressive length-controlled win-rate of 87.6% on AlpacaEval 2.0 and a win-rate of 92.3% on ArenaHard, showcasing its strong ability to handle non-exam-oriented queries intelligently [17].

To the best of our knowledge, this is the first systematic study evaluating DeepSeek, a recently released open-source LLM, on the combined tasks of emotion and intent detection in dialogue, and examining whether additional conversational context (for emotion recognition, the previously annotated intent, and vice versa) influences performance on these tasks. While prior research has assessed ChatGPT, GPT-4, or fine-tuned transformer models for these tasks, DeepSeek has not been examined in this context despite its reported strengths in reasoning and general dialogue. Our work, therefore, provides novel insights into the affective and pragmatic capacities of a state-of-the-art open-source LLM, highlighting an asymmetric relationship between emotions and intentions that has not been previously documented.

Objectives: In this study, we evaluate the performance of DeepSeek (V3-0324 and R1-0528) as a representative LLM on two key tasks: emotion recognition and intent recognition from text. We focus on zero-shot prompting scenarios—without any fine-tuning—on established conversational datasets. Specifically, we assess (1) how accurately DeepSeek-v3 (DS-v3) and DeepSeek-r1 (DS-r1) can classify the emotion expressed in a user utterance, and (2) how accurately they can classify the user’s intent (dialogue act). We also investigate whether providing additional context (such as the surrounding conversation or knowledge of the other aspect, emotion versus intent) affects performance on these tasks.

The main contributions of this work are:

A demonstration that DeepSeek can classify intent and emotional state reasonably well in zero-shot conditions.

A demonstration that DeepSeek improves its recognition of intents and emotional states when it is provided with the conversation context.

A reaffirmation of the suggestion that providing an LLM with the intention of an utterance can improve emotional state classification.

The remainder of this paper is organized as follows:

Section 2 provides background on emotion and intention detection, as well as their relationship.

Section 4 describes the experimental methodology, including the prompt design and datasets.

Section 5 presents the results of the emotion and intent classification experiments.

Section 6 presents the comparative results of ChatGPT, DeepSeek, and Gemini.

Section 7 discusses the findings, and

Section 8 concludes the paper and outlines future work.

4. Materials and Methods

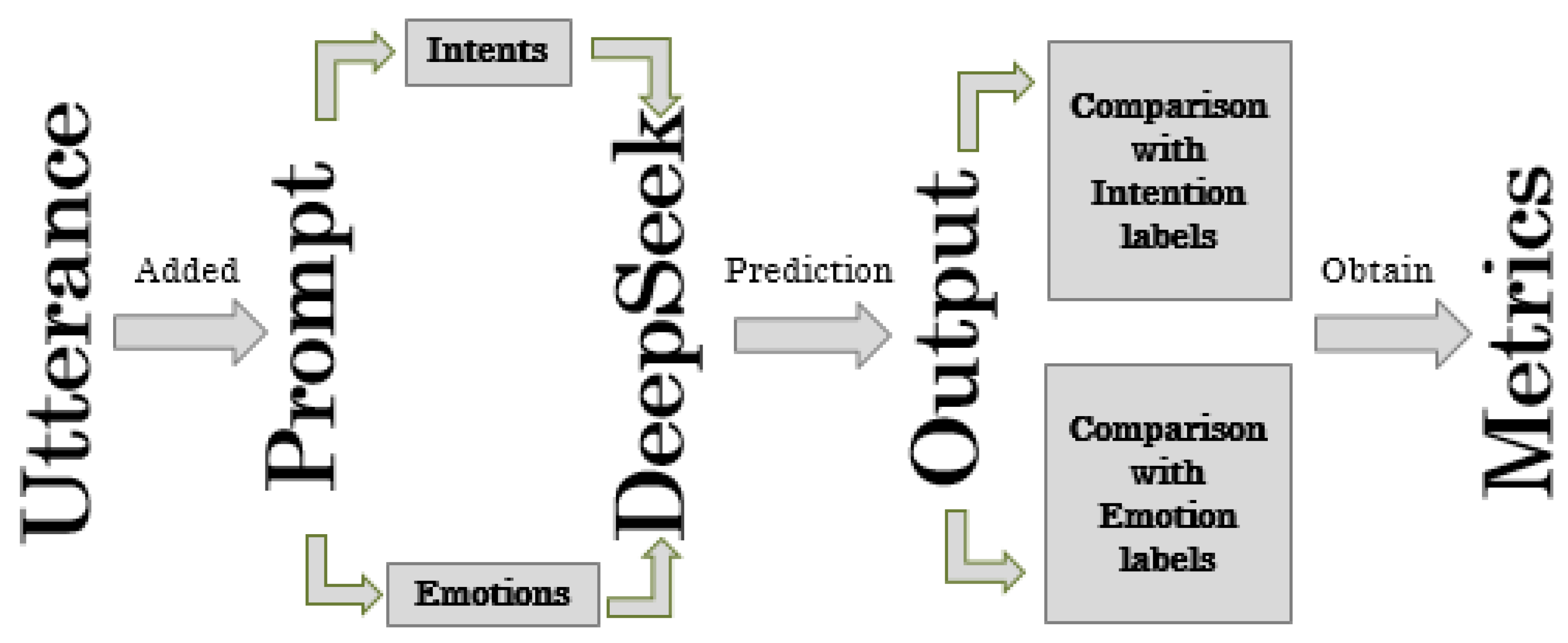

Each utterance from every conversation was sent to DeepSeek (DS) through a module designed for this purpose, together with a prompt specifying the desired task, so that DS classified it within a predefined set of categories. The classification produced by DS was then retrieved and compared against the dataset’s original annotations. Finally, we computed metrics to assess the model’s performance.

Formally, the method can be described as follows. Given a conversation

$$C = (u_1, u_2, \ldots, u_n),$$

where $u_i$ represents the $i$-th utterance, the function that maps every utterance to its prediction can be defined as

$$f(p, u_i) = \hat{e}_i, \quad \hat{e}_i \in E,$$

where $p$ represents the prompt added to each utterance, $\hat{e}_i$ the prediction made by the model for the specific utterance $u_i$, and $E$ the set of predefined labels.

Then, let

$$\hat{L} = (\hat{e}_1, \hat{e}_2, \ldots, \hat{e}_n),$$

where $\hat{L}$ represents the ordered list of all the predictions made by the model in the set of predefined labels.

If we want to consider the entire context of the conversation, we have the function

$$g(p_c, C) = \hat{L}, \quad \hat{e}_i \in \hat{L},$$

where $p_c$ represents the prompt that asks for consideration of the conversational context, and $\hat{e}_i$ is an element of $\hat{L}$, the ordered list of all the predictions made by the model.

Now, let $L$ be an ordered list with the original annotations of the conversation $C$, mapped from each utterance,

$$L = (l_1, l_2, \ldots, l_n).$$

In the last case, when we want to consider the context of the conversation and provide the model with the additional information of the pre-annotated label class, we have the function

$$h(p_{ca}, C, L) = \hat{L},$$

where $p_{ca}$ represents the prompt that asks for consideration of the conversational context with the additional information, and $l_i$ represents the original label of the conversation $C$ belonging to $L$, mapped from each utterance $u_i$.

Finally, the next function returns the confusion matrix that will help us obtain the necessary metrics to evaluate the model,

$$M = \mathrm{cm}(L, \hat{L}).$$

The matrix $M$ enables us to obtain the metrics for evaluation (see Section 4.3 for more details on this function and other algorithms).
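The three mappings described above (per utterance, with conversational context, and with context plus pre-annotated labels) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the module used in the study: the names `UtteranceModel`, `ConversationModel`, `predict_isolated`, `predict_with_context`, `predict_with_context_and_labels`, and `agreement` are hypothetical, and the model is abstracted as a callable so that no particular API is implied.

```python
from typing import Callable, List, Optional, Sequence

# Hypothetical interfaces: in practice these callables would wrap the model queries.
UtteranceModel = Callable[[str, str], str]  # (prompt, utterance) -> label
ConversationModel = Callable[[str, Sequence[str], Optional[Sequence[str]]], List[str]]


def predict_isolated(model: UtteranceModel, prompt: str,
                     conversation: Sequence[str]) -> List[str]:
    """One query per utterance; the model never sees neighbouring turns."""
    return [model(prompt, utterance) for utterance in conversation]


def predict_with_context(model: ConversationModel, prompt: str,
                         conversation: Sequence[str]) -> List[str]:
    """One query for the whole conversation; one label per utterance comes back."""
    return model(prompt, conversation, None)


def predict_with_context_and_labels(model: ConversationModel, prompt: str,
                                    conversation: Sequence[str],
                                    labels: Sequence[str]) -> List[str]:
    """As above, but each utterance is accompanied by a pre-annotated label."""
    if len(labels) != len(conversation):
        raise ValueError("one pre-annotated label is needed per utterance")
    return model(prompt, conversation, labels)


def agreement(gold: Sequence[str], predicted: Sequence[str]) -> float:
    """Fraction of positions where the prediction equals the original annotation."""
    if not gold or len(gold) != len(predicted):
        raise ValueError("gold and predicted must be non-empty and equally long")
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)
```

Keeping the model behind a callable means the same evaluation code can be exercised with a stub before any real queries are issued.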

Figure 1 illustrates the method described above.

4.1. Prompt Design for the LLM

We initially designed a series of text prompts to query DeepSeek for emotion and intent classification. Because we operate in a zero-shot setting, where carefully phrased prompts are essential for eliciting the desired behavior from the model, we experimented with multiple prompt formulations to determine which yielded the most accurate and consistent responses. In the end, we issued two separate prompts per input: one requesting the dominant emotion, and another asking for the inferred communicative intention. Emotion categories followed the Ekman taxonomy for the MELD dataset (see Section 4.2 below) and, for the IEMOCAP dataset, the same Ekman taxonomy plus excited and frustrated, while intentions were defined using the corpus authors’ annotation schema. All prompts instructed the model to output a single-word label indicating either an emotion or an intent.

For emotion recognition, without considering the context of the conversation, the prompt used was:

“In one word, choose between anger, excited, fear, frustrated, happy, neutral, sad, or surprised. Not a summary. What emotion is shown in the next text?: ‘…’”

We found that constraining the output to a single word and providing an explicit list of emotion labels often improved the consistency of the model’s answers. Adding “Not a summary” to the prompt was essential to keep the response free of irrelevant text, which would otherwise make it harder to extract the desired word.
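As an illustration of how such a constrained prompt can be assembled and its answer reduced to a single label, consider the following minimal sketch. The wording of the prompt follows the one quoted above; the helper names `build_emotion_prompt` and `extract_label`, and the policy of returning `None` when no listed label appears in the reply, are assumptions made for this example rather than the implementation used in the study.

```python
import re
from typing import Optional, Sequence

# Label list taken from the single-utterance emotion prompt quoted above.
EMOTIONS = ("anger", "excited", "fear", "frustrated",
            "happy", "neutral", "sad", "surprised")


def build_emotion_prompt(utterance: str, labels: Sequence[str] = EMOTIONS) -> str:
    """Assemble the single-utterance prompt with an explicit list of labels."""
    options = ", ".join(labels[:-1]) + f", or {labels[-1]}"
    return (f"In one word, choose between {options}. Not a summary. "
            f"What emotion is shown in the next text?: '{utterance}'")


def extract_label(reply: str, labels: Sequence[str] = EMOTIONS) -> Optional[str]:
    """Return the first listed label found in the reply, or None if none appears."""
    for token in re.findall(r"[a-z]+", reply.lower()):
        if token in labels:
            return token
    return None


if __name__ == "__main__":
    print(build_emotion_prompt("I've been to the back of the line five times."))
    print(extract_label("Frustrated."))      # frustrated
    print(extract_label("It is unclear."))   # None
```

Returning `None` for off-list answers keeps such cases visible, so they can be counted or re-queried instead of being silently mapped to a label.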

In some variants, we provided conversation context and asked the model to label the emotion of each utterance, evaluating its ability to perform in-context learning across multiple turns.

For intent (dialog act) recognition, we used analogous prompts but with dialog act labels. For example, when we wanted the model to classify the intent of an utterance, we might prompt:

“In one word, choose between Greeting, Question,…, and Others. Not a summary. What dialogical act is shown in the next text?: ‘…’.”

In the case of classifying the emotion of each sentence, considering the context of the conversation, we used the following prompt:

“According to the conversation context, choose between anger, excitement, fear, frustration, happiness, neutral, sadness, or surprise. Answer each sentence in one word with a list and a corresponding number. Not a summary. What emotion is shown in each sentence?: ‘…’.”

In the prompt shown above, the phrases “According to the conversation context” and “Answer each sentence in one word with a list and a corresponding number” can be omitted. What is essential is the structure in which the conversation is provided: each utterance must carry a sequential number (e.g., “1.—Joey—But then who? The waitress I went out with last month? 2.—Rachel—You know? Forget it! 3.—Joey—No-no-no-no, no!…”), so that the model returns the corresponding numbered list as expected.
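The numbering requirement can be illustrated with a short sketch that renders a conversation as sequentially numbered lines and reads back a numbered list of one-word labels. The function names `number_conversation` and `parse_numbered_reply` are hypothetical, the `number. speaker: text` separator is a simplification of the format shown in the example above, and the reply format (one `number. label` entry per line) is an assumption about how the model answers.

```python
import re
from typing import Dict, List, Optional, Sequence, Tuple


def number_conversation(turns: Sequence[Tuple[str, str]]) -> str:
    """Render (speaker, text) turns as sequentially numbered lines."""
    return "\n".join(f"{i}. {speaker}: {text}"
                     for i, (speaker, text) in enumerate(turns, start=1))


def parse_numbered_reply(reply: str, n_turns: int) -> List[Optional[str]]:
    """Map each turn number to its one-word label; None where the reply has a gap."""
    found: Dict[int, str] = {}
    pattern = r"^\s*(\d+)\s*[.):\-]+\s*([A-Za-z]+)"
    for match in re.finditer(pattern, reply, flags=re.MULTILINE):
        # Keep the first label seen for a given number.
        found.setdefault(int(match.group(1)), match.group(2).lower())
    return [found.get(i) for i in range(1, n_turns + 1)]


if __name__ == "__main__":
    turns = [("Joey", "But then who?"), ("Rachel", "You know? Forget it!")]
    print(number_conversation(turns))
    print(parse_numbered_reply("1. Surprise\n2. Anger", n_turns=2))
```

Aligning labels by their number rather than by position means that a skipped or merged line in the reply shows up as a gap instead of shifting every later label by one.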

However, in this study, we primarily focus on intent classification in conjunction with emotion (see Section 4.4 below).

For experiments where we provided cross-task information, the prompts were extended. For instance, to see if emotion context aids intent classification, we gave the model an utterance along with a known emotion label and asked for the intent. Conversely, to test if knowing the intent aids emotion recognition, we provided the dialog act label in the prompt and asked for the emotion. An example of the latter:

“According to the context of the conversation and its dialog act classification (given in parentheses), choose the emotion (fear, surprise, sadness, anger, joy, disgust, or neutral) of each sentence. Each sentence’s dialog act is provided. Not a summary. What emotion is shown in each sentence of the conversation? ‘…’.”

Here, the conversation lines were annotated with dialog act tags in parentheses, and the model was asked to output an emotion for each line.
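A minimal sketch of how conversation lines might be annotated with dialog act tags in parentheses is given below. The function name `annotate_with_dialog_acts`, the placement of the tag at the end of each line, and the `number. speaker: text` layout are assumptions made for illustration; the exact layout used in the study is not specified here.

```python
from typing import Sequence, Tuple


def annotate_with_dialog_acts(turns: Sequence[Tuple[str, str]],
                              dialog_acts: Sequence[str]) -> str:
    """Number each (speaker, text) turn and append its dialog act in parentheses."""
    if len(turns) != len(dialog_acts):
        raise ValueError("one dialog act label is needed per utterance")
    lines = []
    for i, ((speaker, text), act) in enumerate(zip(turns, dialog_acts), start=1):
        lines.append(f"{i}. {speaker}: {text} ({act})")
    return "\n".join(lines)


if __name__ == "__main__":
    turns = [("Joey", "But then who?"), ("Rachel", "You know? Forget it!")]
    print(annotate_with_dialog_acts(turns, ["question", "command"]))
```

The length check guards against a silent misalignment between utterances and tags, which would otherwise feed the model a wrong hint for every later line.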

In summary, our prompt engineering strategy was to specify the task, restrict the output format (for reliability), and supply additional context or options when needed. We did not perform exhaustive prompt tuning; rather, we aimed for reasonably straightforward prompts, under the assumption that an effective LLM should handle such direct instructions (this reflects a typical end-user approach).

4.2. Datasets

We evaluated the model on the EMOTyDA (Emotion aware Dialogue Act) dataset (Saha et al. [34]), which was constructed by combining and reannotating two public dialogue datasets: IEMOCAP (Interactive Emotional Dyadic Motion Capture Database) and MELD (Multimodal Emotion Lines Dataset). Both are multimodal dialogue corpora with emotion labels; for our purposes, we used only the text transcripts and associated labels.

IEMOCAP [51] contains scripted and improvised two-person conversations performed by actors. It has 151 dialogues and over 10,000 utterances (turns), each annotated by multiple annotators for emotion, in the following emotion categories: happy, sad, fear, disgust, neutral, angry, excited, frustrated, and surprise.

MELD [52] is derived from TV show transcripts (dialogues from the Friends series). It includes over 1400 dialogues and 13,000 utterances, labeled with seven emotion categories: joy, sadness, neutral, anger, fear, disgust, and surprise. So that the classifications made by DS could be compared directly with the annotations, we used the same emotion categories as the labels provided.

From these, EMOTyDA was formed by taking the text of each conversation along with its emotion labels (Saha et al. [34]). Additionally, for a subset of the data, each utterance was annotated with one of 12 dialog act (intent) categories: greeting, question, answer, statement-opinion, statement-non-opinion, apology, command, agreement, disagreement, acknowledge, backchannel, and other. The SWBD-DAMSL tag-set, which consists of 42 dialogue acts (DAs) developed by [53], has been widely used for dialogue act classification, and Saha et al. [34] employed it as the base for the EMOTyDA tag-set, since both datasets contain task-independent conversations. Of those 42 tags, the 12 most common were used to annotate utterances in EMOTyDA, because EMOTyDA is smaller than the SWBD corpus and many of the SWBD-DAMSL tags never appear in it. These are the same categories used in the present study.

These dialogue act annotations were available or adapted from the original datasets, and we used those for consistency.

This unified dataset enabled us to test the model for emotion and intent classification using both corpora.

For evaluation, we considered each utterance in isolation or with its conversation context, depending on the experiment (see Section 4.4). The model’s predicted label was compared with the ground truth label to assess accuracy (see Section 4.3).

4.3. Metrics and Algorithms

We evaluated DeepSeek’s performance using several metrics. To obtain the values of the variables used in the formulas, we implemented the following algorithms: Algorithm 1 computes each confusion matrix, and Algorithms 2, 3, 4, and 5 obtain the True Positives, True Negatives, False Positives, and False Negatives, respectively [54].

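Algorithm 1 Confusion Matrix

Input: List of Classes (listClass), Original Labels (y), Prediction of the model (y’)
Output: confusionMatrix (Confusion Matrix)

1: n ← length(listClass)
2: confusionMatrix ← n × n matrix of zeros (rows: original labels, columns: predictions)
3: for i ← 1 to length(y) do
4: r ← index of y[i] in listClass
5: c ← index of y’[i] in listClass
6: confusionMatrix[r][c] ← confusionMatrix[r][c] + 1
7: end for
8: return confusionMatrix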

Algorithm 2 True Positives (TP)

Input: confusionMatrix, listClass, class
Output: truePositives

1: k ← index of class in listClass
2: truePositives ← confusionMatrix[k][k]
3: return truePositives

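Algorithm 3 True Negatives (TN)

Input: confusionMatrix, listClass, class
Output: trueNegatives

1: k ← index of class in listClass
2: trueNegatives ← 0
3: for each pair of indices (i, j) over listClass do
4: if i ≠ k and j ≠ k then
5: trueNegatives ← trueNegatives + confusionMatrix[i][j]
6: else
7: do nothing
8: end if
9: end for
10: return trueNegatives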

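Algorithm 4 False Positives (FP)

Input: confusionMatrix, listClass, class
Output: falsePositives

1: k ← index of class in listClass
2: falsePositives ← 0
3: for each index i over listClass do
4: if i ≠ k then
5: falsePositives ← falsePositives + confusionMatrix[i][k]
6: else
7: do nothing
8: end if
9: end for
10: return falsePositives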

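Algorithm 5 False Negatives (FN)

Input: confusionMatrix, listClass, class
Output: falseNegatives

1: k ← index of class in listClass
2: falseNegatives ← 0
3: for each index j over listClass do
4: if j ≠ k then
5: falseNegatives ← falseNegatives + confusionMatrix[k][j]
6: else
7: do nothing
8: end if
9: end for
10: return falseNegatives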
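For readers who prefer executable code, the five algorithms can be sketched in Python as shown below. This is an illustrative version under stated assumptions and not necessarily the authors’ implementation: rows of the matrix are taken to index the original labels and columns the predictions, predictions are assumed to have already been normalized to labels in the class list, and all function names are hypothetical.

```python
from typing import List, Sequence


def confusion_matrix(classes: Sequence[str], y_true: Sequence[str],
                     y_pred: Sequence[str]) -> List[List[int]]:
    """Count (original label, predicted label) pairs; rows = original, columns = predicted."""
    index = {label: i for i, label in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for truth, pred in zip(y_true, y_pred):
        matrix[index[truth]][index[pred]] += 1
    return matrix


def true_positives(matrix: List[List[int]], classes: Sequence[str], cls: str) -> int:
    k = list(classes).index(cls)
    return matrix[k][k]


def false_positives(matrix: List[List[int]], classes: Sequence[str], cls: str) -> int:
    """Utterances predicted as `cls` whose original label is another class."""
    k = list(classes).index(cls)
    return sum(matrix[i][k] for i in range(len(classes)) if i != k)


def false_negatives(matrix: List[List[int]], classes: Sequence[str], cls: str) -> int:
    """Utterances originally labeled `cls` that were predicted as another class."""
    k = list(classes).index(cls)
    return sum(matrix[k][j] for j in range(len(classes)) if j != k)


def true_negatives(matrix: List[List[int]], classes: Sequence[str], cls: str) -> int:
    """Utterances for which neither the original label nor the prediction is `cls`."""
    k = list(classes).index(cls)
    n = len(classes)
    return sum(matrix[i][j] for i in range(n) for j in range(n) if i != k and j != k)


if __name__ == "__main__":
    classes = ["anger", "neutral", "sad"]
    gold = ["anger", "anger", "neutral", "sad", "sad"]
    pred = ["anger", "neutral", "neutral", "sad", "anger"]
    m = confusion_matrix(classes, gold, pred)
    print(m)  # [[1, 1, 0], [0, 1, 0], [1, 0, 1]]
    print(true_positives(m, classes, "anger"), false_positives(m, classes, "anger"),
          false_negatives(m, classes, "anger"), true_negatives(m, classes, "anger"))
```

For any class, the four counts sum to the number of evaluated utterances, which gives a quick sanity check on the matrix.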

Next, we used specific formulas to obtain every metric:

Accuracy. Accuracy refers to the percentage of correct predictions [54].

Precision. Precision is the fraction of values that truly belong to a positive class out of all the values that are predicted to belong to that class [54].

Recall. Recall is the fraction of correct predictions out of all the values that truly belong to the positive class [54].

F1 score. The F1 score is the harmonic mean of precision and recall, with a value of 1 indicating perfect performance and 0 indicating no performance [54].

Macro-F1. Macro-F1 averages the F1 score across all classes equally [55].

Weighted F1 score. Weighted (W) F1 accounts for class imbalance by giving more weight to frequent classes [55].
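For reference, these metrics are commonly written in terms of the per-class counts of true positives, false positives, and false negatives. The forms below are the standard textbook definitions, given here as an assumption about the formulas used; the symbols $K$ (number of classes), $N$ (number of evaluated utterances), and $n_k$ (number of utterances whose original label is class $k$) are notation introduced for this sketch.

```latex
\begin{align}
\text{Accuracy} &= \frac{1}{N}\sum_{k=1}^{K} TP_k \\
\text{Precision}_k &= \frac{TP_k}{TP_k + FP_k} \\
\text{Recall}_k &= \frac{TP_k}{TP_k + FN_k} \\
F1_k &= \frac{2\,\text{Precision}_k\,\text{Recall}_k}{\text{Precision}_k + \text{Recall}_k} \\
\text{Macro-F1} &= \frac{1}{K}\sum_{k=1}^{K} F1_k \\
\text{Weighted-F1} &= \sum_{k=1}^{K} \frac{n_k}{N}\,F1_k, \qquad n_k = TP_k + FN_k
\end{align}
```

Because Macro-F1 weights every class equally while Weighted-F1 weights classes by their frequency, a large gap between the two usually signals that rare classes are classified much worse than frequent ones.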

4.4. Experimental Settings

We implemented a Python script to interface with DeepSeek models (DeepSeek-R1-0528 and DeepSeek-V3-0324, accessed in June 2025) via their API (simulating user queries to the model). All evaluations were done in a zero-shot manner: no few-shot examples or in-context demonstrations were used, and no model fine-tuning was performed, only prompt-based queries. We used the EMOTyDA dataset, comprising 9420 instances from the IEMOCAP dataset (151 conversations) and 9988 instances from the MELD dataset (943 conversations). All prompts were submitted to DS with its standard temperature set to 1 and the default max output of 32k tokens. Each input was queried once. For future replication, full prompt logs are available: https://github.com/Emmanuel-Castro-M/EmotionAndIntentionRecognition (accessed on 24 September 2025).

We conducted three main experiments for emotion recognition and three for intention recognition, for each corpus (IEMOCAP and MELD):

Utterance-level classification (no conversational context): The model was given each utterance independently, with a prompt asking for the classification (emotion or intention, independently). This setting mimics classifying each sentence in isolation, without conversational context.

Classification with conversational context: Utterances were presented to the model within their dialogue, and the model was asked to output an emotion or intention for each utterance. This tests whether providing context (preceding utterances) improves per-utterance classification.

Classification with conversational context and the known counterpart classification (dialog acts or emotions): For emotion classification, we provided the model with each utterance’s dialog act label (from the human annotation) in addition to the conversation context; for dialog act classification, we provided each utterance’s emotion label instead. Then we asked it for the classification. This condition assesses whether an additional hint about the counterpart dimension can enhance the prediction.
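The resulting experimental design can be enumerated programmatically, which is a convenient way to check that no combination is missed. The sketch below assumes that every condition was run for both models on both corpora and both tasks; the condition identifiers and the helper names `experiment_grid` and `counterpart` are hypothetical.

```python
from itertools import product
from typing import Dict, List

MODELS = ("DeepSeek-V3-0324", "DeepSeek-R1-0528")
CORPORA = ("IEMOCAP", "MELD")
TASKS = ("emotion", "intent")
# Hypothetical identifiers for the three settings described above.
CONDITIONS = ("utterance_only", "with_context", "with_context_and_counterpart_label")


def counterpart(task: str) -> str:
    """Label type supplied as a hint in the third condition."""
    return "intent" if task == "emotion" else "emotion"


def experiment_grid() -> List[Dict[str, str]]:
    """All (model, corpus, task, condition) combinations, in a fixed order."""
    return [
        {"model": m, "corpus": c, "task": t, "condition": k}
        for m, c, t, k in product(MODELS, CORPORA, TASKS, CONDITIONS)
    ]


if __name__ == "__main__":
    grid = experiment_grid()
    print(len(grid))  # 24: 2 models x 2 corpora x 2 tasks x 3 conditions
    print(grid[0])
```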

We calculated overall accuracy as the primary metric since the class distributions were moderately balanced. (In cases of class imbalance, we planned to consider F1-scores per class, but for simplicity and given our focus on overall trends, we mainly report accuracy.)

We used the gold labels provided by human annotators as ground truth. The model predictions were compared with these labels using accuracy and F1 scores. No additional human annotation was performed.

7. Discussion

Our experiments highlight an asymmetric relationship between emotion and intent detection in large language models. DeepSeek demonstrated moderate zero-shot performance, but its accuracy increased substantially when conversational context was available, and further improved when dialog act labels were supplied. This confirms that contextual and intentional cues are strong predictors of emotional tone. In contrast, providing emotion labels did not enhance intent recognition and sometimes misled the model, suggesting that emotions are not equally reliable indicators of communicative function.

This asymmetry is theoretically significant: while dialog acts often imply emotional tendencies (e.g., apologies correlate with sadness, disagreements with anger), emotions alone do not comparably constrain intent categories. Such findings suggest a structural imbalance in the interaction between affect and pragmatics, a phenomenon that is not widely reported in the current literature. They also highlight a limitation of zero-shot LLMs: although they capture affective nuance, they are less consistent in mapping emotions to communicative goals without explicit training.

Beyond accuracy scores, this study emphasizes the importance of designing evaluation frameworks that test both dimensions of dialogue simultaneously. By openly releasing our prompts and methodology, we provide a reproducible benchmark for exploring the affect–intent interplay in other models.

Emotion Detection: DeepSeek demonstrated a reasonable zero-shot ability to classify emotions from text, especially when provided with conversation context. The improvement in DS-v3 from 44% to 57% accuracy due to context, and then to 62% with dialog act cues, and in DS-r1 from 54% to 62%, then to 63%, underscores the importance of contextual understanding. This suggests that LLMs like DeepSeek can effectively leverage additional information. When the conversation’s flow or the nature of the utterance is known, the model can disambiguate emotions that might be neutral or ambiguous out of context. For instance, the model often failed to detect surprise or sarcasm without context, but with preceding lines, it could infer those emotions from an unexpected turn in the dialogue.
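The step-by-step gains quoted in this paragraph can be made explicit with a few lines of arithmetic. The accuracies below are the rounded percentages reported above; the dictionary keys and the function name `stepwise_gains` are labels chosen for this sketch.

```python
from typing import Dict, List

# Rounded emotion-recognition accuracies (in %) as quoted in the paragraph above.
REPORTED_ACCURACY: Dict[str, Dict[str, int]] = {
    "DS-v3": {"utterance_only": 44, "with_context": 57, "with_context_and_dialog_act": 62},
    "DS-r1": {"utterance_only": 54, "with_context": 62, "with_context_and_dialog_act": 63},
}


def stepwise_gains(accuracy_by_condition: Dict[str, int]) -> List[int]:
    """Percentage-point gain of each condition over the previous one."""
    values = list(accuracy_by_condition.values())
    return [later - earlier for earlier, later in zip(values, values[1:])]


if __name__ == "__main__":
    for model, accuracy in REPORTED_ACCURACY.items():
        print(model, stepwise_gains(accuracy))
    # DS-v3 [13, 5]
    # DS-r1 [8, 1]
```

In both models, conversational context accounts for the larger share of the improvement (13 and 8 percentage points), while the dialog act cue adds a further 5 points for DS-v3 and 1 point for DS-r1.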

The positive impact of including dialog act labels on emotion classification (a five percentage point gain on MELD, from 57% to 62%) provides empirical support for our hypothesis in one direction: knowing what someone is doing (asking a question, apologizing, etc.) helps the model figure out how they feel. Why might this be the case? Specific dialog acts carry implicit emotional connotations: an apology often correlates with regret or sadness; a disagreement may correlate with anger or frustration; a question might be asked in a curious (neutral/happy) tone or a challenging (angry) tone depending on context. By giving the model the dialog act, we essentially narrowed down the plausible emotions. The model could then map, say, a Disagreement act to a likely negative emotion such as anger or disgust, rather than considering all emotion categories. These findings may aid the development of empathetic agents for mental health support, education, or customer interaction in low-resource settings, potentially benefiting the community.

Intent Detection: In contrast, providing emotion information did not aid intent classification. One interpretation is that emotion is a less reliable predictor of dialog act in our data. A user could be angry when asking a question or happy when making a statement—emotion does not deterministically indicate the functional intent of an utterance. The slight performance drop suggests that the model might have over-relied on emotion cues when they were present, leading to misclassifications (e.g., it might assume an angry utterance is a disagreement when in fact it was an angry question). This points to a limitation: the model does not inherently know when to separate style (emotion) from speech act (intent), and additional information can sometimes confuse style with content.

Another observation is that DeepSeek’s overall intent classification accuracy (61%) is modest. This is perhaps not surprising—dialog act classification can be quite nuanced, and our label set was large. Additionally, the model was not fine-tuned on any dialog act data; it relied solely on its pre-training. Its performance is roughly comparable to random guessing among a handful of dominant classes (since many utterances are statements or questions, which the model did get right). This indicates that while LLMs have implicit knowledge of language patterns, translating that into explicit dialog act labels may require either few-shot examples or fine-tuning. Indeed, prior work on ChatGPT (a similar LLM) has noted it can follow conversation flows but might not explicitly categorize them without guidance.

Logical Consistency and Errors: There were a few logical inconsistencies in model outputs. For example, in one scenario, the model labeled consecutive utterances from the same speaker as conflicting emotions (likely because it treated each utterance in isolation, failing to enforce the temporal consistency of that speaker’s emotional state). This highlights that the LLM does not maintain a persistent “persona state” of emotion unless explicitly modeled. In future work, incorporating a constraint that a speaker’s emotion should not wildly oscillate within a short exchange might improve realistic output.
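One simple way to surface such inconsistencies is to flag turns where a speaker’s predicted emotion differs from that speaker’s previous turn. The sketch below is a hypothetical post-processing heuristic, not something evaluated in this study: the function name `flag_emotion_flips` and the choice to ignore transitions to or from neutral are assumptions, and flagged turns are meant for review or re-querying rather than automatic correction.

```python
from typing import Dict, List, Sequence


def flag_emotion_flips(speakers: Sequence[str], emotions: Sequence[str],
                       neutral: str = "neutral") -> List[int]:
    """Return 0-based indices of turns whose predicted emotion differs from the
    same speaker's previous turn, ignoring changes to or from `neutral`."""
    if len(speakers) != len(emotions):
        raise ValueError("speakers and emotions must be equally long")
    last_emotion: Dict[str, str] = {}
    flagged: List[int] = []
    for i, (speaker, emotion) in enumerate(zip(speakers, emotions)):
        previous = last_emotion.get(speaker)
        if previous is not None and emotion != previous and neutral not in (emotion, previous):
            flagged.append(i)
        last_emotion[speaker] = emotion
    return flagged


if __name__ == "__main__":
    speakers = ["Monica", "Joey", "Monica", "Monica"]
    predicted = ["disgust", "neutral", "anger", "anger"]
    print(flag_emotion_flips(speakers, predicted))  # [2]
```

Genuine emotional shifts do occur within short exchanges, so a flag of this kind only marks candidates for inspection; it does not establish that the prediction is wrong.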

We also noticed that ambiguous expressions like “Fine.” could be either neutral or negative; the model’s guess would sometimes flip depending on prompt phrasing. This suggests some instability typical of prompt-based LLM responses. More advanced prompt techniques or calibration might be needed for critical applications.

Implications for Affective Dialogue Systems: Our findings indicate that LLMs are promising as all-in-one classifiers for affect and intent, but their raw zero-shot capability might not yet match specialized models. For building empathetic chatbots, one could imagine using an LLM like DeepSeek to detect user emotion in real time and craft responses. The advantage is that the LLM doesn’t require separate training for the detection task. However, as we saw, it hits around 60% accuracy, whereas task-specific models can exceed 80% on some emotion benchmarks. There is, therefore, a trade-off between convenience (using one model for everything) and accuracy.

For intent detection in conversational AI, relying on an LLM’s internal knowledge might be risky if high precision is needed (for example, correctly interpreting a user’s request vs. a question can be critical). Fine-tuning an LLM on annotated intents or providing few-shot exemplars in the prompt could likely boost performance.

Error Analysis: The hypothesis that “emotions help detect intentions and vice versa” was only half-supported by our results. Emotions (especially when combined with context) did help detect intentions in some manually inspected cases; for instance, when the model knew the user was laughing (happy), it correctly recognized a statement as a joke (which is a kind of intentional act). But these were specific cases. Overall, the automated evaluation did not show an aggregate improvement.

This asymmetry might stem from the mapping from intent to likely emotion being more direct than from emotion to intent. Intent categories are numerous and orthogonal to emotion in many cases, whereas emotions typically fall into a smaller set and can align with broad intent tones (e.g., anger often accompanies disagreement, sadness often accompanies apologetic statements, etc.). Our LLM could perhaps leverage intent cues to narrow down emotion options, but when given emotion, it still had to choose among many possible intents.

Some of the most common errors occurred when the conversation context was not provided to the LLM: in both datasets, it confused closely related emotions, such as anger with frustration, excited with happy, or disgust with anger. For example, consider the following sentence from the IEMOCAP dataset: “I’ve been to the back of the line five times.”, annotated as anger but predicted by the model as frustration. Once the context was provided, the model made the correct prediction. The previous sentence, “There’s nothing I can do for you. Do you understand that? Nothing.”, is also annotated as anger, which makes it clear that the next sentence expresses anger too: it is a reaction directed at the speaker of the previous sentence, who is perceived as a threat, whereas frustration tends to be more generalized or self-directed.

In another example, the sentence “Yes. There’s a big envelope, it says, you’re in. I know.” is annotated as excited but was predicted by the model in the baseline condition as happy. Once the context was provided, the model made the correct prediction: since the previous sentence was “Did you get the letter?”, the model could recognize that the emotional state stems from an intense, temporary emotion linked to anticipation or enthusiasm for a specific event, so excited is the correct answer.

Finally, consider the sentence “Oh my god, what were you thinking?” from the MELD dataset, annotated as disgust and initially predicted by the model as anger. Without the conversation context, the model struggled to make an accurate prediction; with the context provided, it changed its classification to disgust, which was correct. The reason lies in the two preceding sentences. The first, “Monica—Joey, this is sick, it’s disgusting, it’s, it’s—not really true, is it?”, can clearly be classified as disgust, and the second was “Joey—Well, who’s to say what’s true? I mean…”. The target sentence is Monica’s reply to Joey, and from the flow of the conversation there is no reason to think that Monica’s emotional state changed from disgust to anger. This is how context helps the model make better predictions.

Limitations: While our study sheds light on several aspects of emotion and intention recognition using DeepSeek, certain limitations should be acknowledged. The MELD and IEMOCAP datasets are not fully consistent in their labeling criteria: although they share similar labels, the criteria applied by their human annotators differ, so comparable utterances may have received different emotion labels. This could have generated discrepancies in the results. Also, results for emotion and intent detection obtained on MELD-style data (TV show dialogues) might differ in more formal conversations or other contexts.

Another limitation of our study is that DeepSeek is a single model, and its behavior may not generalize to all LLMs. Newer or larger models might have different capabilities. By conducting new studies with other LLMs, we could find a reasonable explanation for why, in some cases, providing additional information (the emotion classification label for each sentence) to the model does not improve DA prediction performance.

Also, our prompt designs, while reasonable, could potentially be optimized. It is possible that different wording (or the use of few-shot examples) could significantly improve performance on either task. We did not exhaustively tune the prompts due to scope constraints.

Finally, we also note that the Gemini 2.5 Flash and additional model comparisons we initially intended (as hinted in the manuscript) were not completed; thus, our study focuses only on DeepSeek. Future work should compare multiple models (e.g., ChatGPT and others) on the same tasks to see whether they behave similarly or whether some handle the affect-intent interplay better.

8. Conclusions and Future Work

In this paper, we present a study on using large language models (DeepSeek-v3 and DeepSeek-r1) for emotion and intent detection in dialogues. Our evaluation, conducted without any task-specific fine-tuning, yielded several insights:

DeepSeek can recognize basic emotions from text with moderate accuracy in a zero-shot setting. Its performance improves substantially when given conversational context, highlighting the model’s strength in understanding dialogue flow.

Providing the model with information about the conversational intent (dialog act) of an utterance further enhanced emotion recognition, suggesting a synergistic effect where knowing “what” the utterance is doing helps determine “how it is said” emotionally.

For intent recognition, the model’s zero-shot performance was weaker (around chance level across a broad set of dialog act classes). Unlike the emotion case, providing the model with the speaker’s emotion did not assist and occasionally confused the intent classification.

The relationship between emotions and intentions is asymmetric in the context of this LLM: context and intent cues aid emotion detection, but emotion cues do not significantly aid intent detection.

The DeepSeek model, while powerful, sometimes struggled with non-literal language (e.g., sarcasm) and maintaining consistency, indicating areas for further refinement if used in practical systems.

In terms of academic contribution, our work demonstrates the feasibility of leveraging a single LLM for multiple dialogue understanding tasks simultaneously. This opens the door to developing more unified conversational AI systems. Rather than having separate pipelines for intent detection and sentiment analysis, a single model could potentially handle both, simplifying the architecture.

Future Work: There are several directions for future exploration:

1. Few-shot Prompting: We will experiment with providing a few examples of labeled emotions and intents in the prompt (in-context learning) to see if DeepSeek’s performance improves. Preliminary research on models like GPT-3/4 suggests that few-shot examples can dramatically boost accuracy.
2. Model Fine-tuning: Fine-tuning DeepSeek on a small portion of our dataset for each task might yield significant gains. It would be interesting to quantify how much fine-tuning data is required to reach parity with dedicated models.
3. Multi-task Learning: An extension of fine-tuning is to train a model on both emotion and intent labels jointly (multi-task learning). This could encourage the model to learn representations that capture both aspects internally. We hypothesize that multi-task training might enforce the kind of beneficial relationship between emotion and intent that we partially observed.
4. Applying to Real-world Conversations: We intend to test the model on more spontaneous, real user conversations (such as dialogues from customer support chats or social media threads). These often use noisier language, which would test the model’s robustness.
5. Incorporating External Knowledge: Emotions and intents might be better inferred if a model had access to external knowledge about typical scenarios. For instance, recognizing that “I’m fine!” with a particular punctuation is likely anger or sarcasm might be improved by knowledge distillation or rules. Hybrid systems combining LLMs with rule-based disambiguation for specific, tricky cases could be fruitful.
6. Improving Intent Granularity: Our results showed difficulty in fine-grained intent categories. Collapsing intents into broader classes (e.g., question, statement, command) might yield higher reliability. Future work could focus on whether LLMs are better suited to broad categorization and on refining them for detailed subclasses.
7. User State Tracking: One promising area is to have the LLM maintain a running estimate of a user’s emotional state throughout a conversation (rather than independent per-utterance classification). This could potentially smooth out moment-to-moment classification noise and provide a more stable assessment. Techniques from state tracking in dialogue could be applied here.
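As one possible reading of the user state tracking idea, the sketch below keeps a running emotional state by exponentially smoothing per-utterance scores. It assumes that each utterance comes with a score per emotion label (for example, derived from model confidences or repeated sampling); such scores, the function name, and the smoothing factor `alpha` are hypothetical and were not part of our experiments.

```python
def track_emotional_state(per_turn_scores, alpha=0.5):
    """Return the smoothed dominant emotion after each turn.

    `per_turn_scores` is a list of dicts mapping emotion label -> score.
    `alpha` weights the current turn; (1 - alpha) weights the running state.
    """
    state = {}
    dominant = []
    for scores in per_turn_scores:
        if not state:
            # The first turn initializes the running state directly.
            state = dict(scores)
        else:
            labels = sorted(set(state) | set(scores))
            state = {
                label: alpha * scores.get(label, 0.0)
                + (1 - alpha) * state.get(label, 0.0)
                for label in labels
            }
        dominant.append(max(state, key=state.get))
    return dominant


# Hypothetical scores: the second turn alone would be labeled "anger".
turns = [
    {"disgust": 0.8, "anger": 0.2},
    {"disgust": 0.4, "anger": 0.6},
    {"disgust": 0.7, "anger": 0.3},
]
print(track_emotional_state(turns))  # ['disgust', 'disgust', 'disgust']
```

In this toy input, an independent per-utterance classifier would flip to anger on the second turn, whereas the smoothed state stays on disgust. A lower `alpha` makes the estimate more stable but slower to follow genuine emotional shifts.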

In conclusion, this work examined the capacity of DeepSeek to recognize emotions and intentions in dialogues under zero-shot conditions. The results show that the model can achieve reasonable emotion recognition when provided with context and dialog act cues. In contrast, intent recognition remains weaker and does not consistently benefit from emotional information.

The central contribution is the identification of an asymmetric relationship: intent knowledge helps disambiguate emotions, but emotion knowledge does not aid intent classification to the same extent. This finding advances our understanding of the limits of current LLMs and opens new perspectives for building empathetic and context-aware conversational systems.

Future research should test whether few-shot prompting, fine-tuning, or multi-task learning can enforce a more balanced integration of affect and pragmatics. Applying these methods to real-world conversational data, beyond scripted corpora, will also be crucial for validating the robustness of the proposed approach.

We acknowledge that although emotion classification accuracy on MELD improved from 57% to 62%, neither this difference nor the other reported comparisons were assessed with a statistical significance test (e.g., McNemar’s test or a bootstrap test). Future work should determine whether these differences are statistically significant.
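For reference, a significance check of this kind could be run on paired predictions as sketched below. This is a minimal, standard-library implementation of the two-sided exact McNemar test, which compares two systems evaluated on the same utterances using only the cases where exactly one of them is correct. The function name and the toy labels are illustrative assumptions; no such test was run in this study.

```python
from math import comb


def mcnemar_exact(gold, pred_a, pred_b):
    """Two-sided exact McNemar test for two systems on the same items.

    Returns (only_a, only_b, p_value), where only_a counts items that only
    system A classified correctly and only_b those that only B got right.
    """
    only_a = sum(1 for g, a, b in zip(gold, pred_a, pred_b) if a == g and b != g)
    only_b = sum(1 for g, a, b in zip(gold, pred_a, pred_b) if a != g and b == g)
    n = only_a + only_b
    if n == 0:
        return only_a, only_b, 1.0  # the systems never disagree on correctness
    k = min(only_a, only_b)
    # Under the null hypothesis, discordant items split as Binomial(n, 0.5).
    p_value = 2 * sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return only_a, only_b, min(1.0, p_value)


# Toy data (hypothetical): ten utterances, all annotated "disgust".
gold = ["disgust"] * 10
no_context = ["disgust"] * 3 + ["anger"] * 7
with_context = ["disgust"] * 2 + ["anger"] + ["disgust"] * 6 + ["anger"]

print(mcnemar_exact(gold, no_context, with_context))  # (1, 6, 0.125)
```

In the toy example, the context-aware predictions are correct on six utterances that the context-free predictions miss and wrong on one that they get right, yet the p-value of 0.125 shows that such a small sample does not support a significance claim. With the full test set, the same procedure would indicate whether the observed accuracy gain is larger than expected by chance.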

We also recognize that using different experimental approaches is crucial for thoroughly validating our work. Future studies will include comparisons with transformer-based and classifier architectures to better demonstrate the strength and generalizability of our results.