Abstract

E-learning systems that support personalized learning require sophisticated decision-making methods to adapt content to students optimally. This paper applies multi-criteria decision-making methods to the assignment of learning objects to students in an e-learning system based on relevant customization criteria. The novelty of this study lies in the combined application of ANP and DEMATEL to improve content adaptation for students. Structuring the decision-making problem with DEMATEL and using ANP for prioritization improved the selection of learning objects with respect to cognitive and learning styles and Bloom’s taxonomy levels. The method consists of several steps. First, DEMATEL was used to identify dependencies between criteria and clusters, with influence values expressed on a 0–4 scale. A linear transformation model then quantified the compatibility of a student profile with a learning material. The transformed DEMATEL results were used to populate the interdependencies among criteria. The unweighted supermatrix was weighted by cluster weights assigned by experts, and the resulting weighted supermatrix was raised to successive powers until it converged. The outcome was a final priority ranking of learning materials for each individual student. A pilot experiment was carried out to validate the system, and the results showed a statistically significant improvement for the experimental group, which used the personalized learning environment, over the control group. The research was conducted in Croatia, and the participants were students (N = 77) from two public universities and one polytechnic. Overall, the combined ANP-DEMATEL approach proved effective for generating dynamic, result-optimized learning paths in real time, supporting knowledge acquisition.
This research further contributes to developing intelligent educational systems by demonstrating how ANP and DEMATEL can be used synergistically to improve e-learning personalization. Future work could include optimizing weight assignment strategies or using new learning contexts to further adaptivity.

MSC:

90B50; 68T05; 68U35; 97U50

1. Introduction

E-learning systems provide students with access to educational resources in digital form without spatial or temporal restrictions [1]. With the emergence of such systems, which require certain technological foundations and are expected to adapt to user needs, the paradigm of creating learning content has shifted. Learning Objects (LOs) have become the primary carriers of instructional material. Today, the requirements for LOs include reusability, applicability across different purposes, independence from specific technologies, and adaptability to integrate seamlessly into various modules. For example, the Moodle platform (https://moodle.org (accessed on 1 October 2025)) allows the display of individual lessons to be defined by predefined conditions; most commonly, it directs the learner to specific content based on responses to assessment questions, leveraging learning analytics.

The selection of the most suitable LO, considering the characteristics of both students and LOs, is a multi-criteria decision-making (MCDM) problem. The criteria in this MCDM problem correspond directly to the characteristics of students and LOs. For example, students can have different learning styles: some are more visual, some are more verbal, etc. LOs can be described using the same parameters, i.e., they can be evaluated based on learning style as well as other characteristics. Consequently, selecting the most suitable LO for a specific student means identifying the LO whose evaluation across these characteristics is most similar to the student’s profile.

It is necessary to integrate more advanced decision-making methods and consider a greater number of criteria for personalization to make adaptive e-learning systems more effective [2]. As the complexity of the system increases, so does the dynamics of its functionality, especially in the way it provides educational content to users [3]. This dynamism is realized through different adaptation criteria and decision-making methods governing their application, which constitute the subject of this study [4]. The focus is on individually personalized instruction, which implies a learner-centred approach that adapts to a single user and their characteristics [5]. In such systems, decisions must be made based on multiple parameters (criteria), which justifies the use of multi-criteria methods [6].

At first glance, MCDM methods based on calculating distances between LOs and students (e.g., TOPSIS) seem the most suitable for this analysis. However, because dependencies exist among the criteria and feedback exists between LOs and criteria, a network-based approach was needed. Our proposed solution is the DEMATEL-ANP (DEcision MAking Trial and Evaluation Laboratory–Analytic Network Process) approach. The basis of this integration is ANP, which enables modelling of both criteria and alternatives, as our problem requires. DEMATEL allows modelling only one type of element at a time, but we used it to reduce the complexity of ANP, which is considered one of the most complex MCDM methods. By combining ANP with DEMATEL, we avoided a large number of pairwise comparisons and the associated consistency problems, and enabled automation of the selection process. How the two methods are integrated is described later in the paper. Finally, we combined our model with statistical analysis, which was used for system validation to confirm whether the practical results are statistically significantly better when the system is used than when it is not.

The central hypothesis of this study is: “Users of a system for dynamically generating learning objects, where the selection of adaptation criteria is guided by the DEMATEL-ANP multi-criteria decision-making method, will achieve higher knowledge acquisition compared to users of a system that does not employ this method for assigning learning objects.”

The paper is organized as follows. Section 2 presents the state of the art on adaptivity in e-learning systems and on the application of the network-based methods DEMATEL and ANP in e-learning. Section 3 describes the methodology, which is based on the design science research process. Section 4 presents the results, covering the system description and its evaluation in a real environment. Section 5 and Section 6 present the discussion and the conclusions of the paper.

2. State of the Art

2.1. Adaptivity in E-Learning Systems

Research on adaptivity in e-learning has evolved from early adaptive hypermedia to contemporary data-driven and AI-enhanced personalization. A foundational strand comes from adaptive hypermedia, which models user goals, preferences, and knowledge to tailor navigation and content. Seminal surveys synthesized core techniques such as adaptive navigation support and curriculum sequencing, establishing design principles that still underpin modern adaptive platforms [7,8,9].

With the development of intelligent learning systems, personalisation has been achieved by modelling the user and their needs. In modelling the user, or rather the data describing their profile, approaches such as overlay models, Bayesian and rule-based modelling, and constraint-based methods are often used. User modelling has been considered in relation to pedagogical goals and metacognitive support [10,11].

The research results support the positive effects of adaptive, intelligent learning systems compared to traditional teaching across various sample sizes, from medium to large, in line with the research design [12,13,14,15]. Meta-analyses focused on K–12 reading and mathematics show similar benefits, though they highlight heterogeneity driven by learner characteristics, task complexity, and type of adaptivity. For example, Xu et al. found that using an intelligent learning system significantly affected users’ reading comprehension [16].

Beyond meta-analyses, controlled experiments demonstrate that micro-level adaptive mechanisms, such as adaptive instruction granularity or elaborated feedback, produce measurable gains [17,18]. Many authors analyse the content and break it down into smaller components, with particular emphasis on content-specific aspects of adaptability in teaching practice, keeping the student at the centre along with the learning goals [19,20]. Randomized and quasi-experimental studies consistently report superior performance for adaptive conditions. Recent university-level deployments of commercial or institution-built adaptive tools also show improvements in course scores and progression, though instructional alignment and feedback design moderate the observed effects [21]. Learning analytics enables the collection and analysis of data about students and their environment, optimising learning and allowing real-time adaptation of learning systems. Formative assessment, conducted throughout the learning process, is key to tailoring teaching activities to individual students and is a dynamic element of the competency framework that requires more attention [22,23].

At the same time, research has examined who benefits most from adaptive materials. Studies in higher education show that effectiveness can depend on student characteristics such as prior knowledge and self-regulation, which has implications for learner modeling and the granularity of adaptation rules [24].

Personalised adaptive learning in higher education is a pedagogical approach that uses technology to tailor educational content to individual student needs. This method enables a more effective and engaging learning experience, particularly in online and hybrid environments. Research indicates overall improvement in academic performance and increased student engagement. Despite technological limitations, personalised adaptive learning shows significant potential for enhancing student success and engagement in higher education [25,26]. Analysis of adaptive learning systems primarily driven by learning style as the main criterion for adaptability highlights the importance of basing adaptability on pedagogical principles and learning performance, to avoid personalisation based on trivial criteria that lead to superficial results [27].

2.2. Review of DEMATEL and ANP Methods in E-Learning Systems

An analysis of previous research on the use of DEMATEL and ANP methods in e-learning systems has identified several approaches to integrating the DEMATEL-ANP methodology:

- using DEMATEL to analyze interrelationships among criteria, followed by ANP to compute weights [28,29];

- applying fuzzy DEMATEL to capture relationships under uncertainty, with Fuzzy ANP then determining the importance and priority of components [30];

- adopting hybrid models that combine DEMATEL and ANP with additional methods, such as fuzzy logic, to enable more comprehensive evaluations [31].

The combined use of these methods has mainly been directed toward evaluating learning materials, assessing the quality of higher education, and measuring the quality of e-learning services. In one study of teachers’ digital competence, a logical framework and evaluation system based on the DEMATEL-ANP method showed that digital learning ability has the greatest impact on other competences, while the application of digital tools and digital innovation are key components; these results contribute to the theoretical understanding of teachers’ digital competence and offer new approaches for its quantitative assessment [32]. Reported advantages include identifying interdependencies among criteria, managing system uncertainties more effectively, and improving learner satisfaction. However, a widely acknowledged challenge remains the potential bias in expert judgments during pairwise comparisons of criteria. Hybrid applications of these two methods can yield valuable results when the strengths of both are properly utilized [33].

The integration of ANP and DEMATEL has been the focus of significant scholarly attention, given the importance of thoroughly analyzing and structuring decision-making problems in multi-criteria decision-making contexts. Multi-criteria decision-making has proven to be a powerful and structured approach for solving complex problems in education, allowing for more flexible evaluation of alternative educational solutions [34]. For example, Gölcük and Baykasoğlu, through an extensive review of over 500 articles and several books, classified integration approaches into the following categories [35]:

- Network-Relation Based: DEMATEL is used to structure the decision problem, and ANP computes element priorities.

- Inner Dependence: DEMATEL models intra-cluster dependencies by determining priorities of diagonal blocks, while ANP addresses the remaining relationships.

- Cluster-Weighted: cluster weights are derived by normalizing the network obtained from DEMATEL, which are then applied to the unweighted supermatrix to produce the weighted supermatrix.

- DANP: a comprehensive integration of the above three approaches, eliminating pairwise comparisons by conducting all steps through DEMATEL.

Finally, more recent surveys point to a convergence between adaptive learning and learning analytics/AI. Adaptive feedback, personalized recommendations, and adaptive dashboards are increasingly informed by data-driven estimators of knowledge and engagement, expanding the design space for adaptive interventions in both individual and collaborative settings [36].

2.3. Research Gap

The present paper contributes by focusing on multi-criteria decision-making (MCDM) for adaptivity: modelling interdependencies among personalisation criteria and alternatives (learning objects) with DEMATEL, and computing priorities via ANP. As stated earlier, selecting the most suitable LO for a specific student involves considering criteria such as learning or cognitive style. The solution aims to find the best match between the student’s profile and the LO profile, while respecting the criteria. Since there are dependencies between the criteria, a network-based method is needed to analyse the decision-making problem. The best-known method that supports modelling both the influences among criteria and feedback in a network is the ANP. However, due to the high complexity of the ANP and the need for automation, we combined it with DEMATEL, thereby reducing the number of inputs required from decision makers and the potential for inconsistencies.

The designed method is integrated into a working web system and evaluated against a non-adaptive baseline, extending the adaptive e-learning literature with explicit causal modeling and network-based prioritization.

3. Methodology

3.1. Research Design

This study follows the principles of Design Science Research (DSR) as a methodological framework for developing and rigorously evaluating an innovative solution to a relevant problem in educational technology. The DSR paradigm emphasizes the creation and assessment of artifacts—such as models, methods, or systems—that address identified needs in practice and extend existing theoretical knowledge.

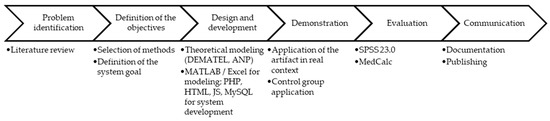

In line with canonical DSR guidelines [37,38] and reporting recommendations for presenting design science contributions [39], the research design comprised six sequential steps (Figure 1):

Figure 1. Design Science Research steps.

- Problem identification and motivation: recognizing the limitations of existing adaptive learning management systems (LMSs), particularly their reliance on heuristic or rule-based personalization that fails to account for interdependencies among adaptation criteria.

- Definition of objectives for a solution: establishing design goals to improve personalization through the integration of multi-criteria decision-making (MCDM) methods, specifically DEMATEL and ANP, to model and prioritize adaptation criteria.

- Design and development of the artifact: constructing the DEMATEL–ANP framework and embedding it into a functional web-based learning system capable of dynamically generating learning objects tailored to learner profiles.

- Demonstration: implementing and operating the system in a real higher-education environment to observe its functionality and immediate impact on learning processes.

- Evaluation: empirically testing the system through an experimental study comparing two groups of students (experimental and control) to assess the effect of adaptive versus non-adaptive content assignment on learning outcomes.

- Communication: documenting and disseminating the artifact, results, and methodological contributions to both academic and practitioner communities.

The research design thus integrates both constructive and empirical dimensions. The constructive part involves developing the DEMATEL–ANP model and its implementation within a working e-learning system. The empirical part involves evaluating its effectiveness through a controlled experiment designed to test the main hypothesis: Users of a system for dynamically generating learning objects, where the application of adaptation criteria is guided by the DEMATEL–ANP multi-criteria decision-making method, will achieve better knowledge acquisition compared to users of a system that does not employ this method.

The following subsection presents the detailed case study and describes how the methodological framework was applied in practice, including the structure of the model, criteria selection, and system implementation.

3.2. Case Study Description

Figure 2 presents the application of the DSR methodology to the case study of selecting a suitable LO given the student’s profile. The individual steps of the methodology, as applied to this case study, are then presented in detail.

Figure 2. Application of DSR to the case study.

3.2.1. Step 1: Problem Identification and Motivation

This step articulates the practical problem, justifies the value of a solution, and establishes the gap in existing approaches that the artifact will fill [37,40]. We target adaptive e-learning content selection: aligning learning objects (LOs) with individual learners’ learning styles, cognitive approaches, and targeted Bloom levels. Existing LMS personalization rules are often heuristic and do not explicitly handle interdependent criteria or feedback among criteria and alternatives, limiting optimality in dynamic content delivery.

3.2.2. Step 2: Define the Solution Objectives

In the second step, the problem is translated into design objectives and requirements, both functional (what the artifact must do) and quality-related (how well it must do it), anchored in relevant theory. Objectives should be measurable when possible [38].

In this study, objectives were to:

- Model interdependencies among adaptation criteria;

- Prioritize candidate LOs per learner profile;

- Integrate the model into a working web system for real-time LO assignment; and

- Improve learning outcomes vs. a baseline (random LO assignment) in a controlled pilot.

Theoretical anchors include ANP for prioritization under dependence and feedback [41] and DEMATEL for causal structure discovery and influence measurement [42]. Target competencies are expressed via the revised Bloom taxonomy [43].
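To make the role of DEMATEL as an influence-measurement method more concrete, the following minimal sketch computes the textbook DEMATEL total-relation matrix from a direct-influence matrix rated on a 0–4 scale. It is an illustration of the general method only: the 3×3 ratings, the function names, and the choice to normalize by the largest row or column sum are assumptions, and the source does not state that the described system computes this matrix in this form.

```python
def invert(matrix):
    """Invert a square matrix with Gauss-Jordan elimination (partial pivoting)."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [rv - f * cv for rv, cv in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def total_relation(direct):
    """Return T = N (I - N)^-1, where N is the normalized direct-influence matrix."""
    n = len(direct)
    row_sums = [sum(row) for row in direct]
    col_sums = [sum(direct[i][j] for i in range(n)) for j in range(n)]
    scale = max(row_sums + col_sums)  # assumed normalization constant
    if scale == 0:
        raise ValueError("direct-influence matrix contains no influences")
    norm = [[v / scale for v in row] for row in direct]
    i_minus_n = [[(1.0 if i == j else 0.0) - norm[i][j] for j in range(n)]
                 for i in range(n)]
    inv = invert(i_minus_n)
    return [[sum(norm[i][k] * inv[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def prominence_and_relation(total):
    """Return (r + c, r - c) per element: overall involvement and net cause/effect."""
    n = len(total)
    r = [sum(total[i]) for i in range(n)]
    c = [sum(total[i][j] for i in range(n)) for j in range(n)]
    return [r[i] + c[i] for i in range(n)], [r[i] - c[i] for i in range(n)]


# Hypothetical expert ratings (0 = no influence, 4 = very high influence).
direct = [
    [0, 3, 2],
    [1, 0, 4],
    [2, 1, 0],
]
total = total_relation(direct)
prominence, relation = prominence_and_relation(total)
print(prominence, relation)
```

Elements with a positive `relation` value act mainly as causes, and those with a negative value act mainly as effects; this reading is part of the general DEMATEL method rather than a result reported in this study.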

3.2.3. Step 3: Design and Development of the Artifact

In the third step, the artifact (constructs, models, methods, instantiations) that embodies the objectives is built, with design choices made explicit and grounded in prior theory [37,40]. In our study, the artifact has two levels:

- Method-level artifact (ANP–DEMATEL framework).

  - Structure discovery: DEMATEL elicits influences (0–4 scale) among criteria and clusters.

  - Profile–content compatibility: We devised linear mappings from absolute profile–LO differences to DEMATEL influence scores, one for the learning style and cognitive criteria and one for the Bloom level difference.

  - Prioritization under dependence: The unweighted supermatrix is formed from these influence relations, normalized within clusters, and then weighted by cluster weights from experts to obtain the weighted supermatrix; repeated powering yields limit priorities for LOs [41].

- System-level instantiation (web application).

  - Architecture and technology: PHP/HTML/JavaScript frontend with MySQL persistence.

  - Modules: (i) initial learner profiling; (ii) student module (pre-test, learning flow, progress); (iii) course domain with LO repository; (iv) decision rules; (v) content-generation engine implementing the ANP–DEMATEL method; (vi) evaluation module; (vii) user interface.

  - Criteria and clusters: learning style (visual, auditory, kinesthetic); cognitive approach (deep, surface); learning objective level (revised Bloom); and alternatives (candidate LOs). The DEMATEL network specifies intra/inter-cluster links that feed the ANP supermatrix.
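The prioritization step can be illustrated with a minimal sketch. The four-node network (two criteria and two LOs), all numeric values, and the function names below are hypothetical and chosen only for illustration; they are not taken from the system described here. The sketch assumes the column-stochastic convention, in which each column of the unweighted supermatrix sums to 1 within every cluster block.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def column_normalize(m):
    """Scale every non-zero column so that it sums to 1."""
    n = len(m)
    sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
    return [[m[i][j] / sums[j] if sums[j] else 0.0 for j in range(n)]
            for i in range(n)]


def weight_supermatrix(unweighted, cluster_of, cluster_weights):
    """Multiply each block by its cluster weight, then renormalize the columns."""
    n = len(unweighted)
    scaled = [[unweighted[i][j] * cluster_weights[cluster_of[i]][cluster_of[j]]
               for j in range(n)] for i in range(n)]
    return column_normalize(scaled)


def limit_matrix(weighted, tol=1e-9, max_iter=10_000):
    """Raise the weighted supermatrix to successive powers until it stabilizes."""
    n = len(weighted)
    current = weighted
    for _ in range(max_iter):
        nxt = matmul(current, weighted)
        change = max(abs(nxt[i][j] - current[i][j])
                     for i in range(n) for j in range(n))
        current = nxt
        if change < tol:
            return current
    raise RuntimeError("powers of the supermatrix did not converge")


def alternative_priorities(limit, alternative_rows):
    """Read the alternatives' limit values from one column and rescale to sum 1."""
    raw = [limit[i][0] for i in alternative_rows]
    total = sum(raw)
    return [v / total for v in raw]


# Hypothetical network: nodes 0-1 are criteria (cluster 0), nodes 2-3 are LOs (cluster 1).
cluster_of = [0, 0, 1, 1]
unweighted = [
    [0.3, 0.6, 0.7, 0.4],
    [0.7, 0.4, 0.3, 0.6],
    [0.8, 0.5, 0.5, 0.2],
    [0.2, 0.5, 0.5, 0.8],
]
# cluster_weights[r][c]: assumed expert weight of cluster r with respect to cluster c.
cluster_weights = [
    [0.4, 0.5],
    [0.6, 0.5],
]

weighted = weight_supermatrix(unweighted, cluster_of, cluster_weights)
limit = limit_matrix(weighted)
priorities = alternative_priorities(limit, alternative_rows=[2, 3])
print(priorities)
```

In this sketch the LO with the largest entry in `priorities` would be the one assigned to the learner. Simple powering converges here because every entry of the example matrix is positive; networks with zero blocks or cyclic structure may need a different limiting procedure.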

3.2.4. Step 4: Demonstration

In the fourth step, we show the artefact working to solve the problem in a relevant setting [38]. In this study, we integrated the method into the live system and dynamically assigned LOs during learning sessions. Two conditions were demonstrated:

- Experimental: assignment by the ANP–DEMATEL engine aligned to the learner’s profile and target Bloom level.

- Control: random LO assignment under the same content coverage.

The results of the experimental and control groups are subsequently used to evaluate the system, which is a crucial part of artifact evaluation and acceptance.

3.2.5. Step 5: Evaluation

The fifth step provides a rigorous assessment of how well the artefact meets the objectives using appropriate metrics (utility, quality, efficacy). DSR recognizes diverse evaluation strategies: analytical, experimental, observational, testing, and descriptive [37,39]. In our study, the evaluation was organized as follows:

- Design-evaluation focus. We evaluate effectiveness (learning outcomes) and process quality (first-attempt success).

- Participants & setting. University students from three Croatian HEIs participated in scheduled lab sessions using the system.

- Measures.

  - First-attempt LO mastery (points) across content segments;

  - Total knowledge test score at the end.

- Analysis. Between-group comparisons (experimental vs. control) via independent-samples t-tests showed statistically significant improvements (p < 0.05) for the ANP–DEMATEL condition on both measures.

- Interpretation. Results support that modeling interdependencies (DEMATEL) and network-based prioritization (ANP) yield more effective personalization than non-model-based assignment.
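The between-group comparison named above can be sketched as follows. The scores are invented for illustration and are not data from the study, and the pooled-variance (Student) form of the independent-samples t-test is an assumption, since the source does not state which variant was used. The sketch returns only the t statistic and the degrees of freedom; obtaining a p-value would additionally require the t distribution, for example from a statistics package.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_test(group_a, group_b):
    """Return (t statistic, degrees of freedom) for two independent samples,
    using the pooled-variance form (equal variances assumed)."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / df
    std_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_error, df


# Hypothetical final test scores for the two conditions.
experimental = [18, 20, 17, 19, 21, 16]
control = [15, 17, 14, 18, 16, 13]

t_stat, dof = pooled_t_test(experimental, control)
print(f"t = {t_stat:.3f}, df = {dof}")
```

A positive `t_stat` indicates a higher mean in the first group; whether the difference is significant at the 0.05 level depends on comparing it with the critical value for `dof` degrees of freedom.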

3.2.6. Step 6: Communication

The last step communicates the problem, artifact, design, and evaluation to academic and practitioner audiences, clarifying contributions, generalizability, and limitations [39]. In this study, we document the method (ANP–DEMATEL), the instantiated system, and the pilot evaluation, and we outline avenues for replication, extension (e.g., weight-learning strategies), and deployment in broader e-learning contexts.

4. Results

4.1. System for Dynamic Content Generation in LMS

4.1.1. The Description of System’s Components

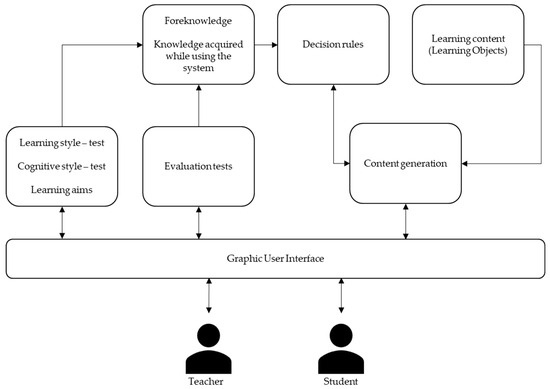

For the purpose of investigating the application of a system for individually personalized learning, we developed a prototype whose components are described below. Building on prior research in Intelligent Adaptive Hypermedia Learning Systems, the system comprises several core modules: an initial student module, a student module, a course domain, a decision-rules module, an evaluation module, a content-generation module, and a user interface module. A schematic representation of the system is provided in Figure 3.

Figure 3. Schematic representation of the system for dynamic generation of learning objects as support for individually personalized instruction.

The primary purpose of the system is to support individually personalized instruction by assigning each student appropriate learning objects during the learning process. The assignment is based on the student’s characteristics, such as learning goals, learning style, and cognitive style, using the multi-criteria decision-making method DEMATEL-ANP. The system has been implemented as a web application, and its main principles of use are described below. The implementation relies on open-source web technologies including PHP, HTML, JavaScript, and MySQL. Data are stored in a relational database using a traditional entity–relationship model, implemented via phpMyAdmin on a MySQL server. A brief description of the main components follows.

- User Interface. The user interface encompasses activities accessible to both students and instructors. After logging into the system, students can complete a prior knowledge test and then proceed with learning activities. The interface is intentionally simple, with minimal functionality, to avoid distracting students and to ensure immediate usability without additional preparation. Instructors can assess learning objects within the course domain and define the desired levels of learning objectives.

- Initial Student Module. This module handles the creation of a student profile, including results from tests that determine cognitive style and learning style. Both tests rely on validated questionnaires: the VAK (visual, auditory, kinesthetic) Learning Styles Questionnaire by Chislett and Chapman, and the Study Process Questionnaire for cognitive style (approach to learning) by Biggs [44,45]. The reliability of the instruments was tested by calculating Cronbach’s alpha coefficient for both questionnaires. For the VAK instrument it is 0.929 and for the cognitive style instrument it is 0.612. Both values indicate satisfactory internal consistency and reliability of the instruments. Students complete these tests upon their first entry into the system.

- Student Module. This module monitors activities related to prior knowledge as well as knowledge acquisition during system use. Upon entry, students take a prior knowledge test; only those achieving more than 90% (equivalent to the highest grade, “excellent,” in Croatia) proceed to the learning activities. The module also tracks knowledge acquisition progress, earned points, success on first or second attempts at answering questions, and overall achievement at the end of system use.

- Evaluation Module. The evaluation module records the attained knowledge level, specifically the results of learning object assessments conducted by the instructor.

- Course Domain. This component represents the repository of learning objects that make up the content of a particular course.

- Decision-Rules Module. This module defines decision rules for selecting learning content, as well as rules for selecting questions associated with specific learning objects. These rules are aligned with the learning objectives specified by the instructor for the course.

- Content Generation Module. At the core of the system is the content generation module, which applies an algorithm to decide which learning object should be assigned to a given student profile. This decision-making relies on the ANP method for multi-criteria analysis. ANP is particularly suitable because it accounts for dependencies among criteria (e.g., between learning style and cognitive style) and feedback relationships between alternatives and criteria. Other decision-making methods do not model these characteristics, which are essential in learning scenarios where dependencies and feedback loops are inherent. Adapting an e-learning system to individual learner needs ultimately represents a decision-making problem involving multiple alternatives or scenarios, some of which are more compatible with a given learner than others.

4.1.2. The Functionalities of the System (Content Generation Module)

In this subsection, we provide a detailed description of how the Content Generation Module is designed. To develop the Content Generation Module, we had to:

- Define user characteristics and other adaptation criteria to be incorporated into the module.

- Structure the decision network. If adaptation criteria are complex and can be decomposed into lower-level elements (e.g., learning styles broken down into specific subtypes), these must be identified. Clusters and nodes are then formed in the graph. Next, dependencies among criteria and clusters are identified to establish the network structure.

- Specify value ranges for all criteria (nodes in the network).

- Define instruments for measuring user values across all criteria in order to identify the “ideal” alternative.

- Develop the automation rules mentioned earlier, i.e., the algorithms that encode dependencies among criteria and links between alternatives and criteria into the model of the system for dynamic generation of learning content, supporting individually personalized instruction.

Furthermore, in the application phase of the module (system), the following steps are carried out:

6. Identify the alternatives in the decision-making problem and describe them in terms of the network criteria using the defined instruments.

7. Select the best alternative.

In the first step, focusing on user characteristics, a prior study by Gligora Marković, Kadoić, and Kovačić was conducted [46]. Based on that research, three adaptation criteria were selected: the learner’s learning goal, learning style, and cognitive learning style.

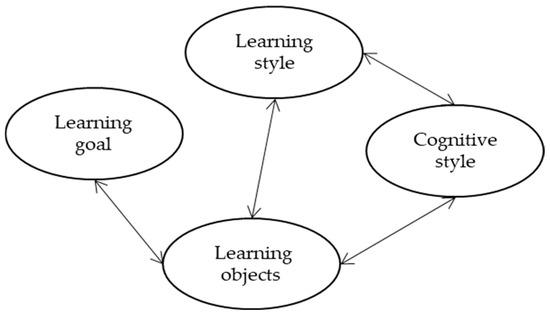

In the second step, the decision problem was structured, resulting in the creation of a decision network consisting of clusters and nodes, along with the identification of relationships among them. Within the terminology of the ANP method, the network contains three types of clusters: the goal cluster, the adaptation criteria clusters of the system for dynamic generation of learning objects, and the cluster of alternatives in the decision-making problem.

Expert analysis yielded the network model of criteria and clusters. Four clusters are included in the model: learning style, cognitive style, learning goal, and alternatives. However, if the model contains the goal cluster and the appropriate inputs (weights of criteria with respect to the goal) are included in the calculation of the limit matrix (the powering step in ANP), those values—regardless of their magnitude—do not influence the final priorities of the alternatives [47]. For this reason, the goal cluster was excluded from the present model.

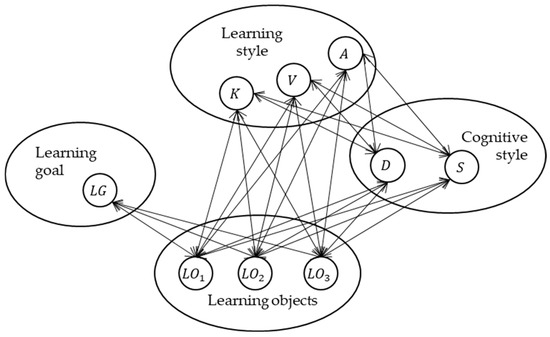

The learning style cluster contains three nodes, one for each learning style (visual, auditory, and kinesthetic), each expressing the level of intensity of that style. The cognitive style cluster consists of two criteria, which represent the learner’s approach to studying (deep or surface). The learning goal cluster has a single node, the learning goal, indicating the required level of learning according to Bloom’s taxonomy. Finally, the alternative cluster contains the learning objects.

Figure 4 presents the structured decision problem at the cluster level, accompanied by a table displaying the inter-cluster relationships. Figure 5 shows the decision problem model at the node-connection level, with only three alternatives included for clarity. Finally, Table 1 presents the connections among the nodes. The grey-shaded areas in Table 1 mark nodes that belong to the same cluster.

Figure 4. Decision problem model at the cluster level.

Figure 5. Decision problem model (node-level representation).

Table 1. Matrix of dependencies among the clusters’ nodes.

In the third step, intervals of values for all criteria (nodes in the network) were defined as follows:

- The three learning style nodes (visual, auditory, kinesthetic) are measured as percentages, with values ranging from 0% to 100%, and their sum must equal 100%.

- The two cognitive style nodes represent the cognitive learning style or approach (deep or surface). Each is expressed as a percentage, independently of the other, and each can reach a maximum of 100%.

- The learning goal node is defined on an ordinal scale corresponding to the levels of Bloom’s taxonomy.

- The alternative nodes (the learning objects) are defined according to the instrument for learning object evaluation developed by Gligora Marković [48]. For the purpose of examining the reliability of the instrument, the Cronbach’s alpha coefficient was calculated, yielding a value of 0.9165 [48]. Learning objects are evaluated with respect to the criteria, using the value intervals described above.

In the fourth step, the values of the “ideal” alternative are determined across the three specified criteria, as presented in Table 2.

Table 2. Criteria for determining the “ideal” alternative.

In the fifth step, it is necessary to define how the ANP supermatrix will be populated with priorities of nodes in relation to other nodes. The input data for the ANP model are:

- Data on the student’s/learning object’s learning styles

- Data on the student’s/learning object’s targeted cognitive style

- Data on the required learning goal, defined by the instructor for the student/learning object

- Input data for the ANP decision-making model that define the alternatives (learning objects, LOs). These values are determined based on evaluations by experts (instructors or domain specialists in teaching). For illustrative purposes, three learning objects are included in the connection matrix to demonstrate the application of the ANP multi-criteria decision-making method, although in theory any number of alternatives may be included.

The supermatrix is filled based on the connections among nodes (see Table 1). To structure the decision-making problem, the DEMATEL method was applied, using its evaluation scale from 0 to 4, where 0 represents the lowest level of influence and 4 represents a very high level of influence.

Let $x$ denote the difference between the values of the student’s and the learning object’s learning style and cognitive style criteria, expressed as percentages. These values range from 0 to 100 ($x \in [0, 100]$). The value $x = 0$ indicates no difference between the criterion values of the student and the learning object, while $x = 100$ indicates the maximum possible difference (e.g., the student’s criterion value is 0 and the LO’s value is 100). All other differences fall within this interval.

We define a linear function $y = ax + b$, where $y$ is the value on the DEMATEL scale and $y \in [0, 4]$. When the difference in values between the student and the LO is zero, this represents a strong match, and the DEMATEL scale assigns it a high influence value. Conversely, when the difference is large, this represents weak alignment and thus low influence. To our knowledge, this way of integrating the ANP and DEMATEL methods is not yet present in the literature.

In terms of the possible values of the learning style and cognitive style criteria for students and LOs, this means that when $x = 0$, we set $y = 4$; and when $x = 100$, we set $y = 0$. The graph of this linear function is a straight line obtained from the equation of the line passing through the two points $(0, 4)$ and $(100, 0)$ defined as described above.

Further, we apply the two-point form of the line:

$$y - y_1 = \frac{y_2 - y_1}{x_2 - x_1}\,(x - x_1)$$

and get:

$$y = -0.04\,x + 4$$

Since $x$ represents the difference between the values of a student’s and a learning object’s criteria, it can be either positive or negative. However, because the focus is on the magnitude of the difference, the formula for calculation uses the absolute value of this difference:

$$y = -0.04\,|x| + 4$$

This calculation approach was also applied to compare the nodes of the learning style and cognitive approach criteria between students and learning objects.

To calculate the influence values of the connection between the learning goal criterion and the learning objects, a linear function was also defined. The learning goal criterion is expressed as a level (represented numerically) from the first to the sixth level. Thus, $x$, representing the difference between the student’s and the learning object’s learning goal, belongs to the set $\{0, 1, 2, 3, 4, 5\}$, while $y$ takes values from the DEMATEL scale.

When the learning goal levels are identical, this represents a strong match between the student and the learning object, corresponding to a high influence value on the DEMATEL scale. In this case, $x = 0$ and $y = 4$. The maximum possible difference between the student’s and the learning object’s learning goal levels is 5, which corresponds to weak alignment, or a low influence value, where $y = 0$. Finally, we get

$$y = -0.8\,x + 4$$

This equation defines the calculation formula for comparing the nodes of the student’s and learning objects’ learning goal criteria.
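The two linear mappings described above can be sketched in Python as follows. This is a minimal illustration, assuming the endpoints stated in the text (a difference of zero maps to 4, and the maximum difference, 100 percentage points or 5 Bloom levels, maps to 0). The function names are hypothetical and are not taken from the authors' system.

```python
def style_influence(student_pct: float, lo_pct: float) -> float:
    """Map a percentage difference (0-100) onto the DEMATEL scale (4 down to 0)."""
    if not (0 <= student_pct <= 100 and 0 <= lo_pct <= 100):
        raise ValueError("percentages must lie between 0 and 100")
    return 4.0 - 0.04 * abs(student_pct - lo_pct)


def goal_influence(student_level: int, lo_level: int) -> float:
    """Map a Bloom-level difference (0-5) onto the DEMATEL scale (4 down to 0)."""
    if not (1 <= student_level <= 6 and 1 <= lo_level <= 6):
        raise ValueError("Bloom levels must lie between 1 and 6")
    return 4.0 - 0.8 * abs(student_level - lo_level)


# Hypothetical inputs: a 5-point style gap and a 2-level goal gap.
print(round(style_influence(30, 35), 2))  # 3.8
print(round(goal_influence(1, 3), 2))     # 2.4
```

Both functions take the absolute difference, so the order of the student and learning object arguments does not matter.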

The comparison of the cognitive style nodes and the learning style nodes was determined through expert judgment and the literature [49]. Since the selected learning style criterion has three forms, the influence value of learning style on cognitive style was evenly distributed, with a value of 1/3 assigned to each form.

The initial DEMATEL supermatrix is denoted as matrix X. To obtain the unweighted supermatrix, each entry of X is divided by the sum of the values that lie in the same column and the same cluster. The unweighted supermatrix is then transformed into the weighted supermatrix, following the regular ANP steps. For this purpose, the cluster matrix has to be created.
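The cluster-wise normalisation can be illustrated on a single column. The sketch below is an assumption about how the step might be coded, not the authors' implementation; the helper name `normalise_by_cluster` is hypothetical, and the input is the first learning object column of the demo matrix given later in Section 4.2.

```python
def normalise_by_cluster(column, cluster_sizes):
    """Divide each entry by the sum of its own cluster block within the column.

    `cluster_sizes` lists the number of nodes per cluster in row order.
    Blocks that sum to zero (no influence) are left as zeros.
    """
    result, start = [], 0
    for size in cluster_sizes:
        block = column[start:start + size]
        total = sum(block)
        result.extend(value / total if total else 0.0 for value in block)
        start += size
    return result


# Cluster sizes in row order: 3 learning style nodes, 2 cognitive style nodes,
# 1 learning goal node, 3 learning objects.
lo1_column = [3.8, 3.8, 4, 3.8, 4, 4, 0, 0, 0]
unweighted = normalise_by_cluster(lo1_column, [3, 2, 1, 3])
print([round(value, 4) for value in unweighted])
# [0.3276, 0.3276, 0.3448, 0.4872, 0.5128, 1.0, 0.0, 0.0, 0.0]
```

After this step, every non-empty cluster block within a column sums to 1, which is why a column influenced by several clusters still needs the cluster weights before it becomes stochastic.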

Cluster weights can be determined either by direct assessment or by pairwise comparisons, both provided by experts while accounting for the relationships among clusters. The values in the matrix are determined by experts in the field who participated in a focus group. A total of seven experts participated in the focus group. Their expertise is in the fields of education, multi-criteria decision making, theory of learning and informatics.

Filling the cluster matrix is generally challenging in ANP because pairwise comparisons between different types of clusters (for example, criteria and alternatives with respect to criteria) are often vague and hard to interpret. In our case, the greatest challenge relates to the second column (cognitive style), because experts need to evaluate which cluster, learning style or learning objects, has a higher impact on the cognitive style cluster. Such influences are hard to imagine and evaluate. For this column, a longer discussion was therefore held, and it was decided to assess the influences directly. Higher priority was given to the learning objects cluster because, in general, alternatives exert more influence on the criteria (as in columns 1 and 3, learning style and learning goal). Additionally, the focus group analysed a large number of demo examples (see one in Section 4.2). Consequently, it was possible to conduct a sensitivity analysis of how the two priorities in column 2 influence the selection of a suitable learning object in the demo examples. The conclusion was that varying these two weights (0.2–0.4 for learning style and 0.8–0.6 for learning objects) has no influence on the decision, so the experts decided, with full consensus, on 0.3 and 0.7 as the weights in the second column.

Finally, the weights in this case were established as follows:

- Among the remaining clusters, only the learning objects cluster influences the learning style cluster. Therefore, in the matrix row for learning objects and the column for learning style, the value 1 is entered, while all other values are 0. Here, no direct assessment or pairwise comparison was needed, since only one cluster influences learning style.

- Two clusters influence the cognitive style cluster: learning style and learning objects. Experts assessed that learning style influences cognitive style with a weight of 0.3, and learning objects with a weight of 0.7. The learning goal cluster is influenced solely by the learning objects cluster, so a single value of 1 appears in the learning goal column.

- The learning objects cluster is influenced by all remaining clusters. Experts judged that the learning style, cognitive style, and learning goal clusters exert equal influence; therefore, each was assigned a weight of 1/3. (The sum of all cluster weights in a column must equal 1.)
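The cluster weights listed above can be collected into a 4 × 4 cluster matrix and applied block by block. The following sketch assumes the cluster order learning style, cognitive style, learning goal, learning objects; the names `CLUSTER_MATRIX`, `SIZES`, and `weight_column` are hypothetical, and the example column is the first cognitive style column of the demo in Section 4.2.

```python
SIZES = [3, 2, 1, 3]  # nodes per cluster, in row order

# CLUSTER_MATRIX[source][target]: weight of the source cluster's influence
# on the target cluster; every column must sum to 1.
CLUSTER_MATRIX = [
    # LS   CS   LG   LO      <- influenced (target) cluster
    [0.0, 0.3, 0.0, 1 / 3],  # learning style
    [0.0, 0.0, 0.0, 1 / 3],  # cognitive style
    [0.0, 0.0, 0.0, 1 / 3],  # learning goal
    [1.0, 0.7, 1.0, 0.0],    # learning objects
]


def weight_column(column, target_cluster):
    """Scale each cluster block of an unweighted column by its cluster weight."""
    weighted, start = [], 0
    for source_cluster, size in enumerate(SIZES):
        weight = CLUSTER_MATRIX[source_cluster][target_cluster]
        weighted.extend(weight * value for value in column[start:start + size])
        start += size
    return weighted


# Unweighted column of the first cognitive style node (exact fractions).
cs_column = [1 / 3, 1 / 3, 1 / 3, 0, 0, 0, 3.8 / 11, 3.2 / 11, 4 / 11]
weighted = weight_column(cs_column, target_cluster=1)
print([round(value, 4) for value in weighted])
```

The weighted column sums to 1 again, which is the property the limit calculation relies on.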

The weighted supermatrix obtained in this way is multiplied by itself repeatedly until a matrix with identical columns is reached. At this stage, the priority values of the elements in the learning objects cluster can be read directly from the rows corresponding to its elements.

The sixth step is implemented in the system within the course domain module, where the learning objects (i.e., alternatives) are stored. The decision-making process for selecting the best learning object is carried out in the content generation module, where the priorities of all alternatives are determined (the last step).

4.2. Demonstration of the Artifact

In this subsection, we demonstrate the artefact using an example. Let us assume the student profile given in Table 3:

Table 3. Demo data about the student and learning objects.

- Learning style: the scores of the three learning style nodes are 30, 40, and 30,

- Cognitive style: the percentages of the two cognitive style nodes are 80% and 20%,

- Learning goal: level 1.

Similarly, three learning objects are evaluated respecting the same criteria as presented in Table 3.

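Using the data from Table 3, we create the initial supermatrix using the linear functions defined in Section 4.1.2. Rows and columns follow the order of the clusters: the three learning style nodes, the two cognitive style nodes, the learning goal node, and the three learning objects. For example, the first value 3.8 in the first learning object column corresponds to a difference of 5 percentage points between the student’s and the learning object’s values for the first learning style node, which the linear function maps to $4 - 0.04 \cdot 5 = 3.8$. In this way, we get: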

$$
X = \begin{bmatrix}
0 & 0 & 0 & 0.333333333 & 0.333333333 & 0 & 3.8 & 3.6 & 3 \\
0 & 0 & 0 & 0.333333333 & 0.333333333 & 0 & 3.8 & 4 & 4 \\
0 & 0 & 0 & 0.333333333 & 0.333333333 & 0 & 4 & 3.6 & 3 \\
0 & 0 & 0 & 0 & 0 & 0 & 3.8 & 3.2 & 4 \\
0 & 0 & 0 & 0 & 0 & 0 & 4 & 3.6 & 3.6 \\
0 & 0 & 0 & 0 & 0 & 0 & 4 & 2.4 & 3.2 \\
3.8 & 3.8 & 4 & 3.8 & 4 & 4 & 0 & 0 & 0 \\
3.6 & 4 & 3.6 & 3.2 & 3.6 & 2.4 & 0 & 0 & 0 \\
3 & 4 & 3 & 4 & 3.6 & 3.2 & 0 & 0 & 0
\end{bmatrix}
$$

In the next step, the unweighted supermatrix is created by normalising the columns within each cluster.

$$
\begin{bmatrix}
0.0000 & 0.0000 & 0.0000 & 0.3333 & 0.3333 & 0.0000 & 0.3276 & 0.3214 & 0.3000 \\
0.0000 & 0.0000 & 0.0000 & 0.3333 & 0.3333 & 0.0000 & 0.3276 & 0.3571 & 0.4000 \\
0.0000 & 0.0000 & 0.0000 & 0.3333 & 0.3333 & 0.0000 & 0.3448 & 0.3214 & 0.3000 \\
0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.4872 & 0.4706 & 0.5263 \\
0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.5128 & 0.5294 & 0.4737 \\
0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 1.0000 & 1.0000 & 1.0000 \\
0.3654 & 0.3220 & 0.3774 & 0.3455 & 0.3571 & 0.4167 & 0.0000 & 0.0000 & 0.0000 \\
0.3462 & 0.3390 & 0.3396 & 0.2909 & 0.3214 & 0.2500 & 0.0000 & 0.0000 & 0.0000 \\
0.2885 & 0.3390 & 0.2830 & 0.3636 & 0.3214 & 0.3333 & 0.0000 & 0.0000 & 0.0000
\end{bmatrix}
$$

Furthermore, the weighted supermatrix was created by multiplying each block of the unweighted supermatrix by the corresponding weight from the cluster matrix (presented in Section 4.1.2), as in standard ANP.

$$
\begin{bmatrix}
0.0000 & 0.0000 & 0.0000 & 0.1000 & 0.1000 & 0.0000 & 0.1092 & 0.1071 & 0.1000 \\
0.0000 & 0.0000 & 0.0000 & 0.1000 & 0.1000 & 0.0000 & 0.1092 & 0.1190 & 0.1333 \\
0.0000 & 0.0000 & 0.0000 & 0.1000 & 0.1000 & 0.0000 & 0.1149 & 0.1071 & 0.1000 \\
0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.1624 & 0.1569 & 0.1754 \\
0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.1709 & 0.1765 & 0.1579 \\
0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.0000 & 0.3333 & 0.3333 & 0.3333 \\
0.3654 & 0.3220 & 0.3774 & 0.2418 & 0.2500 & 0.4167 & 0.0000 & 0.0000 & 0.0000 \\
0.3462 & 0.3390 & 0.3396 & 0.2036 & 0.2250 & 0.2500 & 0.0000 & 0.0000 & 0.0000 \\
0.2885 & 0.3390 & 0.2830 & 0.2545 & 0.2250 & 0.3333 & 0.0000 & 0.0000 & 0.0000
\end{bmatrix}
$$

Finally, the weighted supermatrix was raised to successive powers until convergence. In this demo example, convergence at six decimal places was achieved, yielding the limit supermatrix.

| 0.0662 | 0.0662 | 0.0662 | 0.0662 | 0.0662 | 0.0662 | 0.0662 | 0.0662 | 0.0662 | ||

| 0.0730 | 0.0730 | 0.0730 | 0.0730 | 0.0730 | 0.0730 | 0.0730 | 0.0730 | 0.0730 | ||

| 0.0672 | 0.0672 | 0.0672 | 0.0672 | 0.0672 | 0.0672 | 0.0672 | 0.0672 | 0.0672 | ||

| 0.0785 | 0.0785 | 0.0785 | 0.0785 | 0.0785 | 0.0785 | 0.0785 | 0.0785 | 0.0785 | ||

| 0.0802 | 0.0802 | 0.0802 | 0.0802 | 0.0802 | 0.0802 | 0.0802 | 0.0802 | 0.0802 | ||

| 0.1587 | 0.1587 | 0.1587 | 0.1587 | 0.1587 | 0.1587 | 0.1587 | 0.1587 | 0.1587 | ||

| 0.1782 | 0.1782 | 0.1782 | 0.1782 | 0.1782 | 0.1782 | 0.1782 | 0.1782 | 0.1782 | ||

| 0.1442 | 0.1442 | 0.1442 | 0.1442 | 0.1442 | 0.1442 | 0.1442 | 0.1442 | 0.1442 | ||

| 0.1538 | 0.1538 | 0.1538 | 0.1538 | 0.1538 | 0.1538 | 0.1538 | 0.1538 | 0.1538 |

After convergence, we were able to conclude that the highest priority (0.1782) is achieved by the first learning object, which is therefore the most suitable learning object for the student.
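The limit step of the demo can be reproduced approximately with a few lines of plain Python. The sketch below starts from the weighted supermatrix as printed above (four decimal places) and multiplies it by itself until successive powers agree. Two points are assumptions of this sketch rather than part of the described procedure: the columns are rescaled to sum exactly to 1 to compensate for rounding in the printed values, and the tolerance and helper names are hypothetical.

```python
W = [
    [0, 0, 0, 0.1000, 0.1000, 0, 0.1092, 0.1071, 0.1000],
    [0, 0, 0, 0.1000, 0.1000, 0, 0.1092, 0.1190, 0.1333],
    [0, 0, 0, 0.1000, 0.1000, 0, 0.1149, 0.1071, 0.1000],
    [0, 0, 0, 0, 0, 0, 0.1624, 0.1569, 0.1754],
    [0, 0, 0, 0, 0, 0, 0.1709, 0.1765, 0.1579],
    [0, 0, 0, 0, 0, 0, 0.3333, 0.3333, 0.3333],
    [0.3654, 0.3220, 0.3774, 0.2418, 0.2500, 0.4167, 0, 0, 0],
    [0.3462, 0.3390, 0.3396, 0.2036, 0.2250, 0.2500, 0, 0, 0],
    [0.2885, 0.3390, 0.2830, 0.2545, 0.2250, 0.3333, 0, 0, 0],
]


def normalise_columns(matrix):
    """Rescale every column to sum to 1 (removes rounding drift)."""
    n = len(matrix)
    sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    return [[matrix[i][j] / sums[j] for j in range(n)] for i in range(n)]


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def limit_matrix(w, tol=1e-9, max_iter=10_000):
    """Multiply by w repeatedly until successive powers differ by less than tol."""
    power = w
    for _ in range(max_iter):
        nxt = matmul(power, w)
        diff = max(abs(nxt[i][j] - power[i][j])
                   for i in range(len(w)) for j in range(len(w)))
        if diff < tol:
            return nxt
        power = nxt
    raise RuntimeError("supermatrix did not converge")


limit = limit_matrix(normalise_columns(W))
priorities = [row[0] for row in limit]   # any column works once converged
lo_priorities = priorities[6:]           # last three rows: learning objects
best = max(range(3), key=lambda i: lo_priorities[i])
print([round(p, 4) for p in priorities])
print("most suitable learning object:", best + 1)
```

Because the input is rounded, the resulting priorities should match the limit supermatrix above only approximately, but the ranking of the three learning objects is the same.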

4.3. Evaluation of the System

4.3.1. Description of the Procedure Conducted to Evaluate the System

For the purpose of testing the system, a study was conducted with students from three higher education institutions: the University of Split (Department of Teacher Education, undergraduate and graduate levels), the University of Rijeka (Faculty of Medicine, program in Dental Medicine, integrated undergraduate and graduate study), and the Polytechnic of Rijeka (undergraduate professional study in Telematics). The sample was purposive, aligned with the target group—students whose prior knowledge level was below 80% in the subject Colors and Color Models, and who had not yet had the opportunity to acquire knowledge in this area during their studies.

The study was carried out in two sessions. For the second session, it was necessary to secure computer-equipped classrooms and the cooperation of lecturers willing to allocate part of their teaching hours for the research. Organizing these conditions required additional effort from the researchers.

At the beginning of the study (first session), 100 students participated. They were informed about the details of the research and signed informed consent forms. All participants were adults and took part voluntarily. In the second session, 88 students participated. At that time, each participant received a copy of their signed informed consent for personal records, while the originals were stored by the researchers. The students then used the e-learning system: first, they completed a prior knowledge test on the topic Colors and Color Models, followed by learning activities related to the same content. After completing the prior knowledge test, participants were not given feedback on their results but instead immediately proceeded to the learning content. The prior knowledge test consisted of basic questions related to the subject area, whereas the knowledge assessment test included a larger number of questions designed to evaluate more detailed mastery of individual concepts within the topic Colors and Color Models.

As students worked through the content, their progress depended on performance. If they successfully mastered a particular section, the system assigned them the next learning object—using the DEMATEL-ANP multi-criteria decision-making method for one group, and random assignment for the other. If they failed to master a section, the system reassigned them another learning object of the same content: again, for one group this was prioritized using DEMATEL-ANP, while for the other it was chosen randomly. After a second attempt at the same content, regardless of performance, all students proceeded to the next section. At the end of the session, participants received an overview of all their answers; for incorrect responses, the system provided feedback indicating the correct answers. After completion of the second part of the study, of the 88 participants, three achieved a prior knowledge test score above 80% and were therefore excluded from further analysis. In addition, eight participants did not complete the use of the system, leaving their data incomplete; these cases were also excluded. The analysis described below is thus based on 77 complete responses.
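The assignment flow described above (one learning object per section, a single retry on failure, then moving on regardless) can be sketched as follows. This is an illustrative reading of the procedure rather than the system's actual code: the section names, priorities, and function names are hypothetical, and the sketch assumes that the retry presents a different learning object from the first attempt.

```python
import random
from typing import Callable, Dict, List


def run_session(
    sections: Dict[str, List[str]],
    choose: Callable[[List[str]], str],
    mastered: Callable[[str], bool],
) -> List[str]:
    """Return the learning objects shown, allowing at most two attempts per section."""
    shown: List[str] = []
    for candidates in sections.values():
        remaining = list(candidates)
        for _attempt in range(2):        # a second attempt happens only after a failure
            if not remaining:
                break
            chosen = choose(remaining)
            remaining.remove(chosen)     # assumption: the retry uses a different object
            shown.append(chosen)
            if mastered(chosen):
                break                    # section mastered, move to the next one
    return shown


# Hypothetical course content and priorities (not the study's data).
sections = {"RGB": ["lo1", "lo2", "lo3"], "CMYK": ["lo4", "lo5"]}
priority = {"lo1": 0.18, "lo2": 0.14, "lo3": 0.15, "lo4": 0.20, "lo5": 0.10}

rng = random.Random(0)
adaptive = lambda options: max(options, key=priority.__getitem__)  # experimental group
randomised = lambda options: rng.choice(options)                    # control group

print(run_session(sections, adaptive, mastered=lambda lo: lo != "lo1"))
# ['lo1', 'lo3', 'lo4']
print(run_session(sections, randomised, mastered=lambda lo: True))
```

The only difference between the two groups in this sketch is the `choose` callback, which mirrors the study design in which both groups used the same system and content.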

The collected data were processed using SPSS Statistics 23.0 (SPSS Inc., 2019) and MedCalc Statistical Software version 18.11.3 (MedCalc Software bvba, 2019), and the results are presented in the following sections.

4.3.2. System Evaluation Results

Data were collected on participants’ demographics, including gender, current institution of study, and year of study, as well as data generated during system use. The demographic characteristics are presented in Table 4. Most participants were female, with an approximately equal number enrolled at the University of Split and the Polytechnic of Rijeka. The majority were studying at the undergraduate level, i.e., within the first three years of their studies.

Table 4. Demographic data about the students.

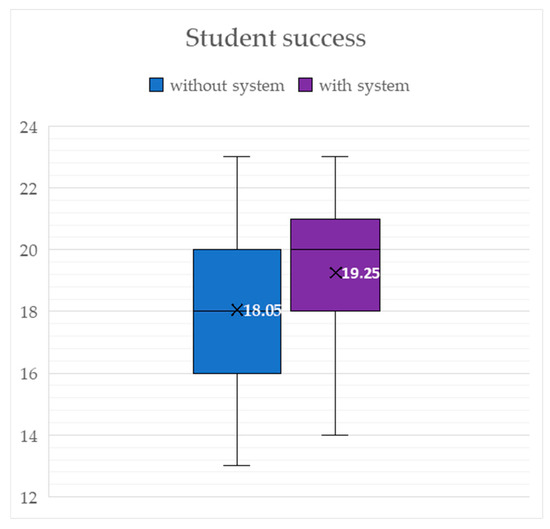

The knowledge acquisition after the first use of each learning object showed that the group using the system with the DEMATEL-ANP algorithm (n = 40) achieved an average score of 19.25 points, whereas the group using the system without the DEMATEL-ANP algorithm (n = 37) achieved an average of 18.05 points (Figure 6). The t-test revealed a statistically significant difference between the groups (Table 5), indicating that students who used the system with the DEMATEL-ANP algorithm performed significantly better after their first exposure to each learning object than those who used the system without the algorithm.

Figure 6. Box-plot of student success results (first system use).

Table 5. t-test results.

To evaluate the practical significance of the difference between the two independent groups, Cohen’s d was calculated based on the results of an independent samples t-test. The mean score of Group 1 (without the algorithm) was 18.05 (SD = 2.49), while Group 2 (with the algorithm) had a mean score of 19.25 (SD = 2.05). The sample sizes were n1 = 37 and n2 = 40. Cohen’s d was then calculated (d = 0.53). This value indicates a moderate effect size, suggesting that the observed difference between the two groups is meaningful in practical terms.
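The reported effect size can be checked from the summary statistics alone. The sketch below assumes the common pooled-standard-deviation form of Cohen's d; the text does not state which variant was used, so this is an assumption, and the function name is hypothetical.

```python
from math import sqrt


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (mean2 - mean1) / sqrt(pooled_var)


# Group 1: without the algorithm; Group 2: with the algorithm (first system use).
d = cohens_d(18.05, 2.49, 37, 19.25, 2.05, 40)
print(round(d, 2))  # 0.53
```

Under this assumption, the computed value agrees with the reported d = 0.53.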

This finding suggests that even short-term engagement with adaptively selected learning content can lead to measurable improvements in immediate knowledge acquisition. The observed effect indicates that personalization based on learning style, cognitive style, and learning goals helps students process and retain new information more effectively, reducing the need for repeated exposure to the same content. Such an outcome is especially relevant in learning environments where time efficiency and the ability to quickly grasp new concepts are critical.

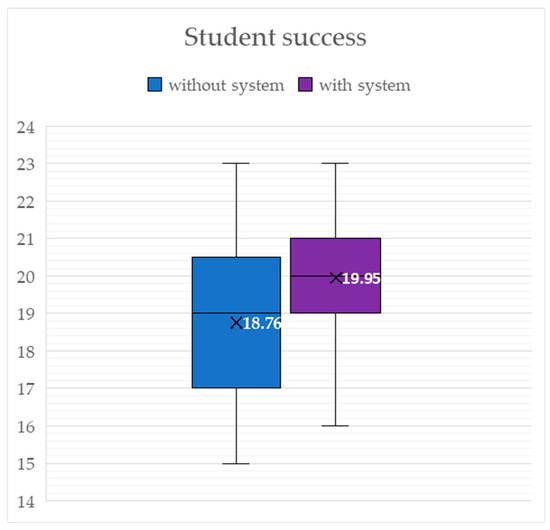

Furthermore, students using the system without the DEMATEL-ANP algorithm (with learning objects assigned randomly) achieved an average score of 18.76 points on the final knowledge test. By contrast, students whose learning objects were assigned through the DEMATEL-ANP algorithm, tailored to their individual profiles, achieved an average score of 19.95 points (Figure 7). The t-test (Table 6) again showed a statistically significant difference between the groups.

Figure 7. Box-plot of student success results (final system use).

Table 6. t-test results.

To evaluate the practical significance of the difference between the two independent groups, Cohen’s d was calculated based on the results of an independent samples t-test. The mean score of Group 1 (without the algorithm) was 18.76 (SD = 2.22), while Group 2 (with the algorithm) had a mean score of 19.95 (SD = 1.75). The sample sizes were n1 = 37 and n2 = 40. Cohen’s d was then calculated (d = 0.6). This value indicates a moderate to large effect size, suggesting that the observed difference between the two groups is meaningful in practical terms.

These results confirm that the advantages of the adaptive algorithm are not limited to immediate, short-term gains but also extend to more comprehensive knowledge acquisition assessed after the full learning process. The higher performance of the experimental group suggests that personalization contributes to deeper understanding and integration of content, rather than superficial recall. Taken together, both analyses provide strong evidence that embedding a DEMATEL-ANP framework into adaptive e-learning systems can enhance both efficiency in initial learning and effectiveness in overall knowledge outcomes.

Both findings support the hypothesis that users of a system for dynamically generating learning objects, where the application of adaptation criteria is guided by the DEMATEL-ANP multi-criteria decision-making method, will achieve better knowledge acquisition compared to users of a system that does not employ this method. The evidence from both stages of evaluation strengthens this claim: in the short term, students in the experimental group demonstrated superior performance after their first exposure to new learning objects, showing that adaptive selection promotes faster and more effective initial comprehension. In the longer term, the same group achieved significantly higher scores on the final knowledge test, indicating that the benefits of the DEMATEL-ANP algorithm extend beyond immediate recall and contribute to deeper and more durable learning. Taken together, these findings provide robust empirical confirmation that personalization grounded in multi-criteria decision-making not only enhances efficiency during learning but also improves overall knowledge outcomes, thereby validating the central hypothesis of the study.

5. Discussion

The results of this study demonstrate that applying a combined DEMATEL-ANP multi-criteria decision-making framework to adaptive e-learning can significantly improve students’ knowledge acquisition compared to non-adaptive or randomly adaptive systems. Students who engaged with the system in which learning objects were assigned based on their learning style, cognitive style, and learning goals achieved higher scores both after the first attempt at learning new content and in the overall knowledge test. These findings confirm the study hypothesis and provide empirical evidence that multi-criteria decision-making methods can add value to personalization in e-learning environments.

Our results align with earlier findings in adaptive learning research. Previous studies have shown that adaptivity improves learning outcomes by aligning instructional materials with learner characteristics [7,24]. Meta-analyses of intelligent tutoring systems have also demonstrated that adaptive systems outperform traditional and non-adaptive systems in terms of effectiveness [14,16]. The present study extends this line of work by introducing a network-based approach, in which DEMATEL structures interdependencies among criteria and ANP determines the relative priorities of alternatives.

This research makes several important contributions:

- Novel methodological integration. While DEMATEL and ANP have previously been combined in hybrid models [28,29,30,50], this study introduces a new integration mechanism. By linearly mapping differences between student and learning object profiles onto the DEMATEL influence scale and embedding these values into the ANP supermatrix, the model captures both dependencies among criteria and feedback loops between alternatives and criteria. This approach has not been documented in the adaptive learning literature to date.

- Operationalization of personalization through MCDM. Unlike many adaptive systems that rely on rule-based or data-driven personalization, our system applies a formalized multi-criteria decision-making framework. This enables a more transparent and theoretically grounded mechanism for content adaptation.

- Empirical validation in a real educational context. The study provides experimental evidence, with statistically significant improvements in learning outcomes, that validates the utility of the DEMATEL-ANP framework in actual classroom settings.

Compared with existing adaptive e-learning systems, which often focus on limited personalization parameters (e.g., navigation, sequencing, or content recommendation based on prior performance), the proposed system integrates multiple learner characteristics simultaneously—learning style, cognitive style, and learning goals—while also accounting for their interdependencies. This multi-layered modelling approach distinguishes the present system from conventional adaptive platforms and enhances its ability to deliver individualised learning paths in a more systematic manner.

Although the study demonstrates the potential of the DEMATEL-ANP approach, several avenues remain for further exploration:

- Expanding adaptation criteria. Future work could include learner motivation, self-regulated learning skills, affective states, or engagement metrics to enrich the personalization model.

- Automated weighting strategies. While expert judgment was central to the current study, machine learning and learning analytics could provide adaptive, data-driven methods for assigning weights and refining decision rules.

- Cross-domain and longitudinal validation. Replicating the study in different subject areas, with larger and more diverse student populations, and tracking long-term learning outcomes would enhance the generalizability of results.

- Integration with emerging technologies. Incorporating artificial intelligence techniques such as recommender systems, Bayesian networks, or deep learning could further optimize the dynamic allocation of learning objects.

In sum, this study contributes theoretically by presenting a novel way of integrating DEMATEL and ANP in adaptive learning, and practically by demonstrating the measurable benefits of this approach in improving student outcomes. It advances the literature on personalization in e-learning by showing how multi-criteria decision-making can be systematically embedded into intelligent educational systems, thereby supporting more effective, student-centered learning.

In this way, the described integration of DEMATEL and ANP methods extends their application beyond traditional economic-management engineering roles [51]. This combination of methods enhances analytical precision in decision-making [52]. Although the AHP method is the most widely used multi-criteria decision-making method globally, it does not offer the same capabilities as ANP for modelling the interdependence of criteria, which was required in this work, where ANP was fully utilised in the application of adaptive e-learning systems [53].

6. Conclusions

This paper presented a novel framework for adaptive e-learning based on the integration of the DEMATEL and ANP multi-criteria decision-making methods. The study addressed the problem of dynamically assigning learning objects to students by considering three key personalization criteria: learning style, cognitive style, and learning goals. The DEMATEL method was used to model interdependencies among criteria, while ANP was employed to determine the priorities of alternatives. A web-based prototype system was developed to implement this approach, and its effectiveness was validated through an experimental study with university students. The system was validated by statistical analysis: t-tests were used to determine whether the test scores of students who used the DEMATEL-ANP algorithm were significantly higher than those of students who did not.

The findings show that students who used the system with the DEMATEL-ANP algorithm achieved significantly better results, both in terms of immediate learning gains after the first attempt with each learning object and in overall knowledge acquisition, compared to students who used a non-adaptive system with randomly assigned learning objects. These results confirm the main hypothesis and highlight the potential of formal multi-criteria decision-making approaches for improving personalization in e-learning.

Based on the analysis, the main conclusions are:

- Integration of DEMATEL and ANP provides a robust foundation for adaptive e-learning by capturing dependencies among personalization criteria and enabling transparent prioritization of learning objects.

- Personalization based on learning style, cognitive style, and learning goals significantly improves student learning outcomes compared to random assignments.

- Expert judgment remains a valuable element in structuring and weighting decision models, though future work may combine this with data-driven approaches for greater scalability.

- The proposed framework represents a scientific advancement over conventional adaptive systems, as it explicitly models interdependencies and feedback relationships that are typically ignored.

Overall, the study contributes both theoretically and practically to the development of intelligent educational systems. It demonstrates that embedding multi-criteria decision-making methods into adaptive e-learning can enhance student-centered learning and improve knowledge acquisition. The limitations of the research are primarily in the smaller number of subjects, the complexity of the experimental research in which a large number of variables were analysed, and the consequent lack of control over confounding variables in the statistical analysis.

Future research will focus on extending the range of personalization criteria, exploring automated weight assignment techniques, and validating the approach across diverse learning contexts.

Author Contributions

Conceptualization, M.G.M. and B.K.; methodology, M.G.M., N.K. and B.K.; validation, M.G.M. and B.K.; formal analysis, M.G.M., N.K. and B.K.; investigation, M.G.M.; resources, M.G.M. and B.K.; data curation, M.G.M.; writing—original draft preparation, M.G.M., N.K. and B.K.; writing—review and editing, M.G.M., N.K. and B.K.; visualization, M.G.M. and N.K.; supervision, B.K. and N.K.; project administration, B.K.; funding acquisition, B.K. All authors have read and agreed to the published version of the manuscript.

Funding

The research was funded by the University of Rijeka project entitled “Learning analytics in e-learning systems based on interactive data visualization assisted by data mining”. The project ID is uniri-iskusni-drustv-23-270.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| ANP | Analytic Network Process |
| DEMATEL | Decision-Making Trial and Evaluation Laboratory |
| DANP | DEMATEL-based Analytic Network Process |
| MCDM | Multi-Criteria Decision-Making |
| DSR | Design Science Research |
| LO | Learning Object |
| LMS | Learning Management System |
| VAK | Visual, Auditory, Kinesthetic |
| ITS | Intelligent Tutoring System |
| HEI | Higher Education Institution |
| SPSS | Statistical Package for the Social Sciences |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).