1. Introduction

Developing stable, accurate, and generalizable numerical simulation methods has long been one of the core challenges in computational fluid dynamics (CFD) [1,2,3]. Traditional numerical methods, such as the finite difference method (FDM), finite volume method (FVM), and spectral method (SM), have established the basic framework of modern CFD through discretization theory, mesh partitioning, and algebraic equation solving [1,4]. These methods have become benchmark tools for simulating physical phenomena such as fluid dynamics and heat transfer. However, when dealing with strong nonlinearity, discontinuous solutions, and complicated geometries, these traditional methods face significant limitations, including heavy reliance on refined meshes, numerical instability, and severe accuracy degradation or even failure [5,6,7].

In recent years, physics-informed neural networks (PINNs) have emerged as a novel computational method that combines physical laws with data-driven learning [8]. Owing to their mesh-free nature, PINNs show great potential for addressing diverse fluid problems and are gradually becoming a key new paradigm for overcoming the bottlenecks of traditional numerical methods [9]. Specifically, PINNs embed physical laws directly into the loss function of a neural network and solve the governing equations implicitly through parameter optimization, thereby largely eliminating mesh dependence and enhancing adaptability to complex physical problems [10,11,12]. For instance, this approach has shown promising performance in shock capturing, turbulence modeling, and unsteady flow simulations [13]. Nonetheless, PINNs also suffer from spurious solutions, convergence difficulties, and limited capability for modeling dynamic systems [10,14,15]. As a result, hybrid model architectures that improve robustness have become a promising research direction. Among these approaches, the integration of PINNs with the lattice Boltzmann method (LBM) is a recently developed and physically motivated extension.

The LBM has been widely adopted for numerical simulation in CFD owing to its clear evolution mechanism, highly parallel computational structure, and flexibility in handling intricate boundaries and multi-physics coupling problems [16]. Its governing equation, the lattice Boltzmann equation (LBE), originates from the continuous Boltzmann equation of kinetic theory. This kinetic origin allows the LBE to preserve the coupling between microscopic particle dynamics and the evolution of macroscopic conservation laws, which gives it inherent physical consistency, theoretical closure, and high generality for fluid flow solutions [17,18]. The LBE models the mesoscopic evolution of particle distribution functions through collision and streaming processes and thus offers a solid theoretical basis for complex flow simulations. Building on this foundation, several researchers have coupled the LBM with PINNs by replacing macroscopic PDEs such as the Navier–Stokes (N–S) equations with the LBE, thereby embedding mesoscopic kinetic physics directly into the learning process [19]. Lou et al., for instance, implemented a single-relaxation-time (SRT) Bhatnagar–Gross–Krook (BGK) model within a PINN framework and demonstrated its feasibility for both forward and inverse flow reconstruction [20]. Although the SRT formulation simplifies the kinetic description by applying a single relaxation time to all kinetic modes, its direct use within a PINN framework introduces challenges that differ from those in conventional solvers. Specifically, tying all kinetic modes to a single relaxation scale within a data-driven loss landscape can produce poorly conditioned residuals and gradient imbalance, resulting in optimization stiffness and slow convergence.
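For readers less familiar with the SRT-BGK model, the single-relaxation collision step can be sketched as follows. This is a minimal pure-Python illustration on the standard D2Q9 lattice in lattice units ($c_s^2 = 1/3$); it is not the implementation of Lou et al. or of this study, and the helper names (`equilibrium`, `macroscopic`, `bgk_collide`) are hypothetical. Every population relaxes toward its equilibrium at the same rate $1/\tau$.

```python
# Minimal D2Q9 SRT-BGK collision step (lattice units, c_s^2 = 1/3).
# Illustrative sketch only; helper names are hypothetical.

# D2Q9 discrete velocities: rest, four axis directions, four diagonals
E = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
     (1, 1), (-1, 1), (-1, -1), (1, -1)]
W = [4 / 9] + [1 / 9] * 4 + [1 / 36] * 4  # quadrature weights


def equilibrium(rho, ux, uy):
    """Second-order equilibrium f_i^eq(rho, u)."""
    usq = ux * ux + uy * uy
    feq = []
    for (ex, ey), w in zip(E, W):
        eu = ex * ux + ey * uy
        feq.append(w * rho * (1 + 3 * eu + 4.5 * eu * eu - 1.5 * usq))
    return feq


def macroscopic(f):
    """Density and velocity from the zeroth and first moments of f."""
    rho = sum(f)
    ux = sum(fi * ex for fi, (ex, _) in zip(f, E)) / rho
    uy = sum(fi * ey for fi, (_, ey) in zip(f, E)) / rho
    return rho, ux, uy


def bgk_collide(f, tau):
    """SRT-BGK: every population relaxes toward f^eq at the same rate 1/tau."""
    rho, ux, uy = macroscopic(f)
    feq = equilibrium(rho, ux, uy)
    return [fi - (fi - fe) / tau for fi, fe in zip(f, feq)]


if __name__ == "__main__":
    f = equilibrium(1.0, 0.05, -0.02)
    f[1] += 0.01  # perturb two opposite populations:
    f[3] += 0.01  # mass changes, momentum does not
    print(macroscopic(f))
    print(macroscopic(bgk_collide(f, tau=0.8)))  # same mass and momentum
```

Because $f^{eq}$ is built from the moments of $f$ itself, the collision conserves mass and momentum; only the non-conserved moments change, and all of them decay at the same rate.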

To overcome these challenges, this study incorporates the multi-relaxation-time (MRT) formulation into the PINN framework. By assigning distinct relaxation rates to different kinetic moments, the MRT model represents mesoscopic relaxation processes more flexibly and with greater physical consistency, which helps alleviate gradient imbalance and improves the conditioning of the physics-informed residuals. This enhanced formulation serves as the foundation for stable and accurate training in complex flow regimes.
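The role of distinct relaxation rates can be illustrated with a moment-space collision step. The sketch below is a hypothetical pure-Python example on the D2Q9 lattice, assuming the widely used orthogonal moment basis of Lallemand and Luo; the relaxation rates in `RATES` are illustrative placeholders, not the values used in this study. Each non-conserved moment deviation $m_k - m_k^{eq}$ is scaled by its own factor $(1 - s_k)$, whereas SRT scales all of them by the same factor.

```python
# Minimal D2Q9 MRT collision in moment space: f* = f - M^{-1} S (m - m^eq).
# Illustrative sketch; the moment basis and RATES are assumptions.

E = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
     (1, 1), (-1, 1), (-1, -1), (1, -1)]
W = [4 / 9] + [1 / 9] * 4 + [1 / 36] * 4


def moment_matrix():
    """Rows: rho, e, eps, j_x, q_x, j_y, q_y, p_xx, p_xy (mutually orthogonal)."""
    rows = [[] for _ in range(9)]
    for ex, ey in E:
        c2 = ex * ex + ey * ey
        vals = [1, -4 + 3 * c2, 4 - 10.5 * c2 + 4.5 * c2 * c2,
                ex, (-5 + 3 * c2) * ex, ey, (-5 + 3 * c2) * ey,
                ex * ex - ey * ey, ex * ey]
        for row, val in zip(rows, vals):
            row.append(val)
    return rows


M = moment_matrix()
NORM2 = [sum(v * v for v in row) for row in M]
# Orthogonal rows, so M^{-1} = M^T diag(1 / |row_k|^2)
M_INV = [[M[k][i] / NORM2[k] for k in range(9)] for i in range(9)]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def equilibrium(rho, ux, uy):
    usq = ux * ux + uy * uy
    return [w * rho * (1 + 3 * (ex * ux + ey * uy)
                       + 4.5 * (ex * ux + ey * uy) ** 2 - 1.5 * usq)
            for (ex, ey), w in zip(E, W)]


def macroscopic(f):
    rho = sum(f)
    ux = sum(fi * ex for fi, (ex, _) in zip(f, E)) / rho
    uy = sum(fi * ey for fi, (_, ey) in zip(f, E)) / rho
    return rho, ux, uy


def mrt_collide(f, rates):
    """Relax each moment k toward its equilibrium at its own rate rates[k]."""
    rho, ux, uy = macroscopic(f)
    m = matvec(M, f)
    m_eq = matvec(M, equilibrium(rho, ux, uy))
    dm = [s * (a - b) for s, a, b in zip(rates, m, m_eq)]
    return [fi - d for fi, d in zip(f, matvec(M_INV, dm))]


TAU = 0.8
# Illustrative rates for (rho, e, eps, j_x, q_x, j_y, q_y, p_xx, p_xy).
# The stress moments use 1/TAU (they set the viscosity); the rates of the
# conserved moments (rho, j_x, j_y) have no effect.
RATES = [0.0, 1.64, 1.54, 0.0, 1.9, 0.0, 1.9, 1 / TAU, 1 / TAU]

if __name__ == "__main__":
    f = equilibrium(1.0, 0.05, -0.02)
    f[1] += 0.01
    print(macroscopic(mrt_collide(f, RATES)))  # mass and momentum unchanged
```

Setting every entry of `RATES` to $1/\tau$ recovers the SRT-BGK step exactly, which is the sense in which SRT is a special case of MRT.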

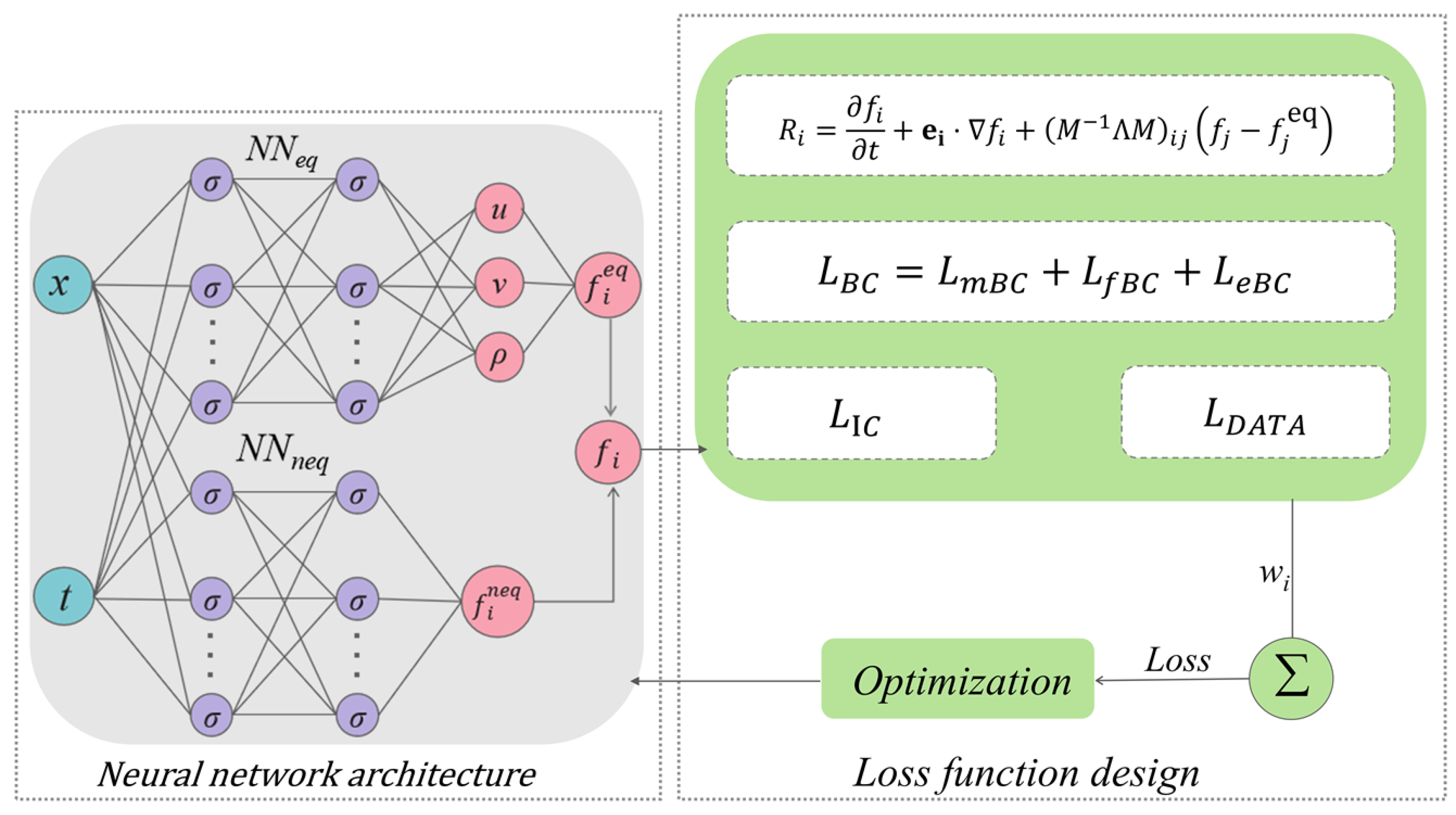

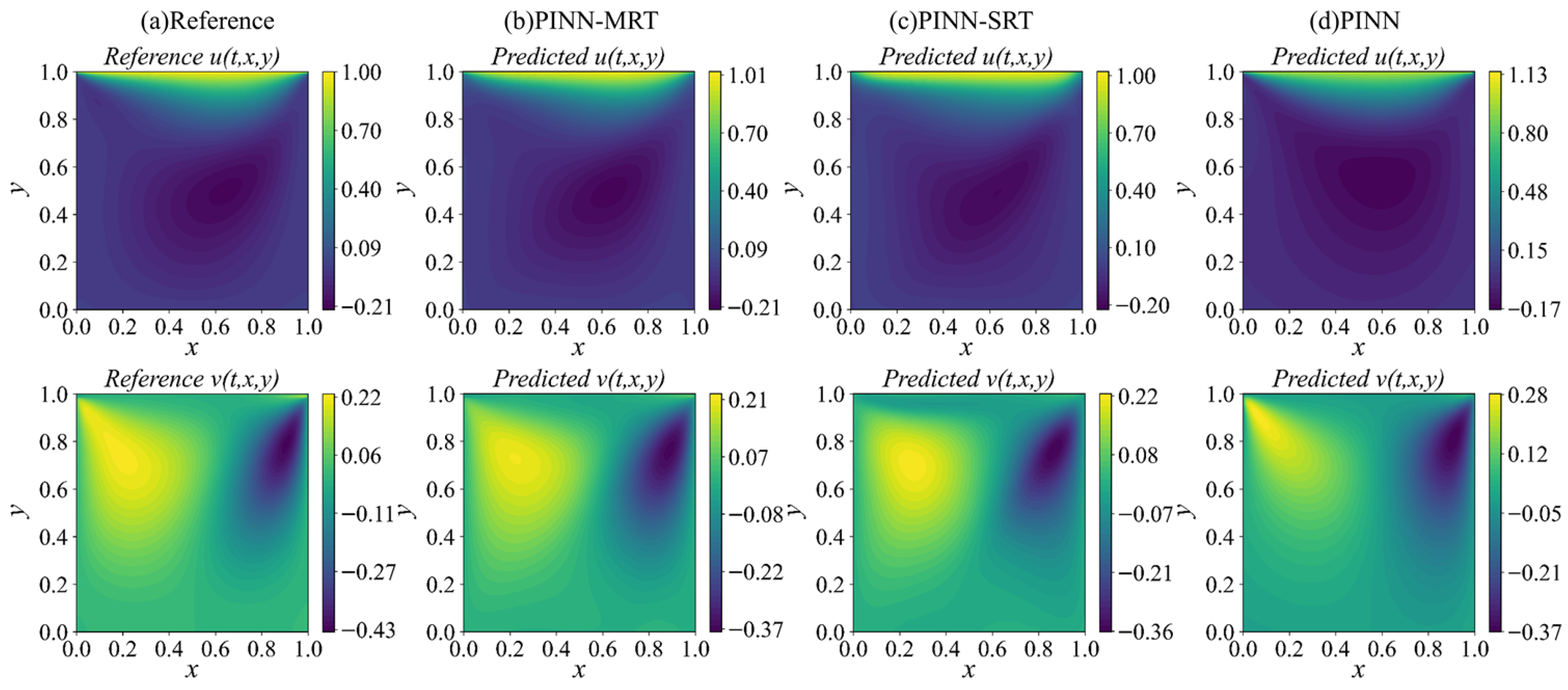

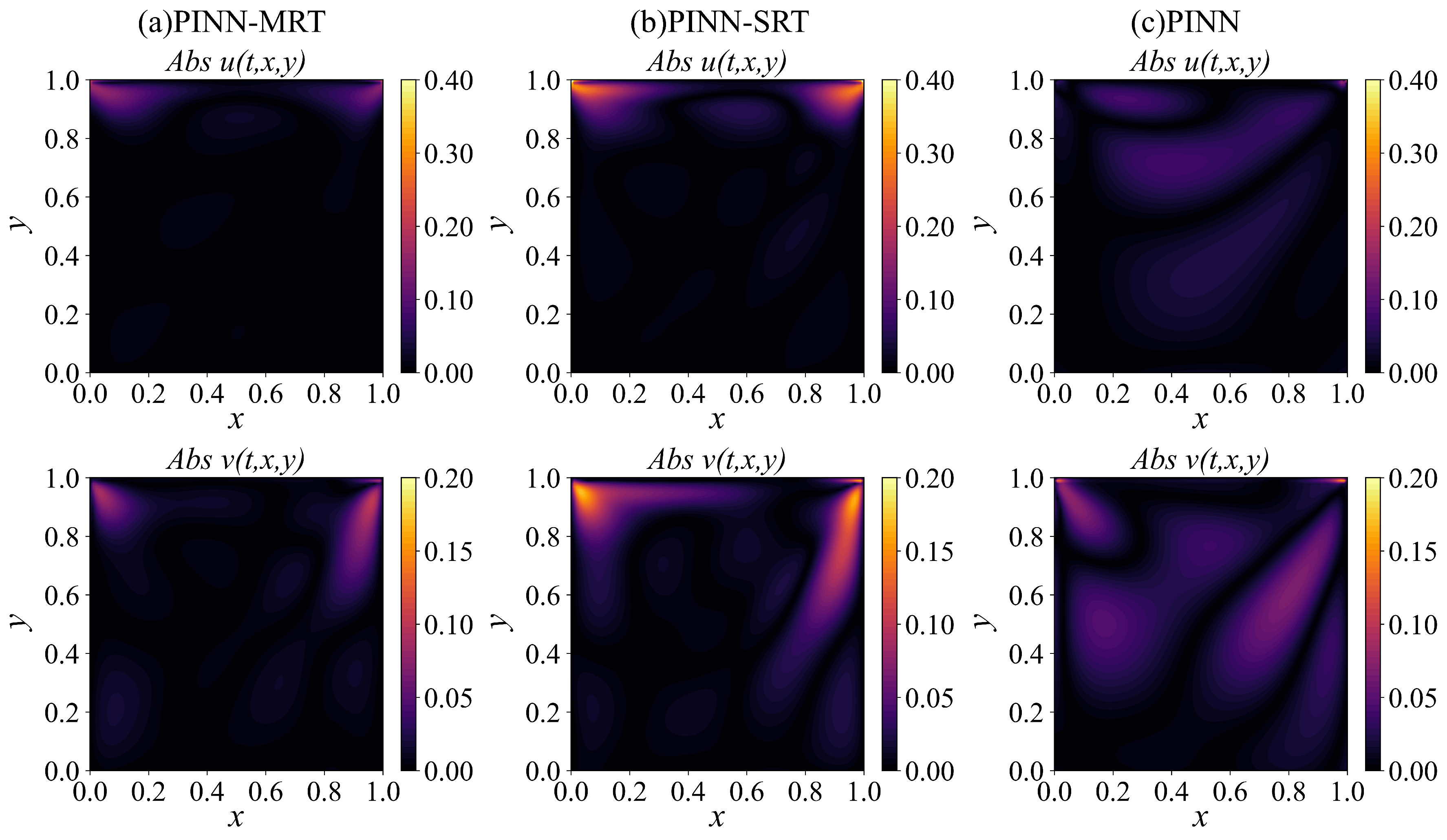

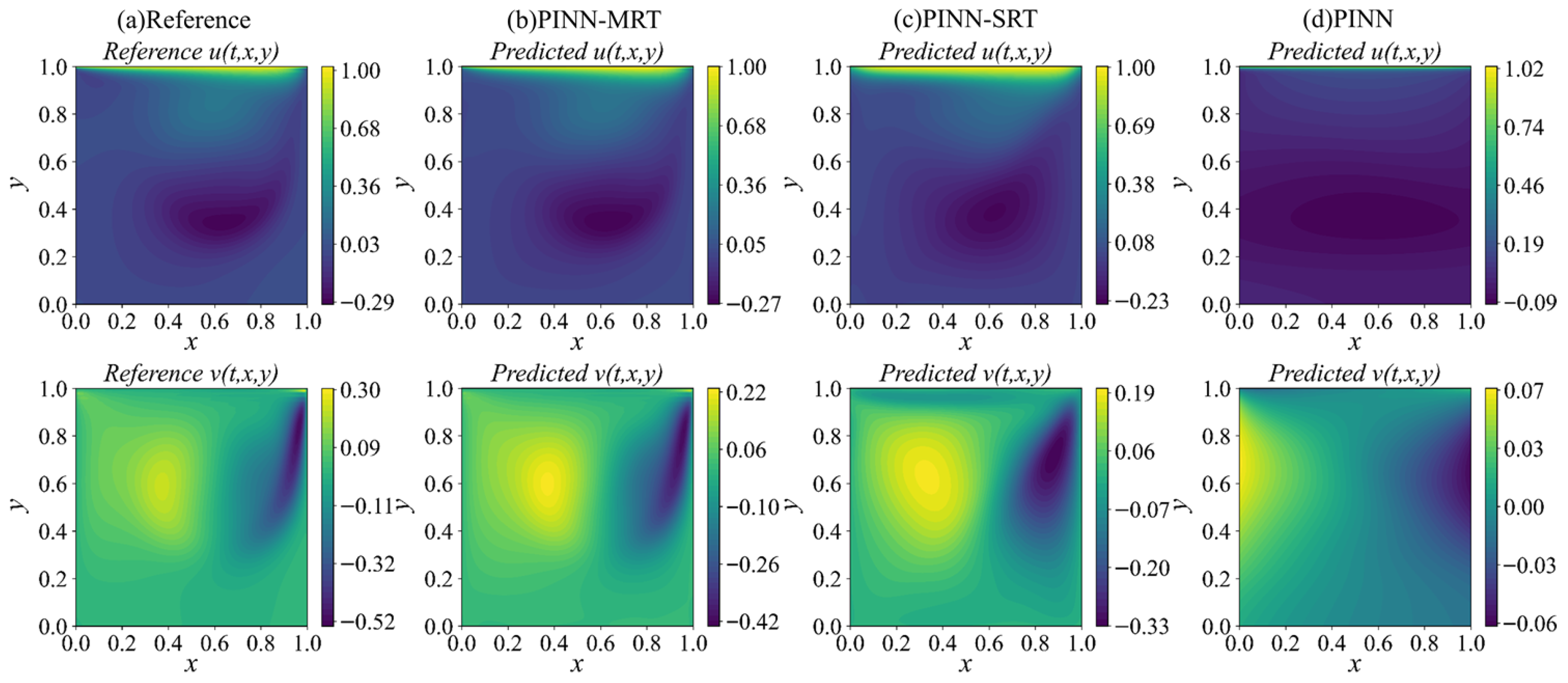

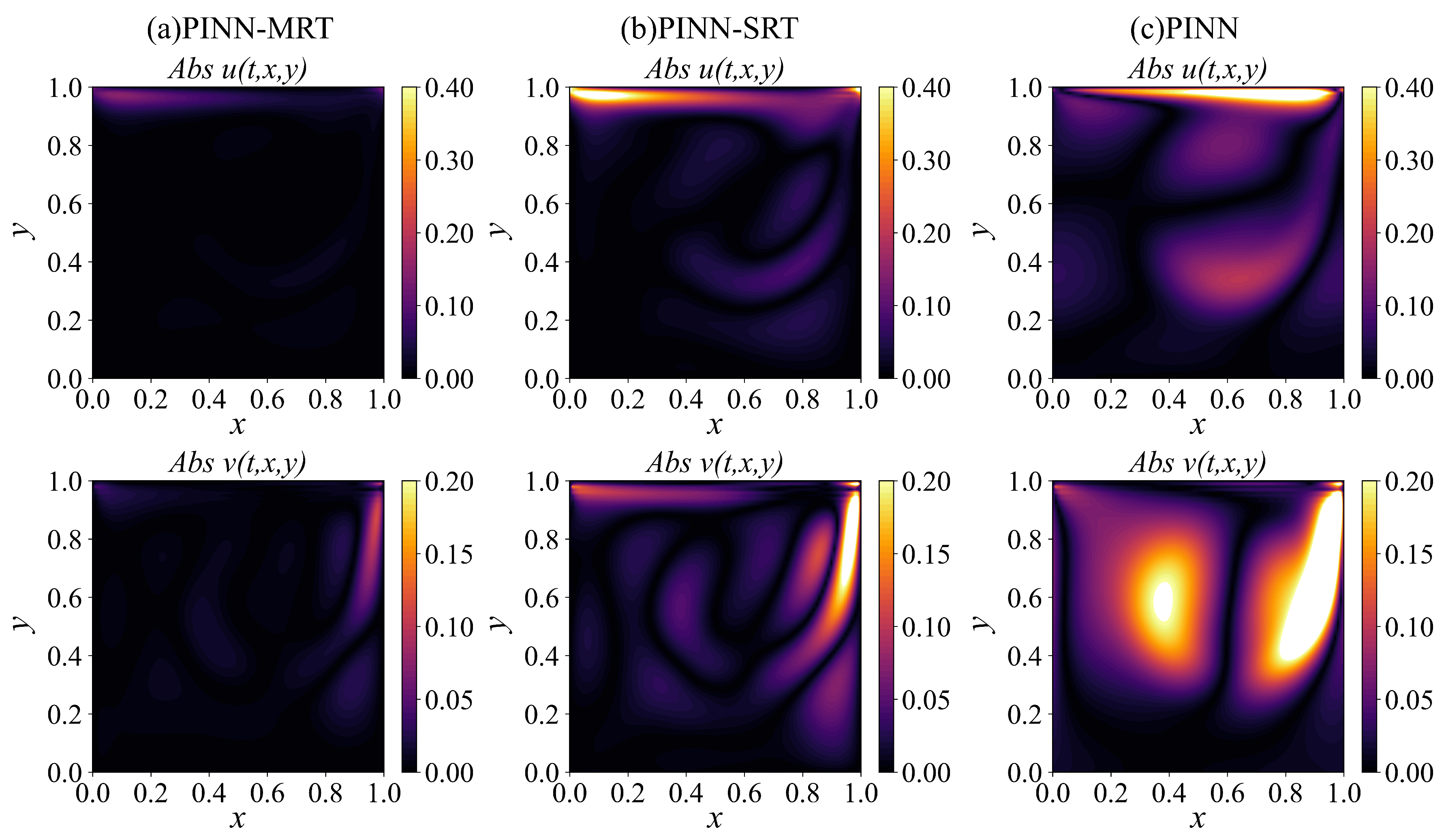

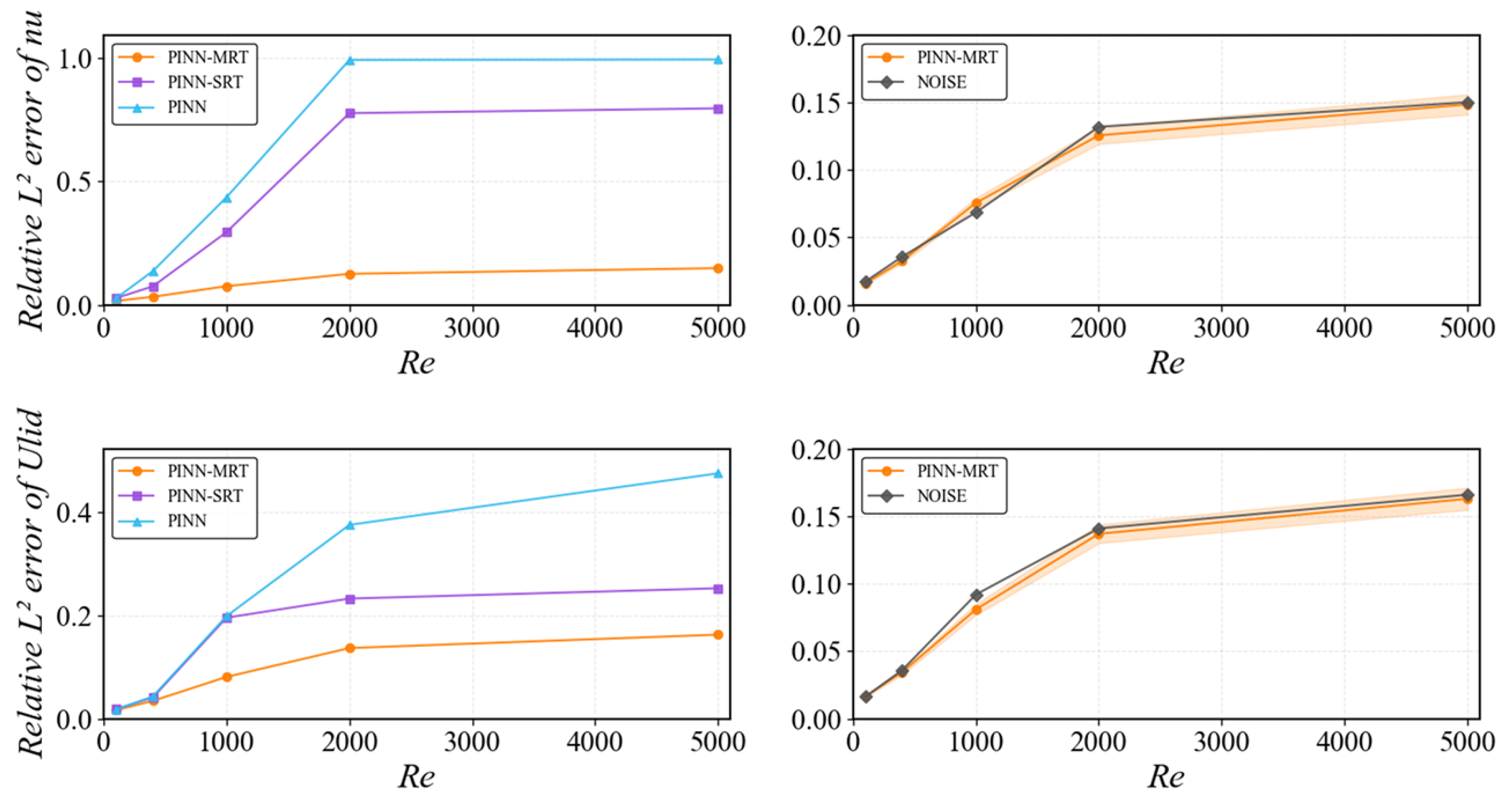

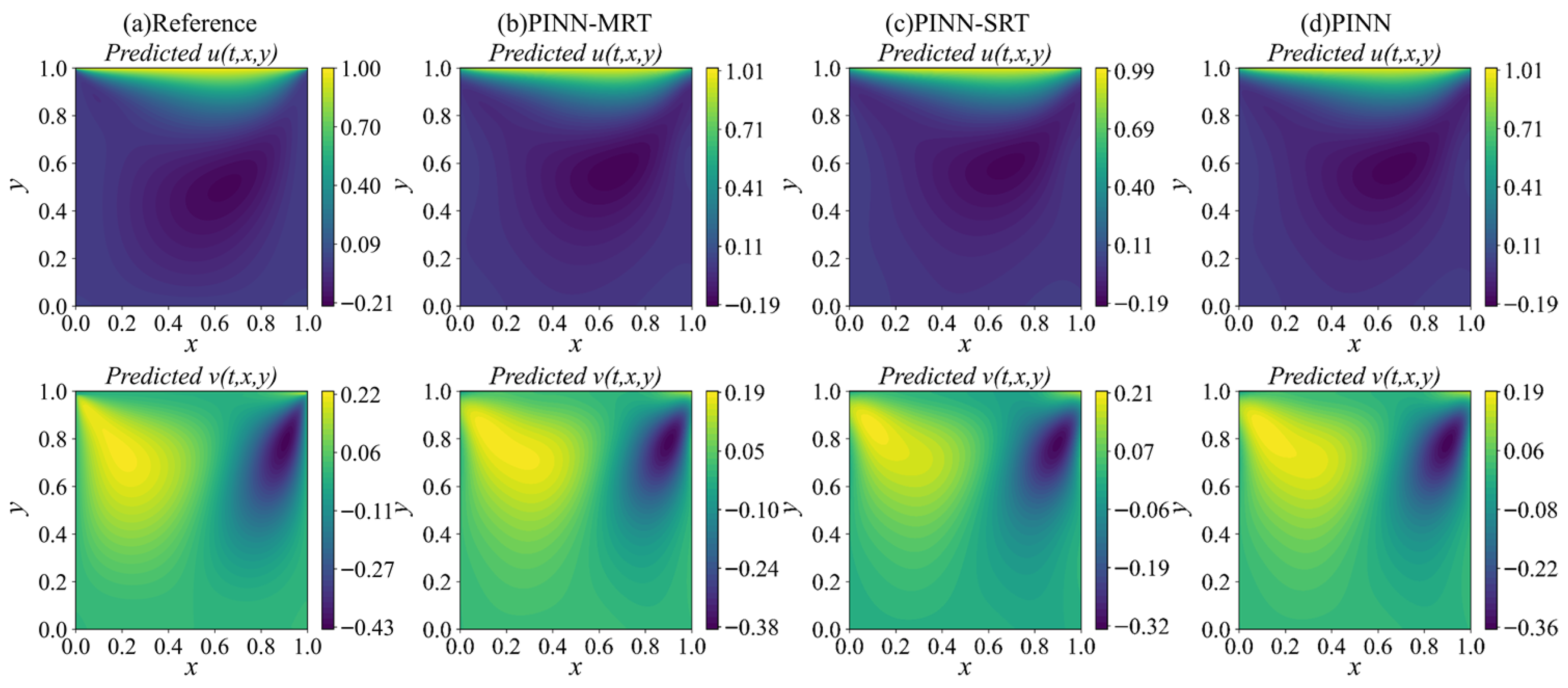

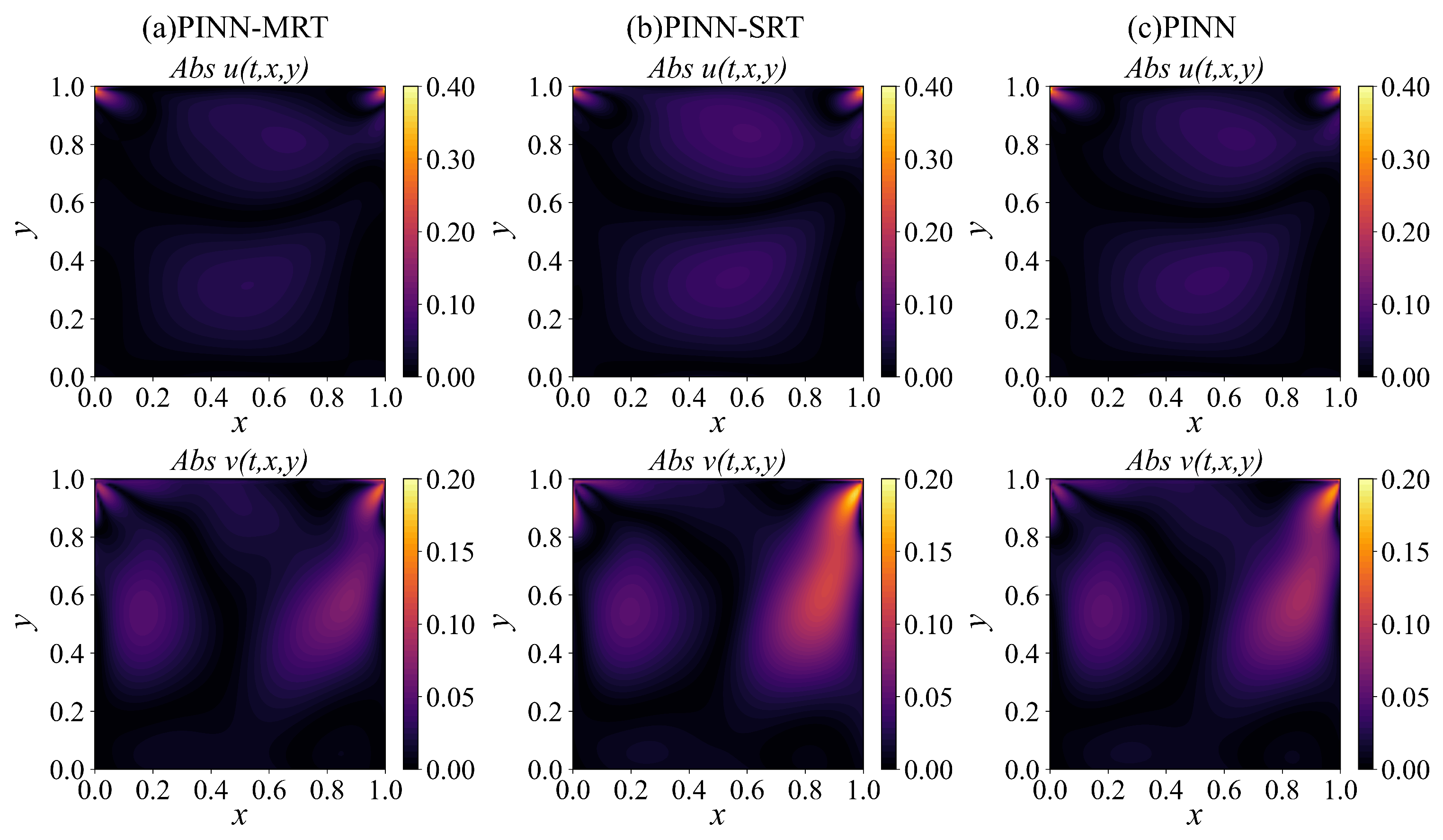

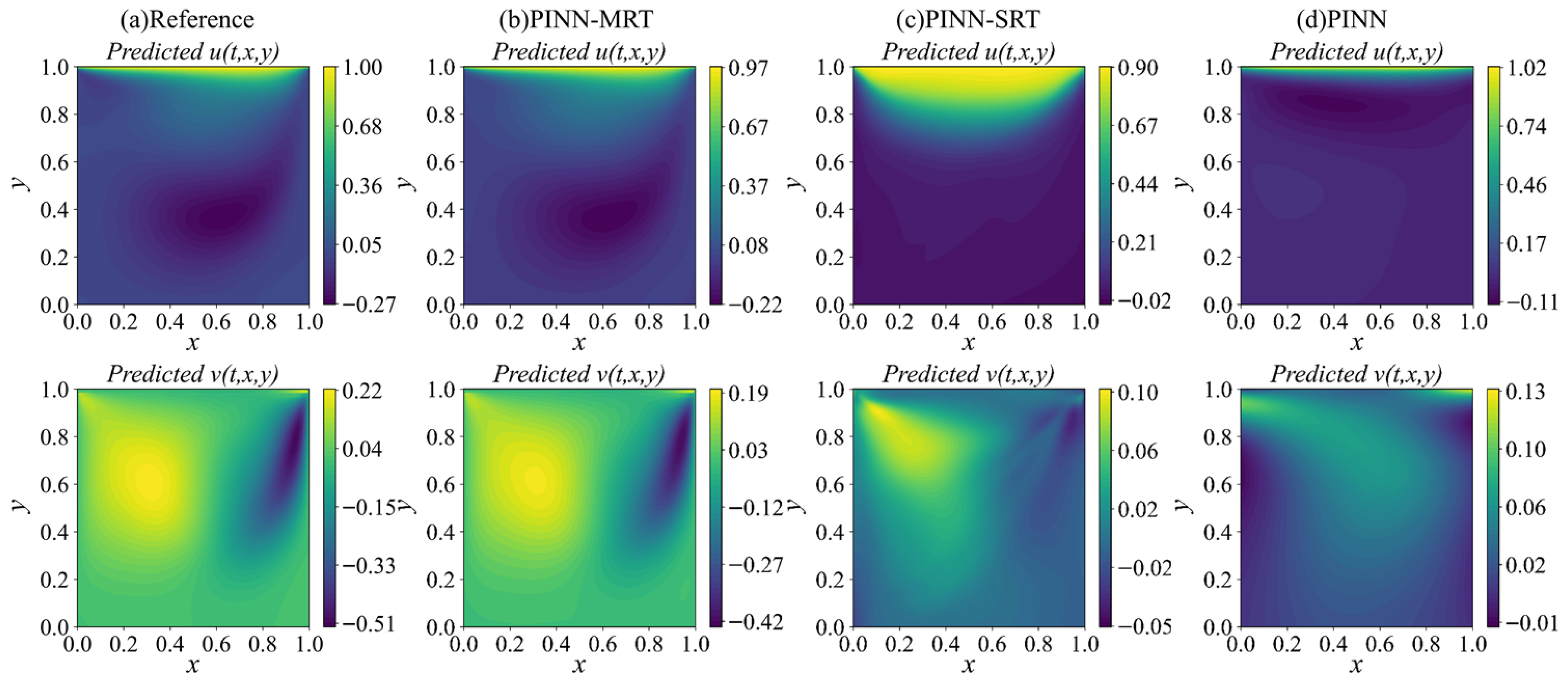

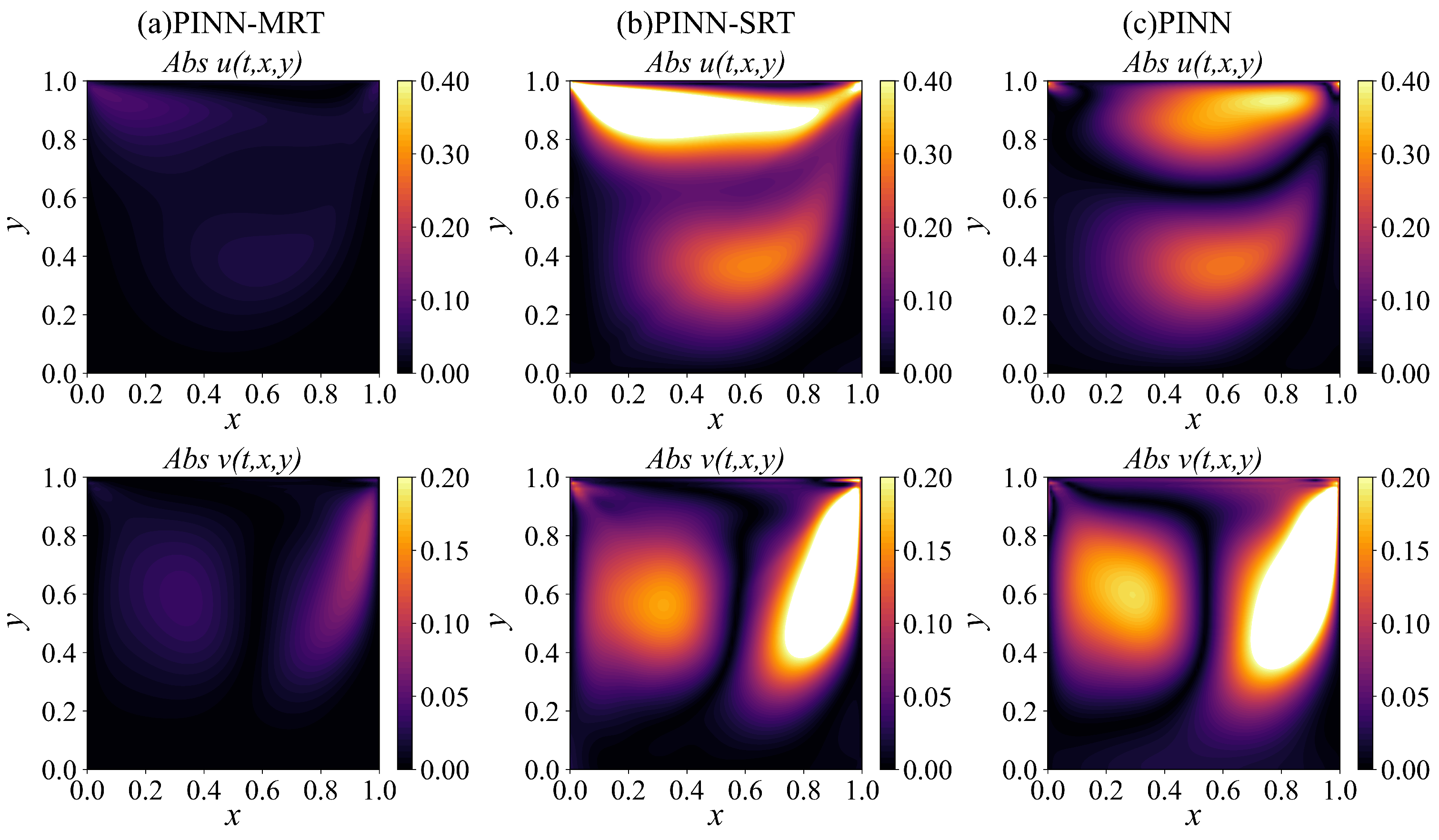

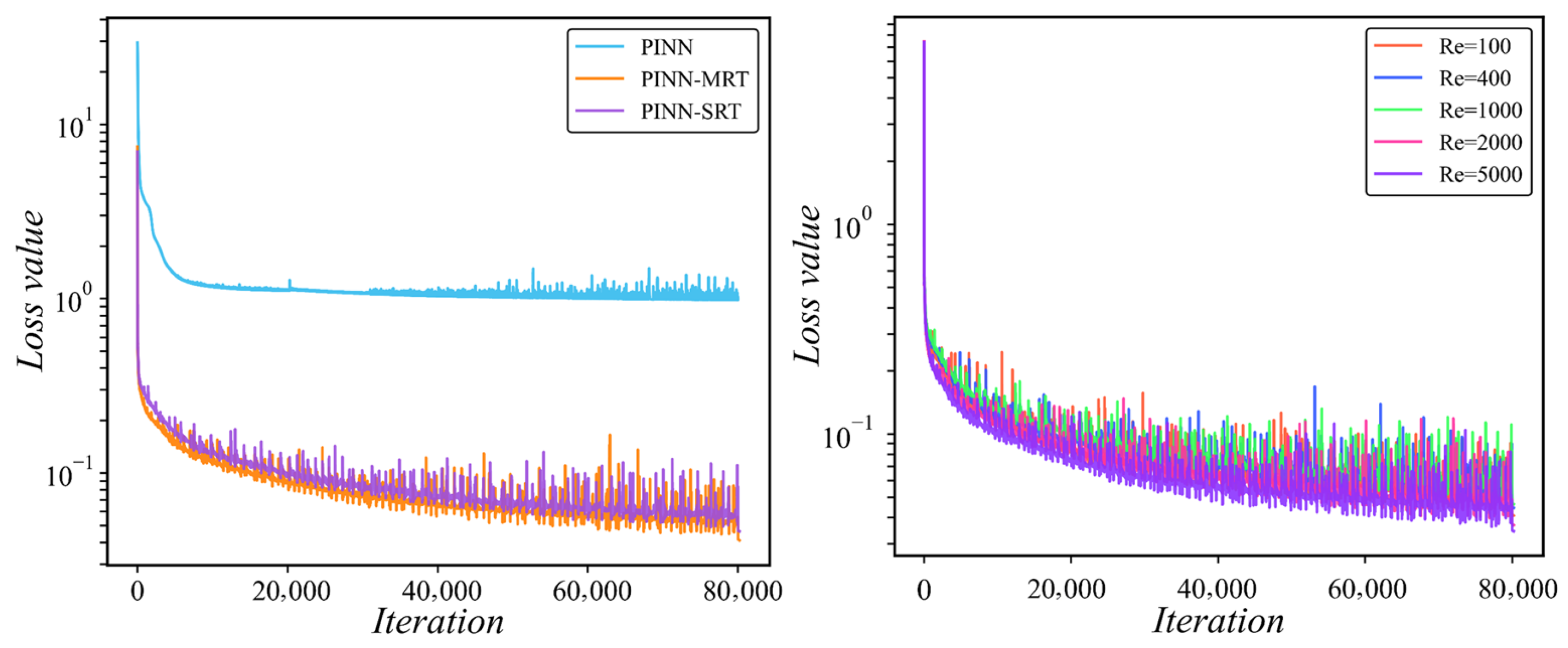

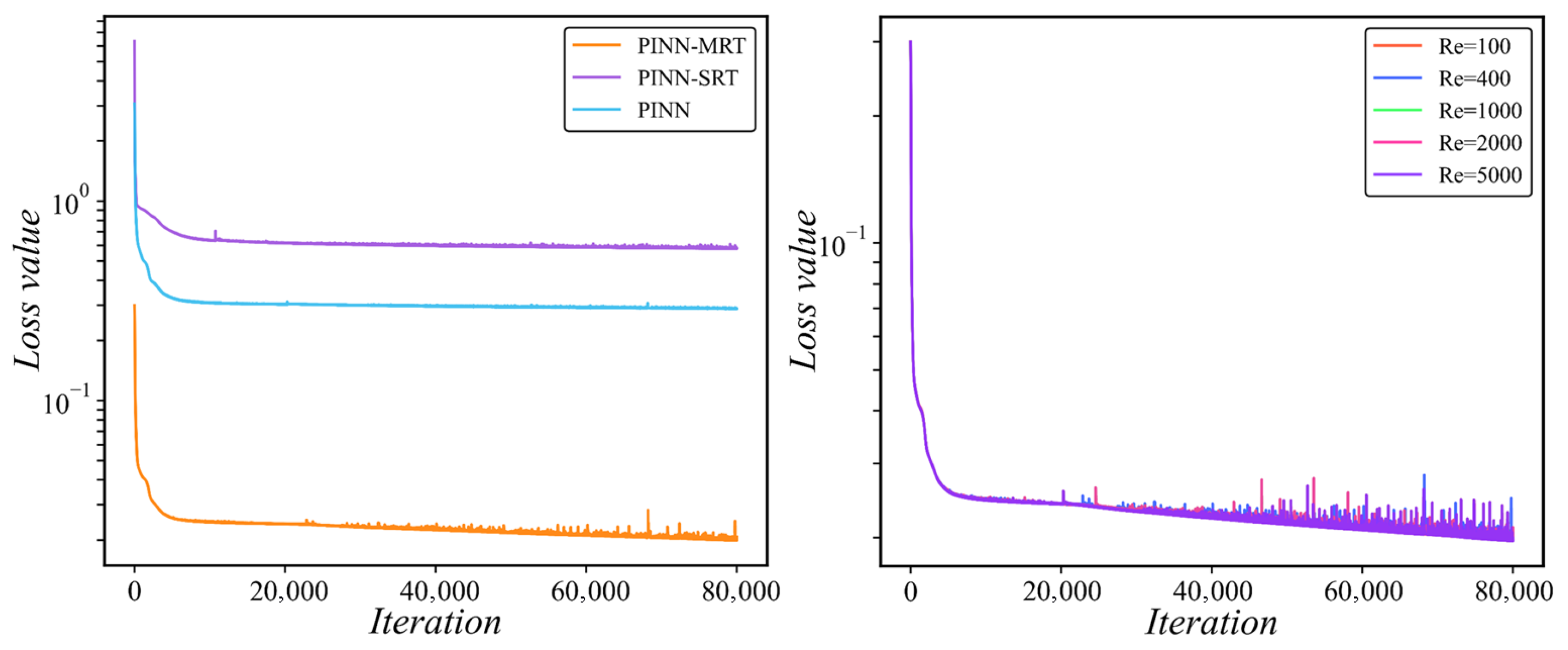

Building on this idea, we develop a dual-network PINN-MRT architecture that learns the macroscopic conserved variables and the non-equilibrium distribution functions with separate networks. The objective is to improve convergence stability and predictive accuracy in both forward and inverse problems while maintaining robustness for flows at moderate to high Reynolds numbers (Re). The proposed framework is validated on the two-dimensional lid-driven cavity flow benchmark over a Re range of , enabling a systematic comparison against PINN-SRT and baseline PINN models.
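The dual-network idea can be made concrete by showing how the outputs of the two networks might be recombined. The sketch below assumes the decomposition $f_i = f_i^{eq}(\rho, \mathbf{u}) + f_i^{neq}$ on the D2Q9 lattice, replaces the trained networks with hypothetical stand-ins (`macro_net`, `neq_net`), and adds one plausible consistency penalty requiring $f^{neq}$ to carry no mass or momentum; neither the names nor the penalty are taken from the paper.

```python
# Hypothetical sketch of the dual-network split f_i = f_i^eq(rho, u) + f_i^neq.
# `macro_net` and `neq_net` are stand-ins for the two trained networks.

E = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
     (1, 1), (-1, 1), (-1, -1), (1, -1)]
W = [4 / 9] + [1 / 9] * 4 + [1 / 36] * 4


def equilibrium(rho, ux, uy):
    usq = ux * ux + uy * uy
    return [w * rho * (1 + 3 * (ex * ux + ey * uy)
                       + 4.5 * (ex * ux + ey * uy) ** 2 - 1.5 * usq)
            for (ex, ey), w in zip(E, W)]


def macro_net(x, y):
    """Stand-in for the macroscopic network: returns (rho, u, v)."""
    return 1.0, 0.1 * y, 0.0


def neq_net(x, y):
    """Stand-in for the non-equilibrium network: nine f^neq values (stress modes only)."""
    a, b = 1e-3 * x, 5e-4 * y
    return [0.0, a, -a, a, -a, b, -b, b, -b]


def assemble_populations(x, y):
    """Recombine the two network outputs into full distribution functions."""
    rho, u, v = macro_net(x, y)
    fneq = neq_net(x, y)
    return [fe + fn for fe, fn in zip(equilibrium(rho, u, v), fneq)], fneq


def neq_moment_penalty(fneq):
    """Squared mass and momentum carried by f^neq; zero for a consistent split."""
    mass = sum(fneq)
    jx = sum(g * ex for g, (ex, _) in zip(fneq, E))
    jy = sum(g * ey for g, (_, ey) in zip(fneq, E))
    return mass ** 2 + jx ** 2 + jy ** 2


if __name__ == "__main__":
    f, fneq = assemble_populations(0.3, 0.7)
    print(sum(f), neq_moment_penalty(fneq))
```

Under this split, the density and velocity recovered from the assembled populations coincide with the macroscopic network's outputs whenever the penalty is zero.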

The remainder of this paper is organized as follows:

Section 2 presents the theoretical foundation and design of the proposed PINN-MRT architecture, which integrates dual networks and physics-informed loss functions.

Section 3 introduces the benchmark problem setup and model configurations.

Section 4 applies PINN-MRT to forward and inverse problems, evaluating predictive accuracy and parameter identification at different Reynolds numbers.

Finally, Section 5 summarizes the main findings and outlines future research directions.

3. Benchmark Modeling

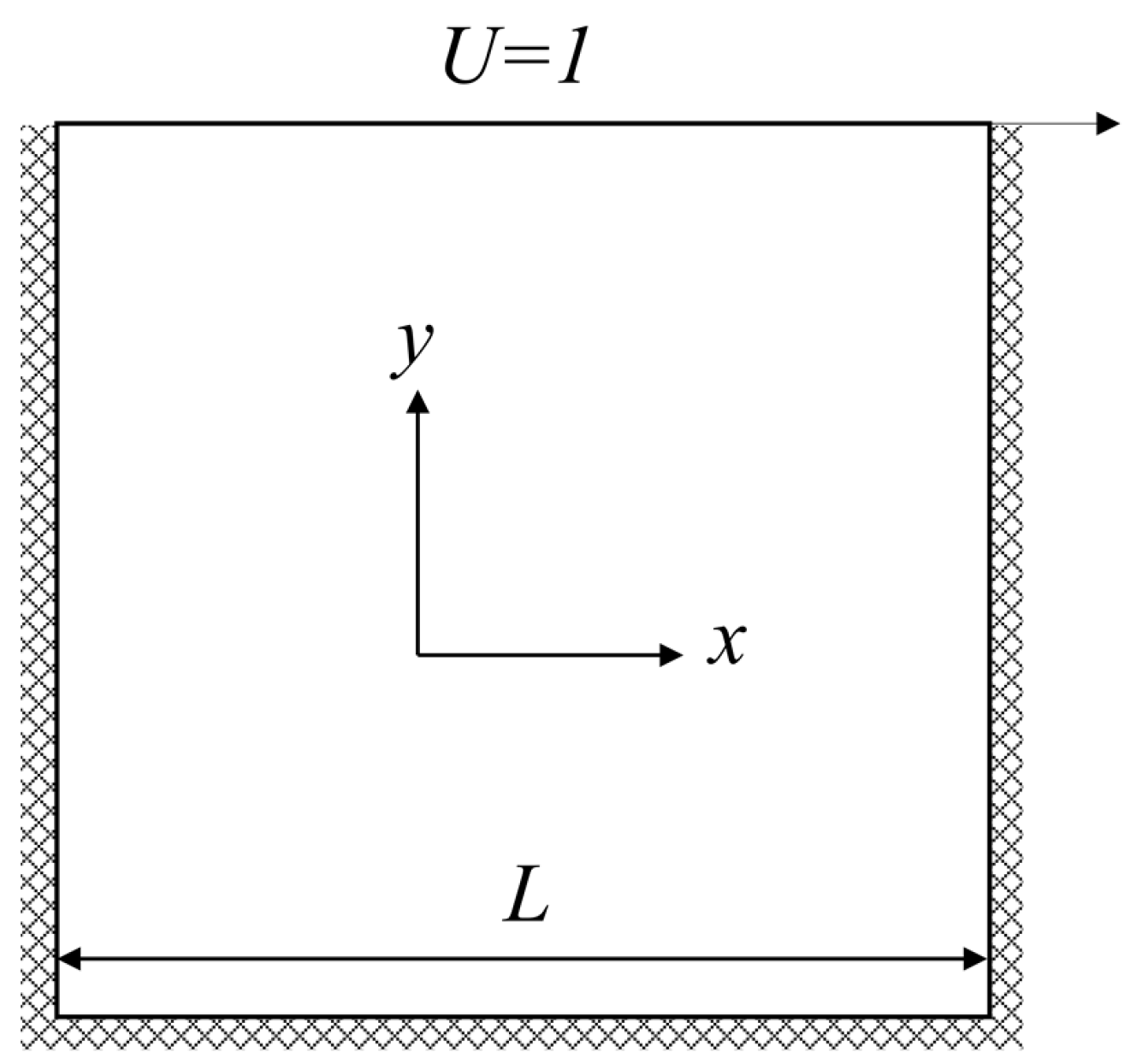

The classical lid-driven cavity flow is selected as the benchmark case to evaluate model performance. As shown in Figure 2, the computational domain is a unit square with spatial coordinates $(x, y) \in [0, 1] \times [0, 1]$. A constant velocity $(u, v) = (U, 0)$ is prescribed on the top boundary, and the remaining three walls are stationary with no-slip conditions. This setup induces a characteristic steady vortex structure that varies with Re, which makes it a widely adopted benchmark for assessing the predictive capability of both forward and inverse modeling approaches.
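The boundary conditions above translate directly into velocity targets for boundary points. The sketch below is a hypothetical illustration (the function names are not from the paper) of how those targets and a mean-squared boundary loss might be formed; in it the lid corners are assigned the lid velocity, since the treatment of the corner singularity is not stated here.

```python
# Lid-driven cavity wall targets and a boundary mean-squared-error term.
# Illustrative sketch; function names are hypothetical.


def wall_velocity_target(x, y, U, tol=1e-12):
    """(u, v) prescribed on the unit-square boundary: the lid at y = 1 moves with U."""
    if abs(y - 1.0) < tol:
        return U, 0.0   # moving lid
    return 0.0, 0.0     # stationary no-slip walls


def boundary_mse(predict, points, U):
    """Mean squared mismatch between predicted (u, v) and the wall targets."""
    total = 0.0
    for x, y in points:
        u_hat, v_hat = predict(x, y)
        u_t, v_t = wall_velocity_target(x, y, U)
        total += (u_hat - u_t) ** 2 + (v_hat - v_t) ** 2
    return total / len(points)


if __name__ == "__main__":
    pts = [(0.5, 1.0), (0.5, 0.0), (0.0, 0.5), (1.0, 0.5)]  # one point per wall
    def resting(x, y):
        return 0.0, 0.0  # a model predicting fluid at rest everywhere
    print(boundary_mse(resting, pts, U=1.0))  # 0.25: only the lid point contributes
```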

To enable a systematic comparison of different levels of physical embedding in physics-informed neural networks, three formulations are considered:

Standard PINN: Enforces the macroscopic incompressible N–S equations directly as PDE residuals.

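PINN-SRT: Incorporates the SRT approximation, in which the relaxation matrix in the LBE is a scalar multiple of the identity (a single relaxation time shared by all moments).

PINN-MRT: Employs the MRT collision model, using a diagonal relaxation matrix derived from MRT theory whose entries assign distinct relaxation rates to different kinetic moments, to better capture kinetic effects.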
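The practical difference between the PINN-SRT and PINN-MRT residuals lies in the collision term. The sketch below assumes a steady discrete-velocity Boltzmann form $\mathbf{e}_i \cdot \nabla f_i = \Omega_i$ on the D2Q9 lattice with unit lattice speed, which may differ in scaling from the LBE residual defined in Section 2; central finite differences stand in for automatic differentiation, and all function names are hypothetical. SRT uses $\Omega = -\tau^{-1} f^{neq}$, while MRT uses $\Omega = -\mathbf{M}^{-1}\mathbf{S}\mathbf{M} f^{neq}$ with a diagonal $\mathbf{S}$ (the moment basis is again assumed to be that of Lallemand and Luo).

```python
# Hypothetical sketch: steady kinetic residuals r_i = e_i . grad f_i - Omega_i
# for SRT and MRT collision operators on the D2Q9 lattice.
import math

E = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
     (1, 1), (-1, 1), (-1, -1), (1, -1)]


def moment_matrix():
    """Orthogonal rows: rho, e, eps, j_x, q_x, j_y, q_y, p_xx, p_xy."""
    rows = [[] for _ in range(9)]
    for ex, ey in E:
        c2 = ex * ex + ey * ey
        vals = [1, -4 + 3 * c2, 4 - 10.5 * c2 + 4.5 * c2 * c2,
                ex, (-5 + 3 * c2) * ex, ey, (-5 + 3 * c2) * ey,
                ex * ex - ey * ey, ex * ey]
        for row, val in zip(rows, vals):
            row.append(val)
    return rows


M = moment_matrix()
NORM2 = [sum(v * v for v in row) for row in M]
M_INV = [[M[k][i] / NORM2[k] for k in range(9)] for i in range(9)]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def omega_srt(fneq, tau):
    """SRT: scalar relaxation matrix S = (1/tau) I."""
    return [-g / tau for g in fneq]


def omega_mrt(fneq, rates):
    """MRT: Omega = -M^{-1} diag(rates) M f^neq."""
    dm = [s * m for s, m in zip(rates, matvec(M, fneq))]
    return [-v for v in matvec(M_INV, dm)]


def kinetic_residual(f_field, feq_field, omega, x, y, h=1e-5):
    """r_i at (x, y); central differences stand in for automatic differentiation."""
    f, feq = f_field(x, y), feq_field(x, y)
    om = omega([a - b for a, b in zip(f, feq)])
    fxp, fxm = f_field(x + h, y), f_field(x - h, y)
    fyp, fym = f_field(x, y + h), f_field(x, y - h)
    return [ex * (fxp[i] - fxm[i]) / (2 * h) + ey * (fyp[i] - fym[i]) / (2 * h) - om[i]
            for i, (ex, ey) in enumerate(E)]


if __name__ == "__main__":
    # Arbitrary smooth stand-in fields, only to exercise the functions
    def f_field(x, y):
        return [0.1 + 0.01 * math.sin(x + 0.3 * i * y) for i in range(9)]

    def feq_field(x, y):
        return [0.1 + 0.005 * math.cos(x - y + i) for i in range(9)]

    rates = [0.0, 1.64, 1.54, 0.0, 1.9, 0.0, 1.9, 1.4, 1.4]  # illustrative
    print(kinetic_residual(f_field, feq_field, lambda g: omega_mrt(g, rates), 0.3, 0.6))
```

With all MRT rates equal to $1/\tau$ the two residuals coincide; distinct rates only change how strongly each non-conserved moment of $f^{neq}$ enters the loss.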

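The macroscopic dynamics are governed by the steady incompressible N–S equations [30]:

$$
\frac{\partial u}{\partial x} + \frac{\partial v}{\partial y} = 0,
$$

$$
u\frac{\partial u}{\partial x} + v\frac{\partial u}{\partial y} = -\frac{\partial p}{\partial x} + \nu\left(\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2}\right),
$$

$$
u\frac{\partial v}{\partial x} + v\frac{\partial v}{\partial y} = -\frac{\partial p}{\partial y} + \nu\left(\frac{\partial^2 v}{\partial x^2} + \frac{\partial^2 v}{\partial y^2}\right),
$$

where $u$ and $v$ denote the velocity components in the $x$ and $y$ directions, respectively, $p$ is the pressure, and $\nu$ represents the kinematic viscosity, which can be expressed in terms of Re as

$$
\nu = \frac{UL}{\mathrm{Re}},
$$

where $U$ is the lid velocity and $L$ is the characteristic length (i.e., the cavity side length).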
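The residual that the standard PINN drives to zero follows directly from the equations above. The pure-Python sketch below evaluates the three residuals at a point, with central finite differences standing in for automatic differentiation and unit density assumed; the helper names are hypothetical. As a sanity check it uses Kovasznay's exact steady N–S solution (with $U = L = 1$, so $\nu = 1/\mathrm{Re}$), for which all residuals should vanish up to discretization error.

```python
import math


def viscosity_from_re(U, L, re):
    """Kinematic viscosity nu = U L / Re."""
    return U * L / re


def ns_residuals(u, v, p, x, y, nu, h=1e-4):
    """Steady incompressible N-S residuals (continuity, x-momentum, y-momentum).

    Central differences stand in for automatic differentiation; unit density assumed.
    """
    def dx(g):
        return (g(x + h, y) - g(x - h, y)) / (2 * h)

    def dy(g):
        return (g(x, y + h) - g(x, y - h)) / (2 * h)

    def lap(g):
        return (g(x + h, y) + g(x - h, y) + g(x, y + h) + g(x, y - h)
                - 4 * g(x, y)) / (h * h)

    uu, vv = u(x, y), v(x, y)
    r_c = dx(u) + dy(v)
    r_u = uu * dx(u) + vv * dy(u) + dx(p) - nu * lap(u)
    r_v = uu * dx(v) + vv * dy(v) + dy(p) - nu * lap(v)
    return r_c, r_u, r_v


# Kovasznay flow: exact steady N-S solution used only as a test field
RE = 20.0
NU = viscosity_from_re(1.0, 1.0, RE)
LAM = RE / 2 - math.sqrt(RE ** 2 / 4 + 4 * math.pi ** 2)


def u_k(x, y):
    return 1 - math.exp(LAM * x) * math.cos(2 * math.pi * y)


def v_k(x, y):
    return LAM / (2 * math.pi) * math.exp(LAM * x) * math.sin(2 * math.pi * y)


def p_k(x, y):
    return 0.5 * (1 - math.exp(2 * LAM * x))


if __name__ == "__main__":
    for x, y in [(0.25, 0.4), (0.6, 0.8)]:
        print(ns_residuals(u_k, v_k, p_k, x, y, NU))  # all close to zero
```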

Within each problem type, all three models share the same network architecture and training configuration to ensure a fair comparison. The detailed configurations for the inverse problem are listed in Table 1, and Table 2 summarizes the corresponding configurations for the forward problem [10,14,31,32].

Notably, the treatment of the lid velocity differs between forward and inverse problems. In forward simulations, $U$ is imposed as a fixed boundary condition, and the model predicts the full velocity field. In contrast, for inverse problems, both $U$ and the kinematic viscosity $\nu$ are treated as unknown parameters to be inferred from sparse and potentially noisy observations.
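Because the momentum residual is linear in $\nu$, the way the physics loss pins down an unknown viscosity can be illustrated without a network. In the hypothetical sketch below, Kovasznay's exact solution (Re = 40, $U = L = 1$) plays the role of a flow field already fitted to data; the x-momentum residual is split as $r = A - \nu B$, and the $\nu$ minimizing $\sum r^2$ is computed in closed form. In the PINN itself, $\nu$ (and $U$) would instead be trainable parameters updated by Adam jointly with the network weights; this sketch shows only why the residual constrains $\nu$.

```python
import math
import random

RE_TRUE = 40.0  # Kovasznay flow with U = L = 1, so nu_true = 1 / RE_TRUE
LAM = RE_TRUE / 2 - math.sqrt(RE_TRUE ** 2 / 4 + 4 * math.pi ** 2)


def u(x, y):
    return 1 - math.exp(LAM * x) * math.cos(2 * math.pi * y)


def v(x, y):
    return LAM / (2 * math.pi) * math.exp(LAM * x) * math.sin(2 * math.pi * y)


def p(x, y):
    return 0.5 * (1 - math.exp(2 * LAM * x))


def residual_terms(x, y, h=1e-4):
    """Split the x-momentum residual as r = A - nu * B at (x, y).

    A = u u_x + v u_y + p_x (convection + pressure), B = Laplacian of u.
    Central differences stand in for automatic differentiation.
    """
    ux = (u(x + h, y) - u(x - h, y)) / (2 * h)
    uy = (u(x, y + h) - u(x, y - h)) / (2 * h)
    px = (p(x + h, y) - p(x - h, y)) / (2 * h)
    lap = (u(x + h, y) + u(x - h, y) + u(x, y + h) + u(x, y - h)
           - 4 * u(x, y)) / (h * h)
    return u(x, y) * ux + v(x, y) * uy + px, lap


def estimate_nu(points):
    """Closed-form minimiser of sum (A - nu B)^2: nu = sum(A B) / sum(B^2)."""
    num = den = 0.0
    for x, y in points:
        a, b = residual_terms(x, y)
        num += a * b
        den += b * b
    return num / den


if __name__ == "__main__":
    rng = random.Random(0)
    pts = [(rng.random(), rng.random()) for _ in range(1000)]
    print(estimate_nu(pts), 1 / RE_TRUE)
```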

The reference data for both problem types are generated using a high-resolution FDM solver. All spatial points used for collocation, boundary conditions, and observational data are sampled uniformly at random once at the start of training and remain unchanged throughout, i.e., a static sampling strategy; no adaptive refinement or iterative resampling is applied. A fixed random seed (2341) is used to ensure reproducibility across all experiments.
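The static sampling strategy amounts to drawing every point set once, before training, from a seeded generator. The sketch below uses Python's `random.Random` in place of PyTorch's generator (with PyTorch, `torch.manual_seed(2341)` would play the same role); the point counts follow Table 1, while the perimeter parameterization of the boundary points and the function name are assumptions.

```python
import random


def sample_static_points(n_colloc=20_000, n_bc=5_000, n_data=1_000, seed=2341):
    """Draw collocation, boundary, and data points once from a seeded generator."""
    rng = random.Random(seed)  # dedicated generator: same seed -> same point sets
    colloc = [(rng.random(), rng.random()) for _ in range(n_colloc)]
    boundary = []
    for _ in range(n_bc):
        t = 4.0 * rng.random()  # arc-length position on the unit-square perimeter
        side, s = int(t), t - int(t)
        boundary.append([(s, 0.0), (1.0, s), (1.0 - s, 1.0), (0.0, 1.0 - s)][side])
    data = [(rng.random(), rng.random()) for _ in range(n_data)]
    return colloc, boundary, data


if __name__ == "__main__":
    colloc, boundary, data = sample_static_points()
    print(len(colloc), len(boundary), len(data))  # 20000 5000 1000
```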

Spatial and temporal derivatives in the physics-informed loss are computed using automatic differentiation (AD) in PyTorch (version 2.7.1 with CUDA 11.8 support). Unlike finite difference schemes, AD evaluates the derivatives of the network outputs with respect to the input coordinates exactly (up to floating-point round-off), without discretization error. As a result, the loss function remains fully differentiable with respect to both the network parameters and physical parameters such as $U$ and $\nu$.
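The claim that AD avoids discretization error can be checked on a toy example. PyTorch uses reverse-mode AD; the self-contained sketch below instead uses forward-mode dual numbers, which give the same exact derivative along a single input direction. The two-input tanh network `tiny_net` and its weights are hypothetical stand-ins for a trained PINN.

```python
import math


class Dual:
    """Forward-mode AD value a + b*eps with eps**2 = 0; `der` carries the derivative."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    @staticmethod
    def lift(z):
        return z if isinstance(z, Dual) else Dual(z)

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual.lift(other)
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def tanh(z):
    """tanh with the chain rule d tanh(z) = (1 - tanh(z)**2) dz for Dual inputs."""
    if not isinstance(z, Dual):
        return math.tanh(z)
    t = math.tanh(z.val)
    return Dual(t, (1 - t * t) * z.der)


# Hypothetical 2-3-1 tanh network with fixed weights (stand-in for a trained PINN)
W1 = [(0.5, -1.2), (0.8, 0.3), (-0.7, 0.9)]
B1 = [0.1, -0.2, 0.05]
W2 = [1.5, -0.4, 0.6]


def tiny_net(x, y):
    out = 0.0
    for (wx, wy), b, w2 in zip(W1, B1, W2):
        out = out + w2 * tanh(wx * x + wy * y + b)
    return out


if __name__ == "__main__":
    x0, y0 = 0.3, -0.4
    d_ad = tiny_net(Dual(x0, 1.0), y0).der  # seed dx = 1 to get d/dx
    h = 1e-3
    d_fd = (tiny_net(x0 + h, y0) - tiny_net(x0 - h, y0)) / (2 * h)
    print(d_ad, d_fd)  # the FD estimate carries an O(h**2) truncation error
```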

Table 1. Configurations and training settings for the inverse problem.


| Category | Description |
|---|---|
| Hardware | NVIDIA RTX 4070 GPU (16 GB memory) |
| Framework | PyTorch |
| Batch size | 1024 |
| Network structure | 5-layer fully connected network (1 input, 3 hidden, 1 output) |
| Hidden neurons | 60 neurons per hidden layer |
| Activation function | Tanh |
| Trainable parameter | Both $U$ and $\nu$ are randomly initialized in |
| Loss function | |
| Data source | FDM |
| Data location | Random sampling |
| Sampling distribution | 20,000 collocation pts; 5000 boundary pts; 1000 data pts |
| Optimizer | Adam (80,000 iterations, initial LR , exponential decay) |

Table 2. Configurations and training settings for the forward problem.


| Category | Description |
|---|---|
| Hardware | NVIDIA RTX 4070 GPU (16 GB memory) |
| Framework | PyTorch |
| Batch size | 1024 |
| Network structure | 7-layer fully connected network (1 input, 5 hidden, 1 output) |
| Hidden neurons | 60 neurons per hidden layer in ; 100 in |
| Activation function | Tanh |
| Trainable parameter | None |
| Loss function | |
| Data source | None |
| Data location | None |
| Sampling distribution | 20,000 collocation pts; 5000 boundary pts |
| Optimizer | Adam (80,000 iterations, initial LR , exponential decay) |
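The settings in Tables 1 and 2 can be collected into a small configuration helper. In the hypothetical sketch below, the input dimension (two spatial coordinates) and the output dimension are assumptions, since the tables do not state them, and the initial learning rate and decay factor are placeholders because their values are not given here. In PyTorch, the per-iteration decay would correspond to `torch.optim.lr_scheduler.ExponentialLR`, assuming it is stepped once per iteration.

```python
from dataclasses import dataclass


@dataclass
class TrainConfig:
    """Settings mirroring Tables 1 and 2; dimensions and LR values are assumptions."""
    hidden_layers: int              # 3 (inverse) or 5 (forward)
    width: int                      # neurons per hidden layer
    n_in: int = 2                   # assumed inputs: (x, y)
    n_out: int = 3                  # assumed outputs, e.g. (rho, u, v); hypothetical
    iterations: int = 80_000
    batch_size: int = 1024
    lr0: float = 1e-3               # placeholder initial learning rate
    gamma: float = 0.01 ** (1 / 80_000)  # placeholder: lr decays 100x over training

    def param_count(self):
        """Weights plus biases of the fully connected tanh network."""
        sizes = [self.n_in] + [self.width] * self.hidden_layers + [self.n_out]
        return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))

    def lr_at(self, step):
        """Exponential decay: lr_k = lr0 * gamma**k."""
        return self.lr0 * self.gamma ** step


if __name__ == "__main__":
    inverse = TrainConfig(hidden_layers=3, width=60)
    print(inverse.param_count())  # 7683 with the assumed 2 inputs and 3 outputs
    print(inverse.lr_at(0), inverse.lr_at(80_000))
```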

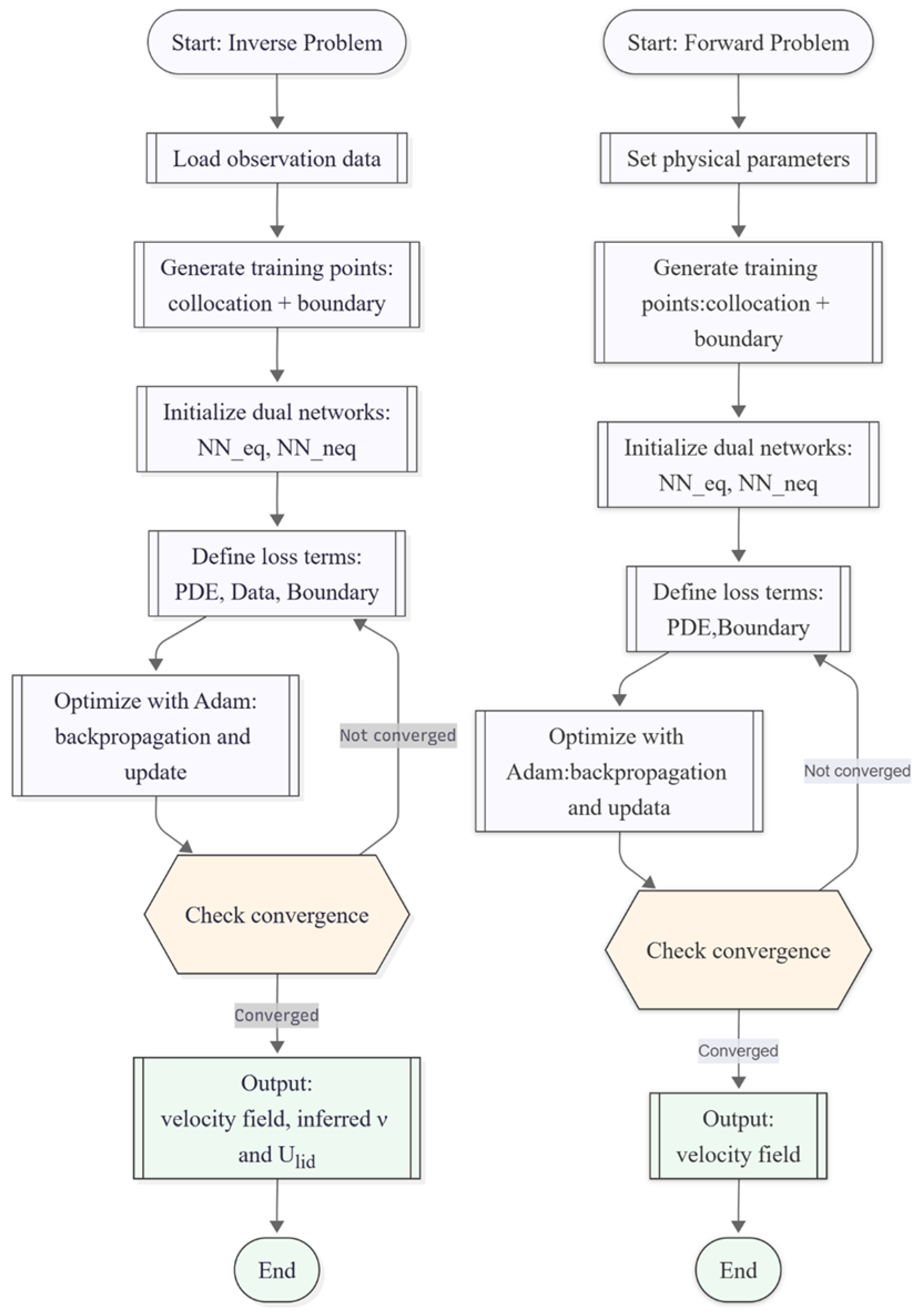

The complete training workflows for the inverse and forward problems, including data preparation, dual-network initialization, loss construction, and iterative optimization with the Adam optimizer, are illustrated in Figure 3.

5. Conclusions

In this study, we introduced PINN-MRT, a hybrid architecture that integrates the MRT-LBM into PINNs and demonstrated significantly enhanced stability and accuracy for CFD simulations, particularly in high-Re regimes. Our core finding is that the advantage of PINN-MRT stems from the ability of the MRT mechanism to improve the optimization landscape by decoupling the gradients of different physical modes, which mitigates the training difficulties inherent in standard PINN formulations. The proposed dual-network architecture further aids this process by separating the learning tasks for the equilibrium and non-equilibrium components. Although the current work is validated only on 2D steady flows with preset relaxation parameters, the robust performance observed there suggests considerable potential for broader application. From a fluid-mechanics standpoint, the same formulation can be naturally extended to unsteady flows by incorporating temporal derivatives into the residual equations, allowing the model to capture transient phenomena such as vortex shedding and oscillatory boundary layers. This extension mainly requires ensuring temporal stability and appropriate time-step consistency during rapid flow evolution. Furthermore, applying the method to three-dimensional flows would require resolving all three velocity components and handling secondary vortices and anisotropic shear effects; this would substantially increase the computational cost but remains physically compatible with the MRT framework.

Future research will focus on extending the PINN-MRT framework to more complex scenarios, including three-dimensional unsteady turbulent flows (e.g., within a large-eddy simulation (LES) framework), and on developing adaptive optimization strategies for the relaxation parameters. This approach offers a promising pathway for applying deep learning to multi-physics problems that remain challenging for conventional methods.

Despite the demonstrated advantages, several limitations of the present study should be acknowledged. First, the proposed PINN-MRT framework incurs a relatively high computational cost due to the dual-network structure and the additional mesoscopic constraints, and its scalability to large-scale or real-time simulations has not yet been systematically assessed. Second, a formal analysis of error propagation from the MRT relaxation parameters to the macroscopic PINN outputs remains to be developed, which would provide deeper insights into the sensitivity and robustness of the coupled learning process. Third, the current validation is limited to two-dimensional, single-phase steady flows, and the extension to three-dimensional or multiphase configurations requires further investigation. Finally, the present work employs fixed weighting coefficients in the composite loss function; introducing adaptive weighting schemes could further improve convergence efficiency and balance among competing physical constraints. Addressing these aspects constitutes an important direction for future research toward a more general, efficient, and physically consistent PINN-MRT framework.