1. Introduction

The explosive growth of digital content across e-commerce, music streaming, and media platforms has made recommender systems indispensable for reducing information overload and connecting users to relevant items. Classical collaborative filtering techniques, most prominently matrix factorization on static user–item matrices, captured stationary preference structures but failed to model the sequential and dynamic nature of user behavior, in which order, timing, and evolving context play decisive roles [1,2,3,4]. This limitation motivated the emergence of sequential recommendation, which reformulates a user’s history as an ordered sequence and aims to predict the next interaction conditioned on that sequence [5,6].

First-order Markov chains and Factorizing Personalized Markov Chains (FPMC) were designed to emphasize short-term transitions between consecutive actions and achieved efficiency and interpretability [1]. However, these models were inherently constrained in their ability to capture higher-order and long-range dependencies that arise in more complex user behaviors [3,6]. The introduction of deep learning brought a significant transformation. GRU4Rec applied recurrent architectures with ranking-oriented objectives, enabling the modeling of variable-length dependencies across user histories [5]. Caser proposed a convolutional perspective, representing recent items as temporal images and extracting local motifs with horizontal and vertical filters [7]. Transformer-based models, such as SASRec and BERT4Rec, advanced this line of research by employing self-attention mechanisms that capture global dependencies and contextual information without recurrence, thereby alleviating vanishing gradient issues while achieving robust performance in next-item prediction [8,9]. Beyond supervised settings, self-supervised and contrastive methods, such as S3-Rec, CL4SRec, and denoising autoencoder-based designs, further improved representation quality by mining auxiliary signals directly from user histories [10,11,12,13].

Despite these advances, most sequential architectures still treat all interactions as equally informative, failing to distinguish between stable, recurring user intents and transient preference shifts [6]. This weakness makes them less adaptive when users deviate from entrenched patterns. In response, intent-aware methods were developed to uncover latent motivations by incorporating relational inductive bias. For instance, SR-GNN represented sessions as item–item graphs and propagated contextual information to capture session-level goals [14]. LESSR addressed information loss during session graph construction [15], while STAR-GNN modeled long-distance relations with a star-topology design [16]. Other studies enriched intent reasoning with knowledge graphs, social influence diffusion, and disentangled representation learning, showing the breadth of possible intent-aware signals [17,18,19,20,21]. These methods significantly enriched recommendation with semantic structures but often assumed that intent remains static within short contexts, providing limited support for modeling how intents transition across sessions.

In parallel, shift-aware preference modeling emerged to address the temporal evolution of user behavior. TiSASRec introduced time-interval aware attention to reflect recency effects [22], while Time-LSTM modified recurrent gates with elapsed-time signals to better handle irregular gaps between interactions [23]. More recently, contrastive learning approaches such as CONTRec explicitly separated stable and evolving preference trajectories to improve adaptability under distributional shifts [24]. Other shift-aware designs include DIEN, which captures long-term interest evolution [20], and multi-expert or semantic-aware models that adapt to heterogeneous patterns [21,25]. Although these methods effectively capture temporal dynamics, they typically regard user histories as purely sequential signals and fail to exploit structural intent anchors that persist across sessions.

Graph-based models provide another complementary perspective by encoding higher-order co-occurrence structures among items. SR-GNN and GCE-GNN, for example, leverage graph-based representations to capture global context and co-intent patterns that are particularly valuable in sparse domains [14,15,18,26]. Recent reviews emphasize that graph-based recommender systems can provide both scalability and interpretability in real-world deployments [27]. However, most graph-based methods are constructed offline and treated as static entities, which limits their ability to adapt to rapidly changing preferences [28].

Many reported gains in the literature are measured with sampled negatives or permissive masking strategies that may hide look-ahead leakage or candidate-pruning bias. To ensure realistic assessment, we adopt a leakage-free, full-catalog ranking protocol that constructs prefixes strictly before the last target occurrence, masks PAD and prefix items (excluding the target), and ranks against the entire item universe. Item catalog denotes the full set of items available in the system. This protocol standardizes comparison across architectures and reveals when structural signals genuinely translate into ranking gains.
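The protocol described above can be sketched in a few lines. The snippet below is a minimal illustration rather than the released evaluation script: the function names, the `PAD = 0` convention, and the strict tie handling are assumptions made for the example.

```python
PAD = 0  # assumed padding id; real item ids are assumed to start at 1


def make_eval_example(history):
    """Split a chronologically ordered history into (prefix, target).

    The target is the final interaction, and the prefix holds everything
    strictly before it, so no future item can enter the model input.
    """
    return history[:-1], history[-1]


def full_catalog_rank(scores, prefix, target):
    """Return the 1-based rank of `target` among all catalog items.

    `scores` is a list indexed by item id (index 0 is PAD). PAD and items
    already seen in the prefix are masked, except the target itself.
    Ties are resolved in favor of the target (an assumption of this sketch).
    """
    masked = set(prefix) | {PAD}
    masked.discard(target)
    target_score = scores[target]
    better = sum(
        1
        for item, score in enumerate(scores)
        if item not in masked and item != target and score > target_score
    )
    return better + 1


prefix, target = make_eval_example([3, 1, 4, 1])
rank = full_catalog_rank([9.0, 0.5, 0.9, 0.8, 0.7, 0.2], prefix, target)
```

Because every unmasked catalog item competes with the target, the resulting rank cannot benefit from a favorable draw of sampled negatives.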

These complementary blind spots highlight the need for a unified framework that can capture both stable intent structures and dynamic preference shifts. To address this, we propose IntentGraphRec, a dual-level sequential recommendation framework that integrates graph-based co-intent modeling with shift-aware sequence modeling. The proposed method constructs a co-intent graph to capture global recurring patterns and combines it with a time- and position-aware Transformer encoder to emphasize local preference transitions. This design balances stability in the form of long-term structural intent with adaptability to short-term behavioral dynamics. Under the strict evaluation protocol above, our empirical results show that IntentGraphRec is competitive but does not always surpass strong Transformer baselines; nevertheless, the unified pipeline and diagnostics clarify when structure helps (e.g., sparse or structurally stable regimes) and when late fusion is dominated by sequence representations.

Experiments on the Gowalla dataset [8] and MovieLens-1M show that IntentGraphRec is competitive but does not surpass the strongest Transformer and session-graph baselines under the strict protocol; the unified pipeline clarifies where structural signals help and where late fusion is dominated by sequence representations. Domain-specific analyses further show that the graph component is particularly effective in sparse or structurally stable environments, while the shift-aware component performs best in volatile contexts.

2. Related Work

In this section, we review related literature in five main categories: (1) sequential recommendation models, (2) intent-aware modeling in sequences, (3) shift-aware preference transition methods, (4) graph-based approaches for capturing structural relationships among items, and (5) limitations of existing approaches.

2.1. Sequential Recommendation Models

Sequential recommenders predict the next interaction given the temporal order of prior items. FPMC binds Markov transitions with factorization to model short-term dynamics [1], while other classical baselines emphasize efficiency at the cost of long-term accuracy [3,6]. GRU4Rec demonstrated the effectiveness of recurrent networks for session-based recommendation [5]. Caser treated user histories as temporal images, extracting local patterns through convolutional filters [7]. SASRec replaced recurrence with self-attention to directly model long-range dependencies, while BERT4Rec adopted bidirectional pretraining to exploit both left and right contexts [8,9]. Self-supervised and contrastive approaches, such as S3-Rec, CL4SRec, and more recent surveys, highlight the role of auxiliary signals for robustness [10,11,13]. Although these models demonstrate strong performance, they generally assume that all interactions carry equal weight and lack mechanisms to distinguish persistent user interests from transient shifts.

2.2. Intent-Aware Modeling in Sequential Recommendations

Intent-aware models attempt to uncover the latent goals that drive user actions. SR-GNN models sessions as graphs and propagates information to capture local goals [14]. LESSR preserves sequential information during graph construction [15], while STAR-GNN integrates temporal and structural signals [16]. Complementary work has applied knowledge graphs, graph convolutional networks, and disentangled representations to refine intent [17,18,21]. These methods enrich recommendations with relational semantics, but they often treat intent as static and struggle to capture evolving transitions across sessions.

2.3. Shift-Aware Preference Transition Modeling

Shift-aware methods explicitly incorporate temporal information to model evolving preferences. TiSASRec accounts for time intervals and positions [22], Time-LSTM incorporates elapsed time into recurrent gates [23], and CONTRec leverages contrastive learning to decouple stable and drifting trajectories [24]. DIEN further demonstrates the effectiveness of interest evolution modeling in CTR prediction [27]. While these methods succeed in detecting changes, they frequently overlook persistent co-intent structures that can stabilize shifting preferences.

2.4. Graph-Based Structural Intent Modeling

Graph-based methods capture higher-order co-occurrence relationships that sequential encoders may overlook. SR-GNN pioneered session graphs [14], LESSR improved robustness by addressing information loss [15], and STAR-GNN highlighted long-distance dependencies [16]. GCE-GNN integrated session-level and global-level graphs for improved context modeling [26]. PinSage demonstrated that graph convolutional networks could operate at industrial scale [18], and subsequent reviews have emphasized the broad applicability of graph-based recommenders [27,28]. These approaches enrich representation with relational semantics but often rely on static construction, limiting their adaptability to fast-changing user behavior.

2.5. Motivation and Gap Analysis

Sequential recommendation methods excel at temporal modeling, intent-aware methods capture latent semantics, and shift-aware methods adapt to temporal changes, while graph-based models represent higher-order relationships. However, most existing methods address only one perspective in isolation. Intent-aware models fail to adapt when user goals change, while shift-aware models neglect structural anchors. Hybrid approaches often provide only partial integration, either treating graphs as static embeddings or incorporating temporal signals without relational context. These limitations motivate IntentGraphRec, which integrates co-intent graph reasoning with shift-aware sequence modeling to jointly capture stability and adaptability.

2.6. Limitations of Existing Approaches

Existing research often isolates intent modeling and shift modeling, focusing on one at the expense of the other. Intent-aware models capture semantic and structural relationships but fail to adapt when user goals change. Shift-aware models adapt to temporal changes but lack stable relational anchors, which are vital for understanding user behavior in recurring contexts. Hybrid approaches that integrate both aspects often fail to achieve true synergy, either treating graph embeddings as static or incorporating temporal modeling without structural awareness.

These limitations motivate the design of IntentGraphRec, which explicitly integrates graph-based co-intent modeling with shift-aware sequence modeling, enabling the system to capture both stability and adaptability in user preferences. Our study is further distinguished by a fair comparison of the aforementioned methods using full-catalog ranking, without sampled candidates and without future information leakage.

Our contributions can be summarized as follows:

Unified dual-level framework: We present a single architecture that jointly addresses the complementary limitations of intent-aware and shift-aware paradigms while remaining simple and modular.

Dynamic fusion mechanism: We design a learnable fusion gate that balances graph-anchored stability and temporal adaptability, enabling controllable interaction between structural and sequential signals.

Fair and reproducible evaluation: We conduct extensive experiments on multiple benchmarks under a leakage-free, full-catalog protocol and release code/scripts for transparent, apples-to-apples comparison.

Empirical diagnostics and guidance: We provide fine-grained analyses that clarify how stable intent structures and dynamic preference shifts interact, offering actionable guidance for future graph–sequence hybrids.

3. Proposed Approach

In this section, we present IntentGraphRec, a unified framework for sequential recommendation that jointly integrates structural co-intent reasoning and dynamic preference modeling. Unlike existing approaches that treat these two aspects in isolation, our model is explicitly designed to balance stability and adaptability, capturing both enduring user interests and short-term behavioral fluctuations. The framework is organized around two complementary components: (i) a Shift-Aware Sequential Encoder, which extends the Transformer architecture with temporal bias to model long-range dependencies while remaining sensitive to recency and irregular time intervals, and (ii) a Local Intent Graph Encoder, which constructs dynamic co-intent graphs over recent interactions to detect fine-grained patterns of short-term intent.

The outputs of these two modules are adaptively combined through a gating mechanism, yielding a fused representation that reflects both stable trajectories and transient shifts in user behavior. This unified vector is then used for next-item prediction via dot-product scoring against candidate embeddings. We train with full softmax (cross-entropy over all items) and rank over the entire catalog at inference; no candidate sampling is used in our experiments. The same full-catalog protocol is used for evaluation.

In the remainder of this section, we first introduce the sequential backbone encoder that captures long-term temporal evolution (Section 3.3), followed by the construction of the local co-intent graph and message passing mechanism for short-term intent reasoning (Section 3.4 and Section 3.5). We then describe the fusion module that adaptively balances the two branches (Section 3.6) and conclude with the scoring and training procedure (Section 3.7). Together, these components form a coherent architecture that effectively captures both global stability and local adaptability in sequential recommendation tasks. The proposed model is trained and evaluated under the leakage-free, full-catalog evaluation protocol presented in subsequent sections, and all metrics are computed using full-catalog rankings.

3.1. Problem Formulation

Let a user’s interaction history be denoted as $S = (i_1, i_2, \dots, i_n)$, where $i_j \in \mathcal{I}$ represents the $j$-th interacted item and $\mathcal{I}$ is the item set. The objective is to predict the next item $i_{n+1}$ given the sequence $S$. We consider implicit feedback, where the presence of an interaction implies positive preference.

The key challenge is to capture both long-term evolving preferences and short-term co-intent dependencies. Purely sequential encoders emphasize order but neglect relational co-intent, while graph-based models encode relations but adapt poorly to shifts in preference. IntentGraphRec is designed to combine these strengths into a unified framework.

3.2. Overall Framework

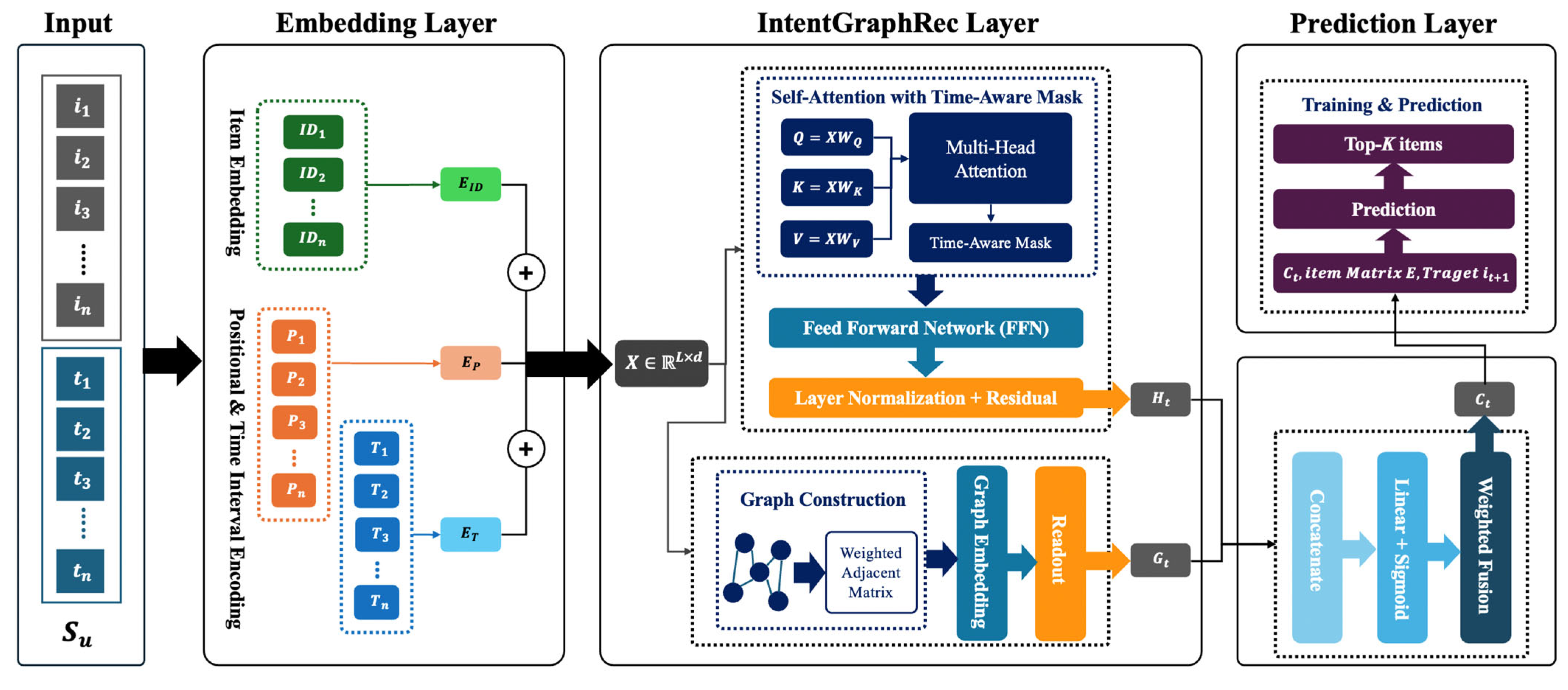

The overall architecture of IntentGraphRec is illustrated in Figure 1. The framework integrates temporal sequence modeling and structural co-intent reasoning into a unified dual-level recommendation model. It consists of four major modules: (1) Embedding Layer, (2) Shift-Aware Sequential Encoder, (3) Local Co-Intent Graph Encoder, and (4) Fusion and Prediction.

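Given a user’s historical interaction sequence $S = (i_1, \dots, i_n)$ with timestamps $(t_1, \dots, t_n)$, each item ID $i_j$ is first mapped into a dense embedding vector $\mathbf{e}_j \in \mathbb{R}^d$. To preserve order and recency, we augment item embeddings with positional encodings $\mathbf{p}_j$ and time-interval encodings $\boldsymbol{\tau}_j$, resulting in the final token representation:

$$\mathbf{x}_j = \mathbf{e}_j + \mathbf{p}_j + \boldsymbol{\tau}_j$$

The sequence $X = [\mathbf{x}_1, \dots, \mathbf{x}_n]$ serves as the input to both the sequential and graph branches.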

The upper branch of IntentGraphRec applies a Transformer-based encoder to capture long-range temporal dependencies. Each block consists of (i) multi-head self-attention augmented with a time-aware mask, which biases attention scores according to temporal intervals, and (ii) a feed-forward network with residual connections and normalization. Specifically, the time-aware mask is implemented as a temporal bias term that adjusts the raw attention score according to the time gap between items $j$ and $k$, as described in Equation (8). Attention flow is additionally restricted to temporally valid (non-future) positions, thereby preventing look-ahead leakage. After stacking multiple layers, the encoder outputs contextualized token representations $H = [\mathbf{h}_1, \dots, \mathbf{h}_n]$. The last hidden state $\mathbf{h}_n$ is taken as the sequence-level context embedding $\mathbf{h}_{\mathrm{seq}}$ that summarizes the user’s stable preference trajectory.

In parallel, the lower branch constructs a dynamic co-intent graph from the most recent $w$ items. Nodes correspond to item embeddings, while edges are weighted by temporal proximity, so that items interacted with closer together are connected more strongly (Section 3.4).

This graph is encoded using graph message passing, where each node aggregates features from its neighbors via weighted adjacency. A readout function (e.g., mean or attention pooling) is then applied to obtain the graph representation $\mathbf{h}_{\mathrm{graph}}$, which captures local co-intent structures within the short-term context.

To balance stability from the sequential encoder and adaptability from the graph encoder, we employ a gating mechanism. The two vectors are concatenated and passed through a linear–sigmoid transformation to compute gate values:

$$\mathbf{g} = \sigma\left(W_g\,[\mathbf{h}_{\mathrm{seq}}; \mathbf{h}_{\mathrm{graph}}] + \mathbf{b}_g\right)$$

The fused representation is then obtained as:

$$\mathbf{h} = \mathbf{g} \odot \mathbf{h}_{\mathrm{seq}} + (1 - \mathbf{g}) \odot \mathbf{h}_{\mathrm{graph}}$$

Finally, prediction is made by computing dot products between $\mathbf{h}$ and the item embeddings $E$, followed by a softmax to estimate the probability of the next item. During training, we use full softmax (cross-entropy over all items) without negative sampling. During inference, rankings are computed over the entire item catalog.

3.3. Sequential Backbone Encoder (Shift-Aware Transformer)

The sequential encoder in IntentGraphRec is designed to capture long-term user preference trajectories by extending the Transformer framework with temporal awareness. This component models the entire interaction history as a sequence of contextualized embeddings, where each position reflects both semantic identity and temporal dynamics. Formally, the input sequence is expressed as

$$X = [\mathbf{x}_1, \dots, \mathbf{x}_n], \qquad \mathbf{x}_j = \mathbf{e}_j + \mathbf{p}_j + \boldsymbol{\tau}_j$$

Here, $\mathbf{e}_j$ denotes the item embedding, $\mathbf{p}_j$ encodes absolute position within the sequence, and $\boldsymbol{\tau}_j$ represents a learned embedding of the elapsed time since the previous interaction. This formulation ensures that the model not only respects the order of events but also recognizes the irregular spacing of user interactions, which is crucial in domains such as e-commerce and music listening where temporal gaps carry semantic weight.
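As a rough illustration of how such token inputs could be assembled, the sketch below sums an item embedding, a positional embedding, and a bucketed time-interval embedding. The additive combination, the log-scale bucketing, and all names (`time_bucket`, `token_inputs`, the embedding tables) are assumptions made for this example and are not taken from the released code.

```python
import math


def time_bucket(gap, num_buckets):
    """Map an elapsed-time gap to a bucket index on a log scale (assumed scheme)."""
    return min(int(math.log2(1 + max(gap, 0))), num_buckets - 1)


def token_inputs(item_ids, timestamps, item_emb, pos_emb, time_emb):
    """Build one input vector per position as item + position + time-gap embedding.

    item_emb maps item id -> vector; pos_emb and time_emb are lists of vectors
    indexed by position and by time bucket, respectively.
    """
    inputs = []
    for j, item in enumerate(item_ids):
        # The first position has no predecessor, so its gap is taken as zero.
        gap = timestamps[j] - timestamps[j - 1] if j > 0 else 0
        bucket = time_bucket(gap, len(time_emb))
        inputs.append(
            [e + p + t for e, p, t in zip(item_emb[item], pos_emb[j], time_emb[bucket])]
        )
    return inputs
```

Bucketing keeps the number of time embeddings small while still distinguishing short gaps from long ones; a continuous mapping would be an equally valid choice.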

Each Transformer block begins by computing queries, keys, and values as linear projections:

$$Q = XW^Q, \qquad K = XW^K, \qquad V = XW^V$$

with $W^Q, W^K, W^V \in \mathbb{R}^{d \times d}$. Scaled dot-product attention is then applied, where the raw compatibility score between positions $j$ and $k$ is

$$s_{jk} = \frac{\mathbf{q}_j^{\top} \mathbf{k}_k}{\sqrt{d}}$$

Unlike standard Transformers, IntentGraphRec adjusts these scores with a temporal bias:

$$\tilde{s}_{jk} = s_{jk} + \phi\left(|t_j - t_k|\right) \tag{8}$$

where $\phi(\cdot)$ denotes a learnable time-decay mapping function used to bias attention scores based on the elapsed time between interactions $j$ and $k$; it may be implemented as a logarithmic decay, a learnable bucket embedding, or a small neural module. This bias favors recent interactions while still allowing influence from older but semantically relevant items. Normalized attention weights are computed as

$$\alpha_{jk} = \frac{\exp(\tilde{s}_{jk})}{\sum_{k' \le j} \exp(\tilde{s}_{jk'})}$$

The context-sensitive representation for each position is then obtained as $\mathbf{z}_j = \sum_{k \le j} \alpha_{jk} \mathbf{v}_k$, which aggregates information from temporally modulated neighbors.
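The attention computation described above can be illustrated with a small single-head sketch in plain Python. It assumes an additive bias with a logarithmic decay (one of the options mentioned in the text) and a causal restriction to positions at or before the query; the function names and the `scale` parameter are hypothetical.

```python
import math


def time_bias(gap, scale=1.0):
    """Assumed logarithmic decay: a larger time gap yields a more negative bias."""
    return -scale * math.log1p(gap)


def time_biased_attention(Q, K, V, timestamps):
    """Causal scaled dot-product attention with an additive temporal bias.

    Q, K, V are lists of n vectors (lists of floats); timestamps has n entries
    in non-decreasing order. Returns one output vector per position.
    """
    n, d = len(Q), len(Q[0])
    outputs = []
    for j in range(n):
        # Only positions k <= j are visible, which blocks look-ahead.
        logits = []
        for k in range(j + 1):
            raw = sum(q * x for q, x in zip(Q[j], K[k])) / math.sqrt(d)
            logits.append(raw + time_bias(timestamps[j] - timestamps[k]))
        # Numerically stable softmax over the visible positions.
        peak = max(logits)
        exps = [math.exp(x - peak) for x in logits]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append(
            [sum(w * V[k][c] for k, w in enumerate(weights)) for c in range(len(V[0]))]
        )
    return outputs
```

With identical queries and keys, the weights depend only on the time gaps, so an item seen long ago contributes little to the output of the current position.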

Following the attention sublayer, a two-layer feed-forward network expands and projects the representation:

$$\mathrm{FFN}(\mathbf{z}_j) = W_2\,\sigma\left(W_1 \mathbf{z}_j + \mathbf{b}_1\right) + \mathbf{b}_2$$

where $\mathbf{z}_j$ is the attention output at position $j$, $\sigma$ is a nonlinear activation, and the hidden dimension is typically four times larger than $d$. Each sublayer is wrapped with residual connections, layer normalization, and dropout to stabilize optimization. After stacking $L$ layers, the model produces a contextualized sequence matrix $H \in \mathbb{R}^{n \times d}$.

The last hidden state $\mathbf{h}_n$ is selected as the sequence-level context vector $\mathbf{h}_{\mathrm{seq}}$, which summarizes stable preference evolution. By combining global self-attention with explicit temporal encoding, the sequential encoder captures both long-range semantics and recency-sensitive dynamics, providing a robust representation of user behavior.

3.4. Local Intent Graph Construction

While the sequential encoder captures long-term stability, it may underrepresent the fine-grained dependencies among items consumed in close succession. To address this, IntentGraphRec constructs a dynamic local co-intent graph over the most recent $w$ interactions. The embeddings of these items form the node features:

$$H^{(0)} = [\mathbf{e}_{n-w+1}, \dots, \mathbf{e}_n]$$

Edges are defined according to temporal proximity, under the assumption that items appearing close together in the sequence are more likely to represent a coherent short-term intent. The edge weight between two nodes $u$ and $v$ decreases with their temporal distance, and a decay hyperparameter $\lambda$ controls how quickly this influence diminishes as the two items drift apart. The graph is constructed as an undirected weighted graph, and its adjacency entries are row-normalized to form a transition matrix. The decay parameter $\lambda$ is set to 1.0 in all experiments. Only the latest $w$ interactions within the prefix $1, \dots, n$ are used to construct the graph, ensuring that no future information is included and thus preventing data leakage. This produces a weighted adjacency matrix $A \in \mathbb{R}^{w \times w}$, whose row-normalized form $\hat{A}$ yields transition probabilities.

This local graph is recomputed for each user session, making it inherently adaptive to recent changes. In contrast to global co-occurrence graphs, which may be outdated or insensitive to session-specific fluctuations, the local graph highlights precisely those relationships that are active at the current time. By converting the most recent interactions into a structured graph, the model is able to detect latent co-intent patterns such as bundles of related products or clusters of songs within a playlist.
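A minimal sketch of this construction is given below. Since the exact weighting function is not reproduced here, the example assumes an exponential decay `exp(-decay * |t_u - t_v|)` over timestamps and omits self-loops; both choices, along with the function name `build_local_graph`, are illustrative assumptions.

```python
import math


def build_local_graph(timestamps, window, decay=1.0):
    """Row-normalized adjacency over the last `window` interactions.

    Edge weights follow an assumed exponential decay in the time difference.
    The unnormalized matrix is symmetric (undirected graph); each row is then
    divided by its sum so that it can be read as transition probabilities.
    """
    recent = timestamps[-window:]  # only items from the observed prefix
    m = len(recent)
    adj = [[0.0] * m for _ in range(m)]
    for u in range(m):
        for v in range(m):
            if u != v:
                adj[u][v] = math.exp(-decay * abs(recent[u] - recent[v]))
    for row in adj:
        total = sum(row)
        if total > 0:  # a single-item window has no edges to normalize
            for v in range(m):
                row[v] /= total
    return adj


adjacency = build_local_graph([0, 1, 2, 3], window=3)
```

Because the graph is rebuilt from the trailing window of each prefix, it never contains an interaction that occurs after the prediction point.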

3.5. Graph-Based Message Passing

After constructing the local co-intent graph, information is propagated across nodes to refine their representations. Each node updates its embedding by aggregating information from its neighbors according to the normalized edge weights. The update for node $u$ is defined as

$$\mathbf{h}_u' = \sigma\Big(\sum_{v \in \mathcal{N}(u)} \hat{A}_{uv}\, W \mathbf{h}_v\Big)$$

where $\hat{A}_{uv}$ is the normalized edge weight, i.e., the transition probability obtained by row-wise normalization of the weighted adjacency matrix, $W$ is a trainable weight matrix, and $\sigma$ is a nonlinear activation. This operation allows each item to incorporate information from its temporally proximal companions, effectively capturing short-term co-consumption signals.

To obtain a single vector summarizing the local graph, node embeddings are pooled using a readout function:

$$\mathbf{h}_{\mathrm{graph}} = \mathrm{READOUT}\left(\{\mathbf{h}_u'\}_{u=1}^{w}\right)$$

The readout can be a mean or sum over nodes, or an attention-based mechanism that assigns higher weights to nodes deemed more influential. The resulting $\mathbf{h}_{\mathrm{graph}}$ captures the short-term intent behind the user’s most recent interactions.

This mechanism is particularly effective in contexts where user behavior is highly episodic, such as planning a trip or exploring a product category. In such cases, the graph encoder surfaces relationships that the sequential encoder might underplay, thereby providing a complementary signal.
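One propagation step followed by a mean readout can be sketched as follows. The use of ReLU as the activation, a single layer, and the names `message_passing` and `mean_readout` are assumptions for illustration; the text leaves the activation and the readout type open.

```python
def relu(x):
    return x if x > 0 else 0.0


def message_passing(adj, H, W):
    """One step: each node sums W @ h_v over neighbors, weighted by adj[u][v].

    adj is a row-normalized adjacency (list of rows), H is a list of node
    feature vectors, and W is a weight matrix given as a list of rows.
    """
    # Apply the shared linear map to every node once.
    transformed = [
        [sum(W[r][c] * h[c] for c in range(len(h))) for r in range(len(W))]
        for h in H
    ]
    updated = []
    for u in range(len(H)):
        aggregated = [
            sum(adj[u][v] * transformed[v][r] for v in range(len(H)))
            for r in range(len(W))
        ]
        updated.append([relu(x) for x in aggregated])
    return updated


def mean_readout(H):
    """Average node vectors into a single graph-level representation."""
    return [sum(h[c] for h in H) / len(H) for c in range(len(H[0]))]
```

Mean pooling treats all recent items equally; an attention-based readout would instead learn which nodes matter most for the current intent.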

3.6. Fusion via Gating Mechanism

The sequential and graph encoders provide distinct but complementary signals. To integrate them, IntentGraphRec employs a gating mechanism that adaptively balances their contributions. The concatenation of $\mathbf{h}_{\mathrm{seq}}$ and $\mathbf{h}_{\mathrm{graph}}$ is passed through a linear transformation followed by a sigmoid activation to compute a gate vector:

$$\mathbf{g} = \sigma\left(W_g\,[\mathbf{h}_{\mathrm{seq}}; \mathbf{h}_{\mathrm{graph}}] + \mathbf{b}_g\right)$$

The final fused representation is then obtained as

$$\mathbf{h} = \mathbf{g} \odot \mathbf{h}_{\mathrm{seq}} + (1 - \mathbf{g}) \odot \mathbf{h}_{\mathrm{graph}}$$

This formulation enables the model to emphasize sequence-derived stability when long-term preferences dominate, while shifting attention toward graph-derived signals in cases of short-term volatility. Unlike heuristic combinations, the gating vector is learned end-to-end, allowing the model to dynamically adjust the balance depending on user behavior and domain characteristics.

The outcome, $\mathbf{h}$, is a unified representation that simultaneously preserves stability and adaptability. This adaptive fusion is key to IntentGraphRec’s ability to generalize across domains with different levels of volatility and structural regularity.
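The gate can be illustrated with a short sketch. It assumes an element-wise convex combination in which the gate weights the sequence vector and its complement weights the graph vector; this orientation, and the names `gated_fusion`, `W_g`, and `b_g`, are assumptions of the example.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(h_seq, h_graph, W_g, b_g):
    """Fuse two d-dimensional vectors with a learned element-wise gate.

    W_g has d rows of length 2d and acts on the concatenation [h_seq; h_graph];
    b_g has d entries. Returns (fused vector, gate vector).
    """
    concat = h_seq + h_graph  # list concatenation, length 2d
    gate = [
        sigmoid(sum(w * x for w, x in zip(row, concat)) + b)
        for row, b in zip(W_g, b_g)
    ]
    fused = [g * s + (1.0 - g) * r for g, s, r in zip(gate, h_seq, h_graph)]
    return fused, gate


# With zero weights and biases the gate is 0.5, so the result is a plain average.
fused, gate = gated_fusion([1.0, 3.0], [3.0, 5.0], [[0.0] * 4, [0.0] * 4], [0.0, 0.0])
```

Inspecting the gate values per user or per dataset is one simple way to diagnose whether the sequence branch or the graph branch dominates the fused representation.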

3.7. Prediction and Training Objective

We train with full softmax (cross-entropy over all items) and rank over the entire item catalog without any candidate sampling in all reported experiments. Sampled softmax or in-batch negatives could be applied for scalability in much larger catalogs, but they were not used in this study. The fused representation $\mathbf{h}$ is used to compute scores for all candidate items by dot product with their embeddings:

$$\hat{y}_i = \mathbf{h}^{\top} \mathbf{e}_i, \qquad i \in \mathcal{I}$$

where $\mathbf{e}_i$ is the embedding of catalog item $i$. These scores are passed through a softmax function to produce probabilities. Training is performed using a cross-entropy loss that contrasts the true next item $i^{+}$ against negative items. The loss function is expressed as

$$\mathcal{L} = -\log \frac{\exp(\hat{y}_{i^{+}})}{\exp(\hat{y}_{i^{+}}) + \sum_{i^{-} \in \mathcal{N}} \exp(\hat{y}_{i^{-}})}$$

where $\mathcal{N}$ denotes the negative sample set; under the full-softmax setting used in our experiments, $\mathcal{N}$ contains every item other than $i^{+}$. Regularization techniques such as dropout, weight decay, and label smoothing are applied to improve generalization. Training is optimized with AdamW, employing learning rate warm-up and cosine decay schedules.

At inference time, scores are computed for all items; in much larger catalogs, a reduced candidate set retrieved via approximate nearest neighbor search could be used instead, although this is not done in our experiments. The top-K items with the highest scores are recommended:

$$\mathrm{Top}\text{-}K = \operatorname*{arg\,top}K_{\,i \in \mathcal{I}}\ \hat{y}_i$$

Optional filtering removes previously consumed items, and post-processing steps such as diversity enhancement or business-rule re-ranking may be applied. This design ensures that IntentGraphRec remains effective in both stable and volatile domains. By combining sequential modeling, local graph reasoning, and adaptive fusion, the model produces recommendations that are sensitive to both enduring habits and transient fluctuations.
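The scoring, loss, and top-K steps can be summarized in a small plain-Python sketch. It covers only the full-softmax case; the function names and the `exclude` argument for filtering consumed items are hypothetical, and label smoothing is left out for brevity.

```python
import math


def score_all(h, item_emb):
    """Dot product of the fused user vector with every item embedding."""
    return [sum(a * b for a, b in zip(h, e)) for e in item_emb]


def cross_entropy(scores, target):
    """Full-softmax negative log-likelihood of the target item."""
    peak = max(scores)  # subtract the max for numerical stability
    log_z = peak + math.log(sum(math.exp(s - peak) for s in scores))
    return log_z - scores[target]


def top_k(scores, k, exclude=()):
    """Return the k highest-scoring item ids, skipping any ids in `exclude`."""
    banned = set(exclude)
    candidates = [i for i in range(len(scores)) if i not in banned]
    return sorted(candidates, key=lambda i: scores[i], reverse=True)[:k]


scores = score_all([1.0, 0.0], [[0.0, 0.0], [2.0, 0.0], [1.0, 5.0], [3.0, 1.0]])
recommended = top_k(scores, k=2, exclude=[3])
```

Computing the normalizer over every item is what makes the objective a full softmax; replacing the sum with a sampled subset would give the sampled variant that the text mentions as an option for larger catalogs.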

3.8. Model Summary

IntentGraphRec integrates two complementary mechanisms—intent graph encoding and shift-aware sequence encoding—to jointly capture both static co-intent structures and dynamic preference transitions within user behavior sequences. The Intent Graph Encoder leverages a co-intent graph constructed from user sessions, where nodes represent items and edges encode co-occurrence frequency. This structure lets the model learn intent-aware item embeddings via a lightweight GNN, capturing higher-order semantics and user goals that persist over time. These global embeddings provide a stable basis for interpreting behavioral patterns, especially in sparse or long-tail scenarios.

In parallel, the Shift-Aware Transformer Encoder dynamically modulates attention over a user’s sequence by detecting transition points (e.g., changes in category or context). Using a boundary-aware attention mask and positional/temporal cues, the encoder emphasizes local preference shifts while retaining global context. A learnable fusion gate then combines the graph and sequence representations to produce the final user state, which is scored against the entire item catalog (full softmax) for training and evaluated under a leakage-free, full-catalog ranking protocol at inference. This dual-level design balances stability and adaptability and offers a reproducible basis for analyzing when structural intent signals help—and when they are dominated by sequence representations.

4. Experiments

4.1. Experimental Settings

We evaluate IntentGraphRec on two public benchmarks, Gowalla and MovieLens-1M. All models are implemented in PyTorch 1.12.1 and trained on a single NVIDIA RTX 4090. Unless stated otherwise, we adopt a unified training budget across models: Adam optimizer, learning rate 0.001, batch size 128, hidden size 64, and 10 epochs. We do not perform per-model hyper-parameter tuning; the goal is to compare paradigms under the same computation/time budget. To support transparency and reproducibility, all hyperparameters, seeds, and configuration files used in our experiments are included in the public repository (https://anonymous.4open.science/r/IntentRec-E255/README.md, accessed on 2 October 2025).

This uniform protocol is chosen to ensure strict reproducibility and fairness under an identical compute/time budget. We acknowledge that disabling per-model tuning can limit absolute performance and may favor architectures that converge faster or are more stable under this budget. To make this trade-off explicit and reproducible, we release all training configurations and random seeds and provide optional scripts to conduct per-model sweeps (e.g., learning rate and epoch count) so that readers can validate tuned baselines if desired. Gowalla is a location-based social networking dataset collected via user check-ins [29], while MovieLens-1M contains timestamped movie rating interactions from a large online recommendation service [30].

4.2. Datasets

Gowalla consists of geo-tagged check-ins that exhibit sparse yet recurrent local patterns. MovieLens-1M is converted to implicit feedback by treating ratings ≥ 4 as positive interactions; sequences are sorted chronologically for each user. The final statistics after preprocessing are summarized in Table 1.

Gowalla: A location-based social network dataset containing user check-in histories. We use the preprocessed version where users and items with fewer than five interactions are removed to ensure adequate sequence length.

MovieLens-1M: Explicit ratings transformed into implicit feedback by treating ratings ≥ 4 as positive. Interactions are sorted chronologically to create user sequences.

Gowalla reflects location-based check-in behaviors with strong locality and sessionized transitions, whereas MovieLens-1M reflects stable, relatively regular rating-based consumption patterns with stronger long-term preference cues. These distinct behavioral regimes motivate the dual-level design because structural co-intent is more salient in short, locality-driven sessions, whereas shift-aware sequence modeling is more critical when longer-term preference drift dominates.

4.3. Baselines

We compare against representative sequence, transformer, and graph models: GRU4Rec, SASRec, BERT4Rec, SR-GNN (session graph), and IntentGraphRec (ours).

Table 2 briefly contrasts architectural focuses (sequence modeling, graph context, shift awareness). Our comparison emphasizes relative behavior under the same training budget rather than hyper-parameter–optimized best cases.

Table 2 summarizes the architectural characteristics of the models included in our experiments. The baselines cover both sequence-oriented and graph-oriented approaches, enabling a comprehensive comparison. GRU4Rec represents recurrent models that capture sequential dynamics through GRUs but lack explicit graph reasoning and temporal shift awareness. SASRec and BERT4Rec are Transformer-based architectures: the former models sequence in an autoregressive manner, while the latter adopts a bidirectional training objective to leverage both past and future interactions; however, neither incorporates explicit graph structures or temporal shift modeling. In contrast, SR-GNN emphasizes graph-based representations of item transitions. SR-GNN models local session-level structures, but the model lacks sequential encoders and time-aware mechanisms. Finally, our proposed IntentGraphRec combines the strengths of these paradigms, integrating self-attention–based sequence modeling with dynamically constructed co-intent graphs, and further incorporating shift-awareness to adaptively handle temporal evolution. This selection of baselines provides a balanced evaluation environment that highlights the incremental benefits of IntentGraphRec’s unified design.

4.4. Evaluation Metrics

We use two standard top-K evaluation metrics to assess recommendation quality: Recall@K and Normalized Discounted Cumulative Gain (NDCG@K). These metrics are widely adopted in the literature for evaluating sequential recommendation tasks.

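Recall@K measures the proportion of relevant items that are successfully recommended within the top-K ranked items. It is defined as:

$$\mathrm{Recall@}K = \frac{|\mathcal{R} \cap \mathcal{T}_K|}{|\mathcal{R}|},$$

where $\mathcal{R}$ is the set of relevant items and $\mathcal{T}_K$ is the set of top-K recommended items.

In our single ground-truth next-item setting, Recall@K reduces to 1 if the ground-truth item appears in the top K predictions, and 0 otherwise. Higher Recall indicates better coverage of relevant items.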

NDCG@K takes into account the position of the relevant item in the recommendation list, rewarding higher-ranked placements. The Discounted Cumulative Gain (DCG) is computed as:

$$\mathrm{DCG@}K = \sum_{i=1}^{K} \frac{rel_i}{\log_2(i+1)},$$

where $rel_i$ is 1 if the item at position $i$ is relevant, and 0 otherwise. The NDCG is then:

$$\mathrm{NDCG@}K = \frac{\mathrm{DCG@}K}{\mathrm{IDCG@}K},$$

where IDCG@K is the ideal DCG (i.e., the DCG when the relevant item is ranked first). This metric emphasizes the importance of ranking relevant items higher in the list. These two metrics jointly assess retrieval coverage (Recall) and ranking quality (NDCG).
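In the single ground-truth setting described above, both metrics depend only on the 1-based rank of the held-out item, and IDCG@K equals 1 because the ideal list places the one relevant item first. The following minimal Python sketch illustrates this; the function names and the example ranks are hypothetical and are not taken from the paper's implementation.

```python
import math


def recall_at_k(rank: int, k: int) -> float:
    """Recall@K for one ground-truth item: 1 if its 1-based rank is within the top K."""
    return 1.0 if 1 <= rank <= k else 0.0


def ndcg_at_k(rank: int, k: int) -> float:
    """NDCG@K for one ground-truth item.

    DCG@K has a single non-zero term, 1 / log2(rank + 1), and
    IDCG@K = 1 / log2(2) = 1, so NDCG@K equals that term.
    """
    if not 1 <= rank <= k:
        return 0.0
    return 1.0 / math.log2(rank + 1)


def mean_metrics(ranks, k):
    """Average Recall@K and NDCG@K over a list of per-user ranks."""
    n = len(ranks)
    recall = sum(recall_at_k(r, k) for r in ranks) / n
    ndcg = sum(ndcg_at_k(r, k) for r in ranks) / n
    return recall, ndcg


# Hypothetical ranks of the held-out item for three users.
ranks = [1, 3, 12]
recall5, ndcg5 = mean_metrics(ranks, 5)
print(recall5, ndcg5)
```

With these example ranks, two of the three users are hits at K = 5, and the user whose item sits at rank 3 contributes 1/log2(4) = 0.5 to NDCG, which shows how NDCG penalizes a hit that Recall counts in full.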

4.5. Results and Analysis

Table 3 shows that the strongest baseline depends on the domain. On MovieLens-1M, BERT4Rec achieves the best scores across all reported cutoffs K, whereas on Gowalla the top tier is formed by the session-graph model (SR-GNN) and BERT4Rec. IntentGraphRec behaves consistently but lags behind the top baselines on both datasets, more noticeably on MovieLens-1M. This suggests that the model’s emphasis on co-intent structure and shift awareness did not align well with the dominant signals in these two benchmarks under our training regime. On MovieLens-1M, IntentGraphRec remains stable relative to GRU4Rec and SASRec but trails BERT4Rec and SR-GNN, suggesting that under leakage-free, full-catalog ranking, late fusion can be dominated by strong sequence encoders when user intent is less volatile.

On Gowalla, SR-GNN and BERT4Rec occupy the top tier. Geographic locality and repeated visits produce co-occurrence structures that a session graph can capture well. IntentGraphRec’s NDCG improves as K increases, but the gap to the leaders remains. Combining a static graph with shift gating is helpful but insufficient to dominate in a domain where local transitions and session-level topology are especially strong.

BERT4Rec leads on MovieLens-1M for both Recall@K and NDCG@K. Its bidirectional self-attention effectively exploits global context in sequences with stable genre/series regularities and repeated consumption, which is the setting where late fusion in IntentGraphRec adds the least over a strong sequence encoder.

For both datasets, the gaps between models shrink as K grows from 5 to 20. The models therefore differ most in how they fill the top few ranks, and widening K reduces the margin. IntentGraphRec’s relatively low NDCG@5 points to weak top-slot calibration. Strengthening listwise objectives or adding top-heavy penalties could improve early-rank precision.

All methods were trained with an identical budget (10 epochs, shared hyper-parameters). This tends to favor high-capacity encoders (BERT4Rec, SR-GNN) that already align with each dataset’s dominant signal. By contrast, IntentGraphRec combines two signals (co-intent structure and shift awareness) whose fusion likely requires a longer training schedule or additional regularization before its benefits are realized. In addition, our co-intent graph is static, so the potential advantages of dynamic intent updates during sessions are not exercised in this setup.

4.6. Diagnostic Analysis

Structure–context mismatch: ML-1M’s strong contextual regularity favors BERT4Rec; Gowalla’s session locality favors the session GNN. IntentGraphRec unifies the two ideas but, under the current schedule, does not dominate either axis.

Top-rank sensitivity: IntentGraphRec improves with larger K, yet NDCG@5 trails. Incorporating top-weighted/listwise losses, temperature scaling, or margin shaping could raise early-rank accuracy.

Static graph limitations: Replacing the static co-intent graph with time-weighted or session-adaptive graphs, or decomposing it by sparser sub-catalogs, may sharpen structural cues.

Parameter sharing/prompting: Treating graph embeddings as Key/Value prompts (or lightweight adapters) in the transformer can reduce optimization burden and improve top-slot calibration.
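Of the options listed under top-rank sensitivity, temperature scaling is the simplest to illustrate. The following minimal Python sketch computes a softmax cross-entropy loss over item scores with a temperature parameter; the function name, the example logits, and the temperature values are hypothetical, and whether a lower temperature actually improves NDCG@5 for IntentGraphRec remains an empirical question that this sketch does not answer.

```python
import math


def softmax_cross_entropy(scores, target, temperature=1.0):
    """Cross-entropy of the target item under softmax(scores / temperature).

    Uses the log-sum-exp trick (subtracting the max) for numerical stability.
    """
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(s - m) for s in scaled))
    return log_z - scaled[target]


# Hypothetical logits over three items; item 0 is the ground-truth next item.
scores = [2.0, 1.5, 0.1]
loss_default = softmax_cross_entropy(scores, target=0, temperature=1.0)
loss_sharp = softmax_cross_entropy(scores, target=0, temperature=0.5)
print(loss_default, loss_sharp)
```

A temperature below 1 sharpens the distribution, so a target that already has the highest score incurs a smaller loss while a near-tie at the top is penalized more strongly relative to distant items. This is the sense in which temperature can shift optimization pressure toward the top of the ranking.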

4.7. Summary of Findings

No single model wins everywhere. BERT4Rec is superior when contextual regularity is strong (MovieLens-1M); session GNNs excel when local transitions dominate (Gowalla). IntentGraphRec offers a principled integration of structural co-intent and shift dynamics, but under a uniform, modest training budget with a static graph, it does not surpass the strongest specialized baselines. Still, the analysis clarifies when and why our components help, and it points to three concrete upgrades as credible paths to better performance: dynamic intent graphs, top-rank objectives, and schedule/adapter design.

5. Conclusions

This work introduced IntentGraphRec, a dual-level framework that combines a graph-based view of co-intent with a shift-aware sequence encoder. The graph branch extracts intent-aware item representations from session co-occurrence, capturing higher-order and relatively stable relations, while the sequence branch emphasizes short-range transitions through boundary-aware attention over the user’s history. A learnable gate fuses both signals. All models were trained and assessed under the same budget and a leakage-free, full-catalog ranking protocol, so the reported numbers reflect realistic rankings without candidate sampling or look-ahead effects.

The results indicate a clear pattern. On MovieLens-1M, BERT4Rec consistently leads. Its bidirectional contextualization aligns well with domains where consumption is regular and genre/series cues are strong. On Gowalla, a session-graph model is highly competitive because locality and short-range co-occurrence are informative and can be propagated effectively along session edges. IntentGraphRec behaves stably across both datasets but does not outperform the strongest specialized baselines. In practice, a simple late fusion is often dominated by an already strong sequence encoder on MovieLens-1M, and the static co-intent graph is not sufficient to displace a dedicated session GNN on Gowalla.

Evaluating with the entire item catalog and strict masking tends to compress optimistic gaps that sometimes appear under sampled-candidate protocols; gains that survive here are more credible. Structural intent signals are most helpful when they match the dataset’s dominant regularities, whereas volatility favors models that prioritize local transitions. Early injection of structure, such as graph-aware positional cues, attention biases, or graph embeddings used as keys/values for the transformer, appears more promising than end-of-pipeline fusion. Our use of a static graph and a modest schedule limits the contribution of fusion; longer training schedules, top-rank-oriented objectives, or lightweight pretraining would likely improve early-rank precision.
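To make the difference between full-catalog and sampled-candidate evaluation concrete, the following minimal Python sketch computes the rank of one held-out item both ways. The scores, the `seen` set, and the function names are hypothetical, and treating masking as the exclusion of items already in the user's history is an assumption about the protocol rather than a restatement of it.

```python
import random


def full_catalog_rank(scores, target, seen):
    """1-based rank of `target` among all catalog items not in `seen`.

    Ties are resolved in favor of the target (strict comparison).
    """
    target_score = scores[target]
    better = sum(
        1
        for item, score in enumerate(scores)
        if item != target and item not in seen and score > target_score
    )
    return better + 1


def sampled_rank(scores, target, seen, num_negatives, rng):
    """1-based rank of `target` among `num_negatives` randomly sampled unseen items."""
    candidates = [i for i in range(len(scores)) if i != target and i not in seen]
    negatives = rng.sample(candidates, num_negatives)
    better = sum(1 for i in negatives if scores[i] > scores[target])
    return better + 1


# Hypothetical catalog of 1000 items whose score increases with the item index.
scores = [i / 1000 for i in range(1000)]
seen = {997, 998, 999}   # items already in the user's history (masked out)
target = 500             # held-out next item

full = full_catalog_rank(scores, target, seen)   # 496 unseen items score higher
sampled = sampled_rank(scores, target, seen, 100, random.Random(0))
print(full, sampled)
```

Because the sampled negatives are a subset of the full candidate pool, the sampled rank can never be worse than the full-catalog rank. In this toy case the item ranks 497th over the full catalog, far outside any top-20 list, while its rank against 100 sampled negatives is much smaller, which is one way sampled protocols can make metrics look more optimistic.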

While full ablation or sensitivity experiments are beyond this paper’s scope, preliminary internal observations showed that removing either the shift-aware component or the local co-intent graph led to consistent performance drops, suggesting that both components contribute meaningfully to the final effectiveness. A more extensive ablation study will be included in future work. Moreover, although the datasets utilized in this paper are widely adopted, they share similar interaction sparsity and temporal granularity, so our evaluation is limited in domain diversity; applying the same protocol to more heterogeneous domains (e.g., e-commerce or social media) remains an important direction for future work.

Overall, the study provides a reproducible view of how structural and temporal signals interact under strict evaluation. The proposed model is competitive and reliable but not superior to the strongest baselines in these settings. The evidence clarifies when structure helps and when a strong sequence encoder already suffices, and it points to reasonable extensions—dynamic intent signals and earlier integration within attention—that are compatible with the same evaluation protocol. These observations clarify that under strict full-catalog ranking, relative differences between architectures compress, so only signal contributions that survive pessimistic evaluation should be considered practically meaningful.