1. Introduction

Partial differential equations (PDEs) play a critical role in modeling diverse physical phenomena in applied sciences and engineering. Solving the nonlinear PDEs associated with these phenomena is essential for understanding many problems in nature. Many nonlinear PDEs admit traveling wave solutions known as solitary wave solutions. An important instance is the Fornberg–Whitham (FW) equation, which describes wave breaking, fluid dynamics, and water wave propagation. The FW equation is stated in the form

$$u_t - u_{xxt} + u_x = u u_{xxx} - u u_x + 3 u_x u_{xx}, \qquad (1)$$

which was proposed to model wave breaking [1]. The variable $u(x,t)$ is the wave profile, with $x$ and $t$ representing the spatial and temporal coordinates. A generalized Fornberg–Whitham equation takes the form

where

is a constant.

Equations (1) and (2) describe the standard and parameterized Fornberg–Whitham models, respectively. To further investigate the role of nonlinear effects in wave behavior, a modified Fornberg–Whitham equation is introduced. This model arises from strengthening the nonlinear convective term, replacing $u u_x$ with $u^2 u_x$. It provides a mathematical model for studying higher-amplitude waves, more intense wave–wave interactions, and potential wave singularities. The modified Fornberg–Whitham equation is as follows:

$$u_t - u_{xxt} + u_x = u u_{xxx} - u^2 u_x + 3 u_x u_{xx}. \qquad (3)$$

The structure of the solutions of the FW equation has been analyzed in depth [2]. The global existence of a solution to the viscous Fornberg–Whitham equation was demonstrated in [3], and boundary control was investigated in [4]. Further mathematical and numerical exploration in this area is still needed. Developing a stable, high-order accurate, and reliable numerical scheme is challenging. In addition to the computationally intensive nonlinear term $u u_x$, two less explored third-order derivative terms are present: the mixed space and time linear term $u_{xxt}$ and the nonlinear dispersion term $u u_{xxx}$, which can spread localized waves. Crude, low-order approximations of these third-order dispersion terms must be avoided in order to represent wave steepening and spreading accurately. Hornik et al. [5] demonstrated that deep neural networks are universal function approximators. Additionally, the work in [6] introduced automatic differentiation techniques for neural networks capable of handling high-order differential operators and nonlinear terms. Hence, it is plausible to explore the application of neural networks to solving the FW equation.
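The role of the individual terms can be made concrete with a small residual check. The following is a minimal sketch, assuming the standard form of the FW equation, $u_t - u_{xxt} + u_x = u u_{xxx} - u u_x + 3 u_x u_{xx}$, and using central finite differences in place of automatic differentiation. The function names `fw_residual` and `u_exp`, the step size `h`, and the exponential test function are illustrative assumptions and are not necessarily the test case used in the experiments of this paper.

```python
import math

def u_exp(x, t):
    # Closed-form function used only as a test case (an assumption of this sketch).
    return math.exp(0.5 * x - 2.0 * t / 3.0)

def fw_residual(u, x, t, h=1e-2):
    """Finite-difference estimate of
    u_t - u_xxt + u_x - (u*u_xxx - u*u_x + 3*u_x*u_xx) at the point (x, t)."""
    def d_xx(xx, tt):
        # Second-order central difference for the second x-derivative.
        return (u(xx + h, tt) - 2.0 * u(xx, tt) + u(xx - h, tt)) / h**2

    u0 = u(x, t)
    u_x = (u(x + h, t) - u(x - h, t)) / (2.0 * h)
    u_xx = d_xx(x, t)
    u_xxx = (u(x + 2 * h, t) - 2.0 * u(x + h, t)
             + 2.0 * u(x - h, t) - u(x - 2 * h, t)) / (2.0 * h**3)
    u_t = (u(x, t + h) - u(x, t - h)) / (2.0 * h)
    # Mixed derivative: central difference in t of the second x-derivative.
    u_xxt = (d_xx(x, t + h) - d_xx(x, t - h)) / (2.0 * h)
    return u_t - u_xxt + u_x - (u0 * u_xxx - u0 * u_x + 3.0 * u_x * u_xx)

# The exponential function satisfies the equation, so its residual is near zero.
print(abs(fw_residual(u_exp, 1.0, 0.05)))
# A generic sine wave does not, so its residual is clearly nonzero.
print(abs(fw_residual(lambda x, t: math.sin(x - t), 1.0, 0.0)))
```

In a PINN the same residual would be evaluated with automatic differentiation on the network output rather than with finite differences; the sketch only illustrates which derivative terms enter the residual.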

Deep learning has emerged as a groundbreaking technology in various scientific domains [7,8,9]. Its capacity for nonlinear modeling has attracted significant interest in computational mechanics [10,11] in recent years. However, training deep neural networks (DNNs) for black-box surrogate modeling typically requires a substantial amount of labeled data, which is frequently unavailable in scientific applications. In 1998, Lagaris et al. [12] pioneered the use of machine learning techniques for partial differential equations (PDEs) by employing artificial neural networks (ANNs). By formulating an appropriate loss function, the network output is driven to satisfy the prescribed equation and boundary conditions. Building on this foundation, Raissi et al. [13] harnessed automatic differentiation and introduced physics-informed neural networks (PINNs), in which the residual of the PDE is added to the loss function of a fully connected neural network as a regularizer. This restricts the solution space to physically feasible solutions. PINNs can operate effectively with limited data, because whatever data are available can be incorporated directly into the loss function.

Shin et al. [14] conducted a theoretical study on the solution of partial differential equations using PINNs, showing that the solution obtained by PINNs can converge to the true solution of the equation under specific conditions. Mishra et al. [15] provided an abstract analysis of the sources of generalization error in PINNs for PDEs. PINNs have found widespread applications in solving various types of PDEs, including fractional PDEs [16,17] and stochastic PDEs [18,19], even with limited training data. The loss function of PINNs typically comprises multiple terms that compete during training, making it challenging to minimize all terms simultaneously. An effective strategy to enhance training performance is to adjust the weights assigned to the supervised and residual terms in the loss function [20,21]. A significant drawback of PINNs is the high computational cost of training. Jagtap et al. [22] proposed strategies for both spatial and temporal parallelization to reduce training costs. Additionally, Mattey and Ghosh [23] highlighted that the accuracy of PINNs may be compromised in the presence of strong nonlinearity and higher-order partial differential operators. The FW equation contains high-order differential operators and nonlinear terms, so the PINN method must be improved to solve it accurately.

In some studies on continuous-time PINN methods, the distinction between time and space variables is blurred [24]. For evolutionary PDEs, accurate initial predictions are essential for PINN effectiveness. Additionally, ignoring time-dependent properties can cause training difficulties due to non-convex optimization. Similar problems may arise in larger spatiotemporal domains. Therefore, the treatment of spatiotemporal variables is crucial in the design and implementation of PINN methods. Incorporating additional supervised data points aligns with the principles of the label propagation methodology [25] and significantly improves training performance. In this setting, transfer learning principles offer a natural way to supply such data. Transfer learning is inspired by the human ability to leverage previously acquired knowledge to address new problems with improved solutions [26,27]. This approach may mitigate non-convexity, leading to efficient and accurate optimization in PINNs [28,29]. Domain adaptation is a mature research direction in transfer learning, with the core goal of transferring knowledge learned from a “source domain” to a related but differently distributed “target domain” through techniques such as feature alignment and distribution matching [30].

In this study, we explore the use of PINNs for solving Fornberg–Whitham-type equations. To improve prediction accuracy and training robustness, we introduce a new methodology named domain adaptation for PINNs (DA-PINN). It efficiently handles large-scale spatiotemporal problems and achieves higher predictive accuracy than the PINN method, as validated through tests on Equation (1). The method solves Equation (2) at low computational cost while maintaining satisfactory prediction accuracy for unknown coefficients in noisy data environments. Additionally, DA-PINN is adept at solving anisotropic problems. Compared to the variational iteration method [31], it exhibits enhanced predictive accuracy, with computational efficacy confirmed for Equation (3).

This study is organized as follows. Section 2 introduces the background and domain adaptation for PINNs. Section 3 describes the details of the proposed method. In Section 4, various examples are presented to illustrate the effectiveness of the DA-PINN approach. The final conclusions are drawn in Section 5.

3. Proposed Method

When studying Fornberg–Whitham-type equations, a central objective is to seek traveling wave solutions, which take the form $u(x,t) = \phi(x - ct)$, where $c$ represents the wave speed. For such solutions, it is conventionally assumed that the wave profile decays at infinity, i.e., $u \to 0$ as $|x| \to \infty$. This asymptotic behavior acts as a boundary condition, referred to as the far-field boundary condition. Although not imposed at finite spatial points, it constrains the solution behavior at the boundaries of the unbounded domain. According to the characteristics of the FW model equations, we use the PINN framework with different techniques to solve these problems. For the FW equation, the boundary condition is the far-field boundary condition, and it is appropriate to use transfer learning based on the spatial variable. By using multi-scale neural networks to capture high-frequency information, more accurate solutions can be obtained.
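As a worked illustration of the traveling wave reduction described above, the following derivation assumes the standard form of the FW equation, $u_t - u_{xxt} + u_x = u u_{xxx} - u u_x + 3 u_x u_{xx}$. The profile symbol $\phi$, the moving coordinate $\xi$, and the integration constant $C$ are notational assumptions made for this sketch. Writing $u(x,t) = \phi(\xi)$ with $\xi = x - ct$ gives $u_t = -c\phi'$, $u_x = \phi'$, $u_{xxt} = -c\phi'''$, so that

$$
\begin{aligned}
-c\,\phi' + c\,\phi''' + \phi' &= \phi\,\phi''' - \phi\,\phi' + 3\,\phi'\phi'' , \\
\phi\,\phi''' + 3\,\phi'\phi'' &= \left( \phi\,\phi'' + (\phi')^2 \right)' , \qquad \phi\,\phi' = \left( \tfrac{1}{2}\phi^2 \right)' , \\
(1 - c)\,\phi + c\,\phi'' &= \phi\,\phi'' + (\phi')^2 - \tfrac{1}{2}\phi^2 + C .
\end{aligned}
$$

The last line follows by integrating the first line once in $\xi$. If $\phi$ and its derivatives decay as $|\xi| \to \infty$, the far-field boundary condition forces $C = 0$, which is how the decay assumption enters the reduced problem.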

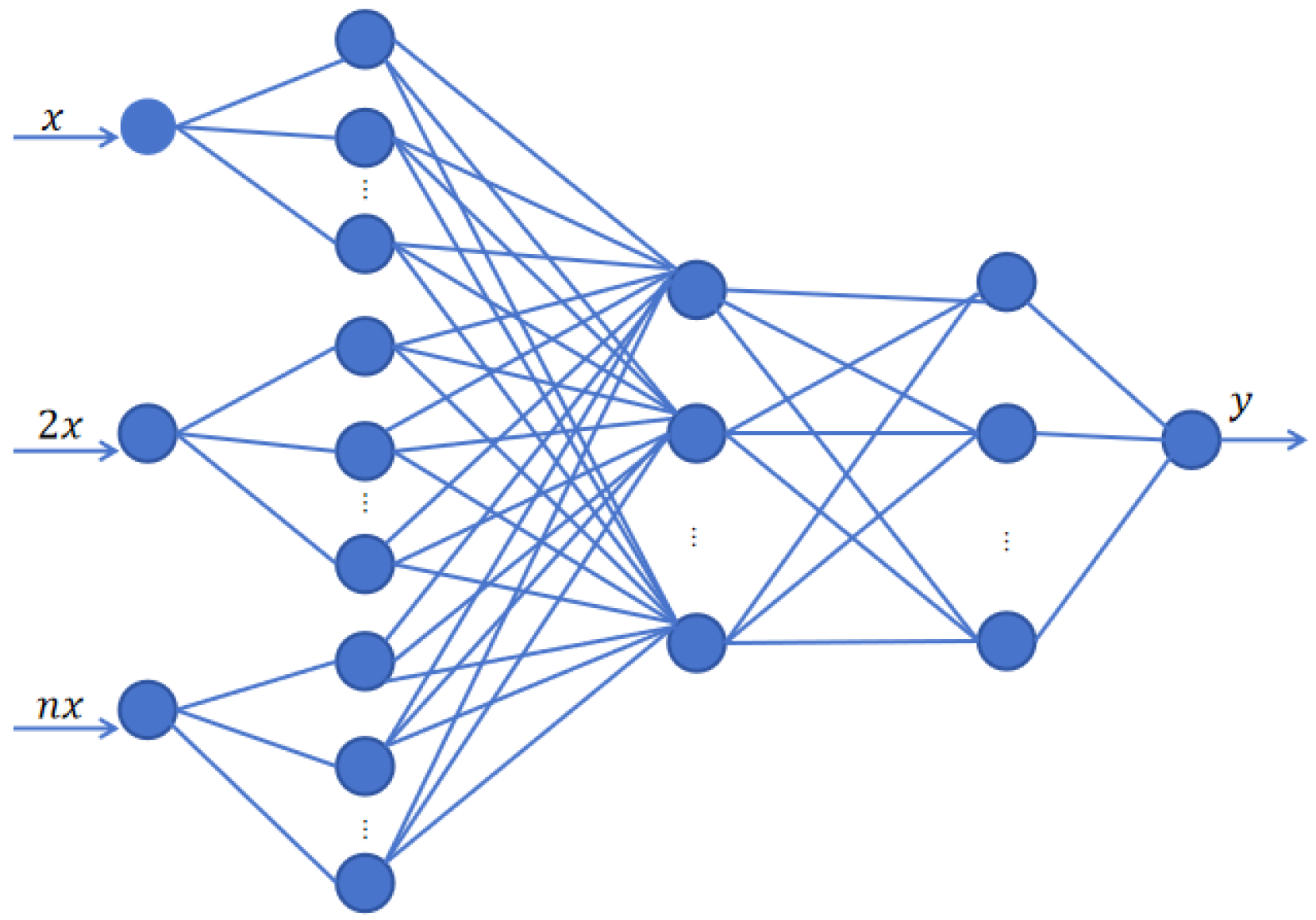

3.1. Mscale-DNN

The F-Principle describes the tendency of neural networks to fit low-frequency components first, which leaves them susceptible to a high-frequency catastrophe: high-frequency content poses significant challenges to training and generalization that are not readily mitigated by mere parameter tuning [32]. Employing a sequence of scaling factors, extending from 1 to a large number, allows for the construction of an Mscale-DNN framework. This structure accelerates convergence for solutions that span a broad range of frequencies while maintaining consistent precision across these frequencies. The Mscale-DNN network structure is shown in Figure 1.
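The multi-scale idea can be sketched in a few lines. The example below is a minimal illustration, assuming that each sub-network receives the input multiplied by its own scaling factor and that the sub-network outputs are summed. The specific scales `[1, 2, 4, 8, 16]`, the one-hidden-layer sub-networks with random weights, and the helper names `make_subnet` and `mscale_forward` are hypothetical choices, not the configuration used in this paper.

```python
import math
import random

def swish(z):
    # Swish activation: z * sigmoid(z).
    return z / (1.0 + math.exp(-z))

def make_subnet(width, rng):
    """One-hidden-layer scalar network with random weights (illustrative only)."""
    w1 = [rng.uniform(-1, 1) for _ in range(width)]
    b1 = [rng.uniform(-1, 1) for _ in range(width)]
    w2 = [rng.uniform(-1, 1) / width for _ in range(width)]

    def net(z):
        return sum(w2[j] * swish(w1[j] * z + b1[j]) for j in range(width))

    return net

def mscale_forward(x, scales, subnets):
    """Sum of sub-network outputs, each fed the input scaled by its own factor."""
    return sum(net(a * x) for a, net in zip(scales, subnets))

rng = random.Random(0)
scales = [1, 2, 4, 8, 16]          # assumed sequence from 1 up to a larger value
subnets = [make_subnet(8, rng) for _ in scales]
y = mscale_forward(0.3, scales, subnets)
print(y)
```

Because a sub-network that sees `a * x` only needs to represent a function that varies `a` times more slowly, the larger scales shift high-frequency content toward the low-frequency range that networks fit most easily.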

3.2. Domain Adaptation in Transfer Learning for PINN

As a well-established research branch of machine learning, domain adaptation addresses the problem of “cross-domain knowledge transfer”. Specifically, when the “source domain” and “target domain” are correlated but differ in data distribution, it enables the knowledge learned on the source domain to be adapted to the target domain through methods such as feature alignment and distribution matching. This framework applies naturally to our work: each subproblem we handle can be assigned the role of either the “source domain” or the “target domain”. In the context of solving partial differential equations (PDEs), to avoid solution discontinuities (e.g., jumps in the solution field at the boundary between source and target domains), we introduce a source domain supervision term into the target domain’s loss function. This facilitates the transfer and alignment of solution features between subdomains, thereby stabilizing training and improving the overall solution accuracy.

The main idea of domain adaptation in transfer learning for PINNs is to transform the anisotropic problem into several small-scale subproblems. The solving process is divided into two stages. In the initial stage, a traditional PINN is used to obtain the solution to the initial subproblem. In particular, Equation (1) is trained over the temporal interval

or the spatial domain

, employing the conventional PINN approach. The length of the training interval for the source domain is an adjustable parameter that depends on the specific problem. In the transfer learning stage, the PINN initialized with the parameters trained in the previous step is used to solve the problem step by step, expanding either the time domain or the spatial domain. This improved PINN helps avoid the tendency of traditional PINNs to get stuck in local optima. In the source domain training phase, the dataset

is composed of pairs

, defined as

where

and

are the numbers of residual data and boundary data in the source domain training step, respectively. The formulation of the optimization problem for training in the source domain is restated as follows:

The parameter

is typically initialized randomly when solving Equation (5). During formal training, the inclusion of additional supervised information can enhance the performance of the PINN [33]. The solution obtained from source domain training in the initial phase is used as additional supervised learning data for the subsequent formal training. The loss function is defined as follows:

where

represents the weight of the extra supervised learning component. The dataset

S is composed of pairs

and defined as

denotes the loss function of supervised learning, defined as

with the dataset

defined by

or

and

represents the number of points in the dataset. The optimization problem

is solved to derive a numerical solution

for Equation (1) within the domain

. The specific steps of the algorithm can be found in Algorithm 1.
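The structure of the target-domain loss described above can be sketched as follows. This is a minimal illustration, assuming that each component is a mean squared error and that the supervised term is multiplied by a single scalar weight. The function names `mse` and `da_pinn_loss` and the argument layout are hypothetical and are not taken from the authors' implementation.

```python
def mse(values):
    """Mean of squared entries of a non-empty list of errors."""
    return sum(v * v for v in values) / len(values)

def da_pinn_loss(residuals, boundary_errors, supervised_errors, weight):
    """PDE residual loss + boundary loss + weighted source-domain supervision.

    residuals         : PDE residual values at collocation points (target domain)
    boundary_errors   : mismatches at initial/boundary points
    supervised_errors : prediction minus source-domain solution at shared points
    weight            : scalar weight of the extra supervised component
    """
    return mse(residuals) + mse(boundary_errors) + weight * mse(supervised_errors)

# Hypothetical error values, used only to show how the terms combine.
loss = da_pinn_loss([1.0, -1.0], [0.0], [2.0], weight=0.5)
print(loss)
```

A larger weight pulls the target-domain network more strongly toward the source-domain solution on the overlapping region, which is the mechanism that discourages jumps at the interface between subdomains.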

Remark 1. The idea of incorporating additional supervised data is closely linked to the label propagation technique [25], and the added supervision helps improve training performance. The benefit is more pronounced for complex problems, as confirmed by the numerical examples in this study.

Algorithm 1: Domain adaptation for PINNs

Input: Neural network structure; source task; target task.

Step 1: Set the number of training steps K and initialize the neural network parameters.

Step 2: Incorporate the physical laws into the neural network’s loss function based on (5). Solve the source task optimization problem (6) in the first stage. Output the neural network parameters.

Step 3: Domain adaptation: generate the supervised learning data and the training set for the next interval. Use the neural network parameters obtained at the end of the preceding training phase as the initial estimate for the optimization of Equation (7).

Output: The approximate solution of the PDE.
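The two-stage procedure of Algorithm 1 can be illustrated on a deliberately small toy problem. The sketch below is not the FW setting: it assumes the ODE $u' + u = 0$ with $u(0) = 1$, a cubic polynomial in place of a neural network, and plain gradient descent. All names (`model`, `loss_and_grad`, `train`), the intervals, and the hyperparameters are hypothetical and chosen only to show the warm start and the source-domain supervision.

```python
def model(theta, t):
    # Cubic ansatz that satisfies u(0) = 1 by construction.
    return 1.0 + theta[0] * t + theta[1] * t**2 + theta[2] * t**3

def model_dt(theta, t):
    return theta[0] + 2 * theta[1] * t + 3 * theta[2] * t**2

def loss_and_grad(theta, colloc, supervised, weight):
    """Residual loss for u' + u = 0 plus weighted supervised loss, with gradient."""
    loss, grad = 0.0, [0.0, 0.0, 0.0]
    for t in colloc:
        r = model_dt(theta, t) + model(theta, t)
        feats = [1 + t, 2 * t + t**2, 3 * t**2 + t**3]   # d r / d theta
        loss += r * r / len(colloc)
        for j in range(3):
            grad[j] += 2 * r * feats[j] / len(colloc)
    for t, u_src in supervised:
        e = model(theta, t) - u_src
        feats = [t, t**2, t**3]                          # d e / d theta
        loss += weight * e * e / len(supervised)
        for j in range(3):
            grad[j] += weight * 2 * e * feats[j] / len(supervised)
    return loss, grad

def train(theta, colloc, supervised, weight, steps=2000, lr=0.01):
    theta = list(theta)
    for _ in range(steps):
        _, grad = loss_and_grad(theta, colloc, supervised, weight)
        theta = [p - lr * g for p, g in zip(theta, grad)]
    return theta

def linspace(a, b, n):
    return [a + (b - a) * i / (n - 1) for i in range(n)]

# Stage 1 (source task): physics-informed fit on the short interval [0, 0.5].
source_pts = linspace(0.0, 0.5, 11)
loss_init, _ = loss_and_grad([0.0, 0.0, 0.0], source_pts, [], 0.0)
theta_src = train([0.0, 0.0, 0.0], source_pts, [], weight=0.0)
loss_src, _ = loss_and_grad(theta_src, source_pts, [], 0.0)

# Stage 2 (target task): warm start from theta_src on [0, 1], with the
# source solution reused as supervised data on the source interval.
supervised = [(t, model(theta_src, t)) for t in source_pts]
target_pts = linspace(0.0, 1.0, 21)
loss_before, _ = loss_and_grad(theta_src, target_pts, supervised, 1.0)
theta_tgt = train(theta_src, target_pts, supervised, weight=1.0)
loss_after, _ = loss_and_grad(theta_tgt, target_pts, supervised, 1.0)
print(loss_init, loss_src, loss_before, loss_after)
```

The same pattern extends to several stages by repeating Stage 2 on successively larger domains, each time reusing the previous parameters and the previous solution as supervision.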

3.3. Optimization Method

We seek to find

, which minimizes the loss function

. Numerous optimization algorithms exist for minimizing the loss function, with gradient-based optimization techniques commonly utilized in the parameter training process. In the basic form, given an initial value of parameters

,

is the number of training subintervals. The parameters are updated as

Stochastic gradient descent (SGD) is a prevalent optimization method in which subsets of data points are randomly chosen to approximate the gradient direction in each iteration. This method is effective at escaping poor local minima during the training of deep neural networks, particularly under the one-point convexity condition.
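A mini-batch update of this kind can be sketched as follows. The example is a minimal illustration that assumes a staircase reading of the learning-rate schedule used in the experiments (decay rate 0.98 every 50 steps) and a toy quadratic loss whose minimizer is the data mean. The function names `decayed_lr` and `sgd`, the batch size, and the data are hypothetical.

```python
import random

def decayed_lr(lr0, step, decay_rate=0.98, decay_steps=50):
    # Staircase exponential decay: multiply by decay_rate every decay_steps steps.
    return lr0 * decay_rate ** (step // decay_steps)

def sgd(grad_fn, theta0, data, lr0=0.1, steps=500, batch_size=4, seed=0):
    """Plain mini-batch SGD: theta <- theta - lr_k * gradient on a random batch."""
    rng = random.Random(seed)
    theta = list(theta0)
    for k in range(steps):
        batch = rng.sample(data, batch_size)      # random subset of data points
        g = grad_fn(theta, batch)
        lr = decayed_lr(lr0, k)
        theta = [p - lr * gi for p, gi in zip(theta, g)]
    return theta

def grad_mean_fit(theta, batch):
    # Gradient of mean((theta - d)^2) over the batch with respect to theta.
    return [2.0 * sum(theta[0] - d for d in batch) / len(batch)]

data = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
theta = sgd(grad_mean_fit, [0.0], data)
print(theta[0])   # fluctuates around the data mean 4.5
```

The estimate keeps fluctuating because each step only sees a random batch; the decaying learning rate gradually damps these fluctuations.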

3.4. Error Analysis

Define

F as the set of functions representing a specified neural network architecture, and let

denote the exact solution to the partial differential equation under consideration. Then, we define

as the best approximation to the exact solution

. Let

be the solution obtained by the training net and

be the solution of the net at the global minimum. Therefore, the total error consists of an approximation error

the generalization error

and the optimization error

Enhanced network expressivity in a deep neural network can effectively reduce the approximation error, yet it may lead to a substantial generalization error, characterized by the bias–variance trade-off. Within the PINN framework, the number and distribution of residual points play pivotal roles in determining the generalization error, potentially influencing the loss function configuration—especially when employing a sparse set of residual points near steep solution changes. The optimization error stems from the intricate nature of the loss function, with factors such as network structure (depth, width, and connections) exerting significant influence. Fine-tuning hyperparameters like learning rate and number of iterations can further refine and mitigate optimization errors in the network. We have

3.5. Advantages of PINN Method for Transfer Learning

In this method, the incorporation of an additional supervised learning component enhances training performance and contributes to a reduction in computational time.

The method can decrease the approximation error by employing multi-scale neural networks, which capture high-frequency information.

This improved PINN avoids the problem of traditional PINNs getting stuck in local optima, thereby reducing optimization errors.

4. Numerical Examples

In this section, we provide several numerical examples involving the Fornberg–Whitham and the modified Fornberg–Whitham equations to show the effectiveness of domain adaptation in transfer learning for PINNs. To demonstrate the feasibility of the proposed algorithm, the baseline PINN method is first used to solve the forward and inverse problems of the Fornberg–Whitham equation, and the hyperparameters are analyzed. Then, the improved PINN method is used to reduce the error near the boundary of the domain, and the proposed method is shown to perform well on anisotropic problems. Next, the modified Fornberg–Whitham equation is tested, where the proposed method obtains more accurate numerical results in a shorter time.

To evaluate the performance of our methods, we adopt the relative $L_2$ error

$$\frac{\sqrt{\sum_{i=1}^{N} \left| \hat{u}(x_i, t_i) - u(x_i, t_i) \right|^2}}{\sqrt{\sum_{i=1}^{N} \left| u(x_i, t_i) \right|^2}},$$

where $u$ represents either the exact solution or the reference solution, and $\hat{u}$ denotes the prediction made by the neural network at a point $(x_i, t_i)$ of the test data. The test data are formed by randomly sampling points from the spatiotemporal domain.

The methodology is implemented in TensorFlow version 1.3, with variables of the float32 data type. Throughout the experiments, the Swish activation function is used. For the Adam optimizer, the learning rate decays exponentially every 50 steps with a decay rate of 0.98. The configuration and termination conditions for the L-BFGS optimizer follow the recommendations in [34]. Before training, the neural network parameters are randomly initialized following the Xavier initialization method [35].
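The relative $L_2$ error used as the evaluation metric can be computed as in the following minimal sketch. It assumes the usual definition, the Euclidean norm of the prediction error divided by the Euclidean norm of the reference values over the test points. The function name `relative_l2_error` and the sample values are hypothetical.

```python
import math

def relative_l2_error(pred, ref):
    """||pred - ref||_2 / ||ref||_2 over paired test-point values."""
    num = math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)))
    den = math.sqrt(sum(r ** 2 for r in ref))
    return num / den

reference = [3.0, 4.0]
print(relative_l2_error([3.0, 4.0], reference))   # perfect prediction
print(relative_l2_error([0.0, 0.0], reference))   # predicting zero everywhere
```

Because the error is normalized by the size of the reference solution, values from problems with different solution amplitudes can be compared directly.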

4.1. Fornberg–Whitham Equation

Consider Equation (1) with the following initial condition:

We have the exact solution:

The computation is carried out in the domain of

and

. In this assessment, a four-layer fully connected network architecture is employed, featuring 50 neurons in each layer and utilizing the Swish activation function. We also investigate the performance of PINNs with respect to the number of sampling points and boundary points. Specifically, the number of points selected for the trials shown is

. The optimization proceeds in two stages. First, the Adam optimizer is applied to train the network parameters from their initial values. Subsequently, the L-BFGS-B optimizer is used to fine-tune the neural network to achieve higher accuracy. The L-BFGS-B training terminates automatically according to a predefined increment tolerance. Unless otherwise specified, all experiments in this study adopt this unified setup and parameter configuration to ensure consistency and reproducibility of results.

4.1.1. Baseline PINN

In this section, we evaluate the performance of the baseline PINN method for the data-driven solution and the data-driven discovery of the Fornberg–Whitham equation. We systematically analyze various hyperparameters within the PINN framework to better understand their influence on the predictive accuracy and overall performance of the model.

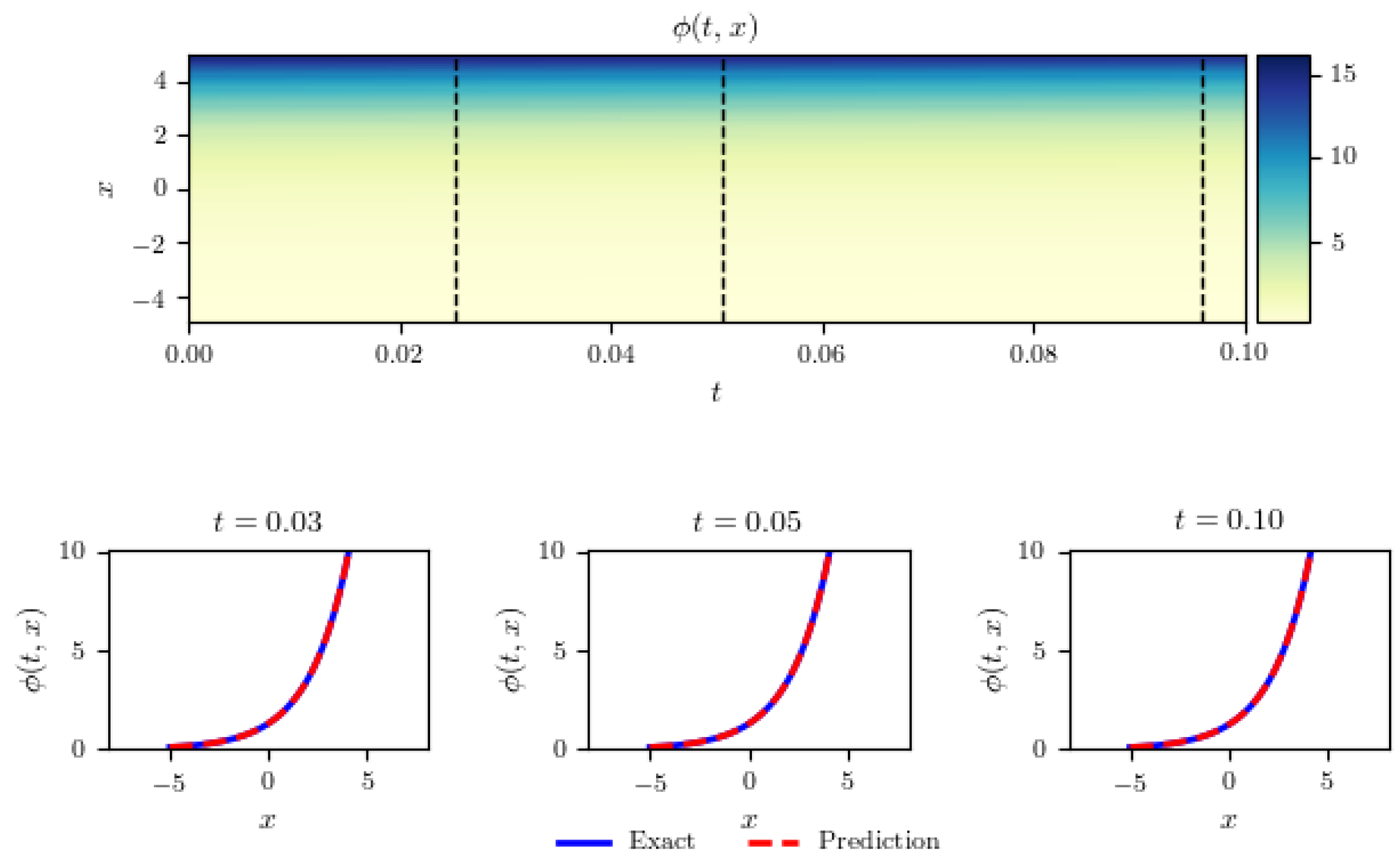

First, consider the data-driven solution of the FW equation. Figure 2 summarizes our findings. The predicted solution is consistent with the true solution, and the error between them is small, so it is feasible to solve the FW equation by the PINN method. With only a limited set of initial data, the physics-informed neural network captures the complex nonlinear dynamics of the FW equation. These dynamics are notoriously difficult to resolve accurately with conventional numerical techniques, which require a careful spatial and temporal discretization of the equation. To further evaluate the method, we conduct a series of systematic investigations to measure its predictive accuracy across varying numbers of training and collocation points, as well as different neural network architectures. The relative $L_2$ errors for different numbers of initial and boundary training data and different numbers of collocation points are detailed in Table 1. The observed trend shows that predictive accuracy improves as the number of training data increases, provided that there are enough collocation points. This highlights a principal advantage of physics-informed neural networks: encoding the structure of the physical laws through the collocation points yields a learning algorithm that is both more accurate and more data-efficient. Finally, Table 2 presents the relative $L_2$ errors obtained by varying the number of hidden layers and the number of neurons per layer, while keeping the numbers of initial training points and collocation points fixed.

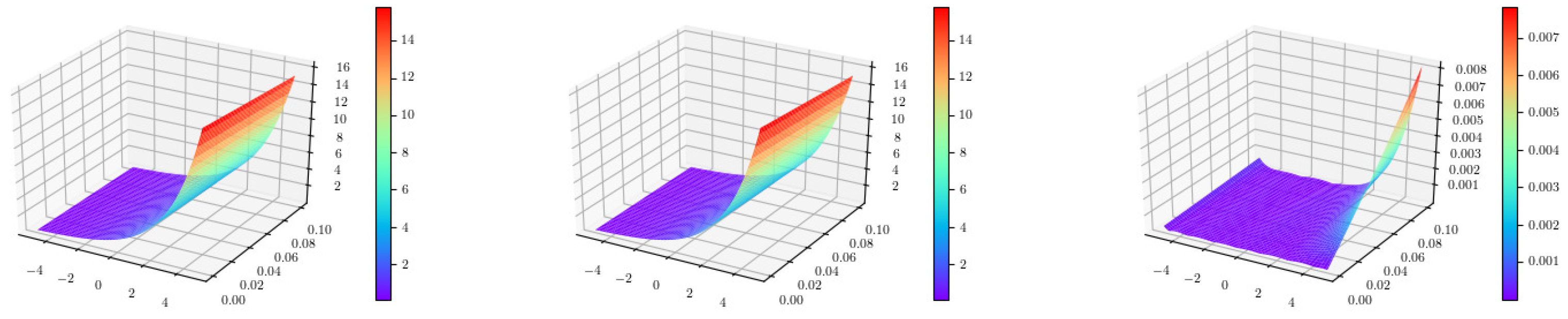

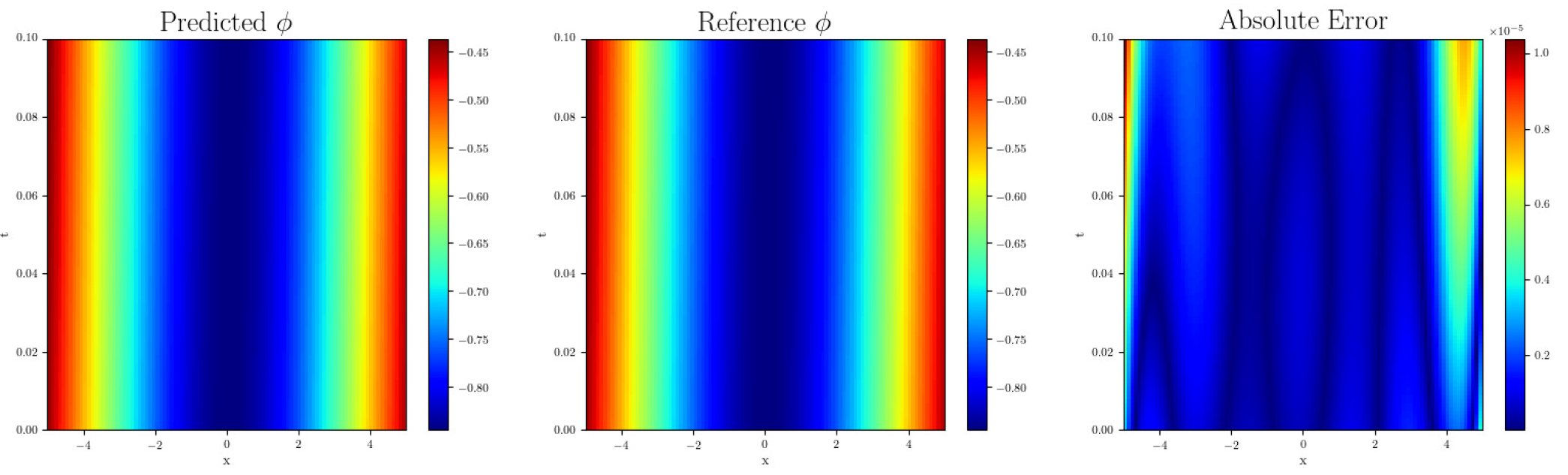

4.1.2. Multiple DA-PINN

Although the PINN method has shown feasibility in solving the forward problem of the FW equation, it may not always achieve the necessary accuracy, especially at the final time T = 0.1. Therefore, we use DA-PINN, as described in Section 3, as a more appropriate approach to this issue. Specifically, in the DA-PINN process, set the source domain to (

,

,

) and the target domain to (

).

The results obtained demonstrate that the relative

error of DA-PINN on the test set is 4.051218

, which is significantly lower compared to the relative

error of 8.7981

obtained when using the PINN method. Furthermore, Figure 3 and Figure 4 illustrate the error distributions of the two methods. Specifically, the figures show that the errors at T = 0.1 produced by DA-PINN are substantially smaller than those obtained using the PINN. This underscores the superior precision of DA-PINN for the FW equation compared to the standard PINN approach. The error of the numerical solution of Equation (1) solved by DA-PINN under different spatiotemporal slices is shown in Table 3.

Next, consider the following issues:

,

, and

. To maintain the precision of the source task, the source task interval must remain reasonably short. Yet, in more challenging scenarios, a single source task with a brief pre-training period may fail to provide an adequate initial estimate for the main training phase. To address this issue, we introduce a strategy with multiple transfer learning stages, as outlined below:

in the first transfer learning interval

, the standard transfer learning step is reused. The resulting parameters of the neural network are indicated by

. The transfer learning dataset for the

i-th interval is represented by

. In any transfer learning interval

,

, consider the following optimization problem:

The training sets of the two methods are listed in Table 4.
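The multiple transfer learning strategy relies on a sequence of progressively larger domains. The sketch below shows one simple way to generate such a sequence, assuming nested intervals whose right end grows in equal increments. The helper `expanding_domains`, the equal spacing, and the example interval are hypothetical and do not reproduce the intervals actually used in the experiments.

```python
def expanding_domains(start, end, n_stages):
    """Return nested intervals [start, b_i] whose right ends grow evenly to `end`."""
    width = (end - start) / n_stages
    return [(start, start + width * (i + 1)) for i in range(n_stages)]

# Hypothetical example: four stages covering [0, 4].
stages = expanding_domains(0.0, 4.0, 4)
for i, (a, b) in enumerate(stages):
    # Stage 0 is the source task; each later stage is warm-started from the
    # previous parameters and supervised on the previous (smaller) interval.
    prev = stages[i - 1] if i > 0 else None
    print(i, (a, b), "supervised on", prev)
```

Shorter first intervals keep the source task easy to solve accurately, at the price of more transfer stages.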

In this study, we encounter a problem with , and . To solve this issue, we employ Multiple DA-PINN. However, when using the PINN, we observe a relative error of 6.05 , which indicates that the solution is invalid. In contrast, Multiple DA-PINN produces a relative error of 1.51 , indicating that the solution is valid. Therefore, we conclude that Multiple DA-PINN is a reliable method for solving this particular problem. Our findings demonstrate the effectiveness of Multiple DA-PINN in solving large space issues with high accuracy.

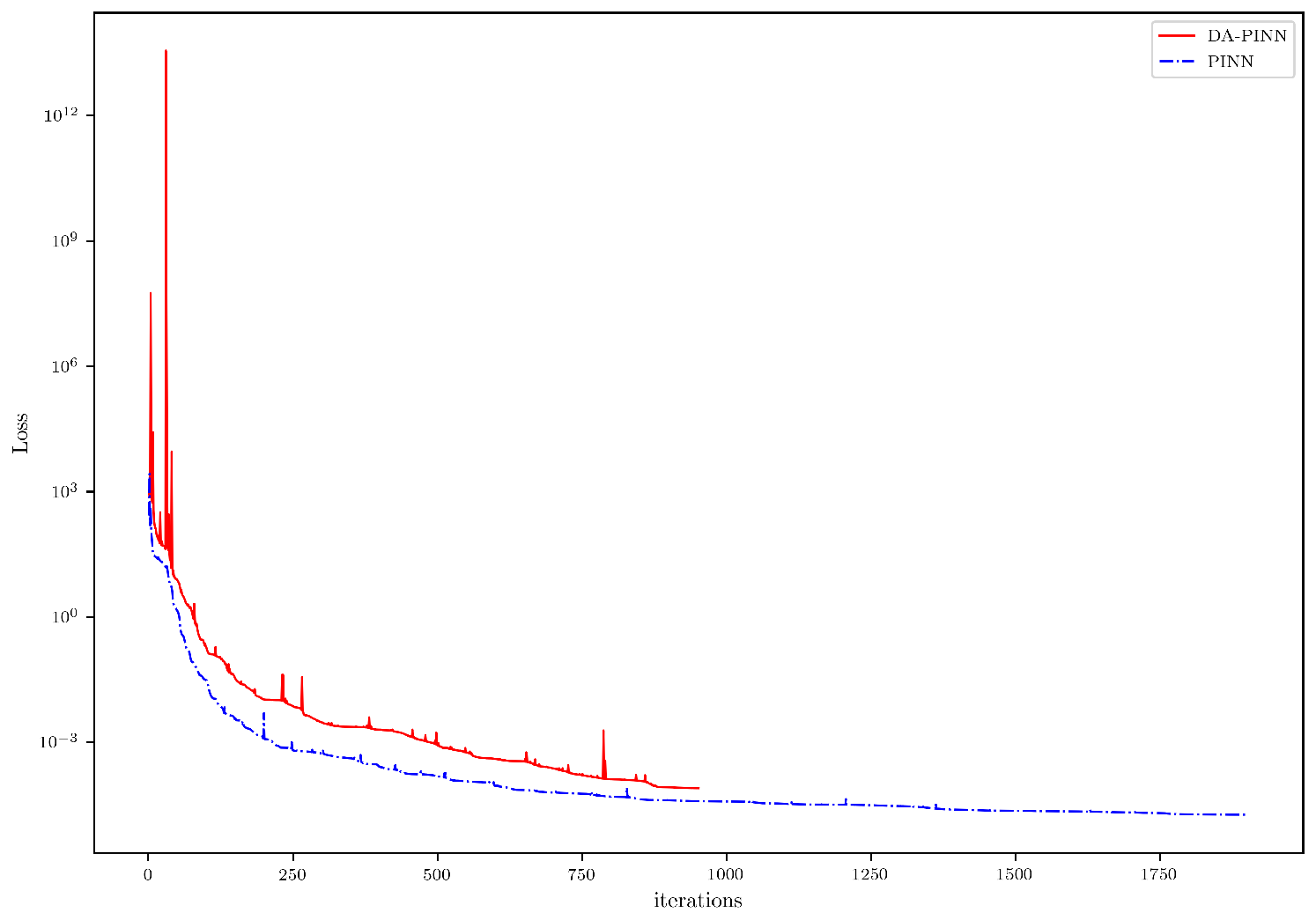

4.1.3. Prediction Results over a Long Time Interval

Given the computational instability encountered when utilizing the standard PINN for long-term evolution simulations, we investigate the potential improvements offered by DA-PINN. The spatial domain for this study is set as [−5, 5], with a time span of T = 20. The relative error for the PINN method in solving this particular problem is measured at 1.27 . In contrast, the relative error for the DA-PINN method in addressing the same issue is notably lower at 2.25 , indicating the effectiveness of the DA-PINN approach in mitigating the aforementioned challenges.

We next evaluate the convergence speed of the proposed method. Figure 5 illustrates the loss convergence of both the PINN and DA-PINN methods, and Figure 6 illustrates the relative $L_2$ error of both methods. Our results demonstrate that the DA-PINN approach exhibits faster convergence of the relative $L_2$ error and significantly smaller errors than the standard PINN method. These findings suggest that DA-PINN is an effective remedy for the numerical instability encountered when using PINNs for long-term evolution simulations. The improved convergence speed of DA-PINN may improve modeling accuracy and reduce computational cost.

4.2. Data-Driven Discovery of the FW Equation

In this section, we focus on the data-driven discovery of partial differential equations [13]. When partial observations of the flow field governed by Equation (2) are available and the equation parameter is unknown, we can employ the PINN method to reconstruct the complete flow field and infer the unknown parameter. Our approach approximates the solution with a deep neural network. Together with Equation (2), this yields a physics-informed neural network for the PDE residual. The derivation relies on automatic differentiation, which applies the chain rule to differentiate compositions of functions. Notably, the parameters of the differential operator are treated as trainable parameters of the physics-informed neural network. Numerical results obtained with the PINN are displayed in Figure 7.

The objective is to estimate the unknown parameter with the PINN even in the presence of noisy data. Table 5 summarizes our findings for this case. The physics-informed neural network identifies the unknown parameter with high precision, even when the training data are noisy. Specifically, the estimation error of the parameter is 0.44% under noise-free conditions and 0.55% when the training data are corrupted by 1% uncorrelated Gaussian noise.
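The noise experiment can be mimicked with a short sketch. It assumes that "1% noise" means zero-mean Gaussian perturbations whose standard deviation is 1% of the standard deviation of the clean data, and that the estimation error is the relative deviation of the identified parameter in percent. Both conventions, the function names `add_gaussian_noise` and `percent_error`, and all sample values are assumptions made for illustration.

```python
import math
import random

def add_gaussian_noise(values, level, seed=0):
    """Add uncorrelated Gaussian noise with std = level * std(values)."""
    rng = random.Random(seed)
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [v + level * std * rng.gauss(0.0, 1.0) for v in values]

def percent_error(estimate, true_value):
    """Relative deviation of an identified parameter, in percent."""
    return 100.0 * abs(estimate - true_value) / abs(true_value)

# Hypothetical clean samples of a wave profile and their noisy counterpart.
clean = [math.exp(-abs(x) / 2.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
noisy = add_gaussian_noise(clean, level=0.01)
print(max(abs(n - c) for n, c in zip(noisy, clean)))

# Hypothetical identified value versus a hypothetical true value of 1.0.
print(percent_error(1.0044, 1.0))
```

Fixing the random seed keeps the corrupted training set identical across runs, so differences in the identified parameter can be attributed to the method rather than to the noise realization.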

The Mscale-PINN method is an improved version of the PINN method that enhances solution accuracy by introducing an adaptive scale parameter in the first layer of the network. For the comparison, both the Mscale-PINN model and the traditional PINN model are trained using the same dataset and training procedure, with the relative $L_2$ error serving as the evaluation metric. A summary of our results for this example is presented in Table 6. The results show that the Mscale-PINN method achieves a lower relative $L_2$ error than the traditional PINN method when solving Equation (2). Further analysis suggests that, by dynamically adjusting the scale parameter, the Mscale-PINN method adapts better to physical processes at different scales and thereby provides more accurate solutions.

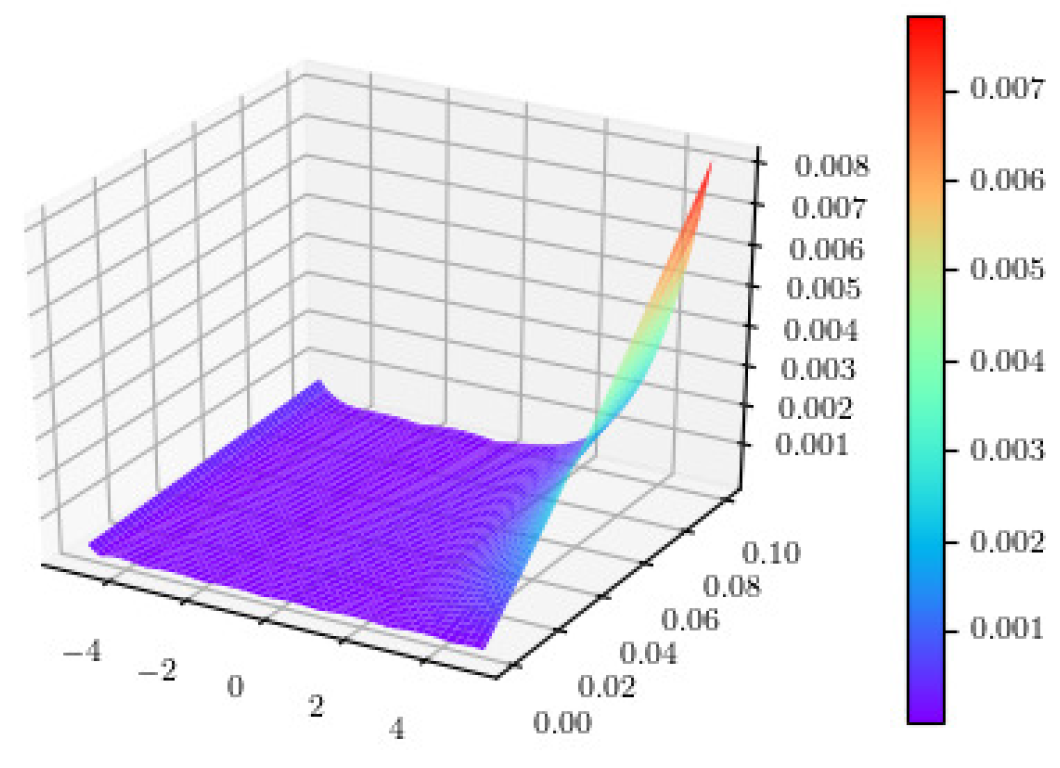

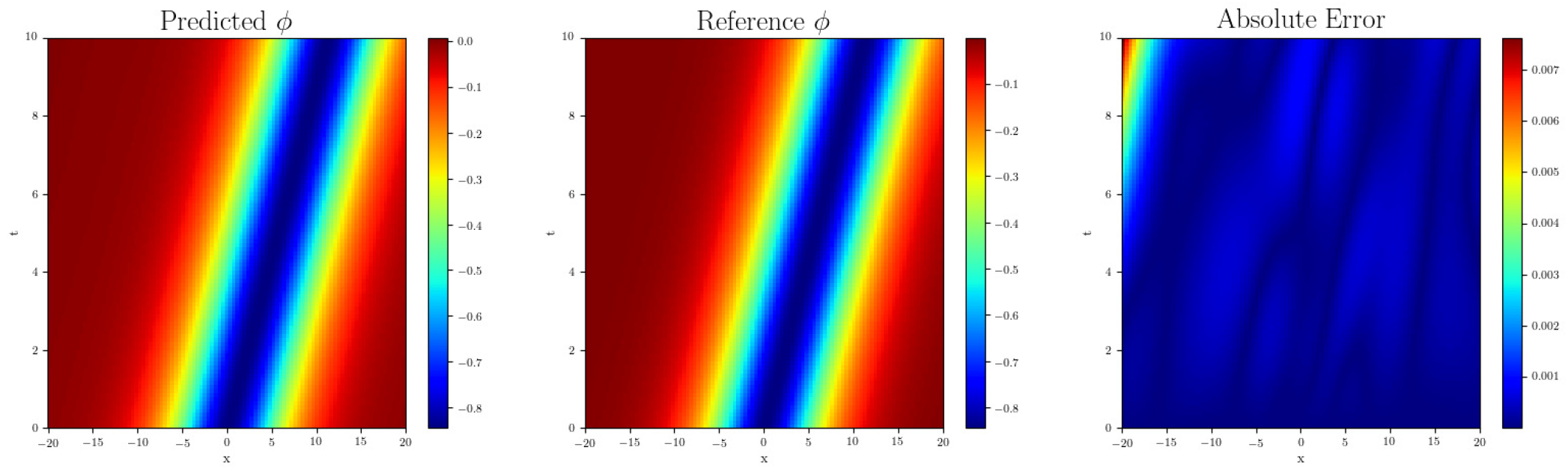

4.3. Modified Fornberg–Whitham Equation

In this section, we compare the proposed DA-PINN method with the variational iteration method (VIM) [31], as well as the baseline PINN. The VIM is an analytical approximation technique that solves nonlinear differential equations iteratively by constructing correction functionals; here it serves as a reference from traditional methods. As a well-established scheme, the VIM provides solutions with high accuracy within its convergence region and is often used to validate newly proposed numerical methods. Its results can be regarded as a ‘quasi-exact’ solution, allowing for a fair assessment of the accuracy of different numerical approaches. For this example, consider Equation (3) subject to the following initial condition:

where

c is the wave speed given by

and the corresponding exact solution is

The solution region for this problem is [−5, 5], with T = 0.1. The efficacy and applicability of DA-PINN for the current problem are presented in Table 7, which shows the absolute error for different values of $t$ and $x$. The data in the table demonstrate that the absolute errors produced by DA-PINN are considerably lower than those obtained using the VIM [31]. The exact and approximate solutions are depicted in Figure 8 and Figure 9.

4.3.1. The Problem of Large Space–Time Domain

In the subsequent analysis, we expand our research to a larger spatiotemporal domain, namely . This expansion enables us to assess the performance of Multiple DA-PINN in handling problems of increasing complexity. By applying Multiple DA-PINN to this extended domain, we aim to ascertain its efficacy in capturing spatiotemporal dynamics in larger-scale problems. The relative error of solving this problem with the multiple DA-PINN method is 8.578 , which is 82% lower than that calculated with the baseline PINN method.

We see in Figure 10 that the DA-PINN prediction is very accurate and indistinguishable from the exact solution. For the test problem, the absolute errors are reported in Table 8 for different values of $t$ and $x$.

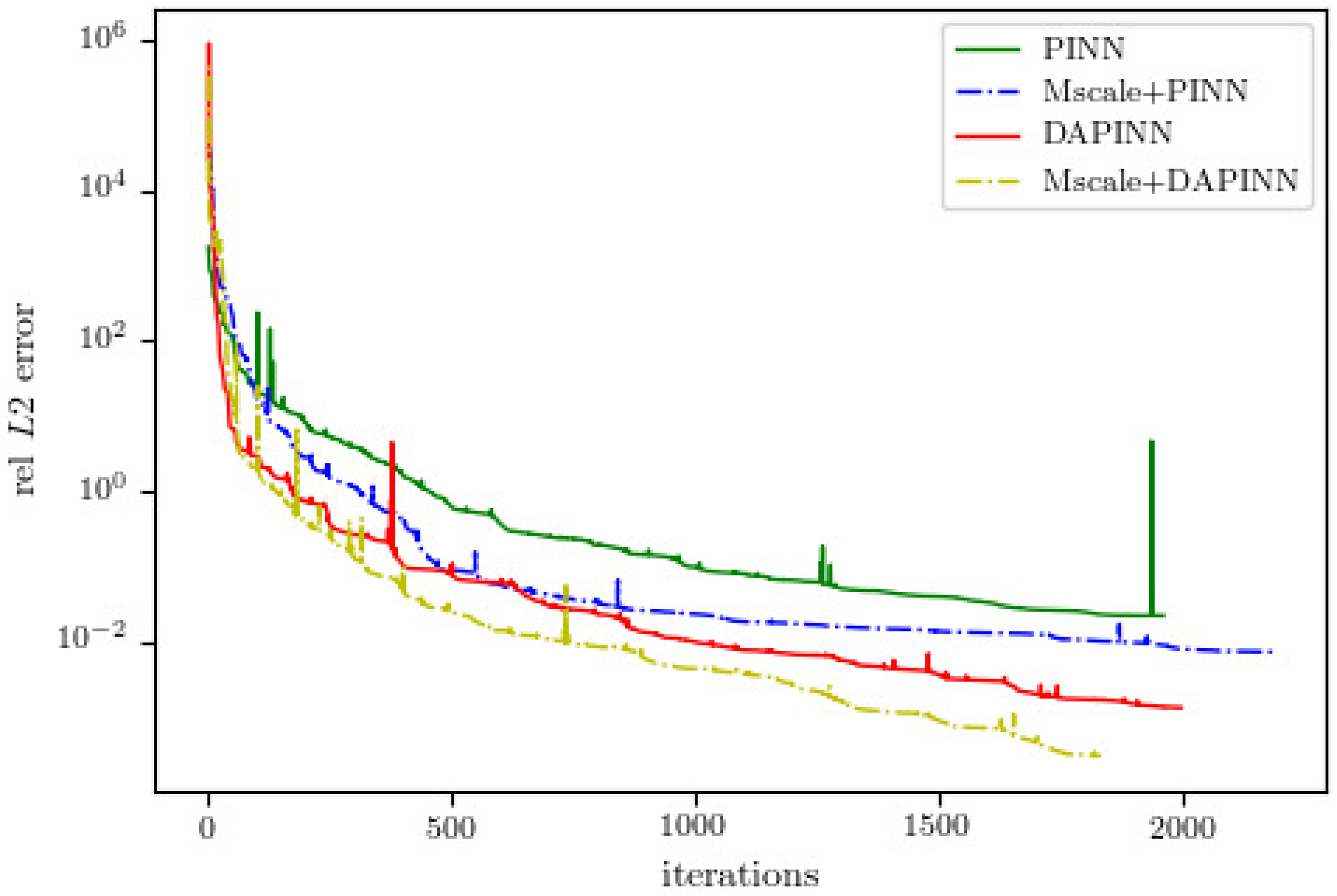

4.3.2. Ablation Analysis Experiments

To systematically evaluate the contribution of each component of the proposed DA-PINN method and its sensitivity to key hyperparameters, a series of ablation and sensitivity experiments are conducted in this section. All experiments are performed on the Fornberg–Whitham equation introduced in Section 4.2. The primary goal of these experiments is to isolate the two core components of the DA-PINN framework, namely domain adaptation and the multi-scale structure (Mscale-DNN), to verify their necessity. Under the settings of spatiotemporal domain

, four model configurations are compared: (a) Baseline PINN: the standard PINN method. (b) PINN + Mscale: using only the multi-scale network structure without domain adaptation. (c) DA-PINN (ours): employing domain adaptation with a standard fully connected neural network structure. (d) DA-PINN + Mscale: our complete proposed model, integrating both domain adaptation and the multi-scale network structure.

The relative $L_2$ error is adopted as the evaluation metric, and the results are presented in Table 9. The convergence of the relative $L_2$ error is shown in Figure 11.

It is evident from the results that introducing transfer learning yields an order-of-magnitude improvement in accuracy. This indicates that for problems with a certain spatial scale, decomposing complex tasks via domain adaptation strategies is crucial for enhancing PINN performance. Regarding the multi-scale structure, its standalone application brings limited improvements. However, when combined with transfer learning, it further enhances accuracy. This suggests that Mscale-DNN effectively improves the model’s expressive capacity, enabling better fitting of high-frequency components of the solution, thus forming a strong complement to the domain adaptation strategy.