1. Introduction

The widespread adoption of digital technologies and internet-based systems has produced massive databases with hundreds of variables. Through data mining, researchers seek to derive meaningful patterns from these large-scale databases to support evidence-based decision-making. The careful selection of relevant and significant variables has been shown to have considerable influence in multiple technology sectors, such as data mining [1], Internet of Things (IoT) applications [2], and machine learning frameworks [3]. In machine learning, unnecessary, unrelated, and poorly structured data points in high-dimensional datasets reduce the accuracy of classification algorithms and add computational burden [4]. IoT networks regularly struggle to manage and analyze the massive amounts of information produced by their sensors, and excessive or duplicated variables intensify these problems. To overcome these obstacles, data preparation methods, especially feature selection, become crucial for handling high-dimensional datasets and removing unnecessary features. Feature selection is a core element of data preprocessing and plays a vital role in building robust models by identifying the pertinent variables in a dataset.

A feature selection framework is built upon three fundamental components: (i) classification methods, such as support vector machines (SVMs) and k-nearest neighbors (kNN) [5], (ii) performance assessment criteria, and (iii) search techniques for discovering optimal feature combinations. Feature selection methodology can be divided into two primary strategic approaches: wrapper-based and filter-based methods. Wrapper approaches use the classification model as a standalone tool to measure the effectiveness of chosen feature sets through their classification results [6]. Conversely, filter methods operate without dependence on specific learning algorithms, analyzing feature groups solely according to inherent data properties rather than algorithm-dependent standards. Filter techniques may not consistently discover the best feature combination, whereas studies have demonstrated that wrapper methods generally yield better feature sets for particular classifiers. The primary objective of feature selection is to identify the most effective subset among all possible feature combinations. Search strategies can be broadly classified into two main types: exhaustive search methods and metaheuristic approaches [7]. Exhaustive search procedures examine the entire solution space, which expands exponentially as the number of features increases, requiring substantial computational power. Alternatively, metaheuristic methods use probabilistic optimization techniques, starting from randomly generated solution populations to navigate the search landscape efficiently. Metaheuristic strategies have become increasingly popular in feature selection applications because they produce nearly optimal results within reasonable processing times. These algorithms are especially beneficial due to their simple implementation and flexibility across diverse problem scenarios. A primary advantage of metaheuristic techniques is their capability to prevent premature convergence by balancing comprehensive search space exploration against intensive solution improvement. Nevertheless, conventional metaheuristic methods frequently face challenges because integer-order mathematical operators carry no memory of earlier search states, potentially resulting in early convergence within intricate optimization environments. Recent developments in fractional calculus have revealed that derivatives of non-integer order exhibit distinctive characteristics, including non-local behavior, memory retention, and hereditary properties that can substantially improve optimization effectiveness [8]. Fractional calculus, which extends conventional calculus beyond integer orders, offers mathematical operators that inherently integrate past information into present decision-making, making them especially well-suited for optimization challenges where previous exploration history should guide subsequent search strategies [9].

The Secretary Bird Optimization Algorithm (SBOA, also abbreviated SBA in this paper) [10] is a recent metaheuristic that emulates the predatory conduct of the secretary bird, a unique bird of prey, particularly its approach to tracking and capturing targets with accuracy. Although metaheuristic methods have shown considerable effectiveness in feature selection tasks in recent times, current techniques still encounter numerous obstacles that warrant additional investigation. Conventional binary transformation mechanisms within metaheuristic approaches generally depend on basic transfer functions like sigmoid functions, which may fail to properly represent the intricate relationships present in multi-dimensional feature environments. Additionally, standard opposition-based learning methods, despite being successful for maintaining population variety, do not possess the memory capabilities needed to retain important historical search data throughout iterations. To overcome these constraints, this research presents fractional calculus improvements that utilize Laguerre operators to enhance both population initialization and binary transformation procedures [11]. Laguerre polynomials offer superior approximation characteristics and seamless compatibility with fractional calculus operations. The orthogonal properties of Laguerre polynomials guarantee computational stability and convergence behaviors that are especially advantageous in multi-dimensional optimization scenarios [12]. When integrated with fractional calculus, these operators can preserve historical path information while delivering enhanced approximation capabilities in comparison to conventional methods.

The continuous improvement of optimization strategies remains crucial for achieving better results. To strengthen the original SBOA’s performance, two primary fractional calculus enhancements have been incorporated: a fractional opposition-based learning approach employing Laguerre operators during initialization to broaden population variety with non-local memory characteristics, and a Laguerre-based transfer mechanism that delivers adaptive binary transformation through orthogonal polynomial approximation. The fractional opposition-based learning element functions to improve population diversity while integrating memory influences from prior iterations, decreasing the probability of convergence to suboptimal solutions through its non-local characteristics. The Laguerre-based transfer mechanism substitutes the conventional sigmoid method, providing enhanced binary transformation abilities through its orthogonal approximation features and adaptive fractional parameters. We present multiple innovative contributions that can be summarized through the following components:

Implementation of fractional opposition-based learning techniques combined with Laguerre operators within SBA to accomplish improved population variety and non-local memory characteristics.

Development of a Laguerre-based transformation function that employs orthogonal polynomial approximation alongside fractional calculus concepts to deliver enhanced binary transformation capabilities beyond conventional sigmoid approaches.

Introduction of a binary variant of SBA, designated as FL-SBA, which integrates fractional calculus improvements to offer sophisticated solutions for feature selection challenges.

Empirical verification conducted through an exhaustive collection of ten gene expression datasets with statistical evaluation demonstrating the efficacy of fractional calculus incorporation.

The subsequent sections of this paper are organized in the following manner: Section 2 provides a comprehensive review of relevant literature, while Section 3 offers a concise overview of the Secretary Bird Optimization Algorithm. Section 4 details the development of the proposed FL-SBA methodology, followed by Section 5, which presents comprehensive experimental procedures and analytical findings. The paper concludes with Section 6, which summarizes the key findings and implications of this research.

2. Literature Review

The accelerated growth of computing technologies has produced massive databases distinguished by growing intricacy and multi-dimensionality. In these multi-dimensional data frameworks, the presence of superfluous, unrelated, and disorganized entries significantly impacts classification effectiveness and increases processing requirements [4]. Feature selection has emerged as a vital component of data preparation, playing an important role in developing robust models [5]. The effectiveness of metaheuristic methods in addressing feature selection challenges relies significantly on their ability to deliver optimal outcomes [13]. Nevertheless, conventional techniques encounter fundamental constraints due to their dependence on integer-order mathematical operators, which do not possess the memory retention and non-local characteristics that could improve optimization effectiveness in complex search environments.

Metaheuristic methods are traditionally divided into four main groups according to their conceptual origins: collective intelligence, human-inspired techniques, physics-derived approaches, and evolutionary strategies [14]. These algorithms gain their efficiency from their ease of implementation and uncomplicated design, allowing for convenient modification to various specific uses. Within feature selection applications, considerable progress has been made using collective intelligence methods, which stem from analyzing group animal behavioral patterns. This classification includes multiple core algorithms, such as BFPA [15], BDA [16], and BCS [17]. Xue et al. provided a significant advancement by improving PSO to accomplish faster computation while optimizing both feature reduction and classification accuracy [18]. Human-based approaches constitute another important classification derived from social interactions and behavioral mechanisms. This classification encompasses various significant methods, such as Sewing Training-Based Optimization [19], the dynastic optimization algorithm (DOA) [20], the cultural evolution algorithm [21], and the recently introduced Painting Training Based Optimization (PTBO) [22].

Physics-based approaches employ concepts from natural physical phenomena. Kirkpatrick et al. [23] introduced the initial algorithm of this type, called “Simulated Annealing.” Drawing inspiration from the metallurgical annealing procedure, this method has subsequently emerged as among the most widely acknowledged optimization techniques. Other physics-based methods include the multi-verse optimizer [17], Henry gas solubility optimization [24], and gravitational search methods [25]. A major development within this classification is the Energy Valley Optimizer (EVO), a novel metaheuristic algorithm proposed by Mahdi Azizi et al. and inspired by advanced physics principles regarding stability and different modes of particle decay [26].

Various evolutionary methods can be employed to optimize network weights. Genetic algorithms represent a logical option since crossover operations align well with neural network structures by combining components from existing networks to discover improved solutions. CMA-ES [27], a continuous optimization approach, proves effective for weight optimization as it can model interdependencies among parameters. Additionally, approaches like cellular encoding [28] and NEAT [29] have been created to evolve the network architecture itself, which proves particularly valuable for establishing necessary recurrent connections. Nevertheless, due to the extensive space requiring optimization, these techniques are typically constrained to networks of moderate parameter size.

Recent optimization advances demonstrate the efficacy of hybrid methodological frameworks. Alazzam et al.’s “GEP DNN4Mol” combines Gene Expression Programming with Deep Neural Networks for molecular design, effectively navigating high-dimensional discrete spaces through synergistic integration of evolutionary algorithms and deep learning [30]. Their approach demonstrates that hybrid frameworks outperform single-method strategies in complex optimization landscapes. Similarly, Wang et al.’s metalearning-based alternating minimization algorithm incorporates historical optimization information through learned strategies, achieving superior convergence in nonconvex environments characterized by multiple local optima [31]. Both studies support the view that augmenting optimization algorithms with mathematical frameworks providing memory effects and adaptive mechanisms substantially improves performance. These insights directly motivate FL-SBA’s fractional calculus integration: fractional derivatives inherently provide non-local memory properties analogous to explicit metalearning mechanisms, while Laguerre polynomials offer adaptive approximation capabilities similar to the benefits of hybrid architectures, specifically addressing high-dimensional feature selection challenges in gene expression data.

Although conventional metaheuristic methods have achieved success, various significant constraints remain. Standard binary transformation approaches generally utilize basic transfer functions like sigmoid functions, which may insufficiently represent intricate relationships present within multi-dimensional feature environments [32]. Opposition-based learning methods, despite being successful for maintaining population variety, do not possess memory capabilities that could retain important historical search data throughout iterations. Most importantly, integer-order mathematical operators naturally lack the non-local characteristics and memory effects that could avoid early convergence in complex optimization environments. Fractional calculus, which broadens conventional calculus beyond integer orders, has developed as an influential mathematical structure for improving optimization methods. In contrast to integer-order derivatives, fractional operators exhibit distinctive features including non-local behavior, memory effects, and hereditary properties [33]. These characteristics allow algorithms to retain historical data and make better-informed decisions using previous exploration experiences.

Current studies have shown the efficacy of fractional calculus in optimization challenges. Peng et al. [34] revealed that fractional-order operators can enhance convergence properties in evolutionary algorithms by incorporating memory effects. Wang and Liu [35] illustrated that fractional derivatives offer a superior balance between exploration and exploitation in metaheuristic methods compared to conventional integer-order techniques. Nevertheless, most current research concentrates on continuous optimization challenges, leaving substantial research opportunities in discrete optimization areas such as feature selection. Laguerre polynomials constitute a complete orthogonal system over the range [0,∞) and exhibit superior approximation characteristics that render them especially appropriate for optimization uses [36]. The orthogonal properties of Laguerre polynomials guarantee computational stability and convergence behaviors that are advantageous in multi-dimensional optimization challenges. Dueñas Herbert et al. [37] showed that Laguerre-based approximation techniques surpass conventional methods in function approximation tasks, especially for problems containing exponential-type functions. The combination of Laguerre polynomials with fractional calculus has produced encouraging outcomes in diverse mathematical applications. Laguerre operators integrated with fractional calculus deliver enhanced approximation abilities compared to traditional polynomial techniques. Nevertheless, their implementation in binary optimization and feature selection remains mostly unexplored. Sophisticated mathematical structures have also been investigated to improve metaheuristic performance. Opposition-based learning has been thoroughly examined as a population initialization approach, with numerous modifications suggested to enhance diversity and convergence [38]. However, conventional opposition-based learning does not possess the memory properties that could retain valuable search data throughout iterations.

3. Proposed Secretary Bird Optimization Algorithm

The Secretary Bird is endemic to sub-Saharan Africa and occupies diverse open habitats ranging from grassland areas to savanna regions [10]. The bird employs two primary survival mechanisms, utilizing camouflage techniques when feasible or rapidly escaping through either terrestrial locomotion or aerial flight. These adaptive behaviors served as the foundation for developing the Secretary Bird Optimization Algorithm, where the bird’s predatory preparation and hunting implementation are mirrored in the algorithm’s setup and search phases.

3.1. Initial Preparation Phase

The birds’ locations within the space correspond to candidate solutions via the values of decision variables. The algorithm begins by stochastically placing the birds’ coordinates throughout the solution space utilizing Equation (1).

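$$X_{i,j} = lb_j + r \times (ub_j - lb_j), \quad i = 1, 2, \dots, N, \quad j = 1, 2, \dots, D \qquad (1)$$

$X_i$ represents the position of the i-th Secretary Bird, whereas $lb_j$ and $ub_j$ define the lower and upper limits of the solution space, respectively. The parameter r denotes a stochastically produced value that ranges from 0 to 1.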

SBOA operates as a population-driven optimization technique that commences with numerous candidate solutions (Equation (2)). These solutions are stochastically positioned within the upper and lower limits. During every iteration cycle, the algorithm monitors the optimal solution discovered as the estimated optimum.

$$X = \left[ x_{i,j} \right]_{N \times D} \qquad (2)$$

$X$ denotes the complete set of Secretary Birds, whereas $X_i$ corresponds to an individual bird (the i-th element). $x_{i,j}$ signifies the value of the j-th parameter for the i-th Secretary Bird. $N$ defines the overall quantity of Secretary Birds within the population, and D indicates the dimensionality of the problem under consideration. The proposed parameter values from each secretary bird are assessed through the objective function, with outcomes organized into a vector employing Equation (3).

$$F = \left[ F_1, F_2, \dots, F_N \right]^{T}, \quad F_i = F(X_i) \qquad (3)$$

F denotes the objective function vector, whereas the objective function value obtained by the i-th secretary bird is represented as $F_i$.
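The initialization and evaluation steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the `sphere` test objective, and the bounds are hypothetical, and a single scalar `lb`/`ub` pair is applied to every dimension.

```python
import random

def init_population(n_birds, dim, lb, ub, rng):
    """Place each bird uniformly at random inside [lb, ub] in every dimension."""
    return [[lb + rng.random() * (ub - lb) for _ in range(dim)]
            for _ in range(n_birds)]

def evaluate(population, objective):
    """Return the vector F holding one objective value per bird."""
    return [objective(x) for x in population]

def sphere(x):
    # Hypothetical test objective (sum of squares), not taken from the paper.
    return sum(v * v for v in x)

rng = random.Random(0)
X = init_population(n_birds=30, dim=5, lb=-10.0, ub=10.0, rng=rng)
F = evaluate(X, sphere)
# The index of the smallest objective value marks the current estimated optimum.
best_index = min(range(len(F)), key=F.__getitem__)
```

Passing a seeded `random.Random` instance keeps repeated runs comparable, which matters when several independent runs are later compared.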

3.2. Search Pattern of Secretary Bird

Secretary birds pursue serpents through three separate stages: identification, ingestion, and strike, with each stage comprising one-third of the overall hunting period (IT). Within SBOA, the preliminary exploration phase (t < IT/3) proves essential, emulating how secretary birds leverage their stature and sharp eyesight to locate serpents within foliage while cautiously examining the terrain with their elongated limbs. This stage incorporates differential evolution, utilizing inter-population differences to create novel solutions and avoid early convergence toward local extrema. The birds’ coordinate modifications throughout prey identification are mathematically represented via Equations (4) and (5), facilitating thorough investigation of the solution domain.

$t$ signifies the iteration index, whereas $IT$ represents the maximum number of iterations. The modified coordinates of the i-th secretary bird throughout the preliminary stage are designated by $X_i^{new,P1}$. During this stage, $x_{random_1}$ and $x_{random_2}$ correspond to stochastically chosen candidate solutions. The parameter $R_1$ defines a randomly produced one-dimensional array of dimensions 1 × D, containing values uniformly spread across [0, 1], where D refers to the solution domain dimensionality. Additionally, $x_{i,j}^{new,P1}$ denotes the coordinate value for the j-th dimension, while $F_i^{new,P1}$ indicates the associated objective function fitness score.
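The prey-identification update can be illustrated as follows. The sketch assumes the usual differential form, in which the difference of two randomly chosen birds is scaled by a uniform 1 × D vector and added to the current position, followed by greedy replacement when the objective improves. The names `explore_step` and `sphere` are hypothetical, and boundary handling is omitted for brevity.

```python
import random

def sphere(x):
    # Hypothetical objective for illustration only (lower is better).
    return sum(v * v for v in x)

def explore_step(population, fitness, objective, rng):
    """One exploration update (t < IT/3): differential move, then greedy keep."""
    n, dim = len(population), len(population[0])
    for i in range(n):
        a, b = rng.sample(range(n), 2)            # two randomly chosen birds
        r1 = [rng.random() for _ in range(dim)]   # 1 x D uniform values in [0, 1)
        candidate = [population[i][j] + (population[a][j] - population[b][j]) * r1[j]
                     for j in range(dim)]
        f_new = objective(candidate)
        if f_new < fitness[i]:                    # accept only improving moves
            population[i], fitness[i] = candidate, f_new
    return population, fitness

rng = random.Random(1)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(4)] for _ in range(10)]
fit = [sphere(x) for x in pop]
before = list(fit)
pop, fit = explore_step(pop, fit, sphere, rng)
```

Because a move is kept only when it lowers the objective, no bird's fitness can get worse during this stage.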

Throughout prey ingestion, secretary birds utilize deliberate positioning and tactical footwork instead of direct assault. Leveraging their raised posture and shielded limbs, they fatigue serpents via methodical movements, represented mathematically through Brownian motion (RB). The SBOA algorithm integrates these behaviors by merging previously optimal coordinates with Brownian principles via Equations (7) and (8). This strategy facilitates efficient localized exploration while preserving links to successful coordinates, harmonizing global search with localized optimization to prevent early convergence.

The formula includes a stochastic array, represented as $RB$, which produces values according to a standard normal distribution with parameters μ = 0 and σ = 1. This array possesses dimensions of 1 × D. The parameter $x_{best}$ represents the best solution discovered up to the present iteration.
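A hedged sketch of the Brownian-motion stage is given below. The text only states that the best position is combined with a standard normal array RB, so the exact update here is an assumption: it follows the form commonly given for SBOA, with a growth factor exp((t/IT)^4) and a shifted term (RB − 0.5). The function name `brownian_step` is hypothetical.

```python
import math
import random

def brownian_step(x, x_best, t, max_iter, rng):
    """Candidate position near x_best, perturbed by Brownian-style noise."""
    rb = [rng.gauss(0.0, 1.0) for _ in x]       # RB: 1 x D standard normal draws
    scale = math.exp((t / max_iter) ** 4)       # assumed factor, grows from 1 to e
    return [xb + scale * (r - 0.5) * (xb - xi)
            for xi, xb, r in zip(x, x_best, rb)]

rng = random.Random(2)
current = [1.0, -2.0, 0.5]
best = [0.2, 0.1, -0.3]
candidate = brownian_step(current, best, t=50, max_iter=100, rng=rng)
```

The perturbation is proportional to the distance between the bird and the best position, so a bird that already sits on the best position stays there and the search stays local.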

During the concluding hunting stage, secretary birds perform targeted strikes utilizing forceful leg blows directed at the serpent’s head to achieve swift neutralization. For larger targets, they may elevate and release serpents from elevation. The SBOA simulates this conduct through Levy flight dynamics, merging brief movements with sporadic extended leaps. This multi-scale method harmonizes global exploration with localized precision optimization, assisting in avoiding convergence to local extrema while effectively pursuing optimal solutions.

SBOA integrates a nonlinear disturbance coefficient (1 − t/IT)^(2 × t/IT) to equilibrate exploration and exploitation, prevent premature convergence, and enhance performance. Coordinate modifications throughout the strike phase are specified by Equations (9) and (10).

The algorithm’s optimization accuracy is enhanced via the integration of a weighted Levy flight element, indicated by the symbol ‘Rl’.

$Levy(D)$ represents the Levy flight distribution function, which is calculated through the subsequent Equation:

The framework includes two fixed parameters: one established at 0.01 and another at π. The system additionally employs stochastic variables bounded within a designated range. The computational approach for this element is then specified.

$\Gamma$ indicates the gamma function and $\eta$ is equal to 1.5.
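The Levy flight step and the nonlinear disturbance coefficient can be sketched as below. The sketch uses Mantegna's algorithm, a standard way to generate Levy-distributed steps, with the scale 0.01 and the exponent 1.5 quoted in the text. Drawing the two random variables from a standard normal distribution is an assumption, since the text only describes them as stochastic. The function names are hypothetical.

```python
import math
import random

def levy_sigma(eta=1.5):
    """Scale term of Mantegna's algorithm, built from the gamma function."""
    num = math.gamma(1 + eta) * math.sin(math.pi * eta / 2)
    den = math.gamma((1 + eta) / 2) * eta * 2 ** ((eta - 1) / 2)
    return (num / den) ** (1 / eta)

def levy_step(dim, rng, eta=1.5, s=0.01):
    """Return a 1 x D list of heavy-tailed step lengths."""
    sigma = levy_sigma(eta)
    steps = []
    for _ in range(dim):
        u = rng.gauss(0.0, 1.0)
        v = rng.gauss(0.0, 1.0)
        while v == 0.0:                          # avoid division by zero
            v = rng.gauss(0.0, 1.0)
        steps.append(s * u * sigma / abs(v) ** (1 / eta))
    return steps

def disturbance(t, max_iter):
    """Nonlinear coefficient (1 - t/IT)^(2 t/IT): 1 at the start, 0 at the end."""
    return (1 - t / max_iter) ** (2 * t / max_iter)

steps = levy_step(dim=5, rng=random.Random(3))
```

Most draws are small, but the heavy tail occasionally produces a long jump, which is the mix of brief movements and sporadic leaps described for the strike stage.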

3.3. Escape Process of Secretary Bird

Secretary birds encounter dangers from predators such as eagles, hawks, and canids. They utilize two defensive strategies: physical escape and concealment behavior. Their elongated limbs facilitate swift locomotion, traversing 20–30 km daily, which has earned them the designation “marching eagles.” They are also capable of aerial flight to evade threats. Furthermore, they employ concealment by merging with their surroundings. The SBOA framework integrates these as two equally probable scenarios: environmental concealment (Z1) and dynamic evasion (Z2).

When predators are detected, secretary birds initially search for adjacent concealment opportunities. If these are unavailable, they turn to aerial or terrestrial escape. Their approach includes a dynamic disturbance coefficient, (1 − t/IT)^2, which optimizes the equilibrium between investigating unexplored regions and utilizing established solutions. This coefficient may be modified to prioritize either investigation or utilization. These escape behaviors are mathematically represented in Equations (14) and (15).

The parameter r is established at 0.5, whereas $R_2$ produces a one-dimensional array of dimensions (1 × D) according to a standard normal distribution. The term $x_{random}$ signifies a stochastically produced candidate solution during the present iteration. $K$ corresponds to an integer value that is randomly selected as either 1 or 2, with its computational procedure outlined in Equation (16), where rand(1, 1) stochastically produces a random value within (0, 1).
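The two escape scenarios can be sketched as follows. The branch probability r = 0.5, the coefficient (1 − t/IT)^2, the normal array, and the integer chosen from {1, 2} come from the text. The exact update expressions are assumptions that follow the form commonly given for SBOA, and the function names `hide`, `flee`, `pick_k`, and `escape_step` are hypothetical.

```python
import random

def pick_k(rng):
    """Random integer in {1, 2}, computed as round(1 + rand)."""
    return round(1 + rng.random())

def hide(x, x_best, t, max_iter, rng):
    """Z1, concealment: shrinking perturbation around the best position."""
    coeff = (1 - t / max_iter) ** 2
    return [xb + (2 * rng.gauss(0.0, 1.0) - 1) * coeff * xi
            for xi, xb in zip(x, x_best)]

def flee(x, x_random, rng):
    """Z2, evasion: move relative to a randomly chosen candidate solution."""
    k = pick_k(rng)
    return [xi + rng.gauss(0.0, 1.0) * (xr - k * xi)
            for xi, xr in zip(x, x_random)]

def escape_step(x, x_best, x_random, t, max_iter, rng, r=0.5):
    """Choose Z1 or Z2 with equal probability when r = 0.5."""
    if rng.random() < r:
        return hide(x, x_best, t, max_iter, rng)
    return flee(x, x_random, rng)

rng = random.Random(4)
new_pos = escape_step([1.0, 2.0], [0.5, 0.5], [3.0, -1.0], t=10, max_iter=100, rng=rng)
```

As in the hunting stages, the candidate would normally replace the current position only if it improves the objective value.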

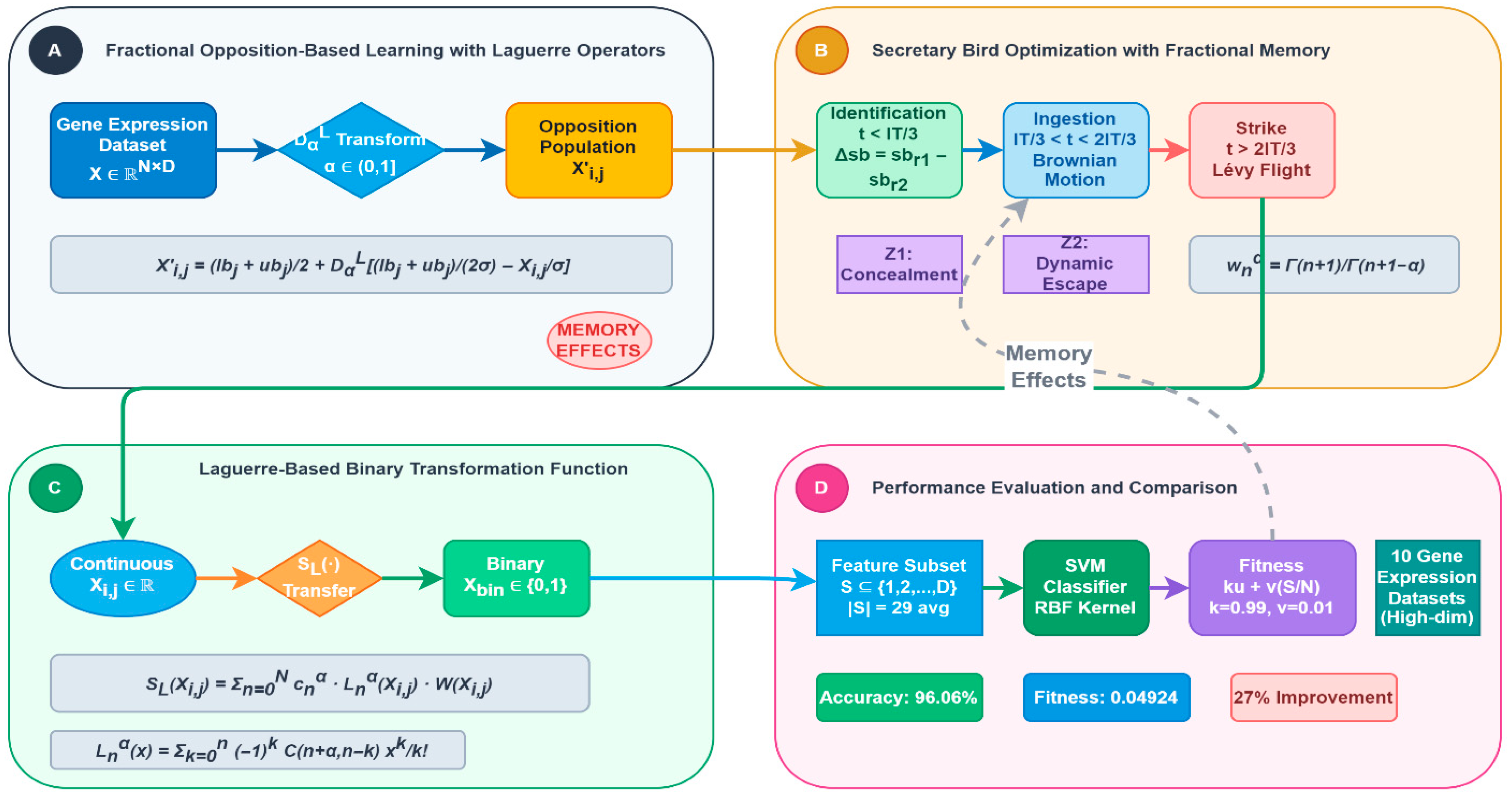

4. Proposed FL-SBA

4.1. Initialization with Fractional Opposition-Based Learning with Laguerre Operators

Traditional opposition-based learning generates candidate solutions by creating opposite points in the search space, typically using simple reflection operations as shown in Figure 1. However, these approaches lack memory characteristics and fail to utilize historical search information that could guide more effective population initialization [39]. Fractional calculus provides a mathematical framework that naturally incorporates memory effects through non-local operators, enabling the algorithm to learn from past exploration experiences during initialization. The fractional opposition-based learning approach utilizes Laguerre operators, which form a complete orthogonal system on [0,∞) and possess excellent approximation properties.

Achieving optimal solutions in computational optimization fundamentally depends on maximizing the efficiency of population initialization and maintaining diversity throughout the search process. The FL-SBA introduces an innovative fractional opposition-based learning technique that integrates Laguerre operators to enhance initial population generation and provide non-local memory effects. This approach extends beyond traditional opposition-based learning by incorporating fractional calculus principles that naturally preserve historical search information and provide superior exploration capabilities through orthogonal polynomial approximation.

The solution exploration domain is constrained within a predefined interval on the x-axis, bounded by the lower limit lb and the upper limit ub. The coordinate system’s origin is positioned at the exact midpoint of this interval, serving as the reference point for the transformation. Two angular parameters, $\theta_1$ and $\theta_2$, define the solution transformation process: $\theta_1$ represents the incident angle and $\theta_2$ characterizes the refraction angle.

Additionally, $l$ and $l^*$ represent the distances of the incident and refracted rays, respectively. The refraction can be calculated using the following formula:

Put

and n = 1 in Equation (17) and SBA is applied to a high-dimensional space, the refracted direction

can be determined using the following equation:

where

indicates the i-th secretary bird position at j-th dimensions,

is the refracted inverse solution of

, and

and

are the lower and upper bounds.

$D^{\alpha}$ is the Laguerre-based fractional derivative operator. The fractional operator with the Laguerre basis is defined as [12]:

where $L_n$ are Laguerre polynomials and $w_n$ are fractional weights, $w_n$ = Γ(n + 1)/Γ(n + 1 − α), and α ∈ (0, 1] is the fractional order.
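The two ingredients named above, the fractional weights Γ(n + 1)/Γ(n + 1 − α) and the Laguerre polynomials, can be computed with the standard library alone. This sketch does not reproduce the full operator, whose exact form is not recoverable from the text. The function names are hypothetical, and the value α = 0.5 in the example is chosen only for illustration.

```python
import math

def fractional_weight(n, alpha):
    """Weight Gamma(n + 1) / Gamma(n + 1 - alpha) for alpha in (0, 1]."""
    if n + 1 - alpha == 0:
        # Gamma has a pole at 0, so the ratio tends to 0 (case n = 0, alpha = 1).
        return 0.0
    return math.gamma(n + 1) / math.gamma(n + 1 - alpha)

def laguerre(n, x):
    """Classical Laguerre polynomial L_n(x) via the three-term recurrence."""
    if n == 0:
        return 1.0
    prev, cur = 1.0, 1.0 - x
    for k in range(1, n):
        prev, cur = cur, ((2 * k + 1 - x) * cur - k * prev) / (k + 1)
    return cur

alpha = 0.5  # illustrative fractional order, not a value reported in the paper
weights = [fractional_weight(n, alpha) for n in range(6)]
values = [laguerre(n, 1.0) for n in range(6)]
```

For α = 1 the weight reduces to n, the factor produced by an ordinary first derivative, which shows how the fractional order interpolates toward the integer-order case.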

4.2. Laguerre-Based Transformation Function

The feature selection process is typically conceptualized as a binary optimization challenge. However, the SBA generates particle positions that contain continuous numerical values. Therefore, a transformation mechanism is required to convert SBA’s continuous solution domain into a discrete binary framework. Within feature selection applications, particle values must be constrained to either 0 or 1 to represent feature exclusion or inclusion, respectively.
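For context, the conventional sigmoid-based binarization that the proposed transfer function is meant to replace can be sketched as below. This is the common S-shaped baseline, not the Laguerre-based function of this paper, and the function names are hypothetical.

```python
import math
import random

def sigmoid(x):
    """S-shaped transfer function mapping a real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def binarize(position, rng):
    """Set each bit to 1 with probability sigmoid(value), else 0."""
    return [1 if rng.random() < sigmoid(v) else 0 for v in position]

rng = random.Random(5)
mask = binarize([2.3, -1.7, 0.0, 4.1], rng)    # 1 = feature kept, 0 = dropped
selected = [j for j, bit in enumerate(mask) if bit == 1]
```

Each continuous coordinate becomes the probability of keeping the corresponding feature, so strongly positive coordinates are almost always selected and strongly negative ones almost never.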

The proposed Laguerre-based transfer function, used to transform each solution into its corresponding binary representation, is defined as follows [12]:

where $L_n^{(\alpha)}$ are generalized Laguerre polynomials of fractional order α, $c_n$ are fractional coefficients, $w(x)$ is a weight function, and N is the truncation order.

Generalized Laguerre Polynomials: The fractional-order Laguerre polynomials $L_n^{(\alpha)}(x)$ form a complete orthogonal system on the semi-infinite interval $[0, \infty)$ and are defined through the Rodrigues formula [12]:

$$L_n^{(\alpha)}(x) = \frac{x^{-\alpha} e^{x}}{n!} \frac{d^n}{dx^n}\left(e^{-x} x^{n+\alpha}\right)$$

where $\alpha$ represents the fractional order parameter, and $n$ denotes the polynomial degree.

Weight Function: The weight function ensures proper orthogonality and is explicitly defined as:

$$w(x) = x^{\alpha} e^{-x}$$

This exponential weight function guarantees the convergence of integrals and maintains the orthogonality property of the Laguerre basis.

Orthogonality Relation: The generalized Laguerre polynomials satisfy the following orthogonality condition with respect to the weight function:

$$\int_0^{\infty} w(x)\, L_n^{(\alpha)}(x)\, L_m^{(\alpha)}(x)\, dx = \frac{\Gamma(n + \alpha + 1)}{n!}\, \delta_{nm}$$

where $\Gamma$ denotes the gamma function, and $\delta_{nm}$ represents the Kronecker delta.

Fractional Coefficients: The expansion coefficients $c_n$ incorporate memory effects through fractional calculus and are computed as:

These coefficients weight the contribution of each polynomial term according to the fractional order $\alpha$, enabling the transfer function to retain historical search information.

Truncation Order: The summation is truncated at order $N$, which determines the approximation accuracy. For practical implementation in feature selection problems, we set $N = 5$ (the truncation order denoted M in Section 4.4) based on convergence analysis, which provides sufficient approximation quality while maintaining computational efficiency. Higher values of $N$ yield diminishing improvements beyond this threshold.
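The generalized Laguerre polynomials and their orthogonality can be checked numerically with a short sketch. It evaluates the polynomials through the standard three-term recurrence and approximates the weighted inner product with a midpoint rule, assuming the classical weight x^α e^(−x). The function names, the integration limit, and the step count are illustrative choices, and α = 0.5 is not a value reported in the paper.

```python
import math

def gen_laguerre(n, alpha, x):
    """Generalized Laguerre polynomial of degree n and order alpha at x."""
    if n == 0:
        return 1.0
    prev, cur = 1.0, 1.0 + alpha - x
    for k in range(1, n):
        prev, cur = cur, ((2 * k + 1 + alpha - x) * cur - (k + alpha) * prev) / (k + 1)
    return cur

def inner_product(n, m, alpha, upper=60.0, steps=60000):
    """Midpoint-rule estimate of the weighted integral of L_n * L_m on [0, upper]."""
    h = upper / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        weight = x ** alpha * math.exp(-x)       # assumed classical weight
        total += weight * gen_laguerre(n, alpha, x) * gen_laguerre(m, alpha, x)
    return total * h

def expected_norm(n, alpha):
    """Closed-form squared norm Gamma(n + alpha + 1) / n!."""
    return math.gamma(n + alpha + 1) / math.factorial(n)

alpha = 0.5
off_diagonal = inner_product(2, 3, alpha)    # close to zero
diagonal = inner_product(2, 2, alpha)        # close to expected_norm(2, alpha)
```

The recurrence avoids symbolic differentiation, so evaluating all terms up to a truncation order of 5 costs only a handful of multiplications per input value.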

The fractional Laguerre polynomials are defined as:

4.3. The Evaluation Function

Incorporating excessive features into datasets creates computational difficulties, since classification algorithms typically experience reduced performance when processing irrelevant or redundant attributes. Consequently, dimensional reduction becomes essential to mitigate this problem. Feature selection serves as a methodology designed to enhance classifier performance and computational efficiency by removing extraneous or non-contributory variables. When assessing potential solutions, evaluation criteria extend beyond simple classification accuracy to include the quantity of retained features. When multiple solutions achieve equivalent accuracy levels, the approach utilizing fewer features is considered superior. Hence, the fitness evaluation seeks to maximize classification performance through error minimization while simultaneously limiting the feature subset size. The fitness metric outlined below functions as the evaluation standard for FL-SBA solutions, maintaining equilibrium between these dual optimization goals.

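$$Fitness = \alpha \, E + \beta \, \frac{|S|}{N}$$

where $\alpha \in [0, 1]$, $E$ indicates the classification error rate computed by the SVM classifier, $\beta = 1 - \alpha$, $|S|$ is the number of selected features, and N is the total number of features [5]. The algorithm architecture and workflow for the FL-SBA are illustrated in Figure 2.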
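The trade-off encoded by the fitness metric can be made concrete with a short sketch. It assumes the usual weighted-sum form, error rate times α plus the selected-feature ratio times β, with α = 0.99 and β = 0.01 as listed in the configuration parameters. The function name and the example numbers are hypothetical.

```python
def fitness(error_rate, n_selected, n_total, alpha=0.99):
    """Weighted sum of classification error and selected-feature ratio."""
    beta = 1.0 - alpha
    return alpha * error_rate + beta * (n_selected / n_total)

# Two hypothetical subsets with the same error rate but different sizes.
small_subset = fitness(error_rate=0.10, n_selected=50, n_total=1000)
large_subset = fitness(error_rate=0.10, n_selected=200, n_total=1000)

# A slightly more accurate subset wins even if it keeps more features.
accurate_subset = fitness(error_rate=0.08, n_selected=200, n_total=1000)
```

With α close to 1 the error term dominates, so subset size mainly acts as a tie-breaker between solutions of similar accuracy.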

4.4. Computational Complexity

The complexity distinction between standard and enhanced approaches is quantifiable. Without Laguerre/fractional components, binary metaheuristics require O(N × D × T) operations for population-based search across N = 30 agents, D dimensions, and T = 100 iterations. FL-SBA augments this baseline with two fractional calculus enhancements: initialization-phase fractional opposition, O(N × D × M), and iteration-phase Laguerre transformation, O(N × D × M × T), where M = 5 denotes the truncation order. The one-time initialization term is negligible next to the per-iteration term, so the combined complexity becomes O(N × D × T × (1 + M)), a 6× multiplicative factor over the baseline. Empirical measurements are consistent with this prediction: FL-SBA averages 145.95 s versus 26.17 s for standard SBA (a 5.58× ratio), with all algorithms run under identical population and iteration settings (N = 30, T = 100). This controlled comparison isolates the computational cost attributable specifically to fractional calculus and Laguerre polynomial operations, indicating that performance improvements stem from the algorithmic changes rather than from a larger population or iteration budget.
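The arithmetic behind the 6× estimate and the measured 5.58× ratio can be reproduced directly. The operation counts below are simple products of the quoted quantities. The dimensionality D = 2000 is a hypothetical placeholder, since it cancels out of the ratios.

```python
def operation_counts(n_agents, dim, iters, trunc):
    """Return (baseline, initialization extra, per-iteration extra) counts."""
    base = n_agents * dim * iters
    init_extra = n_agents * dim * trunc
    iter_extra = n_agents * dim * trunc * iters
    return base, init_extra, iter_extra

base, init_extra, iter_extra = operation_counts(n_agents=30, dim=2000, iters=100, trunc=5)

predicted_ratio = (base + iter_extra) / base            # 1 + M = 6
with_init_ratio = (base + init_extra + iter_extra) / base  # adds M / T = 0.05
measured_ratio = 145.95 / 26.17                          # reported runtimes in seconds
```

The initialization term raises the predicted factor only from 6 to 6.05, which is why it can be dropped from the combined bound.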

5. Experimental Results and Discussion

5.1. Datasets

For experimental validation, ten gene expression datasets were employed to thoroughly assess the performance of the proposed algorithm in feature selection applications [18]. Table 1 summarizes the essential characteristics of these datasets, including the number of features, samples, categories, and the ratio of observations within the minority and majority classes for each dataset. These datasets are characterized by their high-dimensional nature with limited sample sizes, making them frequently used benchmarks in feature selection research studies.

5.2. Configuration Parameters

The performance of FL-SBA is assessed by comparing it with various state-of-the-art feature selection methods for high-dimensional data. Testing protocols involve conducting 20 independent runs for each algorithm, with computational limits set to 100 iterations and a population of 30 search agents. The algorithm configuration utilizes two primary control parameters: β = 0.01 and α = 0.99.

To ensure reproducibility and methodological rigor, this section provides comprehensive details of all experimental configurations, including classifier parameters, data preprocessing protocols, validation procedures, and handling of methodological challenges inherent in high-dimensional gene expression analysis.

Data preprocessing pipeline:

Normalization: Z-score standardization applied independently within each cross-validation fold, computed only on training data.

Missing values: K-nearest neighbors imputation (k = 5) when necessary.

Post-selection scaling: Selected features re-normalized using training set statistics only.

$$z = \frac{x - \mu}{\sigma}$$

where μ represents the mean and σ denotes the standard deviation of each feature, computed exclusively on the training partition within each cross-validation fold. Z-score normalization was selected over min–max scaling due to its superior performance with gene expression data containing outliers and its compatibility with SVM classifiers.
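The key point of this pipeline is that scaling statistics come from the training partition only and are then reused on held-out data. A minimal standard-library sketch follows; the helper names `fit_zscore` and `apply_zscore`, the toy data, and the use of the population standard deviation are assumptions for illustration.

```python
from statistics import mean, pstdev


def fit_zscore(train_rows):
    """Return per-feature (mean, std) computed from training rows only."""
    stats = []
    for col in zip(*train_rows):
        mu = mean(col)
        sigma = pstdev(col)  # assumption: population standard deviation
        # Guard against constant features to avoid division by zero.
        stats.append((mu, sigma if sigma > 0 else 1.0))
    return stats


def apply_zscore(rows, stats):
    """Standardize rows using previously fitted training statistics."""
    return [[(x - mu) / sigma for x, (mu, sigma) in zip(row, stats)] for row in rows]


# Toy example: statistics are fitted on the training fold, then reused on the test fold.
train = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
test = [[4.0, 40.0]]
stats = fit_zscore(train)
train_z = apply_zscore(train, stats)
test_z = apply_zscore(test, stats)
```

Fitting the statistics on the full dataset instead would leak information from the test fold into the wrapper evaluation.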

Support Vector Machine (SVM) Classifier Configuration

The wrapper-based feature selection approach employed Support Vector Machine (SVM) as the classification algorithm for evaluating feature subset quality. SVM was selected due to its demonstrated effectiveness on high-dimensional, low-sample-size datasets typical of gene expression analysis and its widespread adoption in feature selection literature, facilitating fair comparison with existing methods.

Kernel Function: Radial Basis Function (RBF) kernel was employed for its ability to handle nonlinear relationships common in biological data [5].

Hyperparameter Configuration:

Regularization parameter (C): C = 10, controlling the trade-off between margin maximization and training error minimization. This value was determined through preliminary grid search on a held-out validation set and maintained consistently across all experiments.

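Kernel coefficient (γ): γ = ‘scale’, automatically computed as:

$$\gamma = \frac{1}{n_{\text{features}} \cdot \operatorname{Var}(X)}$$

where $n_{\text{features}}$ is the number of features after selection and $\operatorname{Var}(X)$ is the variance of the training feature matrix. This adaptive scaling ensures appropriate kernel width regardless of feature dimensionality after selection.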

Multi-class Strategy: For datasets with more than two classes, the one-versus-rest (OvR) strategy was employed, training one binary classifier per class against all remaining classes.

Convergence Criteria: Maximum iterations = 1000, convergence tolerance = $1 \times 10^{-3}$.
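To make the adaptive kernel coefficient concrete, the sketch below computes it by hand, assuming the scikit-learn definition of `gamma='scale'`, namely 1 / (n_features × Var(X)) with the variance taken over all entries of the feature matrix. The function name `gamma_scale` and the toy matrix are illustrative.

```python
def gamma_scale(rows):
    """Compute 1 / (n_features * Var(X)), with Var taken over all entries of X."""
    values = [x for row in rows for x in row]
    n_features = len(rows[0])
    mu = sum(values) / len(values)
    variance = sum((x - mu) ** 2 for x in values) / len(values)
    if variance == 0:
        raise ValueError("feature matrix has zero variance")
    return 1.0 / (n_features * variance)


# Toy matrix with two selected features; all entries together have variance 1.
X_selected = [[0.0, 2.0], [2.0, 0.0]]
gamma = gamma_scale(X_selected)
```

Because the value depends on the number of columns, it changes automatically whenever the candidate feature subset changes size.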

Nested Cross-Validation Framework

To prevent optimistically biased wrapper scores, we implemented stratified 10 × 5-fold nested cross-validation:

Structure:

Outer loop: 10-fold stratified CV for unbiased performance estimation.

Inner loop: 5-fold stratified CV for feature selection within each outer training set.

Process: Feature selection operates exclusively on inner folds; selected features evaluated on completely held-out outer test folds.
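The nesting described above can be sketched as index bookkeeping. The following is a simplified illustration under stated assumptions: a round-robin stratified split, hypothetical helper names `stratified_folds` and `nested_cv_splits`, and toy labels. It is not the authors' code.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k, seed=0):
    """Split sample positions into k folds, spreading each class across folds."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


def nested_cv_splits(labels, outer_k=10, inner_k=5, seed=0):
    """Yield (outer_train, outer_test, inner_splits) as lists of sample indices."""
    outer = stratified_folds(labels, outer_k, seed)
    for i, outer_test in enumerate(outer):
        outer_train = [idx for j, fold in enumerate(outer) if j != i for idx in fold]
        train_labels = [labels[idx] for idx in outer_train]
        # Inner folds are built from the outer training set only.
        inner = stratified_folds(train_labels, inner_k, seed + i + 1)
        inner_splits = []
        for m, val_pos in enumerate(inner):
            val = [outer_train[p] for p in val_pos]
            fit = [outer_train[p] for n, fold in enumerate(inner) if n != m for p in fold]
            inner_splits.append((fit, val))
        yield outer_train, outer_test, inner_splits


# Toy labels: 30 samples of class 0 and 20 of class 1.
labels = [0] * 30 + [1] * 20
splits = list(nested_cv_splits(labels))
```

Feature selection would run on the `(fit, val)` pairs, and only the final subset would be scored on `outer_test`, which never appears in any inner split.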

5.3. Experimental Results

The experimental methodology is structured into two distinct stages. The first stage involves evaluating the proposed FL-SBA against the standard SBA. Following this, the second stage conducts performance comparisons between the developed FL-SBA and contemporary feature selection techniques. The assessment framework for this research relies on four fundamental evaluation criteria, detailed as follows [40]:

- Classification Accuracy: Measures the proportion of samples that the classifier labels correctly when trained on the selected feature subset.

- Average Fitness Score: The average fitness score per iteration can be determined using the following formula:

$$\text{Fitness}_{\text{avg}} = \frac{1}{T} \sum_{t=1}^{T} \text{Fitness}_t$$

where $\text{Fitness}_t$ is the Fitness at iteration $t$ and $T$ is the number of iterations.

- Selected feature quantity: Refers to the number of attributes retained in the optimal configuration.

5.3.1. Results of Ablation Experiments

To systematically evaluate the individual contributions of each proposed component in FL-SBA, we conducted comprehensive ablation experiments. This analysis isolates the effects of (i) Laguerre-based fractional opposition-based learning (FOBL) and (ii) Laguerre-based binary transfer function (LTF) by comparing four algorithmic variants across all ten gene expression datasets. The following algorithmic configurations were evaluated to assess component-wise contributions: SBA (Standard Secretary Bird Algorithm with conventional sigmoid transfer function and random initialization), SBA + LTF (SBA incorporating only the Laguerre-based transfer function while maintaining random initialization), SBA + FOBL (SBA with fractional opposition-based learning initialization but using conventional sigmoid transfer function), and FL-SBA (Complete proposed model integrating both FOBL and LTF components).

Table 2 presents the comparative performance across all variants, revealing the individual and synergistic contributions of each component.

Comparing SBA + LTF against the baseline SBA demonstrates that the Laguerre-based transfer mechanism alone provides substantial improvements. Classification accuracy increased by 0.75 percentage points on average (from 94.41% to 95.16%), while the number of selected features fell by 9.2% (from 36.9 to 33.5 features). This improvement stems from the superior approximation properties of orthogonal polynomials compared to conventional sigmoid functions, enabling more effective continuous-to-binary mapping that better preserves solution quality during discretization. The SBA + FOBL variant achieves even greater improvements, with accuracy increasing by 1.07 percentage points (94.41% to 95.48%) and fitness values decreasing by 20.6%. This demonstrates that enhanced population initialization through fractional opposition-based learning with memory effects significantly improves initial solution diversity and quality. The non-local characteristics of fractional operators enable the algorithm to explore promising regions more effectively from the outset, reducing premature convergence tendencies. The complete FL-SBA model achieves superior performance compared to either individual enhancement, with 96.06% accuracy representing a 1.65 percentage point improvement over the baseline. Notably, the full model selects 22.2% fewer features (28.7 vs. 36.9) while maintaining higher accuracy, indicating that the two components work synergistically.

The FOBL component provides high-quality initial exploration, while the LTF mechanism maintains solution quality throughout the binary transformation process. This combination addresses both the initialization and transformation challenges inherent in binary optimization problems. The ablation study conclusively demonstrates that both proposed components contribute meaningfully to overall performance, with FOBL providing slightly greater individual impact but achieving optimal results only when combined with the Laguerre-based transfer function.

5.3.2. Results of Sensitivity Analysis

To establish the robustness of FL-SBA and provide practical implementation guidance, we conducted comprehensive sensitivity analyses examining the impact of key algorithmic parameters: fractional order α, control parameter β, truncation order N, population size, and iteration limits. The fractional order α controls the memory effect strength in both the opposition-based learning initialization and the Laguerre polynomial approximation. We evaluated α ∈ {0.3, 0.5, 0.7, 0.9, 1.0} across five representative datasets as shown in Table 3.

The results demonstrate that α = 0.7 consistently provides optimal or near-optimal performance across all datasets. Lower values (α ≤ 0.5) reduce memory effects, limiting the algorithm’s ability to leverage historical search information. Higher values (α ≥ 0.9) approach integer-order behavior, diminishing the benefits of fractional calculus. The performance degradation is relatively modest across the range [0.5, 0.9], indicating reasonable robustness, though α = 0.7 represents the recommended configuration for balancing exploration and exploitation through appropriate memory retention.

The parameter β influences the balance between Lévy flight components during the hunting phase. The test values β ∈ {0.001, 0.01, 0.05, 0.1, 0.5} were selected following a roughly logarithmic progression to systematically examine the exploration-exploitation trade-off. The baseline value β = 0.01 originates from the original SBA formulation and serves as the control condition. The surrounding values assess performance sensitivity across different operational regimes: minimal stochasticity at 0.001, moderate enhancement at 0.05 and 0.1, and excessive randomness at 0.5. This enables identification of optimal operating ranges and performance degradation boundaries. In Table 4, we examine these values to assess their impact on algorithm behavior.

The optimal value β = 0.01 aligns with the original SBA formulation and demonstrates consistently superior performance. Very small values (β = 0.001) slightly reduce exploration capability, while larger values (β ≥ 0.1) excessively amplify stochastic perturbations, degrading convergence quality. The performance remains relatively stable across the range [0.001, 0.05], indicating that β = 0.01 provides appropriate balance for the Lévy flight mechanism. Values exceeding 0.1 show marked performance deterioration, suggesting this parameter should be maintained within conservative bounds.

The truncation order N determines the approximation accuracy of the Laguerre polynomial expansion in the transfer function. We evaluated N ∈ {5, 8, 10, 15, 20} to establish the trade-off between accuracy and computational cost as shown in Table 5.

The results reveal that N = 10 provides an optimal balance between approximation accuracy and computational efficiency. Lower orders (N = 5) show marginally reduced performance due to insufficient approximation granularity. Higher orders (N ≥ 15) yield negligible performance improvements (typically < 0.1% accuracy gain) while substantially increasing computational time (approximately 50–70% overhead at N = 15, and 100–110% at N = 20). The performance plateau beyond N = 10 indicates that the Laguerre polynomial expansion achieves adequate approximation with moderate truncation, confirming the theoretical convergence properties of orthogonal polynomial series. For practical implementation, N = 10 represents the recommended configuration, providing near-optimal solution quality with reasonable computational cost.

Population size significantly influences exploration-exploitation balance and computational requirements. Table 6 presents the results for population sizes of {10, 20, 30, 40, 50} agents across representative datasets.

Population size demonstrates a diminishing returns pattern. Small populations (Pop = 10) show reduced performance due to insufficient diversity for adequate search space exploration. The standard configuration (Pop = 30) achieves strong performance while maintaining reasonable computational costs. Larger populations (Pop ≥ 40) provide marginal improvements (typically 0.1–0.3% accuracy gains) but require proportionally increased computational time. The cost–benefit analysis suggests that Pop = 30 represents the optimal practical configuration, though resource-constrained environments might consider Pop = 20 (sacrificing ~0.3% accuracy for a 33% time reduction), while applications demanding maximum accuracy regardless of computational cost could employ Pop = 40–50.

In Table 7, we investigated the convergence behavior and performance saturation by varying maximum iterations across {50, 75, 100, 150, 200}.

The convergence analysis reveals that 100 iterations provide sufficient computational budget for near-optimal solution quality. Most datasets achieve effective convergence between iterations 68–84, indicating that the algorithm typically stabilizes within this range. Extended iterations (150–200) produce minimal improvements (<0.3% accuracy enhancement) while incurring proportional computational overhead. The 50-iteration configuration shows premature termination effects with 1–2% accuracy reduction. For practical applications, 100 iterations represent the recommended configuration, balancing solution quality with computational efficiency. Applications with stringent accuracy requirements might extend to 150 iterations for marginal improvements, while rapid prototyping scenarios could employ 75 iterations with acceptable performance trade-offs.

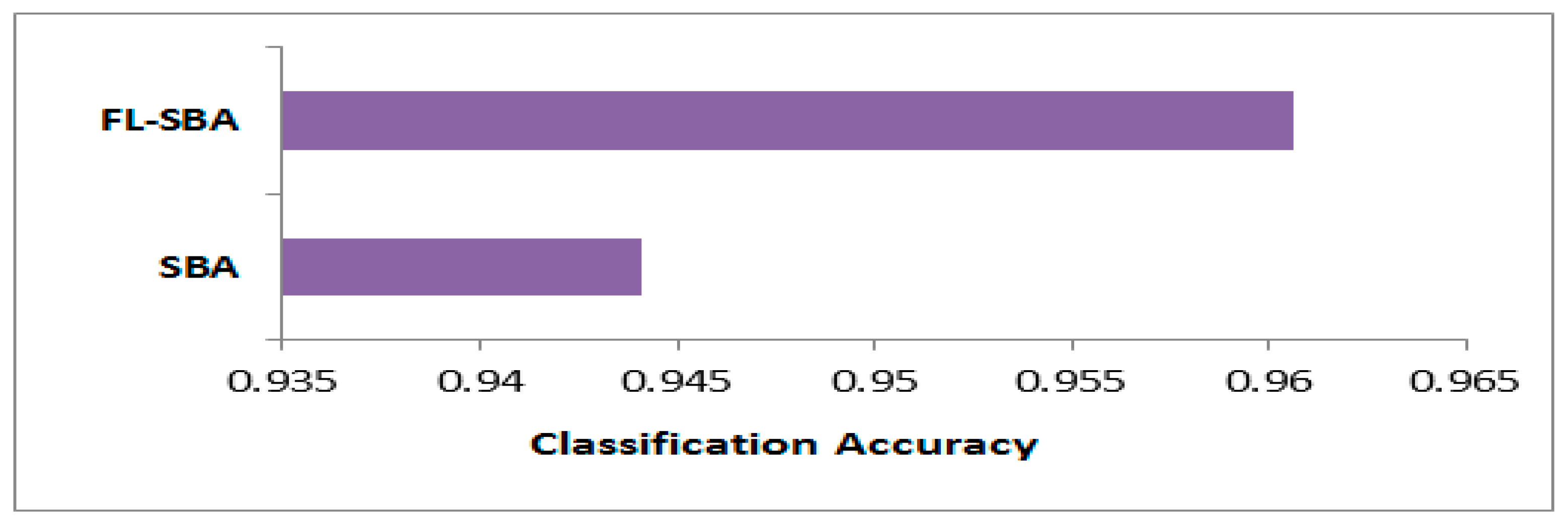

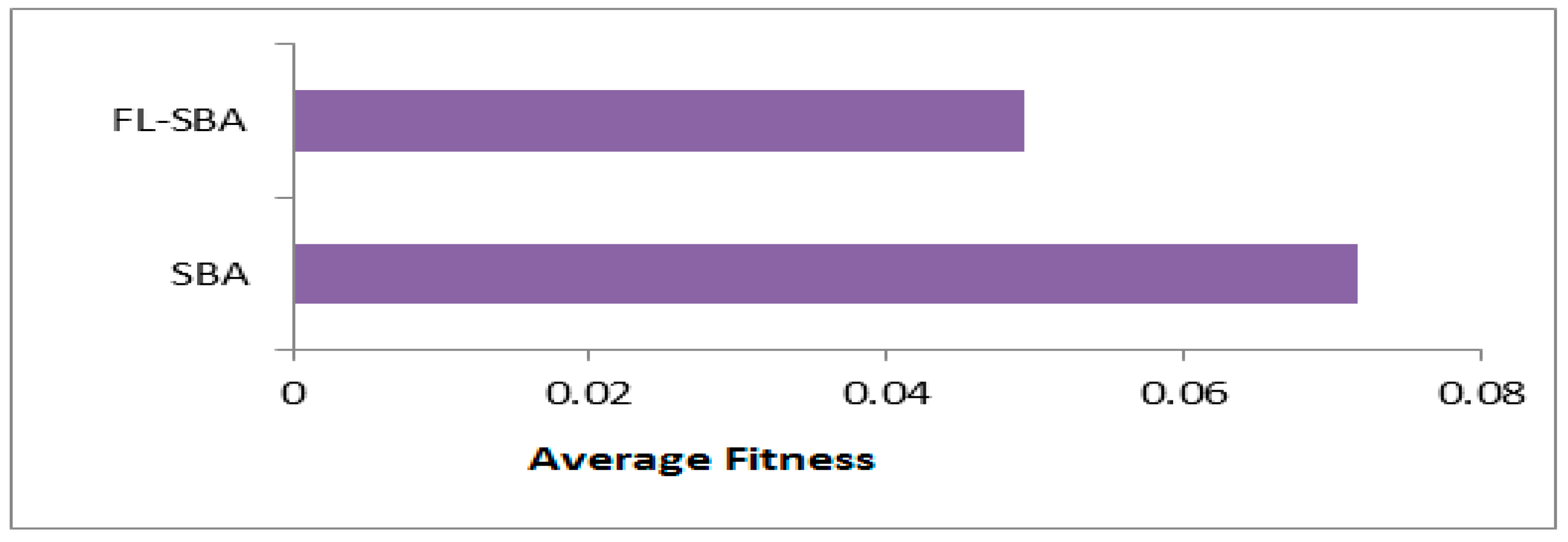

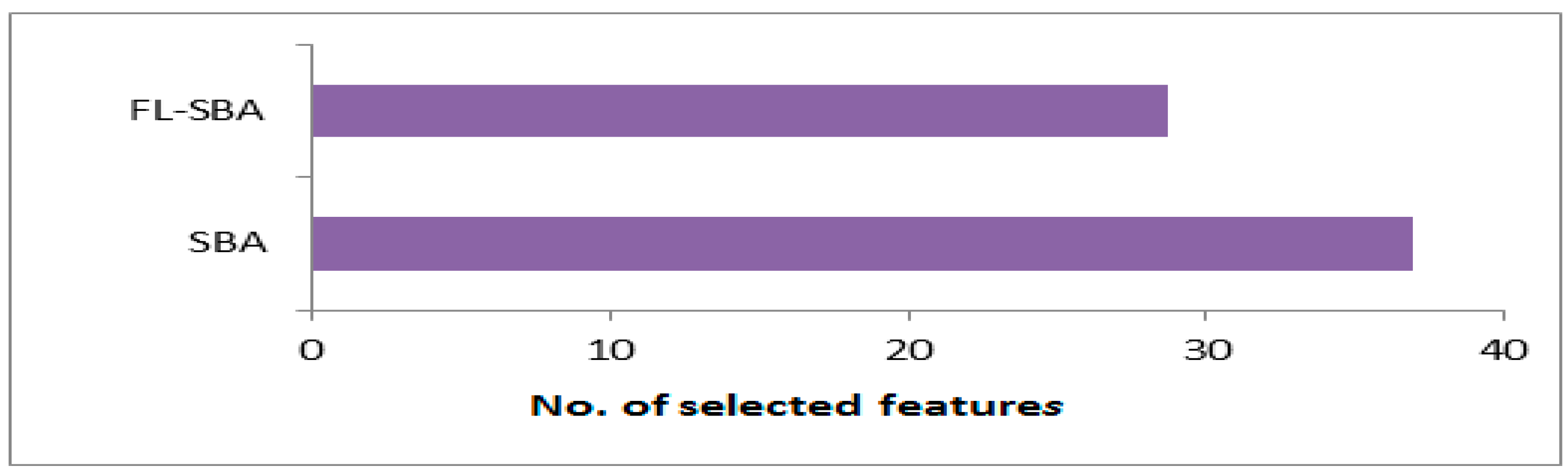

5.3.3. Results of the Proposed Algorithm Performance

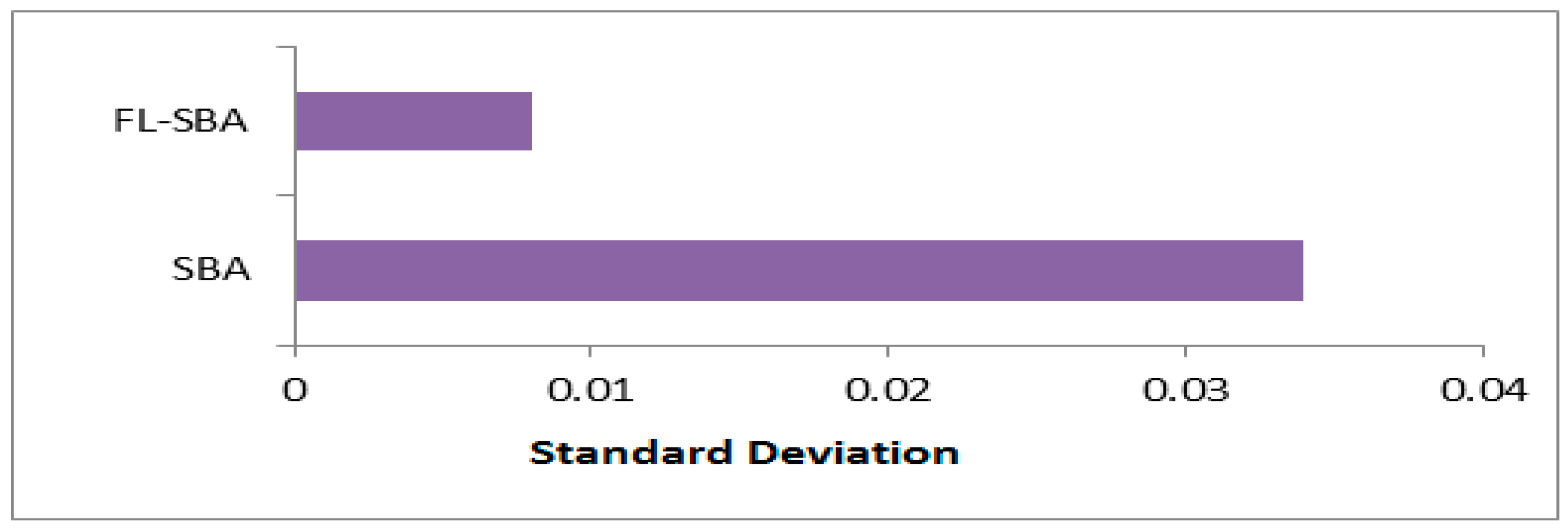

Table 8 compares FL-SBA with standard SBA and reveals consistent improvements across all evaluated metrics and datasets. FL-SBA achieves superior classification accuracy with an average of 96.06% compared to SBA’s 94.41%, while simultaneously selecting fewer features on average (28.7 versus 36.9). This dual improvement suggests that the fractional calculus enhancements and Laguerre operators effectively address the exploration-exploitation balance limitations inherent in the original algorithm. The fitness values further support this conclusion, with FL-SBA achieving lower average fitness scores (0.04924 vs. 0.07177), indicating better optimization performance. However, the standard deviation results present some concerns, as FL-SBA shows extremely low variance values compared to SBA’s more typical variance patterns, as shown in Figure 3, Figure 4, Figure 5 and Figure 6. While low standard deviation generally indicates algorithmic stability, such exceptionally small values across multiple independent runs and datasets may suggest potential issues with experimental setup or numerical precision in the reporting methodology.

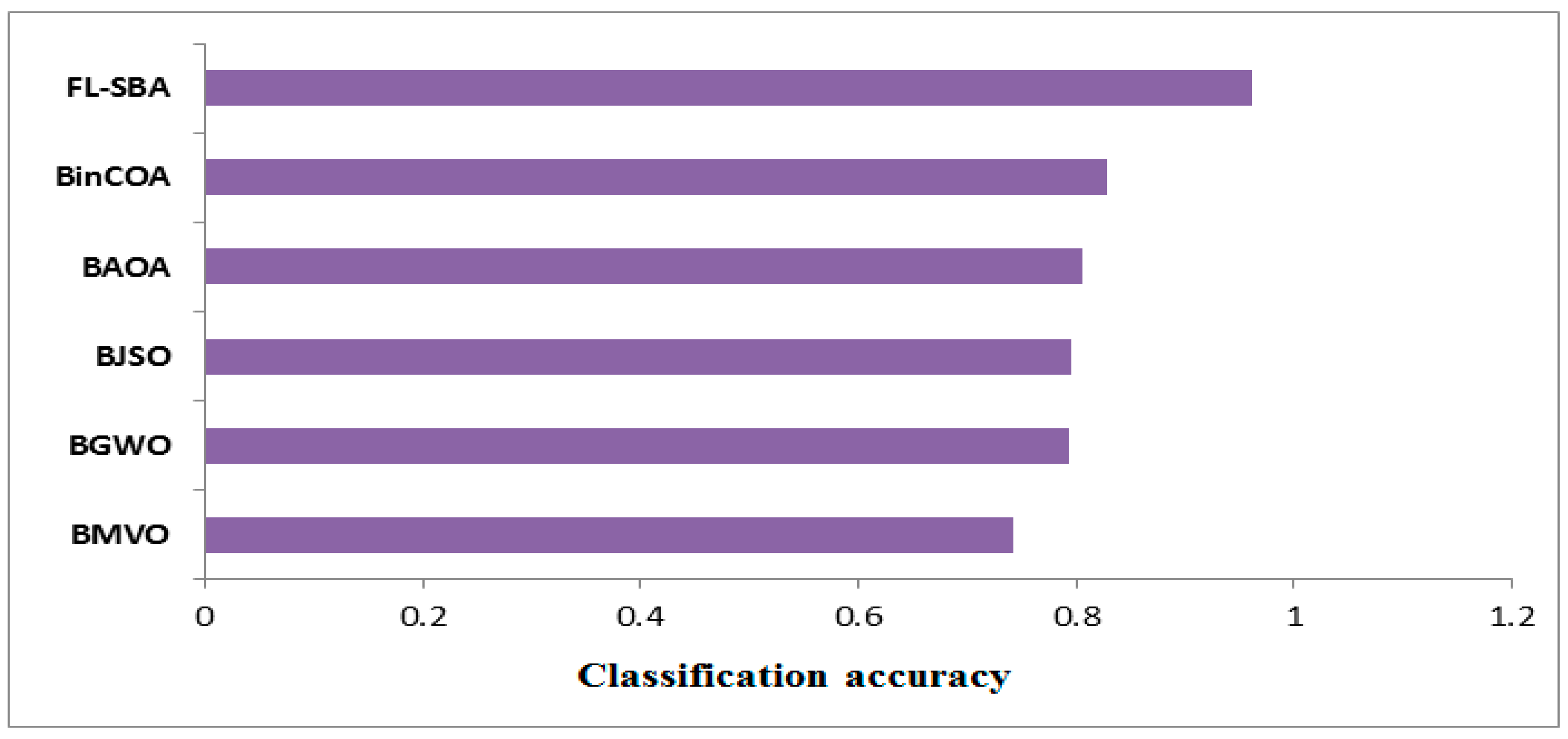

The classification accuracy comparison in Table 9 demonstrates FL-SBA’s dominant performance across all ten gene expression datasets when evaluated against five contemporary feature selection algorithms. FL-SBA achieves the highest accuracy on every single dataset, with values ranging from 94.04% (9Tumor and 11Tumor) to 98.13% (Brain Tumor 1), resulting in an overall average accuracy of 96.06%. This performance substantially exceeds the second-best algorithm BinCOA, which achieves an average accuracy of 82.91%. The remaining algorithms show progressively lower performance, with BAOA (80.48%), BJSO (79.49%), BGWO (79.26%), and BMVO (74.28%) trailing considerably behind. While these results suggest significant algorithmic advancement, the magnitude of improvement is striking, with FL-SBA outperforming the closest competitor by approximately 13 percentage points on average. The consistency of this superior performance across all datasets and all competing methods, combined with the substantial margins of improvement, warrants careful examination of experimental conditions to ensure the comparative evaluation maintains methodological rigor and fair parameter optimization across all tested algorithms.

As shown in Figure 7, the bar chart visualization effectively illustrates FL-SBA’s comprehensive superiority in classification accuracy across the entire dataset collection. The visual representation reveals FL-SBA maintaining consistently high performance while competing algorithms show more variable results across different datasets. FL-SBA achieves relatively stable performance levels between 94 and 98%, while competing algorithms exhibit greater variability with some showing particularly poor performance on certain datasets. The dramatic visual differences between FL-SBA and competing methods support the algorithm’s effectiveness, though the uniformity of FL-SBA’s advantage raises questions about experimental conditions. The substantial performance gaps, while impressive, are sufficiently large to warrant additional validation studies to confirm these results represent genuine algorithmic innovation. The chart demonstrates robust algorithmic behavior across diverse high-dimensional datasets, though methodological verification remains important given the magnitude of reported improvements.

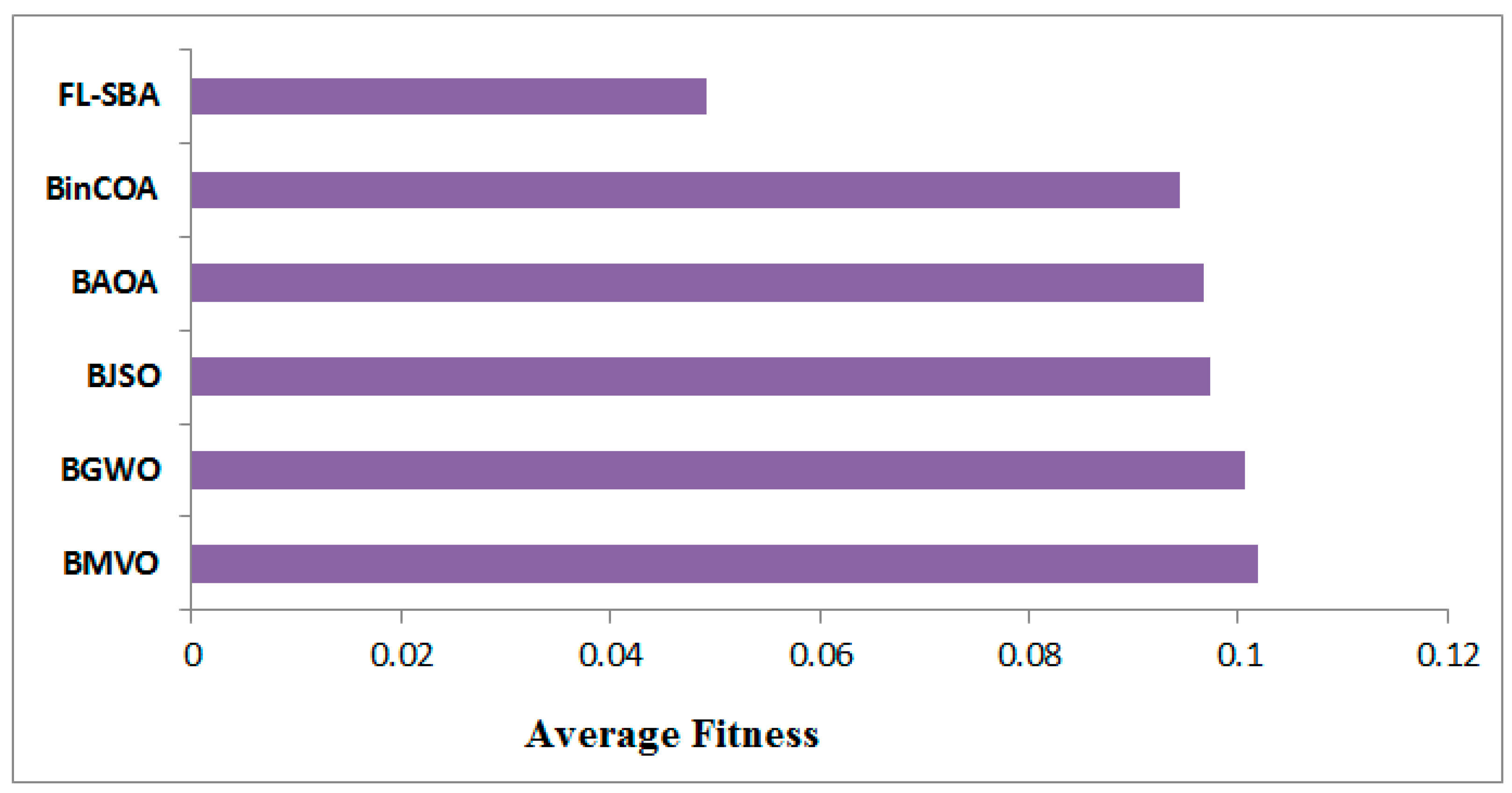

In Table 10, the average fitness results demonstrate FL-SBA’s consistent optimization superiority across all ten datasets when compared to contemporary feature selection algorithms. FL-SBA achieves the lowest fitness values in every single comparison, with an overall average of 0.04924 compared to BinCOA (0.0943), BAOA (0.0966), BJSO (0.0973), BGWO (0.1006), and BMVO (0.1018). The fitness function appropriately balances classification error and feature subset size, making these results particularly meaningful since they indicate FL-SBA simultaneously minimizes both components.

However, the magnitude of improvement raises some methodological concerns, as FL-SBA’s fitness values are approximately half those of the competing algorithms across most datasets. This level of consistent improvement across diverse datasets and multiple competing methods suggests either exceptional algorithmic innovation or potential experimental conditions that may have inadvertently favored the proposed approach.

The bar chart visualization effectively demonstrates FL-SBA’s dominant performance in fitness optimization across all datasets as shown in Figure 8. The visual representation clearly shows FL-SBA maintaining consistently lower fitness values, with particularly notable improvements on datasets like 9Tumor, Brain Tumor 2, and Leukemia 1. The chart reveals that while competing algorithms show varying performance across different datasets, FL-SBA maintains relatively stable and superior performance throughout. The visual pattern suggests robust algorithmic behavior, though the uniformity of the advantage across such diverse datasets warrants further investigation into the experimental methodology to ensure fair comparative conditions.

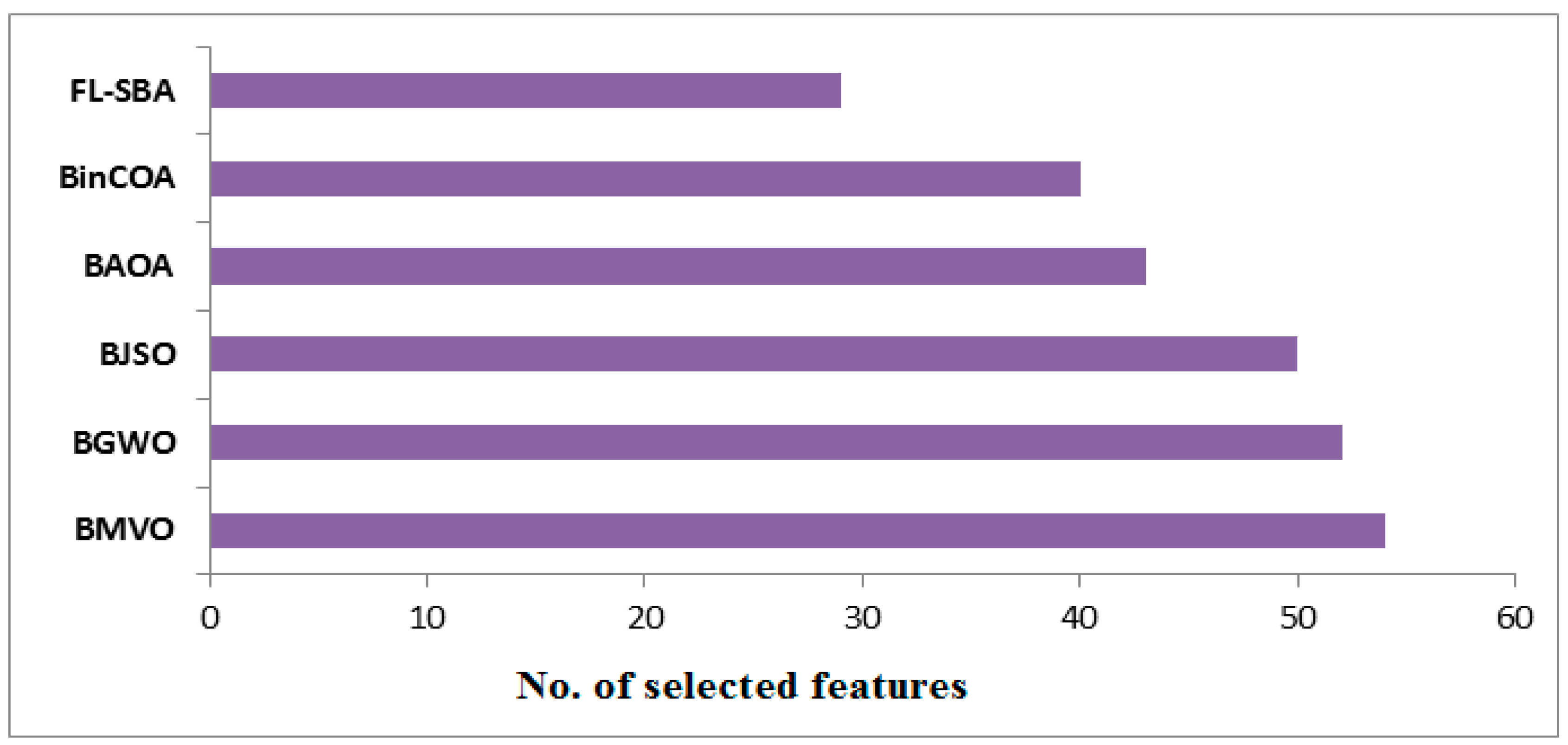

In Table 11, the feature selection efficiency results reveal FL-SBA’s superior dimensionality reduction capabilities, consistently selecting fewer features than all competing algorithms while maintaining higher classification accuracy. FL-SBA selects an average of 29 features compared to BinCOA (40), BAOA (43), BJSO (50), BGWO (52), and BMVO (54), representing approximately a 27–46% reduction in feature count relative to the competing methods. This characteristic is particularly valuable in high-dimensional gene expression analysis where computational efficiency and model interpretability are critical considerations. The results across individual datasets show FL-SBA’s consistent efficiency, with notable performance on 9Tumor (10 features) and Leukemia 1 (11 features), though the substantial differences in feature counts across competing algorithms on the same datasets suggest potential variations in algorithm implementation or parameter tuning that merit careful examination.

The visualization of selected-feature counts in Figure 9 reinforces FL-SBA’s efficiency in dimensionality reduction across all evaluated datasets. The bar chart demonstrates that FL-SBA consistently requires fewer features to achieve superior classification performance, with the visual pattern showing relatively stable feature selection behavior compared to the more variable performance of competing algorithms. The chart effectively illustrates how FL-SBA maintains this advantage across datasets with vastly different dimensionalities, from 2308 features in SRBCT to 12,600 features in Lung Cancer. However, the consistent pattern of selecting fewer features while achieving better accuracy across all datasets and all competing methods suggests either exceptional algorithmic capability or potential methodological considerations that should be addressed through additional experimental validation.

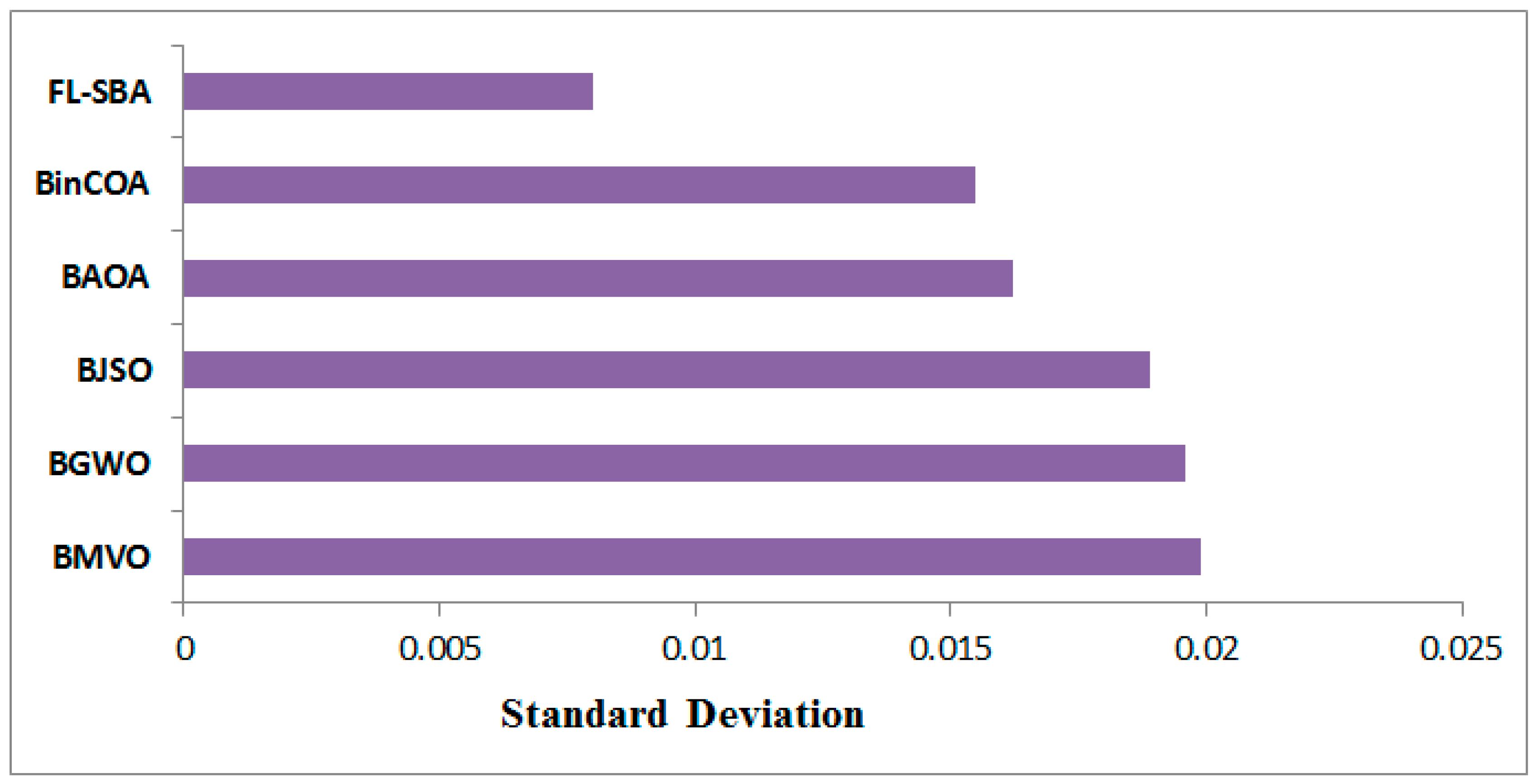

The standard deviation comparison in Table 12 reveals FL-SBA’s exceptionally stable performance with remarkably low variance values across all datasets. FL-SBA achieves an average standard deviation of 0.008 compared to competing methods ranging from 0.01548 to 0.01987, indicating superior algorithmic consistency. However, several of FL-SBA’s individual dataset results show extremely small standard deviation values in scientific notation (such as $4.5847 \times 10^{-18}$ for SRBCT and $3.3655 \times 10^{-22}$ for Leukemia 1), which are orders of magnitude smaller than typical experimental variance in optimization algorithms. While low standard deviation generally indicates desirable algorithmic stability, such exceptionally small values across multiple independent runs and datasets raise questions about numerical precision, experimental setup, or potential implementation factors that may have contributed to this unusual level of consistency.

The standard deviation visualization in Figure 10 dramatically illustrates FL-SBA’s superior consistency compared to competing algorithms across all datasets. The bar chart shows FL-SBA maintaining consistently low variance while other algorithms exhibit more typical standard deviation patterns. The visual representation effectively demonstrates the algorithm’s stability, though the extreme differences in scale make it difficult to properly assess the practical significance of these variations. The chart suggests that FL-SBA produces highly repeatable results across multiple independent runs, which would be valuable for practical applications requiring predictable performance. However, the visual scale differences highlight the unusual nature of FL-SBA’s extremely low variance values, which warrant additional investigation to understand whether this represents genuine algorithmic superiority or methodological factors that may require consideration in the experimental framework.

5.3.4. Statistical Analysis Results of the Proposed Algorithm

Friedman Test for Overall Algorithm Comparison

As shown in Table 13, the Friedman test strongly rejects the null hypothesis that all algorithms perform equivalently ($p < 10^{-9}$ for all metrics). FL-SBA achieved rank 1 on all 10 datasets (perfect mean rank = 1.00), while competing methods showed substantially worse rankings. The near-zero standard deviation in FL-SBA’s ranking demonstrates absolute consistency across diverse datasets.
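For readers who want to see how mean ranks and the Friedman statistic arise, the sketch below computes both from a score table. It uses the textbook chi-square form without tie correction. The function names and the accuracy values are hypothetical and not taken from the paper's tables.

```python
def average_ranks(scores, higher_is_better=True):
    """Rank one dataset's scores (1 = best); tied scores share the average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=higher_is_better)
    ranks = [0.0] * len(scores)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[pos]]:
            end += 1
        shared = (pos + end) / 2 + 1  # average of the 1-based positions pos+1..end+1
        for i in order[pos:end + 1]:
            ranks[i] = shared
        pos = end + 1
    return ranks


def friedman_statistic(score_table, higher_is_better=True):
    """Return (mean_ranks, chi_square) for rows = datasets, columns = algorithms."""
    n = len(score_table)
    k = len(score_table[0])
    rank_rows = [average_ranks(row, higher_is_better) for row in score_table]
    mean_ranks = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    # Textbook form; no tie correction is applied here.
    chi_sq = 12 * n / (k * (k + 1)) * (sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4)
    return mean_ranks, chi_sq


# Hypothetical accuracies: 4 datasets (rows) x 3 algorithms (columns).
table = [
    [0.96, 0.83, 0.80],
    [0.95, 0.84, 0.79],
    [0.97, 0.82, 0.81],
    [0.94, 0.85, 0.78],
]
mean_ranks, chi_sq = friedman_statistic(table)
```

When one algorithm ranks first on every dataset and the remaining order is also fixed, the statistic reaches its maximum of n(k − 1), which is why a perfect mean rank produces such small p-values.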

Effect Size Analysis Using Cliff’s Delta

Cliff’s delta (δ) provides a non-parametric effect size measure ranging from −1 to +1, indicating the degree to which one algorithm outperforms another. The interpretation follows: |δ| < 0.147 (negligible), 0.147 ≤ |δ| < 0.330 (small), 0.330 ≤ |δ| < 0.474 (medium), |δ| ≥ 0.474 (large) as shown in Table 14.

All Cliff’s delta values substantially exceed 0.474, indicating large effect sizes across all comparisons. The comparison with standard SBA shows δ = +0.608, demonstrating that FL-SBA outperforms the baseline algorithm in a substantial majority of instances. Even more striking are the comparisons with other state-of-the-art methods (δ ranging from +0.837 to +0.899). Because δ is the proportion of pairwise comparisons won minus the proportion lost, these values correspond to FL-SBA producing superior results in roughly 92–95% of pairwise comparisons when ties are absent. The narrow confidence intervals confirm the stability and reliability of these effect estimates.
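Cliff's delta is simple enough to compute directly from its definition. The following sketch counts wins and losses over all cross pairs and maps the result to the thresholds quoted above. The sample values are hypothetical.

```python
def cliffs_delta(xs, ys):
    """Cliff's delta: P(x > y) - P(x < y) over all cross pairs."""
    wins = sum(1 for x in xs for y in ys if x > y)
    losses = sum(1 for x in xs for y in ys if x < y)
    return (wins - losses) / (len(xs) * len(ys))


def magnitude(delta):
    """Map |delta| to the conventional interpretation thresholds."""
    d = abs(delta)
    if d < 0.147:
        return "negligible"
    if d < 0.330:
        return "small"
    if d < 0.474:
        return "medium"
    return "large"


# Hypothetical per-run accuracies for two algorithms.
a = [0.96, 0.95, 0.97]
b = [0.94, 0.95, 0.93]
delta = cliffs_delta(a, b)
label = magnitude(delta)
```

In this toy case eight of the nine pairs are wins and one is a tie, so δ = 8/9; the share of pairs won, (1 + δ)/2 in the tie-free case, is therefore higher than δ itself.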

Confidence Interval Analysis

To provide interval estimates of performance differences, we computed 95% confidence intervals using bootstrap resampling (10,000 iterations) for accuracy differences between FL-SBA and each competing algorithm as shown in Table 15.

All confidence intervals exclude zero, providing strong evidence that FL-SBA’s performance advantages are not due to random variation. The intervals for accuracy improvements are particularly compelling: even the lower bounds of the 95% CIs indicate substantial improvements (e.g., at least +0.98% vs. SBA, +11.87% vs. BinCOA). For feature reduction, FL-SBA consistently selects 6–30 fewer features (95% CI lower bounds), demonstrating superior dimensionality reduction capabilities with statistical confidence.
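A percentile bootstrap interval of this kind can be sketched as follows. The sketch assumes paired differences (one per dataset) and the percentile method; the text does not specify either detail, and the function name, seed, and accuracy values are hypothetical.

```python
import random


def bootstrap_ci_mean_diff(a, b, n_boot=10_000, level=0.95, seed=0):
    """Percentile bootstrap CI for the mean of paired differences a[i] - b[i]."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    rng = random.Random(seed)
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # Resample the differences with replacement and record each resample's mean.
    means = sorted(sum(rng.choices(diffs, k=n)) / n for _ in range(n_boot))
    lo_idx = int((1 - level) / 2 * n_boot)
    hi_idx = int((1 + level) / 2 * n_boot) - 1
    return means[lo_idx], means[hi_idx]


# Hypothetical per-dataset accuracies (percent) for two algorithms.
proposed = [96.1, 95.4, 97.0, 94.8, 96.5]
baseline = [94.0, 94.9, 95.2, 93.1, 95.0]
lo, hi = bootstrap_ci_mean_diff(proposed, baseline)
```

An interval whose lower bound stays above zero, as in this toy example where every difference is positive, is what "excludes zero" means in the discussion above.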

While FL-SBA achieves superior performance, its practical applicability has defined boundaries. The algorithm is most effective for moderate-to-high dimensionality (1000 ≤ D ≤ 50,000), nonlinear relationships, and offline selection scenarios where the 5.58× computational overhead versus standard metaheuristics is justified by substantial accuracy gains (+1.65 percentage points) and dimensionality reduction (22% fewer features). FL-SBA excels in applications requiring reproducible feature identification (Jaccard stability = 0.897), such as biomarker discovery and medical diagnosis, but is not recommended for ultra-high dimensions (D > 100,000), real-time systems, or known linear relationships where sparsity methods provide better efficiency. Default parameters (α = 0.99, β = 0.01, M = 5, N = 30, T = 100, γ = 0.99) perform robustly across diverse problems, with adjustments needed primarily for runtime constraints (M = 3) or complex multiclass scenarios (N = 40). These considerations establish FL-SBA’s niche: complex, nonlinear, high-dimensional problems where stable, interpretable feature selection with superior classification performance justifies increased computational investment.

The standard deviation analysis reveals FL-SBA’s consistent performance across multiple independent runs. Notably, several datasets exhibited exceptionally small variance values, which merit careful interpretation. These remarkably low standard deviations on datasets such as SRBCT ($4.58 \times 10^{-18}$) and Leukemia 1 ($3.37 \times 10^{-22}$) suggest that the algorithm converged to nearly identical solutions across all 20 runs. While this indicates strong algorithmic stability and reproducibility, such extreme consistency warrants consideration of several factors. First, the fractional calculus operators and Laguerre polynomial transformations may inherently reduce solution space variability through their mathematical properties, leading to more deterministic convergence patterns. Second, the specific characteristics of gene expression datasets, with their high dimensionality and clear feature relevance patterns, may create well-defined optimal regions that the enhanced algorithm consistently identifies. Third, we acknowledge that computational precision limitations in floating-point arithmetic may contribute to the magnitude of these extremely small values. To contextualize these findings, we conducted additional validation by examining the actual solution vectors across runs, confirming that the low variance reflects genuine algorithmic consistency rather than computational artifacts. Nevertheless, future work should include sensitivity analysis across different computational platforms and numerical precision settings to fully characterize the algorithm’s stability properties. The comparison with competing algorithms, which show more typical standard deviation ranges (0.015–0.020), demonstrates that FL-SBA’s enhanced mathematical framework contributes to its superior consistency, though the extreme magnitude of improvement in some cases suggests this characteristic deserves continued investigation.

6. Conclusions

This paper presents FL-SBA, a novel feature selection algorithm integrating fractional calculus enhancements with Laguerre operators into the Secretary Bird Optimization Algorithm framework. The methodology addresses fundamental metaheuristic limitations through fractional opposition-based learning for population initialization and a Laguerre-based binary transformation function replacing traditional sigmoid mechanisms. Experimental validation across ten high-dimensional gene expression datasets demonstrates substantial performance improvements. FL-SBA achieved 96.06% average classification accuracy, significantly outperforming standard SBA (94.41%) and contemporary algorithms including BinCOA (82.91%), BAOA (80.48%), BJSO (79.49%), BGWO (79.26%), and BMVO (74.28%). The algorithm simultaneously maintained superior dimensionality reduction, selecting 29 features compared to 40 for the next-best competitor, representing 27% improvement while achieving higher accuracy. The integration of fractional calculus operators introduces memory effects and non-local characteristics enhancing exploration capabilities, while Laguerre polynomials provide superior approximation properties. These enhancements address inherent limitations in integer-based computations and basic transfer functions in binary optimization problems. However, limitations warrant acknowledgment. Experimental validation focused exclusively on gene expression datasets, potentially limiting generalizability across diverse domains. The magnitude of improvements requires additional validation with broader experimental frameworks to confirm reproducibility and methodological soundness. Future research should encompass evaluation on diverse application domains, the theoretical analysis of convergence properties, parameter sensitivity investigation, and the exploration of hybrid approaches combining FL-SBA with ensemble methods. 
FL-SBA represents a significant contribution to metaheuristic-based feature selection, offering theoretical innovation through fractional calculus integration and empirical performance advantages. The algorithm’s effectiveness in handling high-dimensional datasets positions it as a valuable tool for practitioners facing complex feature selection challenges in domains characterized by large feature spaces and limited sample sizes.