Improved Federated Learning Incentive Mechanism Algorithm Based on Explainable DAG Similarity Evaluation

Abstract

1. Introduction

- An innovative similarity evaluation mechanism based on the edge weight matrix is proposed: Each participating vehicle trains a purpose-designed GNN model on its local data to generate an edge weight matrix that reflects the feature distribution and internal relational structure of that data. Efficient matrix similarity measures are developed, including spectral-analysis-based similarity, information-theoretic distances, and machine-learning-based pattern recognition, to quantify each participant's data quality, learning ability, and behavior patterns.

- A decentralized intelligent mutual evaluation and scoring system is designed: A mutual evaluation and dynamic feedback mechanism is established among participating vehicles, and each vehicle receives a comprehensive score based on edge weight matrix similarity, historical behavior records, and real-time performance. The system uses multi-dimensional evaluation indicators and an adaptive weight adjustment strategy to identify and isolate malicious participants while rewarding active contributions from honest participants.

- An optimized hybrid blockchain–DAG architecture is developed: Building on the existing PermiDAG framework, the architecture is optimized and extended specifically for the storage, transmission, verification, and synchronization requirements of GNN edge weight matrices. The local DAG network handles high-frequency similarity calculations, score updates, and anomaly detection, while the main blockchain ensures the security, consistency, and immutability of key decision-making information.

- An adaptive intelligent aggregation optimization algorithm is implemented: Based on trust scores, similarity analysis, and quality assessment results, a multi-level adaptive model aggregation strategy is designed. The algorithm dynamically adjusts the aggregation weights according to each participant's credibility, contribution quality, and data representativeness, and gives priority to high-quality model updates in global aggregation, thereby improving the convergence speed, accuracy, and robustness of federated learning.

- A comprehensive multi-level security protection system is constructed: Differential privacy, a secure multi-party computation protocol, homomorphic encryption, and a blockchain consensus mechanism are integrated into a protection system that covers the entire data life cycle, from collection and transmission through processing, storage, and use. While preserving participants' data privacy and commercial confidentiality, it resists active attacks, passive attacks, and inference attacks.
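The first contribution names spectral and information-theoretic similarity measures for edge weight matrices without fixing a formula. A minimal sketch, assuming both vehicles' GNNs produce square edge weight matrices of the same shape over a shared node set. The eigenvalue-spectrum comparison, the Jensen-Shannon divergence, and the equal blending weight are illustrative choices, and `structural_similarity` is a hypothetical name, not the authors' definition.

```python
import numpy as np


def spectral_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Similarity in [0, 1] between the eigenvalue spectra of two edge weight matrices.

    Each matrix is symmetrized so its eigenvalues are real. By the triangle
    inequality the normalized spectral distance lies in [0, 1], so 1 minus it does too.
    """
    sa = np.linalg.eigvalsh((a + a.T) / 2.0)  # eigvalsh returns eigenvalues in ascending order
    sb = np.linalg.eigvalsh((b + b.T) / 2.0)
    scale = np.linalg.norm(sa) + np.linalg.norm(sb) + 1e-12
    return float(1.0 - np.linalg.norm(sa - sb) / scale)


def js_divergence(a: np.ndarray, b: np.ndarray, eps: float = 1e-12) -> float:
    """Jensen-Shannon divergence (natural log, bounded by ln 2) between the
    normalized edge weight distributions of two matrices of the same shape."""
    p = np.abs(a).ravel() + eps
    q = np.abs(b).ravel() + eps
    p, q = p / p.sum(), q / q.sum()
    m = 0.5 * (p + q)
    return float(0.5 * np.sum(p * np.log(p / m)) + 0.5 * np.sum(q * np.log(q / m)))


def structural_similarity(a: np.ndarray, b: np.ndarray, w: float = 0.5) -> float:
    """Hypothetical blended score: w * spectral similarity
    + (1 - w) * (1 - JS divergence normalized by ln 2)."""
    info_sim = 1.0 - js_divergence(a, b) / np.log(2.0)
    return w * spectral_similarity(a, b) + (1.0 - w) * info_sim


# Usage: a vehicle with a slightly perturbed structure vs. an unrelated one.
rng = np.random.default_rng(0)
A = rng.random((6, 6))
B = A + 0.05 * rng.random((6, 6))
C = rng.random((6, 6))
print(structural_similarity(A, B), structural_similarity(A, C))
```

The spectral term is invariant to relabeling the graph's nodes, while the divergence term compares the raw weight distributions; blending them is one simple way to combine the two views.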

2. Related Work

2.1. Internet of Vehicles and Edge Computing

2.2. Directed Acyclic Graph Technology in Distributed Systems

2.3. Federated Learning and Privacy Protection

2.4. Graph Neural Networks in Internet of Vehicle Systems

2.5. Trust Mechanism and Quality Assessment

3. Proposed Method

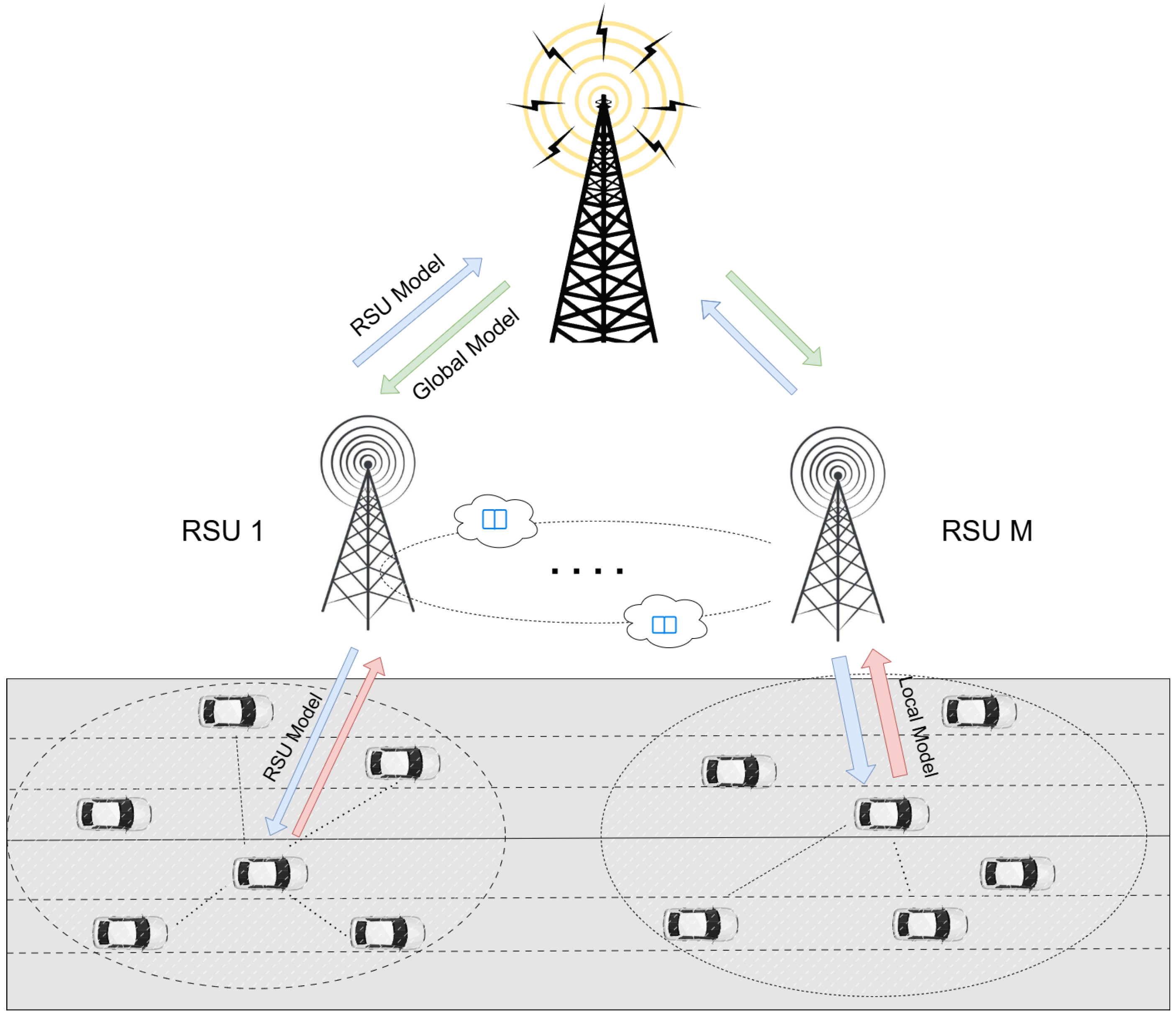

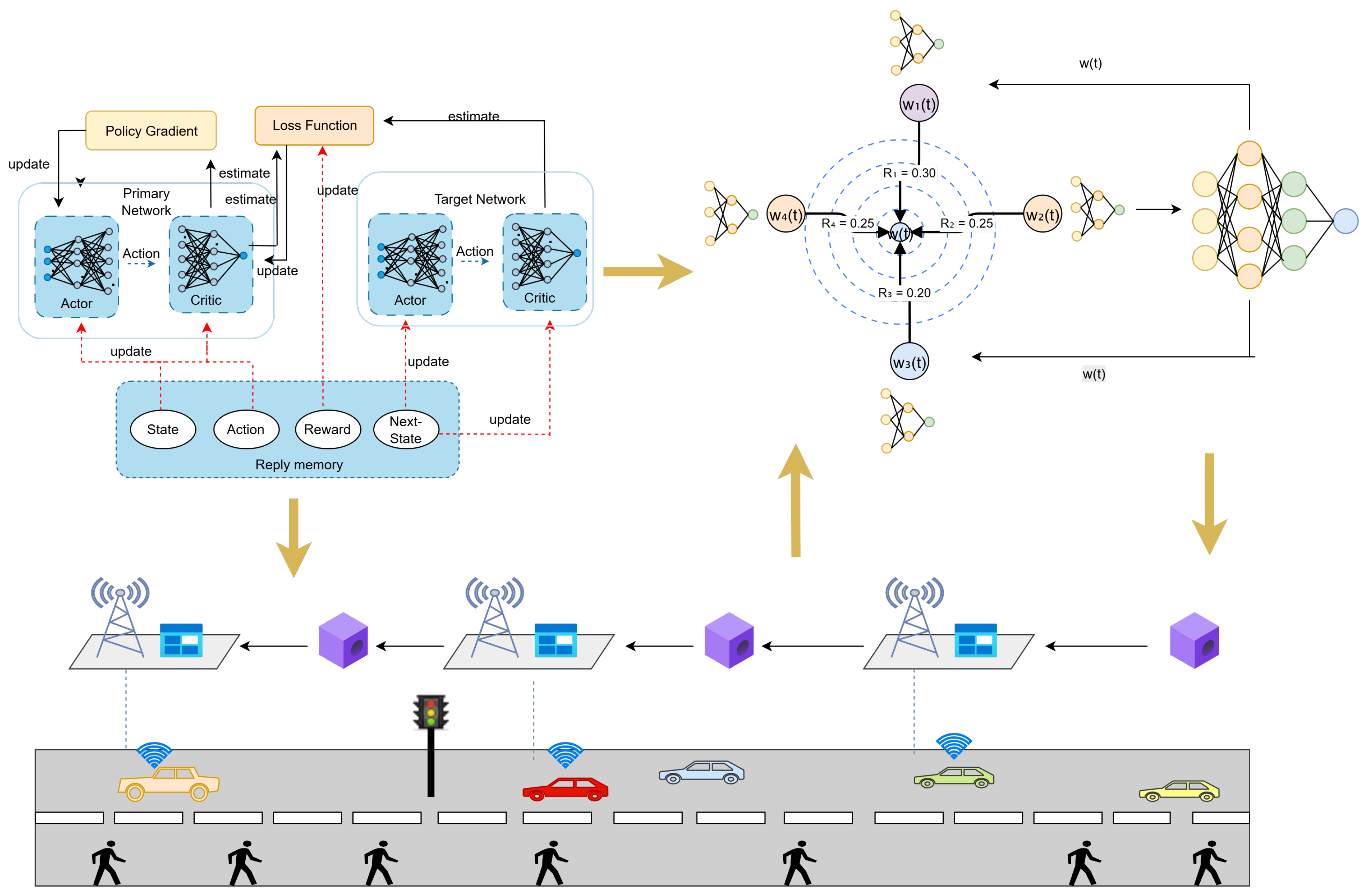

3.1. System Model

3.2. Interpretable GNN-Based Federated Learning Framework

3.3. Federated Learning and Structural Reputation-Driven Asynchronous Sharing Process Design

Algorithm 1. Structural Reputation-Driven Asynchronous Federated Learning

Algorithm 2. Asynchronous Verification and Structure-Aware Aggregation

Algorithm 3. DDPG Node Selection with Structural Reputation

3.4. Similarity Verification Mechanism of Structural Reputation

- Participant selection: To improve operating efficiency and training accuracy, this stage selects nodes with more available resources within a given communication time to participate in federated learning; the selected nodes also serve as validators. At the start, the server initializes the federated learning process by selecting a global machine learning model and initializing its parameters $w^0$. The server then uses a deep reinforcement learning (DRL)-based algorithm to select the nodes with the best computing and communication capabilities and distributes the parameter vector $w^0$ to each selected node $v_i$.

- GNN local training: Local training uses a distributed gradient descent algorithm based on graph neural networks. In iteration $t$, each participating vehicle $v_i$ trains a local GNN model on its local dataset $D_i$ and updates the model by local gradient descent, $w_i^{t+1} = w_i^{t} - \eta \nabla F_i(w_i^{t})$, where $F_i$ is the local loss on $D_i$ and $\eta$ is the learning rate of distributed gradient descent. Vehicle $v_i$ then sends its trained local parameters $w_i^{t+1}$ to nearby roadside units (RSUs), which upload them for further verification and aggregation.

- Edge weight similarity evaluation: This is the core innovation of our method. Each participating vehicle trains the GNN model on its local data and extracts the edge weight matrix $A_i$. The similarity between the edge weight matrices of different vehicles is then used to evaluate each participant's contribution quality and to compute mutual trust scores.

- Aggregation: The aggregator retrieves the updated local parameters from the permissioned blockchain and aggregates the local models $w_i^{t+1}$ of the participating nodes into a weighted global model, $w^{t+1} = \sum_{i=1}^{N} \alpha_i^{t} w_i^{t+1}$, where $N$ is the number of participating nodes and $\alpha_i^{t}$ is the contribution weight of node $i$ in iteration $t$, derived from the edge weight similarity evaluation.
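The four steps above describe the contribution weight only as "based on edge weight similarity". A minimal sketch of one round, assuming plain gradient descent for the local step, a weighted sum of local models for aggregation, and a hypothetical weight rule: each vehicle's mean cosine similarity to the other vehicles' flattened edge weight matrices, clipped at zero and normalized to sum to one. The contributions also mention trust scores and quality assessment, which this sketch leaves out; all function names are illustrative.

```python
import numpy as np


def local_gd_step(w: np.ndarray, grad: np.ndarray, lr: float) -> np.ndarray:
    """One local gradient descent step: w <- w - lr * grad."""
    return w - lr * grad


def contribution_weights(edge_mats: list) -> np.ndarray:
    """Hypothetical contribution weights from pairwise cosine similarity
    of flattened edge weight matrices, normalized to sum to one."""
    flat = np.stack([m.ravel() for m in edge_mats])
    unit = flat / (np.linalg.norm(flat, axis=1, keepdims=True) + 1e-12)
    sim = unit @ unit.T                                          # pairwise cosine similarities
    n = len(edge_mats)
    scores = (sim.sum(axis=1) - np.diag(sim)) / max(n - 1, 1)    # exclude self-similarity
    scores = np.clip(scores, 0.0, None)
    if scores.sum() == 0.0:
        return np.full(n, 1.0 / n)                               # fall back to plain averaging
    return scores / scores.sum()


def aggregate(local_models: list, alphas: np.ndarray) -> np.ndarray:
    """Weighted global model: sum_i alphas[i] * local_models[i]."""
    return np.tensordot(alphas, np.stack(local_models), axes=1)


# Usage: four vehicles with quadratic local losses F_i(w) = 0.5 * ||w - c_i||^2,
# so grad F_i(w) = w - c_i. Vehicle 3 has a structurally atypical edge weight matrix.
rng = np.random.default_rng(1)
w_global = np.zeros(3)
targets = [np.array([1.0, 1.0, 1.0])] * 3 + [np.array([-5.0, 5.0, -5.0])]
base = rng.random((4, 4))
edge_mats = [base + 0.01 * rng.random((4, 4)) for _ in range(3)] + [np.eye(4)]

local_models = [local_gd_step(w_global, w_global - c, lr=0.5) for c in targets]
alphas = contribution_weights(edge_mats)
w_next = aggregate(local_models, alphas)
print(alphas, w_next)
```

The atypical vehicle receives the smallest weight, so its divergent update pulls the global model less than it would under plain averaging.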

3.5. Materiality Assessment Based on MMD

3.6. Explainable Reputation Mechanism Based on DAG Verification

3.7. Local DAG Framework Design Driven by Structural Reputation

- Model update parameters

- Structural reputation value

- Local training time

- Referenced parent transaction hash list

- GNN edge weight matrix summary
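The five fields listed above can be held in a hashed record so that a new transaction references its parents by hash. A sketch under stated assumptions: SHA-256 over a canonical JSON encoding, and, as a hypothetical stand-in for the "edge weight matrix summary", the largest eigenvalues of the symmetrized matrix. Neither choice comes from the source, and `DagTransaction` and `summarize_edge_weights` are illustrative names.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

import numpy as np


def summarize_edge_weights(mat: np.ndarray, k: int = 3) -> List[float]:
    """Hypothetical compact summary: the k largest eigenvalues of the symmetrized matrix."""
    eig = np.linalg.eigvalsh((mat + mat.T) / 2.0)
    return [round(float(x), 6) for x in eig[::-1][:k]]


@dataclass
class DagTransaction:
    model_update: List[float]         # model update parameters
    structural_reputation: float      # structural reputation value of the issuer
    local_training_time: float        # local training time (unit assumed: seconds)
    parent_hashes: List[str]          # referenced parent transaction hashes
    edge_weight_summary: List[float]  # GNN edge weight matrix summary
    tx_hash: str = field(init=False)

    def __post_init__(self) -> None:
        # Hash a canonical JSON encoding of all fields so any change alters the hash.
        payload = json.dumps(
            {
                "model_update": self.model_update,
                "structural_reputation": self.structural_reputation,
                "local_training_time": self.local_training_time,
                "parent_hashes": self.parent_hashes,
                "edge_weight_summary": self.edge_weight_summary,
            },
            sort_keys=True,
        ).encode("utf-8")
        self.tx_hash = hashlib.sha256(payload).hexdigest()


# Usage: two earlier transactions referenced by a new one.
rng = np.random.default_rng(2)
summary = summarize_edge_weights(rng.random((5, 5)))
t1 = DagTransaction([0.1, -0.2], 0.90, 1.5, [], summary)
t2 = DagTransaction([0.0, 0.3], 0.85, 2.1, [], summary)
t3 = DagTransaction([0.05, 0.1], 0.88, 1.8, [t1.tx_hash, t2.tx_hash], summary)
print(t3.tx_hash[:16], t3.parent_hashes == [t1.tx_hash, t2.tx_hash])
```

Sending a short summary rather than the full matrix keeps transactions small, at the cost of verifiers seeing only part of the structure.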

- Structural perception ability: Even if a node's model performs well in terms of accuracy, its structural reputation is automatically reduced when its data structure deviates seriously from that of the majority of vehicles, which keeps it from misleading model aggregation;

- Improved attack resistance: By comparing structural distributions across the whole network, forged or atypical data inputs can be identified, enhancing the security and robustness of the system.

3.8. Cumulative Weight and Transaction Verification Mechanism Under Structural Reputation

- Enhanced stability: If a transaction has a high structural reputation and is repeatedly verified by multiple high-reputation nodes, its weight increases significantly over time;

- Suppression of structural deviation: If a transaction's structural reputation differs greatly from the values calculated by other nodes, its structural consensus term is negative or close to zero, which indicates a lack of structural consensus, and its final weight decreases;

- Improved anomaly detection: Malicious nodes may upload model updates with forged structures, but because these updates cannot pass multi-source verification, their cumulative reputation decreases quickly and they are excluded from the main aggregation path.
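The three effects above follow from a Tangle-style cumulative weight in which each transaction's own weight depends on its issuer's reputation and on structural agreement. A sketch under assumptions: the own-weight formula (reputation times a linear agreement term, clipped at zero, whereas the text allows the term to go negative) and the tolerance are hypothetical. Only the cumulative-weight rule, own weight plus the own weights of all direct and indirect approvers with each counted once, follows the IOTA Tangle definition.

```python
from typing import Dict, List


def own_weight(reputation: float, attached: float, recomputed: float, tol: float = 0.1) -> float:
    """Hypothetical own weight: issuer reputation scaled by structural agreement.
    Agreement falls linearly from 1 (identical values) to 0 at a gap of `tol`
    and is clipped at zero, so transactions lacking structural consensus add nothing."""
    agreement = 1.0 - abs(attached - recomputed) / tol
    return reputation * max(agreement, 0.0)


def cumulative_weights(parents: Dict[str, List[str]], weight: Dict[str, float]) -> Dict[str, float]:
    """Cumulative weight: own weight plus the own weights of every transaction
    that approves it directly or indirectly (each counted once)."""
    approvers: Dict[str, List[str]] = {tx: [] for tx in parents}
    for tx, ps in parents.items():
        for p in ps:
            approvers[p].append(tx)
    result = {}
    for tx in parents:
        seen, stack = set(), [tx]
        while stack:                      # depth-first traversal of the approver set
            cur = stack.pop()
            if cur not in seen:
                seen.add(cur)
                stack.extend(approvers[cur])
        result[tx] = sum(weight[t] for t in seen)
    return result


# Usage: G is approved by A and B, both approved by C, which D approves.
# D carries a forged structure whose attached reputation (0.9)
# disagrees with the recomputed value (0.3).
parents = {"G": [], "A": ["G"], "B": ["G"], "C": ["A", "B"], "D": ["C"]}
weights = {tx: own_weight(1.0, 0.8, 0.8) for tx in parents}
weights["D"] = own_weight(1.0, 0.9, 0.3)
cw = cumulative_weights(parents, weights)
print(cw)  # {'G': 4.0, 'A': 2.0, 'B': 2.0, 'C': 1.0, 'D': 0.0}
```

The forged transaction D contributes nothing to its ancestors' weights, so it cannot be used to inflate the path it attaches to.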

- Transaction selection: A node starts the structural reputation verification task and selects two candidate transactions from its local DAG;

- Structure matching evaluation: The node processes the data features of each of the two transactions with its local GNN model and computes their structural similarity to its own data;

- Reputation difference judgment: The locally evaluated structural reputation is compared with the structural reputation attached to the original transaction; if the absolute difference is below a preset threshold, the transaction is considered verified;

- Transaction reference: After verification, the node issues a new transaction that references the hashes of the two verified transactions, adding new edges to the DAG;

- Structural feature broadcast: The node broadcasts the structural summary of the new transaction to nearby vehicles for use by subsequent verifiers.
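The first four verification steps above can be sketched as a single function. The `local_reputation` hook is hypothetical and stands in for running the node's local GNN on a transaction's data features. The broadcast step is not modeled, and the threshold value is illustrative.

```python
import random
from typing import Callable, Dict, List, Optional


def verify_and_reference(
    tips: Dict[str, float],
    local_reputation: Callable[[str], float],
    threshold: float,
    rng: random.Random,
) -> Optional[List[str]]:
    """Steps 1-4 of the verification procedure, under stated assumptions.

    tips             -- candidate transaction hash -> structural reputation attached to it
    local_reputation -- hypothetical hook returning the locally evaluated structural
                        reputation of a transaction (stands in for the local GNN)
    Returns the two parent hashes for the new transaction, or None if either
    candidate fails the reputation difference check.
    """
    candidates = rng.sample(sorted(tips), 2)           # 1. transaction selection
    for h in candidates:
        local = local_reputation(h)                    # 2. structure matching evaluation
        if abs(local - tips[h]) > threshold:           # 3. reputation difference judgment
            return None
    return candidates                                  # 4. parents of the new transaction


# Usage with fixed local evaluations standing in for the GNN.
tips = {"tx_a": 0.80, "tx_b": 0.70}
honest_view = {"tx_a": 0.82, "tx_b": 0.69}
forged_view = {"tx_a": 0.82, "tx_b": 0.30}
print(verify_and_reference(tips, honest_view.get, 0.05, random.Random(0)))
print(verify_and_reference(tips, forged_view.get, 0.05, random.Random(0)))
```

Requiring both candidates to pass before attaching means a single forged parent blocks the reference, which matches the rule that the new transaction points to both verified transactions.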

3.9. Structural Reputation-Oriented Consensus Foundation

- The path length exceeds a preset threshold, ensuring the stability of structural propagation;

- The cumulative weight exceeds a preset threshold, indicating that the transaction structure has been widely accepted;

- Or, the transaction has been referenced by more than M high-reputation nodes by time t.
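The confirmation conditions listed above can be written as a predicate. The list does not state how the first two conditions combine; this sketch assumes they must hold together, with the third ("Or, ...") as an alternative route. The threshold values are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfirmationThresholds:
    min_path_length: int          # path length threshold
    min_cumulative_weight: float  # cumulative weight threshold
    m: int                        # M: number of referencing high-reputation nodes


def is_confirmed(path_length: int, cumulative_weight: float,
                 reputable_refs: int, th: ConfirmationThresholds) -> bool:
    """Assumed reading: the path and weight conditions hold jointly,
    and being referenced by more than M high-reputation nodes is an alternative."""
    stable_path = path_length > th.min_path_length
    widely_accepted = cumulative_weight > th.min_cumulative_weight
    return (stable_path and widely_accepted) or reputable_refs > th.m


th = ConfirmationThresholds(min_path_length=3, min_cumulative_weight=8.0, m=4)
print(is_confirmed(5, 10.0, 0, th), is_confirmed(5, 2.0, 0, th), is_confirmed(1, 1.0, 5, th))
# True False True
```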

- No global synchronization is required, which suits the asynchronous, distributed nature of the IoV;

- Strong resistance to malicious attacks, because a path must accumulate reputation continuously rather than being confirmed in a single step;

- Greater fairness, as vehicles with limited computing power can also obtain verification opportunities through structural stability.

- Fast discovery of high-quality paths: High-reputation transactions are more likely to be visited by the walk, which accelerates consensus on them;

- Stronger isolation of low-quality transactions: Transactions with abnormal structures or low reputation are rarely visited and therefore fade out of the main consensus path;

- Dynamic self-adjustment: The system adjusts automatically to network load, vehicle activity, and reputation, enabling flexible scheduling.
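The walk behavior described above can be illustrated with a walk whose transition probabilities grow exponentially with reputation, similar in spirit to the biased random walk used for tip selection in the IOTA Tangle (which biases by cumulative weight rather than reputation). The exponential form and the bias parameter `beta` are assumptions; tuning `beta` is one way the "dynamic self-adjustment" item could be realized.

```python
import math
import random
from typing import Dict, List


def reputation_walk(children: Dict[str, List[str]], reputation: Dict[str, float],
                    start: str, beta: float, rng: random.Random) -> List[str]:
    """Walk from `start` toward the tips, choosing each next approver with
    probability proportional to exp(beta * reputation). Larger beta biases the
    walk more strongly toward high-reputation transactions; beta = 0 is uniform."""
    path = [start]
    current = start
    while children.get(current):
        options = children[current]
        weights = [math.exp(beta * reputation[c]) for c in options]
        current = rng.choices(options, weights=weights, k=1)[0]
        path.append(current)
    return path  # the last element is the selected tip


children = {"G": ["A", "B"], "A": [], "B": []}
reputation = {"A": 1.0, "B": 0.0}
rng = random.Random(42)
ends = [reputation_walk(children, reputation, "G", beta=5.0, rng=rng)[-1] for _ in range(1000)]
print(ends.count("A") / len(ends))  # should be close to e^5 / (e^5 + 1)
```

Low-reputation tips are still reachable with small probability, so they are deprioritized rather than censored outright.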

- Structural forgery detection mechanism: Randomness checks and mutual verification of the structural embeddings output by the GNN model prevent nodes from privately constructing idealized edge weight matrices to obtain undeserved high reputation;

- Multi-round structural verification: After a transaction is added to the DAG, it undergoes multiple rounds of cross-verification along walk paths to ensure that most nodes evaluate its structural reputation consistently;

- Reputation leakage penalty mechanism: If a node repeatedly submits transactions that are later revoked, its reputation is dynamically downgraded and its transactions are referenced with low probability in subsequent walks, reducing its influence on the system.

4. Deep Reinforcement Node Selection Mechanism Driven by Structural Reputation

4.1. Combinatorial Optimization of Integrated Structural Reputation and Cost Trade-Offs

- (1) Local training cost:

- (2) Communication cost:

- (3) Structural reputation cost:

- (4) Comprehensive cost function:

- (5) Geographic location constraint:

- (6) Optimization modeling:
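The cost terms above are listed without their formulas. As a hedged illustration only, the sketch below uses common forms from the vehicular edge computing literature. Training time is CPU cycles per sample times the number of samples divided by CPU frequency, communication time is model size over a Shannon-capacity rate, the reputation cost is one minus reputation, and the comprehensive cost is a weighted sum. The geographic constraint is modeled as a circular RSU coverage area. All formulas, weights, and the greedy selection (a simple baseline, not the DDPG policy of Section 4.2) are assumptions.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Vehicle:
    vid: str
    cycles_per_sample: float  # CPU cycles to process one training sample
    num_samples: int          # local dataset size
    cpu_hz: float             # CPU frequency
    bandwidth_hz: float       # uplink bandwidth
    snr: float                # linear signal-to-noise ratio
    reputation: float         # structural reputation in [0, 1]
    x: float                  # position (m)
    y: float


def training_cost(v: Vehicle) -> float:
    return v.cycles_per_sample * v.num_samples / v.cpu_hz          # seconds


def communication_cost(v: Vehicle, model_bits: float) -> float:
    rate = v.bandwidth_hz * math.log2(1.0 + v.snr)                 # Shannon rate, bit/s
    return model_bits / rate                                       # seconds


def reputation_cost(v: Vehicle) -> float:
    return 1.0 - v.reputation                                      # low reputation costs more


def total_cost(v: Vehicle, model_bits: float, lam: Sequence[float] = (1.0, 1.0, 1.0)) -> float:
    return (lam[0] * training_cost(v) + lam[1] * communication_cost(v, model_bits)
            + lam[2] * reputation_cost(v))


def in_coverage(v: Vehicle, rsu: Tuple[float, float], radius: float) -> bool:
    return math.hypot(v.x - rsu[0], v.y - rsu[1]) <= radius


def select_nodes(vehicles: List[Vehicle], k: int, model_bits: float,
                 rsu: Tuple[float, float], radius: float) -> List[str]:
    """Greedy baseline: the k lowest-cost vehicles inside the RSU coverage area."""
    feasible = [v for v in vehicles if in_coverage(v, rsu, radius)]
    feasible.sort(key=lambda v: total_cost(v, model_bits))
    return [v.vid for v in feasible[:k]]


fleet = [
    Vehicle("v1", 1e4, 1000, 1e9, 1e6, 15.0, 0.9, 10.0, 0.0),
    Vehicle("v2", 1e4, 1000, 1e8, 1e6, 15.0, 0.5, 50.0, 0.0),
    Vehicle("v3", 1e4, 1000, 1e10, 1e6, 15.0, 1.0, 900.0, 0.0),  # cheapest, but out of range
]
print(select_nodes(fleet, k=2, model_bits=4e6, rsu=(0.0, 0.0), radius=300.0))  # ['v1', 'v2']
```

Vehicle v3 has the lowest comprehensive cost but is excluded by the coverage constraint, which is why the constraint is kept separate from the cost function rather than folded into it.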

4.2. Proposed DDPG Node Selection Algorithm Driven by Structural Reputation

- (1) Critic network design and training:

- (2) Actor network design and update strategy:

- (3) Reward function design (structural reputation-driven mechanism):
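The DDPG components listed above follow the standard actor-critic scheme of Lillicrap et al. A reduced sketch of the critic side, assuming a linear critic instead of a neural network: the TD target, one critic gradient step, and the soft target update. The reward (the selected vehicles' total reputation minus a weighted total cost) is a hypothetical stand-in for the structural reputation-driven reward, whose formula is not given here, and the actor update is omitted.

```python
import numpy as np


def td_target(reward: float, next_q: float, gamma: float, done: bool) -> float:
    """Critic target y = r + gamma * Q'(s', mu'(s')), with no bootstrap at terminal steps."""
    return reward + gamma * (0.0 if done else 1.0) * next_q


def critic_step(w: np.ndarray, x: np.ndarray, y: float, lr: float) -> np.ndarray:
    """One gradient step on 0.5 * (Q - y)^2 for a linear critic Q(s, a) = w . x, x = [s, a]."""
    q = float(w @ x)
    return w - lr * (q - y) * x


def soft_update(target: list, source: list, tau: float) -> list:
    """Target parameters track the learned ones: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * s + (1.0 - tau) * t for t, s in zip(target, source)]


def reputation_reward(action: np.ndarray, reputation: np.ndarray,
                      cost: np.ndarray, lam: float) -> float:
    """Hypothetical reward: total structural reputation of the selected vehicles
    minus lam times their total cost; action entries >= 0.5 mean 'selected'."""
    selected = action >= 0.5
    return float(reputation[selected].sum() - lam * cost[selected].sum())


# Usage: one critic update toward the TD target, then a soft target update.
action = np.array([0.9, 0.2, 0.6])
r = reputation_reward(action, np.array([0.8, 0.9, 0.5]), np.array([1.0, 1.0, 2.0]), lam=0.1)
state = np.array([0.3, 0.7])
x = np.concatenate([state, action])
w = np.zeros(5)
w_target = [np.zeros(5)]
y = td_target(r, next_q=0.0, gamma=0.99, done=False)
w_new = critic_step(w, x, y, lr=0.1)
w_target = soft_update(w_target, [w_new], tau=0.005)
print(r, float(w_new @ x))
```

A small `tau` makes the target critic change slowly, which is what keeps the bootstrapped targets stable in DDPG.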

5. Experiments

5.1. Dataset

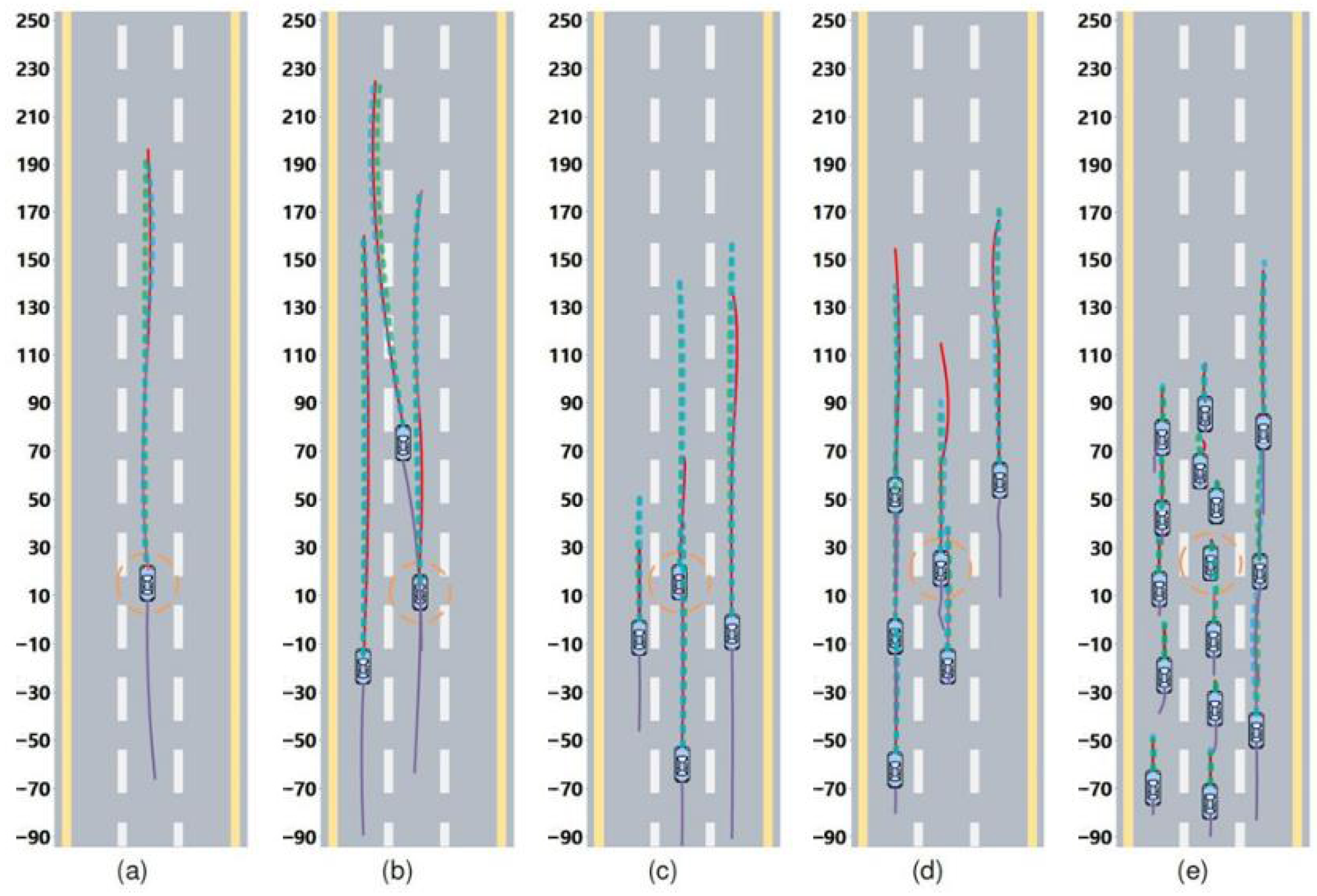

5.2. Experimental Results

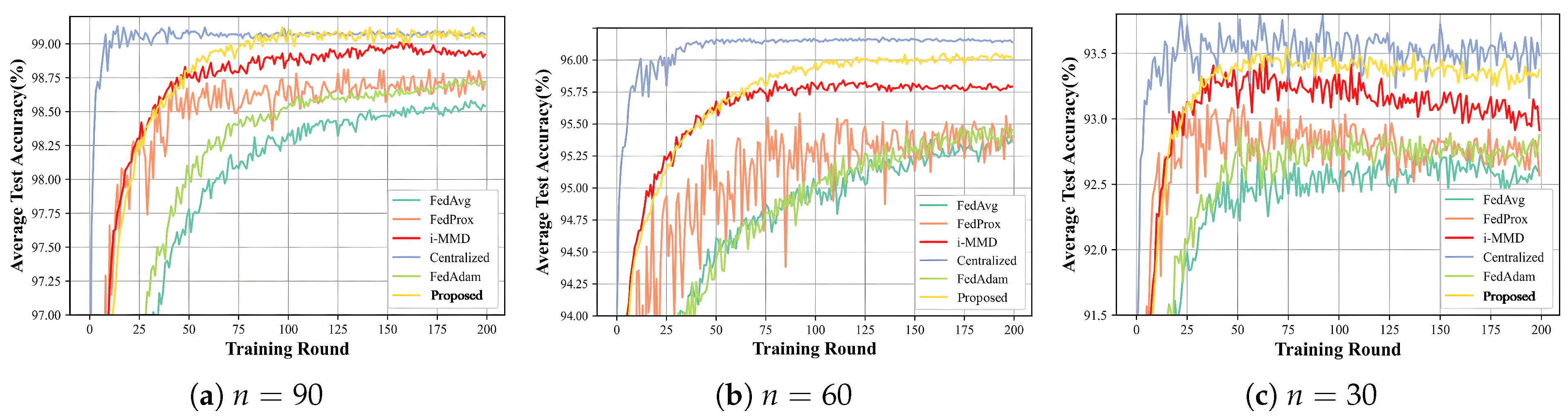

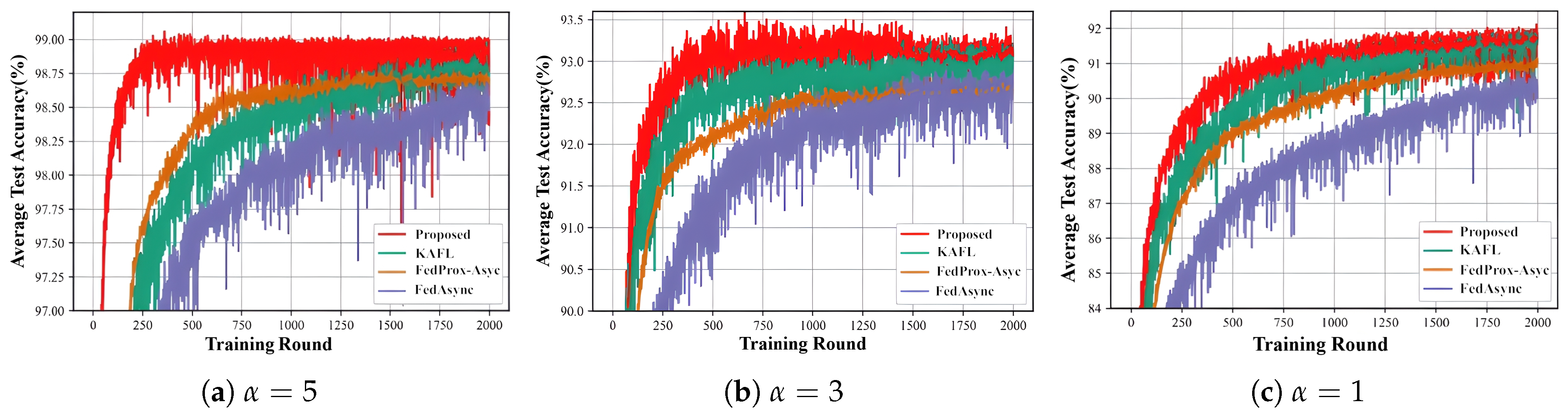

Performance Analysis of Synchronous Algorithms

- FedAvg (blue line): The federated averaging algorithm, the basic method of federated learning. It updates the global model by averaging the clients' locally trained models, weighted by their local data sizes;

- FedProx (red line): The federated proximal algorithm, an extension of FedAvg. It adds a proximal term to the local objective to handle system heterogeneity, stabilizing training by limiting how far local updates deviate from the global model;

- i-MMD (green line): An algorithm based on maximum mean discrepancy (MMD), which measures differences in data distribution between data providers. A smaller i-MMD value indicates more similar data and a smaller impact on model accuracy;

- Centralized (purple line): Centralized learning, used as an upper-bound performance reference;

- FedAdam (brown line): The federated adaptive optimization algorithm, which brings the Adam optimizer into the federated learning framework, combining adaptive learning rates with decentralized training;

- Proposed (yellow line): The algorithm proposed in this paper.
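The i-MMD baseline builds on maximum mean discrepancy. The i-MMD variant itself is not described here, so the sketch shows only the standard biased estimate of squared MMD with a Gaussian kernel (Gretton et al.); the kernel bandwidth and the synthetic provider data are illustrative.

```python
import numpy as np


def gaussian_kernel(x: np.ndarray, y: np.ndarray, sigma: float) -> np.ndarray:
    """K[i, j] = exp(-||x_i - y_j||^2 / (2 sigma^2))."""
    sq = (x ** 2).sum(axis=1)[:, None] + (y ** 2).sum(axis=1)[None, :] - 2.0 * x @ y.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma ** 2))  # clip tiny negative rounding errors


def mmd2_biased(x: np.ndarray, y: np.ndarray, sigma: float = 1.0) -> float:
    """Biased estimate of squared MMD between samples x (n, d) and y (m, d)."""
    return float(gaussian_kernel(x, x, sigma).mean()
                 + gaussian_kernel(y, y, sigma).mean()
                 - 2.0 * gaussian_kernel(x, y, sigma).mean())


rng = np.random.default_rng(3)
provider_a = rng.normal(0.0, 1.0, size=(200, 2))
provider_b = rng.normal(0.0, 1.0, size=(200, 2))   # same distribution as provider_a
provider_c = rng.normal(3.0, 1.0, size=(200, 2))   # shifted distribution
print(mmd2_biased(provider_a, provider_b), mmd2_biased(provider_a, provider_c))
```

Consistent with the description above, providers drawn from the same distribution yield a small value and the shifted provider a much larger one.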

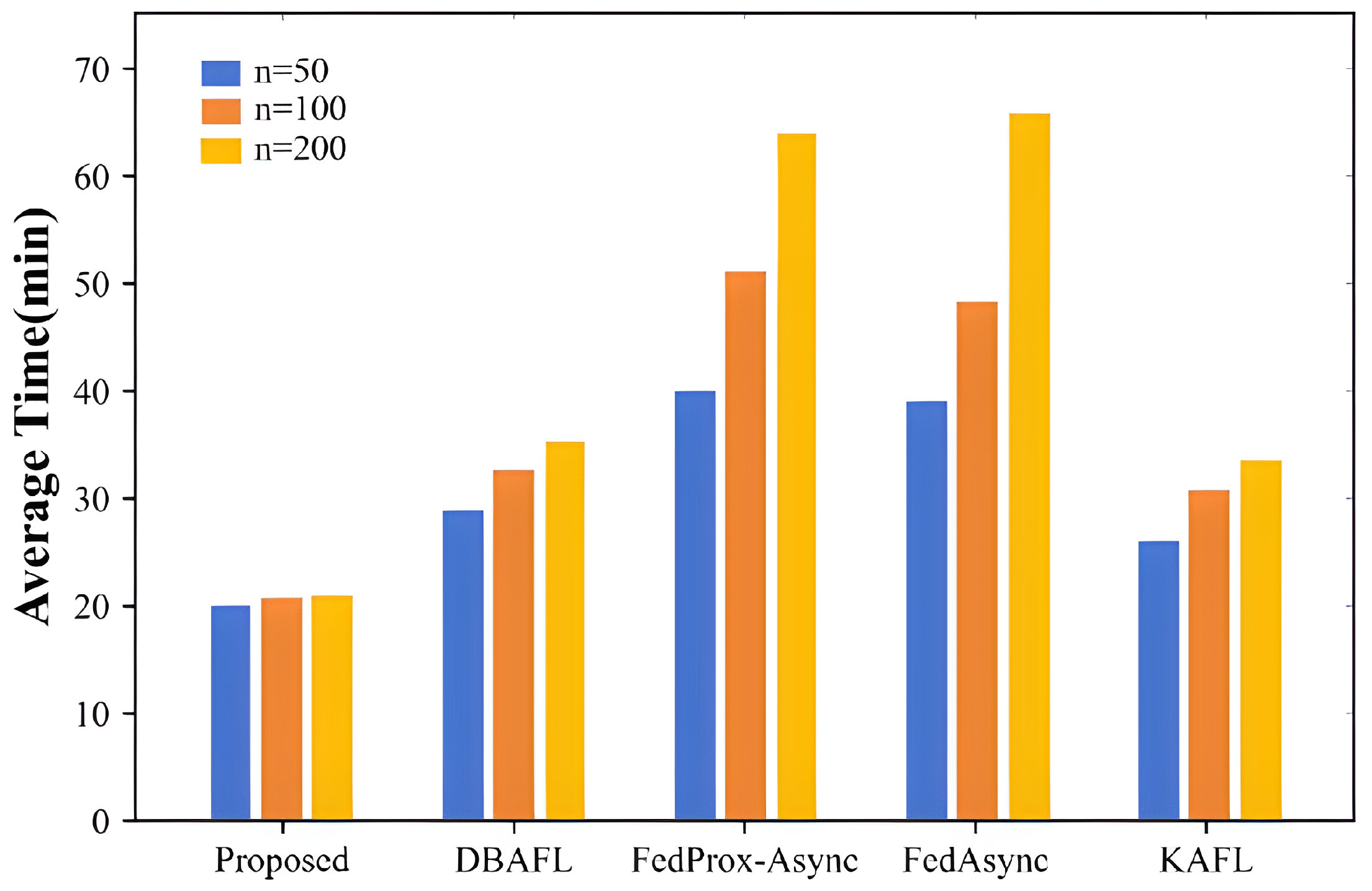

- Proposed (red line): the asynchronous federated learning algorithm we proposed;

- KAFL (green line): the knowledge-aware federated learning algorithm, which uses knowledge distillation technology to allow clients to partially share model information based on its usefulness instead of applying a complete model update;

- FedProx-Async (orange line): the asynchronous federated proximal algorithm, which combines FedProx with FedAsync and retains the proximal term in the asynchronous setting to stabilize updates against the inaccuracy caused by device heterogeneity and outliers;

- FedAsync (purple line): the federated asynchronous learning algorithm, an asynchronous version of federated learning in which local model updates from different clients reach the server at different times and the server does not wait for all clients to finish local training.
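FedAsync, the basis of two of the asynchronous baselines above, mixes each arriving client model into the global model with a weight that shrinks as the update grows stale. A minimal sketch using the polynomial staleness function from Xie et al.'s asynchronous federated optimization; alpha = 0.6 and a = 0.5 are illustrative defaults, not the settings used in these experiments.

```python
import numpy as np


def polynomial_staleness(staleness: int, a: float = 0.5) -> float:
    """s(t - tau) = (t - tau + 1)^(-a): fresher updates get weights closer to 1."""
    return (staleness + 1.0) ** (-a)


def fedasync_update(global_w: np.ndarray, client_w: np.ndarray,
                    staleness: int, alpha: float = 0.6, a: float = 0.5) -> np.ndarray:
    """Mix one arriving client model into the global model:
    w <- (1 - alpha_t) * w + alpha_t * w_client, with alpha_t = alpha * s(staleness)."""
    alpha_t = alpha * polynomial_staleness(staleness, a)
    return (1.0 - alpha_t) * global_w + alpha_t * client_w


w = np.zeros(2)
client = np.ones(2)
fresh = fedasync_update(w, client, staleness=0)   # alpha_t = 0.6
stale = fedasync_update(w, client, staleness=3)   # alpha_t = 0.6 * 4 ** -0.5 = 0.3
print(fresh, stale)
```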

- Proposed: our proposed algorithm;

- DBAFL: blockchain-based asynchronous federated learning algorithm;

- FedProx-Async: asynchronous federated proximal algorithm;

- FedAsync: federated asynchronous learning algorithm;

- KAFL: knowledge-aware federated learning algorithm.

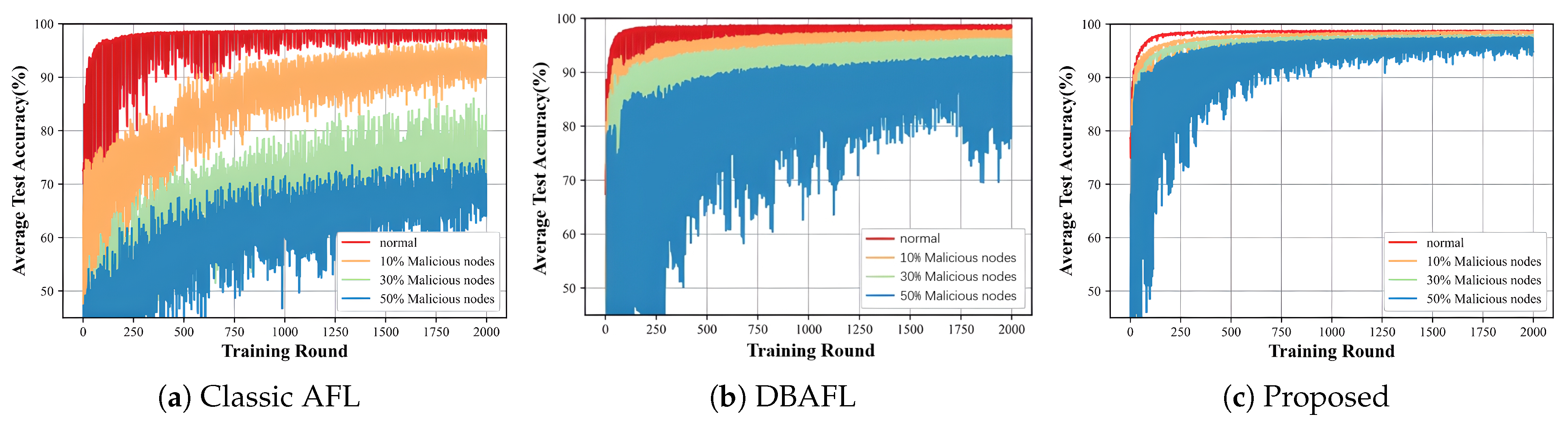

- Classic AFL (blue area): Classic asynchronous federated learning algorithm, using traditional parameter aggregation method;

- DBAFL (orange area): Decentralized blockchain-assisted federated learning algorithm, using blockchain technology to enhance the decentralized characteristics and security of the system;

- Proposed (red area): Our proposed federated learning algorithm with enhanced robustness.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Participants | Proposed | PoQRBFL | PoTQBFL | FoolsGold | FLTrust | LAFED |
|---|---|---|---|---|---|---|
| n = 30 | 0.936 | 0.921 | 0.909 | 0.922 | 0.919 | 0.915 |
| n = 60 | 0.962 | 0.957 | 0.917 | 0.931 | 0.942 | 0.948 |
| n = 90 | 0.992 | 0.974 | 0.963 | 0.979 | 0.966 | 0.977 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, W.; Zhou, Y. Improved Federated Learning Incentive Mechanism Algorithm Based on Explainable DAG Similarity Evaluation. Mathematics 2025, 13, 3507. https://doi.org/10.3390/math13213507
