Bayesian Network Applications in Decision Support Systems

Abstract

1. Introduction to Decision Support Systems

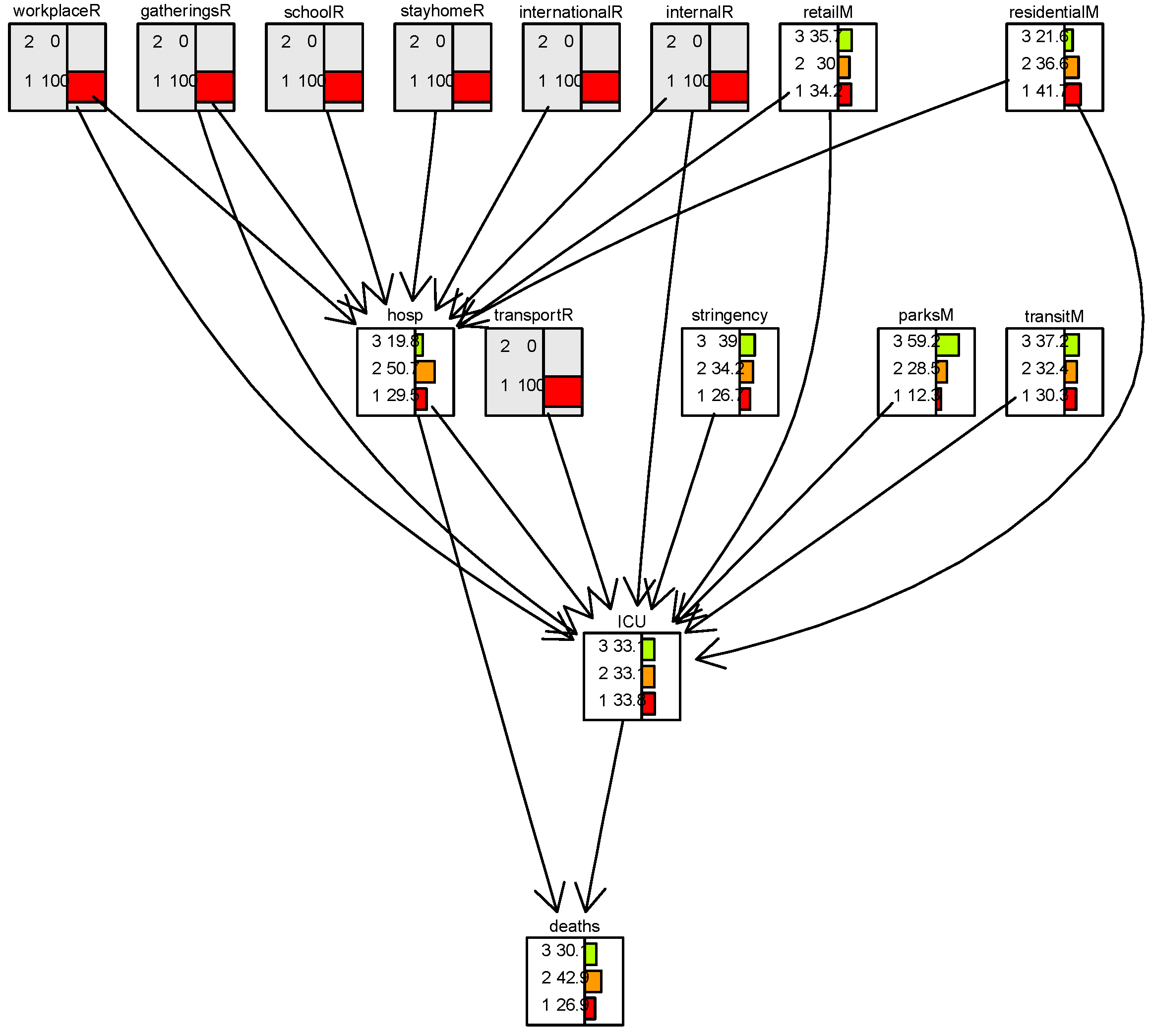

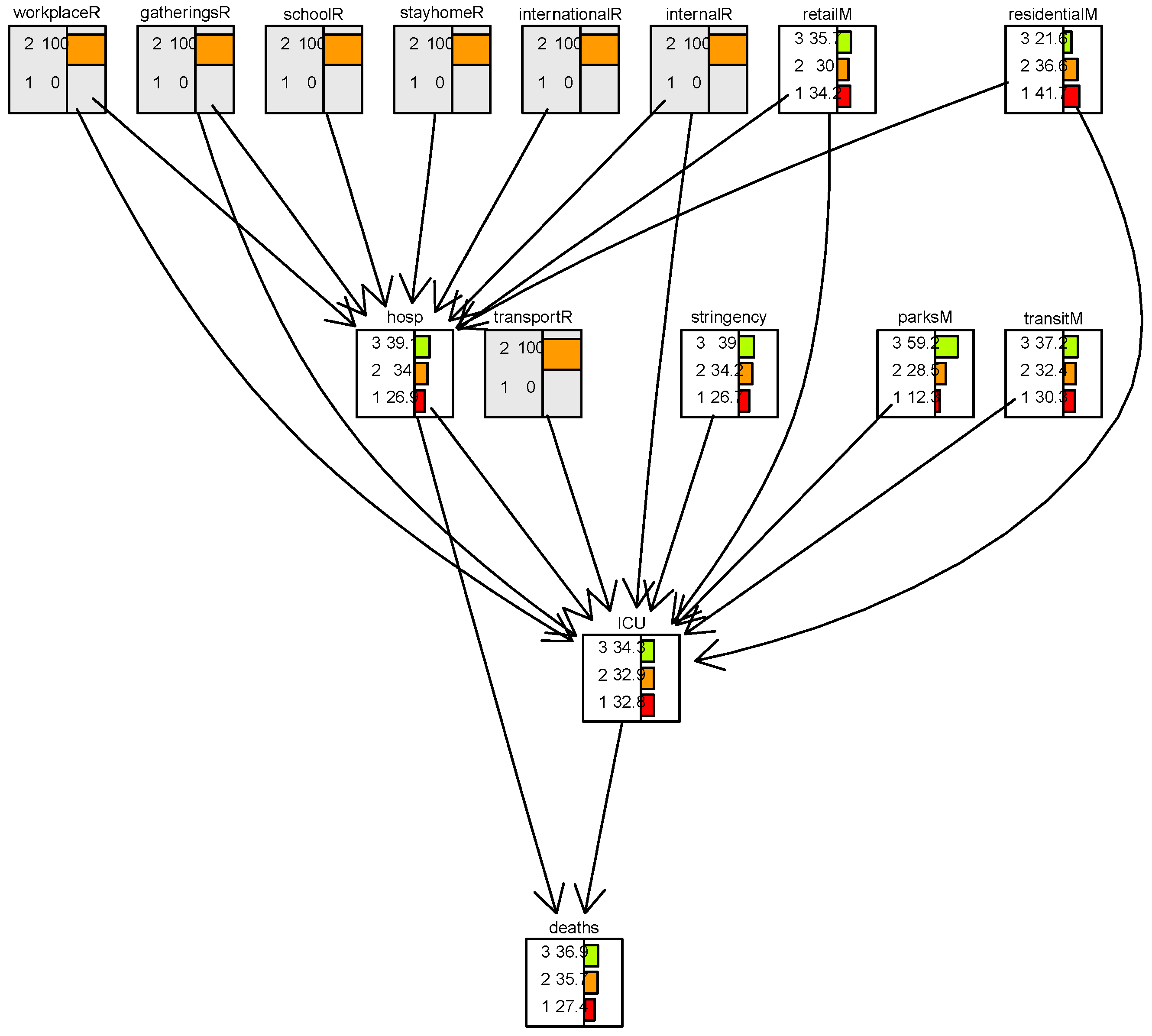

2. The COVID-19 Case Study

3. The Website Usability Case Study

- i. The time visitors wait until the file download begins. This is used as a measure of page responsiveness.

- ii. The download time. This is used as a measure of page performance.

- iii. The time from download completion to the visitor’s request for the next page. This interval includes the time the visitor spends reading the page content, but also time spent on other activities, some of them unrelated to the page content.
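The three intervals above can be derived from per-hit timestamps. The following is a minimal sketch that assumes a hypothetical log record with four timestamps (page requested, download started, download ended, next page requested); the `PageHit` and `hit_intervals` names and fields are illustrative and are not taken from DSUID or from any particular server log format.

```python
from dataclasses import dataclass


@dataclass
class PageHit:
    """Hypothetical per-hit timestamps, in seconds since session start."""
    requested: float         # visitor requests the page
    download_started: float  # first byte of the file arrives
    download_ended: float    # download completes
    next_request: float      # visitor requests the next page


def hit_intervals(hit: PageHit) -> dict:
    """Split one page hit into the three intervals (i)-(iii)."""
    return {
        # (i) waiting time before the download begins: page responsiveness
        "responsiveness": hit.download_started - hit.requested,
        # (ii) download time: page performance
        "download_time": hit.download_ended - hit.download_started,
        # (iii) time until the next request: reading plus unrelated activity
        "response_time": hit.next_request - hit.download_ended,
    }


if __name__ == "__main__":
    hit = PageHit(requested=0.0, download_started=0.5,
                  download_ended=2.0, next_request=32.0)
    print(hit_intervals(hit))
```

Note that interval (iii) cannot separate reading time from unrelated activity; that ambiguity is inherent in the log data, not in the computation.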

- Design: At this stage, data are collected and analyzed. System architects and designers develop guidelines and operating procedures that represent accumulated knowledge and experience in preventing operational failures. A prototype DSUID is then developed.

- Testing: The prototype DSUID is subjected to beta testing. Testing is repeated whenever new website versions are launched, to evaluate how they are actually being used.

- Tracking: Ongoing DSUID tracking systems are required to handle changing operational patterns. Statistical process control (SPC) is employed to monitor the user experience by comparing actual results with expected results and acting on the gaps.
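As one illustration of the SPC tracking step, the sketch below uses a Shewhart individuals chart. The chart type, the baseline period, and the monitored metric (here, a hypothetical daily average download time) are assumptions for illustration, not DSUID's documented configuration. Control limits are estimated from a baseline period using the average moving range (sigma estimated as the mean moving range divided by d2 = 1.128), and new observations falling outside the limits are flagged as gaps to act on.

```python
from statistics import mean

D2_N2 = 1.128  # bias-correction constant d2 for moving ranges of size 2


def individuals_limits(baseline):
    """Return (LCL, center, UCL) for an individuals (X) chart."""
    center = mean(baseline)
    # Moving ranges between consecutive baseline observations
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = mean(moving_ranges) / D2_N2
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_control(new_values, limits):
    """Indices of new observations falling outside the control limits."""
    lcl, _, ucl = limits
    return [i for i, x in enumerate(new_values) if x < lcl or x > ucl]


if __name__ == "__main__":
    # Hypothetical daily average download times (seconds)
    baseline = [10, 12, 11, 13, 12]
    limits = individuals_limits(baseline)
    print(limits)
    print(out_of_control([12, 20, 5], limits))
```

Estimating sigma from moving ranges rather than the overall standard deviation keeps the limits less sensitive to slow drifts in the baseline period, which is the usual design choice for individuals charts.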

- The first and lowest layer: user activity. This layer records significant user actions (those involving screen changes or server-side processing).

- The second layer: page hit attributes. This layer consists of download time, processing time, and user response time.

- The third layer: transition analysis. This layer covers transitions and repeated form submissions (the latter indicative of visitors’ difficulties in filling in forms).

- The fourth layer: user problem indicator identification. This layer identifies indicators of possible navigational difficulty, including (a) site exits, estimated from the time elapsed until the next user action (since no exit event is recorded in the server log file), (b) backward navigation, and (c) transitions to main pages, interpreted as escaping the current subtask.

- The fifth layer: usage data. This layer consists of usage statistics, such as the following:

  - Average entry time;

  - Average download time;

  - Average time between repeated form submissions;

  - Average time on website (indicating content-related behavior);

  - Average time on a previous screen (indicating ease of link finding).

- The sixth layer: statistical decision. For each page attribute, DSUID compares the data over the exceptional page views with those over all page views. The null hypothesis is that, for each attribute, the statistics of both samples are the same. A simple two-tailed t-test can be used to reject this hypothesis and thereby conclude that certain page attributes are potentially problematic. A typical significance level is 5%.

- The seventh and top layer: interpretation. For each page attribute, DSUID provides a list of possible reasons for the difference between the statistics over the exceptional navigation patterns and those over all page hits. Typically, the usability analyst decides which of the potential sources of visitors’ difficulties applies to the particular deficiency.
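The sixth-layer decision can be sketched as a pooled-variance (Student) two-sample t-test with a two-sided p-value, computed here with the Python standard library only: the p-value for t with df degrees of freedom is the regularized incomplete beta function I evaluated at df/(df + t²) with parameters (df/2, 1/2), computed by a continued fraction. The pooled-variance variant, the function names (`two_sample_t_test`, `is_problematic`), and the example data are assumptions for illustration; where SciPy is available, `scipy.stats.ttest_ind` performs the same pooled test.

```python
import math
from statistics import mean, variance


def _beta_cf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log(1.0 - x))
    front = math.exp(log_front)
    # Use the continued fraction where it converges quickly
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def two_sample_t_test(sample_a, sample_b):
    """Pooled-variance two-sample t-test; returns (t, two-sided p-value).

    Each sample needs at least two observations.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / df
    se = math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    t = (mean(sample_a) - mean(sample_b)) / se
    p = reg_inc_beta(df / 2.0, 0.5, df / (df + t * t))
    return t, p


def is_problematic(exceptional, all_views, alpha=0.05):
    """Flag a page attribute whose exceptional-view statistics differ."""
    _, p = two_sample_t_test(exceptional, all_views)
    return p < alpha
```

For example, `is_problematic(download_times_exceptional, download_times_all)` (both hypothetical lists of per-view download times) returns `True` when the null hypothesis is rejected at the 5% level, marking download time as a candidate for interpretation in the seventh layer.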

4. The Political Conflict Resolution Case Study

- (i) Integrating data from different sources, with different update timings and units;

- (ii) Defining composite indicators that provide unified views;

- (iii) Tracking and modeling trends at various levels of the system hierarchy;

- (iv) Analyzing alternative scenarios to support decision makers.

- I. Map: Mapping categorizes factors into economic, security, and geospatial (demographic) domains. In this phase, experts determine indicators that reflect domains of control by geographical area. A methodology supporting this phase is the Goal Question Metric (GQM) approach presented in Van Solingen et al. [22]. The GQM steps are to (1) generate a set of goals, (2) derive a set of questions relating to the goals, and (3) develop a set of metrics needed to answer the questions. Data can be viewed using dynamic graphs that allow zooming in on any individual indicator and navigating the data hierarchy.

- II. Construct: This stage involves developing an integrated database combining indicators from different domains, by year or by quarter. In this phase, research teams load data into a database and compute indices relative to a common annual baseline. The politography decision support system updates a configuration file with indicator names and identifies missing values and outliers. We determine data subsets for use in integration (by year or quarter) and conduct linkage analysis to obtain integrated data.

- III. Identify: Here, trends are identified in individual and composite indicators, and relative control levels are computed by year and by entity over one of the predetermined territories listed above. For trend analysis, we compute composite indicators by domain using the median. Bar charts, trend charts, and variable cluster analysis are used to identify the most representative indicator of each cluster. In addition, domains are combined to compute an overall trend with a composite indicator. Composite indicators are defined by first computing individual indicators relative to a base year and then taking the yearly median across indicators. To derive the combined composite indicators, we define for each indicator $Y_i(x)$ a desirability function $d_i(Y_i)$, which assigns numbers between 0 and 1 to the values of $Y_i$. The value $d_i(Y_i) = 0$ represents an undesirable value of $Y_i$, and $d_i(Y_i) = 1$ represents a desirable or ideal value. The individual desirabilities are then combined into an overall desirability index $D$ using their geometric mean:

  $$D = \left[ d_1(Y_1) \times d_2(Y_2) \times \cdots \times d_k(Y_k) \right]^{1/k},$$

  where $k$ denotes the number of indicators. Notice that if any response $Y_i$ is completely undesirable ($d_i(Y_i) = 0$), then the overall desirability is zero. To account for this “zero control,” we apply an additional step that mitigates such cases, and the resulting desirability function is used as a composite indicator based on the individual indicators. The final composite indicators are plotted on a Y by X graph with four triangular quadrants, each triangle representing a different combination of Israeli and PA control levels. For more on desirability functions, see Derringer and Suich [23].

- IV.

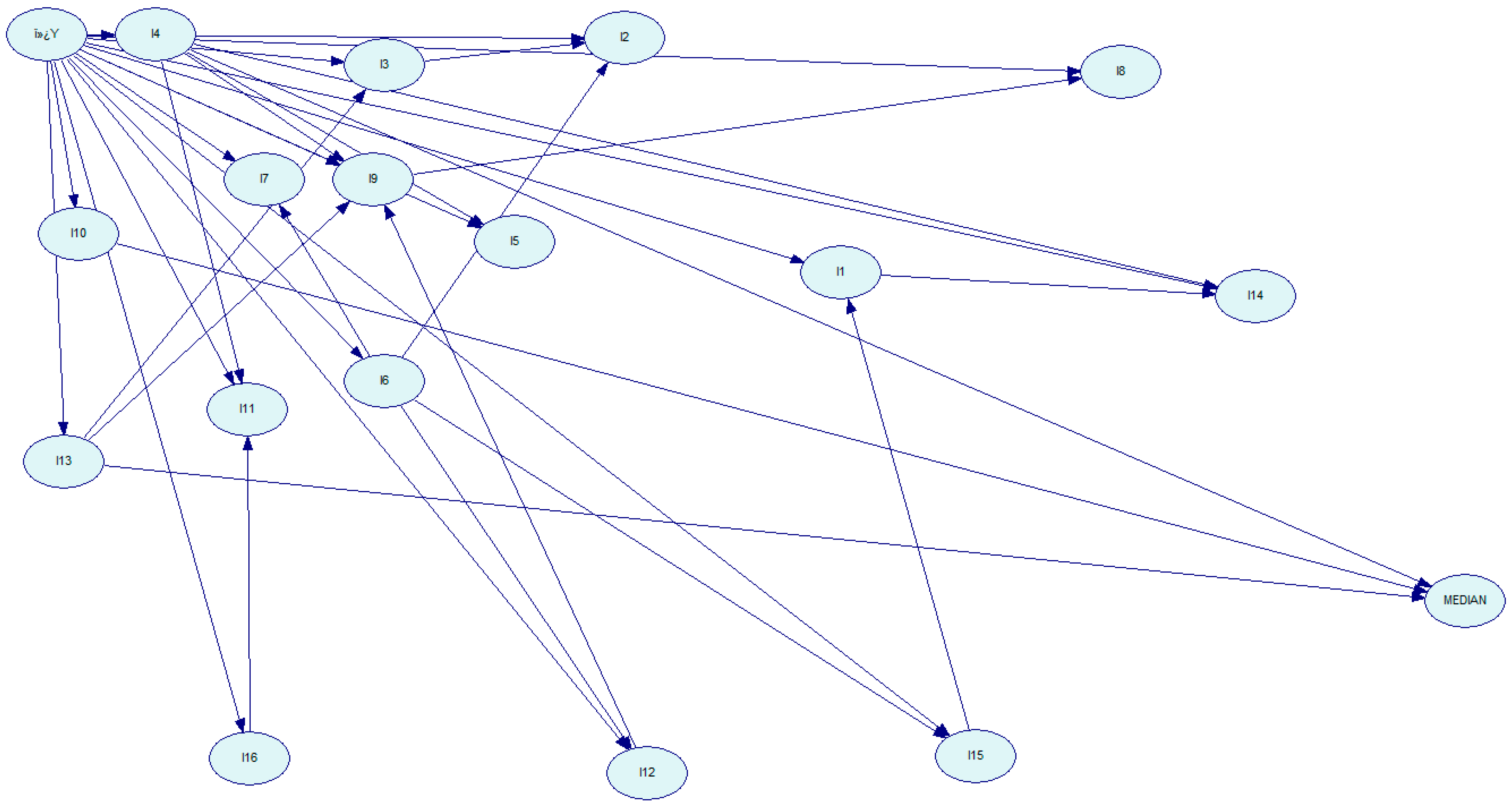

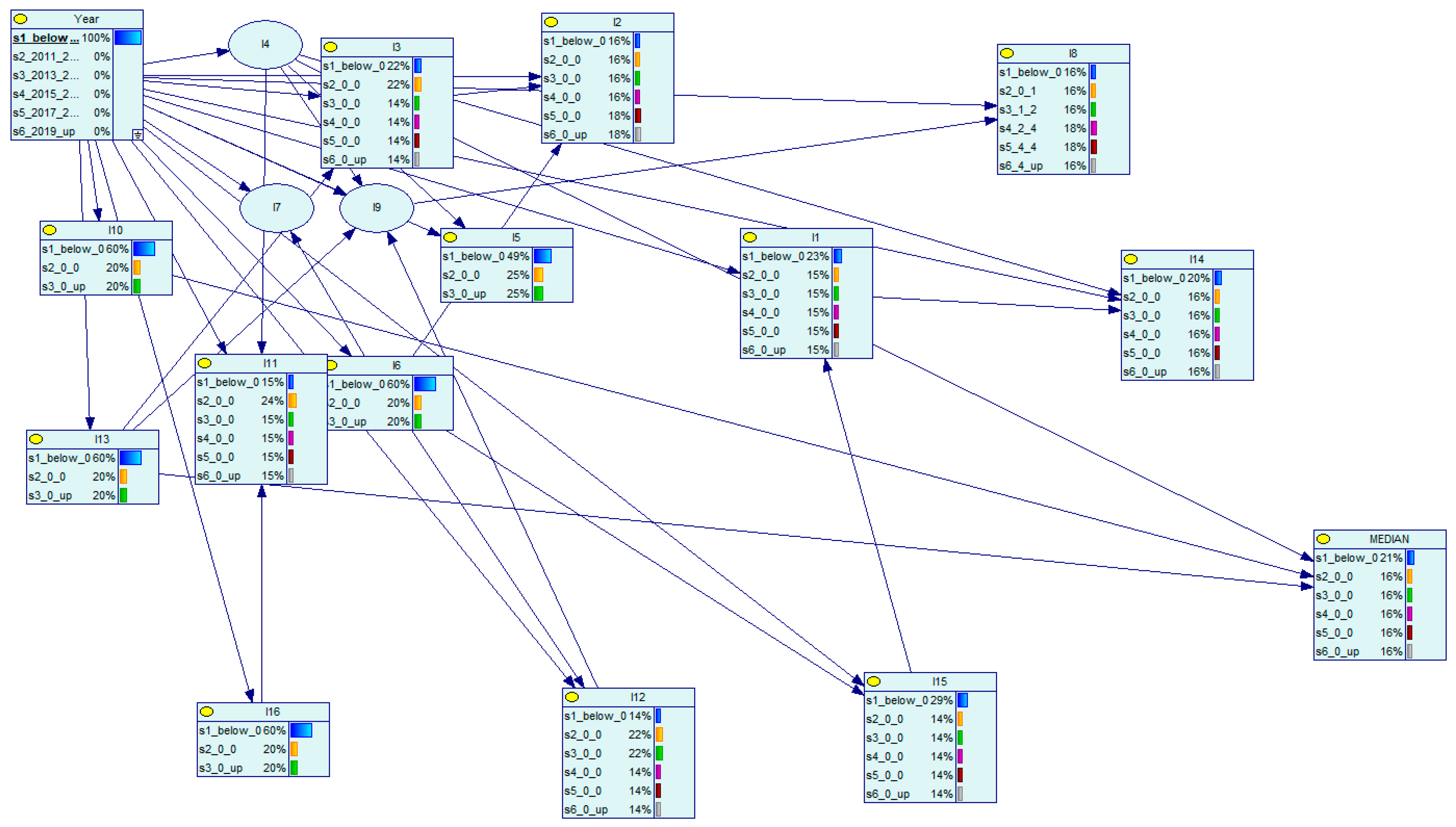

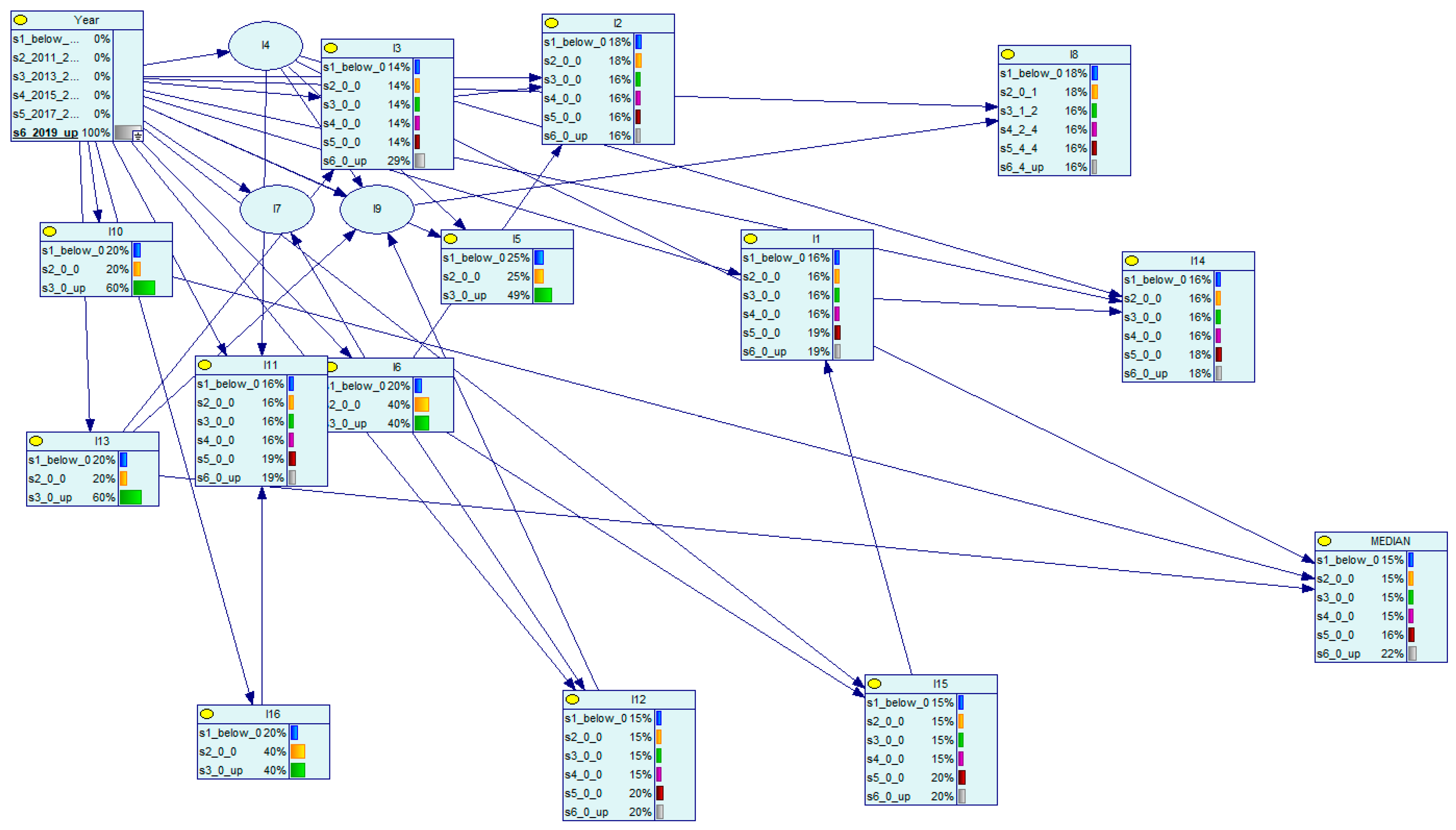

- Analyze: Scenarios are analyzed by determining the list and contribution of indicators affecting target indicators. Here, we apply Bayesian network analysis in order to understand the links between indicators from the same or different domains, and how changes in the level of one indicator influence the other indicators. This provides the ability to run what-if scenarios to assist policymakers in making informed decisions that account for the consequences of policy decisions. Below, we demonstrate an application of Bayesian networks to a subset of 18 indicators labeled I1–I16 over 12 years (2010–2021). The data analyzed is calibrated to the year 2022 as the baseline. The last column, labeled MEDIAN, is the median of the row used as a composite indicator representing the specific year. We first discretized the data and the indicator data was classified into three groups of equal width.
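The composite-indicator and discretization steps described in stages III and IV can be sketched as follows. The sketch assumes that "relative to a base year" means the ratio of each value to its base-year value, uses a hypothetical linear larger-is-better desirability with analyst-chosen bounds (the exponent-1 case of the one-sided form in Derringer and Suich [23]), and bins each indicator into three equal-width groups. All function names and data are illustrative, and the additional “zero control” mitigation step is not reproduced. The discretized values could then be passed to a Bayesian network structure-learning tool.

```python
import math
from statistics import median


def relative_to_base(series, base_year):
    """Index each year's value to the base-year value (ratio; an assumption)."""
    base = series[base_year]
    return {year: value / base for year, value in series.items()}


def yearly_median(indexed_indicators, year):
    """Composite indicator for one year: median across indicators."""
    return median(ind[year] for ind in indexed_indicators)


def linear_desirability(y, low, high):
    """Larger-is-better desirability: 0 at or below low, 1 at or above high."""
    if y <= low:
        return 0.0
    if y >= high:
        return 1.0
    return (y - low) / (high - low)


def overall_desirability(desirabilities):
    """Geometric mean of individual desirabilities; 0 if any d_i is 0."""
    k = len(desirabilities)
    return math.prod(desirabilities) ** (1.0 / k)


def equal_width_bins(values, k=3):
    """Assign each value to one of k equal-width groups (0 .. k-1)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / k
    # The maximum value lies on the upper edge, so clamp it into the top bin
    return [min(int((v - lo) / width), k - 1) for v in values]


if __name__ == "__main__":
    # Hypothetical raw values for two indicators, base year 2022
    i1 = relative_to_base({2020: 50.0, 2021: 80.0, 2022: 100.0}, 2022)
    i2 = relative_to_base({2020: 30.0, 2021: 24.0, 2022: 20.0}, 2022)
    print({y: yearly_median([i1, i2], y) for y in (2020, 2021, 2022)})
    d = [linear_desirability(v, low=0.0, high=2.0) for v in (i1[2021], i2[2021])]
    print(overall_desirability(d))
    print(equal_width_bins([i1[y] for y in (2020, 2021, 2022)]))
```

Because the geometric mean multiplies the desirabilities, a single indicator with $d_i = 0$ drives the overall index to zero, which is exactly the behavior the “zero control” mitigation step is meant to address.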

5. Discussion and Future Research Pathways

Funding

Data Availability Statement

Conflicts of Interest

References

- Keen, P.G. Decision support systems: A research perspective. In Decision Support Systems: Issues and Challenges: Proceedings of an International Task Force Meeting; Center for Information Systems Research, Massachusetts Institute of Technology, Sloan School of Management: Boston, MA, USA, 1980; pp. 23–44.

- Sprague, R.H., Jr. A framework for the development of decision support systems. In MIS Quarterly; Carlson School of Management University of Minnesota: Minneapolis, MN, USA, 1980; pp. 1–26.

- Bonczek, R.H.; Holsapple, C.W.; Whinston, A.B. Foundations of Decision Support Systems; Academic Press: Cambridge, MA, USA, 2014.

- Hanke, F.; Bita, I.M.; von Heißen, O.; Julian, W.; Aschot, H.; Roman, D. AI-augmented systems engineering: Conceptual application of retrieval-augmented generation for model-based systems engineering graph. Proc. Des. Soc. 2025, 5, 439–448.

- Kenett, R.S.; Harel, A.; Ruggeri, F. Agile Testing with User Data in Cloud and Edge Computing Environments. In Analytic Methods in Systems and Software Testing; John Wiley and Sons: Hoboken, NJ, USA, 2018; pp. 353–371.

- Pearl, J. Causal diagrams for empirical research. Biometrika 1995, 82, 669–688.

- Kenett, R.S. On generating high InfoQ with Bayesian networks. Qual. Technol. Quant. Manag. 2016, 13, 309–332.

- Kenett, R.S. Bayesian networks: Theory, applications and sensitivity issues. Encycl. Semant. Comput. Robot. Intell. 2017, 1, 1630014.

- Kenett, R.S. Introduction aux Réseaux Bayésiens et Leurs Applications in Statistique et causalité; Bertrand, F., Saporta, G., Thomas-Agnan, C., Eds.; Editions Technip: Paris, France, 2021.

- Pearl, J.; Mackenzie, D. The Book of Why: The New Science of Cause and Effect; Basic Books: New York, NY, USA, 2018.

- Zhang, Y.; Kim, S. Gaussian Graphical Model Estimation and Selection for High-Dimensional Incomplete Data Using Multiple Imputation and Horseshoe Estimators. Mathematics 2024, 12, 1837.

- Scutari, M.; Denis, J.B. Bayesian Networks: With Examples in R; Chapman and Hall/CRC: Boca Raton, FL, USA, 2021.

- Kenett, R.S.; Manzi, G.; Rapaport, C.; Salini, S. Integrated analysis of behavioural and health COVID-19 data combining Bayesian networks and structural equation models. Int. J. Environ. Res. Public Health 2022, 19, 4859.

- Bargain, O.; Aminjonov, U. Trust and compliance to public health policies in times of COVID-19. J. Public Econ. 2020, 192, 104316.

- Borgonovi, F.; Andrieu, E. Bowling together by bowling alone: Social capital and COVID-19. Soc. Sci. Med. 2020, 265, 113501.

- Yilmazkuday, H. Stay-at-home works to fight against COVID-19: International evidence from Google mobility data. J. Hum. Behav. Soc. Environ. 2021, 31, 210–220.

- He, C.; Di, R.; Tan, X. Bayesian Network Structure Learning Using Improved A* with Constraints from Potential Optimal Parent Sets. Mathematics 2023, 11, 3344.

- Scutari, M. Learning Bayesian networks with the bnlearn R package. J. Stat. Softw. 2010, 35, 1–22.

- Harel, A.; Kenett, R.S.; Ruggeri, F. Modeling web usability diagnostics on the basis of usage statistics. In Statistical Methods in e-Commerce Research; John Wiley and Sons: Hoboken, NJ, USA, 2008; pp. 131–172.

- Almasi, S.; Bahaadinbeigy, K.; Ahmadi, H.; Sohrabei, S.; Rabiei, R. Usability evaluation of dashboards: A systematic literature review of tools. BioMed Res. Int. 2023, 2023, 9990933.

- Arieli, S.; Jacob, R.B.; Hirschberger, G.; Hirsch-Hoefler, S.; Kenett, A.; Kenett, R.S. A Decision Support Tool Integrating Data and Advanced Modeling. 2024. Available online: https://www.preprints.org/frontend/manuscript/2ca01bfd9883060d7dc9f200b43b2a46/download_pub (accessed on 20 October 2024).

- Van Solingen, R.; Basili, V.; Caldiera, G.; Rombach, H.D. Goal Question Metric approach. In Encyclopedia of Software Engineering; John Wiley and Sons: Hoboken, NJ, USA, 2002.

- Derringer, G.; Suich, R. Simultaneous optimization of several response variables. J. Qual. Technol. 1980, 12, 214–219.

- Kenett, R.S.; Bortman, J. The digital twin in Industry 4.0: A wide-angle perspective. Qual. Reliab. Eng. Int. 2022, 38, 1357–1366.

- Elkefi, S.; Asan, O. Digital twins for managing health care systems: Rapid literature review. J. Med. Internet Res. 2022, 24, e37641.

- Yossef Ravid, B.; Aharon-Gutman, M. The social digital twin: The social turn in the field of smart cities. Environ. Plan. B Urban Anal. City Sci. 2023, 50, 1455–1470.

- Pittavino, M.; Dreyfus, A.; Heuer, C.; Benschop, J.; Wilson, P.; Collins-Emerson, J.; Torgerson, P.R.; Furrer, R. Comparison between generalized linear modelling and additive Bayesian network; identification of factors associated with the incidence of antibodies against Leptospira interrogans sv Pomona in meat workers in New Zealand. Acta Trop. 2017, 173, 191–199.

- Carrodano, C. Data-driven risk analysis of nonlinear factor interactions in road safety using Bayesian networks. Sci. Rep. 2024, 14, 18948.

- Ruggeri, F.; Banks, D.; Cleveland, W.S.; Fisher, N.I.; Escobar-Anel, M.; Giudici, P.; Raffinetti, E.; Hoerl, R.W.; Lin, D.K.J.; Kenett, R.S.; et al. Is There a Future for Stochastic Modeling in Business and Industry in the Era of Machine Learning and Artificial Intelligence? Appl. Stoch. Models Bus. Ind. 2025, 41, e70004.

- Geddes, P. Civics: As applied sociology. In Start of the Project Gutenberg Ebook 13205; 1904; Volume 1, pp. 100–118. Available online: https://www.gutenberg.org/files/13205/13205-h/13205-h.htm (accessed on 20 October 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kenett, R.S. Bayesian Network Applications in Decision Support Systems. Mathematics 2025, 13, 3484. https://doi.org/10.3390/math13213484
