1. Introduction

Extreme climate events pose significant threats to both natural and human systems. Such events are expected to exemplify the impacts of climate change. These climatic shifts contribute to increasing rainfall intensity and frequency, irregular monsoon patterns, and more severe and prolonged droughts in some areas [1]. Rainfall data serve as a critical component for analyzing the spatial and temporal patterns of precipitation changes in response to climate change. Such analysis facilitates water resources planning, rainfall-runoff forecasting, and reservoir operation. Rain gauge networks are typically established to measure rainfall directly, capturing both the spatial and temporal variability of precipitation within a catchment area. Given the inherently high variability of rainfall and its complex spatial distribution, a well-designed rain gauge network is important for cost-effective operation and maintenance and for the overall accuracy of rainfall data. A wide range of techniques has been proposed for the optimal design of rain gauge networks. These primarily include statistical methods [2,3], entropy-based techniques [4,5,6], satellite-based remote sensing technologies [7,8], machine learning [9,10], and optimization approaches.

Among them, statistical methodologies constitute some of the most extensively developed approaches for rain gauge network design. These include copula-based methods, cross-correlation analysis, generalized least squares, and variance reduction techniques. Kriging-based geostatistical methods, in particular, are the most widely applied for both the design and evaluation of rain gauge networks. Earlier studies employed kriging on its own because of its well-balanced combination of flexibility and statistical optimality. As the Best Linear Unbiased Estimator (BLUE), kriging attains the minimum estimation-error variance among linear estimators that satisfy the unbiasedness condition. This property makes it particularly effective in identifying stations within specific regions that do not substantially contribute to reducing the estimation variance. It also provides guidance for the strategic placement of additional stations at selected locations to minimize the overall estimation error. Kassim and Kottegoda [11] compared simple kriging and disjunctive kriging in selecting the optimal configuration of rain gauges over an area of approximately 1800 square kilometers surrounding Birmingham, United Kingdom, using rainfall data from 13 stations recorded during storm events. Adhikary et al. [12] employed the variance reduction method within the framework of ordinary kriging (OK), utilizing standard parametric variogram functions for the design of a rain gauge network in the Middle Yarra River catchment in Victoria, Australia. Their study was further extended through the application of ordinary cokriging and kriging with external drift (KED), incorporating digital elevation data as a secondary variable, to interpolate monthly rainfall in two Australian catchments [13]. Both models outperformed deterministic interpolation methods.

Another advancement of kriging within the context of optimal network design lies in its integration with optimization techniques. In such a framework, the kriging variance can be employed as an objective function or performance metric, enabling more effective and systematic network optimization. Because station design problems are often complex, large-scale, and nonlinear, conventional and simple heuristic optimization methods are generally unsuitable. Consequently, metaheuristic approaches such as particle swarm optimization, genetic algorithms (GA), and simulated annealing (SA) are commonly used to address these challenges. Aziz et al. [14] applied a particle swarm optimization technique integrated with geostatistics to design a rain gauge network in Johor State. The approach minimized the kriging variance while considering several variables, including rainfall, elevation, humidity, solar radiation, temperature, and wind speed. Attar et al. [15] combined the Artificial Bee Colony (ABC) algorithm with block OK to design a rain gauge network in the southwestern part of Iran. A key focus of their study was to mitigate the curse of dimensionality via a hybrid framework that combines various objective functions with the ABC algorithm. Bayat et al. [16] introduced a two-phase methodology to analyze the spatiotemporal structure of precipitation within the Namak Lake watershed in central Iran, based on three decades of data collected from 105 rainfall monitoring stations. In the initial phase, entropy was employed as a decision-making tool to determine the optimal number of rain gauge stations required for effective coverage. The subsequent phase coupled GA with geostatistical approaches, namely OK and Bayesian maximum entropy, to optimize the spatial configuration of the rain gauge network. This optimization process was guided by three objective functions: minimization of the expected estimation variance, minimization of the mean squared error, and maximization of the coefficient of determination ($R^2$). Pardo-Igúzquiza [17] combined the kriging method with SA to derive an optimal rain gauge network configuration. The objective function was formulated based on two key criteria: the kriging variance estimated from synthetic rainfall datasets and the associated economic cost. The optimization process addressed two scenarios: the optimal selection of a subset of the existing stations and the optimal augmentation of the current network. SA was also employed by Wadoux et al. [18]. They extended the geostatistical interpolation of rainfall data through the use of the KED model by merging rain gauge and radar data in the north of England for a one-year period. The rain gauge sampling design was optimized by minimizing the space-time average KED variance. Omer et al. [19] compared SA and GA coupled with OK and universal kriging (UK) based on data from more than 90 stations over the period from 1998 to 2019. SA yielded a lower average kriging variance (AKV) than GA, both when adding optimal locations to the monitoring network and when removing redundant ones.

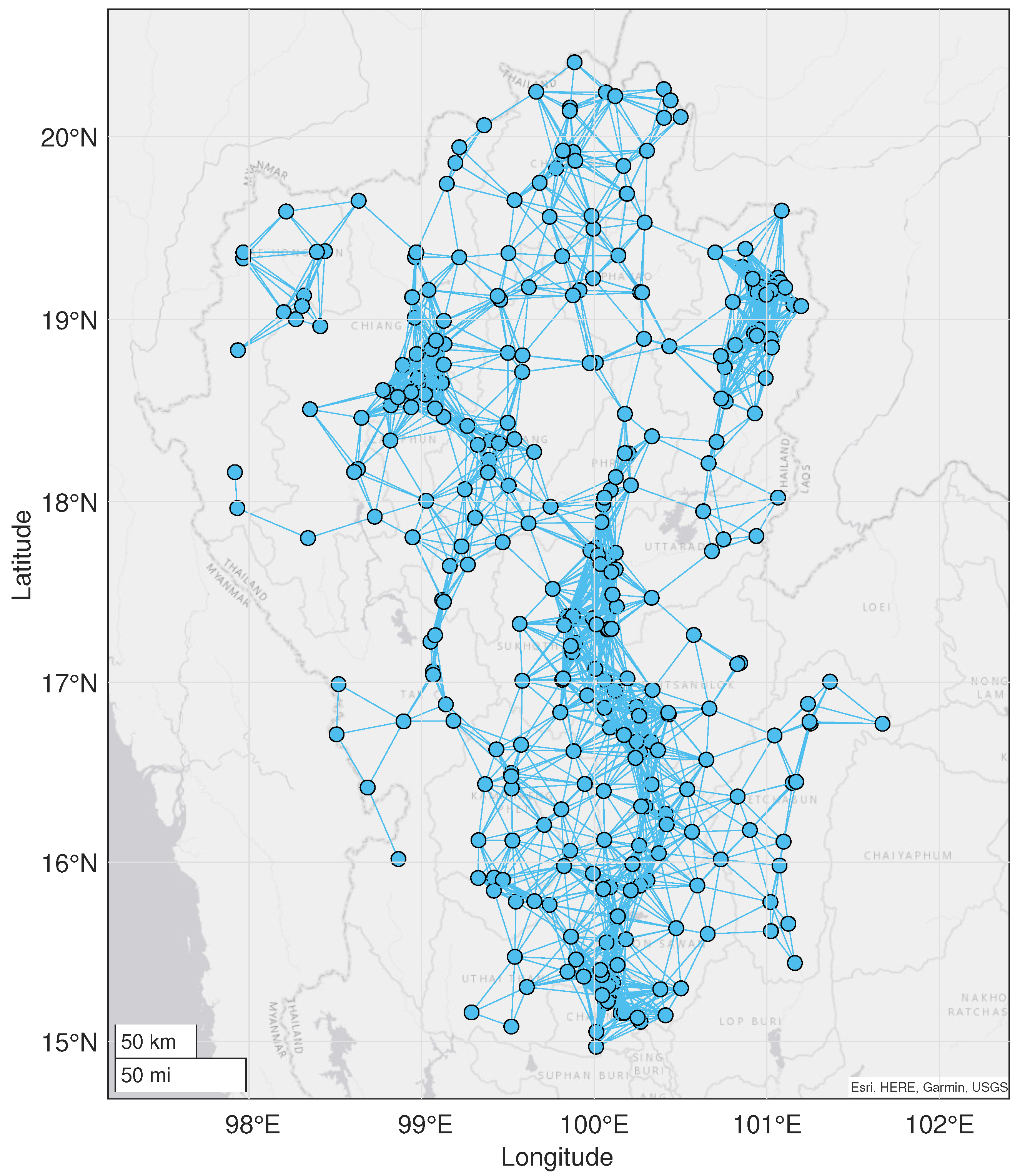

From another perspective on station reduction, the station network can be represented within a graph-based framework. Donges et al. [20] and Scarsoglio et al. [21] investigated the placement of monitoring stations in climate networks, where nodes correspond to stations and edges capture the relationships between station pairs. Their studies used graph representations solely for descriptive purposes, rather than as a means of facilitating station reduction. Several studies have also explored the integration of correlation analysis with graph-theoretic approaches for various applications related to climate patterns. Building upon the concept of correlation-based graph construction, Ebert-Uphoff and Deng [22] introduced correlation graphs that synthesize traditional graph structures with correlation coefficients derived from climate data. This foundational work was further developed in Ref. [23], which used correlation matrices as weight matrices for connected graphs, leading to the identification of small-world network properties within the underlying data structure. In 2009, Sukharev et al. [24] applied k-means clustering and graph partitioning algorithms to analyze correlation structures of variable pairs through pointwise correlation coefficients and canonical correlation analysis. Traditional graph approaches often depend on correlation coefficients, which may fail to capture the full structural complexity and hierarchy of networks. Because these methods typically cluster nearby stations exhibiting similar meteorological patterns, they may overlook deeper, less apparent interrelationships. To date, numerous studies have employed graph-based approaches primarily to represent various types of networks, particularly correlation or climate networks. Clustering techniques have also been frequently used to identify influential nodes or to extract specific network properties. However, a noteworthy gap remains in the existing research: fundamental graph-theoretic concepts, such as connectedness and centrality, have not been systematically integrated into station design. Applying these concepts can help preserve the continuity of spatial information across the domain when certain stations are removed.

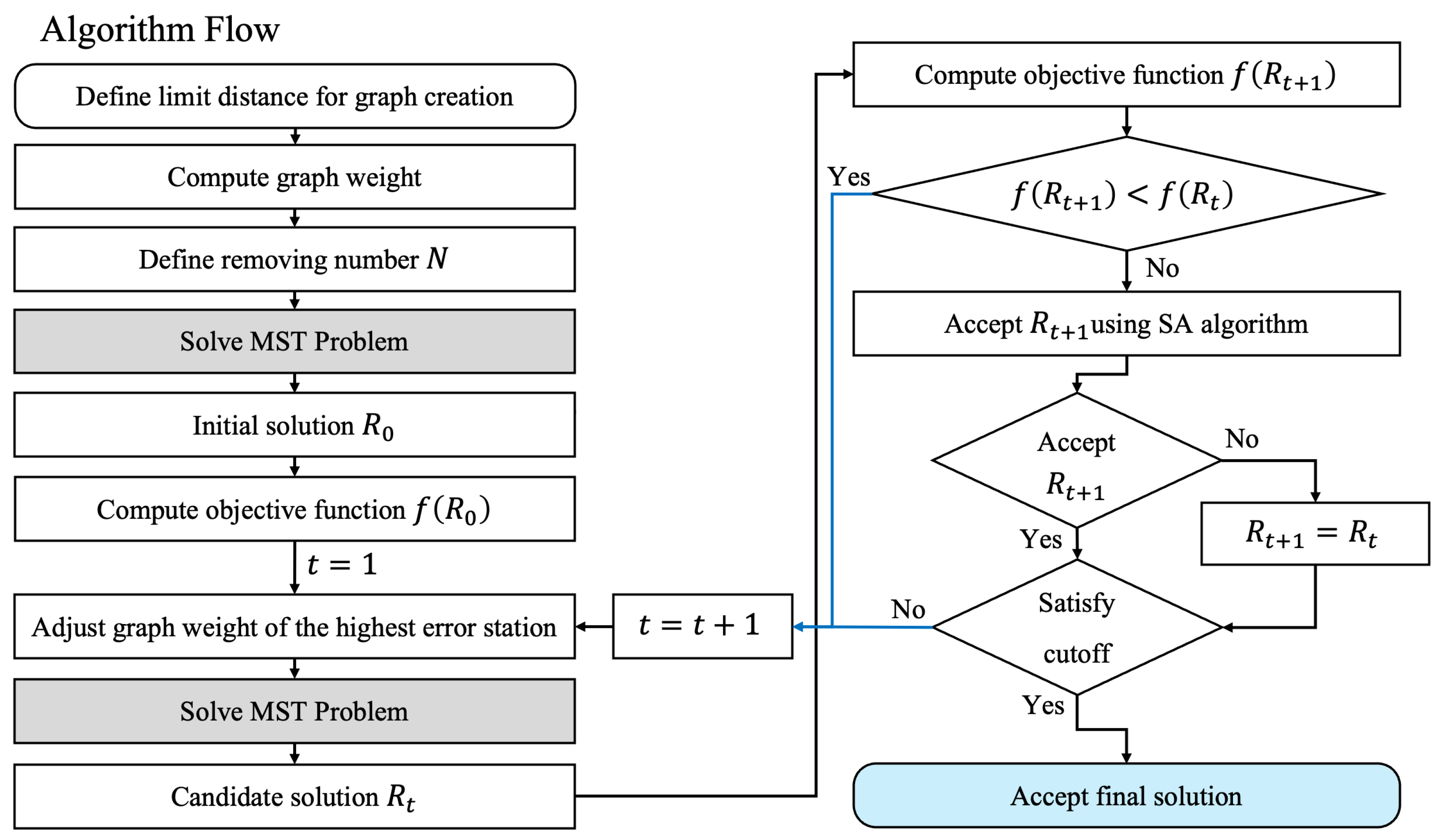

The primary objective of this research is to augment the SA optimization technique with graph theory, using the AKV as the objective function. In the preprocessing stage, potential station removals are determined via the minimum spanning tree (MST) problem, where the importance of each station within the network is assessed using graph-theoretic weights. Instead of relying solely on correlation coefficients to compute these weights, we incorporate centrality analysis by including both the clustering coefficient and betweenness centrality. The clustering coefficient quantifies the local interconnectedness of a node’s neighbors, whereas the betweenness centrality measures the frequency with which a station appears on the shortest paths between other nodes in the network. To evaluate performance, monthly rainfall data from 317 stations across northern Thailand over the period 2012–2022 were employed, with 9 years (2012–2020) allocated for training and 2 years (2021–2022) for validation.

The remainder of this paper is organized as follows. Section 2 describes the study area and the data utilized for the analysis. Section 3 provides a description of techniques related to our proposed method. Section 4 presents the detailed algorithm of the hybrid MST-SA approach with adaptive graph weighting. In Section 5, the performance of our proposed method is discussed. Conclusions of this study are drawn in Section 6. A list of all variables and parameters is provided in Table 1.

2. Study Area and Data

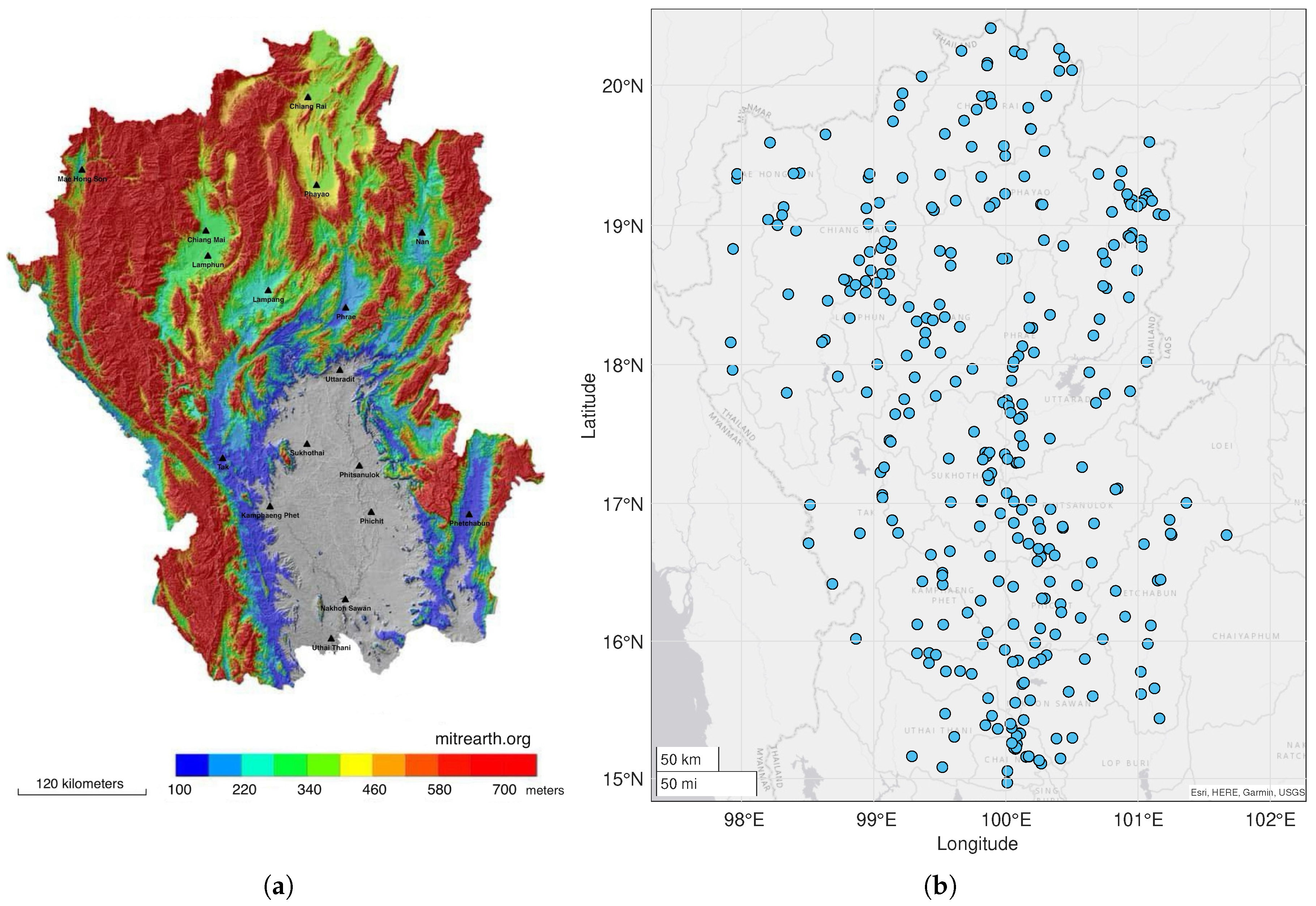

Our study domain is the northern region of Thailand, which lies between latitudes 14°56′17″–20°27′5″ North and longitudes 97°20′38″–101°47′31″ East and covers 17 provinces with an area of 172,277 km². The northern region of Thailand can be divided into upper and lower parts. Topographically (see Figure 1a, adapted from www.mitrearth.org), the upper north is a mountainous zone dominated by north–south oriented ranges. Between these ranges lie fertile intermontane basins and river valleys, which give rise to four principal tributaries (Ping, Wang, Yom and Nan) that form the headwaters of the Chao Phraya River in central Thailand. In contrast, the lower north serves as a transition zone between the rugged highlands of the upper north and the flat central plain, characterized by broad river valleys and extensive floodplains.

Annual rainfall across this region is highly heterogeneous, with between 70% and 80% of the annual total occurring during the wet season from May to September. This rainfall is influenced by the Intertropical Convergence Zone (ITCZ) and the monsoon trough, which dominate during the wet season. Two primary mechanisms account for most of the rainfall: the southwest monsoon, which prevails from mid-May to early June, and westward-propagating tropical storms originating in the South China Sea and the western Pacific, which reach their peak in September. Notably, rainfall associated with tropical storms contributes nearly 70% of the total rainfall in Thailand during September [25].

The monthly data used in this study, comprising rainfall, humidity, atmospheric pressure, and temperature, were obtained from the Thai Meteorological Department (TMD) and the National Hydroinformatics and Climate Data Center, developed by the Hydro-Informatics Institute (HII). To mitigate the effects of misleading correlations that may arise from prolonged periods without rainfall, our analysis focuses on wet-season characteristics (April–October) for the years 2012–2022. Within the 11-year dataset, the first 9 years serve as the training dataset, while the final 2 years are used as the interpolation testing set. Each dataset was obtained from 317 monitoring stations, shown in Figure 1b, comprising both manually operated and automated stations. The frequent westward-propagating tropical storms have led to a high density of rain gauges on the eastern side of the study region. Another dense cluster is noticeable in the central area, where major cities and densely populated communities are situated. In contrast, the network is sparser along the western border adjacent to Myanmar, as this region is characterized by high mountain ranges and sparse population.

Table 2 presents the statistical summary of the mean daily rainfall for each month across 317 rain gauges. Throughout the seven-month study period spanning 11 years, higher monthly mean daily rainfall values were observed from July to September, with the peak value of 159.21 mm recorded in August. In contrast, the lowest monthly mean daily rainfall, 58.31 mm, occurred in April. Moreover, the standard deviations of the mean daily rainfall data generally followed the means: higher mean values tended to correspond to higher standard deviations. All months in the study exhibit positive skewness, indicating that the distribution of rainfall values across the domain is right-skewed. This suggests that a small number of stations experience relatively high rainfall intensities compared to the majority of observations.

3. Preliminaries

This section presents fundamental concepts from graph theory, geostatistics, and optimization and their uses in this study. Topics include graph definitions, centrality measures, the minimum spanning tree, ordinary kriging, and simulated annealing.

3.1. Graph Definitions

Definition 1 (Ref. [26]). Consider an undirected and connected graph $G = (V, E)$, where $V$ is the set of vertices and $E$ is the set of edges. Let $w_{ij}$ denote the weight of the edge between nodes $i$ and $j$, where $(i, j) \in E$. Let $T$ be a spanning tree of $G$. A tree $T^{*}$ is said to be a minimum spanning tree (MST) if its total edge weight is minimized among all possible spanning trees of $G$, i.e.,

$$T^{*} = \arg\min_{T} \sum_{(i,j) \in T} w_{ij}.$$

Definition 2 (Ref. [27]). A set of vertices is a cut set if its deletion increases the number of connected components in a graph. The cut set of graph G is denoted . A cut set refers to a set of nodes whose removal results in the division of a connected graph into two or more disconnected components. Examining cut sets enables the identification of critical links or stations within the monitoring network, highlighting points where failure could disrupt the overall connectivity and significantly hinder data collection or communication.
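As a small illustration of Definition 2, the sketch below checks whether deleting a given set of vertices disconnects a connected graph. The dictionary-of-sets graph format and the function names `is_connected` and `is_cut_set` are assumptions made for this example and are not taken from the paper.

```python
def is_connected(adj, removed=frozenset()):
    """Depth-first check that the graph stays connected after deleting `removed`."""
    remaining = [v for v in adj if v not in removed]
    if not remaining:
        return True
    seen, stack = {remaining[0]}, [remaining[0]]
    while stack:
        v = stack.pop()
        for w in adj[v]:
            if w not in removed and w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen) == len(remaining)


def is_cut_set(adj, vertices):
    """True if deleting `vertices` splits a connected graph into several components."""
    return is_connected(adj) and not is_connected(adj, frozenset(vertices))


# Toy chain of three stations A - B - C: only the middle one is critical.
chain = {"A": {"B"}, "B": {"A", "C"}, "C": {"B"}}
print(is_cut_set(chain, {"B"}), is_cut_set(chain, {"A"}))
```

In a station network, a single-vertex cut set corresponds to a station whose removal would break the graph into separate parts.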

Definition 3 (Ref. [28]). The clustering coefficient of vertex $v$, denoted by $C(v)$, is defined as the number of edges between the vertices within the immediate neighborhood of the vertex divided by the number of all possible edges between them. It can be computed by

$$C(v) = \frac{2 e_v}{k_v (k_v - 1)},$$

where $e_v$ is the number of edges between neighbors of $v$ and $k_v$ is the number of edges that connect to vertex $v$. The clustering coefficient is a metric assessing how strongly nodes in a graph tend to form clusters. It reflects the probability that two neighbors of a given node are also directly connected to each other. A low clustering coefficient signifies the sparse local connectivity of a node, indicating that the node’s neighbors are poorly connected to one another, thereby reducing the node’s embeddedness within densely interconnected substructures.
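The local clustering coefficient can be computed directly from its definition. The following is a minimal sketch for an unweighted, undirected graph stored as a dictionary of neighbor sets; that storage format, the toy graph, and the convention of returning 0 for vertices with fewer than two neighbors are assumptions of this example.

```python
from itertools import combinations


def clustering_coefficient(adj, v):
    """Local clustering coefficient of vertex v; adj maps vertex -> set of neighbours."""
    neighbours = adj[v]
    k = len(neighbours)
    if k < 2:
        return 0.0  # assumed convention when no neighbour pair exists
    # Count the edges that join two neighbours of v.
    links = sum(1 for a, b in combinations(neighbours, 2) if b in adj[a])
    return 2.0 * links / (k * (k - 1))


# Toy network: A, B, C form a triangle and D is attached to A only.
toy = {
    "A": {"B", "C", "D"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": {"A"},
}
print(clustering_coefficient(toy, "A"))  # one of three possible neighbour pairs is linked
```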

Definition 4 (Ref. [29]). The betweenness of vertex $v$, denoted by $B(v)$, is defined as the proportion of all shortest paths between every pair of vertices that pass through the given vertex $v$. It can be written as

$$B(v) = \sum_{i \neq j \neq v} \frac{\sigma_{ij}(v)}{\sigma_{ij}},$$

where $i$ and $j$ are two distinct vertices of $G$ not equal to $v$, $\sigma_{ij}(v)$ is the number of shortest paths from $i$ to $j$ that pass through $v$, and $\sigma_{ij}$ is the total number of shortest paths from $i$ to $j$. Betweenness centrality measures how frequently a node appears on all shortest paths between other pairs of nodes. Nodes with high betweenness are vital for facilitating the flow of information throughout the network, as they frequently serve as bridges along crucial communication routes.
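For an unweighted graph, betweenness can be accumulated with breadth-first searches in the style of Brandes' algorithm. The sketch below is an illustrative implementation under that assumption. It counts each unordered pair of vertices once and applies no normalization, which may differ from the convention used in the paper.

```python
from collections import deque


def betweenness(adj):
    """Unnormalised betweenness of every vertex; adj maps vertex -> set of neighbours."""
    score = {v: 0.0 for v in adj}
    for s in adj:
        # Breadth-first search from s, counting shortest paths (sigma).
        order = []
        preds = {v: [] for v in adj}
        sigma = {v: 0 for v in adj}
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Back-propagate pair dependencies, farthest vertices first.
        delta = {v: 0.0 for v in adj}
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Every unordered pair was visited from both of its endpoints.
    return {v: b / 2.0 for v, b in score.items()}


# Path A - B - C - D: the two interior stations carry all through-traffic.
path = {"A": {"B"}, "B": {"A", "C"}, "C": {"B", "D"}, "D": {"C"}}
print(betweenness(path))
```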

The clustering coefficient and betweenness together offer valuable insights into the significance of various locations and connections within the weather monitoring network, thereby aiding in the identification of optimal sites for station deployment. This complementary combination allows our optimization framework to simultaneously manage global connectivity (through betweenness) and local redundancy (through the clustering coefficient), which cannot be achieved by a single metric alone.

Definition 5 (Ref. [30]). Given an undirected graph $G$, the line graph $L(G)$ has the edges of $G$ as its vertices, i.e., $V(L(G)) = E(G)$. Two vertices in $L(G)$ are adjacent if and only if the corresponding edges in $G$ share a common endpoint. A line graph is a transformed representation of the original graph where edges are converted to nodes, enabling the analysis of edge properties. By applying centrality measures to line graphs, we can assess the importance of edges, i.e., the relationships between nodes in the original graph.
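A line graph can be built by treating every edge as a node and linking two such nodes whenever the underlying edges share an endpoint. The sketch below assumes the graph is given as a list of vertex pairs; the function name `line_graph` and the toy data are illustrative only.

```python
from itertools import combinations


def line_graph(edges):
    """Adjacency sets of L(G) for an undirected graph G given as an edge list."""
    nodes = [tuple(sorted(e)) for e in edges]
    adj = {e: set() for e in nodes}
    for e, f in combinations(nodes, 2):
        if set(e) & set(f):  # the two edges of G share an endpoint
            adj[e].add(f)
            adj[f].add(e)
    return adj


# Star centred on A, plus one extra edge B - C.
lg = line_graph([("A", "B"), ("A", "C"), ("A", "D"), ("B", "C")])
print(sorted(lg[("A", "D")]))
```

Any vertex-based centrality routine can then be applied to the returned adjacency structure to score the edges of the original graph.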

3.2. Minimum Spanning Tree Problem

The MST problem aims to find a subset of edges in a connected and undirected graph $G = (V, E)$ that connects all vertices together, without any cycles and with the minimum possible total edge weight. The common formulation of the MST problem can be written as an integer linear program as follows:
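One widely used integer linear programming form of the MST problem is the subtour-elimination formulation sketched below. It is offered as a standard textbook form consistent with the description in this subsection; the notation is assumed, and the constraints numbered (5) and (6) in the authors' original display may be written differently (for example, with cut-set constraints).

```latex
\begin{aligned}
\min_{x}\quad & \sum_{(i,j)\in E} w_{ij}\, x_{ij} \\
\text{s.t.}\quad & \sum_{(i,j)\in E} x_{ij} = |V| - 1, \\
& \sum_{\substack{(i,j)\in E \\ i \in S,\ j \in S}} x_{ij} \le |S| - 1
  \qquad \forall\, S \subset V,\ S \neq \emptyset, \\
& x_{ij} \in \{0, 1\} \qquad \forall\, (i,j) \in E.
\end{aligned}
```

The cardinality constraint fixes the number of selected edges at $|V| - 1$, and the subset constraints forbid cycles; together they force the selected edges to form a connected spanning tree.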

Each edge $(i, j) \in E$ has a weight $w_{ij}$. We define binary decision variables $x_{ij}$, where $x_{ij} = 1$ if edge $(i, j)$ is selected for the tree and $x_{ij} = 0$ otherwise. Constraints (5) and (6) keep the graph as a tree and ensure it remains connected, respectively.

The application of the MST problem to node selection is appropriate when the objective is to maintain connectivity while minimizing the total edge weight.
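In practice, the MST is usually obtained with a greedy algorithm rather than by solving the integer program. The following sketch uses Kruskal's algorithm with a union-find structure; it illustrates the MST concept in general and is not necessarily the solver used in this study. The integer vertex labels and `(weight, i, j)` edge tuples are assumptions of the example.

```python
def kruskal_mst(n, edges):
    """Kruskal's algorithm. Vertices are 0..n-1; edges are (weight, i, j) tuples."""
    parent = list(range(n))

    def find(u):
        # Union-find root lookup with path halving.
        while parent[u] != u:
            parent[u] = parent[parent[u]]
            u = parent[u]
        return u

    tree = []
    for w, i, j in sorted(edges):
        ri, rj = find(i), find(j)
        if ri != rj:  # adding (i, j) does not close a cycle
            parent[ri] = rj
            tree.append((w, i, j))
    return tree


# Four stations with hypothetical edge weights.
edges = [(4.0, 0, 1), (1.0, 1, 2), (3.0, 0, 2), (2.0, 2, 3), (5.0, 1, 3)]
mst = kruskal_mst(4, edges)
print(mst, sum(w for w, _, _ in mst))
```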

3.3. Ordinary Kriging

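Suppose that $Z(\mathbf{s})$ is a spatial random process over a spatial domain $D$ and $\mathbf{s} \in D$. Let $\{Z(\mathbf{s}_1), \ldots, Z(\mathbf{s}_n)\}$ be a collection of samples at observed locations $\mathbf{s}_1, \ldots, \mathbf{s}_n$. The estimate $\hat{Z}(\mathbf{s}_0)$ at a non-visited site $\mathbf{s}_0$ can be expressed as a linear combination of the $n$ measurements,

$$\hat{Z}(\mathbf{s}_0) = \sum_{i=1}^{n} \lambda_i Z(\mathbf{s}_i),$$

where $\lambda_i$ represents the kriging weight assigned to $Z(\mathbf{s}_i)$.

The OK approach assumes intrinsic stationarity, in which the expected value of the difference between $Z(\mathbf{s})$ and $Z(\mathbf{s} + \mathbf{h})$ is zero, and the variance of this difference is determined by the lag vector $\mathbf{h}$. Moreover, the kriging variance, which is the variance of the estimation error, can be formulated using the semivariogram $\gamma$, which describes the spatial dependence structure among the sampled data points. It is given by [31]

$$\sigma^2(\mathbf{s}_0) = 2\sum_{i=1}^{n} \lambda_i \gamma(\mathbf{s}_i - \mathbf{s}_0) - \sum_{i=1}^{n}\sum_{j=1}^{n} \lambda_i \lambda_j \gamma(\mathbf{s}_i - \mathbf{s}_j). \tag{10}$$

By employing the Lagrange multiplier technique on Equation (10) under the unbiasedness constraint $\sum_{i=1}^{n} \lambda_i = 1$, we obtain

$$\sigma^2(\mathbf{s}_0) = \sum_{i=1}^{n} \lambda_i \gamma(\mathbf{s}_i - \mathbf{s}_0) + \mu,$$

where $\mu$ denotes the Lagrange multiplier, and the kriging weights can be computed from [32]

$$\sum_{j=1}^{n} \lambda_j \gamma(\mathbf{s}_i - \mathbf{s}_j) + \mu = \gamma(\mathbf{s}_i - \mathbf{s}_0), \quad i = 1, \ldots, n, \qquad \sum_{j=1}^{n} \lambda_j = 1,$$

with the semivariogram estimated empirically by

$$\hat{\gamma}(\mathbf{h}) = \frac{1}{2N(\mathbf{h})} \sum_{k=1}^{N(\mathbf{h})} \left[ Z(\mathbf{s}_k) - Z(\mathbf{s}_k + \mathbf{h}) \right]^2,$$

where $N(\mathbf{h})$ represents the number of distinct pairs separated by the lag vector $\mathbf{h}$. Under the assumption of isotropy, the semivariogram estimator $\hat{\gamma}(\mathbf{h})$ depends only on the Euclidean distance $\lVert \mathbf{h} \rVert$. Based on the empirical semivariogram, the spatial continuity over the domain can be approximated using smooth parametric models, including exponential, spherical and Gaussian functions. In this study, we adopt the exponential model, whose parameters are nonnegative and are estimated by the least squares method.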
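To make the kriging system concrete, the sketch below solves it for a handful of two-dimensional points and returns the estimate, the kriging variance, and the weights. It is a minimal illustration only: the exponential semivariogram is written without a nugget as `sill * (1 - exp(-h / rng))`, which is one common parameterization and may differ from the form fitted in this study, and the default parameter values are placeholders rather than fitted values. The helper names `exp_variogram`, `solve`, and `ordinary_kriging` are hypothetical.

```python
import math


def exp_variogram(h, sill=1.0, rng=10.0):
    """Exponential semivariogram without nugget (assumed parameterisation)."""
    return sill * (1.0 - math.exp(-h / rng))


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def ordinary_kriging(points, values, target, gamma=exp_variogram):
    """Return (estimate, kriging variance, weights) at `target`."""
    n = len(points)
    # Kriging system: semivariogram matrix bordered by the unbiasedness constraint.
    a = [[gamma(math.dist(points[i], points[j])) for j in range(n)] + [1.0]
         for i in range(n)]
    a.append([1.0] * n + [0.0])
    g0 = [gamma(math.dist(p, target)) for p in points]
    sol = solve(a, g0 + [1.0])
    lam, mu = sol[:n], sol[n]
    estimate = sum(l * z for l, z in zip(lam, values))
    variance = sum(l * g for l, g in zip(lam, g0)) + mu
    return estimate, variance, lam


# Two hypothetical gauges and a target halfway between them.
pts = [(0.0, 0.0), (2.0, 0.0)]
est, var, lam = ordinary_kriging(pts, [10.0, 20.0], (1.0, 0.0))
print(est, var, lam)
```

Averaging the returned variance over a grid of target locations gives a quantity of the same kind as the AKV used as the objective function.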

3.4. Simulated Annealing

The SA technique is a probabilistic heuristic optimization method introduced by Kirkpatrick et al. [33] and Černý [34]. The algorithm is inspired by the metallurgical process of annealing, wherein a material is rapidly heated and subsequently cooled gradually until it attains the ground state. Throughout this process, the atoms within the metal rearrange themselves toward a configuration that minimizes the system’s energy. In the optimization analogy, the atomic or molecular structure represents the configuration of sampling points, while the objective function reflects the energy level of the system. The SA algorithm aims to avoid entrapment in a local optimum by applying the Metropolis criterion and generating new candidate solutions in the vicinity of the current solution. The algorithm’s performance is significantly influenced by the selection of the cooling schedule and the neighborhood structure. The SA algorithm based on the linear cooling schedule is detailed in Algorithm 1.

| Algorithm 1 Simulated Annealing |
|---|
| Initialize the solution $x$ and the initial temperature $T$ |
| Set the cooling rate $\alpha$ |
| while termination is not satisfied do |
| Generate a candidate solution $x'$ |
| Calculate the change in energy $\Delta E$ |
| if $\Delta E < 0$ then |
| Accept the candidate solution |
| else |
| Accept with probability $\exp(-\Delta E / T)$ |
| end if |
| Update the current solution |
| Update the temperature |
| end while |
| Return the optimal solution |
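The steps of Algorithm 1 can be sketched in a few lines of Python. The version below is illustrative only: it assumes a multiplicative temperature update `T <- alpha * T` and a temperature threshold as the termination test, neither of which is confirmed by the text, and the toy objective and neighbor move stand in for the AKV and the station-swap move of the actual problem.

```python
import math
import random


def simulated_annealing(objective, neighbour, x0, t0=1.0, alpha=0.95,
                        t_min=1e-4, seed=0):
    """Minimise `objective`, cooling by T <- alpha * T (assumed schedule)."""
    rng = random.Random(seed)
    current, f_cur = x0, objective(x0)
    best, f_best = current, f_cur
    t = t0
    while t > t_min:
        cand = neighbour(current, rng)
        f_cand = objective(cand)
        delta = f_cand - f_cur
        # Metropolis criterion: always accept improvements,
        # accept worse moves with probability exp(-delta / T).
        if delta < 0 or rng.random() < math.exp(-delta / t):
            current, f_cur = cand, f_cand
            if f_cur < f_best:
                best, f_best = current, f_cur
        t *= alpha
    return best, f_best


def toy_objective(x):
    """Hypothetical energy function with its minimum at x = 3."""
    return (x - 3) ** 2


def toy_step(x, rng):
    """Hypothetical neighbourhood: move one unit left or right."""
    return x + rng.choice((-1, 1))


best, f_best = simulated_annealing(toy_objective, toy_step, x0=10)
print(best, f_best)
```

With `alpha` closer to 1 the temperature falls more slowly, so the loop runs for more iterations and accepts uphill moves for longer.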

6. Conclusions

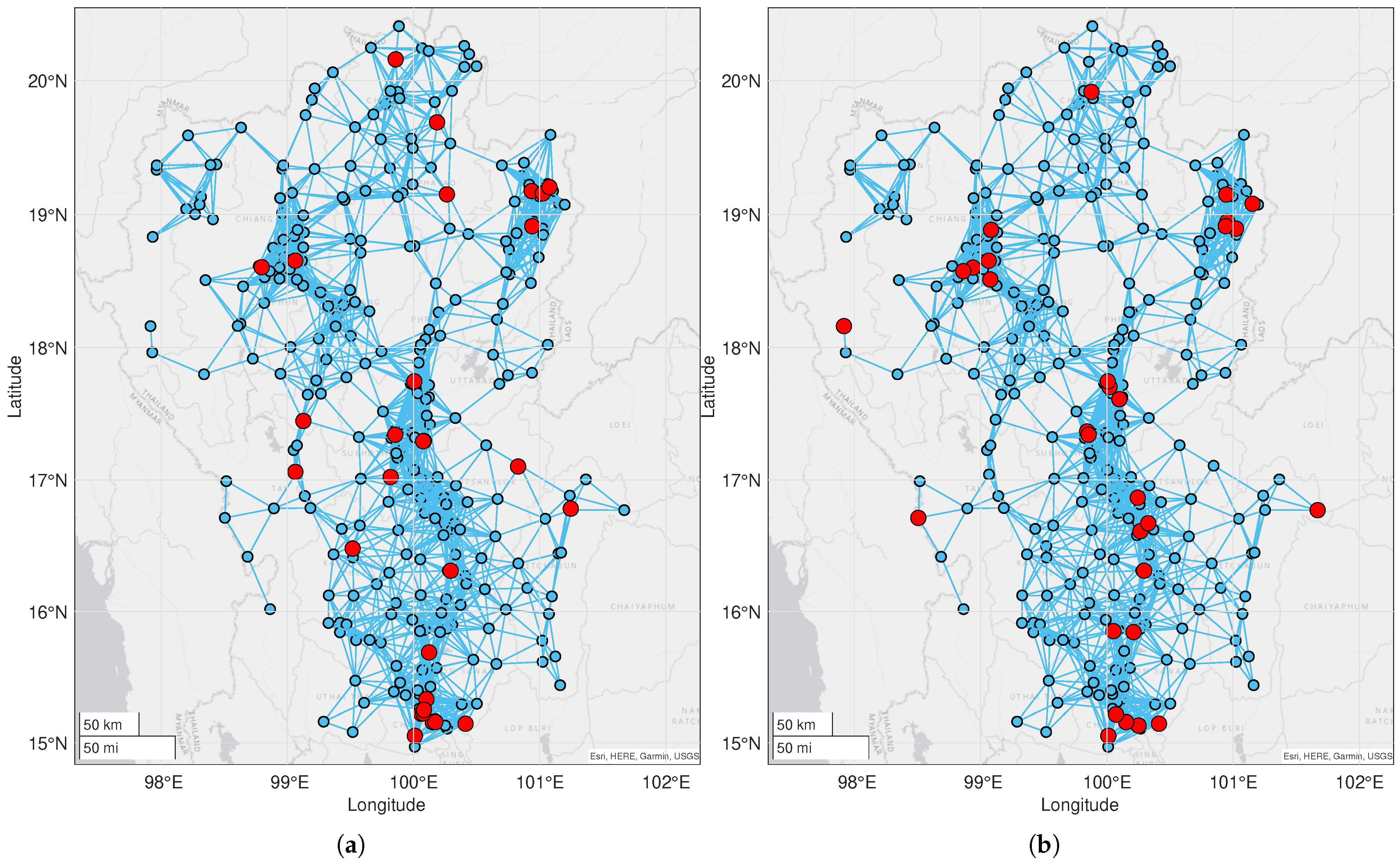

Previous studies on optimal station network design have primarily focused on correlation-based graph methods to assess data similarity, without explicitly accounting for the underlying network structure. The main objective of this study is to incorporate centrality measures with correlation analysis within a graph-based optimization framework for monitoring network reduction. The comparative performance of correlation-based and centrality-enhanced approaches is demonstrated for the rain gauge network in northern Thailand over the period 2012–2022. The proposed method consistently attains optimal solutions with faster convergence across all scenarios compared to those obtained solely through correlation-based analysis. Notably, in cases involving the removal of 15, 20, 25, and 30 stations, only the centrality-integrated approaches achieved optimal solutions within the limited number of computational iterations. This finding underscores the importance of incorporating centrality measures, particularly as the number of removed stations increases. Furthermore, the cooling rate sensitivity analysis confirms the importance of centrality integration across different algorithmic parameters. A higher cooling rate typically results in a larger number of iterations before the algorithm converges. However, in certain instances, the SA method may accept suboptimal solutions during the search, which adds to the overall computational cost. This suggests that slower temperature decreases enhance the algorithm’s ability to explore a broader solution space.

By combining betweenness centrality and the clustering coefficient, the approach enables balanced node selection that offers additional structural insights into node importance within the network. At the same time, it preserves structural connectivity and ensures comprehensive spatial representation, resulting in distributed removal patterns that mitigate the coverage gaps often encountered in traditional methods. The comparison in Figure 5 reveals that the centrality-integrated approach (Figure 5a) selects removal nodes distributed across the network coverage area and thereby avoids isolating nodes within the network. In contrast, when centrality is not considered (Figure 5b), the removed stations tend to cluster in the central part of the map, with additional removals occurring at locations distant from these clusters. To ensure well-distributed coverage of the monitoring stations across the study area, the trade-off parameter should be set to a value that does not exclude the centrality term.

In addition to supporting cost-effective operation and maintenance, the proposed method also facilitates water resource management planning through dynamic, topology-aware scenarios for network contraction and expansion. This helps ensure that the network remains informative and connected even when stations are added or removed. Moreover, government agencies responsible for managing monitoring stations, such as the Department of Water Resources, the TMD, and the Pollution Control Department, can leverage this approach to plan and adjust monitoring networks in response to environmental changes and budgetary constraints. This approach can also enhance data connectivity and integration, enabling more effective analysis and supporting sustainable water resource management. Despite the favorable results of this study, a common limitation of hybrid approaches lies in the requirement for an optimally tuned weighting parameter. Future research should therefore explore adaptive weighting schemes, data-driven optimization algorithms, and the integration of artificial intelligence (AI) and machine learning techniques to enhance analytical capabilities and overall model performance. Extensions to dynamic networks and validation across diverse geographical and climatic contexts are also recommended to further demonstrate the practical applicability of the methodology. Additionally, more advanced kriging techniques should be explored to better match the specific characteristics of different variable types.