1. Introduction

Large language models achieve strong performance across a variety of linguistic tasks, but only at the cost of extensive training resources and massive data consumption. This raises a central question in model design: how can training efficiency be optimized under limited data conditions? One promising direction is curriculum learning, which organizes training data in a sequence of increasing task difficulty. This paradigm has been shown to benefit both machine learning and cognitive modeling [1,2], highlighting data ordering as an effective strategy for guiding model learning in a stable and progressive manner. A key challenge in this approach lies in defining a principled measure of curriculum difficulty, since difficulty effectively regulates the entropy of the training distribution over the course of training. The choice of difficulty metric can therefore critically determine how efficiently a model learns under limited data conditions.

To address this challenge, we introduce a readability-based function that organizes training inputs by difficulty using the Flesch Reading Ease (FRE) score [3]. FRE quantifies textual comprehensibility through a regression formula grounded in human reading behavior, providing a principled metric that reflects developmental learning stages. Drawing inspiration from child language acquisition, we note that children achieve remarkable linguistic competence from limited input [4,5,6], suggesting that curricula modeled on human learning trajectories may yield more data-efficient and cognitively grounded training methodologies [7].

This observation is supported by developmental evidence showing that children can infer grammatical structure and derive meaning from remarkably sparse input, acquiring core elements of syntax and morphology within the first few years of life, despite limited vocabulary and exposure [4,5,6]. Children reach this level of linguistic ability from relatively little input, especially compared to modern language models, which often require well over 10,000 words of training data for every single word a 13-year-old child has encountered [7]. This gap in learning efficiency highlights a critical opportunity: by modeling training trajectories after human development, we may move toward training methodologies that are not only more data-efficient, but also cognitively grounded and empirically motivated.

Thus, we propose a curriculum learning framework based on established readability metrics, which we call the Reading-level Guided Curriculum. Among readability indices, we employ the Flesch Reading Ease (FRE) score, a widely validated psycholinguistic measure closely associated with human reading development. Owing to its simplicity and interpretability, FRE provides a cognitively grounded and reproducible basis for structuring our Reading-level Guided Curriculum.

Furthermore, unlike large-scale models that primarily aim to maximize performance through massive data consumption, our study deliberately constrains the training data to a human-comparable scale. The goal is not to rival the raw performance of large models, but rather to test whether cognitively inspired training trajectories—analogous to human language acquisition under limited exposure—can yield efficient learning and competitive improvements even in data-restricted conditions.

Our results show that a curriculum guided by the psycholinguistic FRE score improves efficiency in low-resource settings while aligning with insights from human language development. This highlights the potential of our method as a structured and cognitively inspired training strategy for small language models. Our contributions can be summarized as follows:

We propose a curriculum that is substantially simpler and more interpretable than existing approaches.

By controlling for the confounding factor of large-scale data, we isolate pretraining methodology as the primary driver of improvement.

Unlike prior curriculum learning approaches that often yielded inconclusive results, our method produces clear and positive learning signals.

2. Related Works

2.1. Importance of Curriculum for Language Models

2.1.1. Curriculum Learning

Curriculum learning is a training strategy in which examples are presented in an organized order, from simpler to more complex, rather than randomly, allowing models to learn progressively as humans do [1].

The concept can also be understood as a form of continuation method. Instead of tackling a hard non-convex optimization problem directly, training begins with a simplified version of the problem and gradually shifts to the original one. This staged transition helps the model find better minima, achieving lower training loss and stronger generalization.

Formally, following Bengio et al. [1], let $s$ denote a sample drawn from the target distribution $P(s)$. At training stage $\lambda$ ($0 \le \lambda \le 1$), the reweighted distribution is defined as:

$$Q_\lambda(s) \propto W_\lambda(s)\,P(s) \quad \forall s,$$

where $s$ is a training sample; $P(s)$ is the original target distribution over samples; $W_\lambda(s)$, with $0 \le W_\lambda(s) \le 1$, is a weighting function that determines the importance of sample $s$ at stage $\lambda$; and $Q_\lambda(s)$ is the reweighted distribution at stage $\lambda$.

The weighting function $W_\lambda(s)$ increases monotonically with $\lambda$. At early stages, simpler data points are emphasized, while as $\lambda$ grows, increasingly complex examples are incorporated. At the final stage ($\lambda = 1$), $W_1(s) = 1$ for all $s$, so that $Q_1(s) = P(s)$, meaning that the model is trained on the full target distribution without any reweighting.

A curriculum is then characterized by the following two conditions. First, the entropy of the training distribution must increase monotonically over time:

$$H(Q_\lambda) < H(Q_{\lambda+\epsilon}) \quad \forall\, \epsilon > 0,$$

where $H(Q_\lambda)$ denotes the entropy of the reweighted data distribution $Q_\lambda$, which reflects the overall diversity of training samples at stage $\lambda$, and $\epsilon$ represents a small positive increment indicating the transition to a slightly later stage. This condition ensures that while early stages emphasize a narrow band of difficulty, later stages introduce broader diversity. Second, the weights of individual examples must also grow monotonically with $\lambda$:

$$W_{\lambda+\epsilon}(s) \ge W_\lambda(s) \quad \forall\, s,\ \forall\, \epsilon > 0,$$

where $W_\lambda(s)$ denotes the weighting function that determines the importance of each training sample $s$ at stage $\lambda$, and $\epsilon$ again represents a small positive increment indicating a transition to a subsequent stage. Once an example is included, its importance does not diminish; instead, more difficult examples are layered on top, enabling the learner to build upon earlier knowledge.
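To make the two conditions concrete, the following Python sketch builds $Q_\lambda(s) \propto W_\lambda(s)P(s)$ for a toy four-sample distribution and checks both conditions on a grid of stages. It is an illustration under stated assumptions rather than the formulation of Bengio et al. itself: the hard-threshold weighting `threshold_weights` (weight 1 once a normalized difficulty score falls at or below $\lambda$, 0 otherwise), the difficulty values, and the stage grid are all hypothetical.

```python
import math

def reweight(p, w):
    """Q_lambda(s) proportional to W_lambda(s) * P(s), renormalized to sum to 1."""
    unnorm = [wi * pi for wi, pi in zip(w, p)]
    z = sum(unnorm)
    return [u / z for u in unnorm]

def entropy(q):
    """Shannon entropy H(Q) in nats; zero-probability samples contribute nothing."""
    return sum(-x * math.log(x) for x in q if x > 0)

def threshold_weights(difficulty, lam):
    """Hypothetical W_lambda: weight 1 if normalized difficulty <= lambda, else 0."""
    return [1.0 if d <= lam else 0.0 for d in difficulty]

p = [0.25, 0.25, 0.25, 0.25]        # target distribution P(s) over four toy samples
difficulty = [0.1, 0.4, 0.7, 1.0]   # toy difficulty scores normalized to [0, 1]
stages = [0.25, 0.5, 0.75, 1.0]     # grid of lambda values

weights = [threshold_weights(difficulty, lam) for lam in stages]
dists = [reweight(p, w) for w in weights]
entropies = [entropy(q) for q in dists]

# Condition 1: entropy strictly increases from each stage to the next.
entropy_increases = all(a < b for a, b in zip(entropies, entropies[1:]))
# Condition 2: no sample's weight ever decreases between consecutive stages.
weights_grow = all(
    w_prev <= w_next
    for prev, nxt in zip(weights, weights[1:])
    for w_prev, w_next in zip(prev, nxt)
)
print(entropy_increases, weights_grow, dists[-1] == p)
```

On this grid the entropies are $0$, $\ln 2$, $\ln 3$, and $\ln 4$, and the final stage reproduces $P(s)$ exactly. A hard threshold satisfies the strict entropy condition only if each new stage actually admits new samples; soft weights in $[0, 1]$ are an equally admissible choice.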

In this way, curriculum learning provides a stable and intuitive framework that guides models to handle increasingly complex inputs, making it particularly well-suited for domains such as language modeling, where gradual acquisition plays a central role.

2.1.2. Linguistic Indicators in Curriculum Learning

Several studies have explored ways to leverage linguistic indicators for curriculum learning in order to improve model performance [8,9,10]. These works are often motivated by the intuition that, much like in human learning, models benefit from progressing from simpler inputs to more complex ones. What distinguishes this line of research from other curriculum methods is that difficulty is defined through linguistic indicators, which makes the curriculum not only an effective training strategy but also a linguistically meaningful one. While these studies share the same overarching motivation, they differ substantially in how linguistic difficulty is measured.

Oba et al. [9] operationalized difficulty through syntactic complexity, using measures such as dependency tree depth and the number of syntactic constituents to approximate stages of linguistic acquisition. While this syntactic approach offered a linguistically grounded perspective, the observed performance gains were relatively limited. Interestingly, later investigations [10] showed that even surface-level indicators such as sentence length and word rarity could yield improvements when used to design curricula. More recently, a multi-view curriculum framework drawing on more than 200 linguistic complexity indices was introduced in 2023 [8]. It showed meaningful gains across downstream tasks, underscoring the potential of linguistically rich curricula, but its reliance on such a large set of features made the framework less transparent and harder to apply.

These prior studies collectively demonstrate the promise of leveraging linguistic indicators for curriculum design, yet they also leave open questions about which indicators are most effective and how they should be operationalized. Syntactic-complexity-based curricula [9], while grounded in solid linguistic theory, translated into only modest performance gains in practice. At the other end of the spectrum, multi-view frameworks [8] that integrate hundreds of linguistic indices achieved stronger results but did so at the cost of interpretability and practical applicability, as their reliance on extensive feature sets made them cumbersome to adopt consistently. This tension suggests a broader gap: existing approaches either fall short in impact, oversimplify the notion of difficulty, or become too complex to implement reliably. Beyond such extremes, there is room to explore indicators that not only reflect surface-level statistics but also align more closely with cognitive perspectives on language learning.

Building on this view, we propose a Reading-level Guided Curriculum learning framework grounded in readability metrics that have been widely applied in educational and applied linguistic contexts. By relying on well-established readability measures, the framework keeps the training process computationally lightweight while remaining aligned with human intuitions about text difficulty. We investigate whether insights from human language development can promote more efficient learning dynamics in data-limited language models, regardless of model size or training scale.

2.2. Flesch Reading Ease Score

As discussed in the previous section, this study builds on insights from human developmental patterns and examines the Flesch Reading Ease (FRE) score as a readability-based measure that can serve as a cognitively grounded foundation. Among the many possible criteria, we focus on FRE for two main reasons: (1) it is an intuitive, quantitative metric that integrates sentence length and lexical complexity into a single interpretable value, and (2) it has been widely applied in educational contexts for English learners, aligning well with the philosophical goal of this work to treat language models as learning entities.

2.2.1. Interpretability Through Integration

The FRE score was originally designed to evaluate the accessibility of written English, integrating sentence length and lexical complexity into a single interpretable value. The metric was first developed using 363 passages from the McCall–Crabbs Standard Test Lessons in Reading, where children’s reading comprehension performance served as the foundation. The criterion, denoted as $C_{75}$, was defined as the average grade level at which children could correctly answer 75% of comprehension questions, providing a numerical benchmark for the grade level required to understand a given text.

To model the relationship between textual features and grade-level comprehension, a multiple regression analysis was conducted with two predictors: syllables per 100 words ($wl$) and average sentence length in words ($sl$). The resulting regression equation predicts $C_{75}$ as a linear function of $wl$ and $sl$, with positive coefficients on both predictors.

This indicates that texts with longer sentences and more syllabic words require higher grade levels to comprehend. To complement the regression analysis, Table 1 summarizes the descriptive statistics and pairwise correlations among the two predictors (syllables per 100 words and average sentence length) and the grade-level criterion.

As shown in the table, the correlation between the two predictors indicates a moderate positive association, meaning that passages with longer sentences also tend to contain more syllables per 100 words. Likewise, the correlations of each predictor with the criterion show that texts with more syllabic words and longer sentences tend to require higher grade levels.

Because this raw regression predicted grade levels rather than ease of reading, an additional transformation was introduced to yield an interpretable “ease” score. The predicted grade level was first multiplied by 10 to create a 0–100 scale (so that one point corresponds to one-tenth of a grade), and the signs of the predictors were reversed so that higher values would correspond to easier texts. The final FRE formula in terms of the original variables ($wl$, syllables per 100 words; $sl$, average sentence length in words) was:

$$\mathrm{FRE} = 206.835 - 0.846\,wl - 1.015\,sl.$$

As many implementations use average syllables per word ($\mathrm{ASW}$) rather than syllables per 100 words, the formula can equivalently be expressed as:

$$\mathrm{FRE} = 206.835 - 1.015\,\mathrm{ASL} - 84.6\,\mathrm{ASW},$$

where $\mathrm{ASL}$ denotes average sentence length (words per sentence) and $\mathrm{ASW}$ denotes average syllables per word.
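A minimal Python sketch of the ASL/ASW form is shown below. The syllable counter `count_syllables` is a rough vowel-group heuristic assumed here for illustration (Flesch counted syllables by hand, and readability libraries each apply their own rules), and the regex-based sentence and word splitting is likewise a simplification. The helper `fre_from_counts` evaluates the original per-100-words form, which agrees with the ASL/ASW form because $wl = 100 \times \mathrm{ASW}$ and $0.846 \times 100 = 84.6$.

```python
import re

def count_syllables(word: str) -> int:
    """Heuristic syllable count: vowel groups, minus a silent final 'e' (assumption)."""
    w = word.lower()
    n = len(re.findall(r"[aeiouy]+", w))
    if w.endswith("e") and not w.endswith("le") and n > 1:
        n -= 1  # "make" -> 1, while "table" keeps 2
    return max(n, 1)

def fre_from_averages(asl: float, asw: float) -> float:
    """FRE = 206.835 - 1.015 * ASL - 84.6 * ASW."""
    return 206.835 - 1.015 * asl - 84.6 * asw

def fre_from_counts(wl: float, sl: float) -> float:
    """Original form: wl = syllables per 100 words, sl = words per sentence."""
    return 206.835 - 0.846 * wl - 1.015 * sl

def fre(text: str) -> float:
    """Score a text unit (sentence or paragraph) with the ASL/ASW form."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    asl = len(words) / len(sentences)
    asw = sum(count_syllables(w) for w in words) / len(words)
    return fre_from_averages(asl, asw)
```

For example, `fre("The cat sat on the mat.")` sees six one-syllable words in one sentence, so ASL = 6, ASW = 1, and the score is $206.835 - 6.09 - 84.6 = 116.145$; very short, monosyllabic text can therefore score above 100.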

In summary, the FRE score is derived through three steps: (1) defining a comprehension-based criterion from children’s performance, (2) linking this criterion to textual length features via regression, and (3) transforming the predicted grade level into a 0–100 ease scale. This process shows how sentence length and lexical complexity can be integrated into a single interpretable measure, thereby demonstrating interpretability through integration.

2.2.2. Practicality for Controlling Text Difficulty

As discussed above, the FRE score, originally designed to assess the accessibility of written English, has been widely adopted in educational contexts, particularly for evaluating materials targeted at children and second-language learners. In educational practice, it is employed to evaluate school texts for consistency with children’s developmental levels [11], and its role extends to research on child language development, where FRE serves as an experimental tool for calibrating linguistic stimuli [12]. Studies on children’s reading comprehension and processing time often adopt FRE as a standardized scale for comparing textual difficulty [13].

While alternative readability or complexity indices such as syntactic depth, lexical sophistication, or the multi-view linguistic feature sets discussed in Section 2.1.2 have also been explored in prior curriculum learning studies, these approaches often require extensive feature extraction, yield less interpretable signals, or introduce computational overhead. Notably, a recent study [14] also included FRE among multiple difficulty metrics and found it to be one of the most effective indicators for improving training efficiency. The FRE score provides a single, transparent value that has been validated in both educational practice and psycholinguistic research. Its simplicity and interpretability make it particularly suitable for our goal of developing a lightweight yet cognitively motivated curriculum framework for small-model training.

3. Proposed Method

In this work, we extend the general curriculum learning framework introduced by Bengio et al. [1] by incorporating the FRE score as a measure of linguistic difficulty. We introduce a curriculum parameter $\lambda$ ($0 \le \lambda \le 1$), which controls the progression from easy to difficult inputs during training. At stage $\lambda$, the distribution is defined as:

$$Q_\lambda(s) \propto W_\lambda^{\mathrm{FRE}}(s)\,P(s),$$

where $s$ denotes a training unit (e.g., a sentence or a paragraph, depending on the experimental setting), $P(s)$ is the target distribution over such units, and $W_\lambda^{\mathrm{FRE}}(s)$ is a weighting function explicitly determined by the FRE score of $s$. Intuitively, $W_\lambda^{\mathrm{FRE}}$ prioritizes easier units at earlier stages and gradually introduces more complex ones, thereby making the progression of linguistic complexity explicit.

At lower values of $\lambda$, the weighting function favors sentences with higher FRE scores (i.e., easier sentences). As $\lambda$ increases, sentences with progressively lower FRE scores (i.e., more complex sentences) are incorporated. The progression of $\lambda$ thus corresponds to a transparent trajectory of increasing linguistic complexity:

$\lambda \approx 0$: the distribution emphasizes easier sentences.

$0 < \lambda < 1$: progressively more complex sentences are added.

$\lambda = 1$: the full target distribution is restored without any reweighting.

The mapping between FRE scores and the weighting function can be illustrated through a simple example. Consider three sentences of different difficulty levels: Sentence A (FRE = 90, easy), Sentence B (FRE = 70, medium), and Sentence C (FRE = 40, hard). At the early stage of training (small $\lambda$), the weighting function prioritizes high-FRE sentences, and thus only Sentence A is emphasized. As $\lambda$ increases to intermediate values, medium-level sentences such as Sentence B are also incorporated. Finally, at $\lambda = 1$, the full target distribution is recovered, and all sentences, including the more complex Sentence C, are included. This stepwise inclusion illustrates how the weighting function is explicitly determined by the FRE score: higher values are favored earlier in the curriculum, and progressively lower values are introduced as training advances.
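The stepwise inclusion in this example can be reproduced with a simple threshold rule. The sketch below is a hypothetical FRE-based weighting function, not necessarily the one used by the authors: a unit receives weight 1 once its FRE score reaches a threshold that slides linearly from the highest FRE in the data (at $\lambda = 0$) down to the lowest (at $\lambda = 1$), and weight 0 otherwise. The names `fre_weight` and `included` are illustrative.

```python
def fre_weight(fre_score, lam, fre_max, fre_min):
    """Hypothetical FRE-based weight: 1 once the unit is easy enough for stage lam, else 0."""
    # The inclusion threshold slides linearly from fre_max (lam = 0) to fre_min (lam = 1).
    threshold = fre_max - lam * (fre_max - fre_min)
    return 1.0 if fre_score >= threshold else 0.0

def included(units, lam):
    """Names of units with nonzero weight at stage lam."""
    hi, lo = max(units.values()), min(units.values())
    return [name for name, f in units.items() if fre_weight(f, lam, hi, lo) > 0]

sentences = {"A": 90.0, "B": 70.0, "C": 40.0}   # FRE scores from the example above
for lam in (0.0, 0.5, 1.0):
    print(lam, included(sentences, lam))
# 0.0 ['A']
# 0.5 ['A', 'B']
# 1.0 ['A', 'B', 'C']
```

Because the threshold only moves downward as $\lambda$ grows, a sentence that has been included keeps weight 1 at every later stage, and at $\lambda = 1$ every unit has weight 1, which recovers the full target distribution.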

Unlike prior cognitively motivated curricula that struggled with effectiveness or relied on complex heuristics, our formulation integrates FRE scores directly into the curriculum, providing a lightweight, principled, and interpretable mechanism for controlling linguistic difficulty during training.

By construction, the FRE-weighted distribution $Q_\lambda$ preserves the two central conditions of curriculum learning: (1) monotonic increase of entropy, since as $\lambda$ grows the curriculum expands to cover a broader range of FRE scores; and (2) monotonic growth of weights, since once easy examples are introduced, their relative importance does not diminish and harder examples are layered on top. We apply this formulation to various curriculum designs and configurations to investigate its effectiveness in supporting efficient training and enhancing small-model performance. Accordingly, the formulation offers a simple, effective, and interpretable approach to defining and regulating linguistic difficulty during training. The details of the experimental settings are presented in the following section.

4. Experimental Settings

To investigate how data diversity affects curriculum learning, we designed experiments under two dataset settings: single and merged. In the single setting, curricula were constructed at three units (sentence, group, and paragraph), while in the merged setting, only two units (sentence and group) were feasible. This results in five conditions in total, allowing us to analyze how reading-level-guided difficulty ordering impacts learning. To operationalize text difficulty within these settings, we compute an FRE score for every text segment in each setting, sort the segments by score, and divide them into three equal-sized bins (tertiles): the top third (highest FRE) is categorized as easy, the middle third as medium, and the bottom third as hard. The specific difficulty boundaries used for the single and merged dataset settings are summarized in Table 2, which also reports the mean FRE score within each level.
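The tertile binning can be sketched as follows. The function name `tertile_levels` and the rule for distributing the remainder when the number of segments is not divisible by three are assumptions; the sketch returns, for each level, the FRE range and mean of the kind summarized in Table 2.

```python
def tertile_levels(scores):
    """Split FRE scores into easy/medium/hard tertiles (highest FRE = easiest).

    Returns {level: (min_fre, max_fre, mean_fre)}. If len(scores) is not a
    multiple of 3, the earlier (easier) bins receive the extra items; this
    remainder rule is an assumption made for illustration.
    """
    if len(scores) < 3:
        raise ValueError("need at least three scores to form three bins")
    ordered = sorted(scores, reverse=True)      # easiest first
    n = len(ordered)
    cut1 = -(-n // 3)                           # ceil(n / 3)
    cut2 = cut1 + -(-(n - cut1) // 2)           # ceil of half the remainder
    bins = {
        "easy": ordered[:cut1],
        "medium": ordered[cut1:cut2],
        "hard": ordered[cut2:],
    }
    return {lvl: (min(v), max(v), sum(v) / len(v)) for lvl, v in bins.items()}
```

The boundary between two levels is then simply the minimum FRE of the easier bin versus the maximum FRE of the next one.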

4.1. Comparison of Settings

In our experiments, we consider a total of five configurations. First, based on the type of training dataset, we distinguish between two settings: the single setting, where curriculum learning is applied to a single corpus, and the merged setting, where different corpora are assigned to different curriculum levels to more closely simulate the developmental trajectory of child language acquisition. Within the single setting, the FRE score is computed at three units: sentence, group, and paragraph. In contrast, the merged setting includes only sentence- and group-based curricula, since the merged corpora do not consistently form coherent paragraphs. This yields three variants for the single setting and two for the merged setting. This setup allows us to examine the impact of reading-level-guided difficulty ordering and to identify effective training strategies. The specific sources and characteristics of the datasets used in the single and merged settings are described in Section 4.2, while this section focuses on the three unit levels at which FRE is computed.

Group unit: Sentences are split and scored individually by FRE, then coarsely partitioned into three bins—easy, medium, and hard. Within each bin, samples are randomly shuffled without further ordering, resulting in a coarse-grained curriculum. This corresponds to the procedure of Algorithm 1 (group), where difficulty increases stage by stage, but fine-grained ordering is not enforced.

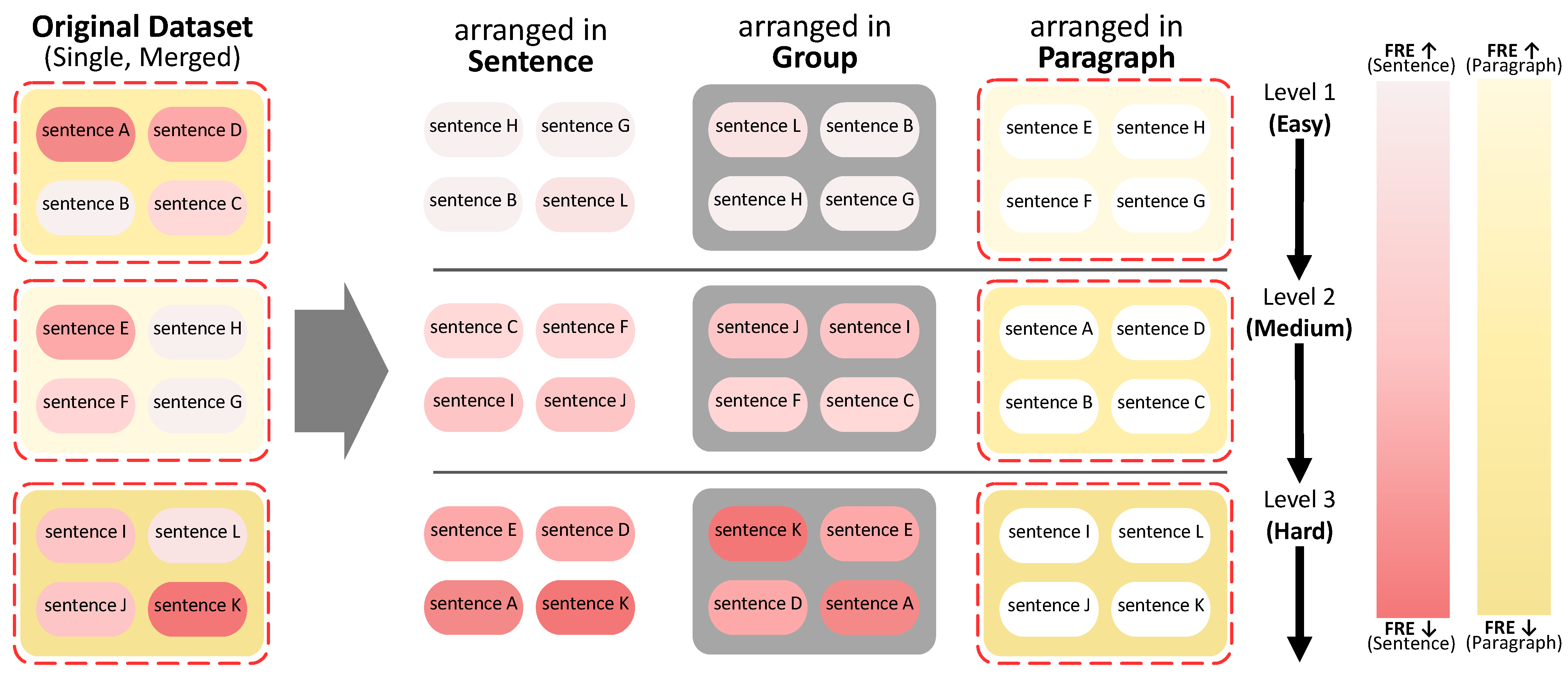

Figure 1 shows the visualization of the overall training data organization, where the color shading indicates FRE difficulty (lighter = easier, darker = harder) and sentences within each stage are arranged without internal ordering.

Sentence unit: Sentences are again grouped into three stages, but unlike the group unit setting, each sentence is strictly ordered from easiest to hardest according to its FRE score. This corresponds to the procedure of Algorithm 2 (sentence), which enforces a fully ordered, fine-grained curriculum where difficulty increases both across and within stages. In Figure 1, this is represented by progressively darker sentence shading within each block, showing that the model processes inputs in order of decreasing FRE score (i.e., increasing difficulty).

Paragraph unit: Instead of scoring at the sentence level, entire paragraphs are treated as indivisible units. FRE is computed across the full paragraph, and units are then partitioned into easy, medium, and hard stages based on tertile boundaries of the paragraph-level distribution. This corresponds to Algorithm 3 (paragraph), ensuring that contextual and semantic relations across sentences are preserved within each input. In Figure 1, paragraphs are highlighted as larger yellow-shaded blocks, emphasizing that multiple sentences are grouped and treated as one training unit rather than being split. The difficulty still follows an easy → medium → hard progression, but it is determined at the paragraph level.

As mentioned above, across all three units, samples are ordered by their FRE values and split into three subsets (easy, medium, and hard) by dividing the ordered list into thirds. Training then proceeds stage by stage, for a fixed number of epochs per stage, in the order easy → medium → hard.

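Algorithm 1 Group
Require: corpus $\{x_i\}$, number of epochs per stage $E$
Ensure: trained parameters $\theta$
1: $U \leftarrow \emptyset$
2: for each $x_i$ do
3:     $\{s_{i,k}\} \leftarrow$ SentenceSplit($x_i$)
4:     for each $s_{i,k}$ do
5:         $f_{i,k} \leftarrow$ ComputeFRE($s_{i,k}$)
6:         $U \leftarrow U \cup \{(s_{i,k}, f_{i,k})\}$
7: $U \leftarrow$ OrderBy($U$, $f$, descending)
8: Split $U$ into thirds: $U_{\text{easy}}, U_{\text{medium}}, U_{\text{hard}}$
9: Shuffle each subset
10: Initialize parameters $\theta$
11: for stage $\in$ (easy, medium, hard) do
12:     for $e = 1$ to $E$ do
13:         Update $\theta$ on $U_{\text{stage}}$
14: return $\theta$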

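Algorithm 2 Sentence
Require: corpus $\{x_i\}$, number of epochs per stage $E$
Ensure: trained parameters $\theta$
1: $U \leftarrow \emptyset$
2: for each $x_i$ do
3:     $\{s_{i,k}\} \leftarrow$ SentenceSplit($x_i$)
4:     for each $s_{i,k}$ do
5:         $f_{i,k} \leftarrow$ ComputeFRE($s_{i,k}$)
6:         $U \leftarrow U \cup \{(s_{i,k}, f_{i,k})\}$
7: $U \leftarrow$ OrderBy($U$, $f$, descending)
8: Split $U$ into thirds: $U_{\text{easy}}, U_{\text{medium}}, U_{\text{hard}}$
9: Initialize parameters $\theta$
10: for stage $\in$ (easy, medium, hard) do
11:     for $e = 1$ to $E$ do
12:         Update $\theta$ on $U_{\text{stage}}$ (in order)
13: return $\theta$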

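Algorithm 3 Paragraph
Require: corpus of paragraphs $\{x_i\}$, number of epochs per stage $E$
Ensure: trained parameters $\theta$
1: $U \leftarrow \emptyset$
2: for each $x_i$ do
3:     $f_i \leftarrow$ ComputeFRE($x_i$)
4:     $U \leftarrow U \cup \{(x_i, f_i)\}$
5: $U \leftarrow$ OrderBy($U$, $f$, descending)
6: Split $U$ into thirds: $U_{\text{easy}}, U_{\text{medium}}, U_{\text{hard}}$
7: Initialize parameters $\theta$
8: for stage $\in$ (easy, medium, hard) do
9:     for $e = 1$ to $E$ do
10:         Update $\theta$ on $U_{\text{stage}}$
11: return $\theta$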
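The three algorithms differ only in the scoring unit and in whether order is kept inside a stage, so they can be summarized in one Python sketch. It is a hedged illustration rather than the authors' code: `build_schedule`, the regex sentence splitter, and the integer-division rule for splitting into thirds are assumptions, the `score` argument stands in for any FRE implementation, and the parameter update of the algorithms is replaced by appending units to a schedule so that the visiting order can be inspected.

```python
import random
import re

def split_sentences(doc):
    """Naive splitter on ., ! or ? followed by whitespace (stand-in for SentenceSplit)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", doc) if s.strip()]

def thirds(items):
    """Split an already-ordered list into easy, medium, hard parts of near-equal size."""
    n = len(items)
    a, b = n // 3, 2 * n // 3
    return [items[:a], items[a:b], items[b:]]

def build_schedule(docs, score, unit, epochs_per_stage=1, seed=0):
    """Return training units in visiting order for unit in {"group", "sentence", "paragraph"}.

    score maps a text to an FRE-like value where higher means easier.
    """
    if unit not in ("group", "sentence", "paragraph"):
        raise ValueError(f"unknown unit: {unit}")
    if unit == "paragraph":
        pool = list(docs)                                        # Algorithm 3: whole paragraphs
    else:
        pool = [s for d in docs for s in split_sentences(d)]     # Algorithms 1 and 2: sentences
    ordered = sorted(pool, key=score, reverse=True)              # easiest (highest score) first
    stages = thirds(ordered)
    if unit == "group":
        rng = random.Random(seed)
        stages = [rng.sample(st, len(st)) for st in stages]      # Algorithm 1: shuffle within bins
    schedule = []
    for stage in stages:                                         # easy -> medium -> hard
        for _ in range(epochs_per_stage):
            schedule.extend(stage)                               # placeholder for "Update theta"
    return schedule
```

Passing `unit="sentence"` yields the fully ordered curriculum of Algorithm 2, `unit="group"` shuffles within each tertile as in Algorithm 1, and `unit="paragraph"` scores whole paragraphs as in Algorithm 3; in a real run, the schedule would be consumed by the training loop instead of being returned.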

4.2. Datasets

4.2.1. Pretraining Datasets

Our dataset size was deliberately capped at 100 million words, which corresponds to roughly the number of words a 13-year-old child has been exposed to over their lifetime [15]. By constraining models to human-comparable input sizes, we aim to approximate plausible cognitive models of human learning. Training models on quantities of data closer to what humans actually encounter provides a valuable lens for understanding what enables humans to acquire language so efficiently, and can help illuminate the mechanisms underlying language learning.

Having defined the curriculum construction and training procedure within the 100 M word budget, we divide the subsequent experiments according to two dataset settings. In the single setting, we used only the Cosmopedia [16] corpus, a synthetic text dataset containing diverse formats such as textbooks, blogs, and stories generated with Mixtral-8x7B-Instruct-v0.1. Cosmopedia provides a sufficiently broad and coherent resource from which the model is expected to capture general linguistic regularities.

In the merged setting, we constructed a mixture of corpora that naturally span a broad range of linguistic complexity. Specifically, we included data from CHILDES [17], Storybook [18], manually curated datasets from Gutenberg Children’s Literature (https://www.gutenberg.org/ebooks/bookshelf/20 (accessed on 6 May 2025)), and a subset of Cosmopedia, thereby covering a wide spectrum of linguistic variation.

We deliberately combined the above datasets, which span different levels of readability, so that the merged corpus reflects a graded progression of linguistic complexity. Specifically, the sentences from CHILDES [17] and Storybook [18] primarily fall above an FRE score of 80, corresponding to the “easy” or “very easy” readability levels typically associated with fifth- to sixth-grade readers, and were included to expose the model to simple and coherent linguistic patterns resembling children’s early language input. The Gutenberg Children’s Literature corpus mostly occupies the FRE range of 40 to 80, covering “fairly easy” to “fairly difficult” levels, and was chosen to gradually introduce richer vocabulary and moderately complex syntax that reflect the progression to more advanced readers. Finally, a subset of Cosmopedia contributes a greater proportion of sentences below an FRE score of 40, representing challenging, low-readability content, thereby ensuring that the model is also exposed to advanced discourse structures and dense informational content (refer to Table 3 for the detailed interpretation of FRE scores). To verify that our readability-based categorization reflects consistent human perception, we conducted a human evaluation with three annotators, yielding a moderate–substantial inter-rater agreement (Fleiss’ κ ≈ 0.588).
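For reference, Fleiss’ κ for a fixed number of raters per item can be computed from an item-by-category count table as in the sketch below. The count tables used in the checks are invented toy inputs; the annotation data behind the reported κ ≈ 0.588 are not reproduced here.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa from an N x k table where counts[i][j] is the number of
    raters who assigned item i to category j (e.g. easy / medium / hard).
    Every item must be rated by the same number of raters n >= 2."""
    N = len(counts)
    n = sum(counts[0])
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("every item needs the same number (>= 2) of ratings")
    k = len(counts[0])
    # Observed agreement per item, P_i, and its mean over items.
    p_items = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    p_bar = sum(p_items) / N
    # Marginal category proportions p_j and expected chance agreement P_e.
    p_cat = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    p_e = sum(p * p for p in p_cat)
    if p_e == 1:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return (p_bar - p_e) / (1 - p_e)
```

Perfect agreement gives κ = 1, while agreement below the chance level implied by the category marginals gives a negative κ; for instance, three raters splitting 2–1 on every item of a balanced two-category table yields κ = −1/3.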

4.2.2. Evaluation Datasets

We evaluate our models on a subset of GLUE [19] and SuperGLUE [20] comprising seven tasks (BoolQ, MNLI, MRPC, MultiRC, QQP, RTE, and WSC), together with three additional zero-shot tasks: BLiMP, EWOK, and Reading.

GLUE provides a collection of nine natural language understanding tasks covering textual entailment, similarity, and classification, and has become a widely adopted benchmark for evaluating pretrained models on a diverse range of linguistic phenomena [19]. SuperGLUE consists of more challenging tasks that require deeper reasoning and diverse task formats [20]. For our evaluation, CoLA, SST-2, MNLI-mm, and QNLI were not included, as these tasks are highly correlated with other datasets such as BLiMP or MNLI. Overall, adopting GLUE as one of the evaluation suites provides a standardized and comprehensive testbed for evaluating whether our curriculum-learning approach leads to general improvements across diverse NLU tasks.

In addition to these established benchmarks, we further evaluate on three targeted zero-shot tasks, which enable us to measure the models’ ability to generalize beyond training without task-specific tuning.

BLiMP [21] is a suite of minimal pairs targeting grammatical phenomena. It evaluates whether models prefer the grammatically correct sentence over an ungrammatical counterpart. Since BLiMP directly measures fine-grained grammatical generalization, it is especially relevant for testing whether a readability-based curriculum strengthens models’ sensitivity to core linguistic rules. EWOK [22] assesses models’ world knowledge by testing their ability to distinguish plausible from implausible contexts. Because it evaluates factual and commonsense reasoning beyond pure syntax or semantics, EWOK tests whether gains from our training method extend to broader knowledge grounding.

Reading [23] contains 205 English sentences (1726 words), for which cloze probabilities, predictability ratings, and computational surprisal estimates are aligned with behavioral and neural measures. Crucially, the reading component includes two complementary tasks: self-paced reading (SPR), which records reaction times as participants reveal words one by one, and eye-tracking, which captures fine-grained gaze measures such as fixations and regressions. Whereas SPR reflects more controlled, consciously paced reading behavior, eye-tracking captures more natural and immediate processing dynamics.

The evaluation of the reading task followed the framework of the BabyLM 2025 Challenge [24], where model predictions are assessed using regression analyses that measure the increase in explained variance ($\Delta R^2$) when surprisal is added as a predictor of human reading measures. Specifically, eye-tracking variables are analyzed without spillover effects, whereas self-paced reading includes a one-word spillover term to account for delayed processing influences from the previous word. The spillover effect captures the phenomenon that cognitive load from a word can extend to the subsequent word, influencing its reading time. Higher $\Delta R^2$ values indicate that model-derived surprisal explains more variance in reaction times or gaze durations, providing a cognitively grounded test of how closely model processing aligns with human processing dynamics.
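The ΔR² idea can be sketched with ordinary least squares. The sketch below is a simplified stand-in for the BabyLM evaluation pipeline, not its actual implementation: it assumes generic baseline predictors (e.g., word length), fits both models with NumPy's `np.linalg.lstsq`, and implements the one-word spillover for self-paced reading by adding the previous word's surprisal as an extra column, with 0 for the first word and no sentence-boundary handling.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an ordinary-least-squares fit of y on the columns of X plus an intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ beta
    return 1.0 - resid.var() / y.var()

def delta_r2(baseline, surprisal, y, spillover=False):
    """Gain in R^2 when surprisal (and optionally previous-word surprisal) is added.

    baseline: array of shape (n,) or (n, k) with non-surprisal predictors (assumed).
    spillover=True adds the previous word's surprisal, mirroring the self-paced
    reading setup; the first word gets 0 and sentence boundaries are ignored.
    """
    y = np.asarray(y, dtype=float)
    base = np.asarray(baseline, dtype=float).reshape(len(y), -1)
    s = np.asarray(surprisal, dtype=float)
    extra = [s]
    if spillover:
        extra.append(np.concatenate([[0.0], s[:-1]]))
    full = np.column_stack([base, *extra])
    return r_squared(full, y) - r_squared(base, y)
```

Because the full model nests the baseline, ΔR² is never negative (up to floating-point error). In a toy setup where reaction times are generated from the previous word's surprisal, the gain appears only with `spillover=True`, which illustrates why the self-paced-reading analysis includes the spillover term.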

For all reported results, we averaged performance over three random seeds to reduce the influence of run-to-run variance. Details of the hyperparameter settings used for fine-tuning on GLUE tasks are provided in Appendix A.

4.3. Backbone Models

We conducted experiments using two widely adopted architectures: GPT-2 [25] and BERT [26]. These models served as the backbones for all curriculum and baseline training runs. GPT-2 was used for autoregressive language modeling, while BERT was used for masked language modeling in a bidirectional setting. Details of model hyperparameters are provided in Table A1.

Choosing these two complementary architectures allowed us to examine the effectiveness of curriculum learning across both encoder-based and decoder-based paradigms. We deliberately employed moderate-sized, widely used models rather than larger state-of-the-art models, since smaller models provide a controlled environment in which the effects of curriculum learning can be isolated under the 100 M word constraint without confounding from extreme model capacity. In addition, given this deliberate 100 M word limit, employing much larger recent models would be suboptimal, since their parameter scale requires substantially more data to train effectively. Using moderate-sized, widely validated models thus provides a more controlled testbed for evaluating the specific contribution of curriculum learning.

5. Results

5.1. The Result of the Single Setting

In this section, we present the analysis of the single setting, where curriculum learning is applied to a single dataset (one of the two configurations defined by dataset composition). We first examine (1) zero-shot tasks to observe intrinsic performance without relying on fine-tuning data, followed by (2) GLUE tasks that require fine-tuning, and finally (3) a detailed analysis to gain deeper insight into the effectiveness of our curriculum. All results reported in this section are averaged over three independent runs with different random seeds, reducing the likelihood that the improvements reflect a single favorable run. Cross-validation was not applied, as it is computationally prohibitive in pretraining settings; multi-seed evaluation serves as a practical and widely accepted alternative.

5.1.1. Evaluation on Zero-Shot Tasks

We evaluated six zero-shot tasks, as shown in Table 4, after training a small language model using a curriculum structured from easier to more difficult documents and sentences based on their FRE scores. Among the various training strategies, the paragraph setting, where the curriculum was constructed at the paragraph level, consistently yielded superior performance across most tasks regardless of model architecture. In particular, substantial improvements were observed in tasks evaluating the grammatical competence of language models. For BLiMP, the GPT-2 architecture achieved a 12.31% improvement over the baseline, while the BERT architecture achieved a 19.83% improvement. Similarly, in the more grammatically demanding BLiMP Supplement task, performance increased by 11.18% and 12.80%, respectively.

Furthermore, on the EWoK task, which evaluates factual knowledge acquisition, the FRE-based curriculum also improved performance. This suggests that readability indicators, commonly used to assess the difficulty of children’s books, can also benefit knowledge-intensive tasks in small language models.

5.1.2. Evaluation on GLUE Tasks

Table 5 shows that, within the single setting, our curriculum learning approach can improve natural language understanding. In particular, the paragraph unit setting, where the curriculum was constructed from FRE scores computed per paragraph, achieved an average improvement of +6.07 over the baseline and outperformed it on most subtasks. Although performance dropped slightly on tasks such as WSC, these losses were far smaller than the overall gains. This suggests that, in NLU tasks, not only text difficulty but also contextual understanding across consecutive sentences plays a crucial role.

A striking example is the Multi-Genre Natural Language Inference (MNLI) task, which improved by 16.87. Because MNLI requires the model to classify the relationship between a pair of sentences as entailment, contradiction, or neutral, semantic and contextual reasoning is essential. For example, given the premise “How do you know? All this is their information again.” and the hypothesis “This information belongs to them.”, the correct label is entailment: the premise already presupposes that the information belongs to “them,” and the hypothesis simply restates this fact more concisely. In other words, if the premise is true, the hypothesis must also be true, which establishes an entailment relation.

Because tasks like MNLI rely heavily on semantic and contextual inference between sentence pairs, preserving broader context and capturing complex structures is critical. This likely explains why paragraph-level training, which better preserves contextual integrity, outperformed sentence-level training in this setting.

5.2. Detailed Analysis: The Effect of Reading-Level Guided Curriculum

5.2.1. Evaluation on Zero-Shot Tasks

In Table 6, we analyze whether each curriculum level contributes positively when training with the paragraph unit, which performed best in the single setting. The table reports performance on three zero-shot tasks (BLiMP, BLiMP-S, EWoK) at each curriculum level (easy, medium, hard), relative to the baseline.
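The easy, medium, and hard levels can be pictured as consecutive slices of the FRE-sorted data. The sketch below assumes, purely for illustration, three equal-sized stages; the exact stage boundaries are not restated in this section, and `split_into_stages` is a hypothetical helper.

```python
from typing import Dict, List, Sequence, Tuple


def split_into_stages(
    scored_units: Sequence[Tuple[str, float]],
    stage_names: Sequence[str] = ("easy", "medium", "hard"),
) -> Dict[str, List[str]]:
    """Partition (text, FRE) pairs into consecutive, roughly equal-sized stages.

    Higher FRE means easier text, so sorting in descending order puts the
    easiest units in the first stage and the hardest in the last.
    """
    ordered = sorted(scored_units, key=lambda pair: pair[1], reverse=True)
    n, k = len(ordered), len(stage_names)
    stages: Dict[str, List[str]] = {}
    for i, name in enumerate(stage_names):
        # Integer boundaries cover every unit exactly once, even when n % k != 0.
        start, end = i * n // k, (i + 1) * n // k
        stages[name] = [text for text, _ in ordered[start:end]]
    return stages
```

Training would then proceed through `stages["easy"]`, `stages["medium"]`, and `stages["hard"]` in order, with evaluation after each stage mirroring the per-level reporting in Table 6.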

As the curriculum progresses, we observe consistent performance gains for both model architectures, indicating that every stage contributes positively. The easy level in particular yields notable improvements on grammaticality judgment tasks such as BLiMP and BLiMP-S: GPT-2 gains 8.86% and 11.96%, while BERT improves by 8.18% and 11.18%, respectively. These results suggest that grammaticality-related tasks benefit particularly from exposure to linguistically simpler text early in the curriculum. In contrast, EWoK, which evaluates factual knowledge acquisition, shows only a marginal improvement of 0.31 at this level, implying that such tasks are less sensitive to easier text and may require more complex input to yield substantial benefits.

5.2.2. Evaluation on Reading Task

Table 7 reports performance on the reading task in the single setting, where curriculum learning was conducted using FRE scores computed at the paragraph level. The task evaluates how closely the model mirrors human perceptions of textual difficulty; the table compares results against the baseline after each curriculum stage.

Beyond grammaticality judgment, improvements were also observed on the reading tasks, which measure how closely language models align with human processing patterns. Without a curriculum, the baseline scores remained close to zero. However, when training was ordered by decreasing FRE score (that is, progressing from easier to more difficult texts), performance improved in every setting. The paragraph setting yielded the strongest gains, improving on the baseline by 4.12 on the eye-tracking task and 2.37 on the self-paced reading task. These results suggest that language models trained with an FRE-based curriculum tend to struggle at the same points as humans, such as passages where readers naturally slow down and fixate longer because of perceived difficulty. In this sense, the approach encourages models to exhibit processing patterns more closely aligned with human cognitive responses.

5.2.3. Evaluation on GLUE Task

Table 8 presents performance on the GLUE subtasks after fine-tuning on their respective training sets, following curriculum learning with FRE scores computed at the paragraph level in the single setting. As with the zero-shot tasks, every curriculum level improves over the baseline, and performance increases steadily as the curriculum progresses, reaching 63.94, 66.01, and 67.46 at the easy, medium, and hard levels, respectively.

Two observations stand out. First, the WSC task diverges from the overall trend of gradual improvement. Because this task requires resolving the referents of pronouns (coreference resolution), the easy-level data, consisting of simpler structures and clearer sentences, appears to support the learning of “surface-level” coreference patterns. However, as later stages become dominated by more complex data with long sentences and nested clauses, the coreference signal may be diluted or additional noise absorbed, reducing performance. Second, MNLI performance rises sharply from the medium stage onward. Since this task relies heavily on contextual inference, this suggests that a certain degree of curriculum progression is needed before robust performance emerges.

5.3. The Result of the Merged Setting

5.3.1. Evaluation on Zero-Shot Tasks

Table 9 shows that our curriculum learning approaches in the merged setting perform strongly on three zero-shot evaluation tasks: BLiMP, Supplementary BLiMP, and EWoK. In most evaluations, the grouped setting outperformed the others, suggesting that our approach offers a cognitively aligned learning strategy that helps small models perform better on zero-shot tasks without any example-based supervision.

An interesting contrast emerges between the single and merged settings. While the paragraph unit was highly effective in the single setting, its performance was less stable in the merged setting. Unlike the single dataset, which consists of coherent documents from one source, the merged dataset combines documents from diverse domains and contexts. In this case, although the text is sorted by FRE score, the resulting disruption of contextual continuity appears to hurt learning.

5.3.2. Evaluation on GLUE Task

Table 10 shows that the reading-level guided curriculum, when applied to BERT trained on our merged setting, improves natural language understanding. Averaged GLUE scores reveal a clear trend: performance improves as the curriculum becomes more fine-grained, specifically when FRE scores are applied at the sentence level. This suggests that our curriculum strategy contributes meaningfully to language understanding in small-scale models.

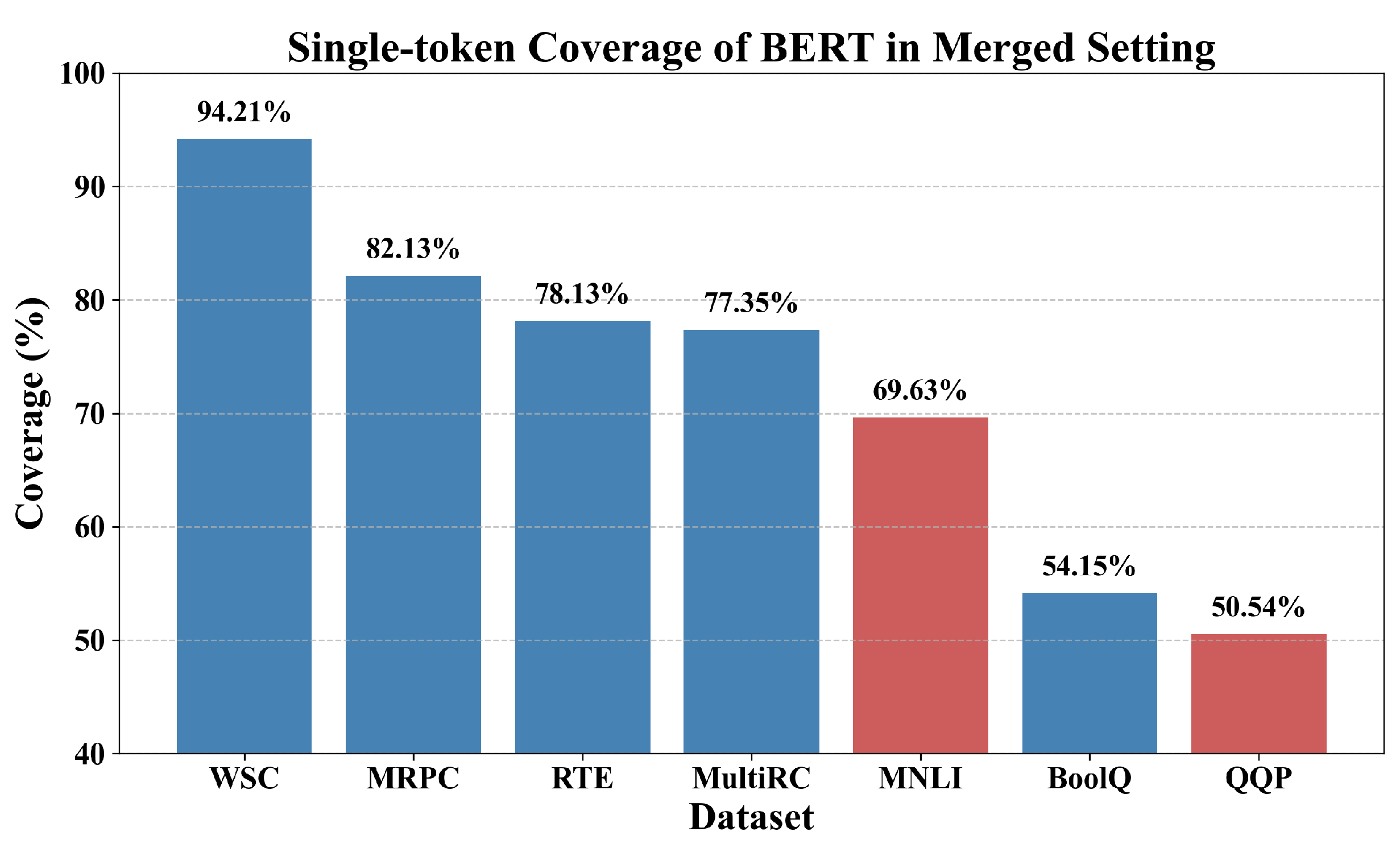

Among the tasks, MNLI and QQP showed relatively weaker performance. As shown in Figure 2, these datasets have notably low single-token coverage: fewer than half of their unique words are preserved as individual tokens. This high degree of subword fragmentation likely hindered the model’s ability to capture word-level semantics. In contrast, BoolQ did not suffer a performance drop. We hypothesize that the model’s early exposure to simpler sentences, analogous to child language acquisition, helped form a stronger linguistic foundation that improved reasoning and inference on BoolQ, whereas on MNLI and QQP this benefit was offset by token fragmentation.
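Single-token coverage, as plotted in Figure 2, can be read as the fraction of a dataset’s unique words that the tokenizer keeps as exactly one token. A minimal Python sketch follows. The regex word definition and the hypothetical `single_token_coverage` helper are assumptions, and `tokenize` stands for any callable mapping a word to its list of subword tokens (for instance, the `tokenize` method of a Hugging Face tokenizer). For byte-level BPE tokenizers such as GPT-2’s, whether a word is tokenized with a leading space changes the result, so that detail would need to match the original analysis.

```python
import re
from typing import Callable, Iterable, List


def single_token_coverage(
    texts: Iterable[str], tokenize: Callable[[str], List[str]]
) -> float:
    """Fraction of unique words that `tokenize` maps to exactly one token."""
    # Unique alphabetic word forms across the dataset (case preserved).
    vocab = {w for t in texts for w in re.findall(r"[A-Za-z]+", t)}
    if not vocab:
        return 0.0
    # A word counts as "preserved" if the tokenizer does not split it into subwords.
    kept_whole = sum(1 for w in vocab if len(tokenize(w)) == 1)
    return kept_whole / len(vocab)
```

Applied to a task’s training text, a value below 0.5 corresponds to the situation described for MNLI and QQP.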

6. Conclusions

This study demonstrated that a reading-level guided curriculum based on the Flesch Reading Ease (FRE) score can markedly improve the learning efficiency of small language models under data-constrained conditions. This work makes three key contributions. First, by relying on a single readability metric, the FRE score, we propose a curriculum that is substantially simpler and more interpretable than existing approaches. Second, by restricting pretraining data to 100 M words, we control for the effect of large-scale data and isolate pretraining methodology as the primary driver of improvement. Third, unlike prior curriculum learning studies that often yielded inconclusive results, this work provides clear, positive learning signals, demonstrating the effectiveness of readability-based curricula. In particular, our approach improved both grammatical generalization and factual knowledge in zero-shot and downstream settings, with gains of up to +19.83% on tasks such as BLiMP and consistent improvements on GLUE benchmarks.

In addition to these contributions, applying the curriculum across different levels of granularity—ranging from sentences to grouped segments and paragraphs—and under both single-source and heterogeneous datasets revealed coherent patterns of improvement. These outcomes emphasize that the value of this work lies not only in the method itself but also in its systematic application under diverse training conditions. Thus, the findings highlight that a carefully designed, readability-based curriculum can translate methodological simplicity into tangible efficiency gains and stronger generalization, offering a practical and cognitively motivated pathway for advancing small language models.

The experimental results also offer concrete guidance for practice: the effectiveness of our curriculum varied with dataset composition. In the single setting, where training data came from a coherent source, the paragraph unit curriculum consistently yielded robust gains.

In contrast, for merged datasets drawn from heterogeneous sources, the grouped-level curriculum was more effective, as maintaining internal coherence within each phase led to more stable improvements. We therefore recommend the following when applying our method:

For a single setting, adopting the paragraph unit allows for fine-grained progression and leads to strong generalization.

For a merged setting, it is more desirable to construct each curriculum level with the group unit from contextually similar sources to preserve coherence within each level.
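One way to realize a group-unit curriculum for a merged dataset is to keep documents from the same source together and order whole groups by their average readability. The sketch below assumes that a “group” is simply the set of documents sharing a source label and that groups are ranked by mean FRE; this is an illustrative interpretation rather than the exact procedure, and `grouped_curriculum` is a hypothetical helper. The `score` argument can be any FRE scoring function.

```python
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, List, Tuple


def grouped_curriculum(
    docs: List[Tuple[str, str]], score: Callable[[str], float]
) -> List[str]:
    """Order documents group by group, from the easiest source to the hardest.

    docs:  (source_label, text) pairs from a merged, multi-source corpus.
    score: readability function where higher means easier (e.g. FRE).
    """
    # Collect documents per source, preserving their order within each source.
    groups: Dict[str, List[str]] = defaultdict(list)
    for source, text in docs:
        groups[source].append(text)

    # Rank whole sources by mean readability, easiest (highest score) first.
    ranked = sorted(
        groups,
        key=lambda src: mean([score(t) for t in groups[src]]),
        reverse=True,
    )

    # Concatenate groups so each source stays contiguous, preserving local coherence.
    return [text for src in ranked for text in groups[src]]
```

Keeping each source contiguous trades some smoothness in the difficulty progression for contextual coherence, which is the trade-off the recommendation above favors.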

In addition, our tokenizer coverage analysis in Figure 2 revealed that tasks with low single-token preservation (e.g., MNLI and QQP) exhibited diminished performance, suggesting that excessive subword segmentation may hinder semantic understanding in small models. This finding emphasizes the importance of aligning tokenization granularity with curriculum design.

While our method demonstrated strong zero-shot and fine-tuning performance, it has several limitations. The current framework relies on surface-level readability metrics and does not yet incorporate deeper semantic or discourse-level complexity. In addition, it remains to be verified whether the proposed approach scales effectively to larger datasets and more powerful model architectures. Beyond scalability, because the Flesch Reading Ease score is an English-specific readability measure, its cross-linguistic applicability is still untested. Investigating cognitively grounded readability metrics in other languages will be an important step toward extending the framework beyond English. Future work will also explore its applicability to a broader range of downstream tasks, including question answering and summarization, as well as extensions to multilingual, instruction-tuned, or few-shot settings. Nevertheless, the simplicity, interpretability, and architecture-agnostic nature of our approach make it a promising framework both for advancing cognitively plausible, data-efficient NLP systems and as a practical option in resource-limited environments where small-scale models must be deployed.