Modular Multi-Task Learning for Emotion-Aware Stance Inference in Online Discourse

Abstract

1. Introduction

- A data augmentation strategy based on back-translation is introduced to expand the NLPCC2016-task4 Weibo dataset from 3K to 12K samples, thereby enhancing linguistic diversity and contextual coverage.

- A dual-layered neural architecture is designed that integrates RoBERTa-based contextual embeddings with BiCARU layers to capture temporal and directional flow in text.

- Sentiment analysis is incorporated as an auxiliary task within the MTL framework, enabling emotional cues to act as complementary signals for stance inference across varied topics.

2. Related Work

2.1. Neural and Ensemble Advances

2.2. Multi-Task Learning for Sentiment and Stance

3. Methodology

3.1. Multi-Task Learning Framework

- Stance Detection

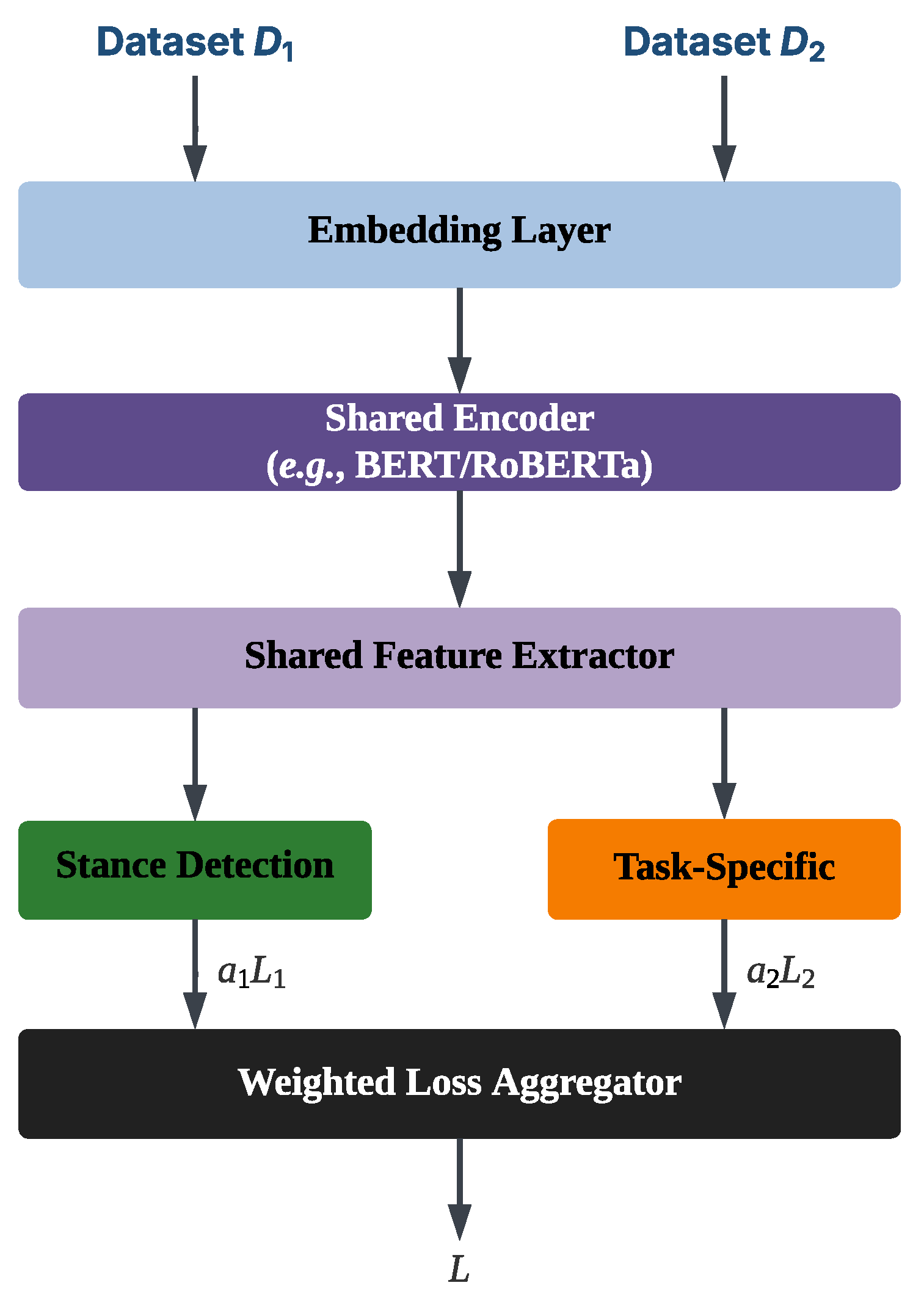

- The shared layers capture general syntactic and semantic patterns that are applicable to all tasks, enabling cross-task transfer. They usually comprise pre-trained transformer encoders (e.g., BERT and RoBERTa), whose contextualised token embeddings act as a shared basis. As illustrated in Figure 1, the shared encoder processes inputs from multiple datasets and outputs a unified representation space.

- Task-specific layers refine the shared features to meet the unique decision-making needs of each task without diluting shared knowledge. Each task head may include additional attention mechanisms, classification layers, or gating modules to isolate task-relevant signals. In Figure 1, each task-specific branch receives the shared features and computes its own loss; the per-task losses are then aggregated via a weighted loss function.

- Gradient Normalisation: Task gradients are rescaled to prevent dominant tasks from overwhelming others during backpropagation.

- Dynamic Task Weighting: The loss coefficients are periodically adjusted based on validation performance to ensure that underperforming tasks receive more attention.
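
The weighted loss, gradient rescaling, and validation-driven weighting described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: rescaling each task gradient to the mean norm, the softmax-over-deficit weighting rule, and all function names and toy values are hypothetical choices made for the example.

```python
import math

def weighted_loss(task_losses, weights):
    """Aggregate per-task losses into one scalar: sum_t w_t * L_t."""
    return sum(weights[t] * task_losses[t] for t in task_losses)

def l2_norm(vec):
    return math.sqrt(sum(x * x for x in vec))

def normalise_gradients(task_grads, eps=1e-12):
    """Rescale every task gradient to the mean norm across tasks (assumed rule)."""
    norms = {t: l2_norm(g) for t, g in task_grads.items()}
    target = sum(norms.values()) / len(norms)
    return {
        t: [x * target / (norms[t] + eps) for x in g]
        for t, g in task_grads.items()
    }

def update_weights(val_scores, temperature=1.0):
    """Softmax over (1 - score): a lower validation score yields a larger weight."""
    exps = {t: math.exp((1.0 - s) / temperature) for t, s in val_scores.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}

def combine(task_grads, weights):
    """Weighted sum of per-task gradients on the shared parameters."""
    size = len(next(iter(task_grads.values())))
    return [
        sum(weights[t] * task_grads[t][i] for t in task_grads)
        for i in range(size)
    ]

# Toy gradients on two shared parameters; stance dominates with norm 5.0 vs 0.5.
grads = {"stance": [3.0, 4.0], "sentiment": [0.3, 0.4]}
balanced = normalise_gradients(grads)  # both norms become 2.75
weights = update_weights({"stance": 0.70, "sentiment": 0.90})
shared_update = combine(balanced, weights)
total = weighted_loss({"stance": 0.9, "sentiment": 0.4}, weights)
```

After rescaling, neither task dominates the shared update through gradient magnitude alone, so the task weights remain the only lever that shifts emphasis between stance and sentiment.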

3.2. Application to Social Media Stance Detection

- Stance Detection

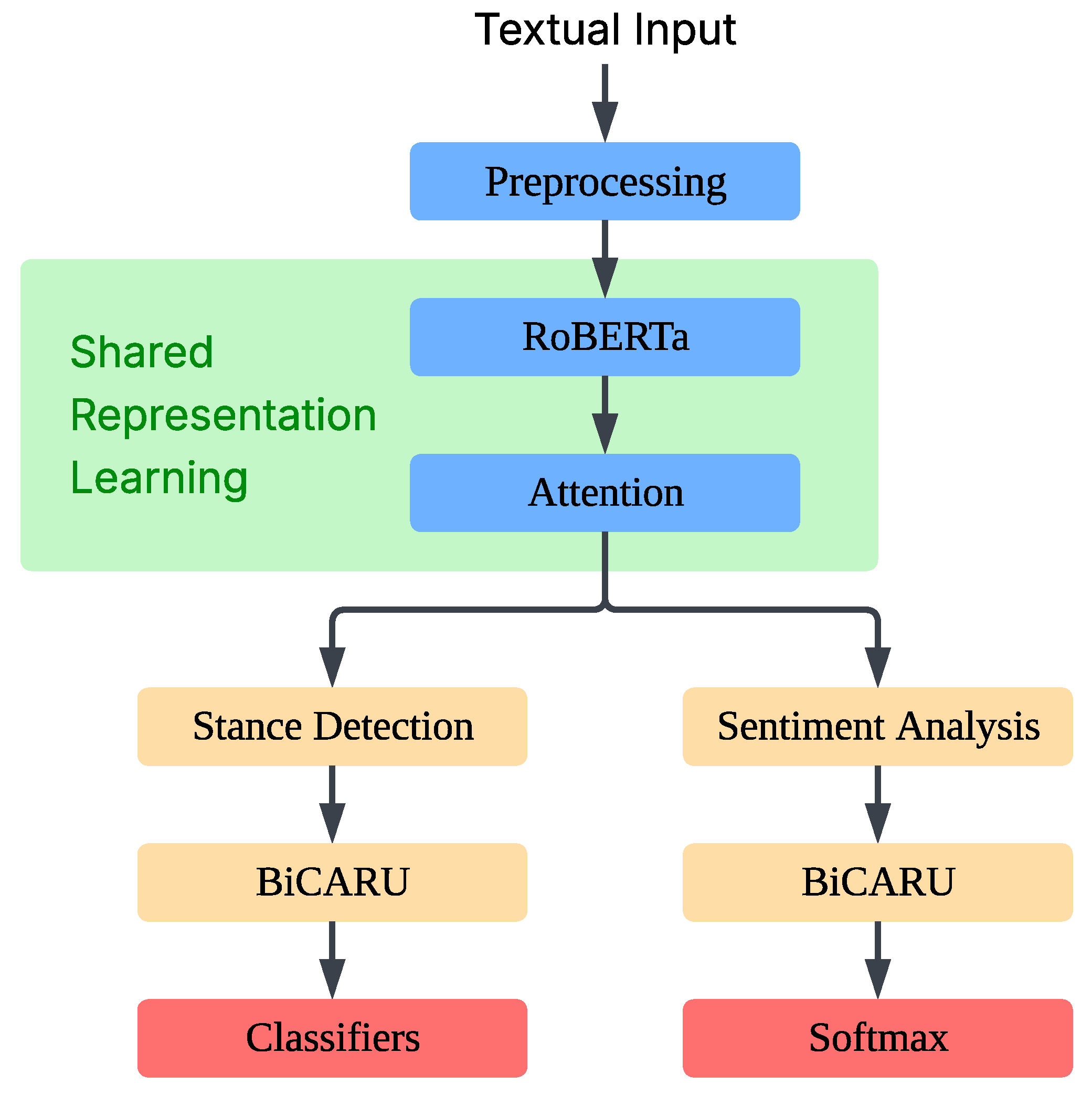

- The shared embeddings are passed through a BiCARU module, which models bidirectional contextual dependencies. This is followed by three binary classifiers, each of which is responsible for identifying a specific stance polarity (e.g., favourable, unfavourable, neutral).

- Sentiment Analysis: The same BiCARU module is used to extract emotionally salient features, which are then processed by a Sigmoid activation layer to generate sentiment predictions.
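
To make the two output heads concrete, the following sketch treats the BiCARU output as a plain feature vector and applies three independent sigmoid classifiers for stance plus one sigmoid unit for sentiment. It is an illustration only: the linear heads, the toy weights, and the rule of taking the most confident stance classifier are assumptions, and the routing used in the full model may differ.

```python
import math

STANCE_LABELS = ("favourable", "unfavourable", "neutral")

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def linear(features, weights, bias):
    return sum(f * w for f, w in zip(features, weights)) + bias

def predict_stance(features, heads):
    """heads maps label -> (weights, bias); each head is a separate binary classifier."""
    probs = {
        label: sigmoid(linear(features, *heads[label]))
        for label in STANCE_LABELS
    }
    # Assumed decision rule: pick the polarity whose classifier is most confident.
    return max(probs, key=probs.get), probs

def predict_sentiment(features, head, threshold=0.5):
    """Single sigmoid unit: probability of positive sentiment."""
    p = sigmoid(linear(features, *head))
    return ("positive" if p >= threshold else "negative"), p

# Hypothetical 3-dimensional feature vector standing in for the BiCARU output.
features = [0.5, -1.0, 2.0]
stance_heads = {
    "favourable": ([1.0, 0.0, 1.0], 0.0),
    "unfavourable": ([0.0, 1.0, 0.0], 0.0),
    "neutral": ([0.0, 0.0, 0.0], 0.0),
}
sentiment_head = ([0.0, 0.0, -1.0], 0.0)

stance, stance_probs = predict_stance(features, stance_heads)
sentiment, sentiment_prob = predict_sentiment(features, sentiment_head)
```

Because the stance classifiers are independent, their probabilities need not sum to one, which is the main practical difference from a single three-way softmax.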

4. Shared Representation Learning

4.1. RoBERTa Encoding and Representation Sharing

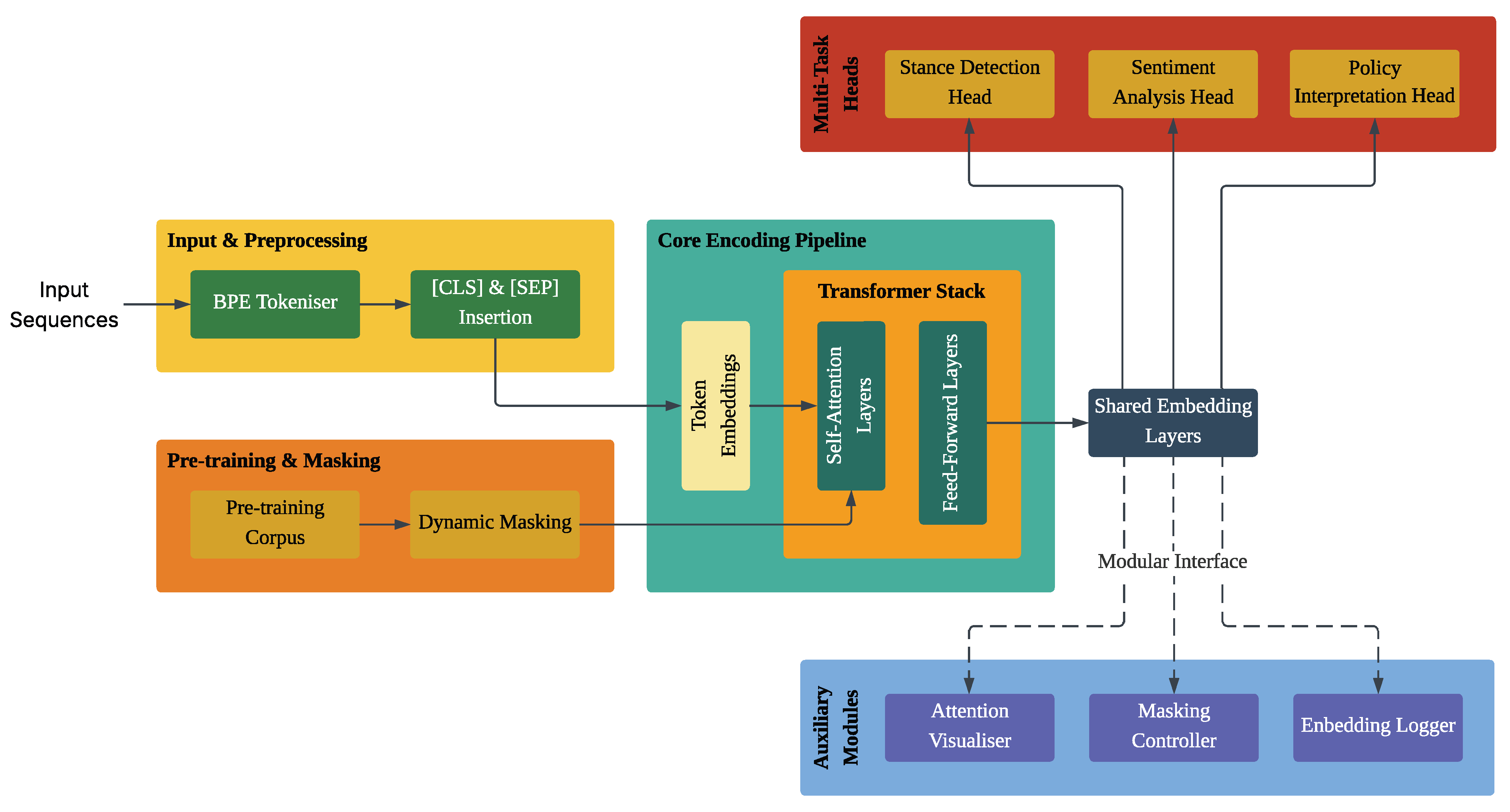

- Input and Preprocessing: The model first applies byte-pair encoding (BPE) to segment the input sequences into consistent subword units, which facilitates multilingual compatibility. Special tokens, such as [CLS] and [SEP], are inserted to indicate task boundaries and segment transitions, and all tokens are then embedded in a high-dimensional vector space for subsequent processing.

- Core Encoding Pipeline: The embedded tokens are then processed through a stack of Transformer layers comprising self-attention and feed-forward submodules. This iterative encoding mechanism captures syntactic proximity and semantic depth, enabling the model to perform complex tasks such as stance detection, sentiment classification, and policy interpretation.

- Shared Embedding Layer: A unified embedding space serves as the central representational hub, interfacing with both task-specific and auxiliary modules. This shared layer minimises redundancy and promotes efficient reuse of features across heterogeneous tasks.

- Multi-Task Heads: Task-specific modules for stance detection, sentiment analysis, and policy interpretation operate in parallel, drawing from the shared contextual embeddings. This parallelism supports contrastive reasoning and facilitates the alignment of latent semantic cues across tasks.

- Auxiliary Modules: Complementary components such as the attention visualiser, masking controller, and embedding logger are integrated via modular interfaces. These operate concurrently with the encoding pipeline, enhancing interpretability and control without disrupting representational integrity.

- Modularity and Extensibility: The architecture’s modular design supports the seamless integration of new tasks and diagnostic tools. This ensures scalability in multitask and multilingual environments while preserving auditability and operational transparency.
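
The preprocessing step in the list above (BPE segmentation followed by insertion of special tokens) can be illustrated with a toy example. The merge table below is invented for the illustration and is not the RoBERTa vocabulary; whitespace splitting is likewise an assumption that would not hold for unsegmented Chinese text.

```python
def bpe_segment(word, merges):
    """Apply merge rules in priority order, starting from single characters."""
    symbols = list(word)
    for left, right in merges:
        merged, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and symbols[i] == left and symbols[i + 1] == right:
                merged.append(left + right)  # fuse the adjacent pair into one unit
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols

def encode(text, merges):
    """Wrap the subword sequence with the [CLS] and [SEP] special tokens."""
    tokens = ["[CLS]"]
    for word in text.split():
        tokens.extend(bpe_segment(word, merges))
    tokens.append("[SEP]")
    return tokens

# Hypothetical merge rules, ordered from highest to lowest priority.
TOY_MERGES = [("l", "o"), ("lo", "w"), ("e", "r")]

tokens = encode("low lower", TOY_MERGES)
print(tokens)  # ['[CLS]', 'low', 'low', 'er', '[SEP]']
```

The unseen word "lower" is split into the known units "low" and "er", which is how subword segmentation keeps the vocabulary closed while still covering rare or informal forms.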

4.2. Attention Dynamics and Cross-Task Feature Propagation

5. Modular Stance Inference Architecture

5.1. CARU-Based Bidirectional Representation Learning

5.2. Contextual Encoding and Target Segmentation

5.3. Reformulated Stance Classification with Conditional Routing

6. Multi-Task Integration and Auxiliary Module Design

6.1. Task-Specific Heads for Joint Optimisation

6.2. Auxiliary Modules for Interpretability and Diagnostics

6.2.1. Attention Visualisation Module

6.2.2. Dynamic Masking Controller

6.2.3. Embedding Trace Logger

7. Experimental Results and Discussion

7.1. Training Environment, Configuration, and Strategy

7.2. Evaluation Metrics

7.3. Results Analysis and Discussion

7.4. Error Analysis

7.5. Ablation Study

7.5.1. Quantitative Performance Comparison

7.5.2. Module-Level Behavioural Impact

7.5.3. Error Typology Across Configurations

7.6. Limitations and Future Work

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Burnham, M. Stance detection: A practical guide to classifying political beliefs in text. Political Sci. Res. Methods 2024, 13, 611–628.

2. Sykora, M.; Elayan, S.; Hodgkinson, I.R.; Jackson, T.W.; West, A. The power of emotions: Leveraging user generated content for customer experience management. J. Bus. Res. 2022, 144, 997–1006.

3. Zhu, H.; Feng, D.; Chen, X. Introduction. In Social Identity and Discourses in Chinese Digital Communication; Routledge: London, UK, 2024; pp. 1–21.

4. Alonso, M.A.; Vilares, D.; Gómez-Rodríguez, C.; Vilares, J. Sentiment Analysis for Fake News Detection. Electronics 2021, 10, 1348.

5. Williamson, S.M.; Prybutok, V. The Era of Artificial Intelligence Deception: Unraveling the Complexities of False Realities and Emerging Threats of Misinformation. Information 2024, 15, 299.

6. Cheng, K.; Xue, X.; Chan, K. Zero emission electric vessel development. In Proceedings of the 2015 6th International Conference on Power Electronics Systems and Applications (PESA), Hong Kong, China, 15–17 December 2015; pp. 1–5.

7. Adesoga, T.O.; Olaiya, O.P.; Obani, O.Q.; Orji, M.C.U.; Orji, C.A.; Olagunju, O.D. Leveraging AI for transformative business development: Strategies for market analysis, customer insights, and competitive intelligence. Int. J. Sci. Res. Arch. 2024, 12, 799–805.

8. Omowole, B.M.; Olufemi-Phillips, A.Q.; Ofodile, O.C.; Eyo-Udo, N.L.; Ewim, S.E. Big data for SMEs: A review of utilization strategies for market analysis and customer insight. Int. J. Sch. Res. Multidiscip. Stud. 2024, 5, 1–18.

9. Lin, X.; Yang, T.; Law, S. From points to patterns: An explorative POI network study on urban functional distribution. Comput. Environ. Urban Syst. 2025, 117, 102246.

10. Yenduri, G.; Ramalingam, M.; Selvi, G.C.; Supriya, Y.; Srivastava, G.; Maddikunta, P.K.R.; Raj, G.D.; Jhaveri, R.H.; Prabadevi, B.; Wang, W.; et al. GPT (Generative Pre-Trained Transformer)—A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Future Directions. IEEE Access 2024, 12, 54608–54649.

11. Wang, H.; Zhao, H.; Li, B. Bridging Multi-Task Learning and Meta-Learning: Towards Efficient Training and Effective Adaptation. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; Volume 139, pp. 10991–11002.

12. Ouyang, W.; Gu, X.; Ye, L.; Liu, X.; Zhang, C. Exploring Hydrological Variable Interconnections and Enhancing Predictions for Data-Limited Basins Through Multi-Task Learning. Water Resour. Res. 2025, 61, e2023WR036593.

13. Alturayeif, N.; Luqman, H.; Ahmed, M. A systematic review of machine learning techniques for stance detection and its applications. Neural Comput. Appl. 2023, 35, 5113–5144.

14. Chan, K.H. Using admittance spectroscopy to quantify transport properties of P3HT thin films. J. Photonics Energy 2011, 1, 011112.

15. Rane, N.; Choudhary, S.P.; Rane, J. Ensemble deep learning and machine learning: Applications, opportunities, challenges, and future directions. Stud. Med. Health Sci. 2024, 1, 18–41.

16. Sakib, M.; Mustajab, S.; Alam, M. Ensemble deep learning techniques for time series analysis: A comprehensive review, applications, open issues, challenges, and future directions. Clust. Comput. 2024, 28, 73.

17. Munmun, Z.S.; Akter, S.; Parvez, C.R. Machine Learning-Based Classification of Coronary Heart Disease: A Comparative Analysis of Logistic Regression, Random Forest, and Support Vector Machine Models. OALib 2025, 12, e13054.

18. Avci, C.; Budak, M.; Yağmur, N.; Balçık, F. Comparison between random forest and support vector machine algorithms for LULC classification. Int. J. Eng. Geosci. 2023, 8, 1–10.

19. Sharma, A.; Prakash, C.; Manivasagam, V. Entropy-Based Hybrid Integration of Random Forest and Support Vector Machine for Landslide Susceptibility Analysis. Geomatics 2021, 1, 399–416.

20. Li, S.; Shi, W. Incorporating Multiple Textual Factors into Unbalanced Financial Distress Prediction: A Feature Selection Methods and Ensemble Classifiers Combined Approach. Int. J. Comput. Intell. Syst. 2023, 16, 162.

21. Huang, X.; Chan, K.H.; Wu, W.; Sheng, H.; Ke, W. Fusion of Multi-Modal Features to Enhance Dense Video Caption. Sensors 2023, 23, 5565.

22. Liu, J.; Liu, Z.; Li, Q.; Kong, W.; Li, X. Multi-Domain Controversial Text Detection Based on a Machine Learning and Deep Learning Stacked Ensemble. Mathematics 2025, 13, 1529.

23. Jamialahmadi, S.; Sahebi, I.; Sabermahani, M.M.; Shariatpanahi, S.P.; Dadlani, A.; Maham, B. Rumor Stance Classification in Online Social Networks: The State-of-the-Art, Prospects, and Future Challenges. IEEE Access 2022, 10, 113131–113148.

24. Alturayeif, N.; Luqman, H.; Ahmed, M. Enhancing stance detection through sequential weighted multi-task learning. Soc. Netw. Anal. Min. 2023, 14, 7.

25. Pattun, G.; Kumar, P. Feature Engineering Trends in Text-Based Affective Computing: Rules to Advance Deep Learning Models. Int. Res. J. Multidiscip. Technovation 2025, 7, 87–107.

26. Im, S.; Chan, K. Vector quantization using k-means clustering neural network. Electron. Lett. 2023, 59, e12758.

27. Fitz, S.; Romero, P. Neural Networks and Deep Learning: A Paradigm Shift in Information Processing, Machine Learning, and Artificial Intelligence. In The Palgrave Handbook of Technological Finance; Springer International Publishing: Cham, Switzerland, 2021; pp. 589–654.

28. Borisov, V.; Leemann, T.; Seßler, K.; Haug, J.; Pawelczyk, M.; Kasneci, G. Deep Neural Networks and Tabular Data: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 7499–7519.

29. Chan, K.H.; Im, S.K. Using Four Hypothesis Probability Estimators for CABAC in Versatile Video Coding. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 40.

30. Dai, G.; Liao, J.; Zhao, S.; Fu, X.; Peng, X.; Huang, H.; Zhang, B. Large Language Model Enhanced Logic Tensor Network for Stance Detection. Neural Netw. 2025, 183, 106956.

31. Im, S.K.; Chan, K.H. More Probability Estimators for CABAC in Versatile Video Coding. In Proceedings of the 2020 IEEE 5th International Conference on Signal and Image Processing (ICSIP), Nanjing, China, 23–25 October 2020; pp. 366–370.

32. Graves, A. Long Short-Term Memory. In Supervised Sequence Labelling with Recurrent Neural Networks; Springer: Berlin/Heidelberg, Germany, 2012; pp. 37–45.

33. Cho, K.; van Merrienboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder–Decoder Approaches. In Proceedings of SSST-8, Eighth Workshop on Syntax, Semantics and Structure in Statistical Translation; Association for Computational Linguistics: Stroudsburg, PA, USA, 2014.

34. Chan, K.H.; Ke, W.; Im, S.K. CARU: A Content-Adaptive Recurrent Unit for the Transition of Hidden State in NLP. In Neural Information Processing; Springer International Publishing: Cham, Switzerland, 2020; pp. 693–703.

35. Aljrees, T.; Cheng, X.; Ahmed, M.M.; Umer, M.; Majeed, R.; Alnowaiser, K.; Abuzinadah, N.; Ashraf, I. Fake news stance detection using selective features and FakeNET. PLoS ONE 2023, 18, e0287298.

36. Zhang, H.; Li, Y.; Zhu, T.; Li, C. Commonsense-based adversarial learning framework for zero-shot stance detection. Neurocomputing 2024, 563, 126943.

37. Raiaan, M.A.K.; Mukta, M.S.H.; Fatema, K.; Fahad, N.M.; Sakib, S.; Mim, M.M.J.; Ahmad, J.; Ali, M.E.; Azam, S. A Review on Large Language Models: Architectures, Applications, Taxonomies, Open Issues and Challenges. IEEE Access 2024, 12, 26839–26874.

38. Dong, L.; Su, Z.; Fu, X.; Zhang, B.; Dai, G. Implicit Stance Detection with Hashtag Semantic Enrichment. Mathematics 2024, 12, 1663.

39. Li, Y.; Sun, Y.; Zhu, N. BERTtoCNN: Similarity-preserving enhanced knowledge distillation for stance detection. PLoS ONE 2021, 16, e0257130.

40. Im, S.K.; Chan, K.H. Multi-lambda search for improved rate-distortion optimization of H.265/HEVC. In Proceedings of the 2015 10th International Conference on Information, Communications and Signal Processing (ICICS), Singapore, 2–4 December 2015; pp. 1–5.

41. Elforaici, M.E.A.; Azzi, F.; Trudel, D.; Nguyen, B.; Montagnon, E.; Tang, A.; Turcotte, S.; Kadoury, S. Cell-Level GNN-Based Prediction of Tumor Regression Grade in Colorectal Liver Metastases From Histopathology Images. In Proceedings of the 2024 IEEE International Symposium on Biomedical Imaging (ISBI), Athens, Greece, 27–30 May 2024; pp. 1–5.

42. Chen, S.; Zhang, Y.; Yang, Q. Multi-Task Learning in Natural Language Processing: An Overview. ACM Comput. Surv. 2024, 56, 295.

43. Pham, V.H.S.; Tran, L.A.; Bui, D.K.; Nguyen, Q.T. Artificial Intelligence in Construction Safety Risk Management: A Comprehensive Review and Future Research Perspectives. In International Conference on Civil Engineering and Architecture, Proceedings of 7th International Conference on Civil Engineering and Architecture, Da Nang, Vietnam, 7–9 December 2024; Springer Nature: Singapore, 2025; Volume 2, pp. 212–221.

44. Zhang, Y.; Ma, D.; Tiwari, P.; Zhang, C.; Masud, M.; Shorfuzzaman, M.; Song, D. Stance-level Sarcasm Detection with BERT and Stance-centered Graph Attention Networks. ACM Trans. Internet Technol. 2023, 23, 1–21.

45. Ke, W.; Chan, K.H. A Multilayer CARU Framework to Obtain Probability Distribution for Paragraph-Based Sentiment Analysis. Appl. Sci. 2021, 11, 11344.

46. Benbakhti, B.; Kalna, K.; Chan, K.; Towie, E.; Hellings, G.; Eneman, G.; De Meyer, K.; Meuris, M.; Asenov, A. Design and analysis of the As implant-free quantum-well device structure. Microelectron. Eng. 2011, 88, 358–361.

47. Zhang, Y.; Yu, Y.; Zhao, D.; Li, Z.; Wang, B.; Hou, Y.; Tiwari, P.; Qin, J. Learning Multitask Commonness and Uniqueness for Multimodal Sarcasm Detection and Sentiment Analysis in Conversation. IEEE Trans. Artif. Intell. 2024, 5, 1349–1361.

48. Harris, S.; Hadi, H.J.; Ahmad, N.; Alshara, M.A. Fake News Detection Revisited: An Extensive Review of Theoretical Frameworks, Dataset Assessments, Model Constraints, and Forward-Looking Research Agendas. Technologies 2024, 12, 222.

49. Lan, X.; Gao, C.; Jin, D.; Li, Y. Stance Detection with Collaborative Role-Infused LLM-Based Agents. In Proceedings of the International AAAI Conference on Web and Social Media, Buffalo, NY, USA, 3–6 June 2024; Volume 18, pp. 891–903.

50. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019.

51. Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The Performance of LSTM and BiLSTM in Forecasting Time Series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 3285–3292.

52. Chan, K.H.; Im, S.K. BI-CARU Feature Extraction for Semantic Analysis. In Proceedings of the 2022 5th International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 24–25 August 2022; pp. 183–187.

53. Kodati, D.; Tene, R. Advancing mental health detection in texts via multi-task learning with soft-parameter sharing transformers. Neural Comput. Appl. 2024, 37, 3077–3110.

54. Liu, J.; Cui, P. Data Heterogeneity Modeling for Trustworthy Machine Learning. In Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining V.2, Toronto, ON, Canada, 3–7 August 2025; pp. 6086–6095.

55. Chan, K.H.; Ke, W.; Im, S.K. A General Method for Generating Discrete Orthogonal Matrices. IEEE Access 2021, 9, 120380–120391.

56. Kang, L.; Yao, J.; Du, R.; Ren, L.; Liu, H.; Xu, B. A Stance Detection Model Based on Sentiment Analysis and Toxic Language Detection. Electronics 2025, 14, 2126.

57. Das, R.; Singh, T.D. Multimodal Sentiment Analysis: A Survey of Methods, Trends, and Challenges. ACM Comput. Surv. 2023, 55, 270.

58. Ansel, J.; Yang, E.; He, H.; Gimelshein, N.; Jain, A.; Voznesensky, M.; Bao, B.; Bell, P.; Berard, D.; Burovski, E.; et al. PyTorch 2: Faster Machine Learning Through Dynamic Python Bytecode Transformation and Graph Compilation. In Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 2 (ASPLOS ’24), La Jolla, CA, USA, 27 April–1 May 2024.

59. Kim, Y. Convolutional Neural Networks for Sentence Classification. arXiv 2014.

60. Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of Tricks for Efficient Text Classification. arXiv 2016.

61. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018.

62. Pu, Q.; Li, F. RoBERTa-BiLSTM: A Chinese Stance Detection Model. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 29–31 March 2024; pp. 2199–2203.

63. Pu, Q.; Huang, F.; Li, F.; Wei, J.; Jiang, S. Integrating Emotional Features for Stance Detection Aimed at Social Network Security: A Multi-Task Learning Approach. Electronics 2025, 14, 186.

| Task | Dataset | Label Types | Sample Count | Source |
|---|---|---|---|---|
| Stance Detection | NLPCC2016-task4 | support, oppose, neutral | 4,000 | NLPCC2016 |
| Stance Detection | PKU-2020 | favor, against, neutral | 6,500 | PKU Weibo Corpus |
| Stance Detection | SemEval-2016 (Twitter) | favor, against, neutral | 4,870 | SemEval-2016 Task 6 |
| Sentiment Analysis | weibo_senti_100k | positive, negative | 119,988 | OpenWeibo |

| Parameter | Value | Description |
|---|---|---|
| Optimiser | AdamW | Adaptive weight decay optimiser |
| Learning Rate | 0.001 | Initial learning rate |
| $\beta_1$ | 0.5 | First moment coefficient |
| $\beta_2$ | 0.999 | Second moment coefficient |
| Scheduler | Cosine Annealing + Warm-up | Learning rate scheduling strategy |
| Batch Size | 32 per GPU | Number of samples per GPU |
| Epochs | 100 | Total training iterations |
| Normalisation | Group Normalisation | Applied to all layers |
| Weight Decay | | Applied to encoder only |
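
The scheduler row above (cosine annealing with warm-up) can be sketched as a function of the training step. Only the initial learning rate of 0.001 comes from the table; the linear warm-up shape, the warm-up length of 100 steps, the total of 1000 steps, and the floor of zero are assumptions made for the illustration.

```python
import math

def lr_at(step, total_steps, base_lr=0.001, warmup_steps=100, min_lr=0.0):
    """Linear warm-up to base_lr, then cosine decay towards min_lr."""
    if step < warmup_steps:
        # Warm-up phase: ramp linearly so that the last warm-up step hits base_lr.
        return base_lr * (step + 1) / warmup_steps
    # Cosine phase: progress runs from 0 (end of warm-up) to 1 (end of training).
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Hypothetical run of 1000 optimisation steps.
schedule = [lr_at(s, total_steps=1000) for s in range(1001)]
```

Under these assumptions the rate peaks at 0.001 when warm-up ends, passes through 0.0005 halfway through the cosine phase, and reaches the floor at the final step.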

| | Model | | | | | |
|---|---|---|---|---|---|---|
| Baseline | TextCNN [59] | 0.6351 | 0.6309 | 0.6392 | 0.6264 | 0.6525 |
| Baseline | FastText [60] | 0.6334 | 0.6152 | 0.6516 | 0.6385 | 0.6285 |
| Baseline | BERT [61] | 0.7579 | 0.7547 | 0.7610 | 0.7727 | 0.7448 |
| Advanced | RoBERTa [50] | 0.7652 | 0.7616 | 0.7687 | 0.7819 | 0.7501 |
| Advanced | RoBERTa-BiLSTM [62] | 0.7711 | 0.7538 | 0.7883 | 0.7801 | 0.7631 |
| Advanced | MTL-RoBERTa-BiLSTM [63] | 0.7872 | 0.7885 | 0.7860 | 0.7842 | 0.7818 |
| Proposed | CARU-MTL | 0.7748 | 0.7662 | 0.8034 | 0.7594 | 0.7902 |
| Proposed | BiCARU-MTL | 0.7886 | 0.7881 | 0.8091 | 0.7652 | 0.7920 |

| Comparison | Test | Statistic | p-Value |
|---|---|---|---|
| BiCARU-MTL vs. RoBERTa-BiLSTM | McNemar’s test | | <0.001 |
| BiCARU-MTL vs. TextCNN | Paired t-test | | |
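
For readers unfamiliar with McNemar’s test, the sketch below shows how the statistic and p-value are obtained from the two discordant counts of a paired comparison. The counts are hypothetical and do not come from the experiments; the continuity-corrected chi-square form with one degree of freedom is one common variant and is assumed here.

```python
import math

def mcnemar(b, c, continuity=True):
    """McNemar's chi-square test on discordant pairs.

    b: samples model A classifies correctly and model B incorrectly.
    c: samples model A classifies incorrectly and model B correctly.
    Returns (statistic, p_value).
    """
    if b + c == 0:
        return 0.0, 1.0
    diff = abs(b - c) - (1.0 if continuity else 0.0)
    stat = max(diff, 0.0) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value

# Hypothetical discordant counts, chosen only to illustrate the arithmetic.
stat, p_value = mcnemar(b=60, c=25)
```

Only the samples on which the two models disagree enter the statistic, which is why the test suits paired classifier comparisons on a shared test set.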

| | Model | | | | | |
|---|---|---|---|---|---|---|
| Baseline | TextCNN [59] | 0.7015 | 0.6942 | 0.7038 | 0.7065 | 0.6981 |
| Advanced | RoBERTa-BiLSTM [62] | 0.7428 | 0.7391 | 0.7482 | 0.7410 | 0.7445 |
| Proposed | BiCARU-MTL | 0.7642 | 0.7675 | 0.7619 | 0.7632 | 0.7651 |

| | Model | | | | | |
|---|---|---|---|---|---|---|
| Advanced | RoBERTa-BiLSTM [62] | 0.598 | 0.601 | 0.592 | 0.602 | 0.596 |
| Proposed | BiCARU-MTL (zero-shot) | 0.612 | 0.617 | 0.609 | 0.610 | 0.613 |

| Error Type | Frequency (%) | Description |
|---|---|---|
| Favor vs. None Confusion | 37% | Implicit support, sarcasm, rhetorical ambiguity |
| Sentiment–Stance Mismatch | 28% | Emotional polarity conflicts with stance label |
| Topic Drift | 21% | Multiple targets or mid-sentence context shift |
| Tokenisation Artifacts | 14% | Informal language disrupts semantic segmentation |

| Configuration | Precision | Recall | F1-Score |
|---|---|---|---|
| Full Model | 80.42 | 79.15 | 79.72 |
| Sentiment Head Removed | 77.21 | 76.48 | 76.84 |
| Gradient Normalisation Disabled | 78.03 | 77.12 | 77.57 |
| Fixed Task Weights | 78.42 | 77.65 | 78.03 |
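
As a consistency check on the ablation table, F1 can be recomputed as the harmonic mean of the reported precision and recall. Whether the F1-Score column was produced this way, rather than by averaging class-wise F1 values, is an assumption; the variable and function names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# (precision, recall) pairs copied from the ablation table, in percent.
ABLATION = {
    "Full Model": (80.42, 79.15),
    "Sentiment Head Removed": (77.21, 76.48),
    "Gradient Normalisation Disabled": (78.03, 77.12),
    "Fixed Task Weights": (78.42, 77.65),
}

recomputed = {name: round(f1_score(p, r), 2) for name, (p, r) in ABLATION.items()}
for name, value in recomputed.items():
    print(f"{name}: {value:.2f}")
```

Under this assumption the three ablated configurations reproduce the tabulated values (76.84, 77.57, 78.03), whereas the Full Model row yields 79.78 against the reported 79.72; the gap may reflect class-wise averaging or rounding of the inputs.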

| Module Removed | Attention Shift | Convergence Stability | Adaptability |
|---|---|---|---|
| Sentiment Head | Towards syntax | Stable | Low |
| Gradient Normalisation | Dispersed | Volatile | Moderate |
| Fixed Task Weights | Balanced | Stable | Low |

| Error Type | Full Model | No Sentiment Head | No Gradient Normalisation |
|---|---|---|---|
| Emotionally Misleading | 12% | 30% | 14% |
| Contextual Ambiguity | 18% | 22% | 30% |
| Topic Drift | 10% | 12% | 16% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Im, S.-K.; Chan, K.-H. Modular Multi-Task Learning for Emotion-Aware Stance Inference in Online Discourse. Mathematics 2025, 13, 3287. https://doi.org/10.3390/math13203287
