1. Introduction

In winter, images of high-voltage power transmission lines (HPTLs) often suffer from haze, which makes subsequent vision tasks hard to perform effectively. Therefore, single-image dehazing of HPTL images is an important preprocessing step for the power system.

Image dehazing remains a significant research challenge in machine vision. For HPTL images, the heavy fog is non-homogeneous because of its spatially random distribution and varying concentration. Therefore, a strongly nonlinear model is necessary to restore clear images from non-homogeneous haze. A more challenging issue is that hazy HPTL images lack paired clear labels, which are essential for supervised learning.

Previous works on domain adaptation for single-image dehazing show that unsupervised learning approaches are often tailored to the specific scenarios of the hazy images through specialized neural network architectures and knowledge transfer mechanisms. The key distinguishing characteristics of HPTL images are their structural features, which appear as lines, corners, and topological relationships. In addition to the fundamental challenges above, these structural characteristics introduce further complexity to the dehazing task.

To address these dehazing challenges, this paper proposes a novel dehazing neural network named FIF-RSCT-Net. Leveraging the characteristics of HPTL images, the network incorporates a Feature Intersection Fusion (FIF) mechanism and a Residual Separable Convolution Transformer (RSCT) module to enhance feature representation and reconstruction performance. FIF-RSCT-Net acquires dehazing knowledge through two stages: a supervised dehazing stage using labeled images from the original domain, and an unsupervised iterative learning stage that transfers the learned dehazing knowledge to unlabeled HPTL images. The principal contributions of this work are summarized as follows:

(1) In FIF-RSCT-Net, an RSCT module is presented to model the topological relationships of the image. This module employs separable convolutions to generate the Query, Key, and Value matrices of the Transformer, significantly improving computational efficiency. Furthermore, an FIF module is introduced to deeply fuse the features extracted by RSCT, which can improve the transferability of the model across diverse domains.

(2) A novel Spatial–Channel Feature Intersection (SCFI) module is proposed for FIF-RSCT-Net to enhance the extraction of line and point features in shallow convolutional layers. This module incorporates a Channel Feature Intersection (CFI) method, which stacks the weighted channel features alternately to improve network convergence.

(3) An iterative learning mechanism guided by a Line Segment Detector (LSD) loss function is designed to effectively transfer the learned dehazing knowledge from the labeled domain to unlabeled HPTL images. The unsupervised iterative learning method can improve the restoration of hazy HPTL images.

The remaining sections are structured as follows: Section 2 reviews the background and related works; Section 3 details the architecture of the proposed FIF-RSCT-Net; Section 4 explains the unsupervised iterative learning mechanism for dehazing knowledge transfer; Section 5 presents the experiments and analyzes the results; and Section 6 concludes the paper.

2. Background and Related Works

In the field of single-image dehazing, traditional methods typically operate without requiring paired labels for model training. A significant milestone was established by He et al. in 2009, who proposed the Dark Channel Prior (DCP) [1] method. DCP is based on the classical atmospheric scattering model (ASM) and exploits the dark channel prior to estimate the transmission map of the ASM. Subsequently, many methods built on DCP and introduced other information, such as contextual regularization [2], the Rank-One Prior [3], and the saturation line prior [4]. These traditional methods rely on handcrafted physical models and priors; hence, they struggle to describe the strongly nonlinear characteristics of the dehazing process.
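To make the ASM and the dark channel prior concrete, the NumPy sketch below follows the standard formulation of He et al.: the hazy image is modeled as $I(x) = J(x)t(x) + A(1 - t(x))$, and the transmission is estimated as $t(x) = 1 - \omega \min_{y \in \Omega(x)} \min_c I_c(y)/A_c$. The 15 × 15 patch, ω = 0.95, and the lower bound t0 = 0.1 are the values commonly used with DCP; the atmospheric light A is assumed to be known rather than estimated, and the function names are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Per-pixel minimum over the color channels, then a min-filter over a square patch."""
    min_rgb = img.min(axis=2)                                # (H, W)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")               # keeps the output at H x W
    windows = sliding_window_view(padded, (patch, patch))    # (H, W, patch, patch)
    return windows.min(axis=(2, 3))


def dcp_transmission(hazy: np.ndarray, airlight: np.ndarray,
                     omega: float = 0.95, patch: int = 15) -> np.ndarray:
    """t(x) = 1 - omega * dark_channel(I / A); airlight has shape (3,)."""
    return 1.0 - omega * dark_channel(hazy / airlight, patch)


def recover_scene(hazy: np.ndarray, airlight: np.ndarray,
                  t: np.ndarray, t0: float = 0.1) -> np.ndarray:
    """Invert the ASM I = J*t + A*(1 - t): J = (I - A) / max(t, t0) + A."""
    t = np.maximum(t, t0)[..., None]                         # broadcast over channels
    return (hazy - airlight) / t + airlight


# Usage: synthesize a hazy image with the ASM, then invert it with the true t.
rng = np.random.default_rng(0)
clear = rng.uniform(0.2, 0.8, size=(20, 20, 3))
airlight = np.array([0.9, 0.9, 0.9])
t_true = np.full((20, 20), 0.6)
hazy = clear * t_true[..., None] + airlight * (1.0 - t_true[..., None])
restored = recover_scene(hazy, airlight, t_true)
t_est = dcp_transmission(hazy, airlight)
```

Because the synthetic haze here has a constant transmission, inverting the ASM with the true t recovers the clear image; with real non-homogeneous haze, the result depends on how well the prior estimates t.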

Deep learning demonstrates powerful nonlinear modeling capacity, which has led to tremendous success [5,6]; hence, it has been introduced into single-image dehazing. The fundamental architectures include convolutional neural networks (CNNs) [7,8,9,10,11,12,13,14] and transformers [15,16]. Researchers have developed numerous frameworks, including DehazeNet [17], multi-scale CNNs [18,19,20,21], Ranking-CNN [22], cascaded CNNs [23,24], Color Transferred CNN [25], the All-in-One Dehazing Network [26], Light-DehazeNet [27], and quaternion neural networks [27]. The application scenarios [28,29,30] encompass remote sensing hazy images [31,32,33,34,35], underwater images [36,37,38], autonomous driving [39,40,41], coal mining [42], and image restoration [43,44].

The aforementioned deep learning methods all require clear labels for supervised training. However, obtaining clean images to serve as labels for hazy HPTL images is often impractical in real-world scenarios. Consequently, the core challenge in HPTL single-image dehazing lies in developing a deep neural network-based method that restores images without paired labels.

In recent years, research has progressively shifted toward unsupervised dehazing techniques to overcome this limitation. These methods primarily fall into two categories: generative adversarial network (GAN)-based frameworks and domain adaptation approaches.

Within the GAN framework, clear images are synthesized by the generator, while the discriminator evaluates their authenticity against the characteristics of real clear images. However, the generator may produce plausible clear images without being rigidly bound to the details of the input hazy images. Hence, prior knowledge is introduced into the GAN to guide clear image generation: DFP-Net [45] incorporates the dark channel prior, and UIDF-Net [46] applies frequency information. Similar approaches have been extensively explored in [47,48,49,50,51]. Essentially, GAN-based methods still depend on a form of supervision, since they need clear images as the reference criterion for the discriminator.

Domain adaptation for image dehazing follows a two-stage process. First, the model is trained on hazy images with paired ground-truth labels in the original domain. Then, the learned dehazing knowledge is transferred to the unlabeled target domain. Hence, the core challenge lies in designing an effective knowledge transfer mechanism to bridge the domain gap. To address this issue, the DCM-dehaze method [52] establishes a bidirectional contour constraint optimization to enhance the feature capture ability from the pretrained domain to the target domain. TOLPnet [53] presents a Typhoon Optimization algorithm to optimize the hyperparameters of a CNN model for knowledge transfer. In [54], transfer learning is separated into two stages, combining synthetic-to-real domain adaptation with degraded-to-clear domain transformation. UCL-Dehaze [55] applies patch-wise and pixel-wise contrastive learning for unsupervised transfer. In Haze Style Transfer (HST) [56], multiple hazy-style domains are defined by K-means clustering, and dehazing is treated as a special instance of HST that transfers a hazy style into the haze-free style. In ContourDCP [57], the training labels for the dehazing model are reformulated from the dark channel prior through a contour-based approach.

For the non-homogeneous hazy images of HPTL, the neural network architecture can incorporate structural features into the knowledge transfer mechanism to enhance the restoration of power lines. However, under the hazy conditions of HPTL, these critical features frequently suffer from severe degradation or even complete disappearance. Consequently, the core challenge in unsupervised HPTL image dehazing lies in augmenting a pretrained model’s capacity to (1) effectively extract and reconstruct the critical line, point, and topological features, and (2) maintain strong domain adaptability for HPTL scenarios. These two aspects represent the key contributions that distinguish our work from existing methods.

3. Architecture

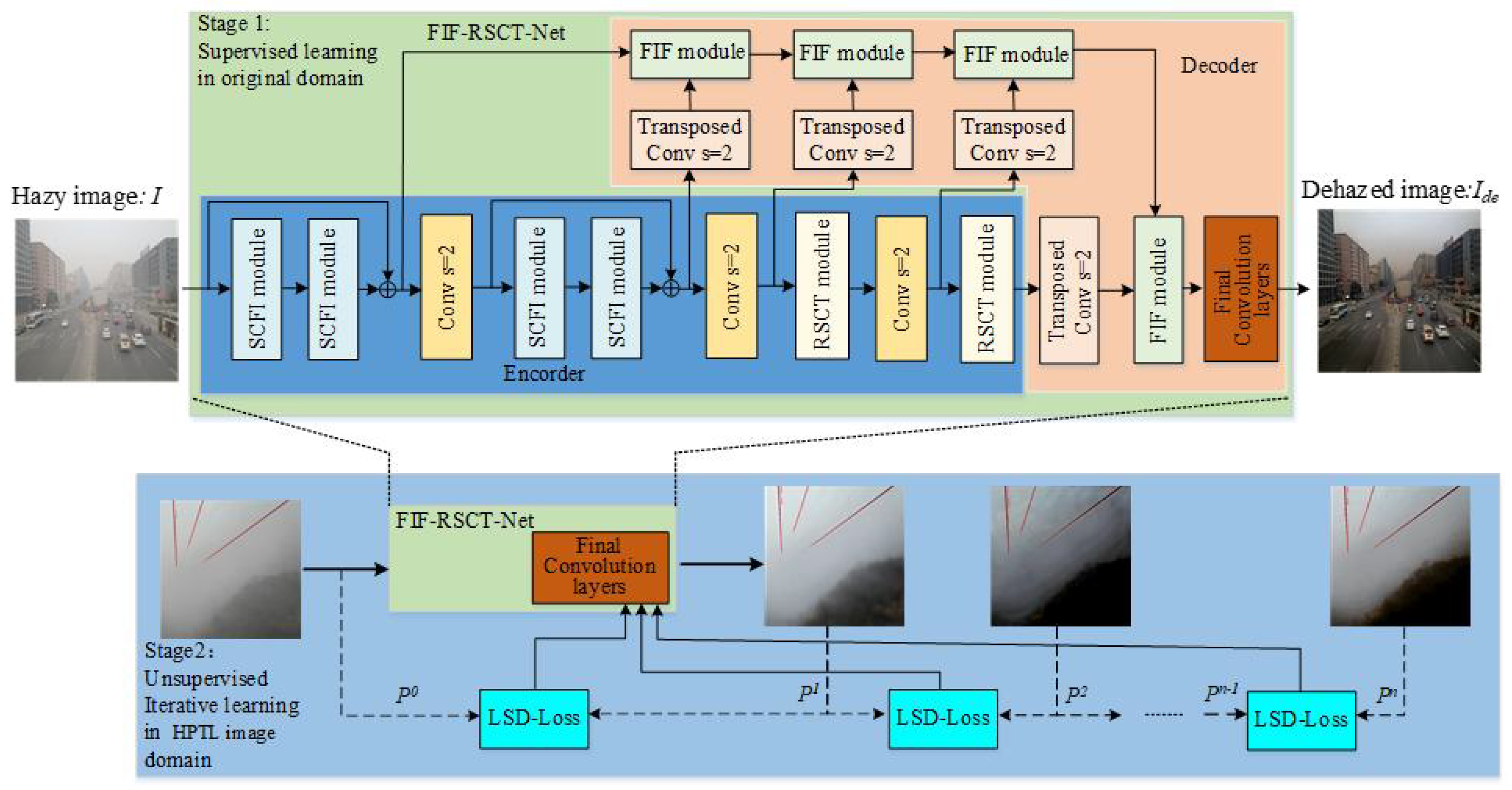

The proposed HPTL image dehazing neural network, FIF-RSCT-Net, is illustrated in Figure 1. Its dehazing knowledge is acquired through a supervised learning stage and an unsupervised iterative learning stage.

First, in the supervised learning stage, FIF-RSCT-Net is trained in the labeled original image domain. Then, in the unsupervised iterative learning stage, the final convolution layers are fine-tuned iteratively under the guidance of the LSD loss function, which transfers the dehazing knowledge to the HPTL image domain.

The critical challenge of FIF-RSCT-Net for HPTL image dehazing lies in enhancing the model’s capability to capture generalizable dehazing knowledge, while ensuring that the learned knowledge can be readily transferred to the HPTL image domain.

To address this issue, the SCFI, RSCT, and FIF modules are proposed and integrated into the classical encoder–decoder structure of FIF-RSCT-Net. In the encoder, the shallow layers consist of paired SCFI modules that form a residual network to jointly extract spatial and channel features, while the deep layers are composed of RSCT modules. The FIF modules are applied to fuse the extracted features and restore the clear image.

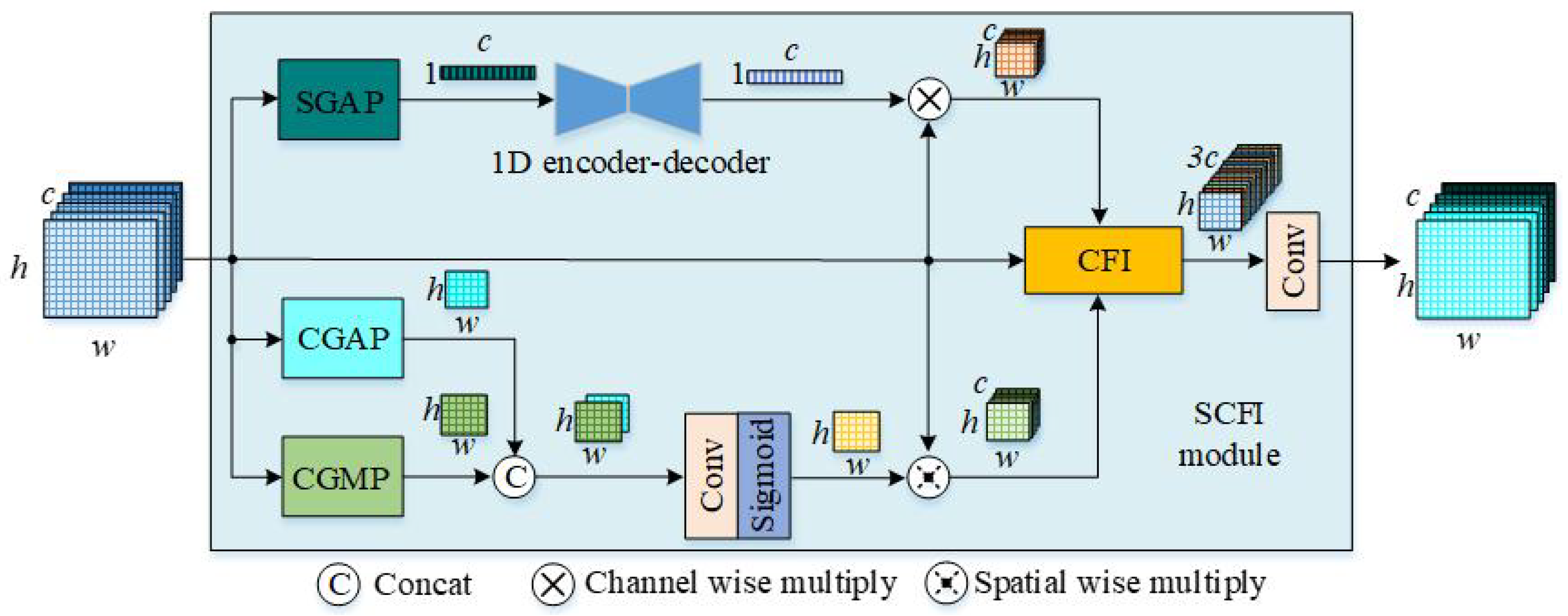

3.1. SCFI Module

In a hazy HPTL image, the shallow features are typically explicit representations of line structures and point features. These low-level features serve as crucial guidance for the decoder during clear image reconstruction. Notably, such features demonstrate strong domain adaptability, making them particularly transferable to the target domain. Motivated by this observation, this paper proposes the SCFI module, whose structure is detailed in Figure 2.

Since a convolutional network provides a straightforward approach to mapping spatial representations into channel dimensions, the primary concept of the SCFI module is to integrate spatial and channel-wise features, for which a weighted fusion mechanism is proposed.

The channel weights are generated by spatial global average pooling (SGAP) followed by a 1D encoder–decoder. SGAP computes the mean activation of each channel, which describes how the average energy is distributed across the channels. The 1D encoder–decoder network then transforms these channel means into weight coefficients.

The spatial weights are derived from a dual-branch pooling mechanism composed of channel global maximum pooling (CGMP) and channel global average pooling (CGAP). These complementary operations extract the peak and mean activations across the channels at each spatial position. The two pooled maps are concatenated to form spatial energy descriptors, which are converted into weight coefficients by a convolution and a sigmoid activation function. The channel and spatial coefficients are applied to the input feature, and the resulting channel-weighted and spatial-weighted features are then fused by the CFI module.
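A forward-pass sketch of the two weighting branches is given below in NumPy. It is an illustration under stated assumptions rather than the authors' implementation: the 1D encoder–decoder is assumed to be a two-layer bottleneck with reduction ratio `r`, ReLU, and a sigmoid output; the spatial branch is assumed to use one k × k convolution over the two pooled maps; and all weights are random placeholders.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_weights(feat, w_enc, w_dec):
    """feat: (C, H, W). SGAP -> assumed bottleneck encoder-decoder -> sigmoid, shape (C,)."""
    channel_means = feat.mean(axis=(1, 2))                 # SGAP: one mean per channel
    hidden = np.maximum(w_enc @ channel_means, 0.0)        # encoder C -> C/r, ReLU
    return sigmoid(w_dec @ hidden)                         # decoder C/r -> C


def spatial_weights(feat, kernel):
    """CGMP + CGAP over channels -> concat -> k x k conv (2 in, 1 out) -> sigmoid, shape (H, W)."""
    descriptors = np.stack([feat.max(axis=0), feat.mean(axis=0)])         # (2, H, W)
    p = kernel.shape[-1] // 2
    padded = np.pad(descriptors, ((0, 0), (p, p), (p, p)))                # zero padding
    windows = sliding_window_view(padded, kernel.shape[1:], axis=(1, 2))  # (2, H, W, k, k)
    return sigmoid(np.einsum("chwij,cij->hw", windows, kernel))


# Usage with random placeholder weights (reduction ratio r and kernel size k are assumed).
rng = np.random.default_rng(0)
C, H, W, r, k = 16, 8, 8, 4, 7
feat = rng.standard_normal((C, H, W))
w_c = channel_weights(feat, rng.standard_normal((C // r, C)), rng.standard_normal((C, C // r)))
w_s = spatial_weights(feat, rng.standard_normal((2, k, k)))
channel_weighted = feat * w_c[:, None, None]    # first input to the CFI module
spatial_weighted = feat * w_s[None, :, :]       # second input to the CFI module
```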

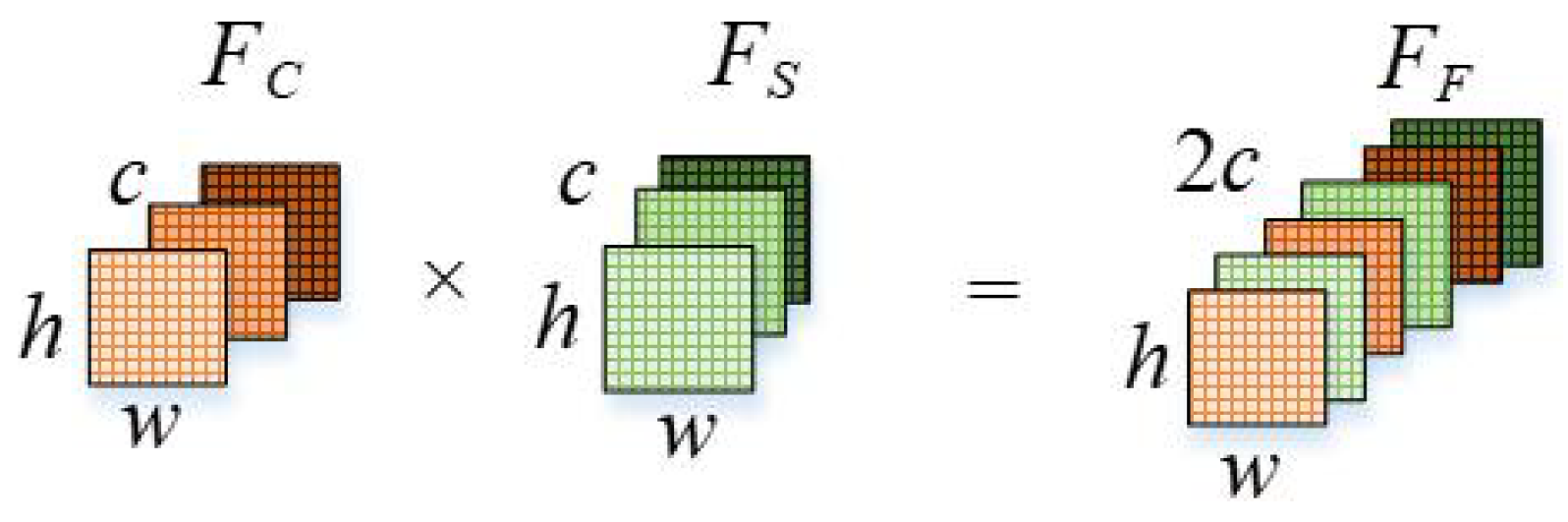

3.2. CFI Module

The CFI module is motivated by deep feature fusion. The channel-weighted and spatial-weighted features constitute distinct, complementary representations of the original feature in different feature spaces. Hence, the feature fusion operation essentially maps the channel and spatial feature spaces into an integrated feature space.

Common fusion approaches typically employ simple concatenation, where one weighted feature is appended behind the other to form the combined feature. However, this method leads to abrupt gradient transitions at the feature boundaries. From a theoretical perspective, such a discontinuity poses significant modeling challenges; hence, the fusion network can be hard to converge during training.

To address these limitations, this paper introduces the CFI module, which implements a more sophisticated fusion strategy, as illustrated in Figure 3.

The feature fusion is implemented through an interleaved channel stacking strategy. This mechanism smooths the transitions between the distinct channel and spatial feature spaces. The smoothly fused features provide a more stable gradient flow during backpropagation, which improves the convergence of the network.
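The difference between plain concatenation and interleaved channel stacking is easiest to see on channel indices. The NumPy sketch below assumes that interleaving alternates single channels from the two weighted features (c0, s0, c1, s1, ...); the grouping in Figure 3 may differ, and the function names are hypothetical.

```python
import numpy as np


def concat_fusion(chan_feat, spat_feat):
    """Conventional fusion: all channel-weighted maps, then all spatial-weighted maps."""
    return np.concatenate([chan_feat, spat_feat], axis=0)


def interleave_fusion(chan_feat, spat_feat):
    """Assumed CFI-style fusion: alternate channels as c0, s0, c1, s1, ..."""
    assert chan_feat.shape == spat_feat.shape
    c, h, w = chan_feat.shape
    stacked = np.stack([chan_feat, spat_feat], axis=1)   # (C, 2, H, W)
    return stacked.reshape(2 * c, h, w)                  # (2C, H, W)


# Tag each channel with its index so the ordering is visible.
chan = np.arange(3)[:, None, None] * np.ones((3, 2, 2))          # channels 0, 1, 2
spat = (10 + np.arange(3))[:, None, None] * np.ones((3, 2, 2))   # channels 10, 11, 12
print(concat_fusion(chan, spat)[:, 0, 0])      # [ 0.  1.  2. 10. 11. 12.]
print(interleave_fusion(chan, spat)[:, 0, 0])  # [ 0. 10.  1. 11.  2. 12.]
```

Both layouts contain the same maps; the interleaved layout places each channel-weighted map next to its spatial-weighted counterpart instead of separating the two feature spaces into two contiguous blocks.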

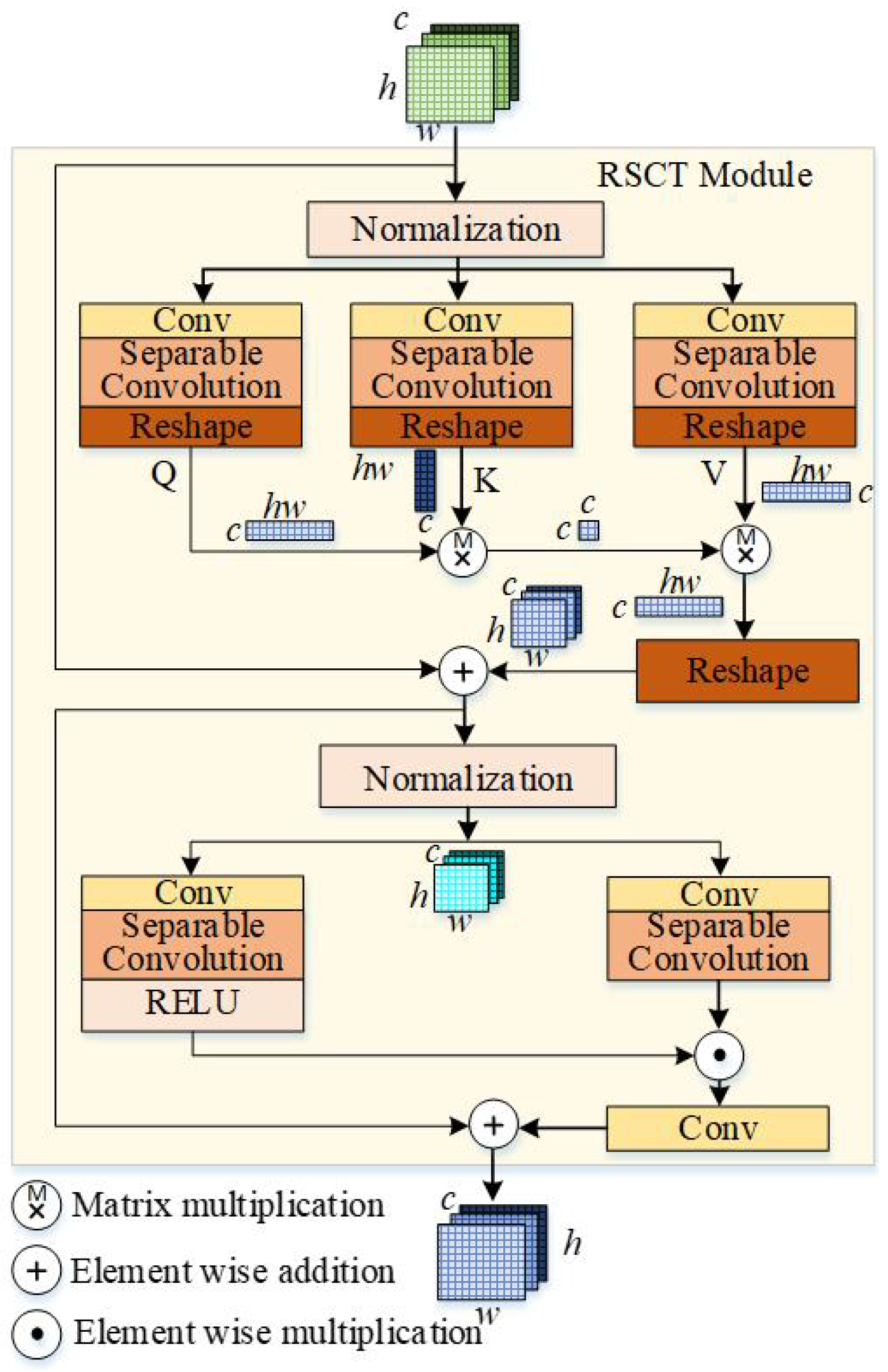

3.3. RSCT Module

The deep features in the encoder inherently describe global relationships. Therefore, the topological features of the HPTL image can be explicitly represented in the deep layers of the encoder. The Transformer models such global dependencies effectively through the self-attention mechanism, which operates on trainable Query (Q), Key (K), and Value (V) matrices. Generally, these matrices are produced by linear projections whose weights are randomly initialized. However, for HPTL single-image dehazing, Q, K, and V demand a more sophisticated feature representation to ensure dehazing knowledge transfer. Consequently, the RSCT module is proposed in this section for this purpose. Its structure is shown in Figure 4.

The primary innovation of the RSCT module lies in the reconstruction of the Q, K, and V matrices, whose generating projections are replaced by separable convolutions.

Convolution operations inherently capture local relationships in the input. Hence, the convolution-generated Q, K, and V encode local structures such as lines and corners, and self-attention relates these local structures globally, so that together they can represent the topological information of the HPTL image. Furthermore, the dual residual connections in the RSC-Transformer alleviate gradient vanishing and explosion during training. Therefore, the RSCT module can converge more quickly, which implies that the dehazing knowledge it represents can be transferred more easily from the original domain to the HPTL image domain.
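The following single-head NumPy sketch shows the idea of generating Q, K, and V with depthwise-separable convolutions (a depthwise k × k filter per channel followed by a 1 × 1 pointwise convolution) and wrapping the attention and a feed-forward layer in two residual connections. The 3 × 3 kernel, the single head, the √C scaling, the pointwise ReLU feed-forward layer, and the absence of normalization layers are all assumptions for illustration, not details taken from Figure 4.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def separable_conv(x, dw_kernel, pw_weight):
    """x: (C, H, W); dw_kernel: (C, k, k) depthwise; pw_weight: (C_out, C) pointwise 1x1."""
    p = dw_kernel.shape[-1] // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))
    windows = sliding_window_view(padded, dw_kernel.shape[1:], axis=(1, 2))  # (C, H, W, k, k)
    depthwise = np.einsum("chwij,cij->chw", windows, dw_kernel)  # one spatial filter per channel
    return np.einsum("oc,chw->ohw", pw_weight, depthwise)        # 1x1 conv mixes channels


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def rsct_block(x, params):
    """Single-head self-attention over all H*W positions with conv-generated Q, K, V."""
    c, h, w = x.shape
    q, k, v = (separable_conv(x, *params[n]).reshape(c, h * w) for n in ("q", "k", "v"))
    attn = softmax(q.T @ k / np.sqrt(c), axis=-1)       # (HW, HW): every position vs. every position
    y = x + (v @ attn.T).reshape(c, h, w)               # residual connection 1 (attention)
    ffn = np.einsum("oc,chw->ohw", params["ffn"], y)    # assumed pointwise feed-forward layer
    return y + np.maximum(ffn, 0.0)                     # residual connection 2 (feed-forward)


# Usage with small random placeholder weights.
rng = np.random.default_rng(0)
C, H, W = 8, 6, 6
params = {n: (0.1 * rng.standard_normal((C, 3, 3)), 0.1 * rng.standard_normal((C, C)))
          for n in ("q", "k", "v")}
params["ffn"] = 0.1 * rng.standard_normal((C, C))
x = rng.standard_normal((C, H, W))
print(rsct_block(x, params).shape)   # (8, 6, 6)
```

Setting all weights to zero turns the block into an identity mapping through its two residual paths, which is a quick sanity check on the wiring.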

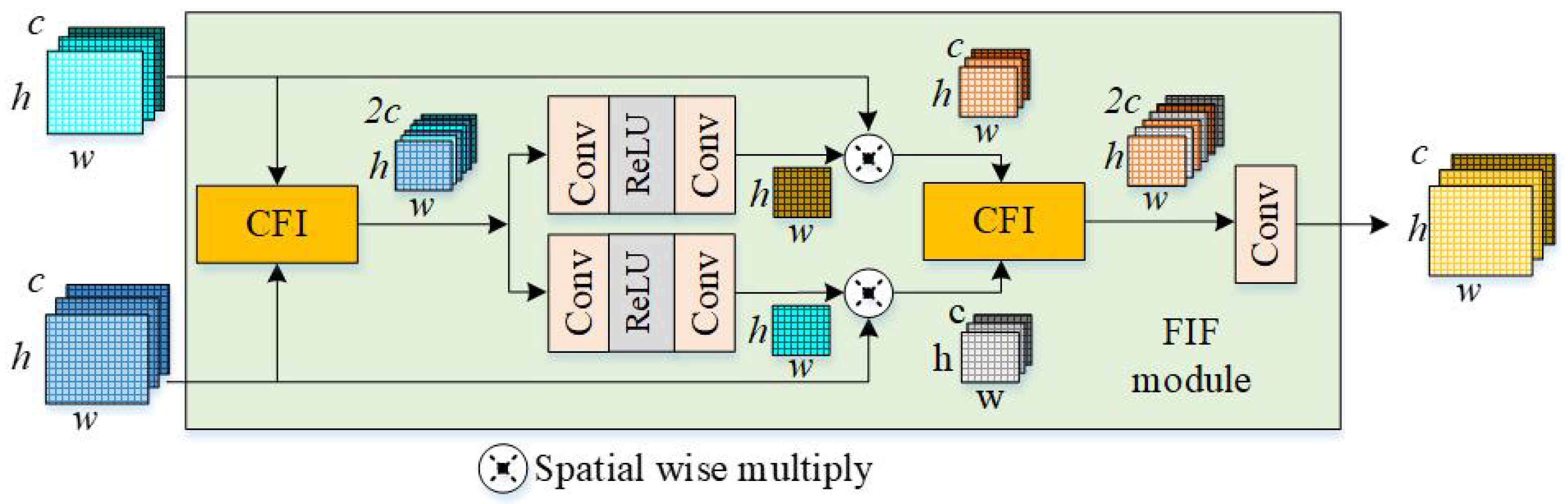

3.4. FIF Module in Decoder

The decoder in the proposed architecture is designed to reconstruct a clear image from the extracted multi-scale features. As illustrated in Figure 1, the restoration process is guided by four distinct scale features. To enable effective feature fusion, each scale feature is upsampled to the same spatial resolution using transposed convolution layers with stride 2. The proposed FIF module is depicted in Figure 5. First, the FIF module computes a spatial weight for each position in the feature maps. Then, the weights are multiplied by the input feature position-wise to emphasize spatially relevant features. Following the same principle as the CFI module, the fusion process employs an intersection mechanism to combine the features while maintaining gradient stability.
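The FIF steps can be sketched in NumPy for N scale features that have already been upsampled to a common resolution. The spatial weight is assumed to be a 1 × 1 projection to a single map followed by a sigmoid, and the intersection step is assumed to generalize the CFI interleaving to N inputs. The transposed-convolution output size uses the standard formula $(n - 1)s - 2p + k$; the kernel size 4 and padding 1, which make a stride-2 layer exactly double the resolution, are assumed values, as is the 1/8 coarsest scale in the usage lines.

```python
import numpy as np


def transposed_conv_size(n, kernel=4, stride=2, padding=1):
    """Output length of a transposed convolution with dilation 1 and no output padding."""
    return (n - 1) * stride - 2 * padding + kernel


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def fif_fuse(features, projections):
    """features: N arrays (C, H, W) at a common resolution;
    projections: N vectors (C,), each an assumed 1x1 projection to one spatial map."""
    weighted = []
    for feat, proj in zip(features, projections):
        spatial = sigmoid(np.einsum("c,chw->hw", proj, feat))  # one weight per position
        weighted.append(feat * spatial[None])                  # position-wise emphasis
    stacked = np.stack(weighted, axis=1)                       # (C, N, H, W)
    c, n, h, w = stacked.shape
    return stacked.reshape(c * n, h, w)   # channel 0 of every scale, then channel 1, ...


# A 1/8-resolution feature needs three stride-2 upsamplings with these settings.
sizes = [8]
for _ in range(3):
    sizes.append(transposed_conv_size(sizes[-1]))
print(sizes)   # [8, 16, 32, 64]
```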

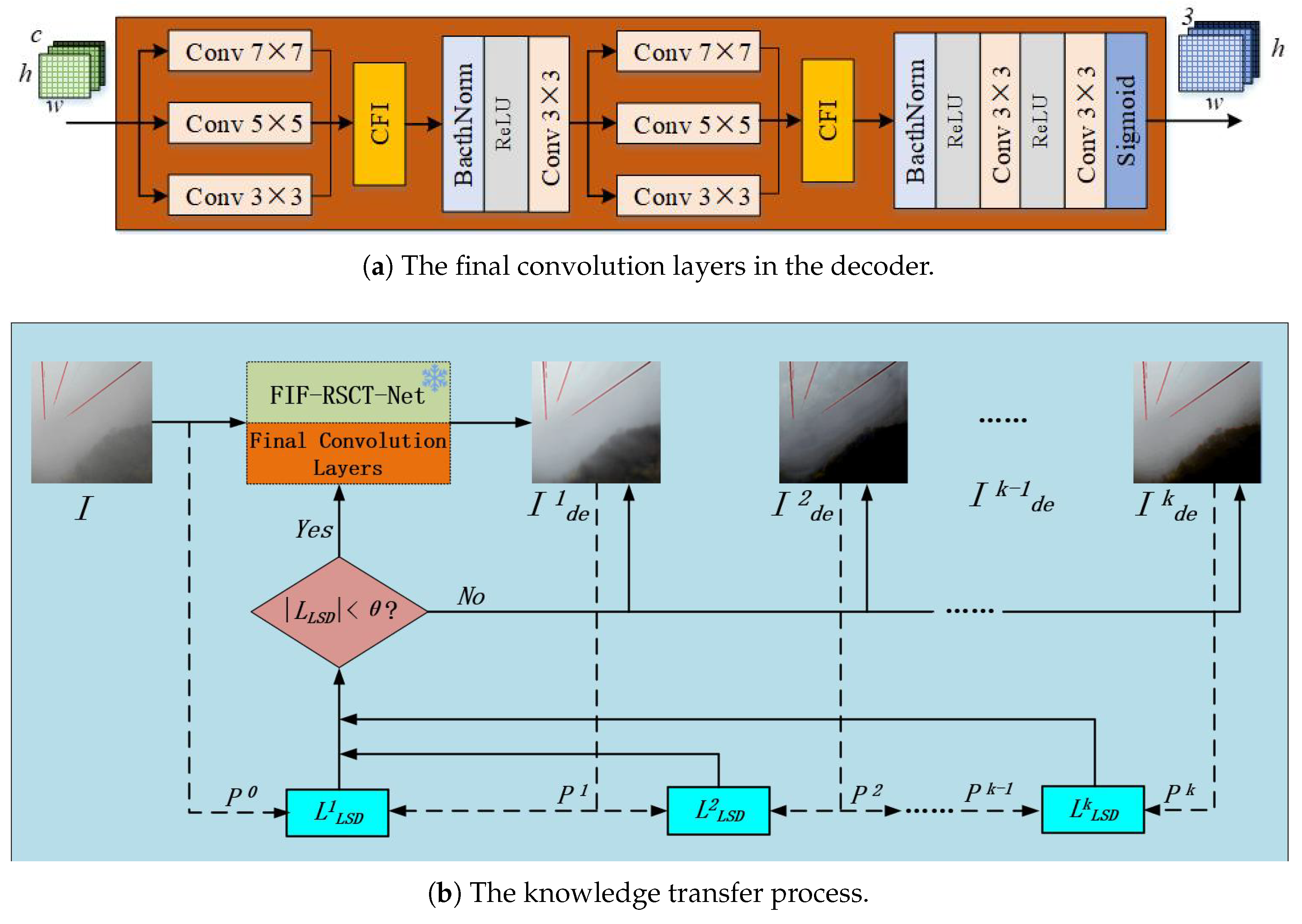

It is important to note that the decoder incorporates final convolution layers to restore the image. These layers are fine-tuned during dehazing knowledge transfer with unsupervised iterative learning. The final convolution layers follow the conventional structure illustrated in Figure 6a.

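The FIF-RSCT-Net training in the original domain employs a supervised learning approach. Let the dehazed image and its label be denoted by $\hat{J}$ and $J$, respectively. The loss function is defined using the smooth L1 loss, formulated as follows:

$$
\mathcal{L}_{\mathrm{sup}} = \frac{1}{HW} \sum_{c} \sum_{x=1}^{HW} \mathrm{smooth}_{L_1}\big(\hat{J}_c(x) - J_c(x)\big), \qquad
\mathrm{smooth}_{L_1}(e) = \begin{cases} 0.5\, e^{2}, & |e| < 1, \\ |e| - 0.5, & \text{otherwise}, \end{cases} \tag{1}
$$

where $c$ and $x$ are, respectively, the channel and pixel index; $H$ and $W$ are the height and width of the image.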
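As a sketch of the supervised objective, the NumPy code below implements the standard smooth L1 penalty (quadratic below a threshold of 1, linear above it), summed over channels and pixels and normalized by H × W. The normalization and the function names are assumptions made for illustration.

```python
import numpy as np


def smooth_l1(err, beta=1.0):
    """0.5 * e^2 / beta if |e| < beta, else |e| - 0.5 * beta (beta = 1 is the usual choice)."""
    abs_err = np.abs(err)
    return np.where(abs_err < beta, 0.5 * err ** 2 / beta, abs_err - 0.5 * beta)


def supervised_loss(dehazed, label):
    """dehazed, label: (C, H, W). Sum over channels and pixels, normalized by H * W."""
    _, h, w = label.shape
    return float(smooth_l1(dehazed - label).sum() / (h * w))


dehazed = np.zeros((1, 1, 2))
label = np.array([[[0.5, 3.0]]])
print(supervised_loss(dehazed, label))   # (0.125 + 2.5) / 2 = 1.3125
```

For comparison, PyTorch's `torch.nn.SmoothL1Loss` with its defaults (`beta=1.0`, `reduction='mean'`) averages over every element, including the channel and batch dimensions, so it differs from this normalization only by a constant factor.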

4. Unsupervised Iterative Learning for Dehazing Knowledge Transfer

The dehazing knowledge learned in the original domain must be effectively transferred to the unlabeled HPTL image domain. To this end, this paper proposes an iterative learning mechanism that fine-tunes the final convolution layers of the dehazing neural network, by which the learned dehazing knowledge is transferred to the HPTL image domain.

For HPTL images, the critical information is the topological structure, which is typically represented by line structures. Consequently, the line features in a hazy HPTL image can serve as the primary targets for dehazing. Hence, we employ the Line Segment Detector (LSD) [58] to monitor line visibility in the network output. The iterative learning process continues until the number of visible lines stops increasing.

In each iteration, the LSD is applied to the network output, and the resulting line detection map, indexed by pixel, is given by Equation (2). The loss function for fine-tuning the final convolutional layers is then defined by Equation (3).

Theoretically, if the lines in the HPTL image are perfectly reconstructed, the loss is close to zero. In practice, we empirically set a maximum iteration number and a minimum threshold value, which provide a good balance between visual line completeness and computational efficiency. The iterative learning stops when the iteration number reaches the maximum or the loss falls below the threshold. The unsupervised iterative learning mechanism is illustrated in Figure 6b and Algorithm 1.

Algorithm 1. Unsupervised Iterative Learning for Dehazing Knowledge Transfer

Input: a hazy HPTL image $I$, the iteration number $k$, the maximum iteration number $K$, and the minimum threshold value $\varepsilon$.

NN: FIF-RSCT-Net, whose final convolutional layers are fine-tuned while the weights of the other layers are frozen.

Output: the dehazed HPTL image.

For $k = 1$ to $K$:

Get the neural network output $\hat{J}_k = \mathrm{NN}(I)$.

If $k = 1$: detect the lines in $\hat{J}_k$ by Equation (2) to obtain the line map, and continue the For-loop.

Else: detect the lines in $\hat{J}_k$ by Equation (2) to obtain the line map, and calculate the loss for NN by Equation (3).

If the loss is below $\varepsilon$: break the For-loop.

Else: back-propagate the loss to adjust the weights of the final convolution layers.

End If

End If

End For

Output $\hat{J}_k$.
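The control flow of Algorithm 1 can be sketched in plain Python by passing the network forward pass, the line detector, the loss of Equation (3), and the weight update in as callables. These callables, the reading of the first branch as the first iteration, and the toy functions in the usage example are hypothetical; in particular, the toy loss is not the paper's Equation (3).

```python
from typing import Any, Callable, List, Optional, Tuple


def iterative_transfer(
    hazy: Any,
    forward: Callable[[Any], Any],                # NN(I) with only the final layers trainable
    detect_lines: Callable[[Any], Any],           # LSD on the network output (Equation (2))
    line_loss: Callable[[Any, Any, Any], float],  # hypothetical stand-in for Equation (3)
    update: Callable[[float], None],              # back-propagation into the final layers
    max_iter: int,
    threshold: float,
) -> Tuple[Any, List[float]]:
    reference_lines: Optional[Any] = None
    output: Any = None
    history: List[float] = []
    for k in range(1, max_iter + 1):
        output = forward(hazy)
        lines = detect_lines(output)
        if k == 1:                                # assumed first-iteration branch
            reference_lines = lines
            continue
        loss = line_loss(output, lines, reference_lines)
        history.append(loss)
        if loss < threshold:                      # stop once the loss is small enough
            break
        update(loss)                              # adjust the final convolution layers only
    return output, history


# Toy usage: the "image" is just a count of visible lines that saturates at 10.
state = {"gain": 0}


def toy_forward(img):
    return min(img + state["gain"], 10)


def toy_detect(out):
    return out


def toy_loss(out, lines, reference):
    return (10 - lines) / 10                      # fraction of lines still missing (toy only)


def toy_update(loss):
    state["gain"] += 2


result, losses = iterative_transfer(4, toy_forward, toy_detect, toy_loss, toy_update,
                                    max_iter=20, threshold=0.05)
print(result, losses)   # 10 [0.6, 0.4, 0.2, 0.0]
```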

5. Experiment and Result Analysis

5.1. The Dataset and Experiment Procedures

The proposed methodology comprises two key components: FIF-RSCT-Net, which enhances feature extraction in supervised learning, and dehazing knowledge transfer through iterative learning based on the LSD loss. Therefore, two kinds of datasets are adopted in the experiments to validate the effectiveness of each component.

The supervised learning datasets include I-Haze [59], O-Haze [60], NH-Haze [61], and SOTS [62]. Among them, I-Haze, O-Haze, and NH-Haze are natural hazy environment datasets, while SOTS is a synthetic hazy image dataset. In the dehazing knowledge transfer step, natural HPTL images with different haze concentrations are used to iteratively fine-tune the dehazing neural network. Five classical dehazing algorithms, DCP, FFANet [63], GCANet [64], GDNet [65], and MSBDN [66], are introduced for comparative analysis.

In the supervised learning stage, because the training samples have clear labels, the performance evaluation metrics are the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index Measure (SSIM). In the unsupervised iterative learning stage, subjective assessment is adopted to evaluate the results, since paired ground truth is lacking for the HPTL images.
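PSNR follows directly from the mean squared error, $\mathrm{PSNR} = 10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$. A minimal NumPy version is sketched below, assuming images scaled to [0, 1] so that MAX = 1. SSIM is more involved and is typically computed with a library routine such as `skimage.metrics.structural_similarity` from scikit-image.

```python
import numpy as np


def psnr(restored, reference, max_value=1.0):
    """PSNR in dB for images scaled to [0, max_value]."""
    diff = np.asarray(restored, dtype=np.float64) - np.asarray(reference, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")                     # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


reference = np.zeros((4, 4, 3))
restored = np.full((4, 4, 3), 0.1)              # uniform error of 0.1 -> MSE = 0.01
print(round(psnr(restored, reference), 6))      # 20.0
```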

5.2. Training Details

The configuration of the server used for training is as follows: CPU: Intel Xeon Gold 6146 × 2; RAM: 192 GB; GPU: RTX 2080Ti × 6; video memory: 66 GB; OS: Ubuntu 20.04; and PyTorch version: 1.8.0. In the supervised training, the optimizer is Adadelta, the learning rate follows a cosine annealing schedule with an initial value of 0.001, and the number of epochs is set to 100. During the unsupervised iterative learning, all weights except those of the final convolution layers are frozen. It should be noted that if the LSD is implemented in NumPy, the gradient of the loss function in Equation (3) cannot be obtained directly through PyTorch's automatic backpropagation (`loss.backward()`), because the NumPy operations lie outside PyTorch's computation graph. In our code, the loss gradient is therefore computed by a custom function. The maximum iteration number and the minimum threshold value are set empirically, as described in Section 4.
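The cosine annealing schedule with an initial learning rate of 0.001 over 100 epochs can be written in closed form as $\eta_t = \eta_{\min} + \tfrac{1}{2}(\eta_0 - \eta_{\min})(1 + \cos(\pi t / T))$. The sketch below evaluates it per epoch, assuming $\eta_{\min} = 0$ and $T = 100$; in PyTorch the same schedule is available as `torch.optim.lr_scheduler.CosineAnnealingLR` (arguments `T_max` and `eta_min`).

```python
import math


def cosine_annealing_lr(epoch, base_lr=1e-3, total_epochs=100, min_lr=0.0):
    """Closed-form cosine annealing: base_lr at epoch 0, decaying to min_lr at total_epochs."""
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * epoch / total_epochs))


schedule = [cosine_annealing_lr(e) for e in range(100)]
print(f"{schedule[0]:.6f} {schedule[50]:.6f}")   # 0.001000 0.000500
```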

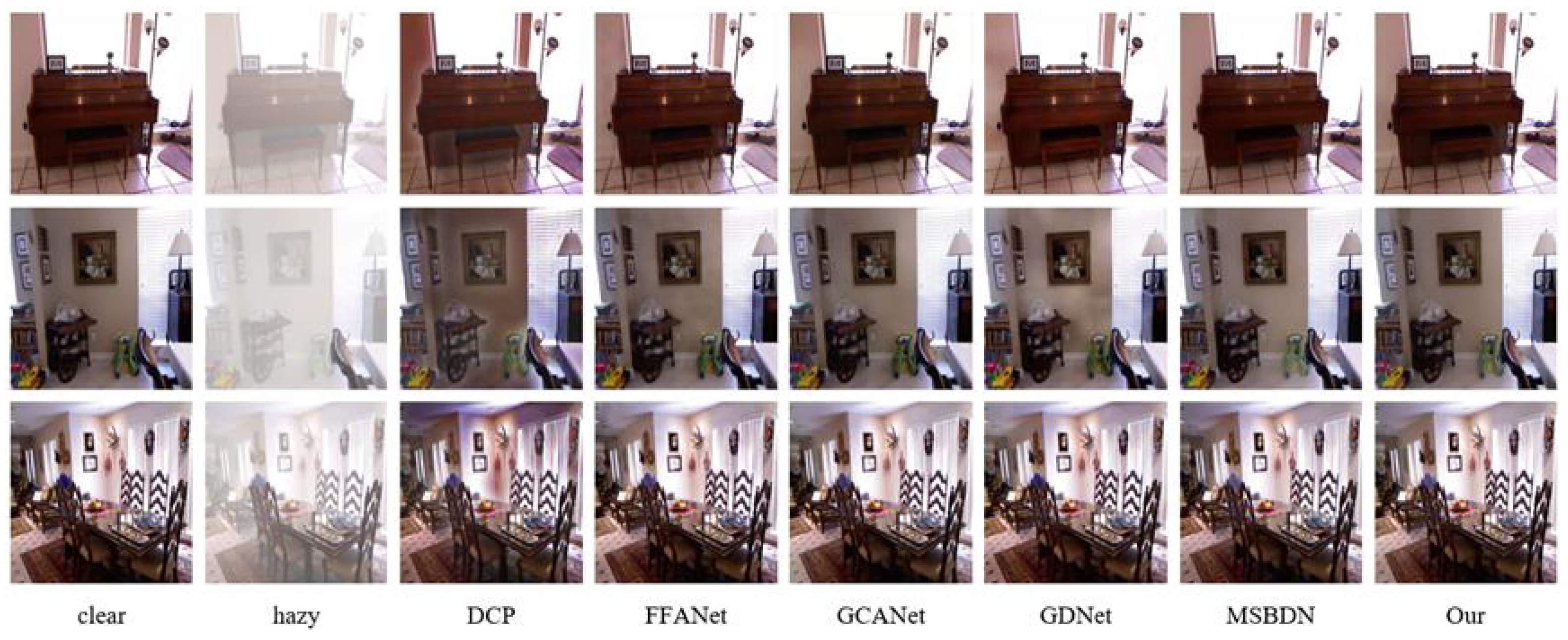

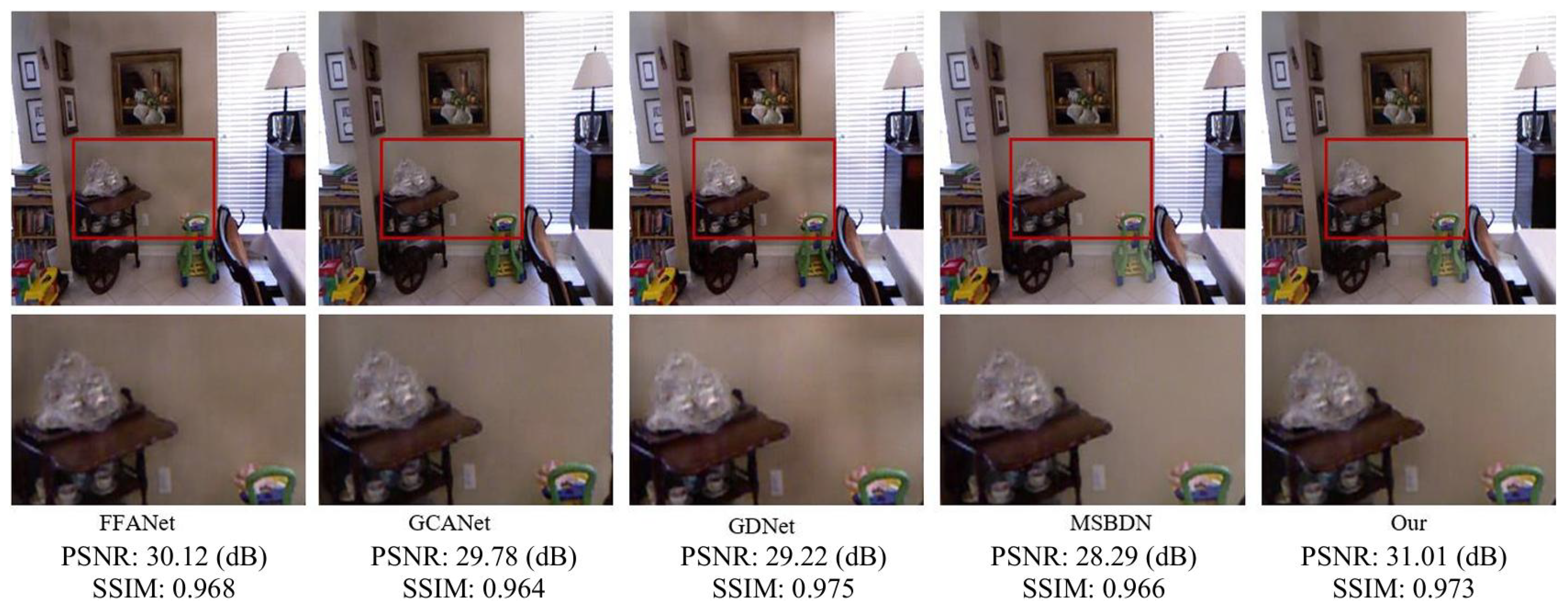

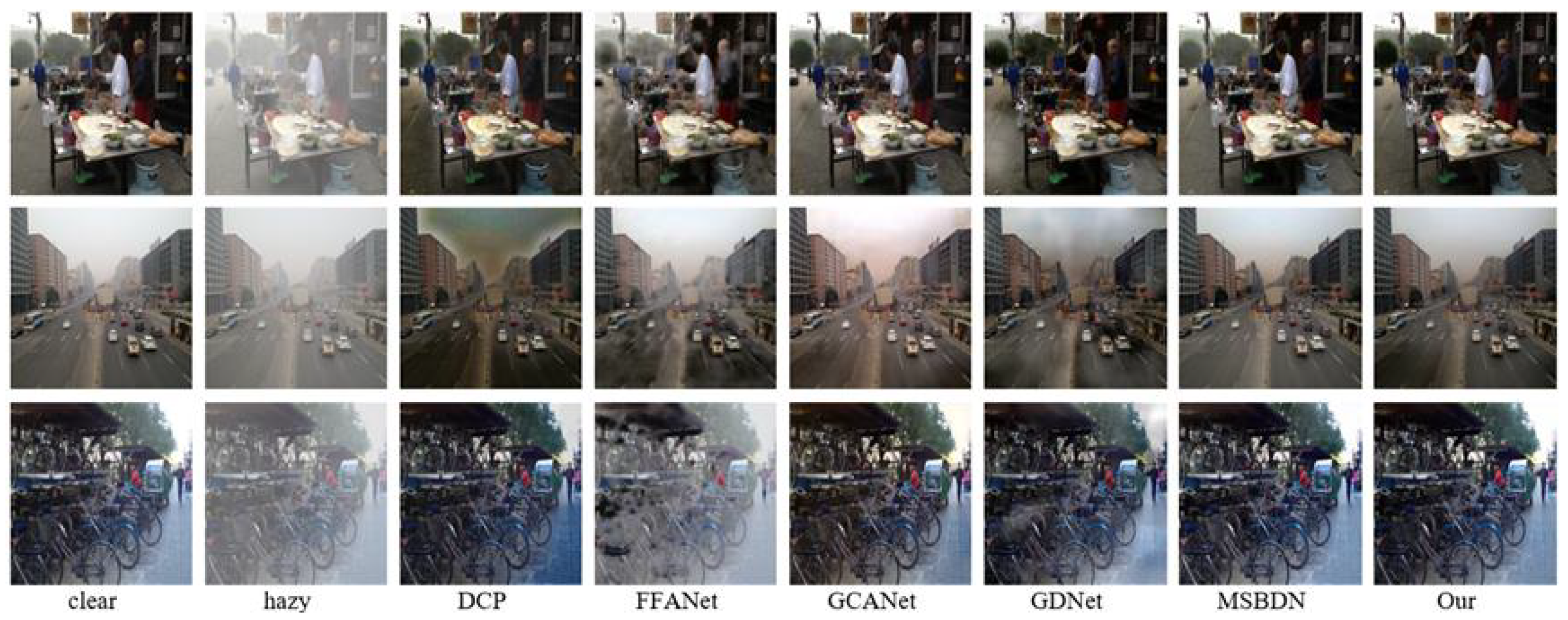

5.3. The Dehazing Experiment on Synthetic Foggy Image Dataset of SOTS

The SOTS dataset contains synthetically generated haze applied to both indoor and outdoor scenes. The dehazing results for indoor images are shown in Figure 7.

As illustrated in Figure 7, all the methods are effective at image dehazing. However, the image restored by DCP exhibits an overall dark appearance and noticeable artifacts around object boundaries. In contrast, FFANet, GCANet, GDNet, MSBDN, and our proposed method produce significantly milder artifacts. The primary differences among these algorithms lie in finer details, as evidenced by the close-up comparisons in Figure 8.

As shown in Figure 8, the white wall region marked with a red box exhibits noticeable dark blocks in the results of GDNet, GCANet, and FFANet. Although these artifacts are significantly reduced in MSBDN, its brightness is slightly elevated. Our method avoids both problems in this region and therefore achieves the best dehazing performance.

The dehazing results for outdoor images from the SOTS dataset are presented in Figure 9. DCP fails to restore the sky region naturally and introduces noticeable artifacts. A detailed comparative analysis of the other algorithms is provided in Figure 10.

In the dehazed details, FFANet exhibits conspicuous dark block artifacts. Notably, both the tree (Region A) and the stormwater drainage grate (Region B) are inadequately restored by most methods. GDNet and our algorithm demonstrate superior performance in reconstructing the tree, but GDNet still presents a dark region near the drainage grate. In contrast, our algorithm achieves the best restoration of both structural elements, demonstrating robust dehazing capability.

The quantitative PSNR and SSIM results on SOTS are reported in Table 1. Our algorithm obtains the best PSNR on both indoor and outdoor images, and it achieves the best and second-best SSIM on outdoor and indoor images, respectively.

The Dehazing Experiment on Natural Foggy Image Datasets

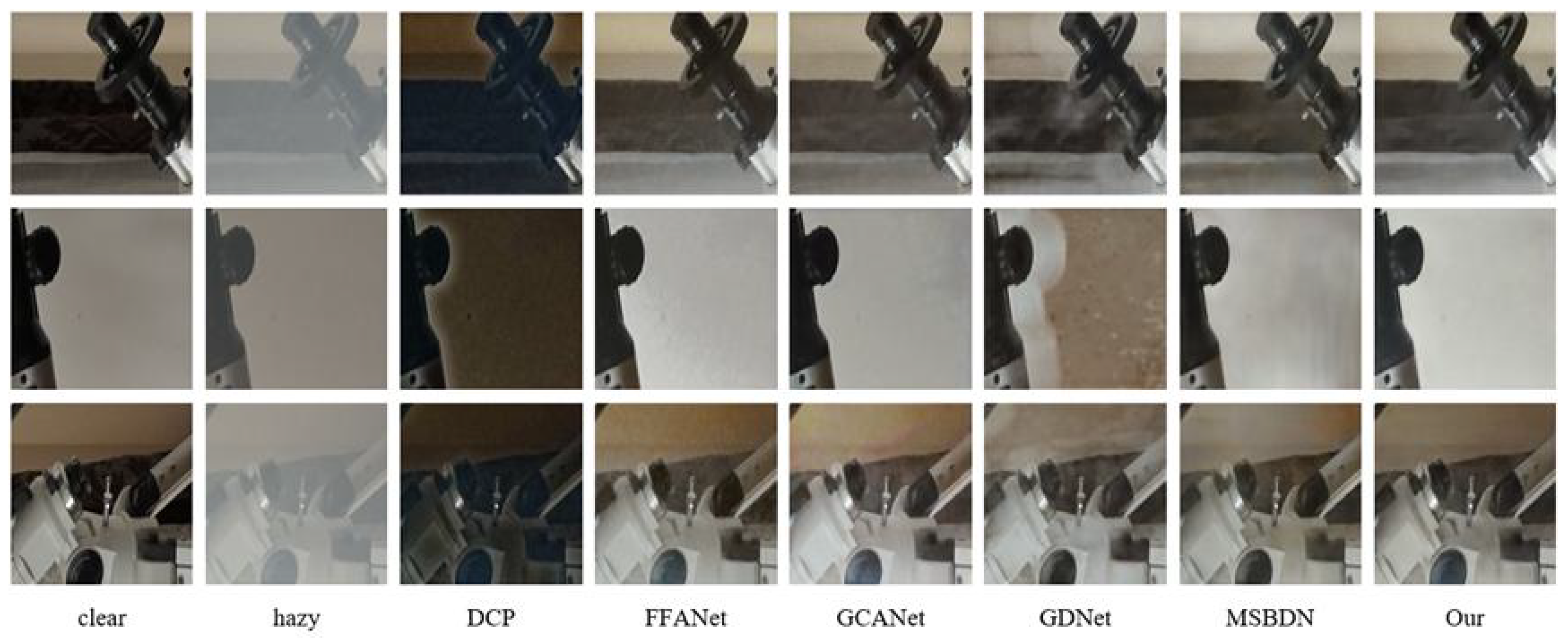

(1) The experiment on the homogeneous hazy image

For the natural hazy image datasets I-Haze and O-Haze, the fog can be considered homogeneous because its concentration fluctuates only minimally.

The comparative experimental results are presented in Figure 11 and Figure 12, respectively. FFANet, GCANet, MSBDN, and our method demonstrate effective dehazing performance. However, in Figure 11, MSBDN and FFANet exhibit slightly darkened patches in the white background regions. In Figure 12, the four algorithms show no significant differences in dehazing performance under subjective evaluation.

Quantitative evaluations further confirm the superiority of our approach. As shown in Table 2, our method achieves the highest PSNR and SSIM scores on both the I-Haze and O-Haze datasets, outperforming all comparative algorithms.

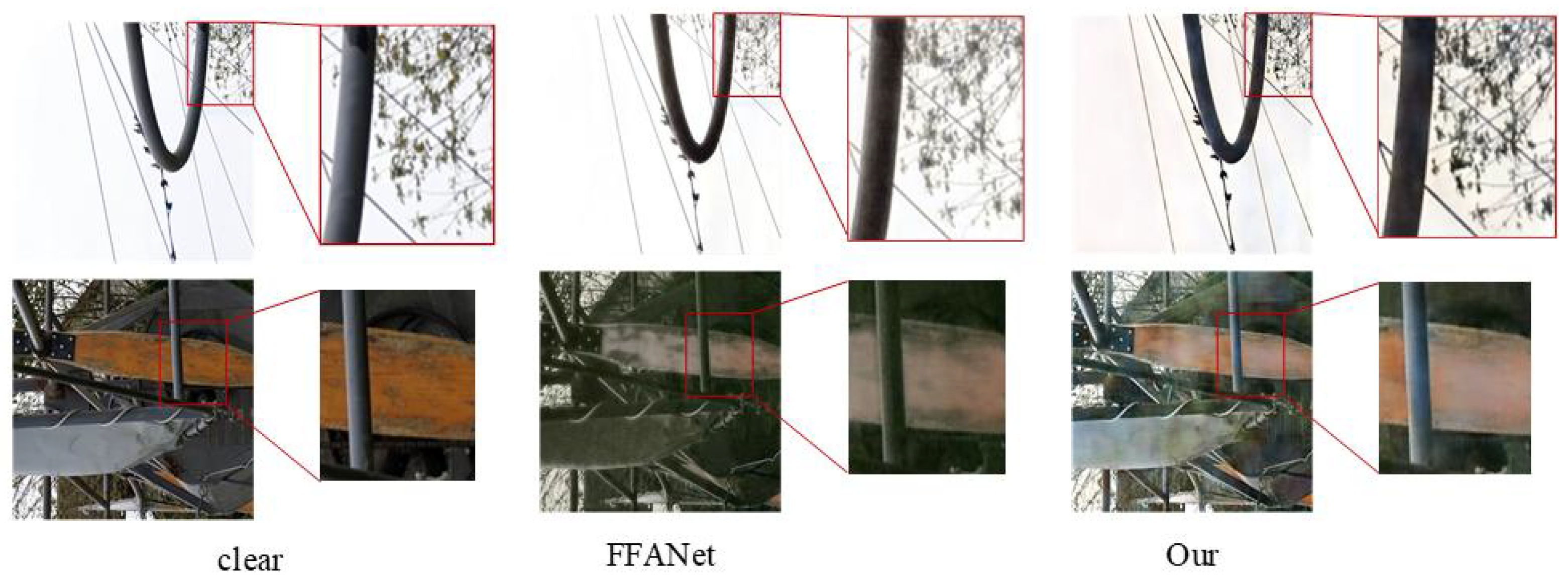

(2) The experiment on the non-homogeneous hazy image

Significant variations in dehazing performance are observed on the NH-Haze dataset, where the non-homogeneous fog exhibits random spatial patterns and uneven concentration levels. As demonstrated in Figure 13, only FFANet and our proposed method achieve satisfactory dehazing results.

The detailed comparison between these two approaches is presented in Figure 14. Our method demonstrates superior performance in both structural preservation (particularly for fine leaf details) and color fidelity. Quantitative evaluations further confirm this advantage: our method achieves 21.55 dB PSNR and 0.765 SSIM, outperforming all comparative methods.

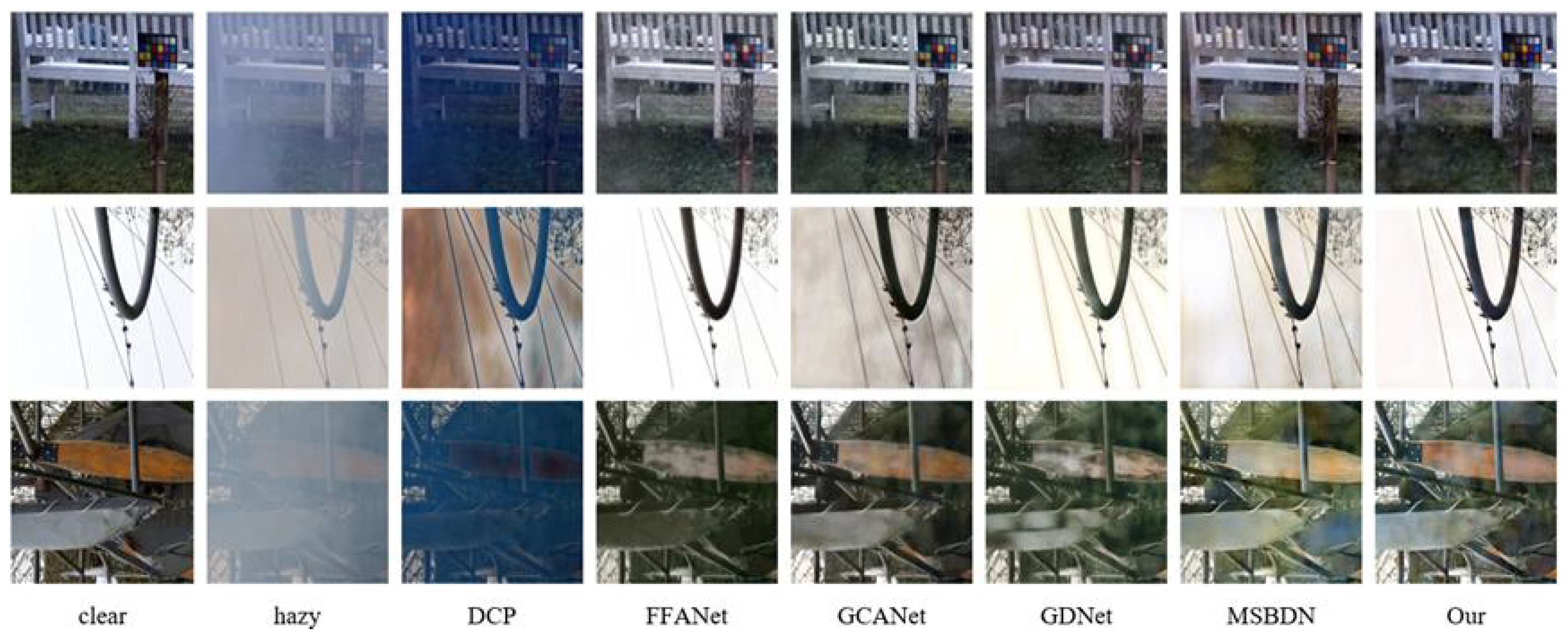

5.4. The Dehazing Experiment on HPTL Images

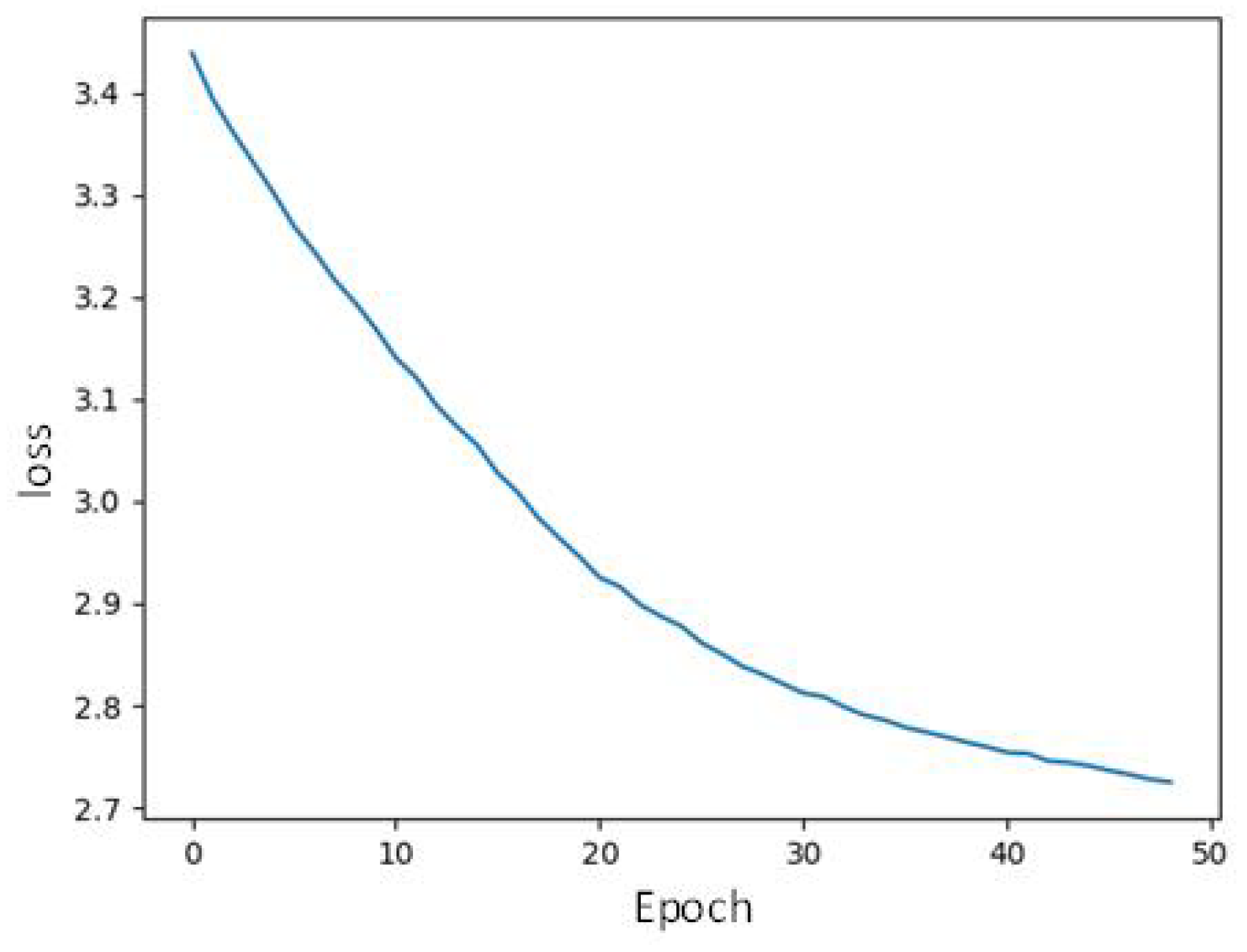

The dehazing knowledge of the neural network is transferred by the proposed unsupervised iterative learning. As shown by the training loss curve in Figure 15, the LSD loss converges to a stable value, which indicates that the final convolution layers are gradually fine-tuned until the dehazing knowledge transfer is completed.

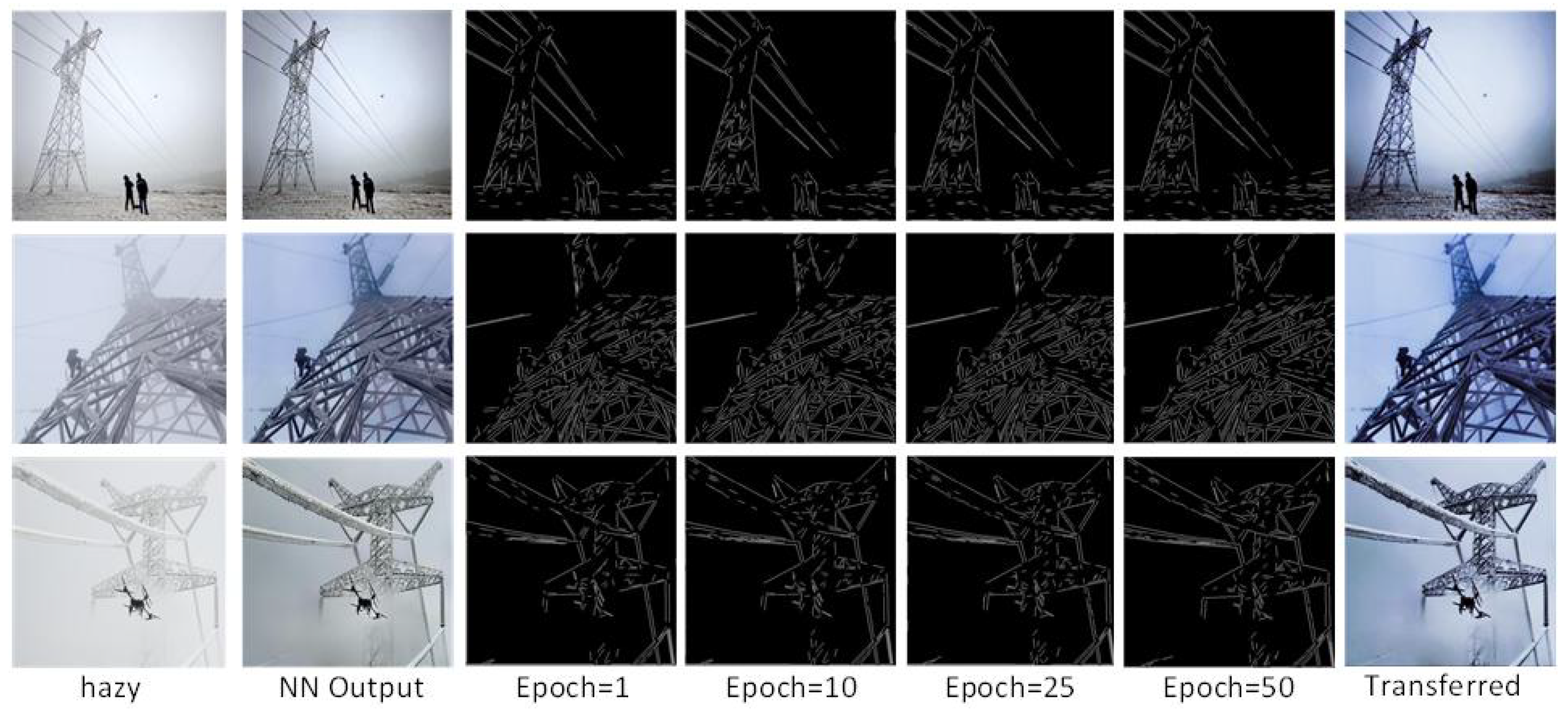

The lines detected by LSD are illustrated in Figure 16, where “NN Output” denotes the dehazed result of the neural network and “Transferred” denotes the output after unsupervised iterative learning. Some line segments missed in the NN output are recovered during the iterations, and the fog covering the HPTL is removed.

To comprehensively evaluate the proposed method’s performance, we conducted extensive experiments on the natural HPTL dataset containing images with varying fog densities (light, moderate, and dense). We compared our approach with five dehazing algorithms: DCP, FFANet, GCANet, GDNet, and MSBDN. All methods were applied directly to the hazy images under identical conditions for a fair comparison. The experimental results are presented in Figure 17, Figure 18, and Figure 19, which demonstrate the comparative performance across different fog concentrations.

As presented in the results, DCP fails to restore the sky regions and exhibits significant artifacts. Dark patches can be found in the results of GDNet and FFANet, as shown in Figure 16 and Figure 17. Among the comparative methods, GCANet, MSBDN, and our algorithm demonstrate superior subjective performance. However, the GCANet results have a yellow color cast, whereas our method with unsupervised iterative learning preserves and enhances linear structures, maintains a natural color balance, and achieves visually pleasing results without common artifacts.

6. Conclusions

This paper has presented FIF-RSCT-Net for HPTL image dehazing under a hybrid supervised and unsupervised iterative learning approach. In the network, novel SCFI, FIF, and RSCT modules have been proposed according to the characteristics of HPTL images, enabling the network to learn generalized dehazing knowledge that can be more efficiently transferred to the HPTL image domain. In the unsupervised iterative learning, the LSD loss function has been defined to guide the dehazing knowledge transfer, by which the power transmission line segments can be restored effectively.

Comparative experiments have validated the effectiveness of our approach on the I-Haze, O-Haze, NH-Haze, SOTS, and HPTL image datasets. The proposed method achieves the best dehazing performance in both supervised learning and knowledge transfer. On the natural hazy image datasets, the method achieves PSNR scores of 23.13 dB, 25.80 dB, and 21.55 dB, alongside SSIM values of 0.863, 0.774, and 0.765 on I-Haze, O-Haze, and NH-Haze, respectively.

Future work will incorporate the specific features of HPTL images, such as local topological and global consistency, into the unsupervised iterative learning stage to enhance the naturalness of restored images.

Author Contributions

Conceptualization, X.C. and K.X.; methodology, X.C., K.X., and W.Y.; software, X.C., W.Y., H.S., and K.W.; validation, X.C., K.X., and W.Y.; formal analysis, X.C., K.X., and W.Y.; data curation, K.X., W.Y., and H.S.; writing—original draft preparation, X.C. and K.X.; writing—review and editing, X.C., W.Y., H.S., and K.W.; supervision, H.S. and K.W.; project administration, X.C., K.X., and W.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| GAN | Generative Adversarial Network |
| HST | Haze Style Transfer |
| LSD | Line Segment Detector |
| CFI | Channel Feature Intersection |
| RSCT | Residual Separable Convolution Transformer |
| FIF-RSCT | Feature Intersection Fusion and Residual Separable Convolution Transformer |
| SCFIM | Spatial–Channel Feature Intersection Module |
| RSC-Transformer | Residual Separable Convolution Transformer |
| SGAP | Spatial Global Average Pooling |
| CGMP | Channel Global Maximum Pooling |
| CGAP | Channel Global Average Pooling |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index Measure |
| HPTL | High-Voltage Power Transmission Line |

References

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353. [Google Scholar] [CrossRef]

- Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 617–624. [Google Scholar]

- Liu, J.; Liu, R.W.; Sun, J.; Zeng, T. Rank-one prior: Real-time scene recovery. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 8845–8860. [Google Scholar] [CrossRef]

- Ling, P.; Chen, H.; Tan, X.; Jin, Y.; Chen, E. Single image dehazing using saturation line prior. IEEE Trans. Image Process. 2023, 32, 3238–3253. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1–9. [Google Scholar] [CrossRef]

- Zhang, S.; He, F. DRCDN: Learning deep residual convolutional dehazing networks. Vis. Comput. 2020, 36, 1797–1808. [Google Scholar] [CrossRef]

- Li, Y.; Liu, Y.; Yan, Q.; Zhang, K. Deep dehazing network with latent ensembling architecture and adversarial learning. IEEE Trans. Image Process. 2020, 30, 1354–1368. [Google Scholar] [CrossRef]

- Meng, J.; Li, Y.; Liang, H.; Ma, Y. Single-image dehazing based on two-stream convolutional neural network. J. Artif. Intell. Technol. 2022, 2, 100–110. [Google Scholar] [CrossRef]

- Liu, C.; Guo, J.; Zhang, X.; Wu, D.; Yu, L. Expert Credibility Prediction Model Based on Fuzzy C-Means Clustering and Similarity Association. IEEE Trans. Fuzzy Syst. 2025, 33, 2719–2729. [Google Scholar] [CrossRef]

- Liu, C.; Zhang, X.; Zhao, H.; Liu, Z.; Xi, X.; Yu, L. LMCBert: An Automatic Academic Paper Rating Model Based on Large Language Models and Contrastive Learning. IEEE Trans. Cybern. 2025, 55, 2970–2979. [Google Scholar] [CrossRef]

- Liu, C.; Zhang, X.; Xi, X.; Wan, C.; Yu, L. A novel diversity-based selective ensemble method for small sample expert credibility assessment. Sci. China Technol. Sci. 2025, 68, 1720407. [Google Scholar] [CrossRef]

- Liu, C.; Bing, R.; Xi, X.; Dai, W.; Yuan, G. Heterogeneous Graph Structure Learning for Experts Selection in Academic Evaluation. IEEE Trans. Comput. Soc. Syst. 2025. early access. [Google Scholar] [CrossRef]

- Dudhane, A.; Patil, P.W.; Murala, S. An end-to-end network for image de-hazing and beyond. IEEE Trans. Emerg. Top. Comput. Intell. 2020, 6, 159–170. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 154–169.

- Ren, W.; Pan, J.; Zhang, H.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks with holistic edges. Int. J. Comput. Vis. 2020, 128, 240–259.

- Jiang, H.; Lu, N. Multi-scale residual convolutional neural network for haze removal of remote sensing images. Remote Sens. 2018, 10, 945.

- Zhang, J.; Tao, D. FAMED-Net: A fast and accurate multi-scale end-to-end dehazing network. IEEE Trans. Image Process. 2019, 29, 72–84.

- Song, Y.; Li, J.; Wang, X.; Chen, X. Single image dehazing using ranking convolutional neural network. IEEE Trans. Multimed. 2017, 20, 1548–1560.

- Li, C.; Guo, J.; Porikli, F.; Fu, H.; Pang, Y. A cascaded convolutional neural network for single image dehazing. IEEE Access 2018, 6, 24877–24887.

- Haouassi, S.; Wu, D. Image dehazing based on (CMT net) cascaded multi-scale convolutional neural networks and efficient light estimation algorithm. Appl. Sci. 2020, 10, 1190.

- Yin, J.L.; Huang, Y.C.; Chen, B.H.; Ye, S.Z. Color transferred convolutional neural networks for image dehazing. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 3957–3967.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

- Ullah, H.; Muhammad, K.; Irfan, M.; Anwar, S.; Sajjad, M.; Imran, A.S.; De Albuquerque, V.H.C. Light-DehazeNet: A novel lightweight CNN architecture for single image dehazing. IEEE Trans. Image Process. 2021, 30, 8968–8982.

- Gong, X.; Du, H.; Zheng, Z. MFFormer: Multi-level boosted transformer expanded by feature interaction block. Signal Image Video Process. 2025, 19, 96.

- Tran, L.A.; Park, D.C. Distilled pooling transformer encoder for efficient realistic image dehazing. Neural Comput. Appl. 2025, 37, 5203–5221.

- Sun, H.; Li, S.; Du, B.; Zhang, L.; Ren, D.; Tong, L. Dynamic-Routing 3D-Fusion Network for Remote Sensing Image Haze Removal. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5606416.

- Gonde, K.; Patil, P.W.; Vipparthi, S.K.; Murala, S.; Patil, P.; Kimbahune, V. AeroDehazeNet: Exploiting Selective Multi-Scale Transformers for Aerial Image Dehazing. In Proceedings of the 2024 IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Niagara Falls, ON, Canada, 15–16 July 2024; pp. 1–8.

- Zheng, Y.; Su, J.; Zhang, S.; Tao, M.; Wang, L. Dehaze-TGGAN: Transformer-guide generative adversarial networks with spatial-spectrum attention for unpaired remote sensing dehazing. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5634320.

- Dong, H.; Song, T.; Qi, X.; Jin, G.; Jin, J.; Ma, L. Prompt-Guided Sparse Transformer for Remote Sensing Image Dehazing. IEEE Geosci. Remote Sens. Lett. 2024, 21, 8003505.

- Mallesh, S.; Haripriya, D. Efficient transformer architecture for extraction of global and local dependencies to dehaze RS satellite images. Signal Image Video Process. 2024, 18, 8899–8909.

- Li, S.; Zhou, Y.; Kung, S.Y. PSRNet: A Progressive Self-Refine Network for Lightweight Optical Remote Sensing Image Dehazing. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5648413.

- Wu, C.; Yu, S.; Luo, T.; Rao, Q.; Hu, Q.; Xu, J.; Zhang, L. Underwater image enhancement via dehazing and color restoration. arXiv 2024, arXiv:2409.09779.

- Luan, X.; Fan, H.; Wang, Q.; Yang, N.; Liu, S.; Li, X.; Tang, Y. FMambaIR: A hybrid state space model and frequency domain for image restoration. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4201614.

- Saad Saoud, L.; Hussain, I. Gated Fusion Network with Reprogramming Transformer Refiners For Adaptive Underwater Image Dehazing. SSRN 2025, 5297591.

- Zhang, Z.; Feng, Z.; Long, A.; Wang, Z. LWTD: A novel light-weight transformer-like CNN architecture for driving scene dehazing. Int. J. Mach. Learn. Cybern. 2025, 16, 1303–1326.

- Cui, Y.; Zhu, J.; Knoll, A. Enhancing perception for autonomous vehicles: A multi-scale feature modulation network for image restoration. IEEE Trans. Intell. Transp. Syst. 2025, 26, 4621–4632.

- Li, Z.; Kuang, W.; Bhanu, B.; Deng, Y.; Chen, Y.; Xu, K. Low-Visibility Scene Enhancement by Isomorphic Dual-Branch Framework With Attention Learning. IEEE Trans. Intell. Transp. Syst. 2025, 26, 7127–7141.

- Jing, Z.; Yingying, F.; Hongan, L.; Sizhe, D.; Jinming, M. Safety helmet recognition algorithm in spray dust removal scenario of coal mine working face. Min. Saf. Environ. Prot. 2024, 51, 9–16.

- Wu, J.; Liu, Z.; Huang, F.; Luo, R. Adaptive haze pixel intensity perception transformer structure for image dehazing networks. Sci. Rep. 2024, 14, 22435.

- Yang, S.; Hu, B.; Liu, F.; Wu, X.; Ding, W.; Zhou, J. IPT-ILR: Image pyramid transformer coupled with information loss regularization for all-in-one image restoration. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 4341–4356.

- Liu, J.; Wang, S.; Chen, C.; Hou, Q. DFP-Net: An unsupervised dual-branch frequency-domain processing framework for single image dehazing. Eng. Appl. Artif. Intell. 2024, 136, 109012.

- Zhao, A.; Li, L.; Liu, S. UIDF-Net: Unsupervised Image Dehazing and Fusion Utilizing GAN and Encoder–Decoder. J. Imaging 2024, 10, 164.

- Wang, J.; Zhang, Y.; Liu, Z. Unsupervised dehazing of multi-scale residuals based on weighted contrastive learning. Signal Image Video Process. 2025, 19, 1–11.

- Guo, X.; Tao, Y.; Zhang, Y.; Xu, B.; Zheng, J.; Ji, G. A Robust Image Dehazing Model Using Cycle Generative Adversarial Network with an Improved Atmospheric Scatter Model. In Proceedings of the International Conference on Artificial Neural Networks, Lugano, Switzerland, 17–20 September 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 273–286.

- Liang, Y.; Li, S.; Cheng, D.; Wang, W.; Li, D.; Liang, J. Image dehazing via self-supervised depth guidance. Pattern Recognit. 2025, 158, 111051.

- Ding, B.; Zhang, R.; Xu, L.; Liu, G.; Yang, S.; Liu, Y.; Zhang, Q. U2D2 Net: Unsupervised unified image dehazing and denoising network for single hazy image enhancement. IEEE Trans. Multimed. 2023, 26, 202–217.

- Li, C.; Zhang, X.; Zhang, W.; Su, H.; Bi, L. Uvcgan-Dehaze: A dehazing method for unpaired images. Soft Comput.-A Fusion Found. Methodol. Appl. 2024, 28, 12217.

- Fan, S.; Xue, M.; Ning, A.; Zhong, S. Addressing domain discrepancy: A dual-branch collaborative model to unsupervised dehazing. Pattern Recognit. Lett. 2025, 189, 150–156.

- Lin, F.; Wang, J.; Pedrycz, W.; Zhang, K.; Ablameyko, S. A typhoon optimization algorithm and difference of CNN integrated bi-level network for unsupervised underwater image enhancement. Appl. Intell. 2024, 54, 13101–13120.

- Zhang, Y.; Cai, Y.; Yan, D.; Lin, R. Real-world scene image enhancement with contrastive domain adaptation learning. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 1–23.

- Wang, Y.; Yan, X.; Wang, F.L.; Xie, H.; Yang, W.; Zhang, X.P.; Qin, J.; Wei, M. UCL-dehaze: Toward real-world image dehazing via unsupervised contrastive learning. IEEE Trans. Image Process. 2024, 33, 1361–1374.

- Park, E.; Yoo, J.; Sim, J.Y. Universal dehazing via haze style transfer. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 8576–8588.

- Dave, C.; Patel, H.; Kumar, A. Unsupervised single image dehazing—A contour approach. J. Vis. Commun. Image Represent. 2024, 100, 104119.

- Von Gioi, R.G.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A line segment detector. Image Process. Line 2012, 2, 35–55.

- Ancuti, C.; Ancuti, C.O.; Timofte, R.; De Vleeschouwer, C. I-HAZE: A dehazing benchmark with real hazy and haze-free indoor images. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Poitiers, France, 24–27 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 620–631.

- Ancuti, C.O.; Ancuti, C.; Timofte, R.; De Vleeschouwer, C. O-HAZE: A dehazing benchmark with real hazy and haze-free outdoor images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 754–762.

- Ancuti, C.O.; Ancuti, C.; Timofte, R. NH-HAZE: An image dehazing benchmark with non-homogeneous hazy and haze-free images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 444–445.

- Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

- Das, S.; Islam, M.S.; Amin, M.R. GCA-Net: Utilizing gated context attention for improving image forgery localization and detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 81–90.

- Liu, X.; Ma, Y.; Shi, Z.; Chen, J. GridDehazeNet: Attention-based multi-scale network for image dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7314–7323.

- Dong, H.; Pan, J.; Xiang, L.; Hu, Z.; Zhang, X.; Wang, F.; Yang, M.H. Multi-scale boosted dehazing network with dense feature fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2157–2167.

Figure 1.

Architecture of the proposed FIF-RSCT-Net.

Figure 2.

Structure of the SCFI module.

Figure 4.

The structure of the RSCT module.

Figure 5.

The structure of the FIF module.

Figure 6.

Illustration of the decoder structure and knowledge transfer process.

Figure 7.

Indoor image dehazing on SOTS.

Figure 8.

Details of indoor image dehazing on SOTS.

Figure 9.

Outdoor image dehazing on SOTS.

Figure 10.

Details of outdoor image dehazing on SOTS.

Figure 11.

Natural image dehazing results on the I-Haze dataset.

Figure 12.

Natural image dehazing results on the O-Haze dataset.

Figure 13.

Natural image dehazing results on the NH-Haze dataset.

Figure 14.

Details of image dehazing results on the NH-Haze dataset.

Figure 15.

The unsupervised training process.

Figure 16.

Unsupervised iterative learning process of dehazing knowledge transfer. "Transferred" denotes the output of knowledge transfer.

Figure 17.

The dehazing result of a light-fog HPTL image.

Figure 18.

The dehazing results of a moderate-fog HPTL image.

Figure 19.

The dehazing results of a dense-fog HPTL image.

Table 1.

PSNR and SSIM of dehazed images on SOTS.

| Algorithm | Indoor PSNR (dB)↑ | Indoor SSIM↑ | Outdoor PSNR (dB)↑ | Outdoor SSIM↑ |
|---|---|---|---|---|
| DCP | 19.31 | 0.860 | 15.54 | 0.826 |
| FFANet | 30.12 | 0.968 | 19.55 | 0.804 |
| GCANet | 29.78 | 0.964 | 21.67 | 0.880 |
| GDNet | 29.22 | 0.975 | 17.70 | 0.807 |
| MSBDN | 28.29 | 0.966 | 18.25 | 0.809 |
| Ours | 31.01 | 0.973 | 21.74 | 0.881 |
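Both tables report PSNR in dB, defined as PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared error between the haze-free reference and the dehazed output and MAX is the largest possible intensity. The paper does not state the data range or colour space used for evaluation, so the following is only a minimal sketch under assumed conventions: intensities are already scaled to [0, max_val], and the squared error is averaged over every value of two flattened sequences (all pixels and channels). The function name `psnr` is illustrative and is not taken from the authors' code.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], restored: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB between two flattened images.

    Assumption: both inputs hold intensities in [0, max_val] and are
    compared value by value (pixels and channels flattened together).
    """
    if len(reference) != len(restored) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all values.
    mse = sum((r - o) ** 2 for r, o in zip(reference, restored)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_val ** 2 / mse)


# Toy example with intensities in [0, 1]: MSE = 0.005, so PSNR = 10 * log10(200).
print(round(psnr([0.0, 0.5, 1.0, 0.25], [0.1, 0.5, 0.9, 0.25]), 2))  # 23.01
```

For 8-bit images stored as integers in [0, 255], pass `max_val=255.0`; evaluating on a different channel (for example luminance only) would give different numbers from those in the tables.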

Table 2.

PSNR and SSIM of dehazed images on natural foggy image datasets.

| Algorithm | I-Haze PSNR (dB)↑ | I-Haze SSIM↑ | O-Haze PSNR (dB)↑ | O-Haze SSIM↑ | NH-Haze PSNR (dB)↑ | NH-Haze SSIM↑ |
|---|---|---|---|---|---|---|
| DCP | 13.21 | 0.622 | 15.32 | 0.581 | 13.29 | 0.577 |
| FFANet | 21.28 | 0.842 | 24.69 | 0.759 | 20.37 | 0.747 |
| GCANet | 21.45 | 0.841 | 24.32 | 0.759 | 19.95 | 0.734 |
| GDNet | 20.37 | 0.824 | 23.08 | 0.745 | 20.93 | 0.752 |
| MSBDN | 22.07 | 0.860 | 24.21 | 0.764 | 20.07 | 0.754 |
| Ours | 23.13 | 0.863 | 25.80 | 0.774 | 21.55 | 0.765 |
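To make the size of the improvement on the natural-haze benchmarks explicit, the short script below transcribes the PSNR columns of Table 2 and computes, per dataset, the margin between the proposed method's row and the strongest baseline. The dictionary layout, the key "Ours", and the helper `margin_over_best_baseline` are illustrative choices for this sketch; the numbers themselves are copied from the table.

```python
from typing import Dict, Tuple

# PSNR (dB) values transcribed from Table 2; "Ours" is the proposed FIF-RSCT-Net row.
PSNR: Dict[str, Dict[str, float]] = {
    "I-Haze": {"DCP": 13.21, "FFANet": 21.28, "GCANet": 21.45, "GDNet": 20.37, "MSBDN": 22.07, "Ours": 23.13},
    "O-Haze": {"DCP": 15.32, "FFANet": 24.69, "GCANet": 24.32, "GDNet": 23.08, "MSBDN": 24.21, "Ours": 25.80},
    "NH-Haze": {"DCP": 13.29, "FFANet": 20.37, "GCANet": 19.95, "GDNet": 20.93, "MSBDN": 20.07, "Ours": 21.55},
}


def margin_over_best_baseline(scores: Dict[str, float], ours: str = "Ours") -> Tuple[str, float]:
    """Return the best-scoring baseline and the proposed method's margin over it (dB)."""
    baselines = {name: value for name, value in scores.items() if name != ours}
    best = max(baselines, key=baselines.get)
    # Round to two decimals, matching the precision reported in the table.
    return best, round(scores[ours] - baselines[best], 2)


for dataset, scores in PSNR.items():
    best, gain = margin_over_best_baseline(scores)
    print(f"{dataset}: {gain:+.2f} dB over {best}")
# I-Haze: +1.06 dB over MSBDN
# O-Haze: +1.11 dB over FFANet
# NH-Haze: +0.62 dB over GDNet
```

Note that the strongest baseline differs per dataset, so a single "best competitor" does not summarize Table 2.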

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).