1. Introduction

In this paper, we consider the unconstrained optimization problem of minimizing the quadratic function,

where

is the coefficient matrix of the quadratic term,

. In order to solve (

1), common optimization methods usually take the following iterative approach:

where

,

is called stepsize. Different method has different definition of the stepsize, so the studies on stepsize are diverse. The most common method is the classical steepest descent method [

1], whose stepsize is called Cauchy stepsize,

this method to find the stepsize is also called accurate one-dimensional line search, and Forsythe proved the rate of convergence of the classical steepest descent is linear in [

2]. Although the stepsize

is effective, the classical steepest descent method will not work well when the condition number of

A is large, see [

3] for details.

In order to ensure the convergence speed and reduce the amount of computation, Borwein and Barzilai [

4] proposed a new stepsize, they turned the iterative formula into

where

,

I is identity matrix. It is similar to the quasi-Newton method [

5],

can be regarded as an approximate Hessian matrix of

f at

. And then, in order for

to have quasi-Newton property, they chose

so that

meets the following condition:

and they have

where

,

and

denotes the Euclidean norm. Additionally, in another way to choose the stepsize

, they let

satisfy

and then they have another stepsize

As we can see, if

, then

, so

is a long stepsize and

is a short stepsize. So

will perform better than

in solving some optimization problems, see [

6,

7] for details. And the methods that use

or

as stepsize are collectively referred to as BB methods.

In recent years, there has been a lot of research on the convergence and stepsize modification of the BB methods [

8,

9,

10]. From previous studies, it can be found that the convergence rate of BB methods are usually

R-superlinear and

R-linear. For example, in [

4], Barzilai and Borwein proved

R-superlinear convergence of their method with the stepsize

for solving two-dimensional strictly convex quadratics. And Dai and Liao [

11] proved

R-linear convergence of the BB method for

n-dimensional strictly convex quadratics. Now we give the definition of these two rates of convergence as follows.

Definition 1. According to the above formula, the R-convergence rate can be divided into two cases:

(i) When , the sequence of iterated points is said to have R-superlinear convergence rate;

(ii) When , the sequence of iterated points is said to have R-linear convergence rate.

In addition to solving quadratics, the BB method can also solve nonlinear optimization problems. Raydan [

12] proposed a global Barzilai-Borwein method for unconstrained optimization problems by combining with the nonmonotone line search proposed by Grippo et al. [

13]. Dai and Fletcher [

14] developed projected BB methods for solving large-scale box-constrained quadratic programming. Additionally, Huang and Liu [

15] extended the projected BB methods by using smoothing techniques, and they modified it to solve non-Lipschitz optimization problems. In [

16], Dai considered alternating the Cauchy stepsize and the BB stepsize, and proposed an alternate step gradient method. And in [

17], Zhou et al. proposed an Adaptive Barzilai-Borwein (ABB) method which alternated

and

.

In addition, the relationship between BB stepsizes and the spectrum of the Hessian matrices of the objective function has also attracted wide attention. Based on the ABB method in [

17], Frassoldati et al. [

18] tried to use

close to the reciprocal of the minimum eigenvalue of the Hessian matrix. Their first implementation of this idea was denoted by ABBmin1 and in order to better the iteration effect, they proposed another method, denoted by ABBmin2. De Asmundis et al. [

19] used the spectral property of the stepsize in [

20] to propose an SDC method, the SDC indicated that the Cauchy stepsize

was alternated with the Constant one. In [

21], the Broyden class of quasi-Newton method approximates the inverse of the Hessian matrix by

where

,

and

are the BFGS and DFP matrices satisfying the formula

, respectively. In the quasi-Newton method, these are the two most common corrections for

. Among them, the DFP correction was first proposed by Daviden [

22] and later explained and developed by Fletcher and Powell [

23], while the BFGS correction was summarized from the quasi-Newton method proposed by Broyden, Fletcher, Goldfarb and Shanno independently in 1970 [

24,

25,

26,

27]. Similarly, applying this idea to the BB method, Dai et al. [

28] solved the following equation

to obtain the convex combination of

and

where

, and they further proved that the family of spectral gradient methods of (

12) have R-superlinear convergence for two-dimensional strictly convex quadratics.

In addition to the several stepsize definitions mentioned above, there are also some BB-like stepsize. In [

29], Dai et al. set

and

, where

, they obtained a positive BB-like stepsize by averaging

and

geometrically as follows

whose simplification is equivalent to

and they proved the

R-superlinear convergence of the method. In addition, (

14) can also be seen as a delay extension of the stepsize proposed by Dai and Yang in [

30],

Interestingly, it has been shown in [

30] that (

15) will eventually approach the minimum value of

, precisely

where

and

are the minimum and maximum eigenvalues of

A, and their corresponding eigenvectors are

and

, respectively. The minimum stepsize of (

16) is the optimal stepsize in [

31], i.e.,

In this paper, we mainly research on the three-dimensional cases. That is to say, the coefficient matrix

A of the quadratic term is a third-order matrix. In [

29], their BB-like method applied only to

,

. Based on it, we modify the stepsize in (

14) as follows:

and make it applicable to both cases

and

,

. For the case

,

, we generalize it to a more general form which is

, where

. For these two cases, we have carried out the proof of convergence and numerical experiments.

The paper is organized as follows. In

Section 2, we analyze the new BB-like method which uses the stepsize

for the case of

,

and

,

, we prove the rate of convergence of the new method is

R-superlinear. Additionally, we extend this case to n-dimension, which means that

, where

. And we prove the rate of convergence in the

n-dimensional case is

R-linear.

Section 3 provides the research of the case

,

, and we prove the global convergence of this case under some assumption. In

Section 4, we give some numerical experiment results to show the effectiveness of the new method. Finally, the conclusions are given in

Section 5.

3. The Case Where A Is an Asymmetric Matrix and Its Convergence Analysis

In this case, we consider that

where

. Clearly, in this case,

A has a double characteristic root and it is not a symmetric matrix, so the analysis of this case will be different from that in

Section 2.

Firstly, we give two initial iteration points

, which satisfy

In this case,

so the stepsize will be

And by

, we have

That is to say

we set

so we can obtain

As we know and , but we cannot be sure whether M is positive or negative. So in order to prove the global convergence, we consider two cases and .

Theorem 4. When , the sequence of gradient norms converges to zero.

Proof. At first, we assume that

for all

. Since

, so

and

. Next, as for the product term in (

67) we discuss it in two cases

and

.

Case (i). When

, we have

. By (

67),

If

i.e.,

,

where

, so

converges to zero.

And if

i.e.,

,

where

so

,

converges to zero.

Case (ii). When

, we have

, and

, then

hold. By (

67),

Since (

69) is the same as (

68), so the proof of Case (ii) is similar to Case (i), and

converges to zero, too.

According to the above analysis, we finish the proof. □

Before we prove the case of , we set and then we will give the following theorem.

Theorem 5. When , if , we assume , the sequence of gradient norms converges to zero; if , the sequence also converges to zero.

Proof. Firstly, for any

we assume that

. By (

67), whether

is positive or negative, we have

If

, we can see that

, so

. As we know,

Let

, then we have

From the assumption , we can obtain and , so the sequence converges to zero.

And if , it follows that and then . Since so it is obvious that converges to zero.

Above all, we finish the proof. □

From the above two theorems, we can see that when , , the new method is globally convergent. In order to prove the convergence of the new method, we add the assumption condition , but the value of Q does not need to be considered in the actual calculation, and it does not affect the computational efficiency of the new method.

4. Numerical Results

In this section, we present the results of some numerical experiments on how the new BB-like method using the new stepsize

compares with other BB methods in solving optimization problems. The main difference between the different methods we compare here is the choice of the stepsize. We finally choose the following stepsizes for comparison:

[

4],

[

4],

[

30],

[

32] and the stepsize

in [

28]. From (

12) we can see the stepsize in [

28] is a convex combination, so in our experiments we set

and use it to represent this method. In addition to this, for the case when

A is an

n-dimensional symmetric matrix, we compare our BB-like method with the ABBmin1 and ABBmin2 methods in [

18], and ABB method in [

17]. The calculation results of all methods were completed by Python (v3.9.13). All the runs were carried out on a PC with an Intel Core i5, 2.3 GHz processor and 8 GB of RAM. For the examples we wanted to solve in the numerical experiments, we chose the following termination condition:

for some given

, so that we can obtain the expected results.

In our numerical experiments, we mainly considered five types of optimization problems. And now we give the five examples in specific forms as follows.

Example 1. Consider the following optimization problem,where , initial point , . For Example 1, we compared the number of iterations and the minimum points of the new method with the other five methods in solving optimization problems when

changes. The specific results are shown in

Table 1. Moreover, we give a comparison of the CPU time of different methods when solving Example 1 in

Figure 1.

Example 2. Consider the following optimization problem,where , initial point , . For Example 2, we give the comparison results of the number of iterations and the minimum points of each method when solving the optimization problems with different values of

a and

b in

Table 2. And we give a comparison of the CPU time of different methods when solving Example 2 in

Figure 2.

Example 3. Consider the following optimization problem,where , and the initial point can be chosen at random. For Example 3, due to the particularity of its form,

A is not a symmetric matrix but other forms of BB methods require

A to be a symmetric positive definite matrix. Therefore, we only give the results of the number of iterations and the minimum points of this kind of optimization problems by using the new method when the initial points change in

Table 3.

Example 4. Consider the following optimization problem,where , , and the initial point can be chosen at random. For Example 4, we chose two other methods, ABBmin1 and ABBmin2 methods, to compare with our method. The parameters of ABBmin1 and ABBmin2 methods were selected as in [

18], which were

,

and

, respectively. The initial points we chose were

,

and

. For each initial point, we randomly chose ten different sets of values of

,

, which satisfied

,

10,000, and

was evenly distributed between 1 and 10,000 for

.

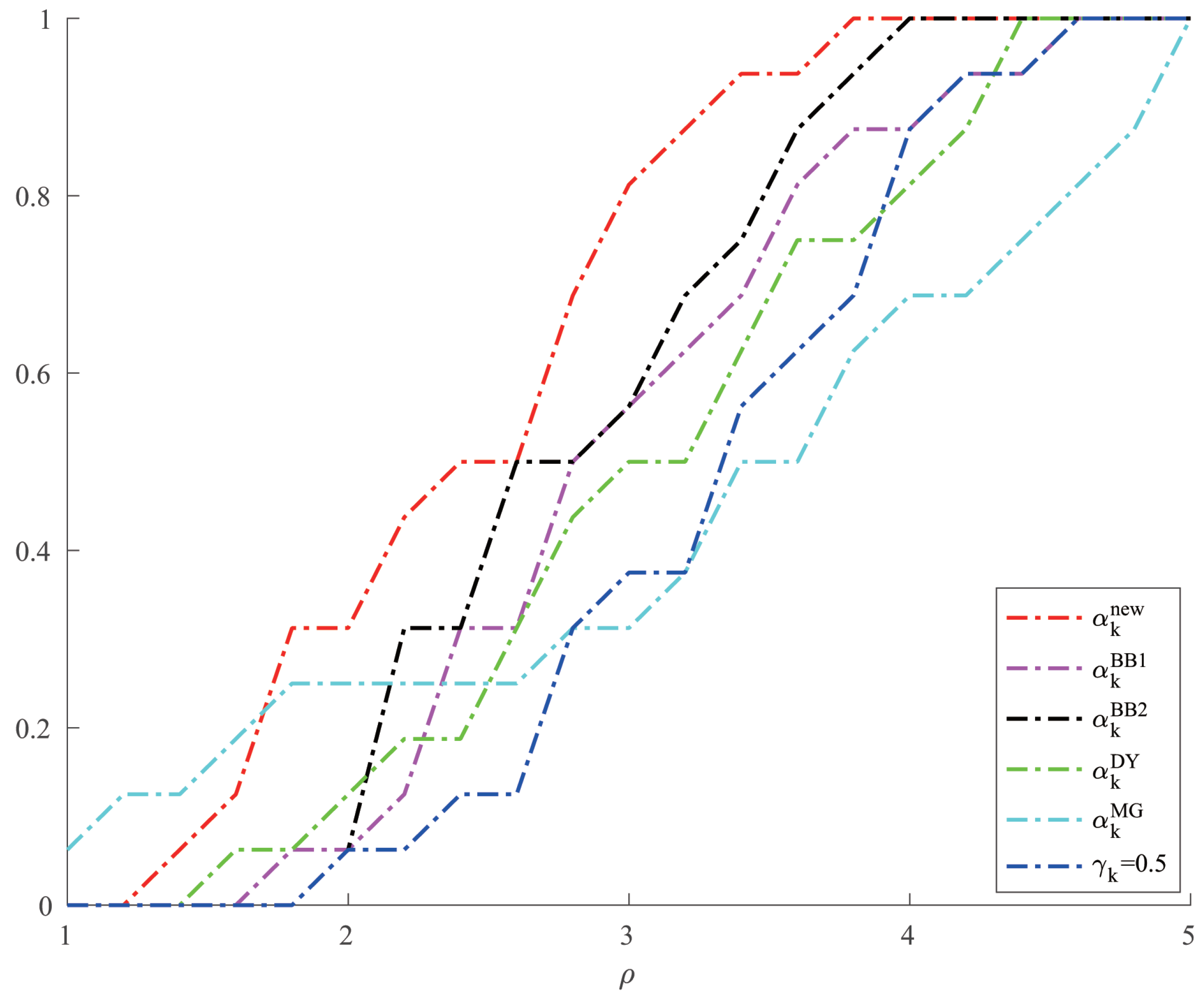

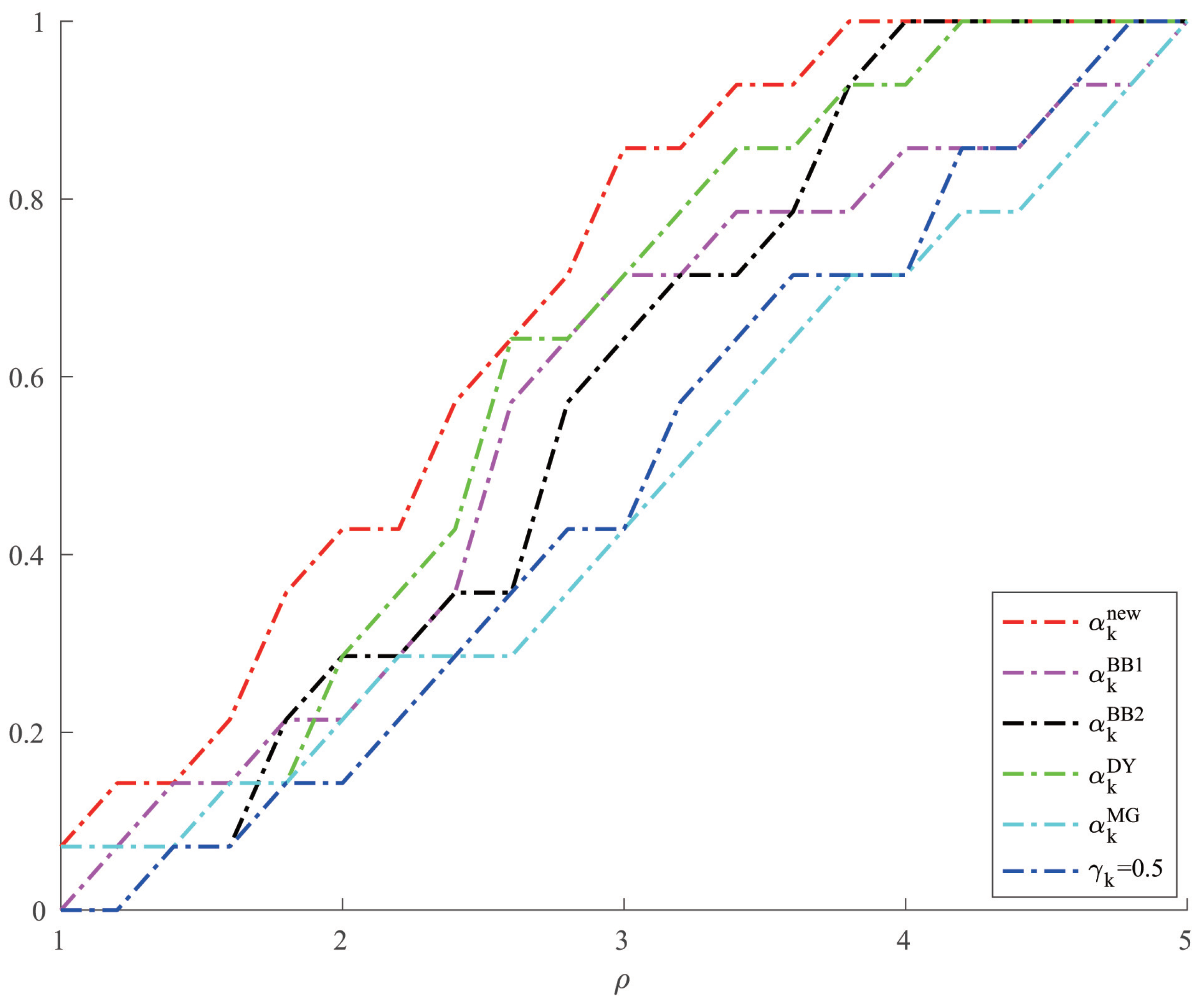

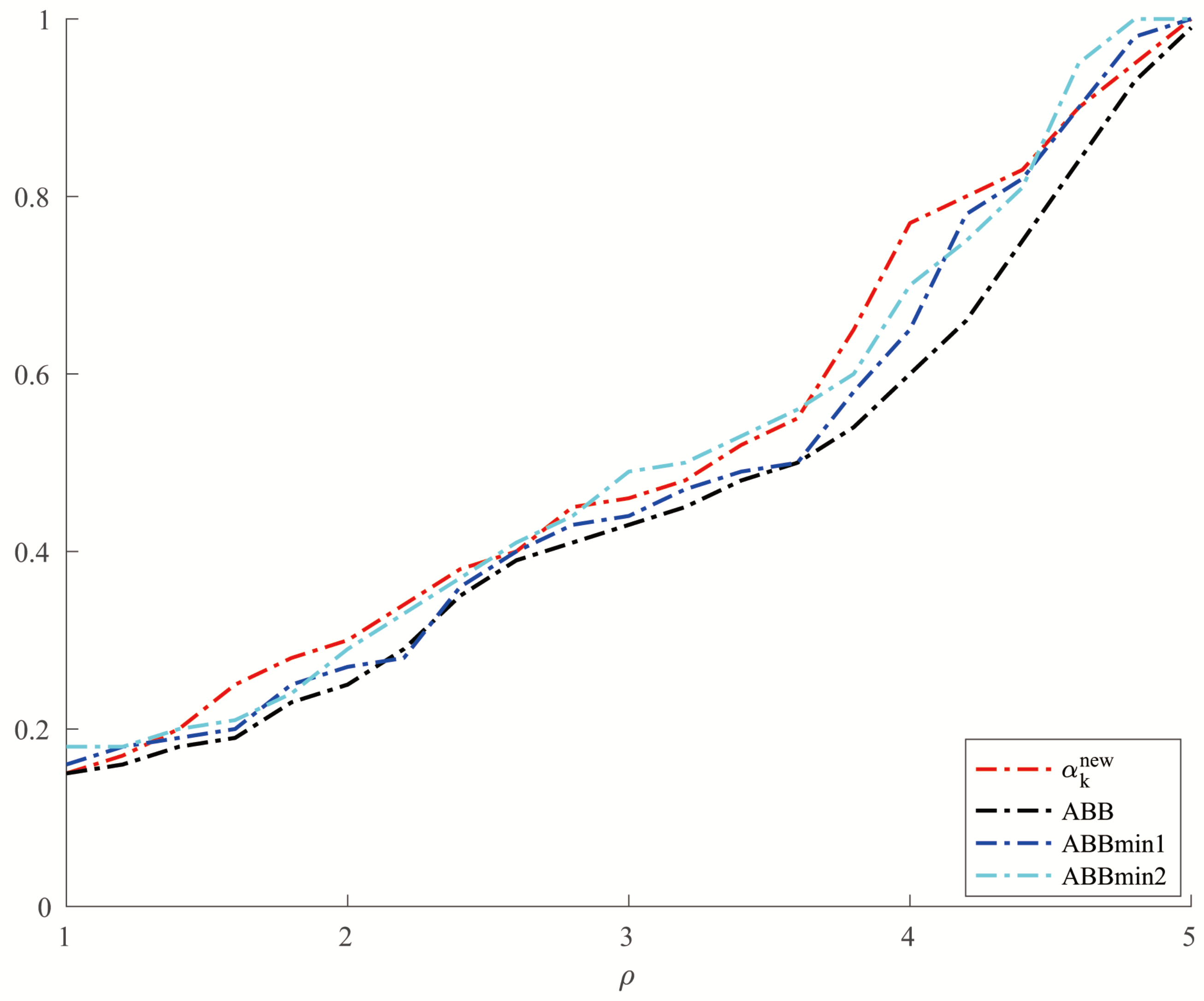

Figure 3 and

Figure 4, respectively, show the results of the comparison of the number of iterations and the CPU time when the three methods solve Example 4.

Example 5 (Random problems in [

33])

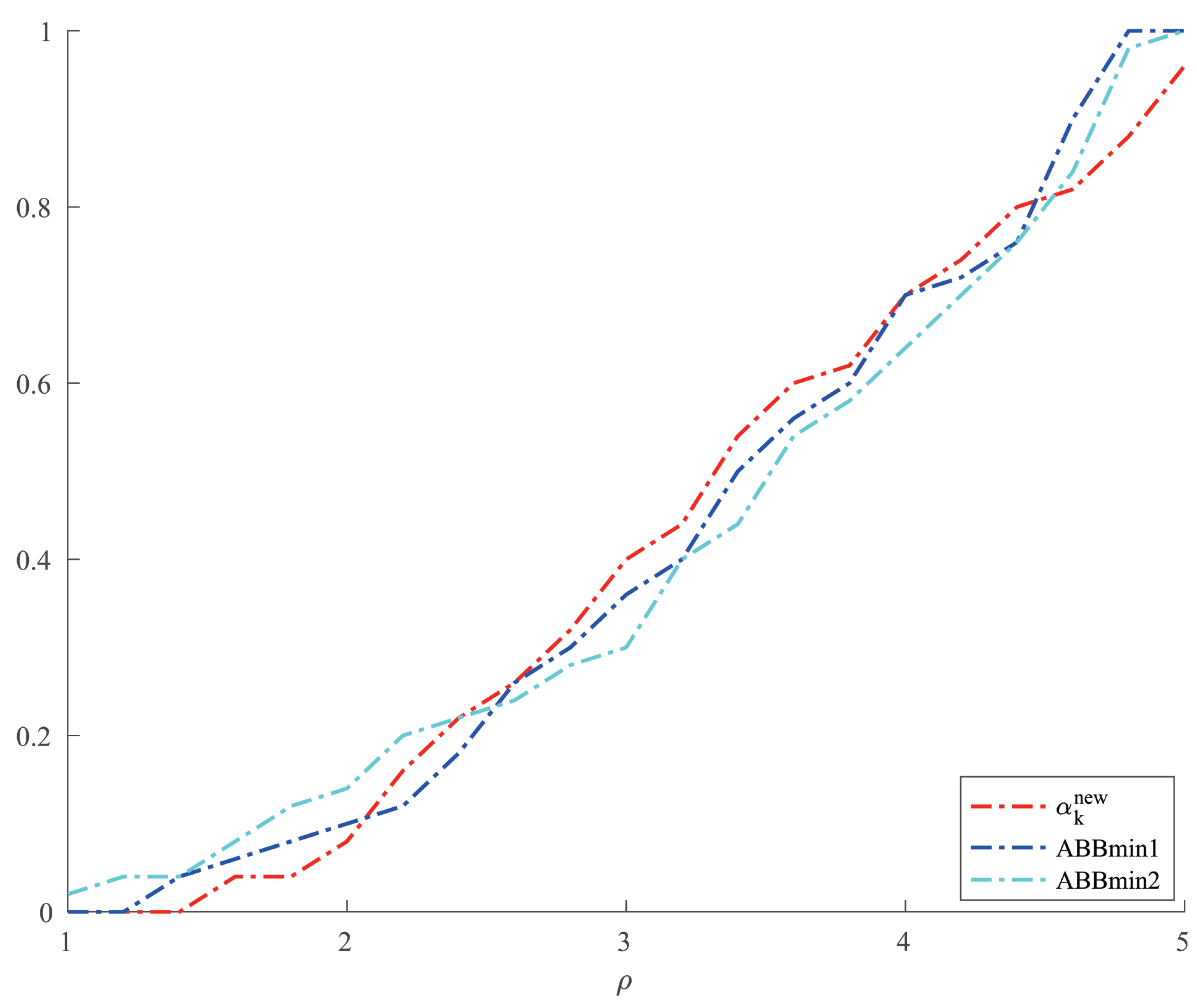

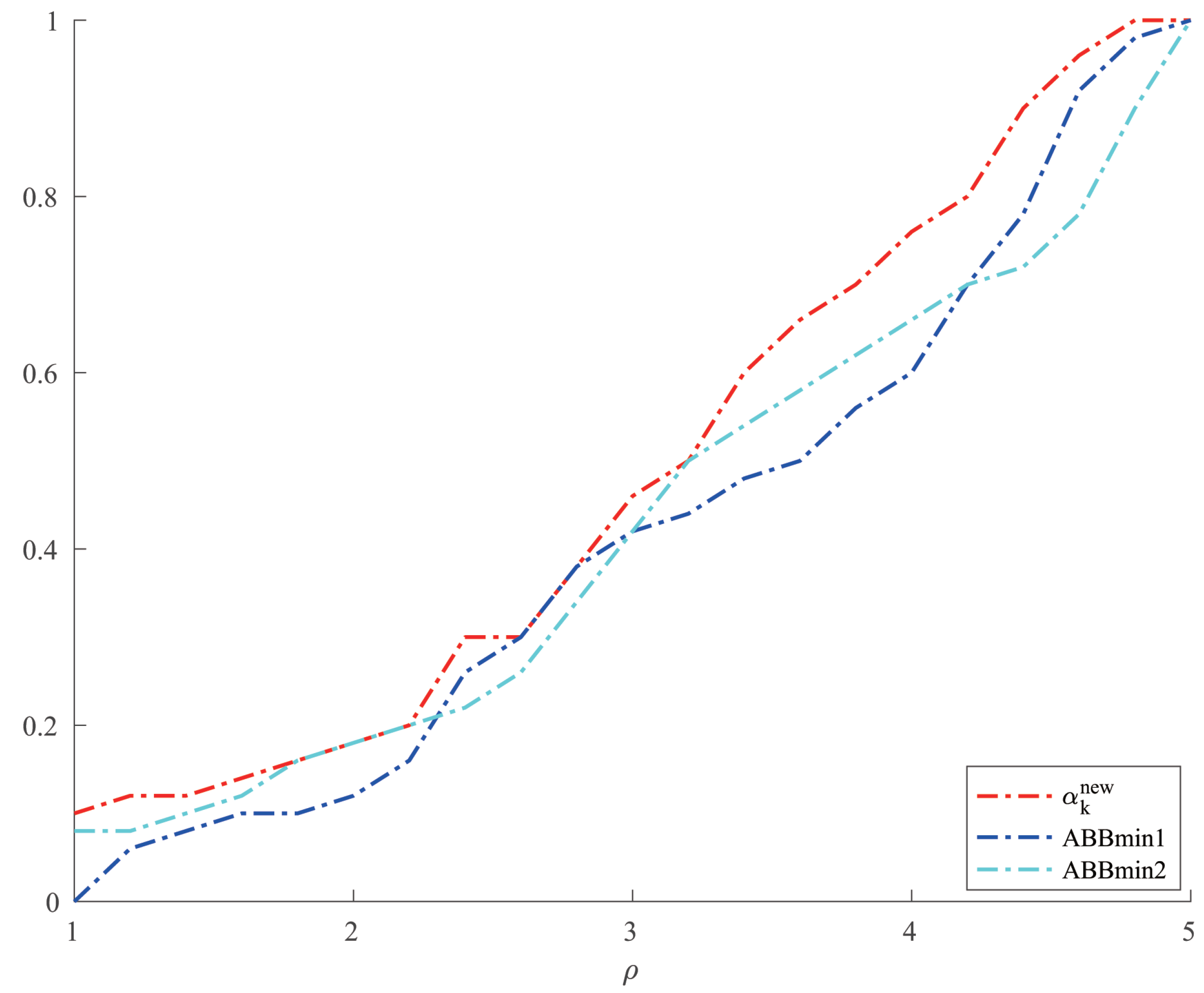

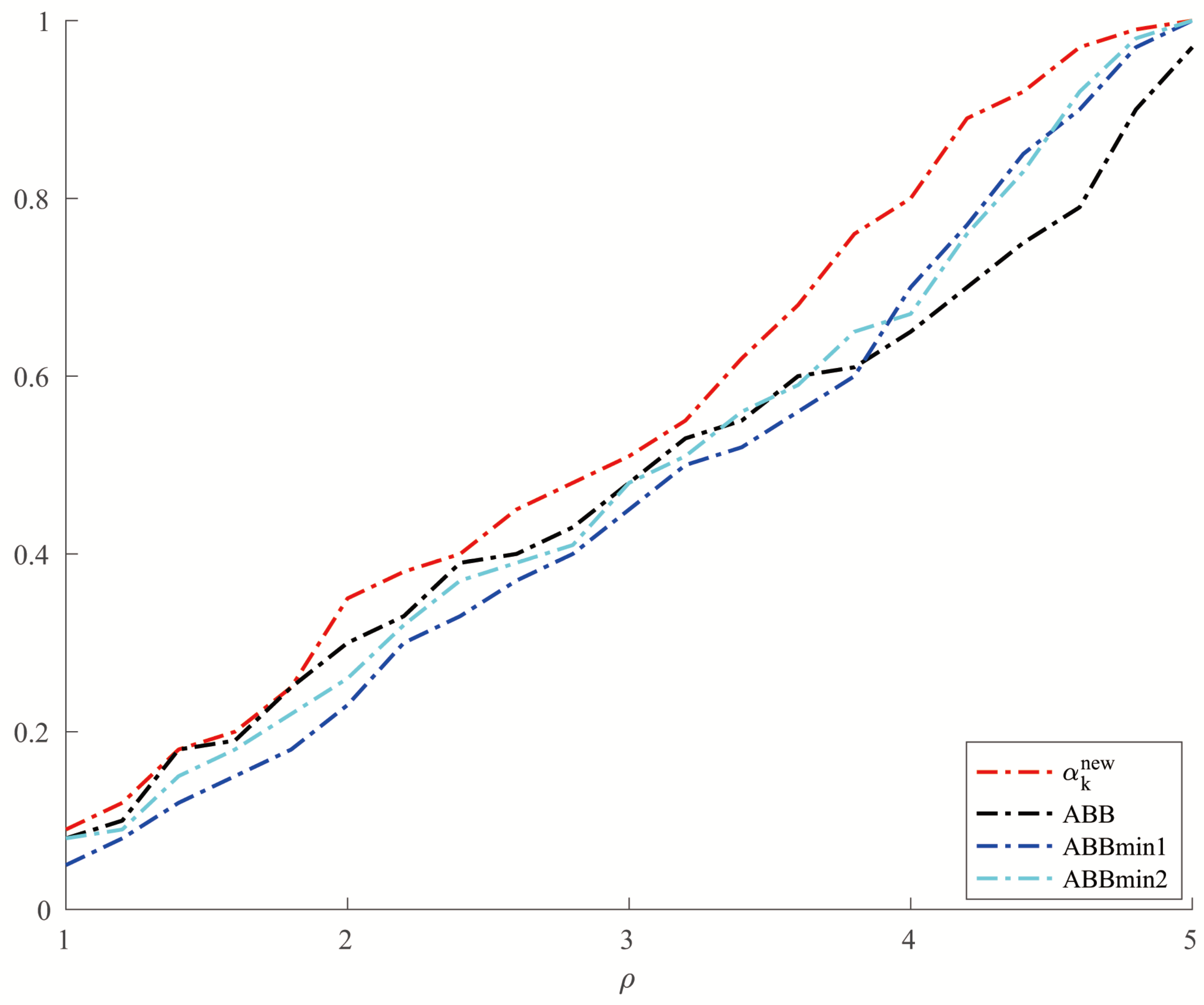

. Consider , whereand , , are unitary random vectors, is a diagonal matrix where , , and is randomly generated between 1 and condition number for . We set , , and the initial point . For Example 5, we set

and allowed a maximum of 10,000 iterations. In order to make a better comparison, we chose three other methods, which were ABBmin1 and ABBmin2 methods, and ABB method. The parameters used by the ABBmin1 and ABBmin2 methods were the same as Example 4. And for the ABB method, we set

, which was different from that in [

17]. In the experiments, three values of the condition number

cond: were chosen. For each value of

, ten instances with

evenly distributed in

were generated,

. The comparison of the number of iterations and the CPU time of several methods in solving Example 5 are shown in

Figure 5 and

Figure 6.

In all tables, ‘iter’ represents the number of iterations and ‘’ represents the optimal solution. And the vertical axis of each figure shows the percentage of problems solved by different methods within the minimum value of the metric factor .

From

Table 1 and

Table 2, we can see that the new method has no obvious advantage in the number of iterations when solving the optimization problems like Examples 1 and 2 and the solution accuracy can reach the level of other methods. However, in terms of CPU time, we can see from

Figure 1 and

Figure 2 that the new method has a clear advantage over other compared methods. When solving optimization problems like Example 3, it is not difficult to see from

Table 3 that the new method can complete well in terms of the number of iterations and the accuracy of the minimum points. And from

Figure 3 and

Figure 4, we can see that there is no significant difference in the number of iterations between the three methods when solving the problems such as Example 4, but the new method has a slight advantage in terms of the CPU time. For the random problems like Example 5, we can see from

Figure 5 that the new method and ABBmin2 method perform better in terms of the number of iterations, while in terms of the CPU time, we can see from

Figure 6 that the new method still has obvious advantages.