Physics-Informed Feature Engineering and R²-Based Signal-to-Noise Ratio Feature Selection to Predict Concrete Shear Strength

Abstract

1. Introduction

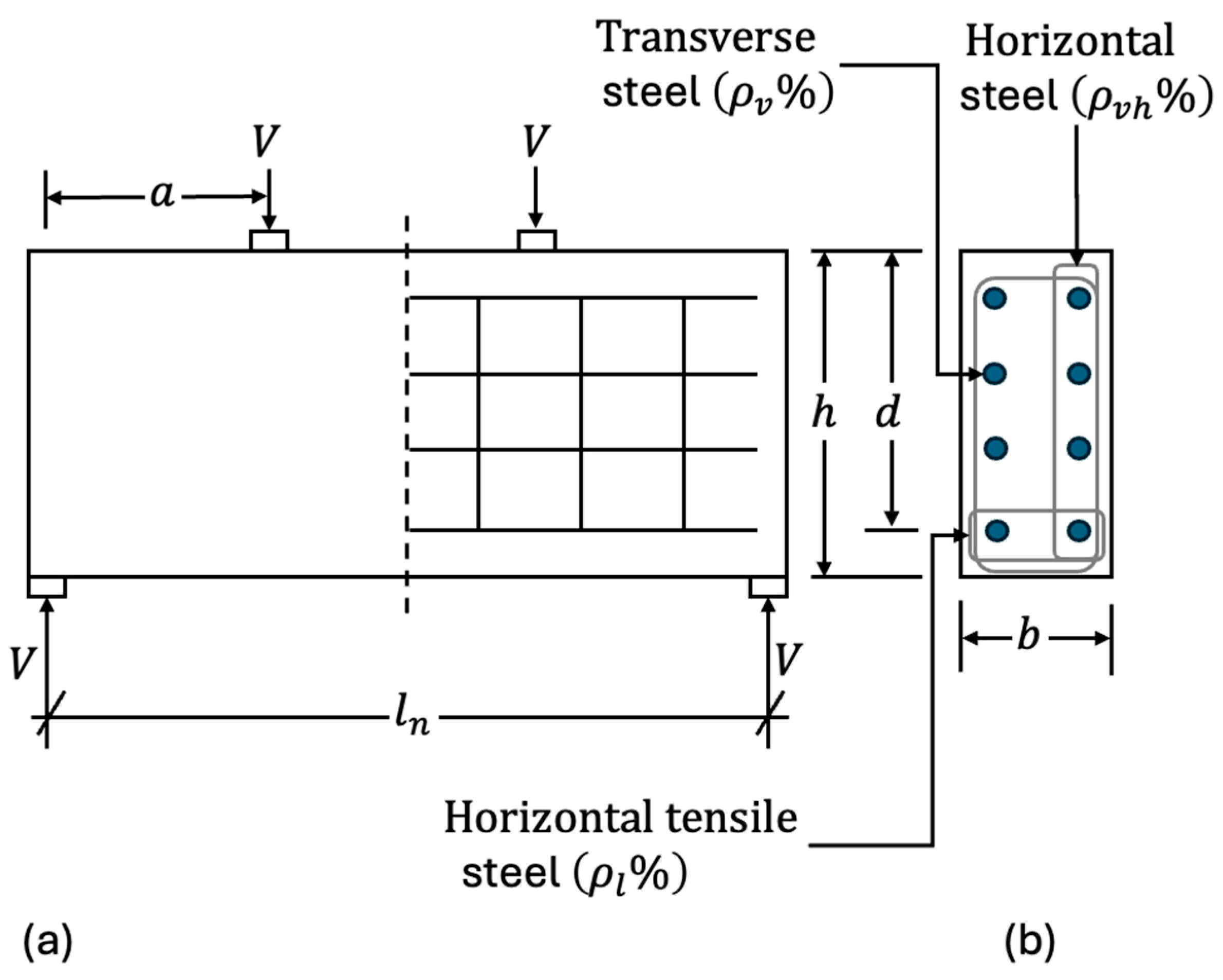

2. Motivation: Concrete Shear Strength

2.1. Experimental Dataset

2.2. Performance Metrics

2.3. ANNs for Concrete Shear Strength Modeling

2.4. Physics-Informed Feature Engineering

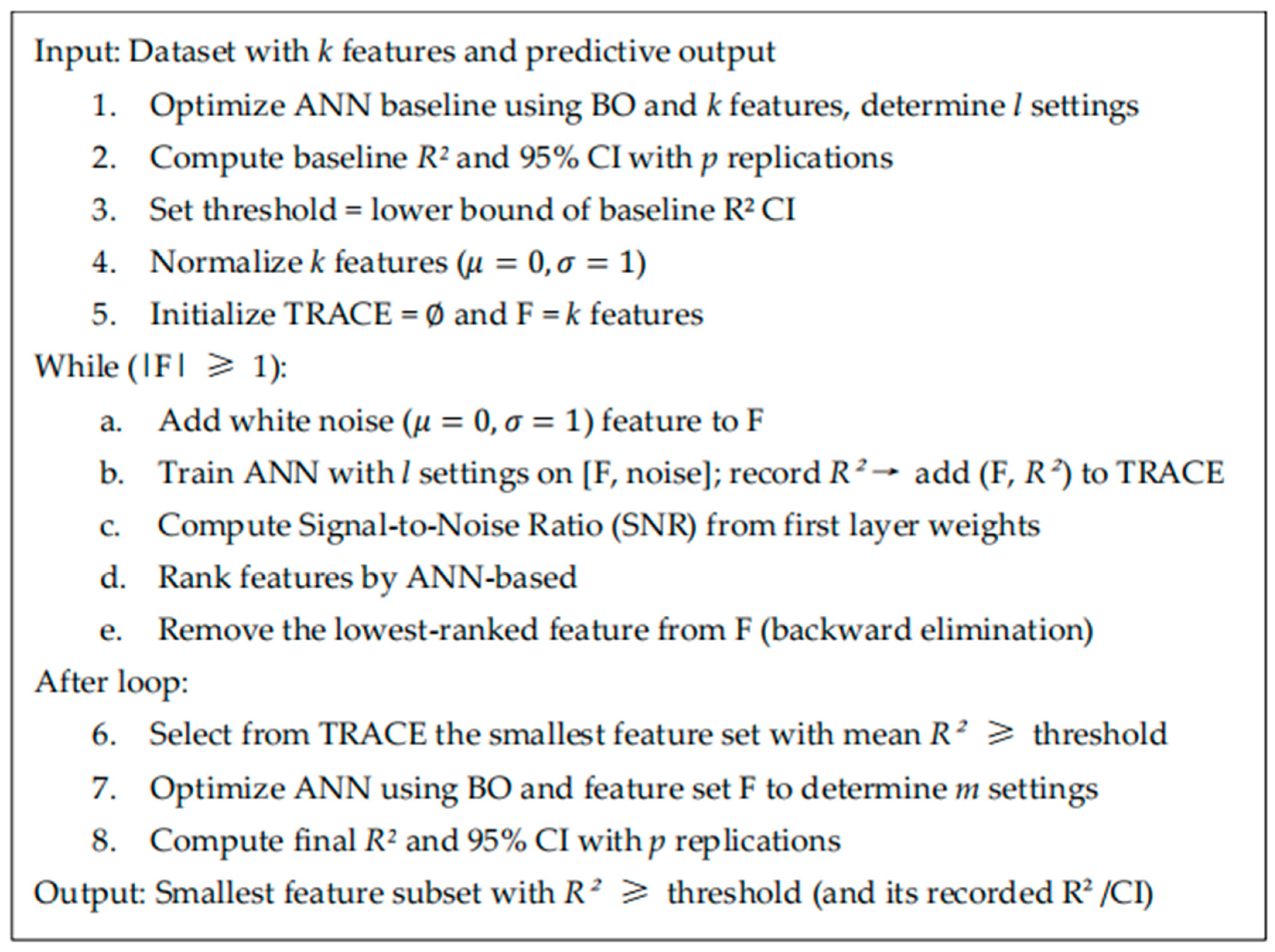
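The specific engineered features are not listed in this part of the text. As a hedged illustration of what "physics-informed" features can look like for this dataset, the sketch below combines raw inputs into terms shaped like the ACI one-way shear expressions (concrete term 2λ√f′c·b·d and stirrup term ρv·fy·b·d). The choice of these terms, the class `Beam`, and the function `aci_style_features` are assumptions for illustration only, not the paper's feature set.

```python
import math
from dataclasses import dataclass


@dataclass
class Beam:
    """Raw inputs, in the units of the dataset table (inches, psi, ksi)."""
    b_in: float      # width of the beam
    d_in: float      # effective depth of the beam
    fc_psi: float    # concrete compressive strength
    fy_ksi: float    # yield strength of the shear reinforcement
    rho_v: float     # vertical shear reinforcement ratio


def aci_style_features(beam: Beam, lam: float = 1.0) -> dict[str, float]:
    """Hypothetical engineered features shaped like ACI one-way shear terms.

    lam is the lightweight-concrete factor (1.0 assumed: normal-weight concrete).
    """
    # Concrete term 2*lam*sqrt(f'c)*b*d evaluates in lb when f'c is in psi
    # and b, d are in inches; divide by 1000 to express it in kips.
    vc_kips = 2.0 * lam * math.sqrt(beam.fc_psi) * beam.b_in * beam.d_in / 1000.0
    # Stirrup term rho_v*f_y*b*d (from A_v*f_y*d/s with rho_v = A_v/(b*s));
    # f_y in ksi and b, d in inches give kips directly.
    vs_kips = beam.rho_v * beam.fy_ksi * beam.b_in * beam.d_in
    return {"vc_kips": vc_kips, "vs_kips": vs_kips, "vn_kips": vc_kips + vs_kips}


if __name__ == "__main__":
    beam = Beam(b_in=10.0, d_in=20.0, fc_psi=4000.0, fy_ksi=60.0, rho_v=0.003)
    print(aci_style_features(beam))
```

Such sectional terms ignore deep-beam (strut-and-tie) action, which matters for the many low shear span-to-depth specimens in the dataset, so they are best treated as candidate inputs for the network rather than as predictions.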

3. Methodology

3.1. Artificial Neural Networks

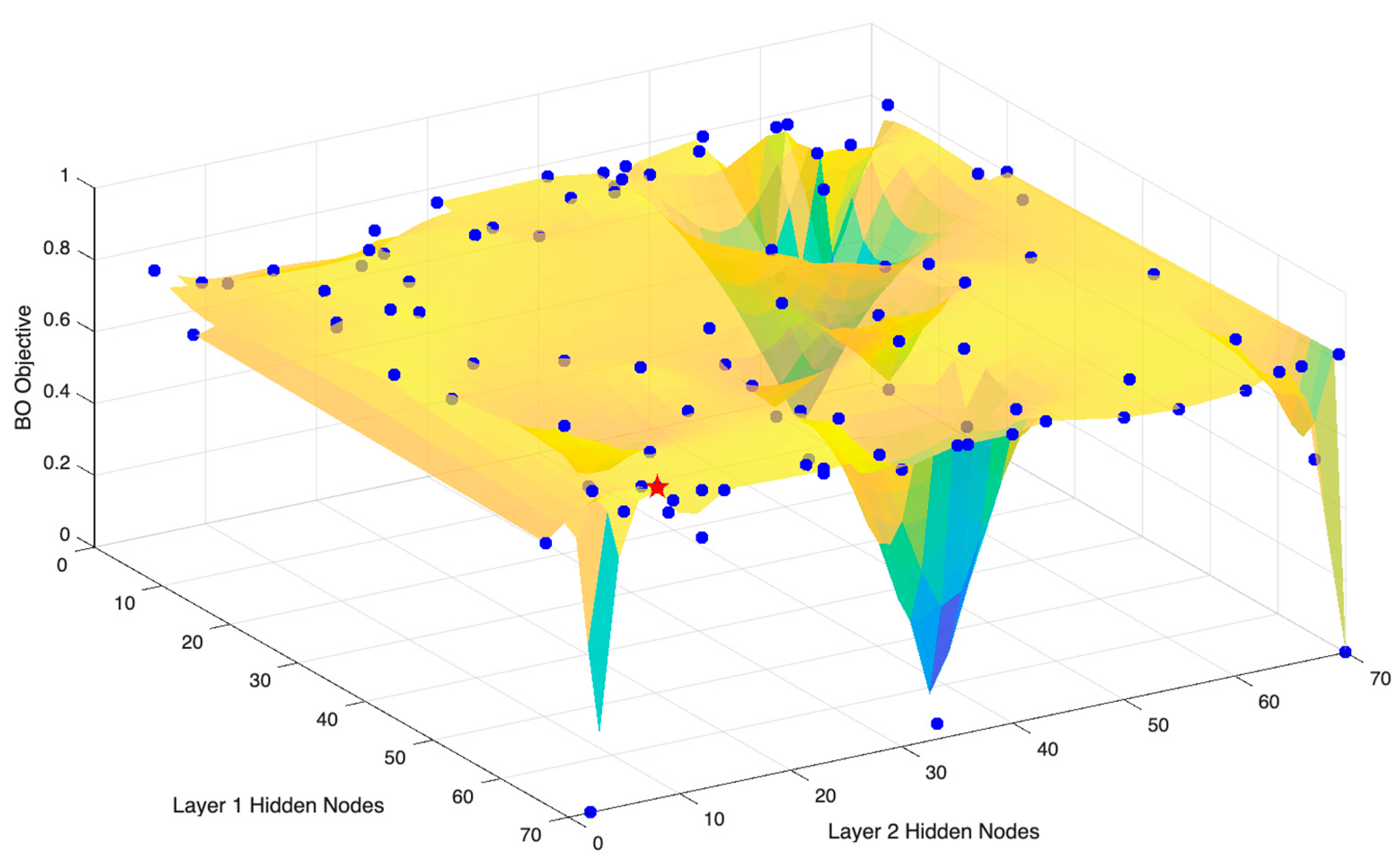
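The results tables describe networks such as "tansig [68, 15]", i.e., two hidden layers with 68 and 15 tansig nodes. A minimal NumPy sketch of that forward pass follows, assuming a linear output node for regression (MATLAB's usual default) and a simple random initialization; `init_mlp` and `forward` are hypothetical helpers, and training (LM, BR, SCG) is not shown.

```python
import numpy as np


def tansig(x: np.ndarray) -> np.ndarray:
    """MATLAB-style tansig: 2 / (1 + exp(-2x)) - 1, which equals tanh(x)."""
    return np.tanh(x)


def init_mlp(sizes: list[int], seed: int = 0) -> list[tuple[np.ndarray, np.ndarray]]:
    """Random (weight, bias) pairs for a fully connected net with these layer sizes."""
    rng = np.random.default_rng(seed)
    return [
        (rng.normal(scale=1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


def forward(params: list[tuple[np.ndarray, np.ndarray]], x: np.ndarray) -> np.ndarray:
    """Tansig hidden layers followed by a linear output layer (regression)."""
    h = x
    for w, b in params[:-1]:
        h = tansig(h @ w + b)
    w_out, b_out = params[-1]
    return h @ w_out + b_out


if __name__ == "__main__":
    # 10 raw inputs -> [68, 15] hidden nodes -> 1 output (predicted shear strength).
    params = init_mlp([10, 68, 15, 1])
    x = np.random.default_rng(1).normal(size=(5, 10))
    print(forward(params, x).shape)  # (5, 1)
```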

3.2. Hyperparameter Determination

3.3. Feature Saliency in Artificial Neural Networks

3.4. ANN-SNR Predictive Stopping Rules

4. Results

4.1. Baseline Results

4.2. ANN-SNR Feature Selection

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| CI | Confidence Interval |
| BO | Bayesian Optimization |
| SNR | Signal-to-Noise Ratio |

References

- Bihl, T.; Sands, T. Dynamic System Modeling for Flexible Structures. Online J. Robot. Autom. Technol. 2024, 3, 1–4.

- Young, W.; Weckman, G.; Brown, M.; Thompson, J. Extracting knowledge of concrete shear strength from artificial neural networks. Int. J. Ind. Eng. Theory Appl. Pract. 2008, 15, 26–35.

- Hornik, K.; Stinchcombe, M.; White, H. Universal approximation of an unknown mapping and its derivatives using multilayer feedforward networks. Neural Netw. 1990, 3, 551–560.

- Londhe, S.; Kulkarni, P.; Dixit, P.; Shah, S.; Joshi, S.; Sahu, P. Unveiling the Artificial Neural Network Mystery with Special Reference to Applications in Civil Engineering. Comput. Eng. Phys. Model. 2024, 7, 18–44.

- Bauer, K.; Alsing, S.; Greene, K. Feature screening using signal-to-noise ratios. Neurocomputing 2000, 31, 29–44.

- Sanad, A.; Saka, M. Prediction of ultimate shear strength of reinforced-concrete deep beams using neural networks. J. Struct. Eng. 2001, 127, 818–828.

- Ghosh, S.K.; Brewe, J. The most notable changes from ACI 318-14 to ACI 318-19 for precast concrete. PCI J. 2024, 69, 23–35.

- Kuchma, D.; Wei, S.; Sanders, D.; Belarbi, A.; Novak, L. Development of the One-Way Shear Design Provisions of ACI 318-19 for Reinforced Concrete. ACI Struct. J. 2019, 116, 285–296.

- Murphy, J.; Paal, S. A combined transfer learning physics informed interpretable machine learning approach to modelling the shear strength of concrete walls. In Proceedings of the EG-ICE 2025: AI-Driven Collaboration for Sustainable and Resilient Built Environments Conference, Glasgow, UK, 1–4 July 2025.

- Smith, K.; Vantsiotis, A. Shear strength of deep beams. J. Proc. 1982, 79, 201–213.

- Rogowsky, D.; MacGregor, J. Shear Strength of Deep Reinforced Concrete Continuous Beams; University of Alberta: Edmonton, AB, Canada, 1983.

- Tan, K.; Kong, F.; Teng, S.; Guan, L. High-strength concrete deep beams with effective span and shear span variations. Struct. J. 1995, 92, 395–405.

- Shin, S.; Lee, K.; Moon, J.; Ghosh, S. Shear strength of reinforced high-strength concrete beams with shear span-to-depth ratios between 1.5 and 2.5. Struct. J. 1999, 96, 549–556.

- Tan, K.; Lu, H. Shear behavior of large reinforced concrete deep beams and code comparisons. Struct. J. 1999, 96, 836–846.

- Oh, J.; Shin, S. Shear strength of reinforced high-strength concrete deep beams. Struct. J. 2001, 98, 164–173.

- Bresler, B.; Scordelis, A. Shear strength of reinforced concrete beams. J. Proc. 1963, 60, 51–74.

- Moody, K.; Viest, I.; Elstner, R.; Hognestad, E. Shear strength of reinforced concrete beams Part 1—Tests of simple beams. J. Proc. 1954, 51, 317–332.

- Ramakrishnan, V.; Ananthanarayana, Y. Ultimate strength of deep beams in shear. J. Proc. 1968, 65, 87–98.

- Kong, F.; Robins, P.; Cole, D. Web reinforcement effects on deep beams. J. Proc. 1970, 67, 1010–1018.

- Subedi, N.; Vardy, A.; Kubota, N. Reinforced concrete deep beams some test results. Mag. Concr. Res. 1986, 38, 206–219.

- Yoon, Y.; Cook, W.; Mitchell, D. Minimum shear reinforcement in normal, medium, and high-strength concrete beams. Struct. J. 1996, 93, 576–584.

- Foster, S.; Gilbert, R. Experimental studies on high-strength concrete deep beams. Struct. J. 1998, 95, 382–390.

- de Paiva, H.; Siess, C. Strength and behavior of deep beams in shear. J. Struct. Div. 1965, 91, 19–41.

- Angelakos, D.; Bentz, E.; Collins, M. Effect of concrete strength and minimum stirrups on shear strength of large members. Struct. J. 2001, 98, 291–300.

- Kani, M.; Huggins, M.; Wittkopp, R. Shear in Reinforced Concrete; University of Toronto: Toronto, ON, Canada, 1979.

- Krefeld, W.; Thurston, C. Studies of the shear and diagonal tension strength of simply supported reinforced concrete beams. J. Proc. 1966, 63, 451–476.

- De Cossio, R.; Siess, C. Behavior and strength in shear of beams and frames without web reinforcement. J. Proc. 1960, 56, 695–736.

- Hsiung, W.; Frantz, G. Transverse Stirrup Spacing in RC Beam. J. Struct. Eng. 1985, 111, 353–362.

- Watstein, D.; Mathey, R. Strains in beams having diagonal cracks. J. Proc. 1958, 55, 717–728.

- Morrow, J.; Viest, I. Shear strength of reinforced concrete frame members without web reinforcement. J. Proc. 1957, 53, 833–869.

- Ferguson, P. Some implications of recent diagonal tension tests. J. Proc. 1956, 53, 157–172.

- Van Den Berg, F. Shear strength of reinforced concrete beams without web reinforcement. J. Proc. 1962, 59, 1587–1600.

- Clark, A. Diagonal tension in reinforced concrete beams. J. Proc. 1951, 48, 145–156.

- Ahmad, S.L.D. Flexure-shear interaction of reinforced high strength concrete beams. Struct. J. 1987, 84, 330–341.

- Rajagopalan, K.; Ferguson, P. Exploratory shear tests emphasizing percentage of longitudinal steel. J. Proc. 1968, 65, 634–638.

- Ozcebe, G.; Ersoy, U.; Tankut, T. Minimum flexural reinforcement for T-beams made of higher strength concrete. Can. J. Civ. Eng. 1999, 26, 525–534.

- Kong, P.; Rangan, B. Shear strength of high-performance concrete beams. Struct. J. 1998, 95, 677–688.

- Tan, K.; Kong, F.; Teng, S.; Weng, L. Effect of web reinforcement on high-strength concrete deep beams. Struct. J. 1997, 94, 572–582.

- Chang, T.; Kesler, C. Correlation of Sonic Properties of Concrete with Creep and Relaxation; University of Illinois: Champaign, IL, USA, 1956.

- Xie, Y.; Ahmad, S.; Yu, T.; Hino, S.; Chung, W. Shear ductility of reinforced concrete beams of normal and high-strength concrete. Struct. J. 1994, 91, 140–149.

- Sarsam, K.; Al-Musawi, J. Shear design of high-and normal strength concrete beams with web reinforcement. Struct. J. 1992, 89, 658–664.

- Johnson, M.; Ramirez, J. Minimum shear reinforcement in beams with higher strength concrete. Struct. J. 1989, 86, 376–382.

- Roller, J.J.; Russell, H.G. Shear Strength of High-Strength Concrete Beams with Web Reinforcement. ACI Struct. J. 1990, 87, 191–198.

- Rigotti, M. Diagonal Cracking in Reinforced Concrete Deep Beams: An Experimental Investigation. Ph.D. Thesis, Concordia University, Montreal, QC, Canada, 2002.

- Yoshida, Y. Shear Reinforcement for Large Lightly Reinforced Concrete Members. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2000.

- Cao, S. Size Effect and the Influence of Longitudinal Reinforcement on the Shear Response of Large Reinforced Concrete Members. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2001.

- Laupa, A.; Siess, C.; Newmark, N. The Shear Strength of Simple-Span Reinforced Concrete Beams Without Web Reinforcement; Civil Engineering Studies SRS-052; University of Illinois at Urbana-Champaign: Champaign, IL, USA, 1953.

- Bazant, Z.; Kazemi, M. Size effect on diagonal shear failure of beams without stirrups. ACI Struct. J. 1991, 88, 268–276.

- Yang, K.; Chung, H.; Lee, E.; Eun, H. Shear characteristics of high-strength concrete deep beams without shear reinforcements. Eng. Struct. 2003, 25, 1343–1352.

- Shioya, T.; Iguro, M.; Nojiri, Y.; Akiyama, H.; Okada, T. Shear strength of large reinforced concrete beams. Fract. Mech. Appl. Concr. 1990, 118, 259–280.

- Kutner, M.; Nachtsheim, C.; Neter, J.; Li, W. Applied Linear Statistical Models; McGraw-Hill Irwin: New York, NY, USA, 2005.

- Markou, G.; Bakas, N. Prediction of the shear capacity of reinforced concrete slender beams without stirrups by applying artificial intelligence algorithms in a big database of beams generated by 3D nonlinear finite element analysis. Comput. Concr. 2021, 28, 533–547.

- Bihl, T.; Young, W., II; Moyer, A.; Frimel, S. Artificial neural networks and data science. In Encyclopedia of Data Science and Machine Learning; IGI Global: Hershey, PA, USA, 2023; pp. 899–921.

- Bihl, T.; Schoenbeck, J.; Steeneck, D.; Jordan, J. Easy and efficient hyperparameter optimization to address some artificial intelligence “ilities”. In Proceedings of the Hawaii International Conference on System Sciences, Maui, HI, USA, 7–10 January 2020; pp. 6965–6974.

- Wang, X.; Jin, Y.; Schmitt, S.; Olhofer, M. Recent advances in Bayesian optimization. ACM Comput. Surv. 2023, 55, 287.

- Guyon, I.; Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

- Moore, K.; Bihl, T.; Bauer, K., Jr.; Dube, T. Feature extraction and feature selection for classifying cyber traffic threats. J. Def. Model. Simul. 2017, 14, 217–231.

- Bihl, T.; Bauer, K.; Temple, M. Feature selection for RF fingerprinting with multiple discriminant analysis and using ZigBee device emissions. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1862–1874.

- Steppe, J.; Bauer, K.J. Improved feature screening in feedforward neural networks. Neurocomputing 1996, 13, 47–58.

- Belue, L.; Bauer, K.J. Determining input features for multilayer perceptrons. Neurocomputing 1995, 7, 111–121.

- Raissi, M.; Perdikaris, P.; Karniadakis, G. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

- Angelakos, D. The Influence of Concrete Strength and Longitudinal Reinforcement Ratio on the Shear Strength of Large-Size Reinforced Concrete Beams With, and Without, Transverse Reinforcement. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 1999.

| Variable | [Range] (S.D.) and Units |
|---|---|
| Width of the beam | [1.5, 79.1] (4.45) inches |
| Effective depth of the beam | [0.8, 118.1] (10.12) inches |
| Compressive strength of concrete used in the beam | [880, 18,170] (0.31) psi |
| Yield strength of the shear reinforcement | [20.3, 258] (20.54) ksi |
| Longitudinal reinforcement ratio (area of steel reinforcement divided by area of concrete) | [0.001, 0.070] (0.012) |
| Vertical shear reinforcement ratio | [0.001, 0.029] (0.003) |
| Longitudinal shear reinforcement ratio | [0, 0.077] (0.004) |
| Shear span (distance between the applied load and the support) | [2.4, 708.6] (40.78) inches |
| Shear span-to-depth ratio | [0.27, 9.7] (1.82) |
| Clear span of the beam | [5.6, 1417.2] (81.5) inches |
| Measured shear strength of the specimen at the face of the support | [0.33, 502.35] (53.78) kips |
| Predicted shear strength at the face of the support (by sources) | [0.20, 828.21] (44.49) kips |

| Algorithm | Features | Architecture | R² |
|---|---|---|---|
| Baseline [2] | ,, | logsig [7, 4] LM with MSE | Mean R²: 0.866; Range R²: [0.841, 0.892] |
| Baseline [2] | , | logsig [7, 4] LM with MSE | Mean R²: 0.877; Range R²: [0.832, 0.903] |

| Hyperparameter | Description | Range |
|---|---|---|
| Layer 1 nodes | Neurons in the first hidden layer; controls initial feature extraction. | [3, 70] |
| Layer 2 nodes | Neurons in the second hidden layer; refines and combines features. | [1, 70] |
| Activation function | Nonlinear function used for each node in the hidden layers | [tansig, logsig, poslin] |
| Regularization | L2 penalty to improve generalization and reduce overfitting | [0, 0.4] |
| Training | Training algorithm used | [LM, BR, SCG] |
| Patience | Maximum epochs before training stops | [4000, 5000] |
| Performance Metric | Error function for optimization | [MAE, MSE, MSEReg] |
| BO Iterations | Iterations for BO to search over this space | 100 |
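The search space in the hyperparameter table can be written down as a small configuration object. The sketch below does that and then draws candidates uniformly at random. This sampling is a deliberate stand-in for Bayesian optimization, which would instead choose each next candidate from a surrogate model of past results; the names `SearchSpace` and `sample_candidate` are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchSpace:
    """Hyperparameter ranges transcribed from the hyperparameter table."""
    layer1_nodes: tuple[int, int] = (3, 70)
    layer2_nodes: tuple[int, int] = (1, 70)
    activations: tuple[str, ...] = ("tansig", "logsig", "poslin")
    regularization: tuple[float, float] = (0.0, 0.4)
    training: tuple[str, ...] = ("LM", "BR", "SCG")
    patience: tuple[int, int] = (4000, 5000)
    metrics: tuple[str, ...] = ("MAE", "MSE", "MSEReg")
    iterations: int = 100


def sample_candidate(space: SearchSpace, rng: random.Random) -> dict:
    """Draw one configuration uniformly (a stand-in for a BO acquisition step)."""
    return {
        "layer1_nodes": rng.randint(*space.layer1_nodes),  # inclusive bounds
        "layer2_nodes": rng.randint(*space.layer2_nodes),
        "activation": rng.choice(space.activations),
        "regularization": rng.uniform(*space.regularization),
        "training": rng.choice(space.training),
        "patience": rng.randint(*space.patience),
        "metric": rng.choice(space.metrics),
    }


if __name__ == "__main__":
    space = SearchSpace()
    rng = random.Random(42)
    candidates = [sample_candidate(space, rng) for _ in range(space.iterations)]
    print(candidates[0])
```

Keeping the ranges in one frozen object makes it easy to hand the same space to a real BO routine later without retyping the bounds.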

| Algorithm | Features | Architecture | R² |
|---|---|---|---|
| BO-ANN | ,, | tansig [68, 15] LM with MAE, Reg = 0.005395 | Mean R²: 0.8564; 95% CI: [0.8208, 0.8920] |
| BO-ANN Engineered | | tansig [61, 14] LM with MAE, Reg = 0.0017861 | Mean R²: 0.6518; 95% CI: [0.5524, 0.7512] |
| BO-ANN Hybrid | ,, | tansig [64, 24] LM with MAE, Reg = 0.0015075 | Mean R²: 0.8428; 95% CI: [0.8181, 0.8675] |
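These tables summarize each model by a mean R² with a 95% confidence interval across repeated runs. A minimal sketch of such a summary follows, assuming R² is the coefficient of determination (some studies instead report the squared Pearson correlation) and that the interval is a Student-t interval over independent runs; the number of runs and the interval method are assumptions, and `r_squared` and `mean_with_ci95` are hypothetical helpers.

```python
import math
import statistics


def r_squared(y_true: list[float], y_pred: list[float]) -> float:
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    mean_y = statistics.fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def mean_with_ci95(scores: list[float], t_crit: float) -> tuple[float, float, float]:
    """Mean of per-run scores with a two-sided 95% t-interval.

    t_crit is the 0.975 quantile of Student's t with len(scores) - 1
    degrees of freedom (about 2.262 for 10 runs).
    """
    n = len(scores)
    mean = statistics.fmean(scores)
    half_width = t_crit * statistics.stdev(scores) / math.sqrt(n)
    return mean, mean - half_width, mean + half_width
```

The critical value is passed in because the standard library has no t quantile function; with SciPy available, `scipy.stats.t.ppf(0.975, n - 1)` would supply it.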

| Iteration | Features and SNR (dB) | | | | | | | | | | R² |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.16 | −0.03 | 0.31 | −1.6 | 0.21 | −1.07 | −0.85 | −0.51 | −0.58 | −0.34 | 0.841 |
| 2 | 1.08 | −0.42 | 0.63 | - | −1.44 | 0.09 | −0.77 | −0.19 | −0.64 | −0.57 | 0.867 |
| 3¹ | −0.41 | −0.57 | 0.74 | - | - | −0.09 | −1.06 | −0.18 | 0.25 | −0.55 | 0.843 |
| 4¹ | 1.16 | 0.53 | 1.02 | - | - | −0.05 | - | 0.45 | 1.32 | 1.23 | 0.822 |
| 5 | 1.99 | 0.79 | 3.24 | - | - | - | - | 1.29 | 1.14 | 0.97 | 0.722 |
| 6 | −0.86 | - | −0.51 | - | - | - | - | −0.53 | −0.72 | −0.74 | 0.541 |
| 7 | 0.96 | - | 1.33 | - | - | - | - | 1.41 | 0.86 | 1.38 | 0.682 |
| 8 | −1.17 | - | −1.40 | - | - | - | - | −0.17 | - | −1.04 | 0.754 |
| 9 | −0.82 | - | - | - | - | - | - | −0.05 | - | 0.98 | 0.547 |
| 10 | - | - | - | - | - | - | - | 0.98 | - | 0.59 | 0.576 |
| 11 | - | - | - | - | - | - | - | - | - | −0.72 | 0.022 |
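The SNR values in these tables are in decibels, and negative values mark features that look weaker than noise. In the classic weight-based SNR screening of Bauer et al., a uniform-noise input is added to the network, and each input's saliency is 10·log10 of the sum of its squared first-layer weights divided by the same sum for the noise input. The sketch below implements that classic form; whether this paper's R²-based variant defines the ratio the same way is an assumption, and `snr_saliency_db` and `least_salient` are hypothetical names.

```python
import numpy as np


def snr_saliency_db(w_in: np.ndarray, noise_index: int) -> np.ndarray:
    """Weight-based SNR saliency in dB for every input row of w_in.

    w_in has shape (n_inputs, n_hidden); row i holds the first-layer weights
    leaving input i, and row noise_index belongs to an injected noise input.
    """
    # Sum of squared first-layer weights leaving each input.
    energy = np.sum(w_in ** 2, axis=1)
    return 10.0 * np.log10(energy / energy[noise_index])


def least_salient(snr_db: np.ndarray, noise_index: int) -> int:
    """Index of the real (non-noise) input with the lowest SNR."""
    masked = snr_db.astype(float).copy()
    masked[noise_index] = np.inf  # never select the noise reference itself
    return int(np.argmin(masked))


if __name__ == "__main__":
    # Toy weights: input 0 is strong, input 1 is weak, input 2 is the noise input.
    w = np.array([[1.0, 0.0], [0.1, 0.0], [0.1, 0.0]])
    snr = snr_saliency_db(w, noise_index=2)
    print(snr)                                  # approximately [20, 0, 0]
    print(least_salient(snr, noise_index=2))    # 1
```

Applied to iteration 1 of the raw-feature table (with the noise input's own 0 dB appended), `least_salient` picks the fourth feature (−1.6 dB), which is indeed absent from iteration 2.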

| Iteration | Features and SNR (dB) | | | | | R² |
|---|---|---|---|---|---|---|
| 1¹ | 1.14 | 0.37 | −0.58 | 0.16 | −0.49 | 0.591 |
| 2 | −0.51 | −0.97 | - | −1.33 | 0.17 | 0.526 |
| 3 | 0.44 | −1.07 | - | - | 0.26 | 0.481 |
| 4 | −0.01 | - | - | - | 0.41 | 0.356 |
| 5 | - | - | - | - | −0.42 | 0.202 |

| Iter | Features and SNR (dB) | | | | | | | | | | | | | | | R² |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 14.3 | 12.1 | 9.92 | 13.7 | 11.7 | 23.1 | 20.35 | 11.5 | 15.2 | 25.1 | 15.7 | 16.4 | 15.7 | 14.8 | 19.5 | 0.78 |
| 2 | 11.5 | 10.4 | - | 9.9 | 5.69 | 17.5 | 14.5 | 13.4 | 14.3 | 21.4 | 9.66 | 18.7 | 9.65 | 2.89 | 5.35 | 0.841 |
| 3 | 11.7 | 15.7 | - | 9.57 | 2.41 | 13.29 | 8.52 | 11.73 | 10.35 | 15.7 | 5.34 | 18.98 | 5.34 | - | 6.91 | 0.804 |
| 4 | 5.44 | −0.61 | - | −0.99 | - | 9.74 | 4.94 | 4.46 | −1.54 | 11.55 | 1.38 | 5.95 | 1.38 | - | 8.57 | 0.786 |
| 5 | 4.01 | - | - | −0.81 | - | 3.18 | 3.47 | 3.44 | - | 6.70 | 4.71 | 6.85 | 1.83 | - | 4.36 | 0.799 |
| 6 | 6.97 | - | - | - | - | 11.83 | 9.63 | 8.13 | - | 15.87 | 6.59 | 13.10 | 6.59 | - | 12.2 | 0.818 |
| 7 | 11.89 | - | - | - | - | 17.04 | 12.47 | 8.02 | - | 18.27 | 14.07 | 16.08 | - | - | 16.83 | 0.812 |
| 8¹ | −1.46 | - | - | - | - | 0.37 | 2.74 | - | - | 6.15 | −0.37 | 1.60 | - | - | 4.11 | 0.832 |
| 9 | - | - | - | - | - | 18.89 | 16.41 | - | - | 20.39 | 15.39 | 18.37 | - | - | 21.24 | 0.801 |
| 10 | - | - | - | - | - | 22.80 | 16.95 | - | - | 23.11 | - | 21.82 | - | - | 21.35 | 0.805 |
| 11 | - | - | - | - | - | 3.28 | - | - | - | 5.73 | - | 4.67 | - | - | 5.86 | 0.763 |
| 12 | - | - | - | - | - | - | - | - | - | 21.64 | - | 10.54 | - | - | 17.88 | 0.659 |
| 13 | - | - | - | - | - | - | - | - | - | 16.3 | - | - | - | - | 14.53 | 0.652 |
| 14 | - | - | - | - | - | - | - | - | - | 1.9 | - | - | - | - | - | 0.162 |
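Each SNR table traces R² as features are removed, so some rule is needed to decide which iteration's subset to keep. The sketch below shows one simple, hypothetical stopping rule, not necessarily the authors' ANN-SNR rule: keep removing features until R² falls more than a tolerance δ below the best R² seen so far, then return the last iteration that stayed within δ. The function name `stop_iteration` and the tolerance values are assumptions.

```python
def stop_iteration(r2_by_iter: list[float], tolerance: float) -> int:
    """Return the 1-based iteration just before R^2 first drops more than
    `tolerance` below the best R^2 observed so far (hypothetical rule)."""
    best = float("-inf")
    last_ok = 0
    for i, r2 in enumerate(r2_by_iter, start=1):
        best = max(best, r2)
        if best - r2 > tolerance:
            break  # performance has degraded too far; stop eliminating
        last_ok = i
    return last_ok


if __name__ == "__main__":
    # R^2 column of the raw-feature SNR table (iterations 1 to 11).
    raw = [0.841, 0.867, 0.843, 0.822, 0.722, 0.541, 0.682, 0.754, 0.547, 0.576, 0.022]
    print(stop_iteration(raw, tolerance=0.05))  # 4
    print(stop_iteration(raw, tolerance=0.01))  # 2
```

On the raw-feature R² column, δ = 0.05 stops at iteration 4 while δ = 0.01 stops at iteration 2, which shows how strongly the retained subset depends on the tolerance chosen.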

| Algorithm | Features | Architecture | R² |
|---|---|---|---|
| BO-ANN-SNR3 | | tansig [68, 15] LM with MAE, Reg = 0.005395 | Mean R²: 0.8358; 95% CI: [0.8160, 0.8556] |
| BO-ANN-SNR4 | ,, | tansig [68, 15] LM with MAE, Reg = 0.005395 | Mean R²: 0.7824; 95% CI: [0.7494, 0.8154] |
| BO-ANN-SNR Engineered | | tansig [61, 14] LM with MAE, Reg = 0.0017861 | Mean R²: 0.6518; 95% CI: [0.5524, 0.7512] |
| BO-ANN-SNR Hybrid | | tansig [64, 24] LM with MAE, Reg = 0.0015075 | Mean R²: 0.8524; 95% CI: [0.8312, 0.8736] |

| Algorithm | Features | R² |
|---|---|---|
| BO-ANN-SNR3 | 8 raw data features | Mean R²: 0.8358; 95% CI: [0.8160, 0.8556] |
| BO-ANN | 10 raw data features | Mean R²: 0.8564; 95% CI: [0.8208, 0.8920] |
| BO-ANN-SNR Hybrid | 7 features: 4 raw, 3 engineered | Mean R²: 0.8524; 95% CI: [0.8312, 0.8736] |
| Best-Young [2] | 8 raw data features | Mean R²: 0.877; Range R²: [0.832, 0.903] |
| Best-Murphy and Paal [9] | 18 data features: 17 raw, 1 engineered | Max R²: 0.877 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bihl, T.J.; Young, W.A., II; Moyer, A. Physics-Informed Feature Engineering and R2-Based Signal-to-Noise Ratio Feature Selection to Predict Concrete Shear Strength. Mathematics 2025, 13, 3182. https://doi.org/10.3390/math13193182
