A Multi-LiDAR Self-Calibration System Based on Natural Environments and Motion Constraints

Abstract

1. Introduction

2. Related Work

2.1. Feature-Based Methods

2.2. Motion-Constrained Methods

2.3. Deep Learning-Based Methods

3. Problem Formulation and Overview

- Preprocessing: a ground plane assumption reduces motion to planar DoF by fixing z, roll, and pitch; a virtual RGB–D projection produces 2D feature/depth maps, and ORB with motion consistency filtering provides robust associations that are refined by 3D ICP.

- Optimization for hand–eye calibration: yaw is estimated from straight segments via motion constraints, and, assuming the INS is aligned to the vehicle frame, planar translation is solved during curved/compound motion via INS–LiDAR consistency.

- Error evaluation: plane-alignment errors (normal angle and mean offset) and LiDAR–INS odometry discrepancies (translation and rotation) are monitored continuously; threshold exceedances indicate drift and trigger online recalibration.
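The error-evaluation stage in the last item can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the use of the mean absolute point-to-plane distance as the "mean offset", and all threshold values are assumptions chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Thresholds:
    # Hypothetical limits; the paper's actual thresholds are not given here.
    normal_angle_deg: float = 1.0
    mean_offset_m: float = 0.05
    trans_m: float = 0.10
    rot_deg: float = 1.0


def normal_angle_deg(n_ref, n_obs):
    """Angle in degrees between the reference and observed ground normals."""
    dot = sum(a * b for a, b in zip(n_ref, n_obs))
    norm = math.sqrt(sum(a * a for a in n_ref)) * math.sqrt(sum(b * b for b in n_obs))
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding
    return math.degrees(math.acos(cos_angle))


def mean_offset(points, n, d):
    """Mean absolute distance of points to the plane n.p + d = 0."""
    norm = math.sqrt(sum(c * c for c in n))
    dists = [abs(n[0] * x + n[1] * y + n[2] * z + d) / norm for x, y, z in points]
    return sum(dists) / len(dists)


def odometry_discrepancy(ins_step, lidar_step):
    """Translation (m) and rotation (deg) gap between two planar steps (dx, dy, dyaw)."""
    trans = math.hypot(ins_step[0] - lidar_step[0], ins_step[1] - lidar_step[1])
    dyaw = (ins_step[2] - lidar_step[2] + math.pi) % (2.0 * math.pi) - math.pi
    return trans, abs(math.degrees(dyaw))


def needs_recalibration(angle_deg, offset_m, trans_m, rot_deg, thr=Thresholds()):
    """True if any monitored metric exceeds its threshold."""
    return (angle_deg > thr.normal_angle_deg or offset_m > thr.mean_offset_m
            or trans_m > thr.trans_m or rot_deg > thr.rot_deg)
```

In use, the four metrics would be computed for each new frame pair and passed to `needs_recalibration`; a single exceedance is treated as drift in this sketch, whereas a deployed system might require several consecutive exceedances.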

4. Preprocessing

4.1. Ground Plane Fitting for Motion Dimensionality Reduction

4.1.1. Point Cloud Acquisition and Ground Plane Estimation

4.1.2. Global Plane Parameter Optimization

4.2. Fast Point Cloud Registration Using Virtual Camera Model

4.2.1. Virtual Camera Projection

4.2.2. Feature Map and ORB Matching

4.2.3. ICP Refinement

5. Hand–Eye Calibration

5.1. Yaw from Straight Motion

5.1.1. Motion Direction Extraction

5.1.2. Optimization to Determine Yaw Angle

5.2. Translation from Curved/Compound Motion

5.3. Combined Extrinsic Parameters

6. Precision Evaluation

6.1. Ground Plane Error

6.2. Odometry Consistency Error

7. Experiments and Results

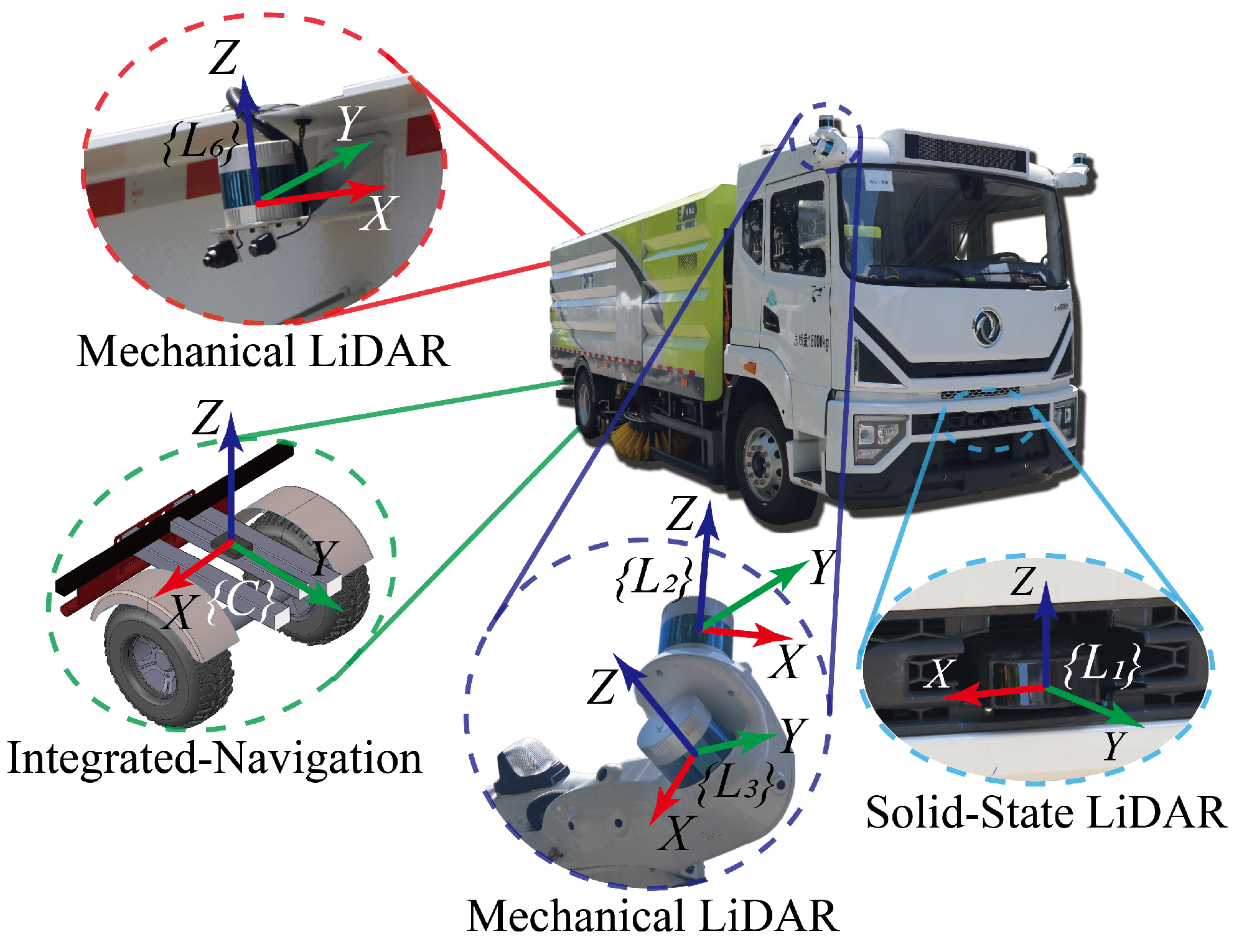

7.1. Experiment Setup

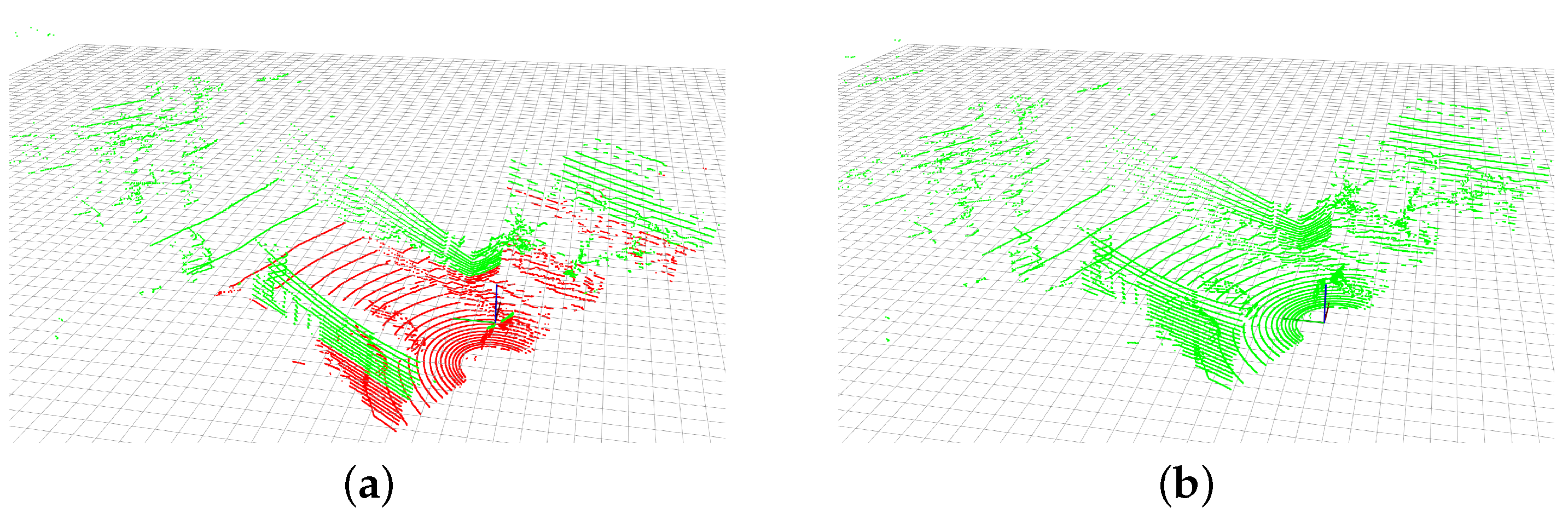

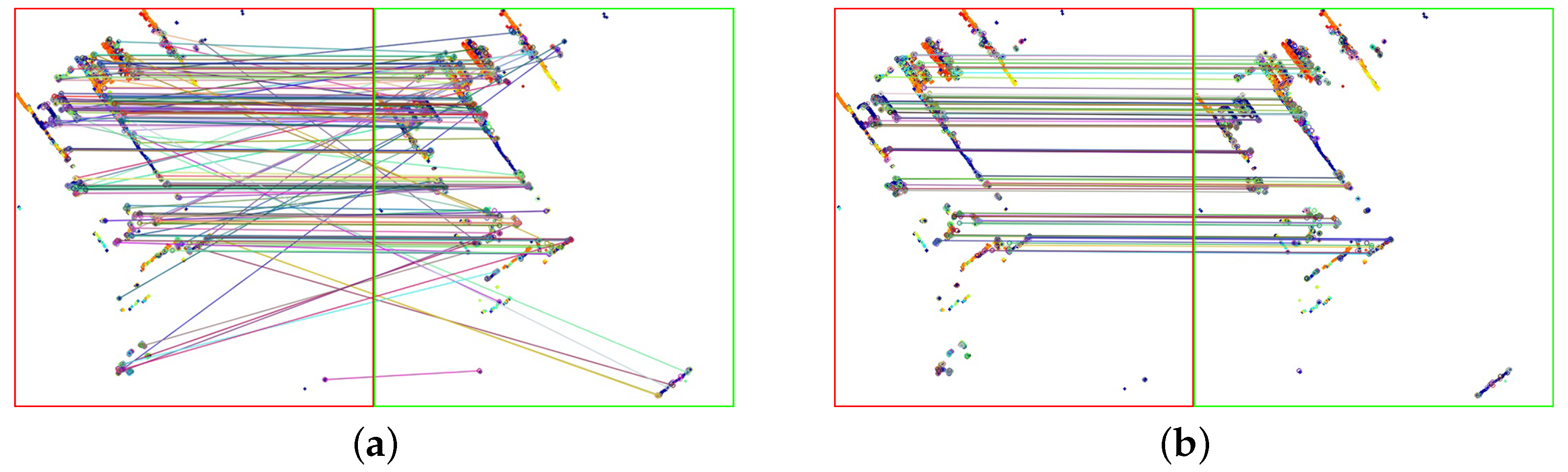

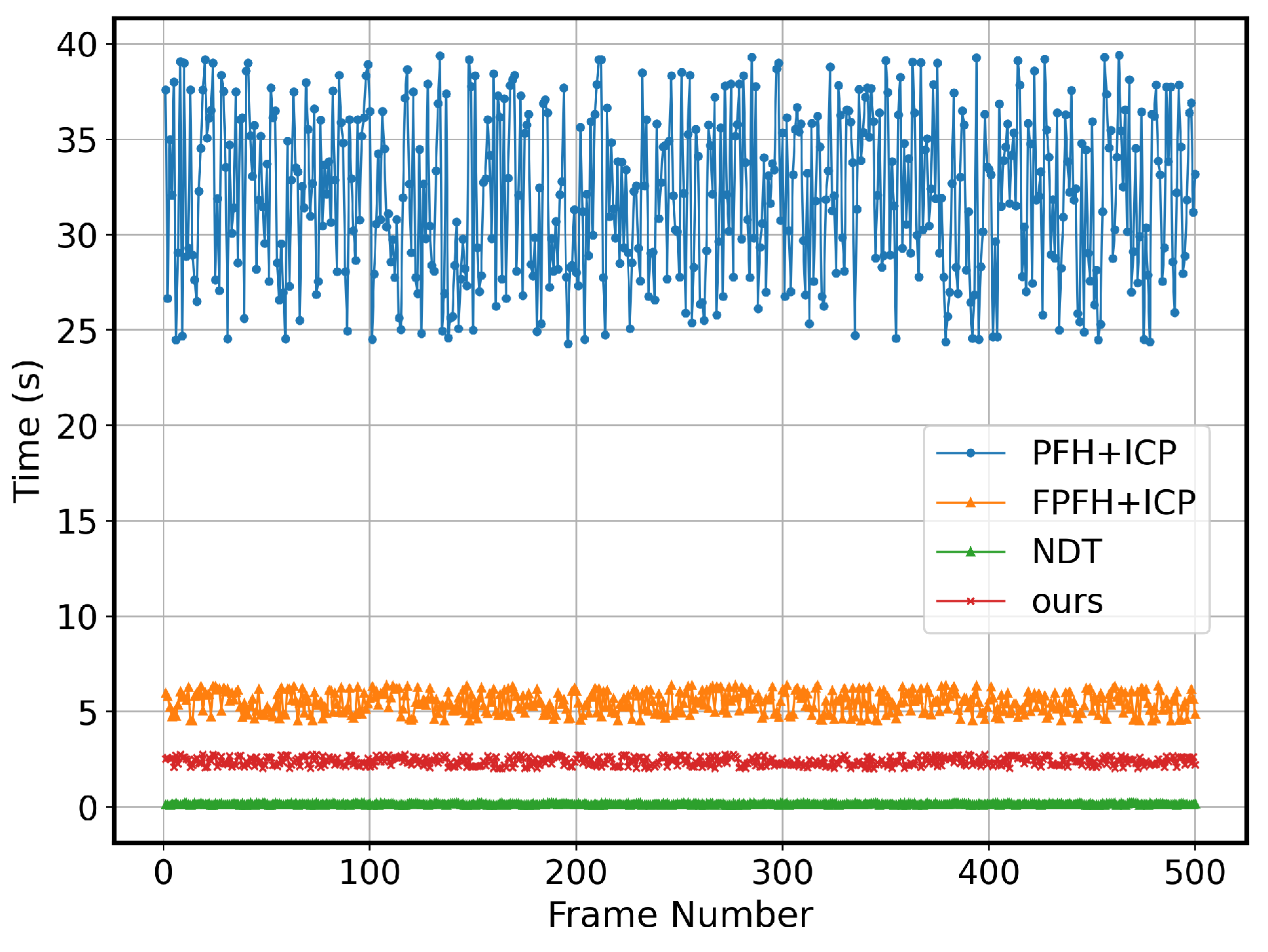

7.2. Registration Methods Comparison

7.3. Real Vehicle Experiment

7.3.1. Calibration Process

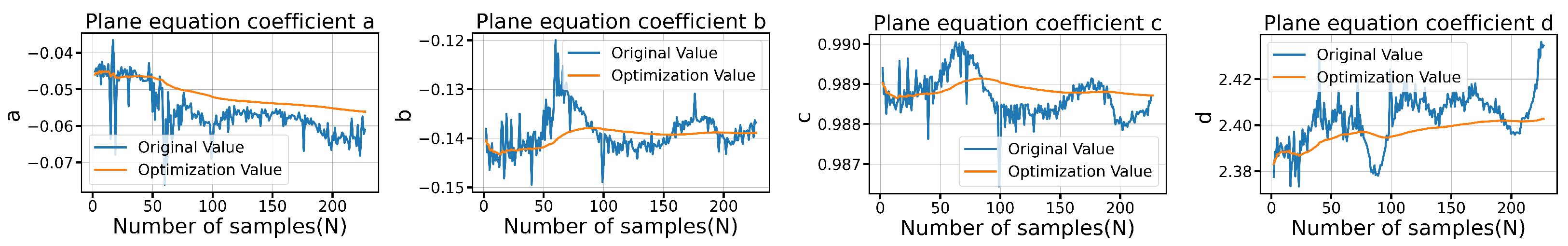

- Ground fitting phase: multi-frame ground RANSAC fitting combined with the Lagrange multiplier method was used to achieve convergence of the ground parameters, as illustrated in Figure 9. The figure shows the initial values of each coefficient during vehicle movement and the changes in optimization results as the number of samples increases. The blue curves represent the initial values of each parameter, while the orange curves show the optimization process. As the vehicle moves, several factors contribute to parameter fluctuations: ground unevenness, suspension vibrations, LiDAR point cloud noise, and errors in ground fitting. After accumulating data over multiple frames, the final plane parameters stabilize, and these parameters are then used for LiDAR ground plane alignment.

- Initial straight-driving phase: the ground plane was fitted, and the yaw angle was estimated using the motion constraint method. During the combined straight and curved driving stages, translation parameters in the X and Y directions were estimated.
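The ground fitting phase described above can be sketched as follows. This is an illustrative reading, not the paper's exact formulation: each frame is fitted with a basic three-point RANSAC, and the per-frame planes are fused by minimizing the squared deviation from the per-frame normals under a unit-norm constraint, which is where a Lagrange multiplier enters. The helper names, the tolerance, the iteration count, and the synthetic sensor height of 1.8 m are all assumptions.

```python
import math
import random


def plane_from_points(p0, p1, p2):
    """Plane (n, d) with unit normal n and n.p + d = 0, or None if degenerate."""
    u = [p1[i] - p0[i] for i in range(3)]
    v = [p2[i] - p0[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-9:
        return None
    n = [c / norm for c in n]
    if n[2] < 0:  # orient normals upward so frames are comparable
        n = [-c for c in n]
    d = -sum(n[i] * p0[i] for i in range(3))
    return n, d


def ransac_plane(points, iters=200, tol=0.05, seed=0):
    """Keep the three-point plane with the most inliers within tol metres."""
    rng = random.Random(seed)
    best, best_inliers = None, -1
    for _ in range(iters):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue
        n, d = model
        inliers = sum(1 for p in points
                      if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) < tol)
        if inliers > best_inliers:
            best, best_inliers = model, inliers
    return best


def fuse_planes(planes):
    """Minimize sum ||n - n_i||^2 + (d - d_i)^2 subject to ||n|| = 1.

    Setting the gradient of the Lagrangian to zero gives n proportional to
    the sum of the n_i, so n is the normalized sum; d is the plain mean.
    """
    s = [sum(n[i] for n, _ in planes) for i in range(3)]
    norm = math.sqrt(sum(c * c for c in s))
    return [c / norm for c in s], sum(d for _, d in planes) / len(planes)


def synthetic_frame(seed):
    """Hypothetical test data: noisy ground at z = -1.8 m plus random clutter."""
    rng = random.Random(seed)
    ground = [(rng.uniform(-10, 10), rng.uniform(-10, 10), -1.8 + rng.gauss(0, 0.01))
              for _ in range(300)]
    clutter = [(rng.uniform(-10, 10), rng.uniform(-10, 10), rng.uniform(-1.5, 1.0))
               for _ in range(60)]
    return ground + clutter
```

Calling `fuse_planes([ransac_plane(synthetic_frame(k), seed=k) for k in range(5)])` gives one plane estimate from five frames. Averaging over frames is what damps the per-frame fluctuations from uneven ground, suspension vibration, and point cloud noise mentioned above.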

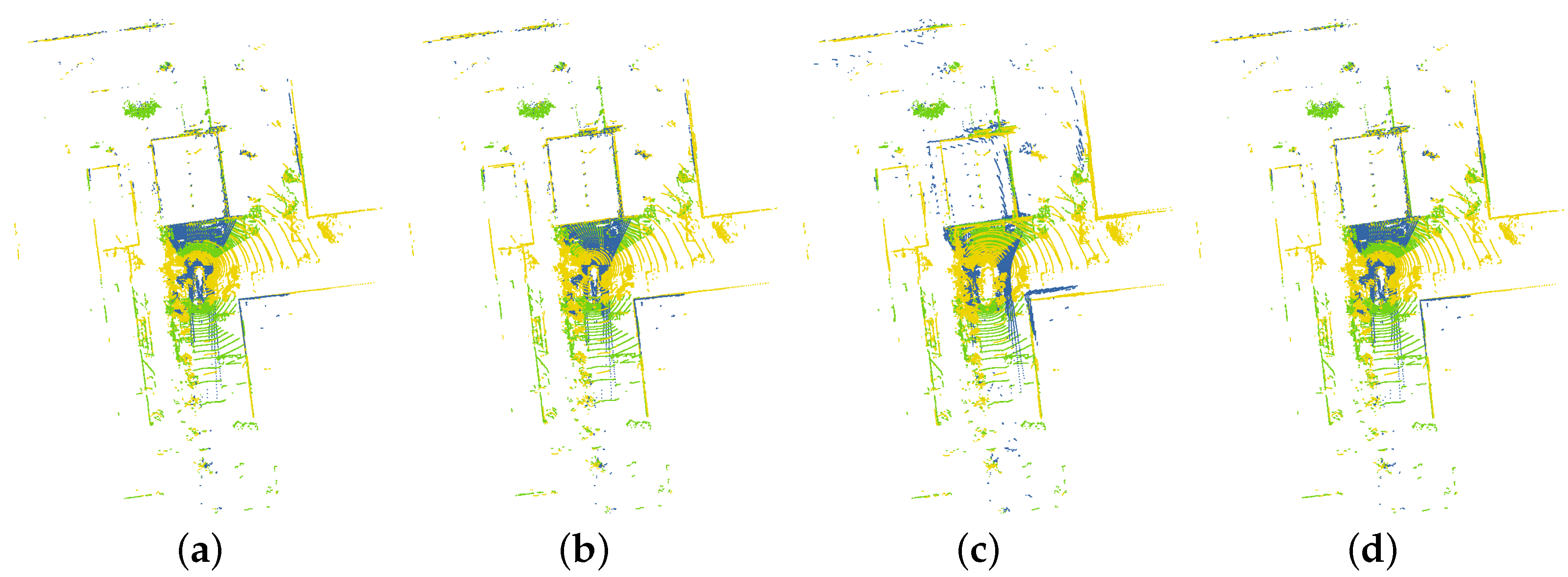

- Optimization of degrees of freedom (DoF) parameters in the dimension-reduced plane: As shown in Figure 10, yaw stabilizes over straight segments, and translation converges over combined segments. Fluctuations reflect registration noise, time synchronization, and sampling effects, and convergence improves with more samples.
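The split between yaw (straight segments) and translation (combined segments) follows from the planar hand–eye relation $AX = XB$, where $A$ is the INS motion, $B$ the LiDAR motion, and $X = (R_x, t_x)$ the extrinsic. Expanding the translation part gives $(R_a - I)\,t_x = R_x t_b - t_a$: with no rotation ($R_a = I$) only yaw is observable through $t_a = R_x t_b$, while turning makes $t_x$ observable. The sketch below is a closed-form least-squares illustration of that relation under these assumptions, not the paper's optimizer; all function names are hypothetical.

```python
import math


def rot(theta, v):
    """Rotate the 2D vector v by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def yaw_from_straight(ins_steps, lidar_steps):
    """Yaw minimizing sum ||t_a - R(yaw) t_b||^2 over straight-line steps."""
    num = sum(b[0] * a[1] - b[1] * a[0] for a, b in zip(ins_steps, lidar_steps))
    den = sum(b[0] * a[0] + b[1] * a[1] for a, b in zip(ins_steps, lidar_steps))
    return math.atan2(num, den)


def translation_from_turns(ins_motions, lidar_steps, yaw):
    """Least-squares t_x from (R_a - I) t_x = R(yaw) t_b - t_a.

    ins_motions holds (dtheta, (dx, dy)); lidar_steps holds (dx, dy).
    For M = R_a - I, M^T M = (2 - 2 cos dtheta) I, so the normal
    equations reduce to a weighted sum.
    """
    sx = sy = w = 0.0
    for (dth, ta), tb in zip(ins_motions, lidar_steps):
        c, s = math.cos(dth), math.sin(dth)
        rb = rot(yaw, tb)
        rx, ry = rb[0] - ta[0], rb[1] - ta[1]
        sx += (c - 1.0) * rx + s * ry   # first row of M^T r
        sy += -s * rx + (c - 1.0) * ry  # second row of M^T r
        w += 2.0 - 2.0 * c
    if w < 1e-12:
        raise ValueError("no rotation in the data; translation is unobservable")
    return (sx / w, sy / w)


def simulate_lidar_step(dtheta, ta, yaw, t):
    """Synthetic LiDAR step t_b = R_x^T ((R_a - I) t_x + t_a), for testing only."""
    rt = rot(dtheta, t)
    return rot(-yaw, (rt[0] - t[0] + ta[0], rt[1] - t[1] + ta[1]))
```

With noise-free synthetic motions this recovers the extrinsic exactly. With real registration noise the estimates fluctuate and settle as more segments are accumulated, matching the convergence behaviour described for Figure 10.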

7.3.2. Calibration Results and Accuracy Evaluation

7.4. nuScenes Dataset Experiment

7.4.1. Calibration Results

7.4.2. Accuracy Evaluation

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Huang, J.; Huang, G.; Zhu, Z.; Ye, Y.; Du, D. BEVDet: High-performance multi-camera 3D object detection in bird-eye-view. arXiv 2021, arXiv:2112.11790.

2. Zhou, B.; Krähenbühl, P. Cross-view transformers for real-time map-view semantic segmentation. In Proceedings of the IEEE/CVF Conference Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13760–13769.

3. Man, Y.; Gui, L.Y.; Wang, Y.X. BEV-guided multi-modality fusion for driving perception. In Proceedings of the IEEE/CVF Conference Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21960–21969.

4. Lin, Z.; Liu, Z.; Xia, Z.; Wang, X.; Wang, Y.; Qi, S.; Dong, Y.; Dong, N.; Zhang, L.; Zhu, C. RCBEVDet: Radar-camera fusion in bird’s eye view for 3D object detection. In Proceedings of the IEEE/CVF Conference Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 14928–14937.

5. Liang, T.; Xie, H.; Yu, K.; Xia, Z.; Lin, Z.; Wang, Y.; Tang, T.; Wang, B.; Tang, Z. BEVFusion: A simple and robust lidar-camera fusion framework. Adv. Neural Inf. Process. Syst. 2022, 35, 10421–10434.

6. Yang, H.; Zhang, S.; Huang, D.; Wu, X.; Zhu, H.; He, T.; Tang, S.; Zhao, H.; Qiu, Q.; Lin, B.; et al. UniPAD: A universal pre-training paradigm for autonomous driving. In Proceedings of the IEEE/CVF Conference Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 15238–15250.

7. Chang, D.; Zhang, R.; Huang, S.; Hu, M.; Ding, R.; Qin, X. Versatile Multi-LiDAR Accurate Self-Calibration System Based on Pose Graph Optimization. IEEE Robot. Autom. Lett. 2023, 8, 4839–4846.

8. Fent, F.; Kuttenreich, F.; Ruch, F.; Rizwin, F.; Juergens, S.; Lechermann, L.; Nissler, C.; Perl, A.; Voll, U.; Yan, M.; et al. MAN TruckScenes: A multimodal dataset for autonomous trucking in diverse conditions. arXiv 2024, arXiv:2407.07462.

9. Gurumadaiah, A.K.; Park, J.; Lee, J.-H.; Kim, J.; Kwon, S. Precise Synchronization Between LiDAR and Multiple Cameras for Autonomous Driving: An Adaptive Approach. IEEE Trans. Intell. Veh. 2025, 10, 2152–2162.

10. Seidaliyeva, U.; Ilipbayeva, L.; Utebayeva, D.; Smailov, N.; Matson, E.T.; Tashtay, Y.; Turumbetov, M.; Sabibolda, A. LiDAR Technology for UAV Detection: From Fundamentals and Operational Principles to Advanced Detection and Classification Techniques. Sensors 2025, 25, 2757.

11. Ou, J.; Huang, P.; Zhou, J.; Zhao, Y.; Lin, L. Automatic extrinsic calibration of 3D LIDAR and multi-cameras based on graph optimization. Sensors 2022, 22, 2221.

12. Zeng, T.; Gu, X.; Yan, F.; He, M.; He, D. YOCO: You Only Calibrate Once for Accurate Extrinsic Parameter in LiDAR-Camera Systems. arXiv 2024, arXiv:2407.18043.

13. Petek, K.; Vödisch, N.; Meyer, J.; Cattaneo, D.; Valada, A.; Burgard, W. Automatic target-less camera-LiDAR calibration from motion and deep point correspondences. arXiv 2024, arXiv:2404.17298.

14. Jiao, J.; Yu, Y.; Liao, Q.; Ye, H.; Fan, R.; Liu, M. Automatic calibration of multiple 3D LiDARs in urban environments. In Proceedings of the IEEE/RSJ International Conference Intelligent Robots and Systems, Macau, China, 4–8 November 2019; pp. 15–20.

15. Wang, F.; Zhao, X.; Gu, H.; Wang, L.; Wang, S.; Han, Y. Multi-Lidar system localization and mapping with online calibration. Appl. Sci. 2023, 13, 10193.

16. Das, S.; Boberg, B.; Fallon, M.; Chatterjee, S. IMU-based online multi-lidar calibration. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium, Jeju Island, Republic of Korea, 2–5 June 2024; pp. 3227–3234.

17. Gao, C.; Spletzer, J.R. On-line calibration of multiple LIDARs on a mobile vehicle platform. In Proceedings of the IEEE International Conference Robotics and Automation, Anchorage, AK, USA, 3–8 May 2010; pp. 279–284.

18. Yoo, J.H.; Jung, G.B.; Jung, H.G.; Suhr, J.K. Camera-LiDAR calibration using iterative random sampling and intersection line-based quality evaluation. Electronics 2024, 13, 249.

19. Liu, Y.; Cui, X.; Fan, S.; Wang, Q.; Liu, Y.; Sun, Y.; Wang, G. Dynamic validation of calibration accuracy and structural robustness of a multi-sensor mobile robot. Sensors 2024, 24, 3896.

20. Lee, H.; Chung, W. Extrinsic calibration of multiple 3D LiDAR sensors by the use of planar objects. Sensors 2022, 22, 7234.

21. Choi, D.G.; Bok, Y.; Kim, J.S.; Kweon, I.S. Extrinsic calibration of 2-D lidars using two orthogonal planes. IEEE Trans. Robot. 2015, 32, 83–98.

22. Shi, B.; Yu, P.; Yang, M.; Wang, C.; Bai, Y.; Yang, F. Extrinsic calibration of dual LiDARs based on plane features and uncertainty analysis. IEEE Sens. J. 2021, 21, 11117–11130.

23. Yan, G.; Liu, Z.; Wang, C.; Shi, C.; Wei, P.; Cai, X.; Ma, T.; Liu, Z.; Zhong, Z.; Liu, Y.; et al. OpenCalib: A multi-sensor calibration toolbox for autonomous driving. Softw. Impacts 2022, 14, 100393.

24. Jiao, J.; Liao, Q.; Zhu, Y.; Liu, T.; Yu, Y.; Fan, R. A novel dual-lidar calibration algorithm using planar surfaces. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium, Paris, France, 9–12 June 2019; pp. 1499–1504.

25. Nie, M.; Shi, W.; Fan, W.; Xiang, H. Automatic extrinsic calibration of dual LiDARs with adaptive surface normal estimation. IEEE Trans. Instrum. Meas. 2022, 72, 1000711.

26. Claussmann, L.; Revilloud, M.; Gruyer, D.; Glaser, S. A review of motion planning for highway autonomous driving. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1826–1848.

27. Teng, S.; Hu, X.; Deng, P.; Li, B.; Li, Y.; Ai, Y. Motion planning for autonomous driving: The state of the art and future perspectives. IEEE Trans. Intell. Veh. 2023, 8, 3692–3711.

28. Kong, J.; Pfeiffer, M.; Schildbach, G.; Borrelli, F. Kinematic and dynamic vehicle models for autonomous driving control design. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium, Seoul, Republic of Korea, 28 June–1 July 2015; pp. 1094–1099.

29. Chen, L.; Wu, P.; Chitta, K.; Jaeger, B.; Geiger, A.; Li, H. End-to-end autonomous driving: Challenges and frontiers. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10164–10183.

30. Jiao, J.; Ye, H.; Zhu, Y.; Liu, M. Robust Odometry and Mapping for Multi-LiDAR Systems With Online Extrinsic Calibration. IEEE Trans. Robot. 2022, 38, 351–371.

31. Huang, G.; Huang, H.; Xiao, W.; Dang, Y.; Xie, J.; Gao, X.; Huang, Y. A sensor fusion-based optimization method for indoor localization. In Proceedings of the Fourth International Conference Mechanical Engineering, Intelligent Manufacturing, and Automation Technology (MEMAT 2023), Guilin, China, 20–22 October 2023; Volume 13082, pp. 184–190.

32. Liu, X.; Zhang, F. Extrinsic calibration of multiple LiDARs of small FoV in targetless environments. IEEE Robot. Autom. Lett. 2021, 6, 2036–2043.

33. Kim, T.Y.; Pak, G.; Kim, E. GRIL-Calib: Targetless Ground Robot IMU-LiDAR extrinsic calibration method using ground plane motion constraints. IEEE Robot. Autom. Lett. 2024, 9, 5409–5416.

34. Della Corte, B.; Andreasson, H.; Stoyanov, T.; Grisetti, G. Unified motion-based calibration of mobile multi-sensor platforms with time delay estimation. IEEE Robot. Autom. Lett. 2019, 4, 902–909.

35. Yin, J.; Yan, F.; Liu, Y.; Zhuang, Y. Automatic and targetless LiDAR-Camera extrinsic calibration using edge alignment. IEEE Sens. J. 2023, 23, 19871–19880.

36. Yu, J.; Vishwanathan, S.V.N.; Günter, S.; Schraudolph, N.N. A quasi-Newton approach to non-smooth convex optimization. In Proceedings of the 25th International Conference Machine Learning, Helsinki, Finland, 5–9 July 2008.

37. Caponetto, R.; Fortuna, L.; Graziani, S.; Xibilia, M.G. Genetic algorithms and applications in system engineering: A survey. Trans. Inst. Meas. Control 1993, 15, 143–156.

38. Tan, Z.; Zhang, X.; Teng, S.; Wang, L.; Gao, F. A Review of Deep Learning-Based LiDAR and Camera Extrinsic Calibration. Sensors 2024, 24, 3878.

39. Liao, Y.; Li, J.; Kang, S. SE-Calib: Semantic edges based LiDAR-camera boresight online calibration in urban scenes. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1000513.

40. Liu, Z.; Tang, H.; Zhu, S.; Han, S. SemAlign: Annotation-free camera-LiDAR calibration with semantic alignment loss. In Proceedings of the 2021 IEEE/RSJ International Conference Intelligent Robots and Systems, Prague, Czech Republic, 27 September–1 October 2021; pp. 15–20.

41. Zhu, J.; Xue, J.; Zhang, P. CalibDepth: Unifying depth map representation for iterative LiDAR-camera online calibration. In Proceedings of the 2023 IEEE International Conference Robotics and Automation, London, UK, 29 May–2 June 2023; pp. 279–284.

42. Wu, X.; Zhang, C.; Liu, Y. CalibRank: Effective LiDAR-camera extrinsic calibration by multi-modal learning to rank. In Proceedings of the IEEE International Conference Image Processing, Abu Dhabi, United Arab Emirates, 27–30 October 2020; pp. 2152–2162.

43. Iyer, G.; Murthy, J.K.; Krishna, K.M. CalibNet: Self-supervised extrinsic calibration using 3D spatial transformer networks. In Proceedings of the 2018 IEEE/RSJ International Conference Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 1–5.

44. Yuan, K.; Guo, Z.; Wang, Z.J. RGGNet: Tolerance aware LiDAR-camera online calibration with geometric deep learning and generative model. IEEE Robot. Autom. Lett. 2020, 5, 6956–6963.

45. Lv, X.; Wang, B.; Dou, Z.; Ye, D.; Wang, S. LCCNet: LiDAR and camera self-calibration using cost volume network. In Proceedings of the 2021 IEEE/CVF Conference Computer Vision and Pattern Recognition Workshops, Nashville, TN, USA, 19–25 June 2021; pp. 20–25.

46. Mharolkar, S.; Zhang, J.; Peng, G.; Liu, Y.; Wang, D. RGBDTCalibNet: End-to-end online extrinsic calibration between a 3D LiDAR, an RGB camera and a thermal camera. In Proceedings of the IEEE 25th International Conference Intelligent Transportation Systems, Macau, China, 8–12 October 2022; pp. 8–12.

47. Yu, Z.; Feng, C.; Liu, M.Y.; Ramalingam, S. CaseNet: Deep category-aware semantic edge detection. In Proceedings of the 2017 IEEE Conference Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 21–26.

48. Kang, J.; Doh, N.L. Automatic targetless camera-lidar calibration by aligning edge with gaussian mixture model. J. Field Robot. 2020, 37, 158–179.

49. Tao, L.; Pei, L.; Li, T.; Zou, D.; Wu, Q.; Xia, S. CPI: LiDAR-camera extrinsic calibration based on feature points with reflection intensity. In Proceedings of the Spatial Data and Intelligence 2020, Shenzhen, China, 8–9 May 2020; pp. 8–9.

50. Wang, W.; Nobuhara, S.; Nakamura, R.; Sakurada, K. SOIC: Semantic online initialization and calibration for LiDAR and camera. arXiv 2020, arXiv:2003.04260.

51. Derpanis, K.G. Overview of the RANSAC algorithm. Image Rochester NY 2010, 4, 2–3.

52. Bertsekas, D.P. Constrained Optimization and Lagrange Multiplier Methods; Academic Press: Cambridge, MA, USA, 2014.

53. Dai, J.S. Euler–Rodrigues formula variations, quaternion conjugation and intrinsic connections. Mech. Mach. Theory 2015, 92, 144–152.

54. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

55. Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary robust independent elementary features. In Proceedings of the Computer Vision–ECCV 2010, Heraklion, Crete, Greece, 5–11 September 2010; pp. 778–792.

56. Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of the 3rd International Conference 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001.

57. Hsieh, C.-C. B-spline wavelet-based motion smoothing. Comput. Ind. Eng. 2001, 41, 59–76.

58. Rusu, R.B.; Blodow, N.; Marton, Z.C.; Beetz, M. Aligning point cloud views using persistent feature histograms. In Proceedings of the IEEE/RSJ International Conference Intelligent Robots and Systems, Nice, France, 22–26 September 2008.

59. Rusu, R.B.; Blodow, N.; Beetz, M. Fast point feature histograms (FPFH) for 3D registration. In Proceedings of the IEEE International Conference Robotics and Automation, Kobe, Japan, 12–17 May 2009.

60. Biber, P.; Straßer, W. The normal distributions transform: A new approach to laser scan matching. In Proceedings of the IEEE/RSJ International Conference Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 27–31 October 2003; Volume 3.

| LiDAR Name | OpenCalib [23]: Translation Error (cm) | OpenCalib [23]: Rotation Error (°) | Jiao et al. [23]: Translation Error (cm) | Jiao et al. [23]: Rotation Error (°) | Ours: Translation Error (cm) | Ours: Rotation Error (°) |
|---|---|---|---|---|---|---|
| Primary | 2.658 | 2.126 | 5.152 | 3.252 | 5.276 | 3.158 |
| Rear | 3.691 | 1.296 | 8.701 | 6.154 | 6.085 | 4.768 |
| Left Top | 2.521 | 3.096 | 2.711 | 3.088 | 5.312 | 2.186 |
| Left Bottom | 3.154 | 3.642 | 63.157 | 45.642 | 6.023 | 2.956 |
| Right Top | 3.734 | 2.501 | 7.606 | 7.642 | 5.624 | 3.246 |
| Right Bottom | 4.599 | 1.577 | 126.154 | 30.654 | 3.681 | 4.036 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tang, Y.; Hu, J.; Yang, Z.; Xu, W.; He, S.; Hu, B. A Multi-LiDAR Self-Calibration System Based on Natural Environments and Motion Constraints. Mathematics 2025, 13, 3181. https://doi.org/10.3390/math13193181
