Weather-Corrupted Image Enhancement with Removal-Raindrop Diffusion and Mutual Image Translation Modules

Abstract

1. Introduction

2. Related Work

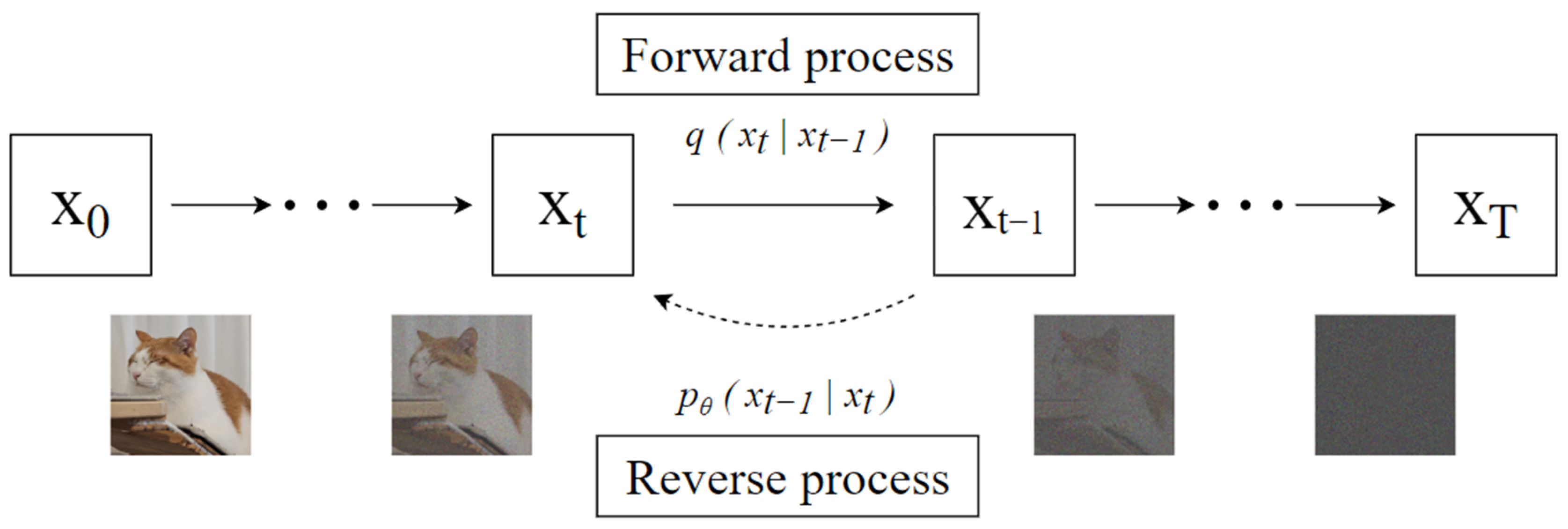

2.1. GAN-Based Methods and Emergence of Diffusion Models

2.2. Palette Diffusion Model

3. Proposed Method

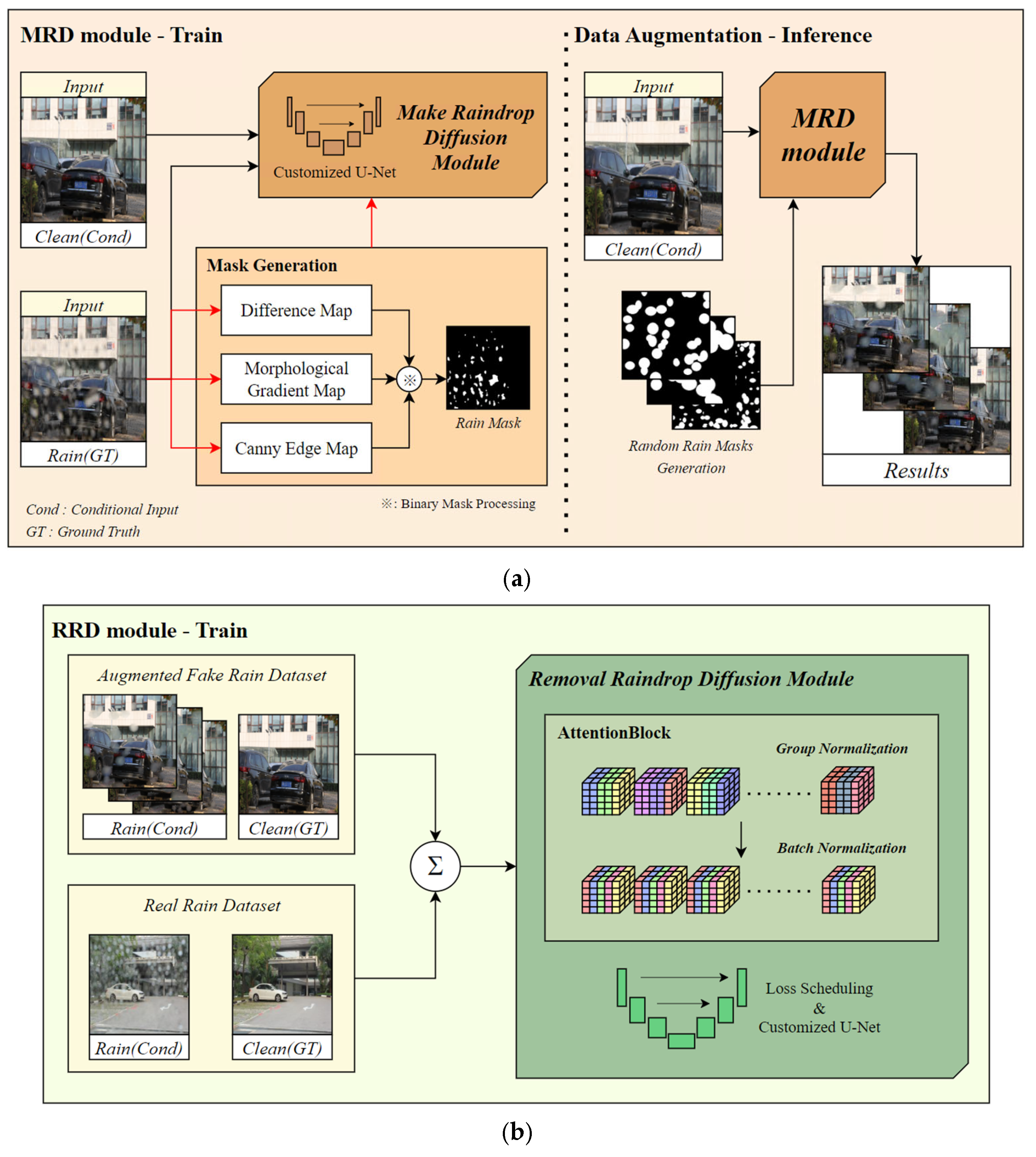

- The Make-Raindrop Diffusion (MRD) module synthesizes raindrop-degraded images with an inpainting approach guided by difference maps and edge-based masks, which increases dataset diversity and improves model generalization.

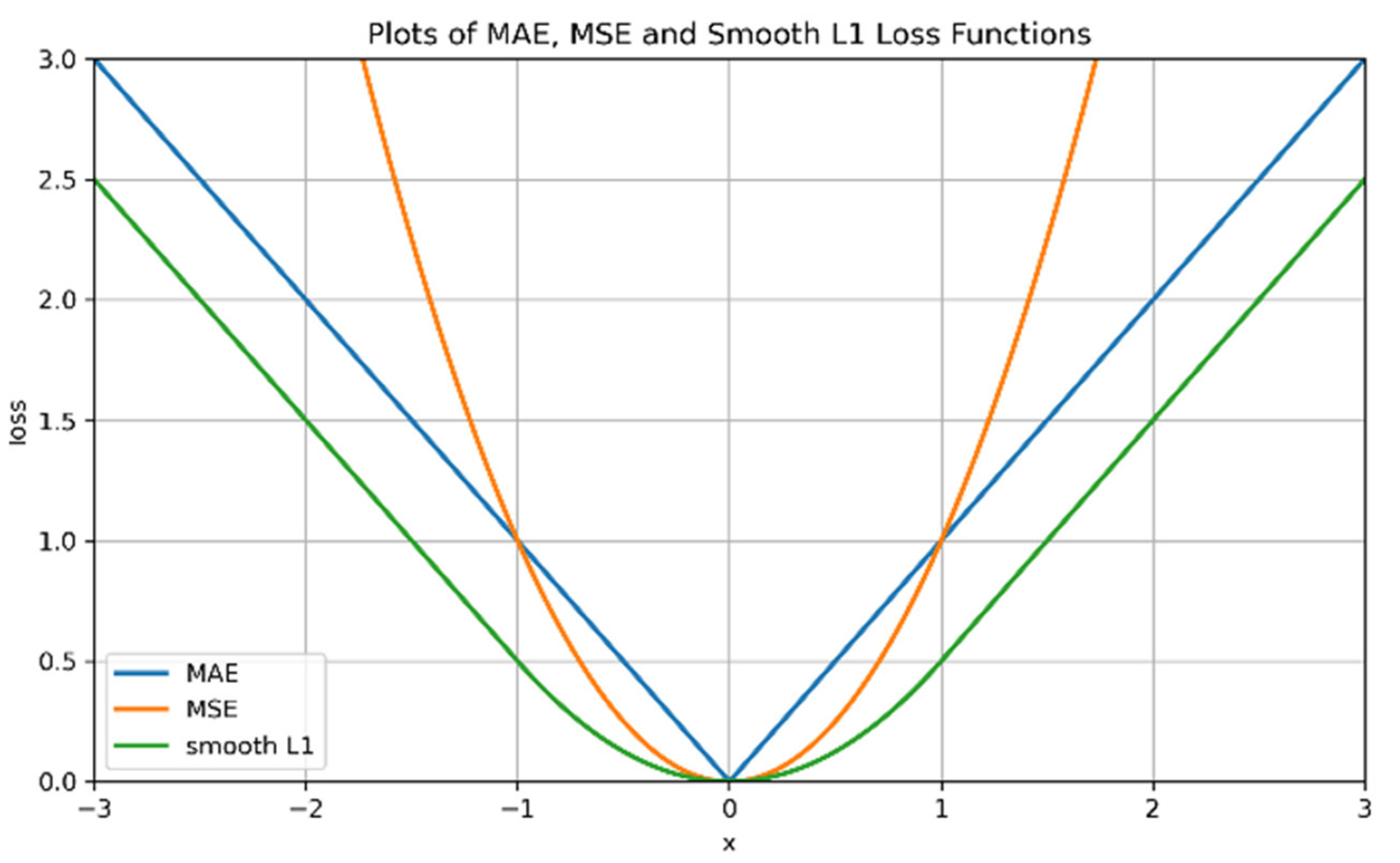

- The Removal-Raindrop Diffusion (RRD) module performs image-to-image translation on the augmented dataset, using a smooth L1 loss and batch normalization (BN) to preserve structural details. During inference, DDIM-based sampling significantly reduces computational cost and inference time.

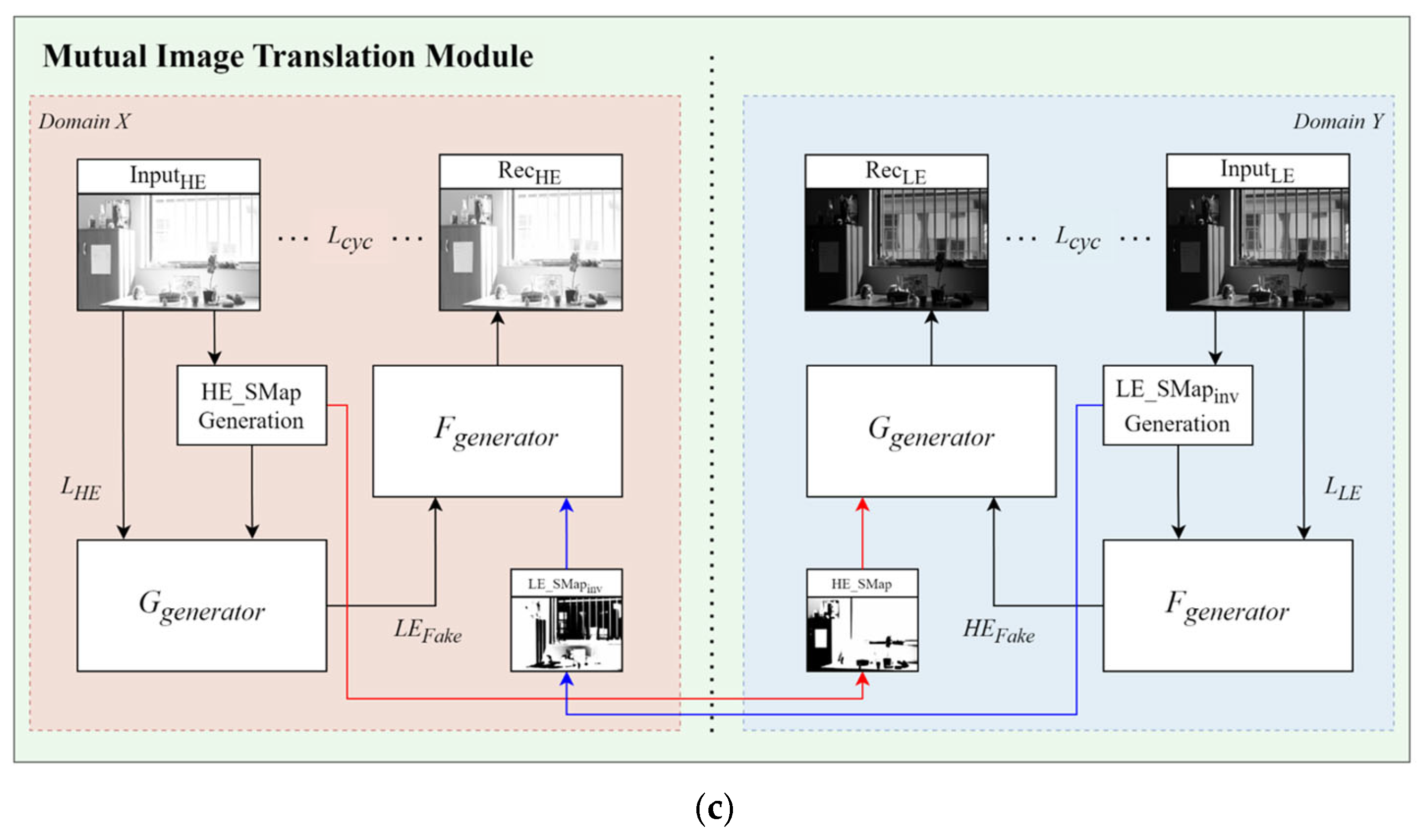

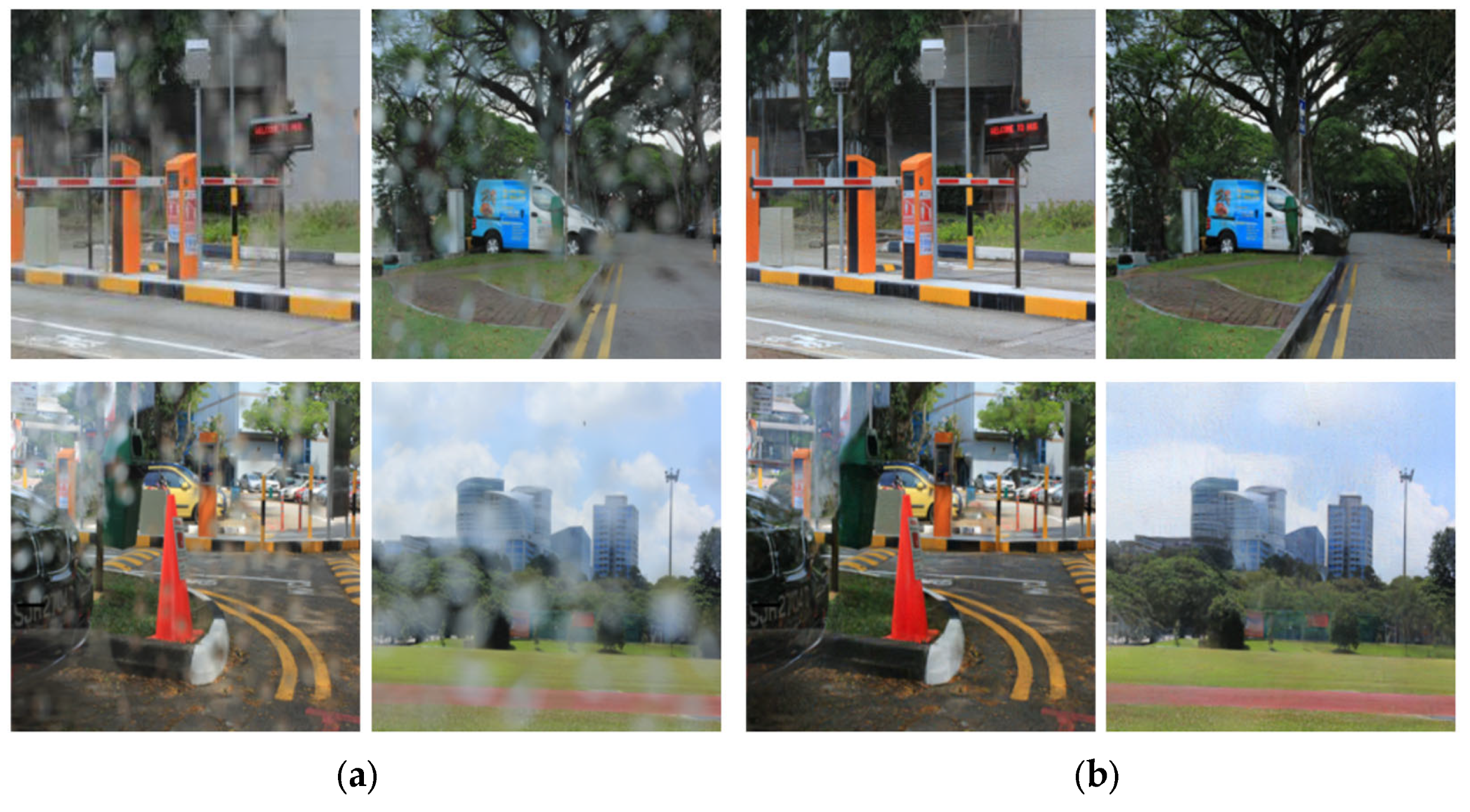

- In the post-processing stage, the mutual image translation module (MITM) performs HDR tone correction, followed by image blending to prevent overexposure artifacts. Chroma compensation then mitigates the color distortions caused by the luminance adjustment, keeping colors consistent overall.
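The post-processing order described above (tone correction, then blending, then chroma compensation) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the learned MITM tone mapping is not reproduced, so `toned` stands in for its output, and the luminance-dependent blending weight, the `knee` parameter, the BT.601 luma weights, and the gain-based chroma rule are all assumptions chosen only to show the idea.

```python
import numpy as np

LUMA = np.array([0.299, 0.587, 0.114])  # BT.601 luma weights (assumed color model)

def luminance(rgb):
    """Luma of an RGB image with values in [0, 1]; shape (H, W)."""
    return rgb @ LUMA

def blend_tone(base, toned, knee=0.7):
    """Blend toward the tone-mapped image, backing off where it is bright.

    The weight is 1 for luma below `knee` (hypothetical parameter) and falls
    linearly to 0 at white, so near-saturated regions keep the base image.
    """
    y = luminance(toned)
    w = np.clip((1.0 - y) / (1.0 - knee), 0.0, 1.0)[..., None]
    return w * toned + (1.0 - w) * base

def chroma_compensate(original, adjusted, eps=1e-6):
    """Keep the adjusted luma but rescale the original chroma by the luma gain."""
    y_in = luminance(original)[..., None]
    y_out = luminance(adjusted)[..., None]
    gain = y_out / (y_in + eps)
    out = y_out + gain * (original - y_in)  # chroma = rgb - luma, scaled by gain
    return np.clip(out, 0.0, 1.0)
```

A typical call would be `chroma_compensate(base, blend_tone(base, toned))`, where `base` is the raindrop-removed image and `toned` its tone-corrected version. Scaling chroma by the luminance gain is one common way to keep saturation from drifting when brightness changes; the paper's own compensation rule may differ.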

3.1. Make-Raindrop Diffusion Module

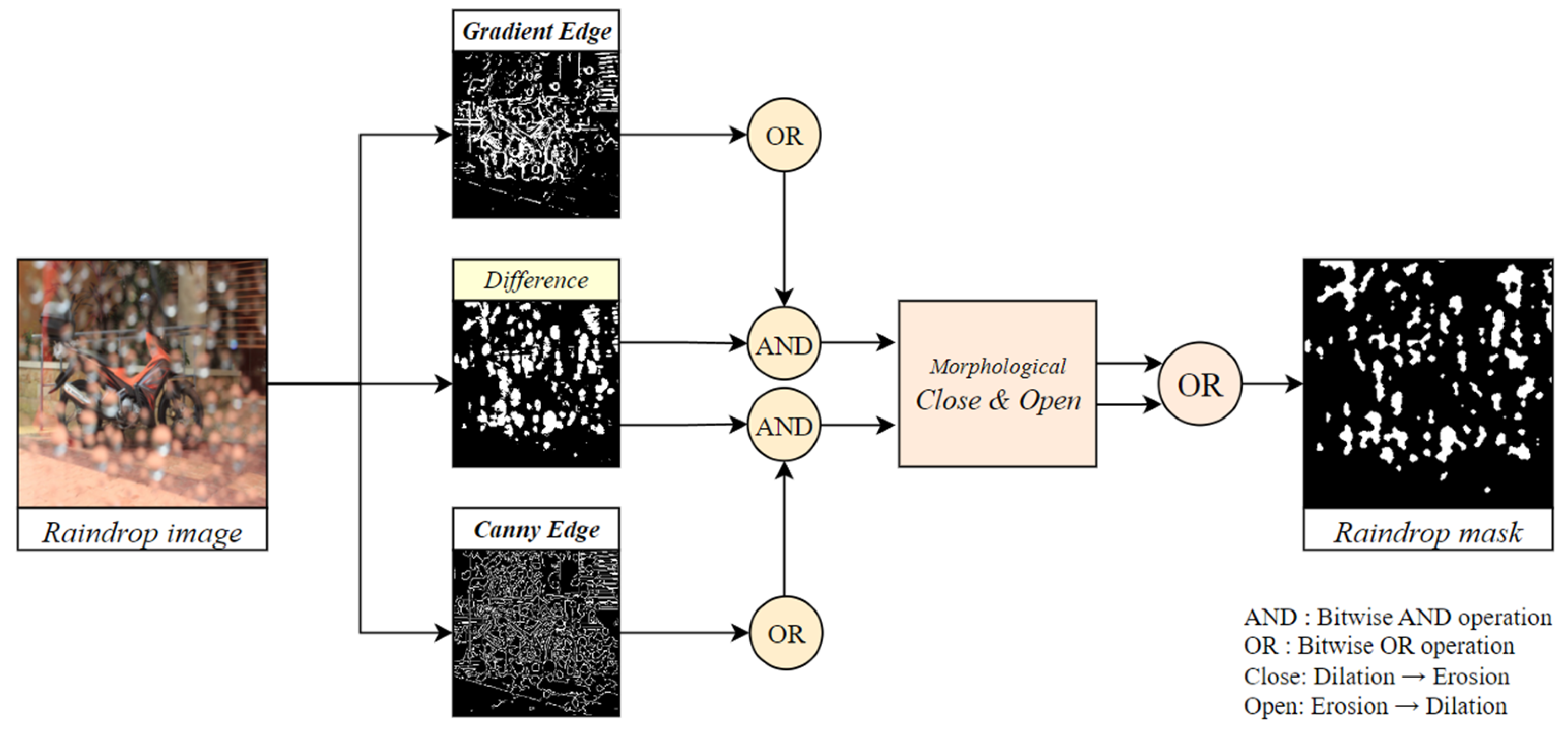

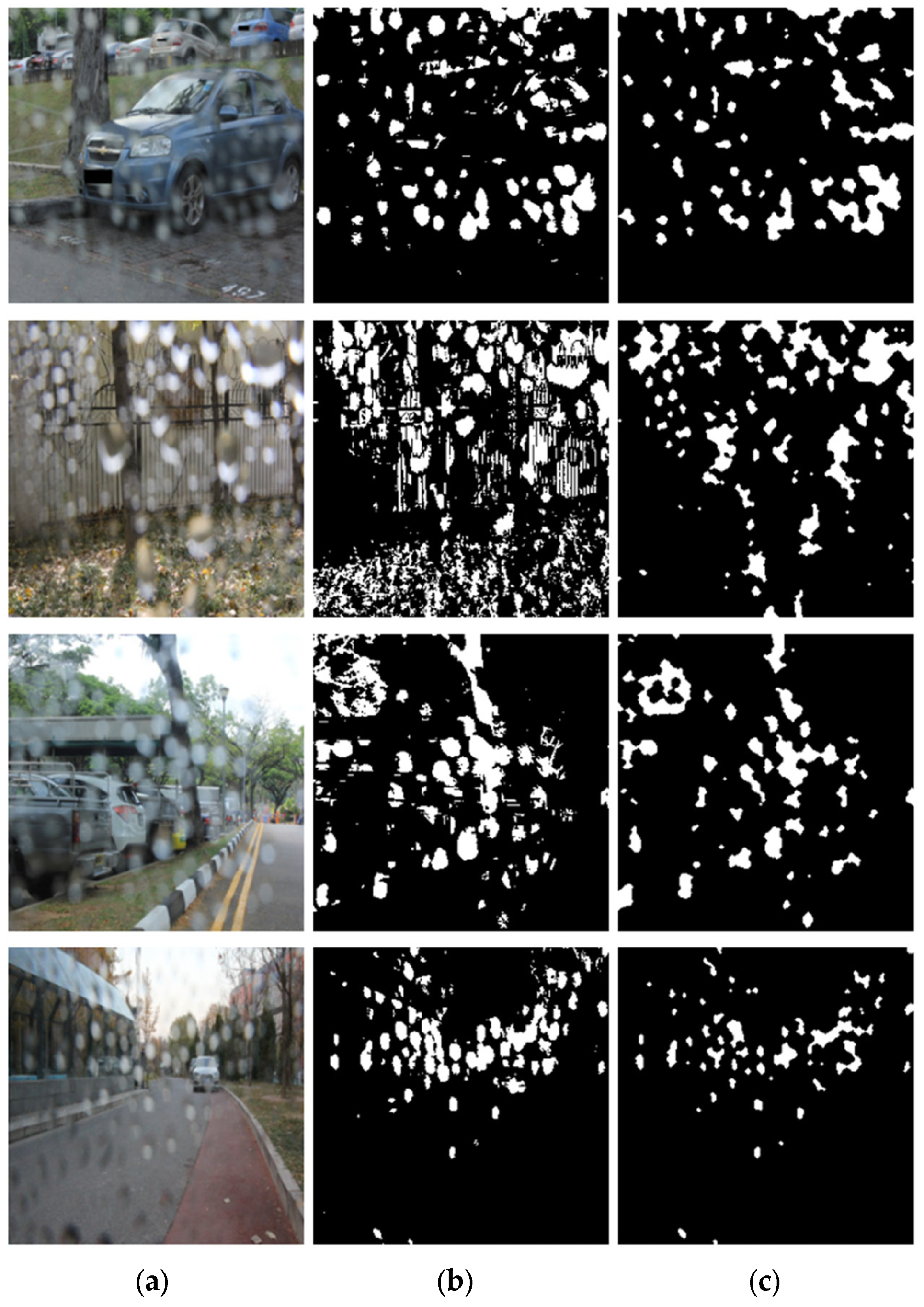

3.1.1. Make-Raindrop Diffusion Module and Binary Mask Processing

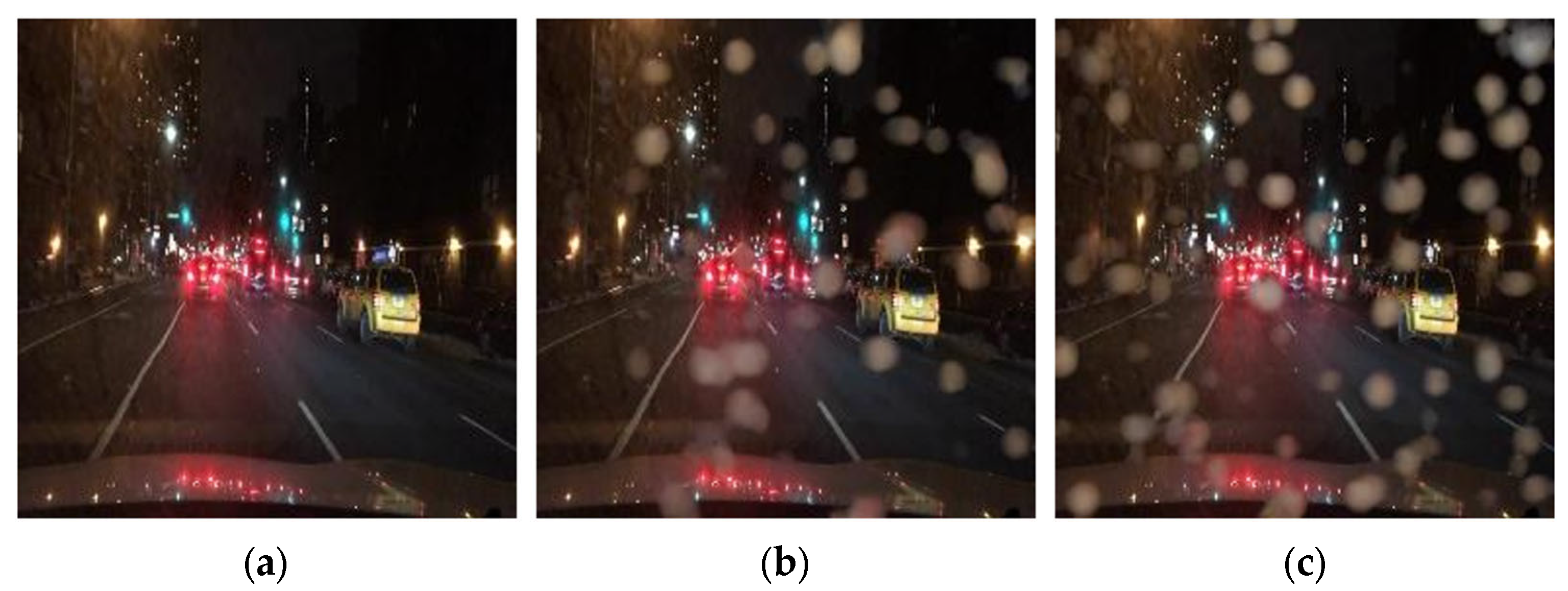
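As a rough illustration of the difference maps and edge-based masks mentioned in the method overview, the sketch below derives a binary inpainting mask from a paired rainy and clean image. It is a sketch under stated assumptions rather than the paper's procedure: the thresholds `diff_thresh` and `edge_thresh`, the 3x3 structuring element, and the use of a morphological gradient as the edge detector are illustrative choices.

```python
import numpy as np

def difference_map(rainy, clean):
    """Per-pixel absolute difference, averaged over the color channels."""
    return np.abs(rainy.astype(np.float64) - clean.astype(np.float64)).mean(axis=-1)

def _neighbourhood_stack(img):
    """Stack the 3x3 neighbourhood of every pixel (edge-padded); shape (9, H, W)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])

def morphological_gradient(img):
    """Dilation minus erosion with a 3x3 square structuring element."""
    stack = _neighbourhood_stack(img)
    return stack.max(axis=0) - stack.min(axis=0)

def raindrop_mask(rainy, clean, diff_thresh=0.1, edge_thresh=0.1):
    """Binary mask: strongly changed pixels plus their edges, dilated once."""
    diff = difference_map(rainy, clean)
    region = diff > diff_thresh                          # raindrop interior
    edges = morphological_gradient(diff) > edge_thresh   # raindrop boundary
    mask = region | edges
    # One 3x3 dilation pass closes small gaps and widens the mask by one pixel.
    return _neighbourhood_stack(mask.astype(np.uint8)).max(axis=0).astype(bool)
```

The resulting mask marks where an inpainting model would be allowed to synthesize raindrops; images are assumed to be float arrays in [0, 1] with shape (H, W, 3).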

3.1.2. Data Augmentation

3.2. Removal-Raindrop Diffusion Module
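The two ingredients named for this module in the method overview, a smooth L1 training loss and DDIM-based sampling, can be sketched in NumPy as follows. This is a generic illustration, not the authors' code: `beta`, the step counts, and the function names are assumptions, and the noise prediction `eps_pred` would come from the trained denoising network, which is not reproduced here.

```python
import numpy as np

def smooth_l1(pred, target, beta=1.0):
    """Quadratic for |error| < beta, linear otherwise; mean over all elements."""
    err = np.abs(pred - target)
    loss = np.where(err < beta, 0.5 * err**2 / beta, err - 0.5 * beta)
    return float(loss.mean())

def ddim_step(x_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update (eta = 0) from step t to an earlier step."""
    # Estimate the clean image implied by the current noise prediction.
    x0_pred = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)
    # Re-noise that estimate to the noise level of the earlier step.
    return np.sqrt(alpha_bar_prev) * x0_pred + np.sqrt(1.0 - alpha_bar_prev) * eps_pred

def ddim_timesteps(num_train_steps, num_sample_steps):
    """Evenly spaced subsequence of the training steps, in descending order."""
    steps = np.linspace(0, num_train_steps - 1, num_sample_steps)
    return steps.round().astype(int)[::-1]
```

Because the DDIM update is deterministic and can skip steps, sampling visits only the subsequence returned by `ddim_timesteps` instead of every training step, which is where the inference-time saving comes from.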

3.3. Post-Processing Stage

4. Simulation Results

4.1. Evaluation Metric

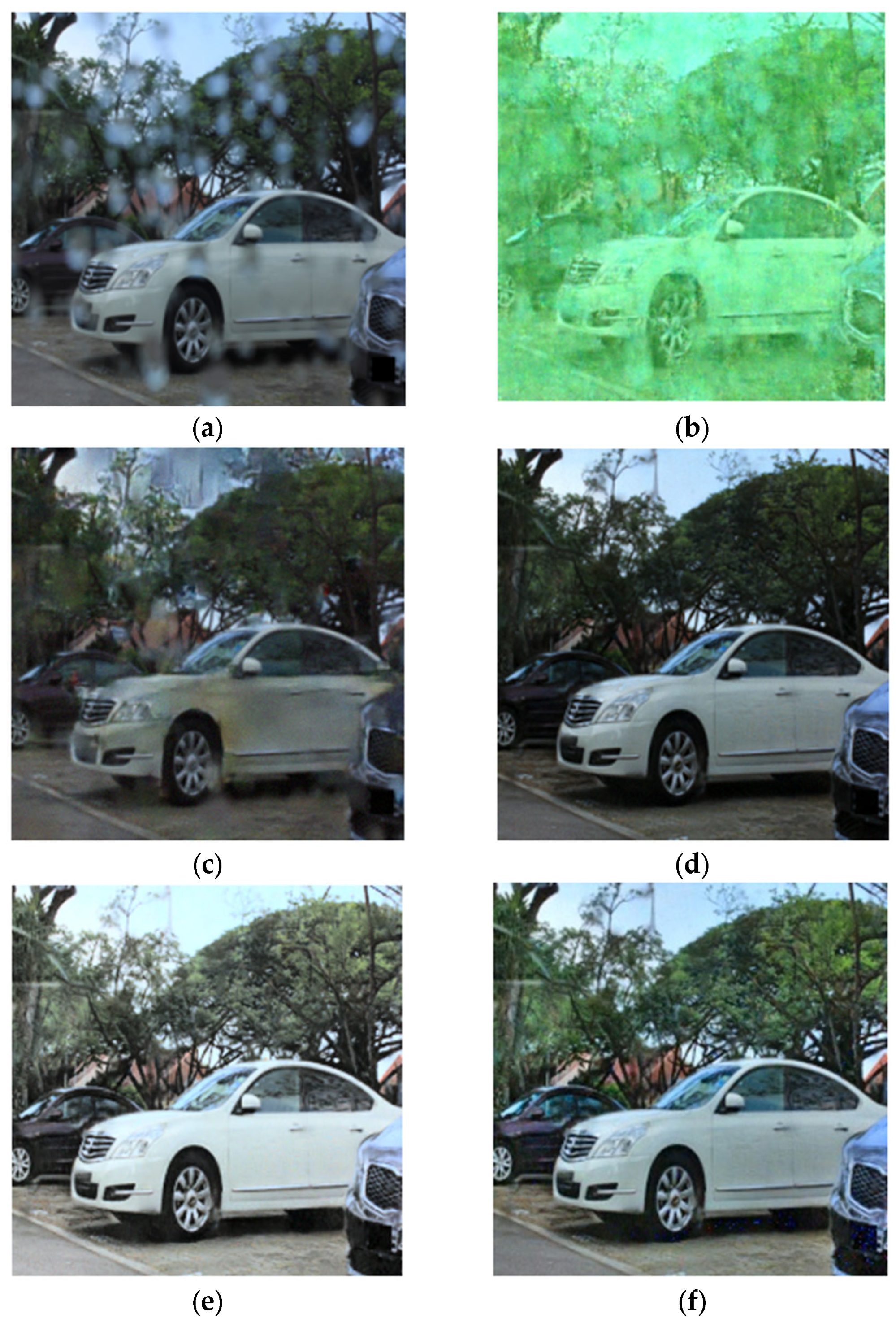

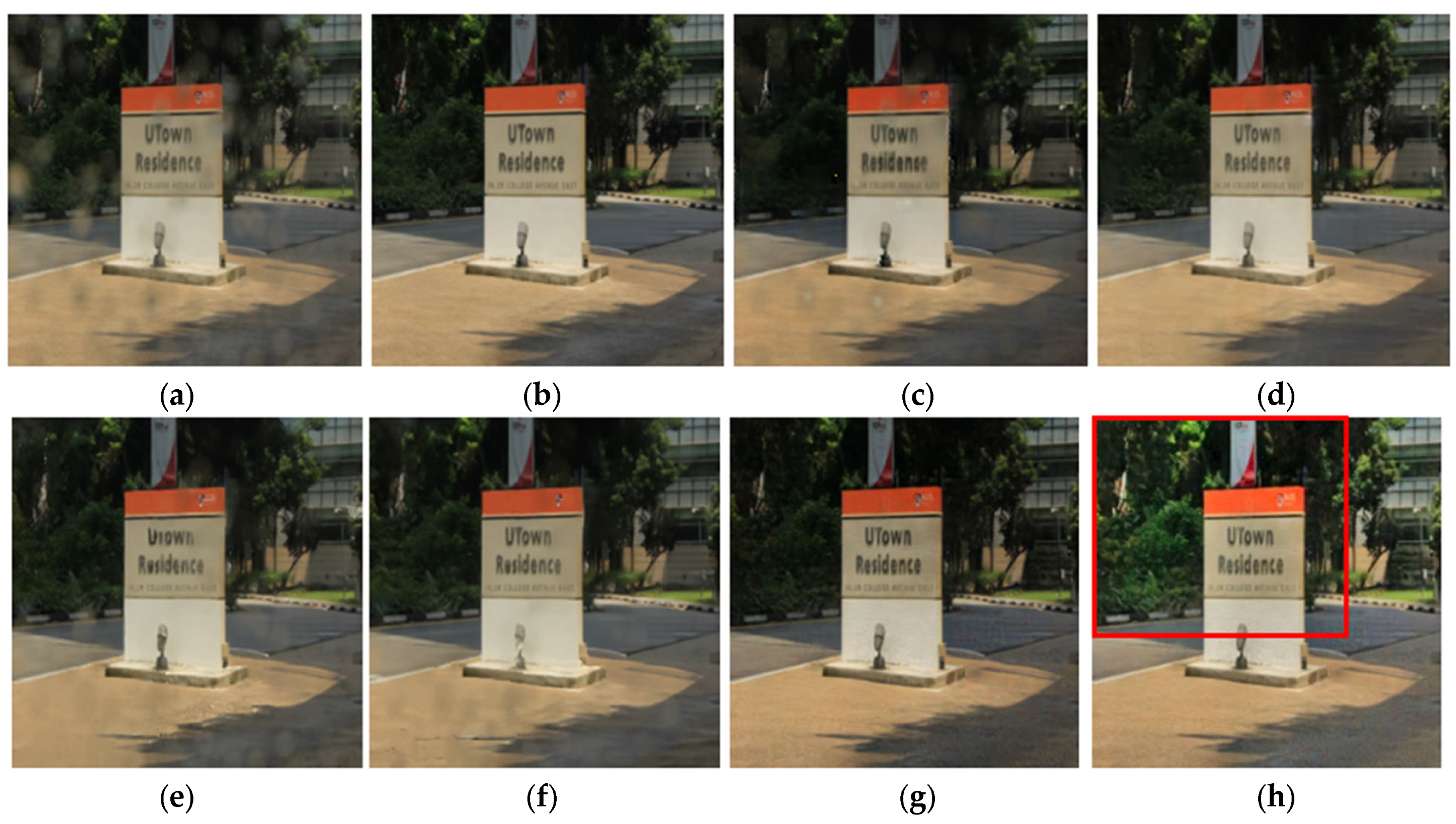

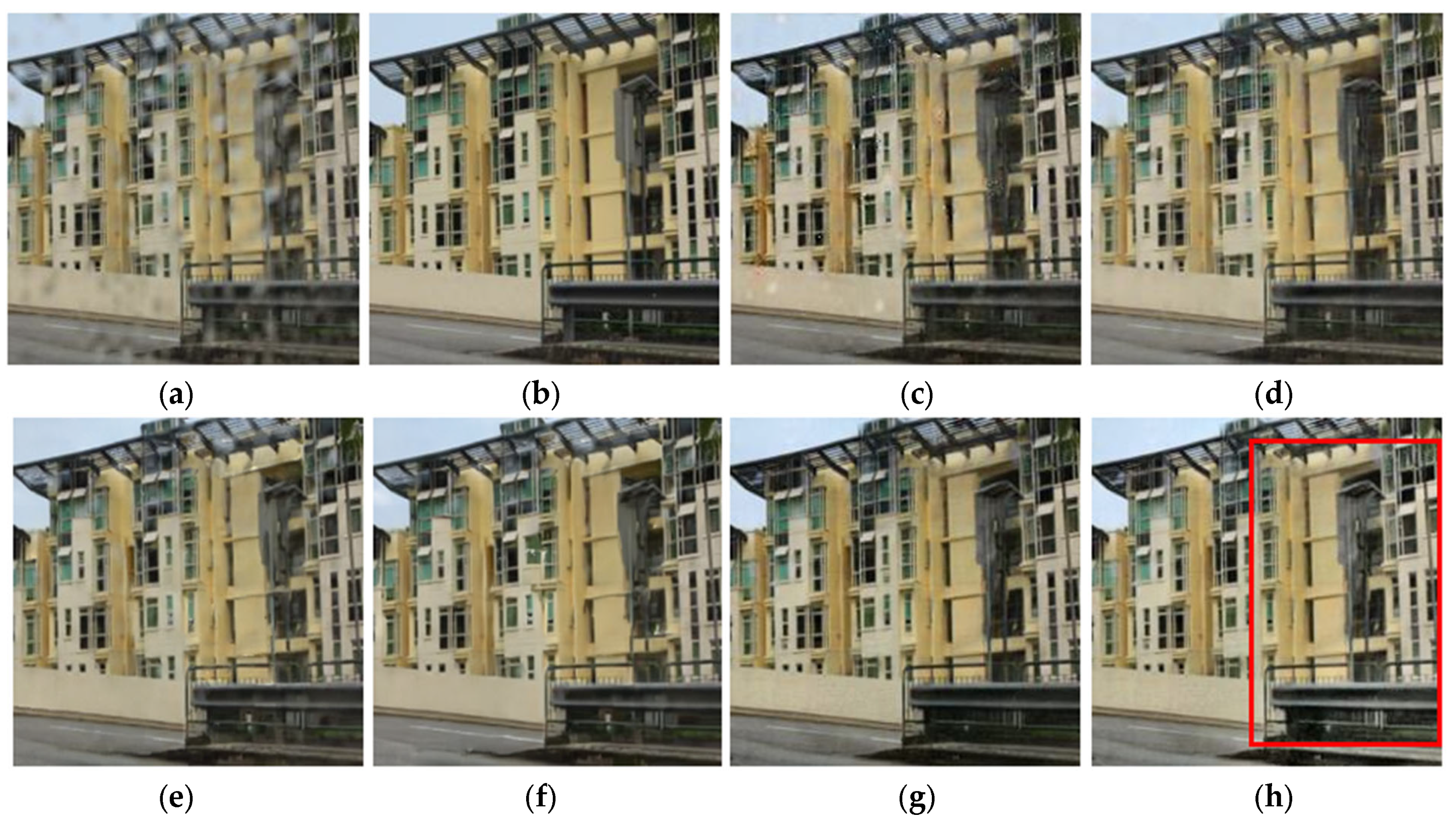

4.2. Ablation Experiments

- Case 1: The original Palette diffusion model was configured for an image-to-image setting and trained for raindrop removal on a dataset of 5000 images (1000 real and 4000 synthetic).

- Case 2: The dataset size was kept at 5000 while replacing Group Normalization with Batch Normalization to assess the impact of normalization on color stability.

- Case 3: The dataset was expanded to approximately 11,000 images and the same training procedure was repeated to evaluate whether MRD-generated synthetic data improves generalization.

- Case 4: Only the tone-mapping module was applied in post-processing to examine luminance balancing in the RRD output; color correction was excluded in this setting.

- Case 5: The full pipeline with blending and chroma compensation was applied. This is the final proposed method, which preserves color balance after tone adjustment and suppresses both oversaturation and desaturation.
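To make the normalization swap in Case 2 concrete, the sketch below contrasts which axes the two schemes pool statistics over. It is a minimal NumPy illustration in training mode without learnable scale and shift; the tensor layout (N, C, H, W) and the group count are assumptions, and it makes no claim about why one scheme behaves better for color stability.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalize each channel with statistics pooled over batch and space."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def group_norm(x, num_groups, eps=1e-5):
    """Normalize each sample separately, pooling over channel groups and space."""
    n, c, h, w = x.shape
    g = x.reshape(n, num_groups, c // num_groups, h, w)
    mean = g.mean(axis=(2, 3, 4), keepdims=True)
    var = g.var(axis=(2, 3, 4), keepdims=True)
    return ((g - mean) / np.sqrt(var + eps)).reshape(n, c, h, w)
```

Batch normalization shares one mean and variance per channel across the whole mini-batch, whereas group normalization computes them independently for every image, so the two can shift per-image channel statistics differently.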

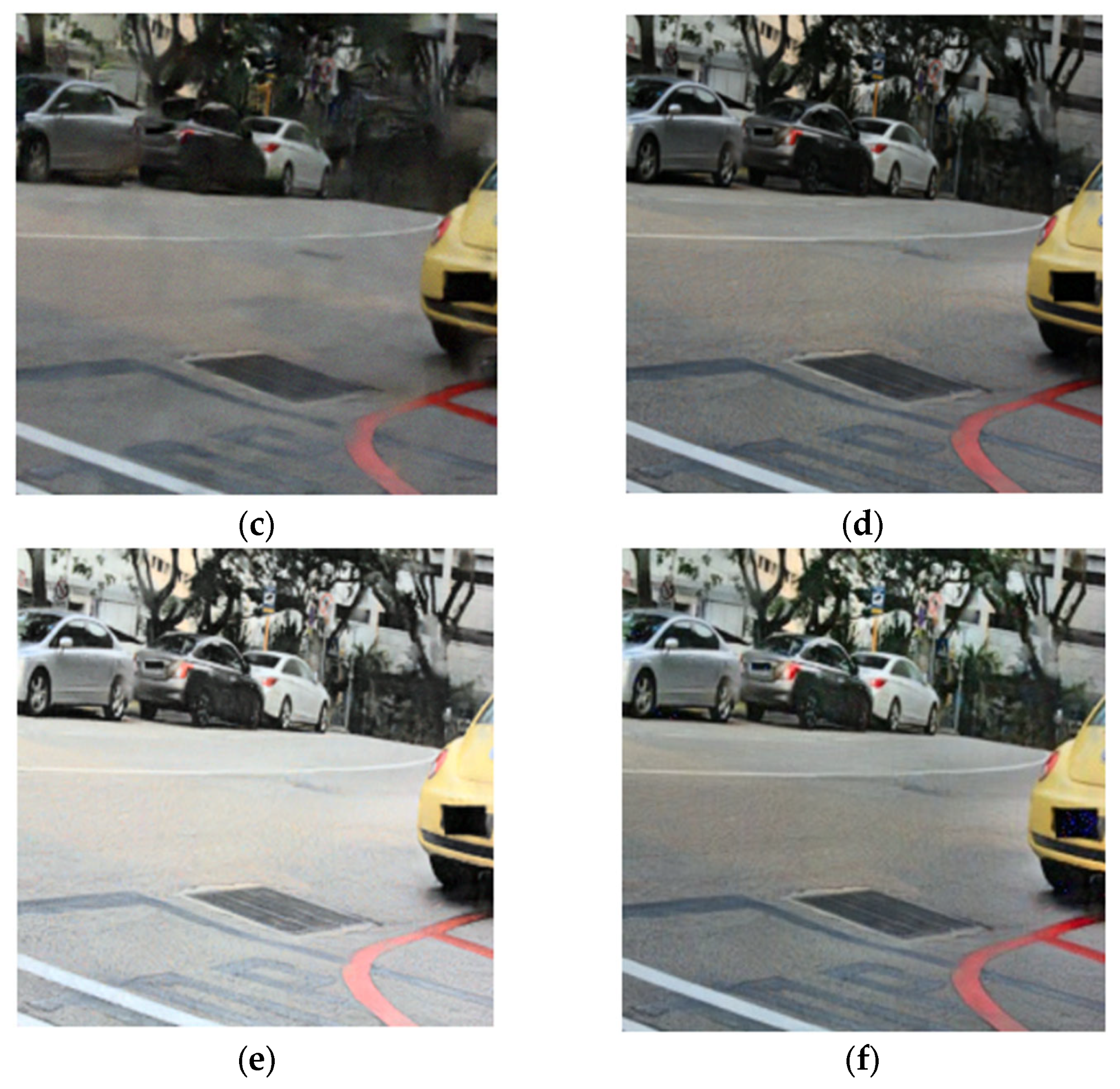

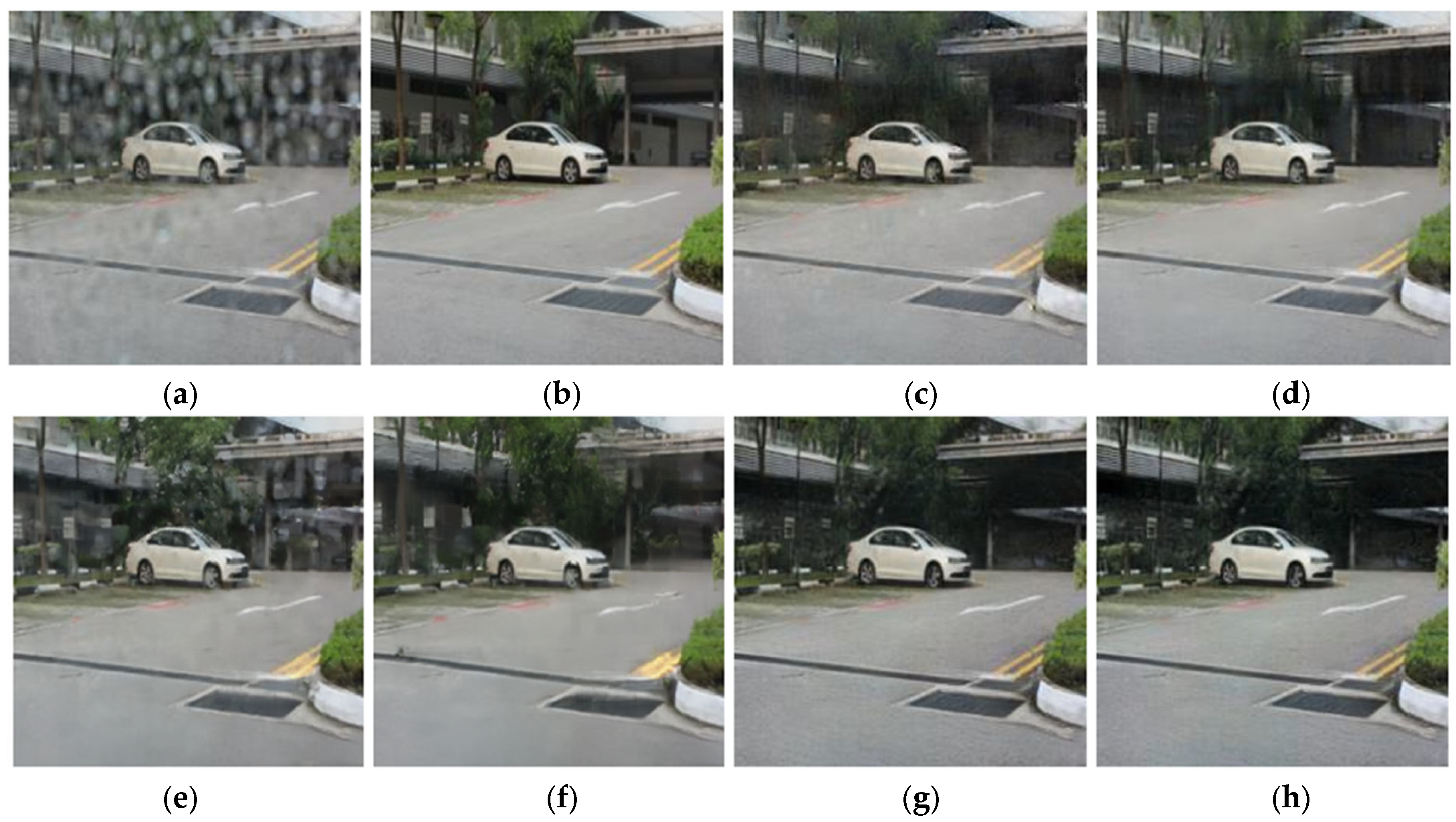

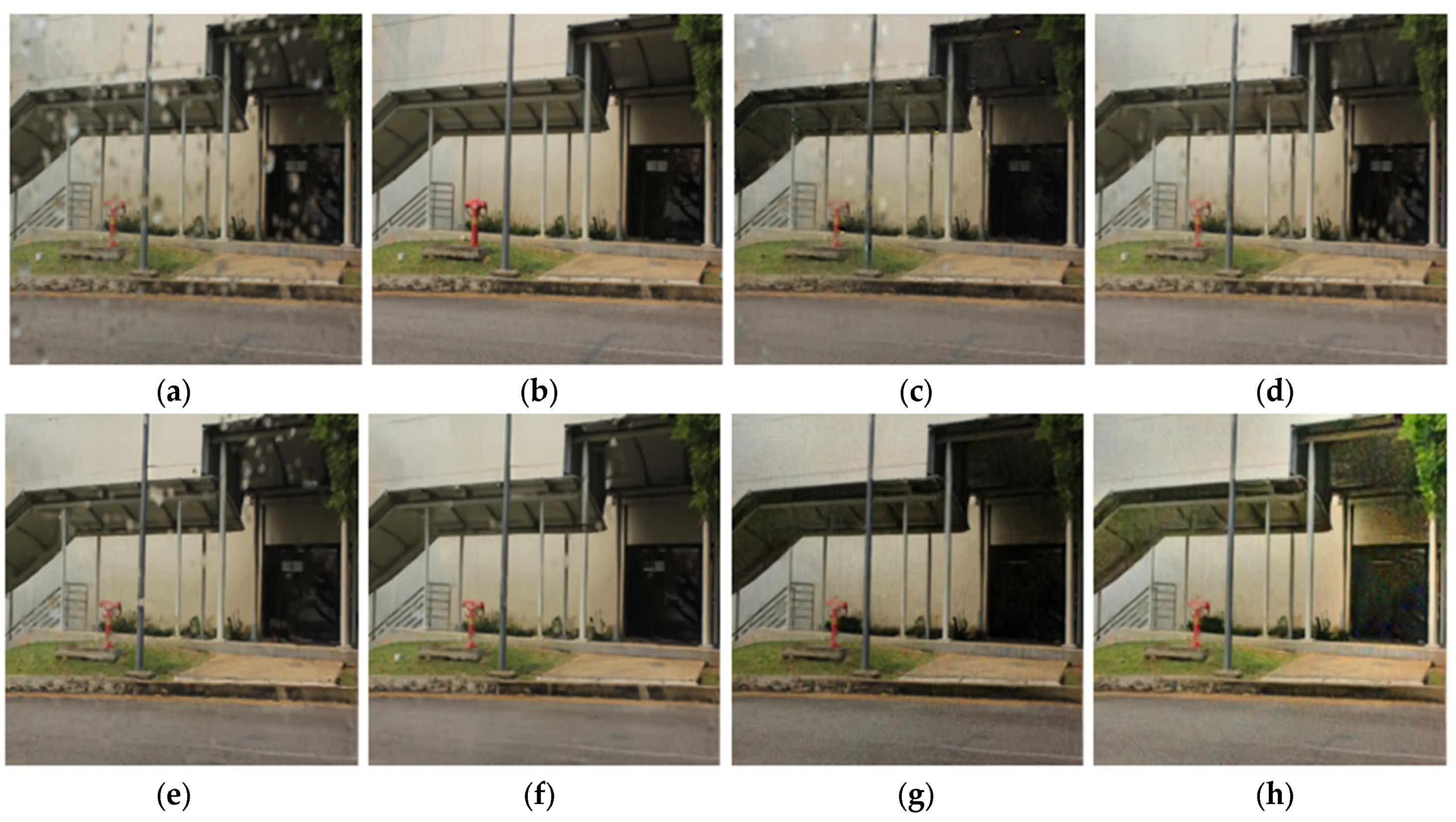

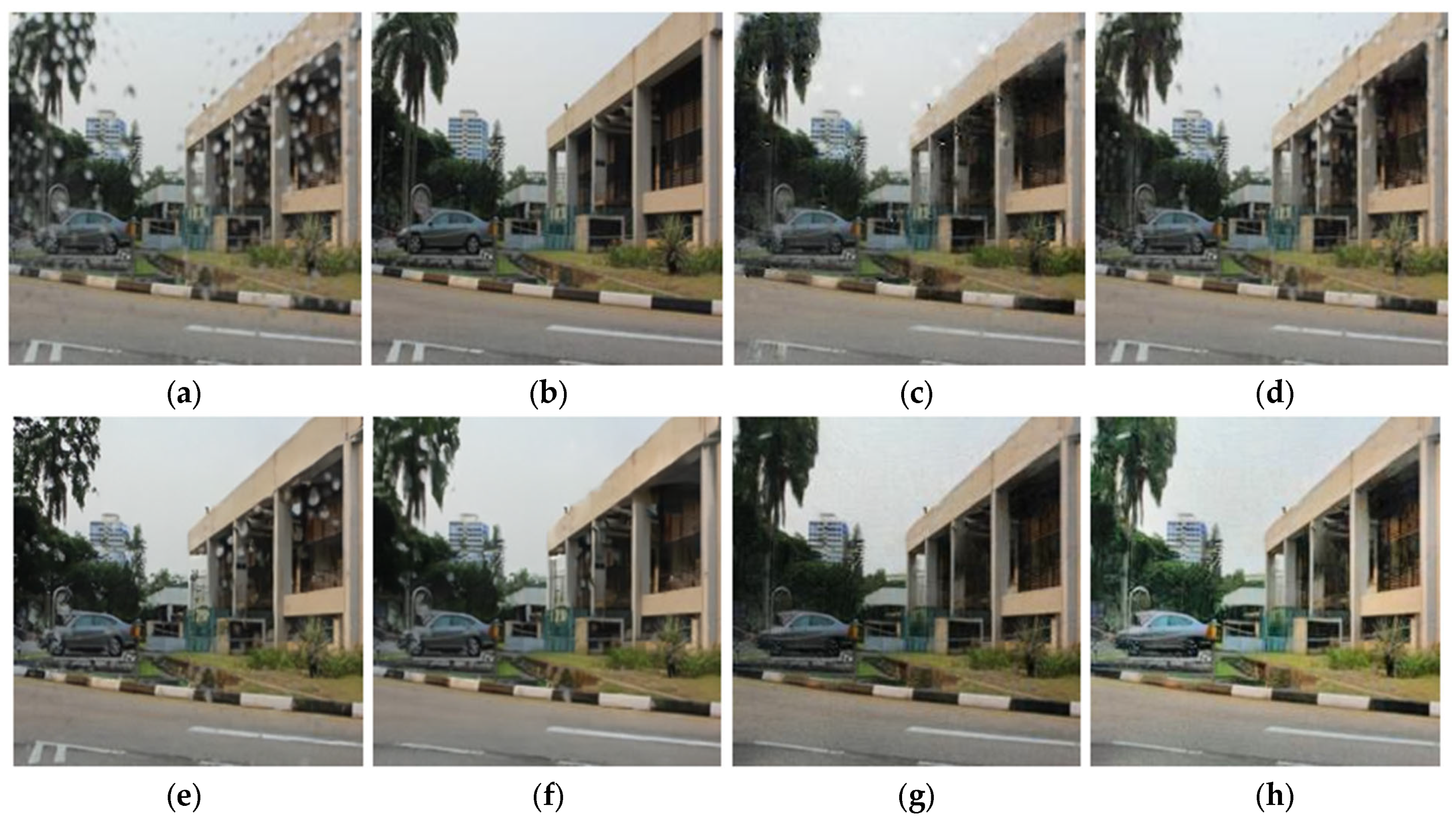

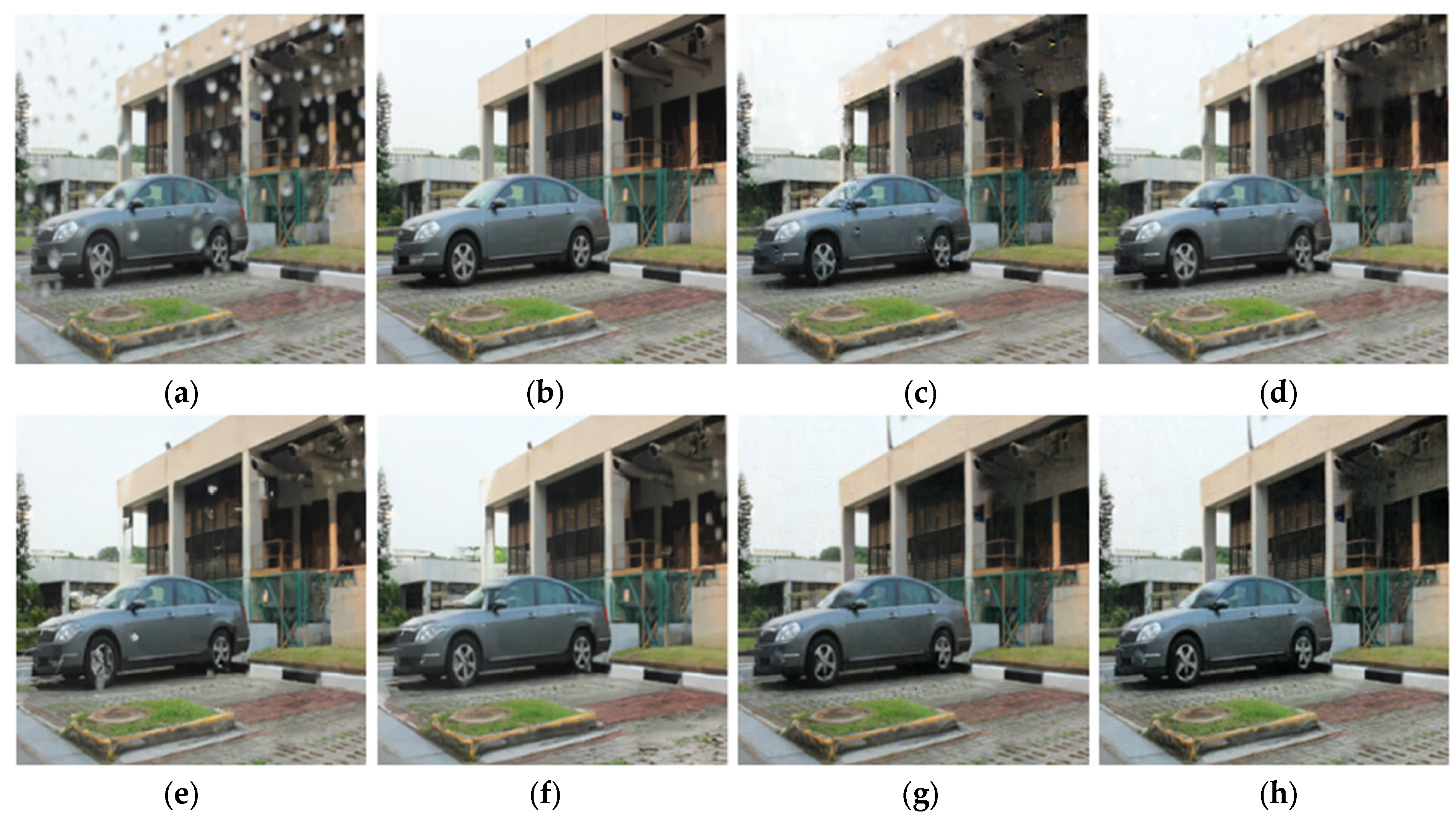

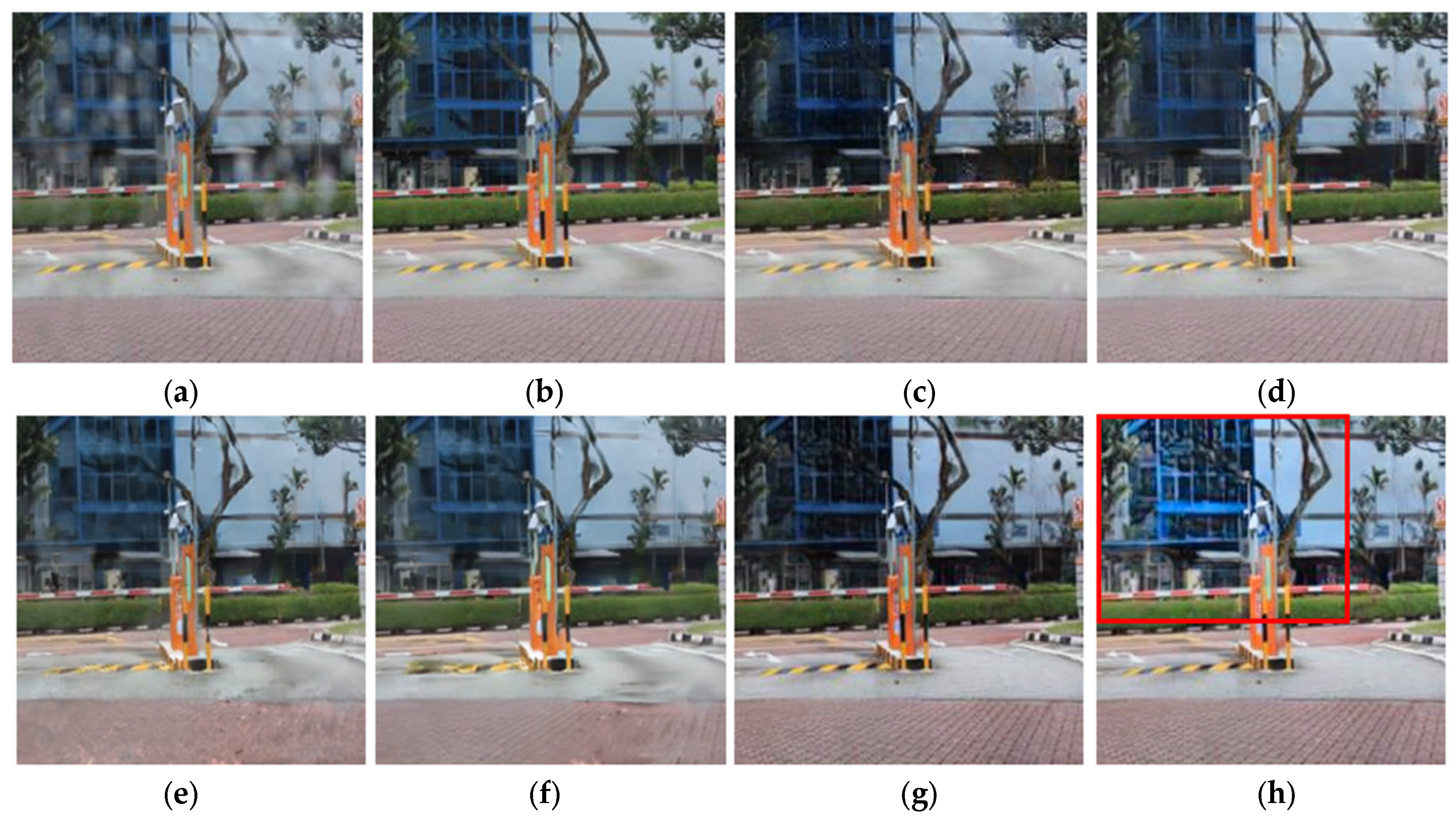

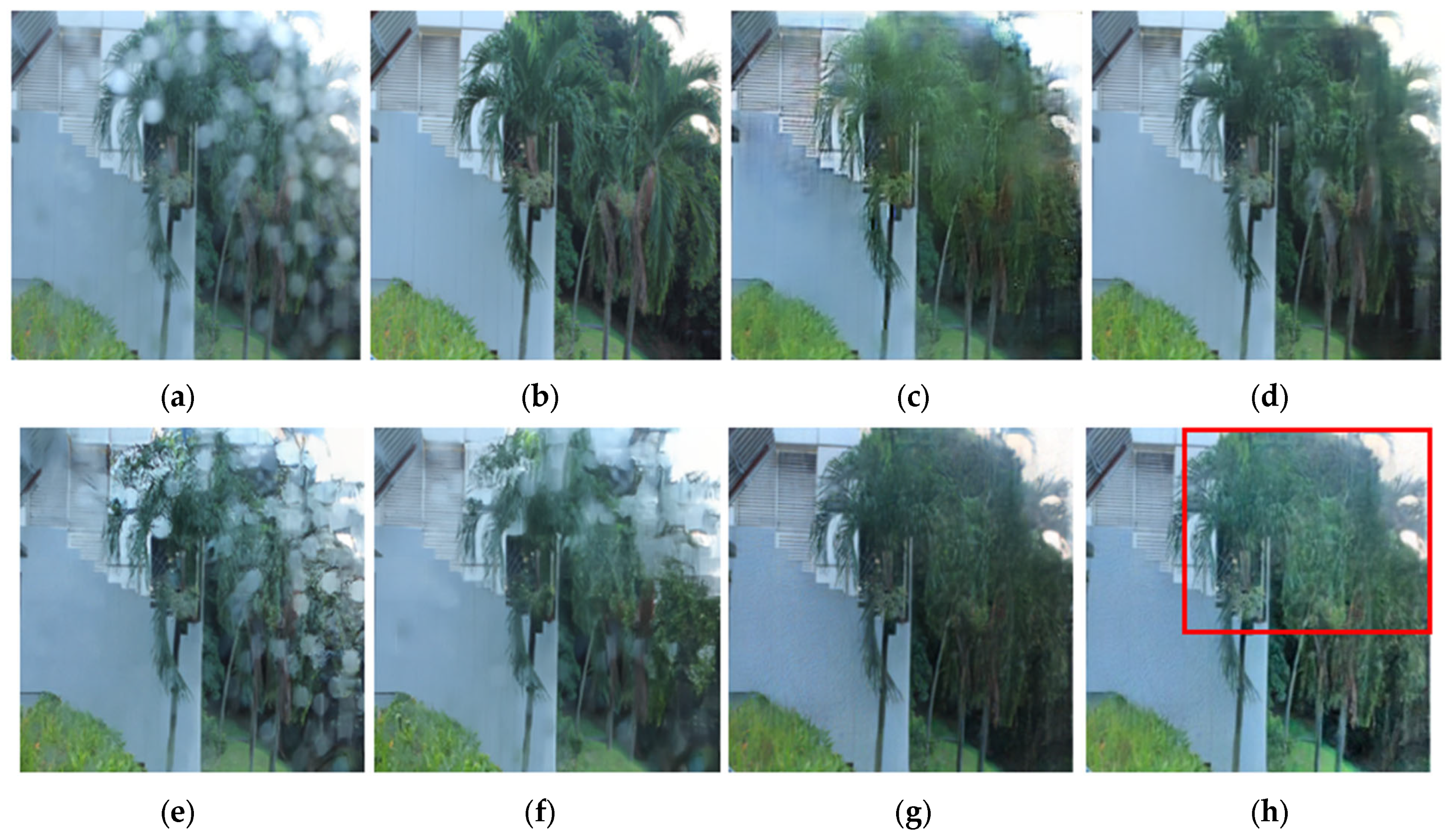

4.3. Comparative Experiments

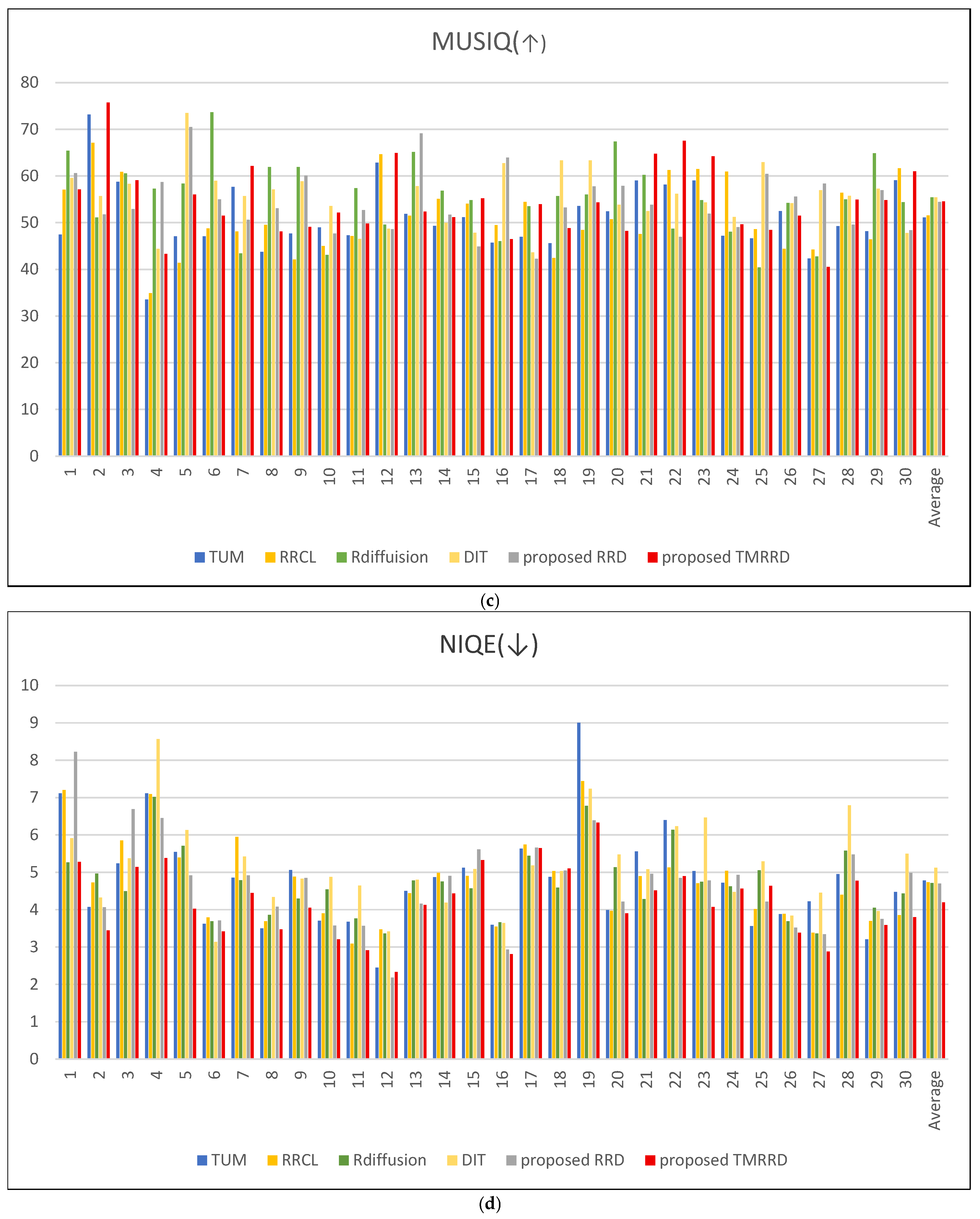

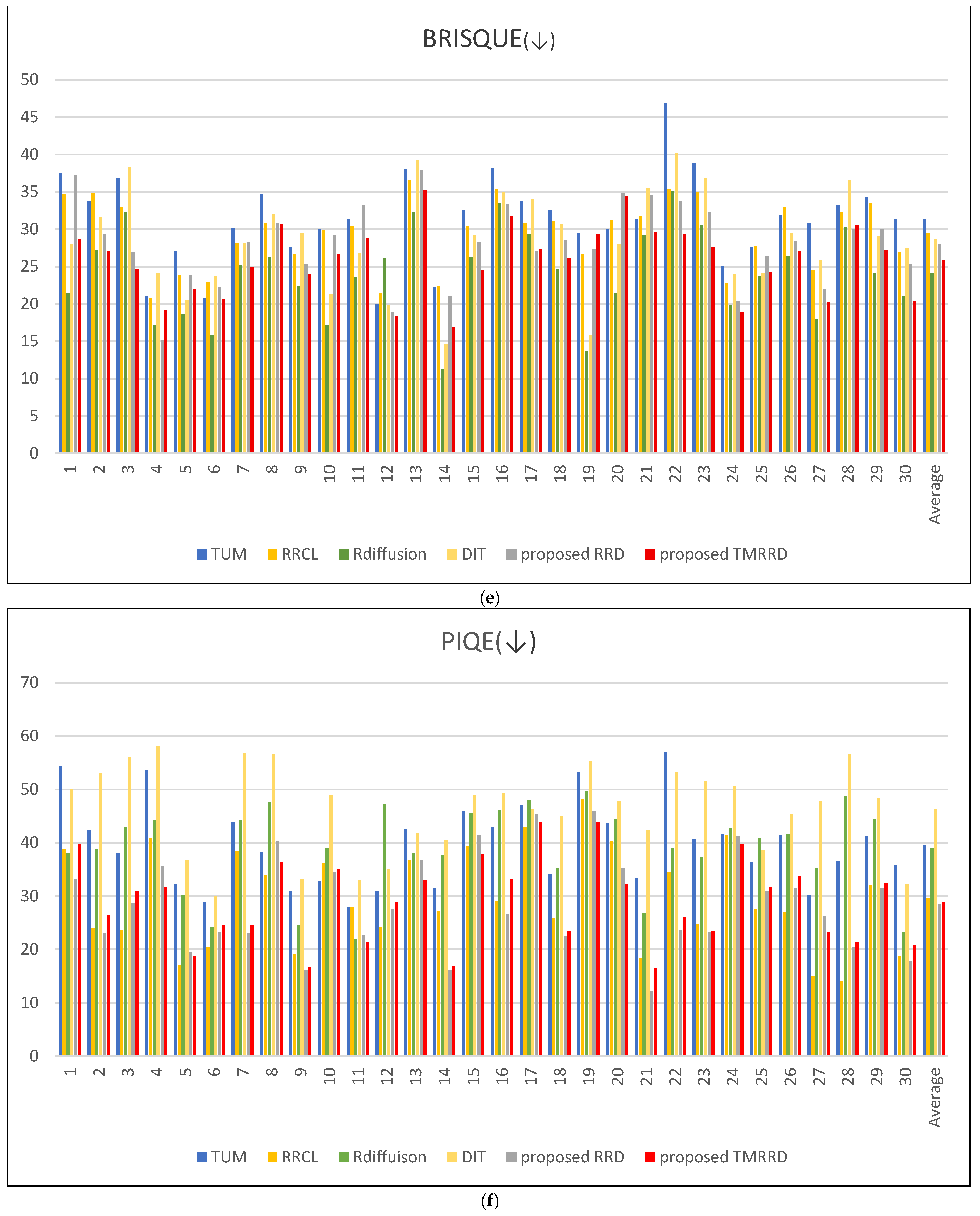

4.4. Quantitative Evaluation

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions a survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177.

2. Hamzeh, Y.; Rawashdeh, S.A. A review of detection and removal of raindrops in automotive vision systems. J. Imaging 2021, 7, 52.

3. He, D.; Shang, X.; Luo, J. Adherent mist and raindrop removal from a single image using attentive convolutional network. Neurocomputing 2022, 505, 178–187.

4. Lee, Y.; Jeon, J.; Ko, Y.; Jeon, B.; Jeon, M. Task-driven deep image enhancement network for autonomous driving in bad weather. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation ICRA, Xi’an, China, 30 May–5 June 2021; pp. 13746–13753.

5. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

6. Peng, Y. A comparative analysis between GAN and diffusion models in image generation. Trans. Comput. Sci. Intell. Syst. Res. 2024, 5, 189–195.

7. Sohl-Dickstein, J.; Weiss, E.A.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the 32nd International Conference on Machine Learning ICML, Lille, France, 6–11 July 2015; Volume 37, pp. 2256–2265.

8. Su, Z.; Zhang, Y.; Shi, J.; Zhang, X.-P. A survey of single image rain removal based on deep learning. ACM Comput. Surv. 2024, 56, 103.

9. Soboleva, V.; Shipitko, O. Raindrops on windshield dataset and lightweight gradient-based detection algorithm. In Proceedings of the 2021 IEEE Symposium Series on Computational Intelligence SSCI, Orlando, FL, USA, 5–8 December 2021; pp. 1–7.

10. Saharia, C.; Chan, W.; Chang, H.; Lee, C.A.; Ho, J.; Salimans, T.; Fleet, D.J.; Norouzi, M. Palette image-to-image diffusion models. In Proceedings of the SIGGRAPH 2022 Conference, Vancouver, BC, Canada, 8–11 August 2022.

11. He, C.; Lu, H.; Qin, T.; Li, P.; Wei, Y.; Loy, C.C. Diffusion models in low-level vision a survey. IEEE Trans. Neural Netw. Learn. Syst. 2025, 34, 1972–1987.

12. Barron, J.T. A general and adaptive robust loss function. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition CVPR, Long Beach, CA, USA, 15–20 June 2019; pp. 4326–4334.

13. Ioffe, S. Batch renormalization towards reducing minibatch dependence in batch-normalized models. Adv. Neural Inf. Process. Syst. 2017, 30, 1945–1953.

14. Song, J.; Meng, C.; Ermon, S. Denoising diffusion implicit models. In Proceedings of the International Conference on Learning Representations ICLR, Vienna, Austria, 4 May 2021.

15. Go, Y.-H.; Lee, S.-H.; Lee, S.-H. Multiexposed image-fusion strategy using mutual image translation learning with multiscale surround switching maps. Mathematics 2024, 12, 3244.

16. Wang, S.-Y.; Wang, O.; Zhang, R.; Owens, A.; Efros, A.A. CNN-generated images are surprisingly easy to spot for now. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition CVPR, Seattle, WA, USA, 14–19 June 2020; pp. 8695–8704.

17. Goodfellow, I. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

18. Qian, R.; Tan, R.T.; Yang, W.; Su, J.; Liu, J. Attentive generative adversarial network for raindrop removal from a single image. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2482–2491.

19. Nguyen, D.M.; Lee, S.-W. UnfairGAN an enhanced generative adversarial network for raindrop removal from a single image. Expert Syst. Appl. 2023, 228, 118232.

20. Zheng, K.; Chen, Y.; Chen, H.; He, G.; Liu, M.-Y.; Zhu, J.; Zhang, Q. Direct discriminative optimization your likelihood-based visual generative model is secretly a GAN discriminator. arXiv 2025, arXiv:2503.01103.

21. Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

22. Meng, C.; He, Y.; Song, Y.; Song, J.; Wu, J.; Zhu, J.-Y.; Ermon, S. SDEdit guided image synthesis and editing with stochastic differential equations. In Proceedings of the International Conference on Learning Representations ICLR, Virtual, 25 April 2022.

23. Sasaki, H.; Willcocks, C.G.; Breckon, T.P. UNIT-DDPM unpaired image translation with denoising diffusion probabilistic models. arXiv 2021, arXiv:2104.05358.

24. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR image restoration using Swin Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops ICCVW, Montreal, QC, Canada, 10–17 October 2021; pp. 1833–1844.

25. Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple baselines for image restoration. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 17–33.

26. Evans, A.N.; Liu, X.U. A morphological gradient approach to color edge detection. IEEE Trans. Image Process. 2006, 15, 1454–1463.

27. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

28. Haralick, R.M.; Sternberg, S.R.; Zhuang, X. Image analysis using mathematical morphology. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 4, 532–550.

29. Moritz, N.; Hori, T.; Le Roux, J. Capturing multi-resolution context by dilated self-attention. In Proceedings of the IEEE International Conference on Acoustics Speech and Signal Processing ICASSP, Toronto, ON, Canada, 6–11 June 2021; pp. 6429–6433.

30. Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision ICCV, Venice, Italy, 22–29 October 2017; pp. 2242–2251.

31. Quan, R.; Yu, X.; Liang, Y.; Yang, Y. Removing raindrops and rain streaks in one go. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition CVPR, Virtual, 19–25 June 2021; pp. 9147–9156.

32. Roboflow Universe. CarsAndTrafficSignal Dataset. 2025. Available online: https://universe.roboflow.com/pandyavedant18-gmail-com/carsandtrafficsignal (accessed on 22 August 2025).

33. Jin, Y.; Li, X.; Wang, J.; Zhang, Y.; Zhang, M. Raindrop clarity a dual-focused dataset for day and night raindrop removal. In Proceedings of the Computer Vision—ECCV 2024, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Eds.; Springer: Cham, Switzerland, 2025; pp. 1–17.

34. Han, Y.-K.; Jung, S.-W.; Kwon, H.-J.; Lee, S.-H. Rainwater-removal image conversion learning with training pair augmentation. Entropy 2023, 25, 118.

35. Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

36. Chen, W.-T.; Huang, Z.-K.; Tsai, C.-C.; Yang, H.-H.; Ding, J.-J.; Kuo, S.-Y. Learning multiple adverse weather removal via two-stage knowledge learning and multi-contrastive regularization toward a unified model. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 17632–17641.

37. Özdenizci, O.; Legenstein, R. Restoring vision in adverse weather conditions with patch-based denoising diffusion models. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10346–10357.

38. Ying, Z.; Niu, H.; Gupta, P.; Mahajan, D.; Ghadiyaram, D.; Bovik, A. From patches to pictures PaQ-2-PiQ mapping the perceptual space of picture quality. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition CVPR, Seattle, WA, USA, 14–19 June 2020; pp. 3575–3585.

39. Wang, J.; Chan, K.C.K.; Loy, C.C. Exploring CLIP for assessing the look and feel of images. Proc. AAAI Conf. Artif. Intell. 2023, 37, 2555–2563.

40. Ke, J.; Wang, Q.; Wang, Y.; Milanfar, P.; Yang, F. MUSIQ multiscale image quality transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision ICCV, Montreal, QC, Canada, 10–17 October 2021; pp. 5128–5137.

41. Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a completely blind image quality analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

42. Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

43. Venkatanath, N.; Praneeth, D.; Bh, M.C.; Channappayya, S.S.; Medasani, S.S. Blind image quality evaluation using perception based features. In Proceedings of the 2015 Twenty First National Conference on Communications NCC, Mumbai, India, 27 February–1 March 2015; pp. 1–6.

44. Blau, Y.; Mechrez, R.; Timofte, R.; Michaeli, T.; Zelnik-Manor, L. The 2018 PIRM Challenge on perceptual image super-resolution. In Proceedings of the European Conference on Computer Vision ECCV Workshops, Munich, Germany, 8–14 September 2018; Springer: Cham, Switzerland, 2019; pp. 334–355.

45. Ma, C.; Yang, C.-Y.; Yang, X.; Yang, M.-H. Learning a no-reference quality metric for single-image super-resolution. Comput. Vis. Image Underst. 2017, 158, 1–16.

46. Yang, J.; Du, B.; Wang, D.; Zhang, L. ITER image-to-pixel representation for weakly supervised HSI classification. IEEE Trans. Image Process. 2024, 33, 257–272.

47. Wang, D.; Hu, M.; Jin, Y.; Miao, Y.; Yang, J.; Xu, Y.; Qin, X.; Ma, J.; Sun, L.; Li, C.; et al. HyperSIGMA hyperspectral intelligence comprehension foundation model. IEEE Trans. Pattern Anal. Mach. Intell. 2025, in press.

| Metric | RRCL [34] | TUM [36] | RDiffusion [37] | DIT [33] | Proposed (RRD) | Proposed (TMRRD) |
|---|---|---|---|---|---|---|
| Clean accuracy | 84.9% | 79.2% | 77.4% | 79.2% | 95.2% | 95.2% |

| Name | Components of Each Stage |
|---|---|
| Case 1 | Palette based image-to-image translation |
| Case 2 | Batch normalization instead of Group normalization |
| Case 3 | RRD module |
| Case 4 | RRD with MITM tone mapping only |
| Case 5 | TMRRD with tone blending and color compensation |

| Metric | RRCL | TUM | RDiffusion | DIT | Proposed (RRD) | Proposed (TMRRD) |
|---|---|---|---|---|---|---|
| PaQ-2-PiQ | 69.9767 | 69.3781 | 71.5601 | 71.7653 | 71.5080 | 71.80081 |
| CLIP-IQA+ | 0.6310 | 0.5732 | 0.6349 | 0.6496 | 0.6608 | 0.6452 |
| MUSIQ | 51.1206 | 51.5334 | 55.4226 | 55.4252 | 54.4827 | 54.5679 |
| NIQE | 4.7855 | 4.7384 | 4.7156 | 5.1256 | 4.6992 | 4.1983 |
| BRISQUE | 31.3016 | 29.4883 | 24.1244 | 28.6625 | 28.0645 | 25.8919 |
| PIQE | 39.6359 | 29.5934 | 38.9378 | 46.2912 | 28.5374 | 28.9483 |
| PI | 3.4175 | 3.3398 | 3.3472 | 3.9030 | 3.6340 | 3.0632 |
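The metrics in the table above do not all point the same way: PaQ-2-PiQ, CLIP-IQA+, and MUSIQ are higher-is-better scores, while NIQE, BRISQUE, PIQE, and PI are lower-is-better. The short script below is a reading aid rather than part of the evaluation pipeline; it copies the table values and picks the best method per metric under those conventions. The variable and function names are illustrative.

```python
# Scores copied from the quantitative table; column order matches METHODS.
METHODS = ["RRCL", "TUM", "RDiffusion", "DIT", "RRD", "TMRRD"]
SCORES = {
    "PaQ-2-PiQ": [69.9767, 69.3781, 71.5601, 71.7653, 71.5080, 71.80081],
    "CLIP-IQA+": [0.6310, 0.5732, 0.6349, 0.6496, 0.6608, 0.6452],
    "MUSIQ": [51.1206, 51.5334, 55.4226, 55.4252, 54.4827, 54.5679],
    "NIQE": [4.7855, 4.7384, 4.7156, 5.1256, 4.6992, 4.1983],
    "BRISQUE": [31.3016, 29.4883, 24.1244, 28.6625, 28.0645, 25.8919],
    "PIQE": [39.6359, 29.5934, 38.9378, 46.2912, 28.5374, 28.9483],
    "PI": [3.4175, 3.3398, 3.3472, 3.9030, 3.6340, 3.0632],
}
HIGHER_IS_BETTER = {"PaQ-2-PiQ", "CLIP-IQA+", "MUSIQ"}

def best_method(metric):
    """Return the method with the best score for one metric."""
    values = SCORES[metric]
    pick = max if metric in HIGHER_IS_BETTER else min
    return METHODS[values.index(pick(values))]

winners = {metric: best_method(metric) for metric in SCORES}
for metric, method in winners.items():
    print(f"{metric:10s} best: {method}")
```

Read this way, one of the two proposed variants (RRD or TMRRD) has the best score on five of the seven metrics, with DIT leading on MUSIQ and RDiffusion on BRISQUE.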

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Go, Y.-H.; Lee, S.-H. Weather-Corrupted Image Enhancement with Removal-Raindrop Diffusion and Mutual Image Translation Modules. Mathematics 2025, 13, 3176. https://doi.org/10.3390/math13193176
