Quantum Computing Meets Deep Learning: A QCNN Model for Accurate and Efficient Image Classification

Abstract

1. Introduction

1.1. Motivation and Research Contribution

- This study presents a fully quantum convolutional neural network (QCNN) model designed for binary classification on the MNIST dataset, focusing on distinguishing between the digits 0 and 1. We further extend the model to wine classification using the Wine dataset. While previous approaches often rely on either classical deep learning models or hybrid quantum-classical structures, our work explores a purely quantum model that is both compact and efficient.

- One of the main contributions is the design of a shallow QCNN architecture that operates on small subsets of qubits at each layer. This avoids the use of deep parameterized quantum circuits, which are prone to noise and training instability, particularly on current quantum hardware. By keeping the circuit depth low and the architecture modular, we ensure better trainability and scalability.

- We also introduce a specific quantum unitary block based on a combination of Ry, Rz, U3, and CNOT gates. These blocks form the building units of our convolutional layers and include only 45 trainable parameters in total. This efficient gate design reduces resource consumption while maintaining expressivity.

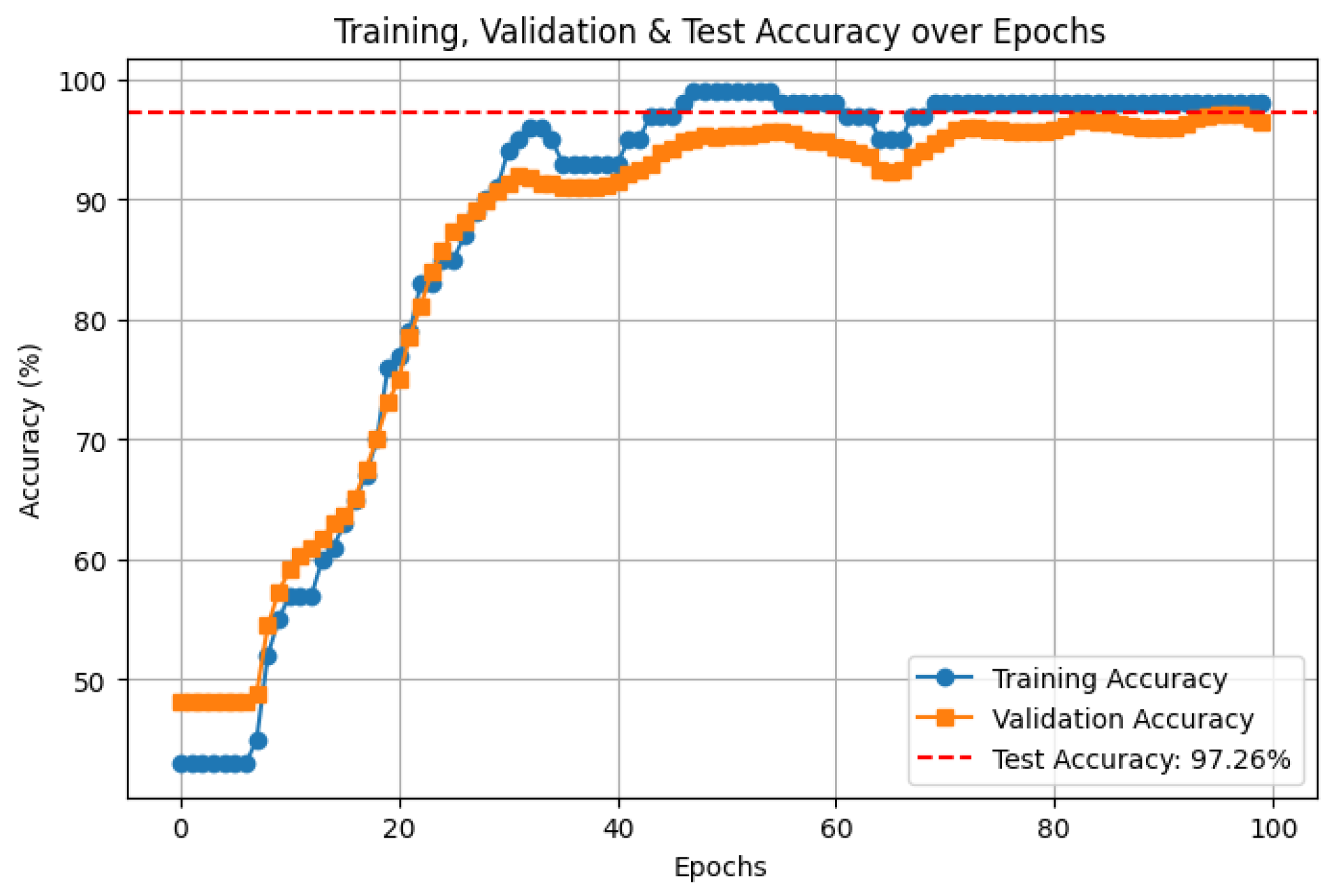

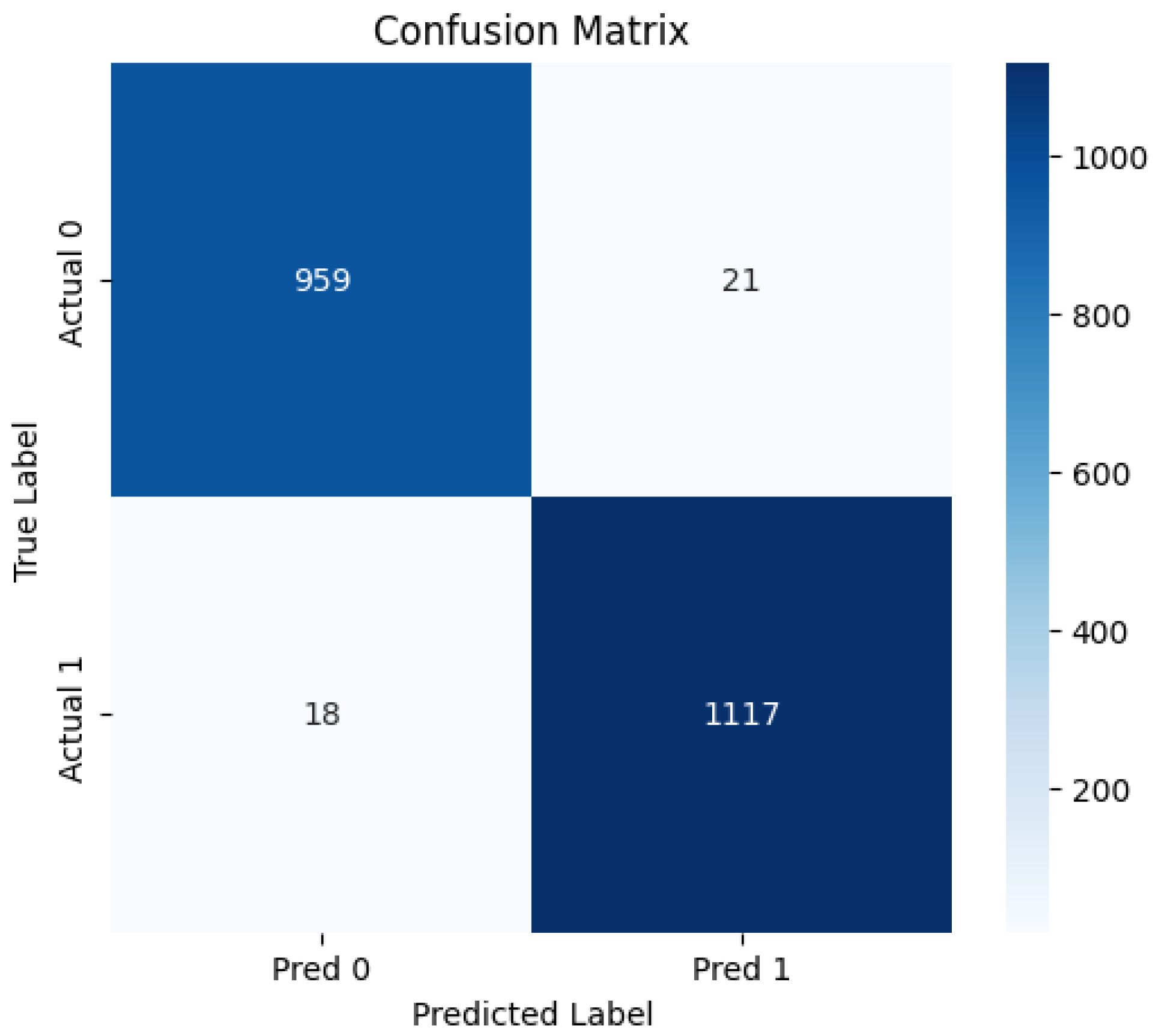

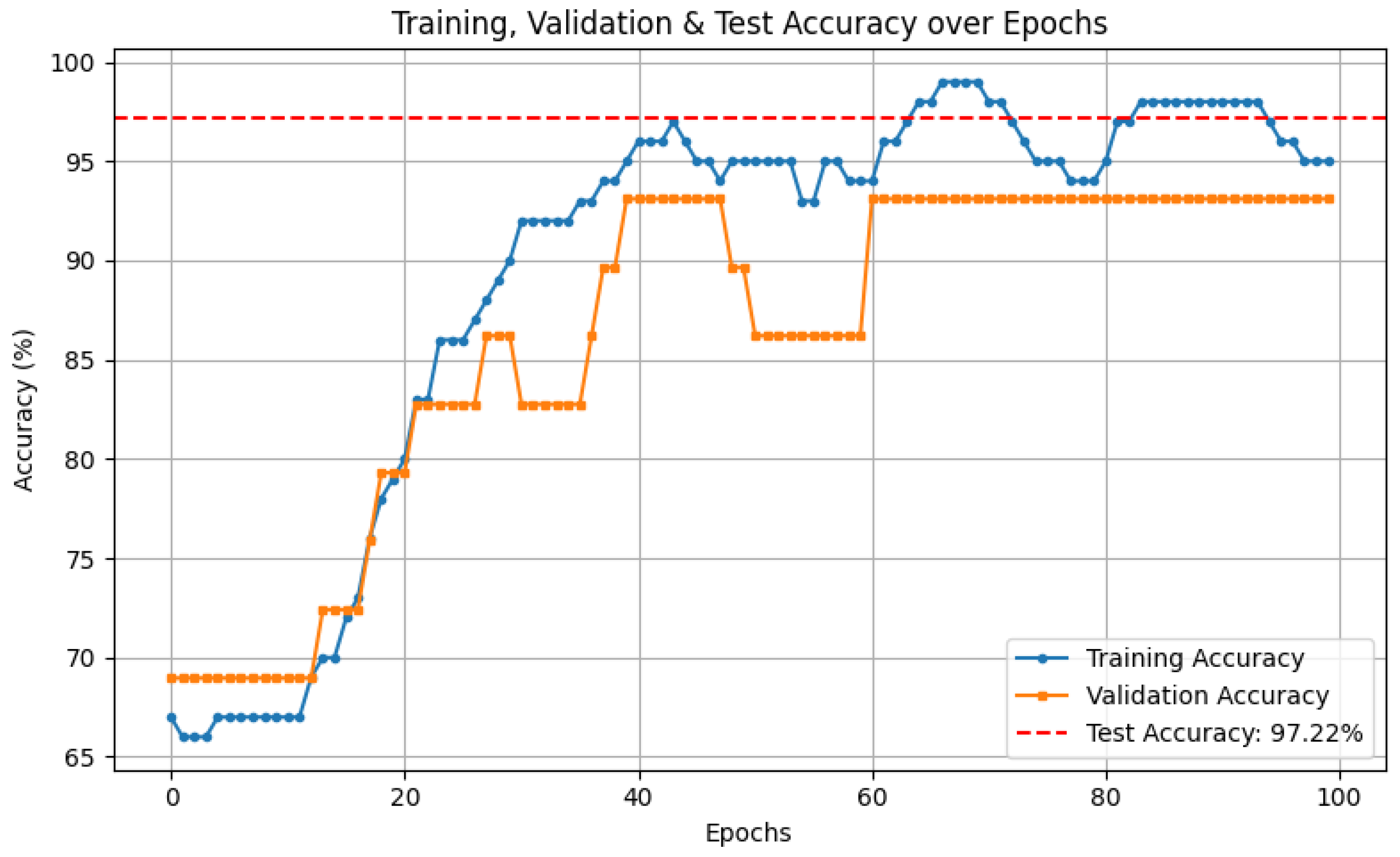

- Despite the simplicity of the architecture, our model achieves an accuracy of 97.26% on the MNIST binary classification task and 97.22% on the Wine dataset classification task. Our results show that the proposed approach is competitive and demonstrate the potential of quantum-inspired methods for practical classification tasks. We also provide a computational analysis showing that our model compares favorably in terms of parameter count, gate complexity, and memory requirements.

- Altogether, this work demonstrates that a carefully structured shallow quantum model can perform competitively with more complex alternatives, making it a promising candidate for practical use on near-term quantum hardware.

1.2. Organization

2. Related Work

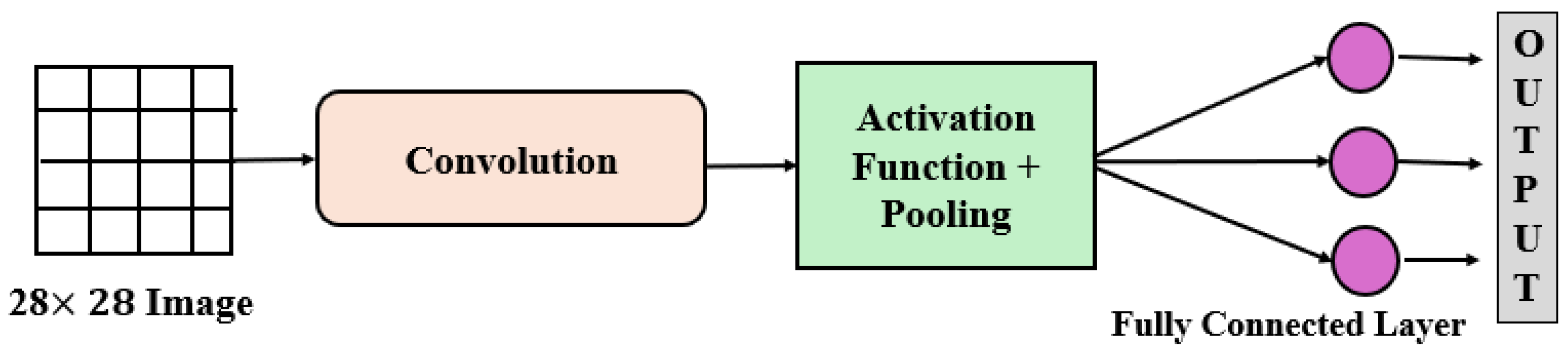

2.1. Convolutional Neural Network

2.2. Quantum Machine Learning

2.3. Quantum Convolutional Neural Network (QCNN)

3. Performance of Neural Network Models in Classification

4. Preliminaries

4.1. Quantum State Transformation

4.2. Quantum Measurement

4.3. Data Encoding

1. Polynomial Complexity: The number of quantum gates required for encoding should increase at a polynomial or sub-polynomial rate as the number of qubits expands. If the gate count grows exponentially, the encoding becomes impractical.

2. Hardware Efficiency: The state preparation circuit should be designed so that single- and two-qubit quantum gates can be implemented efficiently, without introducing excessive hardware costs or increasing error rates.

4.3.1. Amplitude Encoding

- are the amplitude coefficients that define the likelihood of observing the corresponding basis state |j〉.

- Each basis state |j〉 represents one possible fundamental state in an n-qubit quantum framework.
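As a rough illustration of amplitude encoding, the sketch below normalizes a classical feature vector so that its entries can serve as amplitudes of the basis states |j〉, padding with zeros up to the next power of two. The function name `amplitude_encode` and the zero-padding choice are assumptions made for this example, not details taken from the paper.

```python
import math


def amplitude_encode(features):
    """Return (amplitudes, n_qubits) for a classical feature vector.

    The vector is zero-padded to length 2**n_qubits (an assumed convention)
    and divided by its Euclidean norm so the squared amplitudes sum to 1.
    """
    n_qubits = max(1, (len(features) - 1).bit_length())
    padded = list(features) + [0.0] * (2 ** n_qubits - len(features))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0.0:
        raise ValueError("cannot encode an all-zero vector")
    return [v / norm for v in padded], n_qubits


# Three features need two qubits (four basis states).
amplitudes, n_qubits = amplitude_encode([3.0, 0.0, 4.0])

# The squared amplitude is the probability of observing basis state |j>.
probabilities = [a * a for a in amplitudes]
```

For the input [3, 0, 4], the probabilities of observing |00〉 and |10〉 are 0.36 and 0.64, and the other two basis states have probability zero.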

4.3.2. Angle Encoding

- Rescaling pixel values: Since pixel intensity values typically range from 0 to 1 (after normalization), they are mapped to the range [0,] for quantum encoding.

- Calculation of the rotation angle: Given a pixel value at position (x, y), the corresponding rotation angle is computed as

- Application of the rotation gate: Each qubit is assigned to one pixel and is rotated using the gate.

- Final quantum state representation: Using n qubits, the resulting quantum state obtained by applying the gate to each qubit is

- n is the number of qubits (equal to the number of pixels).

- (rescaled classical data feature).

- and can be fixed values or additional learnable parameters.

- $|0\rangle^{\otimes n}$ denotes the initial zero state of all qubits before encoding.
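The encoding steps above can be sketched in plain Python. The sketch assumes that each pixel value x in [0, 1] is rescaled to an angle θ = π·x and that the rotation is an Ry gate applied to |0〉. Both are assumptions, since the extracted text does not spell out the formula, and the function names are illustrative only.

```python
import math


def ry_on_zero(theta):
    """Amplitudes of Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    return [math.cos(theta / 2.0), math.sin(theta / 2.0)]


def angle_encode(pixels):
    """Build the n-qubit product state, one qubit per pixel.

    The first pixel maps to the most significant bit of the basis-state index.
    """
    state = [1.0]
    for x in pixels:
        theta = math.pi * x  # assumed rescaling of [0, 1] to [0, pi]
        qubit = ry_on_zero(theta)
        # Tensor product of the state built so far with the new qubit.
        state = [a * b for a in state for b in qubit]
    return state


# Three pixels: black (0.0), white (1.0), and mid-gray (0.5).
state = angle_encode([0.0, 1.0, 0.5])
total_probability = sum(a * a for a in state)
```

With these inputs the first qubit stays in |0〉, the second is rotated to |1〉, and the third ends in an equal superposition, so only |010〉 and |011〉 carry amplitude. The circuit uses one rotation per qubit, which is why angle encoding needs as many qubits as features.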

4.4. CNN and QCNN

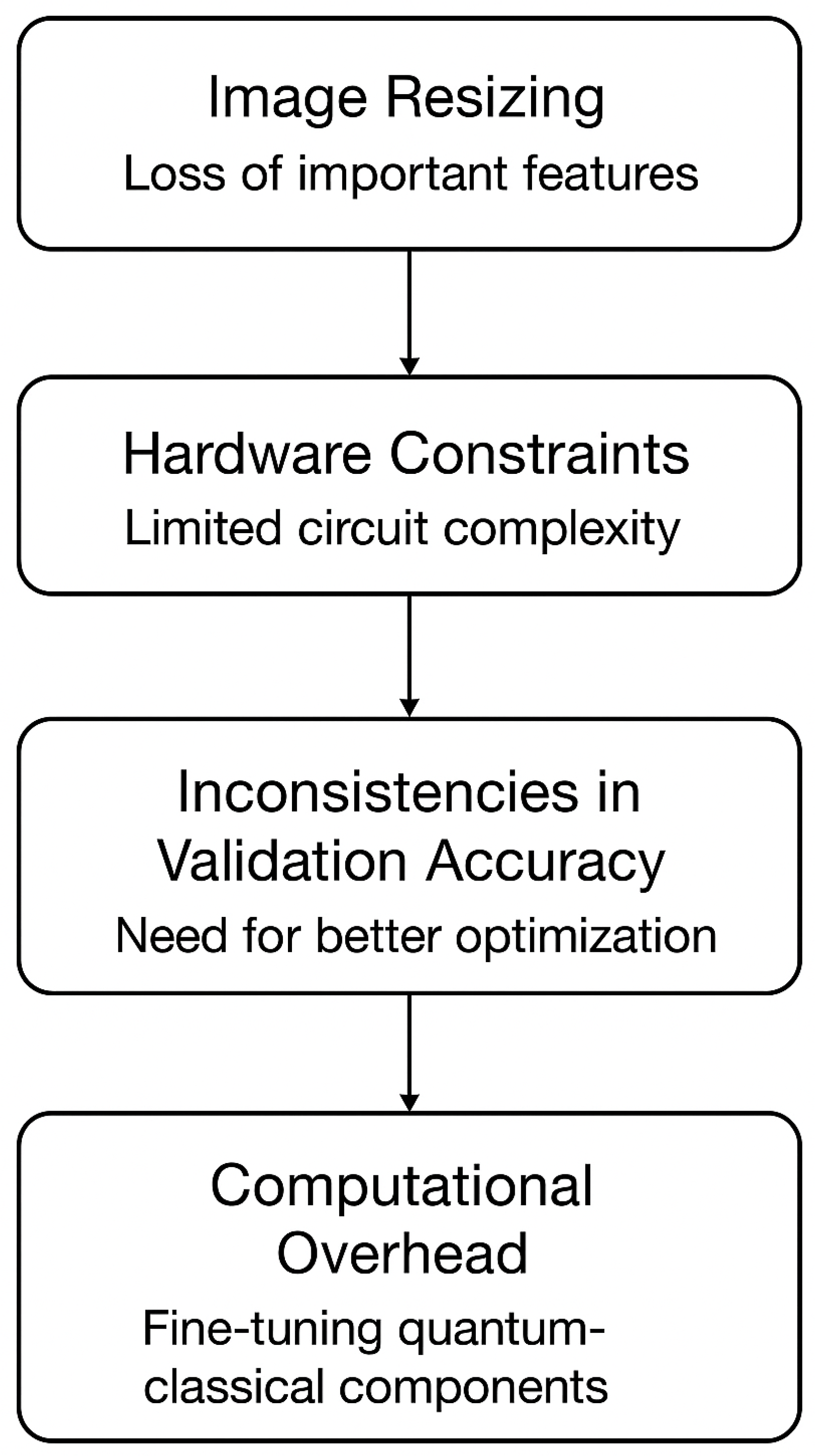

4.5. Computational Efficiency and Challenges in QCNN

5. Material and Methodology

5.1. Dataset Preparation

- 1.

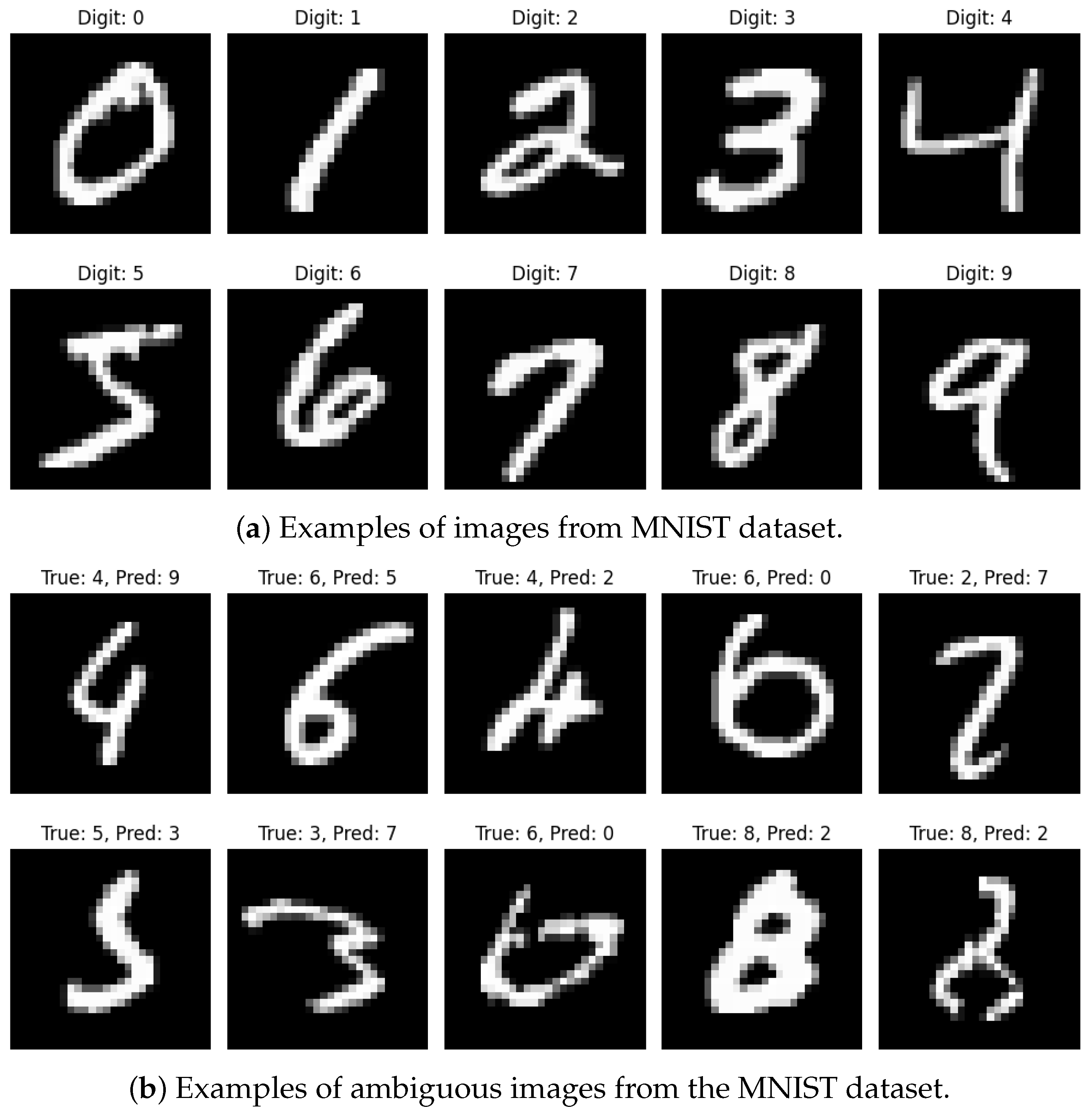

- MNISTThe Modified National Institute of Standards and Technology (MNIST) dataset is a widely recognized set of grayscale images showcasing handwritten digits spanning from 0 to 9. This dataset comprises a total of 70 K images, with 60 K allocated for model training and the remaining 10 K reserved for evaluation. Each image has a resolution of 28 × 28 pixels. The goal is to classify the digit in each image using a neural network. This dataset is widely used in machine learning; notably, PQCs have also been evaluated on this dataset to measure their performance in Quantum Machine Learning. Examples of images from this dataset are shown in Figure 3.

2. Preprocessing: After loading the MNIST dataset, an additional axis is added to the NumPy array. Initially, the training set has a shape of (60,000, 28, 28); it is reshaped to (60,000, 28, 28, 1) to match the input format required by CNNs, which accept input of the form (Batch Size, Height, Width, Channels), where the additional axis represents the grayscale channel.

   Next, the pixel values are normalized from the range [0, 255] to [0, 1], where 0 represents black and 1 represents white; this simplifies processing and learning. Because the task is binary classification, only the images and labels corresponding to the digits 0 and 1 are retained. Each selected image is then resized and flattened into a one-dimensional representation for quantum embedding.

3. Data Splitting: In total, the MNIST dataset contains 70,000 images, with 60,000 designated for training and 10,000 for testing. However, reducing the dataset size can speed up training and allow a quick evaluation of model performance. Data are generally split into training, validation, and testing sets. For our experiment, we worked with a subset of the dataset: 12,665 images were selected for training and validation, of which 80% were used for training and 20% for validation, and 2115 testing images were held out, untouched by the training/validation split.

   Although the MNIST dataset is widely used, it includes some images that are distorted or ambiguous, making them difficult even for humans to classify. Figure 3 shows examples of such challenging images. Nevertheless, our quantum model achieves over 97% accuracy in correctly identifying the digits, even in such cases.

4. Wine Dataset: The Wine dataset is a popular dataset in machine learning, originally gathered by researchers at the University of Camerino in Italy. It contains information about wines made from three different grape cultivars (varieties) grown in the same region. Each wine sample has 13 features, such as alcohol content, levels of various acids, minerals, and other chemical properties that describe the wine’s composition.

5. Preprocessing: The dataset includes 178 samples, each labeled as belonging to one of three classes (Class 0, Class 1, or Class 2), with each class representing a wine made from a different grape variety.

   In our quantum machine learning model, we use this dataset for a binary classification task: identifying whether a wine is from Class 0 (one specific grape type) or not (Class 1 or Class 2). Since our quantum model uses 8 qubits, we use only the first 8 features from the dataset, scaled appropriately for use as inputs to the quantum circuit. The model learns patterns from this reduced feature set and tries to correctly classify new wine samples.

6. Data Splitting: To train and evaluate the model, the data are split into three parts. First, 80% of the data (142 samples) are used for training and validation, while the remaining 20% (36 samples) are reserved for final testing. The 142 samples are then split again: 80% (113 samples) are used to train the model and 20% (29 samples) are set aside as a validation set to monitor performance during training. This allows us to check that the model not only fits the training data but also generalizes to new, unseen data.
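The preprocessing and splitting steps described above can be sketched as follows. This is a minimal illustration on toy values, not the authors' pipeline: the helper names are hypothetical, and rounding the held-out part up is an assumed convention, chosen because it reproduces the sample counts reported in the text.

```python
def normalize_pixels(pixels):
    """Rescale 8-bit grayscale values from [0, 255] to [0, 1]."""
    return [p / 255.0 for p in pixels]


def keep_binary_digits(images, labels, digits=(0, 1)):
    """Keep only the samples whose label is one of the two target digits."""
    return [(img, y) for img, y in zip(images, labels) if y in digits]


def first_features(sample, n_qubits=8):
    """Keep the first n_qubits features, one feature per qubit."""
    return sample[:n_qubits]


def split_counts(n_samples, holdout_percent=20):
    """Return (kept, held_out) sizes, rounding the held-out part up."""
    held_out = (n_samples * holdout_percent + 99) // 100
    return n_samples - held_out, held_out


# Toy stand-ins for real images (hypothetical values, not taken from MNIST).
toy_images = [[0, 255], [128, 64], [255, 255]]
toy_labels = [0, 7, 1]
binary = keep_binary_digits([normalize_pixels(i) for i in toy_images], toy_labels)

# A 13-feature Wine sample reduced to 8 inputs (placeholder feature values).
wine_inputs = first_features(list(range(13)))

mnist_train, mnist_val = split_counts(12665)        # 10132 and 2533
wine_trainval, wine_test = split_counts(178)        # 142 and 36
wine_train, wine_val = split_counts(wine_trainval)  # 113 and 29
```

The Wine counts match the 142/36 and 113/29 figures given above. The MNIST counts of 10,132 training and 2,533 validation images follow from applying the same 80/20 rule to the 12,665 selected images.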

5.2. Simulations

5.3. Framework of QCNN

- 1.

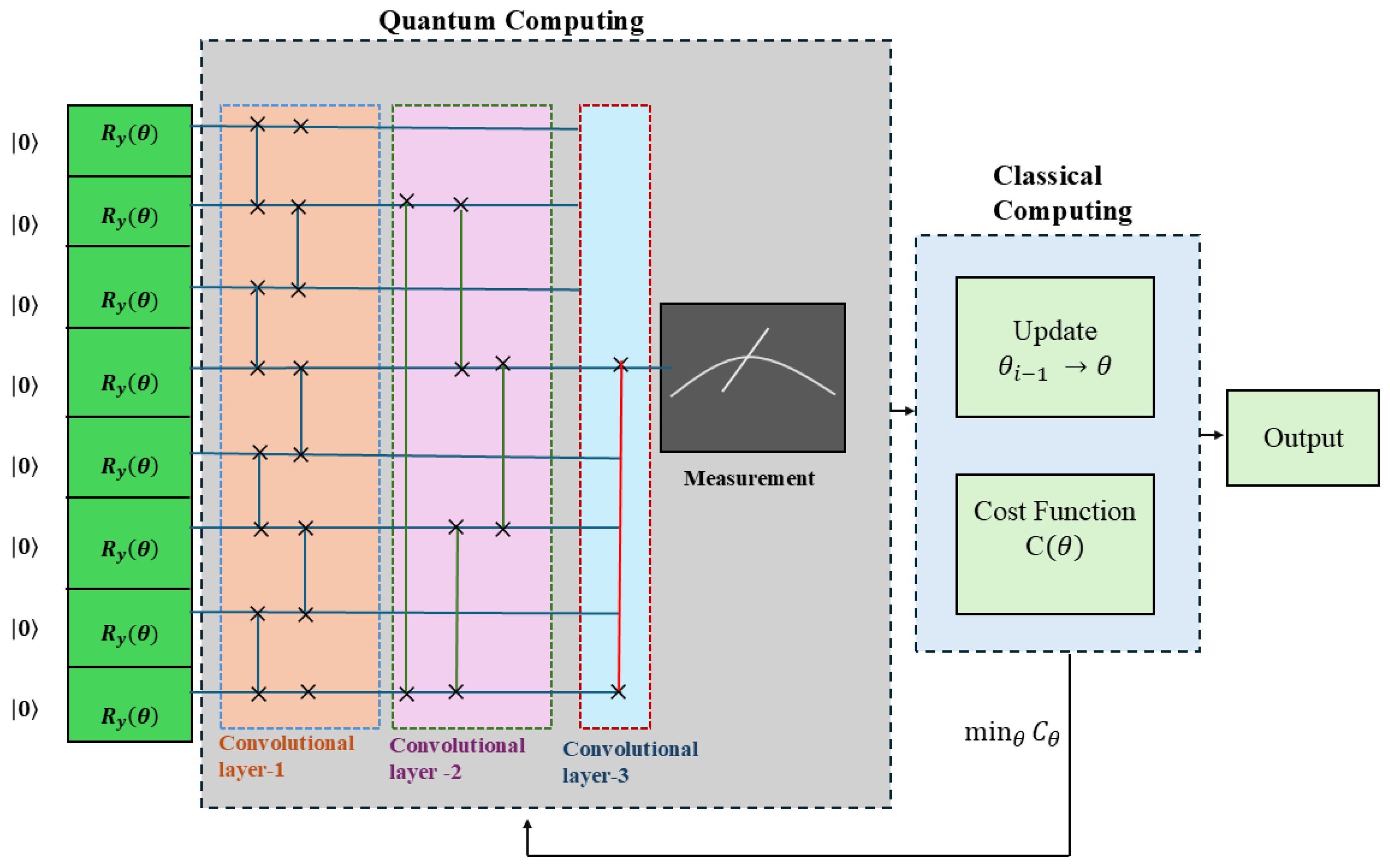

- Encoding and Convolution FilterIn this model, angle encoding is used for state preparation (shown in Figure 4 in green colored box), where classical data are transformed into a quantum state using rotations on 8 qubits. The Ry gate is used here because it applies rotations around the Y-axis of the Bloch sphere, which are easy to visualize and interpret. In variational quantum circuits, having a single rotation axis simplifies training and reduces the risk of overparameterization. Most quantum hardware and simulators like PennyLane natively support Ry gates with lower error rates than general U3 gates, making them a practical and reliable choice. Paired with CNOTs for entanglement, this structure supports efficient and interpretable quantum feature extraction while remaining scalable. This structure consists of three convolutional layers, each applying a quantum unitary operation to specific pairs of qubits. The first convolution layer applies unitary operation to adjacent qubits. The second convolution layer applies gates to long-range qubits, which leads to an increase in connectivity. The third convolution layer reduces the information to single qubit and applies to the initial qubit (Qubit 0) and the middle qubit (Qubit 4) to summarize extracted features. This is similar to the final fully connected layer in a classical CNN: it effectively merges information from different regions of the qubit, registering them into a single representation. QCNN ultimately measures a single qubit by computing the Pauli-Z expectation value of the middle qubit.

2. Cost Function: The ansatz’s variational parameters are optimized to reduce the cost function on the training dataset. In this research, we assess the performance of the QCNN architecture using the mean squared error (MSE) loss function.

   Mean Squared Error Loss Function: The output of our quantum circuit is not a probability in the traditional sense. It is the expectation value of a Pauli-Z observable, which ranges from −1 to +1. Since this output is continuous rather than probabilistic, MSE is a natural measure of how close the model’s output is to the target label (which we map accordingly).

   To formulate the cost function for training the QCNN, the original labels 0 and 1 are first mapped to +1 and −1, respectively, because a Pauli-Z measurement of a qubit can only yield the eigenvalues +1 or −1. Mathematically, this mapping is represented as

   $$\tilde{y}_i = 1 - 2y_i, \qquad (15)$$

   where $y_i \in \{0, 1\}$ is the original label of the $i$-th training example and $\tilde{y}_i \in \{+1, -1\}$ is the mapped label. The MSE cost function between the QCNN’s prediction and the actual class label is shown in Equation (16):

   $$C(\theta) = \frac{1}{N} \sum_{i=1}^{N} \left( \langle Z \rangle_i - \tilde{y}_i \right)^2. \qquad (16)$$

   Here $C(\theta)$ is the cost function that we want to minimize, $N$ is the number of training examples, and $\langle Z \rangle_i$ is the Pauli-Z expectation value of the output qubit for the $i$-th training example, i.e., the value predicted by the QCNN. The Pauli-Z expectation is given by $\langle Z \rangle = |\alpha|^2 - |\beta|^2$, where $\alpha$ is the amplitude of the measured qubit in the state |0〉 and $\beta$ is its amplitude in the state |1〉. Given the mapping in Equation (15), if $|\alpha|^2$ is large, the measurement is likely to be +1 (closer to the state |0〉), and if $|\beta|^2$ is large, the measurement is likely to be −1 (closer to the state |1〉). By minimizing the cost function, the QCNN adjusts its parameters such that, if the label is 0, the state is as close as possible to |0〉, meaning $|\alpha|^2$ is maximized; if the label is 1, the state is as close as possible to |1〉, meaning $|\beta|^2$ is maximized.

3. Training: To train this model, the Adam (Adaptive Moment Estimation) optimizer is employed with an initial learning rate of 0.01. A smaller learning rate results in slower but more stable training, whereas a higher value can cause instability. To enhance training efficiency, we implement a learning rate scheduler and use early stopping in Keras to mitigate overfitting. Training is set to a maximum of 100 epochs. Instead of using all training data at once, the model selects 25 random samples per training step. This is known as minibatch training, which improves efficiency and convergence.

   If the validation loss does not improve for three consecutive epochs, the learning rate is reduced by a factor of 0.1. The updated learning rate follows the equation

   $$\eta_{\text{new}} = 0.1 \times \eta_{\text{old}}.$$
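The cost function and the learning-rate rule described above can be sketched in plain Python. This is a simplified stand-in, not the authors' Keras setup: it assumes the MSE is averaged over the N training examples, and `reduce_on_plateau` is a hypothetical helper that only mimics the "reduce by 0.1 after three epochs without improvement" behaviour.

```python
def map_label(y):
    """Map class label 0 to +1 and class label 1 to -1."""
    return 1 - 2 * y


def pauli_z_expectation(alpha, beta):
    """<Z> = |alpha|^2 - |beta|^2 for an output qubit alpha|0> + beta|1>."""
    return abs(alpha) ** 2 - abs(beta) ** 2


def mse_cost(expectations, labels):
    """Mean squared error between <Z> values and the mapped labels."""
    targets = [map_label(y) for y in labels]
    squared = [(z - t) ** 2 for z, t in zip(expectations, targets)]
    return sum(squared) / len(squared)


def reduce_on_plateau(val_losses, lr=0.01, factor=0.1, patience=3):
    """Return the learning rate in effect after each epoch.

    The rate is multiplied by `factor` once the validation loss has failed
    to improve for `patience` consecutive epochs (simplified scheduler).
    """
    best = float("inf")
    stale = 0
    history = []
    for loss in val_losses:
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                lr *= factor
                stale = 0
        history.append(lr)
    return history


# Two predictions: close to |0> for label 0, close to |1> for label 1.
cost = mse_cost([0.9, -0.8], [0, 1])

# The loss stalls for three epochs (epochs 3 to 5), triggering one reduction.
lr_history = reduce_on_plateau([0.5, 0.4, 0.41, 0.42, 0.43, 0.3])
```

In this example the cost is about 0.025, and the learning rate drops from 0.01 to about 0.001 at the fifth epoch. A perfect prediction gives ⟨Z⟩ = +1 for label 0 and ⟨Z⟩ = −1 for label 1, which drives the cost to zero.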

5.4. Computational Cost Analysis

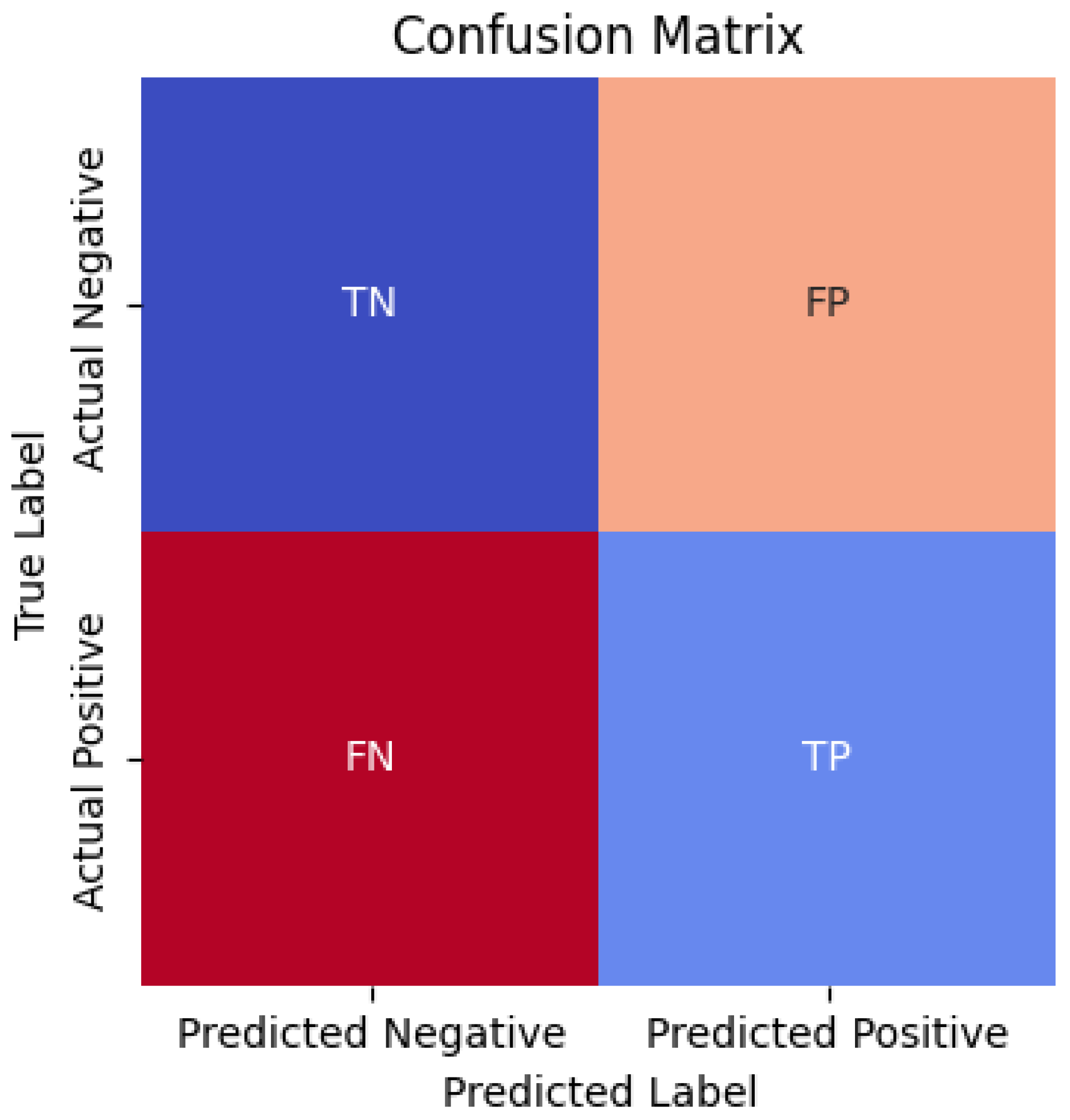

6. Performance Measures

7. Results and Discussion

1. MNIST

7.1. Performance

7.2. Accuracy and Loss

7.2.1. Accuracy Metrics

7.2.2. Loss Metrics

7.3. Principal Component Analysis Visualization

7.4. Confusion Matrix

2. Wine Dataset

8. Conclusions and Future Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

2. Zhou, L.; Cai, J.; Ding, S. The identification of ice floes and calculation of sea ice concentration based on a deep learning method. Remote Sens. 2023, 15, 2663.

3. Cai, J.; Ding, S.; Zhang, Q.; Liu, R.; Zeng, D.; Zhou, L. Broken ice circumferential crack estimation via image techniques. Ocean Eng. 2022, 259, 111735.

4. Xia, W.; Pu, L.; Zou, X.; Shilane, P.; Li, S.; Zhang, H.; Wang, X. The design of fast and lightweight resemblance detection for efficient post-deduplication delta compression. ACM Trans. Storage 2023, 19, 1–30.

5. Li, M.; Jia, T.; Wang, H.; Ma, B.; Lu, H.; Lin, S.; Cai, D.; Chen, D. Ao-detr: Anti-overlapping detr for X-ray prohibited items detection. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 12076–12090.

6. Zhou, Z.; Li, Z.; Zhou, W.; Chi, N.; Zhang, J.; Dai, Q. Resource-saving and high-robustness image sensing based on binary optical computing. Laser Photonics Rev. 2025, 19, 2400936.

7. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 23 September 2025).

8. Wang, W.; Yin, B.; Li, L.; Li, L.; Liu, H. A Low Light Image Enhancement Method Based on Dehazing Physical Model. Comput. Model. Eng. Sci. (CMES) 2025, 143, 1595.

9. Gan, X. GraphService: Topology-aware constructor for large-scale graph applications. ACM Trans. Archit. Code Optim. 2025, 22, 1–24.

10. Gan, X.; Li, T.; Gong, C.; Li, D.; Dong, D.; Liu, J.; Lu, K. GraphCSR: A Degree-Equalized CSR Format for Large-scale Graph Processing. Proc. VLDB Endow. 2025, 18, 4255–4268.

11. Liu, J.; Jiang, G.; Chu, C.; Li, Y.; Wang, Z.; Hu, S. A formal model for multiagent Q-learning on graphs. Sci. China Inf. Sci. 2025, 68, 192206.

12. Shi, J.; Liu, C.; Liu, J. Hypergraph-based model for modelling multi-agent q-learning dynamics in public goods games. IEEE Trans. Netw. Sci. Eng. 2024, 11, 6169–6179.

13. Zhang, Z.W.; Liu, Z.G.; Martin, A.; Zhou, K. BSC: Belief shift clustering. IEEE Trans. Syst. Man Cybern. Syst. 2022, 53, 1748–1760.

14. Yuan, X.; Sun, J.; Liu, J.; Zhao, Q.; Zhou, Y. Quantum simulation with hybrid tensor networks. Phys. Rev. Lett. 2021, 127, 040501.

15. Zuo, C.; Zhang, X.; Zhao, G.; Yan, L. PCR: A parallel convolution residual network for traffic flow prediction. IEEE Trans. Emerg. Top. Comput. Intell. 2025, 9, 3072–3083.

16. Zhu, J.; Wang, X.; Cao, G.; Xu, L.; Cao, Y. Quantum Interval Neural Network for Uncertain Structural Static Analysis. Int. J. Mech. Sci. 2025, 303, 110646.

17. Ruan, Y.; Xue, X.; Shen, Y. Quantum image processing: Opportunities and challenges. Math. Probl. Eng. 2021, 2021, 6671613.

18. Xu, L.; Wang, X.; Wang, Z.; Cao, G. Hybrid quantum genetic algorithm for structural damage identification. Comput. Methods Appl. Mech. Eng. 2025, 438, 117866.

19. Wei, S.; Chen, Y.; Zhou, Z.; Long, G. A quantum convolutional neural network on NISQ devices. AAPPS Bull. 2022, 32, 2.

20. Zuo, Y.; Hu, Y.; Xu, Y.; Wang, Z.; Fang, Y.; Yan, J.; Jiang, W.; Peng, Y.; Huang, Y. Learning Guided Implicit Depth Function with Scale-aware Feature Fusion. IEEE Trans. Image Process. 2025, 34, 3309–3322.

21. Asif, H.; Basit, A.; Innan, N.; Kashif, M.; Marchisio, A.; Shafique, M. PennyLang: Pioneering LLM-Based Quantum Code Generation with a Novel PennyLane-Centric Dataset. arXiv 2025, arXiv:2503.02497.

22. Henderson, M.; Shakya, S.; Pradhan, S.; Cook, T. Quanvolutional neural networks: Powering image recognition with quantum circuits. Quantum Mach. Intell. 2020, 2, 2.

23. Yousif, M.; Al-Khateeb, B.; Garcia-Zapirain, B. A new quantum circuits of quantum convolutional neural network for X-ray images classification. IEEE Access 2024, 12, 65660–65671.

24. Reka, S.S.; Karthikeyan, H.L.; Shakil, A.J.; Venugopal, P.; Muniraj, M. Exploring quantum machine learning for enhanced skin lesion classification: A comparative study of implementation methods. IEEE Access 2024, 12, 104568–104584.

25. Shi, S.; Wang, Z.; Li, J.; Li, Y.; Shang, R.; Zhong, G.; Gu, Y. Quantum convolutional neural networks for multiclass image classification. Quantum Inf. Process. 2024, 23, 189.

26. Tomar, M.; Prajapat, S.; Kumar, D.; Kumar, P.; Kumar, R.; Vasilakos, A.V. Exploring the Role of Material Science in Advancing Quantum Machine Learning: A Scientometric Study. Mathematics 2025, 13, 958.

27. Fukushima, K. Neocognitron: A self-organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 1980, 36, 193–202.

28. LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

29. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

30. Hinton, G.E.; Srivastava, N.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.R. Improving neural networks by preventing co-adaptation of feature detectors. arXiv 2012, arXiv:1207.0580.

31. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

32. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

34. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

35. Yang, Y.; Hospedales, T.M. Deep neural networks for sketch recognition. arXiv 2015, arXiv:1501.07873.

36. Ballester, P.; Araujo, R. On the performance of GoogLeNet and AlexNet applied to sketches. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

37. Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Li, F.-F. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

38. Sharma, N.; Jain, V.; Mishra, A. An analysis of convolutional neural networks for image classification. Procedia Comput. Sci. 2018, 132, 377–384.

39. Jmour, N.; Zayen, S.; Abdelkrim, A. Convolutional neural networks for image classification. In Proceedings of the 2018 International Conference on Advanced Systems and Electric Technologies (IC_ASET), Hammamet, Tunisia, 22–25 March 2018; pp. 397–402.

40. Beohar, D.; Rasool, A. Handwritten digit recognition of MNIST dataset using deep learning state-of-the-art artificial neural network (ANN) and convolutional neural network (CNN). In Proceedings of the 2021 International Conference on Emerging Smart Computing and Informatics (ESCI), Pune, India, 5–7 March 2021; pp. 542–548.

41. Rebentrost, P.; Mohseni, M.; Lloyd, S. Quantum support vector machine for big data classification. Phys. Rev. Lett. 2014, 113, 130503.

42. Ostaszewski, M.; Sadowski, P.; Gawron, P. Quantum image classification using principal component analysis. arXiv 2015, arXiv:1504.00580.

43. Ruan, Y.; Xue, X.; Liu, H.; Tan, J.; Li, X. Quantum algorithm for k-nearest neighbors classification based on the metric of Hamming distance. Int. J. Theor. Phys. 2017, 56, 3496–3507.

44. Cong, I.; Choi, S.; Lukin, M.D. Quantum convolutional neural networks. Nat. Phys. 2019, 15, 1273–1278.

45. Kerenidis, I.; Landman, J.; Prakash, A. Quantum algorithms for deep convolutional neural networks. arXiv 2019, arXiv:1911.01117.

46. Li, Y.; Zhou, R.G.; Xu, R.; Luo, J.; Hu, W. A quantum deep convolutional neural network for image recognition. Quantum Sci. Technol. 2020, 5, 044003.

47. Hur, T.; Kim, L.; Park, D.K. Quantum convolutional neural network for classical data classification. Quantum Mach. Intell. 2022, 4, 3.

48. Yousif, M.; Al-Khateeb, B. Quantum Convolutional Neural Network for Image Classification. Fusion Pract. Appl. 2024, 15, 655–667.

49. Hassan, E.; Hossain, M.S.; Saber, A.; Elmougy, S.; Ghoneim, A.; Muhammad, G. A quantum convolutional network and ResNet (50)-based classification architecture for the MNIST medical dataset. Biomed. Signal Process. Control 2024, 87, 105560.

50. Sayed, R.; Azmi, H.; Shawkey, H.; Khalil, A.H.; Refky, M. A systematic literature review on binary neural networks. IEEE Access 2023, 11, 27546–27578.

51. Yao, P.; Wu, H.; Gao, B.; Tang, J.; Zhang, Q.; Zhang, W.; Yang, J.J.; Qian, H. Fully hardware-implemented memristor convolutional neural network. Nature 2020, 577, 641–646.

52. Van Pham, K.; Van Nguyen, T.; Tran, S.B.; Nam, H.; Lee, M.J.; Choi, B.J.; Truong, S.N.; Min, K. Memristor binarized neural networks. J. Semicond. Technol. Sci. 2018, 18, 568–588.

53. Pham, K.V.; Tran, S.B.; Nguyen, T.V.; Min, K.S. Asymmetrical training scheme of binary-memristor-crossbar-based neural networks for energy-efficient edge-computing nanoscale systems. Micromachines 2019, 10, 141.

54. Huang, M.; Zhao, G.; Wang, X.; Zhang, W.; Coquet, P.; Tay, B.K.; Zhong, G.; Li, J. Global-gate controlled one-transistor one-digital-memristor structure for low-bit neural network. IEEE Electron Device Lett. 2020, 42, 106–109.

55. Wu, H. CNN-Based Recognition of Handwritten Digits in MNIST Database. Research School of Computer Science, The Australian National University, Canberra, Australia. 2018. Available online: https://users.cecs.anu.edu.au/~Tom.Gedeon/conf/ABCs2018/paper/ABCs2018_paper_104.pdf (accessed on 23 September 2025).

56. Cohen, G.; Afshar, S.; Tapson, J.; Van Schaik, A. EMNIST: Extending MNIST to handwritten letters. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 2921–2926.

57. Gope, B.; Pande, S.; Karale, N.; Dharmale, S.; Umekar, P. Handwritten digits identification using MNIST database via machine learning models. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1022, 012108.

58. Qian, Y.; Wang, X.; Du, Y.; Wu, X.; Tao, D. The dilemma of quantum neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 5603–5615.

59. Nielsen, M.A.; Chuang, I.L. Quantum Computation and Quantum Information; Cambridge University Press: Cambridge, UK, 2010.

60. Oh, S.; Choi, J.; Kim, J. A tutorial on quantum convolutional neural networks (QCNN). In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 16–18 October 2020; pp. 236–239.

61. Choi, J.; Oh, S.; Kim, J. Quantum approximation for wireless scheduling. Appl. Sci. 2020, 10, 7116.

62. Abdulrida, R.; A-Monem, M.E.; Jaber, A.M. Quantum image watermarking based on wavelet and geometric transformation. Iraqi J. Sci. 2020, 61, 153–163.

63. Khan, A.A.; Ahmad, A.; Waseem, M.; Liang, P.; Fahmideh, M.; Mikkonen, T.; Abrahamsson, P. Software architecture for quantum computing systems-A systematic review. J. Syst. Softw. 2023, 201, 111682.

64. Kwak, Y.; Yun, W.J.; Jung, S.; Kim, J. Quantum neural networks: Concepts, applications, and challenges. In Proceedings of the 2021 Twelfth International Conference on Ubiquitous and Future Networks (ICUFN), Jeju Island, Republic of Korea, 17–20 August 2021; pp. 413–416.

65. Fisher, M.P.; Khemani, V.; Nahum, A.; Vijay, S. Random quantum circuits. Annu. Rev. Condens. Matter Phys. 2023, 14, 335–379.

66. Zheng, J.; Gao, Q.; Lü, J.; Ogorzałek, M.; Pan, Y.; Lü, Y. Design of a quantum convolutional neural network on quantum circuits. J. Frankl. Inst. 2023, 360, 13761–13777.

67. Huang, R.; Tan, X.; Xu, Q. Learning to learn variational quantum algorithm. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 8430–8440.

68. LaRose, R.; Coyle, B. Robust data encodings for quantum classifiers. Phys. Rev. A 2020, 102, 032420.

69. Kosaraju, N.; Sankepally, S.R.; Mallikharjuna Rao, K. Categorical data: Need, encoding, selection of encoding method and its emergence in machine learning models-A practical review study on heart disease prediction dataset using pearson correlation. In Proceedings of the International Conference on Data Science and Applications: ICDSA 2022, Kolkata, India, 26–27 March 2022; Volume 1, pp. 369–382.

70. Yao, F.; Zhang, H.; Gong, Y. Difsg2-ccl: Image reconstruction based on special optical properties of water body. IEEE Photonics Technol. Lett. 2024, 36, 1417–1420.

71. Hu, C.; Dong, B.; Shao, H.; Zhang, J.; Wang, Y. Toward purifying defect feature for multilabel sewer defect classification. IEEE Trans. Instrum. Meas. 2023, 72, 1–11.

| Reference | Technique Used | Highlights | Limitations |
|---|---|---|---|
| Fukushima (1980) [27] | Neocognitron | Introduced hierarchical layers for feature extraction, laying the foundation for CNNs. | Did not fully address scalability and complex dataset generalization. |
| LeCun et al. (1989) [28] | CNN | Essential for image classification tasks using backpropagation. | Limited computational power restricted its adoption. |
| LeCun et al. (1998) [29] | LeNet-5 | Designed for handwritten ZIP code recognition on MNIST; incorporated convolution, pooling, and fully connected layers. | Restricted to digit classification; lacked generalization to broader datasets. |
| Krizhevsky et al. (2012) [30] | AlexNet | Introduced ReLU, dropout, and GPU training; won ILSVRC 2012. | Large parameter count; prone to overfitting on smaller datasets. |
| Simonyan & Zisserman (2014) [31] | VGG | Used deeper architectures with smaller filters, improving accuracy. | High memory and computational costs. |
| Szegedy et al. (2015) [32] | GoogLeNet | Introduced Inception modules for efficient feature extraction. | Complex design; less flexible for modifications. |
| He et al. (2016) [33] | ResNet | Introduced residual learning to solve vanishing gradient problem. | Overhead in very deep networks; risk of overfitting. |
| Huang et al. (2017) [34] | DenseNet | Dense connections improved gradient flow and parameter efficiency. | Increased memory requirements. |
| Yang & Hospedales (2015) [35] | CNNs vs. Humans | CNNs achieved 74.9% accuracy vs. humans’ 73.1%, surpassing human performance. | Limited to specific datasets; generalization uncertain. |
| Karpathy et al. (2014) [37] | CNN on YouTube videos | Trained on 1M videos, extracted robust features, achieved 63.3% accuracy. | Weakly labeled data; noisy dataset. |
| Sharma et al. (2018) [38] | CNN (CIFAR-10/100) | Performance analysis on standard datasets. | Dataset-specific evaluation; lacks generalization insights. |
| Jmour et al. (2018) [39] | CNN (Traffic Signs) | Achieved 93.3% accuracy on ImageNet with batch size 10. | Smaller batch size may affect scalability. |
| Beohar & Rasool (2021) [40] | ANN vs. CNN (MNIST) | ANN: 1.31% baseline error; CNN: 0.91% error (60,000 images, 10 epochs, batch size 200). | Limited to handwritten digits; not tested on more complex datasets. |

| Reference | Technique Used | Highlights |
|---|---|---|
| Rebentrost et al. [41] | Quantum Support Vector Machine (QSVM) | Proposed QSVM for classification tasks, demonstrating the potential of quantum speedup in supervised learning. |
| Ostaszewski et al. [42] | Quantum Principal Component Analysis (QPCA) | Introduced a quantum-based algorithm applying PCA for image pattern recognition. |
| Ruan et al. [43] | Quantum K-Nearest Neighbors (QKNN) | Developed a quantum algorithm inspired by classical KNN, efficiently computing Hamming distance between test samples and training vectors. |

| Reference | Focus | Highlights |
|---|---|---|
| Cong et al. (2019) [44] | Foundational QCNN | Proposed QCNN inspired by classical CNNs; introduced hierarchical PQCs, quantum downsampling, and entanglement. Effective in classifying quantum states and solving many-body problems. |
| Henderson et al. (2020) [22] | Quanvolutional Layer | Developed QCNN for MNIST classification. Introduced quanvolutional layer, showing quantum circuits enhance feature extraction and classification accuracy. |
| Kerenidis et al. (2019) [45] | Deep QCNN with speedup | Proposed quantum approach to deep CNNs with non-linear activations and pooling. Numerical simulations on MNIST showed practical viability and speed improvements. |
| Li et al. (2020) [46] | Quantum Image Recognition | Applied hierarchical quantum renormalization and fractal-based scaling for image recognition. Demonstrated efficiency and accuracy gains in QCNN-based models. |
| Hur et al. (2022) [47] | Quantum Encoding & Optimization | Proposed QCNN with two-qubit interactions. Compared models based on circuit structures, encoding, preprocessing, cost functions, and optimization. Tested on MNIST and Fashion-MNIST. |
| Yousif et al. (2024) [48] | Enhanced QCNNs | Showed QCNNs overcome CNN limitations in efficiency and generalization. Used quantum circuits for convolution/pooling to handle high-dimensional data effectively. |
| Hassan et al. (2024) [49] | Biomedical QCNN | Proposed hybrid QCNN + ResNet50 model for medical image classification, achieving improved accuracy and highlighting QCNN’s potential in healthcare. |

| Reference | Model Used | Dataset | Accuracy (%) |
|---|---|---|---|
| [51] | CNN | MNIST | 96 |
| [52] | BNN | MNIST | 96.1 |
| [53] | Binary-Memristor Crossbar | MNIST | 91.7 |
| [54] | 1T1DM Architecture | MNIST | 89.34 |
| [55] | CNN (LeNet-5) | MNIST | 94.0 |
| [56] | CNN | MNIST | 96.5 |
| [57] | SVM | MNIST | 95.88 |
| [58] | QNN | Wine | 75–85 |



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Prajapat, S.; Tomar, M.; Kumar, P.; Kumar, R.; Vasilakos, A.V. Quantum Computing Meets Deep Learning: A QCNN Model for Accurate and Efficient Image Classification. Mathematics 2025, 13, 3148. https://doi.org/10.3390/math13193148
