1. Introduction

Uncertainty reasoning is a powerful tool for representing, quantifying, and manipulating incomplete, imprecise, ambiguous, or conflicting information. Several theories have been proposed to model different uncertainty problems, including probability theory [1], fuzzy sets [2], rough sets [3], D-S evidence theory [4,5], and Bayesian inference [6,7]. These theories have been applied in many scenarios, including time-series analysis [8], decision-making [9,10], deep learning [11,12], game theory [13], and disaster reduction [14].

D-S evidence theory has attracted significant attention for its effectiveness in managing the discordance and non-specificity of evidence [15]. Dempster’s Rule of Combination (DRC) resolves conflict by discarding the mass assigned to contradictory combinations and renormalizing the remainder. However, this approach may produce counter-intuitive results when the evidence is highly conflicting [16,17,18]. The methods proposed to address this issue generally fall into two categories: the first modifies the combination rule, while the second pre-processes the evidence before fusion.

Contributions of the first type include the unnormalized combination rule of the transferable belief model [19], the disjunctive rule [20], and alternative normalization schemes [21]. However, uncertainty can also arise from a lack of knowledge, particularly when the sources of evidence are unreliable or corrupted. Modifying the combination rule alone cannot fully rectify this situation, because counter-intuitive results often originate from the evidence sources rather than from the fusion mechanism alone [22]. As a result, many researchers have shifted their focus to the second type of methods, which pre-process the evidence to reduce conflict before fusion. Representative methods in this category include simple averaging of basic belief assignments (BBAs) [23] and weighted averaging of BBAs [24] based on the Jousselme distance [25]. Building on this direction, a key challenge is to design measures that rest on a clear mathematical foundation while yielding more reliable credibility assessments for weighted-average fusion. In terms of the mathematical tools employed, these methods can be broadly classified into three categories. The first is belief-entropy-based weighting [26,27,28], which evaluates the uncertainty associated with a single piece of evidence. The second is divergence-based weighting [24,29], which quantifies the informational difference between pairs of evidence. The third comprises hybrid approaches that integrate belief-entropy-based and divergence-based weighting to enhance credibility assessment [30,31,32].

The essence of handling conflicting evidence is to identify one or more unreliable sources of evidence. Research on belief entropy mainly focuses on depicting discordance and non-specificity [33,34,35], that is, on modeling the amount of information required to reduce uncertainty to certainty. Because belief entropy characterizes the uncertainty of a single BBA rather than its disagreement with other BBAs, divergence-based methods, which directly quantify the differences between pairs of BBAs, are better suited to calculating credibility. A representative divergence-based method is the Belief Jensen–Shannon divergence (BJS) [36], the first extension of the Jensen–Shannon divergence [37] to D-S evidence theory. Following the paradigm established by BJS, subsequent works often focus on mining deeper semantic relationships between pairs of evidence to enhance credibility assessment. Some works model the set-structural correlations among all focal subsets to better characterize their correlation within BBAs [29,38,39,40]. Others introduce fractal-based divergences to separate similar and conflicting evidence across scales [41,42]. A third line quantifies a BJS-generalized divergence among BBAs and develops a GEJS-weighted fusion method [43]. A fourth line extends belief divergence using quantum-theoretic tools [44,45,46]. Methods built on these mathematical foundations have generally performed well in pattern classification tasks. They can be regarded as generalizations of classical probabilistic methods to D-S evidence theory and maintain strong links to information-theoretic principles.

Most existing methods primarily evaluate either the differences between pairs of BBAs or the divergence among multi-source evidence [43]. However, the deeper mathematical and informational relationships among the entire set of BBAs, viewed from a broader perspective, remain poorly understood. In practice, some BBAs show high similarity, reflecting a consensus, while others differ significantly, indicating distinct belief groups. Determining the credibility of a BBA solely from the evaluations of all other BBAs, or solely from pairwise comparisons, overlooks the valuable information that consensus provides at the group level. By grouping BBAs with similar belief structures into clusters and assigning those with dissimilar structures to separate clusters [47,48], we can better interpret conflicts among BBAs. This approach allows fusion outcomes to be optimized by leveraging both intra-cluster and inter-cluster information, leading to more transparent decision-making. This challenge is especially critical in real-world applications such as multi-sensor target recognition, where a single conflicting report can distort the consensus among multiple sources [49].

Therefore, we introduce a novel cluster-level information fusion framework to fill the gap in modeling group-level consensus among BBAs, shifting the analytical perspective from traditional BBA-to-BBA comparisons to a more holistic BBAs-to-BBAs view. A key contribution is a new cluster–cluster divergence measure that captures both the mass distribution and the structural differences between evidence groups. This measure is integrated into a reward-based greedy evidence assignment rule that dynamically assigns new evidence so as to optimize inter-cluster separation and intra-cluster consistency. Validation on benchmark pattern classification tasks shows that the proposed method outperforms traditional D-S evidence theory methods, demonstrating the effectiveness of its cluster-level perspective.

The rest of this paper is organized as follows:

Section 2 introduces foundational theories.

Section 3 proposes our cluster-level information fusion framework.

Section 4 illustrates the method with numerical examples.

Section 5 validates the method on classification tasks.

Section 6 concludes the paper.

2. Preliminaries

This section lays the theoretical groundwork for our proposed framework. We begin by reviewing the fundamentals of D-S evidence theory, which forms the basis for representing and combining uncertain information. We then introduce fractal theory, a concept that we will later leverage to construct representative centroids for evidence clusters. Finally, we summarize several key divergence measures from information theory, as these will be essential for quantifying the dissimilarity between evidence clusters.

2.1. D-S Evidence Theory

D-S evidence theory [4,5] is a mathematical framework for representing uncertain and ambiguous information. It allows belief to be allocated to a set of hypotheses rather than only to a single hypothesis, and is therefore considered an extension of traditional probability theory. Key concepts of D-S evidence theory are outlined below.

Definition 1 (Frame of Discernment). Let Θ be a set of N mutually exclusive and exhaustive non-empty hypotheses, called a frame of discernment, denoted by

$$\Theta = \{\theta_1, \theta_2, \dots, \theta_N\}.$$

The power set of Θ, denoted as $2^{\Theta}$, consists of all of its subsets:

$$2^{\Theta} = \big\{\emptyset, \{\theta_1\}, \dots, \{\theta_N\}, \{\theta_1, \theta_2\}, \dots, \Theta\big\},$$

where the empty set is denoted by ∅. Sets containing only one element are called singleton sets, and a set containing multiple elements is called a multi-element set. All of the above sets are elements of $2^{\Theta}$.

Definition 2 (Basic Belief Assignment). A basic belief assignment (also called a mass function) is a function $m: 2^{\Theta} \to [0, 1]$ satisfying

$$m(\emptyset) = 0 \quad \text{and} \quad \sum_{A \subseteq \Theta} m(A) = 1,$$

where each set A with $m(A) > 0$ is called a focal element. A collection of n basic belief assignments is denoted by

$$\mathcal{M} = \{m_1, m_2, \dots, m_n\}.$$

Definition 3 (Dempster’s Rule of Combination). Dempster’s Rule of Combination fuses basic belief assignments from multiple independent information sources into a single consensus BBA. Given two basic belief assignments $m_1$ and $m_2$ defined on the same Θ, the combined mass function $m = m_1 \oplus m_2$ is defined as follows:

$$m(A) = \begin{cases} \dfrac{1}{1 - K} \displaystyle\sum_{B \cap C = A} m_1(B)\, m_2(C), & A \neq \emptyset, \\[2mm] 0, & A = \emptyset, \end{cases}$$

where the conflict coefficient

$$K = \sum_{B \cap C = \emptyset} m_1(B)\, m_2(C)$$

measures the degree of conflict between the two BBAs; the rule is defined only when $K < 1$. The information fusion problem can be regarded as recursively combining n basic belief assignments into a new basic belief assignment using Dempster’s Rule of Combination:

$$m = m_1 \oplus m_2 \oplus \cdots \oplus m_n = \big(\cdots((m_1 \oplus m_2) \oplus m_3) \oplus \cdots\big) \oplus m_n.$$

Dempster’s Rule of Combination is a commonly used mechanism in evidential reasoning. However, it can produce counter-intuitive results when applied to highly conflicting evidence; this limitation motivates the development of our cluster-level information fusion framework.
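As a minimal sketch of Definition 3 (our own illustration, not code from this work), the following Python represents a BBA as a dictionary from frozenset focal elements to masses and implements Dempster’s rule together with its left-fold extension to n sources. The usage lines reproduce Zadeh’s well-known counterexample, in which two sources that each give C only 0.1 produce a fused BBA that is certain of C, with K = 0.99.

```python
from functools import reduce
from itertools import product

# A BBA maps frozenset focal elements to masses, e.g. {frozenset({"A"}): 0.9, ...}.


def dempster_combine(m1, m2):
    """Combine two BBAs with Dempster's rule; return (fused BBA, conflict coefficient K)."""
    joint = {}
    conflict = 0.0
    for (b, mb), (c, mc) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            joint[inter] = joint.get(inter, 0.0) + mb * mc
        else:
            conflict += mb * mc  # product mass that would fall on the empty set
    if 1.0 - conflict <= 1e-12:
        raise ValueError("K = 1: totally conflicting BBAs cannot be combined")
    fused = {a: v / (1.0 - conflict) for a, v in joint.items()}
    return fused, conflict


def combine_all(bbas):
    """Recursive combination ((m1 (+) m2) (+) m3) ... (+) mn, with (+) Dempster's rule."""
    return reduce(lambda acc, m: dempster_combine(acc, m)[0], bbas[1:], bbas[0])


# Zadeh's example: both sources give C only 0.1, yet the fused BBA is certain of C.
A, B, C = frozenset({"A"}), frozenset({"B"}), frozenset({"C"})
fused, k = dempster_combine({A: 0.9, C: 0.1}, {B: 0.9, C: 0.1})
print(round(k, 4), {"".join(sorted(s)): round(v, 4) for s, v in fused.items()})
# → 0.99 {'C': 1.0}
```

The left fold mirrors the recursive form of the rule; because Dempster’s rule is commutative and associative, the order of the sources does not change the result.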

2.2. Fractal

Fractal theory offers a mathematical framework for describing objects that exhibit statistical self-similarity, a property whereby their structural patterns are replicated across magnification scales [50].

The generalization of fractal theory to D-S evidence theory formalizes belief refinement as a self-similar process of unit-time splitting. This process uncovers the hierarchical structure of a piece of evidence by iteratively redistributing the mass of each multi-element focal element to its non-empty subsets.

In uncertainty representation, the Maximum Deng Entropy Separation Rule (MDESR) [51], based on Deng entropy (also called belief entropy) [52,53], recursively separates a mass function in a way that maximizes the Deng entropy at each step. It guarantees that the information volume [54] strictly increases and converges to a stable value during the iterations. Previous work [54] has investigated how to maximize the Deng entropy of the mass function after each step of fractalization and derived its analytical optimal solution: the maximum Deng entropy.
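The analytical solution mentioned above can be checked numerically. The sketch below is our own illustration using the standard Deng entropy $E_d(m) = -\sum_{A} m(A)\log_2 \frac{m(A)}{2^{|A|}-1}$: it builds the BBA with $m(A) \propto 2^{|A|} - 1$ over all non-empty subsets and confirms that its entropy equals $\log_2(3^N - 2^N)$, the value obtained by summing $2^{|A|} - 1$ over every non-empty subset of an N-element frame.

```python
import math
from itertools import combinations


def nonempty_subsets(frame):
    """All non-empty subsets of the frame of discernment, as frozensets."""
    items = sorted(frame)
    return [frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)]


def deng_entropy(m):
    """Deng entropy: -sum m(A) log2(m(A) / (2^|A| - 1)), skipping zero masses."""
    return -sum(v * math.log2(v / (2 ** len(a) - 1)) for a, v in m.items() if v > 0)


def max_deng_bba(frame):
    """BBA with m(A) proportional to 2^|A| - 1 on every non-empty subset."""
    subsets = nonempty_subsets(frame)
    total = sum(2 ** len(a) - 1 for a in subsets)  # equals 3^N - 2^N
    return {a: (2 ** len(a) - 1) / total for a in subsets}


for n in range(2, 6):
    frame = [f"t{i}" for i in range(n)]
    print(n, round(deng_entropy(max_deng_bba(frame)), 4), round(math.log2(3 ** n - 2 ** n), 4))
```

Each row prints the same value twice: the entropy of the constructed BBA and the closed form $\log_2(3^N - 2^N)$.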

In our work, this fractal-based maximum entropy principle is instrumental in defining the centroid of an evidence cluster, ensuring that the centroid evolves in the most unbiased manner as new evidence is incorporated, as detailed in Section 3.2.

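Definition 4 (Maximum Deng Entropy). The Deng entropy of a BBA m is

$$E_d(m) = -\sum_{A \subseteq \Theta,\ m(A) > 0} m(A) \log_2 \frac{m(A)}{2^{|A|} - 1}.$$

When the BBA is set to

$$m(A) = \frac{2^{|A|} - 1}{\sum_{B \subseteq \Theta,\ B \neq \emptyset} \left(2^{|B|} - 1\right)}, \qquad A \subseteq \Theta,\ A \neq \emptyset,$$

the Deng entropy reaches its maximum value, which is called the information volume. Therefore, the maximum Deng entropy can be expressed as follows:

$$E_d^{\max} = \log_2 \sum_{A \subseteq \Theta,\ A \neq \emptyset} \left(2^{|A|} - 1\right) = \log_2\left(3^N - 2^N\right).$$

2.3. Divergence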

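Definition 5 (Kullback–Leibler Divergence). In information theory, the Kullback–Leibler divergence [55] measures the discrepancy of one probability distribution from another. For discrete distributions P and Q on the same sample space $\mathcal{X}$, it is defined as follows:

$$D_{KL}(P \,\|\, Q) = \sum_{x \in \mathcal{X}} P(x) \log \frac{P(x)}{Q(x)}.$$

Definition 6 (Jensen–Shannon Divergence). The Jensen–Shannon divergence [37], which has its roots in earlier concepts such as the "increment of entropy" for measuring distances between random graphs [56], overcomes the asymmetry of the Kullback–Leibler divergence by taking the average distribution $M = \frac{1}{2}(P + Q)$ and defining

$$JS(P, Q) = \frac{1}{2} D_{KL}(P \,\|\, M) + \frac{1}{2} D_{KL}(Q \,\|\, M),$$

where $JS(P, Q)$ is always finite and symmetric, and its square root is a metric.

Definition 7 (Belief Jensen–Shannon Divergence). The Belief Jensen–Shannon divergence is a generalization of the Jensen–Shannon divergence. Consider two BBAs, $m_1$ and $m_2$, defined on Θ. The Belief Jensen–Shannon divergence [36] is defined as follows:

$$BJS(m_1, m_2) = \frac{1}{2}\left[\sum_{A \subseteq \Theta} m_1(A) \log_2 \frac{2\, m_1(A)}{m_1(A) + m_2(A)} + \sum_{A \subseteq \Theta} m_2(A) \log_2 \frac{2\, m_2(A)}{m_1(A) + m_2(A)}\right],$$

with the convention $0 \log 0 = 0$.

Definition 8 (Euclidean Distance). For two vectors $\mathbf{x}$ and $\mathbf{y}$ in the same real coordinate space $\mathbb{R}^d$, the Euclidean distance is the $\ell_2$-norm of their difference:

$$d(\mathbf{x}, \mathbf{y}) = \|\mathbf{x} - \mathbf{y}\|_2 = \sqrt{\sum_{i=1}^{d} (x_i - y_i)^2},$$

which corresponds to the standard geometric distance between points.

Definition 9 (Hellinger Distance). Given two discrete probability distributions $P = (p_1, \dots, p_k)$ and $Q = (q_1, \dots, q_k)$ on the same sample space, the Hellinger distance [57] is defined as follows:

$$H(P, Q) = \frac{1}{\sqrt{2}} \sqrt{\sum_{i=1}^{k} \left(\sqrt{p_i} - \sqrt{q_i}\right)^2}.$$

The Hellinger distance is symmetric, bounded in [0, 1], and a proper metric.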

These divergences are foundational to our framework. BJS serves both to quantify the internal consistency of BBAs within a cluster and as an important baseline in D-S evidence theory, while the Euclidean and Hellinger distances underlie the cluster–cluster divergence introduced in Section 3.3.
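For reference, Definitions 7 and 9 can be written in a few lines of Python. This is a sketch under two assumptions: BJS uses base-2 logarithms (so that it lies in [0, 1]), and BBAs or distributions are stored as dictionaries keyed by focal elements or outcomes, with absent keys treated as zero mass.

```python
import math


def bjs(m1, m2):
    """Belief Jensen–Shannon divergence with base-2 logarithms (0 log 0 = 0)."""
    total = 0.0
    for a in set(m1) | set(m2):
        p, q = m1.get(a, 0.0), m2.get(a, 0.0)
        mid = (p + q) / 2
        if p > 0:
            total += 0.5 * p * math.log2(p / mid)
        if q > 0:
            total += 0.5 * q * math.log2(q / mid)
    return total


def hellinger(p, q):
    """Hellinger distance between two discrete distributions (range [0, 1])."""
    keys = set(p) | set(q)
    s = sum((math.sqrt(p.get(k, 0.0)) - math.sqrt(q.get(k, 0.0))) ** 2 for k in keys)
    return math.sqrt(s / 2)


A, B, AB = frozenset("a"), frozenset("b"), frozenset("ab")
m1 = {A: 0.6, B: 0.3, AB: 0.1}
m2 = {A: 0.1, B: 0.8, AB: 0.1}
print(round(bjs(m1, m2), 4), round(bjs({A: 1.0}, {B: 1.0}), 4))
```

Two BBAs with disjoint focal elements reach the upper bound BJS = 1, and identical BBAs give 0.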

3. The Cluster-Level Information Fusion Framework

In this section, we introduce a comprehensive framework for cluster-level information fusion. The framework first groups multiple pieces of evidence into appropriate clusters. A cluster centroid is then established based on fractal geometry principles, after which inter-cluster divergences are quantified and new evidence is dynamically assigned to the most suitable cluster. Finally, a weighted fusion of evidence is carried out using cluster-level credibility weights.

3.1. Inspirations of the Cluster-Level View and Information Fusion Framework

In information fusion, the reliability of a given piece of evidence is often assessed by measuring its divergence, entropy, and inherent properties within D-S evidence theory, typically through pairwise BBA comparisons. However, this conventional process tends to overlook the structural differences between multiple pieces of evidence. Within a given collection of evidence, many of the corresponding mass functions are often highly similar. In contrast, conflicting evidence is typically characterized by mass functions that deviate significantly from those of the majority.

A classical example from the literature [24] illustrates both the source of the conflict and how the fusion result varies with the relationships among pieces of evidence.

Example 1 (Sensor Data for Target Recognition). In an automatic target recognition system based on multiple sensors, assume that the real target is . The system collects the five pieces of evidence from five different sensors listed in Table 1. In Example 1, intuitively, the BBAs , , , and all assign their belief to , but none of them achieves a relatively high confidence. Meanwhile, the maximum value of is 0.90, pointing decisively to . Therefore, although most of the evidence supports , the conflicting evidence precludes a direct and accurate judgment. To demonstrate the source of the conflict, we compare three situations in terms of the pairwise average Dempster conflict coefficient:

Case 1: Only similar BBAs are considered; is the average of over all pairs with .

Case 2: The conflicting BBA versus the others. is the average of for .

Case 3: All five BBAs are considered; is the average of on all pairs.

For each case, we compute the average Dempster conflict coefficient K. The results show the following:

When only similar BBAs are counted, the average conflict coefficient , indicating high consistency.

When the conflicting BBA is compared to the others, , revealing its direct disagreement with the majority.

When all BBAs are included, the average conflict coefficient , indicating increased overall inconsistency.
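The three averages can be computed with a short script. The BBAs below are hypothetical values chosen only to mimic the situation described (three mutually consistent sources and one outlier); they are not the data of Table 1, so only the qualitative ordering, not the numbers, should be compared with the text.

```python
from itertools import combinations, product


def conflict(m1, m2):
    """Dempster conflict coefficient K: product mass on pairs of disjoint focal elements."""
    return sum(x * y for (b, x), (c, y) in product(m1.items(), m2.items()) if not (b & c))


def average_conflict(pairs):
    """Mean of K over an iterable of BBA pairs."""
    values = [conflict(m1, m2) for m1, m2 in pairs]
    return sum(values) / len(values)


A, B, C, AC = (frozenset(s) for s in ("A", "B", "C", "AC"))
# Hypothetical BBAs (not Table 1): three sources favour A, one outlier favours B.
similar = [
    {A: 0.7, B: 0.1, AC: 0.2},
    {A: 0.6, B: 0.2, AC: 0.2},
    {A: 0.7, C: 0.1, AC: 0.2},
]
outlier = {B: 0.9, C: 0.1}

case1 = average_conflict(combinations(similar, 2))              # similar BBAs only
case2 = average_conflict((outlier, m) for m in similar)          # outlier vs. the rest
case3 = average_conflict(combinations(similar + [outlier], 2))   # all BBAs
print(f"Case 1: {case1:.3f}  Case 2: {case2:.3f}  Case 3: {case3:.3f}")
# → Case 1: 0.230  Case 2: 0.887  Case 3: 0.558
```

As in the example, the similar BBAs conflict least, the outlier conflicts most with everyone else, and pooling all BBAs lands in between.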

For Cases 1 and 3, we fuse the BBAs using Dempster’s Rule of Combination. The fusion results are listed in Table 2.

As illustrated in Example 1 and Table 2, including conflicting evidence amplifies the overall conflict, resulting in an unreliable and counter-intuitive fusion outcome. In contrast, fusing only similar evidence leads to a more robust and accurate result. These results show that fusing BBAs indiscriminately, without considering their similarities and discrepancies at the group level, greatly increases the chance of evidence conflict, which can undermine the reliability of the fusion result.

However, when evidence sources with similar characteristics are grouped into clusters and dissimilar sources are separated, the original conflicts among individual BBAs are not eliminated but reappear as discrepancies between clusters. This cluster-level perspective facilitates a more systematic and interpretable analysis of evidential conflict by distinguishing intra-cluster consensus from inter-cluster divergence. By aggregating similar BBAs, one can more clearly reveal the intrinsic structure of information, enabling the identification of consensus within clusters and the isolation of conflicting perspectives across clusters. This approach helps to reveal the underlying relationships among evidence sources and establishes the foundations for more interpretable information fusion and decision-making.

Motivated by these observations, we propose a novel cluster-level information fusion framework. In this framework, cluster centroids are defined via the maximum Deng entropy fractal operation, which captures the representative features of each cluster. Incoming BBAs are assigned to the most appropriate cluster using an adaptive evidence allocation rule. Once all BBAs have been allocated, the cluster-level information fusion algorithm computes a weighted average using intra-cluster and inter-cluster information. Finally, the fusion results are converted into probabilities [58,59] for pattern classification or decision-making.

3.2. The Construction of a Cluster

Definition 10 (A Single Cluster $C_i$). Given a collection of BBAs $\mathcal{M} = \{m_1, \dots, m_n\}$, the i-th cluster, denoted by $C_i$, is any subset of BBAs from $\mathcal{M}$:

$$C_i = \{m_{i,1}, m_{i,2}, \dots, m_{i,n_i}\} \subseteq \mathcal{M},$$

where $n_i = |C_i|$ denotes the number of BBAs contained in the i-th cluster. If $n_i = 0$, we set $C_i = \emptyset$, representing an “empty cluster” currently holding no BBAs. Each cluster is an unordered set with no repeated elements. Each BBA is assigned to exactly one cluster; in other words, each BBA is exclusively allocated to a single cluster. The allocation algorithm is given in Algorithm 1. The cluster partition, denoted as $\mathcal{C} = \{C_1, \dots, C_K\}$, forms a partition of the full BBA set such that

$$\bigcup_{i=1}^{K} C_i = \mathcal{M} \quad \text{and} \quad C_i \cap C_j = \emptyset \quad (i \neq j),$$

where K is defined as the number of clusters containing at least one BBA.

The primary objective of clustering within our framework is to model two fundamental effects: the aggregation of similar beliefs within a cluster and the separation of conflicting beliefs between clusters, akin to fractals, which exhibit self-similarity and multiscale characteristics [34,60]. In the literature, fractal operators have been used to analyze BBAs by quantifying the maximum information volume that a single BBA can achieve, which reflects its highest level of uncertainty [51,54]. Fractal operations also simulate the dynamic evolution of the pignistic probability transformation (PPT) [34,61]. During the fractal process, the masses associated with non-singleton sets gradually decrease, while the masses on singleton sets increase. As a result, a BBA approximately converges to a pignistic probability distribution [62]. When fractal theory is applied to multiple pieces of evidence, the self-similar nature of fractals amplifies their intrinsic information: as fractal iterations proceed, the unity among similar BBAs becomes more apparent, while the differences between dissimilar BBAs are magnified [63]. Consequently, modeling a cluster centroid with a fractal-based approach yields a representative summary of each cluster.

There are several ways to define fractal operators on BBAs [51,62,63,64]. However, the most justifiable construction is the maximum Deng entropy fractal, because it yields the most conservative evolution, maximizing entropy to avoid introducing unjustified bias in the belief update process [65,66]. In practice, we use the MDESR method [51] to ensure that the BBA fractal strictly follows the maximum entropy principle.

Definition 11 (Maximum Deng Entropy Fractal Operator F). We define the maximum Deng entropy fractal operator F, which redistributes the mass of each non-empty focal element of a BBA to all of its non-empty subsets in the splitting result:

Definition 12 (h-Order Fractal BBA). Given the original BBA m, we define its h-order fractal mass function after h fractal iterations as follows:

$$m^{(h)} = F^{h}(m) = \underbrace{F \circ F \circ \cdots \circ F}_{h\ \text{times}}(m),$$

where $h \in \mathbb{N}$ is called the fractal order, which indicates how many times the fractal operator is applied to the BBA. Besides the above explicit form, an equivalent recursive form is given by

$$m^{(0)} = m, \qquad m^{(h)} = F\big(m^{(h-1)}\big), \quad h \geq 1.$$

In particular, when $h = 0$, the fractal-order mass function degrades to the original BBA m. Additionally, for every $h \geq 0$, $m^{(h)}$ defines a valid mass function on $2^{\Theta}$, taking values in [0, 1] and satisfying $m^{(h)}(\emptyset) = 0$ and $\sum_{A \subseteq \Theta} m^{(h)}(A) = 1$.

While the h-order fractal BBA refines individual evidence, our framework requires a representative BBA to summarize the collective belief of a cluster. We then introduce the cluster centroid, which is constructed by aggregating the fractal BBAs of all of its members.

Definition 13 (Cluster Centroid $\bar{m}_i^{(h)}$). The cluster centroid is a virtual BBA that summarizes the overall characteristics of all BBAs within a cluster. It is given by the arithmetic mean of the members’ fractal BBAs on the power set of Θ:

$$\bar{m}_i^{(h)}(A) = \frac{1}{n_i} \sum_{j=1}^{n_i} m_{i,j}^{(h)}(A), \qquad A \subseteq \Theta,$$

where $n_i$ is the number of BBAs in cluster $C_i$ and $m_{i,j}^{(h)}(A)$ denotes the mass assigned to set A after applying the fractal operator F h times to $m_{i,j}$ within $C_i$. It follows that $\bar{m}_i^{(h)}(\emptyset) = 0$ and $\sum_{A \subseteq \Theta} \bar{m}_i^{(h)}(A) = 1$, so $\bar{m}_i^{(h)}$ is itself a valid BBA.

Remark 1. To keep the fractal operation consistent with the scale of the cluster, we adopt the convention that the cluster size determines the fractal order:

$$h_i = n_i - 1.$$

The fractal order thus increases linearly with the number of elements in the cluster: for a singleton cluster, $h_i = 0$; for a cluster of two elements, $h_i = 1$; and so on. When $n_i = 1$, we have $h_i = 0$, so the cluster centroid degrades to the original BBA. If a new BBA is later added to $C_i$, the parameters are updated as follows:

$$n_i \leftarrow n_i + 1, \qquad h_i \leftarrow h_i + 1.$$

While the arithmetic-mean definition of the cluster centroid is straightforward, updating it from scratch requires operations. As $n_i$ increases, this cost becomes prohibitive for large-scale or online clustering. To address this, the equivalent recursive update that we introduce next significantly reduces this burden, lowering the per-update complexity to .

Definition 14 (Recursive Update of the Cluster Centroid). Given a cluster $C_i$ with $n_i$ existing elements and its previous centroid $\bar{m}_i^{(h-1)}$, the centroid is updated to order h upon the insertion of a new BBA $m_{\mathrm{new}}$. The new centroid is computed as the weighted average of the transformed existing centroid and the transformed new BBA:

$$\bar{m}_i^{(h)} = \frac{n_i}{n_i + 1}\, F\big(\bar{m}_i^{(h-1)}\big) + \frac{1}{n_i + 1}\, m_{\mathrm{new}}^{(h)},$$

where $m_{\mathrm{new}}^{(h)} = F^{h}(m_{\mathrm{new}})$ is the h-order fractal BBA corresponding to the newly inserted BBA, and F is the fractal operator. The equivalence between Definitions 13 and 14 is established in Theorem 1, whose proof is provided in Appendix A for completeness.

Theorem 1 (Equivalence of Definitions 13 and 14). The cluster centroid $\bar{m}_i^{(h)}$ has two definitions, the arithmetic-mean form (Definition 13) and the recursive form (Definition 14), which are identical for any linear fractal operator F shared by all members of the cluster.
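Theorem 1 can be checked numerically. The sketch below rests on an explicit assumption: since the exact splitting formula of MDESR is not reproduced here, the hypothetical operator `fractal_step` splits each mass $m(A)$ over the non-empty subsets $B \subseteq A$ in proportion to $2^{|B|} - 1$ (the allocation that maximizes Deng entropy within A). This operator is linear, so with $h = n$ after inserting into an n-member cluster, $\frac{1}{n+1}\big[\sum_j F(F^{h-1}(m_j)) + F^{h}(m_{\mathrm{new}})\big] = \frac{n}{n+1}F(\bar m^{(h-1)}) + \frac{1}{n+1}m_{\mathrm{new}}^{(h)}$, and the check does not depend on which linear F is chosen.

```python
from itertools import combinations


def nonempty_subsets(a):
    """Non-empty subsets of a focal element, as frozensets."""
    items = sorted(a)
    return [frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)]


def fractal_step(m):
    """Hypothetical F: split m(A) over non-empty B ⊆ A in proportion to 2^|B| - 1."""
    out = {}
    for a, v in m.items():
        norm = 3 ** len(a) - 2 ** len(a)  # sum of (2^|B| - 1) over non-empty B ⊆ A
        for b in nonempty_subsets(a):
            out[b] = out.get(b, 0.0) + v * (2 ** len(b) - 1) / norm
    return out


def fractal(m, h):
    """h-order fractal BBA F^h(m); h = 0 returns m unchanged (Definition 12)."""
    for _ in range(h):
        m = fractal_step(m)
    return m


def mix(m1, w1, m2, w2):
    """Pointwise w1 * m1 + w2 * m2 over the union of focal elements."""
    return {a: w1 * m1.get(a, 0.0) + w2 * m2.get(a, 0.0) for a in set(m1) | set(m2)}


def centroid_mean(members):
    """Arithmetic-mean centroid (Definition 13) at order h = n - 1 (Remark 1)."""
    n = len(members)
    acc = {}
    for m in members:
        acc = mix(acc, 1.0, fractal(m, n - 1), 1.0 / n)
    return acc


def centroid_update(prev, n, m_new):
    """Recursive update (Definition 14): an n-member cluster receives m_new."""
    return mix(fractal_step(prev), n / (n + 1), fractal(m_new, n), 1.0 / (n + 1))


a, b, c, ab, abc = (frozenset(s) for s in ("a", "b", "c", "ab", "abc"))
members = [{a: 0.5, ab: 0.3, abc: 0.2}, {a: 0.6, abc: 0.4}, {b: 0.2, ab: 0.8}, {c: 0.1, abc: 0.9}]

recursive = members[0]  # a singleton cluster's centroid is the BBA itself (h = 0)
for n, m in enumerate(members[1:], start=1):
    recursive = centroid_update(recursive, n, m)

batch = centroid_mean(members)
print(max(abs(batch.get(k, 0.0) - recursive.get(k, 0.0)) for k in set(batch) | set(recursive)))
```

The printed maximum difference is at floating-point round-off level, while the recursive path applies F to one centroid instead of re-fractalizing every member.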

3.3. The Divergence Between Clusters

A measure is required to quantify the informational divergence between clusters. However, since cluster centroids constructed via fractal operators can reside on different fractal orders, direct comparison would violate the axioms of a metric. Therefore, unifying all cluster centroids into the same fractal order is necessary before divergence calculation.

Definition 15 (Global Maximal Fractal Order H). To address the incomparability arising from different fractal orders, we introduce the global maximal fractal order:

$$H = \max_{1 \leq i \leq K} h_i,$$

where $h_i$ denotes the fractal order of cluster i. The choice of fractal-order unification strategy is an open question. We use the maximum fractal order because it may more thoroughly reveal the informational differences within the clusters’ fractal structures.

Remark 2. Given any two clusters $C_p$ and $C_q$ with fractal orders $h_p$ and $h_q$, respectively, their centroids are aligned to order H as follows:

$$\bar{m}_p^{(H)} = F^{H - h_p}\big(\bar{m}_p^{(h_p)}\big), \qquad \bar{m}_q^{(H)} = F^{H - h_q}\big(\bar{m}_q^{(h_q)}\big).$$

Directly comparing cluster centroids is insufficient, as it overlooks their internal structure. Different clusters may concentrate their belief on entirely different focal elements. For instance, one cluster may place high confidence in a proposition because many of its member BBAs strongly support it, whereas another cluster’s support for the same proposition may be weak and sparse, indicating a lack of consensus within the group. To capture this structural difference, we need to quantify how a cluster’s support is distributed across focal elements. Accordingly, we propose a scale weight for each subset $A \subseteq \Theta$.

Definition 16 (Scale Weight). The scale weight quantifies the proportion of BBAs in a cluster that assign non-negligible mass to a set A. Formally, for every BBA in cluster p and each set A, a soft weighting function is computed: where the parameters δ and ε determine the sensitivity and softness of the counting threshold, respectively, and both are chosen as small positive constants. The aggregated and normalized scale weight is then defined as follows: where indicates the relative support degree of set A in cluster p, normalized over all focal elements, taking values in [0, 1] and summing to 1. Thus, we associate each cluster p with a feature vector whose component for each focal set A is defined as follows: and analogously for q.

Definition 17 (Cluster–Cluster Divergence). The cluster-to-cluster divergence is defined as follows: The divergence between clusters is determined by two factors: the scale weight of a focal set A, given by , and the support strength, measured by . From a mathematical perspective, the divergence is the Euclidean distance between the clusters’ feature vectors in a high-dimensional space; thus, it naturally satisfies non-negativity, symmetry, and the triangle inequality. This formulation allows the divergence to be viewed as an unnormalized generalization of the Hellinger distance to D-S evidence theory. Additionally, the range of the divergence is . The full theorem and proofs of these properties are given in Appendix B, from which we conclude that the cluster–cluster divergence satisfies all of the properties of a pseudo-metric.
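Because the soft weighting function, the scale weight, and the feature components are described above only in words, the following is a hedged sketch of one plausible instantiation, not the exact formulas of this work. We assume (i) a logistic soft count $s(x) = 1/(1 + e^{-(x - \delta)/\varepsilon})$ applied to each member’s original mass, (ii) scale weights obtained by summing $s$ over the members and normalizing over all non-empty subsets, and (iii) feature components $\sqrt{w_p(A)\,\bar m_p^{(H)}(A)}$, so that their Euclidean distance matches the unnormalized-Hellinger reading described above. The fractal operator is a hypothetical stand-in that splits each $m(A)$ over the non-empty subsets of A in proportion to $2^{|B|} - 1$; by linearity, averaging $F^{H}(m_j)$ over the members equals lifting the order-$h_p$ centroid by $F^{H - h_p}$ (Remark 2).

```python
import math
from itertools import combinations


def nonempty_subsets(a):
    items = sorted(a)
    return [frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)]


def fractal(m, h):
    """Hypothetical stand-in for F, applied h times (proportional 2^|B| - 1 split)."""
    for _ in range(h):
        out = {}
        for a, v in m.items():
            norm = 3 ** len(a) - 2 ** len(a)
            for b in nonempty_subsets(a):
                out[b] = out.get(b, 0.0) + v * (2 ** len(b) - 1) / norm
        m = out
    return m


def soft_count(x, delta=0.01, eps=0.001):
    """Assumed logistic soft threshold: close to 1 if x >> delta, close to 0 if x << delta."""
    return 1.0 / (1.0 + math.exp(-(x - delta) / eps))


def cluster_features(cluster, H, frame):
    """Assumed feature vector phi_p(A) = sqrt(w_p(A) * centroid_p^(H)(A)) over all non-empty A."""
    subsets = nonempty_subsets(frame)
    lifted = [fractal(m, H) for m in cluster]  # their mean equals F^(H - h_p) of the order-h_p centroid
    centroid = {a: sum(f.get(a, 0.0) for f in lifted) / len(cluster) for a in subsets}
    raw = {a: sum(soft_count(m.get(a, 0.0)) for m in cluster) for a in subsets}
    total = sum(raw.values())
    return {a: math.sqrt(raw[a] / total * centroid[a]) for a in subsets}


def cluster_divergence(cp, cq, H, frame):
    """Euclidean distance between the two clusters' feature vectors at common order H."""
    fp, fq = cluster_features(cp, H, frame), cluster_features(cq, H, frame)
    return math.sqrt(sum((fp[a] - fq[a]) ** 2 for a in fp))


a, b, c, ab, abc = (frozenset(s) for s in ("a", "b", "c", "ab", "abc"))
p = [{a: 0.8, ab: 0.2}, {a: 0.7, abc: 0.3}]
q = [{b: 0.9, c: 0.1}]
r = [{c: 0.6, frozenset("bc"): 0.4}]
H = max(len(cl) for cl in (p, q, r)) - 1  # global maximal order, with h_i = n_i - 1
print(round(cluster_divergence(p, q, H, "abc"), 4), round(cluster_divergence(p, p, H, "abc"), 4))
```

Because every divergence is computed at the same order H, the sketch is a Euclidean distance between fixed feature vectors and therefore inherits symmetry and the triangle inequality, consistent with the pseudo-metric properties stated above.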

3.4. Cluster-Driven Evidence Assignment Rule

The core idea of this section is how to optimally assign a newly observed BBA, denoted as , to either an existing cluster () or a new one (). This process sequentially allocates each BBA in the set to its most suitable cluster, balancing the cohesion of similar beliefs within a cluster and the separation of conflicting beliefs across clusters. The decision criterion is based on the reward of each evidence assignment strategy, selecting the one with the highest reward greedily.

Definition 18 (Evidence Assignment Strategy). Let the current cluster partition be denoted by $\mathcal{C} = \{C_1, \dots, C_K\}$, and consider all possible assignment decisions for the new BBA $m_{\mathrm{new}}$. We formulate the assignment problem as the selection of an optimal strategy among $K + 1$ alternatives:

$$k \in \{1, 2, \dots, K, K + 1\}.$$

For each candidate strategy k, we construct a temporary clustering result $\mathcal{C}^{(k)}$, in which $m_{\mathrm{new}}$ is either added to the k-th existing cluster (for $k \leq K$) or forms a singleton cluster (for $k = K + 1$). Denote the number of clusters after this assignment as $K^{(k)}$, with

$$K^{(k)} = \begin{cases} K, & k \leq K, \\ K + 1, & k = K + 1, \end{cases}$$

and, thus, the number of inter-cluster pairs is $K^{(k)}\big(K^{(k)} - 1\big)/2$. We measure the internal consistency of a cluster using the BJS divergence [36], introduced in Section 2.3. In our framework, we define the intra-cluster divergence as the average of all pairwise divergences between the BBAs of a cluster, calculated using as a confirmed metric [36]. A lower intra-cluster BJS value therefore indicates a higher degree of consensus within the cluster.

Definition 19 (Intra-Cluster Divergence). The intra-cluster divergence of cluster $C_i$ is defined as follows:

Definition 20 (Strategy Reward). For each candidate BBA and evidence assignment strategy k, the corresponding reward is defined as follows: The primary component of the numerator reflects the average divergence between clusters, whereas the main component of the denominator captures the average divergence within clusters, which is temporarily updated based on the new cluster structure derived from strategy k.

By default, the hyperparameters and are set to 1, giving equal weight to cluster separation and internal consistency. However, the relative importance of these two objectives should not be assumed to be the same in all situations. Different application scenarios often have unique structural and statistical properties, which makes a one-size-fits-all parameterization impractical. Ideally, the values of and should be determined by data-driven approaches or by specific requirements. Our work employs a data-driven approach to determine the optimal hyperparameter pair for each classification task; the details are presented in Section 5.

The optimal strategy is then obtained by selecting the strategy that maximizes the reward:

Definition 21 (Optimal Strategy). Guided by the reward , each new BBA is sequentially tested against all possible strategies, and the best option is executed.

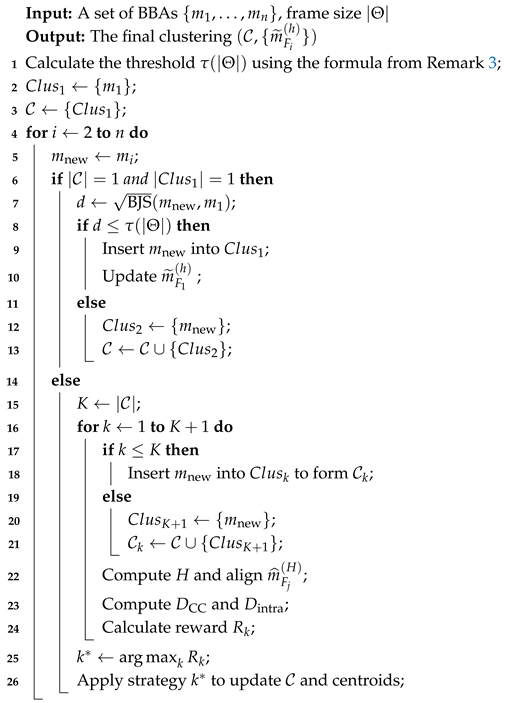

In Example 8, when is incorporated, the initial configuration and the outcome of each candidate strategy are as illustrated in Figure 1, which shows the reward associated with each strategy and the justification for the optimal assignment.

3.5. The Cluster-Level Information Fusion Algorithm

This subsection outlines our proposed framework for cluster-level information fusion, which consists of two stages. In the first stage, we sequentially add the incoming BBAs to the clusters while updating the cluster framework. The second stage involves fusing all of the evidence by taking into account both the divergence within clusters and the divergence between clusters.

3.5.1. The First Stage of the Algorithm

During the first stage, the incoming BBAs are processed sequentially through the following steps:

Cluster Construction: Construct the centroid of each cluster based on the maximum-entropy fractal operation F.

Evaluate Strategies: For each newly arriving BBA, compute the reward for all potential assignments, whether to an existing cluster or a new one.

Evidence Assignment: Assign the BBA to the cluster that yields the highest reward, and update the corresponding cluster centroid.

The detailed steps of the first stage are outlined in Algorithm 1.
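The sequential loop of the first stage can be sketched generically. In this hedged sketch, the reward of Definition 20 and the cold-start rule of Remark 3 are passed in as functions, because their exact formulas are not restated here. The scalar “evidence”, `toy_reward`, and the merge threshold in the usage example are hypothetical placeholders that only mimic the separation-over-cohesion structure of the reward (average gap between clusters over average spread within clusters); in the framework itself, the items would be BBAs, the gap would be the cluster–cluster divergence, and the spread would be the intra-cluster BJS.

```python
def candidate_partitions(partition, item):
    """Yield the K + 1 strategies: add item to each existing cluster, or open a new one."""
    for k in range(len(partition)):
        yield [cl + [item] if i == k else list(cl) for i, cl in enumerate(partition)]
    yield [list(cl) for cl in partition] + [[item]]


def greedy_assign(items, reward, cold_start_merge):
    """Place items one at a time, keeping the candidate partition with the highest reward."""
    partition = [[items[0]]]
    for item in items[1:]:
        if len(partition) == 1 and len(partition[0]) == 1:
            # One-off cold-start decision for the second item (cf. Remark 3).
            first = partition[0][0]
            partition = [[first, item]] if cold_start_merge(first, item) else [[first], [item]]
            continue
        partition = max(candidate_partitions(partition, item), key=reward)
    return partition


def toy_reward(partition):
    """Hypothetical placeholder: mean gap between cluster means / (mean spread + 1)."""
    means = [sum(cl) / len(cl) for cl in partition]
    gaps = [abs(x - y) for i, x in enumerate(means) for y in means[i + 1:]]
    inter = sum(gaps) / len(gaps) if gaps else 0.0
    intra = sum(max(cl) - min(cl) for cl in partition) / len(partition)
    return inter / (intra + 1.0)


result = greedy_assign([0.0, 0.1, 5.0, 0.2, 5.1], toy_reward, lambda x, y: abs(x - y) < 1.0)
print(result)  # → [[0.0, 0.1, 0.2], [5.0, 5.1]]
```

Each arrival evaluates exactly K + 1 candidate partitions, so the greedy rule never revisits earlier assignments; this keeps the procedure online at the cost of global optimality.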

Remark 3. When only a single BBA exists in the system, the arrival of a second BBA requires deciding whether to create a new cluster or to merge into the existing one. This “cold-start” decision is made only once. Since no empirical structure is available at this stage, we treat each BBA as a random point drawn from a uniform Dirichlet distribution over the entire range of evidence. By setting the threshold at the median distance, denoted as , this approach is designed so that, on average, half of all equally uninformative evidence pairs are merged while the other half are split. The closed-form expression for this parameter-free boundary is which evaluates to for and stabilizes at for larger frames. Consequently, identical BBAs () are always merged, while maximally conflicting BBAs () are always split. The complete derivation is given in Appendix C.

Algorithm 1: Sequential Incorporation of BBAs into Clusters
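The idea behind the cold-start threshold can be illustrated by simulation. The sketch below is an assumption-laden illustration, not the closed form derived in Appendix C: it takes BJS (Definition 7) as the distance, samples pairs of BBAs uniformly from the probability simplex over the $2^N - 1$ non-empty subsets (a uniform Dirichlet, generated by normalizing Exp(1) draws), and returns the empirical median. Identical BBAs have distance 0 and BBAs with disjoint focal elements have BJS = 1, so any median strictly between these values merges the former and splits the latter.

```python
import math
import random
import statistics
from itertools import combinations


def nonempty_subsets(frame):
    items = sorted(frame)
    return [frozenset(c) for r in range(1, len(items) + 1) for c in combinations(items, r)]


def uniform_dirichlet_bba(subsets, rng):
    """Sample a BBA uniformly from the simplex (Dirichlet(1, ..., 1) via normalised Exp(1))."""
    draws = [rng.expovariate(1.0) for _ in subsets]
    total = sum(draws)
    return {a: d / total for a, d in zip(subsets, draws)}


def bjs(m1, m2):
    """Belief Jensen–Shannon divergence with base-2 logarithms."""
    total = 0.0
    for a in set(m1) | set(m2):
        p, q = m1.get(a, 0.0), m2.get(a, 0.0)
        mid = (p + q) / 2
        if p > 0:
            total += 0.5 * p * math.log2(p / mid)
        if q > 0:
            total += 0.5 * q * math.log2(q / mid)
    return total


def median_threshold(n_hypotheses, samples=5000, seed=0):
    """Monte Carlo estimate of the median BJS between two uninformative random BBAs."""
    rng = random.Random(seed)
    subsets = nonempty_subsets(range(n_hypotheses))
    dists = [bjs(uniform_dirichlet_bba(subsets, rng), uniform_dirichlet_bba(subsets, rng))
             for _ in range(samples)]
    return statistics.median(dists)


for n in (2, 3, 4):
    print(n, round(median_threshold(n), 3))
```

The estimates depend on the distance chosen here; if the framework’s cold-start rule uses a different distance, only the procedure, not the printed values, carries over.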

3.5.2. The Second Stage of the Algorithm

In the second stage, we start with the cluster partition generated by the online procedure (Algorithm 1), which remains fixed throughout this stage. This stage transforms the cluster-level structure into credibility weights for each piece of evidence, which are then used to compute a weighted average evidence for the final information fusion outcome.

It is worth noting that the first-stage output provides valuable structural information for decision-making. Clustering helps identify which BBAs convey similar beliefs and which diverge. This information underpins an interpretable adjustment mechanism implemented through an expert bias coefficient, , used in Equation (40), where . This coefficient controls the relative trust placed in larger versus smaller clusters. When , the credibility of larger clusters is reinforced, emphasizing consensus. In contrast, when , the influence of large clusters is weakened, allowing smaller clusters to have a greater impact; small values can therefore highlight rare but valuable perspectives. Conceptually, larger clusters containing multiple similar BBAs are generally considered more trustworthy, so higher values of may seem preferable. However, as demonstrated in Section 5.5.2, application experiments show that increasing the weight of larger clusters does not always lead to better outcomes.

In the second stage of the algorithm, each BBA receives a credibility degree, which is then used in a weighted fusion method [24] followed by a probability transformation (PT). The algorithm thus closes the loop from "forming clusters" to "using clusters". In what follows, BBAs are indexed by their position j within each cluster of the partition, each cluster is characterized by the number of BBAs it contains, and K denotes the total number of clusters in the partition. The complete procedure is as follows:

- Step 1:

Compute Intra-Cluster Divergence: For each cluster, compute the BJS divergence between all pairs of its BBAs and average these values to obtain the intra-cluster divergence of that cluster.

- Step 2:

Compute Inter-Cluster Divergence: For each cluster, compute its mean divergence to all other clusters.

- Step 3:

Calculate Support Degree: Using the expert bias coefficient and the hyperparameter pair, calculate the support degree of each BBA. The support degree reflects the combined effect of cluster size, intra-cluster conformity, and inter-cluster separability.

- Step 4:

Calculate Credibility Degree: Normalize all support degrees to obtain the credibility degree of each BBA.

- Step 5:

Compute Weighted-Average Evidence: Construct the weighted-average BBA by weighting each BBA with its credibility degree.

- Step 6:

Fuse via Dempster’s Rule: Combine the weighted-average BBA with itself repeatedly using Dempster’s rule to obtain the fused mass function.

- Step 7:

Make Final Decision: Apply PT to the fused mass function to make the final decision. In this framework, we use the PPT [67] to convert the fused mass function into a probability distribution, and the singleton hypothesis with the highest probability is selected as the output.
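Steps 4–7 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the support degrees from Step 3 are taken as given, because the support-degree formula is not reproduced here; mass functions are dictionaries keyed by `frozenset`; the weighted-average BBA is combined with itself n − 1 times, an assumption borrowed from Deng's weighted-average scheme [24]; and the PPT is implemented as the pignistic transformation. The helper names are hypothetical.

```python
from itertools import product


def dempster(m1, m2):
    """Dempster's rule of combination for mass functions keyed by frozenset."""
    combined, conflict = {}, 0.0
    for (a, x), (b, y) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + x * y
        else:
            conflict += x * y  # mass falling on the empty set
    if conflict >= 1.0:
        raise ValueError("total conflict: Dempster's rule is undefined")
    return {s: v / (1.0 - conflict) for s, v in combined.items()}


def pignistic(m):
    """Pignistic transformation: each focal set's mass is shared equally among its elements."""
    prob = {}
    for s, v in m.items():
        for h in s:
            prob[h] = prob.get(h, 0.0) + v / len(s)
    return prob


def fuse(bbas, support):
    """Steps 4-7 given per-BBA support degrees (Step 3 output, assumed precomputed)."""
    total = sum(support)
    cred = [s / total for s in support]        # Step 4: credibility degrees
    avg = {}
    for w, m in zip(cred, bbas):               # Step 5: weighted-average BBA
        for s, v in m.items():
            avg[s] = avg.get(s, 0.0) + w * v
    fused = avg
    for _ in range(len(bbas) - 1):             # Step 6: n - 1 self-combinations (assumed)
        fused = dempster(fused, avg)
    prob = pignistic(fused)                    # Step 7: PPT, then pick the argmax
    return max(prob, key=prob.get), prob
```

For singleton-only BBAs, repeated self-combination raises each averaged mass to the n-th power before renormalizing, so Step 6 sharpens the averaged BBA without changing which hypothesis ranks first; with multi-element focal sets the effect depends on how the sets intersect.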

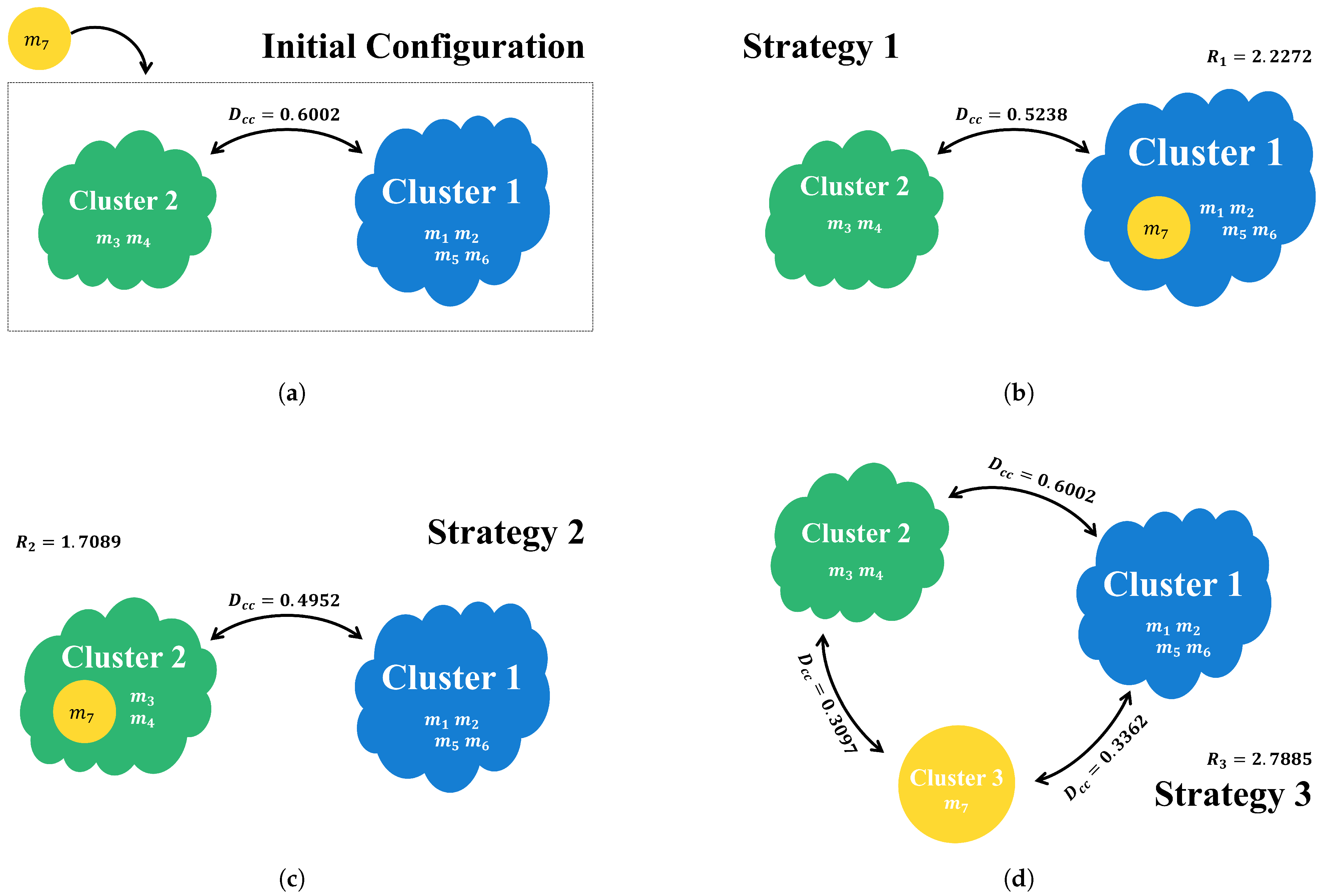

Section 3.5.1 organizes the incoming BBAs into distinct clusters in sequential order, as shown on the left side of Figure 2. Subsequently, Section 3.5.2 uses this structure to calculate credibility weights and perform fusion, as depicted on the right side of Figure 2. Together, the two stages form a framework that spans from cluster construction to decision-making.

3.5.3. Algorithm Complexity Analysis

In the previous sections, we defined n as the total number of BBAs and K as the number of non-empty clusters. The dimension of each BBA is determined by the power set of the frame of discernment. Throughout the discussion, we assume the use of the recursive centroid update from Definition 14. Consequently, adding a BBA to a cluster updates its centroid at a time cost that depends on the cluster size, while the centroid's storage does not grow with the cluster size.

The analysis of the framework’s computational complexity begins with the online clustering stage. We evaluate the cost of assigning a single incoming BBA as follows:

Cluster Structure Construction: Creating a temporary cluster by adding the incoming BBA to an existing cluster is dominated by the centroid update, which requires computing a fractal of the BBA whose order is set by the cluster size.

Cluster–Cluster Divergence Complexity: A single pairwise calculation is dominated by computing the scale weights. The other steps are less costly, so the overall cost is bounded by a term that grows with the size of the larger of the two clusters.

An Evidence Assignment Strategy: Evaluating a single strategy, e.g., adding the incoming BBA to a particular cluster, requires temporary updates to that cluster’s centroid, its intra-cluster divergence, and the inter-cluster divergence values. Recomputing these inter-cluster divergences is the complexity bottleneck.

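Evaluate All of the Strategies: To assign one BBA, all strategies are evaluated, namely joining any of the K existing clusters or creating a new one. For balanced clusters of average size n/K, the per-BBA cost follows from multiplying the number of strategies by the cost of evaluating one strategy. Summing this cost over all n BBAs gives the total online clustering cost.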

Having established the complexity of the online clustering stage, we now evaluate the computational cost of the weight calculation stage, performed once after clustering is complete:

BBA Credibility Weights: This stage computes weights in three steps. Step 1 calculates all intra-cluster divergences. Step 2, the dominant operation, computes the average inter-cluster divergences from a full pairwise matrix of cluster–cluster divergences. Step 3 is a negligible calculation.

Cluster-Level BBA Fusion: This stage performs the BBA fusion (Steps 4–6). The normalization and weighted-averaging steps are comparatively inexpensive. The main cost comes from Step 6, which applies Dempster’s rule repeatedly; because the cost of each combination is set by the frame of discernment, the total is a significant term that is nevertheless independent of K. This final step is also common to several other classical methods.

Combining the costs from both stages, the dominant terms are the total online clustering cost and the calculation of the BBA credibility weights, which together determine the overall time complexity.

For a fixed frame of discernment, the algorithm’s runtime is primarily determined by the online clustering stage, scaling polynomially with the number of BBAs n and the number of clusters K.

In parallel with the time analysis, the algorithm’s memory footprint is composed of several key components: storage for the complete set of n BBAs, the K cluster centroids, the pairwise cluster–cluster divergence matrix, and the pairwise BJS divergence matrix. The total memory consumption is the sum of these four terms.

The term for storing pairwise BJS divergences for intra-cluster calculations is the dominant factor in terms of space. In typical scenarios where the number of clusters K is small relative to the number of BBAs n, the space complexity is governed by the storage of the BBAs and their pairwise intra-cluster divergences.

4. Numerical Example and Discussion

In this section, we provide illustrative examples of the proposed methods, focusing on the cluster–cluster divergence, the evidence assignment rule, and the numerical information fusion results. Both components of the hyperparameter pair and the expert bias coefficient are set to 1 throughout this section.

4.1. The Properties of Cluster–Cluster Divergence

4.1.1. Metric Properties

Example 2 illustrates the metric properties of the cluster–cluster divergence, including non-negativity, symmetry, and the triangle inequality.

Example 2 (A Weakly Clustered Case in a Low-Dimensional Frame of Discernment). Consider a frame of discernment with three hypotheses. Five BBAs are given in Table 3. In this example, the cluster characteristics are not obvious, as the BBAs display varying tendencies. Intuitively, the set is most likely to be divided into three clusters: (i) a cluster biased towards one hypothesis, (ii) a cluster biased towards another hypothesis, and (iii) a highly uncertain cluster with most of its mass on a multi-element set.

Following the proposed method, we compute the cluster–cluster divergence matrix, as shown in Table 4.

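From the matrix above, several metric properties are immediately evident:

Non-Negativity: All entries are greater than or equal to zero.

Symmetry: The divergence from cluster i to cluster j equals the divergence from cluster j to cluster i for all i and j.

Identity of Indiscernibles: The divergence of every cluster i from itself is zero.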

We further verify that the cluster–cluster divergence satisfies the triangle inequality: for any three distinct clusters i, j, and k, the divergence between i and k must not exceed the sum of the divergences between i and j and between j and k. Enumerating all three possible cases for the clusters in Table 4 shows that each inequality holds. Together with non-negativity and symmetry, this example is consistent with the cluster–cluster divergence being a valid pseudo-metric over clusters of BBAs, even in weakly separable scenarios.
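The properties checked in Example 2 can be tested mechanically on any square divergence matrix. The Python helper below is a hypothetical sketch (the entries of Table 4 are not reproduced here, so any matrix can be passed in). It checks non-negativity, symmetry, zero self-divergence, and the triangle inequality over every ordered triple of distinct clusters, with a small tolerance for floating-point error.

```python
from itertools import permutations


def check_pseudometric(d, tol=1e-12):
    """Report which pseudo-metric axioms a square divergence matrix satisfies."""
    idx = range(len(d))
    return {
        "non_negativity": all(d[i][j] >= -tol for i in idx for j in idx),
        "symmetry": all(abs(d[i][j] - d[j][i]) <= tol for i in idx for j in idx),
        "zero_diagonal": all(abs(d[i][i]) <= tol for i in idx),
        # d(i, k) <= d(i, j) + d(j, k) for every ordered triple of distinct indices
        "triangle": all(
            d[i][k] <= d[i][j] + d[j][k] + tol
            for i, j, k in permutations(idx, 3)
        ),
    }
```

Passing the Table 4 matrix as a nested list would reproduce the manual check above; with three clusters and a symmetric matrix, the six ordered triples reduce to the three distinct inequalities enumerated in the text.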

4.1.2. Numeric Examples Demonstrating Cluster–Cluster Divergence

To validate the effectiveness of the proposed cluster–cluster divergence, we present a sequence of designed examples in which each cluster is composed of four BBAs. We start by directly extracting four similar BBAs from Example 1 and renumbering them consecutively.

Example 3. This cluster is composed of the BBAs in Table 5, which share support for a singleton focal set and for a multi-element set.

Example 4 is derived from Example 3 by keeping the distribution over singleton sets while changing the distribution among multi-element sets.

Example 4. This cluster closely resembles the cluster of Example 3 but concentrates its multi-element support on a different multi-element set. It consists of the BBAs listed in Table 6.

Example 5 has the same multi-element set structure as Example 3 but expresses an opposing belief on the singleton sets.

Example 5. This cluster mirrors the focal structure of Example 3 but places most of its belief on a different singleton, forming an opposing belief pattern. It consists of the BBAs listed in Table 7.

The analysis in Section 3 indicated that the cluster–cluster divergence can capture both structural and belief-based differences across clusters. Specifically, structural disparity is captured by the scale weights, which reflect the relative importance of each focal element. To better understand the impact of these weights, we now examine how variations in cluster structure affect the divergence values.

In these examples, the scale weight reflects how strongly a cluster supports multi-element sets. Between Example 3 and Example 4, the support for multi-element sets varies significantly: some clusters assign substantial belief to certain multi-element sets through multiple BBAs, while others provide little or no support to the same set, resulting in different structural weights.

For example, for the cluster of Example 3, we compute the support of each multi-element set and the corresponding scale weight. The weights of all multi-element sets for all clusters are summarized in Table 8.

The clusters of Examples 3 and 5 share identical scale weights because they have exactly the same proportion of BBAs supporting the same multi-element set. In contrast, the cluster of Example 4 shows a clearly different weighting pattern, dominated by its own multi-element set.

To better understand the full origin of the divergence values, we further analyze the contributions of both singleton sets and multi-element sets to each pairwise divergence. Specifically, for each pair of clusters, we compute the partial squared difference originating from multi-element sets and then separate it from the contribution of singleton focal sets. The results are summarized in Table 9.

The results reveal distinct patterns of divergence among the clusters. The divergence between the clusters of Examples 3 and 4 arises primarily from conflicting support on multi-element sets, while their singleton beliefs remain largely aligned, resulting in a low divergence. In contrast, the divergence between the clusters of Examples 3 and 5 stems entirely from singleton sets: although both clusters show identical support for the same multi-element set, they express opposing singleton beliefs, leading to a higher divergence. Finally, the clusters of Examples 4 and 5 diverge in both multi-element and singleton sets, but the dominant contribution to their overall divergence still originates from singleton sets.

The results in Table 8 and Table 9 demonstrate that the proposed divergence captures structural differences among clusters as well as opposing belief distributions across clusters, whether these disparities originate from differing multi-element sets or from direct conflicts in singleton sets.

4.1.3. Comparison of the Existing Conflict Measurements

The following examples directly compare the cluster–cluster divergence against several classical belief divergences: the BJS divergence [36], the B and RB divergences [29], and the classical Dempster conflict coefficient K [4]. Since the proposed divergence measures cluster–cluster differences, whereas most other divergences operate at the BBA–BBA level, it is natural to regard the case in which each cluster contains exactly one BBA as a degenerate form of BBA-level divergence.

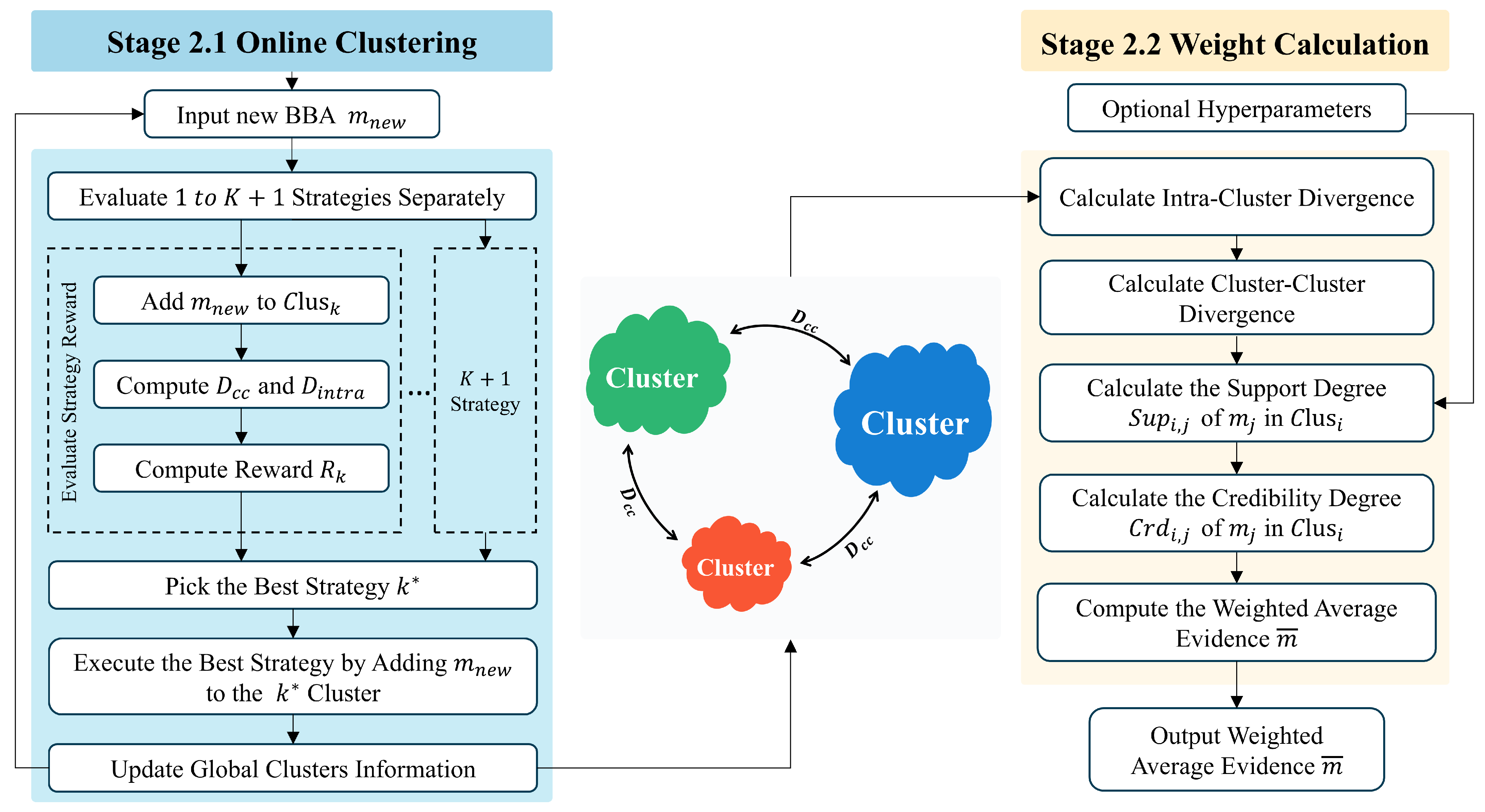

Example 6 (Symmetric Conflict). We construct a symmetric pair of conflicting BBAs on a frame of singleton hypotheses, parameterized by α, which varies continuously over a fixed interval. This formulation guarantees that the two BBAs show symmetric belief structures with opposing preferences.

Figure 3 compares the proposed divergence with classical belief divergences and the Dempster conflict coefficient K under this symmetric setting. Figure 3a shows that the proposed divergence responds sharply to minor changes in belief, whereas the BJS, B, and RB divergences remain close to zero. Figure 3b focuses on the high-conflict region, where the proposed divergence approaches its upper limit.
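The contrast between the Dempster conflict coefficient K and a divergence such as BJS can be illustrated with a simple symmetric pair. The exact BBAs of Example 6 are not reproduced here, so the Python sketch below assumes a hypothetical two-hypothesis pair in which m1 assigns α to t1 and 1 − α to t2 while m2 mirrors it; the proposed cluster–cluster divergence itself is not implemented. BJS uses base-2 logarithms, so it lies in [0, 1].

```python
import math


def bjs(m1, m2):
    """BJS divergence (log base 2) for mass functions keyed by focal set."""
    keys = set(m1) | set(m2)

    def kl(p, q):
        # Skip focal sets where p has no mass (0 * log 0 is taken as 0).
        return sum(p[k] * math.log2(p[k] / q[k]) for k in keys if p.get(k, 0.0) > 0)

    mid = {k: (m1.get(k, 0.0) + m2.get(k, 0.0)) / 2 for k in keys}
    return 0.5 * kl(m1, mid) + 0.5 * kl(m2, mid)


def dempster_conflict(m1, m2):
    """Classical conflict coefficient K: total product mass on disjoint focal-set pairs."""
    return sum(x * y for a, x in m1.items() for b, y in m2.items() if not (a & b))


def symmetric_pair(alpha):
    """Hypothetical symmetric pair: m1 favours t1 with weight alpha, m2 mirrors it."""
    t1, t2 = frozenset({"t1"}), frozenset({"t2"})
    return {t1: alpha, t2: 1 - alpha}, {t1: 1 - alpha, t2: alpha}


for alpha in (0.5, 0.6, 0.9, 1.0):
    m1, m2 = symmetric_pair(alpha)
    print(alpha, round(bjs(m1, m2), 4), round(dempster_conflict(m1, m2), 4))
```

Under this assumed pair, K equals 0.5 at α = 0.5 even though the two BBAs are identical and BJS is zero, which illustrates why K alone is an unreliable conflict measure; both quantities reach 1 at α = 1.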

Example 7 (Asymmetric Conflict). We construct an asymmetric conflict scenario between two BBAs defined over a common frame of discernment, with α varying over a fixed interval. In this formulation, the first BBA holds a constant, near-certain belief in one hypothesis, while the second gradually transitions from agreement to complete opposition as α increases.

Figure 4 illustrates the divergence behavior in this asymmetric setting. The proposed divergence shows sharp sensitivity across the entire range of α and rises quickly even at low values of α, whereas the BJS, B, and RB divergences remain comparatively low. As α approaches 1, the proposed divergence continues to increase smoothly, nearing its theoretical upper bound.

4.2. The Cluster Construction

To demonstrate the online clustering stage proposed in this framework, we provide a detailed numerical simulation based on Example 8.

Example 8 (Fractal BBA Example). This example consists of seven BBAs listed in Table 10. Three of the BBAs show a strong preference for a first hypothesis. In contrast, two BBAs strongly support a second hypothesis, and another shows an even more decisive preference for that second hypothesis, while the last BBA represents complete uncertainty over all of the hypotheses. Following the evidence assignment rule in Section 3.4, the cluster centroids are recursively updated. At each iteration, we evaluate the reward of each strategy, determine the optimal cluster assignment, and compute the updated centroid. The complete clustering process is as follows:

- Round 1:

Arrival of the first BBA: No clusters exist, so it initiates a new cluster.

- Round 2:

Arrival of the second BBA: There is a single cluster, so we decide whether to merge the new BBA into it by comparing their distance with the cold-start threshold of Remark 3.

- Round 3:

Arrival of the third BBA: Evaluate the gain from merging into an existing cluster versus creating a new cluster.

- Round 4:

Arrival of the fourth BBA: Three strategies are considered, and the reward of each is computed.

- Round 5:

Arrival of the fifth BBA: Rewards are computed across all clusters.

- Round 6:

Arrival of the sixth BBA: The BBA aligns with an existing cluster and is assigned to it.

- Round 7:

Arrival of the seventh BBA: This completely uncertain BBA obtains the highest reward by creating a new cluster.

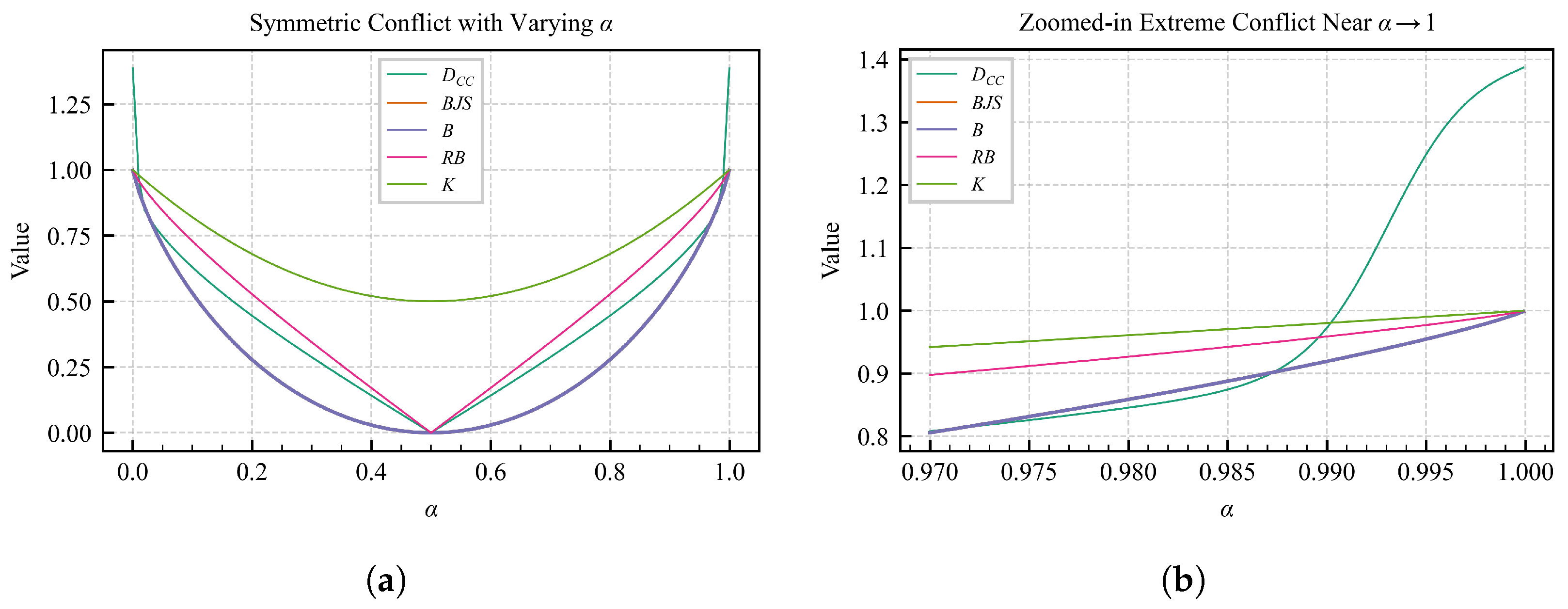

After all BBAs are assigned, the final cluster structure is as visualized in Figure 5. Cluster 1 strongly supports the first hypothesis, Cluster 2 supports the second hypothesis, and Cluster 3 represents an uncertain or neutral belief. This illustrates the effectiveness of the proposed rule in separating conflicting evidence while maintaining internal consistency.

4.3. Comparison with Classical Information Fusion Methods

To assess the effectiveness of the proposed framework, we conduct a comparative analysis against several classical information fusion methods, including the Dempster method [4,5], the Murphy method [23], the Deng method [24], the BJS-based method [36], and the RB-based method [29]. The evaluation uses the classical example presented in Example 1, and the objective is to evaluate whether each method yields the correct target recognition outcome.

The results of the information fusion from different methods are presented in Table 11. Each row shows the fused mass function on the focal elements after applying the corresponding information fusion method to the five BBAs. A direct comparison reveals that the classical Dempster’s rule yields highly counter-intuitive results, assigning the vast majority of belief to an incorrect hypothesis because of the high degree of conflict. In contrast, all weighted-average methods successfully identify the true target. Among these, our proposed method assigns the highest mass to the correct hypothesis of all compared methods, narrowly surpassing classical baselines such as Deng’s method and the RB-based method (Table 11). This result demonstrates that our method effectively concentrates belief mass on the correct hypothesis.

In addition to comparing the fused mass functions, we also examine the final weights assigned to each BBA, as shown in Table 12. The weight distribution reveals each method’s reliability assessment of the input evidence.

Compared with classical methods, the proposed method achieves a more decisive belief concentration on the correct target while effectively suppressing the impact of the conflicting BBA. It also produces a smooth and balanced allocation, assigning relatively high weights to the four reliable BBAs. This indicates greater sensitivity to outlier BBAs and a stronger ability to extract consensus from the input BBAs. Notably, unlike Murphy’s method, which distributes weights uniformly and fails to distinguish between reliable and conflicting evidence, our approach adaptively discounts outliers and accentuates trustworthy sources, resulting in more robust and interpretable information fusion outcomes.

5. Applications of Cluster-Level Information Fusion Framework in Pattern Classification Tasks

This section applies the proposed cluster-level information fusion framework to pattern classification tasks and compares its performance with that of several representative baselines. We begin by stating the pattern classification problem within D-S evidence theory. Next, we detail the practical methodology used to determine the optimal hyperparameter pairs. The experimental results are then presented, followed by an in-depth analysis of the framework’s hyperparameters and a comprehensive ablation experiment assessing the contribution of each component.

5.1. Problem Statement

Pattern classification is a fundamental application area for uncertainty reasoning. In D-S evidence theory, the overall methodology consists of the following three stages:

BBA Generation: Transforming classical datasets into BBAs is crucial in applying D-S evidence theory to pattern classification. Various representative methods have been proposed for this transformation, including statistical, geometric, and heuristic approaches [68,69,70]. Among them, the statistical methods generate BBAs as follows. Each attribute of the training samples is used to estimate Gaussian parameters for each class. When a new test sample arrives, a likelihood-based intersection degree between its observed attribute value and each class’s Gaussian model is calculated. The intersection degrees are then normalized and converted into BBAs over the frame of discernment.

Information Fusion: Multiple BBAs generated from the same sample are fused using various methods. In traditional methods, DRC [4,5] is employed in most cases. However, DRC can lead to counter-intuitive outcomes when conflicting evidence exists. Alternative methods have been proposed to address such issues, including Murphy’s simple averaging method [23] and Deng’s weighted-average method [24]. Additionally, Xiao proposed a BJS-based information fusion method [36] to better evaluate credibility. To enhance the ability of the BJS method to capture the non-specificity of evidence, Xiao further introduced the RB divergence [29], which can be seen as a generalization of the BJS divergence.

Probability Transformation: After the fused mass function is obtained, a probability transformation is applied to convert it into a probability distribution, so that the final decision can be made in a probabilistic classification manner. Classic PT methods include the PPT [67] (which distributes the mass of each focal set evenly among its singleton elements), entropy-based PT methods [71], and network-based PT methods [72].
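The statistical BBA-generation step described above can be sketched as follows. This Python sketch is a simplification under stated assumptions: one Gaussian per class and attribute is fitted to the training data, and the class likelihoods of a test value are normalized into a BBA over singleton classes only. The cited method of Xu et al. [69] may also assign mass to multi-class focal sets; that part is not reproduced here, and the function names are hypothetical.

```python
import math
import statistics


def fit_gaussians(samples, labels, attr):
    """Estimate (mean, std) of one attribute for each class from training data."""
    params = {}
    for c in sorted(set(labels)):
        values = [x[attr] for x, y in zip(samples, labels) if y == c]
        params[c] = (statistics.mean(values), statistics.stdev(values))
    return params


def gaussian_pdf(x, mean, std):
    """Gaussian density; assumes std > 0."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def attribute_bba(x_value, params):
    """Singleton-only BBA: class likelihoods of x_value normalized to sum to one."""
    lik = {c: gaussian_pdf(x_value, m, s) for c, (m, s) in params.items()}
    total = sum(lik.values())
    if total == 0.0:
        # Value far from every class model: fall back to total ignorance.
        return {frozenset(params): 1.0}
    return {frozenset({c}): v / total for c, v in lik.items()}
```

Applying `attribute_bba` to each attribute of a test sample yields one BBA per attribute, which is why datasets with more features, such as Wine, produce more BBAs per sample.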

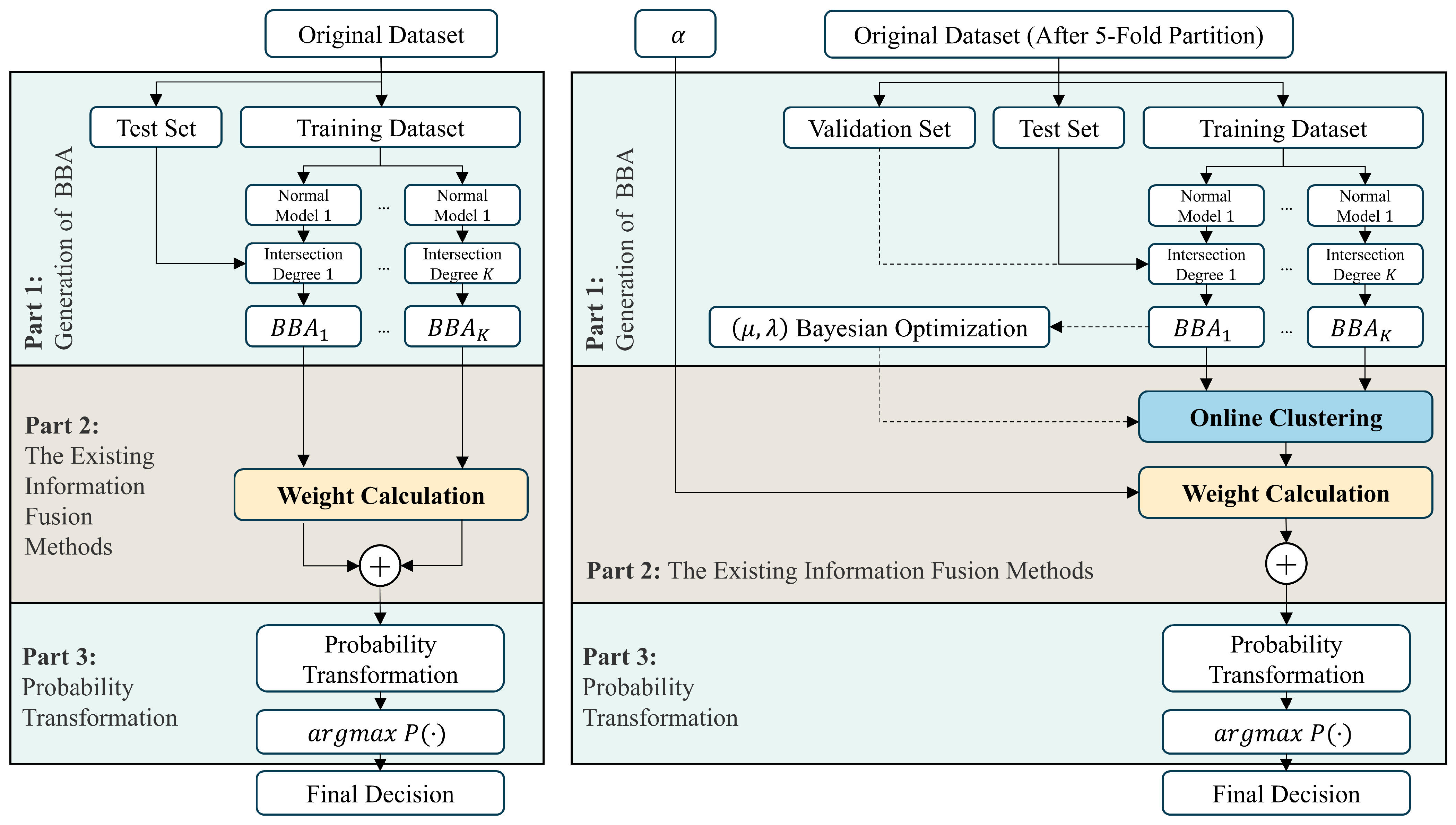

The pipeline of the classification experiment is depicted in Figure 6, which contrasts the conventional approach, in which all BBAs are fused in a single step, with our proposed cluster-level framework. Unlike the traditional method, our framework incorporates online clustering, data-driven selection of hyperparameter pairs, and expert bias coefficient adjustment prior to the final decision-making stage.

5.2. Experiment Description

We conducted pattern classification experiments on four widely used benchmark datasets from the UCI Machine Learning Repository: Iris [73], Wine [74], Seeds [75], and Glass [76].

The Iris dataset consists of 150 samples, each characterized by four continuous attributes. The dataset is evenly divided among three classes: Setosa, Versicolor, and Virginica. Due to its low dimensionality and clear class separability, it serves as a standard benchmark for pattern classification tasks.

The Wine dataset consists of 178 instances, each characterized by 13 chemical features, including alcohol, malic acid, and proanthocyanins. The samples are categorized into three different types of wine. Compared with the Iris dataset, the Wine dataset has more features (and thus more BBAs per sample) and shows a slight imbalance in class distribution.

The Seeds dataset consists of 210 samples representing three varieties of wheat seeds. Each sample has seven morphological features. Compared with the Iris dataset, its class boundaries are much less distinct, making it a moderately challenging classification task.

The Glass dataset contains 214 samples, each characterized by 9 chemical components and organized into 6 different glass types. These include three types of window glass (building windows float-processed (BF), building windows non-float-processed (BN), and vehicle windows float-processed (VF)) as well as containers, tableware, and headlamps. This dataset poses significant challenges due to its high class cardinality, imbalanced sample distribution, and many conflicting or indistinguishable features.

For each dataset, we follow the same experimental pipeline. BBAs are generated using the Gaussian distribution-based method described by Xu et al. [69], where each class is modeled by a Gaussian estimated from training data, and sample likelihoods are normalized across classes to generate valid BBAs. All experiments use nested 5-fold cross-validation. The raw dataset is first stratified into five equally sized folds. In the i-th outer iteration, one fold is held out as an independent test set, while the remaining four folds form the training set for that test fold. Within each outer iteration, a complete inner 4-fold cross-validation is performed on these four folds to generate the validation set belonging to that test fold: an inner Gaussian model is trained on three folds and used to generate BBAs for the remaining fold. This process is repeated for all four inner folds, and the resulting folds are concatenated into a single, comprehensive validation set for that test fold.
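The nested cross-validation scheme described above can be sketched as index bookkeeping. In this hypothetical Python helper, the five outer folds are stratified by class in a round-robin fashion; for each test fold, the four remaining folds serve in turn as inner validation folds, each paired with the three folds on which an inner Gaussian model would be trained. Model fitting and BBA generation are omitted, and the round-robin stratification is an assumption rather than necessarily the authors' splitting procedure.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k, seed=0):
    """Split sample indices into k folds with roughly equal class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(k)]
    for idx in by_class.values():
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)  # deal each class's samples round-robin
    return folds


def nested_splits(labels, outer_k=5, seed=0):
    """Yield (test_idx, inner_parts), where inner_parts lists (train_idx, val_idx)
    pairs built only from the folds that are not the current test fold."""
    folds = stratified_folds(labels, outer_k, seed)
    for t, test_idx in enumerate(folds):
        remaining = [f for i, f in enumerate(folds) if i != t]
        parts = []
        for v, val_idx in enumerate(remaining):
            train_idx = [i for j, f in enumerate(remaining) if j != v for i in f]
            parts.append((train_idx, val_idx))
        yield test_idx, parts
```

Concatenating the four inner validation folds of one test fold gives exactly the four non-test folds, so every validation BBA is generated by a model that never saw that sample, and the test fold never enters hyperparameter selection.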

For each test fold, we used Bayesian optimization (BO) to select the hyperparameter pair that maximizes classification accuracy on the corresponding validation set, with the expert bias coefficient fixed at 1. This process produces a distinct pair for each fold. Our method, applied with these optimal hyperparameter pairs, is then assessed on the corresponding test fold. The BO procedure is detailed in Section 5.3. The final performance is reported as the average across all five folds. Because the entire selection procedure is data-driven, the resulting set of optimal hyperparameters may reflect structural properties of the dataset, which are further discussed in Section 5.5.

After BBA generation, the information fusion method is applied, and the fusion results are converted into probabilities using the PPT method. We compare the proposed method against seven representative baselines grouped into two categories. The first includes modern machine learning-based hybrid methods: evidential deep learning [12] and DS-SVM [77,78]. The second consists of five representative baselines in D-S evidence theory: Dempster’s combination rule [4,5], Murphy’s simple averaging method [23], Deng’s distance-weighted fusion [24], Xiao’s BJS-based approach [36], and Xiao’s RB-based approach [29].

5.3. Determination of Hyperparameter Pairs

Selecting appropriate hyperparameter pairs is a key challenge in this work. A well-chosen pair can significantly boost the performance of the proposed framework, as demonstrated in Section 5.6. However, the relationship between these hyperparameters and the framework’s performance is highly complex and non-linear. Brute-force methods such as grid search become computationally expensive in this scenario, as they require evaluating a vast number of value combinations and may still miss the true optimum. We therefore employ a Bayesian optimization strategy to approximate the optimal hyperparameter pair with a tractable number of evaluations.

5.3.1. Bayesian Optimization Principle and Procedure

In our approach, we treat the selection of the hyperparameter pair as a black-box optimization problem. The objective function takes a candidate pair as input and returns the classification accuracy on the validation fold as output. Notably, each evaluation of the objective function can be computationally expensive, as its cost can grow exponentially with the size of the frame of discernment, in line with the analysis in Section 3.5.3. This high cost motivates the use of BO.

BO constructs a surrogate model of the objective function. In this work, we use a Tree-structured Parzen Estimator (TPE), a sequential model-based optimization method that, rather than regressing accuracy directly, models the densities of hyperparameters that produced good and poor accuracy in past trials. This surrogate captures the non-linear, possibly multi-modal relationship between hyperparameter pairs and accuracy, and it allows the optimizer to infer promising regions of the search space from limited data. We use an acquisition function based on the expected improvement criterion to guide the search. At each iteration, the expected improvement criterion selects the next pair to evaluate by quantifying the expected gain in accuracy over the current best; under TPE, maximizing it amounts to favoring pairs that are likely under the good-trial density and unlikely under the poor-trial density. This balances exploration of uncertain regions against exploitation of known high-performance regions, thereby accelerating convergence to an optimum.

In practice, we implemented the above procedure using the Optuna optimization framework with its TPE sampler. Because TPE suggests promising hyperparameters directly from its density models of good and bad solutions, it handles the expansive search space efficiently. We define log-uniform search ranges for both components of the hyperparameter pair, each spanning several orders of magnitude up to 1000. A log scale was chosen because the optimal values could span several orders of magnitude, and sampling in log-space allows both small and large candidate values to be explored. The expert bias coefficient is held fixed at 1 during this search, so only the two components of the hyperparameter pair are optimized. For each outer test fold of the nested cross-validation, we perform 50 BO iterations on the corresponding validation set. In each trial, the optimizer proposes a new pair based on past evaluations, the full pipeline is executed with those values, and the resulting accuracy is returned to the optimizer to guide the next trial. Over the 50 trials, the search progressively focuses on better regions of the space. Finally, the pair yielding the highest accuracy is selected as the optimal setting for that test fold. This entire process is repeated independently for each test fold in the nested cross-validation.

5.3.2. Representative Hyperparameter Pairs and Sensitivity Analysis

The representative optimal hyperparameter pairs identified for each dataset are summarized in Table 13. These pairs vary widely across the four classification tasks, indicating that each dataset demands a different balance of the two hyperparameters. It should be noted that these “representative” pairs are used as reference points rather than as unique true optima. For each dataset, the representative hyperparameters and the underlying information they reveal are analyzed in detail in Section 5.5.

To understand the stability of BO and the sensitivity of each dataset to the hyperparameter pair, we examine the distribution of the selected hyperparameters over 100 independent optimization trials. Figure 7 depicts this variability by plotting the log-scale values of the two optimal hyperparameters obtained across these trials for the Iris, Wine, and Seeds datasets.

For the Iris dataset, the optimal hyperparameter pairs are relatively dispersed. Bayesian optimization returned a variety of combinations yielding near-maximal accuracy rather than a single sharply defined optimum. In the log-scale trace of Figure 7a, both hyperparameters (orange and blue) fluctuate over roughly two orders of magnitude across the 100 runs. Notably, one particular pair recurred as the top choice in many runs, yet other runs found good results at much larger values of either hyperparameter. This broad plateau in the accuracy landscape indicates that the Iris classification task is relatively robust to hyperparameter selection: as long as the pair falls within a certain tolerance range, classification performance remains near-optimal. Occasional outlier runs did pick extreme values, which appear as isolated spikes in Figure 7a, but these likely reflect fold-specific anomalies or local maxima in the validation objective. Overall, the Iris results suggest that our method’s performance does not deteriorate sharply under small-to-moderate changes in the hyperparameter pair, a fortunate property that simplifies tuning for this dataset.

In contrast, the Wine dataset demonstrates a much sharper and more sensitive hyperparameter profile. The selected pairs are very similar across runs: in many of the 100 trials, the optimizer converged to nearly the same values. This suggests that the validation accuracy has a pronounced peak in that region and that the Bayesian optimizer consistently finds it. The globally optimal pair identified for Wine lies in a very small corner of the search space, and even slight deviations from it can cause a noticeable drop in accuracy. Such a narrow optimum means that the Wine task is highly sensitive to the exact hyperparameter setting and requires fine-grained tuning for the best results. The log-scale trace in Figure 7b highlights this behavior: in the majority of runs, both hyperparameters hover in a tight band with very little variation. We do observe a handful of outlier runs in which the optimizer selected radically different values, in one case a pair lying orders of magnitude away from the usual optimum. These rare spikes likely indicate that the optimizer was temporarily attracted to a local optimum or experienced noisy evaluations in that particular run. Nevertheless, such cases were isolated. The consistency of the chosen hyperparameters in most runs underscores that, for Wine, the high-accuracy region of the hyperparameter space is narrow: leaving it by even a small amount significantly degrades performance.

The Seeds dataset exhibits an intermediate pattern of behavior. Like Wine, Seeds favors very small hyperparameter values, with both components of the representative optimum of a similarly small order of magnitude. However, the accuracy surface for Seeds is somewhat flatter around its peak, implying moderate robustness to parameter variation. This can be seen in Figure 7c as a wider scatter in the two traces: while many runs concentrate near the small values, in several runs one of the parameters is noticeably larger. For instance, in a few trials, one hyperparameter spiked one to two orders of magnitude higher while the other remained very small; conversely, in one case, the second took a moderately higher value while the first stayed minimal. The fact that such divergent pairs could still emerge as optima in different runs suggests that the Seeds performance landscape has a broader near-optimal basin, in which a range of combinations yields almost equally good results. We do note a few outliers, which likely correspond to local optima or idiosyncrasies in that run’s data split. Overall, the Seeds results indicate a balance between sensitivity and tolerance: the optimal region is small, but not so razor-sharp that minor departures are immediately punished.

5.3.3. Complexity Analysis

From a computational standpoint, adopting BO gives the hyperparameter tuning process a favorable complexity profile. A traditional grid search over two hyperparameters, each with G candidate values, would require $O(G^2)$ model evaluations. In our case, BO is run for a fixed number of trials T (e.g., $T = 50$ in our experiments), which reduces the number of model evaluations to $O(T)$. In other words, instead of testing thousands of combinations, the optimizer locates a high-performing pair in just 50 guided iterations. This efficiency is critical to keeping the overall training procedure tractable. Importantly, the memory requirements of the hyperparameter search remain essentially unchanged compared with brute-force search. Thus, we determine the pair more efficiently without compromising the integrity of the search.
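The difference in evaluation budgets can be illustrated with a toy sketch. The grid size G = 40, the log-scale search range, and the quadratic toy objective are all hypothetical, and random log-uniform sampling stands in for the surrogate-guided BO loop. The sketch therefore shows only the $O(G^2)$ versus $O(T)$ evaluation count, not BO's sample efficiency.

```python
import math
import random

def grid_search(objective, grid):
    """Exhaustive search: evaluates every (a, b) pair, i.e. len(grid)**2 calls."""
    best, n_evals = None, 0
    for a in grid:
        for b in grid:
            score = objective(a, b)
            n_evals += 1
            if best is None or score > best[0]:
                best = (score, a, b)
    return best, n_evals

def budgeted_search(objective, low, high, trials, seed=0):
    """Fixed-budget search with exactly `trials` evaluations (log-uniform sampling).

    Stand-in for the BO loop: same O(T) evaluation count, but no surrogate model.
    """
    rng = random.Random(seed)
    lo, hi = math.log10(low), math.log10(high)
    best, n_evals = None, 0
    for _ in range(trials):
        a = 10 ** rng.uniform(lo, hi)
        b = 10 ** rng.uniform(lo, hi)
        score = objective(a, b)
        n_evals += 1
        if best is None or score > best[0]:
            best = (score, a, b)
    return best, n_evals

def toy_objective(a, b):
    # Hypothetical accuracy surface with a single peak at (1e-3, 1e-3) in log space.
    return -((math.log10(a) + 3) ** 2 + (math.log10(b) + 3) ** 2)

G = 40
grid = [10 ** (-6 + 8 * i / (G - 1)) for i in range(G)]  # 1e-6 ... 1e2, log-spaced
_, grid_evals = grid_search(toy_objective, grid)
_, bo_evals = budgeted_search(toy_objective, 1e-6, 1e2, trials=50)
print(grid_evals, bo_evals)  # 1600 50
```

The grid cost grows quadratically with G, whereas the budgeted search costs exactly T evaluations regardless of how finely the range could be discretized.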

5.4. Experimental Results

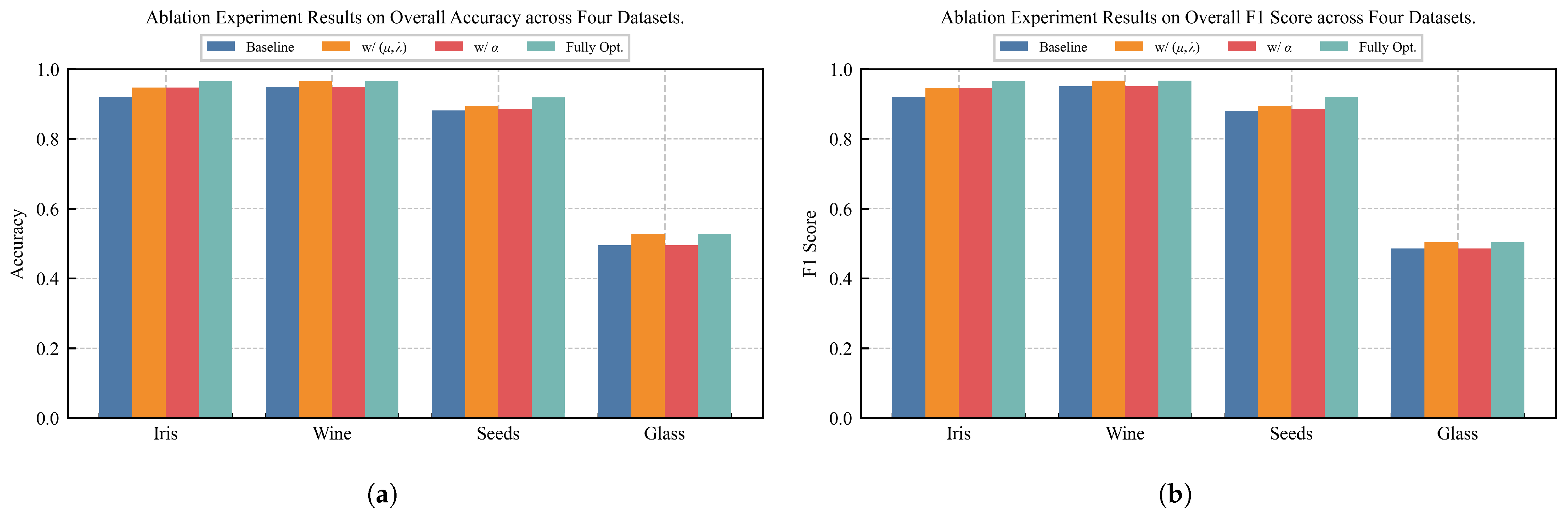

The classification accuracy for each class, along with the overall accuracy and macro F1 score, for the proposed method and seven baseline methods is presented in Table 14. Notably, the results for our method were obtained using optimal hyperparameter pairs established independently for each outer fold of the cross-validation. Our method's results are also based on an optimized expert bias coefficient, whose value is 1/8, 1, 1/5, and 1 on the Iris, Wine, Seeds, and Glass datasets, respectively.
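Because overall accuracy and macro F1 can diverge on imbalanced data, the following minimal sketch computes both in plain Python. The toy labels are hypothetical, and the functions are illustrative rather than the evaluation code used in the experiments.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores, so every class counts equally."""
    labels = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)

# Hypothetical imbalanced example: four samples of class 0, two of class 1.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1]
print(accuracy(y_true, y_pred))  # 5/6, about 0.833
print(macro_f1(y_true, y_pred))  # (8/9 + 2/3) / 2 = 7/9, about 0.778
```

Misclassifying a single minority-class sample costs only one sixth of the accuracy but pulls the minority-class F1 down to 2/3, which is why macro F1 is reported alongside accuracy.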

In comparison with classical D-S evidence theory methods, the results suggest that the proposed framework offers a competitive performance advantage over the other D-S evidence theory baselines across the four classification tasks. For instance, on the Iris dataset, our method achieves an overall accuracy of 96.67%, compared with 94.67% for the next best-performing D-S evidence theory method. This advantage is also notable on the highly complex Glass dataset. On this task, where some classical methods perform poorly (Dempster's rule, for example, reaches an accuracy of only 35.51%), our method achieves the highest accuracy among the D-S evidence theory methods, at 52.80%. This result indicates greater robustness in managing severe class imbalance and feature conflict.

When compared with modern machine learning-based hybrid methods, it is evident that these methods set a high performance benchmark. As shown in Table 14, the DS-SVM model in particular performs very well, achieving the highest overall accuracy on the Wine dataset and a substantially better result on the complex Glass dataset.

Beyond overall accuracy, it is worthwhile to assess performance with metrics that remain informative under class imbalance, which is significant in tasks such as the Glass dataset [79]. Table 15 therefore reports precision, recall, the Matthews Correlation Coefficient (MCC), and the Area Under the Curve (AUC). In this context, the performance of our proposed framework is noteworthy: it achieves the highest precision, recall, and MCC among the D-S evidence theory methods. The MCC is particularly informative for imbalanced data because it summarizes all cells of the confusion matrix in a single, balanced measure of classification quality. A higher MCC therefore suggests that our framework yields a more balanced classification outcome than the other D-S baselines. This in turn suggests that structuring evidence into clusters before information fusion is a promising strategy for mitigating the distortion caused by class imbalance and for supporting a more robust decision-making process.
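To make the MCC's behavior under imbalance concrete, the sketch below implements the standard multi-class generalization (the form documented, for example, for scikit-learn's `matthews_corrcoef`) in plain Python. The toy labels are hypothetical and not drawn from the experiments.

```python
import math

def multiclass_mcc(y_true, y_pred):
    """Multi-class MCC from label counts.

    s: number of samples, c: number of correct predictions,
    t_k: times class k truly occurs, p_k: times class k is predicted.
    """
    labels = sorted(set(y_true) | set(y_pred))
    s = len(y_true)
    c = sum(t == p for t, p in zip(y_true, y_pred))
    t_k = [sum(t == k for t in y_true) for k in labels]
    p_k = [sum(p == k for p in y_pred) for k in labels]
    numerator = c * s - sum(pk * tk for pk, tk in zip(p_k, t_k))
    denominator = math.sqrt(
        (s * s - sum(pk * pk for pk in p_k)) * (s * s - sum(tk * tk for tk in t_k))
    )
    # By convention the MCC is 0 when the denominator vanishes (e.g. one predicted class).
    return numerator / denominator if denominator else 0.0

# A majority-class predictor on imbalanced data: 80% accuracy, but MCC = 0.
imbalanced_true = [0] * 8 + [1] * 2
always_majority = [0] * 10
print(multiclass_mcc(imbalanced_true, always_majority))  # 0.0
```

The trivial predictor scores 80% accuracy yet receives an MCC of zero, which illustrates why a higher MCC is taken as evidence of a more balanced classification outcome.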

Regarding the adaptability of the proposed framework, the experimental results provide several insights into its performance under different conditions. The framework appears to adapt well to the range of feature-space dimensionalities tested in this work: it achieved the highest overall accuracy on the low-dimensional Iris dataset and maintained competitive accuracy on the higher-dimensional Wine dataset. Because all of the experiments were conducted on datasets with small sample sizes, the results support the method's applicability under such conditions. Furthermore, the framework was tested on datasets with varying degrees of class separability, from the relatively clear boundaries in Iris to the highly overlapping classes in Seeds and Glass. Its effectiveness in these more complex scenarios suggests that the non-linear process inherent in evidence clustering may contribute to its ability to handle data that are not easily separable.

5.5. Analysis of Hyperparameters

The proposed method introduces three hyperparameters: a pair of sensitivity exponents and the expert bias coefficient. The hyperparameter pair is applied in Equations (35) and (40), where it balances the importance of intra-cluster similarity against inter-cluster differences during decision-making. The expert bias coefficient is used in Equation (40). Domain experts can calibrate its value based on their knowledge and experience after analyzing the results of online clustering, thus balancing consensus with minority opinion. The hyperparameter pair is analyzed further in Section 5.5.1, while Section 5.5.2 discusses the expert bias coefficient.

5.5.1. Analysis of Hyperparameter Pair

In the algorithm's reward and support degree calculations, the average inter-cluster divergence is raised to a power, the inter-cluster exponent, which acts as a sensitivity coefficient that modulates the non-linear importance of this divergence in the weight calculation:

When the inter-cluster exponent is greater than 1, larger values attenuate inter-cluster differences. Because the divergence term being exponentiated is generally less than 1, raising it to a power greater than 1 makes it smaller, which reduces the penalty on the corresponding weights.

When the inter-cluster exponent is less than 1, smaller values amplify differences between clusters, leading the algorithm to focus on the information differences between clusters during cluster selection.

Meanwhile, the intra-cluster exponent serves as a sensitivity coefficient for intra-cluster divergence, controlling how strongly intra-cluster divergence affects the reward and the support weight:

When the intra-cluster exponent is greater than 1, intra-cluster differences are further suppressed, similar to the effect of increasing the inter-cluster exponent.

When the intra-cluster exponent is less than 1, smaller values amplify the effect of intra-cluster conflict: even minor differences within a cluster become relatively larger when raised to a power less than 1.
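The direction of both effects follows from a single property: for a divergence $d \in (0, 1)$, the term $d^{e}$ decreases as the exponent $e$ grows and approaches 1 as $e$ shrinks toward 0. The sketch below checks this numerically. It assumes divergences normalized to $(0, 1)$, and the divergence and exponent values are hypothetical; it does not reproduce Equations (35) or (40).

```python
def powered(d, exponent):
    """Raise a normalized divergence d in (0, 1) to a sensitivity exponent."""
    if not 0.0 < d < 1.0:
        raise ValueError("this sketch assumes a divergence strictly between 0 and 1")
    return d ** exponent

divergence = 0.3  # hypothetical average divergence
exponents = [0.01, 0.5, 1.0, 2.0, 10.0]
for e in exponents:
    # Exponents > 1 shrink the term (attenuation); exponents < 1 inflate it (amplification).
    print(f"exponent={e:>5}: divergence term = {powered(divergence, e):.4f}")

# Even a minor divergence becomes large under a very small exponent.
print(f"minor divergence 0.05 with exponent 0.01 -> {powered(0.05, 0.01):.4f}")
```

This matches the qualitative description above: a very small exponent turns even minor divergences into near-maximal penalties, while a large exponent drives them toward zero.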

Our experimental pipeline, illustrated in the right part of Figure 6, uses a nested five-fold cross-validation procedure, which yields a distinct optimal hyperparameter pair for each of the five outer folds. For analytical clarity, we use a representative pair for each classification task, as summarized in Table 13. An analysis of these pairs reveals that the optimal hyperparameter strategy is highly dataset-dependent, with different tasks favoring different balances, as detailed below:
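The nested procedure can be sketched as follows. Here `tune` and `evaluate` are hypothetical callbacks standing in for the BO search over the hyperparameter pair and for the evidence-fusion classifier, and plain shuffled (unstratified) folds are an assumption. The essential point is that each outer test fold is never seen during tuning, so each outer fold yields its own optimal pair.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffled k-fold split: returns a list of (train_indices, test_indices) pairs."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        splits.append((train, folds[i]))
    return splits

def nested_cv(n_samples, tune, evaluate, k_outer=5, k_inner=5):
    """Outer loop estimates performance; inner loop selects a pair per outer fold."""
    results = []
    for train_idx, test_idx in k_fold_indices(n_samples, k_outer):
        inner = k_fold_indices(len(train_idx), k_inner, seed=1)
        # Map inner positions back to sample indices of the outer training set.
        inner_splits = [([train_idx[j] for j in tr], [train_idx[j] for j in va])
                        for tr, va in inner]
        best_pair = tune(inner_splits)  # e.g. a BO run over the hyperparameter pair
        results.append((best_pair, evaluate(best_pair, train_idx, test_idx)))
    return results

def dummy_tune(inner_splits):
    # Hypothetical placeholder: a real run would score candidate pairs on each split.
    return (1e-3, 1e-3)

def dummy_evaluate(pair, train_idx, test_idx):
    # Hypothetical placeholder: returns the outer test-fold size instead of accuracy.
    return len(test_idx)

results = nested_cv(150, dummy_tune, dummy_evaluate)
print(len(results))  # 5 outer folds, one selected pair each
```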

For the Iris dataset, the strategy favors strict internal consistency. In the Iris dataset, each sample comprises four BBAs. Under the optimal pair, clustering reveals that two-cluster patterns dominate (74.67%), while single-cluster and multi-cluster patterns are rare. In the most frequent clusterings, three of the four BBAs are grouped together and the remaining one forms a separate cluster. The mean credibility weights also differ across the four BBAs, with one BBA receiving the highest weight, two others intermediate weights, and the fourth the lowest. This structure matches the dataset's properties, with the Setosa class well separated and the other two classes partially overlapping. The selected inter-cluster exponent moderately amplifies between-cluster separation, while the extremely small intra-cluster exponent enforces strict within-cluster consistency.

For the Wine and Seeds datasets, an aggressive differentiation strategy yields optimal results. A common characteristic of these datasets within our framework is their tendency to form highly fragmented clusters, with many BBAs isolated into singleton clusters. Although the Wine dataset has higher feature dimensionality and the Seeds dataset has a more balanced class distribution, both benefit from an identical parameterization strategy, as shown in Table 13. The optimal pair serves to amplify differences maximally. An extremely small inter-cluster exponent strengthens the separation signal between clusters, while a minimal intra-cluster exponent imposes a high penalty on internal inconsistency. This strategy is designed to distill the most representative “core” evidence from fragmented or overlapping data.

The Glass dataset presents a unique challenge due to its structural complexity and class imbalance. Its six classes overlap heavily in feature space, and the class sizes are extremely imbalanced. The clustering results of the proposed method indicate that, for 97% of samples, the BBAs are divided into two clusters of sizes 1 and 8. The optimal parameter combination is unusual: a very large inter-cluster exponent effectively suppresses the influence of inter-cluster differences. This avoids over-penalizing unavoidable cluster conflicts, which would otherwise risk discarding evidence for the true class, a safeguard that is essential given the fuzzy class boundaries. Meanwhile, a moderate intra-cluster exponent still amplifies intra-cluster variation, but far less aggressively than the minimal values favored by Wine and Seeds, so some internal diversity is tolerated without harsh penalties. Together, this pair reduces the weight of differences between clusters while still encouraging consistency within clusters, which is crucial for data with considerable within-class variation and structural uncertainty.

5.5.2. Analysis of Expert Bias Coefficient

In the proposed method, the expert bias coefficient is introduced in Equation (40). This coefficient balances the influence of consensus (large clusters) against minority opinions (small clusters):

A coefficient greater than 1 amplifies the effect of cluster size, causing credibility to grow super-linearly with cluster size. This strategy reinforces the consensus of the majority.

A coefficient equal to 1 establishes a linear relationship in which credibility is directly proportional to cluster size. This is regarded as the default, neutral option.

A coefficient less than 1 diminishes the influence of cluster size, thereby giving relatively more weight to smaller clusters. This approach can highlight rare but valuable patterns.
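The three regimes can be illustrated with a minimal sketch. It assumes, hypothetically, that cluster credibility is proportional to cluster size raised to the expert bias coefficient and then normalized; this form is consistent with the linear case described above but is not a reproduction of Equation (40). The cluster sizes 8 and 1 mirror the dominant Glass clustering pattern, and `gamma` is an illustrative name for the coefficient.

```python
def cluster_credibility(sizes, gamma):
    """Hypothetical power-law weighting: credibility proportional to size**gamma, normalized."""
    raw = [s ** gamma for s in sizes]
    total = sum(raw)
    return [r / total for r in raw]

sizes = [8, 1]  # one large consensus cluster and one singleton cluster
for gamma in (1 / 8, 1 / 5, 1, 2):
    weights = cluster_credibility(sizes, gamma)
    # Smaller gamma gives the singleton cluster a larger share of the credibility.
    print(f"gamma={gamma:.3f}: large={weights[0]:.3f}, small={weights[1]:.3f}")
```

Under this assumed form, a coefficient of 1 gives the singleton exactly 1/9 of the credibility, values below 1 move the weights toward parity, and values above 1 push nearly all credibility onto the consensus cluster.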

A common assumption is that larger clusters are generally more reliable; under this assumption, increasing the expert bias coefficient should consistently improve performance. However, our experiments revealed a surprising finding: a larger coefficient does not consistently enhance accuracy. To explore this further, we fixed the optimal hyperparameter pair and conducted classification experiments with various values of the expert bias coefficient, as shown in Table 16. The results indicate that the optimal coefficient is also highly dependent on the intrinsic characteristics of each dataset.

For datasets with well-defined and balanced class structures, such as Iris and Seeds, a smaller coefficient that trusts minority clusters proves optimal: the best performance is achieved with an expert bias coefficient of 1/8 for the Iris dataset and 1/5 for the Seeds dataset. Both datasets feature a balanced class composition, few classes, and relatively clear feature separability. In such cases, small clusters often carry high-purity class information rather than noise. A low coefficient assigns more equitable weights across clusters of all sizes, preventing this valuable information from being diluted by the consensus of larger clusters.

In contrast, for datasets characterized by high complexity, a large number of BBAs, pronounced class overlap, or severe imbalance, such as Wine and Glass, a neutral coefficient is the most effective strategy; the best performance for both datasets is achieved when the coefficient equals 1. These datasets exhibit significant class overlap or severe imbalance, leading to clustering results that mix large, dominant clusters with small clusters representing minority classes. A neutral value strikes a critical balance: it avoids over-amplifying the influence of potential outliers (which would occur with a low coefficient) while also preventing the dismissal of information from rare classes (which would occur with a high coefficient). The linear weighting of this neutral strategy is thus the best fit for their complex data structures.

Based on the empirical results presented above, the optimal expert bias coefficient should be selected according to the structural characteristics of the clusters revealed during the final clustering phase rather than by a fixed rule. The following principles can serve as a guide for practical applications: