Bayesian Optimization for the Synthesis of Generalized State-Feedback Controllers in Underactuated Systems

Abstract

1. Introduction

2. Literature Review

2.1. Underactuated Systems

2.2. Bayesian Optimization

3. Theoretical Framework

3.1. Underactuated Systems

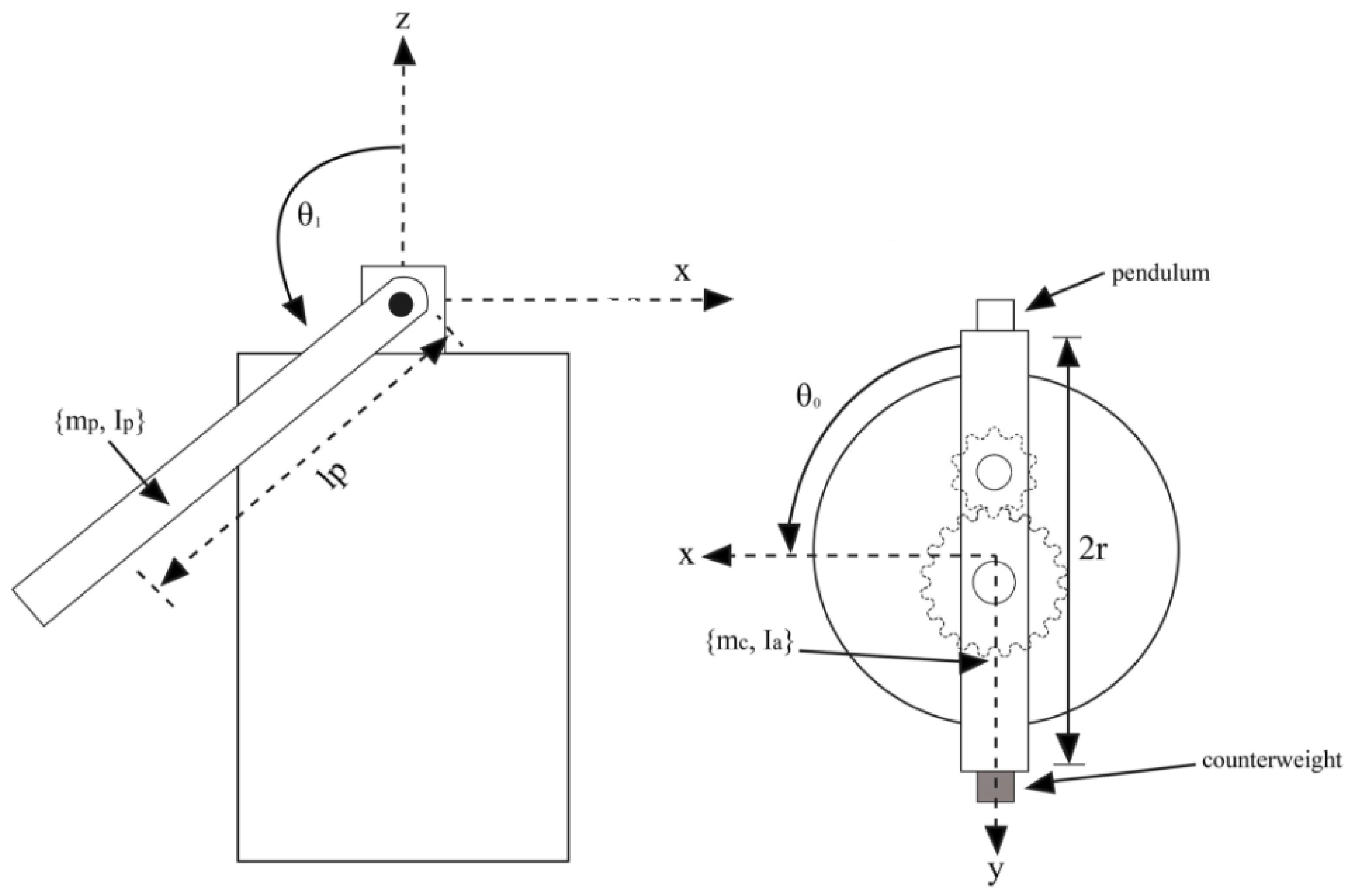

3.2. Rotary Inverted Pendulum

3.3. Model Formulation for the Rotary Inverted Pendulum

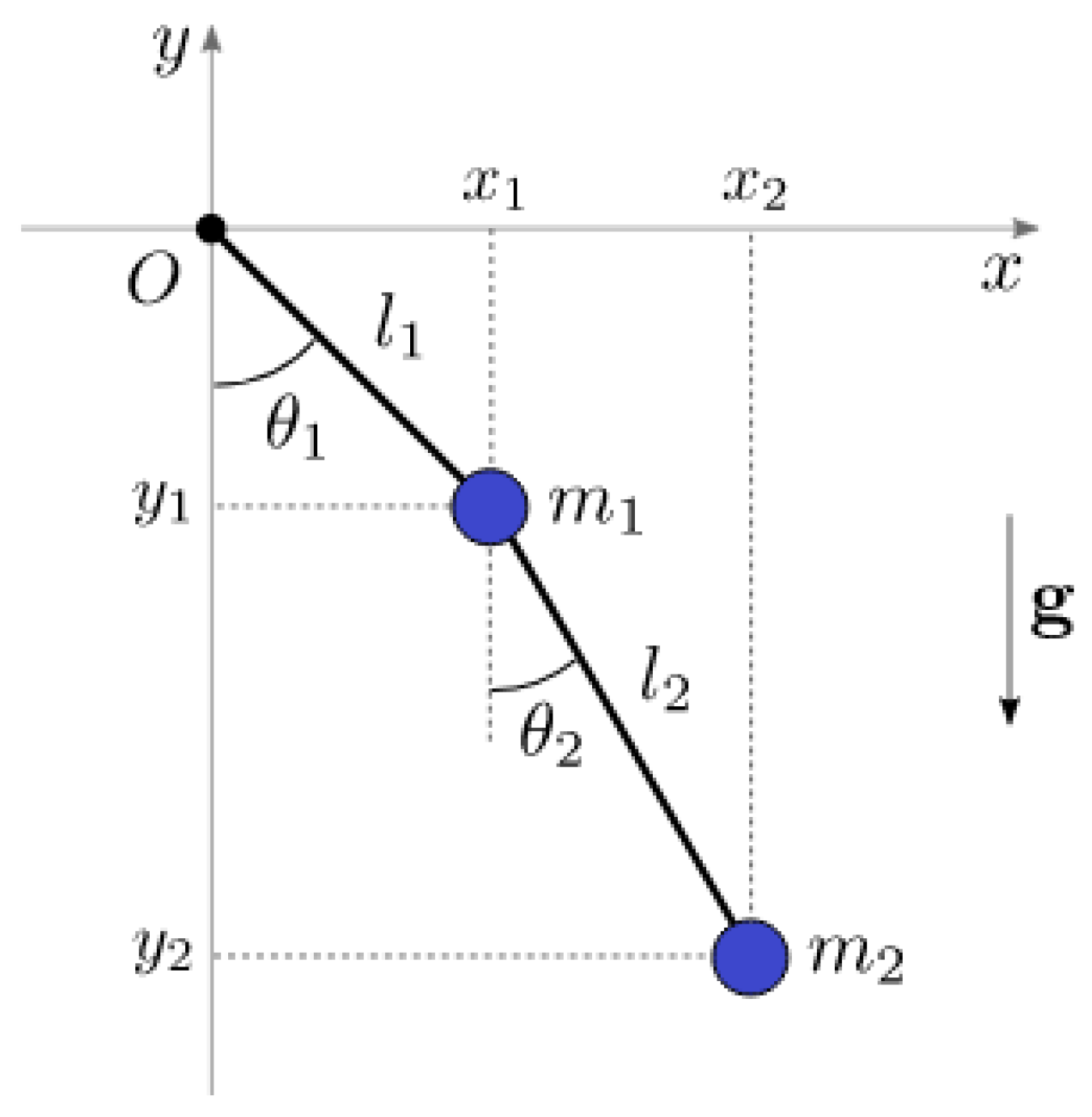

3.4. Model Formulation for Double Inverted Pendulum

4. Controller Synthesis

4.1. Linear Quadratic Regulator

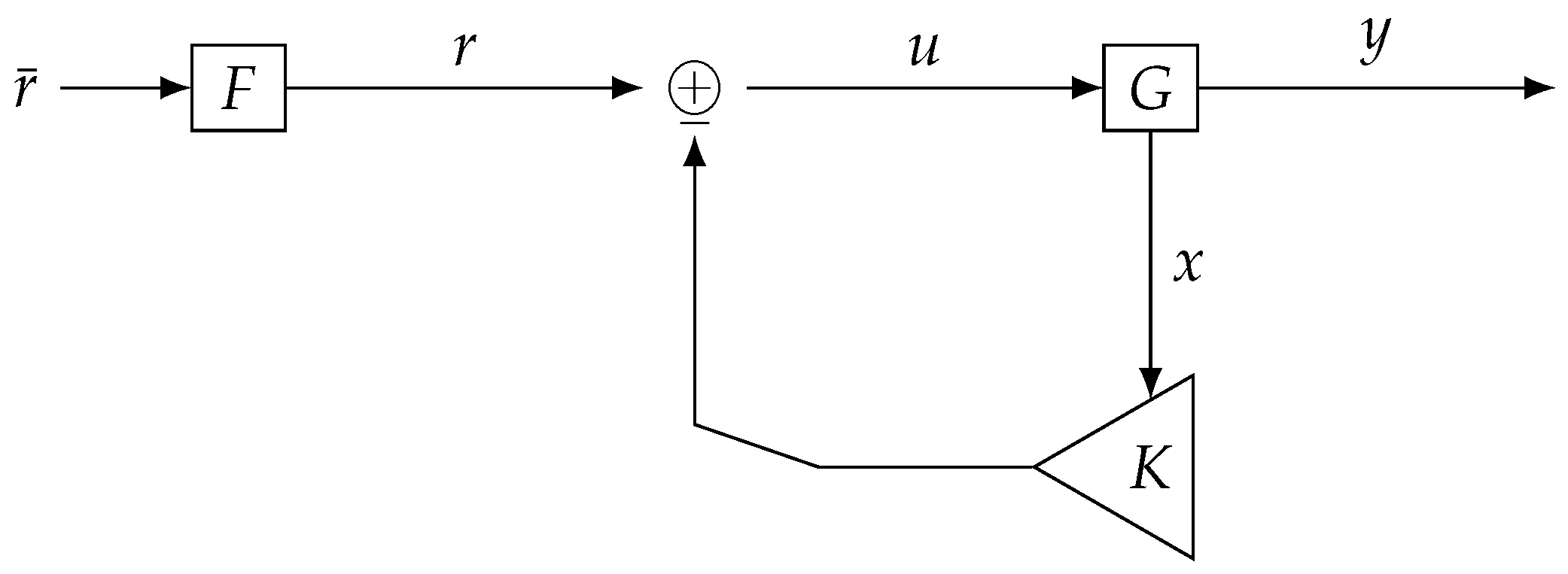

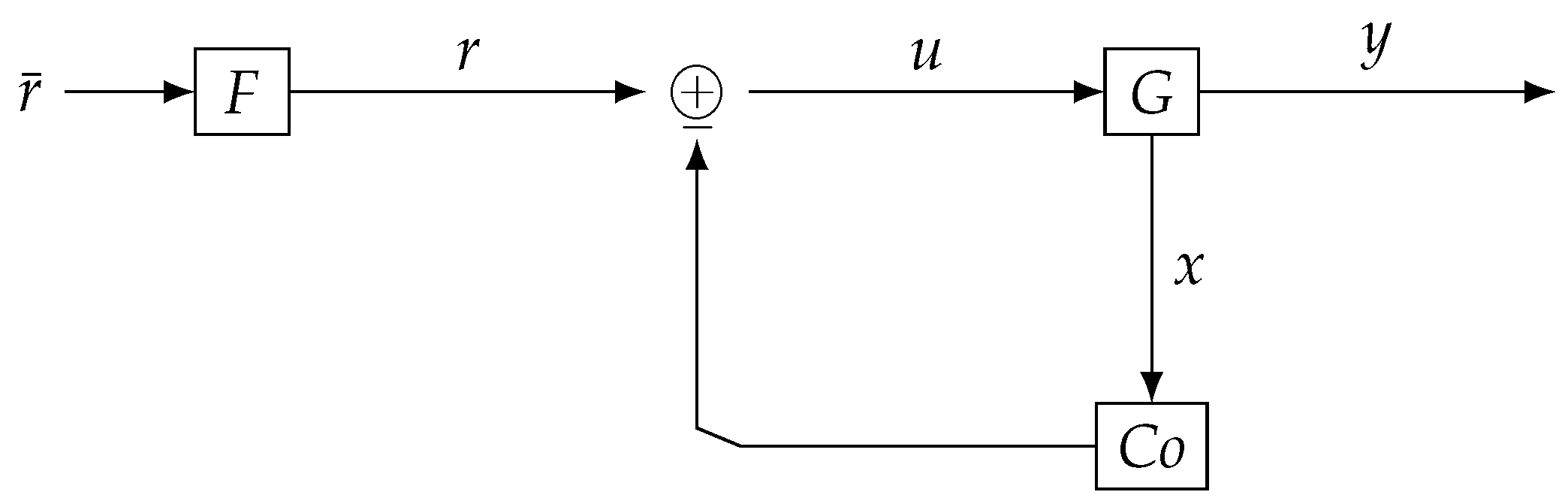
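The symbol table identifies K as the static feedback gain, P as the solution to the Algebraic Riccati Equation (ARE), and a pair of LQR weighting matrices. Below is a minimal sketch of how these fit together. It assumes the standard continuous-time, infinite-horizon LQR with weights Q and R and the control law u = −Kx; the authors' exact formulation (continuous or discrete time, augmented states) may differ. The sketch computes P from the stable invariant subspace of the Hamiltonian matrix and then forms K = R⁻¹BᵀP. The function name `lqr_gain` is hypothetical, and `scipy.linalg.solve_continuous_are` is a library alternative for the Riccati step.

```python
import numpy as np


def lqr_gain(A, B, Q, R):
    """Continuous-time LQR: return (K, P) with K = R^{-1} B^T P.

    P is the stabilizing solution of the continuous algebraic Riccati equation
        A^T P + P A - P B R^{-1} B^T P + Q = 0,
    taken from the stable invariant subspace of the Hamiltonian matrix.
    Assumes (A, B) stabilizable and (A, Q^{1/2}) detectable.
    """
    A, B, Q, R = (np.atleast_2d(np.asarray(M, dtype=float)) for M in (A, B, Q, R))
    n = A.shape[0]
    R_inv = np.linalg.inv(R)
    # Hamiltonian matrix associated with the Riccati equation.
    H = np.block([[A, -B @ R_inv @ B.T],
                  [-Q, -A.T]])
    eigvals, eigvecs = np.linalg.eig(H)
    stable = eigvecs[:, eigvals.real < 0.0]  # basis of the stable subspace
    if stable.shape[1] != n:
        raise ValueError("no n-dimensional stable subspace; check stabilizability/detectability")
    X1, X2 = stable[:n, :], stable[n:, :]
    P = np.real(X2 @ np.linalg.inv(X1))
    P = 0.5 * (P + P.T)  # enforce symmetry against round-off
    K = R_inv @ B.T @ P
    return K, P


if __name__ == "__main__":
    # Double integrator x1' = x2, x2' = u, with Q = I and R = 1 (illustrative only).
    A = np.array([[0.0, 1.0], [0.0, 0.0]])
    B = np.array([[0.0], [1.0]])
    K, P = lqr_gain(A, B, np.eye(2), 1.0)
    print("K =", K)
    print("closed-loop eigenvalues:", np.linalg.eigvals(A - B @ K))
```

For the double integrator with Q = I and R = 1, this recovers the textbook gain K = [1, √3], and both closed-loop eigenvalues of A − BK lie in the open left half-plane.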

4.2. State-Feedback Controller

4.3. Lyapunov-Based Stability Analysis

5. Bayesian Optimization

The Expected Improvement (EI) acquisition function combines the following quantities:

- the best function value observed so far;
- the posterior mean of the surrogate at the candidate point;
- the posterior standard deviation at that point;
- a small positive parameter encouraging exploration;
- the cumulative distribution function (CDF) and the probability density function (PDF) of the standard normal distribution.
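Below is a minimal sketch of how these quantities combine. The Python evaluates the standard closed-form EI, EI = (f_best − μ − ξ)Φ(Z) + σφ(Z) with Z = (f_best − μ − ξ)/σ. It assumes the controller cost is being minimized; for maximization, the sign of the improvement term flips. It also assumes the GP posterior mean and standard deviation at the candidate point have already been computed. All function and variable names are illustrative and are not the authors' implementation.

```python
import math


def norm_pdf(z: float) -> float:
    """Standard normal probability density function, phi(z)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, Phi(z), via erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, f_best: float, xi: float = 0.01) -> float:
    """Closed-form EI for MINIMIZING a cost (assumed sign convention).

    mu     -- GP posterior mean at the candidate point
    sigma  -- GP posterior standard deviation at the candidate point
    f_best -- best (lowest) cost observed so far
    xi     -- small positive exploration parameter
    """
    if sigma <= 0.0:
        # No predictive uncertainty: EI is taken as zero (a common convention).
        return 0.0
    improvement = f_best - mu - xi  # predicted gain over the incumbent
    z = improvement / sigma
    return improvement * norm_cdf(z) + sigma * norm_pdf(z)


if __name__ == "__main__":
    # Hypothetical candidates: (posterior mean, posterior std) of the cost.
    candidates = {"low_mean": (0.8, 0.05), "uncertain": (1.1, 0.6)}
    f_best = 1.0
    scores = {name: expected_improvement(m, s, f_best) for name, (m, s) in candidates.items()}
    print(max(scores, key=scores.get), scores)
```

In a BO loop, the next controller parameters to evaluate would be the candidate with the largest EI. The first term rewards a low predicted cost (exploitation), and the σφ(Z) term rewards high uncertainty (exploration).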

6. Simulation Results

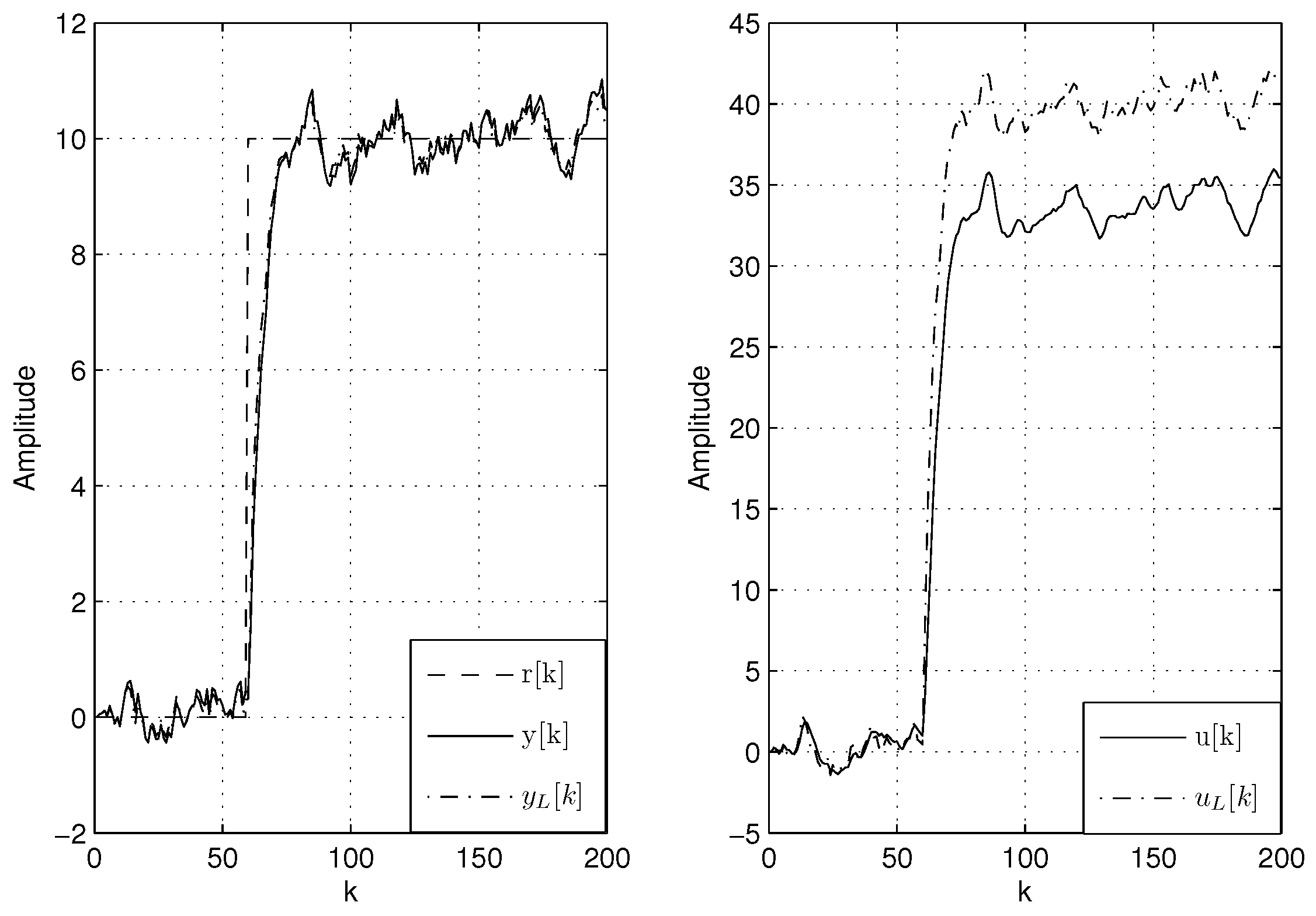

6.1. Toy Example

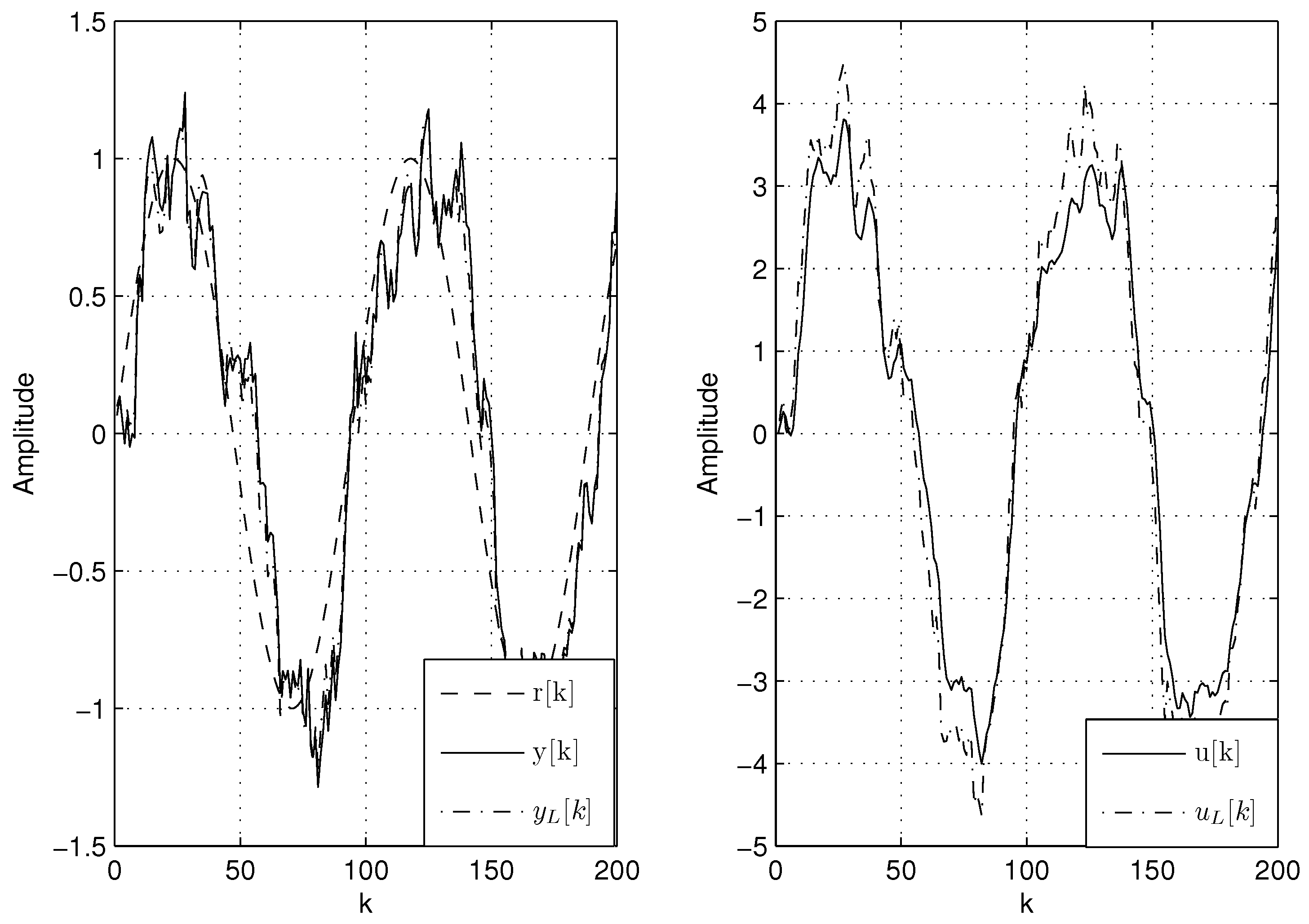

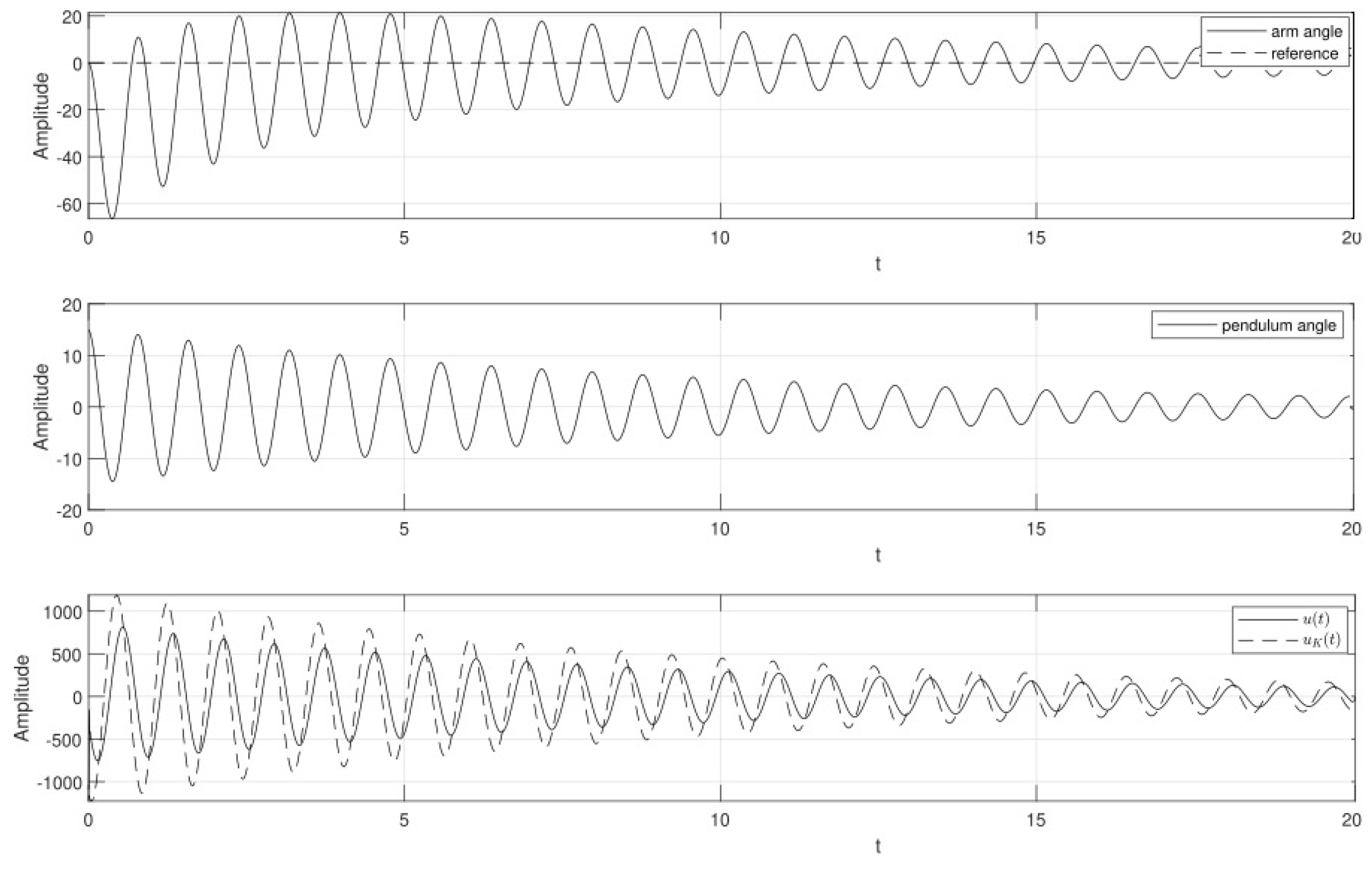

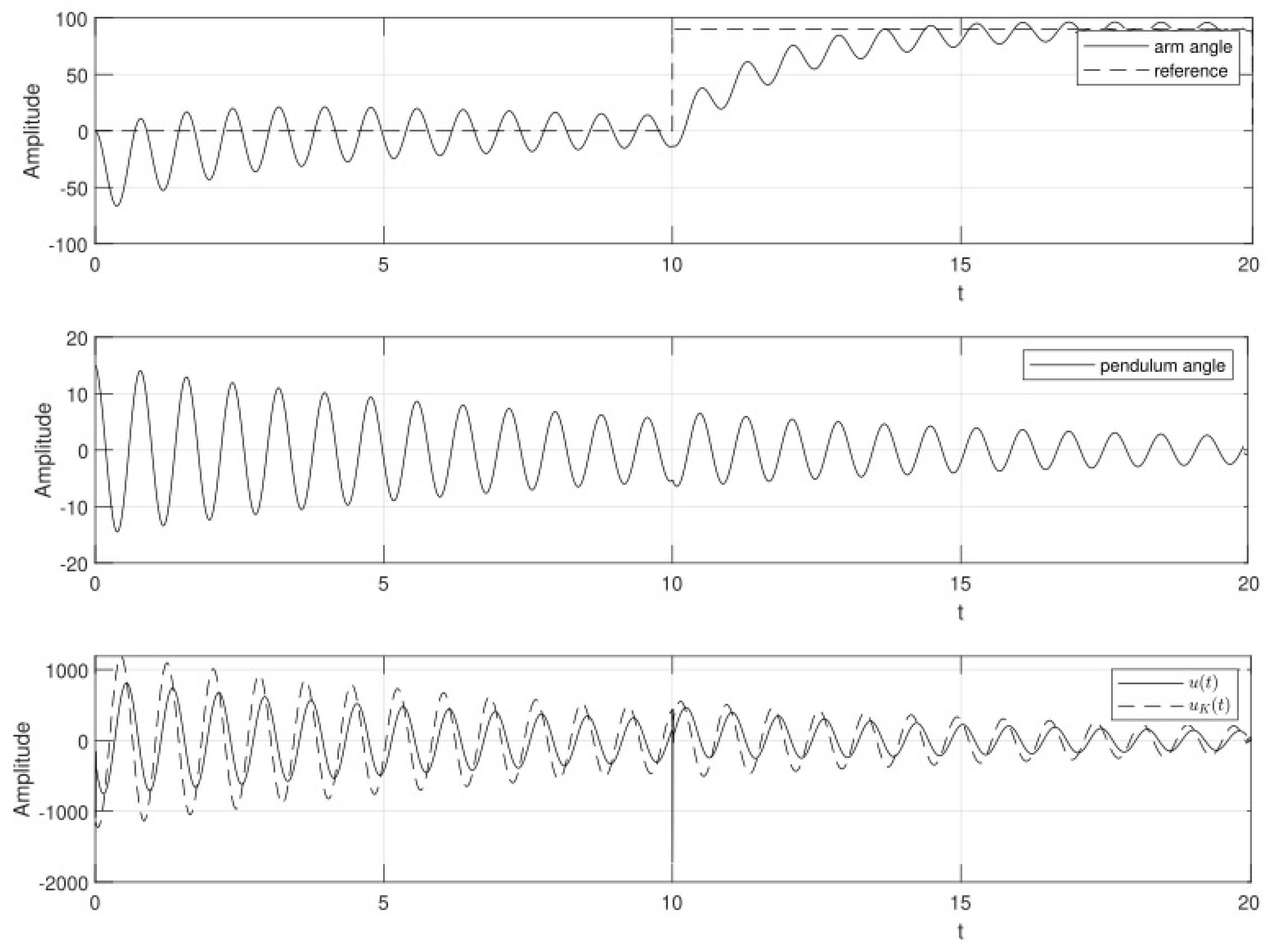

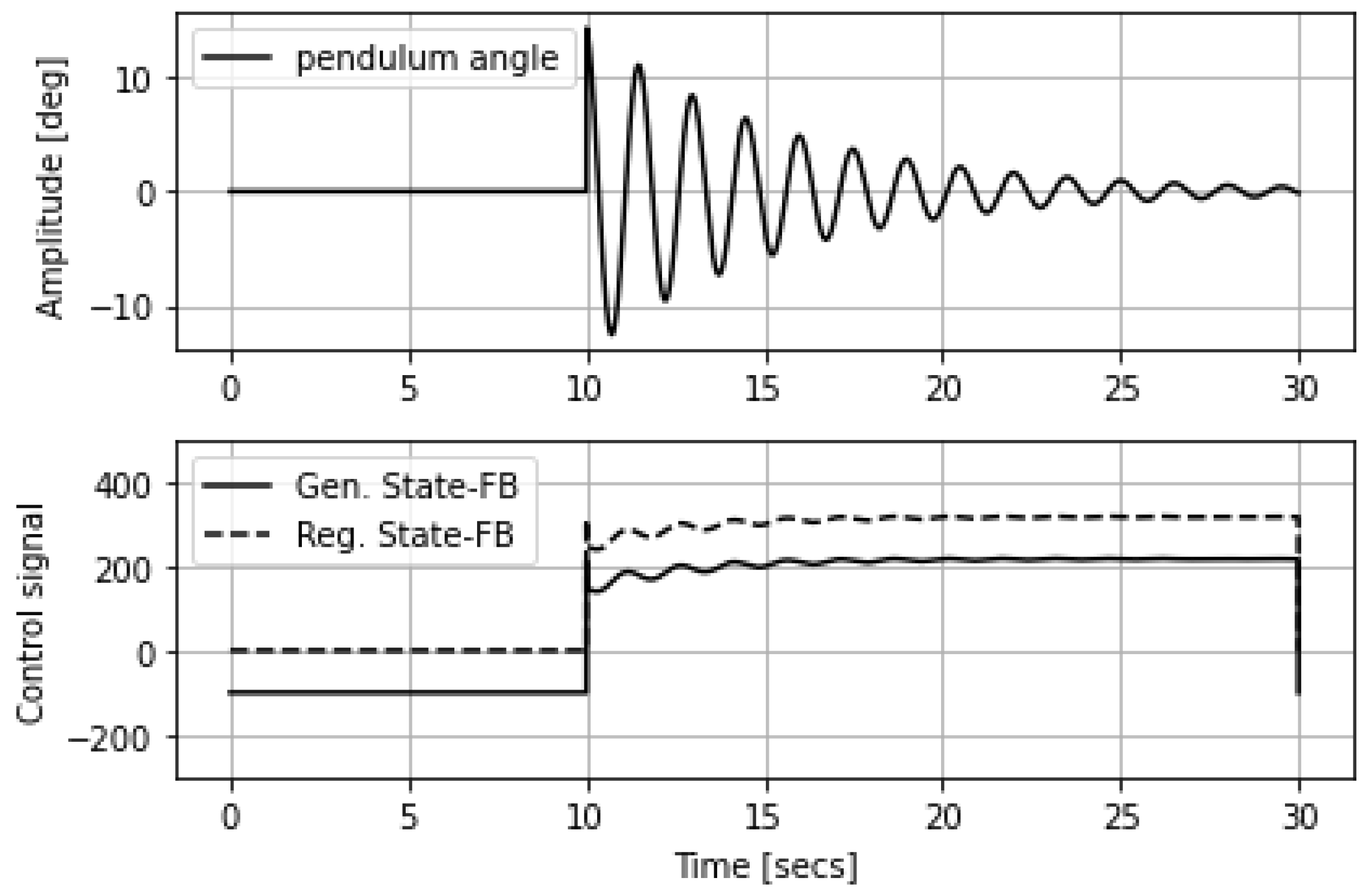

6.2. Experimental Results: Rotary Inverted Pendulum

6.3. Double Inverted Pendulum

6.4. Numerical Performance

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ARE | Algebraic Riccati Equation |
| BO | Bayesian Optimization |
| CDF | cumulative distribution function |
| DC | direct current |
| DIP | double inverted pendulum |
| EI | Expected Improvement |
| GP | Gaussian Process |
| ISE | Integral Square Error |
| LQR | Linear Quadratic Regulator |
| PDF | probability density function |
| RIP | rotary inverted pendulum |
| SISO | Single Input Single Output |

Summary of key symbols, definitions, and units used throughout the manuscript.

| Symbol | Description | Units |
|---|---|---|
|  | State vector of the plant | – |
|  | State vector for the controller | – |
|  | Control input signal | V |
|  | Output measurement | – |
|  | Filtered reference signal | – |
|  | Desired reference trajectory | – |
|  | State-space matrices of the plant | – |
|  | Controller state-space matrices | – |
| K | Feedback gain matrix (static controller) | – |
| F | Pre-filter gain matrix | – |
| P | Solution to the Algebraic Riccati Equation | – |
|  | LQR weighting matrices | – |
|  | Augmented closed-loop system matrix | – |
|  | Spectral norm (largest singular value) | – |
|  | Eigenvalues of a matrix | – |
|  | Process and measurement noise (zero-mean Gaussian) | – |
|  | Covariance matrices of the process and measurement noise | – |
| J | Cost function | – |
|  | Lyapunov function | – |
|  | Posterior mean of the surrogate function (BO) | – |
|  | Posterior standard deviation (BO) | – |
|  | Exploration parameter in the acquisition function | – |
|  | Standard Gaussian CDF and PDF | – |
|  | Arm and pendulum angles (rotary inverted pendulum) | rad |
|  | Angular velocity and acceleration | rad/s, rad/s² |
|  | Inertia, Coriolis, and gravity matrices | – |
|  | Applied torque | N·m |
|  | Motor current | A |
|  | Motor voltage input | V |
|  | Angular velocities | rad/s |
|  | Gear ratios | – |
|  | Motor torque constant | N·m/A |
|  | Armature resistance and inductance | Ω, H |
|  | Normal distribution with zero mean and covariance P | – |


| Symbol | Description | Value |
|---|---|---|
|  | Pendulum mass | kg |
|  | Counterweight mass | kg |
|  | Pendulum moment of inertia | kg·m² |
|  | Arm moment of inertia | kg·m² |
| r | Arm radius | m |
|  | Pendulum center of mass | m |
| h | Arm center-of-mass height | m |
|  | Arm friction coefficient |  |
|  | Pendulum friction coefficient |  |
|  | Armature resistance | 8 Ω |
|  | Motor inductance | 10 mH |
|  | Motor inertia | kg·m² |
|  | Motor mutual inductance | N·m/A |
|  | Gear reduction coefficient | 59,927 |
|  | External gear reduction coefficient | 16 |
| g | Gravitational acceleration | m/s² |

| System | Settling Time (s) | Max Overshoot (°) | ISE |
|---|---|---|---|
| Double Inverted Pendulum (BO) | ∼18 | 13.5 | 43.1 |
| Furuta (Step Reference, BO) | ∼6 | 10.0 | 170.2 |
| Furuta (Constant Reference, BO) | ∼9 | 40.0 | 129.7 |
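The ISE column integrates the squared tracking error over a run. As a minimal sketch, assume ISE = ∫ e(t)² dt, where e(t) is the reference minus the measured output, sampled from a simulation log. Under that assumption, the integral can be approximated with the trapezoidal rule. The exact error signal, time window, and angle units behind the reported values are not stated here, and `integral_square_error` is a hypothetical helper.

```python
import math
from typing import Sequence


def integral_square_error(t: Sequence[float], e: Sequence[float]) -> float:
    """Approximate ISE = integral of e(t)^2 dt with the trapezoidal rule.

    t -- increasing sample times in seconds (need not be uniform)
    e -- tracking error samples, e.g. reference minus measured output
    """
    if len(t) != len(e):
        raise ValueError("t and e must have the same length")
    total = 0.0
    for k in range(1, len(t)):
        dt = t[k] - t[k - 1]
        # Area of the trapezoid between consecutive squared-error samples.
        total += 0.5 * (e[k] ** 2 + e[k - 1] ** 2) * dt
    return total


if __name__ == "__main__":
    # Hypothetical log: exponentially decaying error e(t) = exp(-t) on [0, 10] s.
    n = 1001
    t = [10.0 * k / (n - 1) for k in range(n)]
    e = [math.exp(-tk) for tk in t]
    print(integral_square_error(t, e))  # close to (1 - exp(-20)) / 2
```

Because the squared error weights large deviations heavily, a run with a brief but large overshoot can still accumulate a sizable ISE even when it settles quickly.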

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Solis, M.A.; Thomas, S.S.; Choque-Surco, C.A.; Taya-Acosta, E.A.; Coiro, F. Bayesian Optimization for the Synthesis of Generalized State-Feedback Controllers in Underactuated Systems. Mathematics 2025, 13, 3139. https://doi.org/10.3390/math13193139
