1. Introduction

Image editing tools such as Photoshop, GIMP, and Photoscape have become highly accessible thanks to advancements in image processing and deep learning. However, this accessibility has also given rise to a concerning issue: the malicious exploitation of these tools to manipulate digital images and disseminate altered content across the Internet. Such actions have led to widespread public skepticism, misinformation, and outrage, triggering a cascade of public opinion crises and emerging as a significant threat to social stability.

Digital image forgery detection [1] is a critical field that applies scientific analysis to digital images. Its primary objective is to determine whether an image has been manipulated and, if so, to identify the altered regions precisely.

As a long-standing research focus within the domain of information forensics, digital image forgery detection plays a pivotal role in equipping the public and governmental bodies with the tools to discern and counteract the dissemination of fraudulent digital images. It not only safeguards social stability but also protects the legitimate rights and interests of individuals and organizations. Moreover, it provides indispensable forensic evidence for judicial authorities, facilitating the accurate adjudication of cases involving digital image manipulation.

The three most common image forgery methods [2] are inpainting (removing unwanted content from an image), splicing (copying a segment of one image and pasting it into another image), and copy-move (copying a segment of an image and pasting it into another region of the same image). Forensic methods targeting these forgery techniques fall into two categories: traditional feature extraction-based algorithms and deep learning-based algorithms.

Traditional feature extraction-based forensic algorithms extract distinctive features from forged images to differentiate between authentic and forged regions. These features include compression artifacts in media formats [3,4], lighting inconsistencies [5,6], statistical patterns [7], and local noise estimates [8]. The greatest challenge for these algorithms is that no single feature can address multiple forgery methods.

To address this challenge, most state-of-the-art algorithms are now based on deep neural network (DNN) models. Some of these models only authenticate the image at the image level [9], others only localize forged regions at the pixel level [10,11,12,13], and still others perform both authentication and localization [2,14,15,16,17,18]. However, if pixel-level localization is treated as a simplified form of semantic segmentation, the detection model may overemphasize the semantic content of the image and neglect the differences between authentic and forged content. The model can then overfit to the training dataset, which causes poor generalization [2,19]. Therefore, a key issue is constructing and training a network that accurately identifies forged regions in complex scenarios, i.e., one that extracts semantic-agnostic features.

To help DNN models detect forgery traces, some studies transform the RGB view into other views. Detection methods based on such transformations fall into two types: noise perception-based algorithms [9,11,20,21,22,23,24,25,26] and edge detection-based algorithms [10,27,28,29,30,31]. Noise-aware algorithms exploit the fact that the noise distribution of spliced or inpainted regions differs from that of the authentic image, a difference that noise perception algorithms can expose. In copy-move forgery, however, the forged region originates from the same image and therefore shares its noise characteristics, so this assumption no longer holds.

The other approach, edge detection-based algorithms, attempts to identify edge inconsistencies between forged and authentic regions. Existing techniques merge the features from every layer of the backbone network by summation or concatenation before feeding them into auxiliary branches. However, these methods still treat the features of every layer as semantic features, so the generalization problem remains.

Moreover, when evaluating generalization, existing deep learning methods are trained on public datasets and then assessed only at the pixel level on forged images from other public datasets. Performance on authentic images at the image level is neglected. In real-world scenarios, most images encountered are authentic, and this omission has left the false positive rate on authentic images persistently high. Reducing this false positive rate is therefore of paramount importance.

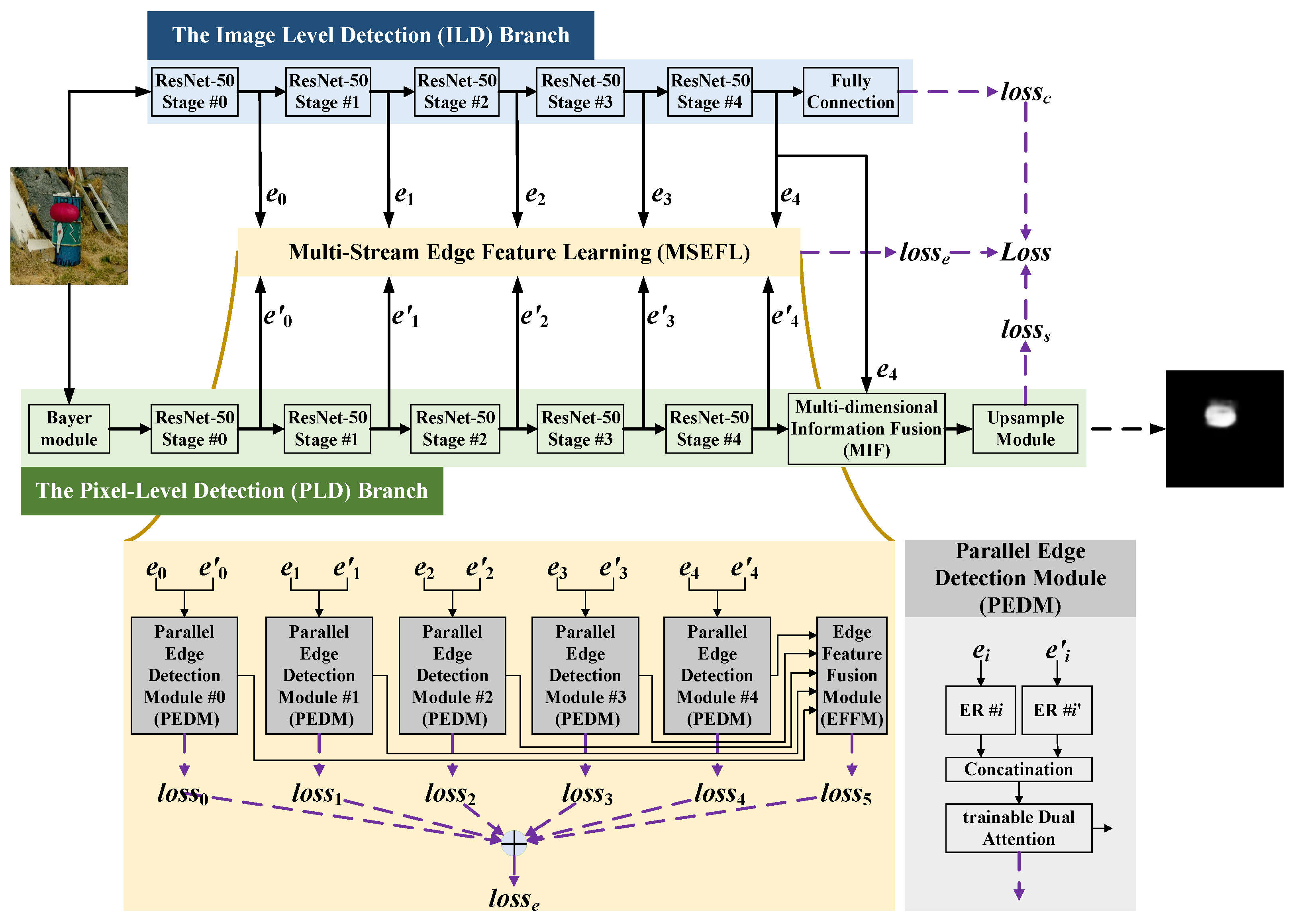

We introduce MMFD-Net (Figure 1) to jointly detect the three manipulation types and improve generalization. The network comprises three branches, shown from top to bottom in Figure 1: Image-Level Detection (ILD); Multi-Stream Edge Feature Learning (MSEFL), which fuses the side outputs of ILD and PLD; and Pixel-Level Detection (PLD).

Both the ILD and PLD branches employ ResNet-50 as their backbone network, while the MSEFL branch takes the side outputs from the backbone networks of the aforementioned two branches as its input.

In summary, the contributions of the method are as follows:

Multi-Stream Edge Feature Learning (MSEFL):

A lightweight module that fuses low-level edges with high-level semantics to generate forgery-edge-sensitive representations. Edge-supervised training propagates these cues to the rest of the network, boosting manipulation-trace perception and domain transfer.

Multi-dimensional Information Fusion (MIF) in PLD:

Dual self-attention blocks attend to noise-view and color-view cues independently, then fuse them to achieve sharper pixel-level localization of forged regions.

Unified MMFD-Net framework:

The first architecture to jointly optimize image-level authenticity classification, pixel-level forgery segmentation, and edge-aware supervision. This end-to-end tri-task learning extracts semantic-agnostic forgery signatures, yielding superior detection accuracy and robust generalization.

2. Related Work

CNNs excel at modeling local correlations within images but struggle to capture global dependencies. Vision Transformers (ViTs) overcome this limitation through self-attention, which establishes direct relationships among all image features and delivers stronger global perception. In image forgery forensics, each architecture offers distinct advantages, and dual-stream networks that combine the two jointly capture local and global cues, enhancing detection accuracy and robustness.

Beyond CNNs and ViTs, techniques such as LSTMs and GANs have also been adopted, further enriching the methodological landscape. When classified by the type of forgery traces they target, existing approaches fall into three categories: noise inconsistency, edge-based detection, and multi-feature fusion. We briefly outline implementations within these categories and highlight our contributions.

2.1. Forgery Detection Methods Based on Image Content Noise Features

In recent years, forgery detection methods based on content noise features have attracted wide attention because of their sensitivity to manipulation traces. These methods primarily exploit noise inconsistencies within an image to identify forged regions. Relevant research on noise feature-based forensic methods is summarized as follows. Zhou et al. [11] combined RGB features with noise features to capture subtle differences between forged and authentic regions. Huang et al. [20] used noise maps generated by Steganalysis Rich Model (SRM) filters to enhance detection accuracy. Niu et al. [21] introduced a guided and multi-scale feature aggregation network for image forgery localization. Zhu et al. [22] developed a two-step discriminative noise-guided approach that explicitly enhances the representation and utilization of noise inconsistencies, improving detection accuracy and robustness. Kwon et al. [24] detected and localized image forgery by learning JPEG compression artifacts, using the artifacts generated during JPEG compression as forgery traces. Wang et al. [23] proposed ObjectFormer to capture subtle forgery traces that are no longer visible in the RGB domain. Hu et al. [9] introduced the Spatial Pyramid Attention Network (SPAN), which models the relationships between multi-scale image patches with a pyramid of local self-attention blocks to detect and localize various types of image forgery. Guillaro et al. [25] proposed the TruFor framework, which combines the RGB image with a learned noise-sensitive fingerprint in a transformer-based fusion architecture to extract both high-level and low-level traces, enabling robust detection of various image forgery methods.

2.2. Forgery Detection Methods Based on Edge Features

Forgery operations often leave traces around the edges of manipulated regions, making edge feature-based detection an effective approach to image forgery detection. For example, Salloum et al. [10] proposed a multi-task fully convolutional network (MFCN) to improve the localization accuracy of image splicing. UGEE-Net [27] focuses on the fusion and interaction of high-level spatial-domain features, balancing global semantics and local details, and additionally incorporates frequency-domain features to extract edge information, further improving localization accuracy.

Sun et al. [29] proposed SAFL-Net, which improves generalization by constraining the feature extractor, through dedicated modules and auxiliary tasks, to learn semantic-agnostic features. Lin et al. [28] improved detection accuracy by combining multiple forgery traces and enhancing edge artifacts, exploiting the traces left along the edges of manipulated regions to identify and localize forged areas more effectively. Ma et al. [30] proposed IML-ViT, a Vision Transformer (ViT)-based method designed to improve image forgery detection by capturing forgery traces.

2.3. Forgery Detection Methods Based on Multi-Feature Fusion

To enhance the robustness and accuracy of forgery detection, many researchers have explored multi-feature fusion methods. These methods typically combine RGB, noise, and edge features to capture forgery traces comprehensively. For example, Hu et al. [9] proposed SPAN, which integrates RGB features and noise features to improve forgery detection performance. Chen et al. [2] introduced a multi-view multi-scale supervised network (MVSS-Net) that enhances edge feature extraction with an edge-supervised branch. Han et al. [19] designed an end-to-end network, HDF-Net, that extracts homogeneity difference features for precise localization of manipulation artifacts, significantly improving localization accuracy and edge refinement.

Despite this progress, current methods still struggle with complex forgery operations such as copy-move and splicing. In this paper, we propose the Multi-branch Multi-dimensional Forgery Detection Network (MMFD-Net), which captures homogeneity difference features between forged and authentic regions to localize forged areas precisely. MMFD-Net fuses color and noise features to detect forged images at both the image level and the pixel level, while the Multi-Stream Edge Feature Learning (MSEFL) module learns low-level edge features and high-level abstract features that distinguish forged from authentic regions. This enhances the model’s perception of forgery edges during feature extraction, improving the accuracy and robustness of tamper detection.

3. The Proposed Method

Our goal is to build a multi-branch deep network, M, trained with three kinds of annotations (image-level labels, forgery region masks, and forgery edge masks) so that it can both determine whether an image has been manipulated and indicate the forged regions. The forgery edge annotation plays a key role in supervised training: it directs the network’s attention to the artifacts at the boundary between authentic and forged regions and improves the network’s generalization ability.

Given an input image x, the proposed model M consists of the image-level detection (ILD) module, the pixel-level detection (PLD) module, and the Multi-Stream Edge Feature Learning (MSEFL) module. At the image level, ILD outputs the probability that the image has been forged. At the pixel level, PLD and MSEFL output a forgery region probability map and a forgery edge probability map, respectively, both with the same dimensions as the input image. The overall model is described by Equation (1).

In Equation (1), the side output of ILD and the input image x serve as inputs to PLD, while the side outputs of ILD and PLD serve as inputs to MSEFL.

In the inference phase, the output probability of ILD predicts whether the image has been forged at the image level, while the forgery region probability map of PLD predicts which pixels have been forged.

3.1. The Image-Level Detection Branch

The main task of this branch is binary classification of images according to whether they contain forgery traces. Many networks perform common image classification tasks well by relying on the semantic structure of images. Unlike in those tasks, however, forged regions in manipulated images are semantic-agnostic, so the model must classify images based on forgery traces. Moreover, because forged pixels make up a small fraction of the image, the model must retain these forgery features as network depth increases, without the performance degradation caused by vanishing gradients.

The core of ResNet-50 is residual learning: skip connections let the network learn residual mappings rather than the target function directly. Skip connections also pass gradient information directly from later layers to earlier layers, alleviating the vanishing gradient problem. Meanwhile, stacking convolutional layers gradually enlarges the receptive field, so the network captures local features as well as global patterns and can adapt to different types of forgery. Because forgery operations introduce subtle local artifacts (such as edge discontinuities and texture anomalies), the multi-level features extracted by this stacked design are beneficial for detecting the edges of forged regions. ILD therefore produces two kinds of output: the output of the fully connected layer and the side output of each ResNet-50 stage i. As shown in Figure 1, given the input image x, ILD is defined as Equation (2).

The side outputs are used for subsequent edge-detection supervised learning, while the output of the fully connected layer is used for binary classification supervised learning.
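The side outputs of the different stages have different spatial sizes, which matters later when their edge predictions are fused. The sketch below computes these sizes for a torchvision-style ResNet-50. It rests on two assumptions that the text does not state. First, the side outputs are taken after the stem (conv1, BN, ReLU, before max pooling) and after each of the four residual stages, which would match the five PEDMs. Second, the input is a hypothetical 512 × 512 image, because the input size is not given in this section.

```python
# Hypothetical helper: channel count and spatial size of ResNet-50 side outputs.
# Assumes side outputs after the stem and after each residual stage (torchvision layout).

def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length of a convolution or pooling layer along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

def resnet50_side_outputs(input_size: int) -> list[tuple[str, int, int]]:
    """Return (name, channels, spatial size) for each assumed side output."""
    outputs = []
    s = conv_out(input_size, kernel=7, stride=2, padding=3)      # conv1 (stem)
    outputs.append(("stem", 64, s))
    s = conv_out(s, kernel=3, stride=2, padding=1)               # 3x3 max pooling
    outputs.append(("layer1", 256, s))                           # layer1 keeps stride 1
    for name, channels in [("layer2", 512), ("layer3", 1024), ("layer4", 2048)]:
        s = conv_out(s, kernel=3, stride=2, padding=1)           # strided 3x3 in first bottleneck
        outputs.append((name, channels, s))
    return outputs

for name, channels, size in resnet50_side_outputs(512):
    print(f"{name}: {channels} channels, {size}x{size}")
```

Under these assumptions, the highest side-output resolution is 256 × 256 (the stem) and the lowest is 16 × 16 (layer4).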

3.2. The Pixel-Level Detection Branch

In this branch, ResNet-50 again serves as the backbone network. A noise-aware convolutional neural network is placed in front of it as a preprocessing module, and the fully connected layer is replaced with an upsampling layer. In addition, a self-attention-based multi-dimensional information fusion (MIF) module is designed to fuse the forgery feature information of ILD and PLD. As shown in Figure 1, PLD is defined as Equation (3) in terms of the noise-aware convolutional neural network, the output of PLD (used for supervised learning of forgery region segmentation), and the side output of each ResNet-50 stage i in PLD.

The side outputs of PLD and ILD are jointly used for subsequent edge-detection supervised learning, while the output of PLD is combined with ILD features to enhance the forgery region features for the subsequent image segmentation supervised learning.

3.2.1. The Noise-Aware Module

According to Dong et al. [2] and Bayar [32], BayarConv has excellent noise perception capabilities and can distinguish the noise characteristics of pasted regions from those of authentic regions. BayarConv is a constrained convolutional layer whose kernels are learned under supervision to expose these noise differences. Using it as a preprocessing step for ResNet-50 therefore helps subsequent modules segment the forged regions of the image based on noise differences.

In BayarConv, each convolutional kernel is subject to the two constraints of Equation (4): its center weight is fixed at −1, and its remaining weights sum to 1. Writing w_k(m, n) for the weight of kernel k at offset (m, n) from the center, the constraints read

$$ w_k(0,0) = -1, \qquad \sum_{(m,n)\neq(0,0)} w_k(m,n) = 1. \tag{4} $$

These constraints are enforced throughout the supervised training of the BayarConv module.
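The constraints are usually enforced by projection: after each optimizer step, every kernel is mapped back onto the constraint set. The NumPy sketch below shows that projection. The function name and the positive random initialization, which keeps the off-center sum well away from zero, are assumptions. Because each constrained kernel sums to zero, a flat patch produces no response, so only local deviations (noise residuals) pass through.

```python
import numpy as np

def apply_bayar_constraint(weight: np.ndarray) -> np.ndarray:
    """Project kernels of shape (out_ch, in_ch, k, k), k odd, onto the constraint set:
    centre weight = -1 and off-centre weights summing to 1."""
    w = weight.astype(np.float64).copy()
    c = w.shape[-1] // 2
    w[..., c, c] = 0.0                               # exclude the centre from the sum
    w /= w.sum(axis=(-2, -1), keepdims=True)         # off-centre weights now sum to 1
    w[..., c, c] = -1.0                              # fix the centre weight
    return w

rng = np.random.default_rng(0)
kernels = apply_bayar_constraint(rng.random((3, 3, 5, 5)))  # hypothetical 3-in/3-out 5x5 layer

# Each kernel sums to zero, so a flat (content-only) patch gives no response.
flat_patch = np.full((5, 5), 7.0)
print(abs(np.sum(kernels[0, 0] * flat_patch)) < 1e-9)   # True
```

In a training loop, the same projection would be reapplied after every parameter update so that the constraints hold throughout training.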

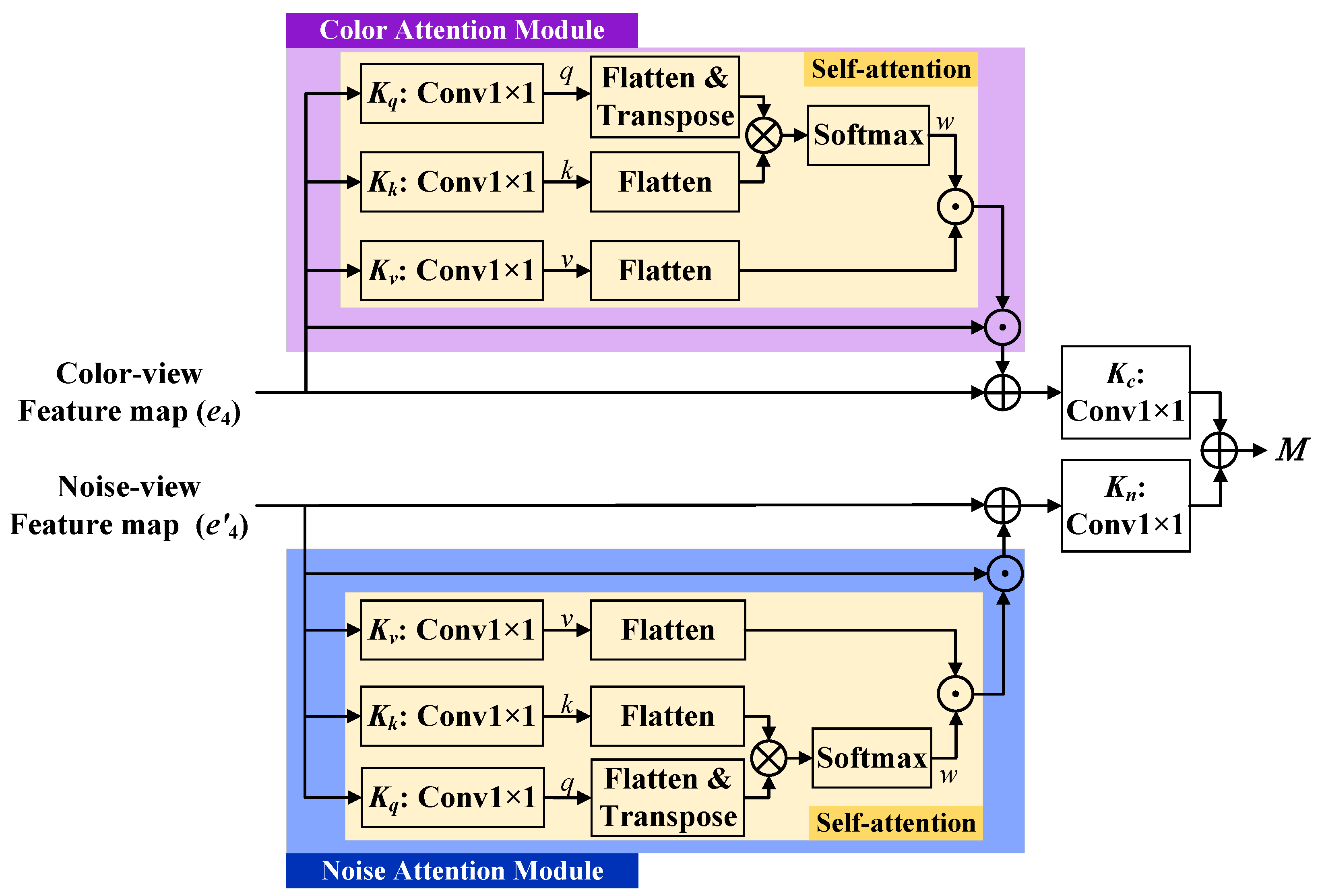

3.2.2. The Multi-Dimensional Information Fusion (MIF) Module

ILD extracts image features from the color view, while PLD extracts image features from the noise view. The forgery information extracted by a single branch is limited and insufficient to localize the forged region accurately. Dong et al. [2] demonstrated that fusing features from both branches localizes forged regions effectively. Meanwhile, the self-attention mechanism has achieved remarkable performance in many tasks and can also be used to fuse image features so that forged regions stand out. Inspired by this, we design a self-attention-based Multi-dimensional Information Fusion (MIF) module for localizing forged regions, as shown in Figure 2. MIF is defined in Equation (5) in terms of two convolution kernels that independently process the forgery region information of the two branches, a color attention module, and a noise attention module; ∗ denotes the convolution operation.

The features from the color-view branch and the noise-view branch are separately enhanced by their corresponding attention modules, then weighted by the two convolution kernels and linearly combined, producing a more discriminative representation of forged regions. With SA denoting the self-attention module, the color and noise attention modules are defined in Equation (6):

where × represents element-wise multiplication. This equation describes how self-attention (SA) is applied to both color and noise feature maps. By multiplying the input with its attention weights and adding it back to the original map, the model highlights the most relevant forgery-related patterns while preserving the original feature context. The specific implementation of self-attention is as follows:

- (a)

For an input map I, three convolution kernels are applied to I to obtain three feature maps: queries q, keys k, and values v;

- (b)

The three feature maps are flattened, and then q is transposed;

- (c)

The transposed q is multiplied by k, and the Softmax function is applied to the product to obtain the attention weights w;

- (d)

The element-wise multiplication of v and w yields the final attention maps.
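The NumPy sketch below shows one way to read Equations (5) and (6) together with steps (a) to (d). Several choices are assumptions:

- The query, key, and value kernels and the two fusion kernels are 1 × 1 convolutions without bias.
- The query and key width C' is smaller than C.
- Step (d) is read as the matrix product of v with the transposed weights, as in standard self-attention layers. The reason is that v has shape C × N while w has shape N × N, so an element-wise product between them is not defined.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)          # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def conv1x1(x, w):
    """1x1 convolution without bias: x is (C_in, H, W), w is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def self_attention(x, wq, wk, wv):
    """Steps (a)-(d) for a feature map x of shape (C, H, W)."""
    _, h, w = x.shape
    q = conv1x1(x, wq).reshape(wq.shape[0], h * w)   # (a)+(b): queries, (C', N)
    k = conv1x1(x, wk).reshape(wk.shape[0], h * w)   # keys, (C', N)
    v = conv1x1(x, wv).reshape(wv.shape[0], h * w)   # values, (C, N)
    attn = softmax(q.T @ k, axis=-1)                 # (c): attention weights w, (N, N)
    return (v @ attn.T).reshape(wv.shape[0], h, w)   # (d): read here as a matrix product

def enhance(x, params):
    """Equation (6) as described: x + x * SA(x), with * element-wise."""
    return x + x * self_attention(x, *params)

def mif(f_color, f_noise, color_params, noise_params, k_color, k_noise):
    """Equation (5) as described: attend per view, weight with 1x1 kernels, then add."""
    return (conv1x1(enhance(f_color, color_params), k_color)
            + conv1x1(enhance(f_noise, noise_params), k_noise))

rng = np.random.default_rng(0)
C, Cq, H, W = 8, 2, 4, 4                             # hypothetical sizes

def make_params():
    return (rng.standard_normal((Cq, C)), rng.standard_normal((Cq, C)),
            rng.standard_normal((C, C)))

color_params, noise_params = make_params(), make_params()
f_color, f_noise = rng.standard_normal((C, H, W)), rng.standard_normal((C, H, W))
fused = mif(f_color, f_noise, color_params, noise_params,
            rng.standard_normal((4, C)), rng.standard_normal((4, C)))
print(fused.shape)   # (4, 4, 4)
```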

3.3. The Multi-Stream Edge Feature Learning Branch

In ResNet-50, the side outputs of the lower stages contain rich low-level edge features, while those of the higher stages carry high-level abstract features. Meanwhile, the backbones of the two branches extract features from the color view and the noise view, respectively, so their side outputs provide complementary information about the edges of tampered regions. Additionally, edge-supervised learning on the side outputs of the backbone has been shown to improve a model’s generalization ability and overall performance in image classification and segmentation tasks [14].

We therefore propose a Multi-Stream Edge Feature Learning (MSEFL) module for edge-supervised learning. By combining side outputs from different stages, MSEFL leverages low-level edge features and high-level abstract features simultaneously to form multi-level features. These multi-level features are used for tamper edge-supervised learning, enhancing the model’s perception of the boundaries between forged and authentic regions.

MSEFL, defined in Equation (7), consists of five structurally identical Parallel Edge Detection Modules (PEDMs) and one Edge Feature Fusion Module (EFFM). Given the two arrays of side outputs from ILD and PLD, the pair of side outputs from the same stage is fed into one PEDM, so each stage has its own PEDM. The five output maps of the PEDMs are used for supervised learning and also serve as inputs to the EFFM. In total, MSEFL outputs six maps for supervised learning.

Equation (7) thus yields six edge prediction maps, five from the PEDMs (one per backbone stage) and one from the EFFM, which capture tampering boundaries at multiple levels of abstraction.

3.3.1. Parallel Edge Detection Module

The side outputs of stage i from ILD and PLD contain the edge information of that stage in each backbone. Therefore, in a PEDM, as shown in Figure 1, Edge Refining (ER) modules are applied separately to the two side outputs, followed by a trainable Dual Attention (tDA) module [33] that fuses the two refined features.

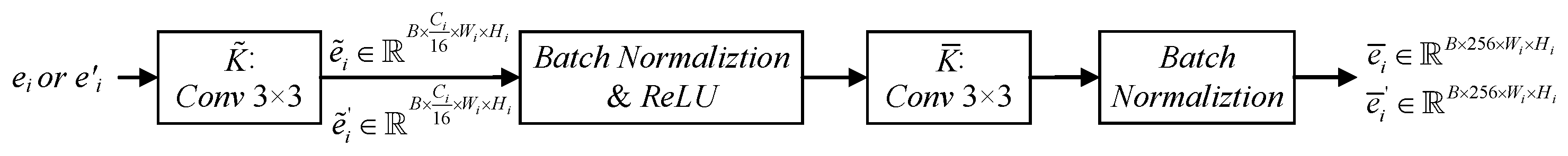

(1) ER module: To refine forgery region edges, we designed an ER module, illustrated in Figure 3, that removes irrelevant edge information and preserves the edges of forged regions. Given a side output from ILD or PLD, ER performs:

- (a)

Conv3 × 3 (stride 1, padding 1), followed by BatchNorm and ReLU;

- (b)

Conv3 × 3 (stride 1, padding 1), followed by BatchNorm.

ER thus maps each side output to a refined feature map. Applying it to both the ILD and PLD side outputs of a stage yields two refined features. This design stacks 3 × 3 convolutions with normalization and non-linearity while keeping channel widths fixed for stable optimization.
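A NumPy sketch of the two ER steps follows. It assumes the BatchNorm layers use batch statistics with unit scale and zero shift, and it uses hypothetical channel widths because the widths are not specified here. Like PyTorch's Conv2d, the convolution below is a cross-correlation.

```python
import numpy as np

def conv3x3(x, w, b):
    """3x3 convolution, stride 1, padding 1. x: (C_in, H, W); w: (C_out, C_in, 3, 3); b: (C_out,)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))                  # zero padding of 1 pixel
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):                                     # accumulate one kernel tap at a time
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out + b[:, None, None]

def batch_norm(x, eps=1e-5):
    """Training-mode BatchNorm for a batch of one, with gamma=1 and beta=0."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def edge_refine(x, w1, b1, w2, b2):
    y = np.maximum(batch_norm(conv3x3(x, w1, b1)), 0.0)       # (a) Conv3x3 -> BN -> ReLU
    return batch_norm(conv3x3(y, w2, b2))                      # (b) Conv3x3 -> BN, no ReLU

rng = np.random.default_rng(0)
c_in, c_mid, h, w = 16, 8, 8, 8                               # hypothetical widths and size
side_output = rng.standard_normal((c_in, h, w))
refined = edge_refine(side_output,
                      rng.standard_normal((c_mid, c_in, 3, 3)) * 0.1, np.zeros(c_mid),
                      rng.standard_normal((c_mid, c_mid, 3, 3)) * 0.1, np.zeros(c_mid))
print(refined.shape)   # (8, 8, 8)
```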

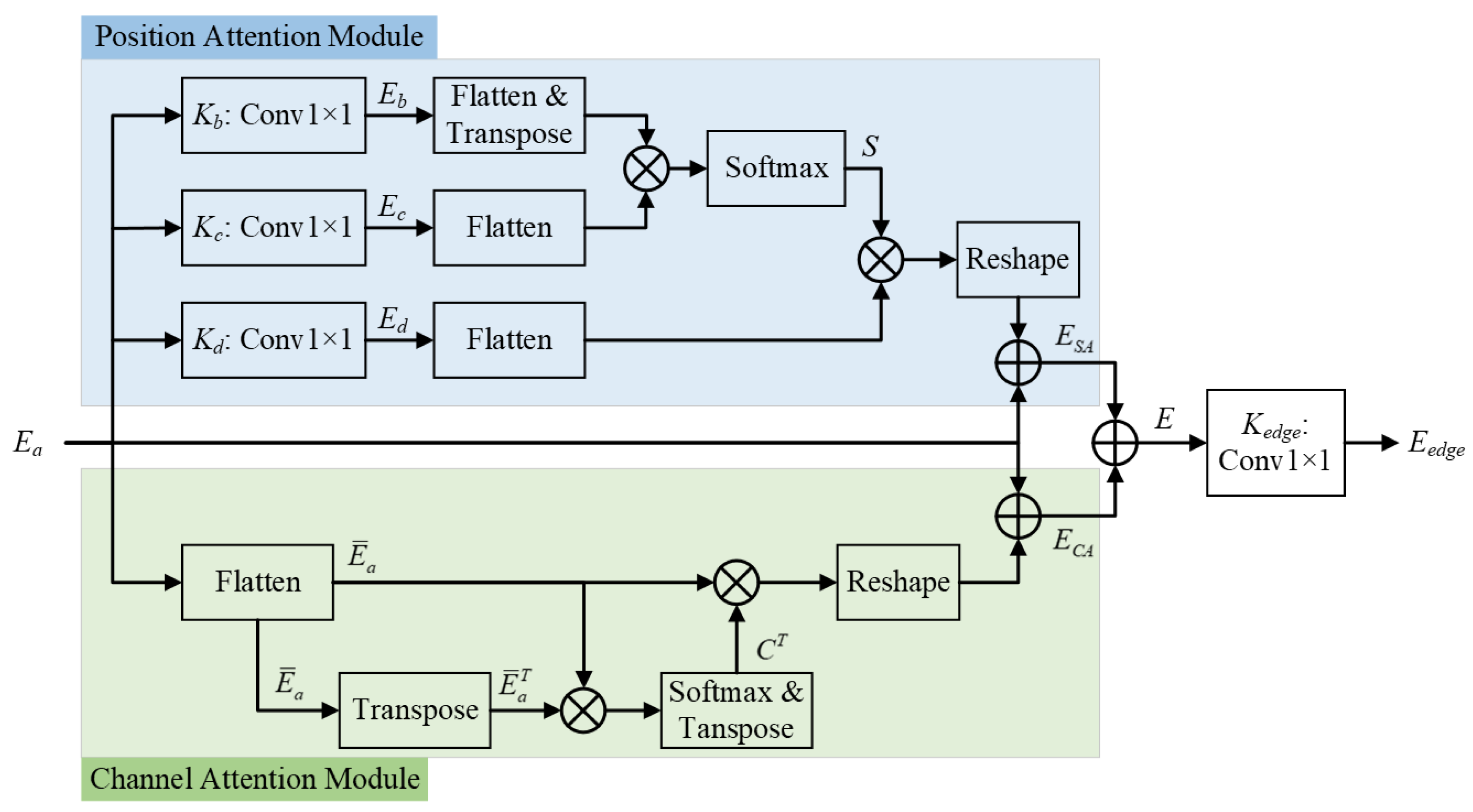

(2) tDA module: The two refined maps output by the ER modules are concatenated into a single feature map that contains forged-region edge information from both branches. Because tampered edges constitute only a small fraction of the overall image content, this information must be integrated further to enhance the model’s perception of forged-region edges. The integration should be attention-driven, strengthening the features of tampered-region edges while weakening those of other edges. Furthermore, these artifacts are captured most reliably when the model considers both the where (spatial continuity) and the what (channel semantics) of the features. To this end, the trainable Dual Attention (tDA) module [33], shown in Figure 4, combines two complementary attention modules: a Position Attention (PA) module and a Channel Attention (CA) module.

PA models long-range spatial dependencies by computing pairwise correlations across all pixel positions. This enables the network to highlight coherent edge regions and suppress isolated noisy responses. The specific implementation of PA is as follows:

- (a)

Three convolution kernels are applied to the concatenated feature map to compute three projections of it.

- (b)

The three projections are flattened along their spatial dimensions, and the first projection is transposed. The spatial attention map S is then obtained by multiplying the transposed first projection by the second projection and applying the softmax function, as shown in Equation (8).

where × represents matrix multiplication. S is computed by measuring the similarity between feature vectors at different pixel locations. This operation helps the model identify which spatial regions are strongly correlated, a key step for detecting boundary inconsistencies introduced by image manipulation.

- (c)

The PA output is computed from the transpose of S, the third projection, the concatenated input map, and a trainable weight according to Equation (9),

where × represents matrix multiplication and the trainable weight is initialized to 0.

This equation refines the spatially attended features. The attention weights S are applied to the third projection, the result is reshaped back to the original size and added to the input feature map, and the trainable weight controls the balance between the original and attention-enhanced features, thereby emphasizing manipulation boundaries.
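The NumPy sketch below follows the position attention formulation of DANet [33]. Three details are assumptions: the 1 × 1 projections are bias-free, the first two projections use a reduced width C', and the three projections are named query, key, and value. While the trainable weight keeps its initial value of 0, the module is an identity mapping, so training blends the attention term in gradually.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def position_attention(a, w_query, w_key, w_value, alpha):
    """a: concatenated map (C, H, W); w_query, w_key: (C', C); w_value: (C, C)."""
    c, h, w = a.shape
    flat = a.reshape(c, h * w)                  # N = H * W positions
    query = w_query @ flat                      # a 1x1 conv is a matrix product on the flattened map
    key = w_key @ flat
    value = w_value @ flat
    s = softmax(query.T @ key, axis=-1)         # Eq. (8): S has shape (N, N); rows sum to 1
    e = alpha * (value @ s.T) + flat            # Eq. (9): attended values plus the input
    return e.reshape(c, h, w), s

rng = np.random.default_rng(0)
a = rng.standard_normal((8, 6, 6))              # hypothetical C = 8, H = W = 6
params = (rng.standard_normal((2, 8)), rng.standard_normal((2, 8)), rng.standard_normal((8, 8)))
e0, s = position_attention(a, *params, alpha=0.0)    # alpha starts at 0
print(np.allclose(e0, a), np.allclose(s.sum(axis=1), 1.0))   # True True
```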

The CA module models the inter-dependencies between feature channels, thereby enhancing feature dimensions that are highly relevant to manipulation traces (e.g., abnormal textures or resampling patterns). The specific implementation of CA is as follows:

- (a)

The concatenated feature map is flattened along its spatial dimensions and multiplied by its own transpose to obtain the channel attention map C, according to Equation (10),

where × represents matrix multiplication.

This equation defines the channel attention map C. It measures the correlations between feature channels, indicating how much each channel contributes to detecting forgery cues; highly correlated channels are assigned stronger weights.

- (b)

The CA output is computed from the transpose of C, the concatenated input map, and a trainable scale parameter according to Equation (11),

where the scale parameter is initialized to 0.

This equation applies channel attention to enhance forgery-related channels. The attention-weighted channel features are reshaped and added back to the original input, with the trainable scale parameter controlling their contribution. This mechanism amplifies channels sensitive to tampered edges while suppressing irrelevant ones.
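In DANet [33], the output for each channel is the scaled, attention-weighted sum over all channels plus the input channel itself. The NumPy sketch below follows that formulation. No projection convolutions are used, which matches the description above. Which side of the product the transpose of C appears on depends on how C is indexed, so the orientation chosen here is an assumption.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def channel_attention(a, beta):
    """a: concatenated map (C, H, W); beta: trainable scale, initialized to 0."""
    c, h, w = a.shape
    flat = a.reshape(c, h * w)                  # (C, N)
    cmap = softmax(flat @ flat.T, axis=-1)      # Eq. (10): channel attention map, (C, C)
    e = beta * (cmap @ flat) + flat             # Eq. (11): re-weighted channels plus the input
    return e.reshape(c, h, w), cmap

rng = np.random.default_rng(0)
a = rng.standard_normal((8, 6, 6))              # hypothetical C = 8, H = W = 6
e0, cmap = channel_attention(a, beta=0.0)
print(np.allclose(e0, a), cmap.shape)           # True (8, 8)
```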

Finally, the outputs of the PA and CA modules are added to obtain the final map E, and a convolution applied to E produces the edge prediction of the PEDM.
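The last PEDM step can be sketched as follows. Neither the kernel size of the output convolution nor its activation is stated here, so the sketch assumes a 1 × 1 convolution to a single channel followed by a sigmoid. The sigmoid produces a probability map that can be compared with the binary edge mask.

```python
import numpy as np

def pedm_head(e_pa, e_ca, w_out, b_out=0.0):
    """Fuse PA and CA outputs (each (C, H, W)) into one edge probability map (H, W)."""
    e = e_pa + e_ca                                         # final map E
    logits = np.einsum("c,chw->hw", w_out, e) + b_out      # assumed 1x1 conv to one channel
    return 1.0 / (1.0 + np.exp(-logits))                   # assumed sigmoid activation

rng = np.random.default_rng(0)
e_pa, e_ca = rng.standard_normal((2, 8, 6, 6))             # hypothetical PA and CA outputs
edge = pedm_head(e_pa, e_ca, rng.standard_normal(8) * 0.1)
print(edge.shape)   # (6, 6)
```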

3.3.2. Edge Feature Fusion Module

The five PEDMs produce five single-channel edge predictions carrying edge information at different levels, and these must be fused into the final forgery region edge prediction. To this end, we design an Edge Feature Fusion Module (EFFM), whose workflow is presented in Algorithm 1.

Because the five edge prediction maps have different sizes, we upsample them to the highest resolution with parameter-free bilinear interpolation and then concatenate them.

We then expand the channels with a Conv1 × 1 and apply Conv3 × 3–BN–ReLU twice to integrate spatial and channel cues, producing the final edge probability map.

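| Algorithm 1: Edge Feature Fusion Module (EFFM) |
|---|
| Input: the five single-channel edge predictions of the PEDMs |
| Output: the final forgery edge probability map |
| 1. Upsample each edge prediction to the highest resolution among the five with parameter-free bilinear interpolation |
| 2. Concatenate the upsampled maps along the channel dimension |
| 3. Expand the channels with a Conv1 × 1 |
| 4. Apply Conv3 × 3–BN–ReLU |
| 5. Apply Conv3 × 3–BN–ReLU again |
| 6. Return the resulting edge probability map |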
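The EFFM steps can be sketched in NumPy as below. Three parts are assumptions: the expanded channel width, the training-mode BatchNorm with unit scale and zero shift, and a final 1 × 1 convolution with sigmoid that turns the fused features into a single-channel probability map. The resize follows the half-pixel convention of PyTorch's bilinear interpolation with align_corners=False.

```python
import numpy as np

def bilinear_resize(x, out_h, out_w):
    """Parameter-free bilinear resize of a 2-D map (half-pixel centres)."""
    in_h, in_w = x.shape
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = x[y0][:, x0] * (1 - wx) + x[y0][:, x1] * wx
    bottom = x[y1][:, x0] * (1 - wx) + x[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy

def conv3x3(x, w, b):
    """3x3 convolution, stride 1, padding 1. x: (C_in, H, W); w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out + b[:, None, None]

def bn_relu(x, eps=1e-5):
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return np.maximum((x - mean) / np.sqrt(var + eps), 0.0)

def effm(edge_maps, w_expand, refine_layers, w_head):
    out_h = max(m.shape[0] for m in edge_maps)
    out_w = max(m.shape[1] for m in edge_maps)
    x = np.stack([bilinear_resize(m, out_h, out_w) for m in edge_maps])  # steps 1-2: (5, H, W)
    x = np.einsum("oc,chw->ohw", w_expand, x)                            # step 3: Conv1x1 expands channels
    for w, b in refine_layers:                                           # steps 4-5: Conv3x3-BN-ReLU twice
        x = bn_relu(conv3x3(x, w, b))
    logits = np.einsum("c,chw->hw", w_head, x)                           # assumed 1x1 head
    return 1.0 / (1.0 + np.exp(-logits))                                 # edge probability map

rng = np.random.default_rng(0)
maps = [rng.random((s, s)) for s in (64, 32, 16, 8, 4)]   # hypothetical PEDM output sizes
width = 16                                                 # hypothetical expanded width
layers = [(rng.standard_normal((width, width, 3, 3)) * 0.1, np.zeros(width)) for _ in range(2)]
fused = effm(maps, rng.standard_normal((width, 5)), layers, rng.standard_normal(width) * 0.1)
print(fused.shape)   # (64, 64)
```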

3.4. Multi-Dimensional Supervision

In the training phase, the training set consists of the image set X and the corresponding ground truth, which provides for each image a binary image-level label, a binary forgery region map, and a binary forgery edge map. All images in the training set are resized to a fixed size, and the region and edge maps are resized accordingly.

In the proposed model, the three branches have distinct task objectives. Therefore, the losses of all three branches need to be taken into account to enhance the performance of the network. The loss of ILD (image-scale loss) is used to boost the model’s specificity at the image level. The loss of PLD (pixel-scale loss) is used to boost the model’s sensitivity for the forgery region at the pixel level. The loss of MSEFL (edge loss) is used to learn semantic-agnostic information and boost the model’s generalization at both the image and pixel levels.

Image-scale Loss: ILD performs binary classification, so we use the standard binary cross-entropy (BCE) to compute the image-scale loss, defined as Equation (12).

This loss focuses on classifying each image as authentic or manipulated, ensuring that the model can make a reliable high-level decision about the presence of tampering. It is critical for improving image-level specificity and reducing false positives, preventing genuine images from being misclassified as forged. The image-level loss is most important when the primary objective is global classification rather than fine-grained localization.
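Equation (12) is not reproduced here. The sketch below uses the textbook form of binary cross-entropy averaged over a batch. It clips predictions away from 0 and 1 to avoid log(0), and the clipping constant is our choice.

```python
import numpy as np

def bce_loss(p, y, eps=1e-7):
    """Mean binary cross-entropy between predicted forgery probabilities p and labels y."""
    p = np.clip(np.asarray(p, dtype=float), eps, 1 - eps)
    y = np.asarray(y, dtype=float)
    return float(np.mean(-(y * np.log(p) + (1 - y) * np.log(1 - p))))

# A forged image (y=1) predicted at 0.9 and an authentic image (y=0) predicted at 0.2.
print(round(bce_loss([0.9, 0.2], [1, 0]), 4))   # 0.1643
```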

Pixel-scale Loss: In a forged image, the proportion of manipulated pixels is typically low. The Dice loss, defined in Equation (13), has proved effective for learning from imbalanced data [34], so we use it to compute the pixel-scale loss.

This loss targets the precise localization of manipulated regions within an image, enabling accurate pixel-wise identification and segmentation of tampered areas. It is key to improving sensitivity (recall) and reducing false negatives, ensuring that manipulated pixels are not missed. The pixel-level loss is most important when accurate localization is the goal. It is particularly effective under class-imbalance conditions where forged pixels are vastly outnumbered by authentic pixels.
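The exact form of Equation (13) is not shown here. A common Dice formulation, assumed in this sketch, is 1 − 2Σpg / (Σp² + Σg²) with a small smoothing term. Some implementations use Σp + Σg in the denominator instead. The example shows why Dice suits imbalanced masks. On a mask with only 4 forged pixels out of 64, predicting "authentic" everywhere still gives a Dice loss close to 1, even though most pixels are classified correctly.

```python
import numpy as np

def dice_loss(pred, target, smooth=1e-6):
    """pred: forgery probabilities; target: binary mask of the same shape."""
    p = np.asarray(pred, dtype=float).ravel()
    g = np.asarray(target, dtype=float).ravel()
    intersection = np.sum(p * g)
    denominator = np.sum(p ** 2) + np.sum(g ** 2)
    return float(1.0 - (2.0 * intersection + smooth) / (denominator + smooth))

mask = np.zeros((8, 8))
mask[3:5, 3:5] = 1                                    # 4 forged pixels out of 64
print(round(dice_loss(np.zeros((8, 8)), mask), 3))   # all-authentic guess: 1.0
print(round(dice_loss(mask, mask), 3))               # perfect prediction: 0.0
```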

Edge Loss: The edge loss faces the same class imbalance as the pixel-scale loss, so the Dice loss is used here as well. MSEFL outputs a collection of six edge maps; the i-th stream is assigned a positive weight, and its pixel-level Dice loss is defined in Equation (14):

Therefore, the edge loss is defined as Equation (15):

This loss encourages learning semantic-agnostic forgery cues by leveraging edge information. By emphasizing boundary discontinuities and inconsistencies, it supports robust detection and localization of manipulated regions and improves generalization. The edge loss is most important when detection relies on boundary cues rather than semantic content, and it performs especially well for complex manipulations where semantics are not a reliable indicator of forgery.
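Equation (15) combines the six per-stream Dice losses with positive weights. The sketch assumes all six predictions have already been resized to the resolution of the edge mask, and it uses hypothetical uniform weights of 1/6. The constraint that the weights must satisfy is not reproduced in this section.

```python
import numpy as np

def dice_loss(pred, target, smooth=1e-6):
    p = np.asarray(pred, dtype=float).ravel()
    g = np.asarray(target, dtype=float).ravel()
    return float(1.0 - (2.0 * np.sum(p * g) + smooth) / (np.sum(p ** 2) + np.sum(g ** 2) + smooth))

def edge_loss(edge_preds, edge_mask, weights):
    """Weighted sum of per-stream Dice losses (Eqs. (14)-(15) as described)."""
    if len(edge_preds) != len(weights) or any(w <= 0 for w in weights):
        raise ValueError("need one positive weight per stream")
    return sum(w * dice_loss(p, edge_mask) for w, p in zip(weights, edge_preds))

edge_mask = np.zeros((16, 16))
edge_mask[4, 4:12] = 1                                      # a thin hypothetical boundary
weights = [1 / 6] * 6                                       # hypothetical uniform weights
preds = [edge_mask.copy() for _ in range(5)] + [np.zeros((16, 16))]
print(round(edge_loss(preds, edge_mask, weights), 3))      # only the last stream is wrong: 0.167
```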

Combined Loss: The three losses are combined linearly with positive weights into the total loss, defined as Equation (16).
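Section 4.1 sets two hyperparameters of the combined loss to 0.1 and 0.2. For illustration only, this sketch assumes Equation (16) is a convex combination in which the third weight is 1 − α − β. Which loss each hyperparameter multiplies is also an assumption.

```python
def combined_loss(l_img, l_pix, l_edge, alpha, beta):
    """Hypothetical Eq. (16): alpha * L_img + beta * L_pix + (1 - alpha - beta) * L_edge."""
    if alpha <= 0 or beta <= 0 or alpha + beta >= 1:
        raise ValueError("all three weights must be positive")
    return alpha * l_img + beta * l_pix + (1.0 - alpha - beta) * l_edge

total = combined_loss(l_img=0.5, l_pix=0.4, l_edge=0.8, alpha=0.1, beta=0.2)
print(f"{total:.2f}")   # 0.69
```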

4. Experiments

4.1. Implementation Details

We implemented our model in PyTorch and trained it on an NVIDIA RTX 3090 GPU. In both training and testing, all images were resized to a fixed resolution. The ResNet-50 backbones of ILD and PLD were initialized with models pre-trained on ImageNet.

Hyperparameter Setting. In the training phase, the model parameters were optimized with Adam [35] with weight decay, and the learning rate was varied cyclically between a maximum and a minimum value using the CosineAnnealingWarmRestarts scheduler. The batch size was set to four, and early stopping with a patience of 10 was applied based on the validation F1 score. The two hyperparameters of the combined loss were set empirically to 0.1 and 0.2, respectively.
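The cyclic learning-rate schedule has a closed form. The sketch below evaluates, per epoch, the formula documented for PyTorch's CosineAnnealingWarmRestarts: η = η_min + (η_max − η_min)(1 + cos(π·T_cur/T_i))/2. The cycle length T_i starts at T_0 and is multiplied by T_mult after each restart. All numeric values in the example are hypothetical, because the actual settings are not given in this section.

```python
import math

def cosine_warm_restarts(epoch, eta_max, eta_min, t_0, t_mult=1):
    """Learning rate at an integer epoch under cosine annealing with warm restarts."""
    if t_0 <= 0 or t_mult < 1:
        raise ValueError("t_0 must be positive and t_mult >= 1")
    t_i, t_cur = t_0, epoch
    while t_cur >= t_i:              # skip completed cycles; each restart lengthens the next one
        t_cur -= t_i
        t_i *= t_mult
    return eta_min + (eta_max - eta_min) * (1 + math.cos(math.pi * t_cur / t_i)) / 2

# Hypothetical settings: eta_max=1e-4, eta_min=1e-6, T_0=10, T_mult=2.
for epoch in (0, 5, 9, 10, 20, 30):
    print(epoch, f"{cosine_warm_restarts(epoch, 1e-4, 1e-6, 10, 2):.2e}")
```

With these values, the rate restarts at epochs 10 and 30, and the second cycle is twice as long as the first.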

Data Augmentation. During training, we applied a fixed, seed-synchronized augmentation pipeline implemented with Albumentations, so that geometric transforms were applied identically to the image and its mask, while photometric transforms affected only the image. The transformations and their parameters are listed in Table 1. Beyond these generic augmentations, we synthesized manipulation priors with two custom dual-target transforms: Copy–Move, which copies a random rectangular patch ( ) to a different location (p = 0.1), and Inpainting, which replaces a random window ( ) using OpenCV inpainting (TELEA or Navier–Stokes; ).
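A minimal NumPy sketch of a dual-target copy–move transform follows, assuming an H×W×C image and an H×W binary mask. Only p = 0.1 comes from the text; the patch-size bound `max_frac`, the retry count, and the function name are hypothetical because the original patch parameters are not recoverable. The essential point is that the image and mask are edited together, with the pasted region marked as forged.

```python
import numpy as np


def copy_move(image: np.ndarray, mask: np.ndarray, rng: np.random.Generator,
              p: float = 0.1, max_frac: float = 0.3, tries: int = 20):
    """With probability p, paste a random rectangular patch elsewhere in the same image.

    image: H x W x C array; mask: H x W array with 1 = forged pixel.
    Returns (image, mask); the destination rectangle is marked as forged.
    """
    if rng.random() >= p:
        return image, mask
    h, w = mask.shape
    ph = int(rng.integers(1, max(1, int(max_frac * h)) + 1))
    pw = int(rng.integers(1, max(1, int(max_frac * w)) + 1))
    for _ in range(tries):
        sy, dy = rng.integers(0, h - ph + 1, size=2)
        sx, dx = rng.integers(0, w - pw + 1, size=2)
        # Equal-sized rectangles are disjoint iff they are separated along some axis.
        if abs(int(sy) - int(dy)) >= ph or abs(int(sx) - int(dx)) >= pw:
            out_img, out_mask = image.copy(), mask.copy()
            out_img[dy:dy + ph, dx:dx + pw] = image[sy:sy + ph, sx:sx + pw]
            out_mask[dy:dy + ph, dx:dx + pw] = 1
            return out_img, out_mask
    return image, mask  # no valid placement found: leave the sample unchanged


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
msk = np.zeros((64, 64), dtype=np.uint8)
aug_img, aug_msk = copy_move(img, msk, rng, p=1.0)  # p=1.0 forces the transform for the demo
```

An inpainting counterpart would follow the same pattern, filling the chosen window with `cv2.inpaint(image, window_mask, radius, cv2.INPAINT_TELEA)` (or `cv2.INPAINT_NS`) and marking the window in the mask.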

4.2. Experiment Setting

Dataset. To ensure the scientific rigor and comparability of our research results, we carefully designed the selection and construction of our datasets.

For training and validation, we chose the well-established CASIAv2 dataset [36], whose rich samples provide a solid foundation for model training. For testing, we selected several widely recognized datasets, namely COVER [37], NIST16 [38], CASIAv1 [36], and IMD [39], to comprehensively evaluate the model's generalization ability and performance.

Following the dataset construction method of Dong et al. [2], we combined DEFACTO [40] and MS-COCO [41] to create a training set named DEF-84k and a test set named DEF-12k. The two sets do not overlap, which prevents data leakage. DEF-84k contains 64,000 forged images from DEFACTO and 20,000 authentic images from MS-COCO; DEF-12k contains 6000 forged images from DEFACTO and 6000 authentic images from MS-COCO.

In DEF-84k and CASIA v2, we held out 10% of the data as a validation set using a fixed random seed (set as 2147483647) and stratified by manipulation type (copy–move/splicing/inpainting) to maintain class balance across the train/validation splits.
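The stratified 10% hold-out can be sketched with the Python standard library. Only the 10% ratio, stratification by manipulation type, and the seed 2147483647 come from the text; the (item, label) sample layout and the function name are hypothetical, and this sketch will not reproduce the authors' exact split, which also depends on their file ordering and tooling.

```python
import random
from collections import defaultdict


def stratified_holdout(samples, val_frac=0.1, seed=2147483647):
    """Split (item, label) pairs so that each label keeps about val_frac of its items in validation."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    train, val = [], []
    for label in sorted(by_label):       # fixed iteration order for reproducibility
        items = sorted(by_label[label])  # fixed starting order before shuffling
        rng.shuffle(items)
        n_val = round(len(items) * val_frac)
        val += [(x, label) for x in items[:n_val]]
        train += [(x, label) for x in items[n_val:]]
    return train, val


samples = ([(f"cm_{i}.png", "copy-move") for i in range(50)]
           + [(f"sp_{i}.png", "splicing") for i in range(30)]
           + [(f"in_{i}.png", "inpainting") for i in range(20)])
train, val = stratified_holdout(samples)
print(len(train), len(val))  # 90 10
```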

In summary, our experiments involve two training sets and six test sets, detailed in Table 2.

Evaluation Criteria. A comprehensive set of evaluation criteria is essential for accurately assessing the performance of detection models. Our criteria cover both pixel-level and image-level detection. At both levels, we calculate the F1 score, which provides a balanced measure of precision and recall. We also report the AUC (Area Under the ROC Curve) to evaluate the model's ability to distinguish forged from authentic images; an AUC closer to 1 indicates better discrimination.
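Image-level AUC equals the probability that a randomly chosen forged image receives a higher score than a randomly chosen authentic one, with ties counted as one half. The pure-Python sketch below implements this pairwise (Mann–Whitney) definition; it is quadratic in the number of images and meant only for illustration, since library routines such as scikit-learn's `roc_auc_score` compute the same quantity efficiently.

```python
def pairwise_auc(scores, labels):
    """AUC via the Mann-Whitney formulation; labels are 1 (forged) or 0 (authentic)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one forged and one authentic image")
    # Count correctly ordered (forged, authentic) pairs; ties count as half a win.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(pairwise_auc([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0]))  # 1.0: perfect ranking
print(pairwise_auc([0.9, 0.4, 0.6, 0.2], [1, 1, 0, 0]))  # 0.75: one of four pairs misordered
```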

For pixel-level manipulation detection, we compute Precision and Recall for identifying forged pixels. To assess the model's effectiveness comprehensively, we also report the F1 score, the harmonic mean of Precision and Recall, which balances the trade-off between the two. The evaluation criteria used in our experiments are detailed below:

F1 Score (Pixel-Level): The F1 score is the harmonic mean of precision and recall, providing a balanced measure of the model's ability to detect forged pixels. A higher F1 score indicates better pixel-level detection performance.

Com-F1: Com-F1 is the harmonic mean of the pixel-level F1 and the image-level F1, providing a comprehensive measure of model performance. Com-F1 is sensitive to the lower of the two values; in particular, it is 0 whenever either the pixel-level F1 or the image-level F1 is 0, which does not hold for the arithmetic mean. A higher Com-F1 indicates that the model performs well at both the pixel and image levels.
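Com-F1 is computed as 2·F1_pix·F1_img / (F1_pix + F1_img). The short sketch below (the function name is hypothetical) contrasts it with the arithmetic mean when one of the two scores collapses to zero.

```python
def com_f1(f1_pix: float, f1_img: float) -> float:
    """Harmonic mean of pixel-level and image-level F1; defined as 0 when both are 0."""
    if f1_pix + f1_img == 0:
        return 0.0
    return 2 * f1_pix * f1_img / (f1_pix + f1_img)


print(com_f1(0.0, 0.9))            # 0.0, although the arithmetic mean would be 0.45
print(round(com_f1(0.5, 0.5), 6))  # 0.5: equal inputs give the same value
```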

During evaluation, some papers [9, 15, 17] report results at the optimal decision threshold, which allows a model to achieve its best performance under ideal conditions. In practice, however, such an optimal threshold is not available, because the decision threshold must be set in advance. To make the evaluation closer to real-world conditions, we use a fixed default decision threshold of 0.5 for F1 computation at both the image level and the pixel level.
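The fixed-threshold protocol can be sketched as follows: probabilities are binarized at 0.5, and F1 is computed from the resulting true positives, false positives, and false negatives. The text does not state whether the comparison is `>=` or `>`, how pixel-level F1 is aggregated over images, or the convention when F1 is undefined; this sketch assumes `>=`, returns 0.0 when there are no true positives, and scores a single map or a single list of image scores, leaving aggregation open.

```python
def f1_at_threshold(probs, targets, threshold=0.5):
    """Binarize probabilities at a fixed threshold and return F1 (0.0 when there are no true positives)."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for p, t in zip(preds, targets) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(preds, targets) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(preds, targets) if p == 0 and t == 1)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Pixel level: one image's flattened probability map against its binary mask.
pix_f1 = f1_at_threshold([0.9, 0.7, 0.4, 0.2, 0.6], [1, 1, 1, 0, 0])
# Image level: one forgery score per image against forged (1) / authentic (0) labels.
img_f1 = f1_at_threshold([0.8, 0.3, 0.55, 0.1], [1, 1, 0, 0])
print(round(pix_f1, 4), round(img_f1, 4))  # 0.6667 0.5
```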

4.3. Ablation Study

In the ablation study (Table 3), setups #1 (ILD) and #2 (PLD) are each used as a complete detection algorithm. The ResNet-50 structure of ILD and PLD is kept unchanged, and the output of ResNet-50 stage 4 is taken as a side output, which is up-sampled and used to localize forged regions. In addition, a fully connected module analogous to the ILD structure is added to setup #2 to enable image-level detection. In setups #3–#5, "Dual branch" denotes ILD and PLD together. Because MIF fuses the image features of the two branches using self-attention, the "Self-attention" column is marked "+" for setups #4 and #5. In setups #3–#5, if the "Dual Attention" column is marked "+", MSEFL fuses the edge features of the two branches with dual attention; otherwise, MSEFL simply concatenates the edge features along the channel dimension.

In this configuration, setups #1 (ILD) and #2 (PLD) serve as baselines for the two independent branches. Setup #3 adds MSEFL to the dual-branch framework without attention-based fusion. Setup #4 builds on setup #3 by integrating MIF based on self-attention. Finally, setup #5 extends setup #4 by introducing trainable dual attention (tDA) for cross-branch edge fusion within MSEFL.

(1) Comparison between ILD and PLD. As Table 3 shows, ILD achieves the higher pixel-level F1 score on cpmv. (45.3), whereas PLD performs better on spli. and inpa., with F1 scores of 70.8 and 45.8, respectively. Overall, moving from ILD to PLD raises the average pixel-level F1 score from 48.9 to 52.7 (+3.8), the image-level F1 score from 65.3 to 72.7 (+7.4), and the comprehensive score (Com-F1) from 55.9 to 61.1 (+5.2). For cpmv., the forged regions come from the same image, so the noise distribution of the forged image does not change significantly; ILD, which exploits boundary artifacts, therefore outperforms PLD, which relies on differences in noise distribution. In contrast, spli. and inpa. introduce large differences in noise distribution, so PLD achieves better results. Because cpmv. samples are outnumbered by spli./inpa. samples, PLD also outperforms ILD in the image-level evaluation.

(2) Influence of multi-branch fusion. MSEFL acts as the glue that fuses multiple side outputs of the two branches for multi-stream supervised learning. Even without attention-based fusion, a dual-branch, multi-side-output framework with multi-stream edge supervision raises the mean pixel-level F1 from 52.7 (setup #2) to 54.3 (+1.6), the image-level F1 from 72.7 to 74.1 (+1.4), and Com-F1 from 61.1 to 62.7 (+1.6). This indicates that edge supervision alone already yields consistent gains at both the image and pixel levels, supporting the effectiveness of mapping tampered boundaries across multi-scale and multi-source features.

(3) Influence of MIF. MIF uses self-attention to increase the difference between forged and authentic regions in PLD. Adding MIF in setup #4 improves pixel-level F1 over setup #3 in all three categories: cpmv. from 44.8 to 47.9, spli. from 71.9 to 74.7, and inpa. from 46.2 to 50.6, raising the mean pixel-level F1 by 3.4 (from 54.3 to 57.7). Meanwhile, the image-level F1 increases only marginally (from 74.1 to 74.7), whereas Com-F1 rises more substantially, by 2.4 (from 62.7 to 65.1). This suggests that, once reweighted and realigned by self-attention, the color view (ILD) and the noise view (PLD) form more discriminative tampering representations, with the gain appearing mainly in pixel-level localization accuracy.

(4) Influence of MSEFL with Dual Attention. MSEFL processes the edge information of the side outputs from corresponding stages of the two branches and uses the dual attention module to fuse the edge information of each stage. Compared with setup #4, introducing dual attention for cross-branch edge fusion in setup #5 further raises the mean pixel-level F1 from 57.7 to 58.9 (+1.2). More notably, the image-level metrics improve substantially (AUC from 85.1 to 89.1, +4.0; F1 from 74.7 to 81.3, +6.6), lifting Com-F1 from 65.1 to 68.3 (+3.2). We attribute this to tDA enhancing the discriminability of the “tampered boundary-context” along both the spatial and channel dimensions, which strengthens image-level discrimination and also benefits pixel-level localization.

In summary, pixel-level localization, image-level discrimination, and Com-F1 improve consistently from the independent branches of setups #1 and #2 to the complete structure of setup #5.
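As a consistency check, Com-F1 can be recomputed as the harmonic mean of the mean pixel-level F1 and the image-level F1 values reported above for setups #1–#5. The values below are copied from the text; the recomputed harmonic means match the reported Com-F1 to one decimal place.

```python
# (mean pixel-level F1, image-level F1, reported Com-F1) for setups #1-#5, as quoted in the text
ablation = {
    "#1 ILD": (48.9, 65.3, 55.9),
    "#2 PLD": (52.7, 72.7, 61.1),
    "#3": (54.3, 74.1, 62.7),
    "#4": (57.7, 74.7, 65.1),
    "#5": (58.9, 81.3, 68.3),
}


def harmonic_mean(a: float, b: float) -> float:
    return 2 * a * b / (a + b)


for setup, (pix, img, reported) in ablation.items():
    recomputed = round(harmonic_mean(pix, img), 1)
    print(f"{setup}: recomputed {recomputed}, reported {reported}")
```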

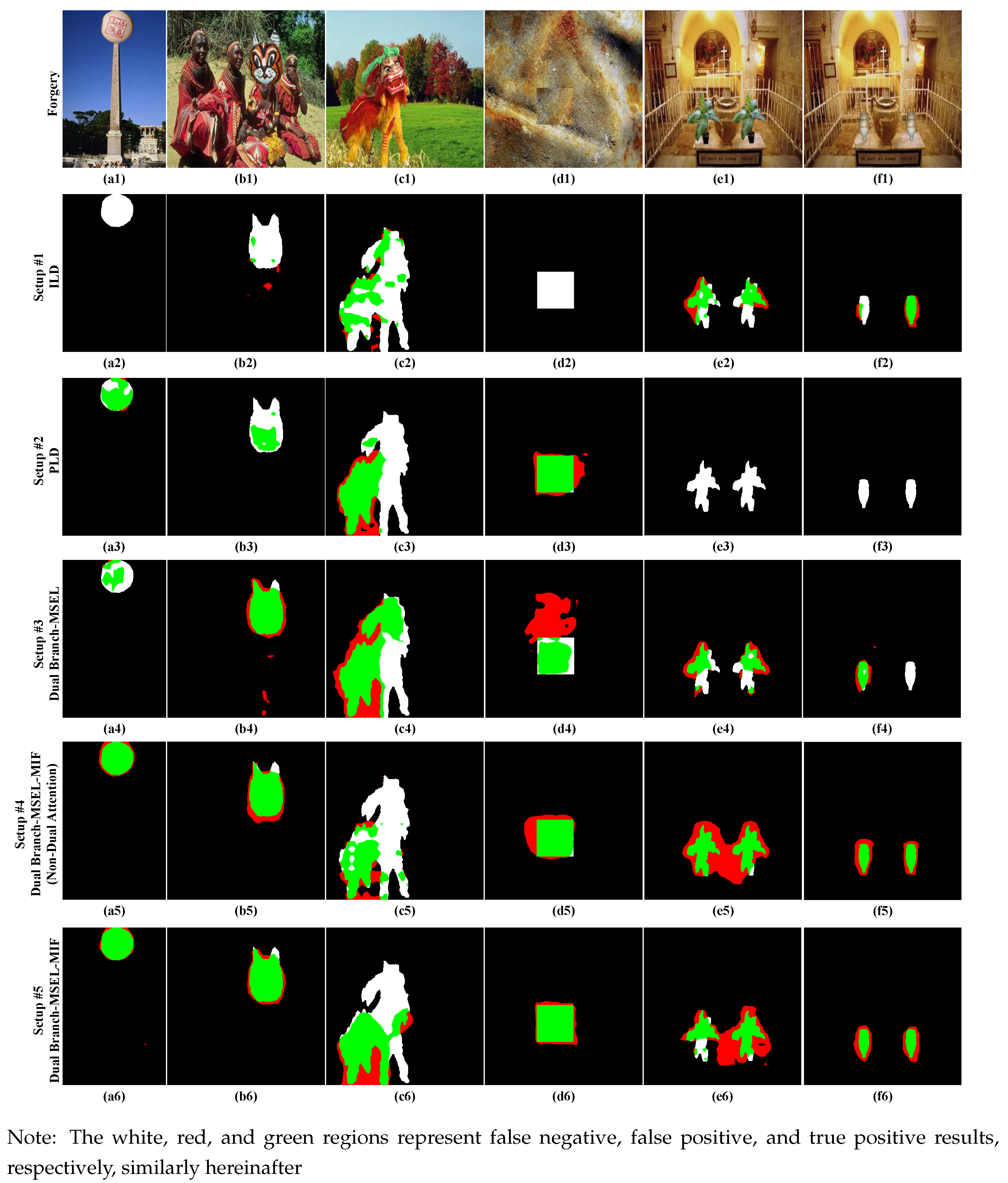

To illustrate the detection effects of the different setups more intuitively, Figure 5 presents their pixel-level results on several test images. White, red, and green regions denote false negatives, false positives, and true positives, respectively; we use the same color scheme for all subsequent visualizations. A good detection result contains many green pixels and few white or red pixels. Setups #1 and #2 produce too many white pixels. Setup #3 detects more green pixels, which is a step forward, but also produces more red pixels. Setups #4 and #5 are better than the previous three, and setup #5 is the best, with more green pixels and fewer red or white pixels.
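The color coding can be reproduced with a few lines of NumPy by comparing the binarized prediction with the ground-truth mask pixel by pixel. The function name and the black background for true negatives are assumptions; the text specifies only white (false negative), red (false positive), and green (true positive).

```python
import numpy as np


def error_overlay(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Map binary prediction and ground-truth masks (H x W, values 0/1) to an RGB image.

    White = false negative, red = false positive, green = true positive,
    black = true negative (assumed background color).
    """
    pred, gt = pred.astype(bool), gt.astype(bool)
    rgb = np.zeros(pred.shape + (3,), dtype=np.uint8)
    rgb[~pred & gt] = (255, 255, 255)  # forged pixels the model missed
    rgb[pred & ~gt] = (255, 0, 0)      # authentic pixels flagged as forged
    rgb[pred & gt] = (0, 255, 0)       # correctly detected forged pixels
    return rgb


pred = np.array([[1, 1], [0, 0]])
gt = np.array([[1, 0], [1, 0]])
overlay = error_overlay(pred, gt)  # TP, FP in the top row; FN, TN in the bottom row
```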

4.4. Comparison with State-of-the-Art

We collected pixel-level forgery detection results for eight models across five datasets, namely H-LSTM [14], ManTra-Net [15], HP-FCN [12], CR-CNN [17], GSR-Net [16], SPAN [9], CAT-Net [18], and MVSS-Net++ [2]. All of these results are taken from the paper by Dong et al. [2].

4.4.1. Pixel-Level Manipulation Detection

We also collected the source codes and corresponding training parameters of ManTra-Net [

15] and CAT-Net [

18], so we would show the detection results of the two algorithms and our proposed model, as shown in

Figure 6. The meanings of the green, red, and white colors can refer to

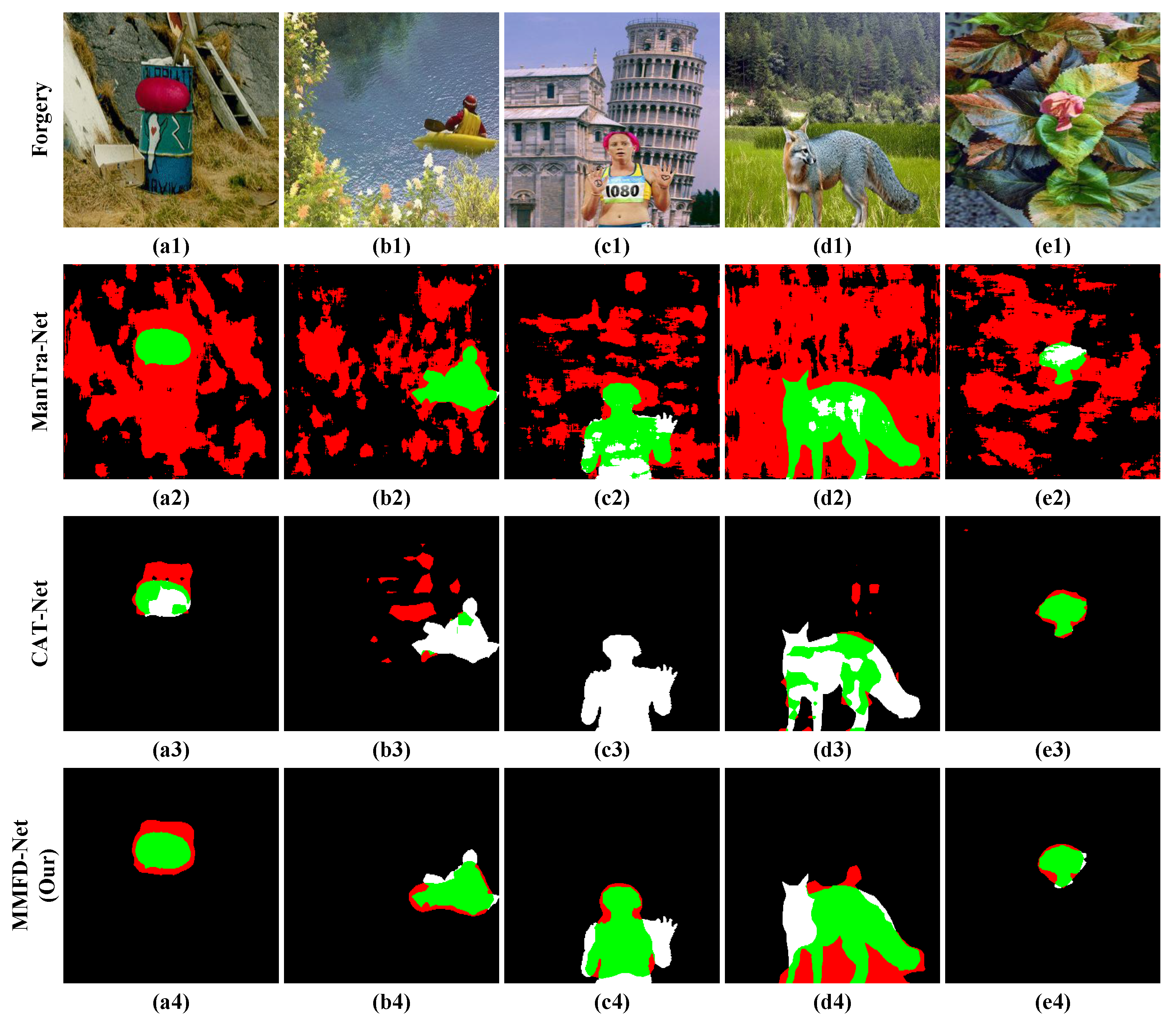

Section 4.3. In summary, the result with more green and less red or white is the best.

As Figure 6 shows, ManTra-Net produces many incorrectly detected pixels, whereas CAT-Net leaves many forged pixels undetected. MMFD-Net is clearly better than both: it detects a larger proportion of forged pixels while reducing false detections. This qualitative comparison gives an intuitive view of detection quality; the quantitative comparisons below further verify the effectiveness of the method.

Table 4 reports the pixel-level forgery detection performance of different models on multiple datasets. We adopt the pixel-level F1 score as the evaluation metric and highlight the best result on each dataset in bold. In addition, the mean F1 score over six datasets, labeled Mean, is used to evaluate the overall performance of each model.

Our proposed method achieves the best performance on COVER, CASIA v1, and IMD. On NIST and DEF-12k, it achieves the second-best performance, only 1.6% below H-LSTM and 0.8% below ManTra-Net, respectively. Compared with MVSS-Net++, our method performs better on almost all datasets. ManTra-Net's best result on DEF-12k can be attributed to its large-scale training data, which come from the COCO dataset. Compared with the models trained on CASIA v2, i.e., MVSS-Net++, GSR-Net, and CR-CNN, the proposed method performs better on almost all test datasets, indicating better generalization across different data settings. It is therefore expected that the proposed method achieves the best Mean value overall.

4.4.2. Image-Level Manipulation Detection

Table 5 evaluates the image-level forgery detection performance of different models on four datasets. Unlike Table 4, Table 5 does not include the NIST dataset, because NIST does not provide authentic images for image-level assessment.

As listed in Table 5, the AUC of the proposed model is closer to 1 than those of the compared models on all four datasets, indicating a stronger ability to distinguish forged from authentic images. The image-level F1 scores of the proposed model are also the best among the compared models, indicating strong performance in detecting forged images.

4.4.3. The Overall Performance

Table 6 lists the overall performance as measured by Com-F1, which is computed from both the pixel-level and image-level F1 scores. As shown in this table, the proposed model achieves the best performance, suggesting that our method is better suited to real-world detection conditions.

4.4.4. Comprehensive Analysis

MMFD-Net outperforms recent state-of-the-art methods such as MVSS-Net++ and CAT-Net. To elucidate why MMFD-Net surpasses these models, we analyze its key components and their contributions to the overall performance.

1. Multi-Stream Edge Feature Learning (MSEFL)

MMFD-Net introduces an MSEFL module that leverages both low-level edge features and high-level abstract features. By explicitly focusing on the boundaries of manipulated regions—where tampering traces are most likely to appear—the module enhances both detection and localization. Aggregating edge cues across multiple network stages enables the model to capture fine-grained as well as higher-level boundary information, which is particularly beneficial in challenging cases with subtle edges. Because edge cues are relatively content-agnostic, MSEFL also improves generalization across manipulation types and datasets, helping to mitigate overfitting.

2. Multi-Dimensional Information Fusion (MIF)

MMFD-Net employs an MIF module to integrate features from the color view and the noise view branches. Using a self-attention mechanism, the model dynamically reweights features and focuses on information most relevant to forgery detection. This fusion combines complementary cues—noise features are sensitive to inconsistencies introduced by tampering, while color features capture visual anomalies—yielding a richer and more comprehensive representation that improves discrimination between authentic and manipulated regions.
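To illustrate the kind of self-attention reweighting described above, the sketch below applies single-head scaled dot-product self-attention to the concatenation of color-view and noise-view tokens, so that every token can draw on features from both branches. This is a generic illustration, not the MIF module itself: the token layout, the single head, the random projection matrices, and the function names are all hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention_fuse(color_tokens, noise_tokens, w_q, w_k, w_v):
    """Single-head self-attention over the union of both branches' tokens.

    color_tokens: (N, D), noise_tokens: (M, D); w_q, w_k, w_v: (D, D).
    Returns the fused tokens (N + M, D) and the attention weights (N + M, N + M).
    """
    x = np.concatenate([color_tokens, noise_tokens], axis=0)
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # each row sums to 1
    return attn @ v, attn


rng = np.random.default_rng(0)
d = 8
color = rng.standard_normal((4, d))  # e.g. 4 spatial tokens from the color view
noise = rng.standard_normal((4, d))  # e.g. 4 spatial tokens from the noise view
w_q, w_k, w_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
fused, attn = self_attention_fuse(color, noise, w_q, w_k, w_v)
```

The off-diagonal blocks of `attn` are where color tokens attend to noise tokens and vice versa, which is the cross-view interaction that the fusion is meant to exploit.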

3. Joint Supervision Learning

We train MMFD-Net with joint supervision over multiple tasks, including image-level classification, pixel-level localization, and edge learning. This multi-task strategy encourages the model to learn semantics-agnostic forgery cues that are crucial for robust detection and generalization. By learning from multiple complementary objectives, the model excels at both pixel-level and image-level detection, reducing the risk of overfitting and improving performance across manipulation types and datasets.

Given the architectural similarities between MMFD-Net and MVSS-Net++ (both adopt color and noise branches and model manipulation edges), we provide a focused comparison. MVSS-Net++ explores manipulation boundaries by applying a fixed Sobel operator to side outputs from multiple ResNet stages. As a handcrafted operator, Sobel is not adapted through learning and may be less flexible in capturing diverse manipulation patterns. In contrast, MMFD-Net learns edge features end-to-end, allowing the boundary extractor to adapt to the data distribution and better model complex, variable tampering artifacts.
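For contrast, a fixed Sobel operator can be written with NumPy alone: its 3×3 kernels stay constant throughout training, whereas a learned edge extractor would treat such kernel weights as trainable parameters. The helpers below are a hypothetical illustration on a single-channel map, not code from either model.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)  # horizontal gradient
SOBEL_Y = SOBEL_X.T                                                    # vertical gradient


def correlate2d_valid(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation, the operation deep-learning conv layers compute."""
    kh, kw = kernel.shape
    out = np.zeros((img.shape[0] - kh + 1, img.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(img[i:i + kh, j:j + kw] * kernel)
    return out


def sobel_magnitude(img: np.ndarray) -> np.ndarray:
    """Gradient magnitude from the two fixed (non-trainable) Sobel kernels."""
    gx = correlate2d_valid(img, SOBEL_X)
    gy = correlate2d_valid(img, SOBEL_Y)
    return np.hypot(gx, gy)


# Toy 6x6 map with a vertical boundary between columns 2 and 3.
mask = np.zeros((6, 6))
mask[:, 3:] = 1.0
edges = sobel_magnitude(mask)  # shape (4, 4); responses only in windows spanning the boundary
```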

The superior performance of MMFD-Net stems from the synergy among advanced edge-feature learning (MSEFL), multi-dimensional fusion (MIF), and joint supervision. Edge-centric learning is critical for reliable localization, while fusion and multi-task training further enhance robustness and versatility. Together, these components enable MMFD-Net to achieve state-of-the-art results in image forgery detection and localization.

4.4.5. Computational Complexity and Efficiency

We follow a deployment-oriented protocol: RTX 3090 (24 GB), CUDA 12, PyTorch 1.8, FP32, input resolution , and batch size one. Latency is measured with CUDA events and explicit synchronization, using 20 warm-up iterations followed by 200 measured iterations. GPU memory is the peak allocated value recorded after resetting the CUDA memory statistics. Model size is reported as the number of parameters (M), and computational cost as GFLOPs at , using the same FLOP counter for all models.
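The warm-up-then-measure protocol can be sketched as a generic harness. This CPU-only version times an arbitrary callable with `time.perf_counter` so that it runs anywhere; on a GPU, one would instead record `torch.cuda.Event(enable_timing=True)` pairs around each call, call `torch.cuda.synchronize()` before reading `start.elapsed_time(end)`, and obtain peak memory from `torch.cuda.max_memory_allocated()` after `torch.cuda.reset_peak_memory_stats()`. The function name and the dummy workload are hypothetical.

```python
import statistics
import time


def measure_latency_ms(fn, warmup: int = 20, iters: int = 200) -> dict:
    """Call `fn` `warmup` times untimed, then time `iters` calls; return statistics in milliseconds."""
    for _ in range(warmup):
        fn()  # warm-up absorbs one-off costs such as lazy initialization and cache misses
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {"mean": statistics.mean(samples),
            "median": statistics.median(samples),
            "n": len(samples)}


stats = measure_latency_ms(lambda: sum(i * i for i in range(10_000)))
```

Reporting the median alongside the mean makes occasional outliers (for example, from background processes) easy to spot.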

Table 7 summarizes computational efficiency under our unified protocol. ManTra-Net is an extremely lightweight baseline (3.81 M parameters, 0.01 GFLOPs, 1.41 ms latency, 0.014 GB peak memory), but its detection accuracy in Tables 4, 5, and 6 is weak on most datasets. CAT-Net is substantially heavier (114.26 M parameters, 59,907.14 GFLOPs), with a latency of 41.77 ms and 0.43 GB peak memory, and its overall Com-F1 remains limited.

In contrast, MMFD-Net achieves a mean latency of 30.90 ms, 10.87 ms faster than CAT-Net (≈26.0% reduction), while using 0.58 GB of peak memory (0.15 GB more; ≈34.9%) and 154.59 M parameters (40.33 M more; ≈35.3%). Notably, its computational cost is 1667.97 GFLOPs, whereas CAT-Net's is 59,907.14 GFLOPs. Combined with the accuracy advantages in Tables 4, 5, and 6 (MMFD-Net attains the highest mean Com-F1 of 45.7), these results indicate a favorable cost–benefit trade-off: the multi-branch design introduces moderate memory and parameter overhead but delivers lower end-to-end latency and clearly superior detection performance.

For deployment, when accuracy and real-time performance are both required on a single GPU, MMFD-Net achieves a favorable accuracy–efficiency trade-off and is recommended as the default detector. When resources are severely constrained (edge/embedded scenarios), ManTra-Net can serve as a fast pre-filter, with positives rechecked by MMFD-Net. CAT-Net is heavier and slower under our setting while being less accurate; unless compatibility dictates otherwise, MMFD-Net is the better practical choice.

5. Conclusions

The proposed Multi-branch Multi-dimensional Forgery Detection Networks (MMFD-Net) effectively improve the performance and generalization ability of digital image forgery detection by integrating image-level classification, pixel-level localization of tampered regions, and edge information. MMFD-Net exploits the advantages of its multi-branch structure: it strengthens the model's perception of tampered regions through the Multi-dimensional Information Fusion (MIF) module and the Multi-Stream Edge Feature Learning (MSEFL) module, and further improves robustness through joint supervised learning. Experimental results show that MMFD-Net performs well on multiple public datasets in both pixel-level and image-level detection, and its comprehensive performance metric (Com-F1) outperforms several existing state-of-the-art methods. Moreover, MMFD-Net generalizes well across complex scenarios and various forgery types, indicating its potential for practical applications.

Despite its strong performance in the above experiments, MMFD-Net still has limitations in detecting highly compressed or low-resolution images, as well as forged images generated by GANs.

(1) Limitations in handling highly compressed and low-resolution images.

High compression ratios can markedly degrade discriminative image cues, making it challenging for any forgery detection model to identify manipulated regions. Like other deep learning models, MMFD-Net may be affected by detail loss and amplified artifacts introduced by heavy compression. Low-resolution images pose related difficulties, including reduced feature richness and coarse boundary evidence. Because the MSEFL module relies on edge cues, it can be harder to detect subtle boundaries at low resolution, which may diminish localization accuracy.

As potential remedies, future work may apply preprocessing such as super-resolution enhancement or denoising before inference, and/or train the model on more diverse datasets that explicitly include heavily compressed and low-resolution images to improve robustness to these conditions.

(2) Limitations in handling GAN-generated forgeries.

GANs can produce highly realistic forgeries that are difficult to detect. MMFD-Net, like other detectors, may struggle when inconsistencies are extremely subtle and when the adversarial generation process exploits model weaknesses to evade detection.

To address this, future work could add GAN-generated forgeries to the training data and explore adversarial training to improve the model's ability to recognize such manipulations.