1. Introduction

In recent decades, neural networks have become a fundamental tool for solving complex problems, ranging from image processing and speech recognition to time series forecasting and large-scale data classification. Their ability to learn hierarchical representations and extract features from unstructured data has positioned neural networks at the core of applications in computer vision, natural language processing, and applied artificial intelligence [1,2,3,4,5]. As architectures and algorithms continue to evolve, the applicability of neural networks has expanded significantly across multiple domains.

In their standard form, neural networks employ predefined activation functions (e.g., ReLU, sigmoid) in different layers [6]. While these nonlinear functions allow networks to model complex relationships, the resulting architectures often suffer from interpretability issues due to the opaque nature of their learned representations. In particular, linear and nonlinear transformations are treated independently, without explicit interactions, making the decision process difficult to trace and explain [7]. This limitation has motivated the development of alternative architectures that balance predictive performance with interpretability.

One such alternative is the family of Kolmogorov–Arnold Networks (KAN). Rather than being a single new architecture, KAN are inspired by the celebrated Kolmogorov–Arnold representation theorem, which states that any multivariate continuous function on a bounded domain can be represented as a finite composition of univariate continuous functions and addition. This theoretical result provides a mathematically grounded framework for constructing neural networks where nonlinearity is distributed along edges through functional activations, instead of being concentrated in fixed activation nodes [8,9,10]. Such a formulation has been explored in diverse contexts, from solving differential equations [11] and fraud detection [12,13] to data analysis, forecasting [14,15,16,17], and real-time autonomous control systems.

Given the growing interest and rapid development of KAN-based models, there is now a fragmented body of literature that introduces a variety of structural variants, each with its own mathematical formulation, strengths, and limitations. To the best of our knowledge, no systematic review has yet consolidated these contributions. Therefore, the purpose of this article is not to propose a novel network, but to provide a comprehensive review that unifies the main structural variants of KAN, including Wavelet-KAN, Rational-KAN, MonoKAN, Physics-KAN, Linear Spline KAN, and Orthogonal Polynomial KAN. Throughout the paper, we adopt a consistent mathematical notation: input vectors are denoted as $\mathbf{x}$, intermediate features as $z_q$, edge functions as $\phi_{q,p}$, and outputs as $y$. This notation will be used to ensure clarity and uniformity in all subsequent sections.

In this context, the research aligns with the Sustainable Development Goals (SDG). It contributes to SDG 9 (Industry, Innovation and Infrastructure) by providing a rigorous, standardized synthesis of KAN variants that supports interoperable and reproducible digital infrastructures. In this way, the study not only organizes and clarifies the state of the art on KAN with unified notation and comparable criteria, but also narrows the implementation gap by proposing methodological guidelines for AI systems that are accurate, interpretable, and energy-aware.

The remainder of the paper is organized as follows:

Section 2 introduces the mathematical background of the Kolmogorov–Arnold theorem and its neural interpretations.

Section 3 reviews the main KAN variants in detail, highlighting their mathematical structures, advantages, and limitations.

Sections 4 to 15 then develop, in progressive order, families and extensions based on splines, polynomials (Chebyshev, Gram, and other orthogonal ones), wavelets, monotonicity and physical constraints, rational functions, and piecewise linear activations, addressing their formulation, advantages, limitations, and applications, and including a comprehensive comparison.

Section 16 provides a comparative discussion of these approaches, and Section 17 concludes with perspectives and open challenges for future research.

2. Methodology and Model Architecture

The following section provides a description of the Kolmogorov–Arnold Network (KAN) architecture, intended to help the reader understand how the theoretical Kolmogorov–Arnold representation theorem is implemented in practice. This construction is typically realized using spline basis functions to approximate both the inner and outer univariate functions that appear in the decomposition [8,9,10,11]. The mathematical structure and its key components are described below.

2.1. Inner Functions as Spline Expansions

The inner univariate functions $\phi_{q,p}$, which act on each coordinate $x_p$ of the input vector $\mathbf{x} = (x_1, \dots, x_n)$, are parameterized by univariate spline expansions. Each inner function is expressed as a linear combination of local spline basis functions:

$$\phi_{q,p}(x_p) = \sum_{k=1}^{K} c_{q,p,k}\, B_k(x_p),$$

where:

$B_k$ are spline basis functions (commonly cubic B-splines) that guarantee local smoothness and piecewise continuity [12].

$c_{q,p,k}$ are trainable coefficients controlling the contribution of each basis.

$K$ denotes the number of knots (partition points of the domain of $x_p$), which define local regions where different polynomial pieces apply.

Purpose: inner splines capture nonlinear relationships of each scalar input $x_p$ with localized smooth adaptations, mitigating the global oscillations typical of high-order polynomial approximations [13].

2.2. Aggregation of Inner Functions

The outputs of the inner functions are aggregated linearly to form intermediate latent features $z_q$:

$$z_q = \sum_{p=1}^{n} \phi_{q,p}(x_p), \qquad q = 1, \dots, 2n+1,$$

where the index $q$ ranges up to $2n+1$ in accordance with the Kolmogorov–Arnold theorem, which requires $2n+1$ outer univariate functions for the representation of any $n$-variate continuous function [14]. These latent features $z_q$ encode nonlinear interactions across the $n$ input coordinates.

2.3. Outer Functions as Splines

The outer univariate functions $\Phi_q$ transform the intermediate features into outputs. They are also represented via spline expansions:

$$\Phi_q(z_q) = \sum_{m=1}^{M} d_{q,m}\, \tilde{B}_m(z_q),$$

where:

$\tilde{B}_m$ are spline basis functions ensuring continuity and differentiability [15].

$d_{q,m}$ are trainable coefficients.

$M$ is the number of basis functions selected for each outer spline.

Purpose: these functions combine nonlinear contributions from intermediate features $z_q$, yielding the hierarchical composition required by the Kolmogorov–Arnold decomposition [16,17].

2.4. Final Model Output

The prediction of the KAN is obtained by summing all transformed features:

$$y = \sum_{q=1}^{2n+1} \Phi_q(z_q) = \sum_{q=1}^{2n+1} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right).$$

This output construction preserves consistency with the Kolmogorov–Arnold representation theorem while combining both localized inner contributions and global outer transformations.

2.5. Trainable Parameters and Optimization

The parameter set of the KAN consists of:

Inner spline coefficients $c_{q,p,k}$: approximately $n(2n+1)K$ parameters, accounting for $n$ inputs, $2n+1$ intermediate sums, and $K$ spline basis functions per coordinate.

Outer spline coefficients $d_{q,m}$: $(2n+1)M$ parameters, with $M$ basis functions per outer spline.

Optimization is typically performed via gradient-based algorithms (e.g., Adam), minimizing a loss such as the mean squared error over a dataset [18,19]. The inherent smoothness of splines acts as a form of implicit regularization. Explicit regularization techniques such as curvature penalization or norm-based regularization of the coefficients can be applied to further prevent oscillations or overfitting [20,21]. Input normalization is recommended to stabilize training, since splines are defined over compact intervals [22].
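The construction in Sections 2.1 to 2.5 can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation: the function names (`cubic_bspline`, `spline_eval`, `kan_forward`, `parameter_count`), the uniform knot layout, the input range $[-1, 1]$, and the outer range `z_range` are all assumptions made for the example. It evaluates $y = \sum_q \Phi_q\left(\sum_p \phi_{q,p}(x_p)\right)$ with uniform cubic B-splines and counts the trainable coefficients.

```python
def cubic_bspline(u):
    """Uniform cubic B-spline with support [0, 4); u is measured in knot spacings."""
    if 0.0 <= u < 1.0:
        return u ** 3 / 6.0
    if 1.0 <= u < 2.0:
        return (-3 * u ** 3 + 12 * u ** 2 - 12 * u + 4) / 6.0
    if 2.0 <= u < 3.0:
        return (3 * u ** 3 - 24 * u ** 2 + 60 * u - 44) / 6.0
    if 3.0 <= u < 4.0:
        return (4.0 - u) ** 3 / 6.0
    return 0.0


def spline_eval(coeffs, x, lo, hi):
    """Evaluate sum_k coeffs[k] * B_k(x) on [lo, hi]; needs len(coeffs) >= 4."""
    h = (hi - lo) / (len(coeffs) - 3)   # K cubic bases cover K - 3 knot intervals
    s = (x - lo) / h
    # Basis k starts at knot lo + (k - 3) * h (assumed uniform layout).
    return sum(c * cubic_bspline(s - (k - 3)) for k, c in enumerate(coeffs))


def kan_forward(x, inner, outer, z_range):
    """Two-level KAN output for inputs assumed to be normalized to [-1, 1]."""
    n = len(x)
    y = 0.0
    for q in range(2 * n + 1):
        # Aggregation of inner functions (Section 2.2).
        z_q = sum(spline_eval(inner[q][p], x[p], -1.0, 1.0) for p in range(n))
        # Outer spline on an assumed range [-z_range, z_range] (Section 2.3).
        y += spline_eval(outer[q], z_q, -z_range, z_range)
    return y


def parameter_count(n, K, M):
    """Inner plus outer spline coefficients (Section 2.5)."""
    return n * (2 * n + 1) * K + (2 * n + 1) * M


n, K, M = 2, 6, 8
inner = [[[1.0] * K for _ in range(n)] for _ in range(2 * n + 1)]
outer = [[1.0] * M for _ in range(2 * n + 1)]
y = kan_forward([0.3, -0.7], inner, outer, z_range=3.0)
print(y, parameter_count(n, K, M))
```

With all coefficients set to one, every spline equals 1 on its domain (partition of unity), so the output equals $2n+1$ up to rounding, which gives a quick sanity check. In a trainable model the coefficients would be updated by a gradient-based optimizer, and latent values falling outside the assumed outer range would need rescaling or a wider knot grid.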

3. Mathematical Foundations of Interpolation Methods

Understanding the theoretical underpinnings of Kolmogorov–Arnold Networks (KAN) requires a solid foundation in function approximation theory, particularly the Kolmogorov–Arnold representation theorem and its implications for neural architecture design. This section outlines the mathematical principles that justify the structure of KAN, including univariate function decomposition, spline-based function construction, and the transition from traditional neural activations to more expressive basis function models. By grounding KAN in formal mathematical constructs, we establish both the theoretical motivation and the functional versatility of this class of networks.

3.1. Lagrange Polynomials

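The Lagrange polynomial interpolates $n$ points $(x_i, y_i)$ through a linear combination of basis polynomials:

$$P(x) = \sum_{i=1}^{n} y_i\, \ell_i(x), \qquad \ell_i(x) = \prod_{j \neq i} \frac{x - x_j}{x_i - x_j}.$$

Each term $\ell_i(x)$ is a polynomial that equals 1 at $x_i$ and 0 at other nodes. This ensures $P(x_i) = y_i$. However, for large $n$ on equally spaced nodes, the Runge phenomenon causes extreme oscillations [23].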
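The oscillation problem can be observed numerically. The sketch below is an illustration under stated assumptions: the test function $1/(1+25x^2)$ on $[-1, 1]$ is the usual textbook example and is not taken from this review, and the helper names are hypothetical. It interpolates on equally spaced nodes and reports the maximum error on a fine grid.

```python
def lagrange_eval(xs, ys, x):
    """Evaluate the Lagrange interpolant through (xs[i], ys[i]) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        basis = 1.0
        for j, xj in enumerate(xs):
            if j != i:
                basis *= (x - xj) / (xi - xj)   # equals 1 at xi, 0 at other nodes
        total += yi * basis
    return total


def runge(x):
    """Assumed test function, the classical Runge example."""
    return 1.0 / (1.0 + 25.0 * x * x)


def max_error(n_nodes, n_test=401):
    """Maximum interpolation error on [-1, 1] with equally spaced nodes."""
    xs = [-1.0 + 2.0 * i / (n_nodes - 1) for i in range(n_nodes)]
    ys = [runge(x) for x in xs]
    grid = [-1.0 + 2.0 * i / (n_test - 1) for i in range(n_test)]
    return max(abs(lagrange_eval(xs, ys, t) - runge(t)) for t in grid)


for n_nodes in (6, 11, 21):
    print(n_nodes, max_error(n_nodes))
```

The error grows as nodes are added, even though the interpolant matches the data exactly at every node, which is the behavior that motivates local bases such as splines.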

3.2. Bézier Curves

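A Bézier curve of degree $m$ is defined using Bernstein polynomials:

$$B(t) = \sum_{i=0}^{m} \binom{m}{i} (1 - t)^{m-i}\, t^{i}\, P_i, \qquad t \in [0, 1].$$

The curve passes only through $P_0$ and $P_m$, while intermediate points ($P_1, \dots, P_{m-1}$) act as “shape controllers”. The derivative at the endpoints depends on adjacent points:

$$B'(0) = m\,(P_1 - P_0), \qquad B'(1) = m\,(P_m - P_{m-1}).$$

Bézier curves are widely used in computer graphics and geometric modeling due to their intuitive control and smoothness properties [24].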
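As a small illustration of the endpoint properties, the following sketch evaluates a planar Bézier curve directly from the Bernstein form. The control points and function names are assumptions chosen for the example.

```python
from math import comb


def bezier(points, t):
    """Evaluate a planar Bezier curve at t in [0, 1] from its control points."""
    m = len(points) - 1
    x = y = 0.0
    for i, (px, py) in enumerate(points):
        weight = comb(m, i) * (1 - t) ** (m - i) * t ** i   # Bernstein polynomial
        x += weight * px
        y += weight * py
    return (x, y)


def endpoint_derivatives(points):
    """Tangent vectors at t = 0 and t = 1: m (P1 - P0) and m (Pm - Pm-1)."""
    m = len(points) - 1
    (x0, y0), (x1, y1) = points[0], points[1]
    (xa, ya), (xb, yb) = points[-2], points[-1]
    return (m * (x1 - x0), m * (y1 - y0)), (m * (xb - xa), m * (yb - ya))


control = [(0.0, 0.0), (1.0, 2.0), (3.0, 2.0), (4.0, 0.0)]   # hypothetical control polygon
print(bezier(control, 0.0), bezier(control, 1.0))            # first and last control points
print(endpoint_derivatives(control))
```

Only the first and last control points lie on the curve; the interior points shape it through the endpoint tangents and the Bernstein weights.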

3.3. Piecewise Linear Interpolation

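Within each interval $[x_i, x_{i+1}]$, the function is a straight line:

$$S(x) = y_i + \frac{y_{i+1} - y_i}{x_{i+1} - x_i}\,(x - x_i).$$

The function is continuous ($C^0$) but not differentiable at nodes. The maximum error (for $f \in C^2$) is bounded by:

$$\max_x |f(x) - S(x)| \le \frac{h^2}{8}\, \max_x |f''(x)|,$$

where $h$ is the maximum interval size. This method is simple and fast, although limited in smoothness [25,26].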
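The $h^2/8$ bound can be checked numerically. The sketch below assumes $f(x) = \sin x$ on $[0, \pi]$, for which $\max |f''| = 1$; the function names and grid sizes are illustrative choices.

```python
import math


def piecewise_linear(xs, ys, x):
    """Linear interpolant through sorted nodes xs; clamps to the end segments."""
    i = 0
    while i < len(xs) - 2 and x > xs[i + 1]:
        i += 1
    slope = (ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i])
    return ys[i] + slope * (x - xs[i])


def max_interp_error(n_intervals, n_test=2001):
    """Maximum error of piecewise linear interpolation of sin on [0, pi]."""
    a, b = 0.0, math.pi
    xs = [a + (b - a) * i / n_intervals for i in range(n_intervals + 1)]
    ys = [math.sin(x) for x in xs]
    grid = [a + (b - a) * i / (n_test - 1) for i in range(n_test)]
    return max(abs(piecewise_linear(xs, ys, t) - math.sin(t)) for t in grid)


for n_intervals in (10, 20):
    h = math.pi / n_intervals
    print(n_intervals, max_interp_error(n_intervals), h * h / 8.0)   # error vs. bound
```

Halving $h$ reduces the observed error by roughly a factor of four, consistent with the quadratic rate in the bound.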

3.4. Cubic Splines

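Each segment of the cubic spline $S_i(x)$ in $[x_i, x_{i+1}]$ is a third-degree polynomial:

$$S_i(x) = a_i + b_i (x - x_i) + c_i (x - x_i)^2 + d_i (x - x_i)^3.$$

Continuity conditions $C^2$ (function, first, and second derivatives) are enforced at nodes. For a natural spline, the tridiagonal system is solved:

$$h_{i-1} M_{i-1} + 2\,(h_{i-1} + h_i)\, M_i + h_i M_{i+1} = 6 \left( \frac{y_{i+1} - y_i}{h_i} - \frac{y_i - y_{i-1}}{h_{i-1}} \right), \qquad M_0 = M_n = 0,$$

where $h_i = x_{i+1} - x_i$ and $M_i = S''(x_i)$. Cubic splines are the foundation for many KAN variants due to their smoothness and local control properties [27,28,29,30].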

3.5. Hermite Splines

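A Hermite spline interpolates values $y_i$ and derivatives $y_i'$ at each node:

$$H(x) = h_{00}(t)\, y_i + h_{10}(t)\, h_i\, y_i' + h_{01}(t)\, y_{i+1} + h_{11}(t)\, h_i\, y_{i+1}', \qquad t = \frac{x - x_i}{h_i}, \quad h_i = x_{i+1} - x_i,$$

with the cubic Hermite basis $h_{00}(t) = 2t^3 - 3t^2 + 1$, $h_{10}(t) = t^3 - 2t^2 + t$, $h_{01}(t) = -2t^3 + 3t^2$, and $h_{11}(t) = t^3 - t^2$. Continuity $C^1$ and explicit control of slopes at nodes are guaranteed, ideal for applications requiring known derivatives (e.g., trajectories with specified velocities) [30].

In order to contextualize the choice of functional bases used within Kolmogorov–Arnold Networks, it is useful to briefly review classical interpolation techniques. Table 1 summarizes the main methods, highlighting their advantages and disadvantages. This comparative overview provides the mathematical background against which spline- and polynomial-based variants of KANs can be better understood.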

4. Advantages of Splines in KAN

Splines, when implemented in Kolmogorov–Arnold Networks (KAN), provide mathematical and computational advantages that enhance model performance and stability. This section highlights their properties, followed by a detailed example of cubic B-splines [31,32,33].

4.1. Local Adaptability

Spline basis functions possess compact support, meaning they are non-zero only over a finite subinterval of the domain. As a result, splines can adjust their shape in specific regions without affecting distant areas. In a physiological signal modeling task, for example, a spline can adapt locally to a cardiac peak at a given time instant while preserving stability in normal zones. Formally,

$$S(x) = \sum_{k} c_k\, B_k(x),$$

where $B_k$ is non-zero only in the neighborhood of the knot $t_k$ [34,35]. This property confines the effect of each coefficient to a local region, which prevents a local adjustment from turning into overfitting at a global scale.

4.2. Guaranteed Smoothness

Cubic splines ensure $C^2$ continuity, i.e., continuity of the function and its first two derivatives. This property is crucial to avoid physically unrealistic discontinuities and to guarantee smooth gradients in the network output. For instance, in fluid dynamics simulations one requires a twice continuously differentiable interpolant, $S \in C^2[a, b]$; among such interpolants, the natural cubic spline minimizes the curvature energy functional

$$E[S] = \int_a^b \left( S''(x) \right)^2 dx,$$

a standard regularization principle in physics and approximation theory [34,35].

4.3. Computational Efficiency

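Efficient recursive algorithms such as De Boor’s algorithm allow evaluation of B-splines with complexity $\mathcal{O}(p^2)$ per evaluation point, where $p$ is the spline degree. For cubic splines ($p = 3$), the cost per point is therefore a small constant that does not grow with the number of knots. This is significantly more efficient than global interpolation methods such as Lagrange polynomials, which typically require $\mathcal{O}(n^2)$ operations for $n$ nodes [36,37].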

4.4. Interpretability of Coefficients

The coefficients in spline expansions carry a direct geometric interpretation. For example, for an outer spline

$$\Phi_q(z) = \sum_{m=1}^{M} d_{q,m}\, \tilde{B}_m(z),$$

a large magnitude of $d_{q,m}$ indicates a dominant influence of the basis function $\tilde{B}_m$ on the output. This property enables the inspection of fitted models: coefficients can highlight overfitting, emphasize relevant regions of the input space, or identify dominant features [38].

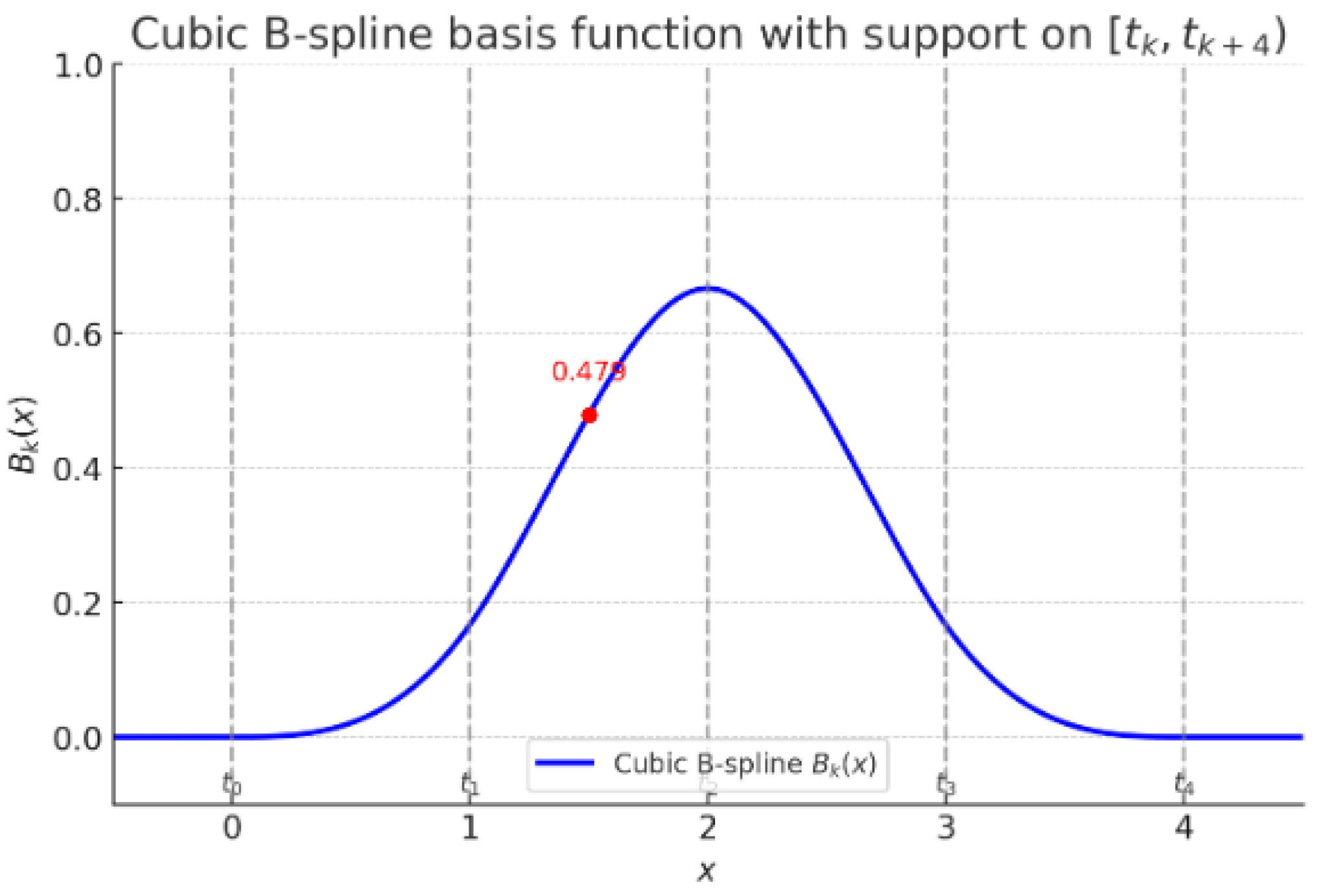

4.5. Detailed Example: Cubic B-Splines

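A cubic B-spline basis function is defined piecewise over four consecutive knot intervals. Let $t_i \le t_{i+1} \le \dots \le t_{i+4}$ be a non-decreasing sequence of knots, and $h = t_{j+1} - t_j$ the uniform knot spacing. Then, with $u = (x - t_i)/h$,

$$B_i(x) = \frac{1}{6} \begin{cases} u^3, & 0 \le u < 1, \\ -3u^3 + 12u^2 - 12u + 4, & 1 \le u < 2, \\ 3u^3 - 24u^2 + 60u - 44, & 2 \le u < 3, \\ (4 - u)^3, & 3 \le u < 4, \\ 0, & \text{otherwise.} \end{cases}$$

Key properties:

Local support: $B_i$ is non-zero only on $[t_i, t_{i+4}]$.

Normalization: $\sum_i B_i(x) = 1$.

Smoothness: $B_i$ belongs to $C^2$, ensuring continuity of $B_i$, $B_i'$, and $B_i''$.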

Numerical example: For uniform knots

(

), evaluating

at

gives

Figure 1 illustrates both the locality and the normalization property of the cubic B-spline.
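The locality and normalization properties can also be checked with the Cox–de Boor recursion, which works for arbitrary non-decreasing knots. The sketch below is illustrative: the knot vector $0, 1, \dots, 7$ and the evaluation points are assumptions chosen for the example, not values taken from the text.

```python
def bspline_basis(i, p, knots, x):
    """Cox-de Boor recursion for the i-th B-spline basis function of degree p."""
    if p == 0:
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    left = right = 0.0
    if knots[i + p] != knots[i]:              # skip terms with zero-length spans
        left = (x - knots[i]) / (knots[i + p] - knots[i]) \
            * bspline_basis(i, p - 1, knots, x)
    if knots[i + p + 1] != knots[i + 1]:
        right = (knots[i + p + 1] - x) / (knots[i + p + 1] - knots[i + 1]) \
            * bspline_basis(i + 1, p - 1, knots, x)
    return left + right


knots = [float(k) for k in range(8)]          # assumed uniform knots 0, 1, ..., 7
at_knot = [bspline_basis(i, 3, knots, 3.0) for i in range(4)]
mid_span = [bspline_basis(i, 3, knots, 3.5) for i in range(4)]
print(at_knot, sum(at_knot))      # 1/6, 2/3, 1/6, 0 up to rounding; sum is 1
print(mid_span, sum(mid_span))    # four non-zero values; sum is 1
print(bspline_basis(0, 3, knots, 5.0))        # outside the support [0, 4): zero
```

At most four cubic basis functions are active at any point, and on the interval covered by all of them their values sum to one.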

5. New Architectures and Applications of Kolmogorov-Arnold Networks (KAN)

Kolmogorov–Arnold Networks (KAN) represent a significant evolution in the design of machine learning architectures, combining the classical Kolmogorov–Arnold representation theorem with modern interpolation techniques based on adaptive splines [37,38,39]. These networks stand out for their ability to process dynamic and sequential data, such as financial time series or biomedical signals [37,38,40], thanks to a structure that integrates internal ($\phi$) and external ($\Phi$) functions in hierarchical layers. In finance, KAN are applied to market prediction, modeling nonlinearities in high-frequency data, and to option pricing, where they are reported to outperform traditional methods like Black–Scholes in complex scenarios [32]. In robotics, their implementation in reinforcement learning enables autonomous control of dynamic systems, optimizing real-time decision-making [33,34], while in healthcare, they facilitate treatment personalization through the analysis of individual biological responses [35,36]. Among their key advantages are local adaptability, which adjusts parameters in specific regions without affecting the global model, and the interpretability of coefficients, which reveal contributions from critical variables [36,37]. However, they face challenges such as robustness against noise in volatile financial environments and latency in embedded hardware for real-time robotic applications [38]. Although promising, their industrial adoption requires advances in generalization through techniques like transfer learning [39], which would consolidate their potential in fields demanding mathematical precision and practical adaptability [41,42,43].

6. Classification of KAN Variants: Replacement of Splines with Chebyshev Polynomials

A key variant of Kolmogorov–Arnold Networks (KAN) replaces spline-based activation functions with Chebyshev polynomials, modifying both their mathematical structure and functional behavior [44,45]. In standard KAN, the inner functions $\phi_{q,p}$ are defined using spline bases:

$$\phi_{q,p}(x) = \sum_{k=1}^{K} c_{q,p,k}\, B_k(x),$$

where $B_k$ are local basis functions supported on intervals defined by knots. In the Chebyshev variant, splines are replaced with global orthogonal polynomials:

$$\phi_{q,p}(x) = \sum_{k=0}^{d} c_{q,p,k}\, T_k(x).$$

Chebyshev polynomials $T_k$ minimize the maximum error in the $L^\infty$ norm, achieving near-optimal polynomial approximation with exponential convergence for smooth functions [44,46]. However, since the trigonometric form $T_k(x) = \cos(k \arccos x)$ is undefined outside $[-1, 1]$, and the polynomial itself grows rapidly there, direct use is unstable in unbounded domains.

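To overcome this, a nonlinear mapping via the hyperbolic tangent compresses the domain:

$$\tilde{T}_k(x) = T_k(\tanh(x)),$$

which ensures inputs $x \in \mathbb{R}$ are mapped into $(-1, 1)$, preserving stability [45]. For instance, for any input with $|x| > 1$, $\tanh(x)$ still lies in $(-1, 1)$ and $T_k(\tanh(x))$ is well defined and bounded by 1 in magnitude, whereas the trigonometric form $\cos(k \arccos x)$ would be undefined.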
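The effect of the tanh compression can be illustrated with a short sketch. It evaluates $T_k$ through the standard three-term recurrence $T_{k+1}(x) = 2x\,T_k(x) - T_{k-1}(x)$; the function names, coefficients, and test inputs are assumptions made for the example.

```python
import math


def chebyshev(k, x):
    """Chebyshev polynomial T_k(x) via the recurrence T_{k+1} = 2 x T_k - T_{k-1}."""
    if k == 0:
        return 1.0
    t_prev, t_curr = 1.0, x
    for _ in range(k - 1):
        t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev
    return t_curr


def cheb_activation(coeffs, x):
    """Chebyshev-type activation: sum_k coeffs[k] * T_k(tanh(x))."""
    u = math.tanh(x)                  # compress the real line into (-1, 1)
    return sum(c * chebyshev(k, u) for k, c in enumerate(coeffs))


x = 10.0                                        # far outside [-1, 1]
print(chebyshev(5, x))                          # polynomial value explodes
print(chebyshev(5, math.tanh(x)))               # stays within [-1, 1]
print(cheb_activation([0.5, -1.0, 0.25], x))    # hypothetical coefficients
```

The compressed activation stays bounded by the sum of the absolute coefficients for every real input, at the price of saturating for large $|x|$.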

6.1. Structural Impact on KAN

Switching from local splines to global Chebyshev polynomials produces important architectural changes. Local adaptability is sacrificed, but extrapolation capabilities are enhanced [44]. Moreover, the model is simplified by removing explicit knot dependence, reducing the parameter count to the set of coefficients $c_{q,p,k}$.

6.2. Advantages and Disadvantages

The Chebyshev-based formulation provides several practical advantages. It offers numerical stability on bounded domains, ensures smooth extrapolation beyond the training interval, and typically requires fewer parameters compared to spline-based KAN. Furthermore, the reduced flexibility of global polynomials can help mitigate overfitting, particularly in noisy datasets [

44,

45]. Nevertheless, these benefits come at a cost. The replacement of local spline bases with global polynomials reduces the ability to model abrupt local variations and increases sensitivity to non-smooth or discontinuous functions. Additionally, the model is inherently restricted to bounded domains, requiring nonlinear mappings such as tanh for stability outside the canonical interval, which may introduce distortions [

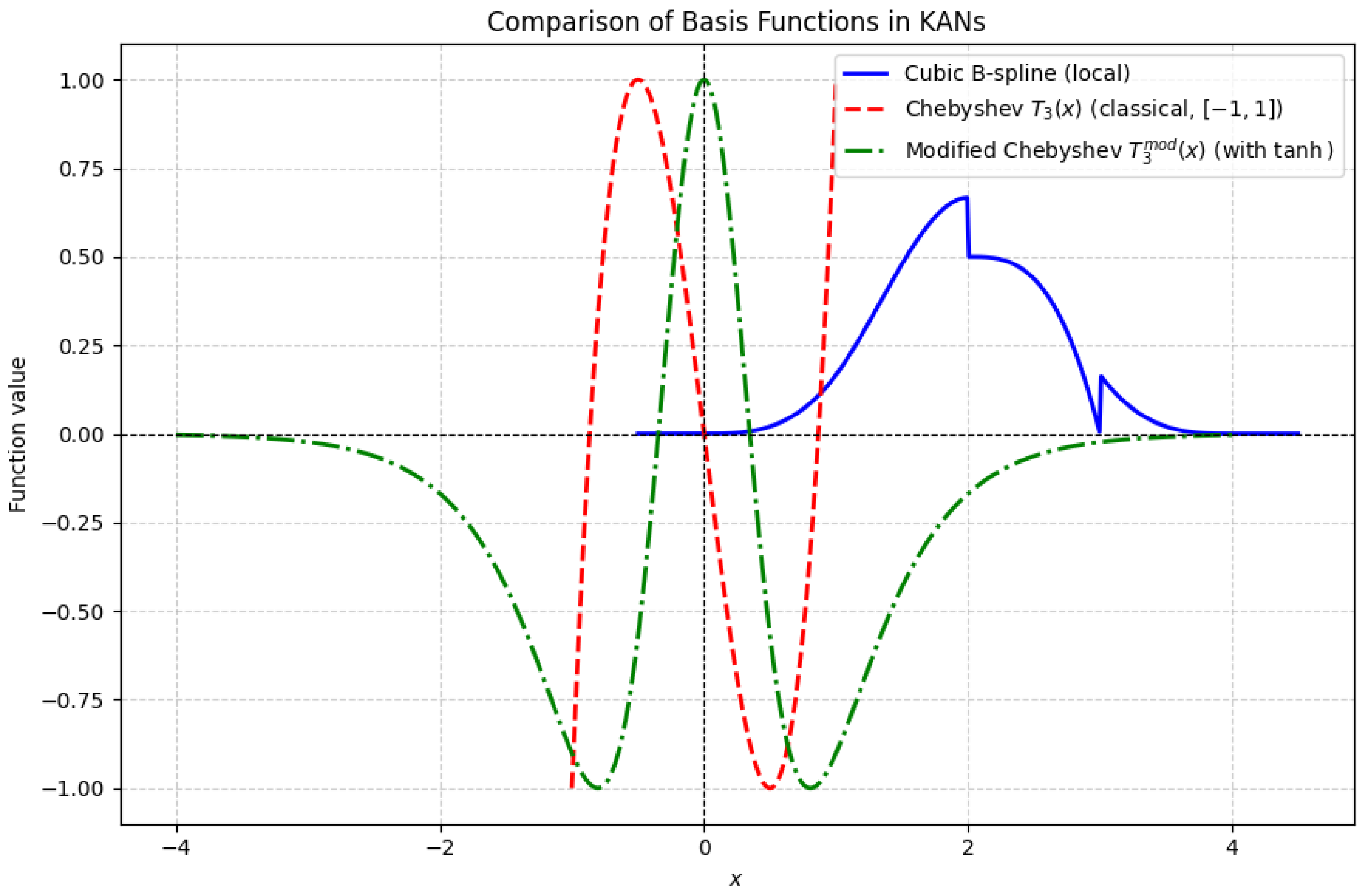

47]. In order to highlight the structural and functional differences introduced by replacing spline functions with Chebyshev polynomials,

Table 2 provides a comparative summary. This table contrasts spline-based KAN with both the classical and the modified Chebyshev formulations, emphasizing aspects such as support, adaptability, stability, parameterization, and domains of applicability. The comparative perspective allows the reader to clearly identify the trade-offs between local flexibility and global stability, as well as the advantages of domain extension achieved through nonlinear mappings.

To complement the tabular analysis, Figure 2 illustrates the behavior of the basis functions employed in different KAN variants. The cubic B-spline demonstrates its local adaptability restricted to knot-defined intervals, while the classical Chebyshev polynomial $T_k(x)$ exhibits a smooth but globally constrained behavior within $[-1, 1]$. In contrast, the modified Chebyshev $T_k(\tanh(x))$ extends this approximation to the entire real line by incorporating a tanh mapping, ensuring numerical stability across unbounded domains. This visual comparison reinforces the analytical discussion by showing the practical consequences of choosing local versus global basis functions.

7. Classification of KAN Variants: Gram Polynomials (GKAN)

A novel variant of Kolmogorov–Arnold Networks, termed GKAN, replaces traditional spline-based activations with orthogonal Gram polynomials. This approach optimizes computational efficiency in sequential and convolutional architectures while preserving approximation flexibility [44]. In practice, GKAN address the trade-off between expressiveness and resource constraints in 1D-CNN and time-series tasks.

7.1. Structural and Mathematical Foundation

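The GKAN formulation defines activations using Gram polynomials $G_k(x)$, which are orthogonal on the interval $[-1, 1]$. A general activation can be written as

$$\phi(x) = \sum_{k=0}^{d} c_k\, G_k(x),$$

where the Gram polynomials are normalized Legendre polynomials:

$$G_k(x) = \sqrt{\frac{2k+1}{2}}\; P_k(x).$$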

7.2. Mathematical Purpose

The adoption of Gram polynomials in GKAN serves three key purposes. First, they reduce computational cost, since Gram polynomials avoid the recursive constructions typical of Chebyshev or spline bases, which lowers the time complexity of evaluating each activation. Second, they provide inherent orthogonality on $[-1, 1]$, minimizing numerical instability during coefficient fitting and ensuring robustness in high-dimensional data. Third, despite being global functions, the weighted expansion $\sum_k c_k\, G_k(x)$ retains sufficient flexibility to approximate localized features through appropriate coefficient adaptation. This combination ensures efficient, stable, and adaptable modeling for sequential data tasks [45].

7.3. Example: Gram Polynomial Evaluation

For

and

, the Gram polynomial

is obtained from the Legendre polynomial

. Substituting yields:
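As a hedged illustration of this kind of evaluation, the sketch below computes Legendre polynomials from their closed-form coefficient sum and applies the orthonormal scaling $\sqrt{(2k+1)/2}$ on $[-1, 1]$. Both the scaling and the sample values (order 2, input 0.5) are assumptions made for the example; they are not taken from the source. A midpoint quadrature then checks orthonormality numerically.

```python
from math import comb, sqrt


def legendre(k, x):
    """Legendre polynomial P_k(x) from its explicit (non-recursive) coefficient sum."""
    total = sum((-1) ** j * comb(k, j) * comb(2 * k - 2 * j, k) * x ** (k - 2 * j)
                for j in range(k // 2 + 1))
    return total / 2 ** k


def gram(k, x):
    """Normalized Legendre polynomial; the scaling is an assumed convention."""
    return sqrt((2 * k + 1) / 2.0) * legendre(k, x)


def inner_product(i, j, n=2000):
    """Midpoint-rule approximation of the integral of G_i * G_j over [-1, 1]."""
    h = 2.0 / n
    return h * sum(gram(i, -1.0 + (m + 0.5) * h) * gram(j, -1.0 + (m + 0.5) * h)
                   for m in range(n))


print(legendre(2, 0.5))                          # (3 * 0.25 - 1) / 2 = -0.125
print(gram(2, 0.5))
print(inner_product(2, 2), inner_product(1, 3))  # close to 1 and close to 0
```

Orthonormality is what keeps the coefficient-fitting problem well conditioned: each coefficient can be estimated almost independently of the others.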

7.4. Advantages and Disadvantages

The use of Gram polynomials in KAN presents important advantages. Their reduced computational complexity makes them particularly suitable for real-time and resource-constrained applications. The built-in orthogonality of

prevents matrix ill-conditioning and stabilizes gradient-based training, thereby improving robustness in high-dimensional tasks. However, they also exhibit limitations. Since Gram polynomials are defined on the bounded interval

, scaling transformations are required for general inputs. Furthermore, they offer less local adaptability than spline bases, which can restrict their performance in modeling abrupt variations [

47,

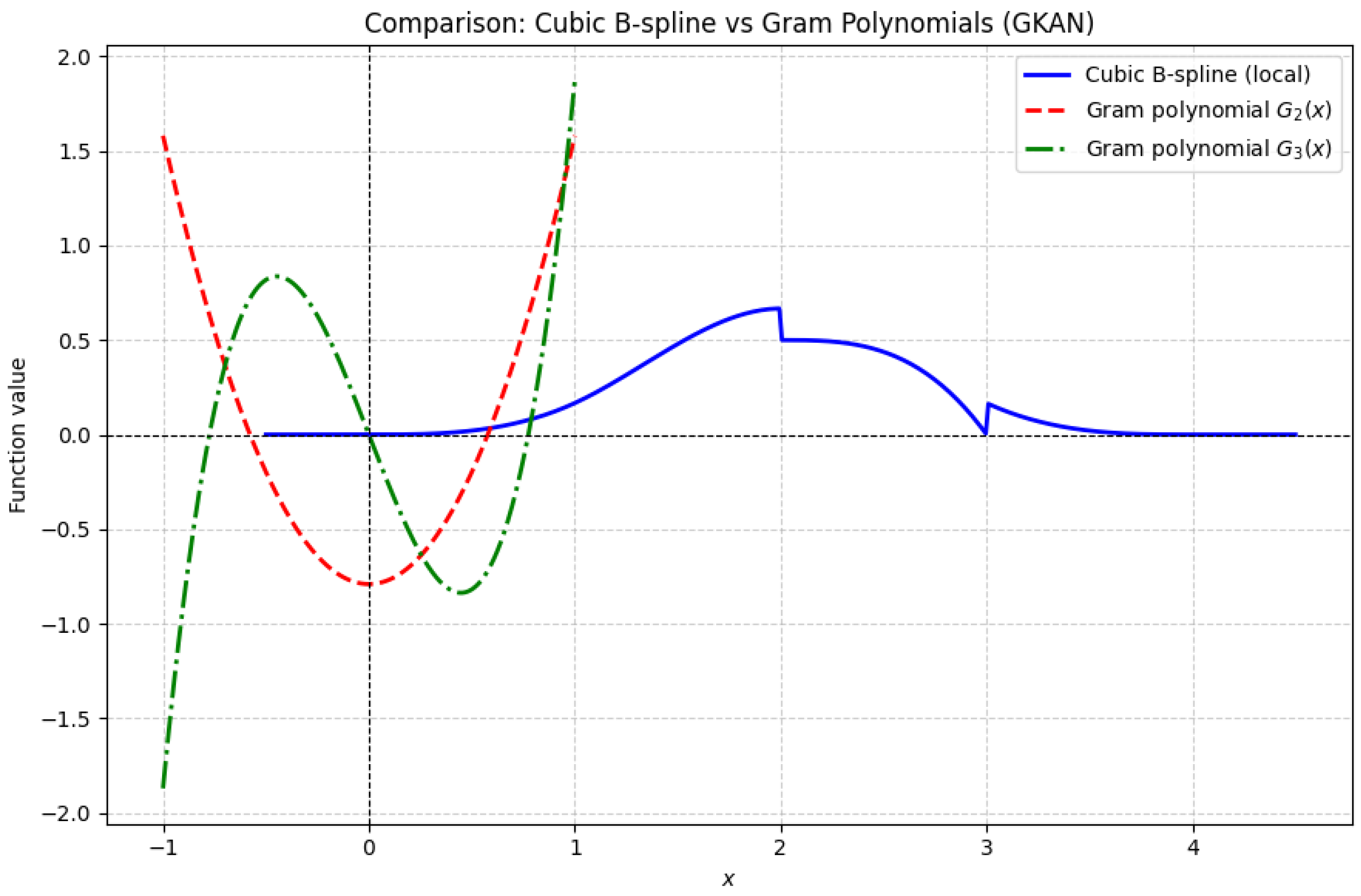

48]. To highlight the structural differences between spline-based, Chebyshev-based, and Gram-based activations,

Table 3 presents a comparative overview. This table emphasizes how Gram polynomials balance computational efficiency and numerical stability, while contrasting with the local adaptability of splines and the global approximation of Chebyshev polynomials. The comparison clarifies the trade-offs in expressiveness, stability, and suitability for different application domains.

To complement the summary table, Figure 3 illustrates the difference between a cubic B-spline and Gram polynomials of two different orders. While the spline exhibits compact local support, Gram polynomials extend globally over the interval $[-1, 1]$, providing orthogonality and smooth approximation properties. This visualization reinforces the analytical discussion by contrasting local piecewise functions with global orthogonal bases.

8. Classification of KAN Variants: Wavelet-Based Activation (Wav-KAN)

The Wav-KAN variant introduces wavelet functions as replacements for splines in Kolmogorov–Arnold Networks, enabling multi-scale feature extraction essential for signals with heterogeneous frequency components, such as audio or seismic data [49,50,51]. This adaptation leverages the time-frequency localization of wavelets to simultaneously capture abrupt transitions and smooth trends within the same architecture [46].

8.1. Structural and Mathematical Foundation

In Wav-KAN, the activation functions are defined using dilated and translated wavelet bases of the form $\psi\!\left(\frac{x - b}{a}\right)$, where $a$ denotes the scale parameter and $b$ the translation parameter. Both $a$ and $b$ are trainable, enabling adaptive resolution across different scales. The general expression is given by:

$$\phi(x) = \sum_{k} w_k\, \psi\!\left( \frac{x - b_k}{a_k} \right),$$

where $\psi$ is the mother wavelet, such as Morlet or Daubechies [52,53].

8.2. Mathematical Purpose

The use of wavelets in Wav-KAN serves three purposes. First, they provide multi-scale analysis, enabling the model to capture simultaneously high-frequency details (such as edges in images) and low-frequency trends (such as seasonal components in time series) [48]. Second, they promote sparse representations, since many wavelet coefficients vanish for structured signals, which reduces feature redundancy [54]. Third, they preserve a local-global balance: locality is retained through translations $b$, while broader trends are modeled through hierarchical scaling with $a$ [55]. This combination ensures adaptability to both transient anomalies and persistent structures in non-stationary data.

8.3. Example: Morlet Wavelet Activation

For the Morlet wavelet defined as

, the activation at

with

and

is computed as:
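A computation of this kind can be sketched as follows. The real-valued Morlet form $\psi(t) = \cos(\omega_0 t)\, e^{-t^2/2}$ with $\omega_0 = 5$ is a common convention and is assumed here, as are the weight, scale, and shift values; none of them are taken from the source.

```python
import math


def morlet(t, omega0=5.0):
    """Real-valued Morlet wavelet; omega0 = 5 is an assumed, commonly used value."""
    return math.cos(omega0 * t) * math.exp(-0.5 * t * t)


def wavelet_activation(x, weights, scales, shifts):
    """Sum of weighted, dilated, and translated mother wavelets."""
    return sum(w * morlet((x - b) / a)
               for w, a, b in zip(weights, scales, shifts))


# Hypothetical parameters: one narrow and one wide wavelet.
weights, scales, shifts = [2.0, -0.5], [0.5, 2.0], [1.0, 0.0]
for x in (0.0, 1.0, 8.0):
    print(x, wavelet_activation(x, weights, scales, shifts))
```

Small scales respond to sharp local features near their shift, large scales respond to slow trends, and every term decays quickly away from its center.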

8.4. Advantages and Disadvantages

The incorporation of wavelets in KAN provides significant advantages. Their multi-resolution capability makes them highly effective in denoising, compression, and pattern recognition tasks, while the trainable parameters $a$ and $b$ allow adaptability to non-stationary signals. Furthermore, their compatibility with Fourier-domain optimizations facilitates integration with spectral methods [54,55]. However, these benefits are counterbalanced by notable drawbacks. Wavelet-based activations require higher computational cost due to the parameterization of scale and translation, and the performance is sensitive to the choice of mother wavelet, which may not be straightforward and often requires empirical selection [55].

To contextualize the role of wavelets within Kolmogorov–Arnold Networks, Table 4 summarizes the main differences between spline-based, Chebyshev-based, and wavelet-based activations. This comparative perspective highlights how each approach balances locality, scalability, and computational complexity, providing a clearer understanding of the trade-offs involved in replacing splines with global or multi-scale basis functions.

To complement the comparative analysis, Figure 4 illustrates the contrast between a cubic B-spline and a Morlet wavelet, which represent the fundamental bases of classical KAN and Wav-KAN, respectively. While the spline is restricted to compact local intervals defined by knots, the wavelet exhibits oscillatory behavior with exponential decay, enabling simultaneous representation of local and global structures. This visual contrast reinforces the theoretical discussion on locality versus multi-resolution adaptability.

9. Classification of KAN Variants: Monotonic Splines (MonoKAN)

The MonoKAN variant integrates Hermite cubic splines with derivative constraints to enforce monotonicity, a critical property in financial and actuarial models where functions must preserve order, such as non-decreasing mortality or credit risk curves [49,50]. This architecture ensures mathematically rigorous behavior while retaining the flexibility of spline-based representations [50].

9.1. Structural and Mathematical Foundation

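MonoKAN defines its activations using Hermite splines $H_k(x)$ with positivity constraints on both the weights $w_k$ and the derivatives. The general formulation is given by

$$\phi(x) = \sum_{k} w_k\, H_k(x), \qquad w_k \ge 0,$$

where each Hermite spline on the interval $[x_k, x_{k+1}]$ takes the form

$$H_k(x) = a_k + b_k (x - x_k) + c_k (x - x_k)^2 + d_k (x - x_k)^3.$$

Monotonicity is guaranteed by constraining the first derivative,

$$H_k'(x) = b_k + 2 c_k (x - x_k) + 3 d_k (x - x_k)^2 \ge 0,$$

ensuring that the resulting function is non-decreasing across the entire interval.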

9.2. Mathematical Purpose

The design of MonoKAN fulfills three key objectives. First, it enforces monotonicity, guaranteeing that $\phi(x)$ never decreases, which is essential in applications such as actuarial life tables or dose-response relationships. Second, it embeds domain knowledge directly into the architecture by constraining derivatives, ensuring that risk or growth functions follow physically meaningful trends. Finally, the model improves interpretability since positive weights $w_k$ align with additive contributions of risk or cumulative factors [51].

9.3. Example: Hermite Spline Evaluation

For

on

with coefficients

,

,

, and

, the evaluation at

yields:

with derivative

demonstrating that the monotonicity condition holds.
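A derivative check of this kind can be automated. The sketch below uses the value-and-slope form of a cubic Hermite segment and a slope-clamping rule that is a known sufficient condition for monotonicity: with secant slope $\Delta = (y_1 - y_0)/(x_1 - x_0) \ge 0$, keeping both endpoint slopes in $[0, 3\Delta]$ guarantees a non-negative derivative on the segment. This rule is swapped in for illustration and is not necessarily the constraint used by MonoKAN; all names and numbers are assumptions.

```python
def hermite_derivative(x0, x1, y0, y1, m0, m1, x):
    """Derivative of the cubic Hermite segment with end values y0, y1 and slopes m0, m1."""
    h = x1 - x0
    t = (x - x0) / h
    delta = (y1 - y0) / h                     # secant slope of the segment
    return (delta * (6 * t - 6 * t * t)
            + m0 * (3 * t * t - 4 * t + 1)
            + m1 * (3 * t * t - 2 * t))


def clamp_slopes(x0, x1, y0, y1, m0, m1):
    """Project endpoint slopes into [0, 3 * delta], a sufficient monotonicity region."""
    delta = (y1 - y0) / (x1 - x0)
    if delta < 0:
        raise ValueError("segment data must be non-decreasing")
    limit = 3.0 * delta
    return min(max(m0, 0.0), limit), min(max(m1, 0.0), limit)


def min_derivative(x0, x1, y0, y1, m0, m1, n=200):
    """Smallest derivative value on a uniform grid over the segment."""
    return min(hermite_derivative(x0, x1, y0, y1, m0, m1, x0 + (x1 - x0) * i / n)
               for i in range(n + 1))


seg = (0.0, 1.0, 0.0, 1.0)                     # hypothetical segment: rises from 0 to 1
raw = (4.0, 4.0)                               # slopes too steep: the cubic overshoots
safe = clamp_slopes(*seg, *raw)
print(min_derivative(*seg, *raw))              # negative: not monotone
print(safe, min_derivative(*seg, *safe))       # clamped slopes: derivative stays >= 0
```

Because the derivative is linear in the two slopes for fixed $t$, checking the four corners of the box $[0, 3\Delta]^2$ is enough to see why the clamp works.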

9.4. Advantages and Disadvantages

The use of monotonic splines in KAN provides distinct benefits. By guaranteeing order-preserving activations, MonoKAN is highly interpretable and well-suited for domains where monotonic trends are fundamental, such as finance, insurance, or medicine. The explicit derivative constraints also reduce the risk of overfitting, as the hypothesis space is restricted to non-decreasing functions. Nevertheless, this comes at the cost of reduced expressiveness compared to unconstrained splines. In addition, optimization becomes more complex, as it must account for non-negativity and monotonicity conditions that increase the difficulty of training [

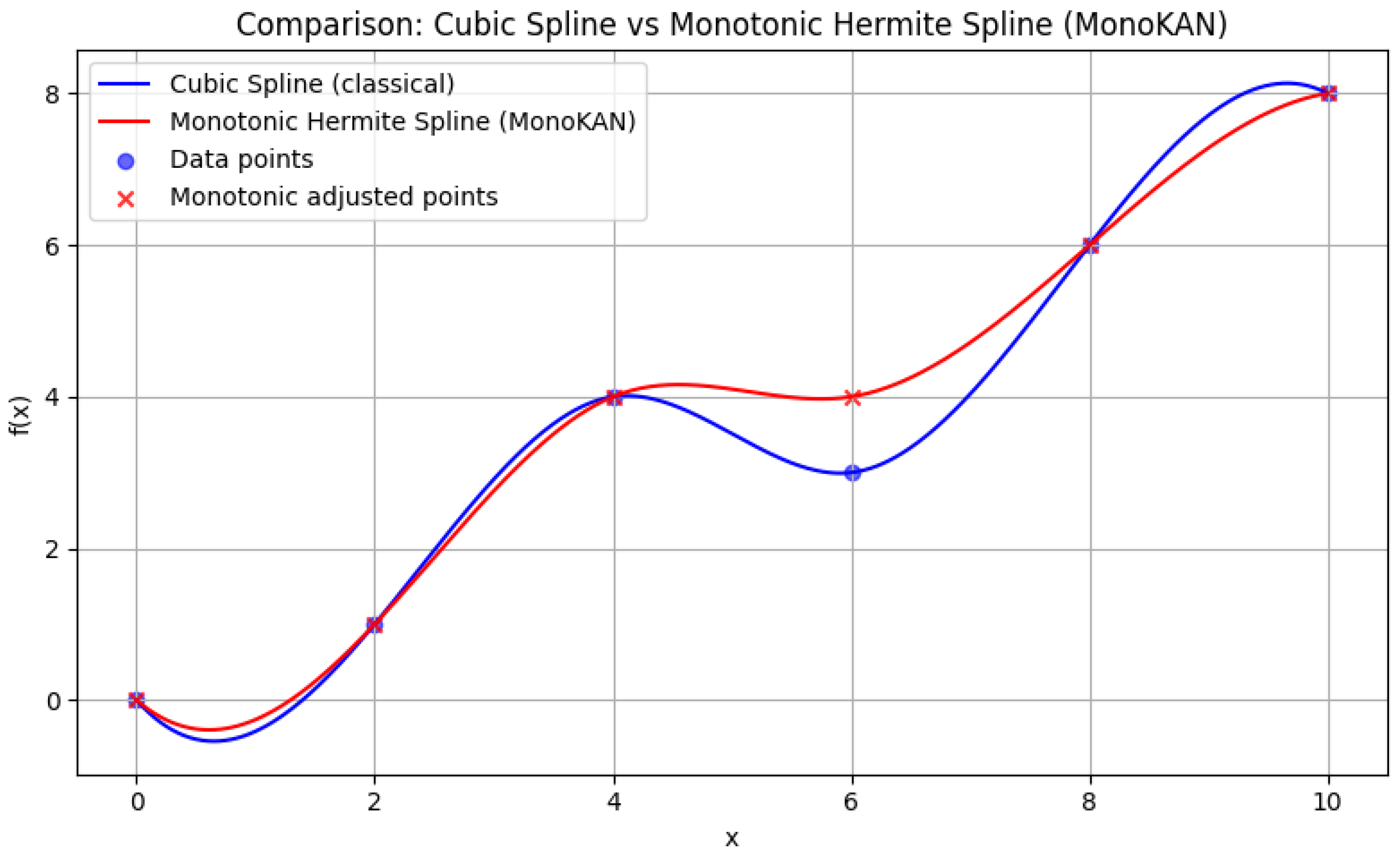

51]. To clarify the specific role of monotonicity constraints within Kolmogorov–Arnold Networks,

Table 5 compares classical spline-based activations, Gram polynomial expansions, and monotonic splines. The comparison highlights how MonoKAN trades some expressiveness for interpretability and order preservation, which are essential in domains where risk, growth, or probability functions must follow monotonic patterns.

To illustrate the impact of monotonicity constraints,

Figure 5 shows the difference between a standard cubic spline and a monotonic Hermite spline. While the classical spline can exhibit oscillations and local decreases, the constrained version ensures a non-decreasing trend across the entire interval, aligning the learned representation with domain requirements in finance and medicine.

10. Classification of KAN Variants: Replacement of Splines with Rational Functions

A key variant of Kolmogorov–Arnold Networks (KAN) replaces spline-based activation functions with rational functions, altering both their mathematical structure and functional behavior [54,55]. In the rational formulation, the activation is expressed as:

$$\phi(x) = \frac{P(x)}{Q(x)} = \frac{\sum_{i=0}^{m} a_i x^i}{\sum_{j=0}^{n} b_j x^j},$$

which provides a compact representation for functions with sharp transitions or discontinuities. Rational activations are known to achieve exponential convergence rates for smooth functions, often requiring fewer parameters than splines to reach comparable accuracy [56]. However, poles in the denominator may lead to numerical instabilities whenever $Q(x) \to 0$.

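To mitigate this limitation, stabilized formulations introduce a constrained denominator:

$$\phi_{\text{safe}}(x) = \frac{P(x)}{1 + Q(x)^2},$$

where $P$ and $Q$ denote the numerator and denominator polynomials and $1 + Q(x)^2 \ge 1$ ensures positivity and pole-free operation via sum-of-squares parameterization [51]. This guarantees robustness even in unbounded domains. For example, with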

,

,

, and

, the safe version yields

whereas the unconstrained formulation would be undefined if the denominator vanished.
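The contrast between the two formulations can be sketched in code. The denominator $1 + Q(x)^2$ used below is one possible sum-of-squares parameterization and is an assumption, as are the coefficient values and function names.

```python
def horner(coeffs, x):
    """Evaluate a polynomial with coefficients in ascending order of degree."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def plain_rational(a, b, x):
    """Unconstrained P(x) / Q(x); fails wherever Q(x) vanishes."""
    return horner(a, x) / horner(b, x)


def safe_rational(a, b, x):
    """Pole-free variant P(x) / (1 + Q(x)^2), an assumed sum-of-squares denominator."""
    q = horner(b, x)
    return horner(a, x) / (1.0 + q * q)


a = [1.0, 2.0]        # hypothetical numerator   P(x) = 1 + 2x
b = [1.0, -1.0]       # hypothetical denominator Q(x) = 1 - x, zero at x = 1
print(safe_rational(a, b, 1.0))          # finite: 3 / (1 + 0) = 3.0
try:
    plain_rational(a, b, 1.0)
except ZeroDivisionError:
    print("unconstrained form has a pole at x = 1")
```

The safe denominator never drops below one, so the activation stays finite for every real input, although the two forms are no longer the same function away from the pole.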

Structurally, the use of rational functions modifies the nature of KAN by replacing locally supported splines with globally defined functions. This substitution reduces the parameter count from

to

, eliminates the dependence on knots, and introduces exponential approximation power at the cost of losing local adaptability [

54].

From an applied perspective, rational-based KAN offer a compact and efficient representation of sharp gradients and discontinuities, with improved generalization capabilities when compared to spline-based KAN. Nevertheless, their main drawbacks arise from sensitivity to denominator initialization, the need for explicit pole-avoidance mechanisms, and potential overflow in high-degree polynomials [

50]. In summary, Rational-KAN present a mathematically elegant alternative with strong approximation properties, but their stability must be carefully managed to ensure reliable deployment. The following

Table 6 summarizes the comparative aspects of Rational-based KAN with respect to spline-based KAN. It emphasizes their differences in terms of representation power, parameter efficiency, and numerical stability, highlighting both the potential benefits and the limitations that emerge from the rational formulation.

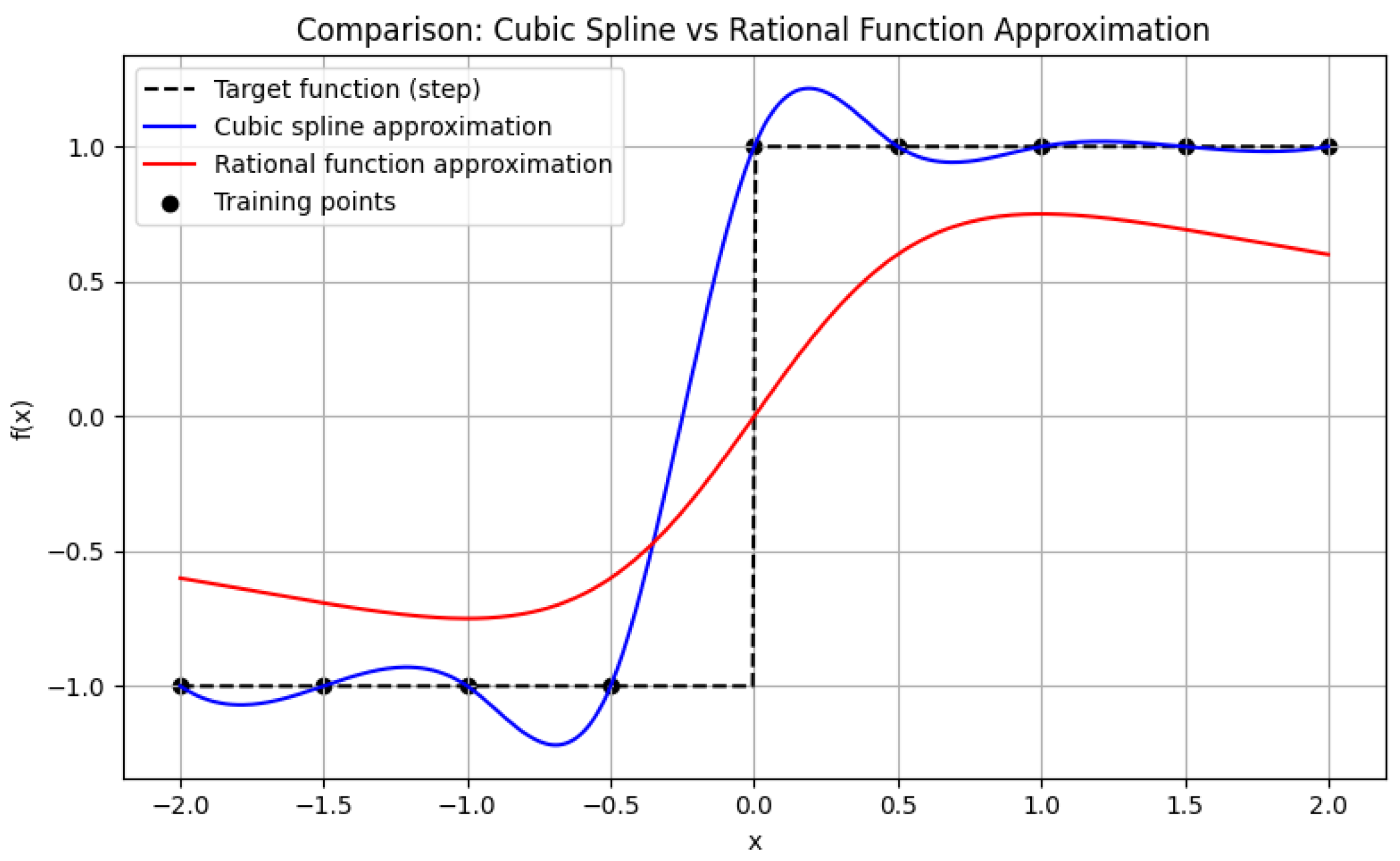

To illustrate the structural differences,

Figure 6 presents a comparison between a cubic spline approximation and a rational function approximation for a target function with a sharp discontinuity. While splines struggle to capture the abrupt transition without oscillations, the rational formulation achieves a more compact and accurate fit with fewer parameters.

11. Classification of KAN Variants: Physics-Constrained Splines

A key variant of Kolmogorov–Arnold Networks (KAN) augments spline-based activation functions with physics-informed constraints, fundamentally altering their optimization behavior while retaining their core mathematical structure [50,51]. In this formulation, the spline functions $\phi_{q,p}$ are optimized under residual conditions derived from partial differential equations (PDEs), ensuring that the model is not only data-driven but also physically consistent [35]. Mathematically, this corresponds to

$$\min_{\theta}\; \mathcal{L}_{\text{data}}(\theta) + \sum_{i} \lambda_i \left\| \mathcal{N}_i[u_\theta] \right\|^2,$$

where $u_\theta$ is the network output, $\mathcal{N}_i$ represent differential operators encoding conservation laws, and $\lambda_i$ act as Lagrange multipliers. While this mechanism enhances interpretability, it also introduces computational challenges, as direct PDE enforcement may destabilize the optimization when residual terms become dominant [50].

To stabilize training, a differentiable physics loss that penalizes the PDE residual is employed; it acts as a regularizer and is optimized simultaneously with the data fidelity term. For example, in the case of Burgers’ equation, $\partial_t u + u\,\partial_x u = \nu\,\partial_{xx} u$, the physics loss enforces the PDE dynamics while boundary terms preserve conditions on the spatial domain [56]. This formulation aligns Physics-KAN with the broader family of Physics-Informed Neural Networks (PINNs), while maintaining spline-based flexibility.
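The combination of a data fidelity term and a physics loss can be sketched in a few lines of Python. The sketch below is a simplified illustration under stated assumptions: the PDE residual of Burgers’ equation is approximated with finite differences on a grid (rather than by differentiating a spline or a network), the physics loss is taken to be the mean squared residual, and the names `burgers_residual`, `total_loss`, and the weight `lam` are hypothetical.

```python
import numpy as np

def burgers_residual(u, dx, dt, nu):
    """Finite-difference residual u_t + u*u_x - nu*u_xx on a grid u[t_index, x_index]."""
    u_t = np.gradient(u, dt, axis=0)
    u_x = np.gradient(u, dx, axis=1)
    u_xx = np.gradient(u_x, dx, axis=1)
    return u_t + u * u_x - nu * u_xx

def total_loss(u_pred, u_obs, obs_mask, dx, dt, nu, lam):
    """Data fidelity on observed points plus lam times the mean squared PDE residual."""
    data_term = np.mean((u_pred[obs_mask] - u_obs[obs_mask]) ** 2)
    physics_term = np.mean(burgers_residual(u_pred, dx, dt, nu) ** 2)
    return data_term + lam * physics_term, data_term, physics_term

# Travelling-wave solution of the viscous Burgers' equation, used as a reference field.
nu, a, c = 0.1, 1.0, 0.5
x = np.linspace(-2.0, 2.0, 401)
t = np.linspace(0.0, 0.5, 101)
dx, dt = x[1] - x[0], t[1] - t[0]
T, X = np.meshgrid(t, x, indexing="ij")
u_exact = c - a * np.tanh(a * (X - c * T) / (2.0 * nu))

# Sparse "observations": every 40th spatial point at the initial time only.
obs_mask = np.zeros_like(u_exact, dtype=bool)
obs_mask[0, ::40] = True

# A candidate that matches the data at t = 0 but violates the PDE afterwards.
u_bad = u_exact + 0.3 * np.sin(np.pi * X) * T

loss_exact, _, phys_exact = total_loss(u_exact, u_exact, obs_mask, dx, dt, nu, lam=1.0)
loss_bad, data_bad, phys_bad = total_loss(u_bad, u_exact, obs_mask, dx, dt, nu, lam=1.0)
print(phys_exact, phys_bad)
```

Both candidates fit the sparse observations equally well, so only the physics term separates them. This is the sense in which the regularizer constrains the model in regions without data.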

From a structural perspective, Physics-KAN retain the locality of spline bases while reducing the dimensionality of the solution space through physics regularization. This integration enables training with significantly fewer labeled data points, improving extrapolation in unobserved domains and ensuring that predictions remain consistent with physical laws. Nevertheless, the approach comes with limitations: training incurs computational overhead due to PDE residual evaluations, convergence becomes sensitive to the weighting factor of the physics loss, and the framework assumes access to differentiable physical models. Overall, Physics-KAN provide a promising balance between physical interpretability and data efficiency, albeit with increased training complexity. Table 7 presents a comparative summary between traditional spline-based KAN and Physics-KAN. The goal is to highlight the added value of embedding physics-informed regularization, focusing on representation, generalization, and computational challenges.

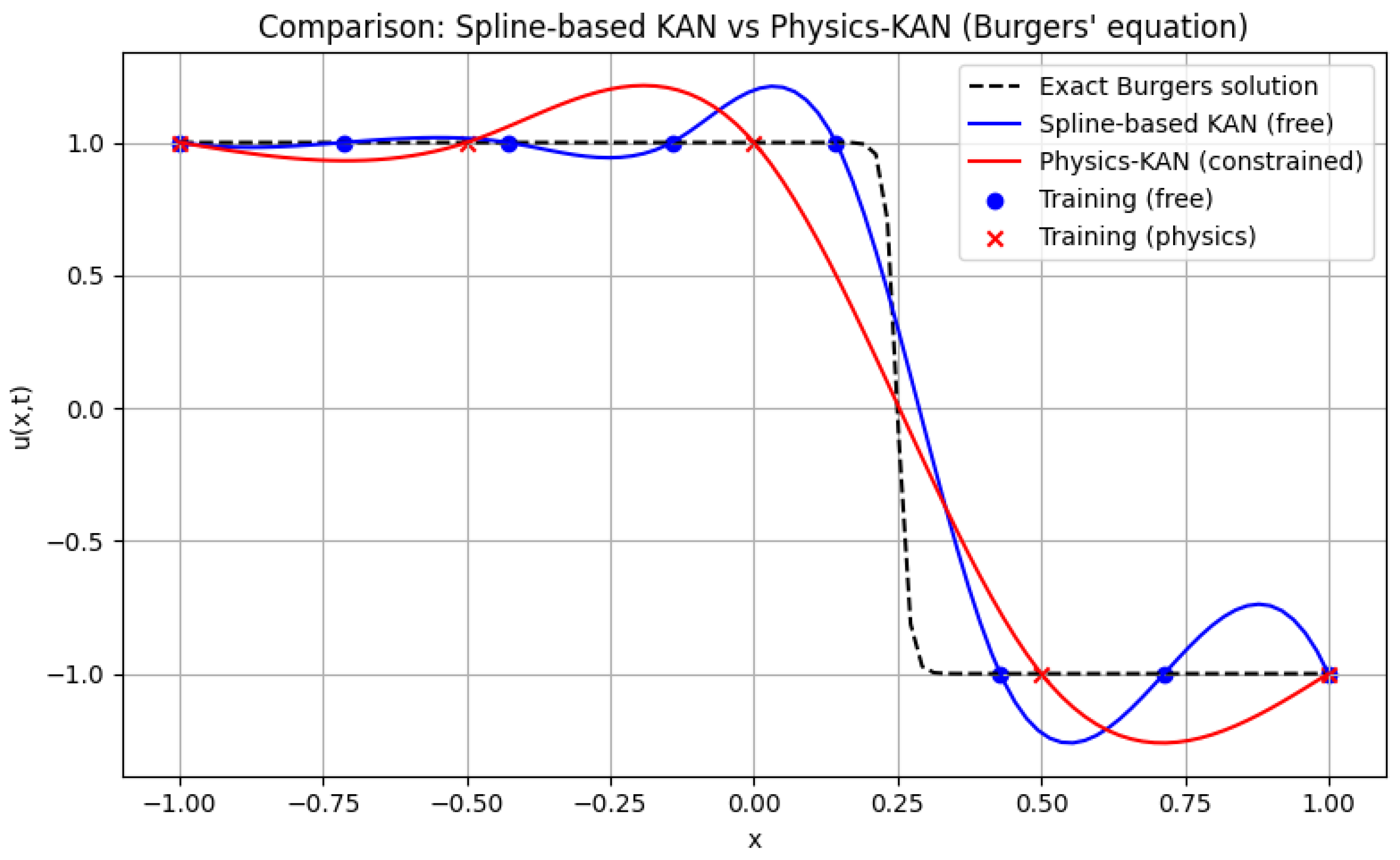

To visually demonstrate the effect of physics-informed constraints, Figure 7 shows a comparison of spline-based and physics-constrained approximations for Burgers’ equation. The physics-regularized model enforces smoothness and adherence to PDE dynamics, while the unconstrained spline model deviates significantly in regions without data.

To illustrate the practical effect of embedding physics constraints into Kolmogorov–Arnold Networks, we consider the one-dimensional Burgers’ equation, a canonical benchmark in computational fluid dynamics. Burgers’ equation is widely employed to test numerical schemes and physics-informed models because it combines nonlinear convection with diffusion effects, acting as a simplified prototype of the Navier–Stokes equations. Its form is given by:

$$\partial_t u + u\,\partial_x u = \nu\,\partial_{xx} u,$$

where $u(x,t)$ denotes the velocity field, and $\nu$ is the viscosity parameter.

This PDE is particularly relevant for Physics-KAN, as it highlights the importance of enforcing physical consistency when modeling dynamical systems with sharp gradients or shock-like structures. By comparing a spline-based KAN without constraints to a Physics-KAN regularized through the physics loss, it is possible to visualize how physical constraints improve the model’s extrapolation and stability beyond the training points.

12. Classification of KAN Variants: Linear Spline Activation Functions

A key variant of Kolmogorov–Arnold Networks (KAN) simplifies the spline-based activation functions by employing linear splines, prioritizing computational efficiency while retaining adequate expressivity [52,53]. In this formulation, the basis functions are no longer cubic splines but piecewise linear functions, often represented as triangular “hat” functions:

$$B_i(x) = \max\left(0,\; 1 - \frac{|x - t_i|}{h}\right),$$

where $t_i$ are equally spaced knots with spacing $h$. Linear splines provide only $C^0$ continuity: they reconstruct piecewise linear functions exactly and converge at rate $O(h^2)$ for smooth targets [57], but with lower approximation accuracy than cubic splines.

To further enhance efficiency and avoid unnecessary oscillations, a sparse derivative regularization is commonly applied, which penalizes large second differences of the coefficients at consecutive knots, promoting nearly linear regions. Evaluating a linear spline at a point only requires interpolating between the two adjacent knot values, whereas a cubic spline implementation would require solving a tridiagonal system of equations.
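The evaluation of a linear spline through hat functions, and a second-difference penalty of the kind described above, can be sketched as follows. The knots and coefficients are illustrative values chosen here, not the ones from the original example, and the L1 form of the penalty and the function names are assumptions.

```python
import numpy as np

def hat_basis(x, knots):
    """Triangular hat functions max(0, 1 - |x - t_i| / h) on equally spaced knots."""
    h = knots[1] - knots[0]
    x = np.asarray(x, dtype=float)
    return np.maximum(0.0, 1.0 - np.abs(x[..., None] - knots) / h)

def linear_spline(x, knots, coeffs):
    """Evaluate sum_i c_i * hat_i(x); coeffs are the function values at the knots."""
    return hat_basis(x, knots) @ coeffs

def second_difference_penalty(coeffs, lam=1.0):
    """L1 penalty on second differences c_{i+1} - 2 c_i + c_{i-1}."""
    return lam * np.sum(np.abs(coeffs[2:] - 2.0 * coeffs[1:-1] + coeffs[:-2]))

# Illustrative knots and coefficients (samples of x^2 at the knots).
knots = np.array([0.0, 1.0, 2.0, 3.0])
coeffs = np.array([0.0, 1.0, 4.0, 9.0])

value = linear_spline(1.5, knots, coeffs)      # midpoint between 1.0 and 4.0
penalty = second_difference_penalty(coeffs)    # |2| + |2|
print(value, penalty)  # 2.5 4.0
```

At $x = 1.5$ only the two neighbouring hat functions are non-zero, each with weight 0.5, so the result is the average of the adjacent coefficients. Coefficients that lie on a straight line would give a penalty of zero.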

Structurally, Linear-KAN introduce a significant simplification compared to cubic spline KAN [53]. The computational cost per activation decreases, enabling faster training and inference and allowing exact gradient computation. The parameter space also becomes more interpretable, shifting from abstract control points to direct function values at the knots. In practical terms, Linear-KAN offer 3–5× faster convergence and are natively compatible with gradient-based optimization methods. However, this comes at the cost of reduced expressivity: while they can represent piecewise linear functions exactly, they lack the smoothness of higher-order splines, requiring denser knots to achieve comparable accuracy and limiting their ability to model phenomena with continuous derivatives. The trade-offs between cubic spline-based KAN and Linear-KAN are summarized in Table 8, emphasizing the balance between computational efficiency and approximation accuracy.

To visually illustrate the effect of simplifying spline activations, Figure 8 compares a cubic spline approximation and a linear spline approximation of a smooth function. While the cubic spline provides a smooth curve with continuous derivatives, the linear spline captures only piecewise linear trends, requiring a higher density of knots to achieve a similar level of accuracy.

13. Classification of KAN Variants: Orthogonal Polynomial Activations

A key variant of Kolmogorov–Arnold Networks (KAN) replaces spline-based activation functions with orthogonal polynomial bases, enhancing approximation power for smooth functions while maintaining numerical stability [52,53]. Originally, the internal functions $\phi(x)$ in standard KAN are defined using splines (e.g., cubic B-splines):

$$\phi(x) = \sum_{i} c_i\, B_i(x),$$

where $B_i(x)$ are local basis functions with support on specific intervals. In the orthogonal polynomial variant, these functions are replaced by:

$$\phi(x) = \sum_{n=0}^{N} a_n\, P_n(x),$$

where $P_n(x)$ are orthogonal polynomials (e.g., Legendre, Hermite) satisfying $\int P_m(x)\,P_n(x)\,w(x)\,dx = 0$ for $m \neq n$ under a weight function $w(x)$ [57]. Legendre polynomials $P_n(x)$ are optimal for bounded domains $[-1, 1]$, while Hermite polynomials $H_n(x)$ suit unbounded domains. However, raw polynomials exhibit Runge’s phenomenon near domain boundaries [58].

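To mitigate this limitation, a spectral reprojection technique is applied, in which the input is first passed through a bounded transformation mapping it to $[-1, 1]$ before the polynomial expansion is evaluated. For example, a Legendre expansion is then evaluated at any input through a single global sum, whereas spline-based KAN would require multiple local evaluations to approximate the same point.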
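A minimal Python sketch of a Legendre-based activation with a bounded input mapping is given below. It assumes `tanh` as the bounded transformation (the text only requires some bounded map onto the polynomial domain), and the function name `legendre_activation` and the coefficients are hypothetical. The sketch also checks the orthogonality relation numerically with Gauss–Legendre quadrature.

```python
import numpy as np
from numpy.polynomial import legendre as L

def legendre_activation(x, coeffs):
    """Evaluate sum_n a_n P_n(tanh(x)); tanh keeps the argument inside [-1, 1]."""
    z = np.tanh(np.asarray(x, dtype=float))
    return L.legval(z, coeffs)

# Orthogonality check on [-1, 1] with 8-point Gauss-Legendre quadrature.
nodes, weights = L.leggauss(8)
p2 = L.legval(nodes, [0.0, 0.0, 1.0])        # P_2 at the quadrature nodes
p3 = L.legval(nodes, [0.0, 0.0, 0.0, 1.0])   # P_3 at the quadrature nodes
inner_23 = np.sum(weights * p2 * p3)         # integral of P_2 * P_3, close to 0
norm_2 = np.sum(weights * p2 * p2)           # integral of P_2^2 = 2 / (2*2 + 1) = 0.4

# Hypothetical coefficients a_0, a_1, a_2; the second input is far outside [-1, 1].
coeffs = np.array([1.0, 2.0, 3.0])
out = legendre_activation(np.array([0.0, 50.0]), coeffs)
print(inner_23, norm_2, out)
```

The large input is squashed to the edge of the domain, so the expansion stays bounded there. The price is that inputs far from the origin become nearly indistinguishable, which is one reason domain scaling matters for this variant.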

13.1. Structural Impact on KAN

KAN undergo fundamental changes when replacing local splines with global orthogonal polynomials. This transition enables spectral accuracy for analytic functions but sacrifices locality [59]. Additionally, domain normalization becomes critical, requiring a priori knowledge of input bounds, which may not always be available in real-world data.

13.2. Advantages and Disadvantages

The main advantages of this approach lie in its ability to achieve exponential convergence for smooth and analytic functions, which significantly improves approximation accuracy compared to spline-based models [60]. Moreover, the recurrence relations of orthogonal polynomials provide an efficient and stable way to compute derivatives, making them especially useful in applications involving partial differential equations. Another major benefit is their inherent orthogonality, which avoids ill-conditioning in the coefficient matrices and ensures numerical stability even for high-order expansions [58].

On the other hand, orthogonal polynomial activations also introduce significant drawbacks. They are highly sensitive to discontinuities, which often produce Gibbs oscillations near sharp transitions or non-smooth regions [60]. Furthermore, their performance depends strongly on the scaling of the input domain: a poor choice of the normalization mapping may lead to numerical instabilities or poor generalization [61]. Finally, unlike splines, which adapt locally, orthogonal polynomials lack locality, making them less effective in representing piecewise-smooth functions or data with abrupt variations [60].

A comparative analysis between spline-based activations and orthogonal polynomial activations in KAN is summarized in Table 9. This table highlights their differences in terms of locality, approximation power, computational efficiency, and numerical stability, providing a clear overview of the trade-offs inherent to each approach.

To further illustrate the differences between spline-based and orthogonal polynomial activations, Figure 9 compares a cubic spline interpolation and a Legendre polynomial approximation on the same function. While splines provide localized adaptation to the data, orthogonal polynomials achieve global smoothness but exhibit oscillations near domain boundaries.

14. Classification of KAN Variants: Wavelet-Based Activation Functions

A relevant variant of Kolmogorov–Arnold Networks (KAN) replaces spline-based activation functions with wavelet bases, providing multi-resolution analysis and adaptive feature extraction [60]. Originally, the internal functions $\phi(x)$ in standard KAN are defined using splines (e.g., cubic B-splines):

$$\phi(x) = \sum_{i} c_i\, B_i(x),$$

where $B_i(x)$ are local basis functions with support on specific intervals. In the wavelet variant, these functions are replaced by dilated-translated wavelets:

$$\phi(x) = \sum_{j}\sum_{k} c_{j,k}\, \psi\left(2^{j} x - k\right),$$

where $\psi$ is a mother wavelet (e.g., Mexican Hat, Daubechies) satisfying $\int \psi(x)\,dx = 0$. Wavelets provide simultaneous localization in space and frequency domains: fine-scale wavelets (large $j$) capture high-frequency details (edges, anomalies), while coarse scales (small $j$) model global trends (smooth patterns). This property makes Wav-KAN particularly useful in applications such as audio signal denoising, seismic analysis, or image compression.

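Since infinite sums over the scale and translation indices $j$ and $k$ are computationally infeasible, a dyadic truncation with adaptive scale selection is introduced [61], in which the expansion is restricted to a finite set of scales $j$ that is optimized during training. For example, the Mexican Hat wavelet, $\psi(x) = (1 - x^2)\,e^{-x^2/2}$ up to a normalization constant, is evaluated in closed form at any point, whereas splines would require multiple local basis evaluations to capture similar oscillatory behavior.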
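A truncated wavelet expansion of this kind can be sketched in Python as follows. The sketch uses the unnormalized Mexican Hat wavelet and a fixed, hand-picked set of scales and shifts. In a trained Wav-KAN the coefficients (and possibly the scales) would be learned, so the dictionary `coeffs` and the function names are hypothetical.

```python
import numpy as np

def mexican_hat(x):
    """Unnormalized Mexican Hat wavelet (1 - x^2) * exp(-x^2 / 2)."""
    x = np.asarray(x, dtype=float)
    return (1.0 - x**2) * np.exp(-0.5 * x**2)

def wavelet_activation(x, coeffs):
    """Truncated expansion: sum over listed (j, k) of c_{j,k} * psi(2^j x - k).

    coeffs: dict mapping (scale j, translation k) to a coefficient; only the
    listed scales and shifts are used, which is the truncation step.
    """
    x = np.asarray(x, dtype=float)
    out = np.zeros_like(x)
    for (j, k), c in coeffs.items():
        out += c * mexican_hat((2.0 ** j) * x - k)
    return out

# Zero-mean check with a simple Riemann sum on a wide grid.
grid = np.linspace(-10.0, 10.0, 20001)
mean_integral = np.sum(mexican_hat(grid)) * (grid[1] - grid[0])

# Hypothetical coefficients: one coarse wavelet and one finer, shifted wavelet.
coeffs = {(0, 0): 1.0, (1, 1): 0.5}
vals = wavelet_activation(np.array([0.0, 0.5, 1.0]), coeffs)
print(mean_integral, vals)
```

Adding or removing entries in `coeffs` changes which resolutions the activation can express, which is the role played by scale selection in the truncated expansion.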

Structural Impact on KAN

KAN gain multi-resolution capabilities when replacing splines with wavelets [62]. This transition enables hierarchical feature learning but sacrifices continuity guarantees. Additionally, each activation function becomes equivalent to a mini-wavelet transform, with its parameters optimized end-to-end.

In practice, wavelet-based KAN outperform splines in tasks requiring both local and global representation, such as biomedical imaging or transient signal analysis. However, they exhibit higher computational cost and strong dependence on the choice of the mother wavelet, which may affect generalization across datasets.

Advantages and disadvantages: The main advantages of Wav-KAN include the simultaneous capture of local and global features, natural denoising through scale thresholding, and compact representation of singularities [60]. On the other hand, their disadvantages involve higher computational requirements, sensitivity to the choice of the mother wavelet, and the appearance of boundary artifacts in finite domains [61]. To provide a clearer view of the trade-offs introduced by Wav-KAN, Table 10 summarizes its main structural differences compared to spline-based activations. This highlights the balance between multi-resolution adaptability and computational complexity.

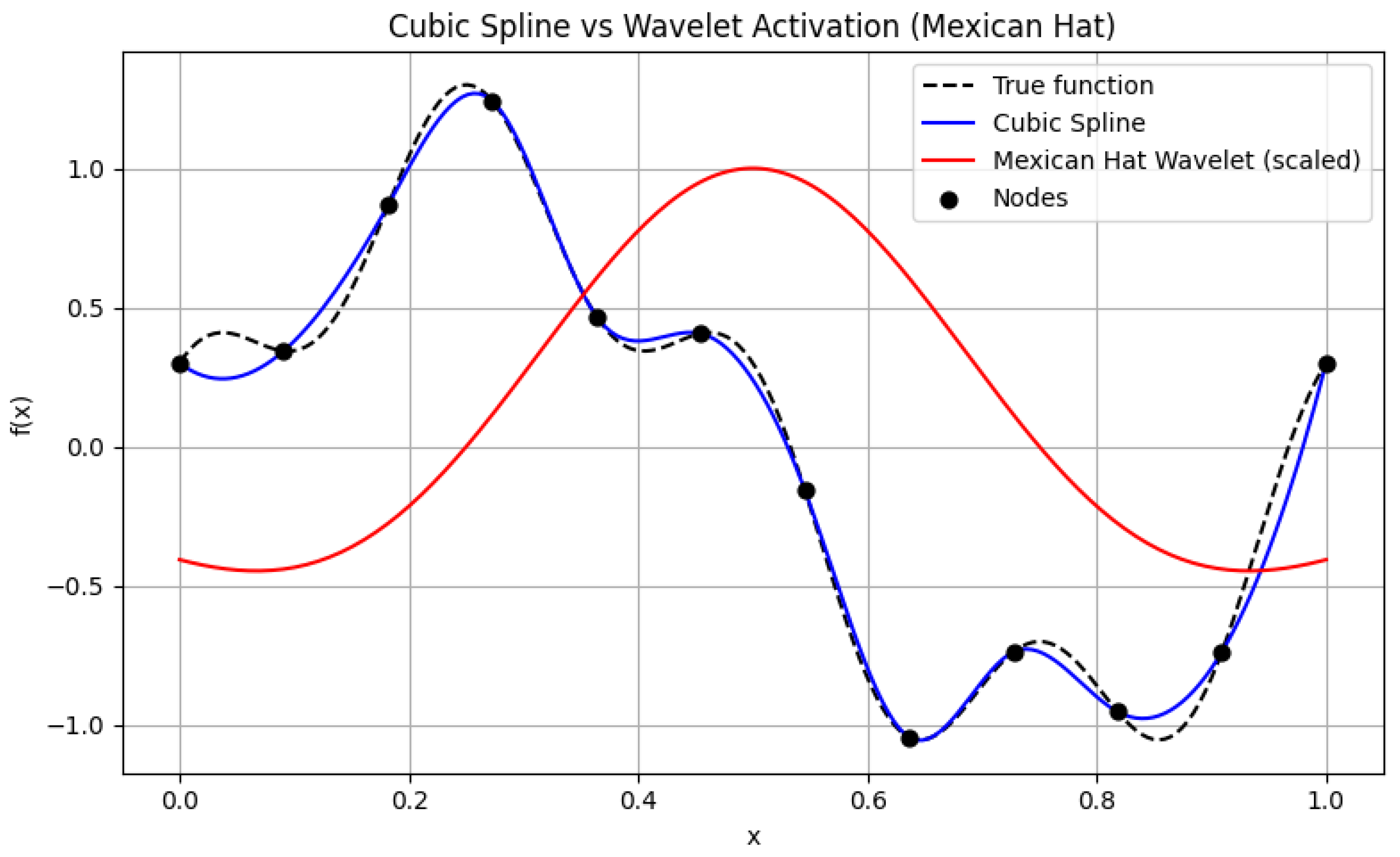

Figure 10 illustrates the comparison between a cubic spline and a Mexican Hat wavelet in modeling oscillatory data. The spline captures smooth local trends, while the wavelet shows superior adaptability to localized fluctuations.

15. Comparative Discussion of KAN Variants

The previous sections introduced different variants of Kolmogorov–Arnold Networks (KAN), each replacing or modifying the spline-based activations with alternative mathematical bases or constraints. While each approach offers unique advantages, their practical relevance depends on the target application, the type of data, and computational constraints. This section provides a comparative discussion of the main variants (Chebyshev-KAN, GKAN (Gram polynomials), Wav-KAN (Wavelet-based), Rational-KAN, MonoKAN (monotonic splines), Physics-constrained KAN, Linear Splines, and Orthogonal Polynomials), highlighting trade-offs in terms of accuracy, efficiency, interpretability, and domain suitability. The overall comparison of these approaches is summarized in Table 11.

16. Discussion

The comparative analysis of Kolmogorov–Arnold Network (KAN) variants highlights how diverse mathematical foundations lead to specialized behaviors and domain-specific advantages. Each variant introduces structural modifications to the classical KAN framework, not only improving approximation properties but also embedding prior knowledge such as monotonicity, physical consistency, or spectral accuracy. This shift reflects a broader trend in machine learning: the movement away from purely black-box models toward interpretable and domain-aware architectures.

Chebyshev KAN demonstrates stable extrapolation and polynomial efficiency, making it well-suited for global forecasting tasks such as climate dynamics [51,62]. However, its performance degrades in the presence of discontinuities. GKAN, based on Gram orthogonal polynomials, offers computational efficiency in low-dimensional signal processing tasks [61], but is inherently limited to normalized domains, restricting its scalability. Wav-KAN introduces multi-resolution analysis via wavelets, enabling localized time-frequency representations particularly beneficial in non-stationary signals such as EEGs [60,63]. This comes at the cost of computational overhead and sensitivity to wavelet selection.

MonoKAN enforces monotonic behavior through Hermite splines with derivative constraints, offering interpretability in domains such as actuarial modeling and healthcare risk assessment [64]. Nevertheless, it trades expressive flexibility for guaranteed monotonicity. Rational KAN achieves compact representations of discontinuities and sharp features through rational functions, providing parameter efficiency and exact rational recovery [63], but raises challenges due to potential poles and denominator instabilities [65]. Physics-constrained KAN integrate PDE-based constraints into their training process, yielding physically consistent solutions with significantly reduced data requirements (up to 80%) [60,63], although they introduce substantial computational costs and demand differentiable physics models [66].

Finally, linear spline KAN emphasize computational simplicity by replacing cubic splines with piecewise-linear functions, resulting in 3–5× faster training and inference [57,58], while orthogonal polynomial KAN achieve spectral accuracy with exponential convergence for smooth functions [63], though they suffer from Gibbs oscillations near discontinuities and require precise domain normalization [64,65].

Collectively, these results reveal that no single KAN variant dominates across all contexts. Instead, their strengths are complementary: Chebyshev and Orthogonal KAN excel in smooth global domains, Wav-KAN in multi-resolution non-stationary tasks, MonoKAN in ordered risk functions, Physics-KAN in scientific modeling, Rational KAN in discontinuities, and Linear Spline KAN in efficiency-critical applications. Future research directions include hybrid architectures (e.g., Chebyshev-Wav-KAN for combining global extrapolation with local adaptivity), automated basis selection for task-specific adaptation [65], and hardware acceleration of computationally intensive bases such as wavelets and Gram polynomials [66]. By bridging classical approximation theory with modern deep learning, KAN variants provide a promising foundation for interpretable, reliable, and domain-aware AI models in high-stakes applications [67,68].

17. Conclusions

Kolmogorov–Arnold Networks (KAN) represent a significant advancement in the design of neural architectures grounded in classical mathematical theory. Unlike traditional neural networks that rely on fixed and opaque activation functions, KAN enable a more interpretable and controllable construction by using univariate functions based on splines and other functional bases. This structure, theoretically supported by the Kolmogorov–Arnold representation theorem, not only improves the traceability of model decisions but also opens the door to incorporating expert knowledge, physical constraints, and desirable properties such as monotonicity or multiresolution. As a result, KAN are particularly well-suited to contexts where explainability and control are essential, including medicine, finance, and engineering.

The comparative analysis carried out in this work highlights that each structural variant of KAN responds to specific domain-driven needs. For instance, Wav-KAN proves effective in modeling non-stationary signals such as EEGs due to its multiscale decomposition capabilities, whereas MonoKAN offers a robust solution in regulatory contexts by ensuring monotonic outputs, as required in actuarial models. Similarly, Rational-KAN and Physics-KAN provide advantages when greater precision or physical consistency is needed. This systematic review consolidates dispersed information and provides a unified mathematical perspective on these variants, emphasizing that there is no single optimal architecture, but rather multiple designs adaptable to the nature of the problem and the requirements of different application domains.

Despite their promise, several challenges remain. These include the efficient optimization of parameters in nonlinear architectures, the automated selection of functional bases suited to specific tasks, and the scalability of KAN in high-computation contexts. Furthermore, theoretical convergence guarantees are still missing for certain variants, limiting their adoption in highly sensitive applications. Our review also faces limitations: it is bounded by the current state of the literature, which remains in its early stage, and empirical validation of many variants is still scarce, requiring careful interpretation of the results presented here.

Nevertheless, the potential for hybridization—such as combining the global stability of Chebyshev-KAN with the localized precision of Wav-KAN—suggests a promising research avenue. Future work should also focus on establishing standardized benchmarks, exploring physics-informed and domain-aware extensions, and developing hardware-efficient implementations. Altogether, KAN mark the beginning of a new stage in the development of interpretable and mathematically grounded neural models, with high-impact applications in fields that demand transparency, precision, and adaptability. By consolidating and comparing their mathematical structures, this work aims to serve as a foundational reference for both theoretical advances and practical implementations of KAN.

Author Contributions

Conceptualization and supervision, M.G.F.; data curation, formal analysis, methodology, validation, writing—review and editing, F.L.B.-S., A.G.B.-R. and M.G.F.; investigation, F.L.B.-S., A.G.B.-R. and E.V.-C.; visualization and writing—original draft preparation, F.L.B.-S., A.G.B.-R., E.V.-C. and M.G.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by Universidad Privada Norbert Wiener, Lima, Peru.

Data Availability Statement

The original contributions presented in this study are included in the article. For further inquiries, please contact the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Kanagamalliga, S.; Varma, D.B. Improving Breast Cancer Diagnosis through Advanced Image Analysis and Neural Network Classifications. Procedia Comput. Sci. 2025, 252, 73–149.

2. Al-Shannaq, M.; Alkhateeb, S.N.; Wedyan, M. Using image augmentation techniques and convolutional neural networks to identify insect infestations on tomatoes. Heliyon 2025, 11, e41480.

3. Fan, Y.; Huang, H.; Han, H. Hierarchical convolutional neural networks with post-attention for speech emotion recognition. Neurocomputing 2025, 615, 128879.

4. Ain, N.U.; Sardaraz, M.; Tahir, M.; Abo Elsoud, M.W.; Alourani, A. Securing IoT Networks Against DDoS Attacks: A Hybrid Deep Learning Approach. Sensors 2025, 25, 1346.

5. Xie, X.; Wang, M. Research on the Time Series Prediction of Acoustic Emission Parameters Based on the Factor Analysis–Particle Swarm Optimization Back Propagation Model. Appl. Sci. 2025, 15, 1977.

6. Zeng, C.; Wang, J.; Shen, H.; Wang, Q. KAN versus MLP on Irregular or Noisy Functions. arXiv 2024, arXiv:2408.07906.

7. Azam, B.; Akhtar, N. Suitability of KAN for Computer Vision: A preliminary investigation. arXiv 2024, arXiv:2406.09087.

8. Somvanshi, S.; Javed, S.A.; Islam, M.M.; Pandit, D.; Das, S. A Survey on Kolmogorov-Arnold Network. arXiv 2024, arXiv:2411.06078.

9. Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljacic, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2404.19756.

10. Cang, Y.; Liu, Y.; Shi, L. Can KAN Work? Exploring the Potential of Kolmogorov-Arnold Networks in Computer Vision. arXiv 2024, arXiv:2411.06727.

11. Basina, D.; Vishal, J.R.; Choudhary, A.; Chakravarthi, B. KAT to KAN: A Review of Kolmogorov-Arnold Networks and the Neural Leap Forward. arXiv 2024, arXiv:2411.10622.

12. Guo, H.; Li, F.; Li, J.; Liu, H. KAN v.s. MLP for Offline Reinforcement Learning. arXiv 2024, arXiv:2409.09653.

13. Le, T.X.H.; Tran, T.D.; Pham, H.L.; Le, V.T.D.; Vu, T.H.; Nguyen, V.T.; Nakashima, Y. Exploring the Limitations of Kolmogorov-Arnold Networks in Classification: Insights to Software Training and Hardware Implementation. In Proceedings of the 2024 Twelfth International Symposium on Computing and Networking Workshops (CANDARW), Naha, Japan, 26–29 November 2024; pp. 110–116.

14. Le, T.-T.-H.; Hwang, Y.; Kang, H.; Kim, H. Robust Credit Card Fraud Detection Based on Efficient Kolmogorov-Arnold Network Models. IEEE Access 2024, 12, 157006–157020.

15. Hollósi, J. Efficiency Analysis of Kolmogorov-Arnold Networks for Visual Data Processing. Eng. Proc. 2024, 79, 68.

16. Zhang, J.; Zhao, L. Efficient greenhouse gas prediction using IoT data streams and a CNN-BiLSTM-KAN model. Alex. Eng. J. 2025; in press.

17. Gong, S.; Chen, W.; Jing, X.; Wang, C. Research on Photovoltaic Power Prediction Method based on LSTM-KAN. In Proceedings of the 2024 6th International Conference on Electronic Engineering and Informatics (EEI), Chongqing, China, 28–30 June 2024; pp. 479–482.

18. Zhang, M.; Fan, X.; Gao, P.; Guo, L.; Huang, X.; Gao, X.; Pang, J.; Tan, F. Monitoring Soil Salinity in Arid Areas of Northern Xinjiang Using Multi-Source Satellite Data: A Trusted Deep Learning Framework. Land 2025, 14, 110.

19. Mohan, K.; Wang, H.; Zhu, X. KAN for Computer Vision: An Experimental Study. arXiv 2024, arXiv:2411.18224.

20. Ter-Avanesov, B.; Beigi, H. MLP, XGBoost, KAN, TDNN, and LSTM-GRU Hybrid RNN with Attention for SPX and NDX European Call Option Pricing. arXiv 2024, arXiv:2409.06724.

21. Carrera-Rivera, A.; Ochoa, W.; Larrinaga, F.; Lasa, G. How-to conduct a systematic literature review: A quick guide for computer science research. MethodsX 2022, 9, 101895.

22. Ramirez, M.O.G.; De-la-Torre, M.; Monsalve, C. Methodologies for the design of application frameworks: Systematic review. In Proceedings of the 2019 8th International Conference on Software Process Improvement (CIMPS), Leon, Guanajuato, Mexico, 23–25 October 2019; pp. 1–10.

23. Shukla, K.; Toscano, J.D.; Wang, Z.; Zou, Z.; Karniadakis, G.E. A comprehensive and FAIR comparison between MLP and KAN representations for differential equations and operator networks. Comput. Methods Appl. Mech. Eng. 2024, 431, 117290.

24. Gong, Q.; Xue, Y.; Liu, R.; Du, Z.; Li, S.; Wang, J.; Sun, G. A Novel LSTM-KAN Networks for Demand Forecasting in Manufacturing. In Proceedings of the 2024 5th International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE), Wenzhou, China, 20–22 September 2024; pp. 468–473.

25. Rigas, S.; Papachristou, M.; Papadopoulos, T.; Anagnostopoulos, F.; Alexandridis, G. Adaptive Training of Grid-Dependent Physics-Informed Kolmogorov-Arnold Networks. IEEE Access 2024, 12, 176982–176998.

26. Howard, A.A.; Jacob, B.; Murphy, S.H.; Heinlein, A.; Stinis, P. Finite basis Kolmogorov-Arnold networks: Domain decomposition for data-driven and physics-informed problems. arXiv 2024, arXiv:2406.19662.

27. Afzal Aghaei, A. fKAN: Fractional Kolmogorov-Arnold Networks with trainable Jacobi basis functions. Neurocomputing 2025, 623, 129414.

28. Wang, Y.; Sun, J.; Bai, J.; Anitescu, C.; Eshaghi, M.S.; Zhuang, X.; Rabczuk, T.; Liu, Y. Kolmogorov Arnold Informed neural network: A physics-informed deep learning framework for solving forward and inverse problems based on Kolmogorov Arnold Networks. Comput. Methods Appl. Mech. Eng. 2025, 433, 117518.

29. Patra, S.; Panda, S.; Parida, B.K.; Arya, M.; Jacobs, K.; Bondar, D.I.; Sen, A. Physics Informed Kolmogorov-Arnold Neural Networks for Dynamical Analysis via Efficent-KAN and WAV-KAN. arXiv 2024, arXiv:2407.18373.

30. Guo, C.; Sun, L.; Li, S.; Yuan, Z.; Wang, C. Physics-informed Kolmogorov-Arnold Network with Chebyshev Polynomials for Fluid Mechanics. arXiv 2024, arXiv:2411.04516.

31. Qiu, R.; Miao, Y.; Wang, S.; Yu, L.; Zhu, Y.; Gao, X.-S. PowerMLP: An Efficient Version of KAN. arXiv 2024, arXiv:2412.13571.

32. Yu, T.; Qiu, J.; Yang, J.; Oseledets, I. Sinc Kolmogorov-Arnold Network and Its Applications on Physics-informed Neural Networks. arXiv 2024, arXiv:2410.04096.

33. Jacob, B.; Howard, A.A.; Stinis, P. SPIKAN: Separable Physics-Informed Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2411.06286.

34. Ranasinghe, N.; Xia, Y.; Seneviratne, S.; Halgamuge, S. GINN-KAN: Interpretability pipelining with applications in Physics Informed Neural Networks. arXiv 2024, arXiv:2408.14780.

35. Guilhoto, L.F.; Perdikaris, P. Deep Learning Alternatives of the Kolmogorov Superposition Theorem. arXiv 2025, arXiv:2410.01990.

36. Toscano, J.D.; Oommen, V.; Varghese, A.J.; Zou, Z.; Ahmadi Daryakenari, N.; Wu, C.; Karniadakis, G.E. From PINNs to PIKAN: Recent Advances in Physics-Informed Machine Learning. arXiv 2024, arXiv:2410.13237.

37. Kiamari, M.; Kiamari, M.; Krishnamachari, B. GKAN: Graph Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2406.06470.

38. Ai, G.; Pang, G.; Qiao, H.; Gao, Y.; Yan, H. GrokFormer: Graph Fourier Kolmogorov-Arnold Transformers. arXiv 2025, arXiv:2411.17296.

39. Fang, T.; Gao, T.; Wang, C.; Shang, Y.; Chow, W.; Chen, L.; Yang, Y. KAA: Kolmogorov-Arnold Attention for Enhancing Attentive Graph Neural Networks. arXiv 2025, arXiv:2501.13456.

40. Inzirillo, H.; Genet, R. SigKAN: Signature-Weighted Kolmogorov-Arnold Networks for Time Series. arXiv 2024, arXiv:2406.17890.

41. Di Carlo, G.; Mastropietro, A.; Anagnostopoulos, A. Kolmogorov-Arnold Graph Neural Networks. arXiv 2024, arXiv:2406.18354.

42. Inzirillo, H. Deep State Space Recurrent Neural Networks for Time Series Forecasting. arXiv 2024, arXiv:2407.15236.

43. Zheng, L.N.; Zhang, W.E.; Yue, L.; Xu, M.; Maennel, O.; Chen, W. Free-Knots Kolmogorov-Arnold Network: On the Analysis of Spline Knots and Advancing Stability. arXiv 2025, arXiv:2501.09283.

44. Lin, H.; Ren, M.; Barucca, P.; Aste, T. Granger Causality Detection with Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2412.15373.

45. Zhang, F.; Zhang, X. GraphKAN: Enhancing Feature Extraction with Graph Kolmogorov Arnold Networks. arXiv 2024, arXiv:2406.13597.

46. Gao, Y.; Tan, V.Y.F. On the Convergence of (Stochastic) Gradient Descent for Kolmogorov–Arnold Networks. arXiv 2024, arXiv:2410.08041.

47. Genet, R.; Inzirillo, H. TKAN: Temporal Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2405.07344.

48. Gao, W.; Gong, Z.; Deng, Z.; Rong, F.; Chen, C.; Ma, L. TabKANet: Tabular Data Modeling with Kolmogorov-Arnold Network and Transformer. arXiv 2024, arXiv:2409.08806.

49. Moradi, M.; Panahi, S.; Bollt, E.; Lai, Y.-C. Kolmogorov-Arnold Network Autoencoders. arXiv 2024, arXiv:2410.02077.

50. Cheon, M. Kolmogorov-Arnold Network for Satellite Image Classification in Remote Sensing. arXiv 2024, arXiv:2406.00600.

51. Yang, X.; Wang, X. Kolmogorov-Arnold Transformer. arXiv 2024, arXiv:2409.10594.

52. Li, C.; Liu, X.; Li, W.; Wang, C.; Liu, H.; Liu, Y.; Chen, Z.; Yuan, Y. U-KAN Makes Strong Backbone for Medical Image Segmentation and Generation. arXiv 2024, arXiv:2406.02918.

53. SS, S.; AR, K.; R, G.; KP, A. Chebyshev Polynomial-Based Kolmogorov-Arnold Networks: An Efficient Architecture for Nonlinear Function Approximation. arXiv 2024, arXiv:2405.07200.

54. Ferdaus, M.M.; Abdelguerfi, M.; Ioup, E.; Dobson, D.; Niles, K.N.; Pathak, K.; Sloan, S. KANICE: Kolmogorov-Arnold Networks with Interactive Convolutional Elements. arXiv 2024, arXiv:2410.17172.

55. Ta, H.-T.; Thai, D.-Q.; Tran, A.; Sidorov, G.; Gelbukh, A. PRKAN: Parameter-Reduced Kolmogorov-Arnold Networks. arXiv 2025, arXiv:2501.07032.

56. Patel, S.B.; Goh, V.; FitzGerald, J.F.; Antoniades, C.A. 2D and 3D Deep Learning Models for MRI-based Parkinson’s Disease Classification: A Comparative Analysis of Convolutional Kolmogorov-Arnold Networks, Convolutional Neural Networks, and Graph Convolutional Networks. arXiv 2024, arXiv:2407.17380.

57. Bodner, A.D.; Tepsich, A.S.; Spolski, J.N.; Pourteau, S. Convolutional Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2406.13155.

58. Reinhardt, E.A.F.; Dinesh, P.R.; Gleyzer, S. SineKAN: Kolmogorov-Arnold Networks Using Sinusoidal Activation Functions. Front. Artif. Intell. 2025, 7, 1462952.

59. Bozorgasl, Z.; Chen, H. Wav-KAN: Wavelet Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2405.12832.

60. Wang, Y.; Yu, X.; Gao, Y.; Sha, J.; Wang, J.; Gao, L.; Zhang, Y.; Rong, X. SpectralKAN: Kolmogorov-Arnold Network for Hyperspectral Images Change Detection. arXiv 2024, arXiv:2407.00949.

61. Al-qaness, M.A.A.; Ni, S. TCNN-KAN: Optimized CNN by Kolmogorov-Arnold Network and Pruning Techniques for sEMG Gesture Recognition. IEEE J. Biomed. Health Inform. 2025, 29, 188–197.

62. Jiang, C.; Li, Y.; Luo, H.; Zhang, C.; Du, H. KANNet: Kolmogorov–Arnold Networks and multi slice partition channel priority attention in convolutional neural network for lung nodule detection. Biomed. Signal Process. Control 2025, 103, 107358.

63. Jahin, M.A.; Masud, M.A.; Mridha, M.F.; Aung, Z.; Dey, N. KACQ-DCNN: Uncertainty-Aware Interpretable Kolmogorov-Arnold Classical-Quantum Dual-Channel Neural Network for Heart Disease Detection. arXiv 2024, arXiv:2410.07446.

64. Liu, T.; Xiao, H.; Sun, Y.; Zuo, K.; Li, Z.; Yang, Z.; Liu, S. PhysKANNet: A KAN-based model for multiscale feature extraction and contextual fusion in remote physiological measurement. Biomed. Signal Process. Control 2025, 100, 107111.

65. Abd Elaziz, M.; Fares, I.A.; Aseeri, A.O. CKAN: Convolutional Kolmogorov–Arnold Networks Model for Intrusion Detection in IoT Environment. IEEE Access 2024, 12, 134837–134851.

66. Batreddy, S.; Pamisetty, G.; Kothuri, M.; Verma, P.; Babu, C.S. Adaptive Neighborhood Sampling and KAN-Based Edge Features for Enhanced Fraud Detection. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 3375–3381.

67. Danish, M.U.; Grolinger, K. Kolmogorov–Arnold recurrent network for short term load forecasting across diverse consumers. Energy Rep. 2025, 13, 713–727.

68. Shuai, H.; Li, F. Physics-Informed Kolmogorov-Arnold Networks for Power System Dynamics. IEEE Open Access J. Power Energy 2025, 12, 46–58.

Figure 1.

Cubic B-spline basis function with support on four consecutive knot intervals.

Figure 2.

Comparison between different basis functions used in KAN. The cubic B-spline illustrates local adaptability, the classical Chebyshev polynomial demonstrates global approximation but is limited to $[-1, 1]$, and the modified Chebyshev extends the domain to $\mathbb{R}$ using a nonlinear tanh mapping.

Figure 3.

Comparison between a cubic B-spline and Gram polynomials of two different orders. The spline demonstrates local adaptability constrained to knot-defined intervals, while Gram polynomials extend globally over their domain, offering orthogonality and smooth approximation properties. This contrast highlights the shift from local piecewise functions to global orthogonal bases in GKAN.

Figure 4.

Comparison between a cubic B-spline and a Morlet wavelet, representing the activation bases of classical KAN and Wav-KAN, respectively. The spline shows local adaptability constrained to knot-defined intervals, while the Morlet wavelet displays oscillatory behavior with exponential decay, enabling multi-resolution representation of local and global patterns.

Figure 5.

Comparison between a cubic spline and a monotonic Hermite spline. The classical spline allows oscillations that may lead to decreasing regions, whereas the monotonic variant enforces non-negativity of the derivative, ensuring non-decreasing behavior across the interval.

Figure 6.

Comparison between cubic spline and rational function approximations of a discontinuous function. Rational-KAN provide a more compact representation, while splines show oscillatory artifacts around discontinuities.

Figure 7.

Comparison between spline-based KAN and Physics-KAN in approximating Burgers’ equation. The physics-constrained approach enforces PDE consistency, improving extrapolation beyond observed data.

Figure 8.

Comparison between cubic spline and linear spline approximations of a smooth function. The cubic spline achieves smoother behavior, while the linear spline approximates the function with piecewise linear segments, sacrificing smoothness for efficiency.

Figure 9.

Comparison between cubic splines and Legendre polynomials in KAN activations. Splines adapt locally to variations, whereas orthogonal polynomials provide global smoothness with potential oscillations near boundaries.

Figure 10.

Comparison between a cubic spline and a Mexican Hat wavelet in modeling an oscillatory signal. The spline captures smooth local trends (blue), whereas the scaled Mexican Hat wavelet (red) provides multi-resolution adaptability to localized fluctuations; the dashed black line is the true signal.

Table 1.

Comparison of Interpolation Methods.

| Method | Advantage | Disadvantage |
|---|---|---|
| Lagrange | Exact at points | Instability for large $n$ |
| Bézier | Intuitive shape control | Does not interpolate all points |
| Linear | Simplicity | Lack of smoothness (only $C^0$) |
| Cubic Splines | $C^2$ smoothness | High computational cost |
| Hermite | Derivative control | Requires extra data |

Table 2.

Comparison between spline-based KAN and Chebyshev-based variants.

| Property | Cubic Splines (Classical KAN) | Chebyshev Polynomials | Modified Chebyshev |
|---|---|---|---|
| Support | Local (compact intervals defined by knots) | Global, restricted to $[-1, 1]$ | Global, extended to $\mathbb{R}$ through $\tanh$ |
| Adaptability | High flexibility for local variations | Limited, smooth global approximation | Smooth global approximation with domain extension |
| Numerical Stability | Stable under knot refinement | Unstable outside $[-1, 1]$ | Stable across the entire domain |
| Parameterization | Requires knot placement and coefficients | Only polynomial coefficients | Only polynomial coefficients (with nonlinear mapping) |
| Best suited for | Problems with abrupt local changes | Smooth functions on bounded domains | Functions requiring extrapolation or unbounded domains |
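
The domain extension summarized in the last column can be illustrated with a minimal sketch. It assumes the modified activation has the form $\sum_k c_k T_k(\tanh x)$; the function names and the coefficient ordering are hypothetical choices made for illustration, not taken from any of the cited implementations.

```python
import math

def chebyshev_basis(u, degree):
    """Return [T_0(u), ..., T_degree(u)] using T_k = 2u*T_{k-1} - T_{k-2}."""
    values = [1.0, u]
    for _ in range(2, degree + 1):
        values.append(2.0 * u * values[-1] - values[-2])
    return values[: degree + 1]

def modified_chebyshev_activation(x, coeffs):
    """Evaluate sum_k coeffs[k] * T_k(tanh(x)) (assumed form of the variant).

    tanh maps any real x into (-1, 1), so the polynomials are never
    evaluated outside the interval where they stay bounded.
    """
    u = math.tanh(x)
    basis = chebyshev_basis(u, len(coeffs) - 1)
    return sum(c * t for c, t in zip(coeffs, basis))
```

Because $|T_k(u)| \le 1$ on $[-1, 1]$, the output of this sketch is bounded by the sum of the absolute coefficients for every real input, which is the stability property the table attributes to the modified variant.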

Table 3.

Comparison between spline-based, Chebyshev-based, and Gram polynomial activations in KAN.

| Property | Cubic Splines (Classical KAN) | Chebyshev Polynomials | Gram Polynomials (GKAN) |
|---|---|---|---|
| Support | Local, compact intervals defined by knots | Global, restricted to $[-1, 1]$ (extended via tanh) | Global, orthogonal basis |
| Adaptability | Strong adaptability to abrupt local variations | Effective for smooth global approximations, weak for local details | Moderate flexibility, adapts via weighted combinations of global bases |
| Numerical Stability | Stable under knot refinement, but prone to overfitting if knots are dense | Sensitive to instability outside $[-1, 1]$ | High stability due to orthogonality, prevents ill-conditioning |
| Computational Cost | Requires recursive evaluations of spline segments | Higher complexity for large $k$ due to trigonometric evaluations | Reduced complexity, efficient for high-dimensional tasks |
| Best suited for | Piecewise-smooth functions, signals with local discontinuities | Smooth functions in bounded domains | Sequential data, real-time applications, and resource-constrained systems |

Table 4.

Comparison between spline-based, Chebyshev-based, and wavelet-based activations in KAN.

| Property | Cubic Splines (Classical KAN) | Chebyshev Polynomials | Wavelet Functions (Wav-KAN) |
|---|---|---|---|
| Support | Local, compact intervals defined by knots | Global, restricted to $[-1, 1]$ (extended via tanh) | Localized but multi-scale, defined by scale and translation |
| Adaptability | High flexibility for local variations | Effective for smooth global approximations, weak for abrupt local changes | Captures both local details and global patterns through multi-resolution |
| Numerical Stability | Stable under knot refinement | Unstable outside $[-1, 1]$ without modification | Stable across domains but sensitive to choice of mother wavelet |
| Parameterization | Knot positions and coefficients | Only polynomial coefficients | Trainable scale, translation, and weights |
| Best suited for | Piecewise-smooth functions, abrupt local changes | Smooth functions on bounded domains | Non-stationary signals, multi-resolution tasks (e.g., audio, images, seismic data) |
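
The wavelet parameterization in the last column can be sketched as follows. The real-valued Morlet form $\psi(t) = \cos(\omega_0 t)\, e^{-t^2/2}$ and the default $\omega_0 = 5$ are assumptions made for illustration, as are the function and argument names; a trained Wav-KAN edge would learn the scale, translation, and weight rather than receive them as constants.

```python
import math

def morlet(t, omega0=5.0):
    """Real Morlet mother wavelet: a cosine under a Gaussian envelope.

    omega0 (the central frequency) is an assumed default, not a value
    taken from the source.
    """
    return math.cos(omega0 * t) * math.exp(-0.5 * t * t)

def wavelet_activation(x, scale, translation, weight, omega0=5.0):
    """Edge activation weight * psi((x - translation) / scale).

    A small scale narrows the wavelet (fine detail); a large scale widens
    it (coarse trends). The translation moves its center along the input.
    """
    t = (x - translation) / scale
    return weight * morlet(t, omega0)
```

Evaluating the same input under several scales is what gives the multi-resolution behavior described in the table: each scale responds to structure of a different width around the translation point.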

Table 5.

Comparison between classical splines, Gram polynomials, and monotonic splines in KAN.

| Property | Cubic Splines (Classical KAN) | Gram Polynomials (GKAN) | Monotonic Splines (MonoKAN) |
|---|---|---|---|
| Support | Local, compact intervals defined by knots | Global, orthogonal basis | Local, constrained Hermite splines |
| Adaptability | Highly flexible, capable of modeling abrupt local changes | Moderate flexibility, controlled by polynomial weights | Restricted to non-decreasing trends, reduced local variability |
| Numerical Stability | Stable under knot refinement but sensitive to overfitting | High stability due to orthogonality | Stable under constraints, but optimization is more complex |
| Interpretability | Moderate, coefficients depend on knot placement | Limited, interpretation tied to polynomial order | High, monotonicity and positive weights align with domain knowledge |
| Best suited for | General-purpose function approximation, irregular signals | Sequential and high-dimensional tasks with resource constraints | Finance, actuarial science, medicine, and other monotonic processes |

Table 6.

Comparison between spline-based KAN and Rational-KAN.

| Aspect | Spline-Based KAN | Rational-KAN |
|---|---|---|
| Locality | Local support via knots, highly adaptable to localized regions | Global representation, less flexible for abrupt local irregularities |
| Representation power | Polynomial-like behavior, limited for discontinuities | Exponential convergence, compact modeling of sharp gradients or discontinuities |
| Parameters | Count dependent on knots | Count independent of knots |
| Numerical stability | Stable under knot selection, low risk of divergence | Sensitive to denominator zeros, requires pole-avoidance mechanisms |
| Applications | Smooth signals, localized variability | Functions with discontinuities, rational-like behaviors, efficient for sharp transitions |
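
The pole-avoidance requirement noted under numerical stability can be illustrated with one common device: forcing the denominator to be at least 1 by writing it as $1 + |x\,Q(x)|$. This is an assumed construction shown only as an example of such a mechanism; the Rational-KAN variants discussed here may use a different one, and all names below are hypothetical.

```python
def polyval(coeffs, x):
    """Horner evaluation; coeffs are ordered from the constant term upward."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result

def safe_rational(x, num_coeffs, den_coeffs):
    """Evaluate P(x) / (1 + |x * Q(x)|).

    The denominator is always >= 1, so the activation has no real poles
    regardless of the learned coefficients (assumed pole-avoidance form).
    """
    numerator = polyval(num_coeffs, x)
    denominator = 1.0 + abs(x * polyval(den_coeffs, x))
    return numerator / denominator
```

For example, the unconstrained ratio $x / (1 + x)$ diverges at $x = -1$, whereas `safe_rational(-1.0, [0.0, 1.0], [1.0])` stays finite because the absolute value keeps the denominator away from zero.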

Table 7.

Comparison between spline-based KAN and Physics-KAN.

| Aspect | Spline-Based KAN | Physics-KAN |
|---|---|---|
| Locality | Local support of spline bases | Local support preserved, but constrained by PDEs |