1. Introduction

Anomaly detection, as an important research direction in data mining and machine learning, aims to identify anomalous instances that significantly deviate from normal patterns [

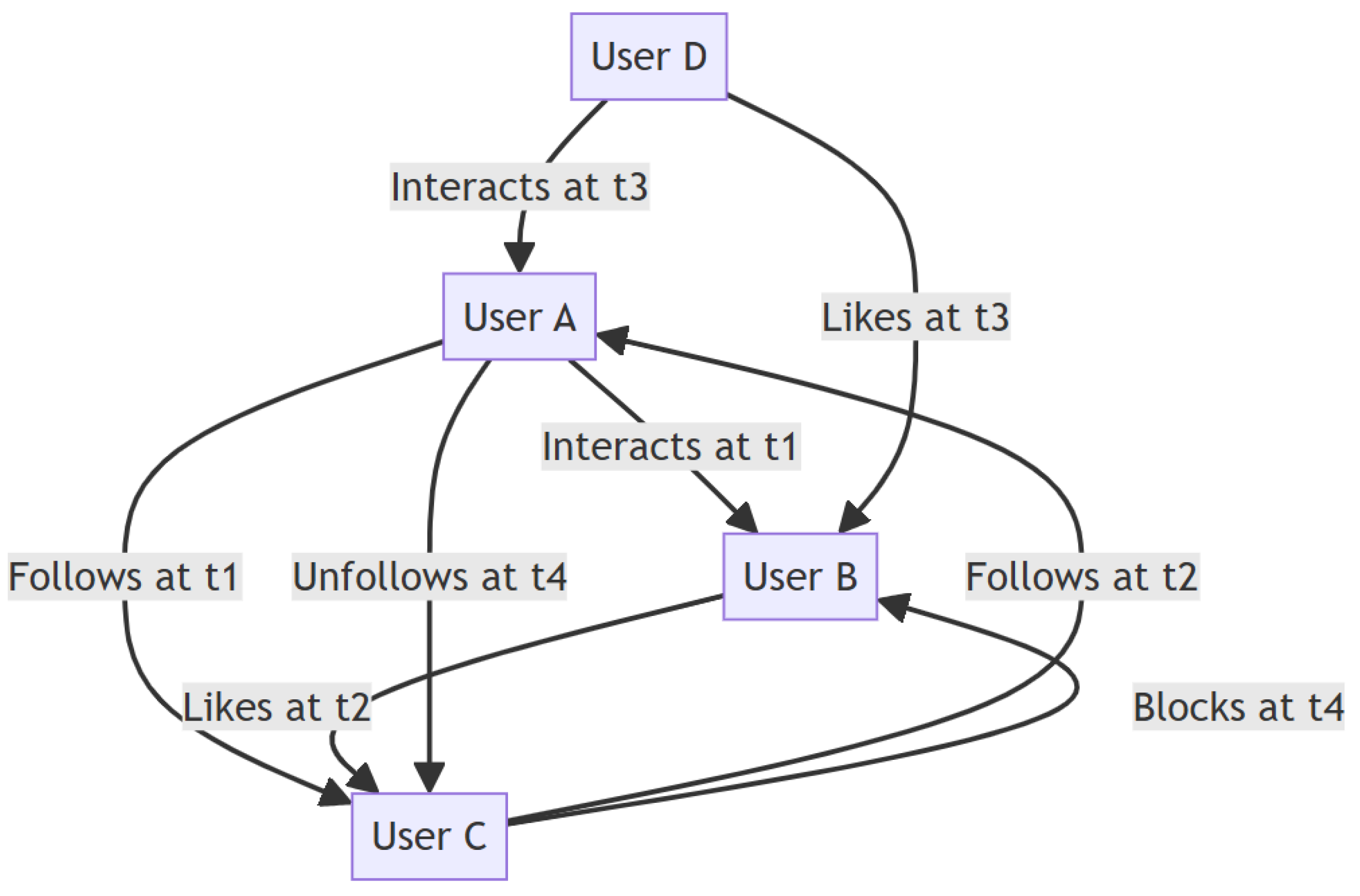

1]. In the real world, data in many application scenarios naturally possess graph structure characteristics, such as user interactions in social networks, transaction relationships in financial systems, and communication patterns in network security. These graph-structured data not only contain rich topological information but also evolve dynamically over time, forming dynamic graphs [

2].

Traditional anomaly detection methods are mainly designed for static data [3] and have difficulty effectively handling complex relational patterns in graph-structured data. In recent years, the rapid development of Graph Neural Networks (GNNs) has provided new solutions for graph anomaly detection [4,5,6]. However, existing graph anomaly detection research mainly focuses on static graphs [7,8], ignoring the dynamic evolution characteristics of graph structures in the real world.

Dynamic graph anomaly detection faces unique challenges. First, the topological structure and node attributes of graphs continuously change over time, and traditional static graph methods cannot capture such temporal evolution patterns [9]. Second, anomalous behaviors in dynamic graphs often exhibit specific temporal patterns. Finally, in practical applications, obtaining large amounts of labeled data is costly, so it is necessary to fully utilize large amounts of unlabeled data under the guidance of a small amount of labeled samples [10].

These limitations directly motivate our work: there is an urgent need for a framework that can (1) effectively capture continuous temporal dependencies in dynamic graphs, (2) model complex spatiotemporal relationships through advanced attention mechanisms, and (3) efficiently leverage unlabeled data in semi-supervised scenarios.

In recent years, various methods have been proposed for anomaly detection in dynamic graphs, such as TADDY [11], which utilizes Transformer encoders to process spatiotemporal information. However, TADDY primarily adopts a discrete-time modeling approach, which may not fully capture the continuous interactions and temporal dependencies inherent in dynamic graphs. Our proposed method, TSAD, addresses these limitations by introducing a specialized architecture that leverages multi-head attention mechanisms and time-aware positional encoding, allowing for a more nuanced understanding of the temporal evolution of graph structures.

Although existing dynamic graph neural network methods [12,13] consider temporal information, they mostly adopt discrete-time snapshot modeling and have difficulty handling continuous-time dynamic interactions. Moreover, these methods mainly rely on supervised learning paradigms and perform poorly in anomaly detection tasks with scarce labeled data.

Recent semi-supervised dynamic graph anomaly detection methods [11,14] attempt to incorporate temporal information but still have the following limitations: (1) insufficient temporal modeling capability, since they simply treat time as an input feature and cannot fully capture complex temporal dependencies; and (2) insufficient utilization of unlabeled data, since they lack effective mechanisms to mine useful information from large amounts of unlabeled samples.

The Transformer architecture, with its powerful sequence modeling capability and self-attention mechanism, has achieved tremendous success in natural language processing [15] and computer vision. In recent years, researchers have begun exploring the application of the Transformer to graph data processing [16], but its application in the field of dynamic graph anomaly detection is still in its infancy. The multi-head attention mechanism of the Transformer can effectively capture long-range dependencies, and positional encoding helps understand temporal patterns in sequences, making it very suitable for handling complex spatiotemporal relationships in dynamic graphs.

Based on the above analysis, this paper proposes a Transformer-based semi-supervised anomaly detection model for dynamic graphs (TSAD). The core of the model lies in combining the Transformer’s strength in continuous temporal modeling with semi-supervised learning mechanisms to address two major challenges at once: “capturing complex temporal dependencies” and “utilizing unlabeled data.” First, by introducing sine–cosine temporal positional encoding and a graph structure-aware attention mechanism, the model integrates the temporal and structural information of dynamic interactions and characterizes the evolutionary process of the graph. Second, by designing an adaptive memory bank that maintains normal pattern prototypes and combining it with a confidence-based pseudo-label generation strategy and contrastive learning, the model learns discriminative features from unlabeled data, enabling more precise anomaly identification. Finally, through multi-objective joint optimization, the model achieves strong detection performance and robustness in label-scarce scenarios. The main contributions of this framework include the following:

Innovative Architecture Design: Introduction of a Transformer architecture with continuous-time modeling into dynamic graph anomaly detection, effectively capturing long-range spatiotemporal dependencies between nodes through time-aware multi-head attention mechanisms.

Enhanced Temporal Modeling: Design of specialized temporal encoding schemes and positional embedding mechanisms, enabling the model to understand periodic patterns and temporal evolution patterns in dynamic graphs.

Semi-supervised Learning Optimization: Proposal of pseudo-label-based contrastive learning modules, combined with time-decay memory bank mechanisms, to fully exploit the potential of unlabeled data.

4. Model Framework

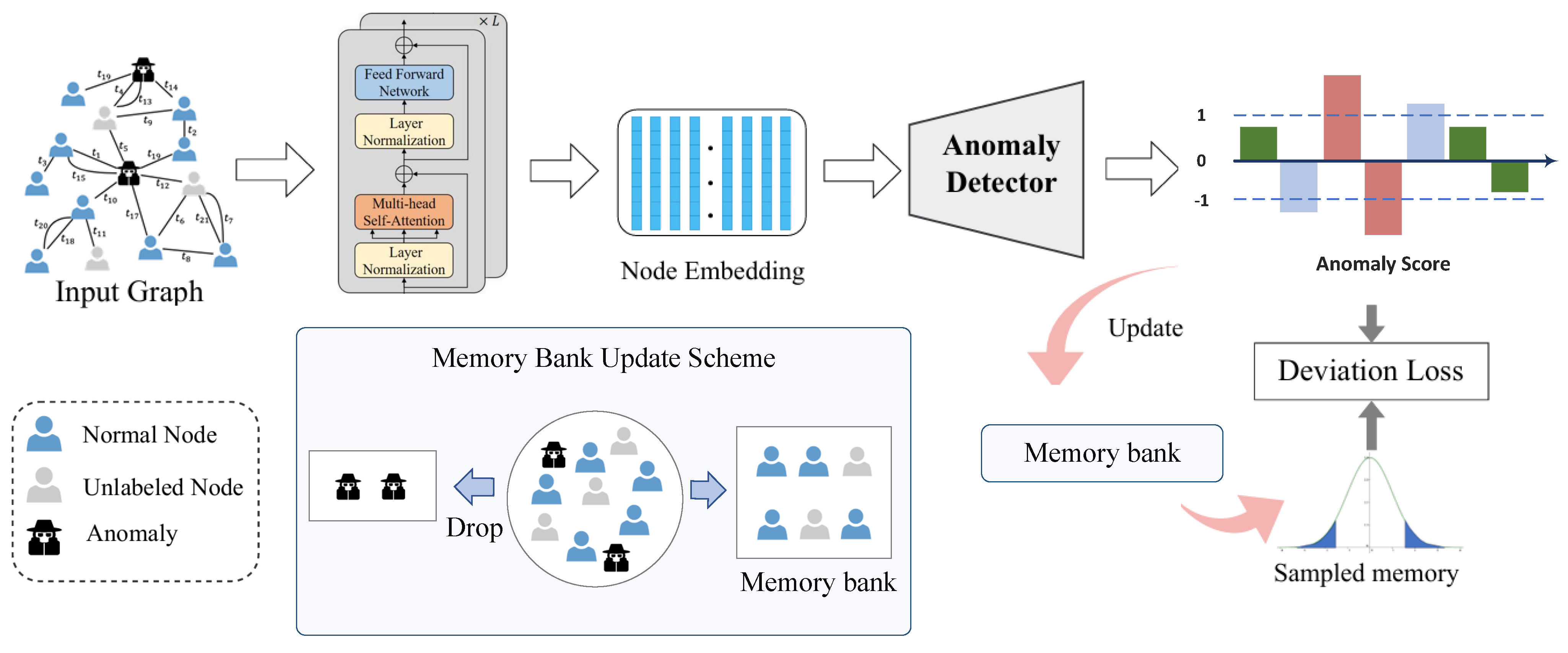

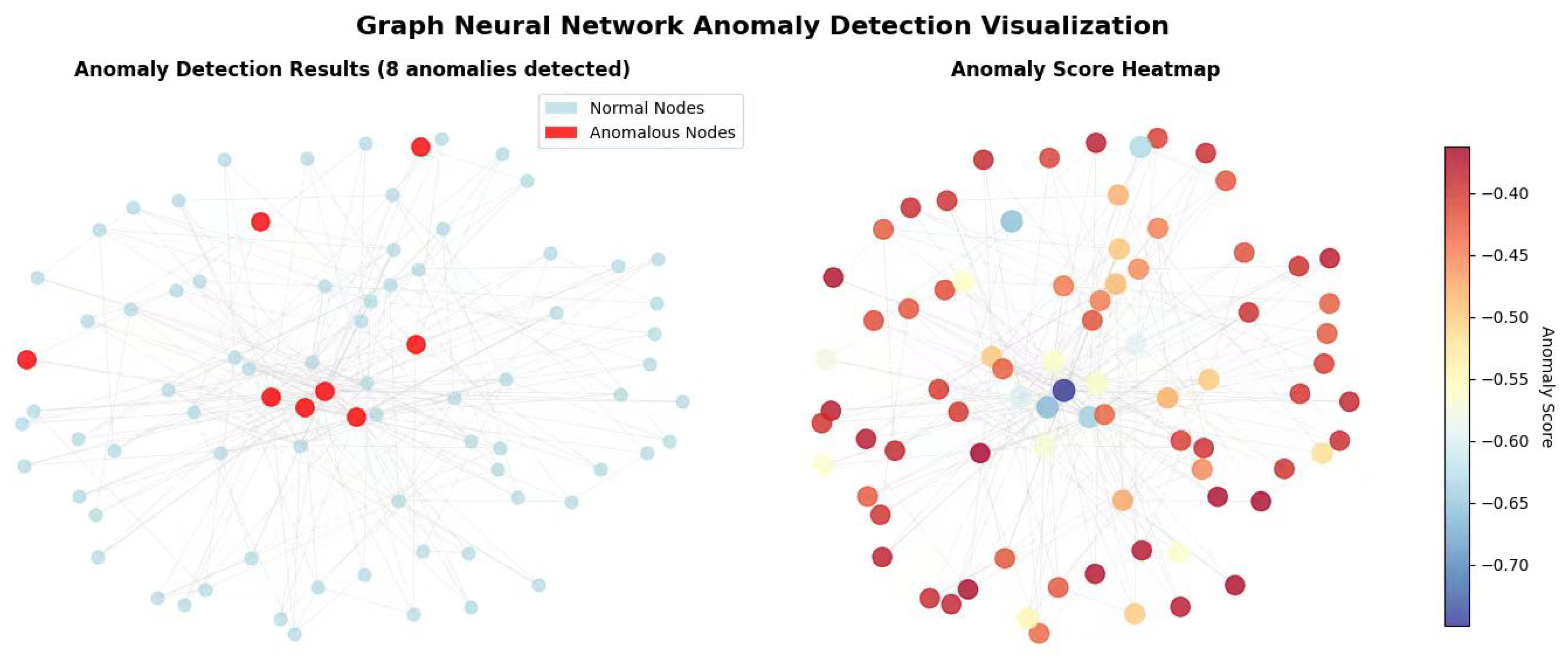

This section details the design and implementation of the TSAD framework. As shown in Figure 2, the TSAD framework adopts end-to-end training and can effectively handle semi-supervised anomaly detection tasks in dynamic graphs.

Specifically, given a dynamic graph sequence input, the Transformer encoder first captures complex spatiotemporal dependencies and multi-scale feature representations between nodes. The adaptive memory storage mechanism maintains statistical distributions of normal samples, providing reliable reference benchmarks for pseudo-label generation. The confidence-based pseudo-label generation strategy combined with the collaborative contrastive learning framework fully exploits the potential value of unlabeled data. Finally, through joint optimization of multiple loss functions, precise identification of anomalous patterns in dynamic graphs is achieved.

The TSAD framework is specifically designed to tackle the challenges posed by dynamic graphs. Unlike TADDY, which relies on discrete snapshots, our approach employs a continuous-time representation that effectively captures the evolving nature of interactions. The core of our model is the multi-head attention mechanism, which not only facilitates the learning of long-range dependencies but also incorporates temporal information through a specially designed positional encoding scheme. This allows our model to adaptively focus on relevant interactions at different time steps, enhancing its ability to detect anomalies in dynamic contexts.

4.1. Multi-Head Attention Temporal Encoding Mechanism

4.2. Transformer-Based Dynamic Graph Encoding

To capture temporal evolution information in dynamic graphs, we design a specialized temporal positional encoding module. Inspired by the positional encoding in the Transformer [15], we define, for each time step, an encoding that combines sine and cosine functions, where t is the time step, j is the dimension index, and the encoding has the same dimension as the model.

We choose sine and cosine functions for two reasons. First, these functions are periodic, so they can effectively capture cyclic patterns in time series, which is crucial for analyzing node behavior in dynamic graphs. Second, their smoothness and phase information enable the model to better understand trends in temporal changes, thereby improving the accuracy of anomaly detection. Sine–cosine time encoding therefore enhances the model’s ability to capture complex temporal dependencies.

The temporal encoding is added to node features to obtain time-aware node representations.

This design enables the model to distinguish the states of the same node at different times, providing important temporal information for subsequent anomaly detection.
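The encoding described above can be sketched in a few lines. This is a minimal illustration that assumes the standard Transformer sinusoidal form; the function names, the base constant of 10000, and the example feature vector are assumptions for illustration, not values taken from this paper.

```python
import math

def temporal_encoding(t, d_model, base=10000.0):
    """Return the sine-cosine encoding of time step t as a list of length d_model."""
    enc = []
    for j in range(d_model):
        # Dimensions 2k and 2k+1 share one frequency (standard Transformer choice).
        freq = 1.0 / (base ** ((2 * (j // 2)) / d_model))
        angle = t * freq
        enc.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
    return enc

def add_time(features, t):
    """Add the encoding of time step t to one node's feature vector."""
    enc = temporal_encoding(t, len(features))
    return [x + e for x, e in zip(features, enc)]

x = [0.5, -1.0, 0.25, 2.0]   # hypothetical node features
x_t0 = add_time(x, 0)        # sin(0) = 0 and cos(0) = 1, so odd dimensions shift by 1
x_t3 = add_time(x, 3)        # same node, later time step, different representation
```

Because the encoding depends only on t, the same node receives different representations at different time steps, which is the property the paragraph above relies on.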

The standard Transformer self-attention mechanism cannot directly handle graph structure information. We therefore design a graph structure-aware attention mechanism that integrates the graph’s topological structure into the attention computation. First, query, key, and value matrices are obtained through linear transformations.

To maintain the local structural characteristics of the graph, we introduce a structural bias term into the attention computation so that only adjacent nodes participate in attention. The bias is set to 0 for adjacent nodes and to negative infinity for non-adjacent nodes, so non-adjacent pairs receive zero weight after the softmax operation.
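The effect of the structural bias can be illustrated with a small single-head sketch. It assumes scaled dot-product attention and, as an extra assumption not stated in the text, always permits self-attention so that no row is fully masked; `masked_attention` and the toy path graph are hypothetical.

```python
import math

def masked_attention(Q, K, V, adj):
    """Scaled dot-product attention where node i attends only to neighbors j."""
    n, d_k = len(Q), len(Q[0])
    out, attn = [], []
    for i in range(n):
        scores = []
        for j in range(n):
            dot = sum(q * k for q, k in zip(Q[i], K[j]))
            # Structural bias: 0 for adjacent pairs, -inf otherwise.
            # Assumption: self-loops are always allowed so each row has a finite score.
            bias = 0.0 if (adj[i][j] or i == j) else -math.inf
            scores.append(dot / math.sqrt(d_k) + bias)
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]  # exp(-inf) evaluates to 0.0
        z = sum(exps)
        weights = [e / z for e in exps]
        attn.append(weights)
        out.append([sum(w * V[j][c] for j, w in enumerate(weights))
                    for c in range(len(V[0]))])
    return out, attn

Q = K = V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # path graph 0 - 1 - 2
out, attn = masked_attention(Q, K, V, adj)
```

In this toy graph, nodes 0 and 2 are not adjacent, so the attention weight between them is exactly zero, while node 1 attends to both neighbors and to itself.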

To capture different types of node relationship patterns and interaction modes, we adopt multi-head attention mechanisms. Each attention head focuses on learning specific types of node relationships, and the outputs of the H attention heads are fused through concatenation followed by a linear output projection. This design enhances the model’s ability to capture complex node relationships.
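For reference, the fusion step in the standard Transformer, which this description appears to follow, can be written as below. The symbols $Q_h$, $K_h$, $V_h$, and $W^{O}$ are assumed notation for the per-head matrices and the output projection matrix; the graph-aware attention inside each head is abbreviated as $\mathrm{Attention}$.

$$
\mathrm{head}_h = \mathrm{Attention}(Q_h, K_h, V_h), \qquad
\mathrm{MultiHead}(X) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_H)\, W^{O}
$$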

To model evolution patterns and long-term dependencies between nodes at different times, we introduce cross-temporal attention mechanisms. For a node’s representation sequence at historical moments, temporal attention weights are calculated:

Through weighted aggregation of historical information, node representations fused with temporal context are obtained:
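A minimal sketch of cross-temporal attention for a single node follows. It assumes that the most recent state acts as the query and that scores are scaled dot products; both choices, and the name `temporal_attention`, are illustrative assumptions rather than details confirmed by the text.

```python
import math

def temporal_attention(history):
    """Fuse a node's past states; the latest state acts as the query (assumption)."""
    query = history[-1]
    d = len(query)
    scores = [sum(q * h for q, h in zip(query, past)) / math.sqrt(d)
              for past in history]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    weights = [e / z for e in exps]  # temporal attention weights
    # Weighted aggregation of the historical representations.
    fused = [sum(w * past[c] for w, past in zip(weights, history))
             for c in range(d)]
    return weights, fused

history = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # states at t-2, t-1, t
weights, fused = temporal_attention(history)
```

Here the states at t-2 and t match the query direction and receive equal, larger weights than the state at t-1.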

Using the standard Transformer architecture, each Transformer layer includes multi-head self-attention sublayers and feed-forward neural network sublayers, with residual connections and layer normalization to stabilize the training process:

By stacking multiple Transformer layers, the model can learn more complex and abstract node representations, effectively capturing multi-level feature interaction patterns in dynamic graphs, providing high-quality node embedding representations for subsequent anomaly detection tasks.
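The layer structure described above (self-attention and feed-forward sublayers, each wrapped in a residual connection and layer normalization) is commonly written as follows. This assumes the post-normalization arrangement of the original Transformer; the symbols $\tilde{X}$ and $X'$ are introduced here only for illustration.

$$
\tilde{X} = \mathrm{LayerNorm}\big(X + \mathrm{MultiHead}(X)\big), \qquad
X' = \mathrm{LayerNorm}\big(\tilde{X} + \mathrm{FFN}(\tilde{X})\big)
$$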

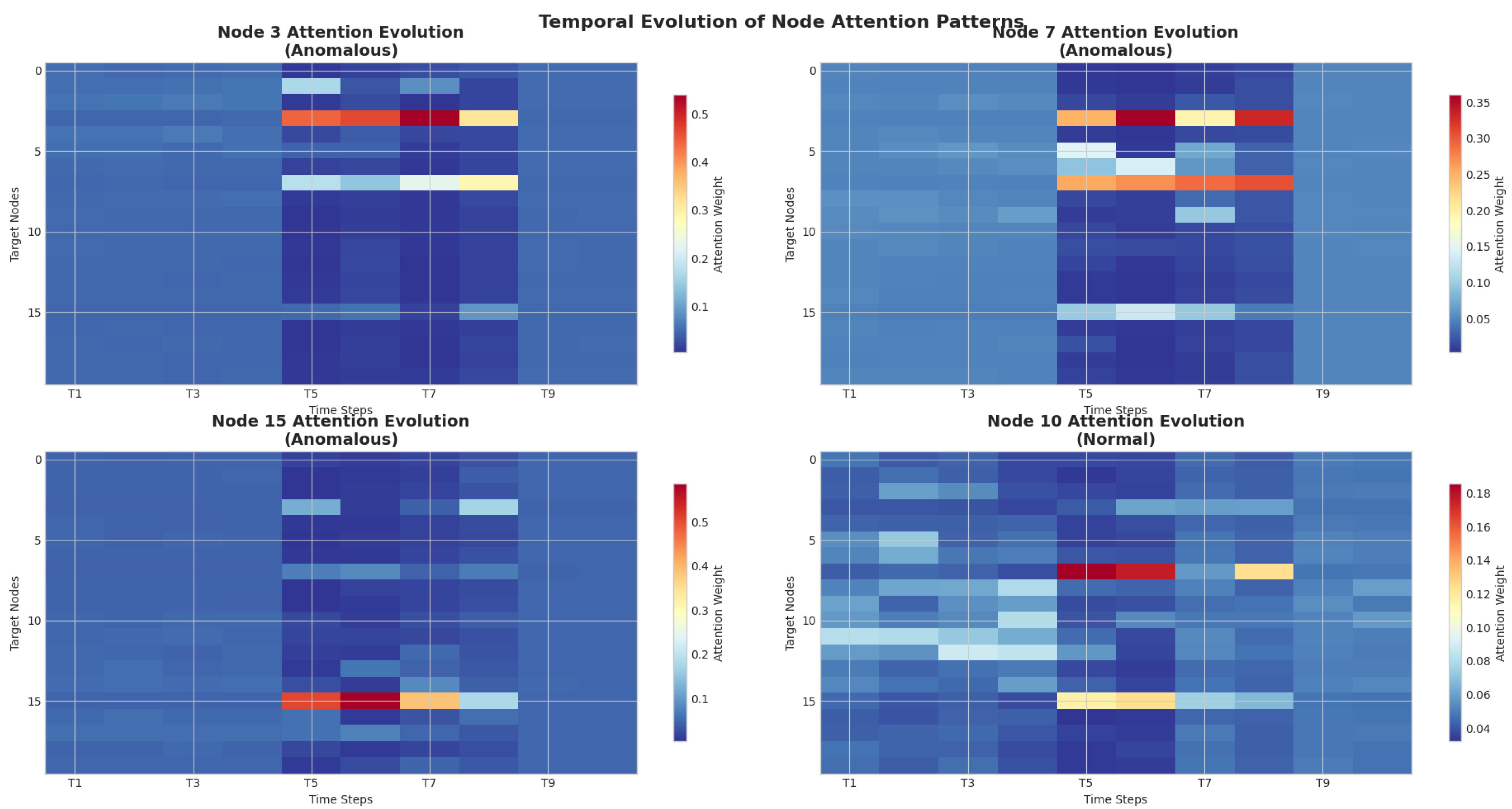

The effectiveness of multi-head attention in dynamic graph anomaly detection can be theoretically justified from multiple perspectives. First, the multi-head mechanism enables the model to capture different types of anomalous patterns simultaneously. Each attention head can focus on specific aspects of node behavior, such as structural anomalies, temporal irregularities, or attribute deviations. This parallel processing capability is particularly crucial for anomaly detection, where anomalous patterns may manifest across multiple dimensions.

Second, the attention mechanism provides natural interpretability for anomaly detection. The attention weights can be interpreted as importance scores, indicating which temporal interactions or neighboring nodes contribute most to the anomaly decision. This interpretability is essential for practical applications where understanding the reasoning behind anomaly detection is crucial.

Third, the temporal encoding mechanism enables the model to distinguish between normal periodic patterns and anomalous temporal deviations. By incorporating positional encoding that captures both absolute and relative temporal information, the model can identify subtle temporal anomalies that would be missed by simpler temporal modeling approaches.

4.3. Adaptive Memory Storage Mechanism

4.3.1. Memory Storage Structure Design

To maintain statistical distributions of normal samples and adapt to dynamic data changes, we design an adaptive memory storage mechanism. The memory storage contains M memory slots, each storing a prototype vector and a corresponding confidence weight; slot j holds the j-th prototype vector together with its confidence weight. This design enables the model to dynamically maintain diverse patterns of normal samples. The number of memory slots M is chosen according to the following principles:

Empirical Setting Principle: We set M to 64 based on an empirical estimate of the diversity of normal sample patterns. Preliminary analysis showed that normal behavior patterns in most dynamic graph datasets can be effectively represented by dozens of prototype vectors.

Computational Efficiency Balance: M = 64 provides a good balance between representation capability and computational overhead. A larger M would increase the similarity computation cost, while a smaller M may not adequately capture the diversity of normal patterns.

Experimental Validation: We tested several values of M and found that M = 64 achieved the best results on all three datasets. This indicates that the setting transfers reasonably well across different types of dynamic graphs.

4.3.2. Memory Reading and Similarity Computation

Given a node representation, the memory reading operation evaluates the node’s normality by computing its cosine similarity with each memory slot. Reading weights are then computed through a temperature-scaled softmax over these similarities, where the temperature parameter controls the sharpness of the attention distribution.
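The reading step can be sketched as follows. The names `cosine` and `read_memory`, the temperature value, and the three toy prototypes are assumptions for illustration only.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-12)  # small constant avoids division by zero

def read_memory(h, prototypes, tau=0.1):
    """Return (similarities, reading weights) of node vector h against all slots."""
    sims = [cosine(h, p) for p in prototypes]
    scaled = [s / tau for s in sims]          # temperature scaling
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]  # numerically stable softmax
    z = sum(exps)
    return sims, [e / z for e in exps]

prototypes = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # hypothetical memory slots
sims, weights = read_memory([0.9, 0.1], prototypes, tau=0.1)
```

A smaller temperature concentrates the reading weights on the best-matching slot, while a larger one spreads them more evenly.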

4.3.3. Adaptive Update Strategy

For nodes labeled as normal, we use an exponential moving average strategy to update the most similar memory slot, governed by a memory update rate and a confidence decay factor. This adaptive update mechanism enables the memory storage to adjust dynamically as data distributions change.
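One possible form of this update is sketched below. The prototype update is a plain exponential moving average; the confidence rule (moving the matched slot's confidence toward 1) is an assumption, since the exact formula is not recoverable from the text. The parameter names `alpha` and `gamma` and their values are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-12)

def update_memory(h, prototypes, confidences, alpha=0.1, gamma=0.9):
    """EMA-update, in place, the slot most similar to normal-node vector h."""
    sims = [cosine(h, p) for p in prototypes]
    j = sims.index(max(sims))  # most similar slot
    prototypes[j] = [(1 - alpha) * p + alpha * x
                     for p, x in zip(prototypes[j], h)]
    # Assumption: the matched slot's confidence moves toward 1 at rate (1 - gamma).
    confidences[j] = gamma * confidences[j] + (1 - gamma) * 1.0
    return j

prototypes = [[1.0, 0.0], [0.0, 1.0]]
confidences = [0.5, 0.5]
slot = update_memory([0.8, 0.2], prototypes, confidences)
```

Only the matched slot changes, so prototypes for other normal patterns are preserved while the matched one drifts toward recent normal behavior.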

4.4. Pseudo-Label Collaborative Contrastive Learning Strategy

4.4.1. Confidence-Based Pseudo-Label Generation

Based on the adaptive memory storage mechanism, we design a confidence-driven pseudo-label generation strategy. For unlabeled nodes, we first compute their matching degree with memory storage to evaluate anomaly levels.

The anomaly score for node i is defined as its weighted distance from the most similar prototype in memory storage, computed from the cosine similarity between node i and each memory slot j and the corresponding confidence weight.

The anomaly score is then normalized through the sigmoid function, which uses a learnable scaling parameter.
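The following sketch shows one way such a score could be computed. The specific form (one minus the best confidence-weighted similarity, followed by a scaled sigmoid) is an assumption made for illustration; `anomaly_score` and `beta` are hypothetical names, and `beta` would be a learned parameter in practice.

```python
import math

def anomaly_score(sims, confidences, beta=1.0):
    """Return (raw, normalized) anomaly score from slot similarities and confidences."""
    # Assumed form: distance to the best confidence-weighted prototype match.
    raw = 1.0 - max(w * s for s, w in zip(sims, confidences))
    normalized = 1.0 / (1.0 + math.exp(-beta * raw))  # sigmoid with scale beta
    return raw, normalized

# A node close to a trusted prototype versus a node far from every prototype.
raw_normal, p_normal = anomaly_score([0.95, 0.10], [1.0, 0.8], beta=2.0)
raw_odd, p_odd = anomaly_score([0.05, -0.20], [1.0, 0.8], beta=2.0)
```

Under this assumed form the raw score is non-negative, so the normalized score lies in [0.5, 1); any thresholds applied to it would have to be chosen on that range, or the score would need to be centered before the sigmoid.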

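To ensure pseudo-label quality, we adopt a dual-threshold strategy. Each rule combines a condition on the normalized anomaly score with a condition on memory similarity: nodes that satisfy both anomalous conditions are pseudo-labeled as anomalous, nodes that satisfy both normal conditions are pseudo-labeled as normal, and all other nodes remain unlabeled. The strategy uses separate confidence thresholds for the anomalous and normal cases, together with a similarity threshold.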
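The dual-threshold rule can be sketched as below. The direction of each inequality is an assumption (anomalies score high and match memory poorly; normal nodes score low and match memory well), and the threshold names and values are hypothetical.

```python
def pseudo_label(score, max_sim, theta_anom=0.9, theta_norm=0.6, theta_sim=0.5):
    """Return 1 (anomalous), 0 (normal), or None (left unlabeled)."""
    # Assumed directions: anomalies have high scores and low memory similarity.
    if score > theta_anom and max_sim < theta_sim:
        return 1
    # Normal nodes have low scores and high memory similarity.
    if score < theta_norm and max_sim > theta_sim:
        return 0
    return None  # ambiguous nodes stay unlabeled

labels = [
    pseudo_label(0.95, 0.1),  # confident anomaly
    pseudo_label(0.55, 0.9),  # confident normal
    pseudo_label(0.75, 0.6),  # in between: no pseudo-label
]
```

Requiring both conditions keeps nodes with conflicting evidence, such as a high score but a close memory match, out of the pseudo-labeled set.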

4.4.2. Collaborative Contrastive Learning Design

To fully utilize unlabeled data, we design a collaborative contrastive learning strategy that constructs positive and negative sample pairs along both temporal and semantic dimensions. The contrastive learning process is guided by labels (either ground-truth labels or pseudo-labels), which enable semantic-aware contrastive learning by grouping nodes with identical labels in the representation space. Combined with temporal contrastive learning, this allows the model to capture both semantic consistency and temporal continuity, going beyond purely structural or temporal contrast.

Temporal positive pairs: Representations of the same node at adjacent time steps should be continuous, so they form temporal positive pairs.

Semantic positive pairs: Nodes with the same label should cluster in the representation space, so they form semantic positive pairs.

Negative pairs: Node pairs with different labels, together with randomly sampled node pairs, form negative pairs.

4.4.3. Multi-Level Contrastive Loss

Based on the constructed sample pairs, we design a multi-level contrastive loss with two components: a temporal contrastive loss and a semantic contrastive loss. Both components use the cosine similarity function and a temperature parameter, and both are computed over B, the set of node representations in the current batch.

The total contrastive loss combines the temporal and semantic components through a balance parameter.
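A minimal sketch of such a loss for a single anchor is given below. It assumes an InfoNCE-style objective for both components and a convex combination for the total; the exact loss forms, the names `info_nce` and `total_contrastive`, and the parameter values are assumptions rather than the paper's definitions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-12)

def info_nce(anchor, positive, negatives, tau=0.5):
    """InfoNCE loss for one anchor with one positive and a list of negatives."""
    pos = math.exp(cosine(anchor, positive) / tau)
    neg = sum(math.exp(cosine(anchor, n) / tau) for n in negatives)
    return -math.log(pos / (pos + neg))

def total_contrastive(l_temporal, l_semantic, lam=0.5):
    """Assumed convex combination of the two losses with balance parameter lam."""
    return lam * l_temporal + (1 - lam) * l_semantic

anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
l_close = info_nce(anchor, [0.9, 0.1], negatives)  # positive near the anchor
l_far = info_nce(anchor, [0.1, 0.9], negatives)    # positive far from the anchor
total = total_contrastive(l_close, l_far, lam=0.5)
```

The loss is smaller when the positive lies close to the anchor, which is what pulls temporally adjacent or same-label representations together during training.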

4.4.4. Dynamic Weight Adjustment

To improve pseudo-label quality and adapt to changes during training, we introduce a dynamic weight adjustment mechanism that depends on the number of warm-up epochs, a confidence threshold, and an indicator function that selects pseudo-labels meeting the threshold. This mechanism makes the model rely on labeled data in the early training stages and gradually increases the weight of high-quality pseudo-labels as training progresses.
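The behavior described above can be sketched with a simple schedule. The linear warm-up ramp is an assumption (the text only states that the weight grows as training progresses), and the function name, `warmup_epochs`, and `theta` values are hypothetical.

```python
def pseudo_label_weight(epoch, confidence, warmup_epochs=10, theta=0.8):
    """Weight of one pseudo-labeled node in the loss at a given epoch."""
    ramp = min(1.0, epoch / warmup_epochs)            # assumed linear warm-up
    indicator = 1.0 if confidence >= theta else 0.0   # keep only confident labels
    return ramp * indicator

# Weight of a confident pseudo-label (confidence 0.9) over training.
schedule = [pseudo_label_weight(e, 0.9) for e in (0, 5, 10, 20)]
```

At epoch 0 pseudo-labels contribute nothing, so training is driven by labeled data; after the warm-up period confident pseudo-labels receive full weight, and low-confidence ones are always excluded.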

6. Conclusions and Future Work

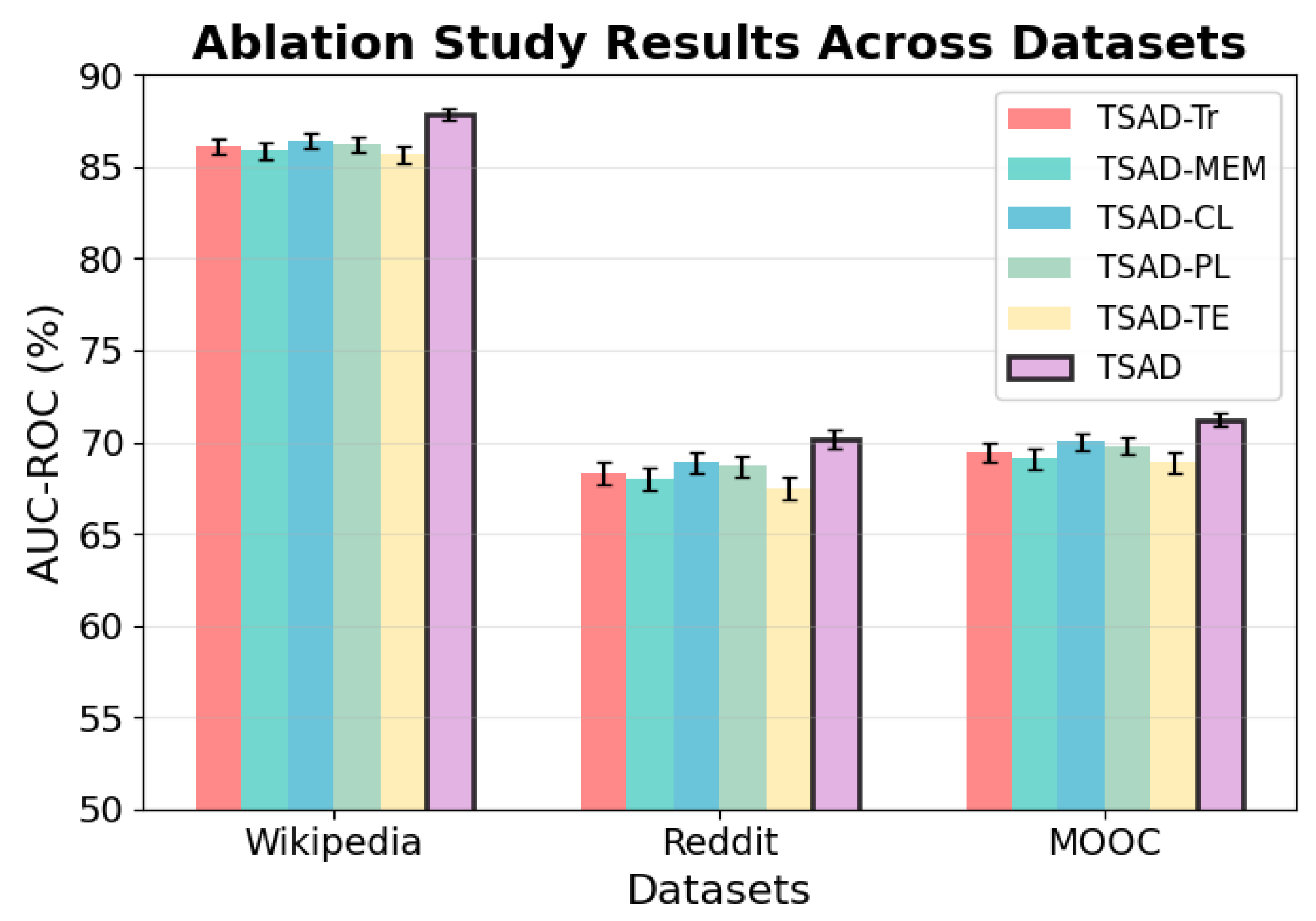

The TSAD framework proposed in this paper addresses two key issues in dynamic graph anomaly detection, insufficient multi-scale feature fusion and inadequate utilization of unlabeled data, by integrating multi-head attention mechanisms, adaptive memory storage, and pseudo-label collaborative contrastive learning. Experimental results show that TSAD achieves the best performance on three real-world datasets, with an average improvement of 1.42 percentage points over baseline methods, validating the effectiveness of the framework.

While TSAD demonstrates superior performance, several limitations should be acknowledged:

Hyperparameter Sensitivity: The framework involves multiple hyperparameters (memory slots, confidence thresholds, etc.) that require careful tuning. Developing adaptive hyperparameter selection mechanisms would improve practical applicability.

Applicability to Large-Scale Graphs: Due to hardware limitations, the performance of the proposed method has not been verified on large-scale graphs.

In future work, we will verify the effectiveness of the proposed method on large-scale graphs and explore integrating technologies such as large language models to further enhance the model’s performance.