Abstract

In this paper, we consider the parameter estimation for the fractional Black–Scholes model of the form where and are the parameters to be estimated. Here, denotes a fractional Brownian motion with Hurst index . Using the quasi-likelihood method, we estimate the parameters and based on observations taken at discrete time points . Under the conditions , , and for some , as , the asymptotic properties of the quasi-likelihood estimators are established. The analysis further reveals how the convergence rate of approaching zero affects the accuracy of estimation. To validate the effectiveness of our method, we conduct numerical simulations using real-world stock market data, demonstrating the practical applicability of the proposed estimation framework.

Keywords:

asymptotic distribution; fractional Brownian motion; fractional Itô integral; quasi-likelihood estimation

MSC:

60G22; 62M09

1. Introduction

In classical financial theory, the market is assumed to be arbitrage free and complete, and so standard Brownian motion is often used as the noise that drives the prices of financial derivatives. However, in real-world financial markets, prices often exhibit long-range dependence and non-stationarity, which are inconsistent with the characteristics of standard Brownian motion. Consequently, many authors have proposed using fractional Brownian motion (fBm) to construct market models (see, for example, Mandelbrot and Van Ness [1]), because of its simple structure and its memory properties. Unfortunately, starting with Rogers [2], there has been an ongoing dispute on the proper usage of fractional Brownian motion in option pricing theory. A troublesome problem arises because fBm is not a semimartingale, and therefore "no arbitrage pricing" cannot be used. Although there is a consensus on this point, its consequences are not clear. The orthodox explanation is simple: fBm is not a suitable candidate for the price process. But, as shown by Cheridito [3], if market participants cannot react immediately, any theoretical arbitrage opportunities disappear. On the other hand, in 2003, Hu and Øksendal [4] used the Wick–Itô-type integral to define a fractional market and showed that the market was arbitrage free and complete. In that case, the prices of financial derivatives satisfied the following fractional Black–Scholes model:

with , where is a fractional Brownian motion with Hurst index , and are two parameters, and the integral denotes the fractional Itô integral (Skorohod integral). For further studies on fractional Brownian motion in Black–Scholes models, refer to works by Bender and Elliott [5], Biagini [6], Björk and Hult [7], Cheridito [3], Elliott and Chan [8], Greene and Fielitz [9], Necula [10], Lo [11], Mishura [12], Rogers [2], Izaddine [13], and additional references cited therein.

In this paper, we consider the application of the quasi-likelihood method in continuous stochastic systems. Our goal is to construct quasi-likelihood estimators for the parameters and in Equation (1) and to establish their asymptotic behavior. As is well known, there are many papers on parameter estimation for stochastic differential equations, but, to our knowledge, the quasi-likelihood method has not yet been used for parameter estimation in stochastic differential equations whose solutions lack independent increments. Clearly, the solution of (1) does not have independent increments unless . We briefly describe the quasi-likelihood method as follows.

Let be a stochastic process such that its distribution contains unknown parameters with . Assume that are samples extracted from X, and that is the probability function (e.g., density function) of the increment for . Since the process X generally does not have independent increments, the function

is generally not a likelihood function. However, we can still use the usual method to obtain an estimator, which is called a quasi-likelihood estimator.
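In symbols, the construction can be sketched as follows. The notation is assumed for illustration and need not match the displayed formulas of the paper: $t_0 < t_1 < \dots < t_n$ are the observation times, $x_i$ is the observed value of $X$ at $t_i$, $\theta$ is the parameter vector, and $f_i(\cdot\,;\theta)$ is the density of the increment $X_{t_i} - X_{t_{i-1}}$.

```latex
% Assumed notation: x_i is the observation of X at time t_i, theta the unknown parameter.
\begin{align*}
L_n(\theta) &= \prod_{i=1}^{n} f_i\bigl(x_i - x_{i-1};\,\theta\bigr),\\
\hat\theta_n &\in \arg\max_{\theta}\ \log L_n(\theta).
\end{align*}
```

The product coincides with the joint density of the increments only when they are independent; otherwise it uses the marginal laws alone and ignores the correlation between increments.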

Let be fractional Brownian motion with Hurst index defined on the probability space . Consider the fractional Black–Scholes model as follows:

with , where , and the stochastic integral is the fractional Itô integral [14]. By using the Itô formula, we get

with , which is called the geometric fractional Brownian motion (gmfBm).
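For orientation, the model and its solution can be written in the standard Wick–Itô form of Hu and Øksendal [4]. The symbols below ($S_t$ for the price, $B^H$ for the fBm, $\mu$ for the drift, $\sigma > 0$ for the volatility) are assumed notation for this sketch and may differ from the displayed equations of the paper.

```latex
% Assumed notation: S_t price, B^H fractional Brownian motion, mu drift, sigma > 0 volatility.
\begin{align*}
dS_t &= \mu S_t\,dt + \sigma S_t\,dB_t^H, \qquad S_0 > 0,\\
S_t &= S_0 \exp\Bigl(\mu t - \tfrac12 \sigma^2 t^{2H} + \sigma B_t^H\Bigr), \qquad t \ge 0.
\end{align*}
```

For $H = 1/2$ the correction term $\tfrac12\sigma^2 t^{2H}$ reduces to $\tfrac12\sigma^2 t$, and the classical geometric Brownian motion is recovered.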

Throughout this paper, for simplicity, we assume that H is known. Denote and

Now, let be known, and let the gmfBm be observed at some discrete time instants satisfying the following conditions:

- (C1)

- and as .

- (C2)

- There exists such that as .

We get a quasi-likelihood function of parameter and as follows

where is the density of the random variable . Then, the logarithmic quasi-likelihood function is given by

with , where . By using the quasi-likelihood function, we get that the estimators and of and satisfy the equations

When , by solving the above equation system, we get the estimators of and as follows:

where and for every . When , we have and the random variables

are independent and identically distributed, the above logarithmic quasi-likelihood function is a classical log-likelihood function, and we have

and the asymptotic behavior of the two estimators can be easily established, so in the discussion later in this paper, unless otherwise stated, it is assumed that .
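The following derivation is a sketch of how such estimating equations arise, under assumptions that are not taken from the displayed formulas of the paper: equally spaced observation times $t_i = ih$, log-prices $Y_t = \log S_t$ with $Y_t = Y_0 + \mu t - \tfrac12\sigma^2 t^{2H} + \sigma B_t^H$, and the shorthand $v = \sigma^2$. The symbols $\Delta_i$, $c_i$, $R_n$ and $C_n$ are introduced only for this sketch.

```latex
% Sketch under assumed notation: t_i = i h, Y_t = log S_t, v = sigma^2.
\begin{align*}
\Delta_i &= Y_{t_i} - Y_{t_{i-1}} \sim N\bigl(\mu h - v c_i,\; v h^{2H}\bigr),
\qquad c_i = \tfrac12 h^{2H}\bigl(i^{2H} - (i-1)^{2H}\bigr),\\
\ell_n(\mu, v) &= -\frac{n}{2}\log\bigl(2\pi v h^{2H}\bigr)
 - \frac{1}{2 v h^{2H}} \sum_{i=1}^{n} \bigl(\Delta_i - \mu h + v c_i\bigr)^2,\\
\partial_\mu \ell_n = 0 &\iff \mu = \frac{Y_{t_n} - Y_{t_0}}{nh} + \frac12\, v\,(nh)^{2H-1},\\
\partial_v \ell_n = 0 &\iff C_n v^2 + n h^{2H} v - R_n = 0,
\qquad v = \frac{2 R_n}{n h^{2H} + \sqrt{n^2 h^{4H} + 4 C_n R_n}}.
\end{align*}
```

Here $R_n = \sum_i (\Delta_i - \mu h)^2$ and $C_n = \sum_i c_i^2$ when $\mu$ is known. When $\mu$ is unknown, substituting the first-order condition for $\mu$ replaces them by the centred sums $R_n = \sum_i (\Delta_i - \bar\Delta)^2$ and $C_n = \sum_i (c_i - \bar c)^2$. For $H = 1/2$ the $c_i$ are all equal, so the centred $C_n$ vanishes and $v = R_n/(nh)$, the classical maximum likelihood estimator.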

Our study focuses on the asymptotic properties of two estimators. Given the Gaussian properties of the sample, we expect these estimators to exhibit quadratic variation, facilitating the derivation and simplification of their asymptotic behavior using fractional Brownian motion. To fully characterize this behavior, we rely on key properties of fractional Brownian motion, which not only underpin the theoretical understanding of complex stochastic processes but also provide a foundation for applying quasi-likelihood methods in parameter estimation.

The structure of this paper is as follows. In Section 2, we briefly describe the basic properties of fractional Brownian motion. In Section 3 and Section 4 we discuss the strong consistency and asymptotic normality of the estimator and analyze the asymptotic behavior under the cases where the parameter is known and unknown. To prove these two asymptotic behaviors, we rely on two key results related to fractional Brownian motion. Although these results have been proven for a finite observation interval, they also hold when the observation length tends to infinity. In Section 5, we consider the asymptotic behavior of the estimator . In Section 6, we provide numerical verification and empirical analysis of the estimators and . In Section 7, we conclude that the proposed fractional Brownian motion quasi-likelihood method performs well theoretically and empirically, offering a practical framework for financial parameter estimation.

2. Preliminaries

In this section, we briefly recall some basic results on fractional Brownian motion. For more details, we refer to Bender [15], Biagini et al. [6], Cheridito and Nualart [16], Gradinaru et al. [17], Hu [4], Mishura [12], Nourdin [18], Nualart [19], Tudor [20], and references therein.

A zero mean Gaussian process defined on a complete probability space is called the fBm with Hurst index provided that and

for . Let be the completion of the linear space generated by the indicator functions with respect to the inner product

When , we know that , and when , we have

for all . The application

is an isometry from to the Gaussian space generated by , and it can be extended to . Denote by the set of smooth functionals of the form

where (f and all its derivatives are bounded) and . The derivative operator (the Malliavin derivative) of a functional F of the above form is defined as

The derivative operator is then a closable operator from into . We denote by the closure of with respect to the norm

The divergence integral is the adjoint of derivative operator . That is, we say that a random variable u in belongs to the domain of the divergence operator , denoted by , if

for every . In this case, is defined by the duality relationship

for any . Generally, the divergence is also called the Skorohod integral of a process u and denoted as

and the indefinite Skorohod integral is defined as . If the process is adapted, the Skorohod integral is called the fractional Itô integral, and the Itô formula

holds for all and .
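As a concrete companion to the definition, the sketch below builds the standard fBm covariance $\tfrac12\bigl(s^{2H} + t^{2H} - |t-s|^{2H}\bigr)$ on a grid and draws one exact sample path through a Cholesky factor. The function names, grid and parameter values are illustrative assumptions; the Cholesky approach is a generic simulation technique and is not claimed to be the one used in the paper.

```python
import numpy as np

def fbm_covariance(times, hurst):
    """Covariance matrix 0.5 * (s^2H + t^2H - |t - s|^2H) of fBm on the given times."""
    t = np.asarray(times, dtype=float)
    s, u = np.meshgrid(t, t, indexing="ij")
    two_h = 2.0 * hurst
    return 0.5 * (s**two_h + u**two_h - np.abs(s - u)**two_h)

def simulate_fbm(n, h, hurst, rng):
    """Sample (B_h, B_2h, ..., B_nh) exactly via a Cholesky factor (cost O(n^3))."""
    times = h * np.arange(1, n + 1)
    chol = np.linalg.cholesky(fbm_covariance(times, hurst))
    return times, chol @ rng.standard_normal(n)

rng = np.random.default_rng(0)
times, path = simulate_fbm(n=200, h=0.01, hurst=0.7, rng=rng)
print(path[:3])
```

The exact method is convenient for short paths; for long paths a faster generator (for example, circulant embedding) would be the usual choice.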

3. The Strong Consistency of the Estimator

In this section, we obtain the consistency of the estimator in two cases. To establish the consistency of estimator , we need four lemmas, and proving these four lemmas requires several more statements. Therefore, we have placed the proof of these results at the end of this section. For simplicity, we denote by the convergence with probability one, as n tends to infinity; moreover, the symbol means that both sides have the same limit with probability one, as n tends to infinity.

Lemma 1.

Let be a fractional Brownian motion with Hurst index , and let such that the condition holds. Then, with probability one, we have

Lemma 2.

Let the conditions of Lemma 1 and condition hold. Denote

- (1)

- If , we have almost surely, as n tends to infinity.

- (2)

- If and the condition holds with , converges to almost surely, as n tends to infinity.

- (3)

- If and the condition holds with , converges to almost surely, as n tends to infinity.

3.1. The Strong Consistency of When Is Known

First, we consider the case where is known. By transforming based on Equation (4), we obtain the following result:

where

Lemma 3.

Let and , and let condition hold.

- (1)

- For , we have

- (2)

- For , if the condition holds with ,

Theorem 1.

Let μ be known and let the condition hold.

- (1)

- If , the estimator is strongly consistent.

- (2)

- If and the condition holds with , then the estimator is strongly consistent.

Proof.

When , by the fact that , Lemma 2, and Lemma 3, we obtain

almost surely, as , and statement (1) follows. Similarly, we may obtain statement (2). □

3.2. The Strong Consistency of When Is Unknown

In this subsection, we assume that both parameters and are unknown and establish the strong consistency of estimator as defined in (5). In this case, for , we obtain

for every , where and

Lemma 4.

Let , and let condition hold.

- (1)

- For we have

- (2)

- For , if the condition holds with , we have

Theorem 2.

Let μ be unknown and let the condition hold.

- (1)

- For , the estimator is strongly consistent.

- (2)

- For , if the condition holds with , then is strongly consistent.

Proof.

We first prove statement (2). When , we have

and combined with the fact that , Lemma 2, and Lemma 4, we obtain

By conditions (C1) and (C2), when and , we have

Thus, similar to the case where , we obtain statement (1). □

3.3. Proofs of Lemmas in Section 3

In this subsection, we complete the proof of several lemmas that were not proven above. To prove these lemmas and for the convenience of expression, we provide four lemmas.

Lemma 5

(Etemadi [21]). Let be a sequence of nonnegative random variables with finite second moments and such that:

- (1)

- The sequence satisfies and as .

- (2)

- The following series converges:

Then, as , almost surely.

Lemma 6.

For all and , we have

Proof.

When , the lemma is obtained from the proof of Lemma 3.3 in Yan et al. [22], and we can also prove the case in a completely similar way. □

Lemma 7.

Let and denote for . Then, we have:

- (1)

- For , is finite and nonzero.

- (2)

- For , , as .

- (3)

- For , as , we have

Proof.

The lemma is a simple calculus exercise. □

Lemma 8.

Let and . Denote

where with conditions (C1) and (C2). Then, for , and

for all .

Proof.

By Lemma 7, it follows that

for all , as n tends to infinity. Moreover, when , by conditions (C1) and (C2), we have

which imply

for all , as n tends to infinity. □

Now let us prove Lemma 1, Lemma 2, Lemma 3, and Lemma 4 one by one.

Proof of Lemma 1.

When , the lemma follows from the strong law of large numbers. Now, let . Then, the sequence satisfies condition (1) in Lemma 5. On the other hand, by the fact that

with and Lemma 6, we see that

for all . It follows that

for all . Thus, condition (2) in Lemma 5 holds, and the lemma follows. □
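The displayed limit of Lemma 1 is not reproduced here. As an illustration of the type of almost-sure statement that the argument above yields, the sketch below checks numerically the standard fact that the normalized quadratic variation of fBm increments, $\frac{1}{n h^{2H}}\sum_{i=1}^{n}\bigl(B^H_{ih} - B^H_{(i-1)h}\bigr)^2$, approaches 1. Reading Lemma 1 as a statement of this kind is an assumption, and the choice $h = n^{-1/2}$ is only one example of a step with $h \to 0$ and $nh \to \infty$.

```python
import numpy as np

def fgn_autocovariance(lags, hurst):
    """rho(k) = 0.5 * (|k+1|^2H + |k-1|^2H - 2|k|^2H) for unit-step fBm increments."""
    k = np.abs(np.asarray(lags, dtype=float))
    two_h = 2.0 * hurst
    return 0.5 * ((k + 1)**two_h + np.abs(k - 1)**two_h - 2.0 * k**two_h)

def normalized_quadratic_variation(n, h, hurst, rng):
    """Return (1 / (n h^2H)) * sum of squared fBm increments over n steps of size h."""
    idx = np.arange(n)
    cov = fgn_autocovariance(idx[:, None] - idx[None, :], hurst)
    unit_increments = np.linalg.cholesky(cov) @ rng.standard_normal(n)
    # Self-similarity: increments over steps of size h are h^H times unit-step ones.
    increments = h**hurst * unit_increments
    return np.sum(increments**2) / (n * h**(2.0 * hurst))

rng = np.random.default_rng(1)
for n in (100, 400, 1600):
    # Illustrative step size h = n^(-1/2), so that h -> 0 and n*h -> infinity.
    print(n, normalized_quadratic_variation(n, h=n**-0.5, hurst=0.7, rng=rng))
```

Because of self-similarity the factor $h^{2H}$ cancels in this statistic, so the step size affects the result only through the number of increments.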

Proof of Lemma 2.

Recall that

where . It follows that

for all and .

When , statement (1) follows from the strong law of large numbers for independent random variables.

When , by Cauchy’s inequality, Lemma 8, and Lemma 1, one may show that

almost surely, as n tends to infinity. It follows from (13), Lemma 8, and Lemma 1 that

for all , as n tends to infinity. This shows that statement (1) holds. Similarly, we can also obtain statements (2) and (3). □

Proof of Lemma 3.

When , by Lemma 7, we obtain

It follows from Lemma 2 and the fact that

that

for all , as . This gives statement (1).

When and , by Lemma 7, we have

and

as n tends to infinity. It follows from the Lemma 2 that

as n tends to infinity, if and . This gives statement (2). □

Proof of Lemma 4.

By Lemma 7, we see that

as . Let . This yields

and

and we can obtain

Similarly, when ,

and when ,

This gives statement (1).

Now, let and . Lemma 7 implies that

Noting that

we obtain

and statement (2) follows. □

4. Asymptotic Normality of Estimator

In this section, we examine the asymptotic distribution of . We keep the notations from Section 3, and denote by and the convergence in distribution and probability, as n tends to infinity, respectively. From the structure of estimator , one can find its asymptotic distribution depends on the asymptotic distribution of . By the definition of , we can check that

for , where is given in Lemma 8 and

with . From the proof given later, we find that the two terms and have the same rate of convergence under suitable assumptions on . However, when and conditions (C1) and (C2) hold, we know that (see the proof of Lemma 9 below)

almost surely, as . But converges in for , and converges in distribution for . This indicates that and do not have the same rate of convergence for all , which means that such models have inflection points when . The reason for this situation is that tends to infinity. If we assume that tends to infinity logarithmically, the scenario is different. The following lemma provides the asymptotic normality of , and its proof is given at the end of this section.

Lemma 9.

Let be defined in Lemma 2, and let conditions and hold.

- (1)

- When , we have where denotes the normal random variable with mean a and variance , and

- (2)

- When , we obtain

- (3)

- When , we have where .

4.1. The Asymptotic Distribution of When Is Known

In this subsection, we obtain the asymptotic distribution of , provided is known. By (8), Lemma 3, and the fact that , for all , we get

with .

Lemma 10.

Let the condition hold, , and denote

- (1)

- For , we have as .

- (2)

- For , we have , as , provided that condition holds with .

- (3)

- For , we have as n tends to infinity, provided that condition holds with .

Proof.

Let . By Lemma 7 we have

for all . Clearly, for and for if . It follows from (15) that

as n tends to infinity under the conditions of statements (1) and (2).

We now verify statement (3). Let . It follows from Lemma 2 that

as n tends to infinity, provided that since

as n tends to infinity. □

Theorem 3.

Let μ be known and let conditions (C1) and (C2) hold.

- (1)

- Let and , then, as , we have

- (2)

- Let , then, as , we have

Proof.

Let . Then, we have

Moreover, we have for , and

for all and .

Theorem 4.

Let μ be known and . If conditions (C1) and (C2) hold with , we then have

as n tends to infinity, where is given in Lemma 9.

4.2. The Asymptotic Distribution of When Is Unknown

In this subsection, we consider the asymptotic distribution of estimator when is unknown. Based on (10), Lemma 4, and the fact that , we obtain the following result

with , where . As a corollary of Lemma 4, the following lemma provides an estimate for the remainder term

Lemma 11.

Let conditions and hold.

- (1)

- For , we have

- (2)

- For , we have , provided .

Proof.

By Lemma 7 and the proof of Lemma 4, we get

for all . Clearly, and for all . Moreover, when , we have

Similarly, for all and

for all . Noting that and for all , we obtain that

converges almost surely to 0 for and that it converges almost surely to 0 for provided . Thus, the lemma follows from Lemma 4 and (30). □

Theorem 5.

Let μ be unknown and let conditions (C1) and (C2) hold.

- (1)

- For , if , we have

- (2)

- For , we have

Proof.

Clearly, we have for all and

for , and moreover

for all . It follows that

for all , and for all , and

for all , since for and for all . Combining this with (29), Lemma 9, Lemma 11, and Slutsky’s theorem, we obtain the theorem. □

Lemma 12.

Let conditions and hold with . For , we have

Proof.

Similar to the proof of Lemma 11, we get

for all . It follows from Lemma 7 and Lemma 2 that

as n tends to infinity. □

Theorem 6.

Let and μ be unknown. If conditions and hold with , we then have

as n tends to infinity, where is given in Lemma 9.

4.3. Proofs of Lemmas in Section 4

In this subsection, we complete the proof of Lemma 9.

Proposition 1.

Let the conditions in Lemma 1 hold.

- (1)

- For , we have in distribution, where

- (2)

- For , we have in distribution.

- (3)

- For , we have in , where denotes a Rosenblatt random variable with .

This proposition is a minor extension of known results, and its proof is omitted (see, for example, Theorem 5.4, Proposition 5.4, and Theorem 5.5 in Tudor [20]). In fact, for , such convergence has been studied by Breuer and Major [23], Dobrushin and Major [24], Giraitis and Surgailis [25], Nourdin [26], Nourdin and Reveillac [27], and Tudor [20]. For more material on the Rosenblatt distribution and the related process, refer to Tudor [20].
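For reference, the classical form of these limit theorems for unit-step increments can be sketched as follows. The notation $\xi_i = B^H_i - B^H_{i-1}$ and $\rho_H$ is assumed here, and the normalization used in the displays of Proposition 1 may differ by the scaling $h^{2H}$.

```latex
% Assumed notation: xi_i = B^H_i - B^H_{i-1}, rho_H the autocovariance of (xi_i).
\begin{align*}
\rho_H(k) &= \tfrac12\bigl(|k+1|^{2H} + |k-1|^{2H} - 2|k|^{2H}\bigr),\\
\frac{1}{\sqrt{n}}\sum_{i=1}^{n}\bigl(\xi_i^2 - 1\bigr)
 &\xrightarrow{\ d\ } N\Bigl(0,\; 2\sum_{k\in\mathbb{Z}}\rho_H(k)^2\Bigr),
 && 0 < H < \tfrac34,\\
\frac{1}{\sqrt{n\log n}}\sum_{i=1}^{n}\bigl(\xi_i^2 - 1\bigr)
 &\xrightarrow{\ d\ } N\bigl(0,\ \tfrac{9}{16}\bigr),
 && H = \tfrac34.
\end{align*}
```

For $\tfrac34 < H < 1$ the series $\sum_k \rho_H(k)^2$ diverges, the normalization becomes $n^{1-2H}$, and the limit is a constant multiple of a Rosenblatt random variable rather than a Gaussian one.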

Proof of Lemma 9.

Let be given. We have

We also have for and

for . On the other hand, by Taylor’s expansion, we may prove

for if . It follows from Lemma 7 that for and

for . Combining the above three convergences and the proof of Lemma 8, we obtain that

for and

for . Thus, by (16) and Proposition 1, to end the proof, we check that

for all under some suitable conditions for . By the fact

with and , we get that

for all , where for .

Now, in order to end the proof, we estimate the last three items in (42) in the two cases and .

Case I: . Clearly, the sequence

converges. It follows that

and

as n tends to infinity. Combining these with Lemma 8 and (42), we obtain convergence (41) for all . Thus, by Proposition 1, (16), (39), and Slutsky’s theorem, we obtain the desired asymptotic behavior

for all , and statement (1) follows.

Case II: . From

and Taylor’s expansion, we get that

as n tends to infinity, which implies that

as n tends to infinity. Similarly, we also have

and

as n tends to infinity. On the other hand, we have

as n tends to infinity, where denotes the classical Beta function. It follows from Taylor’s expansion that

for all , as n tends to infinity. Combining these with Lemma 8 and (42), we obtain convergence (41) for all if . Thus, we obtain the desired asymptotic behavior

for all by Proposition 1, (16), and (40), and statement (2) follows.

Now, we verify statement (3). Let . By Lemma 7, we have

and moreover, from the proof of statement (2) in Lemma 9, we also have

where . Noting that

admits a normal distribution for all , we see that

from (52). It follows from (16), statement (3) in Proposition 1, and (51) that

as n tends to infinity. Moreover, by (51) we obtain

Combining this with (54), (53), and Proposition 1, we get

as n tends to infinity. Finally, by Proposition 1, (53), (55), and Slutsky’s theorem, we obtain

Thus, the three convergences in statements (2) and (3) follow. □

5. Asymptotic Behavior of Estimator

In this section, we consider the strong consistency and asymptotic distribution of . We keep the notations from Section 3.

Theorem 7.

Let and assume that condition (C1) holds. If is known, the estimator given by (4)

is strongly consistent and

Proof.

The theorem follows from the fact that

for all . □
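The identity behind this proof can be sketched as follows, assuming the notation $t_i = ih$ and the explicit solution $\log S_t = \log S_0 + \mu t - \tfrac12\sigma^2 t^{2H} + \sigma B_t^H$; the form of the estimator written below is the one obtained from the first-order condition in $\mu$ with $\sigma$ known, and is an assumption about what Equation (4) states.

```latex
% Sketch under assumed notation: t_i = i h, sigma known.
\begin{align*}
\hat\mu_n &= \frac{\log S_{nh} - \log S_0}{nh} + \frac12\,\sigma^2 (nh)^{2H-1},\\
\hat\mu_n - \mu &= \frac{\sigma B^H_{nh}}{nh} \sim N\bigl(0,\ \sigma^2 (nh)^{2H-2}\bigr),\\
(nh)^{1-H}\bigl(\hat\mu_n - \mu\bigr) &\sim N\bigl(0,\ \sigma^2\bigr) \quad \text{for every } n.
\end{align*}
```

Strong consistency then follows because $B^H_T / T \to 0$ almost surely as $T = nh \to \infty$, which is where the requirement $nh \to \infty$ enters.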

Lemma 13.

When , and assuming that conditions (C1) and (C2) hold, estimator given by (5) satisfies

if the following conditions are satisfied

- (1)

- and

- (2)

- and

Proof.

When , based on statement (2) of Theorem 5 and Theorem 6, we obtain that when ,

as n tends to infinity. Thus, statement (1) is established. When and , we have

as n tends to infinity. Thus, statement (2) is established. □

Theorem 8.

Let conditions (C1) and (C2) hold and be unknown.

- (1)

- If , estimator given by (5) is a strongly consistent estimator. Furthermore, we have

- (2)

- If , estimator given by (5) is a strongly consistent estimator. Furthermore, if , we obtain

- (3)

- If , estimator given by (5) is a strongly consistent estimator. Moreover, if , we get

Proof.

For , applying a transformation to (5), we obtain

as n tends to infinity. Moreover,

as n tends to infinity. So statement (1) is proven. When and , by Lemma 13, we have

as n tends to infinity. Similarly, using statement (2) of Theorem 5 and the condition , we get

when and as n tends to infinity. Thus, statement (2) is established. When and , it follows from Lemma 13 that

From Theorem 6 and the fact that , for , we obtain

Consequently, statement (3) is established. □

6. Numerical Simulation and Empirical Analysis

In this section, the effectiveness of the proposed estimator is validated through numerical simulations. The results demonstrate that the estimator exhibits strong applicability and reliable performance in practical scenarios. To further assess the precision of the two estimation methods, Monte Carlo simulations were conducted in MATLAB 2017b, where the simulated estimates were compared against the true values, and their mean values and standard deviations were calculated to provide a comprehensive evaluation of the estimator's performance. In addition, real trading data from the Chinese financial market were retrieved via the Tushare Pro platform using Python 3.10. With the known value of H, the parameters and were estimated, and track plots were generated in MATLAB and compared with the logarithmic closing prices of the stock, thereby further validating the effectiveness of the quasi-likelihood estimation.

6.1. Numerical Simulation

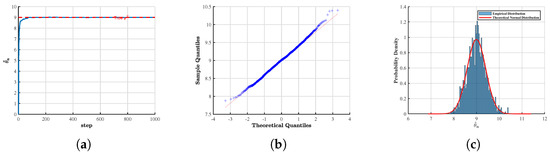

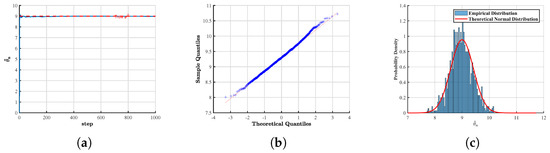

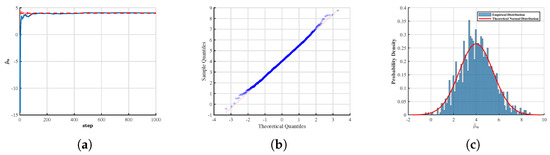

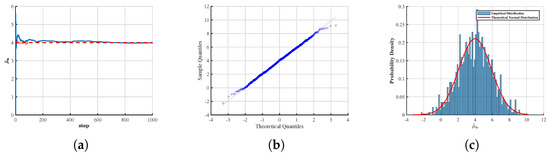

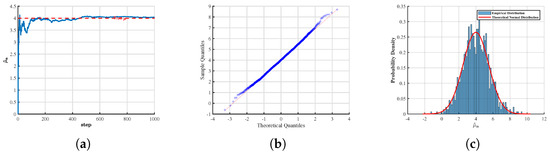

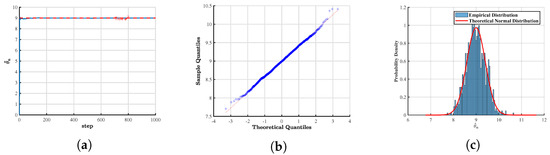

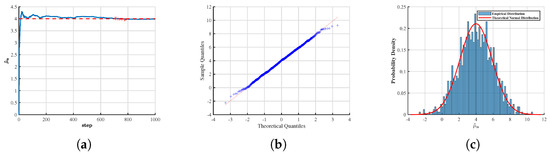

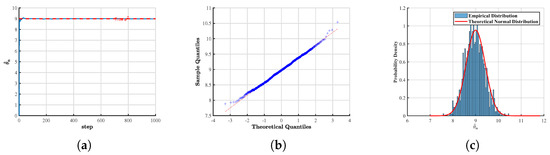

First, we emphasize that in all the figures presented below, the sample size was fixed at , and the time step was chosen as . The parameters were set to and . In the analysis of the asymptotic distribution, the number of replications, i.e., the simulated sample paths, was specified as . For the sake of notational consistency, we denote throughout the subsequent discussion. To assess the effectiveness and robustness of the proposed estimation method, we designed two primary experimental scenarios:

- 1.

- Case with Partially Known Parameters

- In the case where the parameter is known, we estimated the parameter and further examined its estimation path, quantile–quantile plot, and asymptotic distribution. The corresponding results for the estimator are presented for (Figure 1) and (Figure 2).

Figure 1. Asymptotic behavior of the estimators when is known under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

Figure 2. Asymptotic behavior of the estimators when is known under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

- In the case where the parameter is known, we estimated the parameter and examined its estimation path and asymptotic distribution. Similarly, the figures present the estimation paths and asymptotic distribution of when (Figure 3) and (Figure 4).

Figure 3. Asymptotic behavior of the estimators when is known under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

Figure 4. Asymptotic behavior of the estimators when is known under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

- 2.

- Case with Completely Unknown Parameters

- In this scenario, where both and are unknown, we estimated both parameters simultaneously and analyzed their estimation paths and asymptotic distributions. Figures present the estimation paths and asymptotic distribution of and when (Figure 5 and Figure 6) and (Figure 7 and Figure 8).

Figure 5. Asymptotic behavior of the estimators when both parameters are unknown under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

Figure 6. Asymptotic behavior of the estimators when both parameters are unknown under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

Figure 7. Asymptotic behavior of the estimators when both parameters are unknown under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

Figure 8. Asymptotic behavior of the estimators when both parameters are unknown under . (a) Plot of ; (b) quantile–quantile plot of ; (c) asymptotic distribution of .

Case 1: The asymptotic behavior of the estimators of and when is known.

Case 2: The asymptotic behavior of the estimators of and when both parameters are unknown.

From the above figures, it can be observed that for different values of H, the numerical simulation results of the convergence and asymptotic properties of the estimators and are largely consistent with the theoretical predictions. The discrepancies are minor, indicating that the obtained estimates exhibit a high degree of accuracy.
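A Monte Carlo experiment of this kind can be sketched in Python as follows. This is an illustration under stated assumptions, not the MATLAB code used for the figures: the parameter values are arbitrary, paths are generated exactly by a Cholesky factor, the log-price is taken to be $\mu t - \tfrac12\sigma^2 t^{2H} + \sigma B_t^H$ on the grid $t_i = ih$, and the estimator solves the Gaussian quasi-score equations in closed form (a quadratic in $\sigma^2$), which may be written differently in the paper.

```python
import numpy as np

def fgn_cholesky(n, hurst):
    """Cholesky factor of the covariance matrix of n unit-step fBm increments."""
    lag = np.abs(np.arange(n)[:, None] - np.arange(n)[None, :]).astype(float)
    two_h = 2.0 * hurst
    cov = 0.5 * ((lag + 1)**two_h + np.abs(lag - 1)**two_h - 2.0 * lag**two_h)
    return np.linalg.cholesky(cov)

def log_increments(mu, sigma, h, hurst, chol, rng):
    """Increments of mu*t - 0.5*sigma^2*t^2H + sigma*B_t^H on the grid t_i = i*h."""
    n = chol.shape[0]
    t = h * np.arange(n + 1)
    drift = mu * h - 0.5 * sigma**2 * np.diff(t**(2.0 * hurst))
    return drift + sigma * h**hurst * (chol @ rng.standard_normal(n))

def estimate(increments, h, hurst, mu=None):
    """Solve the quasi-score equations; returns (mu_hat, sigma2_hat)."""
    n = increments.size
    i = np.arange(1, n + 1, dtype=float)
    c = 0.5 * h**(2.0 * hurst) * (i**(2.0 * hurst) - (i - 1)**(2.0 * hurst))
    if mu is None:  # mu unknown: profile it out by centring the data and the c_i
        resid, c_eff = increments - increments.mean(), c - c.mean()
    else:           # mu known: subtract its contribution directly
        resid, c_eff = increments - mu * h, c
    r, c2, b = np.sum(resid**2), np.sum(c_eff**2), n * h**(2.0 * hurst)
    # Positive root of c2*v^2 + b*v - r = 0, in a form that stays stable when c2 ~ 0.
    sigma2 = 2.0 * r / (b + np.sqrt(b * b + 4.0 * c2 * r))
    if mu is None:
        mu = increments.sum() / (n * h) + 0.5 * sigma2 * (n * h)**(2.0 * hurst - 1.0)
    return mu, sigma2

rng = np.random.default_rng(0)
# Illustrative values only; they are not the settings used for the figures.
mu0, sigma0, hurst, n, h, reps = 0.5, 0.3, 0.7, 500, 0.01, 200
chol = fgn_cholesky(n, hurst)
known, unknown = [], []
for _ in range(reps):
    inc = log_increments(mu0, sigma0, h, hurst, chol, rng)
    known.append(estimate(inc, h, hurst, mu=mu0))
    unknown.append(estimate(inc, h, hurst))
known, unknown = np.array(known), np.array(unknown)
print("mu known:   sigma^2 mean/std", known[:, 1].mean(), known[:, 1].std())
print("mu unknown: mu mean/std     ", unknown[:, 0].mean(), unknown[:, 0].std())
print("mu unknown: sigma^2 mean/std", unknown[:, 1].mean(), unknown[:, 1].std())
```

With a short observation horizon $nh$, the drift estimate is much noisier than the volatility estimate, so the spread of the drift column should be read with that in mind.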

In addition, to investigate the asymptotic behavior of the proposed estimators for different sample sizes, we considered three sample sizes: , 2000, and 3000. The comparison of theoretical variance with empirical variance, as well as the corresponding errors, was carried out. The specific experimental design is outlined as follows:

- Table 1: Theoretical variance, empirical variance, and their errors for parameter when is known.

Table 1. Comparison of theoretical and empirical variances of under known and various Hurst indices.

- Table 2: Theoretical variance, empirical variance, and their errors for parameter when is known.

Table 2. Comparison of theoretical and empirical variances of under known and various Hurst indices.

- Table 3: Joint analysis of the variance estimates and errors for both parameters when and are unknown.

Table 3. Comparison of theoretical and empirical variances of and under various Hurst indices and sample sizes.

The discrepancies are minor, indicating that the obtained estimates exhibit a high degree of accuracy.

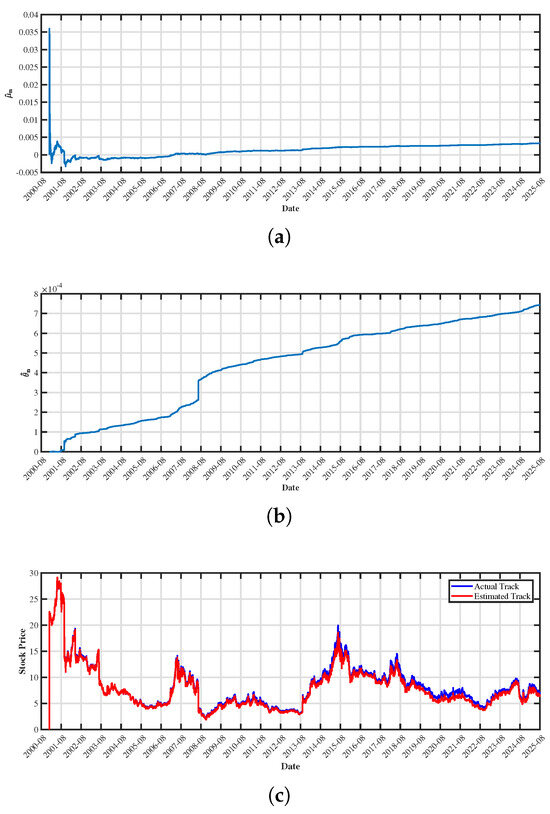

6.2. Empirical Analysis

To further evaluate the performance of the proposed model and estimation method in a real-world market setting, we conducted an empirical analysis using Heilan Home Co., Ltd. (Jiangyin, Jiangsu Province, China; stock code: 600398), a representative stock from the Chinese A-share market. Daily closing price data were retrieved via the Tushare Pro platform using Python, covering the period from 28 December 2000 to 26 August 2025. Data cleaning and preprocessing were carried out to ensure consistency. As supported by the theoretical results in Section 3, the estimators are consistent as the sample size n tends to infinity; therefore, the full sample period was employed to guarantee robustness. The Hurst exponent of the stock return series was first estimated using the R/S method, yielding , which suggested the presence of long-memory effects.
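The rescaled-range (R/S) step can be sketched as follows. This is a minimal textbook version, not the authors' script: the dyadic window sizes, the function names and the synthetic white-noise input are assumptions made for illustration, and no market data are downloaded.

```python
import numpy as np

def rescaled_range(x):
    """R/S statistic of one window: range of centred partial sums divided by the std."""
    dev = np.cumsum(x - x.mean())
    s = x.std()
    return (dev.max() - dev.min()) / s if s > 0 else np.nan

def hurst_rs(returns, min_window=16):
    """Estimate H as the slope of log(mean R/S) against log(window length)."""
    x = np.asarray(returns, dtype=float)
    sizes, values = [], []
    size = min_window
    while size <= x.size // 2:
        # Split the series into non-overlapping windows of the current length.
        chunks = x[: (x.size // size) * size].reshape(-1, size)
        rs = np.array([rescaled_range(c) for c in chunks])
        sizes.append(size)
        values.append(np.nanmean(rs))
        size *= 2
    slope, _ = np.polyfit(np.log(sizes), np.log(values), 1)
    return slope

rng = np.random.default_rng(0)
# Synthetic white-noise "returns", for which H = 0.5 in theory.
print(hurst_rs(rng.standard_normal(4096)))
```

The R/S estimate is known to be biased upward in small samples, so a value slightly above 0.5 on white noise is not by itself evidence of long memory.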

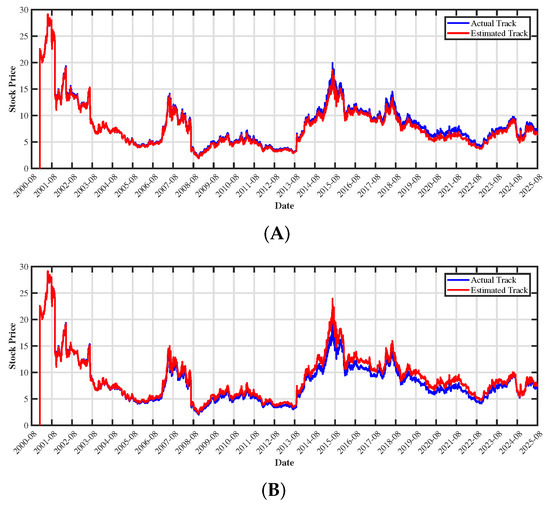

Based on this, the key model parameters and were estimated within the quasi-likelihood framework proposed in this paper. To provide an intuitive evaluation of model fit, simulated price tracks were generated in MATLAB using the estimated parameters and compared with the observed closing prices. The comparison demonstrated that the model captured the overall price dynamics effectively, thereby confirming both the applicability of the fractional Black–Scholes framework and the reliability of the proposed quasi-likelihood estimation method on real financial data. Furthermore, we simulated stock price tracking using both the fractional Brownian motion model proposed in this study and the classical Black–Scholes model. The comparative results are presented in Figure 9 and Figure 10. As illustrated, our proposed model provides a notably better fit to the observed price dynamics, particularly in capturing volatility clustering and the long-memory behavior inherent in the price process. These results further highlight the advantages and practical applicability of our model in financial data modeling and empirical analysis.

Figure 9.

Parameter estimation and price comparison for stock 600398. (a) for stock 600398; (b) for stock 600398; (c) stock 600398: comparison of real and simulated prices.

Figure 10.

Comparison of classical and fractional Black–Scholes models. (A) Plot of 600398 from the fractional Black–Scholes model; (B) Plot of 600398 from the classical Black–Scholes model.

7. Conclusions

In this paper, we studied quasi-likelihood estimation for the fractional Black–Scholes model driven by fractional Brownian motion. Based on discrete observations of the geometric fractional Brownian motion, we constructed the quasi-likelihood function and derived the estimators and . We further analyzed the asymptotic properties of these estimators, including strong consistency and asymptotic normality, considering both cases where was known or unknown. Numerical simulations and an empirical analysis indicated that the quasi-likelihood estimation method provided accurate parameter estimates under high-frequency observations, while effectively capturing volatility clustering and long-memory characteristics of the price process. These results demonstrate the effectiveness and applicability of the proposed model and estimation methodology in financial data modeling and empirical studies. In summary, this study extended the application of fractional Brownian motion models in financial parameter estimation and provided a feasible methodological framework for estimating parameters in complex stochastic systems, offering both theoretical insights and practical guidance for future research.

Author Contributions

Conceptualization, W.L. and L.Y.; methodology, W.L., Y.X. and L.Y.; software, W.L. and Y.X.; validation, W.L. and Y.X.; formal analysis, L.Y.; writing—original draft preparation, W.L. and Y.X.; writing—review and editing, W.L., Y.X. and L.Y.; visualization, W.L. and Y.X.; supervision, L.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant Nos. 11971101, 12171081) and Shanghai Natural Science Foundation (Grant No. 24ZR1402900).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors thank the editor and the referees for their valuable comments and suggestions, which greatly improved the quality of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mandelbrot, B.B.; Van Ness, J.W. Fractional Brownian motions, fractional noises and applications. SIAM Rev. 1968, 10, 422–437.

- Rogers, L.C.G. Arbitrage with fractional Brownian motion. Math. Financ. 1997, 7, 95–105.

- Cheridito, P. Arbitrage in fractional Brownian motion models. Financ. Stochastics 2003, 7, 533–553.

- Hu, Y.; Øksendal, B. Fractional white noise calculus and applications to finance. Infin. Dimens. Anal. Quantum Probab. Relat. Top. 2003, 6, 1–32.

- Bender, C.; Elliott, R.J. Arbitrage in a discrete version of the Wick-fractional Black-Scholes market. Math. Oper. Res. 2004, 29, 935–945.

- Biagini, F.; Hu, Y.; Øksendal, B.; Zhang, T. Stochastic Calculus for Fractional Brownian Motion and Applications; Springer Science & Business Media: London, UK, 2008.

- Björk, T.; Hult, H. A note on Wick products and the fractional Black-Scholes model. Financ. Stochastics 2005, 9, 197–209.

- Elliott, R.J.; Chan, L. Perpetual American options with fractional Brownian motion. Quant. Financ. 2003, 4, 123.

- Greene, M.T.; Fielitz, B.D. Long-term dependence in common stock returns. J. Financ. Econ. 1977, 4, 339–349.

- Necula, C. Option Pricing in a Fractional Brownian Motion Environment. 2002. Available online: https://ssrn.com/abstract=1286833 (accessed on 11 September 2025).

- Lo, A.W. Long-term memory in stock market prices. Econom. J. Econom. Soc. 1991, 59, 1279–1313.

- Mishura, Y.; Shevchenko, G. The rate of convergence for Euler approximations of solutions of stochastic differential equations driven by fractional Brownian motion. Stochastics Int. J. Probab. Stoch. Process. 2008, 80, 489–511.

- Izaddine, H.G.; Deme, S.; Dabye, A.S. Analysis of fractional Black-Scholes, Ornstein-Uhlenbeck, and Langevin models: A minimum distance estimation approach. Gulf J. Math. 2025, 19, 217–250.

- Alos, E.; Mazet, O.; Nualart, D. Stochastic calculus with respect to Gaussian processes. Ann. Probab. 2001, 29, 766–801.

- Bender, C. An Itô formula for generalized functionals of a fractional Brownian motion with arbitrary Hurst parameter. Stoch. Process. Their Appl. 2003, 104, 81–106.

- Cheridito, P.; Nualart, D. Stochastic integral of divergence type with respect to fractional Brownian motion with Hurst parameter H ∈ (0, 1/2). Ann. De L’Institut Henri Poincare (B) Probab. Stat. 2005, 41, 1049–1081.

- Gradinaru, M.; Nourdin, I.; Russo, F.; Vallois, P. m-order integrals and generalized Itô’s formula; the case of a fractional Brownian motion with any Hurst index. Ann. De L’Institut Henri Poincare (B) Probab. Stat. 2005, 41, 781–806.

- Nourdin, I. Selected Aspects of Fractional Brownian Motion; Bocconi and Springer Series; Springer: Milan, Italy, 2012.

- Nualart, D. Malliavin Calculus and Related Topics, 2nd ed.; Springer: New York, NY, USA, 2006.

- Tudor, C. Analysis of Variations for Self-Similar Processes: A Stochastic Calculus Approach; Springer: Cham, Switzerland, 2013.

- Etemadi, N. On the laws of large numbers for nonnegative random variables. J. Multivar. Anal. 1983, 13, 187–193.

- Yan, L.; Liu, J.; Chen, C. The generalized quadratic covariation for fractional Brownian motion with Hurst index less than 1/2. Infin. Dimens. Anal. Quantum Probab. Relat. Top. 2014, 17, 1450030.

- Breuer, P.; Major, P. Central limit theorems for non-linear functionals of Gaussian fields. J. Multivar. Anal. 1983, 13, 425–441.

- Dobrushin, R.L.; Major, P. Non-central limit theorems for non-linear functionals of Gaussian fields. Z. Wahrscheinlichkeitstheorie Verwandte Geb. 1979, 50, 27–52.

- Giraitis, L.; Surgailis, D. CLT and other limit theorems for functionals of Gaussian processes. Z. Wahrscheinlichkeitstheorie Verwandte Geb. 1985, 70, 191–212.

- Nourdin, I. Asymptotic Behavior of Weighted Quadratic and Cubic Variations of Fractional Brownian Motion. Ann. Probab. 2008, 36, 2159–2175.

- Nourdin, I.; Réveillac, A. Asymptotic Behavior of Weighted Quadratic Variations of Fractional Brownian Motion: The Critical Case H=1/4. Ann. Probab. 2009, 37, 2200–2230.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).