4.2. The Social Behavior Optimization Algorithm

(1) Introduction to the SBO algorithm

Social Behavior Optimization (SBO) is a novel swarm intelligence optimization method inspired by the human behavior of climbing the social ladder. The algorithm takes the individual's motivation for self-improvement as its core driving force and simulates how people advance within a social structure by imitating elites, learning from successful experiences and iteratively improving their own behavior, thereby searching efficiently for the global optimum. The basic idea of SBO stems from research findings in cognitive science and behavioral economics showing that, when faced with complex problems, humans tend to learn from individuals of higher social status or higher performance in order to improve their own abilities. SBO formalizes this social learning mechanism as a computational model and structures the optimization process around an “elite demonstration + collective imitation” framework, an evolutionary mechanism analogous to mentoring and career progression in human societies.

In SBO, agents share knowledge to form a “social network” in which individuals gradually improve their “social status”, i.e., their objective function value, through observation, imitation and learning. As the algorithm runs, the individuals' strategies evolve naturally, producing a cooperative balance between exploration (searching for new opportunities) and exploitation (using the information already available). The working mechanism of SBO can be summarized in two main stages:

Elite engagement phase (Exploration): In this phase, each agent is guided by the best-performing solutions in the current population, which steer its search direction and extend exploration into promising regions. This process corresponds to an individual seeking a mentor or role model in the social system and, through that mentor, entering a more efficient growth path.

Resource acquisition and evaluation phase (Exploitation): Individuals take information from top-performing solutions to adapt and refine their own solutions, much as professionals draw on the experience of others in their field to improve their own competence. This phase strengthens local exploitation of the current solutions and accelerates convergence.

SBO inherits and develops the basic ideas of the Human Behavior-Based Optimization (HBBO) approach [24]. HBBO typically simulates human social interaction behaviors such as imitation, collaboration and innovation, and is suited to dynamically changing or multi-objective problems. Similarly, the Educational Competition Optimizer (ECO) improves the overall search quality of the population by introducing a competitive learning environment in which individuals compete and learn around the optimal solution [25].

Compared with traditional swarm intelligence algorithms, SBO offers the following advantages:

A more natural balance between global exploration and local exploitation, which enhances the algorithm's ability to escape local optima;

Reduced sensitivity to hyperparameters and less need for parameter tuning, which improves the portability and practicality of the algorithm;

Good scalability to high-dimensional complex optimization problems.

(2) Mathematical modeling of SBO

In the initialization phase of the enhanced SBO algorithm, a two-population design is introduced to enhance population diversity and improve search efficiency. Specifically, as shown in Equation (12), two independent but corresponding populations X1 and X2 are constructed, each containing N individuals indexed by i ∈ {1, 2, …, N}; individuals sharing the same index belong to the same household. In this design, X1(i) and X2(i) represent individuals with different levels of knowledge and social status in the i-th household, which reflects the heterogeneity and behavioral diversity within each household at initialization.

Each individual’s state is initialized as shown in Equation (13),

where xi,j is the j-th decision variable of the i-th individual, D is the number of decision variables, and lbj and ubj are the lower and upper bounds of the j-th variable, respectively. This uniform initialization of the two N × D population matrices fixes the dimensionality of the problem and provides diverse starting points.
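The initialization step can be illustrated with a minimal sketch. It assumes that Equation (13) takes the standard uniform form x_{i,j} = lb_j + rand·(ub_j − lb_j) with rand ~ U(0, 1); the helper name `init_population` and the example bounds are hypothetical.

```python
import random

def init_population(n, lb, ub, rng=random):
    """Return an n x D population sampled uniformly within [lb_j, ub_j].

    Assumes Equation (13) is the standard uniform initialization
    x[i][j] = lb[j] + rand * (ub[j] - lb[j]), with rand ~ U(0, 1).
    """
    d = len(lb)
    return [[lb[j] + rng.random() * (ub[j] - lb[j]) for j in range(d)]
            for _ in range(n)]

# Two corresponding populations: X1[i] and X2[i] belong to household i.
rng = random.Random(42)
lb, ub = [-5.0, 0.0, 1.0], [5.0, 10.0, 2.0]   # hypothetical bounds, D = 3
X1 = init_population(4, lb, ub, rng)
X2 = init_population(4, lb, ub, rng)
```

Drawing both populations from the same seeded generator keeps the example reproducible while still giving the two members of each household different starting points.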

After initialization, a selection step identifies the elite member of each household to form the elite population Xe. Specifically, for household i, the elite is selected as shown in Equation (14),

where the comparison is made on the objective function (fobj in Algorithm 1).
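A minimal sketch of the household-wise elite selection is shown here. It assumes that Equation (14) keeps whichever of X1(i) and X2(i) has the lower objective value (minimization, consistent with the decreasing fitness values reported later in this section); `select_elites` and the `sphere` test function are illustrative, not part of the original method.

```python
def select_elites(X1, X2, fobj):
    """Form Xe by keeping, for each household i, the better of X1[i] and X2[i].

    Minimization is assumed: the member with the lower fobj value is the elite.
    Returns the elite population and the corresponding objective values.
    """
    Xe, fit_e = [], []
    for a, b in zip(X1, X2):
        fa, fb = fobj(a), fobj(b)
        if fa <= fb:
            Xe.append(list(a))
            fit_e.append(fa)
        else:
            Xe.append(list(b))
            fit_e.append(fb)
    return Xe, fit_e

def sphere(x):
    """Simple test objective: sum of squares."""
    return sum(v * v for v in x)

X1 = [[1.0, 2.0], [0.5, 0.5]]
X2 = [[0.0, 1.0], [3.0, 0.0]]
Xe, fit_e = select_elites(X1, X2, sphere)
print(Xe, fit_e)  # [[0.0, 1.0], [0.5, 0.5]] [1.0, 0.5]
```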

The two-population structure improves the algorithm's coverage of the search space and its global exploration ability by introducing role differences (ordinary versus elite individuals) and diversified information exchange, which supports convergence and robustness [26]. The structure not only facilitates the elite selection and learning-based update mechanisms but also simulates status-based social interaction and resource sharing in a real society, reflecting the role of individual strengths and strategic connections in social progress.

In the elite engagement phase, the SBO algorithm draws on the dynamic evolution of status structures in human societies to improve the efficiency with which optimal solutions are explored and exploited. This stage accelerates the progress of ordinary individuals by simulating individuals actively seeking guidance from high-status mentors (i.e., elite individuals in the algorithm). It embodies information flow and resource sharing across households, thereby overcoming the limitations of the traditional isolated-family framework and yielding a more adaptive and robust search mechanism.

For the specific implementation, the SBO algorithm uses roulette-wheel selection [27,28,29] to probabilistically select an elite individual from a candidate subset consisting of the best-performing individual in each household (the elite population Xe). This probabilistic selection avoids reliance on a single elite and increases the diversity and depth of the search, while reflecting the nonlinear, strategic and uncertain character of human social networks.
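Roulette-wheel selection gives each elite a slice of the wheel proportional to its quality. Because the objective is minimized, objective values must first be mapped to positive weights; how SBO performs this mapping is not stated in this section, so the sketch assumes w_k = 1 / (1 + f_k − f_min), which gives the best elite weight 1. The function name `roulette_select` is hypothetical.

```python
import random

def roulette_select(fitness, rng=random):
    """Return the index of one elite chosen by roulette-wheel selection.

    Lower objective values (minimization) receive larger slices of the wheel.
    The weight mapping 1 / (1 + f - f_min) is an illustrative assumption.
    """
    f_min = min(fitness)
    weights = [1.0 / (1.0 + f - f_min) for f in fitness]
    total = sum(weights)
    r = rng.random() * total          # spin the wheel
    cumulative = 0.0
    for k, w in enumerate(weights):
        cumulative += w
        if r < cumulative:
            return k
    return len(weights) - 1           # guard against floating-point round-off

rng = random.Random(0)
counts = [0, 0, 0]
for _ in range(10_000):
    counts[roulette_select([0.0, 1.0, 3.0], rng)] += 1
print(counts)  # roughly 4/7, 2/7 and 1/7 of the spins
```

The best elite is chosen most often, but weaker elites keep a non-zero chance, which is the diversity-preserving property the text attributes to this selection.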

The selected elite individual, together with the current best solution xb, forms a high-status search circle. This circle corresponds to a region of potential optimization value in the solution space and guides the other individuals to cluster toward better solutions. This social-mobility modeling mechanism facilitates the migration of individuals from their current regions to more promising areas of the solution space, thus improving the overall search efficiency and convergence performance of the algorithm.

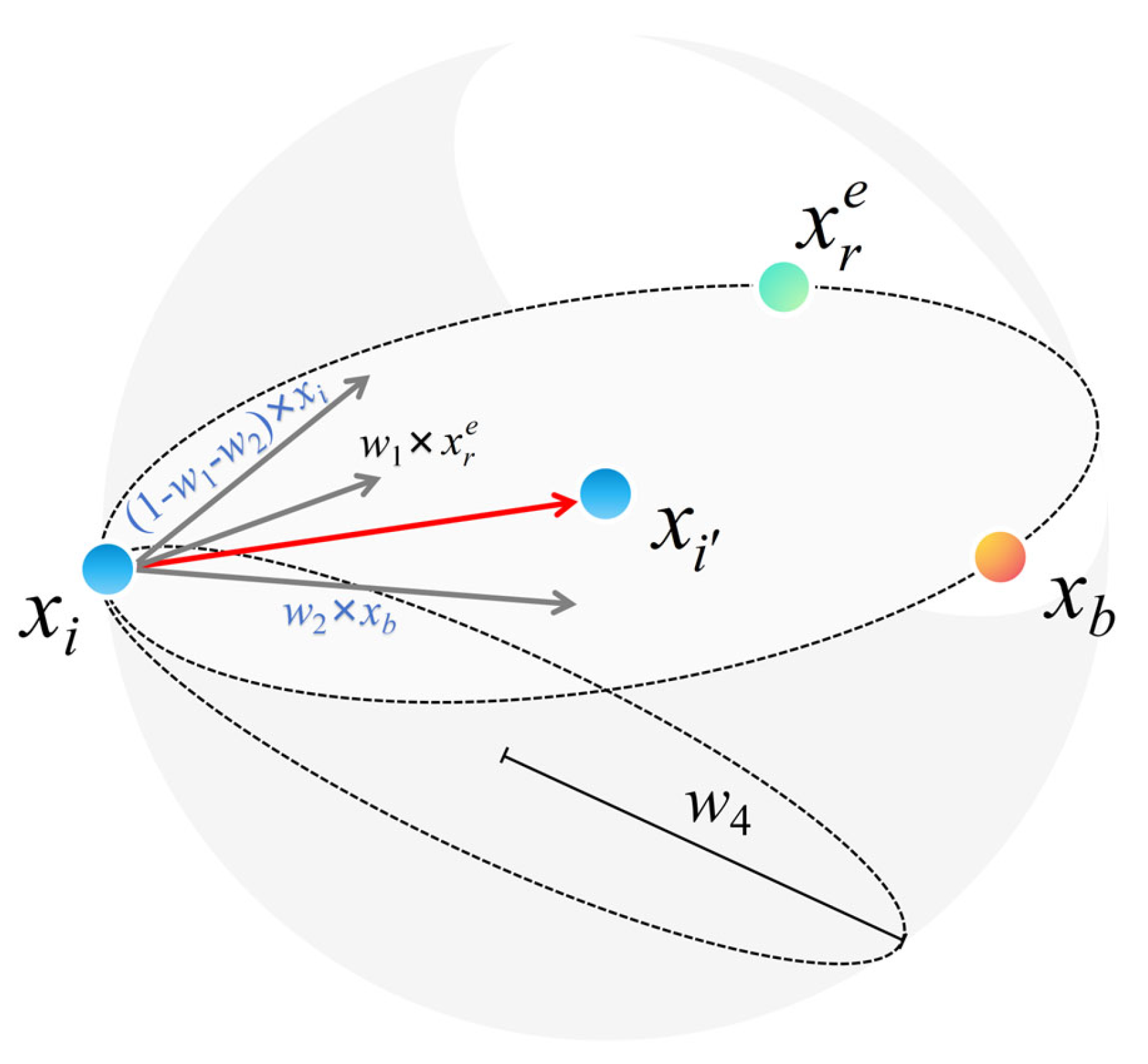

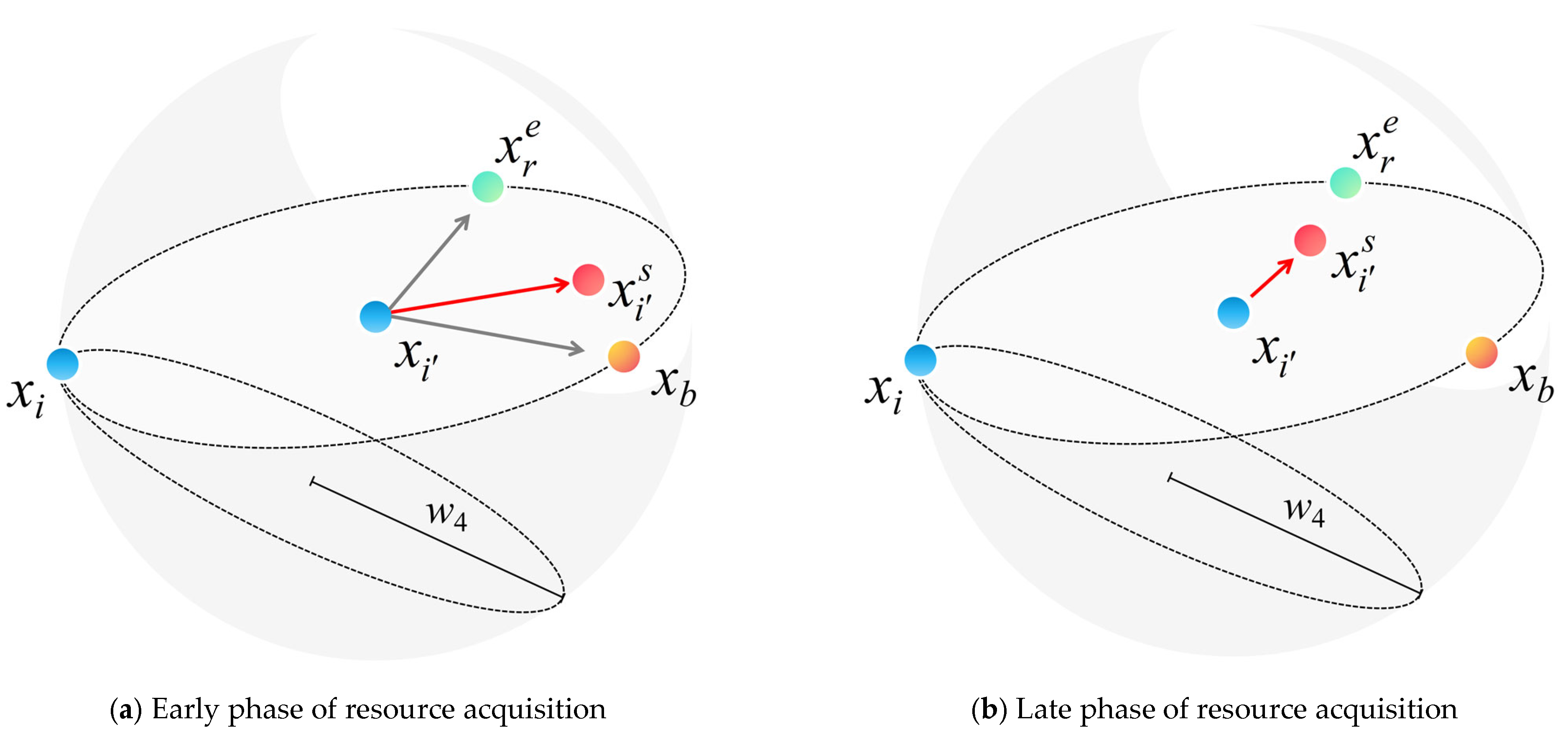

To model this behavior mathematically, Figure 12 illustrates how an individual xi is generated within a high-status circle, which defines a promising region of the solution space described by Equation (15). This high-status circle is an adaptive region within which the individual performs a guided search that balances structured evolution against exploratory perturbation. In this framework, the behavioral evolution of an individual is driven by several key factors, and its position update is designed to converge toward high-quality solutions while retaining enough randomness to avoid being trapped in a local optimum. This modeling approach improves the directedness of the search and enhances coverage of the solution space, thus contributing to the overall optimization performance.

where xi denotes the i-th individual in the population, the updated term denotes its position in the next iteration, the elite term denotes the elite individual selected from the elite population by roulette-wheel selection, and xb is the best solution found so far. The movement strategy in Equation (15) ensures that each individual is influenced by its own location, its high-performing peers and the best-known solution.

The parameters w1 and w2 are generated using randn, i.e., drawn from the standard normal distribution, to weight the contributions of xi, the selected elite individual and xb. These values introduce randomness while keeping moves within reasonable limits, enabling a controlled but varied search of the solution space. The parameters w3 and w4 serve as adjustable design variables that dynamically balance the effect of the high-status circle on global exploration and local exploitation, with the aim of improving the adaptability and convergence performance of the algorithm. w3 is defined in Equation (16),

where MaxFEs denotes the maximum number of function evaluations, FEs is the number of evaluations performed so far, and i is the individual index. By adjusting w3 dynamically as the optimization proceeds, this formula realizes an adaptive trade-off between the standard update mechanism and the stochastic search strategy, flexibly regulating the extent of global exploration and local exploitation at different stages.

If rand ≥ w3, the position is instead updated by Equation (17), in which w4 is a scaling factor drawn as a uniformly distributed random number in [−w3, w3]. This mechanism increases search diversity by varying the step size, which is especially useful for escaping local optima.
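The branching logic described above can be sketched as follows. This is only the control flow: w1 and w2 are drawn with a standard normal generator (the Python counterpart of randn), the branch is chosen by comparing a uniform random number with w3, and w4 is drawn from U(−w3, w3) on the Equation (17) branch. The value of w3 is taken as an input because Equation (16) is not reproduced here, the position-update formulas themselves are omitted, and the function name is hypothetical.

```python
import random

def elite_engagement_coefficients(w3, rng=random):
    """Draw the random coefficients for one elite-engagement update.

    Returns (branch, w1, w2, w4). Following the text:
    if rand >= w3, Equation (17) is used with w4 ~ U(-w3, w3);
    otherwise Equation (15) is used and w4 is not needed.
    """
    w1 = rng.gauss(0.0, 1.0)   # randn
    w2 = rng.gauss(0.0, 1.0)   # randn
    if rng.random() >= w3:
        w4 = rng.uniform(-w3, w3)   # random scaling factor for Eq. (17)
        return "eq17", w1, w2, w4
    return "eq15", w1, w2, None

# With w3 = 0.3, about 70% of updates should take the Eq. (17) branch.
rng = random.Random(3)
branches = [elite_engagement_coefficients(0.3, rng)[0] for _ in range(10_000)]
frac_eq17 = branches.count("eq17") / len(branches)
```

Because w3 varies over the run according to Equation (16), the share of updates taking each branch shifts as the search progresses.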

By integrating structured learning with exploratory flexibility, the SBO algorithm simulates the decision-making of real-world individuals who pursue proven paradigms while also actively exploring unconventional paths to achieve better outcomes. In particular, Equation (15) computes a new position within the high-status circle, reflecting how an individual gains better development prospects through a network of influence. As the evolutionary process advances, the solutions gradually approach more promising regions of the search space. Meanwhile, the alternative update, Equation (17), introduces the random scaling factor w4 in the interval [−w3, w3], which allows the algorithm to jump out of the current search region while exploiting locally optimal solutions, reducing the risk of premature convergence to a local optimum and improving global exploration of the solution space. This mechanism reflects the human tendency to deviate deliberately from the mainstream path in order to explore potential opportunities. In summary, the SBO algorithm dynamically balances exploitative and exploratory search, which improves solution quality and optimization efficiency and gives the algorithm good adaptability and robustness in complex optimization tasks.

In the resource acquisition phase, individuals move from exploration to exploitation by acquiring and integrating valuable information resources (analogous to social capital) from their social networks. At the start of this phase, each individual in population X is assigned a success flag, stored in a flag vector initialized to 1. The flag is updated dynamically in the subsequent resource evaluation phase to record whether the individual's status has improved.

The resource acquisition strategy depends on the individual's social status. Individuals with high social status integrate resources more selectively, drawing on two key sources of information: another high-status member of the same household and the best-performing individual in the whole population, as shown in Equation (18).

where jidx = randi(D) for idx = 1, 2, 3 are randomly selected dimension indices, reflecting the mix of family and external elite influence. By blending these two sources, the algorithm guides high-status individuals to allocate resources strategically by leveraging the strengths of their networks, thereby enhancing their optimization capability and search efficiency.

At the other end of the social scale, individuals whose previous socialization was unsuccessful must rely on family resources. Their resources are updated as shown in Equation (19),

where the row vector m is initialized to zero and is updated by Equation (20) before the social interaction,

where u = randperm(D) provides a random permutation of the decision variable indices.
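Equations (18) and (20) rely on the MATLAB functions randi and randperm, whose indices start at 1. For readers porting the method, the sketch gives Python stand-ins for this index sampling only; the helper names are hypothetical, and the update formulas themselves are not reproduced.

```python
import random

def randi(d, rng=random):
    """Stand-in for MATLAB randi(D): a uniform integer in 1..D."""
    return rng.randint(1, d)

def randperm(d, rng=random):
    """Stand-in for MATLAB randperm(D): a random permutation of 1..D."""
    return rng.sample(range(1, d + 1), d)

rng = random.Random(7)
D = 6
j = [randi(D, rng) for _ in range(3)]   # j_1, j_2, j_3 as used in Equation (18)
u = randperm(D, rng)                    # u as used in Equation (20)
# Subtract 1 before indexing a Python list, since MATLAB indices start at 1.
```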

As shown in Figure 13, the resource acquisition phase drives the population to cluster in more promising areas of the solution space through a status-driven differential update strategy, thus strengthening exploitation.

Figure 13a shows that the top-performing individuals refine their positions using resources from high-status individuals, improving local search accuracy by incorporating high-quality external information. In contrast, Figure 13b shows that a disadvantaged individual relies on familiar family resources to reorient itself, seeking a path of improvement within a known environment. This mechanism reflects individual heterogeneity in resource integration and position-update strategies driven by social hierarchy, and it promotes effective synergy between exploration and exploitation across the population.

In the resource evaluation phase, the SBO algorithm determines whether the acquired resources are effective, i.e., whether integrating them improves the individual's fitness (its “health status”). This stage uses the flag vector initialized earlier, where “1” indicates an improvement in fitness (successful evaluation) and “0” indicates no improvement (unsuccessful evaluation), thereby tracking and recording the evolutionary process of each individual.

Specifically, if the objective function value of the updated individual is better than that of the original xi, the new state is retained, as shown in Equation (21).

At the same time, the flag vector is refreshed as shown in Equation (22).

Individuals that do not improve remain at their current positions, while successful individuals move to better positions. This selective process mirrors real-world social progress, in which only valuable resources, those that clearly improve an agent's position, are retained. This selective retention after resource utilization improves solution quality and the stability of the optimization process.
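The evaluation step amounts to greedy selection with a success record. A minimal sketch, assuming minimization and a strict improvement test (as "better than" suggests) for Equations (21) and (22); the function name and the test data are illustrative.

```python
def resource_evaluation(X, fit, Xs, fobj):
    """Greedy retention with success flags (sketch of Eqs. (21)-(22)).

    A candidate xs replaces x only if its objective value is strictly lower.
    flag[i] = 1 records a successful update, 0 records no improvement.
    """
    new_X, new_fit, flag = [], [], []
    for x, f, xs in zip(X, fit, Xs):
        fs = fobj(xs)
        if fs < f:
            new_X.append(list(xs))
            new_fit.append(fs)
            flag.append(1)
        else:
            new_X.append(list(x))
            new_fit.append(f)
            flag.append(0)
    return new_X, new_fit, flag

def sphere(x):
    return sum(v * v for v in x)

X = [[1.0, 1.0], [0.0, 0.0]]
fit = [2.0, 0.0]
Xs = [[0.5, 0.0], [1.0, 0.0]]   # candidates from resource acquisition
new_X, new_fit, flag = resource_evaluation(X, fit, Xs, sphere)
print(flag, new_fit)  # [1, 0] [0.25, 0.0]
```

The returned flags are what the next resource acquisition phase would consult to decide between the Equation (18) and Equation (19) updates.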

When the termination condition is met (e.g., the maximum number of function evaluations is reached or a solution meeting the required criterion is obtained), the algorithm stops and returns the best solution found. Until then, it cycles through the core mechanisms of “elite engagement”, “resource acquisition” and “resource evaluation”, followed by a consolidation step that updates the elite population Xe and the best solution xb (Algorithm 1, lines 23–24), so that the population's fitness improves continuously through status-driven interactions. The final solution therefore reflects the whole status-evolution process recorded in the objective function values and historical evaluation results, and its convergence reflects the algorithm's balance between global exploration and local exploitation.

The complete implementation framework of the SBO algorithm and the pseudo-code of the algorithm are shown in Algorithm 1.

| Algorithm 1. Pseudo-code for the SBO algorithm |
|---|
| SBO Algorithm Pseudo-Code |
| Input: N, D, MaxFEs, lb, ub, fobj |
| Output: xb |
| 1 Initialization: |
| 2 Initialize X, Xe, Fitting, Fite, flag |
| 3 Calculate Fitting and Fite |
| 4 Update Xe and xb |
| 5 while FEs < MaxFEs do |
| 6 Select from Xe by Roulette Wheel |
| 7 Elite Engagement: |
| 8 Update w1, w2, w3, w4 |
| 9 Update X by Equation (15) or (17) |
| 10 Apply boundary control to X |
| 11 Initialize Xs as X |
| 12 Resource Acquisition: |
| 13 Initialize row vector m as 0 |
| 14 Update m by Equation (20) |
| 15 foreach xs ∈ Xs do |
| 16 if xs is successful then |
| 17 Update xs by Equation (18) |
| 18 else |
| 19 Update xs by Equation (19) |
| 20 Resource Evaluation: |
| 21 Update X by Equation (21) |
| 22 Update flag by Equation (22) |
| 23 Consolidation: |
| 24 Update Xe and xb |
| 25 FEs ← FEs + 2N |

The computational complexity of the enhanced SBO algorithm is O(T⋅N⋅(F + D)), where T is the number of iterations, N is the population size, D is the problem dimension and F is the cost of one fitness evaluation. The overall cost therefore scales linearly with the population size, the number of iterations and the problem dimension, which keeps SBO computationally tractable for high-dimensional problems.
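As a worked check of the evaluation budget, the snippet converts between MaxFEs and the iteration count T, assuming that each pass of the loop in Algorithm 1 consumes exactly 2N evaluations (line 25). Under that assumption, the population size of 50 and 200 iterations used in the comparison experiments of Section 4.3 would correspond to 20,000 function evaluations for SBO. The helper names are hypothetical.

```python
def sbo_iterations(max_fes, n):
    """Iterations T implied by Algorithm 1, where each loop adds 2N evaluations."""
    return max_fes // (2 * n)

def evaluations_for(t, n):
    """Inverse view: T iterations of a population of size N cost 2*N*T evaluations."""
    return 2 * n * t

print(evaluations_for(200, 50))   # 20000
print(sbo_iterations(20000, 50))  # 200
```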

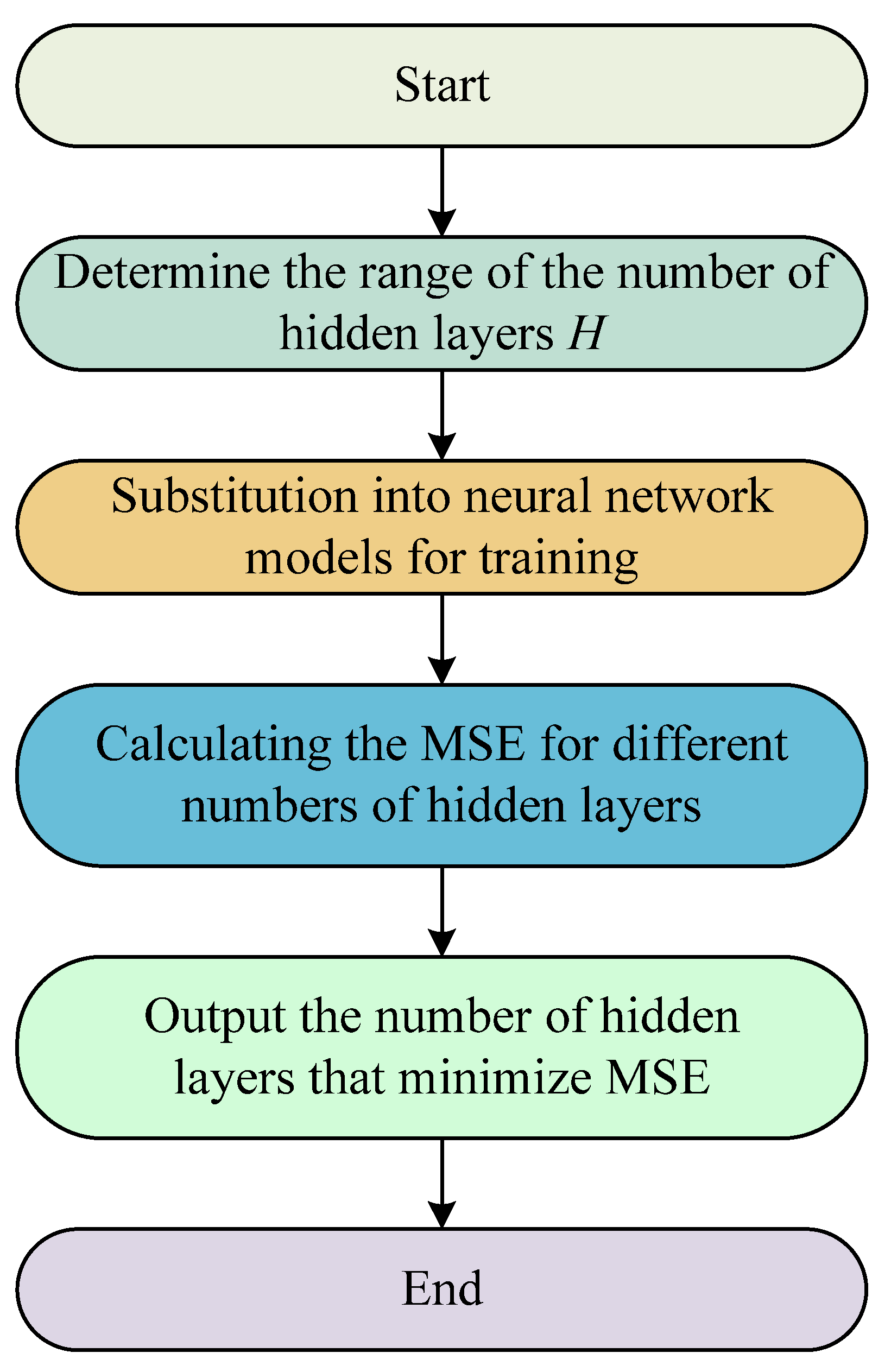

4.3. SBO–BP Neural Network Prediction Model

In constructing the SBO–BP neural network prediction model, this thesis determines the optimal number of hidden-layer nodes by setting a reasonable candidate range based on Equation (23) and testing each candidate in a loop. Specifically, for each candidate number of nodes, a neural network model is constructed and trained separately, and its mean square error (MSE) is recorded as the performance index. The number of hidden-layer nodes that minimizes the MSE is selected as the optimal structure of the model; the test results are shown in Figure 14.

where H is the number of hidden-layer nodes, M is the number of input-layer nodes, P is the number of output-layer nodes, and a is an integer in [1, 10].
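The search loop can be sketched as follows, assuming that Equation (23) has the common empirical form H = sqrt(M + P) + a (with the square root rounded to the nearest integer here). The callable `train_and_mse` is a hypothetical stand-in for building and training the BP network; the input count M = 5 and the MSE curve in the usage example are dummy values chosen only to exercise the code, and P = 4 assumes one network with the four outputs Ve, T, Vr and Vh.

```python
import math

def candidate_hidden_sizes(m, p):
    """Candidate hidden-node counts H = round(sqrt(M + P)) + a, a = 1..10."""
    base = round(math.sqrt(m + p))
    return [base + a for a in range(1, 11)]

def select_hidden_size(m, p, train_and_mse):
    """Train one network per candidate and keep the size with the lowest MSE.

    `train_and_mse` maps a hidden-node count to the MSE of the trained network.
    """
    results = {h: train_and_mse(h) for h in candidate_hidden_sizes(m, p)}
    best = min(results, key=results.get)
    return best, results

def dummy_mse(h):
    """Dummy stand-in for training; not a measured result."""
    return 0.006 + 1e-4 * (h - 10) ** 2

best, results = select_hidden_size(5, 4, dummy_mse)
print(best)  # 10
```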

Regarding the network hyperparameters, the number of hidden-layer nodes finally selected in this thesis is 10, and the maximum number of training epochs is set to 1000 to ensure that the model has sufficient learning capability. The learning rate is set to 0.001 to balance convergence speed and training stability, and the training error goal is set to 1 × 10⁻⁶ to meet the high accuracy requirements of the prediction.

To improve the accuracy and generalization ability of the BP neural network prediction model, this thesis uses the enhanced SBO algorithm to globally optimize the initial weights and thresholds of the BP neural network, thereby overcoming the tendency of the traditional BP algorithm to converge slowly and become trapped in local minima during training. To verify the effectiveness and advantages of SBO in parameter optimization, several recently proposed, well-performing intelligent optimization algorithms (published in 2023 or later) were selected for comparison: the Newton-Raphson-Based Optimizer (NRBO, 2024) [30], the GOOSE algorithm (GOOSE, 2024) [31], the Chinese pangolin optimizer (CPO, 2025) [32], the Snow ablation optimizer (SAO, 2023) [33] and the Enzyme Action Optimizer (EAO, 2024) [34]. To ensure a fair and scientifically valid comparison, all algorithms were tested with the same network structure, experimental data, dataset split and evaluation metrics, with a population size of 50 and a maximum of 200 iterations.
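Optimizing the initial weights and thresholds with a metaheuristic requires flattening them into one decision vector whose fitness is the network's training error. A minimal sketch of this encoding for a single-hidden-layer M-H-P network, assuming a sigmoid hidden layer, a linear output layer and MSE as the fitness; these choices and all function names are assumptions for illustration, not the thesis's exact implementation.

```python
import math

def vector_length(m, h, p):
    """Decision variables D: input->hidden weights, hidden thresholds,
    hidden->output weights and output thresholds."""
    return m * h + h + h * p + p

def decode(vec, m, h, p):
    """Split a flat candidate solution into weight matrices and thresholds."""
    k = 0
    w1 = [vec[k + r * m:k + (r + 1) * m] for r in range(h)]
    k += m * h
    b1 = vec[k:k + h]
    k += h
    w2 = [vec[k + r * h:k + (r + 1) * h] for r in range(p)]
    k += h * p
    b2 = vec[k:k + p]
    return w1, b1, w2, b2

def _sigmoid(z):
    z = max(-60.0, min(60.0, z))   # clamp to avoid math.exp overflow
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Forward pass: sigmoid hidden layer, linear output layer (assumed)."""
    w1, b1, w2, b2 = params
    hidden = [_sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * hj for w, hj in zip(row, hidden)) + b
            for row, b in zip(w2, b2)]

def fitness(vec, data, m, h, p):
    """MSE of the network encoded by `vec` over (input, target) pairs;
    this is the quantity an optimizer such as SBO would minimize."""
    params = decode(vec, m, h, p)
    err, count = 0.0, 0
    for x, y in data:
        for y_hat, y_t in zip(forward(x, params), y):
            err += (y_hat - y_t) ** 2
            count += 1
    return err / count
```

Each SBO individual is then a vector of length `vector_length(M, H, P)`, and the best vector found initializes the BP network before gradient-based training.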

The convergence curves of the iterative process are shown in Figure 15, which illustrates the differences between the algorithms in convergence speed and global search ability when optimizing the weights and thresholds of the BP neural network. All algorithms converge to some extent at the beginning of the iteration, but their convergence speeds and final fitness values differ markedly. SBO reaches a lower fitness value within the first 30 iterations, indicating stronger initial global exploration. In the later stage, the fitness value of SBO continues to decrease to 0.0061, better than those of the other five algorithms, reflecting its advantages in optimization accuracy and stability. CPO and GOOSE also decrease quickly in the initial stage but reach slightly lower final accuracy, while NRBO, SAO and EAO decrease more slowly and end with worse final values than SBO, indicating relatively limited optimization capability. In summary, SBO performs better in both convergence speed and solution quality, which supports its use for optimizing BP neural networks and provides effective support for building the high-precision SBO–BP prediction model.

As shown in Table 3, all six algorithms exhibit a clear decrease in fitness value, indicating that each is effective on this optimization problem. SBO achieves the best final fitness value (0.00614), ranking first in accuracy; although its initial value is relatively high (0.4817), it converges rapidly and outperforms all other methods. CPO also gives competitive results, ranking second with a final fitness value of 0.00705. In contrast, SAO starts with the highest initial value (0.5012), converges the slowest and has the worst final value (0.01791), possibly because of premature convergence to a local optimum. GOOSE and NRBO achieve moderate results, while EAO is stable but less competitive. These results suggest that SBO is well suited to high-precision nonlinear optimization, supporting its integration with NSGA-II for multi-objective optimization with enhanced global search capability and solution diversity.

4.4. SBO–BP Neural Network Model Prediction Results

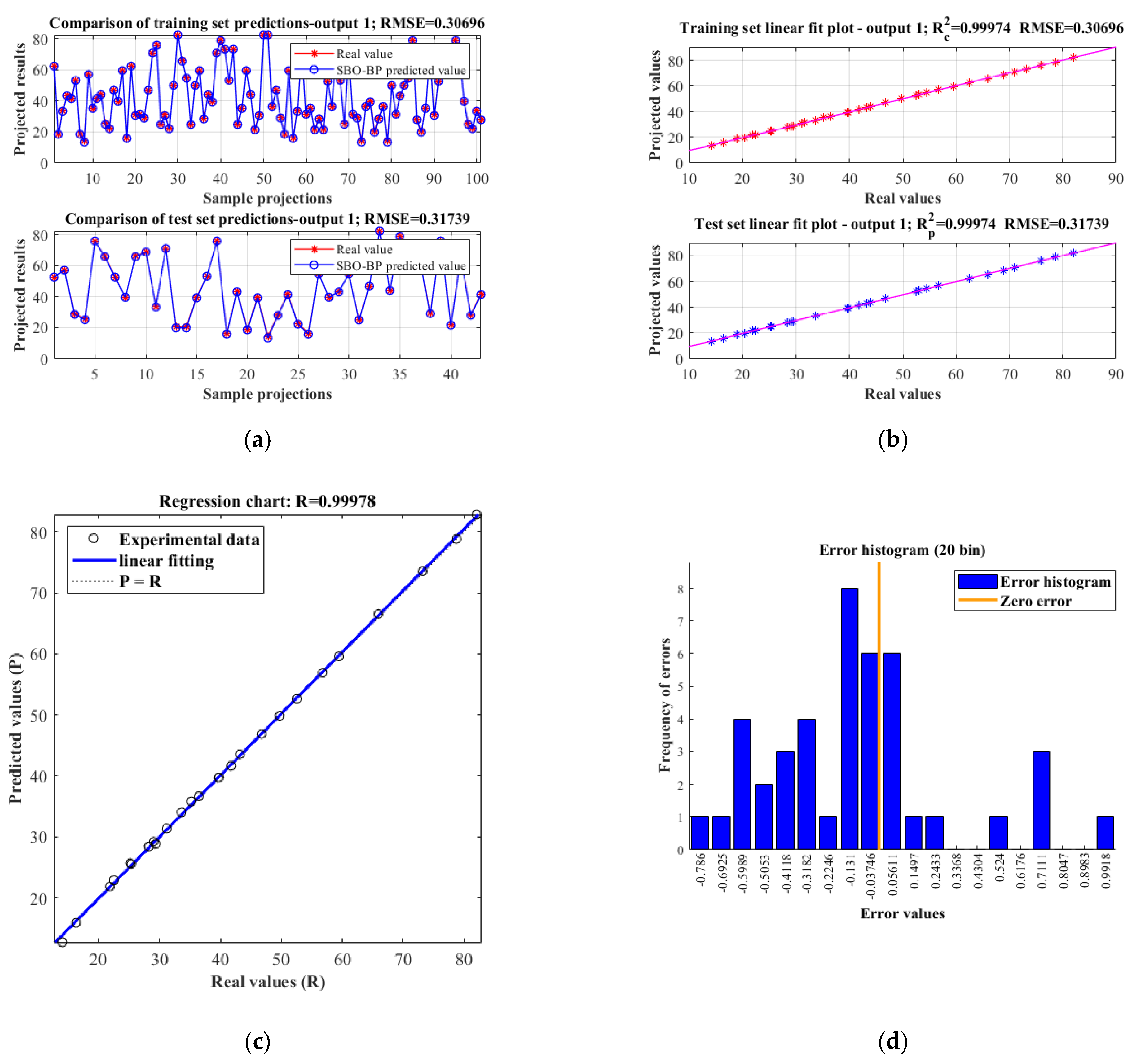

In this thesis, the prediction performance of the SBO–BP neural network model is comprehensively evaluated using training/test comparison plots, linear fitting plots, regression plots and error histograms.

First, the predicted values of the end-of-piston speed Ve were compared with the AMESim simulation values, as shown in Figure 16. The RMSE of the model on the training and test sets is 0.30696 and 0.31739, respectively, and the predicted values agree closely with the AMESim simulation values. The correlation coefficient in the regression plot reaches 0.99978, indicating a strong linear correlation between the predicted and true values. The R-Square (R²) of both training and testing in the linear fitting plot is 0.99974, which further confirms the fitting accuracy. The error histogram shows that most errors are concentrated near zero, indicating small model errors and stable predictions.

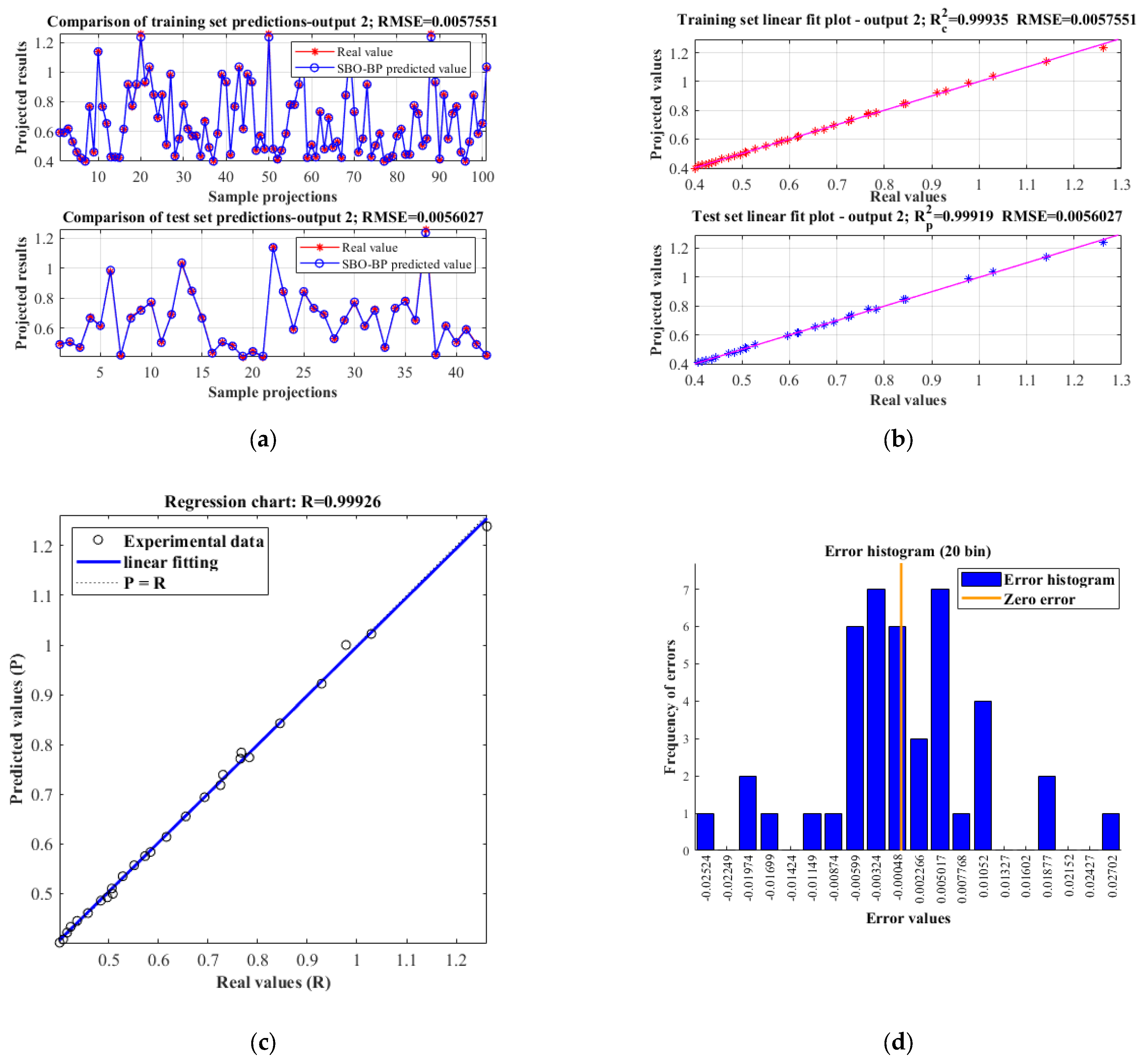

Second, to verify the accuracy of the SBO–BP neural network model in predicting the buffer time T, the second output was modeled and its errors analyzed, as shown in Figure 17. In the training and test comparison plots, the model predictions closely overlap the AMESim simulation values, with RMSEs of 0.0057551 and 0.0056027, respectively, indicating that the model maintains high accuracy on different datasets. In the regression plot, the data points lie close to the ideal fitting line (P = R), with a correlation coefficient of 0.99926, reflecting an extremely strong linear correlation between the model output and the actual values. In the linear fitting plots, the coefficients of determination of the training and test sets are 0.99935 and 0.99919, respectively, and the fitted curves almost coincide with the ideal line, further demonstrating the model's fitting ability and generalization performance. The error histogram shows that most errors fall within [−0.004, 0.004] with a symmetric distribution, and the very small error values indicate consistent and stable predictions.

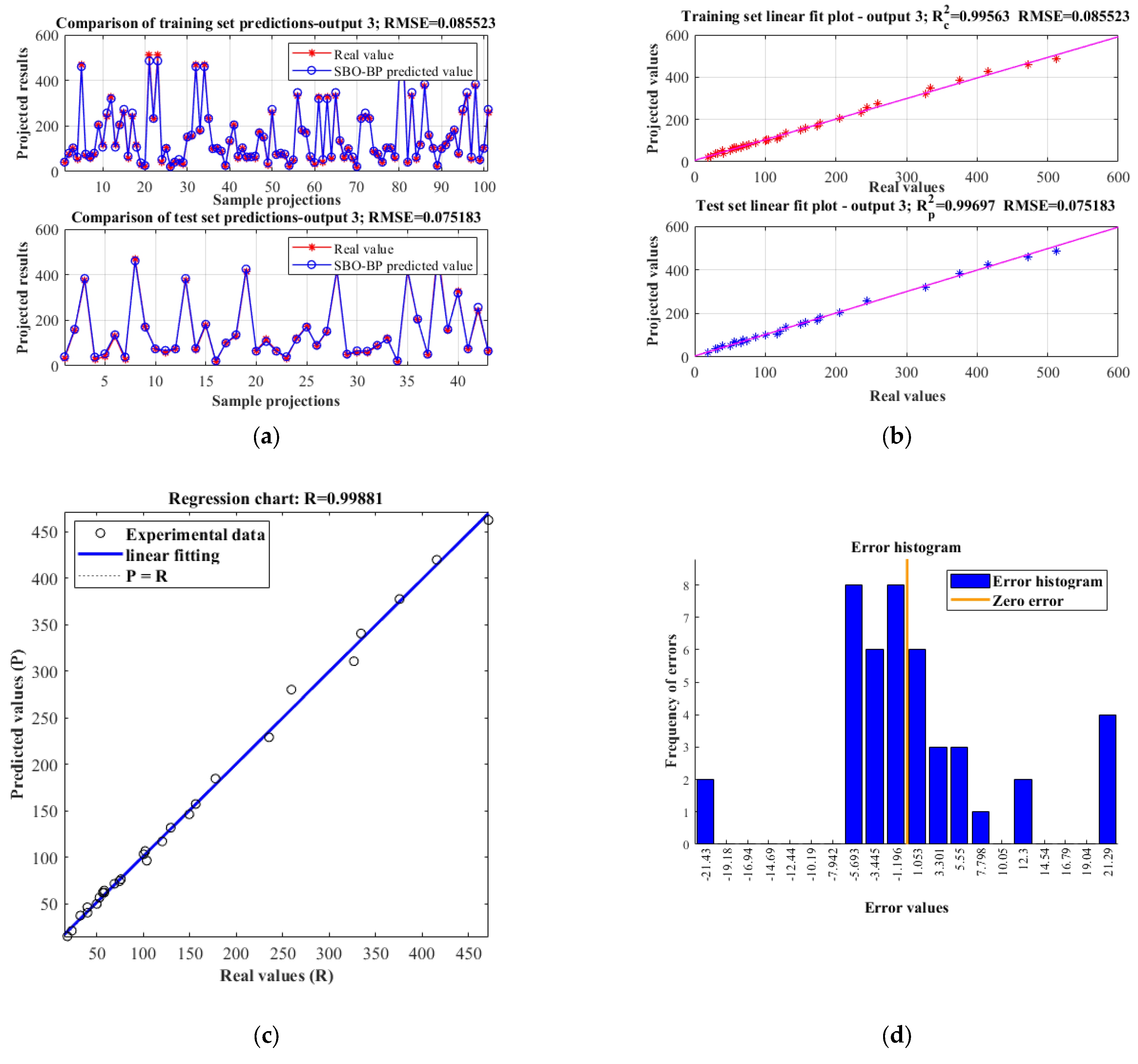

Third, for predicting the rate of change of the end-of-piston speed Vr, the SBO–BP neural network model also performs well, as shown in Figure 18. The predictions agree closely with the AMESim simulation values, with RMSEs of 0.085523 and 0.075183 for the training and test sets, respectively, and a correlation coefficient of 0.99881. In the linear fitting plot, the coefficients of determination of the training and test sets are 0.99563 and 0.99697, respectively, and the fitted curves almost coincide with the ideal line, indicating high fitting accuracy. The error histogram shows that the errors are concentrated near zero, with a fairly symmetric distribution and few extreme errors, indicating that the model is stable, generalizes well and can effectively capture the nonlinear characteristics of the rate of change of the end-of-piston speed.

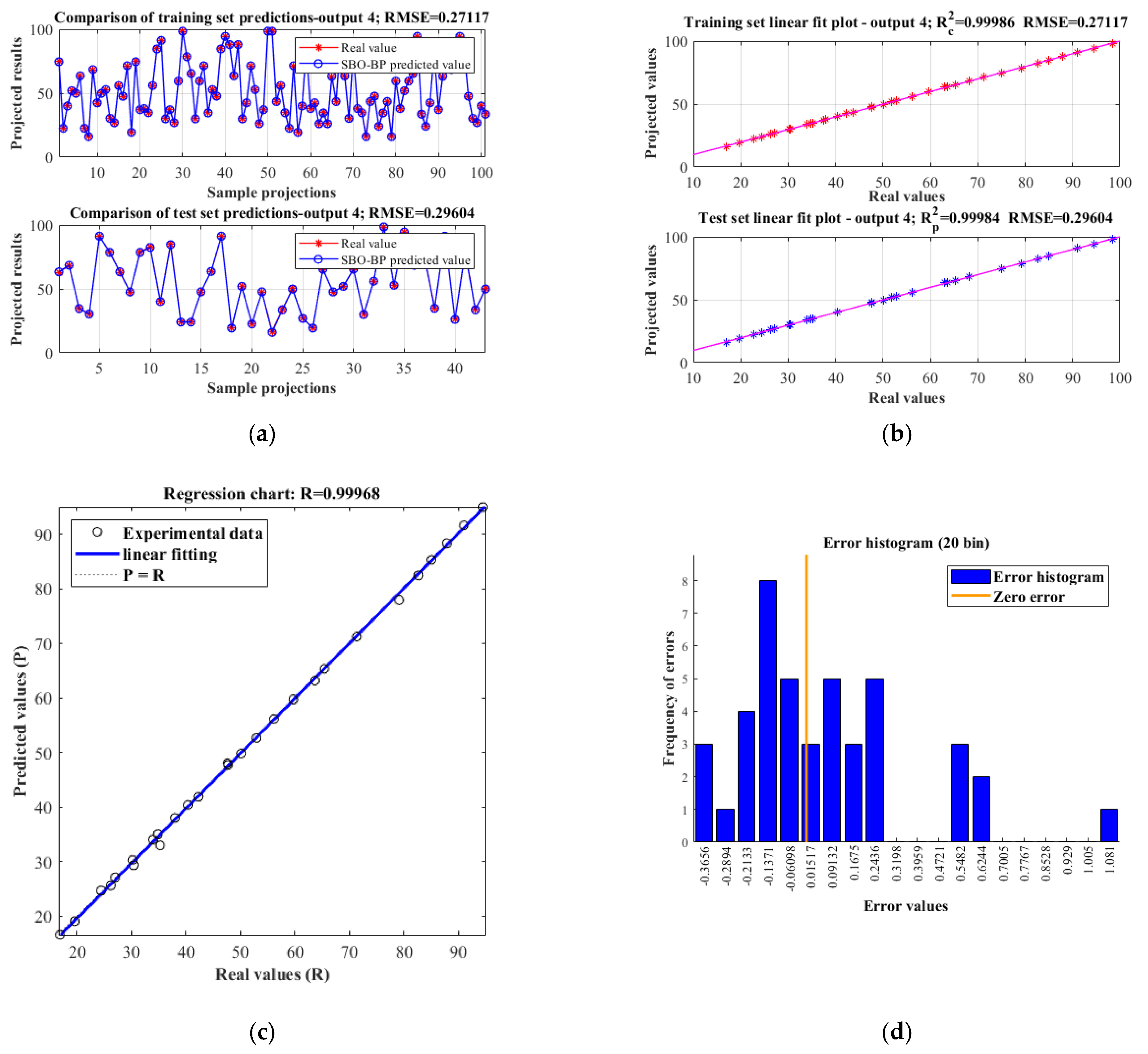

Finally, for predicting the hydraulic oil reflux speed Vh, the SBO–BP neural network model performs very well, as shown in Figure 19. The RMSEs of the training and test sets are 0.27117 and 0.29604, respectively, and the predictions agree closely with the AMESim simulation values. The correlation coefficient in the regression plot reaches 0.99968, and the coefficients of determination in the linear fitting plot are 0.99986 and 0.99984 for the training and test sets, respectively, indicating high fitting accuracy. The error histograms show that the errors are concentrated around zero with a symmetric distribution and few extreme errors, reflecting good stability and generalization over most samples and making the model suitable for accurate modeling of hydraulic systems.

To further validate the accuracy of the SBO–BP prediction model, this thesis adopts four typical regression metrics, namely the mean absolute percentage error (MAPE), R-Square (R²), the mean absolute error (MAE) and the mean square error (MSE), and compares SBO–BP with five other improved BP neural network models. Together, these metrics evaluate model performance in terms of error magnitude, relative accuracy and goodness of fit, revealing differences in prediction accuracy and generalization ability among the models more systematically.

(1) Mean Absolute Percentage Error (MAPE)

MAPE measures the average error of the predicted values relative to the actual values, reflecting the model's relative error level across different magnitudes. It is calculated as shown in Equation (24),

where

n denotes the total number of samples,

yi denotes the true value of the

i-th sample and

denotes the predicted value of the

i-th sample.

(2) R-Square (R²)

R² reflects how well the predicted values fit the true values. For a reasonable model it lies in [0, 1] (it can become negative when the model performs worse than simply predicting the mean), and the closer it is to 1, the better the fit. It is calculated as shown in Equation (25),

where

is the average of the true values.

(3) Mean Absolute Error (MAE)

MAE measures the average absolute difference between the predicted and true values, is easy to interpret, and is calculated as shown in Equation (26).

(4) Mean Square Error (MSE)

MSE measures the mean of the squared prediction errors; it is more sensitive to large errors and is calculated as shown in Equation (27).
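The four metrics can be computed directly from paired true and predicted values. Equations (24)–(27) are not reproduced in this text, so the sketch assumes the standard textbook definitions (MAPE in percent, R² = 1 − SS_res/SS_tot); RMSE, used in the figure discussions above, is included as the square root of MSE. The function name and the sample values are illustrative only.

```python
import math

def regression_metrics(y_true, y_pred):
    """Standard MAPE (%), R^2, MAE, MSE and RMSE for paired samples.

    MAPE requires all true values to be non-zero.
    """
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mape = 100.0 / n * sum(abs(e / t) for e, t in zip(errors, y_true))
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    return {"MAPE": mape, "R2": r2, "MAE": mae, "MSE": mse,
            "RMSE": math.sqrt(mse)}

scores = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8])
# Approximately: MAE 0.15, MSE 0.025, R2 0.98, MAPE 6.67 %
```

Because MSE squares each error, the two errors of 0.2 in this example contribute four times as much as the errors of 0.1, illustrating its greater sensitivity to large deviations.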

From the results in Table 4, the SBO–BP model performs well on several key evaluation metrics and clearly outperforms the other compared models, namely NRBO–BP, GOOSE–BP, CPO–BP, SAO–BP and BMO–BP. Specifically, the R² of the SBO–BP model remains above 0.999 on both the training and test sets, higher than that of several comparison models, demonstrating good generalization performance. For example, in predicting the end-of-piston speed Ve and the end-of-piston speed change rate Vr, the test-set R² values of the SBO–BP model exceed 0.9998, which is among the best. In terms of error metrics, SBO–BP also shows lower prediction bias. In predicting the buffer time T, the test-set MAPE is 0.5808% and the MAE is 0.3817%, both clearly better than those of the other models, indicating higher prediction accuracy. Meanwhile, in predicting the hydraulic oil reflux speed Vh, the MSE of SBO–BP is 1.0317 × 10¹, lower than that of some comparison algorithms, further confirming its stability and robustness. In summary, the SBO–BP model performs well across the evaluation metrics, combining high accuracy, strong fitting ability and good generalization.