1. Introduction

Fixed-point theory in Hilbert spaces is fundamental for solving equations, optimization problems, and analyzing dynamical systems. A function $f$ in a Hilbert space $H$ has a fixed point at $x$ if $f(x) = x$; such points often represent equilibrium states or optimal solutions.

A mapping $T : H \to H$ is nonexpansive if it satisfies $\|Tx - Ty\| \le \|x - y\|$ for all $x, y \in H$, ensuring stability in iterative methods used in approximation and optimization. In 2012, Hideaki Iiduka [1] introduced an iterative algorithm grounded in fixed-point theory to address convex minimization problems involving nonexpansive mappings. He further implemented this algorithm to tackle the network bandwidth allocation problem, which serves as a practical instance of convex minimization, a core topic in networking that has been the focus of extensive research. When a data rate is allocated to a network source, it generates a corresponding utility, typically modeled as a concave function. A commonly used utility function for each source is given by

where

, and

is the weighted parameter for source

S. The allocation for each source must be managed through a control mechanism to avoid network congestion. This mechanism distributes bandwidth by solving an optimization problem that maximizes the total utility function while ensuring that the sum of the transmission rates of all sources sharing a link does not exceed its capacity. The network bandwidth allocation problem is represented as follows [

1].

where

T is the nonexpansive mapping, and

is composed of

as defined in (

1).

Quasi-nonexpansive mappings generalize this by focusing on fixed points, requiring that $\|Tx - p\| \le \|x - p\|$ for any fixed point $p$ of $T$ and any $x$ in the domain of $T$, so that applying $T$ never moves a point farther from the fixed-point set. The relationship between nonexpansive and quasi-nonexpansive mappings is foundational: every nonexpansive mapping with at least one fixed point is quasi-nonexpansive, but the reverse does not necessarily hold. This distinction allows quasi-nonexpansive mappings to encompass a broader class of operators, providing greater flexibility in applications while maintaining critical convergence properties.

For example, the mapping if and if is quasi-nonexpansive but not nonexpansive, as it does not consistently maintain distances between all points. Another example is the mapping defined by if and if , which is quasi-nonexpansive but not nonexpansive, as it projects points outside the unit circle onto it.
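The gap between the two classes can be checked numerically. The sketch below uses a standard textbook map, $T(x) = \tfrac{x}{2}\sin(1/x)$ with $T(0) = 0$ on the real line; this choice is an assumption made for illustration and is not necessarily one of the mappings described above. Its only fixed point is $0$, and the two test points are picked by hand.

```python
import math

def T(x: float) -> float:
    """Illustrative map on the real line: T(x) = (x/2) sin(1/x), with T(0) = 0."""
    return 0.0 if x == 0.0 else 0.5 * x * math.sin(1.0 / x)

# Quasi-nonexpansive: the only fixed point is p = 0, and |T(x) - 0| <= |x| / 2.
p = 0.0
samples = [k / 1000.0 for k in range(-2000, 2001)]
quasi_ok = all(abs(T(x) - p) <= abs(x - p) for x in samples)

# Not nonexpansive: two nearby points whose images are farther apart than the points.
x = 1.0 / (2.0 * math.pi)
y = 2.0 / (5.0 * math.pi)
expansion_ratio = abs(T(x) - T(y)) / abs(x - y)

print(quasi_ok, expansion_ratio)
```

An expansion ratio above 1 for a single pair of points is enough to rule out nonexpansiveness, whereas the quasi-nonexpansive check only compares distances to the fixed point.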

A crucial principle related to quasi-nonexpansive mappings is the demiclosedness principle. This principle states that if a sequence $\{x_n\}$ converges weakly to $x$ and the sequence $\{x_n - Tx_n\}$ converges strongly to $0$, then $x$ is a fixed point of $T$. This result is fundamental in operator theory and functional analysis, providing a robust tool for establishing the convergence of iterative methods. Numerous studies have focused on solving fixed-point problems of quasi-nonexpansive mappings using the demiclosedness condition and define the mapping , where T is a quasi-nonexpansive mapping with , which plays a vital role in these investigations, as evidenced by references [2,3,4].

The general split feasibility problem (General SFP), introduced by Kangtunyakarn [5], is an extension of the traditional split feasibility problem (SFP), which was first introduced by Censor and Elfving [6]. The traditional SFP involves finding a point $x$ in a closed convex set $C$ such that its image under a linear operator $A$ falls within another convex set $Q$. Mathematically, it is represented as follows:

$$\text{find } x \in C \text{ such that } Ax \in Q,$$

where $A : H_1 \to H_2$ is a bounded linear operator, and $C \subseteq H_1$ and $Q \subseteq H_2$ are closed convex sets.
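To make the traditional SFP (find $x \in C$ with $Ax \in Q$) concrete, the following minimal sketch applies Byrne's classical CQ iteration, $x_{n+1} = P_C\big(x_n - \gamma A^{\top}(I - P_Q)Ax_n\big)$, which is a well-known baseline and not the method developed in this paper. The matrix, the boxes $C = [0,3]^2$ and $Q = [1,2]^2$, the starting point, and the step $\gamma$ are all hypothetical values chosen for illustration; $\gamma$ is taken below $2/\rho(A^{\top}A) = 1$.

```python
A = [[1.0, 1.0], [1.0, -1.0]]  # hypothetical operator from R^2 to R^2

def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def transpose(M):
    return [list(col) for col in zip(*M)]

def project_box(v, lo, hi):
    """Metric projection onto the box [lo, hi]^n (componentwise clipping)."""
    return [min(max(x, lo), hi) for x in v]

def cq_algorithm(x0, gamma, iterations):
    """Byrne's CQ iteration for C = [0, 3]^2 and Q = [1, 2]^2 (assumed sets)."""
    At = transpose(A)
    x = list(x0)
    for _ in range(iterations):
        Ax = matvec(A, x)
        # (I - P_Q) A x: how far the image is from the target set Q
        residual = [a - q for a, q in zip(Ax, project_box(Ax, 1.0, 2.0))]
        grad = matvec(At, residual)
        x = project_box([xi - gamma * g for xi, g in zip(x, grad)], 0.0, 3.0)
    return x

x = cq_algorithm([3.0, 3.0], gamma=0.4, iterations=200)
Ax = matvec(A, x)
print(x, Ax)
```

The returned point lies in $C$ and its image lies in $Q$ up to rounding, which is exactly the feasibility requirement stated above.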

The General SFP further extends this framework by involving two operators A and B, requiring the solution to satisfy the following: Find such that , where are bounded linear operators. The solution set of this problem can be represented by . This generalization allows for addressing more complex scenarios involving multiple constraints and objectives. Kangtunyakarn has also connected the solution set to the fixed-point problem. Further details can be found in the next section.

In practical terms, SFPs, including traditional and general forms, are critical in various applications. In medical imaging, SFPs are used to reconstruct images from projection data, ensuring the reconstructed images meet specific criteria such as physical constraints and noise minimization. In signal processing, they aid in designing filters and reconstructing signals from incomplete or corrupted data, which is essential in applications like echo cancellation and noise reduction. Additionally, SFPs are used in optimization and operations research for solving resource allocation, network design, and logistics problems, as well as in data science and machine learning for training algorithms and models, especially in high-dimensional data scenarios. See, for example [

7,

8,

9].

Researchers such as Censor and Elfving [

6] and Censor and Segal [

10] have significantly advanced this field, developing multiple sets of split feasibility problems and split variational inequality problems, incorporating various constraints and projection methods. These advancements highlight the versatility and utility of the SFP framework in solving complex real-world challenges across disciplines.

G.M. Korpelevich [11] was the first to introduce the extragradient method, which has since become a fundamental technique for solving variational inequality problems. This method, known for its robust convergence properties, is defined as follows:

$$y_n = P_C\big(x_n - \lambda F(x_n)\big), \qquad x_{n+1} = P_C\big(x_n - \lambda F(y_n)\big),$$

where $P_C$ denotes the projection onto the set $C$, $\lambda$ is the step size parameter, and $F$ is a nonlinear mapping associated with the problem at hand. Specifically, $F$ represents the gradient of the objective function in optimization problems or a monotone operator in variational inequality problems. The choice of $\lambda$ is crucial for ensuring the algorithm’s convergence and is often determined by a line-search procedure or fixed in advance based on problem characteristics.
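The two-projection structure of the extragradient method, a prediction step followed by a correction step that reuses the same base point, can be sketched as follows. The operator $F(x_1, x_2) = (x_2, -x_1)$, the box $C = [-1, 1]^2$, the starting point, and the fixed step $0.5$ are assumptions chosen for illustration: $F$ is monotone and 1-Lipschitz but not a gradient, and the associated variational inequality has the solution $0$.

```python
import math

def project_box(v, lo=-1.0, hi=1.0):
    """Metric projection onto the box [lo, hi]^n (componentwise clipping)."""
    return [min(max(x, lo), hi) for x in v]

def F(v):
    """Monotone, 1-Lipschitz rotation field F(x1, x2) = (x2, -x1) (illustrative)."""
    return [v[1], -v[0]]

def extragradient(x0, step, iterations):
    """Extragradient iteration with a fixed step size in (0, 1/L)."""
    x = list(x0)
    for _ in range(iterations):
        fx = F(x)
        # Prediction step: y_n = P_C(x_n - step * F(x_n))
        y = project_box([xi - step * g for xi, g in zip(x, fx)])
        fy = F(y)
        # Correction step: x_{n+1} = P_C(x_n - step * F(y_n))
        x = project_box([xi - step * g for xi, g in zip(x, fy)])
    return x

solution = extragradient([1.0, 0.5], step=0.5, iterations=500)
distance_to_zero = math.hypot(solution[0], solution[1])
print(solution, distance_to_zero)
```

Evaluating $F$ at the predicted point $y_n$ rather than at $x_n$ is what yields convergence here; a plain projected step $x_{n+1} = P_C(x_n - \lambda F(x_n))$ increases the norm of the iterate on this rotation field.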

Building upon Korpelevich’s foundational work, researchers have developed enhancements to improve the performance of this method.

X. J. Long, J. Yang and Y. J. Cho [

12] build upon Korpelevich’s extragradient method to enhance its performance for variational inequalities. The modifications aim to increase convergence speed and computational efficiency. The improved algorithm consists of the following steps:

Similarly, B. Tan and S. Y. Cho [

13] leverage Korpelevich’s method as a basis and further enhance it by incorporating a new line-search rule. The modified algorithm consists of the following steps:

G.M. Korpelevich’s original method has significantly impacted the field of optimization and variational inequalities. The extragradient method introduced an effective way to iteratively approach solutions of variational inequality problems, providing a framework on which subsequent researchers built more efficient algorithms. Both groups, Long, Yang, and Cho [12] and Tan and Cho [13], used Korpelevich’s method as the starting point for their advancements. This highlights the lasting relevance and adaptability of Korpelevich’s work in the ongoing development of mathematical optimization techniques. Numerous researchers have continued to expand upon this foundational work, developing variations and enhancements tailored to specific applications and more complex problem settings, as demonstrated in references [14,15,16]. This ongoing research ensures that the extragradient method remains a vital tool in optimization and variational inequalities.

Building upon the foundational techniques established by G.M. Korpelevich, specifically the subgradient extragradient method, this research develops an approach to finding fixed points of quasi-nonexpansive mappings without relying on the demiclosedness condition. This method leverages the robustness of Korpelevich’s technique, adapting it to a broader class of operators while maintaining critical convergence properties.

Detailed computational examples are provided to validate the effectiveness of this new method and to demonstrate its practical applicability. Additionally, the implications of these findings are extended to several related problems, including optimization, dynamical systems analysis, and equilibrium problems. This research enhances the theoretical understanding of quasi-nonexpansive mappings and presents a viable tool for practical applications.

2. Preliminaries

The foundational definitions, propositions, and lemmas necessary for the rigorous development of the theory are established in this section. We review the fundamental concepts on which our analysis is based and introduce analytical tools designed to facilitate the proofs of the main theorems.

Consider a Hilbert space $H$ and a nonempty closed convex subset $C$ of $H$. The metric projection of a point $x \in H$ onto the set $C$ is a fundamental concept in functional analysis and optimization.

The metric projection $P_C x$ is defined as the unique point in $C$ that minimizes the distance to $x$, formally expressed by

$$P_C x = \arg\min_{y \in C} \|x - y\|.$$

This means that $P_C x$ is the closest point to $x$ within $C$, satisfying the equality

$$\|x - P_C x\| = \min_{y \in C} \|x - y\|.$$

The existence and uniqueness of $P_C x$ are guaranteed by the completeness of the Hilbert space together with the closedness and convexity of $C$.

Furthermore, the projection $P_C$ is a firmly nonexpansive operator, ensuring that

$$\|P_C x - P_C y\|^2 \le \langle P_C x - P_C y,\, x - y \rangle$$

for any $x, y \in H$. This property is essential in proving the convergence of iterative methods in convex optimization.

Additionally, the metric projection satisfies a key variational inequality

$$\langle x - P_C x,\, y - P_C x \rangle \le 0$$

for all $y \in C$, indicating that $x - P_C x$ belongs to the normal cone of $C$ at $P_C x$.

Thus, the metric projection is a crucial tool for exploring the geometric properties of convex sets in Hilbert spaces and is widely applied in mathematical analysis and related fields.
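Two standard properties of the metric projection $P_C$, firm nonexpansiveness $\|P_C x - P_C y\|^2 \le \langle P_C x - P_C y, x - y\rangle$ and the variational characterization $\langle x - P_C x, z - P_C x\rangle \le 0$ for $z \in C$, can be checked numerically on a simple set. The sketch below takes $C$ to be the closed unit ball of $\mathbb{R}^3$, an assumption made only because its projection has a closed form; the sample size, seed, and tolerance are likewise arbitrary.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def project_ball(x, radius=1.0):
    """Metric projection onto the closed ball of the given radius centred at 0."""
    norm = dot(x, x) ** 0.5
    return list(x) if norm <= radius else [radius * xi / norm for xi in x]

random.seed(0)
tol = 1e-9  # slack for floating-point rounding
violations = 0
for _ in range(1000):
    x = [random.uniform(-3.0, 3.0) for _ in range(3)]
    y = [random.uniform(-3.0, 3.0) for _ in range(3)]
    px, py = project_ball(x), project_ball(y)
    d = sub(px, py)
    # Firm nonexpansiveness: ||Px - Py||^2 <= <Px - Py, x - y>
    if dot(d, d) > dot(d, sub(x, y)) + tol:
        violations += 1
    # Variational characterization with the test point z = Py, which lies in C
    if dot(sub(x, px), sub(py, px)) > tol:
        violations += 1

print(violations)
```

A random test of this kind cannot prove either inequality, but a nonzero count would immediately expose an incorrect projection formula.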

Lemma 1 ([17]). Let H be a Hilbert space and C a nonempty closed convex subset of H. Suppose A is a mapping from C into H, and let $u \in C$. For any $\lambda > 0$, the point u is a solution to the variational inequality problem if and only if

$$u = P_C (I - \lambda A) u,$$

where $P_C$ denotes the metric projection of H onto C.

Lemma 2 ([18]). Let and be sequences of non-negative real numbers such that

for all where is a sequence in and is a real sequence. Assume that . Then, the following results hold:

- (i) If for some , then is a bounded sequence.

- (ii) If and , then .

Lemma 3 ([

19])

. Let X be a real inner product space. Then- (i)

- (ii)

for all and for all

The subsequent lemma is fundamental in establishing the main theorem in the following section.

Lemma 4. Let X be a real inner product space. Thenfor all and Proof. Let

and

. Putting

. From Lemma 3, we have

Therefore, Equation (

2) holds for all

and

. □

Lemma 5 ([20]). Let C be a nonempty closed convex subset of a real Hilbert space H, and let be a quasi-nonexpansive mapping. Then

Remark 1. From Lemma 1 and Lemma 5, we have

for all

Lemma 6 ([5]). Let and be real Hilbert spaces and be nonempty closed convex subsets of and , respectively. Let be bounded linear operators with being the adjoints of A and B, respectively, with . Then the following statements are equivalent.

- (i) ,

- (ii) ,

for all where are spectral radii of and , respectively, with and .

Lemma 7 ([21]). Let be a sequence of real numbers that does not decrease at infinity, in the sense that there exists a subsequence of such that for all . Also, consider the sequence of integers defined by

Then is a nondecreasing sequence verifying and, for all ,

Lemma 8. Let C and Q be nonempty closed convex subsets of real Hilbert spaces and , respectively. Let be a continuous quasi-nonexpansive mapping, and let be bounded linear operators with and denoting the spectral radii of and , respectively. Assume that the set is nonempty. Let , and let the sequence be constructed by

where

The sets used in the iteration are defined as follows:

The iteration is then given by

for all where with , , and with . Then the sequences , and are bounded.

Proof. Let

. By applying the method described in [

5], we can conclude that

J is

-inverse strongly monotone.

Based on the definition of the sequence

, we obtain the following:

Given that

, we deduce that

. From the properties of

and

, it follows that

. Defining

, we arrive at the following:

Since

, and given that

along with the monotonicity of

J, we obtain the following:

From (

4), we have

Let

, and define

. Therefore, we have the following:

From

, then it follows that

It implies that

From the above, it follows that

Utilizing (

6) and the characteristics of the quasi-nonexpansive mapping, we deduce that

By invoking (

3), (

5), and (

7), we obtain the following

From Lemma 2, it can be concluded that the sequence

is bounded, as are the sequences

and

. □

4. Applications

This section explores the application of mapping concepts in addressing various mathematical problems. Theorems 2–4 can be viewed as direct consequences and extensions of the main result established in Theorem 1. Specifically, Theorem 2 adapts the general framework of Theorem 1 to the case where the underlying mappings are nonspreading mappings, thereby simplifying the iterative process under this particular structure. Theorem 3 further develops this idea by considering nonexpansive mappings and refining the convergence conditions, which remain consistent with the scope of the assumptions in Theorem 1. Finally, Theorem 4 illustrates how the general convergence framework of Theorem 1 can also be applied to minimization models via the function , showing that the proposed method unifies both feasibility-type formulations and optimization-type formulations. Thus, Theorems 2–4 provide concrete refinements and applications of the general principle proved in Theorem 1.

4.1. Fixed-Point Problem of Nonlinear Mapping

The concept of nonspreading mapping was introduced by Kohsaka and Takahashi [22] in 2008 to address the fixed-point problem in Hilbert spaces, which are complete inner product spaces. Typically, studies on mappings in Hilbert spaces focus on distance-related properties, such as nonexpansiveness, which ensures that the distance between two points does not increase under the mapping.

Nonspreading mappings, however, are defined by a different condition. Specifically, a mapping $T : C \to C$ on a nonempty closed convex subset $C$ of a Hilbert space $H$ is called nonspreading if it satisfies the following inequality for all $x, y \in C$:

$$2\|Tx - Ty\|^2 \le \|Tx - y\|^2 + \|x - Ty\|^2.$$

This definition was proposed to generalize certain properties of nonexpansive mappings while introducing new tools for solving fixed-point problems.

Nonspreading mappings are closely related to quasi-nonexpansive mappings, which satisfy the inequality $\|Tx - p\| \le \|x - p\|$ for all $x \in C$ and $p \in F(T)$, where $F(T)$ denotes the set of fixed points of T. While quasi-nonexpansive mappings ensure that distances from points to any fixed point do not increase, nonspreading mappings impose an additional structure that further constrains the behavior of the mapping.

It is important to note that if the fixed-point set is nonempty, then a nonspreading mapping T will also be quasi-nonexpansive. This is because the nonspreading condition naturally implies the quasi-nonexpansive property when fixed points exist, making nonspreading mappings a special case of quasi-nonexpansive mappings under this condition.
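The implication can be verified in one line. Assuming the standard nonspreading inequality of Kohsaka and Takahashi, $2\|Tx - Ty\|^2 \le \|Tx - y\|^2 + \|x - Ty\|^2$ for all $x, y \in C$, take $y = p$ with $Tp = p$:

```latex
\begin{aligned}
2\|Tx - Tp\|^2 &\le \|Tx - p\|^2 + \|x - Tp\|^2 \\
2\|Tx - p\|^2  &\le \|Tx - p\|^2 + \|x - p\|^2  && \text{(since } Tp = p\text{)} \\
\|Tx - p\|^2   &\le \|x - p\|^2 .
\end{aligned}
```

Taking square roots gives $\|Tx - p\| \le \|x - p\|$ for every $x \in C$ and every fixed point $p$, which is the quasi-nonexpansive property.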

By utilizing the main theorems and properties of nonspreading mappings, it is possible to establish further significant theorems in fixed-point theory and related fields. These properties provide a foundational framework that can be applied to prove strong convergence results and other critical findings in mathematical analysis and optimization.

Theorem 2. Let C and Q be nonempty closed convex subsets of real Hilbert spaces and , respectively. Let be a continuous nonspreading mapping, and let be bounded linear operators with and as the spectral radii of and , respectively. Assume that . Let , and consider the sequence generated by

where

The sets used in the iteration are defined as follows:

The iteration is then given by

for all where with , , , and . Suppose that the following conditions hold:

Then the sequence converges strongly to .

Theorem 3. Let C and Q be nonempty closed convex subsets of real Hilbert spaces and , respectively. Let be a nonexpansive mapping, and let be bounded linear operators with and as the spectral radii of and , respectively. Assume that . Let , and consider the sequence generated by

where

The sets used in the iteration are defined as follows:

The iteration is then given by

for all where with , , , and . Suppose that the following conditions hold:

Then the sequence converges strongly to .

These theorems illustrate how both nonspreading and nonexpansive mappings can be applied to establish strong convergence results in iterative processes. They demonstrate the versatility and utility of these mapping concepts in fixed-point theory and optimization.

4.2. Minimization Problem

Consider the sets

and

where

and

are Hilbert spaces, and let

be a bounded linear operator. Let

be a continuously differentiable function. The optimization problem can be formulated as follows:

where the objective is to find a point

such that

for all

.

During the investigation of this minimization problem, the general constrained minimization problem is introduced as follows:

Here,

denotes the set of all solutions to the Equation (

15), defined by

.

The minimization problem described plays a crucial role in various applied mathematics and engineering fields. By minimizing the function , one can find an optimal solution that satisfies specific constraints, making this framework applicable to several real-world problems.

For instance, such minimization problems are often used in signal processing, where the goal is to reconstruct a signal that best matches observed data while satisfying certain constraints. Similarly, in machine learning, optimization techniques like this are used to train models by minimizing loss functions, ensuring the model fits the training data while generalizing well to unseen data.

Moreover, this problem formulation can be applied in control theory, where minimizing an objective function subject to constraints is essential for designing systems that maintain desired performance levels. In resource allocation, such as in network bandwidth distribution, the minimization problem ensures efficient use of limited resources while meeting various user demands.

In economics, optimization problems are used to determine the best allocation of resources or to find equilibria in markets. Lastly, in inverse problems, this minimization framework is vital for deriving unknown parameters from observed data, ensuring that the solutions are consistent with the physical models.

The subsequent results elucidate the relationship between the general split feasibility problem and the general constrained minimization problem.

Lemma 9 ([

5])

. Let and be real Hilbert spaces, and let C and Q be nonempty, closed, convex subsets of and , respectively. Let be bounded linear operators, and suppose that and are the adjoints of A and B, respectively. Define the function asAssume that . The following conditions are equivalent:

- (i)

,

- (ii)

.

The following theorem can be proved directly by utilizing Lemma 9 and Theorem 1.

Theorem 4. Let C and Q be nonempty closed convex subsets of real Hilbert spaces and , respectively. Let be a continuous quasi-nonexpansive mapping, and let be bounded linear operators with and denoting the spectral radii of and , respectively. Let the function be a continuously differentiable function defined by . Assume that the set is nonempty. Let , and let the sequence be constructed by

and

The sets used in the iteration are defined as follows:

for all where with , , and with . Suppose that the following conditions hold:

Then, the sequence converges strongly to , which is a minimizer of the function .

5. Examples

In the following example, we present a numerical illustration of the Enhanced Subgradient Extragradient method developed in Theorem 1. The aim is to demonstrate the practical applicability of the algorithm in the setting of real Hilbert spaces. We provide detailed computations involving the mappings, adjoints, spectral radius, projections, and iteration steps in order to clarify the structure and mechanism of the method. This example not only shows the convergence behavior of the sequence but also highlights the validity of the theoretical framework, the structural operation of the algorithm, and its potential for applications in various scenarios. In this way, both the theoretical predictions and the practical performance of the proposed scheme are confirmed.

Example 1. (Convergence of Iterative Process). We work in .

Adjoints and operator products: Since we have Thus, the spectral radii are Hence, the step parameter satisfies In our case we choose which is admissible.
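The spectral radius of $A^{\top}A$ needed for the step-size condition can be computed without any linear-algebra library. The sketch below uses power iteration on the Gram matrix; the matrix `A` is a hypothetical stand-in and not the operator of Example 1, and the helper names are introduced only for this illustration.

```python
def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def gram(M):
    """Return M^T M for a real matrix M given as a list of rows."""
    cols = list(zip(*M))
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]

def spectral_radius_psd(G, iterations=200):
    """Largest eigenvalue of a nonzero symmetric positive semidefinite matrix,
    estimated by power iteration followed by a Rayleigh quotient."""
    v = [1.0] * len(G)
    for _ in range(iterations):
        w = matvec(G, v)
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    Gv = matvec(G, v)
    return sum(a * b for a, b in zip(v, Gv))  # v has unit norm

A = [[1.0, 2.0], [0.0, 1.0]]  # hypothetical operator, not taken from Example 1
L_A = spectral_radius_psd(gram(A))
print(L_A)
```

For this matrix, $A^{\top}A$ has rows $(1, 2)$ and $(2, 5)$, so the exact value is $3 + 2\sqrt{2}$; the same routine applied to $B^{\top}B$ gives the second constant entering the step-size bound.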

Parameters and initial point:

,

Iteration scheme: For

Iteration steps and calculations:

Step 1: Calculating

- Calculate : Given , we have
- Calculate : By calculating and substituting
- Calculate and : Based on the properties of convex projection and initial settings, we find and , meaning the conditions are met for all .
- Update : With and , we obtain

Step 2: Calculating

- Calculate : Since , we have
- Calculate : Since , we have
- Calculate : This holds for all , so .
- Calculate : This holds for all , so .
- Update : With and , we obtain

Step 3: Calculating

- Calculate : Since , we have
- Calculate : Since , we have
- Calculate : This holds for all , so .
- Calculate : This holds for all , so .
- Update : With and , we obtain

The following Table 1 shows the result of the sequences and where , , and .

Conclusion

The sequence converges to , and we have shown that and for , supporting the convergence criteria specified in Theorem 1.

By this example, we have demonstrated the practical application of the Enhanced Subgradient Extragradient method to solve a fixed-point problem. The iterative process converges to a fixed point, aligning with the theoretical expectations outlined in Theorem 1. This example confirms the theorem’s claims and provides a clear computational illustration of the principles of the theorem.

Remark 2. The above numerical example not only verifies Theorem 1 (feasibility formulation) but can also be reinterpreted under the minimization framework of Theorem 4. Hence, although the computational setting is the same, it supports both Theorems 1 and 4 by showing consistency between the feasibility and minimization perspectives.

In addition, under the minimization formulation of Theorem 4, with C, Q, , , the mappings A, B, T, and all parameters defined as in Example 1, we can formulate the minimization model as

with gradient

Algorithm (Theorem 4 specialized to Example 1): For

Next, we compare our method with classical algorithms, including Gradient Descent (GD), Projected Gradient Descent (PGD), and the Projection Method (PM).

Baseline methods for comparison:

Metrics and stopping rule: We monitor , and the fixed-point residual . The stopping condition is

Then, the sequence converges to , which is a minimizer of the function . Table 2 below presents results demonstrating that our method achieves faster convergence, smaller feasibility violation, and lower objective values than the classical methods.

Discussion

The proposed algorithm converges in fewer than 1000 iterations, achieving very small feasibility violation and low objective values. By contrast, GD requires more than 3000 iterations to reach similar accuracy, while PGD and PM both need over 2000 iterations. These results confirm that Example 1 not only supports Theorem 1 (feasibility formulation) but also provides numerical evidence for Theorem 4 (minimization setting).

Traffic flow analysis typically involves collecting data on parameters such as traffic volume, speed, and density using various methods, including surveys, traffic cameras, and sensors. Linear algebra can also be applied to model traffic flow on a single-lane road. In this context, traffic flow is represented using matrix equations, where the matrix corresponds to traffic density at different time points, and the vector represents the flow of traffic. There is extensive research on traffic flow; see, for example, refs. [

23,

24,

25,

26]. In the next example, we solve the traffic flow problem for four roads using the algorithm presented in Theorem 1.

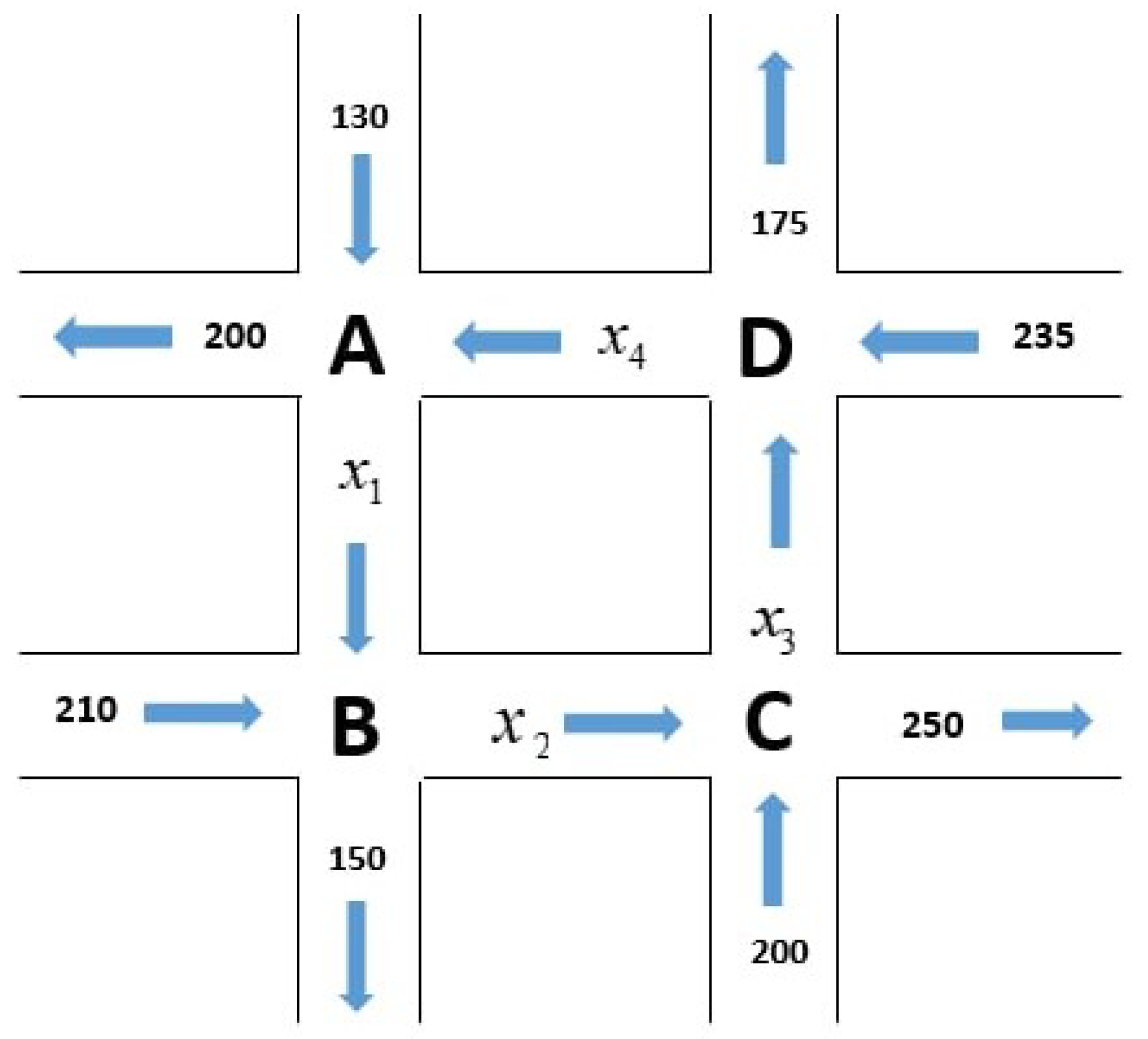

Example 2. Let us examine a traffic flow problem in a city, where the traffic flows on four roads are depicted in Figure 1. Each road is a one-way street, following the specified directions, with units given in vehicles per hour. The four linear equations based on the four intersections in Figure 1 can be obtained as follows (Traffic in = Traffic out):Let and Take , , , which is the solution of the system of linear equations where and Next, we will find the solution and .

Since , , and , we obtain .

Since , then .

It follows that

Let the sequence be generated by . Let T be the identity matrix. Take in Theorem 1, with the parameters , , , and . We can rewrite the sequence in (1) as follows:

and

for all

Then the sequence converges strongly to , for all .

Solution. It is straightforward to check that the spectral radius of matrix

is 2. Then, we have

. So, we can take

By the definition of T, the mapping T is continuous and quasi-nonexpansive. Thus, from Theorem 1, we can conclude that the sequence

converges strongly to

Then

Given that traffic flow cannot be negative, consider the case where

. In this scenario, we find

and

will be zero. By applying the condition

and substituting

into all the equations of the system, we determine that all

as 70 represents the minimum value required to maintain positive traffic flow. Hence,

For instance, if the road between the intersection

A and

B is a road under construction and there is a need to limit the number of vehicles using this road, then the minimum value of vehicles traveling on the road between the intersection

A and

B is

This implies that

,

, and

. Consequently, the road between intersections A and B is closed.

Networks consisting of branches and junctions serve as models in various fields such as economics, traffic analysis, and electrical engineering. In such a network model, it is assumed that the total inflow to a junction equals the total outflow from it. In the next example, we solve the flow through a network composed of five junctions by using the algorithm presented in Theorem 1.

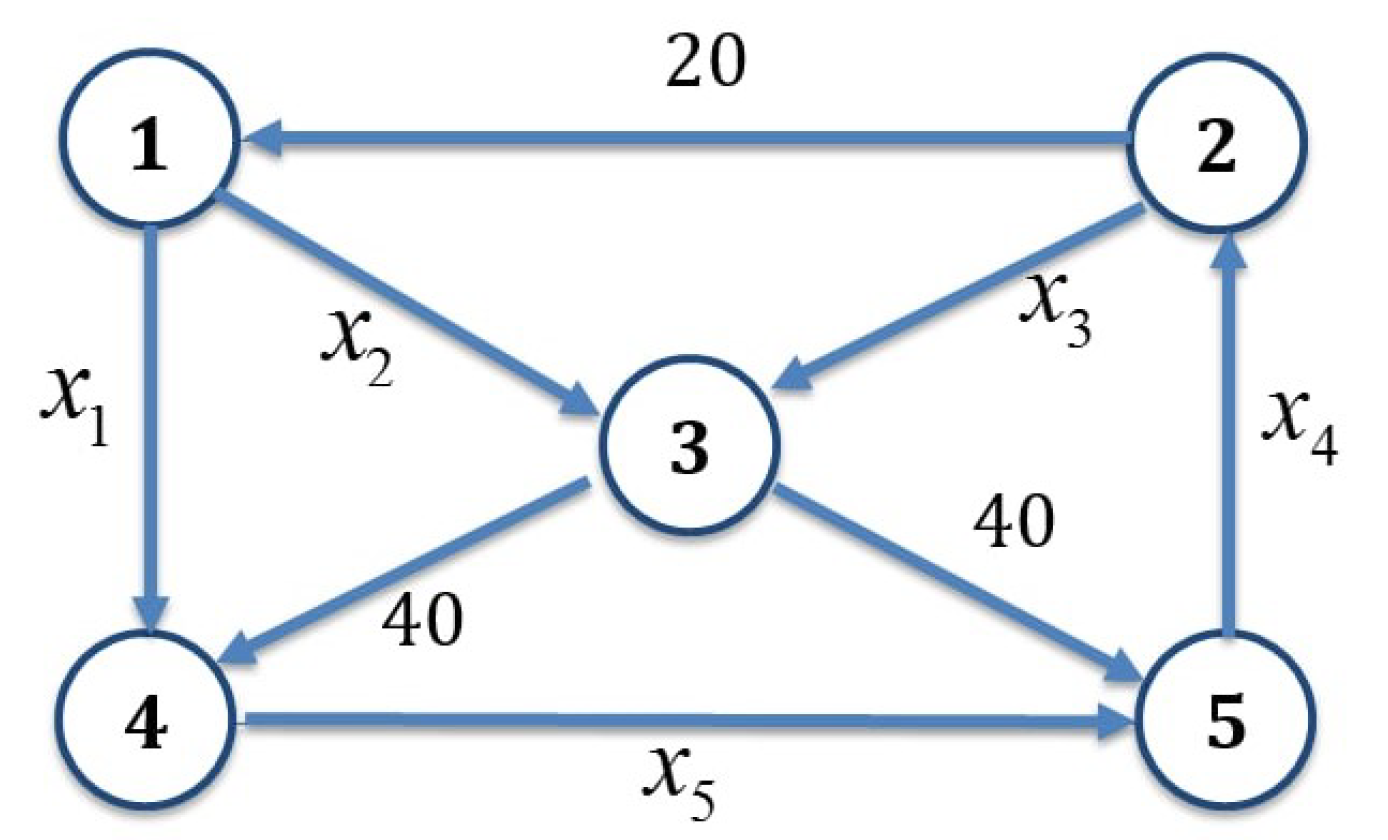

Example 3. Set up a system of linear equations to represent the network shown in Figure 2. Each of the network’s five junctions gives rise to a linear equation, as follows.Let and Take , , , where is the solution of the system of linear equations where and Next, we will find the solution and .

Since , , , and , we obtain .

Since , then .

It follows that

Let the sequence be generated by . Let T be the identity matrix. Take in Theorem 1, with the parameters , , , and . We can rewrite the sequence in (1) as follows:

and

for all

Then the sequence converges strongly to , for all .

Solution. It is obvious to check that the spectral radius of matrix

is 2. Then, we have

. So, we can take

By the definition of T, the mapping T is continuous and quasi-nonexpansive. Thus, from Theorem 1, we can conclude that the sequence

converges strongly to

Then

where

p is any real number, so this system has infinitely many solutions. Assume that the flow along the branch labeled

can be controlled. Based on the solution of this example, it is then possible to regulate the flow corresponding to the other variables. In particular, if

, the flow of

would be reduced to zero.

In the following example, we consider the metric projection onto a band

where

and

It is clear that

C is closed and convex with

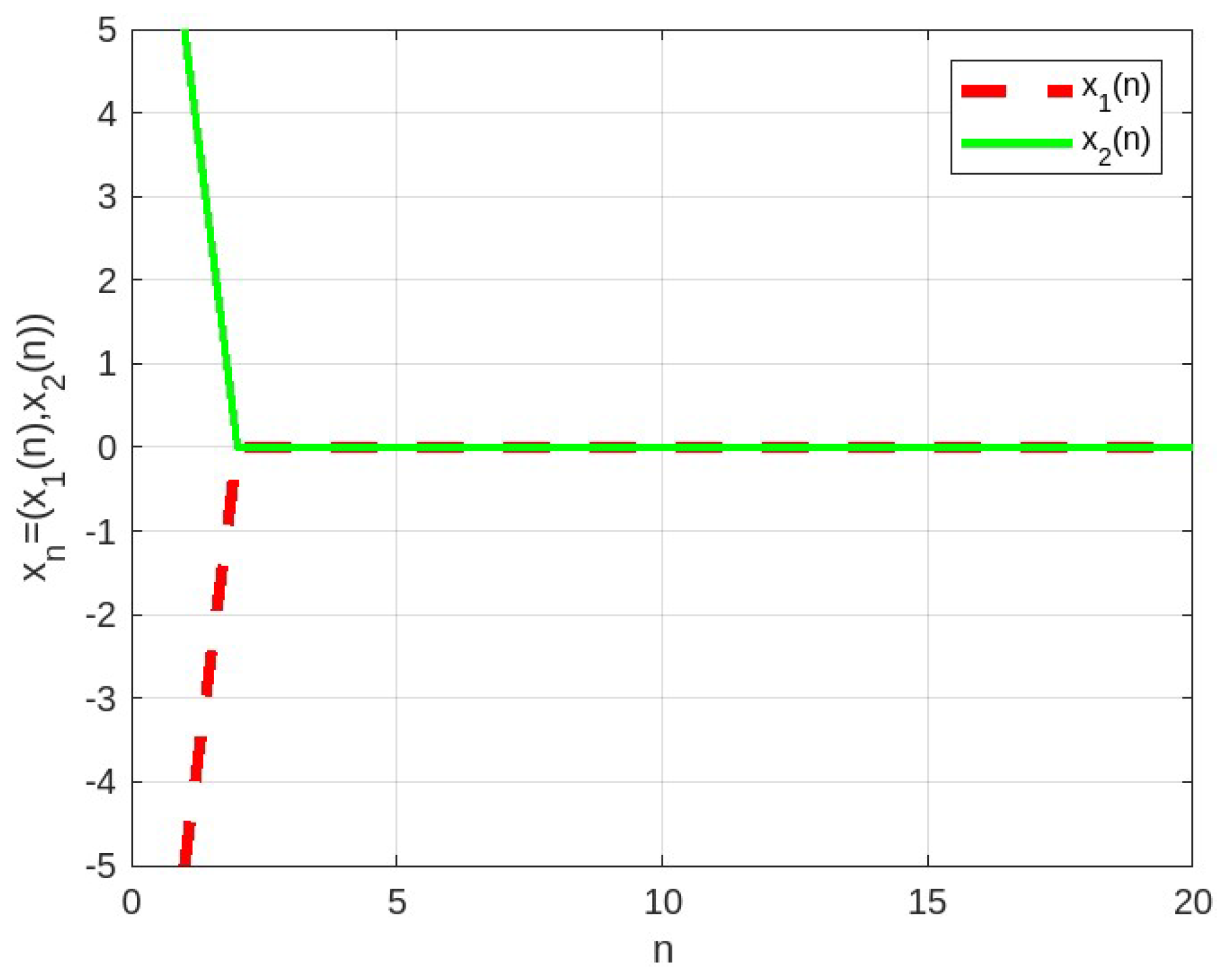
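For readers who want to experiment with a projection onto a band, the sketch below assumes the band has the common form $\{z : a \le \langle u, z\rangle \le b\}$ with $u \ne 0$ and $a \le b$; this form, the function name `project_band`, and the sample values are assumptions for illustration and may differ from the set used in this example. For such a set the projection moves a point along $u$ just far enough to reach the nearer bounding hyperplane.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def project_band(x, u, a, b):
    """Project x onto the band {z : a <= <u, z> <= b}, assuming u != 0 and a <= b."""
    s = dot(u, x)
    uu = dot(u, u)
    if s > b:
        shift = (s - b) / uu   # move back onto the hyperplane <u, z> = b
    elif s < a:
        shift = (s - a) / uu   # negative shift: move up onto <u, z> = a
    else:
        shift = 0.0            # x already lies in the band
    return [xi - shift * ui for xi, ui in zip(x, u)]

outside = project_band([3.0, 4.0], [1.0, 1.0], -1.0, 1.0)
inside = project_band([0.2, 0.3], [1.0, 1.0], -1.0, 1.0)
print(outside, inside)
```

Points already inside the band are left unchanged, which is consistent with the projection being the identity on $C$.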

Example 4. Let be the set of real numbers, , , for every andfor every Let defined by and letGiven the parameters , , , , and We can rewrite the sequence in (1) as follows:andfor all Then the sequence converges strongly to .

Solution. It is straightforward to check that the spectral radius of is 4, and the spectral radius of is . Then, we have . So, we can take . By the definition of T, the mapping T is continuous and quasi-nonexpansive. Thus, from Theorem 1 we can conclude that converges strongly to

The following

Figure 3 shows the result of the sequence

where

,

, and

.