A Lightweight Hybrid CNN-ViT Network for Weed Recognition in Paddy Fields

Abstract

1. Introduction

- We introduce RepEfficientViT, a new hybrid CNN–ViT architecture tailored for lightweight yet highly accurate weed identification.

- By applying structural re-parameterization to the convolutional layers, the proposed model merges its multi-branch training-time structure into a single path at inference, which accelerates inference without adding parameters or computational complexity.

- Experimental results on the constructed weed dataset demonstrate that RepEfficientViT outperforms representative CNN and Transformer counterparts in both accuracy and efficiency.
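
The re-parameterization idea in the second contribution can be illustrated with a minimal, dependency-free sketch. It is not the authors' RepMBConv implementation: it assumes a single channel (as in a depthwise convolution), stride 1, zero padding, and no batch normalization, and the names `conv_same` and `merge_branches` are hypothetical. It shows only that a 3×3 branch, a 1×1 branch, and an identity branch can be folded into one 3×3 kernel that produces the same output.

```python
import random


def conv_same(x, k):
    """Stride-1, zero-padded 2-D cross-correlation of one channel with an odd square kernel."""
    n = len(k)
    p = n // 2
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for i in range(n):
                for j in range(n):
                    rr, cc = r + i - p, c + j - p
                    if 0 <= rr < h and 0 <= cc < w:  # outside the image counts as zero
                        acc += k[i][j] * x[rr][cc]
            out[r][c] = acc
    return out


def merge_branches(k3, k1):
    """Fold a 3x3 branch, a 1x1 branch (scalar weight k1), and an identity branch into one 3x3 kernel."""
    merged = [row[:] for row in k3]
    merged[1][1] += k1 + 1.0  # the 1x1 weight and the identity both act on the centre tap
    return merged


random.seed(0)
size = 6
x = [[random.uniform(-1, 1) for _ in range(size)] for _ in range(size)]
k3 = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
k1 = random.uniform(-1, 1)

# Training-time form: three parallel branches whose outputs are summed.
y3 = conv_same(x, k3)
y1 = conv_same(x, [[k1]])
multi_branch = [[y3[r][c] + y1[r][c] + x[r][c] for c in range(size)] for r in range(size)]

# Inference-time form: one convolution with the merged kernel.
single_branch = conv_same(x, merge_branches(k3, k1))

max_abs_diff = max(
    abs(a - b) for ra, rb in zip(multi_branch, single_branch) for a, b in zip(ra, rb)
)
print(f"max |multi - single| = {max_abs_diff:.2e}")
```

Because convolution is linear, the two forms agree up to floating-point rounding, so the merged model keeps the accuracy of the multi-branch model while executing a single operator per block. In a full network, each branch's batch normalization would first be folded into its kernel and bias before the kernels are summed.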

2. Materials and Methods

2.1. Dataset Construction

2.2. Hybrid CNN–Transformer and Ensemble Approaches

2.3. Design of RepEfficientViT

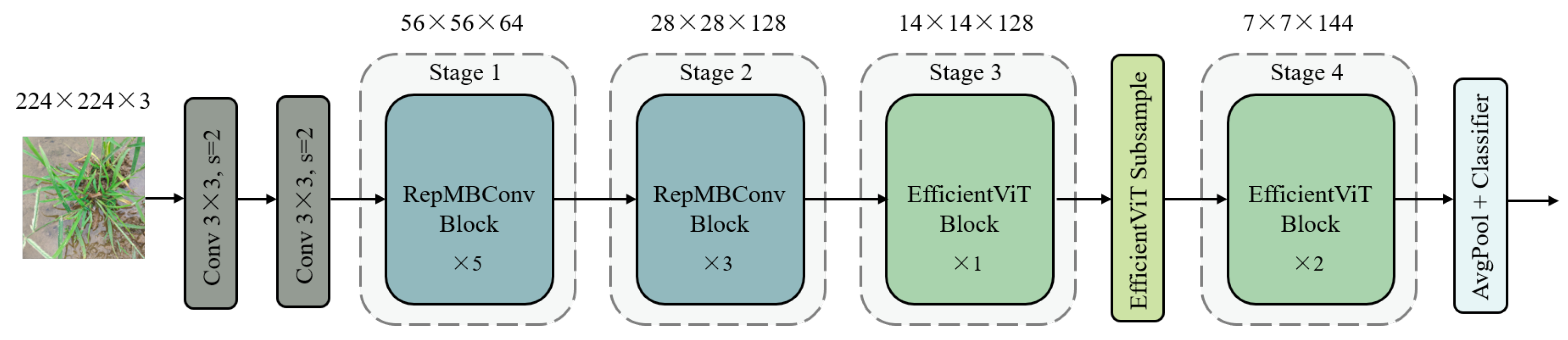

2.3.1. Algorithm Design Overview

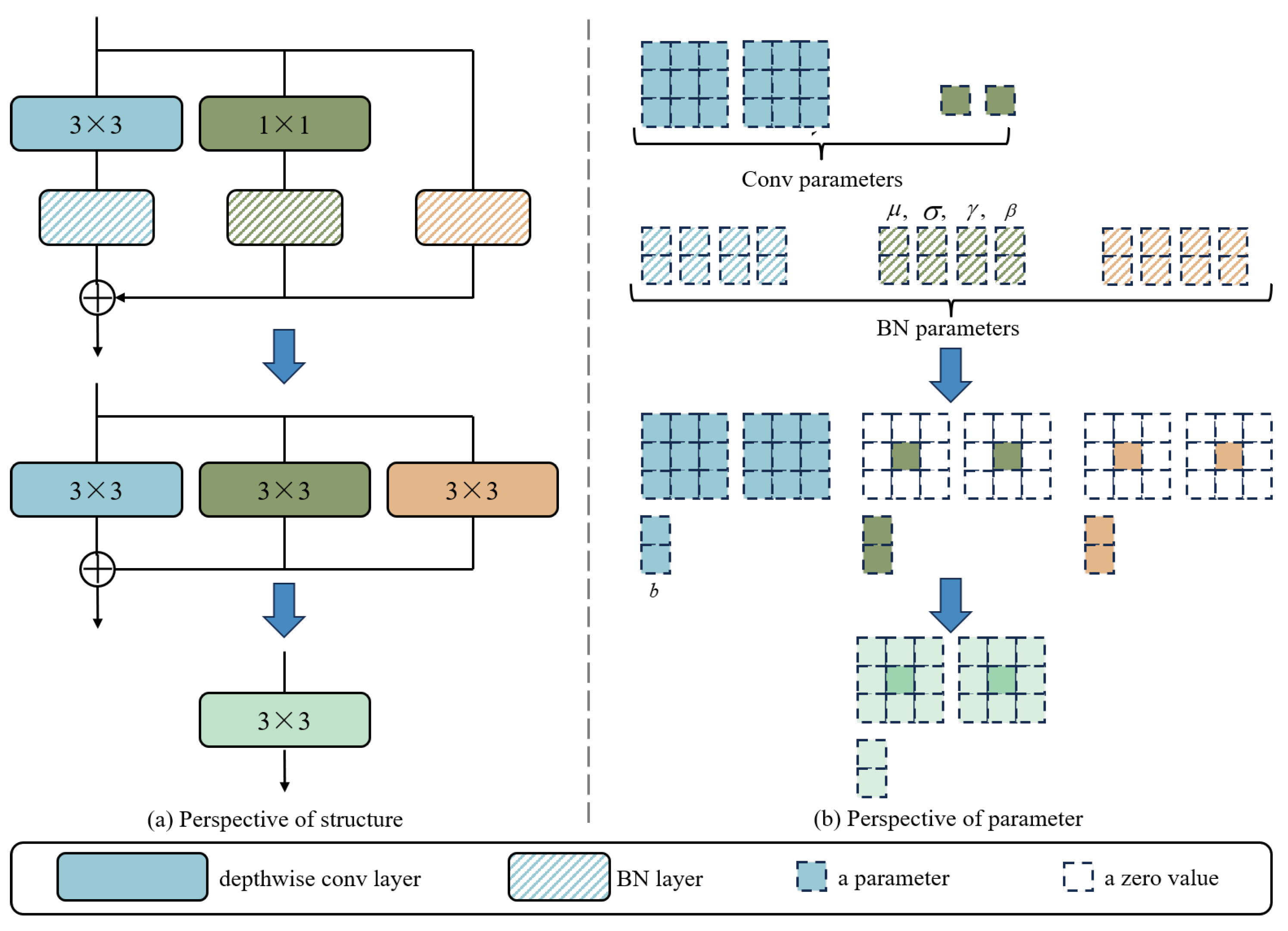

2.3.2. RepMBConv Block

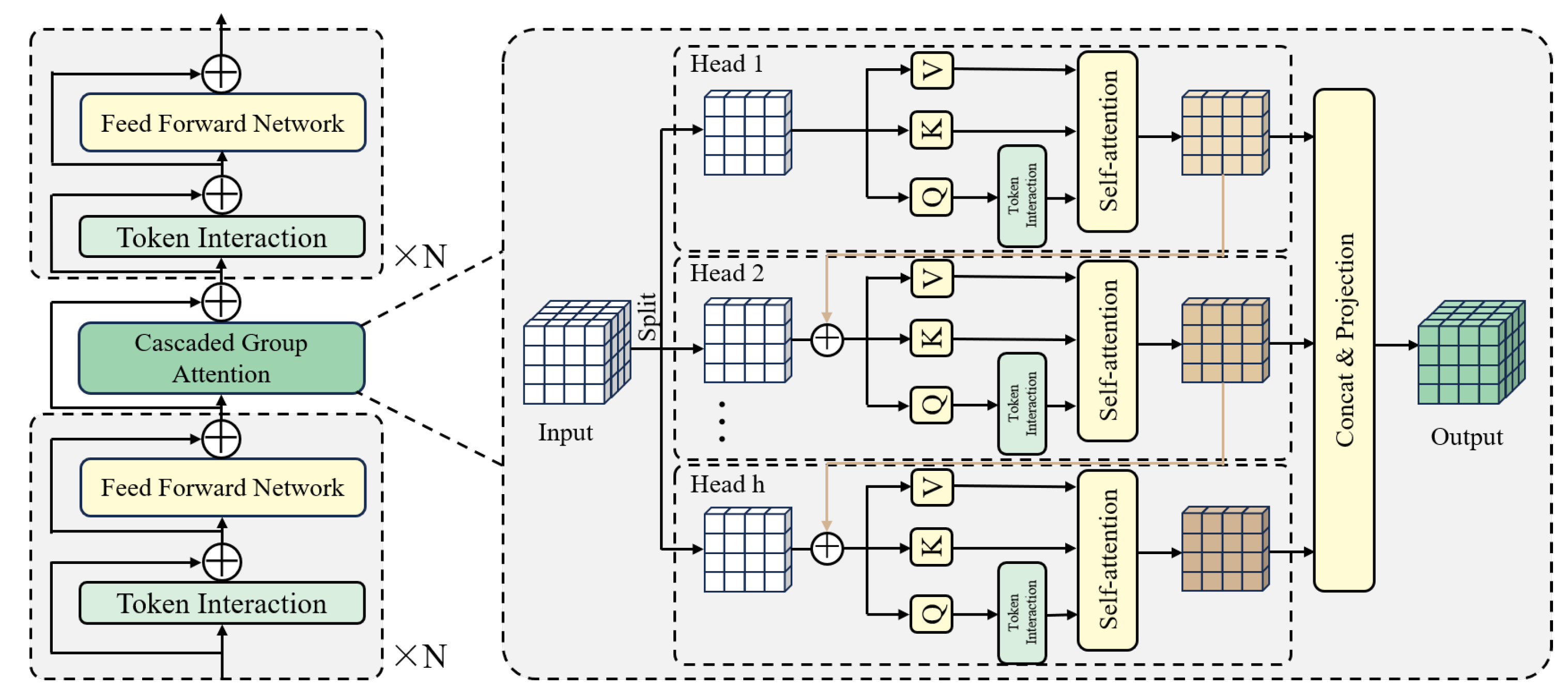

2.3.3. EfficientViT Block

3. Results and Discussion

3.1. Experiment Setup

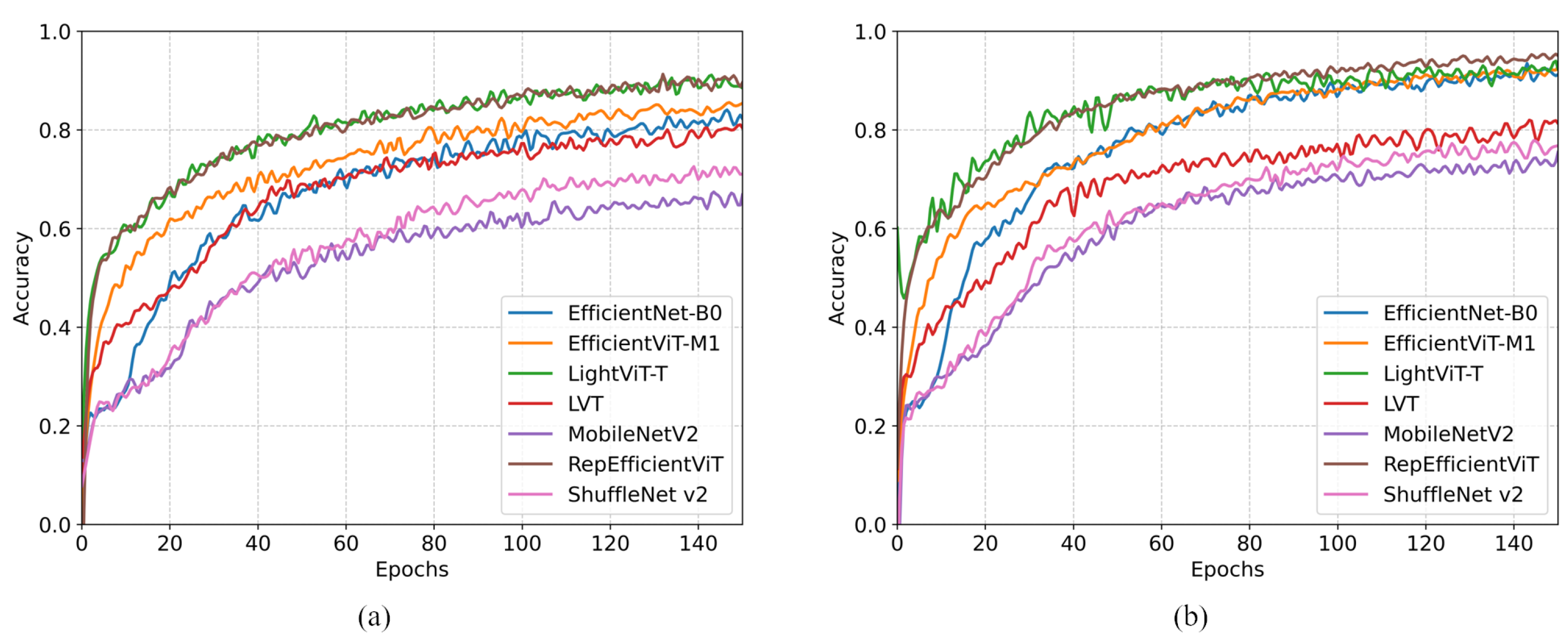

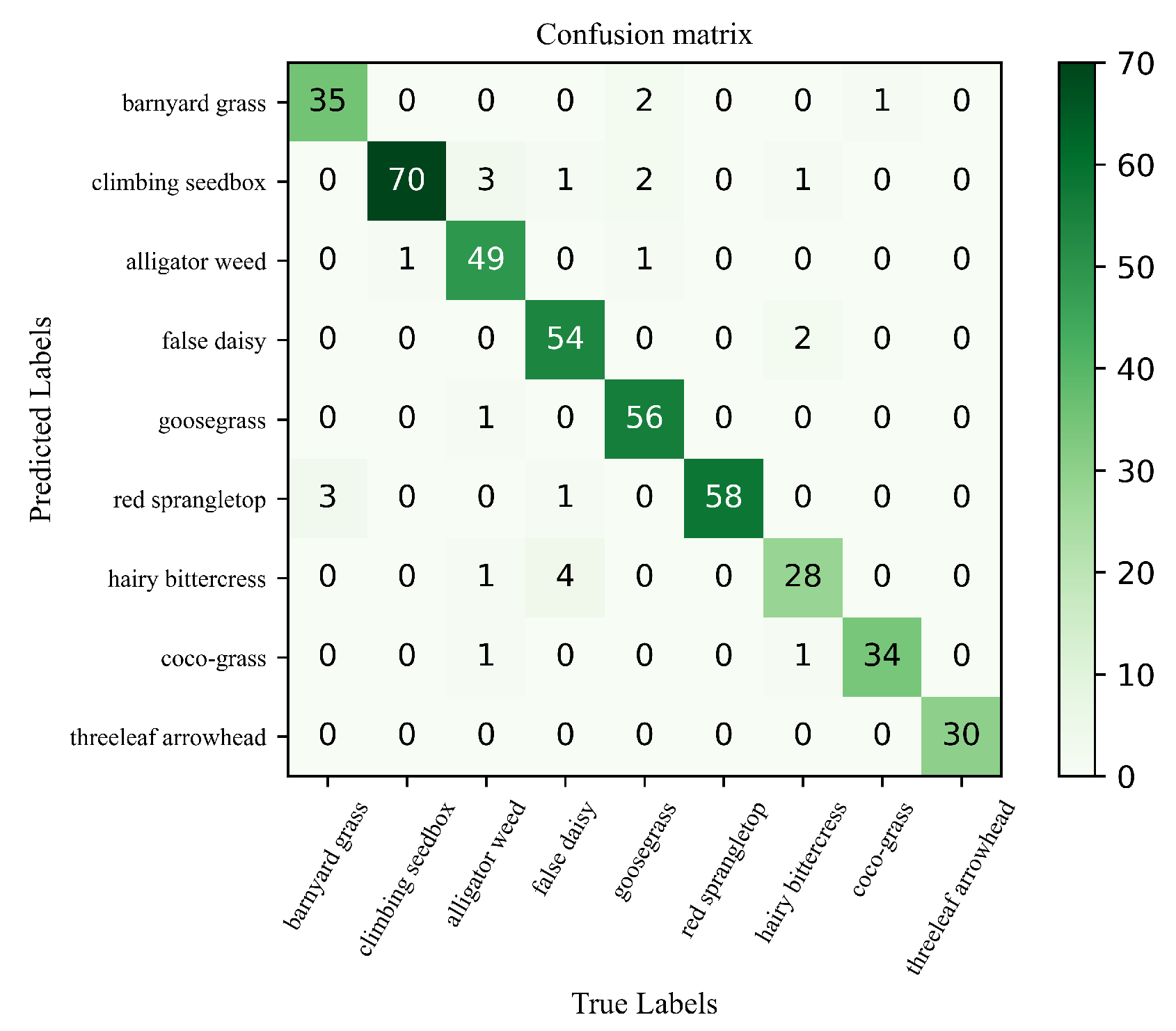

3.2. Model Evaluation

3.3. Ablation Studies

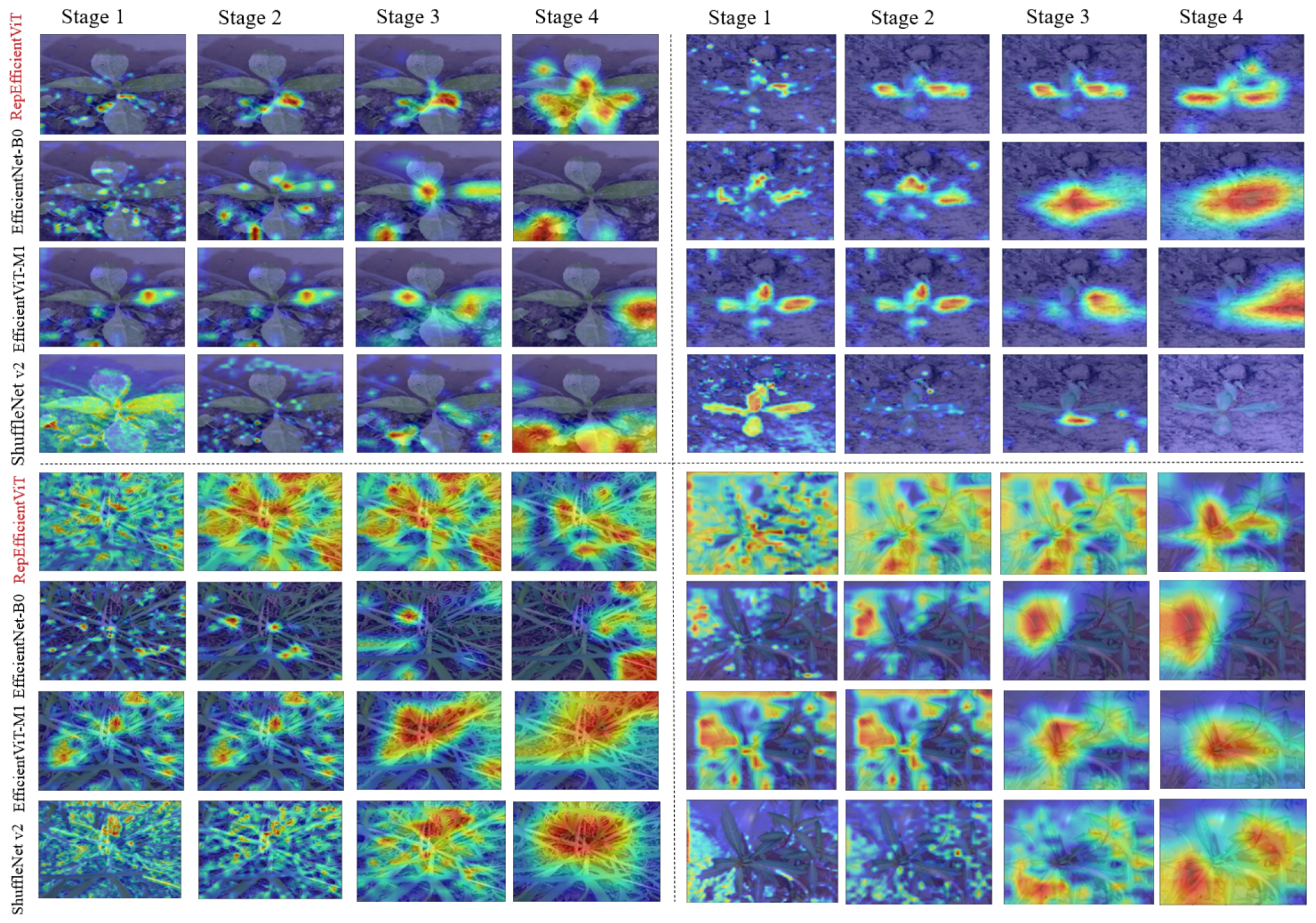

3.4. Visualization of Grad-CAM

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Weed Species | Training | Validation | Testing |
|---|---|---|---|
| Barnyard grass | 116 | 38 | 38 |
| Climbing seedbox | 213 | 71 | 71 |
| Alligator weed | 169 | 55 | 55 |
| False daisy | 181 | 60 | 60 |
| Goosegrass | 184 | 61 | 61 |
| Red sprangletop | 174 | 58 | 58 |
| Hairy bittercress | 100 | 32 | 32 |
| Coco-grass | 109 | 35 | 35 |
| Threeleaf arrowhead | 94 | 30 | 30 |
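
As a quick consistency check on the split, the per-species counts in the table above can be summed and compared with the nominal 6:2:2 ratio. The snippet below is only an arithmetic sketch over the reported numbers; the variable names are illustrative.

```python
# (training, validation, testing) image counts per species, copied from the table above.
counts = {
    "Barnyard grass": (116, 38, 38),
    "Climbing seedbox": (213, 71, 71),
    "Alligator weed": (169, 55, 55),
    "False daisy": (181, 60, 60),
    "Goosegrass": (184, 61, 61),
    "Red sprangletop": (174, 58, 58),
    "Hairy bittercress": (100, 32, 32),
    "Coco-grass": (109, 35, 35),
    "Threeleaf arrowhead": (94, 30, 30),
}

# Column totals and the share of the whole dataset held by each subset.
train, val, test = (sum(c[i] for c in counts.values()) for i in range(3))
total = train + val + test
fractions = [round(n / total, 3) for n in (train, val, test)]

print(train, val, test, total)  # 1340 440 440 2220
print(fractions)                # [0.604, 0.198, 0.198]
```

The totals come to 1340 training, 440 validation, and 440 testing images (2220 overall), which is close to a 6:2:2 partition. The classes are moderately imbalanced, with the largest training class (213 images) a little over twice the size of the smallest (94 images).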

| Name | Parameter |
|---|---|
| CPU | Intel Core i7-11700 (Intel Corporation, Santa Clara, CA, USA) |
| GPU | NVIDIA GeForce RTX 3090 (NVIDIA Corporation, Santa Clara, CA, USA) |
| System | Ubuntu 20.04 |
| Programming Language | Python 3.7.13 |
| Deep Learning Framework | PyTorch 1.12.1 |

| Model | FLOPs | Params | Acc. (%) | Prec. (%) | Rec. (%) | F1 (%) | Time (ms) |
|---|---|---|---|---|---|---|---|
| EfficientNet-B0 | 412.56 M | 4.02 M | 91.59 | 91.87 | 92.01 | 91.94 | 50.03 |
| MobileNetV2 | 326.28 M | 2.24 M | 75.45 | 79.70 | 76.68 | 78.16 | 116.65 |
| ShuffleNet V2 | 151.69 M | 1.26 M | 80.68 | 86.86 | 80.62 | 81.72 | 22.12 |
| EfficientViT-M1 | 164.22 M | 2.77 M | 92.27 | 92.39 | 92.72 | 92.55 | 26.35 |
| LightViT-T | 738.74 M | 8.09 M | 93.18 | 93.01 | 93.50 | 93.25 | 44.08 |
| LVT | 731.63 M | 3.42 M | 85.45 | 85.93 | 85.49 | 85.71 | 35.91 |
| RepEfficientViT | 223.54 M | 1.34 M | 94.77 | 94.75 | 94.93 | 94.84 | 25.13 |
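
The F1 column can be related to the precision and recall columns with a short calculation. The sketch below assumes that F1 is the harmonic mean of the reported precision and recall; the table does not state the averaging scheme, so this is an assumption, and `f1_score` is an illustrative helper rather than a function from the paper.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (inputs and output share the same units)."""
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) for three rows of the table above.
reported = {
    "EfficientNet-B0": (91.87, 92.01),
    "EfficientViT-M1": (92.39, 92.72),
    "RepEfficientViT": (94.75, 94.93),
}

f1 = {name: round(f1_score(p, r), 2) for name, (p, r) in reported.items()}
print(f1)  # {'EfficientNet-B0': 91.94, 'EfficientViT-M1': 92.55, 'RepEfficientViT': 94.84}
```

For these three rows the harmonic mean reproduces the tabulated F1 values to two decimals.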

| Protocol | Acc (%) | F1 (%) |
|---|---|---|
| Random split (6:2:2) | 94.8 | 94.8 |
| Device-split | 91.3 | 91.0 |

| Model | Params (M) | FLOPs (M) | Acc/F1 (%) | Latency (ms) |
|---|---|---|---|---|
| Mobile-Former-294M | 3.5 | 294 | 93.12/93.05 | 42.7 |
| EdgeNeXt-XXS | 2.3 | 280 | 93.84/93.71 | 36.9 |
| ConvNeXt-Tiny | 28.6 | 4500 | 94.10/94.02 | 87.5 |
| MobileViT2-XXS | 2.0 | 230 | 93.56/93.48 | 31.2 |
| RepEfficientViT (ours) | 1.34 | 223.54 | 94.77/94.84 | 25.1 |

| Model | FLOPs | Params | Accuracy (%) | Precision (%) | Recall (%) | F1 (%) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|
| EfficientViT-M1 | 164.22 M | 2.77 M | 92.27 | 92.39 | 92.72 | 92.55 | 26.35 |
| MBConv + EfficientViT | 226.35 M | 1.34 M | 93.41 | 93.69 | 93.54 | 93.61 | 34.96 |
| MBConv (Three Branches) + EfficientViT | 229.30 M | 1.35 M | 94.77 | 94.75 | 94.93 | 94.84 | 36.37 |
| RepEfficientViT | 223.54 M | 1.34 M | 94.77 | 94.75 | 94.93 | 94.84 | 25.13 |
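
The last two rows of the ablation table report identical accuracy but different inference times. Reading the final row as the re-parameterized form of the three-branch model (an interpretation of the table, not a statement taken from the text), the latency gain can be quantified as follows.

```python
# Inference times (ms) copied from the ablation table above.
three_branch_ms = 36.37  # MBConv (Three Branches) + EfficientViT
reparam_ms = 25.13       # RepEfficientViT

# Relative latency reduction and the equivalent speedup factor.
reduction_pct = 100 * (three_branch_ms - reparam_ms) / three_branch_ms
speedup = three_branch_ms / reparam_ms

print(f"latency reduction: {reduction_pct:.1f}%, speedup: {speedup:.2f}x")
# latency reduction: 30.9%, speedup: 1.45x
```

Under that reading, merging the branches cuts inference time by roughly 31% with no change in any of the four classification metrics.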

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, T.; Wang, Y.; Yang, C.; Zhang, Y.; Zhang, W. A Lightweight Hybrid CNN-ViT Network for Weed Recognition in Paddy Fields. Mathematics 2025, 13, 2899. https://doi.org/10.3390/math13172899
